#cameraposition

Photo

Going Low

We’ve previously noted that it makes sense to lower the camera when shooting subjects such as children, dogs, cats, and other short subjects. In this frame from “Ireland’s First Wildlife Hospital,” the director @PhilipBromwell demonstrates that going low can also be effective when the action is close to the ground. The technique works in every genre, and it doesn’t require fancy equipment. You just need to crouch or otherwise lower yourself…and your camera. Bromwell’s intriguing two-and-a-half-minute @RTEnews report is the current Mobile Movie of the Week. You can see it at MobileMovieMaking (link in profile). #shotoniPhone #mobilejournalism #IrishTV #cinematography #cameraposition #lowlevelshot https://www.instagram.com/p/CLsfLtfBfv4/?igshid=1q83ipooz182w

Link

Studio Lighting Equipment

Photography blog

Want to learn more about photography?

Visit our blog page.

https://eeswarcreativestudio.com/studio-lighting-equipment/

#studiolightingequipment #studio #lighting #equipment #cameraposition #camera #position #lights #reflectors #otherequipment #photography #photographyblog #photostudio #pondicherry #puducherry #eeswarcreativestudio #eeswar #creative #wedding #portrait #candid #landscape #photographywebsite

Text

MMD FX file reading for shaders: a translation by ryuu

The following tutorial is an English translation of the original one in Japanese by Dance Intervention P.

This English documentation was requested by Chestnutscoop on DeviantArt, as it’ll be useful to the MME modding community and help MMD become open-source for updates. It’s going to be an extensive one, so take it easy.

Disclaimer: coding isn’t my area, not even close to my actual career and job (writing/health). I have little idea of what’s going on here and I’m relying on my IT friends to help me with this one.

Content Index:

Introduction

Overall Flow

Parameter Declaration

Outline Drawing

Non-Self-shadow Shadow Rendering

Drawing Objects When Self-shadow is Disabled

Z-value Plot For Self-shadow Determination

Drawing Objects in Self-shadowing

Final Notes

1. INTRODUCTION

This documentation contains the roots of .fx file reading for MME as well as information on DirectX and programmable shaders while reading full.fx version 1.3. In other words, how to use HLSL for MMD shaders. Everything in this tutorial will try to stay as faithful as possible to the original text in Japanese.

It was translated from Japanese to English by ryuu. As I don’t know how to contact Dance Intervention P for permission to translate and publish it here, the original author is free to ask me to take it down. The translation was done with the aid of the online translator DeepL and my friends’ help. This documentation is not intended to replace the original author’s.

Any code line starting with “// [Japanese text]” is one of the author’s comments. If the code isn’t properly formatted on Tumblr, you can visit the original document to check it. The original titles of each section were added for ease of use.

2. OVERALL FLOW (全体の流れ)

Applicable technique → pass → VertexShader → PixelShader

• Technique: the processing applied to the drawing targets that match its annotations (the part written inside < >).

• Pass: a unit of processing.

• VertexShader: converts vertices in local coordinates to projective coordinates.

• PixelShader: sets the color of each pixel.

3. PARAMETER DECLARATION (パラメータ宣言)

9 // site-specific transformation matrix

10 float4x4 WorldViewProjMatrix : WORLDVIEWPROJECTION;

11 float4x4 WorldMatrix : WORLD;

12 float4x4 ViewMatrix : VIEW;

13 float4x4 LightWorldViewProjMatrix : WORLDVIEWPROJECTION < string Object = “Light”; >;

• float4x4: a matrix of 32-bit floating-point values with 4 rows and 4 columns.

• WorldViewProjMatrix: a matrix that can transform vertices in local coordinates to projective coordinates with the camera as the viewpoint in a single step.

• WorldMatrix: a matrix that can transform vertices in local coordinates into world coordinates.

• ViewMatrix: a matrix that can convert world coordinate vertices to view coordinates with the camera as the viewpoint.

• LightWorldViewProjMatrix: a matrix that can transform vertices in local coordinates to projective coordinates with the light as a viewpoint in a single step.

• Local coordinate system: coordinates to represent the positional relationship of vertices in the model.

• World coordinate: coordinates to show the positional relationship between models.

• View coordinate: coordinates to represent the positional relationship with the camera.

• Projection Coordinates: coordinates used to represent the depth in the camera. There are two types: perspective projection and orthographic projection.

• Perspective projection: distant objects are shown smaller and nearby objects are shown larger.

• Orthographic projection: the size of the image does not change with depth.
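The difference between the two projection types can be checked with a few numbers. The sketch below is plain Python and is not part of full.fx: the function names and the focal length are hypothetical, and it uses a simplified pinhole model rather than MMD's actual projection matrix.

```python
def perspective_project(point, focal_length=1.0):
    """Project a view-space point (x, y, z) with a simple pinhole model.

    The homogeneous w component is set to the depth z, so dividing by w
    shrinks x and y as the point moves away from the camera.
    """
    x, y, z = point
    w = z  # depth becomes the scaling factor w
    return (focal_length * x / w, focal_length * y / w)


def orthographic_project(point):
    """Project a view-space point by dropping z (w stays 1, no scaling)."""
    x, y, _z = point
    return (x, y)


near = (1.0, 1.0, 2.0)  # close to the camera
far = (1.0, 1.0, 8.0)   # same x/y offset, four times deeper

print(perspective_project(near), perspective_project(far))
print(orthographic_project(near), orthographic_project(far))
```

With the perspective version the far point lands closer to the screen center than the near one, while the orthographic version gives both points the same screen position.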

15 float3 LightDirection : DIRECTION < string Object = “Light”; >;

16 float3 CameraPosition : POSITION < string Object = “Camera”; >;

• LightDirection: light direction vector.

• CameraPosition: world coordinates of the camera.

18 // material color

19 float4 MaterialDiffuse : DIFFUSE < string Object = “Geometry”; >;

20 float3 MaterialAmbient : AMBIENT < string Object = “Geometry”; >;

21 float3 MaterialEmmisive : EMISSIVE < string Object = “Geometry”; >;

22 float3 MaterialSpecular : SPECULAR < string Object = “Geometry”; >;

23 float SpecularPower : SPECULARPOWER < string Object = “Geometry”; >;

24 float3 MaterialToon : TOONCOLOR;

25 float4 EdgeColor : EDGECOLOR;

• float3: no alpha value.

• MaterialDiffuse: diffuse light color of material, Diffuse+A (alpha value) in PMD.

• MaterialAmbient: ambient light color of the material; Diffuse of PMD?

• MaterialEmmisive: light emitting color of the material, Ambient in PMD.

• MaterialSpecular: specular light color of the material; PMD’s Specular.

• SpecularPower: specular strength. PMD Shininess.

• MaterialToon: shade toon color of the material, lower left corner of the one specified by the PMD toon texture.

• EdgeColor: outline color, as specified by MMD’s edge color.

26 // light color

27 float3 LightDiffuse : DIFFUSE < string Object = “Light”; >;

28 float3 LightAmbient : AMBIENT < string Object = “Light”; >;

29 float3 LightSpecular : SPECULAR < string Object = “Light”; >;

30 static float4 DiffuseColor = MaterialDiffuse * float4(LightDiffuse, 1.0f);

31 static float3 AmbientColor = saturate(MaterialAmbient * LightAmbient + MaterialEmmisive);

32 static float3 SpecularColor = MaterialSpecular * LightSpecular;

• LightDiffuse: black (float3(0,0,0))?

• LightAmbient: MMD lighting operation values.

• LightSpecular: MMD lighting operation values.

• DiffuseColor: black by multiplication in LightDiffuse?

• AmbientColor: PMD’s Diffuse color, made slightly stronger by MMD’s lighting operation values?

• SpecularColor: PMD’s Specular color, made slightly stronger by MMD’s lighting operation values?
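To see what lines 30-32 of the listing compute, here is a small CPU-side sketch in Python. It mirrors the three static declarations with tuples instead of float3/float4; the helper names and the sample values are made up for illustration and are not taken from MMD.

```python
def saturate(color):
    """Clamp every component to the 0-1 range, like HLSL saturate()."""
    return tuple(min(1.0, max(0.0, c)) for c in color)


def multiply(a, b):
    """Component-wise product of two colors."""
    return tuple(x * y for x, y in zip(a, b))


def combine_colors(material_diffuse, material_ambient, material_emissive,
                   material_specular, light_diffuse, light_ambient,
                   light_specular):
    # DiffuseColor = MaterialDiffuse * float4(LightDiffuse, 1.0f)
    diffuse = multiply(material_diffuse, light_diffuse + (1.0,))
    # AmbientColor = saturate(MaterialAmbient * LightAmbient + MaterialEmmisive)
    ambient = saturate(tuple(
        a + e for a, e in zip(multiply(material_ambient, light_ambient),
                              material_emissive)))
    # SpecularColor = MaterialSpecular * LightSpecular
    specular = multiply(material_specular, light_specular)
    return diffuse, ambient, specular


diffuse, ambient, specular = combine_colors(
    material_diffuse=(0.8, 0.6, 0.4, 1.0),
    material_ambient=(0.5, 0.5, 0.5),
    material_emissive=(1.0, 0.25, 0.0),
    material_specular=(1.0, 1.0, 1.0),
    light_diffuse=(0.0, 0.0, 0.0),   # assumed black, as the notes suspect
    light_ambient=(0.5, 0.5, 0.5),
    light_specular=(0.5, 0.5, 0.5),
)
print(diffuse, ambient, specular)
```

If LightDiffuse really is black, the RGB part of DiffuseColor is zeroed and only the material's alpha survives, which matches the suspicion in the notes above.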

34 bool parthf; // perspective flags

35 bool transp; // semi-transparent flag

36 bool spadd; // sphere map additive synthesis flag

37 #define SKII1 1500

38 #define SKII2 8000

39 #define Toon 3

• parthf: true for self-shadow distance setting mode2.

• transp: true when semi-transparent drawing is enabled.

• spadd: true in sphere file .spa.

• SKII1: a constant used in self-shadow mode1. The larger the value, the stranger the shadow looks; the smaller the value, the weaker the shadow.

• SKII2: a constant used in self-shadow mode2. If it is too large, the self-shadow looks strange; if it is too small, the shadow becomes too thin.

• Toon: weaken the shade in the direction of the light with a close range shade toon.

41 // object textures

42 texture ObjectTexture: MATERIALTEXTURE;

43 sampler ObjTexSampler = sampler_state {

44 texture = <ObjectTexture>;

45 MINFILTER = LINEAR;

46 MAGFILTER = LINEAR;

47 };

48

• ObjectTexture: texture set in the material.

• ObjTexSampler: setting the conditions for acquiring material textures.

• MINFILTER: the filtering applied when a texture is shrunk.

• MAGFILTER: the filtering applied when a texture is enlarged.

• LINEAR: use linear interpolation.

49 // sphere map textures

50 texture ObjectSphereMap: MATERIALSPHEREMAP;

51 sampler ObjSphareSampler = sampler_state {

52 texture = <ObjectSphereMap>;

53 MINFILTER = LINEAR;

54 MAGFILTER = LINEAR;

55 };

• ObjectSphereMap: sphere map texture set in the material.

• ObjSphareSampler: setting the conditions for obtaining a sphere map texture.

57 // this is a description to avoid overwriting the original MMD sampler. Cannot be deleted.

58 sampler MMDSamp0 : register(s0);

59 sampler MMDSamp1 : register(s1);

60 sampler MMDSamp2 : register(s2);

• register: assign shader variables to specific registers.

• s0: sampler type register 0.

4. OUTLINE DRAWING (輪郭描画)

Used for drawing model outlines; not applied to accessories.

65 // vertex shader

66 float4 ColorRender_VS(float4 Pos : POSITION) : POSITION

67 {

68 // world-view projection transformation of camera viewpoint.

69 return mul( Pos, WorldViewProjMatrix );

70 }

Return the vertex coordinates of the camera viewpoint after the world view projection transformation.

Parameters

• Pos: local coordinates of the vertex.

• POSITION (input): semantic indicating the vertex position in the object space.

• POSITION (output): semantic indicating the position of a vertex in a homogeneous space.

• mul (x,y): perform matrix multiplication of x and y.

Return value

Vertex coordinates in projective space; compute screen coordinate position by dividing by w.

• Semantics: communicating information about the intended use of parameters.

72 // pixel shader

73 float4 ColorRender_PS() : COLOR

74 {

75 // fill with outline color

76 return EdgeColor;

77 }

Returns the contour color of the corresponding input vertex.

Return value

Output color

• COLOR: output color semantic.

79 // contouring techniques

80 technique EdgeTec < string MMDPass = "edge"; > {

81 pass DrawEdge {

82 AlphaBlendEnable = FALSE;

83 AlphaTestEnable = FALSE;

84

85 VertexShader = compile vs_2_0 ColorRender_VS();

86 PixelShader = compile ps_2_0 ColorRender_PS();

87 }

88 }

Processing for contour drawing.

• MMDPASS: specify the drawing target to apply.

• “edge”: contours of the PMD model.

• AlphaBlendEnable: set the value to enable alpha blending transparency. Blend surface colors, materials, and textures with transparency information to overlay on another surface.

• AlphaTestEnable: per-pixel alpha test setting. If passed, the pixel will be processed by the framebuffer. Otherwise, all framebuffer processing of pixels will be skipped.

• VertexShader: shader variable representing the compiled vertex shader.

• PixelShader: shader variable representing the compiled pixel shader.

• vs_2_0: vertex shader profile for shader model 2.

• ps_2_0: pixel shader profile for shader model 2.

• Frame buffer: memory that holds the data for one frame until it is displayed on the screen.

5. NON-SELF-SHADOW SHADOW RENDERING (非セルフシャドウ影描画)

Drawing shadows falling on the ground in MMD, switching between showing and hiding them in MMD's ground shadow display.

94 // vertex shader

95 float4 Shadow_VS(float4 Pos : POSITION) : POSITION

96 {

97 // world-view projection transformation of camera viewpoint.

98 return mul( Pos, WorldViewProjMatrix );

99 }

Returns the vertex coordinates of the source vertex of the shadow display after the world-view projection transformation of the camera viewpoint.

Parameters

• Pos: local coordinates of the vertex from which the shadow will be displayed.

Return value

Vertex coordinates in projective space.

101 // pixel shader

102 float4 Shadow_PS() : COLOR

103 {

104 // fill with ambient color

105 return float4(AmbientColor.rgb, 0.65f);

106 }

Returns the shadow color to be drawn. The alpha value will be reflected when MMD's display shadow color transparency is enabled.

Return value

Output color

108 // techniques for shadow drawing

109 technique ShadowTec < string MMDPass = "shadow"; > {

110 pass DrawShadow {

111 VertexShader = compile vs_2_0 Shadow_VS();

112 PixelShader = compile ps_2_0 Shadow_PS();

113 }

114 }

Processing for non-self-shadow shadow drawing.

• “shadow”: simple ground shadow.

6. DRAWING OBJECTS WHEN SELF-SHADOW IS DISABLED (セルフシャドウ無効時オブジェクト描画)

Drawing objects when self-shadowing is disabled. Also used when editing model values.

120 struct VS_OUTPUT {

121 float4 Pos : POSITION; // projective transformation coordinates

122 float2 Tex : TEXCOORD1; // texture

123 float3 Normal : TEXCOORD2; // normal vector

124 float3 Eye : TEXCOORD3; // position relative to camera

125 float2 SpTex : TEXCOORD4; // sphere map texture coordinates

126 float4 Color : COLOR0; // diffuse color

127 };

A structure for passing multiple return values between shader stages. The final data to be passed must specify semantics.

Parameters

• Pos: stores the vertex position in projective coordinates, using the POSITION vertex shader output semantic (homogeneous-space coordinates).

• Tex: stores the UV coordinates of the vertex, using the TEXCOORD1 vertex shader output semantic.

• Normal: stores the vertex normal vector, using the TEXCOORD2 vertex shader output semantic.

• Eye: stores the eye vector (reversed?), using the TEXCOORD3 vertex shader output semantic.

• SpTex: stores the sphere map UV coordinates of the vertex, using the TEXCOORD4 vertex shader output semantic.

• Color: stores the diffuse light color of the vertex, using the COLOR0 vertex shader output semantic.

129 // vertex shader

130 VS_OUTPUT Basic_VS(float4 Pos : POSITION, float3 Normal : NORMAL, float2 Tex : TEXCOORD0, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon)

131 {

Converts local coordinates of vertices to projective coordinates and sets the values to pass to the pixel shader, returned in the VS_OUTPUT structure.

Parameters

• Pos: local coordinates of the vertex.

• Normal: normals in local coordinates of vertices.

• Tex: UV coordinates of the vertices.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of sphere map usage, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

• uniform: marks variables with data that are always constant during shader execution.

Return value

VS_OUTPUT, a structure passed to the pixel shader.

132 VS_OUTPUT Out = (VS_OUTPUT)0;

133

Initializes the structure members to 0. An error occurs if a returned member is left undefined.

134 // world-view projection transformation of camera viewpoint.

135 Out.Pos = mul( Pos, WorldViewProjMatrix );

136

Convert local coordinates of vertices to projective coordinates.

137 // position relative to camera

138 Out.Eye = CameraPosition - mul( Pos, WorldMatrix );

Calculates the inverse vector of the line of sight (the vector from the vertex toward the camera).

139 // vertex normal

140 Out.Normal = normalize( mul( Normal, (float3x3)WorldMatrix ) );

141

Compute normalized normal vectors in the vertex world space.

• normalize (x): normalize a floating-point vector based on x/length(x).

• length (x): returns the length of a floating-point number vector.

142 // Diffuse color + Ambient color calculation

143 Out.Color.rgb = AmbientColor;

144 if ( !useToon ) {

145 Out.Color.rgb += max(0,dot( Out.Normal, -LightDirection )) * DiffuseColor.rgb;

The inner product of the vertex normal and the inverse vector of the light gives the influence of the light (0-1); the diffuse light color scaled by that influence is added to the ambient light color. DiffuseColor is black because LightDiffuse is black, and AmbientColor is the diffuse light of the material. Confirmation required.

• dot (x,y): returns the inner product of the x and y vectors.

• max (x,y): choose the value of x or y, whichever is greater.

146 }

147 Out.Color.a = DiffuseColor.a;

148 Out.Color = saturate( Out.Color );

149

• saturate (x): clamps x to the range 0-1. Values below 0 become 0 and values above 1 become 1.

150 // texture coordinates

151 Out.Tex = Tex;

152

153 if ( useSphereMap ) {

154 // sphere map texture coordinates

155 float2 NormalWV = mul( Out.Normal, (float3x3)ViewMatrix );

X and Y coordinates of vertex normals in view space.

156 Out.SpTex.x = NormalWV.x * 0.5f + 0.5f;

157 Out.SpTex.y = NormalWV.y * -0.5f + 0.5f;

158 }

159

Converts the view-space x and y values of the vertex normal to texture coordinate values. A common idiom.

160 return Out;

161 }

Returns the structure that was filled in.
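The two calculations inside Basic_VS that are easiest to get wrong, the vertex color and the sphere map coordinates, can be tried out on the CPU. This is a Python sketch of the same arithmetic, not MMD or MME code; the function names are hypothetical and vectors are plain tuples.

```python
def dot(a, b):
    """Inner product of two vectors."""
    return sum(x * y for x, y in zip(a, b))


def saturate(x):
    """Clamp a scalar to the 0-1 range, like HLSL saturate()."""
    return min(1.0, max(0.0, x))


def vertex_color(normal, light_direction, ambient_rgb, diffuse_rgba, use_toon):
    """Mirror of lines 143-148: ambient plus light-weighted diffuse."""
    rgb = list(ambient_rgb)
    if not use_toon:
        to_light = tuple(-c for c in light_direction)
        influence = max(0.0, dot(normal, to_light))  # 0 when facing away
        rgb = [a + influence * d for a, d in zip(rgb, diffuse_rgba[:3])]
    return tuple(saturate(c) for c in rgb) + (saturate(diffuse_rgba[3]),)


def sphere_map_uv(normal_view_xy):
    """Mirror of lines 156-157: map view-space x/y from -1..1 to UV 0..1."""
    nx, ny = normal_view_xy
    return (nx * 0.5 + 0.5, ny * -0.5 + 0.5)  # y is flipped for textures


lit = vertex_color((0.0, 1.0, 0.0), (0.0, -1.0, 0.0),
                   (0.25, 0.25, 0.25), (0.5, 0.5, 0.5, 1.0), use_toon=False)
print(lit)
print(sphere_map_uv((0.0, 0.0)))
```

A normal pointing straight at the light receives the full diffuse contribution, and a normal pointing straight at the camera samples the center of the sphere map.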

163 // pixel shader

164 float4 Basic_PS(VS_OUTPUT IN, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon) : COLOR0

165 {

Specify the color of pixels to be displayed on the screen.

Parameters

• IN: VS_OUTPUT structure received from the vertex shader.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of using sphere map, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

Output value

Output color

166 // specular color calculation

167 float3 HalfVector = normalize( normalize(IN.Eye) + -LightDirection );

Find the half vector from the inverse vector of the line of sight and the inverse vector of the light.

• Half vector: the vector halfway between two vectors, obtained by adding them (and normalizing). Used instead of calculating the reflection vector.

168 float3 Specular = pow( max(0,dot( HalfVector, normalize(IN.Normal) )), SpecularPower ) * SpecularColor;

169

From the half vector and the vertex normal, find the influence of reflection. Raise the influence to the power of the specular strength, then multiply by the specular light color to get the specular.

• pow (x,y): raise x to the power y.

170 float4 Color = IN.Color;

171 if ( useTexture ) {

172 // apply texture

173 Color *= tex2D( ObjTexSampler, IN.Tex );

174 }

If a texture is set, extract the color of the texture coordinates and multiply it by the base color.

• tex2D (sampler, tex): extract the color of the tex coordinates from the 2D texture in the sampler settings.

175 if ( useSphereMap ) {

176 // apply sphere map

177 if(spadd) Color += tex2D(ObjSphareSampler,IN.SpTex);

178 else Color *= tex2D(ObjSphareSampler,IN.SpTex);

179 }

180

If a sphere map is set, extract the color of the sphere map texture coordinates and add it to the base color if it is an additive sphere map file, otherwise multiply it.

181 if ( useToon ) {

182 // toon application

183 float LightNormal = dot( IN.Normal, -LightDirection );

184 Color.rgb *= lerp(MaterialToon, float3(1,1,1), saturate(LightNormal * 16 + 0.5));

185 }

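In the case of a PMD model, determine the influence of the light from the normal vector of the vertex and the inverse vector of the light. saturate(LightNormal * 16 + 0.5) rescales the influence and clamps it to 0-1, so it changes sharply around the light/shadow boundary; the lower the influence, the more the base color is darkened toward the toon color.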

• lerp (x,y,s): linear interpolation based on x + s(y - x). 0=x, 1=y.

186

187 // specular application

188 Color.rgb += Specular;

189

190 return Color;

191 }

Add the obtained specular to the base color and return the output color.
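The specular and toon steps of Basic_PS can be reproduced with ordinary arithmetic. The following Python sketch follows lines 167-168 and 183-184 of the listing; it is only an illustration with hypothetical function names, and it assumes the eye and light vectors do not cancel each other out exactly (which would make the half vector zero).

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def saturate(x):
    return min(1.0, max(0.0, x))


def specular_influence(eye, normal, light_direction, specular_power):
    """Half-vector specular term, before multiplying by SpecularColor."""
    to_light = tuple(-c for c in light_direction)
    half_vector = normalize(
        tuple(e + l for e, l in zip(normalize(eye), to_light)))
    return max(0.0, dot(half_vector, normalize(normal))) ** specular_power


def toon_factor(normal, light_direction):
    """saturate(LightNormal * 16 + 0.5): 0 in shade, 1 in light."""
    light_normal = dot(normal, tuple(-c for c in light_direction))
    return saturate(light_normal * 16 + 0.5)


def toon_multiplier(material_toon, factor):
    """lerp(MaterialToon, float3(1,1,1), factor), per component."""
    return tuple(t + factor * (1.0 - t) for t in material_toon)


light = (0.0, 0.0, -1.0)  # light travelling toward -z
print(specular_influence((0.0, 0.0, 2.0), (0.0, 0.0, 1.0), light, 5))
print(toon_factor((1.0, 0.0, 0.0), light))
print(toon_multiplier((0.5, 0.5, 0.5), toon_factor((0.0, 0.0, -1.0), light)))
```

Because of the factor 16, the toon value leaves the 0-1 ramp as soon as the inner product moves about 0.03 away from zero, which is what produces the hard-edged toon shade.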

195 technique MainTec0 < string MMDPass = "object"; bool UseTexture = false; bool UseSphereMap = false; bool UseToon = false; > {

196 pass DrawObject {

197 VertexShader = compile vs_2_0 Basic_VS(false, false, false);

198 PixelShader = compile ps_2_0 Basic_PS(false, false, false);

199 }

200 }

Technique performed on a subset of accessories (materials) that don’t use texture or sphere maps when self-shadow is disabled.

• “object”: object when self-shadow is disabled.

• UseTexture: true for texture usage subset.

• UseSphereMap: true for sphere map usage subset.

• UseToon: true for PMD model.
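One way to picture how annotations pick a technique is a lookup over the technique list. The Python sketch below is a simplified model, not MME's implementation: it assumes that the first technique in file order whose annotations all match the material subset is used, and that an annotation a technique leaves out matches anything. The dictionaries only cover the techniques quoted in this document.

```python
# Annotation sets copied from the technique declarations quoted in this text.
TECHNIQUES = [
    {"name": "EdgeTec", "MMDPass": "edge"},
    {"name": "ShadowTec", "MMDPass": "shadow"},
    {"name": "MainTec0", "MMDPass": "object", "UseTexture": False,
     "UseSphereMap": False, "UseToon": False},
    {"name": "ZplotTec", "MMDPass": "zplot"},
    {"name": "MainTecBS0", "MMDPass": "object_ss", "UseTexture": False,
     "UseSphereMap": False, "UseToon": False},
]


def select_technique(techniques, subset):
    """Return the name of the first technique whose annotations all match.

    `subset` describes what is being drawn (pass name plus material flags).
    Annotations that a technique does not declare are treated as wildcards.
    """
    for technique in techniques:
        annotations = {k: v for k, v in technique.items() if k != "name"}
        if all(subset.get(key) == value for key, value in annotations.items()):
            return technique["name"]
    return None  # no technique in this (partial) list applies


accessory = {"MMDPass": "object", "UseTexture": False,
             "UseSphereMap": False, "UseToon": False}
print(select_technique(TECHNIQUES, accessory))
```

In this model an untextured accessory drawn without self-shadow resolves to MainTec0, while a textured subset finds no match here because the other MainTec variants of full.fx are not quoted in this document.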

7. Z-VALUE PLOT FOR SELF-SHADOW DETERMINATION (セルフシャドウ判定用Z値プロット)

Create a boundary value to be used for determining the self-shadow.

256 struct VS_ZValuePlot_OUTPUT {

257 float4 Pos : POSITION; // projective transformation coordinates

258 float4 ShadowMapTex : TEXCOORD0; // z-buffer texture

259 };

A structure for passing multiple return values between shader stages.

Parameters

• Pos: stores the position of a vertex in projective coordinates as a homogeneous spatial coordinate vertex shader output semantic.

• ShadowMapTex: stores texture coordinates, so that the hardware calculates interpolated z and w values, using the TEXCOORD0 vertex shader output semantic.

• w: scaling factor of the visual cone (which expands as you go deeper) in projective space.

261 // vertex shader

262 VS_ZValuePlot_OUTPUT ZValuePlot_VS( float4 Pos : POSITION )

263 {

264 VS_ZValuePlot_OUTPUT Out = (VS_ZValuePlot_OUTPUT)0;

265

266 // do a world-view projection transformation with the eyes of the light.

267 Out.Pos = mul( Pos, LightWorldViewProjMatrix );

268

Conversion of local coordinates of a vertex to projective coordinates with respect to a light.

269 // align texture coordinates to vertices.

270 Out.ShadowMapTex = Out.Pos;

271

272 return Out;

273 }

Assign to texture coordinates to let the hardware calculate z, w interpolation values for vertex coordinates, and return the structure.

275 // pixel shader

276 float4 ZValuePlot_PS( float4 ShadowMapTex : TEXCOORD0 ) : COLOR

277 {

278 // record z-values for R color components

279 return float4(ShadowMapTex.z/ShadowMapTex.w,0,0,1);

280 }

Divide the z-value in projective space by the magnification factor w, calculate the z-value in screen coordinates, assign to r-value and return (internal MMD processing?).

282 // techniques for Z-value mapping

283 technique ZplotTec < string MMDPass = "zplot"; > {

284 pass ZValuePlot {

285 AlphaBlendEnable = FALSE;

286 VertexShader = compile vs_2_0 ZValuePlot_VS();

287 PixelShader = compile ps_2_0 ZValuePlot_PS();

288 }

289 }

Technique to be performed when calculating the z-value for self-shadow determination.

• “zplot”: Z-value plot for self-shadow.

8. DRAWING OBJECTS IN SELF-SHADOWING (セルフシャドウ時オブジェクト描画)

Drawing an object with self-shadow.

295 // sampler for the shadow buffer. “register(s0)" because MMD uses s0

296 sampler DefSampler : register(s0);

297

Assigns sampler register 0 to DefSampler. It’s unclear when this is swapped with the MMDSamp0 declared earlier. Cannot be replaced.

298 struct BufferShadow_OUTPUT {

299 float4 Pos : POSITION; // projective transformation coordinates

300 float4 ZCalcTex : TEXCOORD0; // z value

301 float2 Tex : TEXCOORD1; // texture

302 float3 Normal : TEXCOORD2; // normal vector

303 float3 Eye : TEXCOORD3; // position relative to camera

304 float2 SpTex : TEXCOORD4; // sphere map texture coordinates

305 float4 Color : COLOR0; // diffuse color

306 };

VS_OUTPUT with ZCalcTex added.

• ZCalcTex: stores the texture coordinates for calculating the interpolation values of Z and w for vertices in screen coordinates as the 0 texture coordinate vertex shader output semantic.

308 // vertex shader

309 BufferShadow_OUTPUT BufferShadow_VS(float4 Pos : POSITION, float3 Normal : NORMAL, float2 Tex : TEXCOORD0, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon)

310 {

Converts local coordinates of vertices to projective coordinates. Set the value to pass to the pixel shader, returning the BufferShadow_OUTPUT structure.

Parameters

• Pos: local coordinates of the vertex.

• Normal: normals in local coordinates of vertices.

• Tex: UV coordinates of the vertices.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of sphere map usage, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

Return value

BufferShadow_OUTPUT.

311 BufferShadow_OUTPUT Out = (BufferShadow_OUTPUT)0;

312

Initializing the structure.

313 // world-view projection transformation of camera viewpoint.

314 Out.Pos = mul( Pos, WorldViewProjMatrix );

315

Convert local coordinates of vertices to projective coordinates.

316 // position relative to camera

317 Out.Eye = CameraPosition - mul( Pos, WorldMatrix );

Calculate the inverse vector of the line of sight.

318 // vertex normal

319 Out.Normal = normalize( mul( Normal, (float3x3)WorldMatrix ) );

Compute normalized normal vectors in the vertex world space.

320 // world View Projection Transformation with Light Perspective

321 Out.ZCalcTex = mul( Pos, LightWorldViewProjMatrix );

Convert local coordinates of vertices to projective coordinates with respect to the light, and let the hardware calculate z and w interpolation values.

323 // Diffuse color + Ambient color Calculation

324 Out.Color.rgb = AmbientColor;

325 if ( !useToon ) {

326 Out.Color.rgb += max(0,dot( Out.Normal, -LightDirection )) * DiffuseColor.rgb;

327 }

328 Out.Color.a = DiffuseColor.a;

329 Out.Color = saturate( Out.Color );

Set the base color. For accessories, add a diffuse color to the base color based on the light influence, and set each component to 0-1.

331 // texture coordinates

332 Out.Tex = Tex;

Assign the UV coordinates of the vertex as they are.

334 if ( useSphereMap ) {

335 // sphere map texture coordinates

336 float2 NormalWV = mul( Out.Normal, (float3x3)ViewMatrix );

Convert vertex normal vectors to x and y components in view space coordinates when using sphere maps.

337 Out.SpTex.x = NormalWV.x * 0.5f + 0.5f;

338 Out.SpTex.y = NormalWV.y * -0.5f + 0.5f;

339 }

340

341 return Out;

342 }

Converts view space coordinates to texture coordinates and returns the structure.

344 // pixel shader

345 float4 BufferShadow_PS(BufferShadow_OUTPUT IN, uniform bool useTexture, uniform bool useSphereMap, uniform bool useToon) : COLOR

346 {

Specify the color of pixels to be displayed on the screen.

Parameters

• IN: BufferShadow_OUTPUT structure received from vertex shader.

• useTexture: determination of texture usage, given by pass.

• useSphereMap: determination of sphere map usage, given by pass.

• useToon: determination of toon usage. Given by pass in the case of model data.

Output value

Output color

347 // specular color calculation

348 float3 HalfVector = normalize( normalize(IN.Eye) + -LightDirection );

349 float3 Specular = pow( max(0,dot( HalfVector, normalize(IN.Normal) )), SpecularPower ) * SpecularColor;

350

Same specular calculation as Basic_PS.

351 float4 Color = IN.Color;

352 float4 ShadowColor = float4(AmbientColor, Color.a); // shadow’s color

Base color and self-shadow base color.

353 if ( useTexture ) {

354 // apply texture

355 float4 TexColor = tex2D( ObjTexSampler, IN.Tex );

356 Color *= TexColor;

357 ShadowColor *= TexColor;

358 }

When using a texture, extract the color of the texture coordinates from the set texture and multiply it by the base color and self-shadow color respectively.

359 if ( useSphereMap ) {

360 // apply sphere map

361 float4 TexColor = tex2D(ObjSphareSampler,IN.SpTex);

362 if(spadd) {

363 Color += TexColor;

364 ShadowColor += TexColor;

365 } else {

366 Color *= TexColor;

367 ShadowColor *= TexColor;

368 }

369 }

As with Basic_PS, when using a sphere map, add or multiply the corresponding colors.

370 // specular application

371 Color.rgb += Specular;

372

Apply specular to the base color.

373 // convert to texture coordinates

374 IN.ZCalcTex /= IN.ZCalcTex.w;

Divide the light-space projective coordinates by the scaling factor w to convert them to screen coordinates.

375 float2 TransTexCoord;

376 TransTexCoord.x = (1.0f + IN.ZCalcTex.x)*0.5f;

377 TransTexCoord.y = (1.0f - IN.ZCalcTex.y)*0.5f;

378

Convert screen coordinates to texture coordinates.

379 if( any( saturate(TransTexCoord) != TransTexCoord ) ) {

380 // external shadow buffer

381 return Color;

Return the base color if the vertex coordinates aren’t in the 0-1 range of the texture coordinates.

382 } else {

383 float comp;

384 if(parthf) {

385 // self-shadow mode2

386 comp=1-saturate(max(IN.ZCalcTex.z-tex2D(DefSampler,TransTexCoord).r , 0.0f)*SKII2*TransTexCoord.y-0.3f);

In self-shadow mode2, take the Z value from the shadow buffer sampler and compare it with the Z value of the vertex; if the vertex Z is smaller, the point isn’t in shadow. If the difference is small (close to where the shadow begins), the shadow is heavily corrected. (Weaker correction toward the top of the screen?) The base color is corrected only weakly.

387 } else {

388 // self-shadow mode1

389 comp=1-saturate(max(IN.ZCalcTex.z-tex2D(DefSampler,TransTexCoord).r , 0.0f)*SKII1-0.3f);

390 }

Do the same for self-shadow mode1.

391 if ( useToon ) {

392 // toon application

393 comp = min(saturate(dot(IN.Normal,-LightDirection)*Toon),comp);

In the case of MMD models, compare the degree of influence of the shade caused by the light with the degree of influence caused by the self-shadow, and choose the smaller one as the degree of influence of the shadow.

• min (x,y): select the smaller value of x and y.

394 ShadowColor.rgb *= MaterialToon;

395 }

396

Multiply the self-shadow color by the toon shadow color.

397 float4 ans = lerp(ShadowColor, Color, comp);

Linearly interpolate between the self-shadow color and the base color depending on the influence of the shadow.

398 if( transp ) ans.a = 0.5f;

399 return ans;

400 }

401 }

If translucency is enabled, set the alpha of the display color to 50% and return the composite color.
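The shadow test at the end of BufferShadow_PS is mostly arithmetic, so it can be followed step by step outside the shader. The Python sketch below reproduces lines 374-389 with scalars in place of texture lookups: `buffer_z` stands in for the value read from DefSampler, and all function names are hypothetical.

```python
SKII1 = 1500  # mode1 constant from the listing
SKII2 = 8000  # mode2 constant from the listing


def saturate(x):
    return min(1.0, max(0.0, x))


def to_shadow_tex_coord(zcalc):
    """Divide by w, then map x/y from -1..1 to texture space 0..1."""
    x, y, z, w = zcalc
    x, y, z = x / w, y / w, z / w
    u = (1.0 + x) * 0.5
    v = (1.0 - y) * 0.5  # y is flipped, as in line 377
    return (u, v), z


def inside_shadow_buffer(uv):
    """Equivalent of !any(saturate(TransTexCoord) != TransTexCoord)."""
    return all(0.0 <= c <= 1.0 for c in uv)


def shadow_comp(vertex_z, buffer_z, v, mode2):
    """1.0 means fully lit (use Color), 0.0 fully shadowed (use ShadowColor)."""
    depth_gap = max(vertex_z - buffer_z, 0.0)
    if mode2:
        return 1.0 - saturate(depth_gap * SKII2 * v - 0.3)
    return 1.0 - saturate(depth_gap * SKII1 - 0.3)


uv, vertex_z = to_shadow_tex_coord((0.0, 0.0, 1.0, 2.0))
print(uv, vertex_z, inside_shadow_buffer(uv))
print(shadow_comp(vertex_z, buffer_z=0.5, v=uv[1], mode2=False))  # lit
print(shadow_comp(0.6, buffer_z=0.5, v=uv[1], mode2=False))       # shadowed
```

The subtraction of 0.3 means a very small depth gap still counts as lit, which appears to act as a bias against a surface shadowing itself; the final color is then lerp(ShadowColor, Color, comp).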

403 // techniques for drawing objects (for accessories)

404 technique MainTecBS0 < string MMDPass = "object_ss"; bool UseTexture = false; bool UseSphereMap = false; bool UseToon = false; > {

405 pass DrawObject {

406 VertexShader = compile vs_3_0 BufferShadow_VS(false, false, false);

407 PixelShader = compile ps_3_0 BufferShadow_PS(false, false, false);

408 }

409 }

Technique performed on a subset of accessories (materials) that don’t use a texture or sphere map during self-shadowing.

• “object_ss”: object when self-shadow is enabled.

• UseTexture: true for texture usage subset.

• UseSphereMap: true for sphere map usage subset.

• UseToon: true for PMD model.

9. FINAL NOTES

For further reading on HLSL coding, please visit Microsoft’s official English reference documentation.

Photo

Untitled {"colorSpace":"kCGColorSpaceDisplayP3","cameraType":"Wide","macroEnabled":true,"qualityMode":3,"deviceTilt":-0.022357137783170167,"customExposureMode":1,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":1} (via Flickr from Blogger via IFTTT

Photo

«» {"colorSpace":"kCGColorSpaceDisplayP3","cameraType":"Wide","macroEnabled":true,"qualityMode":3,"deviceTilt":-0.012154436837796823,"customExposureMode":0,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":0} (via Flickr https://flic.kr/p/2fKiSjb)

Text

Oyster Stew with Heavy Cream & Almond Milk

Oyster stew is a classic dish for Christmas Eve, but it is also a great comfort food dish that will get you through any cold evening. Fresh oysters are ideal for this creamy soup, but canned oysters can work almost as well.

Keys to Perfect Oyster Stew from Scratch

You can purchase oyster stew in a can from some grocers, but it really…

Text

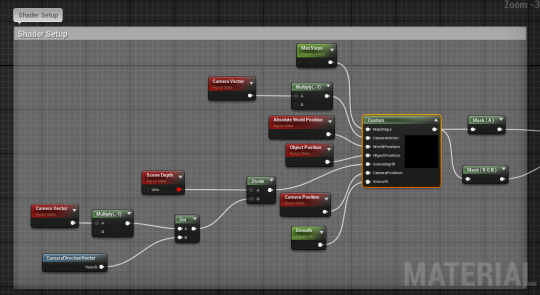

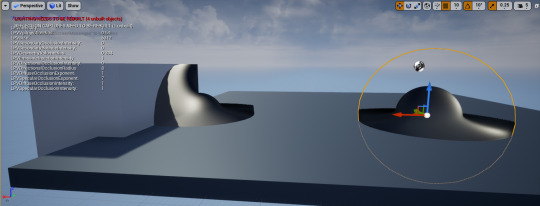

Raymarching Blop Shader - Material Editor

The main purpose of the material editor for our shader is to supply all the relevant values for our shader code. This includes:

Max Steps - Number of steps to iterate for

CameraVector - Vector Direction to calculate from

WorldPosition - Position in the world

ObjectPosition - Local position (both used for calculating distance)

SceneDepth - As we’re working in 3D

CameraPosition - The position of the ray start

Smooth - int for smoothing.

We then take the RGBA output and split it in two ways. First, we use the mask for opacity; without it the effect is broken, because the hidden shape volume becomes visible. Second, we use the normal map so we can manipulate how the material appears.
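The post does not show the shader code itself, so the following is only a rough Python sketch of how inputs like these are typically used in a raymarching (sphere tracing) loop. The two-sphere scene, the polynomial smooth minimum, and every function name are assumptions for illustration; only the roles of camera position, camera vector, max steps, and smooth come from the list above.

```python
import math


def sphere_sdf(point, center, radius):
    """Signed distance from a point to a sphere surface."""
    return math.dist(point, center) - radius


def smooth_min(a, b, k):
    """Polynomial smooth minimum; k > 0 controls how far shapes blend."""
    h = max(k - abs(a - b), 0.0) / k
    return min(a, b) - h * h * k * 0.25


def scene_sdf(point, smooth):
    """Two overlapping spheres merged into one blob (made-up scene)."""
    d1 = sphere_sdf(point, (-0.6, 0.0, 0.0), 1.0)
    d2 = sphere_sdf(point, (0.6, 0.0, 0.0), 1.0)
    return smooth_min(d1, d2, smooth)


def raymarch(camera_position, camera_vector, max_steps, smooth,
             hit_epsilon=1e-3, max_distance=100.0):
    """Step along the ray by the scene distance until a surface is hit.

    Returns the distance travelled to the hit, or None if nothing was hit.
    """
    travelled = 0.0
    for _ in range(max_steps):
        point = tuple(o + travelled * d
                      for o, d in zip(camera_position, camera_vector))
        distance = scene_sdf(point, smooth)
        if distance < hit_epsilon:
            return travelled  # close enough to the surface: opaque pixel
        travelled += distance
        if travelled > max_distance:
            break
    return None  # ray missed: this is where the opacity mask would be 0


print(raymarch((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), max_steps=64, smooth=0.5))
print(raymarch((0.0, 0.0, -5.0), (0.0, 1.0, 0.0), max_steps=64, smooth=0.5))
```

A ray that returns None corresponds to a pixel the opacity mask should hide, which is why the effect breaks without the mask.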

Photo

by WIERCIPIETAS {"cameraType":"Dual","macroEnabled":false,"qualityMode":2,"deviceTilt":-0.094897083938121796,"customExposureMode":0,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":0} https://flic.kr/p/2jU2AsY

0 notes

Note

Hey Hopoo Team, big fan of the first RoR and DEADBOLT, found out about this DevBlog by chance and naturally binged through all the Updates. I am super excited. As I looked at all the little sneak peeks I was wondering about the cameraposition of the player. As seen in most Screenshots, the perspective is 'Above-the-Head', will it be possible to change that to a more 'Over-The-Shoulder' kind of view as an option?

Hi! Been gettin’ that a lot - on a technical level, the camera position is simply a vector3 and can be easily adjusted. From a design standpoint, though, a game’s entire gameplay is really based around its camera position. We’ve gotten a lot of requests for over-the-shoulder. Our logic for going directly behind is:

-The camera has to be zoomed out enough to see your feet for platforming sections. Most 3D platformers have their camera centered or in a fixed position to help aid jumps.

-Risk of Rain has a lot of spherical/orbital auras around your player. It’s important that you can see the edge of the sphere; if it’s offset, it can be disorienting, and the radius also has to be smaller than or equal to the offset so it still remains in the camera frame.

-You get swarmed a lot, and a bias to one side can make certain directions of attack deadlier than others.

-Obscuring the attack path from the player to the cursor is usually not too big of an issue because of the scale of the enemies.

However... this is obviously our first 3D game, so our logic could be totally wrong. Tell us if this makes sense - we try over the shoulder semi frequently, but the things above always cause us issues.
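As a rough illustration of the "camera position is simply a vector3" point, the sketch below computes a camera position as the player position plus an offset. It is not the game's code: the `Vector3` class, the axis convention and both offset values are hypothetical, and player rotation is ignored to keep the example short.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vector3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vector3") -> "Vector3":
        return Vector3(self.x + other.x, self.y + other.y, self.z + other.z)


# Hypothetical offsets (x = right, y = up, z = forward), not values from the game.
BEHIND = Vector3(0.0, 2.0, -6.0)             # centered, directly behind the player
OVER_THE_SHOULDER = Vector3(1.0, 1.5, -3.0)  # shifted right and pulled in closer


def camera_position(player: Vector3, offset: Vector3) -> Vector3:
    """Place the camera at a fixed offset from the player."""
    return player + offset


player = Vector3(10.0, 0.0, 5.0)
print(camera_position(player, BEHIND))
print(camera_position(player, OVER_THE_SHOULDER))
```

Switching views only changes the offset, which is why the adjustment is technically easy; the design concerns in the list above come from the sideways (x) component that an over-the-shoulder offset introduces.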

62 notes

Photo

Relationships are precious... When I invited this elderly woman to be photographed with her friend, she only smiled and politely declined, slowly stepped away, and said just this: “Take their picture instead,” with a glad smile. It made me wonder why... Perhaps it was shyness, perhaps something else; whatever the reason, I didn’t press or insist. I thanked her and came away with that woman’s smile... . . . Nikon FM2 : Fuji Acros 100 | Nikkor 50mm 1.8 AI Film Develop : Adox Borax MQ 1:1 13:30 Mins. Process and Scan : Positive Lab+ by neno camera . . . #street #roadside #ishootfilm #photography #filmphotography #filmdeveloping #filmisnotdead #film #filmcamera #cat #mammal #people #woman #bangkok #thailand #35mmfilm #50mm #nikon #nikkor #nikonfm2 #walking #fujifilm #fujiacros100

#nikon#roadside#35mmfilm#filmcamera#bangkok#filmdeveloping#filmphotography#50mm#street#woman#filmisnotdead#people#fujiacros100#ishootfilm#nikkor#fujifilm#film#thailand#cat#walking#mammal#photography#nikonfm2

1 note

Text

HOLLY WILSON / THEY ARE STORIES THAT NEVER END

{"cameraType":"Wide","macroEnabled":false,"qualityMode":3,"deviceTilt":0.021211548647608325,"customExposureMode":1,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":1}

The artist, when aware of their role as a creator, needs to conceive forms that symbolize their world and their experiences, and that in turn become a visual space for everyone.

In the…

0 notes

Text

{"cameraType":"Wide","macroEnabled":false,"qualityMode":3,"deviceTilt":0.017500650137662888,"customExposureMode":0,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":0}

{"cameraType":"Wide","macroEnabled":false,"qualityMode":3,"deviceTilt":0.026483569294214249,"customExposureMode":0,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":0}

Believe it or not, a lot of progress has been made in the last week. The construction tent is gone and we can use our garage. The wall in front of the house is complete and the work table is gone. Now we have a blank canvas to work with.

{"cameraType":"Wide","macroEnabled":false,"qualityMode":3,"deviceTilt":0.055966842919588089,"customExposureMode":0,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":0}

{"cameraType":"Wide","macroEnabled":false,"qualityMode":3,"deviceTilt":-0.06119193509221077,"customExposureMode":1,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":1}

{"cameraType":"Wide","macroEnabled":false,"qualityMode":3,"deviceTilt":0.039791803807020187,"customExposureMode":1,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":1}

{"cameraType":"Wide","macroEnabled":false,"qualityMode":3,"deviceTilt":0.020478697493672371,"customExposureMode":1,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":1}

The Meyer lemon buds are blossoming and smell divine! Fern shoots in different stages are unfolding. During the week, I was in Maui so I missed the first magnolia bloom, BUT this one:

Phenology – Volcano 3

0 notes

Photo

The solitude of the solitary man

{"colorSpace":"kCGColorSpaceSRGB","cameraType":"Wide","macroEnabled":false,"qualityMode":3,"deviceTilt":0.015232167206704617,"customExposureMode":0,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":0}

Stock available from 500px at http://bit.ly/2lez0jc

0 notes

Photo

Untitled {"colorSpace":"kCGColorSpaceDisplayP3","cameraType":"Wide","macroEnabled":true,"qualityMode":3,"deviceTilt":-0.04757578849637234,"customExposureMode":1,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":1} (via Flickr from Blogger via IFTTT

0 notes

Photo

«» {"colorSpace":"kCGColorSpaceDisplayP3","cameraType":"Wide","macroEnabled":true,"qualityMode":3,"deviceTilt":-0.012697730226091331,"customExposureMode":0,"extendedExposure":false,"whiteBalanceProgram":0,"cameraPosition":1,"focusMode":0} (via Flickr https://flic.kr/p/2gnpizE)

0 notes

Text

Plum Sauce for Fish Dinners

This is an easy 5-minute plum sauce that pairs wonderfully with many different types of fish (and other proteins). The bite of the plums is a wonderful addition to cod, tuna, mahi mahi or salmon. This recipe is for pan-fried fish but the plum sauce would work with grilled, baked or even poached fish.

Mahi mahi filets with a plum sauce

Plum Sauce Variations for Other Proteins

Chinese fish…

0 notes