#azure data training


SQL Server deadlocks are a common phenomenon, particularly in multi-user environments where concurrency is essential. Let's Explore:

https://madesimplemssql.com/deadlocks-in-sql-server/

Please follow on FB: https://www.facebook.com/profile.php?id=100091338502392

#technews#microsoft#sqlite#sqlserver#database#sql#tumblr milestone#vpn#powerbi#data#madesimplemssql#datascience#data scientist#datascraping#data analytics#dataanalytics#data analysis#dataannotation#dataanalystcourseinbangalore#data analyst training#microsoft azure


Navigating the Data Landscape: A Deep Dive into ScholarNest's Corporate Training

In the ever-evolving realm of data, mastering the intricacies of data engineering and PySpark is paramount for professionals seeking a competitive edge. ScholarNest's Corporate Training offers an immersive experience, providing a deep dive into the dynamic world of data engineering and PySpark.

Unlocking Data Engineering Excellence

Embark on a journey to become a proficient data engineer with ScholarNest's specialized courses. Our Data Engineering Certification program is meticulously crafted to equip you with the skills needed to design, build, and maintain scalable data systems. From understanding data architecture to implementing robust solutions, our curriculum covers the entire spectrum of data engineering.

Pioneering PySpark Proficiency

Navigate the complexities of data processing with PySpark, the Python API for Apache Spark. ScholarNest's PySpark course, hailed as one of the best online, caters to both beginners and advanced learners. Explore the full potential of PySpark through hands-on projects, gaining practical insights that can be applied directly in real-world scenarios.

Azure Databricks Mastery

As part of our commitment to offering the best, our courses delve into Azure Databricks learning. Azure Databricks, seamlessly integrated with Azure services, is a pivotal tool in the modern data landscape. ScholarNest ensures that you not only understand its functionalities but also leverage it effectively to solve complex data challenges.

Tailored for Corporate Success

ScholarNest's Corporate Training goes beyond generic courses. We tailor our programs to meet the specific needs of corporate environments, ensuring that the skills acquired align with industry demands. Whether you are aiming for data engineering excellence or mastering PySpark, our courses provide a roadmap for success.

Why Choose ScholarNest?

Best PySpark Course Online: Our PySpark courses are recognized for their quality and depth.

Expert Instructors: Learn from industry professionals with hands-on experience.

Comprehensive Curriculum: Covering everything from fundamentals to advanced techniques.

Real-world Application: Practical projects and case studies for hands-on experience.

Flexibility: Choose courses that suit your level, from beginner to advanced.

Navigate the data landscape with confidence through ScholarNest's Corporate Training. Enrol now to embark on a learning journey that not only enhances your skills but also propels your career forward in the rapidly evolving field of data engineering and PySpark.

#data engineering#pyspark#databricks#azure data engineer training#apache spark#databricks cloud#big data#dataanalytics#data engineer#pyspark course#databricks course training#pyspark training


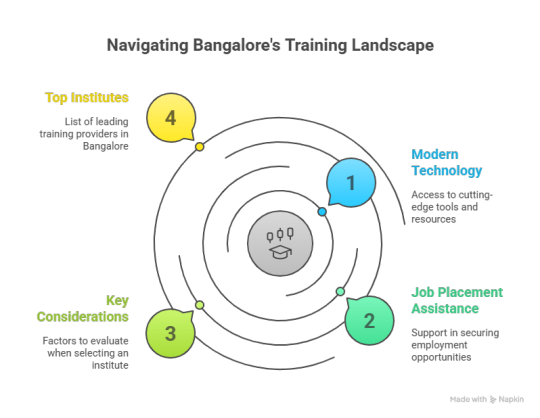

Top Training And Placement Institutes in Bangalore

Bangalore, commonly referred to as "the Silicon Valley of India", is home to numerous training and placement institutes. These institutes train students and professionals in modern technologies and help them secure well-paid jobs at top companies.

This article discusses why choosing the right training and placement institute is vital, the main factors to consider, and our list of top institutes in Bangalore, including Kodestree Technologies. We use simple English and a plain style to help you make the right decision for your career.

Why Training and Placement Institutes Matter

In today's rapidly changing IT world, possessing the required skills isn't enough. You also need guidance, hands-on experience, and placement support to get into the top firms. Training and placement institutes offer:

Structured Learning Paths: Institutes design courses that cover fundamentals to advanced topics, ensuring you build skills step by step.

Industry-Relevant Projects: Real-world projects help you apply theory in practice and prepare you for job challenges.

Placement Support: Dedicated placement teams connect you with recruiters, conduct mock interviews, and help you craft strong resumes.

Certification: Recognized certificates add credibility to your resume and boost your chances of landing interviews.

Peer Learning & Networking: Interacting with classmates and alumni helps you learn from others and expand your professional network.

Choosing a reputed institute can make a significant difference in your job search and career growth.

How to Choose the Right Institute

Before you enrol, consider these key factors:

Course Curriculum: Look for up-to-date content that covers the latest tools and technologies. For example, if you want a Data Science Course in Bangalore, ensure it includes Python, machine learning, and data visualization.

Faculty Experience: Instructors with real industry experience can offer practical insights beyond textbooks. Ask about faculty profiles and their project backgrounds.

Hands-On Training: Theory without practice won’t help you in interviews. Check if the institute offers lab sessions, capstone projects, and live assignments.

Placement Record: A strong placement history shows that past students have successfully entered good companies. Look for placement percentages, average salary packages, and recruiter partnerships.

Student Reviews & Testimonials: Read honest feedback from alumni about the learning environment, support quality, and job assistance. Authentic reviews help you gauge institute strengths and weaknesses.

By evaluating these elements, you can choose an institute that aligns with your career goals and learning style.

Top 5 Training and Placement Institutes in Bangalore

Below are our recommendations for the top training and placement institutions in Bangalore. Each institution has its own strengths, courses and support for placement. We narrow our list down to five names that stand out to make it easier for you to choose.

1. Kodestree Technologies

Kodestree Technologies stands out as one of the best software training institutes in Bangalore. Here’s why:

Comprehensive Bootcamps: Kodestree offers a range of bootcamps, including the Azure Master Program Bootcamp, Data Science Bootcamp, and Python Full Stack Development Bootcamp. Each program is designed to cover fundamentals, advanced topics, and real-world projects.

Expert Mentors: Industry professionals lead sessions, sharing insights from their work in MNCs. This ensures that you learn not only theory but also best practices used in top companies.

Placement Assistance: Kodestree’s dedicated placement cell conducts regular mock interviews and resume workshops, and connects students with hiring partners. Their track record shows over 90% placement success and average packages of 6–10 LPA.

Hands-On Projects: You’ll work on live projects such as end-to-end web applications, DevOps pipelines, and data analysis tasks. These projects become part of your portfolio to showcase to recruiters.

Flexible Learning: With both weekday and weekend batches, plus online training options, Kodestree caters to working professionals and freshers alike.

Student Success Story: “I enrolled in the Data Science Bootcamp at Kodestree and secured a role as a Data Analyst within three months. The practical projects and expert guidance made all the difference.” – Ananya R., Alumni

Learn more about Kodestree’s courses and upcoming batches on their website. Book a demo Session - Call Now +91 72046 14489.

2. NIIT

NIIT is a well-known name among IT training institutes in Bangalore, with over 35 years of experience. It offers:

Diverse Course Portfolio: NIIT provides courses in Data Science, Cloud Computing, DevOps, and more. Their Post Graduate Program in Cloud Computing is popular among graduates.

Global Partnerships: Collaborations with IBM, Microsoft, and AWS ensure that course content stays current and aligned with industry needs.

State-of-the-Art Labs: NIIT’s training centres feature modern labs and virtual environments for hands-on practice.

Placement Support: The NIIT placement cell hosts job fairs, campus drives, and partner meets to connect students with top recruiters.

Certification & Accreditation: Courses come with placement guarantee options and recognized certificates that enhance your resume.

With multiple branches across Bangalore, NIIT makes learning accessible for students in various localities.

3. FITA Academy

FITA Academy is another leading software training institute known for:

Customized Training: FITA offers tailored programs in Full Stack Development, DevOps, and Data Science, with the flexibility to focus on specific tools like Azure DevOps or AWS DevOps.

Practical Workshops: Regular hackathons, code clinics, and group projects help reinforce learning and build teamwork skills.

Industry Projects: Partnerships with startups and MNCs provide real-time projects, enabling students to work on live applications.

Interview Prep: FITA’s mock interview sessions, group discussions, and aptitude tests prepare you for placement drives.

Flexible Schedules: Weekday, weekend, and fast-track batches give you the freedom to choose a schedule that suits you.

FITA Academy’s focus on job-oriented software training makes it a popular choice among fresh graduates and professionals.

4. Aptron Solutions

Aptron Solutions has carved a niche as a reliable IT training institute in Bangalore:

Wide Range of Courses: From Cyber Security and Ethical Hacking to Cloud Computing and DevOps, Aptron covers in-demand technologies.

Certified Trainers: Trainers hold certifications like CISSP, Azure DevOps Engineer, and AWS Certified Solutions Architect.

Live Project Experience: Students work on live network setups, security audits, and deployment pipelines to gain hands-on skills.

Placement Outreach: Aptron’s placement team works with over 100 hiring partners, hosting on-campus and off-campus drives.

Student Support: 24×7 lab access and doubt-clearing sessions ensure you stay on track throughout the course.

Aptron’s emphasis on cloud training courses in Bangalore attracts students looking to specialize in cloud and security domains.

5. Besant Technologies

Besant Technologies is known for practical, project-based learning in:

Full Stack Development: Covers front-end frameworks, back-end APIs, and database management.

DevOps & Cloud: Courses include Azure DevOps Training Online, AWS DevOps, and Docker–Kubernetes integration.

Data Science & AI: Besant’s data science program includes Python, R, machine learning algorithms, and data visualization.

Placement Services: Besant conducts resume workshops, technical tests, and soft-skill training to prepare candidates for interviews.

Community Learning: Active alumni network and student forums encourage knowledge sharing and networking.

With flexible batches and online options, Besant Technologies caters to a wide range of learners.

Conclusion & Next Steps

Choosing the right training and placement institute in Bangalore can set you on a path to a successful IT career. Consider factors like course content, faculty expertise, hands-on training, and placement support before making your decision.

Among the top names, Kodestree Technologies stands out for its comprehensive bootcamps, expert mentors, and excellent placement record. Whether you aim to master DevOps, Data Science, Full Stack Development, or Cloud Computing, Kodestree has a program designed for you.

Ready to take the next step? Visit Kodestree Technologies today to view upcoming batches, request a demo, and start building your dream IT career in Bangalore.

#Top Training And Placement Institutes In Bangalore#Software Training Institute in Bangalore#Best Software Training Institute#IT Training Institute in Bangalore#Software Courses with Placement#Best Software Courses in Bangalore#Software Development Course in Bangalore#Full Stack Developer Course in Bangalore#Job Oriented Software Training#Data Science Course in Bangalore#Azure DevOps Training Online#Azure DevOps Certification#Learn Azure DevOps#Azure Cloud DevOps#Cloud Computing Courses in Bangalore#Training Institutes for Data Science#DevOps Training Institute in Bangalore.


The Different Aspects of the Self-Taught Process and Formal Courses to Learn Linux Management

In the expanding world of IT, Linux is a critical skill that underpins everything from web servers to enterprise systems. Whether you are a professional or a beginner, understanding Linux management can open up many career opportunities.

However, the question is whether you should go for self-learning or enrol in a formal course. Around 39.2% of websites are reported to be powered by Linux, so to stand out you need a thorough grasp of Linux practices. This is why many learners are going for Linux Management Training in Ahmedabad.

Let us further learn the different aspects of self-taught and formal course features for learning Linux management.

The Flexibility of Self-taught Approach

Learning Linux management on your own can be a flexible and cost-effective way to strengthen your skills, but it can also be challenging. You can find a number of resources, such as YouTube, community forums, and online blogs, to learn from. Some of the benefits are listed below:

You can get a series of cost-efficient resources.

You can learn the process at your own pace without any restrictions.

This approach can be reliable for individuals with prior IT knowledge.

Disadvantages of the self-taught approach

Here, you can go through certain disadvantages of a self-taught approach.

Self-study by itself does not give you a recognised credential such as a Red Hat Certification in Ahmedabad, so you may lack formal recognition.

Without expert guidance, you don’t get a clear structure for what to learn and in what order.

You may lack knowledge in troubleshooting complicated issues, so solving them without expert help can be time-consuming.

This approach mainly suits IT professionals who want to upgrade their knowledge in their free time. However, it often falls short on depth and clarity.

Structured Learning through Formal Courses

If you enrol in formal Linux management training in Ahmedabad, you get a well-structured curriculum. You also get better access to industry experts and hands-on experience, along with certifications that are recognised globally. Professional training can give you specialised knowledge and practical skills to solve real-world issues. This route suits candidates who want to build a strong foundation, gain access to knowledgeable mentors, and earn globally recognised credentials.

You can get a structured learning path.

Get acquainted with practical and hands-on training.

Avail better career opportunities with recognised certifications.

If you want a high-paying job or want to be a certified Linux professional, formal training can be a suitable option for you. Both the self-taught and formal routes can work, but the formal one is the stronger choice if career growth is your goal. Rely on an experienced, professional provider, such as Highsky IT Solutions, to get the most value. Learn to tackle real-world IT challenges and perform exceptionally in the market.

#red hat certification ahmedabad#linux certification ahmedabad#linux online courses in ahmedabad#data science training ahmedabad#red hat training ahmedabad#aws security training ahmedabad#rhce rhcsa training ahmedabad#docker training ahmedabad#microsoft azure cloud certification


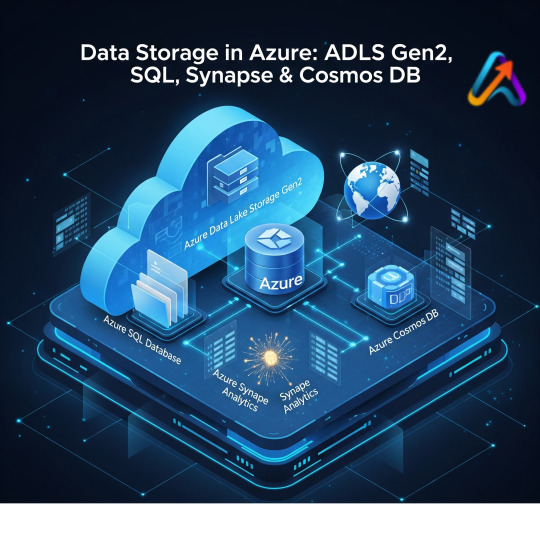

Azure Data Engineer Online Training | Azure Data Engineer Online Course

Master Azure Data Engineering with AccentFuture. Get hands-on training in data pipelines, ETL, and analytics on Azure. Learn online at your pace with real-world projects and expert guidance.

🚀 Enroll Now: https://www.accentfuture.com/enquiry-form/

📞 Call Us: +91–9640001789

📧 Email Us: [email protected]

🌐 Visit Us: AccentFuture

#Azure data engineer course#Azure data engineer course online#Azure data engineer online training#Azure data engineer training


Azure Data Certifications and Training- NOVA SQL User Group - July 24, 1 Hour

Azure Data Certifications and Training - NOVA SQL User Group – July 24, 1 Hour – Timothy McAliley.


Your Complete Guide to Azure Data Engineering: Skills, Certification & Training

Introduction

Why Azure Data Engineering Matters

Today, as we live in the big data and cloud computing era, Azure Data Engineering is considered one of the most sought-after skills around the world. If you want to get a high-paying job in technology or enhance your data toolbox, learning Azure data services can put you ahead of the competition in today's IT world. This guide will provide you with an insight into what Azure Data Engineering is, why certification is important, and how good training can kick off your data career.

What is Azure Data Engineering?

Azure Data Engineering is focused on designing, building, and maintaining elastic data pipelines and data storage arrangements using Microsoft Azure. It involves:

Building data solutions with tools like Azure Data Factory and Azure Synapse Analytics

Building ETL (Extract, Transform, Load) data workflows for big data processing

Synchronizing cloud data infrastructure efficiently

Enabling data analytics and business intelligence using tools like Power BI

A certified Azure Data Engineer helps businesses transform raw data into useful insights.

Benefits of Obtaining Azure Data Engineer Certification

Becoming a certified Azure Data Engineer isn't just about a credential; it's a career enhancer. Here's why:

Confirms your technical know-how in real Azure environments

Enhances your hiring prospects with employers and clients

Opens up global opportunities and enhanced salary offers

Keeps you up to date with Microsoft Azure's evolving ecosystem

Starting with Azure Data Engineer Training

To become a successful Azure Data Engineer, proper training is required. Seek an Azure Data Engineer training program that offers:

• In-depth modules on Azure Data Factory, Azure Synapse, Azure Databricks

• Hands-on labs and live data pipeline projects

• Integration with Power BI for end-to-end data flow

• Mock exams, doubt-clearing sessions, and job interview preparation

By the time you finish your course, you should be prepared to take the Azure Data Engineer certification exam.

Azure Data Engineering Trends

The world is evolving quickly. Some of the top trends in 2025 include:

Massive shift to cloud-native data platforms across industries

Integration of AI and ML models within Azure pipelines

Increased demand for automation and data orchestration skills

Heightened need for certified professionals who can offer insights at scale

Why Global Teq for Azure Data Engineer Training?

In your pursuit of a career in Azure Data Engineering, Global Teq is your partner in learning. Here's why:

Expert Trainers – Get trained by actual Azure industry experts

Industry-Ready Curriculum – Theory, practice, and project experience

Flexible Learning Modes – Online learning at your own pace

Career Support – Resume guidance, mock interviews & placement assistance

Low Cost – Affordable quality training

Thousands of students have built their careers with Global Teq. Join the crowd and unlock your potential as a certified Azure Data Engineer!

Leap into a Data-Driven Career

Becoming a certified Azure Data Engineer is not only a career shift; it's an investment in your future. With the right training and certification, you can pursue top jobs in cloud computing, data architecture, and analytics. Whether you're new to the industry or upskilling, Global Teq gives you the edge you require.

Start your Azure Data Engineering profession today with Global Teq. Sign up now and become a cloud data leader!

#Azure#azure data engineer course online#Azure data engineer certification#Azure data engineer course#Azure data engineer training#Azure certification data engineer


Master Playwright Test Automation with Expert-Led Online Training


#AWS Training#Azure#DevOps Training#Data Science#MSBI#Data Analytics Training#Cyber Securit#playwrite


Why Azure Data Engineer Certification Is a Game-Changer in 2025

In today’s data-driven world, businesses rely on skilled professionals to manage, process, and analyze massive volumes of data. The Azure Data Engineer Certification has emerged as a must-have credential for professionals aiming to excel in this dynamic field. As we step into 2025, this certification is proving to be a game-changer, opening doors to high-demand roles, competitive salaries, and cutting-edge career opportunities. Whether you're a beginner or a seasoned IT professional, enrolling in an Azure data engineer course can set you apart in the competitive tech landscape. In this blog post, we’ll explore why the Azure certification data engineer is a smart investment and how Global Teq can help you achieve it.

What Is the Azure Data Engineer Certification?

The Azure Data Engineer Associate Certification (Microsoft Certified: Azure Data Engineer Associate) validates your ability to design and implement data solutions using Microsoft Azure’s cloud platform. It focuses on critical skills like data storage, processing, security, and analytics, making it highly relevant for today’s data-centric industries. To earn this certification, you need to pass the DP-203: Data Engineering on Microsoft Azure exam, which tests your expertise in Azure tools like Azure Data Factory, Azure Synapse Analytics, and Azure Databricks.

This certification is ideal for:

Aspiring data engineers

IT professionals transitioning to cloud-based roles

Data analysts or developers looking to upskill

Why Is Azure Data Engineer Certification in Demand in 2025?

The demand for data engineers is skyrocketing as organizations increasingly adopt cloud solutions to manage their data. Here’s why the Azure data engineer certification is a game-changer in 2025:

1. Growing Adoption of Azure Cloud

Microsoft Azure is one of the leading cloud platforms, competing closely with AWS and Google Cloud. In 2025, more businesses are migrating their data operations to Azure due to its scalability, security, and integration capabilities. Certified Azure data engineers are in high demand to design and manage these cloud-based data pipelines.

2. Lucrative Career Opportunities

Data engineering roles are among the highest-paying in tech. According to industry reports, Azure data engineers in the U.S. can earn between $100,000 and $150,000 annually, with senior roles commanding even higher salaries. The certification signals to employers that you have the skills to deliver real-world data solutions.

3. Versatility Across Industries

From healthcare to finance to retail, every sector needs data engineers to transform raw data into actionable insights. The Azure data engineer training equips you with skills to work on diverse projects, such as:

Building data pipelines for real-time analytics

Optimizing data storage for cost efficiency

Ensuring data security and compliance

4. Future-Proofing Your Career

As AI, machine learning, and big data technologies evolve, Azure’s tools are at the forefront of innovation. Earning the Azure certification data engineer ensures you stay relevant in a rapidly changing tech landscape.

Real-World Applications of Azure Data Engineering Skills

The skills you gain from an Azure data engineer course are directly applicable to real-world challenges. Here are some examples:

Data Integration: Use Azure Data Factory to connect disparate data sources, enabling seamless data flow for business intelligence.

Big Data Processing: Leverage Azure Databricks to process massive datasets for machine learning models or predictive analytics.

Real-Time Analytics: Build streaming data pipelines with Azure Stream Analytics to support real-time decision-making, such as fraud detection in banking.

Data Governance: Implement governance and security measures using Microsoft Purview (formerly Azure Purview) to support compliance with regulations like GDPR or HIPAA.

These applications make Azure data engineers indispensable to organizations aiming to harness the power of their data.

Why Choose Global Teq for Azure Data Engineer Training?

When it comes to preparing for the Azure data engineer certification, choosing the right training provider is critical. Global Teq stands out as a trusted partner for aspiring data engineers. Here’s why:

Expert Instructors: Learn from industry professionals with hands-on experience in Azure data engineering.

Comprehensive Curriculum: Global Teq’s Azure data engineer training covers all DP-203 exam topics, including data storage, processing, and security.

Hands-On Labs: Gain practical experience through real-world projects and Azure simulations.

Flexible Learning Options: Choose from online, self-paced, or instructor-led courses to fit your schedule.

Career Support: Get guidance on resume building, interview preparation, and job placement to kickstart your career.

With Global Teq, you’re not just preparing for an exam—you’re building a foundation for long-term success in data engineering.

How to Get Started with Azure Data Engineer Certification

Ready to take the leap? Here’s a step-by-step guide to earning your Azure certification data engineer:

Understand the Exam: Review the DP-203 exam objectives on Microsoft’s official website.

Enroll in Training: Join a reputable Azure data engineer course like those offered by Global Teq.

Practice with Azure Tools: Get hands-on experience with Azure Data Factory, Synapse Analytics, and Databricks.

Take Practice Exams: Test your knowledge with mock exams to identify areas for improvement.

Schedule the Exam: Book your DP-203 exam through Microsoft’s testing platform.

Stay Updated: Follow Azure updates and trends to stay ahead in the field.

Trends Shaping Azure Data Engineering in 2025

The data engineering landscape is evolving, and Azure is at the forefront of these trends:

AI Integration: Azure’s integration with AI tools like Azure Machine Learning is creating new opportunities for data engineers to support AI-driven projects.

Hybrid Cloud Solutions: Businesses are adopting hybrid cloud models, requiring data engineers to manage on-premises and cloud data seamlessly.

Focus on Data Security: With increasing cyber threats, Azure data engineers are critical in implementing robust security measures.

By earning the Azure data engineer certification, you position yourself to capitalize on these trends and stay ahead of the curve.

Conclusion: Invest in Your Future with Azure Data Engineer Certification

The Azure Data Engineer Certification is more than just a credential—it’s a gateway to a rewarding career in one of the most in-demand fields of 2025. With businesses relying on data to drive decisions, certified Azure data engineers are essential to building scalable, secure, and efficient data solutions. By enrolling in an Azure data engineer course with Global Teq, you’ll gain the skills, confidence, and support needed to pass the DP-203 exam and thrive in the industry.

Ready to transform your career? Explore Global Teq’s Azure data engineer training options today and take the first step toward becoming a certified Azure data engineer!

#azure data engineer course online#azure data engineer training#online courses#Azure certification data engineer#Azure data engineer course#Azure#microsoft#DP 203


Rise with Intelligence: Machine Learning in Chandigarh 2025 by CNT Technologies

Step into the world of intelligent technology with CNT Technologies’ advanced program for Machine Learning in Chandigarh in 2025. Designed for innovators, students, and working professionals, this course offers in-depth training in supervised learning, neural networks, data science, and automation. Learn from industry leaders, work on real-time projects, and gain skills that are in high demand across global tech industries. With a future-focused curriculum, expert mentorship, and placement support, CNT Technologies is your gateway to a successful AI career through the most trusted Machine Learning in Chandigarh training of 2025. For more information, go to https://www.cnttech.org/master-machine-learning-in-chandigarh-with-cnt-technologies-2025/

#aws training in chandigarh#digital marketing course training chandigarh#angular js training in chandigarh#data science training in chandigarh#cyber security and ethical hacking training in chandigarh#full stack training in chandigarh#6 weeks training in chandigarh#6 months Training in chandigarh#job oriented training in chandigarh#php trainig in chandigarh#data science in chandigarh#software development in chandigarh#cloud training in chandigarh#mechanical training in chandigarh#data science training online#machine learning in chandigarh#azure training in chandigrah#linux training in chandigrah#cloud computing training in chandigrah#android training in chandigarh#ccna training in chandigarh#civil training in chandigarh#mcse training in chandigarh#java training in chandigarh#c & c++ training in chandigarh#.net training in chandigarh#seo training chandigarh#web designing training in chandigarh#python training in chandigarh#vmware training in chandigarh


Cloud Computing Courses in Ahmedabad

Build in-demand skills with top Cloud Computing Courses in Ahmedabad. Get certified with real-world projects, expert support, live classes and flexible batches to start your journey!

#linux certification ahmedabad#red hat certification ahmedabad#linux online courses in ahmedabad#data science training ahmedabad#rhce rhcsa training ahmedabad#aws security training ahmedabad#docker training ahmedabad#red hat training ahmedabad#microsoft azure cloud certification#python courses in ahmedabad


Real-time Data Processing with Azure Stream Analytics

Introduction

Today's fast-paced digital environment requires organizations to respond to events as they happen. Real-time data processing lets them detect fraudulent financial activity, monitor sensor readings, and track user activity on web pages, which supports quicker and better-informed business decisions.

Azure Stream Analytics (ASA) is Microsoft’s real-time analytics service, built to analyze streaming data at high speed. This overview explains the architecture of Azure Stream Analytics and its key features, and shows how to put together a simple real-time data pipeline.

What is Azure Stream Analytics?

Azure Stream Analytics (ASA) is a fully managed, serverless service for processing data streams in real time. It lets organizations ingest data from different sources, process it, and visualize the results using a straightforward SQL-like query language.

ASA sits between Azure data services as an intermediary: it processes streaming data and routes the results to live dashboards, alerts, and storage destinations. It is designed for fast processing and quick response, whether it is handling millions of IoT device messages or monitoring application transactions.

Core Components of Azure Stream Analytics

A Stream Analytics job typically involves three major components:

1. Input

Data can be ingested from one or more sources including:

Azure Event Hubs – for telemetry and event stream data

Azure IoT Hub – for IoT-based data ingestion

Azure Blob Storage – for batch or historical data

2. Query

The core of ASA is its SQL-like query engine. You can use the language to:

Filter, join, and aggregate streaming data

Apply time-window functions

Detect patterns or anomalies in motion

3. Output

The processed data can be routed to:

Azure SQL Database

Power BI (real-time dashboards)

Azure Data Lake Storage

Azure Cosmos DB

Blob Storage, and more

Example Use Case

Suppose an IoT system sends temperature readings from multiple devices every second. You can use ASA to calculate the average temperature per device every five minutes:
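A minimal sketch of such a query follows. The input alias `iot-input`, the output alias `dashboard-output`, and the field names `DeviceId`, `Temperature`, and `EventTime` are assumptions for illustration; they would need to match the aliases and payload of an actual job.

```sql
-- Average temperature per device over non-overlapping 5-minute windows.
-- [iot-input], [dashboard-output] and all field names are hypothetical.
SELECT
    DeviceId,
    AVG(Temperature) AS AvgTemperature,
    System.Timestamp() AS WindowEnd      -- end time of each window
INTO
    [dashboard-output]
FROM
    [iot-input] TIMESTAMP BY EventTime   -- use the event time carried in the payload
GROUP BY
    DeviceId,
    TumblingWindow(minute, 5)
```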

This simple query delivers aggregated metrics in real time, which can then be displayed on a dashboard or sent to a database for further analysis.

Key Features

Azure Stream Analytics offers several benefits:

Serverless architecture: No infrastructure to manage; Azure handles scaling and availability.

Real-time processing: Supports sub-second latency for streaming data.

Easy integration: Works seamlessly with other Azure services like Event Hubs, SQL Database, and Power BI.

SQL-like query language: Low learning curve for analysts and developers.

Built-in windowing functions: Supports tumbling, hopping, and sliding windows for time-based aggregations.

Custom functions: Extend queries with JavaScript or C# user-defined functions (UDFs).

Scalability and resilience: Can handle high-throughput streams and recovers automatically from failures.
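The three window types named in the feature list differ mainly in whether windows overlap and when results are emitted. The sketch below contrasts them in one job with three statements. All aliases (`iot-input`, `tumbling-output`, `hopping-output`, `alert-output`), the field names, and the threshold of 80 are hypothetical.

```sql
-- Tumbling window: fixed 5-minute windows that never overlap.
SELECT DeviceId, AVG(Temperature) AS AvgTemperature, System.Timestamp() AS WindowEnd
INTO [tumbling-output]
FROM [iot-input] TIMESTAMP BY EventTime
GROUP BY DeviceId, TumblingWindow(minute, 5)

-- Hopping window: 10-minute windows that start every 5 minutes, so they overlap.
SELECT DeviceId, AVG(Temperature) AS AvgTemperature, System.Timestamp() AS WindowEnd
INTO [hopping-output]
FROM [iot-input] TIMESTAMP BY EventTime
GROUP BY DeviceId, HoppingWindow(minute, 10, 5)

-- Sliding window: looks back 5 minutes whenever an event enters or leaves the window.
-- Emits a row when a device has at least 3 readings above 80 within that span.
SELECT DeviceId, COUNT(*) AS HighReadings, System.Timestamp() AS WindowEnd
INTO [alert-output]
FROM [iot-input] TIMESTAMP BY EventTime
WHERE Temperature > 80
GROUP BY DeviceId, SlidingWindow(minute, 5)
HAVING COUNT(*) >= 3
```

A hopping window whose hop size equals its window size behaves like a tumbling window, so the first two statements differ only in the overlap.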

Common Use Cases

Azure Stream Analytics supports real-time data solutions across multiple industries:

Retail: Track customer interactions in real time to deliver dynamic offers.

Finance: Detect anomalies in transactions for fraud prevention.

Manufacturing: Monitor sensor data for predictive maintenance.

Transportation: Analyze traffic patterns to optimize routing.

Healthcare: Monitor patient vitals and trigger alerts for abnormal readings.

Power BI Integration

Integration with Power BI is one of ASA's most useful features. Azure Stream Analytics can send its output directly to Power BI, so dashboards update in near real time. Operations teams, managers, and analysts can watch key metrics continuously and act immediately when a threshold is breached.

Best Practices

To get the most out of Azure Stream Analytics:

Use partitioned input sources like Event Hubs for better throughput.

Keep queries efficient by limiting complex joins and filtering early.

Avoid UDFs unless necessary; they can increase latency.

Use reference data for enriching live streams with static datasets.

Monitor job metrics using Azure Monitor and set alerts for failures or delays.

Prefer direct output integration over intermediate storage where possible to reduce delays.
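Two of the practices above, filtering early and enriching a stream with reference data, can be sketched in a single query. This assumes a streaming input aliased `iot-input` and a reference data input aliased `device-reference` (for example, a file in Blob Storage) that maps each `DeviceId` to a `Location`; every alias and field name here is hypothetical.

```sql
-- Enrich live telemetry with static device metadata and drop unusable rows early.
SELECT
    t.DeviceId,
    d.Location,
    t.Temperature,
    t.EventTime
INTO
    [enriched-output]
FROM
    [iot-input] t TIMESTAMP BY EventTime
JOIN
    [device-reference] d             -- reference data input, not a stream
    ON t.DeviceId = d.DeviceId
WHERE
    t.Temperature IS NOT NULL        -- filter before results reach the output
```

Because the join is against reference data rather than a second stream, it needs no time bound in the `ON` clause.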

Getting Started

Setting up a simple ASA job is easy:

Create a Stream Analytics job in the Azure portal.

Add inputs from Event Hub, IoT Hub, or Blob Storage.

Write your SQL-like query for transformation or aggregation.

Define your output—whether it’s Power BI, a database, or storage.

Start the job and monitor it from the portal.

Conclusion

Organizations of all sizes use Azure Stream Analytics to process real-time data at the scale their business operations need. Its tight integration with other Azure services, its declarative SQL-based query language, and its serverless architecture make it a strong choice for building streaming systems.

As part of Azure, Stream Analytics lets organizations process data continuously and act on it in real time, which improves operational intelligence and, in turn, customer satisfaction and market position.

#azure data engineer course#azure data engineer course online#azure data engineer online course#azure data engineer online training#azure data engineer training#azure data engineer training online#azure data engineering course#azure data engineering online training#best azure data engineer course#best azure data engineer training#best azure data engineering courses online#learn azure data engineering#microsoft azure data engineer training


01 Databricks Tutorial 2025 | Databricks for Data Engineering | Azure Databricks Training

Azure Databricks | Databricks Tutorials | Databricks Training | Databricks End to End playlist This Databricks tutorial playlist covers … source


Unlock the Power of Data: Start Your Power BI Training Journey Today!

Introduction: The Age of Data Mastery

The world runs on data — from e-commerce trends to real-time patient monitoring, logistics optimization to financial forecasting. But data without clarity is chaos. That’s why the demand for data-driven professionals is skyrocketing.

If you’re wondering where to begin, the answer lies in Power BI training — a toolset that empowers you to visualize, interpret, and tell stories with data. When paired with Azure Data Factory training and ADF Training, you’re not just a data user — you become a data engineer, storyteller, and business enabler.

Section 1: Power BI — Your Data Storytelling Toolkit

What is Power BI?

Power BI is a suite of business analytics tools by Microsoft that connects data from hundreds of sources, cleans and shapes it, and visualizes it into stunning reports and dashboards.

Key Features:

Data modeling and transformation (Power Query & DAX)

Drag-and-drop visual report building

Real-time dashboard updates

Integration with Excel, SQL, SharePoint, and cloud platforms

Easy sharing via Power BI Service and Power BI Mobile

Why you need Power BI training:

It’s beginner-friendly yet powerful enough for experts

You learn to analyze trends, uncover insights, and support decisions

Widely used by Fortune 500 companies and startups alike

Power BI course content usually includes:

Data import and transformation

Data relationships and modeling

DAX formulas

Visualizations and interactivity

Publishing and sharing dashboards

Section 2: Azure Data Factory & ADF Training — Automate Your Data Flows

While Power BI helps with analysis and reporting, tools like Azure Data Factory (ADF) are essential for preparing that data before analysis.

What is Azure Data Factory?

Azure Data Factory is a cloud-based ETL (Extract, Transform, Load) tool by Microsoft used to create data-driven workflows for moving and transforming data.

ADF Training helps you master:

Building pipelines to move data from databases, CRMs, APIs, and more

Scheduling automated data refreshes

Monitoring pipeline executions

Using triggers and parameters

Integrating with Azure services and on-prem data

Azure Data Factory training complements your Power BI course by:

Giving you end-to-end data skills: from ingestion → transformation → reporting

Teaching you how to scale workflows using cloud resources

Prepping you for roles in Data Engineering and Cloud Analytics

Section 3: Real-Life Applications and Benefits

After completing Power BI training, Azure Data Factory training, and ADF training, you’ll be ready to tackle real-world business scenarios in roles such as:

Business Intelligence Analyst

Track KPIs and performance in real-time dashboards

Help teams make faster, better decisions

Data Engineer

Build automated workflows to handle terabytes of data

Integrate enterprise data from multiple sources

Marketing Analyst

Visualize campaign performance and audience behavior

Use dashboards to influence creative and budgeting

Healthcare Data Analyst

Analyze patient data for improved diagnosis

Predict outbreaks or resource needs with live dashboards

Small Business Owner

Monitor sales, inventory, customer satisfaction — all in one view

Automate reports instead of doing them manually every week

Section 4: What Will You Achieve?

Tangible Career Growth

Access to high-demand roles: Data Analyst, Power BI Developer, Azure Data Engineer, Cloud Analyst

Average salaries range from $70,000 to $130,000 annually (varies by country)

Future-Proof Skills

Data skills are relevant in every sector: retail, finance, healthcare, manufacturing, and IT

Learn the Microsoft ecosystem, which dominates enterprise tools globally

Practical Confidence

Work on real projects, not just theory

Build a portfolio of dashboards, ADF pipelines, and data workflows

Certification Readiness

Prepares you for exams like Microsoft Certified: Power BI Data Analyst Associate (PL-300), Azure Data Engineer Associate (DP-203)

Conclusion: Data Skills That Drive You Forward

In an era where businesses are racing toward digital transformation, the ones who understand data will lead the way. Learning Power BI, Azure Data Factory, and undergoing ADF training gives you a complete, end-to-end data toolkit.

Whether you’re stepping into IT, upgrading your current role, or launching your own analytics venture, now is the time to act. These skills don’t just give you a job — they build your confidence, capability, and career clarity.

#Azure data engineer certification#Azure data engineer course#Azure data engineer training#Azure certification data engineer#Power bi training#Power bi course#Azure data factory training#ADF Training


Fix Deployment Fast with a Docker Course in Ahmedabad

Are you tired of hearing or saying, "It works on my machine"? That phrase is a sign of a broken deployment process: code works fine locally but breaks on staging and production.

For developers and DevOps teams this is exasperating and, quite frankly, a drain on resources. The solution is containerisation, and the local Docker Course Ahmedabad promises the quickest way to master it.

The Benefits of Docker for Developers

Docker is not just a trendy term; it solves the problem of inconsistent environments. It lets you package your application together with every one of its dependencies into a container that runs the same way anywhere. That makes “works on my machine” a thing of the past.

Using exercises tailored to the local area, a Docker Course Ahmedabad teaches you how to write Dockerfiles, manage your containers, and push your images to Docker Hub. The course gives you the chance to build, deploy, and scale containerised apps.

Combining DevOps with Classroom Training and Classes in Ahmedabad Makes for Seamless Deployment Mastery

DevOps is the layer that takes Docker to the next level and further reduces the chance of deployment errors. Unlike courses that give only a broad overview of containers, DevOps Classes and Training in Ahmedabad dive into automation, CI/CD pipelines, monitoring, and orchestration with tools such as Kubernetes and Jenkins.

Docker skills combined with DevOps practices mean that you’re no longer simply coding but rather deploying with greater speed while reducing errors. Companies, especially those with siloed systems, appreciate this multifaceted skill set.

Real-World Impact: What You’ll Gain

Speed: Cut deployment time by up to 80%.

Reliability: Your application behaves consistently across dev, test, and production environments.

Confidence: Deployment problems are resolved before end users ever encounter them.

Gaining these skills will give your career a significant boost.

Conclusion: Transform Every DevOps Weakness into a Strategic Advantage

Fewer bugs and a faster release cadence are goals every team shares, and confidence in every deployment is every developer’s dream. A comprehensive Docker Course in Ahmedabad or DevOps Classes and Training in Ahmedabad can help you achieve both. Don’t let obstacles hold you back. Highsky IT Solutions turns deployment challenges into successes through practical training focused on boosting your career with Docker and DevOps.

#linux certification ahmedabad#red hat certification ahmedabad#linux online courses in ahmedabad#data science training ahmedabad#rhce rhcsa training ahmedabad#aws security training ahmedabad#docker training ahmedabad#red hat training ahmedabad#microsoft azure cloud certification#python courses in ahmedabad
