#aws iam user

Python Code to Access AWS S3 Bucket | Python AWS S3 Bucket Tutorial Guide

Check out this new video on the CodeOneDigest YouTube channel! Learn how to write a Python program to access an S3 bucket, and how to create an IAM user and policy in AWS to grant that access.
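The video's own code is not reproduced here, so the following is only a minimal sketch of the kind of least-privilege policy such an IAM user might be given. The function name `build_s3_read_write_policy` and the bucket name `example-bucket` are placeholders chosen for illustration, and the exact set of actions is an assumption.

```python
import json


def build_s3_read_write_policy(bucket_name: str) -> dict:
    """Return an IAM policy document limited to a single S3 bucket."""
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Listing is granted on the bucket itself.
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [bucket_arn],
            },
            {
                # Object reads and writes are granted on keys inside the bucket.
                "Sid": "ReadWriteObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"{bucket_arn}/*"],
            },
        ],
    }


if __name__ == "__main__":
    # "example-bucket" is a placeholder, not a name taken from the video.
    print(json.dumps(build_s3_read_write_policy("example-bucket"), indent=2))
```

The printed JSON could be pasted into the IAM console as a customer managed policy and attached to the user whose access keys the Python program uses.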

@codeonedigest @awscloud @AWSCloudIndia @AWS_Edu @AWSSupport @AWS_Gov @AWSArchitecture

Master AWS IAM security effortlessly! Our new Python script automates user access reviews, generating detailed reports in seconds. Simplify and enhance your AWS account security today!
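The script itself is not shown in the post, so this is only a hypothetical sketch of the core check an access review might perform: flagging users whose last activity is older than a cutoff. The record shape (`name`, `last_used`) and the 90-day default are assumptions; in practice the data could come from something like the IAM credential report.

```python
from datetime import datetime, timedelta, timezone


def find_stale_users(users, now, max_idle_days=90):
    """Return the names of users idle for longer than max_idle_days.

    `users` is a list of dicts with "name" and "last_used" (a datetime,
    or None for a user who has never signed in, which is always reported).
    """
    cutoff = now - timedelta(days=max_idle_days)
    stale = []
    for user in users:
        last_used = user["last_used"]
        if last_used is None or last_used < cutoff:
            stale.append(user["name"])
    return sorted(stale)


if __name__ == "__main__":
    now = datetime(2025, 1, 1, tzinfo=timezone.utc)
    # Made-up sample records for illustration only.
    sample = [
        {"name": "alice", "last_used": datetime(2024, 12, 20, tzinfo=timezone.utc)},
        {"name": "bob", "last_used": datetime(2024, 6, 1, tzinfo=timezone.utc)},
        {"name": "carol", "last_used": None},
    ]
    print(find_stale_users(sample, now))
```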

god i hate hate hate hate Azure and AWS and GCP. these cloud services and their consoles make me want to throw my computer across the room. "Want to make a cloud datastore? Make sure you give ur IAME user permissions!!" "Want to make an Azure Bot for MS Teams? Make sure you track down the 12 different spots to manage permissions for you and ur teams members!!" complete shit. no wonder microsoft offers certifications for Azure, this shit has more buttons and knobs than a pilot's cockpit. and for what? to sell you more Azure courses..

#i hate you microcock#i have never held this much hate for any software service before#i would push a boulder up a hill if it means microsoft killed Azure

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

Centralizing AWS Root access for AWS Organizations customers

A new AWS Identity and Access Management (IAM) capability lets security teams centrally manage root access for member accounts in AWS Organizations. It simplifies managing root credentials and performing highly privileged operations.

Managing root user credentials at scale

Historically, every Amazon Web Services (AWS) account was created with root user credentials, which granted unrestricted access to the account. Powerful as it is, this root access also posed a serious security risk.

The root user of every AWS account needed to be protected by implementing additional security measures like multi-factor authentication (MFA). These root credentials had to be manually managed and secured by security teams. Credentials had to be stored safely, rotated on a regular basis, and checked to make sure they adhered to security guidelines.

This manual approach became laborious and error-prone as customers' AWS environments grew. For instance, large enterprises with hundreds or thousands of member accounts found it difficult to secure root access consistently for every account. The manual intervention added operational overhead, delayed account provisioning, hindered full automation, and increased security risk. Improperly secured root access can lead to account takeovers and unauthorized access to critical resources.

Additionally, security teams had to collect and use root credentials if particular root actions were needed, like unlocking an Amazon Simple Storage Service (Amazon S3) bucket policy or an Amazon Simple Queue Service (Amazon SQS) resource policy. This only made the attack surface larger. Maintaining long-term root credentials exposed users to possible mismanagement, compliance issues, and human errors despite strict monitoring and robust security procedures.

Security teams started looking for a scalable, automated solution. They required a method to programmatically control AWS root access without requiring long-term credentials in the first place, in addition to centralizing the administration of root credentials.

Centrally manage root access

AWS solves the long-standing problem of managing root credentials across many accounts with the new ability to centrally manage root access. It introduces two capabilities: central management of root credentials, and root sessions. Together they give security teams a secure, scalable, and compliant way to manage root access across all member accounts in AWS Organizations.

First, let’s talk about centrally managing root credentials. You can now centrally manage and safeguard privileged root credentials for all AWS Organizations accounts with this capability. Managing root credentials enables you to:

Eliminate long-term root credentials: To ensure that no long-term privileged credentials are left open to abuse, security teams can now programmatically delete root user credentials from member accounts.

Prevent credential recovery: In addition to deleting the credentials, it also stops them from being recovered, protecting against future unwanted or unauthorized AWS root access.

Establish secure accounts by default: Member accounts can now be created without root credentials from the start, so you no longer need to apply extra security measures such as MFA after account provisioning. Because accounts are secure by default, the risks of long-term root access are significantly reduced and provisioning is simpler overall.

Assist in maintaining compliance: By centrally discovering and monitoring the status of root credentials across all member accounts, root credentials management lets security teams demonstrate compliance. This automated visibility confirms that no long-term root credentials exist, which makes it easier to meet security policies and regulatory requirements.

But how can you still perform certain root operations on those accounts? Root sessions, the second feature introduced with this launch, provide a secure alternative to keeping permanent root access.

Security teams can now obtain temporary, task-scoped root access to member accounts, doing away with the need to manually retrieve root credentials anytime privileged activities are needed. Without requiring permanent root credentials, this feature ensures that operations like unlocking S3 bucket policies or SQS queue policies may be carried out safely.

Key advantages of root sessions include:

Task-scoped root access: In accordance with the best practices of least privilege, AWS permits temporary AWS root access for particular actions. This reduces potential dangers by limiting the breadth of what can be done and shortening the time of access.

Centralized management: Instead of logging into each member account separately, you may now execute privileged root operations from a central account. Security teams can concentrate on higher-level activities as a result of the process being streamlined and their operational burden being lessened.

Conformity to AWS best practices: Organizations that utilize short-term credentials are adhering to AWS security best practices, which prioritize the usage of short-term, temporary access whenever feasible and the principle of least privilege.

This feature does not grant full root access. It issues temporary credentials scoped to one of five specific actions. The first three are available once central root credentials management is enabled; the last two become available when root sessions are enabled.

Auditing root user credentials: Read-only access to review root user information.

Re-enabling account recovery: Reactivating account recovery without root credentials.

Deleting root user credentials: Removing console passwords, access keys, signing certificates, and MFA devices.

Unlocking an S3 bucket policy: Editing or deleting an S3 bucket policy that denies all principals.

Unlocking an SQS queue policy: Editing or deleting an Amazon SQS resource policy that denies all principals.
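To make the idea of a task-scoped root session concrete, here is a hedged sketch that builds the request parameters for one such session. The parameter names follow the STS `AssumeRoot` API as I understand it, and the managed policy names under `root-task/` are assumptions that should be checked against the current AWS documentation. The helper `build_assume_root_request` and the task keys are hypothetical names introduced for this example.

```python
import re

# Managed "root task" policy names; treat these as assumptions and verify
# them against the AWS documentation before relying on them.
ROOT_TASK_POLICY_PREFIX = "arn:aws:iam::aws:policy/root-task/"
ROOT_TASK_POLICIES = {
    "audit_root_credentials": "IAMAuditRootUserCredentials",
    "reenable_account_recovery": "IAMCreateRootUserPassword",
    "delete_root_credentials": "IAMDeleteRootUserCredentials",
    "unlock_s3_bucket_policy": "S3UnlockBucketPolicy",
    "unlock_sqs_queue_policy": "SQSUnlockQueuePolicy",
}
MAX_ROOT_SESSION_SECONDS = 900  # assumed cap of 15 minutes per session


def build_assume_root_request(account_id, task,
                              duration_seconds=MAX_ROOT_SESSION_SECONDS):
    """Build keyword arguments for a single task-scoped root session."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("account_id must be a 12-digit string")
    if task not in ROOT_TASK_POLICIES:
        raise ValueError(f"unknown task: {task}")
    if not 0 < duration_seconds <= MAX_ROOT_SESSION_SECONDS:
        raise ValueError("duration_seconds must be between 1 and 900")
    return {
        "TargetPrincipal": account_id,
        "TaskPolicyArn": {
            "arn": ROOT_TASK_POLICY_PREFIX + ROOT_TASK_POLICIES[task]
        },
        "DurationSeconds": duration_seconds,
    }


if __name__ == "__main__":
    # 111122223333 is a placeholder account ID.
    print(build_assume_root_request("111122223333", "unlock_s3_bucket_policy"))
```

With boto3 installed and credentials from the management or delegated administrator account, the resulting dictionary could presumably be passed as `boto3.client("sts").assume_root(**request)`. Each session is limited to the one task its policy names, which is what keeps the access least-privilege.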

Accessibility

Central management of root access is available at no additional cost in all AWS Regions except the AWS GovCloud (US) and AWS China Regions, which have no root accounts. Root sessions are available in all Regions.

The feature can be used through the IAM console, the AWS CLI, or the AWS SDK.

What is root access?

The root user, which has full access to all AWS resources and services, is the first identity created when you open an Amazon Web Services (AWS) account. You sign in as the root user with the email address and password you used to create the account.

Read more on Govindhtech.com

#AWSRoot#AWSRootaccess#IAM#AmazonS3#AWSOrganizations#AmazonSQS#AWSSDK#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

AWS Security 101: Protecting Your Cloud Investments

In the ever-evolving landscape of technology, few names resonate as strongly as Amazon.com. This global giant, known for its e-commerce prowess, has a lesser-known but equally influential arm: Amazon Web Services (AWS). AWS is a powerhouse in the world of cloud computing, offering a vast and sophisticated array of services and products. In this comprehensive guide, we'll embark on a journey to explore the facets and features of AWS that make it a driving force for individuals, companies, and organizations seeking to utilise cloud computing to its fullest capacity.

Amazon Web Services (AWS): A Technological Titan

At its core, AWS is a cloud computing platform that empowers users to create, deploy, and manage applications and infrastructure with unparalleled scalability, flexibility, and cost-effectiveness. It's not just a platform; it's a digital transformation enabler. Let's dive deeper into some of the key components and features that define AWS:

1. Compute Services: The Heart of Scalability

AWS boasts services like Amazon EC2 (Elastic Compute Cloud), a scalable virtual server solution, and AWS Lambda for serverless computing. These services provide users with the capability to efficiently run applications and workloads with precision and ease. Whether you need to host a simple website or power a complex data-processing application, AWS's compute services have you covered.

2. Storage Services: Your Data's Secure Haven

In the age of data, storage is paramount. AWS offers a diverse set of storage options. Amazon S3 (Simple Storage Service) caters to scalable object storage needs, while Amazon EBS (Elastic Block Store) is ideal for block storage requirements. For archival purposes, Amazon S3 Glacier is the go-to solution. This comprehensive array of storage choices ensures that diverse storage needs are met, and your data is stored securely.

3. Database Services: Managing Complexity with Ease

AWS provides managed database services that simplify the complexity of database management. Amazon RDS (Relational Database Service) is perfect for relational databases, while Amazon DynamoDB offers a seamless solution for NoSQL databases. Amazon Redshift, on the other hand, caters to data warehousing needs. These services take the headache out of database administration, allowing you to focus on innovation.

4. Networking Services: Building Strong Connections

Network isolation and robust networking capabilities are made easy with Amazon VPC (Virtual Private Cloud). AWS Direct Connect facilitates dedicated network connections, and Amazon Route 53 takes care of DNS services, ensuring that your network needs are comprehensively addressed. In an era where connectivity is king, AWS's networking services rule the realm.

5. Security and Identity: Fortifying the Digital Fortress

In a world where data security is non-negotiable, AWS prioritizes security with services like AWS IAM (Identity and Access Management) for access control and AWS KMS (Key Management Service) for encryption key management. Your data remains fortified, and access is strictly controlled, giving you peace of mind in the digital age.

6. Analytics and Machine Learning: Unleashing the Power of Data

In the era of big data and machine learning, AWS is at the forefront. Services like Amazon EMR (Elastic MapReduce) handle big data processing, while Amazon SageMaker provides the tools for developing and training machine learning models. Your data becomes a strategic asset, and innovation knows no bounds.

7. Application Integration: Seamlessness in Action

AWS fosters seamless application integration with services like Amazon SQS (Simple Queue Service) for message queuing and Amazon SNS (Simple Notification Service) for event-driven communication. Your applications work together harmoniously, creating a cohesive digital ecosystem.

8. Developer Tools: Powering Innovation

AWS equips developers with a suite of powerful tools, including AWS CodeDeploy, AWS CodeCommit, and AWS CodeBuild. These tools simplify software development and deployment processes, allowing your teams to focus on innovation and productivity.

9. Management and Monitoring: Streamlined Resource Control

Effective resource management and monitoring are facilitated by Amazon CloudWatch for monitoring and AWS CloudFormation for infrastructure as code (IaC) management. Managing your cloud resources becomes a streamlined and efficient process, reducing operational overhead.

10. Global Reach: Empowering Global Presence

With Availability Zones (groups of one or more data centers) located in multiple Regions worldwide, AWS enables users to deploy applications close to end users. This reduces latency and improves performance, which is crucial for global digital operations.

In conclusion, Amazon Web Services (AWS) is not just a cloud computing platform; it's a technological titan that empowers organizations and individuals to harness the full potential of cloud computing. Whether you're an aspiring IT professional looking to build a career in the cloud or a seasoned expert seeking to sharpen your skills, understanding AWS is paramount.

In today's technology-driven landscape, AWS expertise opens doors to endless opportunities. At ACTE Institute, we recognize the transformative power of AWS, and we offer comprehensive training programs to help individuals and organizations master the AWS platform. We are your trusted partner on the journey of continuous learning and professional growth. Embrace AWS, embark on a path of limitless possibilities in the world of technology, and let ACTE Institute be your guiding light. Your potential awaits, and together, we can reach new heights in the ever-evolving world of cloud computing. Welcome to the AWS Advantage, and let's explore the boundless horizons of technology together!

Navigating the Cloud: Unleashing the Potential of Amazon Web Services (AWS)

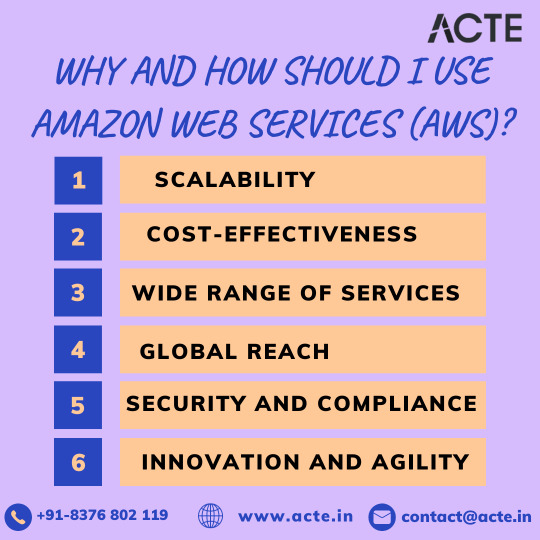

In the dynamic realm of technological progress, Amazon Web Services (AWS) stands as a beacon of innovation, offering unparalleled advantages for enterprises, startups, and individual developers. This article will delve into the compelling reasons behind the adoption of AWS and provide a strategic roadmap for harnessing its transformative capabilities.

Unveiling the Strengths of AWS:

1. Dynamic Scalability: AWS distinguishes itself with its dynamic scalability, empowering users to effortlessly adjust infrastructure based on demand. This adaptability ensures optimal performance without the burden of significant initial investments, making it an ideal solution for businesses with fluctuating workloads.

2. Cost-Efficient Flexibility: Operating on a pay-as-you-go model, AWS delivers cost-efficiency by eliminating the need for large upfront capital expenditures. This financial flexibility is a game-changer for startups and businesses navigating the challenges of variable workloads.

3. Comprehensive Service Portfolio: AWS offers a comprehensive suite of cloud services, spanning computing power, storage, databases, machine learning, and analytics. This expansive portfolio provides users with a versatile and integrated platform to address a myriad of application requirements.

4. Global Accessibility: With a distributed network of data centers, AWS ensures low-latency access on a global scale. This not only enhances user experience but also fortifies application reliability, positioning AWS as the preferred choice for businesses with an international footprint.

5. Security and Compliance Commitment: Security is at the forefront of AWS's priorities, offering robust features for identity and access management, encryption, and compliance with industry standards. This commitment instills confidence in users regarding the safeguarding of their critical data and applications.

6. Catalyst for Innovation and Agility: AWS empowers developers by providing services that allow a concentrated focus on application development rather than infrastructure management. This agility becomes a catalyst for innovation, enabling businesses to respond swiftly to evolving market dynamics.

7. Reliability and High Availability Assurance: The redundancy of data centers, automated backups, and failover capabilities contribute to the high reliability and availability of AWS services. This ensures uninterrupted access to applications even in the face of unforeseen challenges.

8. Ecosystem Synergy and Community Support: An extensive ecosystem with a diverse marketplace and an active community enhances the AWS experience. Third-party integrations, tools, and collaborative forums create a rich environment for users to explore and leverage.

Charting the Course with AWS:

1. Establish an AWS Account: Embark on the AWS journey by creating an account on the AWS website. This foundational step serves as the gateway to accessing and managing the expansive suite of AWS services.

2. Strategic Region Selection: Choose AWS region(s) strategically, factoring in considerations like latency, compliance requirements, and the geographical location of the target audience. This decision profoundly impacts the performance and accessibility of deployed resources.

3. Tailored Service Selection: Customize AWS services to align precisely with the unique requirements of your applications. Common choices include Amazon EC2 for computing, Amazon S3 for storage, and Amazon RDS for databases.

4. Fortify Security Measures: Implement robust security measures by configuring identity and access management (IAM), establishing firewalls, encrypting data, and leveraging additional security features. This comprehensive approach ensures the protection of critical resources.

5. Seamless Application Deployment: Leverage AWS services to deploy applications seamlessly. Tasks include setting up virtual servers (EC2 instances), configuring databases, implementing load balancers, and establishing connections with various AWS services.

6. Continuous Optimization and Monitoring: Maintain a continuous optimization strategy for cost and performance. AWS monitoring tools, such as CloudWatch, provide insights into the health and performance of resources, facilitating efficient resource management.

7. Dynamic Scaling in Action: Harness the power of AWS scalability by adjusting resources based on demand. This can be achieved manually or through the automated capabilities of AWS Auto Scaling, ensuring applications can handle varying workloads effortlessly.

8. Exploration of Advanced Services: As organizational needs evolve, delve into advanced AWS services tailored to specific functionalities. AWS Lambda for serverless computing, Amazon SageMaker for machine learning, and Amazon Redshift for data analytics offer specialized solutions to enhance application capabilities.

Closing Thoughts: Empowering Success in the Cloud

In conclusion, Amazon Web Services transcends the definition of a mere cloud computing platform; it represents a transformative force. Whether you are navigating the startup landscape, steering an enterprise, or charting an individual developer's course, AWS provides a flexible and potent solution.

Success with AWS lies in a profound understanding of its advantages, strategic deployment of services, and a commitment to continuous optimization. The journey into the cloud with AWS is not just a technological transition; it is a roadmap to innovation, agility, and limitless possibilities. By unlocking the full potential of AWS, businesses and developers can confidently navigate the intricacies of the digital landscape and achieve unprecedented success.

Node and Pod Autoscaling in ROSA: Automating Performance at Scale

In today’s fast-paced digital landscape, performance and resource optimization are key. When running workloads on Red Hat OpenShift Service on AWS (ROSA), it becomes crucial to dynamically scale resources based on demand — both at the pod and node levels. This is where autoscaling shines.

This article explains how node and pod autoscaling works in a ROSA cluster and how it enables efficient, responsive applications without manual intervention.

🌐 What Is Autoscaling?

Autoscaling in Kubernetes/OpenShift is the ability to automatically adjust computing resources:

Pod Autoscaling scales your application pods based on CPU/memory usage or custom metrics.

Node Autoscaling adds/removes worker nodes depending on the resource requirements of pods.

In ROSA, these mechanisms are tightly integrated with AWS infrastructure and OpenShift’s orchestration engine.

📦 Pod Autoscaling with Horizontal Pod Autoscaler (HPA)

🔹 How It Works:

The Horizontal Pod Autoscaler (HPA) increases or decreases the number of pod replicas in a deployment based on real-time metrics.

🧠 Key Metrics Used:

CPU utilization (most common)

Memory usage

Custom metrics via Prometheus Adapter

✅ Example Scenario:

A web app receives sudden traffic spikes. The HPA detects high CPU usage and automatically scales from 3 to 10 pods, ensuring uninterrupted user experience.
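The scale-up in this scenario can be reproduced with the replica calculation described in the Kubernetes HPA documentation: desired replicas = ceil(current replicas × current metric / target metric). The sketch below is a simplified model of that rule, not the controller's actual code. The 200% and 60% utilization figures are made-up numbers chosen so the result matches the 3-to-10 example, and the 0.1 tolerance is assumed to match the upstream default.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA rule: scale in proportion to metric / target."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured replica bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # 3 pods averaging 200% of requested CPU against a 60% target.
    print(desired_replicas(3, 200, 60, max_replicas=20))
```

The real controller also accounts for pod readiness, missing metrics, and stabilization windows, all of which this sketch leaves out.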

🖥️ Node Autoscaling with Cluster Autoscaler

🔹 What It Does:

Cluster Autoscaler automatically adjusts the number of nodes in the ROSA cluster. When pods can't be scheduled due to lack of resources, it triggers the provisioning of new nodes.

🔸 Likewise, it removes underutilized nodes to reduce cost and resource waste.

🔧 Integrated with AWS:

ROSA provisions worker nodes as Amazon EC2 instances through its machine pools.

New nodes are provisioned with the same security, networking, and IAM policies as the existing ones.

⚙️ How to Configure Autoscaling in ROSA (Without Deep Coding)

While this blog avoids detailed CLI configuration, here’s a conceptual workflow:

1. Enable Cluster Autoscaler

Ensure ROSA is using machine pools or machine sets.

Enable autoscaling ranges (min/max node counts).

2. Deploy Your Application

Deploy a sample app using a Kubernetes/OpenShift deployment.

3. Enable Horizontal Pod Autoscaler

Define resource limits/requests in your pod spec (especially CPU).

Create an HPA object with threshold metrics.

4. Observe Autoscaling in Action

Use OpenShift Console or metrics dashboard to watch scale events.

During high load, see pods and nodes increase.

Once demand drops, resources scale back down.
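As a rough illustration of step 3, the HPA object can be expressed as a plain `autoscaling/v2` manifest. The sketch below builds one as a Python dictionary and prints it as JSON, which `oc apply -f` or `kubectl apply -f` accept alongside YAML. The helper `build_hpa_manifest`, the deployment name `web`, and the thresholds are all hypothetical values picked for the example.

```python
import json


def build_hpa_manifest(deployment, min_replicas, max_replicas,
                       cpu_target_percent, namespace="default"):
    """Return an autoscaling/v2 HorizontalPodAutoscaler manifest."""
    if min_replicas > max_replicas:
        raise ValueError("min_replicas must not exceed max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa", "namespace": namespace},
        "spec": {
            # The workload whose replica count the HPA controls.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [
                {
                    "type": "Resource",
                    "resource": {
                        "name": "cpu",
                        # Percentage of the CPU *requests* set in the pod spec.
                        "target": {
                            "type": "Utilization",
                            "averageUtilization": cpu_target_percent,
                        },
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_hpa_manifest("web", 3, 10, 60), indent=2))
```

The utilization target only works if the pods declare CPU requests, which is why the workflow asks for resource requests before the HPA is created.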

📊 Benefits of Autoscaling in ROSA

✅ Optimized Resource Usage: Only run what you need; scale down during off-peak hours.

✅ Performance Assurance: Automatically meet SLAs by scaling up during peak loads.

✅ Cost Efficiency: No need to overprovision resources. Pay only for what you use.

✅ Cloud-Native Resilience: Elastic infrastructure aligned with cloud-native architecture principles.

📌 Final Thoughts

ROSA makes it simple to implement intelligent autoscaling strategies for both pods and nodes. By combining HPA and Cluster Autoscaler, you ensure that your application is highly available, cost-effective, and performance-optimized at all times.

As workloads grow and user demands fluctuate, autoscaling ensures your ROSA cluster remains lean, responsive, and production-ready.

For more info, Kindly follow: Hawkstack Technologies

Simplifying Multicloud Operations Through Unified Console Management

The digital enterprise thrives on agility. To gain that agility, organizations increasingly adopt multicloud strategies—deploying workloads across public, private, and hybrid cloud environments. While this model enhances flexibility and resiliency, it introduces new management and visibility challenges that traditional tools can’t handle.

To meet these challenges, unified consoles create a seamless multicloud management experience by offering a centralized interface to monitor, govern, and optimize cloud operations. These platforms are becoming indispensable for CIOs and cloud architects who need to reduce complexity while maintaining compliance, security, and efficiency.

The Fragmentation Problem in Multicloud Operations

When enterprises spread workloads across different cloud providers, they often encounter tool sprawl and operational silos. Each cloud platform—AWS, Azure, Google Cloud, or private infrastructure—comes with its own APIs, interfaces, policies, and monitoring tools. Managing these independently leads to:

Increased operational overhead

Inconsistent policy enforcement

Reduced infrastructure visibility

Slower incident response

Higher cloud waste and overspending

That’s why unified consoles create a seamless multicloud management experience, integrating all these fragmented elements into one cohesive system for better control.

The Core Pillars of Unified Multicloud Management

To deliver full value, a unified multicloud console must address key enterprise needs across operations, security, governance, cost, and compliance. The essential components include:

Centralized infrastructure visibility

Cross-cloud workload orchestration

Policy-based governance and compliance

Cost transparency and optimization

Unified incident and threat response

These pillars ensure that unified consoles create a seamless multicloud management experience that is scalable, secure, and future-ready.

Achieving Real-Time Visibility Across All Cloud Assets

Unified consoles provide end-to-end visibility into applications, virtual machines, databases, containers, and storage—across all cloud providers. This enables operations teams to move from reactive monitoring to proactive insights.

Capabilities include:

Real-time performance dashboards

Automated resource discovery across environments

Cloud-agnostic tagging and grouping

Unified inventory management

Visual topology mapping

With these capabilities in one place, operations teams can find and close the blind spots that appear when each cloud is monitored separately.
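As a rough illustration of cloud-agnostic tagging and unified inventory, the sketch below normalizes per-provider records into one shape and groups them by a tag. The raw record layouts are simplified assumptions (real provider APIs return much richer data), and the `Resource` class and helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    """Provider-neutral inventory record (hypothetical shape)."""
    provider: str
    resource_id: str
    kind: str
    tags: dict


def from_aws(raw: dict) -> Resource:
    # AWS-style tags arrive as a list of {"Key": ..., "Value": ...} pairs.
    tags = {t["Key"].lower(): t["Value"] for t in raw.get("Tags", [])}
    return Resource("aws", raw["InstanceId"], "vm", tags)


def from_azure(raw: dict) -> Resource:
    # Azure-style tags are assumed to be a plain mapping.
    tags = {k.lower(): v for k, v in raw.get("tags", {}).items()}
    return Resource("azure", raw["id"], "vm", tags)


def from_gcp(raw: dict) -> Resource:
    # Google Cloud calls the equivalent concept "labels".
    tags = {k.lower(): v for k, v in raw.get("labels", {}).items()}
    return Resource("gcp", raw["name"], "vm", tags)


def group_by_tag(resources, key):
    """Group resource IDs by the value of one normalized tag."""
    groups = {}
    for res in resources:
        groups.setdefault(res.tags.get(key, "untagged"), []).append(res.resource_id)
    return groups


inventory = [
    from_aws({"InstanceId": "i-0abc", "Tags": [{"Key": "Environment", "Value": "prod"}]}),
    from_azure({"id": "vm-web", "tags": {"environment": "prod"}}),
    from_gcp({"name": "batch-1", "labels": {}}),
]
by_environment = group_by_tag(inventory, "environment")
print(by_environment)
```

Lower-casing tag keys is one possible normalization choice; a real console would also need to reconcile value spellings and resource types.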

Unified Identity and Access Management for All Clouds

Managing user access across platforms is complex and risky. Each cloud has its own IAM model, which often results in policy conflicts or unmanaged accounts. Unified consoles consolidate IAM and integrate with enterprise directories.

Security and access control features include:

Role-based access policies across providers

SSO integration with Active Directory or LDAP

Multi-factor authentication (MFA)

Policy-based access control templates

Audit trails and access reports

As a result, identity policies are defined once and applied everywhere, which strengthens identity security and simplifies audits.

Consistent Policy Enforcement and Governance at Scale

Governance breakdown is a leading cause of cloud misconfigurations, security breaches, and compliance violations. Unified consoles enable organizations to define and enforce governance frameworks across all platforms.

Governance benefits include:

Predefined policy templates for compliance

Automated remediation for policy violations

Unified tagging and resource classification

Integrated compliance dashboards

Support for GDPR, HIPAA, ISO 27001, and other standards

Governance thus becomes part of everyday operations rather than a periodic review.

Streamlining Multicloud Cost Control and Optimization

Cloud cost overruns often occur because businesses lack a unified view of their spending. Each cloud has different billing formats, regions, pricing tiers, and consumption models. Unified consoles offer financial clarity across environments.

Cost management features include:

Cross-cloud cost dashboards and filters

Project and team-level cost attribution

Idle resource identification and optimization

Forecasting and budgeting tools

Integration with FinOps platforms

Together, these tools give finance and engineering a shared view of spend, which helps raise ROI and cut waste.
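Project- and team-level cost attribution comes down to aggregating billing rows from every provider under a common key. The sketch below assumes the rows have already been normalized into a shared shape; the field names and figures are made up for illustration.

```python
from collections import defaultdict

# Hypothetical, already-normalized billing rows from three providers.
BILLING_ROWS = [
    {"provider": "aws", "team": "payments", "cost_usd": 120.0},
    {"provider": "azure", "team": "payments", "cost_usd": 80.5},
    {"provider": "gcp", "team": "search", "cost_usd": 40.25},
    {"provider": "aws", "team": None, "cost_usd": 10.0},  # untagged spend
]


def cost_by_team(rows):
    """Sum cost per team; rows without a team land in 'unattributed'."""
    totals = defaultdict(float)
    for row in rows:
        totals[row.get("team") or "unattributed"] += row["cost_usd"]
    return dict(totals)


totals = cost_by_team(BILLING_ROWS)
for team, cost in sorted(totals.items()):
    print(f"{team}: {cost:.2f} USD")
```

Keeping an explicit "unattributed" bucket makes untagged spend visible instead of silently dropping it.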

Supporting DevOps and Infrastructure-as-Code (IaC)

Modern enterprises rely on DevOps pipelines and IaC tools to deploy infrastructure and applications at speed. Unified consoles provide a consistent framework to support these agile workflows across clouds.

DevOps enablement features include:

Preconfigured IaC blueprints for multicloud

CI/CD pipeline integrations with Jenkins, GitHub, etc.

Policy-as-code validation during deployments

Automated testing, monitoring, and rollback

Self-service portals for development teams

With deployment workflows standardized across clouds, development teams spend less time on environment differences and more on shipping features.
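Policy-as-code validation during deployments can be pictured as a set of small rule functions run against the planned resources before anything is created. This is a minimal sketch with a hypothetical plan format and two example rules, not the format of any particular IaC tool.

```python
REQUIRED_TAGS = {"owner", "environment"}  # assumed organizational standard


def rule_required_tags(resource):
    """Every resource must carry the required tags."""
    missing = REQUIRED_TAGS - set(resource.get("tags", {}))
    if missing:
        return "missing tags: " + ", ".join(sorted(missing))
    return None


def rule_storage_encrypted(resource):
    """Storage resources must have encryption switched on."""
    if resource["kind"] == "storage" and not resource.get("encrypted", False):
        return "storage must be encrypted"
    return None


RULES = [rule_required_tags, rule_storage_encrypted]


def validate(plan):
    """Return (resource name, message) pairs for every violated rule."""
    violations = []
    for resource in plan:
        for rule in RULES:
            message = rule(resource)
            if message:
                violations.append((resource["name"], message))
    return violations


# Hypothetical deployment plan, as a pipeline step might receive it.
plan = [
    {"name": "logs-bucket", "kind": "storage", "encrypted": False,
     "tags": {"owner": "platform", "environment": "prod"}},
    {"name": "api-vm", "kind": "vm", "tags": {"owner": "api"}},
]
violations = validate(plan)
for name, message in violations:
    print(f"{name}: {message}")
```

A pipeline would typically fail the deployment when the list of violations is not empty.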

Integrated Security and Threat Monitoring

Cybersecurity threats evolve fast—and siloed security tools leave gaps. Unified consoles enhance threat detection, prevention, and response by aggregating data from all cloud environments.

Security features include:

Unified security information and event management (SIEM)

Threat intelligence and behavioral analytics

Cross-cloud vulnerability scanning

Automated incident workflows and escalation

Audit-ready logs and compliance reports

By correlating events from every environment in one place, a unified console helps security teams detect and respond to threats across a broad and complex attack surface.

Unified Backup, Recovery, and Disaster Resilience

Business continuity is vital. Organizations must be prepared to recover quickly from outages, cyberattacks, or data loss. Unified consoles offer disaster recovery tools that span across all cloud environments.

Disaster recovery advantages:

Automated backup and restore jobs across platforms

Geographically distributed replication

Failover and failback orchestration tools

Real-time recovery time objective (RTO) and recovery point objective (RPO) tracking

Runbooks and DR simulation testing

These features build resilience into multicloud operations instead of leaving recovery to each platform’s separate tooling.
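RPO and RTO tracking is simple arithmetic on timestamps: the RPO exposure is the time since the last good backup, and the RTO check compares a measured recovery time against the target. The function names and sample values below are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def rpo_exposure(last_backup, now):
    """Data at risk, expressed as time elapsed since the last good backup."""
    return now - last_backup


def check_objectives(last_backup, now, rpo_target, measured_recovery, rto_target):
    """Compare current exposure and the last measured recovery with the targets."""
    exposure = rpo_exposure(last_backup, now)
    return {
        "rpo_exposure": exposure,
        "rpo_met": exposure <= rpo_target,
        "rto_met": measured_recovery <= rto_target,
    }


now = datetime(2025, 6, 1, 12, 0, tzinfo=timezone.utc)
status = check_objectives(
    last_backup=datetime(2025, 6, 1, 7, 30, tzinfo=timezone.utc),
    now=now,
    rpo_target=timedelta(hours=4),
    measured_recovery=timedelta(minutes=45),  # e.g. taken from the last DR drill
    rto_target=timedelta(hours=1),
)
print(status)
```

In this sample the backup is 4.5 hours old against a 4-hour RPO target, so the RPO check fails while the RTO check passes.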

AI and Automation Driving Next-Gen Unified Management

The next evolution of unified consoles leverages artificial intelligence and machine learning to automate decision-making and drive intelligent operations. This is the future of cloud management.

AI/ML integrations include:

Predictive analytics for workload scaling

Automated anomaly detection in logs and metrics

Autonomous remediation of performance issues

AI-assisted ticket resolution and incident tracking

ChatOps and NLP-based console interaction

With these capabilities integrated, cloud management becomes more proactive, predictive, and automated.

Empowering Cross-Functional Collaboration

In multicloud environments, collaboration between DevOps, SecOps, FinOps, and IT teams is essential. A unified console becomes a shared source of truth, aligning business goals with cloud operations.

Collaboration features:

Role-specific dashboards for stakeholders

Shared cost and performance KPIs

Centralized documentation and knowledge base

User-defined alerts and workflows

Customizable reports for executives and team leads

With every team working from the same data, a unified console supports communication, trust, and accountability throughout the organization.

Read the full article: https://businessinfopro.com/unified-consoles-create-a-seamless-multicloud-management-experience/

About Us: Businessinfopro is a trusted platform delivering insightful, up-to-date content on business innovation, digital transformation, and enterprise technology trends. We empower decision-makers, professionals, and industry leaders with expertly curated articles, strategic analyses, and real-world success stories across sectors. From marketing and operations to AI, cloud, and automation, our mission is to decode complexity and spotlight opportunities driving modern business growth. At Businessinfopro, we go beyond news—we provide perspective, helping businesses stay agile, informed, and competitive in a rapidly evolving digital landscape. Whether you're a startup or a Fortune 500 company, our insights are designed to fuel smarter strategies and meaningful outcomes.

#IntelligentCloudManagement#DigitalInfrastructure#MulticloudControl#UnifiedCloudOps#CloudOptimization

Text

2025 AWS Cost Optimization Playbook: Real Strategies That Work

In 2025, cloud costs continue to rise, often silently. For startup CTOs and tech leads juggling infrastructure performance, tight budgets, and rapid scaling, AWS billing has become a monthly source of anxiety.

The problem isn’t AWS itself; it’s the hidden inefficiencies, unmanaged workloads, and scattered security practices that slowly drain your runway.

This playbook offers real strategies that work. Not vague recommendations or one-size-fits-all advice, but actionable steps drawn from working with teams who’ve successfully optimized their AWS usage while maintaining secure, scalable environments.

Let’s dive straight into what matters.

Why AWS Bills Are Still Rising, Even When Usage Doesn’t

If you’ve already tried “right-sizing” or turning off idle instances, you’re not alone. These are common first steps. But AWS billing remains confusing, and for many startups, costs keep creeping up. Why?

Over-provisioning for peak demand without ever scaling back.

Data storage left unchecked, especially S3 buckets and EBS snapshots that never get cleaned up.

Dev/test environments running 24/7, even when unused.

Ineffective tagging policies, making it impossible to trace who owns what.

Security misconfigurations leading to duplicated services or manual workarounds.

The result? You’re spending more on AWS than you should, with no clear plan to stop the bleeding.

Strategy 1: Align Cost Optimization With AWS Security Best Practices

Security and cost optimization are more connected than most realize. Misconfigured roles, unused permissions, and unrestricted access often lead to excess resource usage or, worse, breaches that trigger emergency spending.

Here’s what to do:

Use AWS IAM wisely: Remove unused users and enforce least privilege policies. Overly permissive access increases risk and often leads to manual, redundant provisioning.

Enable multi-factor authentication (MFA): Helps prevent unauthorized access that could result in costly infrastructure changes.

Activate AWS CloudTrail and Config: Logging isn’t just about compliance—it helps you spot unexpected provisioning and rollback patterns that waste budget.

Run regular security audits using AWS Security Hub and Trusted Advisor. These tools often surface inefficiencies that tie directly to unnecessary spend.

These are not just security best practices. They’re cost-saving levers in disguise.
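The "remove unused users" and MFA checks above can be driven from the IAM credential report, which AWS provides as a CSV. The sketch below parses a small inline sample rather than calling AWS; the sample rows are invented, only a few of the report's columns are shown, and the 90-day idle window is an assumption to adjust.

```python
import csv
import io
from datetime import datetime, timedelta, timezone

# Invented sample using a subset of the credential report's columns.
SAMPLE_REPORT = """user,password_last_used,access_key_1_last_used_date,mfa_active
alice,2025-05-28T09:00:00+00:00,N/A,true
bob,2024-11-02T10:30:00+00:00,2024-12-01T08:00:00+00:00,false
ci-bot,N/A,N/A,false
"""


def parse_timestamp(value):
    """Return a datetime, or None for placeholders such as 'N/A'."""
    try:
        return datetime.fromisoformat(value)
    except ValueError:
        return None


def stale_users(report_csv, now, max_idle=timedelta(days=90)):
    """Users with no recorded activity, or none within max_idle.

    Never-used accounts are flagged too, which includes newly created ones.
    """
    stale = []
    for row in csv.DictReader(io.StringIO(report_csv)):
        columns = ("password_last_used", "access_key_1_last_used_date")
        seen = [parse_timestamp(row[c]) for c in columns]
        seen = [t for t in seen if t is not None]
        if not seen or now - max(seen) > max_idle:
            stale.append(row["user"])
    return stale


def users_without_mfa(report_csv):
    rows = csv.DictReader(io.StringIO(report_csv))
    return [row["user"] for row in rows if row["mfa_active"] != "true"]


NOW = datetime(2025, 6, 1, tzinfo=timezone.utc)
print("stale:", stale_users(SAMPLE_REPORT, NOW))
print("no MFA:", users_without_mfa(SAMPLE_REPORT))
```

Treat the output as a review list, not a deletion list: service accounts and break-glass users often look idle on purpose.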

Strategy 2: Get Visibility With Tagging and Resource Ownership

Many AWS cost problems stem from a simple issue: no one knows who owns what. Without clear tagging, you’re flying blind.

Define a consistent tagging strategy across all projects and environments (e.g., Owner, Project, Environment, CostCenter).

Automate tag enforcement with tools like AWS Service Catalog or tag policies in AWS Organizations.

Use AWS Cost Explorer and set up reports based on your tags. This gives you clarity on which teams or features are driving costs.

Once ownership becomes visible, optimization becomes everyone’s job—not just yours.
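A tagging strategy only helps if someone checks it. As a minimal sketch, the function below reports which of the four example tags (Owner, Project, Environment, CostCenter) are missing from each resource; the resource IDs and tag values are hypothetical, and in practice the input would come from your inventory or tagging API.

```python
REQUIRED_TAGS = ("Owner", "Project", "Environment", "CostCenter")


def missing_tags(tags):
    """Required tag keys that are absent or empty on one resource."""
    return [key for key in REQUIRED_TAGS if not tags.get(key)]


def compliance_report(resources):
    """Map resource ID -> missing tags, listing only non-compliant resources."""
    report = {}
    for resource_id, tags in resources.items():
        missing = missing_tags(tags)
        if missing:
            report[resource_id] = missing
    return report


# Hypothetical inventory: resource ID -> tag mapping.
resources = {
    "i-0abc": {"Owner": "api-team", "Project": "checkout",
               "Environment": "prod", "CostCenter": "cc-42"},
    "vol-123": {"Owner": "data-team"},
    "bucket-x": {},
}
report = compliance_report(resources)
for resource_id, missing in report.items():
    print(f"{resource_id}: missing {', '.join(missing)}")
```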

Strategy 3: Optimize EC2 and RDS With Smarter Scheduling

One of the simplest and most overlooked tactics is scheduling. Your dev and staging environments don’t need to run 24/7.

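Use Instance Scheduler on AWS to automatically start and stop environments based on team working hours.

Stop non-production RDS databases when they are idle. RDS lets you stop and start an instance, but a stopped instance is started again automatically after seven days, so the stop needs to be scheduled rather than done once.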

Benchmark EC2 instance types regularly. AWS releases newer generations frequently, and the same workload can often run cheaper on newer instances.

Small tweaks here save thousands over the course of a year—especially if you’re scaling fast.
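To see why scheduling matters, it helps to put numbers on it. The sketch below shows the kind of decision a scheduler makes and how many instance-hours a weekday-only schedule removes; the 08:00 to 20:00, Monday to Friday window is an assumed example, and storage attached to stopped instances is still billed.

```python
from datetime import datetime

HOURS_PER_WEEK = 24 * 7


def should_run(now, start_hour=8, end_hour=20, workdays=(0, 1, 2, 3, 4)):
    """True if a dev/staging environment should be up at this local time.

    weekday() returns 0 for Monday, so the default covers Monday to Friday.
    """
    return now.weekday() in workdays and start_hour <= now.hour < end_hour


def weekly_hours_saved(start_hour=8, end_hour=20, workdays=5):
    """Instance-hours per week no longer running, compared with 24/7."""
    return HOURS_PER_WEEK - (end_hour - start_hour) * workdays


saved = weekly_hours_saved()
print(f"hours saved per week: {saved} of {HOURS_PER_WEEK}")
print(f"share of compute hours removed: {saved / HOURS_PER_WEEK:.0%}")
print(should_run(datetime(2025, 6, 2, 9, 0)))  # a Monday morning
```

With the default window, an environment runs 60 of 168 hours a week, so roughly two thirds of its compute hours disappear.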

Strategy 4: Cut Storage Waste Before It Becomes a Liability

Storage grows silently. And because it’s cheap, it’s often ignored—until it isn’t.

Regularly audit S3 buckets for unused objects or multipart uploads that were never completed.

Enable S3 Lifecycle policies to automatically move older data to cheaper storage classes such as S3 Standard-IA or S3 Glacier.

Delete unused EBS volumes and snapshots. Use Amazon Data Lifecycle Manager to automate cleanup.

This isn’t just about saving money. It’s about keeping your architecture clean, secure, and maintainable.
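The S3 points above (tiering older data and cleaning up incomplete multipart uploads) map onto a single lifecycle rule. The sketch below only builds the configuration as a Python dict in the shape that boto3's `put_bucket_lifecycle_configuration` accepts for its `LifecycleConfiguration` argument; it does not call AWS. The rule ID and the 30/90/7-day values are assumptions to tune for your data.

```python
import json


def build_lifecycle_configuration(ia_days=30, glacier_days=90, abort_multipart_days=7):
    """Return a bucket-wide lifecycle configuration as a plain dict."""
    if glacier_days <= ia_days:
        raise ValueError("glacier_days must be greater than ia_days")
    return {
        "Rules": [
            {
                "ID": "tier-and-clean-up",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: applies to every object
                "Transitions": [
                    {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_days, "StorageClass": "GLACIER"},
                ],
                # Drop the parts of uploads that were started but never completed.
                "AbortIncompleteMultipartUpload": {
                    "DaysAfterInitiation": abort_multipart_days
                },
            }
        ]
    }


config = build_lifecycle_configuration()
print(json.dumps(config, indent=2))
```

Review retrieval patterns before applying a rule like this, since archived objects cost more and take longer to read back.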

Strategy 5: Use Reserved Instances and Savings Plans—But Strategically

Buying Reserved Instances (RIs) or Savings Plans can save you up to 72% compared with On-Demand pricing, but they come with a catch: commitment.

Only commit after you’ve stabilized usage patterns. Don’t buy RIs based on current over-provisioned setups.

Use AWS Cost Explorer’s recommendations to guide you—but also verify them against your team’s future roadmap.

Mix and match: Use On-Demand for variable workloads, Savings Plans for consistent usage, and Spot Instances for dev/test where interruption is acceptable.

This layered approach helps you avoid locking in waste.
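The "catch" of commitment can be made concrete with a break-even calculation: a commitment is paid for every hour, used or not, so it only wins if the workload runs for more than a certain share of the time. This is a simplified model with assumed prices and a flat 730-hour month, not actual AWS pricing.

```python
def breakeven_utilization(discount):
    """Share of hours a workload must run for a commitment to beat On-Demand.

    discount is the fractional saving against On-Demand, e.g. 0.25 for 25%.
    """
    return 1 - discount


def monthly_costs(on_demand_hourly, discount, hours_used, hours_in_month=730):
    """Return (on_demand_cost, committed_cost) for one month.

    The committed cost is charged for every hour of the month, used or not.
    """
    on_demand = on_demand_hourly * hours_used
    committed = on_demand_hourly * (1 - discount) * hours_in_month
    return on_demand, committed


# Assumed example: 1.00 USD/hour On-Demand, 25% discount, used half the month.
on_demand, committed = monthly_costs(1.0, 0.25, hours_used=365)
print(f"on-demand: {on_demand:.2f} USD, committed: {committed:.2f} USD")
print(f"break-even utilization: {breakeven_utilization(0.25):.0%}")
```

In this example the workload runs 50% of the time but the commitment needs 75% utilization to pay off, which is the "locking in waste" case described above.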

Strategy 6: Bring Devs Into the Cost Conversation

If your developers treat AWS like an unlimited credit card, it’s not their fault—it’s the culture. Make cost a shared responsibility.

Integrate cost insights into your CI/CD pipeline. Tools like Infracost can estimate costs before deploying infrastructure changes.

Set budgets and alerts in AWS Budgets. Let devs see when they’re nearing thresholds.

Run monthly cost reviews with the engineering team, not just finance. Share learnings and encourage ownership.

When cost becomes part of engineering decisions, optimizations multiply.
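Budget alerts are easy to reason about once written down. The sketch below checks which alert thresholds a team has crossed and projects month-end spend with a simple linear run rate; the thresholds and figures are assumptions, and a managed service such as AWS Budgets would normally do this for you.

```python
def crossed_thresholds(spend, budget, thresholds=(0.5, 0.8, 1.0)):
    """Return the alert thresholds (as fractions of budget) already reached."""
    ratio = spend / budget
    return [t for t in thresholds if ratio >= t]


def forecast_month_end(spend_to_date, day_of_month, days_in_month):
    """Linear run-rate projection of spend at the end of the month."""
    return spend_to_date / day_of_month * days_in_month


alerts = crossed_thresholds(spend=850, budget=1000)
projection = forecast_month_end(spend_to_date=500, day_of_month=10, days_in_month=30)
print("thresholds crossed:", alerts)
print("projected month-end spend:", projection)
```

Surfacing the projection alongside the raw spend gives developers warning before a threshold is crossed, not after.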

The Real Challenge: Connecting Optimization to Your Business Goals

You’re not optimizing AWS for fun. You’re doing it to extend your runway, hit growth targets, and scale efficiently.

That’s why security, visibility, and cost controls can’t live in separate silos; they need to work together as part of your core architecture.

The most effective startup CTOs in 2025 are the ones who treat AWS cost optimization as an ongoing discipline, not a one-time fix. It’s a continuous loop of feedback, accountability, and smarter decisions.

And we’ve seen the results firsthand.

We’ve helped several CTOs reduce AWS costs by 20 to 40 percent without adding DevOps headcount or sacrificing scalability. These aren’t just abstract benchmarks. They’re backed by real outcomes.

See how our clients are saving big on AWS, no fluff, just data and results.

What’s Next: Get a Personalized Cost Optimization Review

If you’re still stuck with rising AWS bills despite best efforts, it may be time to get an outside perspective. We offer a free 30-minute AWS cost optimization session where our team reviews your setup, identifies hidden inefficiencies, and delivers a tailored savings plan.

We’ve helped teams reduce their AWS spend by 20 to 40 percent within weeks, without compromising security or performance.

Book your free 30-minute AWS cost optimization session now and unlock the real potential of your AWS environment.

Know more at https://logiciel.io/blog/2025-aws-cost-optimization-playbook-real-strategies-that-work

Video

youtube

IAM User & Policy Setup to Access S3 Bucket | Step by Step Tutorial

Check out this new video on the CodeOneDigest YouTube channel! Learn how to create IAM User & Policy in AWS to access S3 Bucket. @codeonedigest @awscloud @AWSCloudIndia @AWS_Edu @AWSSupport @AWS_Gov @AWSArchitecture

#youtube#aws iam user#aws iam policy#aws iam user permission#aws iam user setup#aws iam policy setup

Text

Unlocking the power of cloud computing with Imobisoft

Cloud computing empowers businesses with scalable, on-demand access to data storage and computing resources without the hassles of managing hardware. Imobisoft specialises in delivering robust cloud solutions across all major platforms including AWS, Azure, and Google Cloud.

Core Cloud Platforms Supported

Microsoft Azure offers a versatile platform of over 600 services. Imobisoft enhances automation and supports services spanning data, compute, networking, and development.

Amazon Web Services provides a wide range of offerings, from EC2, S3, and Lambda to IAM and VPC. Imobisoft helps businesses keep spending efficient under the pay-only-for-what-you-use model.

Google Cloud Platform delivers more than 90 IT services including virtual machines, Cloud Storage, Kubernetes Engine, and serverless functions. Imobisoft helps businesses gain global accessibility and high availability.

Service Layers: IaaS, PaaS, and SaaS

1. IaaS – Infrastructure as a Service: These services provide virtualised compute, networking, and storage with full user control. Benefits include flexibility, scalability, and cost-efficiency through pay-as-you-go models. Imobisoft manages cloud resources on AWS, Azure, and GCP.

2. PaaS – Platform as a Service: PaaS abstracts the underlying infrastructure, allowing developers to focus on creating and deploying applications. Imobisoft supports rapid development and deployment using services like App Engine, Web Apps, and Lambda.

3. SaaS – Software as a Service: SaaS offers ready-to-use software accessible from any browser. Ideal for collaboration, e-commerce, and startups, Imobisoft helps businesses implement efficient and scalable solutions.

Why Choose Imobisoft

Expertise across all top-tier cloud platforms

Tailored solutions whether your need is IaaS, PaaS, or SaaS

Focus on efficiency with scalable resources and maximum ROI

Seamless automation, robust security, and expert migration strategies

By partnering with Imobisoft, businesses gain a trusted ally in building modern, resilient systems. Whether you are just starting or scaling up, Imobisoft delivers dependable results with a strong focus on cloud infrastructure.

#cloudsolutions#cloudcomputingservices#azuredevelopment#awsintegration#gcpcloud#iaas#paas#saas#cloudinfrastructure#cloudmigration

Text

How to Detect, Prevent, and Audit Cybersecurity Risks

Cybersecurity threats have evolved into a persistent, intelligent force—exploiting everything from weak cloud settings to stolen credentials sold on the dark web. Organizations today require more than antivirus software and firewalls to protect their data and digital assets.

risikomonitor.com GmbH delivers a cutting-edge security platform designed to tackle today’s most critical risks with features including security risk detection, data breach prevention, darknet penetration testing, and cloud security audits.

This blog breaks down how these four pillars work together to protect your infrastructure from the inside out—and why proactive cyber risk management is non-negotiable in a hyperconnected world.

Security Risk Detection: Your Early Warning System

Before a hacker ever breaks in, there are usually signs—suspicious access attempts, unusual network traffic, unpatched software, or a misconfigured firewall. But many businesses miss these early indicators due to a lack of proper visibility.

Security risk detection by risikomonitor.com GmbH is designed to catch issues before they escalate. The platform uses automated scans, behavioral analytics, and threat intelligence to:

Monitor endpoints, servers, cloud apps, and databases

Detect vulnerabilities, misconfigurations, and access anomalies

Correlate threat behavior using AI-based rules

Alert IT teams in real time with risk severity scores

Provide automated prioritization for mitigation

This proactive approach means your organization isn’t waiting for a breach to act—you're identifying and neutralizing threats at the first sign of exposure.

Data Breach Prevention: Protecting What Matters Most

A data breach can destroy customer trust, bring regulatory fines, and derail business continuity. From insider leaks to credential theft, threats come in many forms—and businesses must be prepared on all fronts.

risikomonitor.com GmbH focuses on data breach prevention through:

Continuous monitoring of sensitive data access

Encryption audit trails and multi-layer access controls

Monitoring of shadow IT and unauthorized data movements

Phishing simulations and user awareness testing

Breach response planning and automated incident reporting

By protecting data at rest, in transit, and during use, the platform ensures that your critical information doesn’t become tomorrow’s headline.

Darknet Penetration Testing: Uncover Threats Lurking in the Shadows

Cybercriminals often operate in hard-to-reach areas of the internet known as the darknet. This is where stolen credentials, exploits, and sensitive data are bought and sold—often before companies even know they've been compromised.

Darknet penetration testing from risikomonitor.com GmbH allows organizations to:

Detect exposed company credentials and leaked data

Monitor darknet forums and marketplaces for brand mentions

Simulate attacks using the same tools and tactics hackers use

Identify third-party breaches that may impact your supply chain

Receive alerts if your domains, subdomains, or employee emails appear in breach dumps

This offensive security strategy helps companies stay one step ahead of adversaries—by thinking like them.

Cloud Security Audit: Securing the Digital Backbone

The shift to cloud computing offers scalability and efficiency—but it also introduces unique security challenges. Misconfigured cloud services, unmanaged identities, and lack of visibility can open serious security gaps.

A cloud security audit by risikomonitor.com GmbH helps organizations assess and fortify their cloud environments across AWS, Azure, Google Cloud, and more.

Features include:

Identity and access management (IAM) reviews

Configuration assessments and misconfiguration detection

API security analysis

Data encryption and compliance mapping (e.g., GDPR, known in Germany as DSGVO, and ISO 27001)

Audit logs for ongoing cloud activity tracking

The goal isn’t just to “check a box” for cloud security—but to build a secure-by-design architecture that grows with your business.

Why Choose risikomonitor.com GmbH?

risikomonitor.com GmbH stands apart by offering a fully integrated cybersecurity platform that blends proactive detection, real-world testing, and cloud-first security best practices.

With capabilities including:

AI-powered security risk detection

Real-time data breach prevention features

Deep darknet penetration testing

Automated, thorough cloud security audits

the platform empowers CISOs, IT managers, and compliance teams to build resilient, regulation-ready defenses—without needing dozens of disconnected tools.

Whether you’re a fintech startup or an enterprise with hybrid infrastructure, this solution provides the clarity and control required in today’s threat landscape.

Final Thoughts

Modern cybersecurity is not about hoping you won't be attacked—it's about preparing for when you are. This means investing in security risk detection, conducting cloud security audits, continuously testing your exposure through darknet penetration testing, and building robust data breach prevention strategies.

With risikomonitor.com GmbH, businesses gain a trusted partner in navigating the complex, ever-changing world of cyber threats. It's not just about reacting—it's about anticipating, adapting, and staying secure.

Text

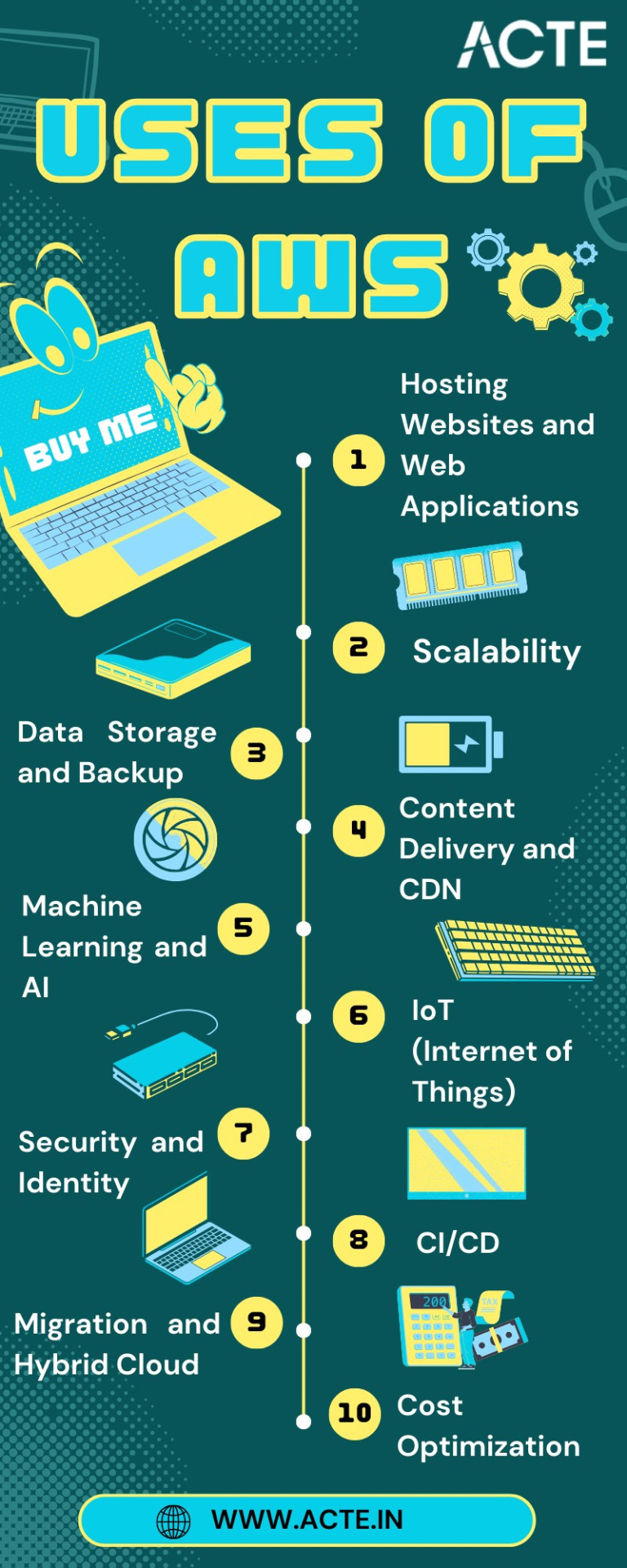

Your Journey Through the AWS Universe: From Amateur to Expert

In the ever-evolving digital landscape, cloud computing has emerged as a transformative force, reshaping the way businesses and individuals harness technology. At the forefront of this revolution stands Amazon Web Services (AWS), a comprehensive cloud platform offered by Amazon. AWS is a dynamic ecosystem that provides an extensive range of services, designed to meet the diverse needs of today's fast-paced world.

This guide is your key to unlocking the potential of AWS. We'll embark on a journey through the AWS universe, exploring its multifaceted applications and gaining insights into why it has become an indispensable tool for organizations worldwide. Whether you're a seasoned IT professional or a newcomer to cloud computing, this resource will illuminate the path to mastering AWS and leveraging its capabilities for innovation and growth. Join us as we demystify AWS and discover how it is reshaping the way we work, innovate, and succeed in the digital age.

Navigating the AWS Universe:

Hosting Websites and Web Applications: AWS provides a secure and scalable environment for hosting websites and web applications. Services like Amazon EC2 and Amazon S3 let businesses deploy and manage their online presence with high reliability and performance.

Scalability: At the core of AWS lies its remarkable scalability. Organizations can seamlessly adjust their infrastructure according to the ebb and flow of workloads, ensuring optimal resource utilization in today's ever-changing business environment.

Data Storage and Backup: AWS offers a suite of robust data storage solutions, including Amazon S3 and Amazon EBS. These services cater to a wide range of data types and are designed for high durability and availability.

Databases: AWS presents a panoply of database services such as Amazon RDS, DynamoDB, and Redshift, each tailored to meet specific data management requirements. Whether it's a relational database, a NoSQL database, or data warehousing, AWS offers a solution.

Content Delivery and CDN: Amazon CloudFront, AWS's content delivery network (CDN) service, ushers in global content distribution with minimal latency and blazing data transfer speeds. This ensures an impeccable user experience, irrespective of geographical location.

Machine Learning and AI: AWS boasts a rich repertoire of machine learning and AI services. Amazon SageMaker simplifies the development and deployment of machine learning models, while pre-built AI services cater to natural language processing, image analysis, and more.

Analytics: In the heart of AWS's offerings lies a robust analytics and business intelligence framework. Services like Amazon EMR enable the processing of vast datasets using popular frameworks like Hadoop and Spark, paving the way for data-driven decision-making.

IoT (Internet of Things): AWS IoT services provide the infrastructure for the seamless management and data processing of IoT devices, unlocking possibilities across industries.

Security and Identity: With an unwavering commitment to data security, AWS offers robust security features and identity management through AWS Identity and Access Management (IAM). Users wield precise control over access rights, ensuring data integrity.

DevOps and CI/CD: AWS simplifies DevOps practices with services like AWS CodePipeline and AWS CodeDeploy, automating software deployment pipelines and enhancing collaboration among development and operations teams.

Content Creation and Streaming: AWS Elemental Media Services facilitate the creation, packaging, and efficient global delivery of video content, empowering content creators to reach a global audience seamlessly.

Migration and Hybrid Cloud: For organizations seeking to migrate to the cloud or establish hybrid cloud environments, AWS provides a suite of tools and services to streamline the process, ensuring a smooth transition.

Cost Optimization: AWS's commitment to cost management and optimization is evident through tools like AWS Cost Explorer and AWS Trusted Advisor, which empower users to monitor and control their cloud spending effectively.

In this comprehensive journey through the expansive landscape of Amazon Web Services (AWS), we've embarked on a quest to unlock the power and potential of cloud computing. AWS, standing as a colossus in the realm of cloud platforms, has emerged as a transformative force that transcends traditional boundaries.

As we bring this odyssey to a close, one thing is abundantly clear: AWS is not merely a collection of services and technologies; it's a catalyst for innovation, a cornerstone of scalability, and a conduit for efficiency. It has revolutionized the way businesses operate, empowering them to scale dynamically, innovate relentlessly, and navigate the complexities of the digital era.

In a world where data reigns supreme and agility is a competitive advantage, AWS has become the bedrock upon which countless industries build their success stories. Its versatility, reliability, and ever-expanding suite of services continue to shape the future of technology and business.

Yet, AWS is not a solitary journey; it's a collaborative endeavor. Institutions like ACTE Technologies play an instrumental role in helping individuals master AWS. Through comprehensive training and education, learners gain not only knowledge but also the practical skills to navigate the AWS universe with confidence.

As we contemplate the future, one thing is certain: AWS is not just a destination; it's an ongoing journey. It's a journey toward greater innovation, deeper insights, and boundless possibilities. AWS has not only transformed the way we work; it's redefining the very essence of what's possible in the digital age. So, whether you're a seasoned cloud expert or a newcomer to the cloud, remember that AWS is not just a tool; it's a gateway to a future where technology knows no bounds, and success knows no limits.

Text

Transform Your IT Skills with a Premier AWS Cloud Course in Pune

Cloud computing is no longer a trend—it's the new normal. With organizations around the world migrating their infrastructure to the cloud, the demand for professionals skilled in Amazon Web Services (AWS) continues to rise. If you're in Pune and aiming to future-proof your career, enrolling in a premier AWS Cloud Course in Pune at WebAsha Technologies is your smartest career move.

The Importance of AWS in Today’s Cloud-First Economy

Amazon Web Services (AWS) is the world’s most widely adopted cloud platform, offering a range of services including computing, storage, databases, and machine learning. The reason AWS is favored by industry giants is its performance, scalability, and global infrastructure.

Why You Should Learn AWS:

Over 1 million active AWS users across industries

Top certifications that rank among the highest-paying globally

Opens doors to roles in DevOps, cloud security, architecture, and more

Flexible enough for both beginners and experienced IT professionals

Get Trained by the Experts at WebAsha Technologies

At WebAsha Technologies, we believe that quality training can change the trajectory of a career. Our AWS Cloud Course in Pune is designed for those who want to gain deep, real-world experience, not just theoretical knowledge.

What Sets Us Apart:

AWS-certified instructors with industry insights

Real-time AWS lab access and project-based learning

Regular assessments and mock tests for certification readiness

Dedicated support for interviews, resume prep & job referrals

Flexible batches: weekday, weekend, and fast-track options

Course Outline: What You’ll Master

Our AWS curriculum is aligned with the latest cloud trends and certification paths. Whether you're preparing for your first AWS certification or looking to deepen your skills, our course covers everything you need.

Key Learning Areas Include:

Understanding Cloud Concepts and AWS Ecosystem

Launching EC2 Instances and Managing Elastic IPs

Data Storage with S3, Glacier, and EBS

Virtual Private Cloud (VPC) Configuration

Security Best Practices using IAM

AWS Lambda and Event-Driven Architecture

Database Services: Amazon RDS & DynamoDB

Elastic Load Balancers & Auto Scaling

AWS Monitoring with CloudWatch & Logs

CodePipeline, CodeBuild & Continuous Integration

Who Should Take This AWS Cloud Course in Pune?

This course is ideal for a wide range of learners and professionals:

Engineering students and IT graduates

Working professionals aiming to switch domains

System admins looking to transition to cloud roles

Developers building scalable cloud-native apps

Entrepreneurs running tech-enabled startups

No prior cloud experience? No problem! Our course starts from the basics and gradually advances to deployment-level projects.

AWS Certifications Covered

We help you prepare for industry-standard certifications that are globally recognized:

AWS Certified Cloud Practitioner

AWS Certified Solutions Architect – Associate

AWS Certified SysOps Administrator – Associate

AWS Certified Developer – Associate

AWS Certified DevOps Engineer – Professional

Passing these certifications boosts your credibility and employability in the global tech market.

Why Pune is a Hotspot for AWS Careers

Pune’s growing IT ecosystem makes it a perfect launchpad for aspiring cloud professionals. With tech parks, global companies, and startups booming in the city, AWS-certified candidates have access to abundant job openings and career growth opportunities.

Conclusion: Take the Leap into the Cloud with WebAsha Technologies

The future of IT is in the cloud, and AWS is leading the way. If you're ready to make a change, gain in-demand skills, and advance your career, the AWS Cloud Course in Pune by WebAsha Technologies is your gateway to success.

Text

The ROI of Investing in AWS Security Services for Long-Term Risk Reduction

In today’s digital-first world, businesses are racing to the cloud for flexibility, innovation, and scalability—but with cloud adoption comes new security challenges. Cyber threats are more sophisticated than ever, and the financial consequences of data breaches, downtime, or regulatory non-compliance can cripple a business. That’s why forward-thinking organizations are investing in Amazon Web Services (AWS) Security Services—not just for protection, but for long-term risk reduction and return on investment (ROI).

This article explores how AWS security services safeguard your digital assets and why investing in them yields measurable financial and operational benefits over time.

Why Cloud Security is No Longer Optional

With more data moving to the cloud, securing that data is now a business-critical priority, not just an IT concern. According to IBM’s 2024 Cost of a Data Breach Report, the average cost of a data breach globally was USD 4.88 million, with cloud environments accounting for a growing share of those incidents.

As the leading cloud provider, AWS operates under a shared responsibility model: AWS secures the underlying infrastructure, while you are responsible for securing your workloads, data, and access policies. AWS Security Services exist to help you meet your part of that responsibility.

Overview of AWS Security Services

AWS provides a comprehensive portfolio of security services designed to protect your cloud infrastructure, detect threats early, and ensure compliance. Key services include:

AWS Identity and Access Management (IAM) – Manage user access and permissions securely.

Amazon GuardDuty – Intelligent threat detection for workloads and accounts.

AWS Shield & WAF – DDoS protection and web application firewall services.

AWS Security Hub – Centralized view of security posture across AWS services.

Amazon Inspector – Automated security assessments for EC2 and container workloads.

AWS Key Management Service (KMS) – Easy creation and control of encryption keys.

AWS Config & CloudTrail – Monitor and audit resource configurations and activity.

Each of these services contributes not only to security but also to financial risk mitigation and cost avoidance—key factors in determining ROI.

The Business Case for Investing in AWS Security

Security is often seen as a cost center—but when framed correctly, it’s a strategic investment that yields long-term savings and protects brand equity, customer trust, and operational continuity.
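One common way to put a number on that framing is return on security investment (ROSI), which compares the expected annual loss avoided with what the controls cost. The sketch below is illustrative only: the function name and every figure in it are hypothetical, not data from this article or from AWS.

```python
def rosi(annual_loss_expectancy: float, risk_reduction: float, annual_cost: float) -> float:
    """Return on security investment as a fraction (0.5 means 50%).

    annual_loss_expectancy: expected yearly loss with no additional controls
    risk_reduction: share of that loss the controls are assumed to prevent (0..1)
    annual_cost: yearly spend on the controls
    """
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    if not 0 <= risk_reduction <= 1:
        raise ValueError("risk_reduction must be between 0 and 1")
    avoided_loss = annual_loss_expectancy * risk_reduction
    return (avoided_loss - annual_cost) / annual_cost


# Hypothetical inputs: 500,000 expected yearly loss, controls assumed to
# prevent 60% of it, and 120,000 per year spent on security services.
example = rosi(500_000, 0.6, 120_000)
print(f"ROSI: {example:.0%}")  # ROSI: 150%
```

A positive result means the avoided loss exceeds the spend. The estimate is only as good as the loss and risk-reduction assumptions fed into it.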

Here’s how AWS security services deliver a tangible return:

1. Reducing the Risk and Cost of Data Breaches

A successful cyberattack can result in data loss, legal penalties, reputational damage, and loss of customer confidence. By leveraging services like Amazon GuardDuty and Amazon Inspector, you can:

Detect threats early before they escalate.

Automate vulnerability scans and remediation.

Maintain continuous compliance and auditing.

👉 ROI Impact: Early detection and prevention reduce the high costs of incident response and limit legal exposure from non-compliance.
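Early detection only pays off if the important findings get attention first. Here is a minimal triage sketch in plain Python. It assumes findings arrive as dictionaries with `Id`, `Type`, and numeric `Severity` fields, as in GuardDuty's finding format; the severity bands and the sample records are assumptions for illustration, so check them against the current GuardDuty documentation.

```python
from typing import Iterable


def severity_label(score: float) -> str:
    """Map a numeric severity score to a label (bands are assumed)."""
    if score >= 9.0:
        return "CRITICAL"
    if score >= 7.0:
        return "HIGH"
    if score >= 4.0:
        return "MEDIUM"
    return "LOW"


def urgent_findings(findings: Iterable[dict], minimum: float = 7.0) -> list[dict]:
    """Return findings at or above `minimum`, most severe first."""
    selected = [f for f in findings if f.get("Severity", 0) >= minimum]
    return sorted(selected, key=lambda f: f["Severity"], reverse=True)


# Made-up sample records; the severities are illustrative, not real output.
sample = [
    {"Id": "a1", "Type": "Recon:EC2/PortProbeUnprotectedPort", "Severity": 2.0},
    {"Id": "b2", "Type": "UnauthorizedAccess:IAMUser/ConsoleLoginSuccess.B", "Severity": 5.0},
    {"Id": "c3", "Type": "CryptoCurrency:EC2/BitcoinTool.B!DNS", "Severity": 8.0},
]

for finding in urgent_findings(sample):
    print(finding["Id"], severity_label(finding["Severity"]), finding["Type"])
```

In practice the list would come from the GuardDuty API or an event rule rather than a hard-coded sample.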

2. Minimizing Downtime and Business Disruption

DDoS attacks, insider threats, and misconfigurations can cause service outages that lead to lost revenue and customer dissatisfaction. AWS Shield Advanced and AWS WAF address the first of these, protecting against DDoS attacks and malicious web traffic:

Automatically mitigate volumetric attacks.

Filter malicious web traffic at the edge.

Integrate with Amazon CloudFront so that rules are enforced at edge locations before traffic reaches your origin.

👉 ROI Impact: Maintaining uptime ensures uninterrupted business operations, improved customer retention, and preservation of SLAs (Service Level Agreements).
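To make the traffic-filtering idea concrete, the sketch below builds a rate-based blocking rule as a plain Python dictionary. The field names follow the AWS WAF (WAFv2) rule JSON as commonly documented, but treat the exact shape as an assumption to verify; the rule name and the limit of 2000 requests are hypothetical.

```python
import json


def rate_limit_rule(name: str, limit: int, priority: int = 0) -> dict:
    """Build a rule that blocks an IP once it exceeds `limit` requests
    within the evaluation window."""
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {
            "RateBasedStatement": {"Limit": limit, "AggregateKeyType": "IP"}
        },
        "Action": {"Block": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": name,
        },
    }


# Hypothetical rule: block any single IP that sends more than 2000 requests.
rule = rate_limit_rule("throttle-per-ip", limit=2000)
print(json.dumps(rule, indent=2))
```

A rule like this would sit inside a web ACL attached to a CloudFront distribution or load balancer. The right limit depends on your normal traffic.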

3. Lower Operational Costs Through Automation

Manual security checks and compliance reporting drain internal resources. AWS services automate these processes:

AWS Security Hub aggregates findings from multiple tools and provides a unified dashboard.

AWS Config tracks resource changes for audit readiness.

IAM policies enforce access controls based on least privilege.

👉 ROI Impact: Reduced labor costs, fewer manual errors, and faster remediation translate into long-term cost savings and higher efficiency.
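As an illustration of least privilege, the sketch below generates an IAM policy document that allows reading from a single S3 bucket and nothing else. The helper name, bucket name, and prefix are hypothetical; the policy grammar (`Version`, `Statement`, `Effect`, `Action`, `Resource`) is standard IAM JSON.

```python
import json


def read_only_bucket_policy(bucket: str, prefix: str = "") -> dict:
    """Build an IAM policy allowing reads from one bucket (optionally one prefix)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }


# Hypothetical bucket and prefix, for illustration only.
policy = read_only_bucket_policy("example-reports-bucket", prefix="2024/")
print(json.dumps(policy, indent=2))
```

Generating policies from a small template like this keeps grants narrow and makes them easy to review in version control.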

4. Achieving and Maintaining Compliance

Compliance with standards such as GDPR, HIPAA, PCI DSS, or ISO 27001 requires continuous monitoring, logging, and reporting. AWS Security services help you:

Maintain detailed logs with AWS CloudTrail.

Generate compliance reports with minimal manual intervention.

Keep configurations aligned with best practices via AWS Config Rules.

👉 ROI Impact: Avoid costly fines, streamline audits, and demonstrate regulatory trustworthiness to customers and stakeholders.
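CloudTrail logs are JSON, so simple audit checks can be scripted. The sketch below flags successful console sign-ins that did not use MFA. It assumes the usual CloudTrail log layout (a top-level `Records` list, with `ConsoleLogin` events carrying `additionalEventData.MFAUsed`); the sample records, account ID, and user names are made up.

```python
import json


def logins_without_mfa(log_text: str) -> list[dict]:
    """Return successful ConsoleLogin events where MFA was not used."""
    records = json.loads(log_text).get("Records", [])
    flagged = []
    for record in records:
        if record.get("eventName") != "ConsoleLogin":
            continue
        succeeded = (record.get("responseElements") or {}).get("ConsoleLogin") == "Success"
        mfa_used = (record.get("additionalEventData") or {}).get("MFAUsed") == "Yes"
        if succeeded and not mfa_used:
            flagged.append({
                "time": record.get("eventTime"),
                "user": (record.get("userIdentity") or {}).get("arn"),
                "source_ip": record.get("sourceIPAddress"),
            })
    return flagged


# Made-up log content for illustration.
sample_log = json.dumps({
    "Records": [
        {
            "eventTime": "2024-01-01T10:00:00Z",
            "eventName": "ConsoleLogin",
            "sourceIPAddress": "203.0.113.10",
            "userIdentity": {"arn": "arn:aws:iam::111122223333:user/alice"},
            "responseElements": {"ConsoleLogin": "Success"},
            "additionalEventData": {"MFAUsed": "No"},
        },
        {
            "eventTime": "2024-01-01T11:00:00Z",
            "eventName": "ConsoleLogin",
            "sourceIPAddress": "203.0.113.11",
            "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"},
            "responseElements": {"ConsoleLogin": "Success"},
            "additionalEventData": {"MFAUsed": "Yes"},
        },
    ]
})

for login in logins_without_mfa(sample_log):
    print(login)
```

Treat the output as a starting point for review rather than a verdict, since how MFA is reported can vary with the sign-in method.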

5. Improved Customer Trust and Brand Reputation

Today’s customers are security-conscious. Demonstrating your commitment to protecting their data can be a competitive differentiator.

Use KMS and encryption-at-rest to show dedication to data privacy.

Provide secure access with IAM roles and multi-factor authentication (MFA).

Communicate your AWS-backed security framework as part of your value proposition.

👉 ROI Impact: Secure businesses gain more customer loyalty, command higher contract values, and reduce churn due to security concerns.
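One way to back up an MFA requirement is an IAM policy that denies sensitive actions unless the caller signed in with MFA. The sketch below builds such a policy using the `aws:MultiFactorAuthPresent` condition key. The helper name and the choice of EC2 actions are illustrative assumptions, not a recommended baseline.

```python
import json


def deny_without_mfa(actions: list[str]) -> dict:
    """Build an IAM policy that denies `actions` when the request was not
    authenticated with MFA."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyWithoutMFA",
                "Effect": "Deny",
                "Action": actions,
                "Resource": "*",
                # BoolIfExists also matches requests where the key is absent,
                # such as calls made with long-term access keys.
                "Condition": {
                    "BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}
                },
            }
        ],
    }


# Illustrative choice of actions to protect.
policy = deny_without_mfa(["ec2:StopInstances", "ec2:TerminateInstances"])
print(json.dumps(policy, indent=2))
```

Because an explicit deny overrides any allow, this statement can be attached alongside existing permissions without rewriting them.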

6. Scalability and Flexibility without Compromising Security

As your business grows, your attack surface does too. AWS Security Services scale seamlessly with your infrastructure.

Add users, policies, and workloads without recreating security configurations.

Enforce consistent security posture across accounts and regions using AWS Organizations and Service Control Policies (SCPs).

Manage secrets across environments with AWS Secrets Manager.

👉 ROI Impact: Scale with confidence while avoiding the cost of retrofitting security later.
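A Service Control Policy is a typical way to apply one guardrail to every account in an organization. The sketch below builds an SCP that denies requests outside an approved list of regions, using the `aws:RequestedRegion` condition key. The region list and the exempted global services are assumptions for illustration and would need tailoring to a real environment.

```python
import json


def region_guardrail(allowed_regions: list[str], exempt_actions: list[str]) -> dict:
    """Build an SCP that denies everything except `exempt_actions` when the
    request targets a region outside `allowed_regions`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideApprovedRegions",
                "Effect": "Deny",
                # NotAction leaves global services usable from any region.
                "NotAction": exempt_actions,
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {"aws:RequestedRegion": allowed_regions}
                },
            }
        ],
    }


# Hypothetical choices: two approved regions and a few global services.
scp = region_guardrail(
    allowed_regions=["ap-south-1", "eu-west-1"],
    exempt_actions=["iam:*", "organizations:*", "sts:*", "support:*"],
)
print(json.dumps(scp, indent=2))
```

Attached at the organization root or to an organizational unit, a policy like this applies to new accounts automatically, which is where the scaling benefit comes from.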

How to Maximize Your ROI with AWS Security

To get the most value from AWS Security Services:

✅ Conduct a Security Audit

Start with a review against the Security pillar of the AWS Well-Architected Framework to identify gaps and prioritize which AWS security services to implement first.

✅ Use Managed Security Services

Consider partnering with AWS security consulting experts or a managed security service provider (MSSP) to optimize configurations and ongoing monitoring.

✅ Train Your Team

Ensure your DevOps and IT staff are trained in AWS security best practices. AWS offers certification programs to boost internal expertise.

✅ Monitor and Adjust

Security is an ongoing journey. Use AWS Security Hub dashboards and alerts to track incidents, fine-tune policies, and adapt to emerging threats.

Final Thoughts

In an era where cyber risk is business risk, AWS Security Services provide more than just protection—they deliver measurable business resilience, operational continuity, and peace of mind. When you factor in the cost of breaches, compliance violations, downtime, and reputational loss, the ROI of investing in AWS Security is not only clear—it’s compelling.

By embracing AWS’s security-first approach, you position your organization for long-term success and reduce risk exposure in a constantly evolving threat landscape.

So, don’t wait for a breach to show you the value of security. Invest early, invest smart—with AWS Security Services.

#aws cloud service#aws services#aws security#aws web services#aws security services#AWS Consulting Partner#AWS experts#aws certified solutions architect
