#apiautomationtestingservices


Test Automation vs Manual Testing

In the software testing arena a perennial debate has raged between proponents of manual and automation testing. In our experience, the two are complementary; used together they form a more effective test strategy.

Manual testing

Since roughly the start of software development in the 1960s, manual testing has been carried out by teams of testers. In this technique, a team of people (QA testers) gets access to the latest software build and tests it to validate that the build works correctly. For feature testing, there are two broad categories of manual testing.

Test case based testing

In this approach, test cases are defined up front, before the next software build arrives. The manual team then works through the list, performing the actions each test case defines and validating that the test case, and hence the software build, behaves as expected. This technique requires more domain understanding when the test cases are created and less domain knowledge when they are executed.

Exploratory testing

Here, a manual QA tester who understands the domain of the software fairly well attempts to “break” the software (cause a bug to happen). Exploratory testing is an excellent complement to test case based testing. Together they result in a significant improvement in quality.

Automation testing

Since the 1990s, automation testing has risen as a strong alternative. In automation testing, a software tool is programmed (in Java, for example) to carry out the human actions that testers would perform in check-based testing. The process is typically to first create the test cases, as in manual check-based testing, and then to program them.
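As a minimal sketch of that two-step process, the example below first writes the test cases down as data, much as a manual team would, and then programs a small runner to execute them. It uses Python rather than Java for brevity, and the `login` function, the `ManualCase` type, and the credentials are hypothetical stand-ins for a real application under test.

```python
from dataclasses import dataclass


def login(username: str, password: str) -> str:
    """Hypothetical stand-in for the application under test."""
    if not username or not password:
        return "error: missing credentials"
    if username == "alice" and password == "s3cret":
        return "welcome alice"
    return "error: invalid credentials"


@dataclass(frozen=True)
class ManualCase:
    """One test case, written down before any automation exists."""
    name: str
    username: str
    password: str
    expected: str


# Step 1: define the test cases up front, as a manual team would.
TEST_CASES = [
    ManualCase("valid login", "alice", "s3cret", "welcome alice"),
    ManualCase("wrong password", "alice", "oops", "error: invalid credentials"),
    ManualCase("empty username", "", "s3cret", "error: missing credentials"),
]


def run_suite(cases):
    """Step 2: program the checks a tester would otherwise perform by hand."""
    results = {}
    for case in cases:
        actual = login(case.username, case.password)
        results[case.name] = actual == case.expected
    return results


if __name__ == "__main__":
    for name, passed in run_suite(TEST_CASES).items():
        print(f"{'PASS' if passed else 'FAIL'}: {name}")
```

Keeping the cases as plain data means the same list can still be handed to a manual tester, which fits the view that the two approaches complement each other.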

With Webomates CQ we have developed a service that incorporates the benefits of AI into TaaS (Testing as a Service). To hear more about Webomates CQ, schedule a demo here.


Can Artificial Intelligence Replace Humans in Software Testing?

Artificial intelligence is a computer science discipline that simulates intelligence in machines by making them think, act, and mimic human actions. The field has developed significantly: machines are being automated to take rational actions and to exhibit traits associated with humans. More and more algorithms are being created to mimic human intelligence and are embedded into machines. Software development and testing is an important area where artificial intelligence is applied. Just as digitalization has improved human efficiency, improvements in AI have shaped the way software is tested.

A 2016-2017 quality assurance report suggests that AI will help shape software testing by assisting humans in eliminating problems associated with QA and software testing challenges. If these needs are met, however, some fear that human testers will become extinct. This raises the question: “Can artificial intelligence replace humans in software testing?” Many software experts believe that artificial intelligence can only assist in software testing and cannot replace humans, because humans are still needed to think outside the box and explore inherent vulnerabilities in the software. Others disagree. After weighing both views, the former appears to be better supported.

Software testing continues to evolve with the adoption of Agile and DevOps methodologies, and software development will also continue to evolve in the era of AI. Artificial intelligence is charged with creating software that understands input data versus output data. This is similar to the tests carried out by human software testers, where the tester types in an input and looks for an expected output. Today, testing tools have evolved: automation tools can be used to create, organize, and prioritize test cases. Efficiently managing tests and their outcomes remains essential to giving developers the feedback they need.

Shortcomings of Humans in Software testing that can be positively transformed by Artificial intelligence

Although humans are considered a reliable source for software testing, they have their own shortcomings, which reduce their efficiency and performance as testers. These shortcomings are as follows:

Time-consuming: The primary disadvantage of software testing performed by humans is that it is time-consuming. Validating the functionality of software might take days or weeks; with the assistance of artificial intelligence, wasted time is kept to a minimum.

Limited possibilities of testing for manual scenarios: Artificial intelligence creates a broad scope for testing, in contrast to the limited scope available in human testing scenarios.

Lack of automation: Manual testing requires the presence of the software tester, but testing using artificial intelligence can proceed steadily without much human intervention.

Low productivity: In a large organization, productivity will be low without the help of automated, AI-based tools in software testing.

Limited accuracy: Manual testing is not always 100% accurate, because certain errors may elude the software tester. Some glitches in the software go unrecognized, and validation occurs only in certain areas while others are ignored. With AI, both coverage and accuracy can be improved.

Scalability issues: Manual testing is a linear process that happens in a sequential manner. This means that only one test can be created and run at a time; trying to create more tests for other functionalities simultaneously increases complexity.

What are the advantages and disadvantages of Artificial intelligence testing tools in software testing?

Artificial intelligence testing tools can work side by side with software testers to achieve improved quality in software testing. Modern applications interact with each other through a myriad of APIs, which grow steadily in complexity as technology evolves. The software development life cycle is becoming more complicated by the day, and managing delivery time remains important. Software testers therefore need to work smarter, not harder, in this new age of software development. Artificial intelligence testing tools have helped turn software releases and updates that happened once a month into weekly or daily events. An artificial intelligence testing platform can perform tests more efficiently than human beings, and with constant updates to its algorithms, even the slightest change in the software can be observed. But as much as artificial intelligence has achieved in the software testing industry, it has corresponding disadvantages. Some of these disadvantages are reasons why human contributions cannot be neglected.

Advantages:

Improved accuracy and efficiency: Even the most experienced software testers are bound to make mistakes. Because software testing is monotonous, errors are inevitable. This is where artificial intelligence tools help: they perform the same test steps accurately each time they are executed and provide detailed results and feedback. Testers are freed from monotonous manual tests, giving them more time to explore the application and to give input on improvements or usability.

Increased overall test coverage: Artificial intelligence testing tools can help increase the scope of tests, which results in an overall improvement in software quality. AI testing tools can scan through memory, file contents, and data tables to determine whether the software is behaving as expected, and can also provide more triage information or even a root cause.

Saved time + money = faster delivery to market: Software tests must be repeated every time a product is created or modified. Once a human tester has created and automated the tests, they can be rerun without repeating the manual effort, which saves time and money and helps achieve faster delivery. Integrating AI into software testing can reduce the overall time span, which translates directly into cost savings.

Going beyond the limitations of manual testing: AI testing tools or bots can automatically create tens, hundreds, or thousands of virtual users that interact with a network, software, or web-based application. This lets software testers execute a controlled web test with hundreds of users, breaking a limitation of manual testing.

Helps both developers and testers
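The "virtual users" advantage above can be illustrated with a rough sketch. It is not an AI tool, only a plain thread pool that runs many simulated users at once, and `handle_request` is a hypothetical stand-in for one user's interaction with the system under test; the 10 ms delay and the status code are assumptions made for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> int:
    """Hypothetical stand-in for one virtual user's interaction."""
    time.sleep(0.01)  # pretend the system takes about 10 ms to respond
    return 200        # HTTP-style "OK" status


def simulate_users(user_count: int, max_workers: int = 50) -> dict:
    """Run `user_count` virtual users concurrently and summarize the outcome."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        statuses = list(pool.map(handle_request, range(user_count)))
    elapsed = time.perf_counter() - started
    ok = statuses.count(200)
    return {
        "users": user_count,
        "ok": ok,
        "failed": user_count - ok,
        "seconds": round(elapsed, 3),
    }


if __name__ == "__main__":
    print(simulate_users(200))
```

A single manual tester can only act as one user at a time, whereas the sketch drives as many simulated users as the pool allows; in practice `handle_request` would be replaced by real calls to the application.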

Disadvantages:

Garbage in, garbage out (GIGO): Most people are looking to artificial intelligence to fix all their testing ills. They hope it will solve all the problems that manual testing has not been able to address. The simple fact is that a problem that cannot be solved manually cannot be solved by an AI tool either. AI tools can only solve problems that have already been solved manually and that they have been directed to solve digitally. An artificial intelligence tool can therefore only do what it is told to do and cannot go beyond that.

High costs: The high cost of acquiring AI tools, coupled with the cost of keeping them updated to meet the latest requirements, can make them inaccessible to individual testers or smaller organizations.

Can’t think outside the box: Automated software testers can only do what they are programmed to do. Their capability is limited and cannot go beyond the algorithms or programming they contain.

Unemployment: Many human software testers will no longer be needed for software testing jobs if most positions are taken over by automation tools. Fewer positions will then be available for human testers.

Areas where artificial intelligence can assist and dominate in software testing include the following:

Generating test case scenarios

Generating automation scripts

Predicting defects based on code changes

Reducing false failures in automation

Self-healing of automation scripts

and many more to come.

In conclusion, the assistance of artificial intelligence in software testing is significant and has helped achieve tremendous results, but human contributions remain indispensable. With every passing day, as artificial intelligence finds its way into software testing and other quality assurance fields, organizations are contemplating whether it should be adopted wholly within their quality assurance departments. Evidently, however, quality assurance cannot do without human contributions. The salient benefits of AI in software testing are these: it goes beyond the limitations of manual testing, it helps achieve improved accuracy and efficiency in bug and algorithm testing, and it saves the time and money involved in software development. In the long run, AI will not be confined to helping software testers, but will be applicable to all roles across software development to deliver top-quality software to the market. To answer the question posed in the title: the idea that AI will replace humans and make them extinct in software testing is fallacious.

Webomates offers a regression testing service, CQ, that uses manual testing (test case based and exploratory), automation, and crowdsourcing with artificial intelligence, not only to guarantee a full regression in under 24 hours but also to provide triaged defects. Our customers spend only 1 hour a week on a full regression of their software.

If you are interested in learning more about Webomates’ CQ service please click here and schedule a demo or reach out to us at [email protected].


Do’s and Don’ts of Automation Testing

Automated software testing is a technique that uses software scripts to simulate the end user and execute the tests, thus significantly accelerating the testing process. It has marked benefits in terms of accuracy, dependability, enhanced test coverage, and savings in time and effort. Automation helps reduce the duration of release cycles, albeit at a price. The initial cost of setting up an automated testing process acts as a deterrent to many cost-conscious organizations. However, it does prove beneficial in the long term, especially with CI/CD. Hence, it is important to follow certain best practices and avoid common mistakes while automating the testing process.

The following table gives a quick overview of what to do and what not to do while planning test automation. These points are elaborated in the paragraphs that follow.

| Do’s | Don’ts |
| --- | --- |
| Right team & tools | Automate everything |
| Application knowledge | Automate from day one |
| Right test cases | Solely rely on automation tools |
| Short & independent test scenarios | Ignore false failures |
| Prioritize test automation | Ignore scalability |
| Records of manual vs automation test cases | Ignore performance testing |
| Test data management | Delay updating modified test cases |
| Test case management | Multiple automation platforms |

What to do for successful Automation Testing

Choose the team and tools prudently

It is vital to have the right set of people working on the right tools to reap the maximum benefits from test automation.

Good knowledge of the application being tested

Team selection and testing tool selection depend on the kind of application being tested. Having in-depth knowledge of the application helps in making the right choices in terms of human, hardware, and software resources.


Overcome UAT Challenges with AI-Based Test Automation

Digitization and automation have revolutionized the way the world perceives services, devices, tools, etc. Automation has permeated almost all fields, be it applied sciences, finance, pure sciences, healthcare, research, education, retail, manufacturing, and the list goes on. Consequently, the demand for high-end software has risen exponentially. In this competitive world, software development organizations have to ensure the digital happiness of their customers for sustainable business and their continued patronage.

User acceptance testing is conducted to ensure that the application under development satisfies the acceptance criteria for specified user requirements, functionality expectations, and business scenarios.

Why is UAT important?

User acceptance testing is essential when an organization is contracting out its software development and looking to measure the delivered software against the original expectations.

Consider a scenario where a business venture outsources software development for their business unit within a stipulated time frame. After initial meetings between the stakeholders from both setups, the development process starts.

Frequent builds have to be rolled out to ensure that software is shaping up as per the customer’s expectations. Before the final rollout of the build for the customer, your business team wishes to conduct acceptance testing to ensure that there are no unexpected issues later since the cost of fixing defects after release is much higher.

Here, a speed bump is encountered, since no time or resources have been allocated to conduct user acceptance testing. If you have been tasked with the UAT for your company with no resources or time, we feel your pain!

You are now in a Catch-22. If you release the software without UAT, then there are chances of having defects in the build that goes to the end customer. And if UAT is conducted (which was not originally part of the development and delivery plan), then the whole release schedule gets delayed. And you have neither the time nor the resources to get this done. Either way your upper management is NOT going to be happy, as the project was critical enough to get funded and will impact the business if it does not get completed on time.

UAT adds value to business by validating all business requirements and ensuring that the end product is as per the customer specifications.

A comprehensive UAT nets the defects before the software is released. It ensures that the version that is finally delivered to the end-user is as per the customer’s expectations.

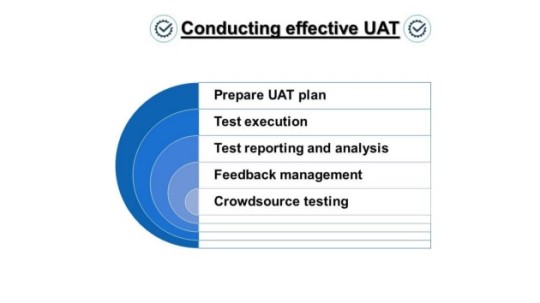

Rolling out a high-quality product successfully is a team effort in which everyone has an important role to play. Let us take a look at how different teams take responsibility for conducting effective UAT.

The road to a successful test execution is riddled with certain challenges that need to be addressed to ensure that the collective effort of the people conducting the testing is not wasted.

Adoption of agile methodology leads to frequent changes. It could be due to changes in business objectives, or user acceptance criteria, or updates due to defect rectification. The net result is UAT gets impacted, especially if the timelines are very tight. The situation may get worse if the collaboration among the teams and communication about changes/defects is not very efficient.

Managing these challenges only with a manual testing process is not an easy task.

If this has piqued your interest and you want to know more, then please click here and schedule a demo, or reach out to us at [email protected]. We have more exciting articles coming up every week.


Requirement traceability matrix

Requirements traceability is the ability to describe and follow the life of a business or technical requirement in both forward and backward directions (i.e., from its origins, through its development and specification, to its subsequent deployment and use, and through periods of ongoing refinement and iteration in any of these phases). (Source: Wikipedia)

Requirements traceability provides a context to the development team and sets expectations & goals for the testing team.

Requirements tracing is bi-directional.

Forward tracing: Requirements are traced forward to test cases, test execution, and test results

Back tracing: Features/functions are traced back to their source, typically a business requirement
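A small data-structure sketch can make the two directions concrete. The requirement and test case IDs below are hypothetical, and a real project would pull these links from its requirements and test management tools rather than a hard-coded dictionary.

```python
from collections import defaultdict

# Hypothetical forward links: requirement ID -> test case IDs that cover it.
FORWARD = {
    "REQ-1": ["TC-1", "TC-2"],
    "REQ-2": ["TC-2", "TC-3"],
    "REQ-3": [],
}


def build_backward(forward):
    """Invert the forward links: test case ID -> requirement IDs it traces back to."""
    backward = defaultdict(list)
    for requirement, test_cases in forward.items():
        for test_case in test_cases:
            backward[test_case].append(requirement)
    return dict(backward)


def trace_forward(requirement):
    """Forward tracing: from a requirement to its test cases."""
    return FORWARD.get(requirement, [])


def trace_back(test_case):
    """Back tracing: from a test case to its source requirements."""
    return build_backward(FORWARD).get(test_case, [])


if __name__ == "__main__":
    print(trace_forward("REQ-1"))  # test cases covering REQ-1
    print(trace_back("TC-2"))      # requirements that TC-2 traces back to
```

Storing only the forward links and deriving the backward ones keeps the two directions from drifting out of sync.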

What is the need for Requirements traceability?

| Why | Description |
| --- | --- |
| To ensure compliance | All specified requirements have been met and the final deliverable can be traced back to a business need. It also makes sure that every requirement is accounted for. |
| Helpful in analysis and design | Requirements traceability ensures that each business need has been translated to a requirement, which further transforms into technical specifications, and then results in a deliverable. |
| Change impact analysis | In case of any requirement changes, traceability helps in finding the affected workflow, design, and impacted test cases. |
| Ensures comprehensive test coverage | Requirements traceability ensures that the right test cases, which are mapped to the requirement under test, are executed. This further improves the test coverage since all the test cases are accounted for. |
| Helps in optimizing the testing process | Not all test cases that have been generated need to be executed in case there are any changes. Traceability aids in identifying impacted parts, and the relevant test cases can then be executed, thus resulting in an optimal test process. |
| Aids in keeping track of project progress | Analysis of the requirements and the test results, and tracing them back to their origins, helps in keeping track of how far the project has progressed. It also provides a reality check on whether the timelines will be practically met or not. |
| Decision making and analysis | Any changes in the business need have a direct impact on requirements, which further propagates to the development and testing process. Requirements traceability helps in isolating the impacted requirements, thus identifying and updating the test cases associated with them. It makes it easier for the teams to analyze the impact of the change across the board. |
| Faster time to market | Requirements traceability accelerates the overall development process, thus speeding up the release cycles. The number of defects is inversely proportional to requirements traceability. As traceability increases, the defect rate goes down, simply because it becomes easier for the development team to understand and relate, and for the testing team to understand the context and scope of testing. |
| Increased customer confidence | Requirements traceability helps in gaining and maintaining customers’ trust and confidence through increased transparency. Customers can be assured in the knowledge that all their business needs have been accounted for and are traceable to a fully functional and high-quality end product. |

What is a typical Requirements traceability life cycle?

What is the Requirements Traceability Matrix?

A Requirements Traceability Matrix (RTM) is a testing artifact that keeps track of all the user requirements and the details of the test cases mapped to each of those requirements. It serves as documented proof that all the requirements have been accounted for and validated to achieve their end purpose.
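One way to picture such a matrix is as a list of rows, one per requirement, each carrying the results of its mapped test cases. The sketch below is an assumption-laden illustration: the `RtmRow` type, the status names, and the sample data are hypothetical and not taken from any particular RTM tool.

```python
from dataclasses import dataclass, field


@dataclass
class RtmRow:
    """One hypothetical RTM row: a requirement and its mapped test results."""
    requirement_id: str
    description: str
    # Maps test case ID to its latest result: "pass", "fail", or "not run".
    test_results: dict = field(default_factory=dict)

    def status(self) -> str:
        if not self.test_results:
            return "uncovered"   # no test case is mapped to this requirement
        if all(result == "pass" for result in self.test_results.values()):
            return "validated"   # every mapped test case has passed
        return "open"            # at least one mapped test failed or has not run


RTM = [
    RtmRow("REQ-1", "User can log in", {"TC-1": "pass", "TC-2": "pass"}),
    RtmRow("REQ-2", "User can reset password", {"TC-3": "fail"}),
    RtmRow("REQ-3", "User can export data"),
]


def report(rows):
    """Summarize the matrix as requirement ID -> status."""
    return {row.requirement_id: row.status() for row in rows}


if __name__ == "__main__":
    for requirement_id, status in report(RTM).items():
        print(f"{requirement_id}: {status}")
```

A report like this is what lets the matrix act as evidence: any requirement that is not "validated" is either untested or still has an open problem.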

Contents of a typical RTM are

Requirements Traceability in DevOps and its challenges

It is quite evident from the previous sections that requirements tracing has a plethora of benefits, but does it work perfectly in the DevOps scenario?

In agile environments, keeping track of the requirements for every sprint and release can be a herculean task since the requirements keep evolving. One may wonder whether all this effort is worth it, and whether it can be done without compromising the release timeline and overall quality.

Two common challenges faced while striving for end-to-end requirements traceability are collaboration and maintenance.

Collaboration: Many cross-functional teams are involved when it comes to development and testing in DevOps. It calls for better collaboration for ensuring that all the requirements are effectively realized, without compromising on quality. Requirements keep evolving with every sprint and keeping traceability up to date in synchronization with various teams is a daunting task.

Maintenance: With the frequent changes to software artifacts in a DevOps environment, managing traceability for those artifacts consistently throughout software development is extremely difficult, and maintaining traceability in step with continuous artifact changes becomes tough. The next section will explore how the benefits of traceability can be integrated into DevOps to handle the above-mentioned issues.


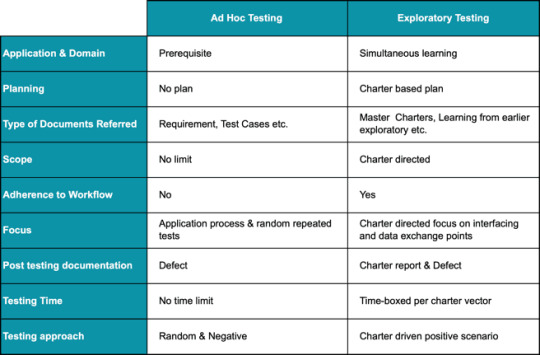

Ad hoc testing vs exploratory testing

In the software testing arena, a commonly asked question is whether exploratory testing is the same as ad hoc testing. They do have some overlap, which causes confusion, but in reality they are quite different. Both give the test engineer the freedom to explore the application, and the primary focus of both is to find critical defects in the system. They also help provide better test coverage, since documenting all scenarios as test cases is extremely time-consuming and costly.

Exploratory Testing

Exploratory testing is a formal approach to testing that involves simultaneous learning, test design, and test execution. The testers explore the application and learn about its functionality through discovery. They then use exploratory test charters to direct, record, and keep track of the observations from each exploratory test session. It is a hands-on procedure in which testers perform minimum planning and maximum test exploration.

Webomates has also done extensive research on how to “high jump” to high quality using exploratory testing; a detailed analysis can be found in the article written by Aseem Bakshi, Webomates CEO.

Ad Hoc Testing

Ad hoc testing is an informal and random style of testing performed by testers who are well aware of how the software functions. It is also referred to as random testing or monkey testing. The tester may refer to existing test cases and pick some at random to test the application. The testing steps and the scenarios depend on the tester, and defects are found by random checking.

Comparison is inevitable when it comes to exploring different testing types. It is vital to understand and employ the right combination for a complete multi-dimensional testing. In this article, we will compare Ad Hoc Testing and Exploratory testing to understand them better.

Ad Hoc versus Exploratory Testing

If you want to know the difference between test case based testing approach vs exploratory testing click here

Conclusion

The testing types discussed above are just two from a vast set at a QA team’s disposal to verify and validate any application. Ideally, a combination of different techniques is used for comprehensive testing. Webomates offers a regression testing service that uses AI-based automation supplemented with manual testing (test case based and exploratory) and crowdsourcing to guarantee that all test cases (even modified ones) are executed in 24 hours. If you are interested in a demo of Webomates CQ, click here.


Test automation

Why Choose Manual or Automation?

Clearly manual and automation testing are great complements to each other and as a combination can create a fast, less brittle solution that has exploratory testing in addition.

Webomates offers a regression testing service that uses manual testing, automation, and crowdsourcing together to guarantee that all test cases (even modified ones) are executed in ONE day, with exploratory testing!

In fact the output is a list of prioritized defects for software teams to review and fix.


Black box tests

Black-box testing can be performed using various approaches:

Test case

Automation

Ad-hoc

Exploratory

Before these individual approaches can be explained, it is important to know what black-box testing is.

Black-box testing is a software testing method that is the opposite of white-box testing. It is also known as behavioral testing, functional testing, or closed-box testing. It is a method of analyzing the functional and non-functional aspects of software. The software tester does not focus on the internal structure, implementation, or design of the system involved. This means that the tester does not necessarily need programming knowledge and does not need access to the code. Black-box testing was developed so that it can be applied to customer requirement analysis and specification analysis. When executing a black-box test, the software tester selects a set of valid and invalid inputs and checks for the expected output response.
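The idea of feeding valid and invalid inputs and checking only the responses can be sketched as follows. `parse_age` is a hypothetical system under test, and the chosen inputs and the 0 to 150 range are assumptions for illustration; the point is that the checker never inspects how the function works, only what it returns or rejects.

```python
def parse_age(text: str) -> int:
    """Hypothetical system under test; the tester treats this body as opaque."""
    value = int(text)  # raises ValueError for non-numeric or empty text
    if not 0 <= value <= 150:
        raise ValueError("age out of range")
    return value


# Valid inputs paired with the output the specification promises.
VALID_INPUTS = {"0": 0, "42": 42, "150": 150}
# Invalid inputs that the specification says must be rejected.
INVALID_INPUTS = ["-1", "151", "abc", ""]


def run_black_box_checks(func):
    """Exercise `func` through its inputs and outputs only; return failure messages."""
    failures = []
    for text, expected in VALID_INPUTS.items():
        try:
            actual = func(text)
        except ValueError:
            failures.append(f"valid input {text!r} was rejected")
            continue
        if actual != expected:
            failures.append(f"input {text!r}: expected {expected}, got {actual}")
    for text in INVALID_INPUTS:
        try:
            func(text)
        except ValueError:
            continue  # rejected, as required
        failures.append(f"invalid input {text!r} was accepted")
    return failures


if __name__ == "__main__":
    print(run_black_box_checks(parse_age) or "all black-box checks passed")
```

Because the checks depend only on the function's observable behavior, the same suite can be pointed at any other implementation of the same specification.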

According to the ANSI/IEEE 1059 standard: “Software testing is a process of analyzing a software item to detect the differences between existing and required conditions (i.e., defects) and to evaluate the features of the software item. The approaches to software testing include Black-box testing, White-box testing and grey-box testing.”

Examples of scenarios that apply simple black-box testing include:

These few examples paint a vivid picture of what black-box testing implies.

Using the Google search engine, a software tester types some text into the input field provided; this serves as the input. The search engine collects the data, processes it using the algorithm developed by Google programmers, and then outputs the result that corresponds to the input.

The same applies to the Facebook chat interface. When the sender types information into the chat box and sends it, the information is processed and delivered to the receiver. The receiver retrieves the information at their end, and vice versa.

Black-box testing can be used on any software system you want to test, such as a database like Oracle, mathematical software like Excel and MATLAB, an operating system like Windows or Linux, and any other custom native, web-based, or mobile application. Emphasis is laid on the input and output of the software, with no interest in the code.

Types of Black-box testing:

Many types of black-box testing are used in software testing, each involving its own procedures during implementation. We focus on the prominent ones.

Functional testing: This type of black-box testing involves only the input and corresponding valid output analysis. It is mainly done to determine the functionality and behavior of the system. The software testers focus on answering the question ‘Does this system really do what it claims to do?’

A few major types of functional testing are:

Sanity testing

Smoke testing

Integration testing

System testing

Regression testing

User acceptance testing

Non-functional testing: This type of testing focuses on answering the question ‘How efficient is this system in carrying out its functions?’ It focuses on the non-functional requirements of the system, such as performance, scalability, and usability, which are not related to specific functionality.

A few major types of non-functional testing are:

Usability testing

Load testing

Performance testing

Compatibility testing

Stress testing

Scalability testing

Regression testing: Whenever the internal structure of the system is altered, regression testing is carried out to ensure that existing functionality and behavior still work as expected. The alteration may be debugging, code fixes, upgrades, or any other system maintenance process. The software tester makes sure the new code does not change the existing behavior.

The black-box testing method is applicable to various levels of software testing such as:

This diagram Represents levels of black-box testing

Integration testing: At this level of software testing, individual software units are combined by the tester and tested as a group using black-box testing. This exposes faults in the interaction between the integrated units.

System testing: At this level of software testing, a complete software system is tested to evaluate its compliance with the specified requirements.

Acceptance testing: At this level of software testing, the acceptability of the system is tested. Here the software tester checks whether the system complies with the business requirements. The user needs and requirements are checked to determine whether the system is acceptable before delivery.

The higher the level of testing, and hence the bigger and more complex the system, the more black-box testing is applied.


Techniques involved in black-box testing:

Many approaches are used in designing black-box tests; the following are but a few of them:

This diagram Represents Techniques in Black-box testing

Equivalence partitioning (or equivalence class testing): In this test design technique, the software tester divides all the inputs into equivalence classes, covering both valid and invalid data, and picks one representative from each class as test data for the whole class. The selected data is then used to carry out the black-box testing. This technique helps to minimize the number of test cases while maintaining reasonable test coverage.

Boundary value analysis: In this test design technique, the tester determines the boundaries for input values, then selects inputs that are at the boundaries or just inside or outside them and uses these as test data. The test data is then used to carry out the black-box testing.

Cause-effect graphing: In this test design technique, the software tester identifies the causes (valid or invalid input conditions) and effects (output conditions). This results in a cause-effect graph, from which test cases are generated.

Error guessing: This is an example of experience-based testing, in which the tester uses their experience of the application and its functionality to guess the error-prone areas.

Others include:

Decision table testing

Comparison testing
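As a minimal sketch of equivalence partitioning and boundary value analysis, assume a hypothetical input field that accepts ages from 18 to 60 inclusive. The range, the function names, and the offsets used to pick representatives are illustrative assumptions, not taken from any real system.

```python
# Hypothetical system under test: accepts ages 18..60 inclusive.
MIN_AGE, MAX_AGE = 18, 60


def accepts_age(age: int) -> bool:
    """Stand-in for the black box; only its input/output behavior is tested."""
    return MIN_AGE <= age <= MAX_AGE


def equivalence_representatives(low: int, high: int) -> dict:
    """One representative per class: below range, in range, above range."""
    return {
        "invalid_low": low - 10,
        "valid": (low + high) // 2,
        "invalid_high": high + 10,
    }


def boundary_values(low: int, high: int) -> list:
    """Values at each boundary plus just inside and just outside it."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


if __name__ == "__main__":
    for name, value in equivalence_representatives(MIN_AGE, MAX_AGE).items():
        print(name, value, accepts_age(value))
    for value in boundary_values(MIN_AGE, MAX_AGE):
        print(value, accepts_age(value))
```

Three representatives stand in for every possible age, while the six boundary values target the places where off-by-one mistakes are most likely.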

Black-box Testing steps

Black-box testing involves some generic steps carried out by testers on any type of system under test (SUT).

The requirements and specifications are provided for the systems and they are thoroughly examined.

The software tester chooses both valid inputs (the positive test scenarios) and invalid inputs (the negative test scenarios). The valid inputs check whether the SUT processes them correctly; the invalid inputs check whether the system detects them.

The expected outputs are retrieved for all the inputs.

The software tester then constructs test cases with all the selected inputs.

The constructed test cases are executed.

Actual outputs from the SUT are compared with the expected outputs to determine if they comply with the expected results.

If the results reveal any defects, they are fixed and a regression test is carried out.
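The steps above can be sketched in a few lines of Python. The function `sut_divide` is a hypothetical system under test, and `BlackBoxCase`, `run_case`, and `run_all` are illustrative names; the sketch only shows the shape of choosing inputs, recording expected outputs, executing, and comparing.

```python
from dataclasses import dataclass


def sut_divide(a: float, b: float) -> float:
    """Hypothetical system under test; the tester treats it as a black box."""
    if b == 0:
        raise ValueError("division by zero")
    return a / b


@dataclass
class BlackBoxCase:
    name: str
    inputs: tuple
    expected: object  # expected output, or an exception type for negative cases


def run_case(case: BlackBoxCase) -> bool:
    """Execute one case and compare the actual result with the expected one."""
    try:
        actual = sut_divide(*case.inputs)
    except Exception as exc:
        # A negative case passes only if the expected kind of rejection occurred.
        return isinstance(case.expected, type) and isinstance(exc, case.expected)
    return actual == case.expected


CASES = [
    BlackBoxCase("positive: valid inputs", (10, 2), 5.0),
    BlackBoxCase("negative: invalid divisor", (1, 0), ValueError),
]


def run_all(cases) -> dict:
    """Map each case name to True (pass) or False (fail)."""
    return {case.name: run_case(case) for case in cases}


if __name__ == "__main__":
    for name, passed in run_all(CASES).items():
        print("PASS" if passed else "FAIL", name)
```

Any case reported as a failure would be fixed in the SUT and then re-run, which is the regression step described above.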

Black-box testing and software test life cycle (STLC)

The software test life cycle (STLC) is the life cycle of black-box testing, and it parallels the stages of software development. The cycle continues in a loop until a satisfactory result, free of known defects, is achieved.

Diagram showing software development life cycle for Black-box testing:

Requirement: This is the stage where the requirements and specifications needed by the software tester are gathered and examined.

Test planning and Analysis: In this stage the possible risks and mitigations associated with the project are determined.

Design: This stage allows scripts to be created on the basis of the software requirements.

Test execution: The prepared test cases are executed. If there is any deviation between the actual and expected results, the defects are fixed and re-tested. The cycle then continues.

Tools used in black-box testing

The main tools used in black-box testing are record and playback tools. These tools are mainly used for regression testing. When a defective system undergoes bug fixing, there is a possibility that the new code will affect the existing code in the system. Applying regression testing with record and playback tools helps to catch and fix such errors.

These record and playback tools record test cases in the form of scripts in languages such as TSL, VBScript, JavaScript, and Perl.

Advantages and Disadvantages of Black-box testing

Advantages:

No technical or programming language knowledge is required. The software tester does not need to have any knowledge of the internal structure of the system, and is required to assess only the functionality of the system. This is like being in the user’s shoes and thinking from the user’s point of view.

Black-box testing is more effective for large and complex applications.

Defects and inconsistency in the system can be identified easily so that they can be fixed.

The test cannot be compromised because the designer, programmer, and tester are independent.

Tests are carried out as soon as the programming and specifications are completed.

Disadvantages

Tests may be redundant if the software programmer has already run the same test cases.

The tester may overlook possible conditions or scenarios to be tested due to lack of programming and technical knowledge.

Complete coverage of a large and complex project is not possible.

Testing every input stream is unrealistic because it would take a large amount of time; therefore, many program parts go untested.

In conclusion, black-box testing is a significant part of software testing that verifies system functionality. This helps in detecting defects so they can be fixed, ensuring the quality and efficiency of the system. 100% accuracy cannot be achieved when testing software, so software testers need to follow the correct testing procedure in order to achieve the best possible result.


Adhoc testing

What is Ad Hoc Testing?

Performing random testing without any plan is known as AdHoc Testing. It is also referred to as Random Testing or Monkey Testing. This type of testing doesn’t follow any documentation or plan to perform this activity. The testing steps and the scenarios only depend upon the tester, and defects are found by random checking.

Ad hoc testing does not follow any structured way of testing and is done randomly on any part of the application. The main aim of this testing is to find defects by random checking. Ad hoc testing can be achieved with the testing technique called error guessing. Error guessing can be done by people having enough domain knowledge and experience to “guess” the most likely sources of errors.

This testing requires no documentation/ planning /process to be followed. Since this testing aims at finding defects through random approach, without any documentation, defects will not be mapped to test cases. Hence, sometimes, it is very difficult to reproduce the defects as there are no test steps or requirements mapped to it.

Types of ad hoc testing

Buddy Testing:

Two buddies work together on identifying defects in the same module. Usually one buddy is from the development team and the other is from the testing team. Buddy testing helps the testers develop better test cases, and the development team can also make design changes early. This testing usually happens after unit testing is complete.

Pair testing:

Two testers are assigned modules, share ideas, and work on the same machine to find defects. One person executes the tests while the other takes notes on the findings, so the roles during testing are tester and scribe.

Monkey Testing:

Randomly test the product or application without test cases with a goal to break the system.
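A small sketch of monkey testing follows. The function `parse_quantity` is a hypothetical target and `monkey_test` is an illustrative helper. Seeding the random generator is an addition made here, not part of the description above: recording the seed is one way to replay a random run when someone asks for a defect to be reproduced.

```python
import random
import string


def parse_quantity(text: str) -> int:
    """Hypothetical function under test: parses a non-negative quantity."""
    value = int(text)  # raises ValueError on non-numeric input
    if value < 0:
        raise ValueError("quantity must be non-negative")
    return value


def monkey_test(seed: int, rounds: int = 200) -> list:
    """Feed random strings to the target; return inputs that crashed unexpectedly."""
    rng = random.Random(seed)  # seeded, so a failing run can be replayed
    alphabet = string.digits + string.ascii_letters + "-+ ."
    failures = []
    for _ in range(rounds):
        length = rng.randint(0, 6)
        text = "".join(rng.choice(alphabet) for _ in range(length))
        try:
            parse_quantity(text)
        except ValueError:
            pass  # documented rejection of bad input, not a defect
        except Exception as exc:
            failures.append((text, repr(exc)))
    return failures


if __name__ == "__main__":
    print(monkey_test(seed=42))
```

Running `monkey_test` twice with the same seed generates the same inputs, so any entry in the returned list can be traced back to an exact input string.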

When to execute Ad hoc Testing?

Ad hoc testing can be done at any point, whether at the beginning, middle, or end of project testing. Ad hoc testing can be performed when the time is very limited and detailed testing is required. Usually ad hoc testing is performed after formal test execution. Ad hoc testing is effective only if the tester has thorough knowledge of the system under test.


Ad Hoc Testing does have its own advantages:

A totally informal approach, it provides an opportunity for discovery, allowing the tester to find cases and scenarios that were missed in test case writing.

The tester can really immerse themselves in the role of the end user, performing tests in the absence of any boundaries or preconceived ideas.

The approach can be implemented easily, without any documents or planning.

That said, while Ad Hoc Testing is certainly useful, a tester shouldn’t rely on it solely. For a project following scrum methodology, for example, a tester who focuses only on the requirements and performs Ad Hoc testing for the rest of the project’s modules will likely overlook some important areas and miss other very important scenarios.

When utilizing an Ad Hoc Testing methodology, a tester may attempt to cover all the scenarios and areas but will likely still end up missing a number of them. There is always a risk that the tester performs the same or similar tests multiple times while other important functionality is broken and ends up not being tested at all. This is because Ad Hoc Testing does not require all the major risk areas to be covered.

Drawbacks:

As this type of testing does not follow a structured way of testing and no documentation is mandatory, the main disadvantage is that the tester has to remember all scenarios.

The tester will not be able to recreate bugs in the subsequent attempts, should someone ask for issue reproducibility.

Ad hoc testing gives the tester knowledge of applications across a variety of domains. Because the entire application can be tested within a short time, it gives the tester confidence to prepare more ad hoc scenarios. Formal test scenarios can be written from the requirements, whereas ad hoc scenarios, which find additional bugs, come from doing a round of ad hoc testing on the application rather than from formal test execution.


Adhoc Testing vs Exploratory Testing

Ad-hoc Testing: The tester may refer to existing test cases and pick a few at random to test the application. It is more like trial and error to find a bug. If you find one, you already have a documented test case to mark as failed.

Exploratory testing: This is a formal testing process that doesn’t rely on test cases or test planning documents to test the application. Instead, testers go through the application and learn about its functionality. They then use exploratory test charters to direct, record, and keep track of the observations from exploratory test sessions.

Performing Testing on the Basis of Test Plan

Test cases serve as a guide for the testers. The testing steps, areas and scenarios are defined, and the tester is supposed to follow the outlined approach to perform testing. If the test plan is efficient, it covers most of the major functionality and scenarios and there is a low risk of missing critical bugs.

On the other hand, a test plan can limit the tester’s boundaries. There is less of an opportunity to find bugs that exist outside of the defined scenarios. Or perhaps time constraints limit the tester’s ability to execute the complete test suite.

So, while Ad Hoc Testing is not sufficient on its own, combining the Ad Hoc approach with a solid test plan and Exploratory testing will strengthen the results. By performing the tests per the test plan while at the same time devoting resources to Ad Hoc testing, a test team will gain better coverage and lower the risk of missing critical bugs. Also, the defects found through Ad Hoc testing can be included in future test plans so that those defect-prone areas and scenarios can be tested in a later release.

Additionally, in the case where time constraints limit the test team’s ability to execute the complete test suite, the major functionality can still be defined and documented. The tester can then use these guidelines while testing to ensure that these major areas and functionalities have been tested. And after this is done, Ad Hoc testing can continue to be performed on these and other areas.

Conclusion: The advantage of ad hoc testing is that it checks the completeness of testing and finds defects that planned testing misses. The defect-catching test cases are added as additional test cases to the planned test cases. Ad hoc testing saves a lot of time as it doesn’t require elaborate test planning, documentation, and test case design.

At Webomates, we have applied this technique across multiple domains and successfully delivered quality defect reports. We specialize in taking many different software creators’ builds across domains, on 18 different Browser/OS/Mobile platforms, and providing them with defects in 24 hours or less. If you are interested in a demo, see Webomates CQ.


Smoke Testing vs Sanity Testing

Software development and testing are an ecosystem that works on the collective efforts of developers and testers. Every new addition or modification to the application has to be carefully tested before it is released to the customers or end-users. In order to have a robust, secure, and fully functional end application, it has to undergo a series of tests. There are multiple testing techniques involved in the whole process; however, smoke and sanity tests are the first ones to be planned.

Let us examine Smoke and Sanity testing in this article.

Smoke testing essentially checks for the stability of a software build. It can be deemed as a preliminary check before the build goes for testing.

Sanity testing is performed after a stable build is received and testing has been performed. It can be deemed as a post-build check, to make sure that all bugs have been fixed. It also makes sure that all functionalities are working as per the expectations.

Let us first take a quick look at the details of both types of testing, and then we can move onto identifying the differences between them. There is a thin line between them, and people tend to confuse one for another.

What is smoke testing?

Smoke testing, also known as build verification testing, is performed on initial builds before they are released for extensive testing. The idea is to net issues, if any, in the preliminary stages so that the QA team gets a stable build for testing, thus saving a significant amount of effort and time spent by the QA.

Smoke testing is non-exhaustive and focuses on testing the workflow of the software by testing its critical functionalities. The test cases for smoke testing can be picked up from an existing set of test cases. The build is marked rejected in case it fails the smoke tests.

Note that it is just a check measure in the testing process and in no way replaces comprehensive testing.

Smoke tests can be either manual or automated.
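An automated smoke test can be as simple as the sketch below. The three check functions are hypothetical placeholders for critical functionalities (one is hard-coded to fail for illustration), and `smoke_test` is an illustrative name; a real suite would call into the actual build.

```python
from typing import Callable, Dict


# Hypothetical critical-path checks; each returns True when the feature works.
def app_starts() -> bool:
    return True


def login_works() -> bool:
    return True


def checkout_works() -> bool:
    return False  # simulated failure for illustration


def smoke_test(checks: Dict[str, Callable[[], bool]]) -> dict:
    """Run each critical check once; reject the build if any check fails."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False  # a crash counts as a failed check
    verdict = "accepted" if all(results.values()) else "rejected"
    return {"verdict": verdict, "results": results}


if __name__ == "__main__":
    report = smoke_test(
        {"start": app_starts, "login": login_works, "checkout": checkout_works}
    )
    print(report)
```

The checks stay shallow on purpose: the goal is a quick accept-or-reject decision on the build, not detailed coverage.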

What is sanity testing?

Sanity testing is performed on a stable build that contains minor changes in code or functionality, to ensure that none of the existing functionality is broken by the changes. For this, a subset of regression tests is conducted on the build.

It is performed to verify the correctness of the application, in light of the changes made. Random inputs and tests are done to check the functioning of software with a prime focus on the changes made.

Once the build successfully passes the sanity test, it is then subjected to further testing.

Sanity tests can be either manual or automated.
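One way to picture the "subset of regression tests" is to select only the tests that touch the changed areas. In the sketch below, the suite, the module names, and `select_sanity_tests` are all hypothetical; mapping tests to modules is an assumption made for illustration, not a prescribed method.

```python
# Hypothetical regression suite: test name -> module it exercises.
REGRESSION_SUITE = {
    "test_login_valid": "auth",
    "test_login_locked_account": "auth",
    "test_cart_add_item": "cart",
    "test_invoice_totals": "billing",
}


def select_sanity_tests(suite: dict, changed_modules: set) -> list:
    """Pick only the regression tests that touch modules changed in this build."""
    return sorted(
        name for name, module in suite.items() if module in changed_modules
    )


if __name__ == "__main__":
    # A build that only changed the authentication module.
    print(select_sanity_tests(REGRESSION_SUITE, {"auth"}))
```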

Differences between Smoke testing and Sanity testing

This table summarizes the differences between these two tests for quick reading.

Aspect | Smoke testing | Sanity testing
Test build type | Initial build | Stable build
Objective | Stability | Correctness
Scope | Wide (application as a whole is tested for functionality without a deep dive) | Narrow (focuses on one part and performs regression tests)
Focus | Critical functionalities | New/updated functionalities
Responsibility | Developers and/or testers | Testers
Superset | Acceptance testing, Regression testing | Regression testing, Exploratory testing
Documentation needed | Yes (uses existing test cases) | Preferred: release notes or released changes document

Is it sane to compare Smoke to Sanity testing?

You must be wondering why we are even comparing these two when, on a superficial level, both of them are tests performed before the “big” testing cycle commences.

It may be noted that they are different at many levels, although they appear similar.

Smoke testing takes care of build stability before any other comprehensive testing (including sanity testing) can be performed. It is the first measure that should be ideally taken in the software testing cycle because conducting testing on an unstable build is a plain waste of resources in terms of time, effort, and money. However, smoke testing is not limited to just the beginning of the cycle. It is an entry pass for every new phase of testing. It can be done at the Integration level, system-level, or acceptance level too.

Sanity testing, by contrast, is performed on a stable build that has passed the acid test of smoke tests and other testing.

Both can be performed either manually or with the help of automation tools. In some cases, where time is of the essence, sanity tests can be combined with smoke tests, so it is natural for developers and testers to end up using these terms interchangeably. It is therefore important to understand the difference between the two to ensure that no loopholes are left in the testing process due to this confusion.

To summarize, smoke tests can be deemed a general, overall health check-up of the application, whereas sanity tests are a more targeted health check-up.

Conclusion

Webomates CQ helps in performing effective smoke testing, using various Continuous Testing methodologies that run using Automation and AI Automation channels. It provides better accuracy in testing and the results are generated within a short period, approximately 15 mins to 1 hour. It also provides CI-CD that can be linked with any build framework to give quick results.

Webomates CQ also provides services like Overnight Regression, which are conducted on specific modules, which can be either a fully developed module or work-in-progress module. The advantage of using our product is that it takes less time than a full regression cycle, and testing can be completed within 8–12 hours. Also, the development team gets a detailed execution report along with the defects report.

Webomates tools also help in generating and automating new test scenarios within hours, using AI tools. This reduces manual efforts during sanity testing.


At Webomates, we continuously work to evolve our platform & processes to provide guaranteed execution, which takes testing experience to an entirely different level, thus ensuring a higher degree of customer satisfaction. If you are interested in learning more about Webomates’ CQ service please click here and schedule a demo, or reach out to us at [email protected]


Api automation testing services

Top Benefits of API Testing

An application programming interface (API) is a computing interface that defines interactions between multiple software intermediaries. It defines the kinds of calls or requests that can be made, how to make them, the data formats that should be used, the conventions to follow, etc. (Source: Wikipedia)

APIs not only help in abstracting the underlying implementation but also improve the modularity and scalability of software applications. APIs encompass the business and functional logic, act as a gateway to sensitive data, and expose only the objects and actions that are needed.

API testing deals with testing the business logic of an application, which is typically encompassed in the business layer and is instrumental in handling all the transactions between the user interface and underlying data. It is deemed as a part of Integration testing that involves verification of functionality, performance, and robustness of APIs.

Besides checking for the functionality, API testing also tests for error condition handling, response handling in terms of time and data, performance issues, security issues, etc.

API testing also deals with contract testing, which in simpler terms, is verifying the compatibility and interactions between different services. The contract is between a client/consumer and API/service provider.
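A contract check can be sketched as comparing a provider's JSON response against the fields and types the consumer expects. The contract `USER_CONTRACT`, its field names, and the helper `contract_violations` are hypothetical and exist only for illustration; dedicated contract-testing tools do considerably more than this.

```python
import json

# Hypothetical contract agreed between consumer and provider:
# field name -> expected Python type after JSON decoding.
USER_CONTRACT = {"id": int, "name": str, "active": bool}


def contract_violations(response_body: str, contract: dict) -> list:
    """Return human-readable violations; an empty list means the contract holds."""
    payload = json.loads(response_body)
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif type(payload[field]) is not expected_type:
            actual = type(payload[field]).__name__
            problems.append(f"wrong type for {field}: {actual}")
    return problems


if __name__ == "__main__":
    good = '{"id": 1, "name": "Ada", "active": true}'
    bad = '{"id": "1", "name": "Ada"}'
    print(contract_violations(good, USER_CONTRACT))
    print(contract_violations(bad, USER_CONTRACT))
```

The exact type comparison is deliberate: in Python, `bool` is a subclass of `int`, so an `isinstance` check would let `true` pass where an integer is expected.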

Clearly, API usage is not limited to just one application; in some cases, APIs are used across many applications and also for third-party integrations. Hence, developing and testing them thoroughly is extremely critical.

The following section highlights why thorough API testing entails many benefits in the long run.

These benefits are discussed in detail below.

Technology independent and ease of test automation

APIs exchange data in a structured format such as XML or JSON. This exchange is independent of the coding language, thus giving the flexibility to choose any language for test automation.

API testing can be effectively automated because the endpoints and request parameters are less likely to change, unless there is a major change in business logic. Automation reduces manual efforts during regression testing and results in a significant amount of time-saving.

API tests require less scripting effort than automated GUI tests. As a result, the testing process is faster, with better coverage. This saves time and resources and, overall, translates into reduced project costs.

Reduced risks and faster time to market

API testing need not be dependent on GUI and other sub-modules. It can be conducted independently at an earlier stage to check the core business logic. Issues, if any, can be reported back for immediate action without waiting for others to complete.

Because APIs represent specific business logic, it is easier for teams to isolate the buggy module and rectify it. Bugs reported early can be fixed and retested independently. This reduces the time taken between builds and release cycles, resulting in faster product releases.

The amount and variety of input data combinations inadvertently lead to testing limit conditions, which otherwise may not be identified/tested. This exposes vulnerabilities, if any, at an earlier stage even before GUI has been developed. These vulnerabilities can be then rectified on a priority basis and any potential loophole for breaches is handled.

When there are multiple API’s from different sources involved in development of an application, the interface handshake may or may not be firm. API testing deep dives into these integration challenges and handles them at earlier stages. This ensures that end user experience is not affected because of the issues that could have been handled at API level.

Improved test coverage

API test automation helps in covering a high number of test cases, functional and non-functional. Also, for a complete scenario check, API testing requires running both positive and negative tests. Since API testing is a data-driven approach, various combinations of data inputs can be used to test such test cases. This gives good test coverage overall.

Good test coverage helps in identifying and managing defects across a larger set of scenarios. As a result, very few bugs make their way to production, resulting in a higher-quality product.
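The data-driven idea, with positive and negative cases side by side, can be sketched as a table of inputs and expected status codes. The handler `create_order_api`, its status codes, and the `quantity` field are hypothetical stand-ins for a real endpoint, chosen so the example runs without a network.

```python
import json


def create_order_api(request_body: str) -> tuple:
    """Hypothetical API handler: returns (status_code, JSON response body)."""
    try:
        data = json.loads(request_body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "malformed JSON"})
    if not isinstance(data, dict):
        return 400, json.dumps({"error": "body must be a JSON object"})
    qty = data.get("quantity")
    if not isinstance(qty, int) or isinstance(qty, bool) or qty <= 0:
        return 422, json.dumps({"error": "quantity must be a positive integer"})
    return 201, json.dumps({"status": "created", "quantity": qty})


# Each row: (description, request body, expected status code).
TEST_DATA = [
    ("positive: valid quantity", '{"quantity": 3}', 201),
    ("negative: zero quantity", '{"quantity": 0}', 422),
    ("negative: wrong type", '{"quantity": "three"}', 422),
    ("negative: missing field", "{}", 422),
    ("negative: malformed body", "{quantity: 3", 400),
]


def run_data_driven(rows) -> list:
    """Return descriptions of rows whose actual status differs from expected."""
    return [
        desc for desc, body, expected in rows
        if create_order_api(body)[0] != expected
    ]


if __name__ == "__main__":
    print(run_data_driven(TEST_DATA))
```

Adding a new scenario means adding one row to `TEST_DATA`, which is what makes broad coverage cheap at the API level.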

Ease of test maintenance

API tests are usually deemed stable and major changes are done mainly when business logic is changed. The frequency and amount of changes are comparatively less. This means less rework in rewriting test cases in event of any changes. This is in sharp contrast to GUI testing, which requires rework at many levels in case of any changes.

API tests can be reused during testing, thus, reducing the overall time quantum required for testing.

Also, it is a good idea to categorize the test cases for better test case management since the number of API’s involved in any application can be large. We have talked about this in our earlier blog “Do’s and Don’ts of API testing”.

Conclusion

APIs evolve and develop as and when business and functional requirements change, thus making it even more important to test them on a continuous basis.

Webomates tests APIs using manual and automation testing. Webomates provides API testing that focuses on performance and security testing to make sure the application is secure and to give it a strong backbone.

You can take a quick look at the following table to see which tools are already integrated in our Testing as a Service platform.

At Webomates, we continuously work to evolve our platform and processes in order to provide guaranteed execution, which takes testing experience to an entirely different level, thus ensuring a higher degree of customer satisfaction.

Webomates offers regression testing as a service that combines test-case-based testing and exploratory testing. Test-case-based testing establishes a baseline and uses a multi-execution-channel approach (AI automation, automation, manual, and crowdsourcing) to reach true pass / true fail. Exploratory testing expands the scope of the test to take quality to the next level. The service is guaranteed to do both, and even covers updating test cases of modified features during execution, in 24 hours. For more details on the API automation testing services offered by Webomates, schedule a demo now!

If you are interested in learning more about Webomates’ CQ service please click here and schedule a demo, or reach out to us at [email protected]


5 key AI Testing Solutions To Improve Your Product’s End-User Experience

Introduction

“Customer Experience is the new battlefield” – Chris Pemberton, Gartner

Product development involves careful planning, innovation, automation, time, and resources. One really vital piece of the development process is product testing for quality, reliability, and security. However, gone are the days when merely testing software against the business and technical requirements sufficed. Your application or software may work as defined, but are your customers happy and satisfied? Does your product meet the user’s needs?

One thing that today’s customers won’t appreciate is a bad customer experience (CX). Customer satisfaction can make or break your business. Instant gratification is what today’s customers look for. Thus, CX is the biggest factor driving customer loyalty. Most companies, including the FAANG companies, have already made great strides in developing next-gen apps and are integrating AI across their tech stack to deliver a better customer experience.

Delight the customer and beat the competitor – is what all organizations are focused on. And this is not just online retail, it’s across every industry like finance, healthcare, media.

So, how do you measure Customer Experience?

In a recent study, Gartner emphasizes the importance of five types of metrics for measuring customer experience: customer satisfaction (CSAT); customer loyalty, retention, and churn; advocacy, reputation, and brand; quality and operations; and employee engagement.

These metrics are a measure of how well your products and services meet your end users’ expectations.

But how can executives guide their organizations to reap the benefits from AI and exploit the benefits that beckon?

One way is to put the customer front and center during software development and testing. Artificial intelligence-enabled customer journey analytics can find answers to important CX queries like:

How can we serve the customer better to have an improved CSAT score?

How can we detect defects before customers do?

Which features should you prioritize to improve CX and achieve business results?

Where does AI Testing come into the picture and how will it benefit the customer?

As per World Quality Report, demands for quality-at-speed and shift-left have placed the onus of ensuring end-user satisfaction on quality assurance teams.

To advance digital transformation and enhance customer experiences, QA must break loose from its traditional bug-testing shackles and embrace frictionless, AI-powered automation together with a continuous delivery and continuous testing approach. Bringing a higher-quality, more reliable product to market faster, or getting answers to queries faster (or immediately), provides a richer, more positive experience for the customer, and thereby a competitive advantage for the company.

Taking the customer experience to the next level

Here are 5 key AI drivers that can catalyze high-quality, agile software delivery, add value to business applications, and improve the customer experience.

These solutions are enabled by Webomates’ patented tools, which work as accelerators for faster and better delivery. Webomates uses an advanced intelligent test automation framework to continuously test your build across platforms and devices.

Optimize the customer experience with AI-driven exploratory testing

Not all defects are due to a developer’s coding errors. What happens when there are zero defects in the code but the software still fails? Go exploratory: a place where no scripted test has gone before! The basic aim of exploratory testing is to pinpoint how a feature works under various conditions, which can include different devices, browsers, or operating systems. Combining exploratory testing with regression testing helps you scrutinize potential risks.

Find your defects before the user does!

With AI-based automated testing, teams can increase the overall depth and scope of tests, which improves software quality. The speed at which AI (intelligent test analytics) can reveal insights allows development and testing teams to resolve defects faster. Working together, these teams shift from last-minute testing to early defect identification and resolution, shortening the time-to-market for every release.

Power of a self-healing test automation framework

When new changes are introduced, automation may fail because the predefined test scripts no longer match the application. It is then very difficult to identify which test cases should be modified or added. Webomates applies AI and ML algorithms in its self-healing test automation framework to dynamically adapt its testing scope to the changes. Because the test scripts adapt along with the application, testing becomes a lot easier.

Create an exceptional digital experience with real-time analytics and insights delivery

AI thrives on information: the more, the better. For AI to impact the customer experience (CX), actionable insights must be shared in real time. Unifying data into a single view is a must for any type of defect analytics. For every incremental build, Webomates CQ can create, execute, maintain, and analyze test cases and generate defect reports for browser, mobile, Windows, and API applications. The exact state of the system in terms of defects is known after every check-in. With its power to gather and analyze data in real time, AI helps teams better understand defects and their patterns, and eventually shape an enhanced customer experience strategy.

Deliver business value by addressing the risks that emerge across the digital ecosystem: conduct in-depth performance and security testing

Imagine your application runs perfectly fine with zero defects, and your customer is happy too. But then one security incident occurs and all your efforts are in vain! Breach of trust is the biggest no-no in the CX journey. Webomates has a proven record of scaling its testing services up as requirements change. A shift-left testing approach helps in building a good product in which UI, API, load, and security testing are not left to be handled as separate components at the end. The Webomates CQ platform helps make software testing effortless and keeps customers happy in the knowledge that their sensitive client data is secure.
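To make the self-healing idea from the list above more concrete, here is a minimal sketch of one common ingredient: falling back to alternative element locators when the preferred one stops matching after a release. This is an illustration only, not Webomates' implementation. It uses simple ordered fallbacks rather than AI or ML, and the `SelfHealingLocator` class, the locator strings, and the dictionary standing in for a web page are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SelfHealingLocator:
    """Hypothetical helper: a preferred locator plus ordered fallbacks."""
    name: str
    candidates: list  # locator strings, most preferred first
    healed_from: list = field(default_factory=list)  # locators that stopped matching

    def find(self, page: dict):
        """Return the element matched by the first working locator.

        `page` is a stand-in for a real DOM: a dict of locator -> element.
        """
        for index, locator in enumerate(self.candidates):
            element = page.get(locator)
            if element is not None:
                if index > 0:
                    # Remember which locators broke, then promote the one
                    # that worked so later lookups try it first.
                    self.healed_from.extend(self.candidates[:index])
                    self.candidates.insert(0, self.candidates.pop(index))
                return element
        raise LookupError(f"No locator matched for {self.name!r}")


# Assumed scenario: a release renamed the button, so the id-based locator breaks.
checkout = SelfHealingLocator(
    name="checkout button",
    candidates=["#checkout-btn", "button[name=checkout]", "text=Checkout"],
)
page_after_release = {"button[name=checkout]": "<button name='checkout'>"}

element = checkout.find(page_after_release)
print(element)               # the element found through the fallback locator
print(checkout.healed_from)  # the locators that no longer match
```

A production framework would typically go further, for example by scoring candidate elements on several attributes and flagging healed locators for human review, but the fallback-and-promote loop shows why a script can survive a UI change without being rewritten by hand.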

Conclusion

It is evident that AI has already begun to create a tangible impact.

The Webomates CQ platform is designed to reduce test cycle duration and mission-critical defects by more than 50% by applying machine learning and artificial intelligence to software testing. Testing is optimized by combining the patented AI testing platform with multiple channels of execution: automation and AI alongside crowdsourcing and manual testing.

This helps close the gap by connecting the dots between customer behavior and business outcomes, enabling cross-functional teams to collaborate throughout the software development journey and to better manage, measure, and optimize development and testing.

The advantages of intelligent test automation are wide-ranging. All in all, AI testing is necessary to speed up the development and deployment of software releases. It not only helps organizations save time but also helps them deliver high-quality products.

If you are interested in learning more about Webomates’ CQ service please click here and schedule a demo, or reach out to us at [email protected].

If you liked this blog, then please like/follow us Webomates or Aseem.



Challenges in AI Testing

The term artificial intelligence was coined in 1956 at a conference at Dartmouth College, New Hampshire. Since then, AI has gone through several downturns, known as AI winters, while slowly evolving over the decades.

The recent surge in the use of AI can be attributed to improved machine learning algorithms, driven by deep learning, and to the availability of massive amounts of data to learn from.

The Gartner Hype Cycle for Artificial Intelligence shares deeper insights on how artificial intelligence is making waves and impacting businesses worldwide. It also talks about how AI has found a niche place in top emerging technological trends.

According to separate surveys conducted by McKinsey and PwC in 2020, artificial intelligence adoption could contribute approximately $14-$15 trillion to the global economy by 2030.

Software testing has embraced artificial intelligence to address issues associated with manual testing. The rise of agile methodology and DevOps created the need for shorter development cycles and faster time to market, with quality, of course, remaining the top priority. While many organizations have been working on AI automation for a while now, some are still sitting on the fence about using it in their operations, because certain challenges impede the adoption of AI-driven automation. Let us see what they are in the following section.

Business issues

Immaturity in adopting AI/ML: Integrating and leveraging the power of AI in QA operations has to be meticulously planned and well thought out. It is important to understand why and how AI will help make the QA processes more efficient and cost-effective in the long run.

Lack of long-term strategy: AI strategies have to be defined with long-term benefits in mind. Expecting returns from the outset leads to disillusionment. The right approach is to involve all the relevant stakeholders (business, operational, and technical) in defining the strategy, and to invest time up front in selecting the right QA processes and operations to automate. Aligning the automation goals with the quality assurance goals and defining measurable KPIs enables the stakeholders to define a well-rounded AI strategy.

Infrastructure issues: AI/ML solutions require high-end processors to cater to their need for high computational speed. With the exponential increase in the volume of data, the hardware and software scaling needs also change. The infrastructure requirements and costs associated with it are a big deterrent in AI adoption, especially for small and budget-conscious organizations. Cloud migration is a solution to this dilemma. Organizations can take advantage of a cloud computing environment with the power of parallel multiple processors without changing much in the in-house setup.

Integration issues with the current setup: AI-based applications require higher-capacity hardware, which can make legacy systems redundant or incompatible with new versions. Besides this, introducing AI may require modifying the current software or, in some cases, completely overhauling the system. This carries cost, time, and resource implications.

Trust issues

ROI concerns: There is a lot of uncertainty around the ROI of AI automation and its subsequent benefits, especially since the initial investment is high. The right way forward is to collaborate with all the stakeholders, set realistic goals, and work towards them.

Security concerns: Visionaries like Elon Musk and Stephen Hawking have pointed out that there are still many grey areas around AI. AI is still evolving, and with machine learning there is a continuous, incremental evolution that introduces an “unknown” factor. Also, the sheer amount of data at the disposal of AI systems vests a lot of power in a machine. Data security concerns and legal issues shake the business world every now and then.

Lack of expertise

Not enough experts on board: AI is a niche field and requires a good amount of subject matter expertise. Hiring the right people and/or identifying and upskilling/reskilling the right people in-house is important to efficiently develop and manage AI systems. Also, AI systems learn, predict and analyze based on rules, patterns, and algorithms defined by these experts. The right set of skills is needed to aid in responsible and supervised machine learning.

Data management

Contaminated and outdated data: AI systems learn from the data provided to them. The quality and quantity of data are important for getting accurate results. The better the input for learning, the better the outcomes. These systems need reliable and trusted data sources to work on.

Finding the right vendor

One of the key factors in successful AI adoption in QA Ops is choosing the right vendor, one who can provide a holistic intelligent automation tool with service level guarantees at the right price.

Webomates has a competitive edge over many others with its patented intelligent automation and analytics tool, which provides value for money to its customers. Additionally, we provide service level guarantees to all our customers.

Webomates CQ is a mature intelligent automation tool that can be integrated seamlessly with a customer’s CI/CD pipeline within a matter of hours.

Our tool leverages the power of 14 AI engines and provides services at different levels of the software testing process.

We have a capable in-house team of experts who work with our intelligent automation tool to ensure that holistic results are generated.

Webomates CQ is a cost-effective and technically sound option that can scale up or down as the customer requires. Partner with us and reach out at [email protected] to know more or click here and schedule a demo. If you liked this blog, then please like/follow us Webomates or Aseem.


10 Ways To Accelerate DevOps With AI

Software programming has evolved over the decades and consequently, testing, which is an integral part of software development, has also undergone a series of changes.

It all started with ad-hoc testing by developers and testers, moved through the eras of manual and automation testing, and arrived at continuous testing, which is integral to DevOps. The introduction of artificial intelligence in software testing opened the door to faster and more reliable testing solutions, which help in delivering high-quality end products.

Read a detailed account of this journey on our blog “Evolution of software testing”. The focus of the current article is on how artificial intelligence is changing the face of software testing by accelerating DevOps.

10 ways to accelerate DevOps with AI

DevOps helps organizations keep pace with market dynamics by building, testing, and releasing software faster. Multiple build-test-release cycles generate massive amounts of data, which are then monitored and analyzed to improve the next cycle. It is difficult to sift through such a huge amount of data manually after every cycle, especially if time is of the essence. DevOps augmented with AI is a good way to improve and speed up the CI/CD/CT pipeline reliably. This is just one example of how AI increases DevOps efficiency. There are more ways in which AI can help accelerate DevOps, covered in the following section.

1. Codeless testing:

Codeless testing frees up the testing team from the tedious task of writing test scripts and makes way for them to focus on other important tasks. It not only increases efficiency by saving time and resources but also expands the scope of test automation.

We have covered the top benefits of codeless testing in our blog. Do take some time to read it: “Top 5 benefits of codeless testing”.

2. Better test coverage:

AI algorithms can dive deep into the massive volume of data at their disposal and identify multiple test scenarios that expand the test coverage, which is a herculean task if done manually. They also use their analytical capabilities to prioritize tests based on predictive analysis.

3. Improved test accuracy and reliability: