#Virginia Modeling Analysis and Simulation Center

Banks Adopt Military-Style Tactics to Fight Cybercrime

By Stacy Cowley, NY Times, May 20, 2018

O’FALLON, Mo.--In a windowless bunker here, a wall of monitors tracked incoming attacks--267,322 in the last 24 hours, according to one hovering dial, or about three every second--as a dozen analysts stared at screens filled with snippets of computer code.
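The dial's "about three every second" follows from simple division of the 24-hour count by the number of seconds in a day. A quick check in Python (the variable names are just illustrative):

```python
# Convert the dial's 24-hour attack count into an average per-second rate.
attacks_per_day = 267_322
seconds_per_day = 24 * 60 * 60  # 86,400 seconds in a day

rate = attacks_per_day / seconds_per_day
print(f"{rate:.2f} attacks per second")  # 3.09 attacks per second
```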

Pacing around, overseeing the stream of warnings, was a former Delta Force soldier who fought in Iraq and Afghanistan before shifting to a new enemy: cyberthieves.

“This is not that different from terrorists and drug cartels,” Matt Nyman, the command center’s creator, said as he surveyed his squadron of Mastercard employees. “Fundamentally, threat networks operate in similar ways.”

Cybercrime is one of the world’s fastest-growing and most lucrative industries. At least $445 billion was lost last year, up around 30 percent from just three years earlier, a global economic study found, and the Treasury Department recently designated cyberattacks as one of the greatest risks to the American financial sector. For banks and payment companies, the fight feels like a war--and they’re responding with an increasingly militarized approach.

Former government cyberspies, soldiers and counterintelligence officials now dominate the top ranks of banks’ security teams. They’ve brought to their new jobs the tools and techniques used for national defense: combat exercises, intelligence hubs modeled on those used in counterterrorism work and threat analysts who monitor the internet’s shadowy corners.

At Mastercard, Mr. Nyman oversees the company’s new fusion center, a term borrowed from the Department of Homeland Security. After the attacks of Sept. 11, the agency set up scores of fusion centers to coordinate federal, state and local intelligence-gathering. The approach spread throughout the government, with the centers used to fight disease outbreaks, wildfires and sex trafficking.

Then banks grabbed the playbook. At least a dozen of them, from giants like Citigroup and Wells Fargo to regional players such as Bank of the West, have opened fusion centers in recent years, and more are in the works. Fifth Third Bank is building one in its Cincinnati headquarters, and Visa, which created its first two years ago in Virginia, is developing two more, in Britain and Singapore. Having their own intelligence hives, the banks hope, will help them better detect patterns in all the data they amass.

The centers also have a symbolic purpose. Having a literal war room reinforces the new reality. Fending off thieves has always been a priority--it’s why banks build vaults--but the arms race has escalated rapidly.

Cybersecurity has, for many financial company chiefs, become their biggest fear, eclipsing issues like regulation and the economy.

Alfred F. Kelly Jr., Visa’s chief executive, is “completely paranoid” about the subject, he told investors at a conference in March. Bank of America’s Brian T. Moynihan said his cybersecurity team is “the only place in the company that doesn’t have a budget constraint.” (The bank’s chief operations and technology officer said it is spending about $600 million this year.)

The military sharpens soldiers’ skills with large-scale combat drills like Jade Helm and Foal Eagle, which send troops into the field to test their tactics and weaponry. The financial sector created its own version: Quantum Dawn, a biennial simulation of a catastrophic cyberstrike.

In the latest exercise last November, 900 participants from 50 banks, regulators and law enforcement agencies role-played their response to an industrywide infestation of malware that first corrupted, and then entirely blocked, all outgoing payments from the banks. Throughout the two-day test, the organizers lobbed in new threats every few hours, like denial-of-service attacks that knocked the banks’ websites offline.

The first Quantum Dawn, back in 2011, was a lower-key gathering. Participants huddled in a conference room to talk through a mock attack that shut down stock trading. Now, it’s a live-fire drill. Each bank spends months in advance re-creating its internal technology on an isolated test network, a so-called cyber range, so that its employees can fight with their actual tools and software. The company that runs their virtual battlefield, SimSpace, is a Defense Department contractor.

Sometimes, the tests expose important gaps.

A series of smaller cyber drills coordinated by the Treasury Department, called the Hamilton Series, raised an alarm three years ago. An attack on Sony, attributed to North Korea, had recently exposed sensitive company emails and data, and, in its wake, demolished huge swaths of Sony’s internal network.

If something similar happened at a bank, especially a smaller one, regulators asked, would it be able to recover? Those in the room for the drill came away uneasy.

“There was a recognition that we needed to add an additional layer of resilience,” said John Carlson, the chief of staff for the Financial Services Information Sharing and Analysis Center, the industry’s main cybersecurity coordination group.

Soon after, the group began building a new fail-safe, called Sheltered Harbor, which went into operation last year. If one member of the network has its data compromised or destroyed, others can step in, retrieve its archived records and restore basic customer account access within a day or two. It has not yet been needed, but nearly 70 percent of America’s deposit accounts are now covered by it.

The largest banks run dozens of their own internal attack simulations each year to smoke out their vulnerabilities and keep their first responders sharp.

“It’s the idea of muscle memory,” said Thomas J. Harrington, Citigroup’s chief information security officer, who spent 28 years with the F.B.I.

Growing interest among its corporate customers in cybersecurity war games inspired IBM to build a digital range in Cambridge, Mass., where it stages data breaches for customers and prospects to practice on.

One recent morning, a fictional bank called Bane & Ox was under attack on IBM’s range, and two dozen real-life executives from a variety of financial companies gathered to defend it. In the training scenario, an unidentified attacker had dumped six million customer records on Pastebin, a site often used by hackers to publish stolen data caches.

As the hours ticked by, the assault grew worse. The lost data included financial records and personally identifying details. One of the customers was Colin Powell, the former secretary of state. Phones in the room kept ringing with calls from reporters, irate executives and, eventually, regulators, wanting details about what had occurred.

When the group figured out what computer system had been used in the leak, a heated argument broke out: Should they cut off its network access immediately? Or set up surveillance and monitor any further transmissions?

At the urging of a Navy veteran who runs the cyberattack response group at a large New York bank, the group left the system connected.

“Those are the decisions you don’t want to be making for the first time during a real attack,” said Bob Stasio, IBM’s cyber range operations manager and a former operations chief for the National Security Agency’s cyber center. One financial company’s executive team did such a poor job of talking to its technical team during a past IBM training drill, Mr. Stasio said, that he went home and canceled his credit card with them.

Like many cybersecurity bunkers, IBM’s foxhole has deliberately theatrical touches. Whiteboards and giant monitors fill nearly every wall, with graphics that can be manipulated by touch.

“You can’t have a fusion center unless you have really cool TVs,” quipped Lawrence Zelvin, a former Homeland Security official who is now Citigroup’s global cybersecurity head, at a recent cybercrime conference. “It’s even better if they do something when you touch them. It doesn’t matter what they do. Just something.”

Security pros mockingly refer to such eye candy as “pew pew” maps, an onomatopoeia for the noise of laser guns in 1980s movies and video arcades. They are especially useful, executives concede, to put on display when V.I.P.s or board members stop by for a tour. Two popular “pew pew” maps are from FireEye and the defunct security vendor Norse, whose video game-like maps show laser beams zapping across the globe. Norse went out of business two years ago, and no one is sure what data the map is based on, but everyone agrees that it looks cool.

What everyone in the finance industry is afraid of is a repeat--on an even larger scale--of the data breach that hit Equifax last year.

Hackers stole personal information, including Social Security numbers, of more than 146 million people. The attack cost the company’s chief executive and four other top managers their jobs. Who stole the data, and what they did with it, is still not publicly known. The credit bureau has spent $243 million so far cleaning up the mess.

It is Mr. Nyman’s job to make sure that doesn’t happen at Mastercard. Walking around the company’s fusion center, he describes the team’s work using military slang. Its focus is “left of boom,” he said--referring to the moments before a bomb explodes. By detecting vulnerabilities and attempted hacks, the analysts aim to head off an Equifax-like explosion.

But the attacks keep coming. As he spoke, the dial displayed over his shoulder registered another few assaults on Mastercard’s systems. The total so far this year exceeds 20 million.

How to meet researchers' constant need for high-quality geospatial data

By bringing together maps, apps, data, and people, geospatial information allows everyone to make more informed decisions. By linking science with action, geospatial information enables institutes, universities, researchers, governments, industries, NGOs, and companies worldwide to innovate in planning and analysis, operations, field data collection, asset management, public engagement, simulations, and much more.

Areas of Engagement

Researchers use spatial information to trace urban growth patterns, study access to mobility and transportation networks, analyze the impact of climate change on human settlements, and more. When spatial datasets are linked with non-spatial data, they become even more useful for developing applications that can make a difference. For instance, geospatial data coupled with land administration and tenure data can significantly improve urban planning and development by landowners.

For example, a recent project by the Government of India, 'The Swamitva Project,' uses geospatial data to provide an integrated property validation solution for rural India. 'Swamitva,' which stands for Survey of Villages and Mapping with Improvised Technology in Village Areas, uses drone surveying and Continuously Operating Reference Station (CORS) technology to map villages. The project aims to provide a 'record of rights' to village household owners who possess houses in inhabited rural areas, enabling them to use their property as a financial asset for taking loans and other financial benefits from banks. The project stands to empower the rural people of India.

Geospatial data is also playing a crucial role in disaster management. Deploying it across all phases of disaster management, including prevention, mitigation, preparedness, vulnerability reduction, response, and relief, can significantly reduce disaster risk and improve how disasters are managed.

When a hailstorm strikes, the damage can be catastrophic. With damage totals sometimes exceeding USD 1 billion, hailstorms are the costliest severe storm hazard for the insurance industry, which makes reliable, long-term data necessary to estimate insured damages and assess extreme loss risks.

That's why a team of NASA scientists is currently working with international partners to use satellite data to detect hailstorms and hail damage and to predict patterns in hail frequency. The project will provide long-term, regional- to global-scale maps of severe storm occurrence, catastrophe models, and new methods to improve short-term forecasting of these storms.

"We're using data from many satellite sensors to dig in and understand when and where hailstorms are likely to occur and the widespread damage that they can cause," shares Kristopher Bedka, principal investigator at NASA's Langley Research Center in Hampton, Virginia. "This is a first-of-its-kind project, and we're beginning to show how useful this satellite data can be to the reinsurance industry, forecasters, researchers, and many other stakeholders."

Climate change is another area where research based on geospatial data is extremely important. Geospatial analysis not only provides visual evidence of harsh weather conditions, melting polar ice caps, dying corals, and vanishing islands, but also links all kinds of physical, biological, and socioeconomic data in a way that helps us understand what was, what is, and what could be. Air quality, for instance, is a public health issue that requires ongoing monitoring. Air quality data not only provides information that can protect residents but also helps to monitor the overall safety of a geographic area. NASA uses satellites to collect air quality data on an ongoing basis. The satellites can evaluate air quality conditions in near real time and observe different layers and effects that may coincide.

Satellite data can reveal information like the aerosol index and aerosol optical depth (which indicates how strongly aerosols absorb and scatter sunlight, reducing visibility) in any given area. Other types of data that satellites collect include levels of carbon monoxide, nitrous oxide, nitric acid, and sulphur dioxide, as well as fires and dust.
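Aerosol optical depth (written τ) is the quantity that ties aerosols to visibility. As a simplified illustration, assuming a vertical path through the atmosphere and ignoring the contribution of gases, the Beer-Lambert law says the direct sunlight reaching the ground, I, falls off exponentially from its value at the top of the atmosphere, I_0:

```latex
I = I_0 \, e^{-\tau}, \qquad \tau = 1 \;\Rightarrow\; \frac{I}{I_0} = e^{-1} \approx 0.37
```

So an optical depth of 1 lets roughly 37 percent of the direct beam through, while an optical depth of 0.1 lets through about 90 percent.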

Near real-time data helps to warn residents of poor air quality. It can also be used to determine how climate change affects a geographic area and to guide new infrastructure design with climate change in mind.

Thus, there is hardly any area of life on which geospatial data or location intelligence cannot have a significant impact. The more one engages in geospatial research, the more fascinating the journey of discovery becomes.

COVID-19 and Geospatial Research

The current pandemic has made each one of us realize the importance of geospatial data all the more. Whether in identifying hotspots, taking corrective measures sooner, or curbing the spread of the virus, geospatial research has helped authorities make headway more effectively in all spheres.

Location has been the answer to most of these problems. As a result, more and more companies are now developing apps that can help trace the virus and help businesses and individuals recover faster, and researchers are increasingly engaging in projects that could soon bring such geospatial products to life.

Research bottlenecks due to COVID

Projects that aim to harness the power of location intelligence have high-end hardware, software, and data requirements. Researchers typically have high-end infrastructure at their disposal, located either at their workplace or at the universities where they are pursuing their research projects. These research hubs are also their gateway to highly accurate geospatial data, so in normal times they do not need to look anywhere else. However, the pandemic has completely changed this scenario. With universities closed for long periods, researchers are more dependent on the online availability of good-quality infrastructure and data. As a result, platforms providing Data as a Service (DaaS), Software as a Service (SaaS), and Infrastructure as a Service (IaaS) solutions are becoming popular among the research community.

Meeting hardware requirements

Lockdown and closure of educational institutions have brought researchers face-to-face with the lack of high-end infrastructure required for processing geospatial data. In such a scenario, researchers rely more and more on platforms that can provide the convenience of Infrastructure as a Service (IaaS).

Hardware is becoming less and less essential in the age of cloud computing. Cloudeo's Infrastructure as a Service (IaaS) solutions can help researchers streamline their hardware requirements, save costs, and work more efficiently. With Cloudeo's IaaS, researchers pay only for what they need, which minimizes investment in local infrastructure. They can also quickly and dynamically adjust the processing power or storage they need, spreading big processing jobs over many cores, something they might not be able to accomplish (or afford) with their own physical hardware. In addition, all data is backed up securely in the cloud and remains protected from unexpected critical hardware failures.

Meeting geospatial data requirements

Researchers need access to platforms that can provide high-quality geospatial data from multiple sources at a low cost. Cloudeo's DaaS is emerging as an increasingly popular solution among researchers for accessing highly valuable data from various sources, such as suppliers of spaceborne, airborne, and UAV imagery and data. It is cost-effective because users do not have to buy permanent licenses for EO data integration, management, storage, and analytics. Data as a Service is especially advantageous for short-term projects, where long-term or permanent licenses and data purchases can become cost-prohibitive.

Over the past few years, more and more Earth Observation (EO) data, software applications, and IT services have become available from an increasing number of EO exploitation platform providers – funded by the European Commission, ESA, other public agencies, and private investment.

For instance, ESA's Network of Resources (NoR) supports users in procuring services and outsourcing requirements while increasing uptake of EO data and information for broader scientific, social, and economic purposes. The goal is to support the next generation of commercial applications and services.

Cloudeo acts as the NoR Operator, together with its consortium partners RHEA Group and BHO Legal, managing the onboarding of service providers into the NoR Portal. The operator's tasks include compiling the NoR Service Portfolio, listing services on the NoR Portal, promoting the NoR services worldwide, and procuring such services for commercial users and ESA sponsorship.

Through NoR, cloudeo plays a vital role in improving and supporting education, research, and science. It is promoting community building by enabling collaboration between all stakeholders.

Research is crucial for the growth of an economy. As more businesses realize the importance of integrating geospatial data into everyday affairs, research in the geospatial field is gaining momentum, and the need for access to high-quality geospatial data is growing accordingly. While most universities are equipped to meet researchers' needs in normal times, with the current lockdowns and closures of institutions, researchers are relying on robust, accurate online platforms that can meet their hardware, software, and geospatial data requirements. Cloudeo is one such reliable platform for accessing geospatial data from disparate sources, and it can also meet researchers' infrastructure requirements effectively. By bringing data creators, data processors, data users, and solution/app developers onto one platform, cloudeo is creating the most user-friendly geospatial solutions marketplace to meet the infrastructure, software, and data needs of researchers.

Explore cloudeo today and take an essential step towards excelling in your research and academic endeavors. No one can tell you about everything spatial so accurately!

Better than reality: NASA scientists tap virtual reality to make a scientific discovery

https://sciencespies.com/space/better-than-reality-nasa-scientists-tap-virtual-reality-to-make-a-scientific-discovery/

Goddard engineer Tom Grubb manipulates a 3D simulation that animated the speed and direction of four million stars in the local Milky Way neighborhood. Goddard astronomer Marc Kuchner and researcher Susan Higashio used the virtual reality program, PointCloudsVR, designed by primary software developer Matthew Brandt, to obtain a new perspective on the stars’ motions. The simulation helped them classify star groupings. Credit: NASA/Chris Gunn

NASA scientists using virtual reality technology are redefining our understanding about how our galaxy works.

Using a customized, 3-D virtual reality (VR) simulation that animated the speed and direction of 4 million stars in the local Milky Way neighborhood, astronomer Marc Kuchner and researcher Susan Higashio obtained a new perspective on the stars’ motions, improving our understanding of star groupings.

Astronomers have come to different conclusions about the same groups of stars from studying them in six dimensions using paper graphs, Higashio said. Groups of stars moving together indicate to astronomers that they originated at the same time and place, from the same cosmic event, which can help us understand how our galaxy evolved.

Goddard’s virtual reality team, managed by Thomas Grubb, animated those same stars, revolutionizing the classification process and making the groupings easier to see, Higashio said. They found stars that may have been classified into the wrong groups as well as star groups that could belong to larger groupings.

Kuchner presented the findings at the annual American Geophysical Union (AGU) conference in early December 2019. Kuchner and Higashio, both at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, plan to publish a paper on their findings next year, along with engineer Matthew Brandt, the architect for the PointCloudsVR simulation they used.

“Rather than look up one database and then another database, why not fly there and look at them all together,” Higashio said. She watched these simulations hundreds, maybe thousands, of times and said the associations between the groups of stars became more intuitive inside the artificial cosmos found within the VR headset. Observing stars in VR will redefine astronomers’ understanding of some individual stars as well as star groupings.

The 3-D visualization helped her and Kuchner understand how the local stellar neighborhood formed, opening a window into the past, Kuchner said. “We often find groups of young stars moving together, suggesting that they all formed at the same time,” Kuchner said. “The thinking is they represent a star-formation event. They were all formed in the same place at the same time and so they move together.”

“Planetariums are uploading all the databases they can get their hands on and they take people through the cosmos,” Kuchner added. “Well, I’m not going to build a planetarium in my office, but I can put on a headset and I’m there.”

Realizing a Vision

The discovery realized a vision for Goddard Chief Technologist Peter Hughes, who saw the potential of VR to aid in scientific discovery when he began funding engineer Thomas Grubb’s VR project more than three years ago under the center’s Internal Research and Development (IRAD) program and NASA’s Center Innovation Fund [CuttingEdge, Summer 2017]. “All of our technologies enable the scientific exploration of our universe in some way,” Hughes said. “For us, scientific discovery is one of the most compelling reasons to develop an AR/VR capability.”

The PointCloudsVR software has been officially released and open sourced on NASA’s Github site: https://github.com/nasa/PointCloudsVR

Scientific discovery isn’t the only beneficiary of Grubb’s lab.

The VR and augmented reality (AR) worlds can help engineers across NASA and beyond, Grubb said. VR puts the viewer inside a simulated world, while AR overlays computer-generated information onto the real world. Grubb said that since the first “viable” headsets came on the market in 2016, his team has been developing solutions, like the star-tracking world Kuchner and Higashio explored, as well as virtual hands-on applications for engineers working on next-generation exploration and satellite servicing missions.

Engineering Applications

Grubb’s VR/AR team is now working to realize the first intra-agency virtual reality meet-ups, or design reviews, as well as supporting missions directly. His clients include the Restore-L project that is developing a suite of tools, technologies, and techniques needed to extend satellites’ lifespans, the Wide Field Infrared Survey Telescope (WFIRST) mission, and various planetary science projects.

“The hardware is here; the support is here,” Grubb said. “The software is lagging, as well as conventions on how to interact with the virtual world. You don’t have simple conventions like pinch and zoom or how every mouse works the same when you right click or left click.”

That’s where Grubb’s team comes in, he said. To overcome these usability issues, the team created a framework called the Mixed Reality Engineering Toolkit and is training groups on how to work with it. MRET, which is currently available for government agencies, assists in science-data analysis and enables VR-based engineering design: from concept designs for CubeSats to simulated hardware integration and testing for missions and in-orbit visualizations like the one for Restore-L.

For engineers and mission and spacecraft designers, VR offers cost savings in the design/build phase before they build physical mockups, Grubb said. “You still have to build mockups, but you can work out a lot of the iterations before you move to the physical model,” he said. “It’s not really sexy to the average person to talk about cable routing, but to an engineer, being able to do that in a virtual environment and know how much cabling you need and what the route looks like, that’s very exciting.”

In a mockup of the Restore-L spacecraft, for example, Grubb showed how the VR simulation would allow an engineer to “draw” a cable path through the instruments and components, and the software provides the cable length needed to follow that path. Tool paths to build, repair, and service hardware can also be worked out virtually, down to whether or not the tool will fit and be usable in confined spaces.
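The article doesn't say how the software computes that length. A minimal sketch, assuming the drawn path is stored as a list of 3D waypoints and the cable runs in straight segments between them, simply sums the segment lengths. The function `path_length` and the sample coordinates are hypothetical and are not taken from MRET or PointCloudsVR.

```python
import math
from typing import List, Tuple

# A waypoint in 3D space (hypothetical units: metres).
Point = Tuple[float, float, float]


def path_length(points: List[Point]) -> float:
    """Total length of a polyline: the sum of straight-line distances
    between each pair of consecutive waypoints."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


# Hypothetical waypoints an engineer might "draw" around components.
route = [(0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.3, 0.4, 0.0), (0.3, 0.4, 1.2)]
print(f"{path_length(route):.2f} m")  # 1.90 m
```

A real routing tool would also have to account for bend radii, connectors, and service slack, which this sketch ignores.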

In addition, Grubb’s team worked with a team from NASA’s Langley Research Center in Hampton, Virginia, this past summer to work out how to interact with visualizations over NASA’s communication networks. This year, they plan to enable people at Goddard and Langley to fully interact with the visualization. “We’ll be in the same environment and when we point at or manipulate something in the environment, they’ll be able to see that,” Grubb said.

Augmented Science—a Better Future

For Kuchner and Higashio, the idea of being able to present their findings within a shared VR world was exciting. And like Grubb, Kuchner believes VR headsets will be a more common science tool in the future. “Why shouldn’t that be a research tool that’s on the desk of every astrophysicist,” he said. “I think it’s just a matter of time before this becomes commonplace.”

Provided by NASA’s Goddard Space Flight Center

Citation: Better than reality: NASA scientists tap virtual reality to make a scientific discovery (2020, January 29) retrieved 29 January 2020 from https://phys.org/news/2020-01-reality-nasa-scientists-virtual-scientific.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.

#Space

Artificial Intelligence for Mental Health

Image credit: unsplash.com

In recent years we have been hearing a lot about the potential of digital doctors and nurses: AI becoming directly responsible for our welfare. Although this is a logical next step after AI-assisted diagnostics and treatment-path evaluation, the digitalization of medical professionals is something the broad public still isn’t completely comfortable with.

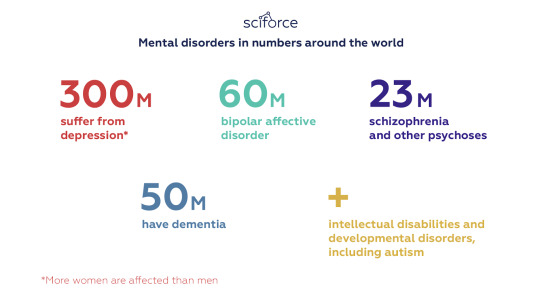

But what if the technology turns to mental health and digitalizes not physicians but psychologists? The figures all favor introducing AI into this sphere: an estimated one fourth of the adult population is affected by mental disorders. According to the World Health Organization, depression alone afflicts roughly 300 million people around the globe. The sad truth is that not all of them can reach out for help. The obstacles include the persisting stigma in society, the shortage of therapists, the cost of therapy, and, in some countries, the qualifications of the specialists.

AI appears to offer multiple opportunities to help people maintain and improve their mental health. At present, the most promising domains for applying AI techniques are computational psychiatry and the development of specialized chatbots that could provide counseling and therapeutic services.

Computational psychiatry

Broadly defined, computational psychiatry encompasses two approaches: data-driven and theory-driven. Data-driven approaches apply machine-learning methods to high-dimensional data to improve classification of disease, predict treatment outcomes or improve treatment selection. Theory-driven approaches use models that instantiate prior knowledge of the mechanisms underlying mental disorders at multiple levels of analysis and abstraction. Computational psychiatry combines multiple levels and types of computation with multiple types of data to improve understanding, diagnostics, prediction and treatment of mental disorders.
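To make the data-driven idea concrete, here is a minimal sketch of one of the simplest machine-learning classifiers, a nearest-centroid classifier, in plain Python. The feature vectors, labels, and function names are hypothetical and purely illustrative; real studies work with far higher-dimensional inputs (imaging, speech, questionnaires) and validated methods, and nothing here reflects clinical data.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def fit_centroids(samples: List[Tuple[Vector, str]]) -> Dict[str, List[float]]:
    """Average the feature vectors of each label to get one centroid per class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids: Dict[str, List[float]], features: Vector) -> str:
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Toy, made-up feature vectors (e.g. normalized questionnaire and speech scores).
training = [
    ([0.9, 0.8, 0.7], "group A"),
    ([0.8, 0.9, 0.6], "group A"),
    ([0.1, 0.2, 0.3], "group B"),
    ([0.2, 0.1, 0.2], "group B"),
]
centroids = fit_centroids(training)
print(predict(centroids, [0.85, 0.75, 0.65]))  # group A
```

The appeal of this design is simplicity: each class is summarized by its mean vector, and a new case goes to the nearest one. Practical pipelines add feature scaling, cross-validation, and more expressive models.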

Image credit: shutterstock.com

Diagnostics

Mental disorders are known to be difficult to diagnose. At present, diagnosis is based on symptoms, categorized into mental health disorders by professionals and collected in the Diagnostic and Statistical Manual of Mental Disorders (the DSM). However, given the current lack of biomarkers and the reliance on symptoms gathered through observation, symptoms often overlap among different diagnoses. Moreover, humans are prone to inaccuracy and subjectivity: what is a three on one person’s anxiety scale might be a seven for another.

One possible way for AI to assist or even replace human experts, proposed by a group at Virginia Tech, is to combine fMRI neuroimaging with a trove of other data, including survey responses, functional and structural MRIs, behavioral data, speech data from interviews, and psychological assessments. Another example is Quartet Health, which screens patients’ medical histories and behavioral patterns to uncover undiagnosed mental health problems. For instance, Quartet can flag possible anxiety if someone has been repeatedly tested for a non-existent cardiac problem.

AI can help researchers discover physical symptoms of mental disorders and track the effectiveness of various interventions within the body. It might also find new patterns in our social behaviors, or show where and when a certain therapeutic intervention is effective, providing a template for preventive mental health treatment.

Treatment assistance

As with somatic diseases, AI algorithms can be used to evaluate the treatment of mental disorders, predict the course of the illness, and help select the optimal treatment path. Statistical models built by mining existing clinical trial data can prospectively identify patients who are likely to respond to a specific medication or line of treatment.

One example of machine learning in this area is the use of algorithms to predict which antidepressant has the best chance of success for a given patient. Clinicians currently have no empirically validated way to assess whether a patient with depression will respond to a specific antidepressant, so matching patients to interventions in this way could improve treatment efficacy.

Beyond the analysis of fMRI images, computational psychiatry faces ethical, spiritual, practical, and technological issues. For instance, the huge stores of intensely personal data that the algorithms require immediately raise cybersecurity concerns. At the same time, however, the technology places a buffer between the individual, their personal data, and the counselor, which can help patients overcome their fear of stigma and their reluctance to seek help.

Chatbot development

The idea of creating chatbots that provide immediate counseling was born in response to the shortage of therapists and patients’ embarrassment about seeking help. Patients, who are often reluctant to reveal problems to a therapist they’ve never met, are believed to let down their guard with AI-powered tools. In addition, the lower cost of AI-based treatment compared with seeing a psychiatrist or psychologist could extend coverage to a broader range of people who need care.


Virtual Counseling

The idea of using programs to simulate conversations between a therapist and a patient dates back to the 1960s, when the MIT Artificial Intelligence Laboratory designed ELIZA, the grandparent of modern chatbots. Present-day advances in natural language processing and the popularity of smartphones have brought chatbots to the forefront of mental health care.

For instance, Ginger.io’s app has video and text-based therapy and coaching sessions. Through analyzing past assessments and real-time data collected using mobile devices, the Ginger.io app can help specialists track patients’ progress, identify times of crisis, and develop individualized care plans.

Another example is Woebot, a Facebook-integrated computer program that aims to replicate conversations between a patient and a therapist. The digital health technology asks about your mood and thoughts, “listens” to how you are feeling, learns about you, and offers evidence-based cognitive behavioral therapy (CBT) tools. The first randomized controlled trial of Woebot showed that after just two weeks, participants experienced a significant reduction in depression and anxiety.

The next generation of chatbots will feature avatars able to detect nonverbal cues and respond accordingly. One such virtual therapist, named Ellie, was launched by the University of Southern California’s Institute for Creative Technologies (ICT) to treat veterans experiencing depression and post-traumatic stress disorder. Ellie runs on different algorithms that determine her questions, motions, and gestures. The program observes 66 points on the patient’s face and notes the patient’s rate of speech and the length of pauses. Ellie’s actions, motions, and speech mimic those of a real therapist, but only up to the point where she does not feel too humanlike.

Preventing Social Isolation

AI-driven chatbots can also help address the extreme social isolation of people suffering from mental illness and their difficulty building close relationships. Combined with online social networks, such chatbots can foster a sense of belonging and encourage positive communication. The National Center of Excellence in Youth Mental Health in Melbourne, Australia, has launched the Moderate Online Social Therapy (MOST) project to help young people recovering from psychosis and depression. The technology creates a therapeutic environment where young people learn and interact, and it also serves as a platform for practicing therapeutic techniques.

Recent developments hint that we will soon face an AI revolution in mental health, promising better access and better care at a cost that won’t break the bank. However, if AI builds models for mental health disorders, are we not also building a model of normality? And if so, who gets to define what “normal” is, and will it be used as a tool or a cudgel? When applying artificial intelligence to study our brains, we should be careful not to reduce personality to a combination of quantifiable factors, and to demystify mental disorders without finding problems in every idiosyncrasy.


Dobson DaVanzo & Associates, LLC – Research Analyst

Dobson DaVanzo & Associates, LLC is a health care consulting firm based in Vienna, Virginia, in the Washington, D.C. metropolitan area. The work of our principals has influenced numerous public policy decisions, and appears in legislation and regulation. Our research helps payers and providers develop, implement, and evaluate equitable payment methodologies in support of value-based purchasing. We apply decades of staff experience, access to a broad range of policymakers and subject matter experts, and innovative research techniques in order to best meet our clients’ needs. Our analyses are rigorous and objective, and make use of a variety of public and private-sector data sources.

We are seeking candidates with related experience to fill a Research Analyst position in a demanding, small, and fast-paced work environment. Candidates must possess strong critical thinking, verbal and written communication, organizational, and multitasking skills, pay great attention to detail, and be flexible.

Key responsibilities for the Research Analyst position include, but are not limited to:
• Assisting with projects requiring quantitative and qualitative data collection and analysis
• Summarizing quantitative and qualitative findings in reports and presentations
• Assisting with database manipulation, construction, and simulation modeling
• Assisting with the design and administration of stakeholder interview protocols and survey instruments
• Summarizing stakeholder interviews and client meetings
• Conducting comprehensive literature reviews using PubMed/Medline and other electronic databases and synthesizing findings
• Participating in team and client project meetings
• Assisting with preparation of proposals
• Managing time effectively across multiple projects

Our core competencies include modeling the impact of Medicare and Medicaid payment policies on health care providers using a variety of statistical and econometric methodologies in order to aid in the development of future payment systems.

The chosen candidates will possess working knowledge and experience with Medicare, Medicaid, or other public health programs of the agencies of the U.S. Department of Health and Human Services. Preference will be given to those with familiarity with CMS payment regulation for Prospective Payment Systems (PPS) for acute care hospitals, long term care hospitals, skilled nursing facilities, inpatient rehabilitation facilities, home health agencies, and ambulatory surgery centers.

A Master’s Degree and a minimum of two to three years of progressively responsible work experience in health care research, health policy analysis, and/or public sector consulting is required. Highly valued skills and abilities include:

• Ability to learn quickly and apply new content knowledge and skills
• Ability to communicate complex ideas, verbally and in writing, in a clear and coherent manner
• Experience with technical writing
• Experience with complex database construction and analysis using Excel
• Ability to multi-task and manage time effectively across multiple projects
• Flexible/adaptable to changing needs and priorities
• Able to work both independently and collaboratively as part of a team
• Attention to detail
• Strong listening skills
• Proficiency in Microsoft Word, PowerPoint and Excel required
• Experience with government and/or commercial contract work

Dobson | DaVanzo offers competitive salaries and a generous benefits package, including health insurance, and a retirement plan with company match. The company provides lunch every day on site, a fully stocked kitchen, free parking, and a dog-friendly office.

To apply, email your resume, cover letter, and writing sample to [email protected]. Please include your salary requirements.

Dobson | DaVanzo is an equal opportunity employer.


"Google supports COVID-19 AI and data analytics projects"

Nonprofits, universities and other academic institutions around the world are turning to artificial intelligence (AI) and data analytics to help us better understand COVID-19 and its impact on communities—especially vulnerable populations and healthcare workers. To support this work, Google.org is giving more than $8.5 million to 31 organizations around the world to aid in COVID-19 response. Three of these organizations will also receive the pro-bono support of Google.org Fellowship teams.

This funding is part of Google.org’s $100 million commitment to COVID-19 relief and focuses on four key areas where new information and action is needed to help mitigate the effects of the pandemic.

Monitoring and forecasting disease spread

Understanding the spread of COVID-19 is critical to informing public health decisions and lessening its impact on communities. We’re supporting the development of data platforms to help model disease and projects that explore the use of diverse public datasets to more accurately predict the spread of the virus.

Improving health equity and minimizing secondary effects of the pandemic

COVID-19 has had a disproportionate effect on vulnerable populations. To address health disparities and drive equitable outcomes, we’re supporting efforts to map the social and environmental drivers of COVID-19 impact, such as race, ethnicity, gender and socioeconomic status. In addition to learning more about the immediate health effects of COVID-19, we’re also supporting work that seeks to better understand and reduce the long-term, indirect effects of the virus—ranging from challenges with mental health to delays in preventive care.

Slowing transmission by advancing the science of contact tracing and environmental sensing

Contact tracing is a valuable tool to slow the spread of disease. Public health officials around the world are using digital tools to help with contact tracing. Google.org is supporting projects that advance science in this important area, including research investigating how to improve exposure risk assessments while preserving privacy and security. We’re also supporting related research to understand how COVID-19 might spread in public spaces, like transit systems.

Supporting healthcare workers

Whether it’s working to meet the increased demand for acute patient care, adapting to rapidly changing protocols or navigating personal mental and physical wellbeing, healthcare workers face complex challenges on the frontlines. We’re supporting organizations that are focused on helping healthcare workers quickly adopt new protocols, deliver more efficient care, and better serve vulnerable populations.

Together, these organizations are helping make the community’s response to the pandemic more advanced and inclusive, and we’re proud to support these efforts. You can find information about the organizations Google.org is supporting below.

Monitoring and forecasting disease spread

Carnegie Mellon University*: informing public health officials with interactive maps that display real-time COVID-19 data from sources such as web surveys and other publicly-available data.

Keio University: investigating the reliability of large-scale surveys in helping model the spread of COVID-19.

University College London: modeling the prevalence of COVID-19 and understanding its impact using publicly-available aggregated, anonymized search trends data.

Boston Children's Hospital, Oxford University, Northeastern University*: building a platform to support accurate and trusted public health data for researchers, public health officials and citizens.

Tel Aviv University: developing simulation models using synthetic data to investigate the spread of COVID-19 in Israel.

Kampala International University, Stanford University, Leiden University, GO FAIR: implementing data sharing standards and platforms for disease modeling for institutions across Uganda, Ethiopia, Nigeria, Kenya, Tunisia and Zimbabwe.

Improving health equity and minimizing secondary effects of the pandemic

Morehouse School of Medicine’s Satcher Health Leadership Institute*: developing an interactive, public-facing COVID-19 Health Equity Map of the United States.

Florida A&M University, Shaw University: examining structural social determinants of health and the disproportionate impact of COVID-19 in communities of color in Florida and North Carolina.

University of North Carolina, Vanderbilt University: investigating molecular mechanisms underlying susceptibility to SARS-CoV-2 and variability in COVID-19 outcomes in Hispanic/Latinx populations.

Beth Israel Deaconess Medical Center: quantifying the impact of COVID-19 on healthcare not directly associated with the virus, such as delayed routine or preventative care.

Georgia Institute of Technology: investigating opportunities for vulnerable populations to find information related to COVID-19.

Cornell Tech: developing digital tools and resources for advocates and survivors of intimate partner violence during COVID-19.

University of Michigan School of Information: evaluating health equity impacts of the rapid virtualization of primary healthcare.

Indian Institute of Technology Gandhinagar: modeling the impact of air pollution on COVID-related secondary health exacerbations.

Cornell University, EURECOM: developing scalable and explainable methods for verifying claims and identifying misinformation about COVID-19.

Slowing transmission by advancing the science of contact tracing and environmental sensing

Arizona State University: applying federated analytics (a state-of-the-art, privacy-preserving analytic technique) to contact tracing, including an on-campus pilot.

Stanford University: applying sparse secure aggregation to detect emerging hotspots.

University of Virginia, Princeton University, University of Maryland: designing and analyzing effective digital contact tracing methods.

University of Washington: investigating environmental SARS-CoV-2 detection and filtration methods in bus lines and other public spaces.

Indian Institute of Science, Bengaluru: mitigating the spread of COVID-19 in India’s transit systems with rapid testing and modified commuter patterns.

TU Berlin, University of Luxembourg: using quantum mechanics and machine learning to understand the binding of SARS-CoV-2 spike protein to human cells, a key process in COVID-19 infection.

Supporting healthcare workers

Medic Mobile, Dimagi: developing data analytics tools to support frontline health workers in countries such as India and Kenya.

Global Strategies: developing software to support healthcare workers adopting COVID-19 protocols in underserved, rural populations in the U.S., including Native American reservations.

C Minds: creating an open-source, AI-based support system for clinical trials related to COVID-19.

Hospital Israelita Albert Einstein: supporting and integrating community health workers and volunteers to help deliver mental health services and monitor outcomes in one of Brazil's most vulnerable communities.

Fiocruz Bahia, Federal University of Bahia: establishing an AI platform for research and information-sharing related to COVID-19 in Brazil.

RAD-AID: creating and managing a data lake for institutions in low- and middle-income countries to pool anonymized data and access AI tools.

Yonsei University College of Medicine: scaling and distributing decision support systems for patients and doctors to better predict hospitalization and intensive care needs due to COVID-19.

University of California Berkeley and Gladstone Institutes: developing rapid at-home CRISPR-based COVID-19 diagnostic tests using cell phone technology.

Fondazione Istituto Italiano di Tecnologia: enabling open-source access to anonymized COVID-19 chest X-ray and clinical data, and researching image analysis for early diagnosis and prognosis.

*Recipient of a Google.org Fellowship

Source: The Official Google Blog


Social Media Image About Mask Efficacy Right In Sentiment, But Percentages Are ‘Bonkers’

COVID-19’s “contagion probability” between two people is 70% if the carrier is not masked, 5% if the carrier is masked, and 1.5% if both parties are

— a viral image circulating on social media since April

This story was produced in partnership with PolitiFact.

This story can be republished for free.

A popular social media post that’s been circulating on Instagram and Facebook since April depicts the degree to which mask-wearing interferes with the transmission of the novel coronavirus. It gives its highest “contagion probability” — a very precise 70% — to a person who has COVID-19 but interacts with others without wearing a mask. The lowest probability, 1.5%, is when masks are worn by all.

The exact percentages assigned to each scenario had no attribution or mention of a source. So we wanted to know if there is any science backing up the message and the numbers — especially as mayors, governors and members of Congress increasingly point to mask-wearing as a means to address the surges in coronavirus cases across the country.

Doubts About The Percentages

As with so many things on social media, it’s not clear who made this graphic or where they got their information. Since we couldn’t start with the source, we reached out to the Centers for Disease Control and Prevention to ask if the agency could point to research that would support the graphic’s “contagion probability” percentages.

“We have not seen or compiled data that looks at probabilities like the ones represented in the visual you sent,” Jason McDonald, a member of CDC’s media team, wrote in an email. “Data are limited on the effectiveness of cloth face coverings in this respect and come primarily from laboratory studies.”

McDonald added that studies are needed to measure how much face coverings reduce transmission of COVID-19, especially from those who have the disease but are asymptomatic or pre-symptomatic.

Other public health experts we consulted agreed: They were not aware of any science that confirmed the numbers in the image.


“The data presented is bonkers and does not reflect actual human transmissions that occurred in real life with real people,” Peter Chin-Hong, a professor of medicine at the University of California-San Francisco, wrote in an email. It also does not reflect anything simulated in a lab, he added.

Andrew Lover, an assistant professor of epidemiology at the University of Massachusetts Amherst, agreed. He had seen a similar graphic on Facebook before we interviewed him and had done some fact-checking on his own.

“We simply don’t have data to say this,” he wrote in an email. “It would require transmission models in animals or very detailed movement tracking with documented mask use (in large populations).”

Because COVID-19 is a relatively new disease, there have been only limited observational studies on mask use, said Lover. The studies were conducted in China and Taiwan, he added, and mostly looked at self-reported mask use.

Research regarding other viral diseases, though, indicates masks are effective at reducing the number of viral particles a sick person releases. Inhaling viral particles is often how respiratory diseases are spread.

Sources:

ACS Nano, “Aerosol Filtration Efficiency of Common Fabrics Used in Respiratory Cloth Masks,” May 26, 2020

Associated Press, “Graphic Touts Unconfirmed Details About Masks and Coronavirus,” April 28, 2020

BMJ Global Health, “Reduction of Secondary Transmission of SARS-CoV-2 in Households by Face Mask Use, Disinfection and Social Distancing: A Cohort Study in Beijing, China,” May 2020

Email interview with Andrew Noymer, associate professor of population health and disease prevention, University of California-Irvine, June 29, 2020

Email interview with Jeffrey Shaman, professor of environmental health sciences and infectious diseases, Columbia University, June 29, 2020

Email interview with Linsey Marr, Charles P. Lunsford professor of civil and environmental engineering, Virginia Polytechnic Institute and State University, June 29, 2020

Email interview with Peter Chin-Hong, professor of medicine, and George Rutherford, professor of epidemiology and biostatistics, University of California-San Francisco, June 29, 2020

Email interview with Werner Bischoff, medical director of infection prevention and health system epidemiology, Wake Forest Baptist Health, June 30, 2020

Email statement from Jason McDonald, member of the media team, Centers for Disease Control and Prevention, June 29, 2020

The Lancet, “Physical Distancing, Face Masks, and Eye Protection to Prevent Person-to-Person Transmission of SARS-CoV-2 and COVID-19: A Systematic Review and Meta-Analysis,” June 1, 2020

Nature Medicine, “Respiratory Virus Shedding in Exhaled Breath and Efficacy of Face Masks,” April 3, 2020

Phone and email interview with Andrew Lover, assistant professor of epidemiology, University of Massachusetts Amherst, June 29, 2020

Reuters, “Partly False Claim: Wear a Face Mask; COVID-19 Risk Reduced by Up to 98.5%,” April 23, 2020

The Washington Post, “Spate of New Research Supports Wearing Masks to Control Coronavirus Spread,” June 13, 2020

One recent study found that people infected with other coronaviruses (not the one that causes COVID-19) who wore a surgical mask breathed fewer viral particles into their environment, meaning there was less risk of transmitting the disease. And a recent meta-analysis funded by the World Health Organization found that, for the general public, the risk of infection is reduced if face masks are worn, even if the masks are disposable surgical masks or cotton masks.

The Sentiment Is On Target

Though the experts said it’s clear the percentages presented in this social media image don’t hold up to scrutiny, they agreed that the general idea is right.

“We get the most protection if both parties wear masks,” Linsey Marr, a professor of civil and environmental engineering at Virginia Tech who studies viral air droplet transmission, wrote in an email. She was speaking about transmission of COVID-19 as well as other respiratory illnesses.

Chin-Hong went even further. “Bottom line,” he wrote in his email, “everyone should wear a mask and stop debating who might have [the virus] and who doesn’t.”

Marr also explained that cloth masks are better at outward protection — blocking droplets released by the wearer — than inward protection — blocking the wearer from breathing in others’ exhaled droplets.

“The main reason that the masks do better in the outward direction is that the droplets/aerosols released from the wearer’s nose and mouth haven’t had a chance to undergo evaporation and shrinkage before they hit the mask,” wrote Marr. “It’s easier for the fabric to block the droplets/aerosols when they’re larger rather than after they have had a chance to shrink while they’re traveling through the air.”

So, the image is also right when it implies there is less risk of transmission of the disease if a COVID-positive person wears a mask.

“In terms of public health messaging, it’s giving the right message. It just might be overly exact in terms of the relative risk,” said Lover. “As a rule of thumb, the more people wearing masks, the better it is for population health.”

Public health experts urge widespread use of masks because those with COVID-19 can often be asymptomatic or pre-symptomatic — meaning they may be unaware they have the disease, but could still spread it. Wearing a mask could interfere with that spread.

Our Ruling

A viral social media image claims to show “contagion probabilities” in different scenarios depending on whether masks are worn.

Experts agreed the image does convey an idea that is right: Wearing a mask is likely to interfere with the spread of COVID-19.

But, although this message has a hint of accuracy, the image leaves out important details and context, namely the source for the contagion probabilities it seeks to illustrate. Experts said evidence for the specific probabilities doesn’t exist.

We rate it Mostly False.

Source: https://khn.org/news/social-media-image-about-mask-efficacy-right-in-sentiment-but-percentages-are-bonkers/

0 notes

Text

Social Media Image About Mask Efficacy Right In Sentiment, But Percentages Are ‘Bonkers’

COVID-19’s “contagion probability” between two people is 70% if the carrier is not masked, 5% if the carrier is masked, and 1.5% if both parties are

— a viral image circulating on social media since April

This story was produced in partnership with PolitiFact.

This story can be republished for free (details).

A popular social media post that’s been circulating on Instagram and Facebook since April depicts the degree to which mask-wearing interferes with the transmission of the novel coronavirus. It gives its highest “contagion probability” — a very precise 70% — to a person who has COVID-19 but interacts with others without wearing a mask. The lowest probability, 1.5%, is when masks are worn by all.

The exact percentages assigned to each scenario had no attribution or mention of a source. So we wanted to know if there is any science backing up the message and the numbers — especially as mayors, governors and members of Congress increasingly point to mask-wearing as a means to address the surges in coronavirus cases across the country.

Doubts About The Percentages

As with so many things on social media, it’s not clear who made this graphic or where they got their information. Since we couldn’t start with the source, we reached out to the Centers for Disease Control and Prevention to ask if the agency could point to research that would support the graphic’s “contagion probability” percentages.

“We have not seen or compiled data that looks at probabilities like the ones represented in the visual you sent,” Jason McDonald, a member of CDC’s media team, wrote in an email. “Data are limited on the effectiveness of cloth face coverings in this respect and come primarily from laboratory studies.”

McDonald added that studies are needed to measure how much face coverings reduce transmission of COVID-19, especially from those who have the disease but are asymptomatic or pre-symptomatic.

Other public health experts we consulted agreed: They were not aware of any science that confirmed the numbers in the image.

Email Sign-Up

Subscribe to KHN’s free Morning Briefing.

Sign Up

Please confirm your email address below:

Sign Up

“The data presented is bonkers and does not reflect actual human transmissions that occurred in real life with real people,” Peter Chin-Hong, a professor of medicine at the University of California-San Francisco, wrote in an email. It also does not reflect anything simulated in a lab, he added.

Andrew Lover, an assistant professor of epidemiology at the University of Massachusetts Amherst, agreed. He had seen a similar graphic on Facebook before we interviewed him and done some fact-checking on his own.

“We simply don’t have data to say this,” he wrote in an email. “It would require transmission models in animals or very detailed movement tracking with documented mask use (in large populations).”

Because COVID-19 is a relatively new disease, there have been only limited observational studies on mask use, said Lover. The studies were conducted in China and Taiwan, he added, and mostly looked at self-reported mask use.

Research regarding other viral diseases, though, indicates masks are effective at reducing the number of viral particles a sick person releases. Inhaling viral particles is often how respiratory diseases are spread.

Sources:

ACS Nano, “Aerosol Filtration Efficiency of Common Fabrics Used in Respiratory Cloth Masks,” May 26, 2020

Associated Press, “Graphic Touts Unconfirmed Details About Masks and Coronavirus,” April 28, 2020

BMJ Global Health, “Reduction of Secondary Transmission of SARS-CoV-2 in Households by Face Mask Use, Disinfection and Social Distancing: A Cohort Study in Beijing, China,” May 2020

Email interview with Andrew Noymer, associate professor of population health and disease prevention, University of California-Irvine, June 29, 2020

Email interview with Jeffrey Shaman, professor of environmental health sciences and infectious diseases, Columbia University, June 29, 2020

Email interview with Linsey Marr, Charles P. Lunsford professor of civil and environmental engineering, Virginia Polytechnic Institute and State University, June 29, 2020

Email interview with Peter Chin-Hong, professor of medicine, and George Rutherford, professor of epidemiology and biostatistics, University of California-San Francisco, June 29, 2020

Email interview with Werner Bischoff, medical director of infection prevention and health system epidemiology, Wake Forest Baptist Health, June 30, 2020

Email statement from Jason McDonald, member of the media team, Centers for Disease Control and Prevention, June 29, 2020

The Lancet, “Physical Distancing, Face Masks, and Eye Protection to Prevent Person-to-Person Transmission of SARS-CoV-2 and COVID-19: A Systematic Review and Meta-Analysis,” June 1, 2020

Nature Medicine, “Respiratory Virus Shedding in Exhaled Breath and Efficacy of Face Masks,” April 3, 2020

Phone and email interview with Andrew Lover, assistant professor of epidemiology, University of Massachusetts Amherst, June 29, 2020

Reuters, “Partly False Claim: Wear a Face Mask; COVID-19 Risk Reduced by Up to 98.5%,” April 23, 2020

The Washington Post, “Spate of New Research Supports Wearing Masks to Control Coronavirus Spread,” June 13, 2020

One recent study found that people who had different coronaviruses (not COVID-19) and wore a surgical mask breathed fewer viral particles into their environment, meaning there was less risk of transmitting the disease. And a recent meta-analysis study funded by the World Health Organization found that, for the general public, the risk of infection is reduced if face masks are worn, even if the masks are disposable surgical masks or cotton masks.

The Sentiment Is On Target

Though the experts said it’s clear the percentages presented in this social media image don’t hold up to scrutiny, they agreed that the general idea is right.

“We get the most protection if both parties wear masks,” Linsey Marr, a professor of civil and environmental engineering at Virginia Tech who studies viral air droplet transmission, wrote in an email. She was speaking about transmission of COVID-19 as well as other respiratory illnesses.

Chin-Hong went even further. “Bottom line,” he wrote in his email, “everyone should wear a mask and stop debating who might have [the virus] and who doesn’t.”

Marr also explained that cloth masks are better at outward protection — blocking droplets released by the wearer — than inward protection — blocking the wearer from breathing in others’ exhaled droplets.

“The main reason that the masks do better in the outward direction is that the droplets/aerosols released from the wearer’s nose and mouth haven’t had a chance to undergo evaporation and shrinkage before they hit the mask,” wrote Marr. “It’s easier for the fabric to block the droplets/aerosols when they’re larger rather than after they have had a chance to shrink while they’re traveling through the air.”

So, the image is also right when it implies there is less risk of transmission of the disease if a COVID-positive person wears a mask.

“In terms of public health messaging, it’s giving the right message. It just might be overly exact in terms of the relative risk,” said Lover. “As a rule of thumb, the more people wearing masks, the better it is for population health.”

Public health experts urge widespread use of masks because those with COVID-19 can often be asymptomatic or pre-symptomatic — meaning they may be unaware they have the disease, but could still spread it. Wearing a mask could interfere with that spread.

Our Ruling

A viral social media image claims to show “contagion probabilities” in different scenarios depending on whether masks are worn.

Experts agreed the image does convey an idea that is right: Wearing a mask is likely to interfere with the spread of COVID-19.

But although this message has a hint of accuracy, the image leaves out important details and context, namely a source for the contagion probabilities it seeks to illustrate. Experts said evidence for those specific probabilities doesn’t exist.

We rate it Mostly False.

Social Media Image About Mask Efficacy Right In Sentiment, But Percentages Are ‘Bonkers’ published first on https://nootropicspowdersupplier.tumblr.com/




Complexity of human tooth enamel revealed at atomic level in NIH-funded study

Scientists used a combination of advanced microscopy and chemical detection techniques to uncover the structural makeup of human tooth enamel at unprecedented atomic resolution, revealing lattice patterns and unexpected irregularities. The findings could lead to a better understanding of how tooth decay develops and might be prevented. The research was supported in part by the National Institute of Dental and Craniofacial Research (NIDCR) at the National Institutes of Health. The findings appear in Nature.

“This work provides much more detailed information about the atomic makeup of enamel than we previously knew,” said Jason Wan, Ph.D., a program officer at NIDCR. “These findings can broaden our thinking and approach to strengthening teeth against mechanical forces, as well as repairing damage due to erosion and decay.”

Your teeth are remarkably resilient, despite enduring the stress and strain of biting, chewing, and eating for a lifetime. Enamel — the hardest substance in the human body — is largely responsible for this endurance. Its high mineral content gives it strength. Enamel forms the outer covering of teeth and helps prevent tooth decay, or caries.

Tooth decay is one of the most common chronic diseases, affecting up to 90% of children and the vast majority of adults worldwide, according to the World Health Organization. Left untreated, tooth decay can lead to painful abscesses, bone infection, and bone loss.

Tooth decay starts when excess acid in the mouth erodes the enamel covering. Scientists have long sought a more complete picture of enamel’s chemical and mechanical properties at the atomic level to better understand—and potentially prevent or reverse—enamel loss.

To survey enamel at the tiniest scales, researchers use microscopy methods such as scanning transmission electron microscopy (STEM), which directs a beam of electrons through a material to map its atomic makeup.

Impurities such as magnesium showed up as dark distortions (indicated by white arrows) in the atomic lattice of human enamel crystallites. Credit: Paul Smeets, Northwestern University & Berit Goodge, Cornell University

STEM studies have shown that at the nanoscale, enamel comprises tightly bunched oblong crystals that are about 1,000 times narrower than a human hair. These tiny crystallites are made mostly of a calcium- and phosphate-based mineral called hydroxylapatite. STEM studies coupled with chemical detection techniques had hinted at the presence of much smaller amounts of other chemical elements, but enamel’s vulnerability to damage from high-energy electron beams prevented a more thorough analysis at the necessary level of resolution.

To define these minor elements, a team of scientists at Northwestern University, Evanston, Illinois, used an imaging tool called atom probe tomography. By successively removing layers of atoms from a sample, the technique provides a more refined, atom-by-atom view of a substance. The Northwestern group was among the first to use atom probe tomography to probe biological materials, including components of teeth.

“Earlier studies revealed the bulk composition of enamel, which is like knowing the overall makeup of a city in terms of its population,” said senior author Derk Joester, Ph.D., a professor of materials science and engineering at Northwestern. “But it doesn’t tell you how things operate at the local scale in a city block or a single house. Atom probe tomography gave us that more detailed view.”