# The Future of Software Architecture


Revolutionize Your Art with Leonardo AI!

Leonardo AI is revolutionizing artistic creation with its advanced algorithms that transform real-world ideas into stunning masterpieces. This dynamic platform empowers us to design imaginative game assets, including characters, artifacts, landscapes, conceptual visuals, and intricate architectures.

By merging cutting-edge technology with our creative fervor, Leonardo AI enables artists and designers to bring their visions to life. It injects depth and vibrancy into our projects, making it perfect for those looking to elevate their creative endeavors. Discover how this AI-driven toolset offers an unparalleled environment for artistic innovation!

#LeonardoAI #ArtisticInnovation

#Leonardo AI#artistic revolution#creative tools#AI algorithms#game assets#character design#digital art#artistic masterpieces#innovative platform#creative projects#landscape design#architecture design#imaginative visuals#artistic innovation#AI creativity#design software#elevate creativity#vibrant art#concept art#technology and art#art community#digital creators#AI art tools#artistic expression#creative empowerment#visual storytelling#art techniques#design inspiration#future of art#unleash creativity

---

Decoding the Digital: From Architecting Systems to Shaping the Future of the Web

🚀 Decoding the Digital! 🚀 Meet [Name], the Principal Systems Architect, Digital Strategy Expert, and creator of HTMP! 🤯 From building robust systems to shaping the future of the web, [Name]'s journey is truly inspiring. Dive into my latest blog post to learn about [his/her/their] insights on digital transformation, systems architecture, and the power of innovation. 💡 #DigitalTransformation #SystemsArchitecture #WebDevelopment #HTMP #Innovation #TechLeadership #FutureOfWork #Technology #SoftwareEngineering #PHP #TechCareers #DigitalStrategy #CodingLife #Inspiration #TechCommunity #WebDev #SoftwareDeveloper ✨

The Architect: Engineering the Backbone of Digital Transformation

Michael Morales isn’t just a Principal Systems Architect; he’s the mastermind behind scalable, secure, and high-performance digital ecosystems. With a track record of designing and implementing mission-critical systems for Fortune 500 companies, cloud platforms, and financial institutions, he blends deep technical expertise with…

#Digital Transformation#Future of Web#HTMP#Innovation#Leadership#PHP#software engineering#Systems Architecture#Technology#Web Development

---

Java is an established programming language and ecosystem that has dominated the software business for many years. According to the TIOBE index, Java was the most popular programming language in 2020 and currently ranks fourth, which keeps it a strong option for bespoke software development.

The key factor in its widespread popularity is its security, which is why it is used in a broad range of disciplines such as big data processing, AI application development, Android app development, Core Java software development, and many more. It provides a large set of tools and libraries, as well as cross-platform interoperability, allowing customers to build the applications they need.

#java development#future of java#java trends#java developer for hire#Java programming language for cloud-native development#Java frameworks for microservices architecture#Java ecosystem tools for DevOps automation#Java web development trends in 2023#Agile Java development with DevOps best practices#software development company

---

MAGA as sexual politics

Anyone noticed that with the recent discussion of tariffs and bringing back 'good' jobs to the U.S., that the kinds of jobs involved aren't perfume supplies, customer service or architectural software? They are factory jobs, manufacturing jobs, and all clearly identified as MALE jobs. The underlying current to MAGA is not just nostalgic for an era that never was, it's not just conveniently leaving out that union membership was the source of such wealth re-distribution that led to the rapid rise of the middle class, but it's specifically aimed at increasing the value of male labor in relation to more educated but female labor. At one point, when men dominated higher education, women's labor was worth less because we lacked education, but now that women outnumber men in higher education, well... it turns out education isn't so important after all and shouldn't be so highly valued compared to the honest, hardworking, muscular labor of a MAGA man.

MAGA is really about making male labor, and thus male earning power and negotiating power in sexual relations, the future industrial focus of the country.

---

A-Café (Update #25) - Community Discussion

Good morning everyone! I know it's been a while since I've posted, but I'm finally back with another community update. In the first part, I'll be giving a brief overview of where we're at in terms of project progress. Then, in the second half, we'll discuss a new development in app accessibility.

Without further ado, let's begin!

1) Where are we at in the project currently?

A similar question was asked in the A-Café discord recently, so I figured I'd include my response here as well:

Right now we’re reworking the design of A-Café, both visually and architecturally. The initial planning and design phase of the project wasn’t done very thoroughly due to my inexperience, so now that I’m jumping back into things I want to ensure we have a solid prototype for usability testing. For us, that means we’ve recently done/are doing a few things:

- analyzing results from the old 2022 user survey (done)
- discussing new ideas for features A-Café users might want, based on the 2022 user survey
- reevaluating old ideas from the previous app design
- making a new mock-up for usability testing

Once the mock-up is finished, I plan on doing internal testing first before asking for volunteer testers publicly (the process for which will be detailed in an upcoming community update).

2) Will A-Café be available for iOS and Android devices?

Yes! In fact, the first downloadable version of A-Café may no longer be so device-specific.

What do I mean by that? Well, in the beginning, the plan for A-Café was to make two different versions of the same app (iOS and Android). I initially chose to do this because device-specific apps are made with that device's unique hardware/software in mind; thus, they have the potential to provide a fully optimized user experience.

However, I've since realized that focusing on device-specific development too soon may not be the right choice for our project.

Yes, top-notch app performance would be a big bonus. But by focusing purely on iOS and Android devices for the initial launch, we'd be limiting our audience testing to mobile users only. Laptop and desktop users, for example, would have to wait until a different version of the app was released (which is not ideal in terms of accessibility).

Therefore, I've recently decided to explore Progressive Web App development instead.

[What is a Progressive Web App?]

A Progressive Web App (or PWA) is "a type of web app that can operate both as a web page and mobile app on any device" (alokai.com).

Much like a regular mobile app, a PWA can be found through the internet and added to your phone's home screen as a clickable icon. PWAs can also work offline and use device-specific features such as push notifications.

Additionally, because PWAs are web-based applications, they can be accessed by nearly any device with a web browser. That means regardless of whether you have an iOS or Android device, you'd be able to access the same app from the same codebase.
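To make the offline point a little more concrete, here is a minimal sketch of the "cache-first" strategy many PWAs use: answer from a local cache when possible, and only go to the network on a miss. This is not A-Café's actual code. In a real PWA this logic would live in a service worker's `fetch` event handler, with the browser's Cache Storage API (`caches.match`, `cache.put`) in place of the `Map`; the `CacheFirstStore` class and the synchronous `Fetcher` type below are hypothetical simplifications so the idea can run outside a browser.

```typescript
// Hypothetical network fetcher: returns a response body, or throws when offline.
type Fetcher = (url: string) => string;

// Cache-first lookup: serve from the local cache when possible,
// otherwise ask the network and remember the result for next time.
class CacheFirstStore {
  private readonly cache = new Map<string, string>();

  constructor(private readonly network: Fetcher) {}

  get(url: string): string {
    const cached = this.cache.get(url);
    if (cached !== undefined) {
      return cached; // works even with no connection
    }
    const body = this.network(url); // throws if offline and not yet cached
    this.cache.set(url, body);
    return body;
  }
}
```

Once a page has been visited online, later reads of that page never touch the network, which is what lets an installed PWA keep working offline; pages that were never cached still need a connection.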

In the end, a PWA version of A-Café should look and act similarly to an iOS/Android app, while also being accessible to various devices. And, because there is only one codebase, PWA development tends to be faster and more cost-effective than making different versions of the same app.

To be clear, I haven't abandoned the idea of device-specific development entirely. We could launch iOS/Android versions of A-Café in the future if demand or revenue end up being high enough. But as of right now, I don't believe doing so is wise.

[What Does this Mean for me as a User?]

In terms of app installation and user experience, hopefully not much will change. I'd like to have A-Café available on both the Apple App Store and Google Play Store.

There will also be the option of searching for A-Café via your device's web browser, and then installing it on your home screen (iOS devices can only do so using Safari). We will likely rely on this method until we can comfortably ensure user access to A-Café on the Apple App Store and Google Play Store.

-------------

And that's it for now! Thank you for reading this latest community update. For more insight into the development process, consider joining the A-Café discord. If you have any questions or concerns regarding this post, we would love to hear your input in the comments below. See you later!

---

Write One to Throw Away?

If you're in the software industry long enough, you'll hear this advice eventually. There's an infamous Catch-22 to writing code:

You don't deeply understand the problem (or its solution space) until you've written a solution.

The first solution you write will have none of that hindsight to help you.

So it naturally shakes out that you have to write it at least one time before you can write it well, unless you're stricken with exceptional luck. And the minimum number of attempts you will need is two: one to throw away, and a second attempt to keep.

It's just math. It's just logic. Write one to throw away. It's got the world's easiest proof. You'd have to be some kind of idiot to argue with it!

Okay, hear me out...

As you work on bigger and older projects, you will continuously be confronted with a reality: requirements are an input that never stops changing. You can make the right tool for the job today, but the job will change tomorrow. Is your pride and joy still the right tool?

If you're like most developers, your first stage of grief will be denial. Surely, if we just anticipate all the futures that could possibly happen, we can write code that's ready to be extended in any possible direction later! We're basically wizards, after all - this feels like it should work.

So you try it. You briefly feel safe in the corrosive sandstorm of time. Your code feels future proof, right up until the future arrives with a demand you didn't anticipate, which is actually so much harder to write thanks to your premature abstractions. Welcome to the anger stage. The YAGNI acronym (you ain't gonna need it) finally registers in your brain for what it is - a bitter pill, hard-won but true.

But we're wizards! We bargain with our interpreters and parsers and borrow checkers. Surely we can make our software immortal with the right burnt offerings. We can use TDD! Oops, now our tests are their own giant maintenance burden locking us into inflexible implementation decisions. Static analysis and refactoring tooling! Huh, well that made life support easier, but couldn't fix fundamental problems of approach, architecture and design (many of which only came into existence when the requirements changed).

As the sun rises and sets on entire ISAs, the cold gloom eventually sets in. There is no such thing as immortal software. Even the software that appears immortal is usually a vortex of continuous human labor and editing. The Linux kernel is constantly dying by pieces and being reborn in equal or greater measure - it feels great to get a patch merged, but your name might not be in the git blame at all in two years' time.

I want to talk about what happens when your head suddenly jumps up in astonished clarity and you finally accept and embrace that fact: holy shit, there is no immortal software!

Silicon is sand

... and we're in the mandala business, baby.

I advocate that you write every copy to be thrown away. Every single one. I'm not kidding.

Maybe it'll be good enough (read adequacy, not perfection) that you never end up needing to replace your code in practice. Maybe you'll replace it every couple years as your traffic scales. But the only sure thing in life is that your code will have an expiration date, and every choice you make in acknowledgement of that mortality will make your life better.

People are often hesitant to throw out working code because it represents years of accumulated knowledge in real-world use. You'd have to be a fool to waste that knowledge, right? Okay. Do your comments actually instruct the reader about these lessons? Does secondary documentation explain why decisions were made, not just what those decisions were? Are you linking to an issue tracker (that's still accessible to your team)? If you're not answering yes to these types of questions, you have no knowledge in your code. It is a black hole that consumed and irreparably transformed knowledge for ten years. It is one of the worst liabilities you could possibly have. Don't be proud of that ship! You'll have nowhere to go when it sinks, and you'll go down with it.

When you write code with the future rewriter - not merely maintainer - in mind, you'll find it doesn't need to be replaced as often. That sounds ironic, and it is, but it's also true. Your code will be educational enough for onboarding new people (who would rewrite what they don't understand anyways). It will document its own assumptions (so you can tell when you need a full rewrite, or just something partial that feels more like a modification). It will provide a more useful guiding light for component size than any "do one thing well" handwave. And when the day finally comes, when a rewrite is truly necessary, you'll have all the knowledge you need to do it. In the meantime, you've given yourself permission to shit out something sloppy that might never need replacing, but will teach you a lot about the problem domain.

This is independent of things like test suite methodology, but it does provide a useful sieve for thinking about which tests you do and don't want. The right tests will improve your mobility! The wrong tests will set your feet in cement. "Does this make a rewrite easier?" is a very good, very concrete heuristic for telling the two apart.
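One way to picture that heuristic: a test that only exercises the public contract keeps passing when the implementation is thrown away and rewritten, while a test that pins internal details breaks even when nothing visible changed. A hypothetical TypeScript sketch (the `formatPriceV1`/`formatPriceV2` functions are invented for illustration and assume non-negative integer cents):

```typescript
type PriceFormatter = (cents: number) => string;

// First attempt: build the string by hand.
const formatPriceV1: PriceFormatter = (cents) => {
  const dollars = Math.floor(cents / 100);
  const rest = String(cents % 100).padStart(2, "0");
  return `$${dollars}.${rest}`;
};

// The rewrite: a different implementation honoring the same contract.
const formatPriceV2: PriceFormatter = (cents) => `$${(cents / 100).toFixed(2)}`;

// Behavior-level test: it touches only inputs and outputs, so the same
// test checks both versions and survives the rewrite unchanged.
function checkPriceContract(format: PriceFormatter): void {
  const cases: Array<[number, string]> = [
    [0, "$0.00"],
    [5, "$0.05"],
    [1999, "$19.99"],
    [100000, "$1000.00"],
  ];
  for (const [cents, expected] of cases) {
    const actual = format(cents);
    if (actual !== expected) {
      throw new Error(`format(${cents}) = ${actual}, expected ${expected}`);
    }
  }
}
```

A test that instead asserted that the formatter calls `padStart` would fail the moment V2 replaced V1, even though no caller could tell the difference; that is the cement kind.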

Sorry for long-posting, btw. I used this space to work through some hazy ideas and sharpen them for myself, particularly because I'm looking at getting into language design and implementation in the near future. Maybe at some future date, I'll rewrite it shorter and clearer.

TL;DR:

Every LOC you write will probably eventually be disposed or replaced. Optimize for that, and achieve Zen.

---

If you know anyone who writes music, today has probably been a very crappy day for them.

Finale, one of the most dominant programs for music notation for the past 35 years, is coming to an end. They’re no longer updating it or allowing people to purchase it, and it won’t be possible to authorize on new devices or if you upgrade your OS.

I’ve personally been using Finale to write music for about 20 years (since middle school!). It’s not something that I depend on for money, and my work should be compatible with other programs, so I’ll be fine, but this is very, very bad news for lots of people who depend on this software for their livelihood.


The shittiest thing is that this was preventable. From a comment on Finale’s post:

As a former Tech Lead on Finale (2019-2021) I can tell you this future was avoidable. Those millions of lines of code were old and crufty, and myself and others recognized something had to be done. So we created a plan to modernize the code base, focusing on making it easier to deliver the next few rounds of features. I encouraged product leadership to put together a feature roadmap so our team could identify where the modernization effort should be focused.

We had a high level architecture roadmap, and a low level strategy to modernize basic technologies to facilitate more precise unit testing. The plan was to create smart interfaces in the code to allow swapping out old UI architecture for a more modern, reliable, and better maintained toolset that would grow with us rather than against us.

But in the end it became clear support wasn’t coming from upper management for this effort.

I’m sad to see Finale end this way.
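The "smart interfaces" described in that comment are a familiar pattern: core logic talks to an interface rather than to a concrete UI toolkit, so the toolkit can be swapped out and the logic unit-tested in isolation. A minimal TypeScript sketch under that assumption; `ScoreView`, `LegacyView`, `ModernView`, and `layoutMeasure` are hypothetical names and say nothing about how Finale's actual codebase was structured.

```typescript
// Hypothetical seam between notation logic and whatever UI toolkit draws it.
interface ScoreView {
  drawNote(pitch: string, beat: number): void;
  render(): string;
}

// Stand-in for the old, toolkit-bound UI code.
class LegacyView implements ScoreView {
  private readonly notes: string[] = [];
  drawNote(pitch: string, beat: number): void {
    this.notes.push(`${pitch}@${beat}`);
  }
  render(): string {
    return `legacy[${this.notes.join(" ")}]`;
  }
}

// Stand-in for the replacement toolkit: same interface, different internals.
class ModernView implements ScoreView {
  private readonly notes: Array<{ pitch: string; beat: number }> = [];
  drawNote(pitch: string, beat: number): void {
    this.notes.push({ pitch, beat });
  }
  render(): string {
    return JSON.stringify(this.notes);
  }
}

// Notation logic depends only on the interface, so either view (or a
// test double) can be plugged in without touching this function.
function layoutMeasure(view: ScoreView, pitches: string[]): string {
  pitches.forEach((pitch, i) => view.drawNote(pitch, i + 1));
  return view.render();
}
```

Because `layoutMeasure` never names a concrete view, migrating the UI becomes a matter of writing a new `ScoreView` implementation rather than editing the notation logic itself.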

Finale could also allow people who own the software to move it to their new devices in the future, but Capitalism. It’s a pointless corporate IP decision that only hurts users.

There are three main options for those of us who are having to switch: Dorico, MuseScore, or Sibelius.

Sibelius has been Finale’s main competitor for as long as I can remember. It currently runs on a subscription model (ew). The programs are about equal in terms of their capabilities, though I’ve heard Finale has more options for experimental notation. (I’ve used both; Finale worked better for my workflow, but that’s probably just because I grew up using it.)

Dorico is the hip new kid and I’d personally been considering switching for quite a while, but it’s ungodly expensive (about twice what Finale cost at full price). Thankfully, they are allowing current Finale users to purchase at a price comparable (well, still 50% higher) to what Finale used to cost with the educator discount. It apparently has a very steep learning curve at first, though it is probably the best option for experimental notation.

MuseScore is open source, which is awesome! But it also has the most limitations for people who write using experimental notation.

I haven’t used MuseScore or Dorico and will probably end up switching to one of those, but it’s also not an urgent matter for me. Keep your musician friends in your thoughts; it’s going to be a rough road ahead if they used Finale.

#finale#sibelius#musescore#dorico#music notation#music notation software#the end of finale#fuck capitalism#musician#composer#songwriter

---

B-2 Gets Big Upgrade with New Open Mission Systems Capability

July 18, 2024 | By John A. Tirpak

The B-2 Spirit stealth bomber has been upgraded with a new open mission systems (OMS) software capability and other improvements to keep it relevant and credible until it’s succeeded by the B-21 Raider, Northrop Grumman announced. The changes accelerate the rate at which new weapons can be added to the B-2, allow it to accept constant software updates, and adapt it to changing conditions.

“The B-2 program recently achieved a major milestone by providing the bomber with its first fieldable, agile integrated functional capability called Spirit Realm 1 (SR 1),” the company said in a release. It announced the upgrade going operational on July 17, the 35th anniversary of the B-2’s first flight.

SR 1 was developed inside the Spirit Realm software factory codeveloped by the Air Force and Northrop to facilitate software improvements for the B-2. “Open mission systems” means that the aircraft has a non-proprietary software architecture that simplifies software refresh and enhances interoperability with other systems.

“SR 1 provides mission-critical capability upgrades to the communications and weapons systems via an open mission systems architecture, directly enhancing combat capability and allowing the fleet to initiate a new phase of agile software releases,” Northrop said in its release.

The system is intended to deliver problem-free software on the first go and, should problems arise, to correct software issues much earlier in the process.

The SR 1 was “fully developed inside the B-2 Spirit Realm software factory that was established through a partnership with Air Force Global Strike Command and the B-2 Systems Program Office,” Northrop said.

The Spirit Realm software factory came into being less than two years ago, with four goals: to reduce flight test risk and testing time through high-fidelity ground testing; to capture more data test points through targeted upgrades; to improve the B-2’s functional capabilities through more frequent, automated testing; and to facilitate more capability upgrades to the jet.

The Air Force said B-2 software updates which used to take two years can now be implemented in less than three months.

In addition to B61 or B83 nuclear weapons, the B-2 can carry a large number of precision-guided conventional munitions. However, the Air Force is preparing to introduce a slate of new weapons that will require near-constant target updates and the ability to integrate with USAF’s evolving long-range kill chain. A quicker process for integrating these new weapons with the B-2’s onboard communications, navigation, and sensor systems was needed.

The upgrade also includes improved displays, flight hardware and other enhancements to the B-2’s survivability, Northrop said.

“We are rapidly fielding capabilities with zero software defects through the software factory development ecosystem and further enhancing the B-2 fleet’s mission effectiveness,” said Jerry McBrearty, Northrop’s acting B-2 program manager.

The upgrade makes the B-2 the first legacy nuclear weapons platform “to utilize the Department of Defense’s DevSecOps [development, security, and operations] processes and digital toolsets,” it added.

The software factory approach accelerates adding new and future weapons to the stealth bomber, and thus improves deterrence, said Air Force Col. Frank Marino, senior materiel leader for the B-2.

Unlike its younger stablemate, the B-21 Raider, the B-2 was not designed using digital methods, but the SR 1 leverages digital technology “to design, manage, build and test B-2 software more efficiently than ever before,” the company said.

The digital tools can also link with those developed for other legacy systems to accomplish “more rapid testing and fielding and help identify and fix potential risks earlier in the software development process.”

Following two crashes in recent years, the stealthy B-2 fleet comprises 19 aircraft, which are the only penetrating aircraft in the Air Force’s bomber fleet until the first B-21s are declared to have achieved initial operational capability at Ellsworth Air Force Base, S.D. A timeline for IOC has not been disclosed.

The B-2 is a stealthy, long-range, penetrating nuclear and conventional strike bomber. It is based on a flying wing design combining low observability (LO) with high aerodynamic efficiency. The aircraft’s blended fuselage/wing holds two weapons bays capable of carrying nearly 60,000 lb in various combinations.

Spirit entered combat during Allied Force on March 24, 1999, striking Serbian targets. Production was completed in three blocks, and all aircraft were upgraded to Block 30 standard with AESA radar. Production was limited to 21 aircraft due to cost, and a single B-2 was subsequently lost in a crash at Andersen, Feb. 23, 2008.

Modernization is focused on safeguarding the B-2A’s penetrating strike capability in high-end threat environments and integrating advanced weapons.

The B-2 achieved a major milestone in 2022 with the integration of a Radar Aided Targeting System (RATS), enabling delivery of the modernized B61-12 precision-guided thermonuclear freefall weapon. RATS uses the aircraft’s radar to guide the weapon in GPS-denied conditions, while additional Flex Strike upgrades feed GPS data to weapons prerelease to thwart jamming. A B-2A successfully dropped an inert B61-12 using RATS on June 14, 2022, and successfully employed the longer-range JASSM-ER cruise missile in a test launch last December.

Ongoing upgrades include replacing the primary cockpit displays, the Adaptable Communications Suite (ACS) to provide Link 16-based jam-resistant in-flight retasking, advanced IFF, crash-survivable data recorders, and weapons integration. USAF is also working to enhance the fleet’s maintainability with LO signature improvements to coatings, materials, and radar-absorptive structures such as the radome and engine inlets/exhausts.

Two B-2s were damaged in separate landing accidents at Whiteman on Sept. 14, 2021, and Dec. 10, 2022, the latter prompting an indefinite fleetwide stand-down until May 18, 2023. USAF plans to retire the fleet once the B-21 Raider enters service in sufficient numbers around 2032.

Contractors: Northrop Grumman; Boeing; Vought.

First Flight: July 17, 1989.

Delivered: December 1993-December 1997.

IOC: April 1997, Whiteman AFB, Mo.

Production: 21.

Inventory: 20.

Operator: AFGSC, AFMC, ANG (associate).

Aircraft Location: Edwards AFB, Calif.; Whiteman AFB, Mo.

Active Variant: B-2A. Production aircraft upgraded to Block 30 standards.

Dimensions: Span 172 ft, length 69 ft, height 17 ft.

Weight: Max T-O 336,500 lb.

Power Plant: Four GE Aviation F118-GE-100 turbofans, each 17,300 lb thrust.

Performance: Speed high subsonic, range 6,900 miles (further with air refueling).

Ceiling: 50,000 ft.

Armament: Nuclear: 16 B61-7, B61-12, B83, or eight B61-11 bombs (on rotary launchers). Conventional: 80 Mk 62 (500-lb) sea mines, 80 Mk 82 (500-lb) bombs, 80 GBU-38 JDAMs, or 34 CBU-87/89 munitions (on rack assemblies); or 16 GBU-31 JDAMs, 16 Mk 84 (2,000-lb) bombs, 16 AGM-154 JSOWs, 16 AGM-158 JASSMs, or eight GBU-28 LGBs.

Accommodation: Two pilots on ACES II zero/zero ejection seats.

---

New data model paves way for seamless collaboration among US and international astronomy institutions

Software engineers have been hard at work to establish a common language for a global conversation. The topic—revealing the mysteries of the universe. The U.S. National Science Foundation National Radio Astronomy Observatory (NSF NRAO) has been collaborating with U.S. and international astronomy institutions to establish a new open-source, standardized format for processing radio astronomical data, enabling interoperability between scientific institutions worldwide.

When telescopes are observing the universe, they collect vast amounts of data—for hours, months, even years at a time, depending on what they are studying. Combining data from different telescopes is especially useful to astronomers, to see different parts of the sky, or to observe the targets they are studying in more detail, or at different wavelengths. Each instrument has its own strengths, based on its location and capabilities.

"By setting this international standard, NRAO is taking a leadership role in ensuring that our global partners can efficiently utilize and share astronomical data," said Jan-Willem Steeb, the technical lead of the new data processing program at the NSF NRAO. "This foundational work is crucial as we prepare for the immense data volumes anticipated from projects like the Wideband Sensitivity Upgrade to the Atacama Large Millimeter/submillimeter Array and the Square Kilometer Array Observatory in Australia and South Africa."

The new data model establishes a foundation for seamless data sharing and processing across various radio telescope platforms, both current and future.

International astronomy institutions collaborating with the NSF NRAO on this process include the Square Kilometer Array Observatory (SKAO), the South African Radio Astronomy Observatory (SARAO), the European Southern Observatory (ESO), the National Astronomical Observatory of Japan (NAOJ), and the Joint Institute for Very Long Baseline Interferometry European Research Infrastructure Consortium (JIVE).

The new data model was tested with example datasets from approximately 10 different instruments, including existing telescopes like the Australian Square Kilometer Array Pathfinder and simulated data from proposed future instruments like the NSF NRAO's Next Generation Very Large Array. This broader collaboration ensures the model meets diverse needs across the global astronomy community.

Extensive testing completed throughout this process ensures compatibility and functionality across a wide range of instruments. By addressing these aspects, the new data model establishes a more robust, flexible, and future-proof foundation for data sharing and processing in radio astronomy, significantly improving upon historical models.

"The new model is designed to address the limitations of aging models, in use for over 30 years, and created when computing capabilities were vastly different," adds Jeff Kern, who leads software development for the NSF NRAO.

"The new model updates the data architecture to align with current and future computing needs, and is built to handle the massive data volumes expected from next-generation instruments. It will be scalable, which ensures the model can cope with the exponential growth in data from future developments in radio telescopes."

As part of this initiative, the NSF NRAO plans to release additional materials, including guides for various instruments and example datasets from multiple international partners.

"The new data model is completely open-source and integrated into the Python ecosystem, making it easily accessible and usable by the broader scientific community," explains Steeb. "Our project promotes accessibility and ease of use, which we hope will encourage widespread adoption and ongoing development."

---

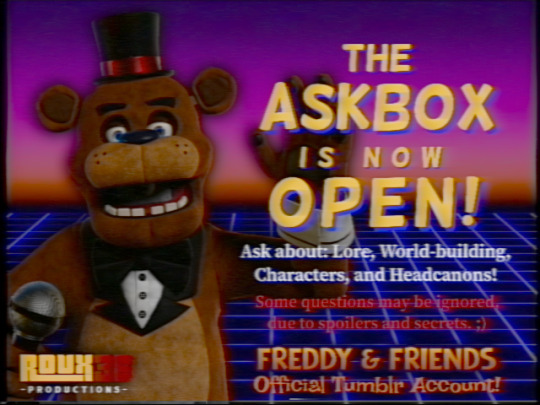

The Freddy & Friends Askbox is now open!

——————————————

An introduction to Freddy & Friends:

Freddy & Friends is an AU created by Roux36 Productions set in the Five Nights at Freddy’s IP.

As opposed to focusing on a single “what if?” question for the AU, F&F is a rewrite of the FNAF story in its entirety, constructed from the ground up to tell a more realistic, character-driven take on the original story.

——————————————

What asks will I answer? (Examples provided)

Lore (Ex: How do the spirits work?)

World-Building (Ex: Who made the pizza recipes at Freddy’s?)

Headcanons (Ex: Who’s Jeremy’s favorite music artist?)

Art requests? (Not sure about this one yet… but maybe?)

Character Asks!

Ask a question from within the Freddy & Friends universe, and it’ll be answered by the creator of the Fazbear Franchise, HENRY ███!!!

(Preface by saying “Dear Henry,”)

——————————————

How much thought did you put into this?

It’s not that I’m a massive nerd, but it’s just that I’m actually a massive nerd.

Animatronics are loosely grounded in real-world mechanical engineering, giving them designs plausible enough to have existed in the 1980s (down to the very components that make them function).

The restaurant itself has regulations and procedures to manage the animatronics during the day (this varies depending on the restaurant).

The restaurant layouts themselves have been redesigned to be more consistent with the rules of architecture.

The animatronic characters themselves have their own lore and personalities within the cartoon world.

I have a full list of employees who worked at both Afton Robotics and Fazbear Entertainment.

The rules of the spirits are grounded in real-world cultural beliefs, as well as typical ghost hunting traditions.

No character is written to be two-dimensional. The characters don’t just do things for the plot; they do them because it’s in character for them to do so. They’re not just ghost children haunting the animatronics. They’re also human.

———————————————

What IS canon?

Basic media taken into consideration:

Five Nights at Freddy’s

Five Nights at Freddy’s 2

Five Nights at Freddy’s 3

Five Nights at Freddy’s 4

Five Nights at Freddy’s: The Silver Eyes

FNAF World (Surprisingly)

Five Nights at Freddy’s: Sister Location

Five Nights at Freddy’s: The Movie

Details Only taken from:

Freddy Fazbear’s Pizzeria Simulator

Five Nights at Freddy’s: Help Wanted

Five Nights at Freddy’s: Security Breach

The ideas from these media are integral to the story of Freddy & Friends. However, their stories have been changed so drastically that canon knowledge is considered unreliable when talking about the Freddy & Friends AU. Some things remain the same, but I recommend NOT using knowledge of canon game/book/movie events as a reference unless I have stated otherwise.

What’s the same?:

Mike and Abby Schmidt’s characters have remained almost exactly the same as in the movie. (Movie)

Michael Brooks is Golden Freddy (Novels)

Elizabeth Afton being killed by Circus Baby (Games)

The Bite of ‘83 (Games)

The Bite of ‘87 (Games)

Bonnie is blue

What’s changed?:

Note: not all changes are mentioned here, due to spoilers. They’re spoilers because I will elaborate on them in future media and short stories.

Michael Afton does NOT exist—replaced by Fritz Afton

FNAF 4 Crying Child becomes the Puppet

FNAF 4 Bullies are the Missing Children

FNAF 4 Bullies are NOT bullies

The haunted animatronics have personalities, and are capable of verbal speech.

Sammy (Charlie’s twin brother) was killed instead of Charlie

Charlie is NOT an android

Very few employees (including night guards) actually died at Freddy’s

The animatronics are limited by the technology of the 1980s, and therefore have software limitations that aren’t present in the games

Circus Baby’s Pizza World has been renamed to Circus Kingdom Pizza World

The names of the Funtimes have been changed to align with the re-theme

The Funtimes were NOT built with the intention of kidnapping children

The Funtimes are haunted

The Scooper does not exist

The FNAF 4 gameplay is a dream, and not child experimentation. (It is something more than that, but I will not elaborate further, due to spoilers. ;))

The Toy animatronics have been renamed to the Junior animatronics

The Junior location is Freddy Jr’s Pizzeria

The individual Junior characters have in-universe names beyond “Junior Freddy” and “Junior Bonnie”, etc.

The Juniors are haunted

There is no such thing as “Remnant”.

Vanessa Shelly is NOT William Afton’s Daughter

Steve Raglan and William Afton are two different people

Removed Herobrine

(Aforementioned name changes):

Circus Baby - Circus Sadie, AKA Sadie the Circus Princess

Funtime Freddy - Freddie the Ringmaster

Funtime Foxy - Foxy the Flying Fox

Toy Freddy - Freddy Fazbear Jr.

Toy Bonnie - Riley Rabbit

Toy Chica - Penny Pecks

Toy Foxy/Mangle - Bridget the Fox

——————————————

Thank you!

If you made it to the end, I wanna thank you for showing interest in this AU! I’ve been working on it ever since I was 11 years old, and I’ve just turned 20. It's been a long road with at least five rewrites and twice as many redesigns!

More Freddy & Friends content will be coming soon, in the form of:

A webcomic for the main narrative

Cinematic content

A VHS series

Short stories

Audiobooks for said short stories

A website to be!

The askbox is now open! There is much to tell, so ask away!

- Roux

#freddy & friends#freddy and friends#fnaf#five nights at freddy's#fnaf fanart#fnaf au#fnaf blender#blender#blender 3d#animatronic#fnaf movie#freddy fazbear#bonnie the bunny#chica the chicken#foxy the pirate#fredbear#the puppet#mike schmidt#qna#lore#fnaf lore#f&f lore#world building#headcanon#fnaf fanfic#short fiction#fiction#writers on tumblr#candy cadet#short story

39 notes


Text

ugh my room looks nice when I remove the MULTIPLE LAUNDRY BASKETS OF CRAP from my bookshelves, leaving only books. However! That! Is my crap!

That is a significant capital investment IN CRAP. Old mic, controllers, flight sticks, spare keyboards, bags of pokemon figurines, batteries, stickers, post-its, CRAP. Blank CDs, ripped CDs. Stores of emulation in potential laid in by past mbl against future hardship. HDDs.

I still read and daydream, but I MAKE videogame( asset)s, these days, I don’t have the same endless well of need to play them. Plus old games aren’t that great anyways—I’m glad to HAVE played them and been shaped by them, but if I’m really in trouble someday I don’t need to be able to emulate a PS2. Indie games and phone games (VRChat itself will eventually even be a damn phone game)—I should probably shed a lot of this hardware.

The REAL thing that should be done is properly install all the software for emulation on my backup pc, get all my backup hdds in place, get it all working, and then discard the old stuff. But that’s so much. I use that neural architecture for Blender and Unity now, not other teams-of-people’s lifeworks.

Other people can archive the video games… I think I should discard my old hardware that’s just for video games. I will always be able to turn to video games in better times, but it’s not worth keeping unusable stores of physical crap that I will have to leave behind if, e.g., I become homeless, versus stolen comics and an ebook library that just need any screen. I don’t NEED this STUFF. I’m not into weird enough stuff to personally need to hold on to piles of it. I can MAKE my OWN stuff. Stuff is other people. Maybe the real stuff is the people we meet along the way.

19 notes


Text

When "Clean" Code is Hard to Read

Never mind that "clean" code can be slow.

Off the top of my head, I could give you several examples of software projects that were deliberately designed as didactic examples for beginners, yet are unreasonably hard to read and understand, for beginners most of all.

Some projects are like that because they are the equivalent of GNU Hello World: They are using all the bells and whistles and best practices and design patterns and architecture and software development ceremony to demonstrate how software engineering is supposed to work in the big leagues. There is a lot of validity to that idea. Not every project needs microservices, load balancing, RDBMS and a worker queue, but a project that does need all those things might not be a good "hello, world" example. Not every project needs continuous integration, acceptance testing, unit tests, integration tests, code reviews, an official branching and merging procedure document, and test coverage metrics. Some projects can just be two people who collaborate via git and push to master, with one shell script to run the tests and one shell script to build or deploy the application.

So what about those other projects that aren't like GNU Hello World?

There are projects out there that go out of their way to make the code simple and well-factored to be easier for beginners to grasp, and they fail spectacularly. Instead of having a main() that reads input, does things, and prints the result, these projects define an object-oriented framework. The main file loads the framework, the framework calls the CLI argument parser, which then calls the interactive input reader, which then calls the business logic. All this complexity happens in the name of writing short, easy to understand functions and classes.

None of those things - the parser, the interactive part, the calculation - are in the same file, module, or even directory. They are all strewn about in a large directory hierarchy, and if you don't have an IDE configured to go to the definition of a class with a shortcut, you'll have trouble figuring out what is happening, how, and where.
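To make the contrast concrete, here is a minimal Python sketch. All names are hypothetical and not taken from any specific project: a direct main() that parses, computes, and prints, next to a layered version where the same summation passes through a framework, a CLI parser, an input reader, and a business-logic class. In a real project, each of those classes might live in a different file or directory.

```python
import sys


# Direct version: one function reads the arguments, does the work, and
# produces the output. The whole control flow is visible at a glance.
def main_direct(argv: list[str]) -> str:
    numbers = [float(arg) for arg in argv]
    return f"sum = {sum(numbers)}"


# Layered version (hypothetical classes): the same work, split into small
# pieces that each do almost nothing on their own.
class BusinessLogic:
    def compute(self, numbers: list[float]) -> float:
        return sum(numbers)


class InputReader:
    def __init__(self, logic: BusinessLogic) -> None:
        self.logic = logic

    def read(self, raw: list[str]) -> float:
        return self.logic.compute([float(x) for x in raw])


class CliParser:
    def __init__(self, reader: InputReader) -> None:
        self.reader = reader

    def parse(self, argv: list[str]) -> float:
        return self.reader.read(argv)


class Framework:
    def __init__(self) -> None:
        # The wiring lives here, far away from where the work is done.
        self.parser = CliParser(InputReader(BusinessLogic()))

    def run(self, argv: list[str]) -> str:
        return f"sum = {self.parser.parse(argv)}"


def main_layered(argv: list[str]) -> str:
    return Framework().run(argv)


if __name__ == "__main__":
    print(main_direct(sys.argv[1:]))
```

Both functions return the same string for the same input. The difference is that to find out where the summation happens in the layered version, a reader has to follow four hops of indirection.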

The smaller you make your functions, the less they do individually. They can still do the same amount of work, but in more places. The smaller you make your classes, the more is-a and has-a relationships you have between classes and objects. The result is not Spaghetti Code, but Ravioli Code: Little enclosed bits floating in sauce, with no obvious connections.

Ravioli Code makes it hard to see what the code actually does, how it does it, and where it does it. This is a general problem with code documentation: Do you just document what a function does, do you document how it works, does the documentation include what it should and shouldn't be used for and how to use it? The "how it works" part should be easy to figure out by reading the code, but the more you split up things that don't need splitting up - sometimes over multiple files - the harder you make it to understand what the code actually does just by looking at it.

To put it succinctly: Information hiding and encapsulation can obscure control flow and make it harder to find out how things work.

This is not just a problem for beginner programmers. It's an invisible problem for existing developers and a barrier to entry for new developers, because the existing developers wrote the code and know where everything is. The existing developers also have knowledge about what kinds of types, subclasses, or just special cases exist, might be added in the future, or are out of scope. If there is a limited and known number of cases for a code base to handle, and no plan for downstream users to extend the functionality, then the downside to a "switch" statement is limited, and the upside is the ability to make changes that affect all special cases without the risk of missing a subclass that is hiding somewhere in the code base.

Up until now, I have focused on OOP foundations like polymorphism/encapsulation/inheritance and principles like the single responsibility principle and separation of concerns, mainly because Casey Muratori's video on the performance cost of "Clean Code" and OOP focused on those. I think these problems can occur in the large just as they do in the small: in distributed software architectures, overly abstract types in functional programming, dependency injection, inversion of control, the model/view/controller pattern, client/server architectures, and similar abstractions.

It's not always just performance or readability/discoverability that suffer from certain abstractions and architectural patterns. Adding indirections or extracting certain functions into micro-services can also hamper debugging and error handling. If everything is polymorphic, then everything must either raise and handle the same exceptions, or failure conditions must be dealt with where they arise, and not raised. If an application consists of a part written in a high-level interpreted language like Python, a library written in Rust, and a bunch of external utility programs that are run as child processes, the developer needs to figure out which process to attach the debugger to, and which debugger to attach. And then, the developer must manually step through a method called something like FrameWorkManager.orchestrate_objects() thirty times.

108 notes


Text

How AMD is Leading the Way in AI Development

Introduction

In today's rapidly evolving technological landscape, artificial intelligence (AI) has emerged as a game-changing force across various industries. One company that stands out for its pioneering efforts in AI development is Advanced Micro Devices (AMD). With its innovative technologies and cutting-edge products, AMD is pushing the boundaries of what is possible in the realm of AI. In this article, we will explore how AMD is leading the way in AI development, delving into the company's unique approach, competitive edge over its rivals, and the impact of its advancements on the future of AI.

Competitive Edge: AMD vs Competition

When it comes to AI development, competition among tech giants is fierce. However, AMD has managed to carve out a niche for itself with its distinct offerings. Unlike some of its competitors who focus solely on CPUs or GPUs, AMD has excelled in both areas. The company's commitment to providing high-performance computing solutions tailored for AI workloads has set it apart from the competition.

AMD GPUs

AMD's graphics processing units (GPUs) have been instrumental in driving advancements in AI applications. With their parallel processing capabilities and massive computational power, AMD GPUs are well-suited for training deep learning models and running complex algorithms. This has made them a preferred choice for researchers and developers working on cutting-edge AI projects.

Innovative Technologies of AMD

One of the key factors that have propelled AMD to the forefront of AI development is its relentless focus on innovation. The company has consistently introduced new technologies that cater to the unique demands of AI workloads. From advanced memory architectures to efficient data processing pipelines, AMD's innovations have revolutionized the way AI applications are designed and executed.

AMD and AI

The synergy between AMD and AI is undeniable. By leveraging its expertise in hardware design and optimization, AMD has been able to create products that accelerate AI workloads significantly. Whether it's through specialized accelerators or optimized software frameworks, AMD continues to push the boundaries of what is possible with AI technology.

The Impact of AMD's Advancements

The impact of AMD's advancements in AI development cannot be overstated. By providing researchers and developers with powerful tools and resources, AMD has enabled them to tackle complex problems more efficiently than ever before. From healthcare to finance to autonomous vehicles, the applications of AI powered by AMD technology are limitless.

FAQs About How AMD Leads in AI Development

1. What makes AMD stand out in the field of AI development?

Answer: AMD's commitment to innovation and its holistic approach to hardware design give it a competitive edge over other players in the market.


2. How do AMD GPUs contribute to advancements in AI?

Answer: AMD GPUs offer unparalleled computational power and parallel processing capabilities that are essential for training deep learning models.

3. What role does innovation play in AMD's success in AI development?

Answer: Innovation lies at the core of AMD's strategy, driving the company to introduce groundbreaking technologies tailored for AI workloads.

2 notes


Text

In a world where security is paramount, Java remains a strong platform that keeps improving with every new release. It is a mature programming language with an extensive ecosystem, and its security tools and libraries help developers address security concerns effectively.

Another aspect of Java’s success is its “Write Once, Run Anywhere” principle. Java source code is compiled to bytecode that runs on any platform with a compatible Java Virtual Machine (JVM), so applications don’t need to be recompiled for each target platform. This makes Java a strong choice for cross-platform software development.

Java is a popular choice for a wide range of projects, including AI/ML application development, Android app development, bespoke Java software development, bespoke blockchain development, and many more.

#java development#future of Java#java trends#java developer for hire#Java programming language for cloud-native development#Java frameworks for microservices architecture#Java ecosystem tools for DevOps automation#Java web development trends in 2023#Agile Java development with DevOps best practices#Java Development Unleashed#software development#angular development

0 notes


Text

Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain will play a pivotal role in creating transparent, tamper-proof systems.

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware

5 notes
