#TensorFlow Extended (TFX)


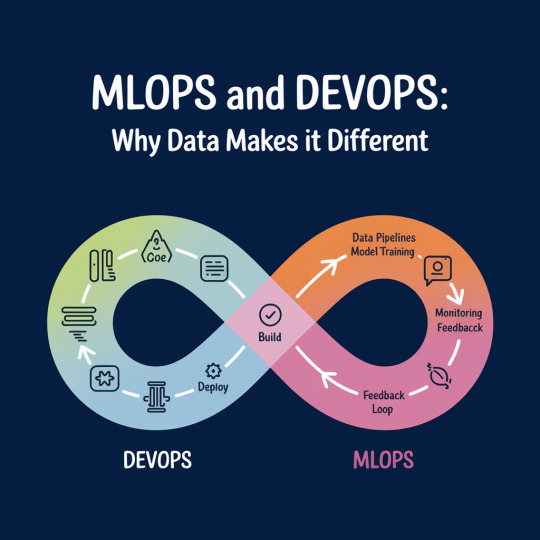

MLOps and DevOps: Why Data Makes It Different

In today’s fast-evolving tech ecosystem, DevOps has become a proven methodology to streamline software delivery, ensure collaboration across teams, and enable continuous deployment. However, when machine learning enters the picture, traditional DevOps processes need a significant shift—this is where MLOps comes into play. While DevOps is focused on code, automation, and systems, MLOps introduces one critical variable: data. And that data changes everything.

To understand this difference, it's essential to explore how DevOps and MLOps operate. DevOps aims to automate the software development lifecycle—from development and testing to deployment and monitoring. It empowers teams to release reliable software faster. Many enterprises today rely on expert DevOps consulting and managed cloud services to help them build resilient, scalable infrastructure and accelerate time to market.

MLOps, on the other hand, integrates data engineering and model operations into this lifecycle. It extends DevOps principles by focusing not just on code, but also on managing datasets, model training, retraining, versioning, and monitoring performance in production. The machine learning pipeline is inherently more experimental and dynamic, which means MLOps needs to accommodate constant changes in data, model behavior, and real-time feedback.

What Makes MLOps Different?

The primary differentiator between DevOps and MLOps is the role of data. In traditional DevOps, code is predictable; once tested, it behaves consistently in production. In MLOps, data drives outcomes—and data is anything but predictable. Shifts in user behavior, noise in incoming data, or even minor feature drift can degrade a model’s performance. Therefore, MLOps must be equipped to detect these changes and retrain models automatically when needed.
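One way to make "detect these changes" concrete is to compare the distribution of a feature at training time with the distribution seen in production. The sketch below uses the Population Stability Index (PSI), a common drift score; the post does not name a specific method, so the choice of PSI, the function names, and the 0.2 threshold (a widely used rule of thumb) are all assumptions for illustration.

```python
import math
from typing import List, Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 when the feature is constant

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the reference range land in the edge buckets.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty buckets.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares = bucket_shares(reference)
    cur_shares = bucket_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))


def needs_retraining(
    reference: Sequence[float],
    current: Sequence[float],
    threshold: float = 0.2,  # assumed rule-of-thumb cutoff, tune per feature
) -> bool:
    """Hypothetical trigger: flag the model for retraining when PSI exceeds the threshold."""
    return population_stability_index(reference, current) > threshold
```

In practice a check like this would run on a schedule for each important feature, and a positive result would kick off the retraining pipeline rather than retrain blindly on every batch.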

Another key difference is model validation. In DevOps, automated tests validate software correctness. In MLOps, validation involves metrics like accuracy, precision, recall, and more, which can evolve as data changes. Hence, while DevOps teams rely heavily on tools like Jenkins or Kubernetes, MLOps professionals use additional tools such as MLflow, TensorFlow Extended (TFX), or Kubeflow to handle the complexities of model deployment and monitoring.
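To show what metric-based validation looks like next to a pass/fail unit test, here is a minimal sketch that computes accuracy, precision, and recall for a binary classifier from scratch. The function name and the convention that label 1 is the positive class are assumptions for illustration; a real project would more likely use a metrics library.

```python
from typing import Dict, Sequence


def classification_metrics(
    y_true: Sequence[int], y_pred: Sequence[int]
) -> Dict[str, float]:
    """Return accuracy, precision, and recall, treating label 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }
```

Unlike a unit test, none of these numbers is simply right or wrong. The team has to decide which thresholds are acceptable, and those thresholds may need revisiting as the data changes.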

As quoted by Andrej Karpathy, former Director of AI at Tesla: “Training a deep neural network is much more like an art than a science. It requires insight, intuition, and a lot of trial and error.” This trial-and-error nature makes MLOps inherently more iterative and experimental.

Example: Real-World Application

Imagine a financial institution using ML models to detect fraudulent transactions. A traditional DevOps pipeline could deploy the detection software. But as fraud patterns change weekly or daily, the ML model must learn from new patterns constantly. This demands a robust MLOps system that can fetch fresh data, retrain the model, validate its accuracy, and redeploy—automatically.
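The fetch, retrain, validate, and redeploy loop described above can be sketched as a single function with a validation gate. Everything here is hypothetical: the callables stand in for whatever data source, training job, scoring routine, and deployment step an organization actually has, and the rule "deploy only if the candidate scores at least as well as production on held-out data" is one reasonable policy, not the only one.

```python
from dataclasses import dataclass
from typing import Any, Callable, Tuple


@dataclass
class RetrainResult:
    deployed: bool
    candidate_score: float
    production_score: float


def retrain_cycle(
    fetch_data: Callable[[], Tuple[Any, Any]],  # returns (training set, holdout set)
    train: Callable[[Any], Any],                # training set -> candidate model
    evaluate: Callable[[Any, Any], float],      # (model, holdout set) -> score
    deploy: Callable[[Any], None],              # pushes a model to production
    production_model: Any,
    min_improvement: float = 0.0,               # assumed margin the candidate must beat
) -> RetrainResult:
    """Run one automated cycle: fetch fresh data, retrain, validate, then maybe deploy."""
    train_set, holdout_set = fetch_data()
    candidate = train(train_set)

    # Score both models on the same fresh holdout data so the comparison is fair.
    candidate_score = evaluate(candidate, holdout_set)
    production_score = evaluate(production_model, holdout_set)

    # Validation gate: never replace production with a worse model.
    should_deploy = candidate_score >= production_score + min_improvement
    if should_deploy:
        deploy(candidate)
    return RetrainResult(should_deploy, candidate_score, production_score)
```

Passing the steps in as callables keeps the gate logic testable without a real training job, and the same function can be triggered on a schedule or by a drift alert.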

This dynamic nature is why integrating agile DevOps practices is crucial. These practices ensure agility and adaptability, allowing teams to respond faster to data drift or model degradation. For organizations striving to innovate through machine learning, combining agile methodologies with MLOps is a game-changer.

The Need for DevOps Transformation in MLOps Adoption

As companies mature digitally, they often undergo a DevOps transformation. For teams building AI-powered products, incorporating MLOps becomes part of that process. It's not enough to deploy software; businesses must ensure that their models remain accurate, ethical, and relevant over time.

MLOps also emphasizes collaboration between data scientists, ML engineers, and operations teams, which can be a cultural challenge. Thus, successful adoption of MLOps often requires not just tools and workflows, but also mindset shifts—similar to what organizations go through during a DevOps transformation.

As Google’s ML Engineer D. Sculley stated: “Machine Learning is the high-interest credit card of technical debt.” This means that without solid MLOps practices, technical debt builds up quickly, making systems fragile and unsustainable.

Conclusion

In summary, while DevOps and MLOps share common goals—automation, reliability, and scalability—data makes MLOps inherently more complex and dynamic. Organizations looking to build and maintain ML-driven products must embrace both DevOps discipline and MLOps flexibility.

To support this journey, many enterprises are now relying on proven DevOps consulting services that evolve with MLOps capabilities. These services provide the expertise and frameworks needed to build, deploy, and monitor intelligent systems at scale.

Ready to enable intelligent automation in your organization? Visit Cloudastra Technology's DevOps as a Service page and discover how our expertise in DevOps and MLOps can help future-proof your technology stack.


How To Perform Sentiment Analysis Using TensorFlow Extended (TFX)?


Automating Machine Learning Workflows in TensorFlow Extended

1. Introduction Brief Explanation and Importance: Automating machine learning (ML) workflows is crucial for efficient model development and deployment. TensorFlow Extended (TFX) simplifies this by providing a framework to define end-to-end ML pipelines, handling data ingestion, preprocessing, model training, evaluation, and serving. This automation reduces manual effort and accelerates ML…


Top Tech Stack for Machine Learning in 2025

Imagine a world where AI models train themselves faster, adapt in real-time, and integrate seamlessly with your business operations. This isn’t a distant future — it’s happening now, and the key lies in choosing the right machine learning tech stack. Whether it’s automating processes, personalizing customer experiences, or optimizing operations, ML is transforming industries across the board. But success in ML depends on more than just algorithms — it requires a well-optimized machine learning tech stack that balances performance, scalability, and efficiency. In 2025, advancements in machine learning frameworks, deep learning libraries, AI development tools, and cloud platforms for ML will play a pivotal role in accelerating AI adoption. Businesses that invest in the right technologies will gain a competitive edge, while those relying on outdated tools risk falling behind.

This guide explores the best machine learning tech stack for 2025, helping you understand the latest tools and their impact on your AI strategy.

Why the Right ML Tech Stack Matters for Businesses

Machine learning is no longer just a research-driven endeavor; it’s a critical business function. Companies across industries — healthcare, retail, finance, manufacturing, and more — are leveraging ML to drive efficiency, gain insights, and enhance customer experiences. However, selecting the right ML tech stack is crucial for success.

Challenges of an Inefficient ML Tech Stack

Many businesses struggle with:

Scalability Issues: As ML models grow in complexity, handling large datasets efficiently becomes a challenge.

Deployment Bottlenecks: Moving models from development to production is often time-consuming and resource-intensive.

Integration Complexities: AI models need to connect seamlessly with existing enterprise systems for real-time decision-making.

High Operational Costs: Cloud and compute resources can become expensive without an optimized infrastructure.

A well-planned machine learning tech stack helps businesses overcome these challenges, enabling faster model development, seamless deployment, and cost-efficient AI solutions.

Top Tech Stack for Machine Learning in 2025

1. Machine Learning Frameworks: The Foundation of AI Innovation

Machine learning frameworks provide the infrastructure for building and training AI models. In 2025, businesses will rely on these top frameworks:

TensorFlow

Google’s TensorFlow remains a leading framework, with GPU acceleration and tight integration with cloud platforms for ML. Its ecosystem includes TensorFlow Extended (TFX) for scalable ML pipelines, which makes it easier for enterprises to move models from experimentation to production.

PyTorch 2.5

Favored for its dynamic computation graph and research-friendly environment, PyTorch’s 2025 update enhances multi-GPU training and introduces real-time AI model optimization. Businesses using computer vision, NLP, and autonomous AI systems will benefit from these improvements.

JAX: The Future of Large-Scale ML

Developed by Google, JAX is gaining popularity for its automatic differentiation and fast linear algebra capabilities. With deep integration into Google Cloud AI, JAX enables enterprises to train ML models at an unbelievable scale.

2. Deep Learning Libraries: Powering AI-Driven Applications

Deep learning libraries enhance model performance and enable businesses to build state-of-the-art AI applications.

Keras 4.0

Keras remains a user-friendly deep learning library, making model development accessible even to non-experts. Its 2025 update features automated hyperparameter tuning and multi-cloud deployment support.

Hugging Face Transformers

For NLP, speech AI, and sentiment analysis, Hugging Face provides pre-trained models that drastically reduce development time. The 2025 update focuses on low-latency inference, making it ideal for businesses deploying real-time AI solutions.

Fast.ai: Making Deep Learning Accessible

Fast.ai simplifies AI development with pre-built models and a high-level API. The 2025 version introduces enhanced support for distributed training and energy-efficient model training techniques.

3. Cloud Platforms for ML: Scaling AI with Ease

Cloud platforms are indispensable for handling large ML workloads, offering pre-configured environments for training, deployment, and monitoring.

Google Vertex AI

Vertex AI provides end-to-end ML operations (MLOps) with features like automated model retraining, bias detection, and real-time monitoring. Businesses using JAX and TensorFlow will benefit from its seamless integration.

AWS SageMaker

Amazon’s SageMaker streamlines ML workflows with one-click model deployment and real-time analytics. The 2025 update introduces serverless inference, reducing costs for AI-driven businesses.

Microsoft Azure Machine Learning

Azure ML provides no-code ML solutions, automated ML pipelines, and AI-powered code recommendations. It’s the ideal choice for enterprises already invested in Microsoft’s ecosystem.

4. AI Development Tools: Managing the ML Lifecycle

Beyond frameworks and cloud platforms, businesses need ML development tools to manage, optimize, and monitor their AI models.

MLflow: Version Control for ML Models

MLflow provides model tracking, experiment logging, and cross-cloud compatibility, reducing vendor lock-in for enterprises.

DataRobot: No-Code AI for Business Users

DataRobot enables business users to build AI models without coding, accelerating AI adoption across non-technical teams.

Kubeflow: Kubernetes for ML

Kubeflow optimizes ML workflows by automating data preprocessing, model training, and inference across multi-cloud environments.

How to Choose the Best Machine Learning Tech Stack for Your Business

With so many options available, how do businesses select the right machine learning tech stack for 2025? Here are key considerations:

Define Your Business Goals

Are you focused on real-time AI, predictive analytics, or deep learning applications? Your ML tech stack should align with your objectives.

Evaluate Scalability

Ensure the stack supports growing datasets, increased model complexity, and multi-cloud deployments.

Assess Integration Capabilities

Your ML tools should integrate seamlessly with your existing enterprise systems, APIs, and cloud infrastructure.

Optimize for Cost and Performance

Balancing cloud spending, computational power, and operational efficiency is key to AI-driven business success.

Final Thoughts: Future-Proof Your AI Strategy with the Right ML Tech Stack

The right machine learning frameworks, AI development tools, deep learning libraries, and cloud platforms for ML can make or break your AI initiatives in 2025. As AI adoption accelerates, businesses must invest in scalable, cost-effective, and high-performance ML solutions to stay competitive.

At Charter Global, we specialize in AI-driven digital transformation, ML implementation, and cloud-based AI solutions. Our experts help businesses navigate complex AI landscapes, select the right tech stack, and optimize their ML models for efficiency and scalability.

Ready to future-proof your AI strategy? Contact us today!

Book a Consultation.

Or email us at [email protected] or call +1 770–326–9933.


Top Data Science Tools in 2025: Python, R, and Beyond

As data science continues to evolve, the tools used by professionals have become more advanced and specialized.

In 2025, Python and R remain dominant, but several other tools are gaining traction for specific tasks.

Python:

Python remains the go-to language for data science.

Its vast ecosystem of libraries, such as Pandas, NumPy, SciPy, and TensorFlow, makes it ideal for data manipulation, machine learning, and deep learning.

Its flexibility and ease of use keep it at the forefront of the field.

R:

While Python leads in versatility, R is still preferred in academia and for statistical analysis.

Libraries like ggplot2, dplyr, and caret make it a top choice for data visualization, statistical computing, and advanced modeling.

Jupyter Notebooks:

An essential tool for Python-based data science, Jupyter provides an interactive environment for coding, testing, and visualizing results.

It supports various programming languages, including Python and R.

Apache Spark:

As the volume of data grows, tools like Apache Spark have become indispensable for distributed computing.

Spark enables fast processing of large datasets, making it essential for big data analytics and real-time processing.

SQL and NoSQL Databases:

SQL remains foundational for managing structured data, while NoSQL databases like MongoDB and Cassandra are crucial for handling unstructured or semi-structured data in real-time applications.

Tableau and Power BI:

For data visualization and business intelligence, Tableau and Power BI are the go-to platforms. They allow data scientists and analysts to transform raw data into actionable insights with interactive dashboards and reports.

AutoML Tools:

In 2025, tools like H2O.ai, DataRobot, and Google AutoML are streamlining machine learning workflows, enabling even non-experts to build predictive models with minimal coding effort.

Cloud Platforms (AWS, Azure, GCP):

With the increasing reliance on cloud computing, services like AWS, Azure, and Google Cloud provide scalable environments for data storage, processing, and model deployment.

MLOps Tools:

As data science moves into production, MLOps tools such as Kubeflow, MLflow, and TFX (TensorFlow Extended) help manage the deployment, monitoring, and lifecycle of machine learning models in production environments.

As data science continues to grow in 2025, these tools are essential for staying at the cutting edge of analytics, machine learning, and AI. The integration of various platforms and the increasing use of AI-driven automation will shape the future of data science.


What Are the Most Popular AI Development Tools in 2025?

As artificial intelligence (AI) continues to evolve, developers have access to an ever-expanding array of tools to streamline the development process. By 2025, the landscape of AI development tools has become more sophisticated, offering greater ease of use, scalability, and performance. Whether you're building predictive models, crafting chatbots, or deploying machine learning applications at scale, the right tools can make all the difference. In this blog, we’ll explore the most popular AI development tools in 2025, highlighting their key features and use cases.

1. TensorFlow

TensorFlow remains one of the most widely used tools in AI development in 2025. Known for its flexibility and scalability, TensorFlow supports both deep learning and traditional machine learning workflows. Its robust ecosystem includes TensorFlow Extended (TFX) for production-level machine learning pipelines and TensorFlow Lite for deploying models on edge devices.

Key Features:

Extensive library for building neural networks.

Strong community support and documentation.

Integration with TensorFlow.js for running models in the browser.

Use Case: Developers use TensorFlow to build large-scale neural networks for applications such as image recognition, natural language processing, and time-series forecasting.

2. PyTorch

PyTorch continues to dominate the AI landscape, favored by researchers and developers alike for its ease of use and dynamic computation graph. In 2025, PyTorch remains a top choice for prototyping and production-ready AI solutions, thanks to its integration with ONNX (Open Neural Network Exchange) and widespread adoption in academic research.

Key Features:

Intuitive API and dynamic computation graphs.

Strong support for GPU acceleration.

TorchServe for deploying PyTorch models.

Use Case: PyTorch is widely used in developing cutting-edge AI research and for applications like generative adversarial networks (GANs) and reinforcement learning.

3. Hugging Face

Hugging Face has grown to become a go-to platform for natural language processing (NLP) in 2025. Its extensive model hub includes pre-trained models for tasks like text classification, translation, and summarization, making it easier for developers to integrate NLP capabilities into their applications.

Key Features:

Open-source libraries like Transformers and Datasets.

Access to thousands of pre-trained models.

Easy fine-tuning of models for specific tasks.

Use Case: Hugging Face’s tools are ideal for building conversational AI, sentiment analysis systems, and machine translation services.

4. Google Cloud AI Platform

Google Cloud AI Platform offers a comprehensive suite of tools for AI development and deployment. With pre-trained APIs for vision, speech, and text, as well as AutoML for custom model training, Google Cloud AI Platform is a versatile option for businesses.

Key Features:

Integrated AI pipelines for end-to-end workflows.

Vertex AI for unified machine learning operations.

Access to Google’s robust infrastructure.

Use Case: This platform is used for scalable AI applications such as fraud detection, recommendation systems, and voice recognition.

5. Azure Machine Learning

Microsoft’s Azure Machine Learning platform is a favorite for enterprise-grade AI solutions. In 2025, it remains a powerful tool for developing, deploying, and managing machine learning models in hybrid and multi-cloud environments.

Key Features:

Automated machine learning (AutoML) for rapid model development.

Integration with Azure’s data and compute services.

Responsible AI tools for ensuring fairness and transparency.

Use Case: Azure ML is often used for predictive analytics in sectors like finance, healthcare, and retail.

6. DataRobot

DataRobot simplifies the AI development process with its automated machine learning platform. By abstracting complex coding requirements, DataRobot allows developers and non-developers alike to build AI models quickly and efficiently.

Key Features:

AutoML for quick prototyping.

Pre-built solutions for common business use cases.

Model interpretability tools.

Use Case: Businesses use DataRobot for customer churn prediction, demand forecasting, and anomaly detection.

7. Apache Spark MLlib

Apache Spark’s MLlib is a powerful library for scalable machine learning. In 2025, it remains a popular choice for big data analytics and machine learning, thanks to its ability to handle large datasets across distributed computing environments.

Key Features:

Integration with Apache Spark for big data processing.

Support for various machine learning algorithms.

Seamless scalability across clusters.

Use Case: MLlib is widely used for recommendation engines, clustering, and predictive analytics in big data environments.

8. AWS SageMaker

Amazon’s SageMaker is a comprehensive platform for AI and machine learning. In 2025, SageMaker continues to stand out for its robust deployment options and advanced features, such as SageMaker Studio and Data Wrangler.

Key Features:

Built-in algorithms for common machine learning tasks.

One-click deployment and scaling.

Integrated data preparation tools.

Use Case: SageMaker is often used for AI applications like demand forecasting, inventory management, and personalized marketing.

9. OpenAI API

OpenAI’s API remains a frontrunner for developers building advanced AI applications. With access to state-of-the-art models like GPT and DALL-E, the OpenAI API empowers developers to create generative AI applications.

Key Features:

Access to cutting-edge AI models.

Flexible API for text, image, and code generation.

Continuous updates with the latest advancements in AI.

Use Case: Developers use the OpenAI API for applications like content generation, virtual assistants, and creative tools.

10. Keras

Keras is a high-level API for building neural networks and has remained a popular choice in 2025 for its simplicity and flexibility. Integrated tightly with TensorFlow, Keras makes it easy to experiment with different architectures.

Key Features:

User-friendly API for deep learning.

Modular design for easy experimentation.

Support for multi-GPU and TPU training.

Use Case: Keras is used for prototyping neural networks, especially in applications like computer vision and speech recognition.

Conclusion

In 2025, AI development tools are more powerful, accessible, and diverse than ever. Whether you’re a researcher, a developer, or a business leader, the tools mentioned above cater to a wide range of needs and applications. By leveraging these cutting-edge platforms, developers can focus on innovation while reducing the complexity of building and deploying AI solutions.

As the field of AI continues to evolve, staying updated on the latest tools and technologies will be crucial for anyone looking to make a mark in this transformative space.


TensorFlow Extended (TFX): Machine Learning Pipelines and Model Understanding (Google I/O'19)

This talk will focus on creating a production machine learning pipeline using TFX. Using TFX developers can implement machine … source


Deep Learning Frameworks: TensorFlow, PyTorch, and Beyond

In the rapidly evolving field of artificial intelligence (AI), deep learning has emerged as a powerful tool for solving complex problems that were once thought to be beyond the reach of machines. Whether it's image recognition, natural language processing, or even autonomous driving, deep learning is at the heart of many of today’s AI innovations. However, building effective deep learning models requires robust frameworks, and two of the most popular frameworks today are TensorFlow and PyTorch.

In this blog, we will explore the key features, strengths, and weaknesses of these two frameworks and delve into some other deep learning frameworks that are making waves in the AI community. By understanding the landscape of AI frameworks, businesses and developers can make more informed choices when embarking on AI and deep learning projects.

What Are Deep Learning Frameworks?

Deep learning frameworks are software libraries or tools designed to simplify the process of building, training, and deploying deep learning models. They provide pre-built functions, optimizers, and architectures, enabling developers to focus on creating models without having to code every aspect of neural networks from scratch. These frameworks help in accelerating development and are crucial in building cutting-edge AI applications.

TensorFlow: The Industry Leader

TensorFlow, developed by Google, has long been considered the industry standard for deep learning frameworks. Launched in 2015, it was designed with scalability, flexibility, and performance in mind. TensorFlow’s broad adoption across industries and academia has made it one of the most widely used frameworks in the AI ecosystem.

Key Features of TensorFlow

Comprehensive Ecosystem: TensorFlow offers a complete ecosystem for machine learning and AI development. It supports everything from building simple neural networks to training large-scale models on distributed systems.

TensorFlow Extended (TFX): TensorFlow Extended is a production-ready platform designed for creating robust machine learning pipelines. It’s especially useful for large enterprises looking to deploy and maintain AI systems at scale.

TensorFlow Lite: TensorFlow Lite is optimized for mobile and edge devices. As AI models become more prevalent in smartphones, smart appliances, and IoT devices, TensorFlow Lite helps developers run inference on-device, improving efficiency and privacy.

TensorFlow Hub: TensorFlow Hub provides access to pre-trained models that can be easily integrated into custom applications. This allows for faster development of models by leveraging existing solutions rather than building them from scratch.

Keras API: TensorFlow includes Keras, a high-level API that makes building and experimenting with deep learning models much more straightforward. Keras abstracts much of the complexity of TensorFlow, making it beginner-friendly without sacrificing the framework’s power.

Strengths of TensorFlow

Scalability: TensorFlow’s design is highly scalable, making it suitable for both research and production use cases. It can efficiently handle both small-scale models and complex deep learning architectures, such as those used for natural language processing or image recognition.

Support for Distributed Computing: TensorFlow offers robust support for distributed computing, allowing developers to train models across multiple GPUs or even entire clusters of machines. This makes it an ideal choice for projects requiring significant computational power.

Wide Community Support: TensorFlow has an active community of developers and researchers who contribute to its ecosystem. Whether it’s finding tutorials, troubleshooting issues, or accessing pre-built models, TensorFlow’s extensive community is a valuable resource.

Weaknesses of TensorFlow

Steep Learning Curve: While TensorFlow is incredibly powerful, it comes with a steep learning curve, especially for beginners. Despite the addition of Keras, TensorFlow’s low-level API can be challenging to grasp.

Verbose Syntax: TensorFlow is known for being more verbose than other frameworks, making it more cumbersome for developers who are rapidly iterating through experiments.

PyTorch: The Researcher’s Favorite

PyTorch, developed by Facebook’s AI Research Lab (FAIR), has become the go-to deep learning framework for many researchers and academic institutions. It was released in 2016 and quickly gained traction for its ease of use and dynamic computation graph, which allows for greater flexibility during development.

Key Features of PyTorch

Dynamic Computation Graph: PyTorch’s dynamic computation graph (also known as “define-by-run”) is one of its most praised features. The graph is built as the code executes, so developers can change the model on the fly, which makes debugging and experimentation faster than with the static graphs that TensorFlow 1.x used by default.

Simple and Pythonic: PyTorch integrates seamlessly with Python, offering a more intuitive and Pythonic coding style. Its simplicity makes it more accessible to those new to deep learning, while still being powerful enough for complex tasks.

TorchScript: TorchScript allows PyTorch models to be optimized and exported for production environments. While PyTorch is known for its ease in research settings, TorchScript ensures that models can be efficiently deployed in production as well.

LibTorch: PyTorch offers LibTorch, a C++ frontend, enabling developers to use PyTorch in production environments that require high-performance, low-latency execution.

ONNX Support: PyTorch supports the Open Neural Network Exchange (ONNX) format, allowing models trained in PyTorch to be deployed in a variety of platforms and other deep learning frameworks.
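To make the "define-by-run" idea from the first feature concrete, here is a toy sketch in plain Python. It is not PyTorch's implementation; the `Value` class and `model` function are invented for illustration. The point is that the graph is recorded while ordinary Python code runs, so loops and `if` statements can change its shape on every call.

```python
class Value:
    """A scalar that records the operations applied to it as they execute."""

    def __init__(self, data, parents=(), local_grads=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents          # inputs that produced this value
        self._local_grads = local_grads  # derivative of this value w.r.t. each parent

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), (1.0, 1.0))

    def __mul__(self, other):
        return Value(self.data * other.data, (self, other), (other.data, self.data))

    def backward(self):
        """Walk the recorded graph in reverse and accumulate gradients (chain rule)."""
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)  # parents are appended before their children

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in zip(node._parents, node._local_grads):
                parent.grad += local * node.grad


def model(x, w, steps):
    """Hypothetical model whose graph depth and branches depend on runtime values."""
    out = x
    for _ in range(steps):  # an ordinary Python loop decides how deep the graph is
        out = out * w
    if out.data > 10:       # an ordinary Python branch adds an extra node
        out = out + x
    return out
```

Calling `model(Value(2.0), Value(3.0), steps=2)` and then `backward()` on the result differentiates through exactly the operations that ran on that call. A static-graph design would need the loop and the branch expressed as graph operations ahead of time.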

Strengths of PyTorch

Flexibility for Research: PyTorch’s dynamic computation graph allows researchers to experiment more freely, which is why it’s so widely used in academia and by AI researchers. It offers more flexibility during the model-building process, making it ideal for tasks that require experimentation and iteration.

Pythonic Nature: The framework is very "pythonic" and straightforward, which reduces the barrier to entry for newcomers. Its intuitive design and natural Pythonic syntax make it easy to read and write, especially for data scientists and researchers familiar with Python.

Easier Debugging: Since the computation graph is built on the fly, it’s easier to debug in PyTorch compared to TensorFlow. This is a key advantage for those in research environments where rapid iteration is critical.

Weaknesses of PyTorch

Less Mature for Production: While PyTorch has gained a lot of ground in recent years, TensorFlow is still considered the more mature option for deploying AI models in production, particularly in large-scale enterprise environments.

Limited Support for Mobile and Embedded Systems: PyTorch lags behind TensorFlow when it comes to support for mobile and embedded devices. Although it’s improving, TensorFlow’s ecosystem is more developed for these platforms.

Other Deep Learning Frameworks to Consider

While TensorFlow and PyTorch dominate the deep learning landscape, there are other frameworks that cater to specific use cases or provide unique features. Here are a few other frameworks worth exploring:

1. MXNet

MXNet is an open-source deep learning framework developed by Apache. It is highly scalable and optimized for distributed computing. MXNet is particularly known for its performance on multi-GPU and cloud computing environments, making it a strong contender for organizations looking to deploy AI at scale.

2. Caffe

Caffe is a deep learning framework that specializes in image classification and convolutional neural networks (CNNs). Developed by Berkeley AI Research (BAIR), it’s lightweight and optimized for speed, but lacks the flexibility of TensorFlow or PyTorch. Caffe is ideal for tasks requiring fast computation times but not much model customization.

3. Theano

Though no longer actively developed, Theano was one of the earliest deep learning frameworks and paved the way for many others. It’s still used in some academic settings due to its robust mathematical capabilities and focus on research.

4. Chainer

Chainer is a deep learning framework known for its intuitive and flexible design. It uses a dynamic computation graph similar to PyTorch, making it well-suited for research environments where developers need to test and adjust models rapidly.

Choosing the Right Framework for Your Needs

When choosing between deep learning frameworks, it’s important to consider the goals of your project and the skill set of your team. For instance, if you’re working in a research environment and need flexibility, PyTorch may be the best choice. On the other hand, if you’re deploying large-scale models into production or working on mobile AI applications, TensorFlow may be the better fit.

Additionally, businesses like Trantor often assess these frameworks based on factors such as scalability, ease of use, and production-readiness. It’s also important to keep in mind that deep learning frameworks are constantly evolving. Features that are missing today could be implemented tomorrow, so staying informed about updates and community support is critical.

Conclusion

Choosing the right deep learning framework can significantly impact the success of your AI project. Whether it’s the scalable power of TensorFlow or the flexible simplicity of PyTorch, each framework has its strengths and weaknesses. By understanding the needs of your specific project—whether it’s research-oriented or production-focused—you can select the best tool for the job.

For organizations like Trantor, which are leading the way in AI development, selecting the right framework is crucial in delivering AI solutions that meet the demands of modern enterprises. Whether you’re building AI models for healthcare, finance, or any other sector, having a solid understanding of deep learning frameworks will ensure that your AI projects are both cutting-edge and impactful.

0 notes

Text

Revolutionizing Industries: The Latest in AI Technology

Artificial Intelligence (AI) is like teaching computers to do smart things that humans usually do. It helps them understand language, spot patterns, learn from experience, and make decisions. The big goal of Artificial Intelligence is to make machines as smart as humans so they can solve tough problems, make predictions, adapt to new situations, and get better over time.

Artificial Intelligence has become a big part of our lives. We see it in things like Siri, Alexa, and recommendation systems on Netflix. It's also behind self-driving cars, medical tools, and smart home devices, changing how we live and work. AI now plays a part in nearly every industry.

AI brings together several fields, such as machine learning, natural language processing, and robotics. Machine learning is especially important because it helps systems learn from data without us having to tell them exactly what to do.

Benefits of AI Tools for Individuals and Businesses

For businesses, AI is super helpful. It can automate tasks, analyze lots of data quickly, and give insights into what customers like. As a marketer, it's important to understand how AI can help your business stand out.

One big way AI helps marketing is by personalizing the customer experience. By looking at customer data, AI can create personalized content, like product suggestions, for each person. This can make customers more engaged and loyal, which means more money for your business. It also saves customers a lot of time and helps them feel comfortable with the experience.

AI can also make marketing campaigns better by figuring out the best time and way to send messages to customers. By studying customer data, AI can help you reach the right people at the right time, making it more likely they'll buy what you're selling.

Understanding the basics of AI is important today because it's changing so fast. But what's making AI amazing are the special tools and systems that help people who work with AI do their jobs better. Here are some of these AI tools:

PyTorch: It's like a toolkit, originally from Facebook (now Meta), that makes building and using AI easier.

TensorFlow: Made by Google, it's great for building AI that works on all kinds of devices.

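Scikit-learn: This is a handy Python library for classical machine learning tasks such as classification, regression, and clustering.

TensorFlow Extended (TFX): It's like a big helper for building production machine learning pipelines, making sure AI programs run smoothly from data validation to serving.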

Hugging Face Transformers: This tool is super helpful for working with words and text in AI.

Ray: Ray makes it easier to work with big AI projects by getting lots of computers to work together.

ONNX (Open Neural Network Exchange): ONNX helps different AI tools talk to each other, making it easier to use AI in different places.

These tools are like superpowers for people working with AI, helping them make AI smarter and more useful in our lives. And as AI keeps growing, these tools will keep getting better, helping us solve even bigger problems in the future.

1 note

Text

Top 5 AI/ML Testing Tools for Streamlining Development and Deployment

As artificial intelligence (AI) and machine learning (ML) applications continue to proliferate across industries, ensuring the quality and reliability of these systems becomes paramount. Testing AI/ML models presents unique challenges due to their complexity and non-deterministic nature. To address these challenges, a range of specialized testing tools has emerged. In this article, we'll explore five top AI/ML testing tools that streamline the development and deployment process.

TensorFlow Extended (TFX): TensorFlow Extended (TFX) is an end-to-end platform for deploying production-ready ML pipelines. It offers a comprehensive suite of tools for data validation, preprocessing, model training, evaluation, and serving. TFX integrates seamlessly with TensorFlow, Google's popular open-source ML framework, making it an ideal choice for organizations leveraging TensorFlow for their AI projects. TFX's standardized components ensure consistency and reliability throughout the ML lifecycle, from experimentation to deployment.

PyTorch Lightning: PyTorch Lightning is a lightweight PyTorch wrapper that simplifies the training and deployment of complex neural networks. It provides a high-level interface for organizing code, handling distributed training, and integrating with popular experiment tracking platforms like TensorBoard and Weights & Biases. PyTorch Lightning automates many aspects of the training loop, allowing researchers and developers to focus on model design and experimentation while ensuring reproducibility and scalability.

MLflow: MLflow is an open-source platform for managing the end-to-end ML lifecycle. Developed by Databricks, MLflow provides tools for tracking experiments, packaging code into reproducible runs, and deploying models to production. Its flexible architecture supports integration with popular ML frameworks like TensorFlow, PyTorch, and scikit-learn, as well as cloud platforms such as AWS, Azure, and Google Cloud. MLflow's unified interface simplifies collaboration between data scientists, engineers, and DevOps teams, enabling faster iteration and deployment of ML models.

Seldon Core: Seldon Core is an open-source platform for deploying and scaling ML models in Kubernetes environments. It offers a range of features for model serving, monitoring, and scaling, including support for A/B testing, canary deployments, and multi-armed bandit strategies. Seldon Core integrates with popular ML frameworks like TensorFlow, PyTorch, and XGBoost, as well as cloud-based platforms such as AWS S3 and Google Cloud Storage. Its built-in metrics and logging capabilities enable real-time monitoring and performance optimization of deployed models.

ModelOp Center: ModelOp Center is an enterprise-grade platform for managing and monitoring AI/ML models in production. It provides a centralized hub for deploying, versioning, and governing models across heterogeneous environments, including on-premises data centers and cloud infrastructure. ModelOp Center's advanced features include model lineage tracking, regulatory compliance reporting, and automated drift detection, helping organizations ensure the reliability, security, and scalability of their AI/ML deployments.
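As a rough illustration of the canary deployments mentioned under Seldon Core, the sketch below splits traffic between a stable model and a canary model. It is not Seldon Core's API: the `route` function, the request IDs, and the 10% share are all assumptions made for this example. It only shows the general idea of sending a small, repeatable slice of requests to a new model version.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Return 'canary' for roughly canary_percent% of request IDs, else 'stable'.

    Hashing the ID (instead of drawing a random number) keeps the decision
    sticky: the same request ID always lands on the same model version.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


# Hypothetical traffic: 10,000 request IDs with a 10% canary share.
requests = [f"user-{i}" for i in range(10_000)]
share = sum(route(r, 10) == "canary" for r in requests) / len(requests)
print(f"canary share: {share:.3f}")
```

In practice the canary percentage would be raised step by step while the monitoring metrics for the new version stay healthy, and set back to zero if they do not.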

Conclusion

Testing AI/ML models is essential for ensuring their reliability, scalability, and performance in production environments. The tools mentioned in this article provide comprehensive solutions for streamlining the development and deployment of AI/ML applications, from data preprocessing and model training to monitoring and optimization.

By leveraging these tools, organizations can accelerate their AI initiatives while minimizing risks and maximizing the value of their machine learning investments.

Need to ensure the reliability and performance of your AI/ML models? Explore Testrig Technologies AI/ML Testing Services for comprehensive validation and optimization, ensuring robustness and scalability in production environments.

0 notes

Text

Google launches Cloud AI Platform pipelines in beta to simplify machine learning development

Google today announced the beta launch of Cloud AI Platform pipelines, a service designed to deploy robust, repeatable AI pipelines along with monitoring, auditing, version tracking, and reproducibility in the cloud. Google’s pitching it as a way to deliver an “easy to install” secure execution environment for machine learning workflows, which could reduce the amount of time enterprises spend bringing products to production.

“When you’re just prototyping a machine learning model in a notebook, it can seem fairly straightforward. But when you need to start paying attention to the other pieces required to make a [machine learning] workflow sustainable and scalable, things become more complex,” wrote Google product manager Anusha Ramesh and staff developer advocate Amy Unruh in a blog post. “A machine learning workflow can involve many steps with dependencies on each other, from data preparation and analysis, to training, to evaluation, to deployment, and more. It’s hard to compose and track these processes in an ad-hoc manner — for example, in a set of notebooks or scripts — and things like auditing and reproducibility become increasingly problematic.”

AI Platform Pipelines has two major parts: (1) the infrastructure for deploying and running structured AI workflows that are integrated with Google Cloud Platform services and (2) the pipeline tools for building, debugging, and sharing pipelines and components. The service runs on a Google Kubernetes Engine (GKE) cluster that’s automatically created as part of the installation process, and it’s accessible via the Cloud AI Platform dashboard. With AI Platform Pipelines, developers specify a pipeline using the Kubeflow Pipelines software development kit (SDK), or by customizing the TensorFlow Extended (TFX) Pipeline template with the TFX SDK. The SDK compiles the pipeline and submits it to the Pipelines REST API server, which stores and schedules the pipeline for execution.

AI Platform Pipelines uses the open source Argo workflow engine to run the pipeline and has additional microservices to record metadata, handle component I/O, and schedule pipeline runs. Pipeline steps are executed as individual isolated pods in a cluster, and each component can leverage Google Cloud services such as Dataflow, AI Platform Training and Prediction, BigQuery, and others. Meanwhile, pipelines can contain steps that perform GPU and tensor processing unit (TPU) computation in the cluster, directly leveraging features like autoscaling and node auto-provisioning.
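The core job of a workflow engine, running steps only after the steps they depend on have finished, can be sketched with Python's standard library. This is not the Kubeflow Pipelines or TFX SDK, and it does not reflect how Argo is implemented; the step names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on (names are illustrative).
pipeline = {
    "prepare_data": set(),
    "analyze_data": {"prepare_data"},
    "train": {"prepare_data"},
    "evaluate": {"train", "analyze_data"},
    "deploy": {"evaluate"},
}


def run_pipeline(steps):
    """Execute steps in dependency order and return the order used."""
    executed = []
    sorter = TopologicalSorter(steps)
    sorter.prepare()  # raises graphlib.CycleError if the steps form a cycle
    while sorter.is_active():
        # Every step returned here has all of its dependencies finished,
        # so a real engine could launch these in parallel (one pod each).
        for step in sorted(sorter.get_ready()):
            executed.append(step)  # stand-in for actually running the step
            sorter.done(step)
    return executed


order = run_pipeline(pipeline)
print(order)
```

Here `analyze_data` and `train` become ready at the same time, which is where an engine that runs each step in its own pod can save wall-clock time.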

AI Platform Pipeline runs include automatic metadata tracking using ML Metadata, a library for recording and retrieving metadata associated with machine learning developer and data scientist workflows. Automatic metadata tracking logs the artifacts used in each pipeline step, pipeline parameters, and the linkage across the input/output artifacts, as well as the pipeline steps that created and consumed them.
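To show what "linkage across the input/output artifacts" can look like, here is a small in-memory sketch. It deliberately does not use the ML Metadata library; `MetadataStore`, `Execution`, and the file names are hypothetical stand-ins. Each recorded step stores its parameters plus the artifacts it consumed and produced, which is enough to answer lineage questions later.

```python
from dataclasses import dataclass, field


@dataclass
class Execution:
    step: str
    parameters: dict
    inputs: list
    outputs: list


@dataclass
class MetadataStore:
    executions: list = field(default_factory=list)

    def record(self, step, parameters, inputs, outputs):
        """Log one pipeline step together with its input/output artifacts."""
        self.executions.append(
            Execution(step, parameters, list(inputs), list(outputs))
        )

    def producer(self, artifact):
        """Return the execution that created `artifact`, or None."""
        for execution in self.executions:
            if artifact in execution.outputs:
                return execution
        return None

    def lineage(self, artifact):
        """List every upstream artifact that contributed to `artifact`."""
        execution = self.producer(artifact)
        if execution is None:
            return []  # a source artifact nothing in the store produced
        upstream = []
        for parent in execution.inputs:
            for item in [parent, *self.lineage(parent)]:
                if item not in upstream:
                    upstream.append(item)
        return upstream


store = MetadataStore()
store.record("prepare_data", {"split": 0.8}, ["raw.csv"], ["train.csv", "eval.csv"])
store.record("train", {"epochs": 5}, ["train.csv"], ["model-v1"])
store.record("evaluate", {}, ["model-v1", "eval.csv"], ["metrics.json"])

print(store.lineage("metrics.json"))
```

With this record in place, a question like "which data produced these evaluation metrics?" becomes a lookup instead of detective work across notebooks.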

In addition, AI Platform Pipelines supports pipeline versioning, which allows developers to upload multiple versions of the same pipeline and group them in the UI, as well as automatic artifact and lineage tracking. Native artifact tracking enables the tracking of things like models, data statistics, model evaluation metrics, and many more. And lineage tracking shows the history and versions of your models, data, and more.

Google says that in the near future, AI Platform Pipelines will gain multi-user isolation, which will let each person accessing the Pipelines cluster control who can access their pipelines and other resources. Other forthcoming features include workload identity to support transparent access to Google Cloud Services; a UI-based setup of off-cluster storage of backend data, including metadata, server data, job history, and metrics; simpler cluster upgrades; and more templates for authoring workflows.

1 note

Text

Kubeflow vs. TFX: Which MLOps Framework is Right for You?

1. Introduction

The field of Machine Learning Operations (MLOps) has grown significantly as organizations seek to streamline and scale their machine learning workflows. Among the most popular frameworks for MLOps are Kubeflow and TensorFlow Extended (TFX). While both frameworks share some similarities, they have distinct strengths and use cases. This tutorial will guide you in understanding the…

0 notes

Quote

by Steef-Jan Wiggers

In a recent blog post, Google announced the beta of Cloud AI Platform Pipelines, which provides users with a way to deploy robust, repeatable machine learning pipelines along with monitoring, auditing, version tracking, and reproducibility.

With Cloud AI Pipelines, Google can help organizations adopt the practice of Machine Learning Operations, also known as MLOps, a term for applying DevOps practices to help users automate, manage, and audit ML workflows. Typically, these practices involve data preparation and analysis, training, evaluation, deployment, and more.

Google product manager Anusha Ramesh and staff developer advocate Amy Unruh wrote in the blog post:

“When you're just prototyping a machine learning (ML) model in a notebook, it can seem fairly straightforward. But when you need to start paying attention to the other pieces required to make an ML workflow sustainable and scalable, things become more complex. Moreover, when complexity grows, building a repeatable and auditable process becomes more laborious.”

Cloud AI Platform Pipelines, which runs on a Google Kubernetes Engine (GKE) cluster and is accessible via the Cloud AI Platform dashboard, has two major parts: (1) the infrastructure for deploying and running structured AI workflows integrated with GCP services such as BigQuery, Dataflow, AI Platform Training and Serving, and Cloud Functions, and (2) the pipeline tools for building, debugging, and sharing pipelines and components.

With Cloud AI Platform Pipelines, users can specify a pipeline using either the Kubeflow Pipelines (KFP) software development kit (SDK) or by customizing the TensorFlow Extended (TFX) Pipeline template with the TFX SDK. The latter currently consists of libraries, components, and some binaries, and it is up to the developer to pick the right level of abstraction for the task at hand. Furthermore, the TFX SDK includes a library, ML Metadata (MLMD), for recording and retrieving metadata associated with the workflows; this library can also run independently.

Google recommends using the KFP SDK for fully custom pipelines or pipelines that use prebuilt KFP components, and the TFX SDK and its templates for E2E ML pipelines based on TensorFlow. Google also stated in the blog post that, over time, these two SDK experiences would merge. The SDK, in the end, will compile the pipeline and submit it to the Pipelines REST API; the AI Pipelines REST API server stores and schedules the pipeline for execution.

Argo, an open-source container-native workflow engine for orchestrating parallel jobs on Kubernetes, runs the pipelines, together with additional microservices to record metadata, handle component I/O, and schedule pipeline runs. The Argo workflow engine executes each pipeline step in an individual isolated pod in a GKE cluster, allowing each pipeline component to leverage Google Cloud services such as Dataflow, AI Platform Training and Prediction, BigQuery, and others. Furthermore, pipelines can contain steps that perform sizeable GPU and TPU computation in the cluster, directly leveraging features like autoscaling and node auto-provisioning.

Source: https://cloud.google.com/blog/products/ai-machine-learning/introducing-cloud-ai-platform-pipelines

AI Platform Pipeline runs include automatic metadata tracking using MLMD, which logs the artifacts used in each pipeline step, pipeline parameters, and the linkage across the input/output artifacts, as well as the pipeline steps that created and consumed them.

With Cloud AI Platform Pipelines, according to the blog post, customers will get: push-button installation via the Google Cloud Console; enterprise features for running ML workloads, including pipeline versioning, automatic metadata tracking of artifacts and executions, Cloud Logging, visualization tools, and more; seamless integration with Google Cloud managed services like BigQuery, Dataflow, AI Platform Training and Serving, Cloud Functions, and many others; and many prebuilt pipeline components (pipeline steps) for ML workflows, with easy construction of your own custom components.

The support for Kubeflow will allow a straightforward migration to other cloud platforms, as a respondent on a Hacker News thread on Google AI Cloud Pipeline stated:

“Cloud AI Platform Pipelines appear to use Kubeflow Pipelines on the backend, which is open-source and runs on Kubernetes. The Kubeflow team has invested a lot of time on making it simple to deploy across a variety of public clouds, such as AWS, and Azure. If Google were to kill it, you could easily run it on any other hosted Kubernetes service.”

The release of AI Cloud Pipelines shows Google's further expansion of its Machine Learning as a Service (MLaaS) portfolio, which consists of several other ML-centric services such as Cloud AutoML, Kubeflow, and AI Platform Prediction. The expansion is necessary to allow Google to further capitalize on the growing demand for ML-based cloud services in a market which analysts expect to reach USD 8.48 billion by 2025, and to compete with other large public cloud vendors such as Amazon, which offers SageMaker, and Microsoft, which offers Azure Machine Learning.

Currently, Google plans to add more features for AI Cloud Pipelines. These features are: easy cluster upgrades; more templates for authoring ML workflows; more straightforward UI-based setup of off-cluster storage of backend data; workload identity, to support transparent access to GCP services; and multi-user isolation, allowing each person accessing the Pipelines cluster to control who can access their pipelines and other resources.

Lastly, more information on Google's Cloud AI Pipeline is available in the getting started documentation.

http://damianfallon.blogspot.com/2020/03/google-announces-cloud-ai-platform.html

1 note

Text

Key Python Packages for Data Science

7 most important Python libraries for data science.

1) TensorFlow

TensorFlow is a comprehensive, free, open source platform for machine learning that includes a wide range of tools, libraries, and resources. It was first released by the Google Brain team on November 9, 2015. TensorFlow makes it easy to design and train machine learning models using high-level APIs like Keras. It also offers different levels of abstraction, allowing you to choose the best approach for your model. TensorFlow allows you to deploy machine learning models across multiple environments, including the cloud, browsers, and your own device. Choose TensorFlow Extended (TFX) if you need full production pipelines; TensorFlow Lite if you are running models on a mobile device; and TensorFlow.js if you are going to train and deploy models in JavaScript contexts.

2) NumPy

NumPy stands for Numerical Python. It is a Python library for numerical and scientific computing. NumPy provides numerous high-performance features that Python enthusiasts and programmers can use to work with arrays. NumPy arrays support vectorized mathematical operations, which provide a performance boost over Python's loop constructs.
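Here is a small sketch of what vectorization means in practice, assuming NumPy is installed. The array contents and the `2x + 1` formula are arbitrary choices for illustration. Both versions compute the same numbers; the vectorized one hands the whole array to NumPy in a single expression instead of looping in Python.

```python
import numpy as np

values = np.arange(1_000, dtype=np.float64)  # 0.0, 1.0, ..., 999.0

# Loop version: Python touches every element one at a time.
loop_result = [v * 2.0 + 1.0 for v in values]

# Vectorized version: one expression applied to the whole array at once.
vector_result = values * 2.0 + 1.0

print(np.allclose(loop_result, vector_result))
```

How large the speedup is depends on the array size and the machine, so it is worth timing both versions on your own data before relying on a particular figure.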

3) SciPy

SciPy, short for Scientific Python, is a collection of mathematical functions and algorithms built on top of NumPy. SciPy provides many high-level commands and classes for manipulating and displaying data. SciPy is useful for exploratory data analysis and data processing systems.

4) Pandas

We can all do data analysis with pencil and paper on small data sets, but we need specialized tools and techniques to analyze and derive meaningful insights from large data sets. Pandas is one such data analysis library, with high-level data structures and tools for easy data manipulation. It provides a simple yet effective way to index, retrieve, split, join, restructure, and perform various other analyses on multidimensional and single-dimensional data.
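The operations listed above can be sketched in a few lines, assuming pandas is installed. The two tables, their column names, and the values are made up for this example.

```python
import pandas as pd

# Hypothetical sample data.
orders = pd.DataFrame({
    "order_id": [1, 2, 3, 4],
    "customer": ["ana", "ben", "ana", "cho"],
    "amount": [20.0, 35.0, 15.0, 50.0],
})
customers = pd.DataFrame({
    "customer": ["ana", "ben", "cho"],
    "region": ["north", "south", "north"],
})

# Index and retrieve: look up one order by its ID.
by_id = orders.set_index("order_id")
second_amount = by_id.loc[2, "amount"]

# Join: attach each customer's region to their orders.
joined = orders.merge(customers, on="customer", how="left")

# Split and aggregate: total order amount per region.
totals = joined.groupby("region")["amount"].sum()

# Restructure: regions as rows, customers as columns.
wide = joined.pivot_table(
    index="region", columns="customer", values="amount", aggfunc="sum"
)

print(totals)
print(wide)
```

Cells in `wide` with no matching orders come back as `NaN`, which is how pandas marks missing values after a reshape.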

5) PyCaret

PyCaret is an open-source, low-code machine learning package for data processing and model deployment, so it saves you time. It's an easy-to-use library that helps you run end-to-end machine learning experiments, whether you're trying to impute missing values, encode categorical data, engineer features, tune hyperparameters, or build ensemble models.

0 notes

Photo

Meet @ClemensMewald, a Product Manager for TensorFlow Extended (TFX). He talks with @lmoroney about how TFX helps developers deploy ML models in production, open source model analysis libraries and more. Watch here → https://t.co/ycqVgE5J2G https://t.co/G0veIwk1YO

1 note
