#Scrape E-Commerce Data


How Is an Amazon Price Scraper Used to Scrape E-Commerce Data?

In the ever-evolving landscape of e-commerce, businesses are constantly seeking ways to gain a competitive edge. One such method gaining traction is web scraping, a technique used to extract valuable data from websites. Among the myriad of web scraping tools available, the Amazon Price Scraper stands out as a powerful instrument for extracting pricing information from the e-commerce giant, Amazon. In this blog post, we'll delve into the workings of the Amazon Price Scraper and explore how it is reshaping the way businesses gather crucial e-commerce data.

Understanding Amazon Price Scraper

Amazon Price Scraper is a specialized web scraping tool designed to extract pricing data from Amazon product listings. It works by accessing the HTML code of Amazon's web pages and systematically retrieving information such as product prices, availability, ratings, and more. This data can then be analyzed to gain insights into market trends, competitor pricing strategies, and consumer behavior.

How It Works

The Amazon Price Scraper employs a combination of web crawling and data extraction techniques to navigate through Amazon's vast repository of product listings. Here's a simplified overview of its operation:

URL Input: The user provides the Amazon Price Scraper with the URLs of the target product pages or categories they wish to scrape.

Web Crawling: The scraper begins by visiting the specified URLs and systematically traversing through the web pages, following links to additional product pages if necessary.

HTML Parsing: Once on a product page, the scraper extracts the HTML code containing relevant pricing and product information.

Data Extraction: Using predefined patterns or rules, the scraper identifies and extracts the desired data elements, such as product name, price, seller information, and product ratings.

Data Storage: The extracted data is then stored in a structured format, such as a spreadsheet or database, for further analysis.

Iterative Process: The scraping process may be repeated for multiple product pages or categories to gather a comprehensive dataset.
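The parsing, extraction, and storage steps above can be sketched with Python's standard library. This is a minimal illustration under stated assumptions, not how any particular Amazon Price Scraper works: the sample HTML, the class names (title, price, rating), and the helper names are all hypothetical, and real product pages use different markup that changes often.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical markup for illustration only; real product pages differ.
SAMPLE_HTML = """
<div class="product">
  <span class="title">Example Widget</span>
  <span class="price">$19.99</span>
  <span class="rating">4.5</span>
</div>
"""

FIELDS = ("title", "price", "rating")


class ProductParser(HTMLParser):
    """Collects the text of <span> elements whose class is one of FIELDS."""

    def __init__(self):
        super().__init__()
        self.record = {}
        self._current = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in FIELDS:
            self._current = css_class

    def handle_data(self, data):
        if self._current and data.strip():
            self.record[self._current] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._current = None


def extract_product(html):
    """HTML parsing + data extraction: return one structured record."""
    parser = ProductParser()
    parser.feed(html)
    record = parser.record
    # Convert the text values to numbers for later analysis.
    record["price"] = float(record["price"].lstrip("$"))
    record["rating"] = float(record["rating"])
    return record


def to_csv(records):
    """Data storage: serialize records as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


product = extract_product(SAMPLE_HTML)
print(to_csv([product]))
```

In practice the same extract-and-store functions would be called once per fetched page, which is the iterative step described above.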

Applications of Amazon Price Scraper

The Amazon Price Scraper offers a wide range of applications for businesses operating in the e-commerce space:

Competitive Analysis: By scraping pricing data from Amazon, businesses can monitor competitors' pricing strategies in real-time. This information enables them to adjust their own pricing strategies accordingly to stay competitive in the market.

Market Research: Scraped data from Amazon can provide valuable insights into market trends, demand patterns, and consumer preferences. Businesses can use this information to identify emerging trends and tailor their product offerings to meet customer needs.

Price Tracking: E-commerce retailers can use the Amazon Price Scraper to track price fluctuations of specific products over time. This data allows them to optimize pricing strategies, identify pricing anomalies, and capitalize on pricing opportunities.

Inventory Management: By monitoring product availability and stock levels on Amazon, businesses can optimize their inventory management processes. They can ensure adequate stock levels for in-demand products and avoid stockouts or overstocking.

Dynamic Pricing: Armed with real-time pricing data scraped from Amazon, businesses can implement dynamic pricing strategies that adjust prices based on market demand, competitor prices, and other factors. This allows them to maximize revenue and profitability.
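To make the dynamic pricing idea concrete, here is a small sketch of one possible rule: price just below the cheapest competitor, but never below a minimum margin over cost. The function name, the 10% margin, and the one-cent undercut are assumptions chosen for illustration; real repricing strategies weigh many more factors.

```python
def suggest_price(our_cost, competitor_prices, min_margin=0.10, undercut=0.01):
    """Suggest a price slightly below the cheapest competitor.

    The result never drops below our cost plus a minimum margin.
    """
    floor = round(our_cost * (1 + min_margin), 2)
    if not competitor_prices:
        return floor
    target = round(min(competitor_prices) - undercut, 2)
    return max(floor, target)


# Competitors leave room to undercut: price just below the cheapest one.
print(suggest_price(10.00, [14.99, 13.49, 15.25]))  # 13.48

# The cheapest competitor is below our floor: hold the floor price.
print(suggest_price(10.00, [10.50]))  # 11.0
```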

Ethical Considerations

While the Amazon Price Scraper offers numerous benefits for businesses, it's essential to address ethical considerations associated with web scraping:

Respect Terms of Service: Businesses must adhere to Amazon's terms of service and usage policies when scraping data from its website. Violating these terms can lead to legal repercussions and damage to reputation.

Data Privacy: It's crucial to respect the privacy of Amazon's users and ensure that scraped data is used responsibly and ethically. Personal information should be handled with care and in compliance with data protection regulations.

Fair Competition: While competitive analysis is a legitimate use case for web scraping, businesses should refrain from engaging in anti-competitive practices or data theft. Fair competition benefits both businesses and consumers in the long run.
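One practical habit that supports these considerations is checking a site's robots.txt before fetching a page. Note that robots.txt is not the same thing as a site's terms of service, which still need to be read separately; it is only one machine-readable signal. The sketch below uses Python's standard urllib.robotparser on a made-up rule set, so the rules, the bot name, and the URLs are assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would fetch the site's own file.
SAMPLE_ROBOTS = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 5",
]

USER_AGENT = "example-price-bot"  # hypothetical bot name

rules = RobotFileParser()
rules.parse(SAMPLE_ROBOTS)

# Pages under /private/ are disallowed for every user agent.
print(rules.can_fetch(USER_AGENT, "https://example.com/private/report"))
# Other paths are allowed.
print(rules.can_fetch(USER_AGENT, "https://example.com/products/123"))
# Requested delay between requests, in seconds (None if not specified).
print(rules.crawl_delay(USER_AGENT))
```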

Conclusion

The Amazon Price Scraper is a powerful tool that empowers businesses to gain valuable insights from Amazon's vast e-commerce ecosystem. By leveraging scraped pricing data, businesses can make informed decisions, stay competitive, and drive growth in today's dynamic e-commerce landscape. However, it's essential to use web scraping tools responsibly, respecting ethical considerations and legal boundaries, to ensure a fair and sustainable marketplace for all stakeholders.


E-Commerce Data Scraping Trends: Insights for 2025–2030

Introduction

In the ever-evolving landscape of e-commerce, staying ahead of the competition requires businesses to constantly analyze data, track trends, and leverage the latest technologies. One of the most effective ways to gain a competitive edge in e-commerce is through E-Commerce Data Scraping, a practice that allows companies to collect and analyze vast amounts of online data. In this blog, we will explore the E-Commerce Data Scraping Trends that are expected to shape the industry from 2025 to 2030. We will also dive into data analytics trends, e-commerce API trends for 2025, the role of predictive analytics, and the importance of data analytics services.

What is E-Commerce Data Scraping?

E-commerce is an industry that thrives on data. Businesses use customer information, market trends, and competitor data to optimize their strategies, improve customer experiences, and maximize sales. E-Commerce Data Scraping is the process of extracting data from online stores, competitor websites, and other digital platforms. This data is then used to analyze product pricing, customer behavior, inventory levels, and much more. The data can be gathered through web scraping services or an E-Commerce Data Scraping API.

By 2025, the global web scraping market is projected to grow significantly, with estimates showing a compound annual growth rate (CAGR) of over 25%. As businesses look to extract valuable insights from large datasets, understanding the key trends in data analytics services will be crucial to staying ahead of the curve.
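For readers unfamiliar with CAGR, it describes steady compound growth: a value growing at rate r for n years becomes value × (1 + r)^n. The short sketch below shows the arithmetic in both directions. The starting figure of 100 is an arbitrary illustrative number, not a market-size estimate from this article.

```python
def project(value, rate, years):
    """Value after compounding at `rate` per year for `years` years."""
    return value * (1 + rate) ** years


def cagr(start, end, years):
    """Compound annual growth rate implied by a start and end value."""
    return (end / start) ** (1 / years) - 1


# An arbitrary base of 100 growing at 25% per year for three years.
print(project(100, 0.25, 3))  # 195.3125

# Recovering the growth rate from the start and end values (about 0.25).
print(cagr(100, 195.3125, 3))
```

In other words, a 25% CAGR nearly doubles a figure in three years.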

Key E-Commerce Data Scraping Trends

The e-commerce data scraping landscape is constantly changing, driven by new technologies and consumer demands. Let’s take a look at the key trends in E-Commerce Data Scraping that businesses should pay attention to in the coming years.

Automation of Data Scraping

Automation is one of the most significant trends in E-Commerce Data Scraping. As businesses look for ways to efficiently collect large amounts of data, automated tools and systems have become indispensable. Automated scraping tools are designed to gather data from multiple sources without the need for manual intervention, making the process faster, more reliable, and cost-effective.

Statistical Insight: By 2025, over 70% of businesses involved in e-commerce will utilize automated data scraping tools to gather real-time insights.

| Year | % of Businesses |
|------|-----------------|
| 2025 | 70% |
| 2026 | 75% |
| 2027 | 80% |
| 2028 | 85% |

The growing adoption of AI and machine learning technologies is fueling the rise of automated scraping solutions. These tools are capable of learning from patterns and improving their scraping techniques over time, which increases data accuracy and reduces the risk of errors.

Real-Time Data Scraping

In the fast-paced world of e-commerce, businesses need data that reflects the current market environment. Real-time data scraping allows businesses to continuously monitor competitor pricing, stock levels, and promotional offers, ensuring they remain competitive.

Statistical Insight: By 2026, real-time data scraping in e-commerce will account for 40% of all data scraping operations.

| Year | % of Real-Time Data Scraping |
|------|------------------------------|
| 2025 | 35% |
| 2026 | 40% |
| 2027 | 45% |
| 2028 | 50% |

Real-time data scraping also plays a crucial role in optimizing supply chain management. By monitoring product availability in real-time, businesses can adjust their inventories accordingly, preventing overstocking or understocking, and improving their overall efficiency.
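At its core, continuous monitoring means comparing the latest scrape with the previous one and reacting to what changed. The following sketch shows that comparison step only. The snapshot shape (a dictionary keyed by SKU with price and in_stock fields) is an assumption made for illustration.

```python
def diff_snapshots(previous, current):
    """Return (sku, field, old_value, new_value) tuples for every change."""
    changes = []
    for sku, new in current.items():
        old = previous.get(sku)
        if old is None:
            changes.append((sku, "new listing", None, new))
            continue
        for field in ("price", "in_stock"):
            if old[field] != new[field]:
                changes.append((sku, field, old[field], new[field]))
    return changes


# Two hypothetical snapshots taken a few minutes apart.
earlier = {
    "SKU-1": {"price": 19.99, "in_stock": True},
    "SKU-2": {"price": 5.49, "in_stock": True},
}
later = {
    "SKU-1": {"price": 17.99, "in_stock": True},   # price drop
    "SKU-2": {"price": 5.49, "in_stock": False},   # sold out
}

for change in diff_snapshots(earlier, later):
    print(change)
```

Each reported change could then trigger a repricing or restocking decision.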

Cross-Platform Scraping

E-commerce platforms are no longer confined to a single website. Retailers often sell products through multiple channels, including social media, online marketplaces, and mobile apps. As such, cross-platform scraping is becoming a vital trend in data collection.

This type of scraping enables businesses to gather data from a variety of platforms, providing a comprehensive view of their competitors and market trends. Cross-platform scraping tools are designed to work across different e-commerce platforms and websites, making them more versatile and efficient.
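A recurring task in cross-platform scraping is mapping each platform's field names onto one shared schema so records can be compared. Here is a minimal sketch of that idea. The platform names (marketplace_a, shop_b) and their field names are invented for illustration and do not describe any real site.

```python
# Hypothetical per-platform field names mapped to one common schema.
FIELD_MAPS = {
    "marketplace_a": {"item_name": "title", "cost": "price", "stars": "rating"},
    "shop_b": {"name": "title", "price_usd": "price", "avg_review": "rating"},
}


def normalize(platform, raw):
    """Rename a platform-specific record's fields to the common schema."""
    mapping = FIELD_MAPS[platform]
    record = {common: raw[source] for source, common in mapping.items() if source in raw}
    record["platform"] = platform
    return record


a = normalize("marketplace_a", {"item_name": "Desk Lamp", "cost": 25.0, "stars": 4.4})
b = normalize("shop_b", {"name": "Desk Lamp", "price_usd": 24.5, "avg_review": 4.2})

# Both records now share the same keys and can be compared directly.
print(a)
print(b)
```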

Statistical Insight: By 2027, cross-platform scraping will represent 35% of the total e-commerce data scraping efforts.

| Year | % of Total E-Commerce Data Scraping |
|------|-------------------------------------|
| 2025 | 28% |
| 2026 | 30% |
| 2027 | 35% |
| 2028 | 38% |

Advanced Web Scraping Techniques

As websites become more complex and dynamic, traditional scraping methods are no longer sufficient. Advanced techniques like browser automation, CAPTCHA solving, and headless scraping are becoming increasingly popular.

These advanced scraping methods allow businesses to bypass obstacles such as JavaScript rendering, dynamic content, and CAPTCHA systems, ensuring they can extract data from even the most sophisticated websites.

Statistical Insight: Year-over-year growth in the use of advanced web scraping techniques is expected to rise from 35% in 2025 to 42% in 2028.

| Year | % of Growth |
|------|-------------|
| 2025 | 35% |
| 2026 | 38% |
| 2027 | 40% |
| 2028 | 42% |

E-Commerce Data Scraping and Data Analytics Trends

As e-commerce businesses collect large volumes of data, the next step is to extract meaningful insights from that data. Data analytics trends are key to understanding how to make the most of the data you collect. Let’s explore some of the key trends in data analytics and how they intersect with e-commerce data scraping.

The Role of Predictive Analytics in E-Commerce

Predictive analytics is one of the most powerful tools available to e-commerce businesses today. By analyzing historical data, predictive analytics tools can forecast future trends, such as customer buying behavior, demand for certain products, and inventory levels. This allows businesses to make data-driven decisions and plan for future demand.

Statistical Insight: By 2028, predictive analytics will drive 50% of all e-commerce business decisions.

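| Year | % of E-Commerce Decisions Driven by Predictive Analytics |
|------|----------------------------------------------------------|
| 2025 | 42% |
| 2026 | 45% |
| 2027 | 47% |
| 2028 | 50% |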

Predictive analytics is powered by machine learning algorithms, which are trained on large datasets to make predictions. E-commerce businesses can use this technology to optimize pricing strategies, personalize marketing campaigns, and improve customer retention.
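To give a feel for forecasting from historical data, the sketch below fits a straight trend line to past sales with ordinary least squares and extends it one period ahead. This is a deliberately simple stand-in, not the machine learning models described above, and the weekly sales figures are made up for illustration.

```python
def fit_line(ys):
    """Least-squares slope and intercept for values observed at x = 0, 1, 2, ..."""
    n = len(ys)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(ys, steps_ahead=1):
    """Extend the fitted trend line `steps_ahead` periods past the last observation."""
    slope, intercept = fit_line(ys)
    return intercept + slope * (len(ys) - 1 + steps_ahead)


# Hypothetical units sold over four consecutive weeks.
weekly_units = [100, 110, 120, 130]

print(forecast(weekly_units))  # 140.0
```

A straight line ignores seasonality and promotions, which is exactly where trained models add value.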

Integration of AI and Machine Learning in Data Analytics

AI and machine learning are transforming data analytics in e-commerce. These technologies allow businesses to analyze massive amounts of data quickly and accurately. By integrating AI into data scraping tools, e-commerce companies can identify patterns, trends, and insights that were previously difficult to uncover.

Statistical Insight: By 2028, AI and machine learning will account for 60% of all data analytics services in e-commerce.

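| Year | % of E-Commerce Data Analytics Driven by AI and Machine Learning |
|------|------------------------------------------------------------------|
| 2025 | 52% |
| 2026 | 55% |
| 2027 | 58% |
| 2028 | 60% |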

AI-powered analytics platforms can help businesses detect fraud, forecast customer behavior, and personalize user experiences based on browsing and purchasing history. As these technologies evolve, their impact on e-commerce will only continue to grow.

E-Commerce API Trends 2025

As businesses continue to rely on data scraping and analytics, E-Commerce APIs are becoming more critical. APIs allow businesses to integrate their e-commerce platforms with external data sources, enabling seamless data exchange.

Increased Adoption of E-Commerce Data Scraping API

The adoption of E-Commerce Data Scraping APIs is expected to increase dramatically in 2025 and beyond. These APIs provide businesses with a streamlined way to access and collect data from websites and platforms, without the need for complex coding or scraping infrastructure.

Statistical Insight: By 2025, the market for e-commerce APIs will reach $10 billion, with a 20% annual growth rate.

| Year | Market Size of E-Commerce APIs (in billion USD) |
|------|-------------------------------------------------|
| 2025 | 12 |
| 2026 | 14 |
| 2027 | 16 |
| 2028 | 18 |

E-commerce companies can use E-Commerce Data Scraping APIs to collect information such as product prices, availability, reviews, and ratings. This data is then used to optimize pricing strategies, marketing campaigns, and product assortments.

Security and Compliance in API Usage

As data scraping becomes more prevalent, businesses must ensure they comply with regulations and maintain secure data practices. In 2025, there will be a significant push towards more secure and compliant APIs in the e-commerce space.

Statistical Insight: By 2025, over 65% of e-commerce businesses will prioritize API security and compliance with data privacy regulations.

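| Year | % of Businesses Prioritizing API Security and Compliance |
|------|----------------------------------------------------------|
| 2025 | 65% |
| 2026 | 70% |
| 2027 | 75% |
| 2028 | 80% |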

APIs that adhere to industry standards and regulations will be essential for businesses to ensure data protection and avoid legal issues.

How Can Real Data API Help?

At the heart of these trends is Real Data API, a robust platform designed to provide businesses with accurate and real-time e-commerce data. By utilizing the E-Commerce Data Scraping API from Real Data API, businesses can streamline their data collection processes, improve decision-making, and enhance their marketing strategies. Whether you are looking for product pricing, competitor data, or customer insights, Real Data API can help you access the data you need to stay competitive in the marketplace.

Real Data API allows businesses to automate the data collection process, integrate it seamlessly into their platforms, and use powerful analytics tools to derive meaningful insights. With its focus on data accuracy, security, and compliance, Real Data API ensures that your business stays ahead in the data-driven world of e-commerce.

Future Outlook: 2025-2030

The future of E-Commerce Data Scraping looks promising, with continuous advancements in technology and data analytics. From 2025 to 2030, we can expect to see:

A significant increase in the use of AI and machine learning for data scraping and analytics.

Further automation of e-commerce data scraping processes.

A shift towards more sophisticated, cross-platform scraping tools.

Increased investment in secure and compliant E-Commerce APIs.

Businesses that embrace these trends will be better equipped to navigate the rapidly changing e-commerce landscape and stay ahead of the competition.

Conclusion

The future of e-commerce is closely tied to data, and E-Commerce Data Scraping Trends will continue to play a pivotal role in shaping the industry. As we move into 2025 and beyond, businesses must embrace the latest data analytics trends, e-commerce API trends, and advancements in predictive analytics to stay competitive.

With the rise of automation, real-time scraping, and AI-powered analytics, the e-commerce landscape will continue to evolve. To stay ahead, businesses must leverage powerful tools like Real Data API, which can provide accurate, real-time data that drives growth and success.

If you're ready to take your e-commerce business to the next level with real-time data and insights, reach out to Real Data API today! Start leveraging powerful data analytics services and stay ahead of the competition.

Don’t miss out on the latest e-commerce data trends. Get started with Real Data API now and unlock the power of real-time data for your business!

Source: https://realdataapi.medium.com/e-commerce-data-scraping-trends-insights-for-2025-2030-7db479c305be

#E-Commerce API Trends 2025#AI and Machine Learning#Automation of Data Scraping#E-Commerce Data Scraping


Scrape eCommerce product reviews and pricing to gain valuable insights into customer preferences and market trends. Extract detailed product ratings, customer feedback, and pricing data using advanced tools. Leverage eCommerce product reviews scraping and pricing scraping to optimize marketing strategies, track competitors, and improve decision-making for enhanced business growth.

#Scrape eCommerce product reviews and pricing#pricing scraping tools#Scrape Product#Scrape Pricing#Reviews Data From E-commerce


E-Commerce Data Scraping Guide for 2024


How Residential Proxies Can Streamline Your Development Workflow

Residential proxies are becoming an essential tool for developers, particularly those working on testing, data scraping, and managing multiple accounts. In this article, we'll explore how residential proxies can significantly enhance your development processes.

1. Testing Web Applications and APIs from Different Geolocations

A critical aspect of developing international web services is testing how they perform across various regions. Residential proxies allow developers to easily simulate requests from different IP addresses around the world. This capability helps you test content accessibility, manage regional restrictions, and evaluate page load speeds for users in different locations.

2. Bypassing CAPTCHAs and Rate Limits

Many websites and APIs impose rate limits on the number of requests coming from a single IP address to mitigate bot activity. However, these restrictions can also hinder legitimate testing and data collection. Residential proxies provide access to multiple unique IPs that appear as regular users, making it easier to bypass CAPTCHAs and rate limits. This is especially useful for scraping data or conducting complex tests without getting blocked.

3. Boosting Speed and Stability

While many developers use VPNs to simulate requests from different countries, VPN services often fall short in terms of speed and reliability. Residential proxies offer access to more stable and faster IP addresses, as they are not tied to commonly used data centers. This can significantly speed up testing and development, improving your workflow.

4. Data Scraping Without Blocks

When scraping data from numerous sources, residential proxies are invaluable. They allow you to avoid bans on popular websites, stay off blacklists, and reduce the chances of your traffic being flagged as automated. With the help of residential proxies, you can safely collect data while ensuring your IP addresses remain unique and indistinguishable from those of real users.
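For developers who want to see what rotating through a proxy pool looks like in code, here is a minimal sketch using only Python's standard library. The proxy addresses are placeholders (a real provider supplies its own hosts and credentials), the helper name opener_cycle is hypothetical, and no request is actually sent. Any real use should stay within the target site's policies.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints for illustration; these hosts do not exist.
PROXIES = [
    "http://proxy-1.example.com:8000",
    "http://proxy-2.example.com:8000",
    "http://proxy-3.example.com:8000",
]


def opener_cycle(proxies):
    """Yield (proxy, opener) pairs, cycling through the pool in round-robin order."""
    for proxy in itertools.cycle(proxies):
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        yield proxy, urllib.request.build_opener(handler)


rotation = opener_cycle(PROXIES)

# Each call hands back the next proxy and an opener configured to use it.
for _ in range(4):
    proxy, opener = next(rotation)
    print(proxy)
    # opener.open(url, timeout=10) would send the request through `proxy`.
```

Round-robin is the simplest policy. A production setup would also drop proxies that fail and back off when a site signals it is being overloaded.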

5. Managing Multiple Accounts

For projects involving the management of multiple accounts (such as testing functionalities on social media platforms or e-commerce sites), residential proxies provide a secure way to use different accounts without risking bans. Since each proxy offers a unique IP address, the likelihood of accounts being flagged or blocked is significantly reduced.

6. Maintaining Ethical Standards

While proxies can enhance development, it's essential to adhere to ethical and legal guidelines. Whether you're involved in testing or scraping, always respect the laws and policies of the websites you interact with.

Residential proxies are much more than just a tool for scraping or bypassing blocks. They are a powerful resource that can simplify development, improve process stability, and provide the flexibility needed to work with various online services. If you're not already incorporating residential proxies into your workflow, now might be the perfect time to give them a try.

#proxy service#parsing#data scraping#proxy#proxysolutions#e-commerce development#e-commerce optimization


Benefits of Digital Shelf Analytics for Online Retailers

Boost your Ecommerce strategy with Digital Shelf Analytics. Optimize product visibility, analyze competitors, and stay competitive in the online Retail Industry.

#ecommerce data scraping service#data scraping#e-commerce web scraping tool#ecommerce web scraping tool#DigitalShelfAnalyticsServices


Legality of E-Commerce Website Scraping | A Comprehensive Overview

Introduction

In today's e-commerce-driven environment, e-commerce data scraping is a pivotal tool. At Actowiz Solutions, our expertise lies in providing top-tier web scraping services that respect the legal frameworks governing e-commerce websites. As e-commerce data collection becomes crucial for market leadership, questions about the legality of scraping e-commerce websites are commonplace.

For a broader understanding of the legal landscape, our earlier blog titled "Is Web Scraping Legal?" offers insights into the overarching legalities of web scraping. E-commerce data scraping, while integral to data-driven strategies, treads a delicate line regarding legality. Utilizing automated scripts for e-commerce data collection can often be perceived as navigating a grey area, requiring precision to avoid potential infringements.

This comprehensive guide seeks to demystify the intricacies of scraping ecommerce websites. We'll delve deep into the e-commerce data scraping dynamics, highlighting the nuances that separate legitimate e-commerce data collection from legal complications. Our mission is to empower e-commerce stakeholders with the expertise needed to efficiently and ethically leverage web scraping services, ensuring alignment with legal e-commerce data scraping parameters.

As staunch advocates for responsible web scraping, Actowiz Solutions prioritizes sharing vital information about the legal landscape of e-commerce websites. We'll explain the legal dimensions of e-commerce data scraping and offer actionable strategies to safeguard your operations and maintain compliance with e-commerce data collection regulations.

Understanding the Legal Implications of E-Commerce Website Scraping: Significance for Your Business Strategy

Navigating the legal landscape of e-commerce data collection through web scraping is intricate. For businesses outside the realm of giants like Google or Apple, the penalties for non-compliance can be financially crippling. While the legal parameters for e-commerce websites seem straightforward, data scraping is a double-edged sword. It grants access to invaluable market insights, competitor pricing trends, and consumer behavior patterns. However, it simultaneously poses challenges concerning data privacy, intellectual property rights, and potential data misuse.

The emergence of stringent data protection regulations, especially the General Data Protection Regulation (GDPR) in the European Union, underscores the significance of e-commerce data collection compliance. Such regulations emphasize responsible practices like data minimization, transparency, and obtaining user consent. Overlooking these can lead to hefty fines and tarnished reputations.

For ethical and legal e-commerce data collection, it's imperative to respect the terms of service stipulated by website owners. This encompasses ensuring that the extracted data serves legitimate objectives and implementing robust security protocols to safeguard sensitive information. While web scraping tools bolster data acquisition efforts, their deployment must align with compliance guidelines. Organizations must meticulously assess the legal landscape and ethical considerations of their data scraping initiatives to sidestep potential legal pitfalls.

Understanding Copyright Implications in Web Scraping and Data Collection

Understanding copyright laws and their implications is paramount when venturing into web scraping endeavors. Copyright infringement, characterized by the unauthorized use of protected material, can lead to significant penalties, litigation, and harm to one's reputation. Before utilizing scraped data, it's imperative to ascertain any potential copyright restrictions, seeking legal advice if uncertain. A notable cautionary tale involves a $400 freelance scraping project culminating in a $200K settlement due to oversight in data usage precautions.

The concept of fair use is central to copyright law, permitting limited and transformative use of copyrighted content without violating the owner's rights. While fair use fosters information dissemination and spurs innovation, its parameters are nuanced and demand meticulous evaluation.

For lawful web scraping, tools and practices should uphold ethical standards. This encompasses securing explicit consent from copyright holders when required, honoring privacy regulations, and abstaining from gathering sensitive personal information. Furthermore, aligning with Creative Commons licenses, which facilitate the legal sharing and reuse of copyrighted works, can mitigate infringement risks.

A holistic comprehension of copyright regulations, adherence to fair use tenets, and recognition of human rights equips web scrapers to operate responsibly in the digital sphere. Harmonizing web scraping initiatives with regulations like the Digital Millennium Copyright Act and its stipulations is crucial to ensure innovation and copyright preservation.

Web Scraping Challenges: Extracting Data from Behind Login Screens and Handling Private Information

When the data you seek is tucked behind a login barrier, understanding the nuances of web scraping legality, especially concerning e-commerce websites, becomes paramount. Grasping the legal and ethical implications of extracting restricted, non-public information is essential. Such data, shielded by user credentials or access restrictions, demands careful handling and authorization before scraping.

Distinguishing between public and non-public data is foundational. Public data, openly accessible to website visitors, generally permits lawful scraping. Conversely, delving into non-public realms—like user profiles or confidential sales metrics behind login barriers—requires meticulous adherence to legal protocols. Unauthorized scraping in these areas breaches website terms and infringes upon privacy regulations.

Collaborating with the website's owner is indispensable for accessing such restricted data. Some platforms offer APIs, facilitating legitimate and structured data retrieval. Services like Actowiz Solutions further streamline this process, ensuring data extraction aligns with ethical standards and doesn't strain the website's infrastructure.

In essence, while mining behind login screens can unveil valuable insights, it's imperative to prioritize legal compliance. Always secure explicit consent from website proprietors before embarking on such scraping endeavors.

Unauthorized Use of Personal Property: Understanding Trespass to Chattel

Trespass to chattels is a pivotal legal recourse in the United States, safeguarding personal property from unauthorized exploitation. This doctrine can become particularly relevant within the realm of e-commerce data scraping. For instance, e-commerce giants like Amazon host an extensive array of products and services. With over 350 million items listed on Amazon's Marketplace, the appeal to data scientists of collecting such comprehensive e-commerce data is evident.

However, there's a caveat: indiscriminate scraping of vast e-commerce inventories, such as Amazon's, within compressed timelines can impose undue strain on servers. This could disrupt website operations and functionality. While the U.S. lacks explicit legal crawl rate constraints, the legal framework does not condone actions causing server damage.

Trespass to chattels is an intentional tort: a claim requires intentional interference with the property and a causal link between the scraper's actions and server impairment. Should a scraper inundate Amazon's servers, leading to operational disruptions, they could face legal repercussions under trespass to chattels. Although trespass to chattels is itself a civil claim, conduct that damages servers is often likened to severe cyber offenses, and in some jurisdictions criminal penalties for such transgressions can escalate to 15 years imprisonment.

While e-commerce websites offer a wealth of data for e-commerce data scraping endeavors, scrupulous adherence to legal parameters remains paramount to avoid potential legal entanglements.

Navigating E-Commerce Data Extraction: Decoding the Computer Fraud and Abuse Act (CFAA)

The Computer Fraud and Abuse Act (CFAA) is a pivotal federal statute prohibiting unauthorized computer system access. Within the e-commerce data scraping landscape, this law has been invoked to challenge unsanctioned data extraction from websites. However, evolving legal interpretations suggest that scraping publicly available e-commerce data may not inherently breach the CFAA.

HiQ Labs, Inc. v. LinkedIn Corporation is a landmark case that underscores this debate. LinkedIn contested HiQ Labs' data collection activities, alleging unauthorized scraping of its publicly accessible web content. HiQ Labs contended that its actions were CFAA-compliant, emphasizing that the data it harvested was public and not protected by barriers like passwords.

The pivotal moment came when the U.S. Court of Appeals for the Ninth Circuit sided with HiQ Labs. The court opined that the CFAA was not designed to police the aggregation of publicly accessible data. Crucially, the court underscored the CFAA's impartiality: it doesn't differentiate between manual browser access and automated data scraping tools.

This precedent-setting judgment reshapes the legal landscape for e-commerce websites. While it suggests a potential green light for scraping publicly accessible e-commerce data, it's imperative to recognize that its influence remains limited. As the e-commerce data collection domain continues to evolve, vigilance regarding subsequent judicial interpretations of the CFAA's applicability to e-commerce scraping practices remains crucial.

Navigating E-Commerce Web Scraping: Essential Compliance Practices

Within the bustling e-commerce landscape, web scraping is an invaluable asset, facilitating the extraction of pivotal data that illuminates market dynamics, competitor maneuvers, and consumer preferences. Yet, this potent tool demands meticulous handling. Adherence to best practices becomes paramount to harness its potential without stumbling into legal or ethical pitfalls.

Embrace Official APIs

Where feasible, tap into the designated Application Programming Interface (API) of e-commerce websites for data extraction. APIs offer a structured, authorized route for e-commerce data scraping, ensuring alignment with the platform's terms of service. This not only respects the legal guidelines of e-commerce websites but also minimizes the risks associated with unsanctioned scraping.

Adopt Sensible Crawl Rates

Maintaining a reasonable pace in your e-commerce data scraping endeavors is crucial. By moderating the frequency of your scraping requests, you safeguard the targeted website's server from undue strain. Such responsible scraping practices align with e-commerce websites' legal stipulations and preserve the platform's overall performance and integrity.
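One simple way to enforce a sensible crawl rate is a throttle that guarantees a minimum gap between consecutive requests. The sketch below is one possible design, with the class name Throttle and the injectable clock and sleep parameters being our own illustrative choices. The right interval depends on the site and is not something this article specifies.

```python
import time


class Throttle:
    """Ensures at least `min_interval` seconds pass between consecutive calls."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock    # injectable so the logic can be tested without waiting
        self._sleep = sleep
        self._last = None      # time of the previous request, if any

    def wait(self):
        """Block until the next request is allowed; return the seconds slept."""
        waited = 0.0
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last = self._clock()
        return waited


# Usage: call wait() before every request. A tiny interval keeps the demo fast.
throttle = Throttle(min_interval=0.01)
for url in ["https://example.com/p/1", "https://example.com/p/2"]:
    throttle.wait()
    # fetch(url) would go here.
```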

Refine Web Crawling Tactics

Efficiently scraping e-commerce websites demands astute web crawling strategies. Typically, this involves navigating to product links and extracting pertinent data from Product Display Pages (PDPs). However, suboptimal scraping tools can inadvertently revisit the same links, leading to resource wastage. Implementing caching mechanisms for visited URLs during e-commerce data collection can mitigate these inefficiencies. Such measures ensure data scraping resilience: even if disruptions occur, the process can resume without redundant efforts.
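A visited-URL cache of the kind described above can be as small as a set that is saved to disk after each page, so an interrupted crawl can resume without refetching. This is a minimal sketch under our own assumptions: the class name VisitedCache and the JSON file format are illustrative, and a large crawl would likely use a database instead of rewriting one file.

```python
import json
import os
import tempfile


class VisitedCache:
    """Remembers visited URLs and persists them so a crawl can resume later."""

    def __init__(self, path):
        self.path = path
        self.seen = set()
        if os.path.exists(path):
            # Resume: load URLs recorded by an earlier run.
            with open(path, encoding="utf-8") as f:
                self.seen = set(json.load(f))

    def should_visit(self, url):
        return url not in self.seen

    def mark_visited(self, url):
        self.seen.add(url)
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump(sorted(self.seen), f)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "visited.json")

    cache = VisitedCache(path)
    cache.mark_visited("https://example.com/product/1")

    # Simulate a restart: a fresh cache object reads the saved state.
    resumed = VisitedCache(path)
    print(resumed.should_visit("https://example.com/product/1"))  # False
    print(resumed.should_visit("https://example.com/product/2"))  # True
```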

Implement Robust Anonymization Practices

In the realm of web scraping, safeguarding both personal privacy and legal standing is paramount. Effective anonymization stands as a cornerstone in achieving these dual objectives. By diversifying IP addresses, scrapers can diffuse their data extraction activities across varied origins, thwarting website owners' attempts to pinpoint request sources. This decentralized approach bolsters data collection efforts while diminishing potential legal entanglements.

Leveraging headless browsers presents another potent anonymization strategy. Mimicking human browsing behavior, these browsers allow scraping activities to blend seamlessly with typical user interactions, reducing the risk of detection and consequent legal challenges.

Further fortifying these measures, rotating User-Agent strings, introducing randomized request delays, and harnessing proxy servers amplify the anonymization robustness. However, it's pivotal to underscore that while these tactics significantly bolster protection, they aren't foolproof shields against potential litigation. E-commerce websites can deploy countermeasures to detect and bar scrapers, and legal landscapes regarding web scraping remain nuanced and jurisdiction-specific.

For web scrapers, staying abreast of the legal intricacies pertinent to their target e-commerce websites and adhering rigorously to established terms of service and laws is non-negotiable. By championing user privacy through advanced anonymization, scrapers not only mitigate legal risks but also uphold ethical data harvesting standards, cementing their credibility in the industry. At Actowiz Solutions, we're at the forefront of innovating such anonymization technologies.

Prioritize Relevant Data Extraction

When navigating the legalities of scraping e-commerce websites, precision is paramount. Instead of casting a wide net, hone in on extracting data directly pertinent to your project's goals. By adopting this focused approach, you not only sidestep superfluous data collection but also alleviate undue strain on the website. This strategic extraction ensures adherence to legal norms and optimizes the efficacy of your scraping endeavors. Always aim to extract only the data essential to your objectives while maintaining vigilance regarding the website's terms of service and relevant data scraping regulations.

Evaluate Copyright Concerns

Before initiating any web scraping initiative, meticulously review the terms of service and copyright guidelines of the targeted website. Consulting with legal professionals can provide clarity on appropriate and ethical usage. Always avoid scraping content protected by copyright unless explicit permission has been secured beforehand.

Limit Data Extraction to Public Domains

Ensuring the legal compliance of scraping e-commerce platforms hinges on extracting exclusively from publicly accessible data sources. Public data, in this context, pertains to information readily available on web pages without the need for specific permissions or credentials. This encompasses general product details like prices, descriptions, visuals, and customer feedback, along with overarching policies like shipping and returns.

Conversely, it's imperative to steer clear of scraping private or confined data not meant for public viewing. Such restricted content includes user-specific data, personal profiles, or any information barricaded behind login barriers or subscription fees. Unauthorized access to and scraping of this data can culminate in legal ramifications and breach privacy norms.

For clarity, consider a scenario where you're curating a price aggregation platform. Your focus would rightly be on harvesting public data, like listed product prices, ensuring your platform remains a legal and ethical conduit of information. In contrast, attempting to extract privileged or personalized insights, like user-specific purchase histories, would transgress boundaries, inviting potential legal challenges and ethical dilemmas.

Determine the Right Extraction Rate

When scraping data from e-commerce platforms, pinpointing the apt extraction frequency is pivotal. Take the instance of price monitoring from rival sites; striking the right balance in frequency is essential. Leveraging insights from over a decade in web scraping, we offer some guidance.

Our advice? Initiate with a weekly data pull, assessing the data's dynamism over several weeks. This observation phase lets you discern the fluctuation patterns, empowering you to fine-tune your extraction cadence.

Daily updates become indispensable in sectors like mobile devices or groceries, marked by swift price and availability shifts. This real-time data access equips you to navigate market volatility judiciously.

Conversely, elongating the refresh cycle to bi-weekly or monthly for segments like sewing machines, characterized by stable pricing and inventory updates, might suffice.

Adapting your extraction frequency to align with your target category's nuances enhances the efficacy of your data harvesting, ensuring timeliness without overwhelming the e-commerce site's infrastructure. It's imperative to remain attuned to data fluctuation rhythms, optimizing your scraping strategy for actionable e-commerce insights.
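The observation phase described above can be turned into a simple rule of thumb: measure how often a price changed between weekly pulls, then pick a cadence. The function names and the 0.5 and 0.1 thresholds below are illustrative assumptions, not figures from our experience data.

```python
def change_rate(snapshots):
    """Fraction of consecutive snapshot pairs in which the price changed."""
    if len(snapshots) < 2:
        return 0.0
    changes = sum(1 for prev, cur in zip(snapshots, snapshots[1:]) if prev != cur)
    return changes / (len(snapshots) - 1)


def suggest_cadence(snapshots, high=0.5, low=0.1):
    """Map the observed change rate to a refresh cadence (thresholds are assumptions)."""
    rate = change_rate(snapshots)
    if rate >= high:
        return "daily"    # volatile category, e.g. mobile devices or groceries
    if rate <= low:
        return "monthly"  # stable category, e.g. sewing machines
    return "weekly"
```

For example, a product whose weekly price reads `[10, 10, 10, 11, 11]` changed in one of four intervals, so this sketch would keep it on a weekly pull.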

Precision Over Volume in Web Scraping

In the realm of web scraping, exhaustive data collection isn't always the goal. Consider product reviews as an example: rather than capturing every review, a curated sample from each star rating can often serve the purpose.

Likewise, when aiming to understand search rankings across different keywords, delving into 3 or 4 pages might offer ample insights. Nonetheless, it's paramount to strategize before initiating the scraping process. A well-calibrated approach ensures that your data extraction is both precise and effective.
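To make the review example concrete, here is a minimal sketch of drawing a fixed-size sample from each star rating rather than keeping every review. The `stars` field name and the `sample_reviews` function are assumptions made for illustration.

```python
import random
from collections import defaultdict


def sample_reviews(reviews, per_rating, seed=0):
    """Return up to `per_rating` randomly chosen reviews for each star rating."""
    rng = random.Random(seed)  # fixed seed keeps the sample reproducible
    by_rating = defaultdict(list)
    for review in reviews:
        by_rating[review["stars"]].append(review)

    sample = []
    for stars in sorted(by_rating):
        group = by_rating[stars]
        k = min(per_rating, len(group))  # ratings with few reviews keep them all
        sample.extend(rng.sample(group, k))
    return sample
```

Sampling per rating keeps rare one-star or two-star reviews represented even when five-star reviews dominate the listing.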

Establish a Centralized Information Hub

Create a consolidated knowledge base to share web scraping information among team members. Whether a straightforward Google Sheet or a more comprehensive tool like Notion, having a centralized source ensures clarity and alignment within the team.

This knowledge base serves as a structured reservoir of insights, facilitating a unified understanding of the legalities and nuances of web scraping within the e-commerce domain. It's essential to encompass topics ranging from web scraping regulations, optimal data collection methodologies, and privacy implications to best practices and potential legal consequences.

Conclusion

In our extensive 12-year journey within the e-commerce data scraping sector, navigating intricate projects, a disturbing pattern emerges: a myopic focus on amassing data, often sidelining legality and compliance. This oversight is costly. It's imperative to align with an e-commerce data scraping service that harmoniously blends robust data delivery with unwavering adherence to e-commerce websites' legal and compliance parameters.

Are you searching for an e-commerce data collection expert committed to legal integrity?

For more details, contact us now! You can also reach us for all your mobile app scraping, instant data scraper and web scraping service requirements.

#e-commerce website Scraping#E-commerce data scraping#e-commerce data collection#E-Commerce Web Scraping#E-commerce data Extractor

0 notes

Text

Unveiling the Power of Retailscrape: Competitor Price Monitoring, Intelligent Pricing, and E-commerce Price Tracking

Experience the power of precise competitor price monitoring with Retail Scrape, designed to elevate your sales growth and boost profit margins.

Know more : https://medium.com/@parthspatel321/unveiling-the-power-of-retailscrape-competitor-price-monitoring-intelligent-pricing-and-dc624214d93a

#Competitorpricemonitoring#Intelligent Pricing#E Commerce Price Tracking#Scrape Ecommerce Data#Ecommerce Scraper

0 notes

Text

How to Effortlessly Scrape Product Listings from Rakuten?

Use simple steps to scrape product listings from Rakuten efficiently. Enhance your e-commerce business by accessing valuable data with web scraping techniques.

Know More : https://www.iwebdatascraping.com/effortlessly-scrape-product-listings-from-rakuten.php

#scraping Rakuten's product listings#Data Scraped from Rakuten#Scrape Product Listings From Rakuten#Rakuten data scraping services#Web Scraping Rakuten Website#Web scraping e-commerce sites

0 notes

Text

E-Commerce Data Scraping Services - E-Commerce Data Collection Services

We offer reliable e-commerce data scraping services for product data collection from websites in multiple countries, including the USA, UK, and UAE. Contact us for complete solutions.


#E-commerce data scraping#E-Commerce Data Collection Services#Scrape e-commerce product data#Web scraping retail product price data#scrape data from e-commerce websites

0 notes

Text

iWeb Scraping uses an Amazon price scraper to scrape pricing information and analyze organizational opinions.


0 notes

Text

Unveiling Growth Opportunities: E-Commerce Product Data Scraping Services

E-Commerce Product Data Scraping Services empower you to turn raw information into actionable insights, propelling your business toward growth and success.

#Scrape eCommerce Product Data#eCommerce Product Data Scraper#E-Commerce Product Data Scraping Services#E-Commerce Product Extractor

0 notes

Text

Unlock the Power of Data with Uniquesdata's Data Scraping Services!

In today’s data-driven world, timely and accurate information is key to gaining a competitive edge. 🌐

At Uniquesdata, our Data Scraping Services provide businesses with structured, real-time data extracted from various online sources. Whether you're looking to enhance your e-commerce insights, analyze competitors, or improve decision-making processes, we've got you covered!

💼 With expertise across industries such as e-commerce, finance, real estate, and more, our tailored solutions make data accessible and actionable.

📈 Let’s connect and explore how our data scraping services can drive value for your business.

#web scraping services#data scraping services#database scraping service#web scraping company#data scraping service#data scraping company#data scraping companies#web scraping companies#web scraping services usa#web data scraping services#web scraping services india

3 notes


Text

Why Should You Do Web Scraping with Python

Web scraping is a valuable skill for Python developers, offering numerous benefits and applications. Here’s why you should consider learning and using web scraping with Python:

1. Automate Data Collection

Web scraping allows you to automate the tedious task of manually collecting data from websites. This can save significant time and effort when dealing with large amounts of data.

2. Gain Access to Real-World Data

Most real-world data exists on websites, often in formats that are not readily available for analysis (e.g., displayed in tables or charts). Web scraping helps extract this data for use in projects like:

Data analysis

Machine learning models

Business intelligence

3. Competitive Edge in Business

Businesses often need to gather insights about:

Competitor pricing

Market trends

Customer reviews

Web scraping can help automate these tasks, providing timely and actionable insights.

4. Versatility and Scalability

Python’s ecosystem offers a range of tools and libraries that make web scraping highly adaptable:

BeautifulSoup: For simple HTML parsing.

Scrapy: For building scalable scraping solutions.

Selenium: For handling dynamic, JavaScript-rendered content.

This versatility allows you to scrape a wide variety of websites, from static pages to complex web applications.
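As a small illustration of the HTML parsing these libraries take care of, the sketch below pulls prices out of a product snippet. It deliberately uses Python's built-in `html.parser` as a stand-in so it runs without installing anything; BeautifulSoup would do the same job with less code. The `price` class name is a hypothetical example, and the parser assumes each price element contains only text.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text of every element whose class list includes 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True
            self.prices.append("")  # start a new price entry

    def handle_endtag(self, tag):
        # Assumes price elements hold plain text, so any closing tag ends them.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price:
            self.prices[-1] += data.strip()


def extract_prices(html):
    parser = PriceParser()
    parser.feed(html)
    return parser.prices
```

Calling `extract_prices` on the HTML of a listing page returns the price strings in document order, ready for cleaning and storage.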

5. Academic and Research Applications

Researchers can use web scraping to gather datasets from online sources, such as:

Social media platforms

News websites

Scientific publications

This facilitates research in areas like sentiment analysis, trend tracking, and bibliometric studies.

6. Enhance Your Python Skills

Learning web scraping deepens your understanding of Python and related concepts:

HTML and web structures

Data cleaning and processing

API integration

Error handling and debugging

These skills are transferable to other domains, such as data engineering and backend development.

7. Open Opportunities in Data Science

Many data science and machine learning projects require datasets that are not readily available in public repositories. Web scraping empowers you to create custom datasets tailored to specific problems.

8. Real-World Problem Solving

Web scraping enables you to solve real-world problems, such as:

Aggregating product prices for an e-commerce platform.

Monitoring stock market data in real-time.

Collecting job postings to analyze industry demand.

9. Low Barrier to Entry

Python's libraries make web scraping relatively easy to learn. Even beginners can quickly build effective scrapers, making it an excellent entry point into programming or data science.

10. Cost-Effective Data Gathering

Instead of purchasing expensive data services, web scraping allows you to gather the exact data you need at little to no cost, apart from the time and computational resources.

11. Creative Use Cases

Web scraping supports creative projects like:

Building a news aggregator.

Monitoring trends on social media.

Creating a chatbot with up-to-date information.

Caution

While web scraping offers many benefits, it’s essential to use it ethically and responsibly:

Respect websites' terms of service and robots.txt.

Avoid overloading servers with excessive requests.

Ensure compliance with data privacy laws like GDPR or CCPA.
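Checking robots.txt can be automated with Python's standard library. The sketch below parses a sample robots.txt held in a string; the rules, the `my-bot` user agent, and the `shop.example` URLs are made up for illustration. Against a real site you would point the parser at the site's robots.txt with `set_url()` and `read()` instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""


def build_parser(robots_text):
    """Create a robots.txt parser from text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# True: product pages are not disallowed for any user agent.
allowed_product = parser.can_fetch("my-bot", "https://shop.example/products/1")

# False: everything under /account/ is disallowed.
allowed_account = parser.can_fetch("my-bot", "https://shop.example/account/orders")

# 5: the number of seconds the site asks crawlers to wait between requests.
delay = parser.crawl_delay("my-bot")
```

A scraper can call `can_fetch` before every request and use `crawl_delay` as the lower bound for its request interval.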

If you'd like guidance on getting started or exploring specific use cases, let me know!

2 notes


Text

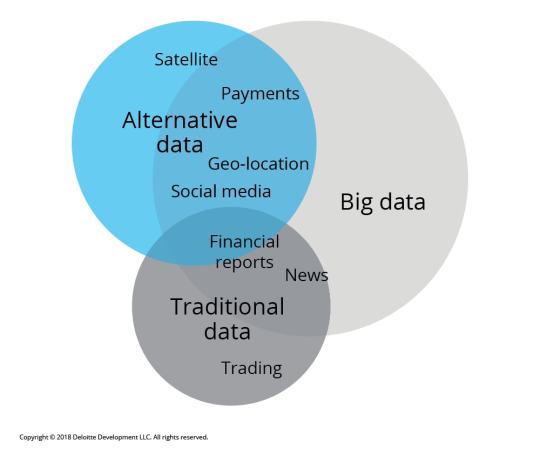

📊 Unlocking Trading Potential: The Power of Alternative Data 📊

In the fast-paced world of trading, traditional data sources—like financial statements and market reports—are no longer enough. Enter alternative data: a game-changing resource that can provide unique insights and an edge in the market. 🌐

What is Alternative Data?

Alternative data refers to non-traditional data sources that can inform trading decisions. These include:

Social Media Sentiment: Analyzing trends and sentiments on platforms like Twitter and Reddit can offer insights into public perception of stocks or market movements. 📈

Satellite Imagery: Observing traffic patterns in retail store parking lots can indicate sales performance before official reports are released. 🛰️

Web Scraping: Gathering data from e-commerce websites to track product availability and pricing trends can highlight shifts in consumer behavior. 🛒

Sensor Data: Utilizing IoT devices to track activity in real-time can give traders insights into manufacturing output and supply chain efficiency. 📡

How GPT Enhances Data Analysis

With tools like GPT, traders can sift through vast amounts of alternative data efficiently. Here’s how:

Natural Language Processing (NLP): GPT can analyze news articles, earnings calls, and social media posts to extract key insights and sentiment analysis. This allows traders to react swiftly to market changes.

Predictive Analytics: By training GPT on historical data and alternative data sources, traders can build models to forecast price movements and market trends. 📊

Automated Reporting: GPT can generate concise reports summarizing alternative data findings, saving traders time and enabling faster decision-making.

Why It Matters

Incorporating alternative data into trading strategies can lead to more informed decisions, improved risk management, and ultimately, better returns. As the market evolves, staying ahead of the curve with innovative data strategies is essential. 🚀

Join the Conversation!

What alternative data sources have you found most valuable in your trading strategy? Share your thoughts in the comments! 💬

#Trading #AlternativeData #GPT #Investing #Finance #DataAnalytics #MarketInsights

2 notes


Text

Must-Have Programmatic SEO Tools for Superior Rankings

Understanding Programmatic SEO

What is programmatic SEO?

Programmatic SEO uses automated tools and scripts to scale SEO efforts. Unlike traditional SEO, which depends on extensive manual effort, programmatic SEO draws on data and automation to develop content, optimize on-page SEO elements, and build links at scale. This is especially effective on large websites with thousands of pages, like e-commerce platforms, travel sites, and news portals.

The Power of SEO Automation

SEO automation saves time, especially when large volumes of content need optimization. Programmatic tools make it easier to analyze vast amounts of data, identify opportunities, and make changes quickly. This keeps you ahead in the competitive SEO game and helps drive more organic traffic to your site.

Top Programmatic SEO Tools

1. Screaming Frog SEO Spider

Screaming Frog is a multipurpose tool that crawls websites to identify SEO issues, from broken links and duplicate content to missing metadata and other on-page problems. It can reduce an audit from thousands of hours of manual work to hours of automated work.

Example: It helped an e-commerce giant fix over 10,000 broken links and increase their organic traffic by as much as 20%.

2. Ahrefs

Ahrefs is an all-in-one SEO tool that helps you understand your website performance, backlinks, and keyword research. The site audit shows technical SEO issues, whereas its keyword research and content explorer tools help one locate new content opportunities.

Example: A travel blog used Ahrefs to find high-potential keywords and update its existing content for them, growing search visibility by 30%.

3. SEMrush

SEMrush is another well-known, full-featured SEO tool covering keyword research, site audits, backlink analysis, and competitor analysis. Its position tracking and content optimization tools are very helpful in programmatic SEO.

Example: A news portal leveraged SEMrush to analyze competitor strategies, improve its content, and move up to the first page of rankings.

4. Google Data Studio

Google Data Studio lets users build interactive, professional-looking dashboards that visualize SEO data. It can integrate data from sources such as Google Analytics, Google Search Console, and third-party tools to track SEO performance in real time.

Example: Google Data Studio helped a retailer stay up-to-date on all of their SEO KPIs to drive data-driven decisions that led to a 25% organic traffic improvement.

5. Python

Python is a powerful general-purpose programming language that can automate almost any SEO task. You can write Python scripts to scrape data, analyze huge datasets, automate content optimization, and much more.

Example: A marketing agency used Python to automate thousands of product meta descriptions. This saved manual effort and improved search rankings.

The How for Programmatic SEO

Step 1: In-Depth Site Analysis

Before diving into programmatic SEO, conduct a full site audit. Tools like Screaming Frog, Ahrefs, and SEMrush can surface technical SEO issues, on-page optimization gaps, and backlink opportunities.

Step 2: Identify High-Impact Opportunities

Use the data collected to find the opportunities with the biggest payoff. Look for pages that could attract high traffic but underperform on their target keywords, and for content gaps that new or updated content can fill.

Step 3: Content Automation

This is one of the most vital parts of programmatic SEO. Scripts and tools, such as content-generation scripts written in Python, are handy for producing a large volume of high-quality content in a short time. Ensure the content is not duplicated, stays relevant, and is optimized for your target keywords.

Example: An e-commerce website generated unique product descriptions for thousands of its products with a Python script, gaining 15% more organic traffic.
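A script of the kind described in this example might look like the sketch below, which builds a meta description from product fields and trims it at a word boundary. The field names (`name`, `brand`, `summary`), the template, and the 155-character limit are assumptions for illustration, not the script the website actually used.

```python
def meta_description(product, max_len=155):
    """Build a meta description from product fields, truncated at a word boundary."""
    text = f"{product['name']} by {product['brand']}: {product['summary']}"
    text = " ".join(text.split())  # collapse stray whitespace and line breaks
    if len(text) <= max_len:
        return text
    # Leave room for the ellipsis, then drop the last (possibly partial) word.
    cut = text[: max_len - 1].rsplit(" ", 1)[0]
    return cut.rstrip(",.;:") + "…"
```

Because the text comes from each product's own fields, descriptions differ from page to page, which helps avoid the duplicate-content problem mentioned in Step 3.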

Step 4: Optimize on-page elements

Tools like Screaming Frog and Ahrefs can also be used to find gaps in on-page SEO elements, including meta titles, meta descriptions, headings, and image alt text. Apply these fixes as efficiently as possible.
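For a sense of what such an on-page check involves, here is a minimal sketch that flags a missing title tag, a missing meta description, and images without alt text. It uses Python's built-in `html.parser`; the `OnPageAudit` class and `audit` function are hypothetical and far simpler than what the commercial tools do.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    """Record whether key on-page SEO elements are present in a page."""

    def __init__(self):
        super().__init__()
        self.has_title = False             # a <title> tag is present
        self.has_meta_description = False  # a non-empty meta description is present
        self.images_missing_alt = []       # src values of images lacking alt text

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.has_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.has_meta_description = bool((attrs.get("content") or "").strip())
        elif tag == "img" and not (attrs.get("alt") or "").strip():
            self.images_missing_alt.append(attrs.get("src"))


def audit(html):
    """Return a list of human-readable on-page issues found in the HTML."""
    parser = OnPageAudit()
    parser.feed(html)
    issues = []
    if not parser.has_title:
        issues.append("missing <title>")
    if not parser.has_meta_description:
        issues.append("missing meta description")
    for src in parser.images_missing_alt:
        issues.append(f"image without alt text: {src}")
    return issues
```

Run over every page of a site, a check like this produces a worklist that the automation in the earlier steps can then address.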

Step 5: Build High-Quality Backlinks

Link building is one of the most vital components of SEO. Tools such as Ahrefs and SEMrush help identify backlink opportunities and automate outreach campaigns. Aim to acquire high-quality links from authoritative websites.

Example: A SaaS company automated its link-building outreach using SEMrush, landed some wonderful backlinks from industry-leading blogs, and considerably improved its domain authority.

Step 6: Monitor and Analyze Performance

Regularly track your SEO performance in Google Data Studio. Analyze the data on your programmatic efforts and use it to refine your strategy.

See Programmatic SEO in Action

50% Win in Organic Traffic for an E-Commerce Site

An e-commerce electronics website set up programmatic SEO for its product pages, using Python scripts to generate unique meta descriptions and Screaming Frog to fix technical issues. Within just six months, this drove a 50% rise in organic traffic.

A Travel Blog Boosts Search Visibility by 40%

A travel blog used Ahrefs and SEMrush to identify high-potential keywords and optimize its content. By automating content updates and link-building activities, it achieved a 40% increase in search visibility and more organic visitors.

User Engagement Improvement on a News Portal

A news portal used Google Data Studio to build real-time dashboards for monitoring its SEO performance. Insights from these dashboards helped it optimize its content strategy, leading to increased user engagement and organic traffic.

Challenges and Solutions in Programmatic SEO

Ensuring Content Quality

Quality may take a hit in the automated process of creating content. Therefore, ensure that your automated scripts can produce unique, high-quality, and relevant content. Make sure to review and fine-tune the content generation process periodically.

Handling Huge Amounts of Data

Dealing with huge amounts of data can become overwhelming. Use data visualization tools such as Google Data Studio to create dashboards that are interactive, easy to make sense of, and result in effective decision-making.

Keeping Current With Algorithm Changes

Search engine algorithms are always in a state of flux. Keep current on all the recent updates and calibrate your programmatic SEO strategies accordingly. Get ahead of the learning curve by following industry blogs, attending webinars, and taking part in SEO forums.

Future of Programmatic SEO

The future of programmatic SEO looks promising, as advances in artificial intelligence and machine learning take this space to new heights. AI-driven tools will allow more sophisticated automation of tasks, making it easier and faster for marketers to optimize sites.

AI-driven content creation tools can already produce highly relevant and engaging content at scale, multiplying the potential of programmatic SEO.

Conclusion

Programmatic SEO is the next step for any digital marketer willing to scale up efforts in the competitive online landscape. The right tools and techniques let you automate key SEO tasks and optimize your website for more organic traffic. Whether you run an e-commerce site, a travel blog, or a news portal, programmatic SEO can help you reach these goals more effectively and efficiently.

#Programmatic SEO#Programmatic SEO tools#SEO Tools#SEO Automation Tools#AI-Powered SEO Tools#Programmatic Content Generation#SEO Tool Integrations#AI SEO Solutions#Scalable SEO Tools#Content Automation Tools#best programmatic seo tools#programmatic seo tool#what is programmatic seo#how to do programmatic seo#seo programmatic#programmatic seo wordpress#programmatic seo guide#programmatic seo examples#learn programmatic seo#how does programmatic seo work#practical programmatic seo#programmatic seo ai

4 notes
