#Riemannian geometry

Text

Of course the Universe was full of these jokes and ironies, mostly born of the misapprehension, native to beings living serially in time, that time itself was serial. Naturally, it was not. Time was at least Riemannian, and tended to run both in circles and cycles: outward-reaching spirals that repeated previous tendencies and archetypes reminiscent of earlier ones, but the repetitions came in “bigger” forms, and with unexpected ramifications. Now time bit its own tail one more time, and in the process of that biting pulled off the old skin, revealing the new shiny skin and the bigger body underneath: more beautifully scaled and intricately patterned, more muscular, and, as usual, harder to understand.

— To Visit the Queen (Diane Duane)

#book quotes#science fiction#fantasy fiction#ya fiction#diane duane#feline wizards#to visit the queen#time#riemannian geometry#magic#wizardry#time travel

1 note

Text

It is good to see mathematicians indulge in humour in texts and monographs.

#mathblr#mathematics#humour#for those who are curious the book is riemannian holonomy groups and calibrated geometry#by dominic joyce#On G2 and Spin(7) manifolds

21 notes

Text

One frustrating thing about being a mathematician is that people who aren't into math heard that Einstein quote that's like "you haven't understood something unless you can explain it in layman's terms" and use it to mean "if it can't be explained to me in five minutes it's needlessly complicated, this person is a pretentious snob and academia is gatekeeping knowledge". And like everything in life, the matter of scientists not being able to/not caring to explain their work to people who aren't at the same level of expertise as them is a complex one that is worthy of being discussed, but here's the thing that you have to keep in mind if you haven't done math since high school:

the further you get into math, the more specialized your field becomes. You start working on puzzles that are small, but fit into a greater web of similar problems, like knitting a beautiful flower that's meant to be incorporated into a huge quilt. And all of math is built on top of itself, so you can't get to the most interesting, current math being discussed in the world without getting through the building blocks that are taught to you in elementary school, high school and the university.

You ask me to explain my thesis to you and I can tell you the title, but you won't know what the main words in it mean. And that's not because you are stupid, that's just because to learn that word you have to spend time learning a hundred others. I love math, it's my favourite thing in the universe and I always have time to talk about it, so if you want, we can sit down and I'll tell you everything you need to know to understand what I'm currently working on. You can ask me questions and I will reformulate, you can ask me to go over things again and I will oblige. With your permission, I will get a piece of paper and draw shapes and schemes to help us, but it won't take five minutes. It can't take just five minutes. That is a concession you will have to make if you truly want to learn.

(Unfortunately, I don't want to disclose the title of my thesis on tunglr dot hell, because it's super specific and I don't feel like doxxing myself. But I hope this resonates with some people. I work both in symplectic geometry and Riemannian geometry, and I have to say between the two Riemannian is a little bit easier to explain, because I can just talk about distances, but symplectic geometry or Lie groups... I'm afraid I just can't explain those in a sentence because they rely on people knowing what differentiation means, and that's not knowledge you necessarily retain if you work outside a stem field. Explaining that in a few sentences would eat up the whole five minutes).

728 notes

Text

california bans riemannian geometry because it is kinda white supremacist to put a metric on a manifold. florida bans topos theory because grothendieck was Woke

173 notes

Text

In the grand tradition of mathematicians using the word trivial in a way whose meaning is deeply ambiguous, I think there's a meaningful sense in which 3 dimensions is "the lowest nontrivial dimension". Now I hear you saying isn't that two dimensions (obviously 1D is trivial. No geometry) and look 2D is cool and all but it is not that rich geometrically. I think if we were 4D beings we would still think that 3D is the lowest nontrivial dimension. However we'd be so high on being able to do complex analysis intuitively we wouldn't even care about geometry. Fuck 4D beings. Also you could say we ARE 4D beings cuz time but if you consider us as living in a 4D manifold it's not even riemannian it's PSEUDO riemannian. Which if you're not a math person: the notion of "straight line" and "angle" is all fucked up. Very unlike Euclidean space. Don't wanna go there

112 notes

Text

My enrolment was completed for next year which means my modules have been confirmed! I will be taking

Algebraic Topology

Functional Analysis

Representation Theory

Riemannian Geometry

And I will also be taking the project module which is worth two normal modules (so it'll take up a third of my time). It'll amount to writing a dissertation. Project allocation isn't until September unfortunately but yeah :))

#my first project choice is about de Rham Cohomology and the second is about Lens Spaces#I had to pick 5 and rank them in order of preference and those are the ones I'm most excited about#maths posting#maths#mathematics#mathblr#math#lipshits posts

41 notes

Text

Dissertationposting 3 - The Torus

Remember that last time, by taking f=1 in Lemma 1, we showed that ∫R_Σ ≥ ∫R for a stable minimal hypersurface Σ in a manifold (M, g). In particular, if R > 0 on M, then ∫R_Σ > 0 too. But if M is 3-dimensional, then Gauss-Bonnet says that Σ must be a union of spheres! Combining this with the fact that we can find a stable minimal hypersurface in each homology class of T³, this shows that T³ cannot have a geometry with positive curvature! Let's introduce some notation to make this easier - we'll say a topological manifold is PSC if it admits a metric with R > 0, and non-PSC otherwise. Gauss-Bonnet says that the only closed PSC 2-manifolds are unions of spheres, and we've just shown that T³ is non-PSC.
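
To spell out the Gauss-Bonnet step, here is a short sketch under two assumptions that are not stated explicitly above: Σ is closed and orientable, and the positivity of the integral holds on each connected component Σ_i. On a surface the scalar curvature is twice the Gauss curvature K, so:

```latex
\int_{\Sigma_i} R_\Sigma \, dA
  = 2 \int_{\Sigma_i} K \, dA
  = 4\pi \, \chi(\Sigma_i)
  = 8\pi \, \bigl(1 - \operatorname{genus}(\Sigma_i)\bigr).
```

The right-hand side is positive only when the genus is 0, which is why each component has to be a sphere, and why a 2-torus (genus 1, total curvature 0) is ruled out.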

How could we make this work for higher dimensions? Well, we can still write each torus as Tⁿ×S¹, so an induction argument feels sensible. In particular:

1. T² is non-PSC by Gauss-Bonnet

2. By Lemma 2, every (Tⁿ, g) has a stable minimal T^{n-1}, obtained by taking the homology class of the meridian

3. Stable minimal hypersurfaces in positively curved spaces are PSC?

4. By induction and contradiction, Tⁿ is non-PSC for all n.

So, what do we have and what do we need to prove Statement 3?

We need to allow (Σ, g) to not have R > 0 even if Σ is PSC. The easiest idea here is to find a function to scale g (ie distances) by to get a new metric.

If we scale by φ^{4/(n-2)}, then the new curvature is φ^{-(n+2)/(n-2)} Lφ, [1] where L is the conformal Laplacian

which is a reasonably well known operator that sometimes has nicer behaviour than the regular Laplacian.

To use the full power of Lemma 1, we want another result relating the integral of |∇f|² to Vf² for some other function V.

As if by magic, functional analysis gives us exactly the result we need.
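
For reference, the scaling law in the list above can be written out under one common convention: Δ_g is the Laplace-Beltrami operator with Δ_g = div∘grad, and n ≥ 3. Sign and normalisation conventions for Δ and L vary between sources, so the signs used elsewhere in this post may follow a different convention. Treat this as a sketch, not as the definition used in the dissertation.

```latex
\tilde g = \varphi^{\frac{4}{n-2}}\, g
\quad\Longrightarrow\quad
R_{\tilde g} = \varphi^{-\frac{n+2}{n-2}}\, L\varphi,
\qquad
L\varphi = -\frac{4(n-1)}{n-2}\,\Delta_g \varphi + R_g\,\varphi .
```

With this convention, a positive function φ with Lφ > 0 everywhere produces a conformal metric of positive scalar curvature.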

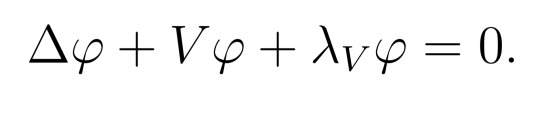

Lemma 3.

Let (M, g) be a compact n-manifold, possibly with boundary, and V a smooth function on M. Then the infimum

is attained by some function φ. Furthermore, φ > 0 on int(M), and

The proof [2] is pretty technical, but if you've done a course on Fourier analysis, the term "first eigenfunction" for φ might ring a bell. If you've done any undergrad course on ODEs, you can try thinking about how this relates to the normal existence theorem and maximum principle for the Laplacian (set V=0).

But that's all we need! Letting V = -(n-2)/4(n-1) R, Lemma 3 gives a function φ > 0 and constant λ with Lφ = -λφ; Lemma 1 and being careful with compactness gives that λ > 0; so scaling by φ^{4/(n-2)} does the job! It's worth recording that separately I think.

Proposition 4.

Let (M, g) be a closed manifold with R > 0. Then any closed stable minimal hypersurface is PSC.

Next time, we'll see how far we can push this method - in particular, it will turn out that we only actually care about the cohomology ring of M! I might even drop my first novel result, the classification of so-called SYS 3-manifolds.

[1] I'm not gonna do this for you, it's a direct calculation. I even gave you nice coefficients! I also think it's an exercise in Lee.

[2] This time, we pass to the Sobolev space H¹, where a sequence of functions approximating the infimum converges to a continuous function attaining the infimum. Showing it's an eigenfunction is fairly standard (vary φ, differentiate, divergence theorem), but the argument that it's smooth is cute. If Vφ + λφ is continuous, so is Δφ. But then φ is twice differentiable, so by induction smooth. Thierry Aubin's "Some nonlinear problems in Riemannian geometry" has all of the painful details, and a sketch is below.

8 notes

Text

why is riemannian geometry. like that.

96 notes

Text

((OOC: switching to plain text for readability and since providing a plain text translation of the entire thing would have been.... way too long.))

ITS ME DATA BOY AND I AM MAKING AN ASK GAME

THE THEME OF THIS ASK GAME IS: BRANCHES OF MATHEMATICS

DISCLAIMER: THIS DOES NOT INCLUDE ALL BRANCHES OF MATHEMATICS

NUMBER THEORY: WHAT IS YOUR FAVORITE SUBSET OF POKEMON. THIS CAN BE A TYPE (EXAMPLE: WATER POKEMON) OR SOME OTHER CATEGORY (EXAMPLES: LEGENDARY POKEMON, DOGGIE POKEMON)

DIFFERENTIAL GEOMETRY: WHAT KIND OF ART DO YOU LIKE TO MAKE

RIEMANNIAN GEOMETRY: HOW FAR AWAY ARE YOU PHYSICALLY FROM YOUR FRIENDS AND FAMILY. DO YOU LIVE NEARBY AND ALSO WHY OR WHY NOT

TOPOLOGY: WHAT ABOUT YOU HAS NEVER CHANGED EVEN AS YOU GOT OLDER AND WISER

COMPLEX GEOMETRY: HAVE YOU EVER SEEN SOMETHING THAT YOU COULD NOT EXPLAIN

GROUP THEORY: WHAT KIND OF PEOPLE DO YOU LIKE TO HANG OUT WITH

LIE ALGEBRA: DO YOU LIKE TO KEEP YOUR SPACE ORGANIZED OR DO YOU LIKE TO KEEP IT A COMPLETE MESS

BOOLEAN ALGEBRA: WHAT DO YOU BELIEVE TO ALWAYS BE TRUE

MULTIVARIATE CALCULUS: WHO IS YOUR CLOSEST FRIEND AND SAY SOMETHING VERY NICE ABOUT THEM

ORDINARY DIFFERENTIAL EQUATIONS: WHAT THINGS DO YOU LIKE TO DO WHEN YOU ARE ALONE

PARTIAL DIFFERENTIAL EQUATIONS: WHAT THINGS DO YOU LIKE TO DO WITH YOUR FRIENDS

INTEGRATION: WHAT IS THE GREATEST REALIZATION THAT YOU HAVE EVER HAD

GRAPH THEORY: DO YOU LIKE TO USE THE INTERNET TO TALK OR DO YOU LIKE TO STARE AT PEOPLE WHEN YOU TALK

GAME THEORY: DO YOU TAKE RISKS AND WHAT IS THE (BAD WORD INCOMING) STUPIDEST RISK YOU HAVE EVER TAKEN

PROBABILITY THEORY: DO YOU BELIEVE IN FREE WILL

REMEMBER TO BE NICE AND ALSO SEND AN ASK TO THE PERSON YOU REBLOGGED THIS FROM AND ALSO REMEMBER TO SAY THANK YOU TO DATA BOY (ME) FOR MAKING THIS

30 notes

Text

I'm actually kind of spooked by machine learning. Mostly in a good way.

I asked ChatGPT to translate the Christoffel symbols (mathematical structures from differential geometry used in general relativity to describe how metrics change under parallel transport, which I've been trying to grok) into Coq.

And the code it wrote wasn't correct.

But!

It unpacked the mathematical definitions, mapping them pretty faithfully into Coq in a few seconds, explaining what it was doing lucidly along the way.

It used a bunch of libraries for real analysis, algebra, topology that have maybe been read by a few dozen people combined at a couple of research labs in France and Washington state. It used these, sometimes combining code fragments in ways that didn't type check, but generally in a way that felt idiomatic.

Sometimes it would forget to open modules or use imported syntax. But it knew the syntax it wanted! It decided to Yoneda embed a Riemannian manifold as a kind of real-valued presheaf, which is a very promising strategy. It just...mysteriously forgot to make it real-valued.

Sometimes it would use brackets to index into vectors, which is never done in Coq. But it knew what it was trying to compute!

Sometimes it would pass a tactic along to a proof obligation that the tactic couldn't actually discharge. But it knew how to use the conduit pattern and thread proof automation into definitions to deal with gnarly dependent types! This is really advanced Coq usage. You won't learn it in any undergraduate classes introducing computer proof assistants.

When I pointed out a mistake, or a type error, it would proffer an explanation and try to fix it. It mostly didn't succeed in fixing it, but it mostly did succeed in identifying what had gone wrong.

The mistakes it made had a stupid quality to them. At the same time, in another way, I felt decisively trounced.

Writing Coq is, along some hard-to-characterize verbal axis, the most cognitively demanding programming work I have done. And the kinds of assurance I can give myself about what I write when it typechecks are in a totally different league from my day job software engineering work.

And ChatGPT mastered some important aspects of this art much more thoroughly than I have after years of on-and-off study, especially knowing where to look for related work and how to strategize about building and encoding things, just by scanning a bunch of source code and textbooks. It had the style of a broken-in postdoc, but one somehow familiar with every lab's contributions.

I'm slightly injured, and mostly delighted, that some vital piece of my comparative advantage has been pulled out from under me so suddenly by a computer.

What an astounding cognitive prosthesis this would be if we could just tighten the feedback loops and widen the bandwidth between man and machine. Someday soon, people will do intellectual work the way they move about—that is, with the aid of machines that completely change the game. It will render entire tranches of human difference in ability minute by comparison.
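
For readers who have not met them, the Christoffel symbols of a metric g are Γ^k_ij = ½ g^{kl}(∂_i g_jl + ∂_j g_il − ∂_l g_ij). The block below is a minimal numerical sketch of that formula in Python. It is not the Coq code discussed in this post, which is not reproduced here. The function names, the finite-difference step `h`, the restriction to two dimensions, and the choice of the unit sphere as a test metric are all illustrative assumptions.

```python
import math


def sphere_metric(x):
    """Round metric on the unit sphere in coordinates x = (theta, phi)."""
    theta, _phi = x
    return [[1.0, 0.0], [0.0, math.sin(theta) ** 2]]


def inverse_2x2(g):
    """Inverse of a 2x2 matrix (this sketch only handles 2 dimensions)."""
    det = g[0][0] * g[1][1] - g[0][1] * g[1][0]
    return [[g[1][1] / det, -g[0][1] / det],
            [-g[1][0] / det, g[0][0] / det]]


def metric_derivative(metric, x, l, h=1e-5):
    """Central-difference estimate of d g_ij / d x^l at the point x."""
    x_plus, x_minus = list(x), list(x)
    x_plus[l] += h
    x_minus[l] -= h
    g_plus, g_minus = metric(x_plus), metric(x_minus)
    n = len(x)
    return [[(g_plus[i][j] - g_minus[i][j]) / (2 * h) for j in range(n)]
            for i in range(n)]


def christoffel(metric, x):
    """Return gamma[k][i][j] = 1/2 g^{kl} (d_i g_jl + d_j g_il - d_l g_ij)."""
    n = len(x)
    g_inv = inverse_2x2(metric(x))
    # dg[l][i][j] approximates the partial derivative d_l g_ij
    dg = [metric_derivative(metric, x, l) for l in range(n)]
    gamma = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for k in range(n):
        for i in range(n):
            for j in range(n):
                gamma[k][i][j] = 0.5 * sum(
                    g_inv[k][l] * (dg[i][j][l] + dg[j][i][l] - dg[l][i][j])
                    for l in range(n)
                )
    return gamma


# Usage: symbols of the unit sphere at theta = 1.0, phi = 0.3.
# The closed forms to compare against are
#   gamma[0][1][1] = -sin(theta) * cos(theta)
#   gamma[1][0][1] =  cos(theta) / sin(theta)
gamma = christoffel(sphere_metric, (1.0, 0.3))
```

A proof assistant has to carry the smoothness and index bookkeeping that this numerical version silently skips, which is part of why the Coq encoding is so much harder.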

50 notes

Note

According to Lee Smolin, the eight stages by which mathematics develops from the study of relations among natural objects are as follows:

1. Exploration of the natural case (e.g., countable objects, 3D spatial geometry, etc.)

2. Formalization of natural knowledge (e.g., arithmetic, trigonometry)

3. Exploration of the formalized natural case.

4. Evocation and study of variations on the natural case (e.g., non-euclidean geometry)

5. Invention of new modes of reasoning (e.g., axioms)

6. Unification of cases within more general frameworks (e.g., Riemannian geometry)

7. Discovery of relationships between constructions generated autonomously within mathematics

8. Discovery of the applicability to nature of knowledge developed internally.

Smolin rejects the idea that the study of mathematics constitutes the exploration of a Platonic realm separate from physical reality. Rather, he thinks that it represents an evocation from reasoning about items in the natural world. Thus, its applicability to physics occurs precisely because it is, historically, rooted in reasoning about the physical universe.

I need to learn more about this. So far it makes perfect sense!

20 notes

Text

Hi, I am a student of mathematics and an educationalist, and I have done my MS in mathematics. I have specialized in Differential Geometry, Complex analysis, Algebraic Topology, Riemannian Geometry and Algebraic Geometry. I am currently studying Complex analytic and Algebraic Geometry. I am interested in the applications of Topology and Geometry in theoretical physics. I am also working on Space-time geometry, gauge theories, bundles and the Yang-Mills problem. Functional analysis and measure theory are also subjects I really enjoy.

Apart from this I'm interested in literature, guitar, music, art and aesthetics. I am a voracious reader.

2 notes

Text

I love the random article feature on Wikipedia. Sometimes you get stuff like the 2019 South Tyneside metropolitan borough council election and sometimes you get the demographics of Bangladesh and sometimes you can read about Cut Locus (Riemannian manifold) which is part of a branch of geometry.

#plus when I feel the need to do something but not have the energy to do something I can do this and it’s still useful maybe#it’s so great#random Wikipedia article

10 notes

Text

Interesting Papers for Week 44, 2022

Competition between parallel sensorimotor learning systems. Albert, S. T., Jang, J., Modchalingam, S., ’t Hart, B. M., Henriques, D., Lerner, G., … Shadmehr, R. (2022). eLife, 11, e65361.

Persistent neuronal firing in the medial temporal lobe supports performance and workload of visual working memory in humans. Boran, E., Hilfiker, P., Stieglitz, L., Sarnthein, J., & Klaver, P. (2022). NeuroImage, 254, 119123.

The non-Riemannian nature of perceptual color space. Bujack, R., Teti, E., Miller, J., Caffrey, E., & Turton, T. L. (2022). Proceedings of the National Academy of Sciences, 119(18), e2119753119.

Branching Time Active Inference: The theory and its generality. Champion, T., Da Costa, L., Bowman, H., & Grześ, M. (2022). Neural Networks, 151, 295–316.

Attention modulates incidental memory encoding of human movements. Chiou, S.-C. (2022). Cognitive Processing, 23(2), 155–168.

Independent and interacting value systems for reward and information in the human brain. Cogliati Dezza, I., Cleeremans, A., & Alexander, W. H. (2022). eLife, 11, e66358.

A Variable Clock Underlies Internally Generated Hippocampal Sequences. Deng, X., Chen, S., Sosa, M., Karlsson, M. P., Wei, X.-X., & Frank, L. M. (2022). Journal of Neuroscience, 42(18), 3797–3810.

The geometry of domain-general performance monitoring in the human medial frontal cortex. Fu, Z., Beam, D., Chung, J. M., Reed, C. M., Mamelak, A. N., Adolphs, R., & Rutishauser, U. (2022). Science, 376(6593).

Cortico-Striatal Control over Adaptive Goal-Directed Responding Elicited by Cues Signaling Sucrose Reward or Punishment. Hamel, L., Cavdaroglu, B., Yeates, D., Nguyen, D., Riaz, S., Patterson, D., … Ito, R. (2022). Journal of Neuroscience, 42(18), 3811–3822.

Adaptive control of working memory. Hartmann, E.-M., Gade, M., & Steinhauser, M. (2022). Cognition, 224, 105053.

The representation of context in mouse hippocampus is preserved despite neural drift. Keinath, A. T., Mosser, C.-A., & Brandon, M. P. (2022). Nature Communications, 13, 2415.

Cortical circuits for top-down control of perceptual grouping. Kon, M., & Francis, G. (2022). Neural Networks, 151, 190–210.

Transition from predictable to variable motor cortex and striatal ensemble patterning during behavioral exploration. Kondapavulur, S., Lemke, S. M., Darevsky, D., Guo, L., Khanna, P., & Ganguly, K. (2022). Nature Communications, 13, 2450.

Differential dendritic integration of long-range inputs in association cortex via subcellular changes in synaptic AMPA-to-NMDA receptor ratio. Lafourcade, M., van der Goes, M.-S. H., Vardalaki, D., Brown, N. J., Voigts, J., Yun, D. H., … Harnett, M. T. (2022). Neuron, 110(9), 1532-1546.e4.

Neural feedback facilitates rough-to-fine information retrieval. Liu, X., Zou, X., Ji, Z., Tian, G., Mi, Y., Huang, T., … Wu, S. (2022). Neural Networks, 151, 349–364.

Phase coding of spatial representations in the human entorhinal cortex. Nadasdy, Z., Howell, D. H. P., Török, Á., Nguyen, T. P., Shen, J. Y., Briggs, D. E., … Buchanan, R. J. (2022). Science Advances, 8(18).

Encoding of Environmental Cues in Central Amygdala Neurons during Foraging. Ponserre, M., Fermani, F., Gaitanos, L., & Klein, R. (2022). Journal of Neuroscience, 42(18), 3783–3796.

Thinking takes time: Children use agents’ response times to infer the source, quality, and complexity of their knowledge. Richardson, E., & Keil, F. C. (2022). Cognition, 224, 105073.

Early lock-in of structured and specialised information flows during neural development. Shorten, D. P., Priesemann, V., Wibral, M., & Lizier, J. T. (2022). eLife, 11, e74651.

Flexible rerouting of hippocampal replay sequences around changing barriers in the absence of global place field remapping. Widloski, J., & Foster, D. J. (2022). Neuron, 110(9), 1547-1558.e8.

#science#Neuroscience#computational neuroscience#Brain science#research#cognition#cognitive science#neurons#neural networks#neurobiology#neural computation#psychophysics#scientific publications

9 notes

Text

All right I no longer remember why I was reading about de Sitter space, but I’m getting into a Wiki hole so I’m taking some notes here.

1. “In mathematical physics, n-dimensional de Sitter space (often abbreviated to dS_n) is a maximally symmetric Lorentzian manifold with constant positive scalar curvature”

2. A Lorentzian manifold is a type of pseudo-Riemannian manifold

3. “In differential geometry, a pseudo-Riemannian manifold, also called a semi-Riemannian manifold, is a differentiable manifold with a metric tensor that is everywhere nondegenerate.”

4. “In mathematics, a differentiable manifold (also differential manifold) is a type of manifold that is locally similar enough to a vector space to allow one to apply calculus.”

5. “In mathematics, a manifold is a topological space that locally resembles Euclidean space near each point.”

5. This is actually a sentence that makes sense to me, but in case it doesn’t to you: a Euclidean space is basically the sort of space that you’re thinking of with points on a graph. It’s regular, it’s even, it’s got a consistent coordinate system... A non-Euclidean space is, say, if you’re trying to project the Earth onto a map. Even assuming the Earth is perfectly round, trying to get that onto a flat plane leaves you with vastly irregular spacing and, well, all the flaws of the Mercator projection.

So a manifold is a shape, in n dimensions, such that any point on it looks like the space around it is Euclidean, but if you zoom out a bit further it ain’t. “One-dimensional manifolds include lines and circles, but not lemniscates.” - a lemniscate ~is a figure 8 or an infinity symbol. On either side of the intersection point, it’s basically a circle and works as a one-dimensional line or circle, but at that intersection point, you have to have two dimensions (n+1) to describe the intersection of those lines, so it no longer locally resembles a 1-dimensional Euclidean space at that point.

4. A differentiable manifold is one that you can work calculus on. (I’m going to assume you remember scalars and vectors. If not, a scalar is a number, a vector is a number with a direction, or in graphical senses a ray.) I’m not... super clear on why you need a vector space to work calculus, but then, I’ve always tried to forget visual representations of math as fast as possible because I am a hugely non-visual person and they just confuse me. So it probably has something to do with that, and the way integration represents the area under a curve and differentiation represents its... na, slope or inflection point or whatever.

3. “In the mathematical field of differential geometry, a metric tensor (or simply metric) is an additional structure on a manifold M (such as a surface) that allows defining distances and angles, just as the inner product on a Euclidean space allows defining distances and angles there.”

The inner product is the dot product, fyi. If that doesn’t make sense to you... I’m not explaining it here. Sorry if that’s rough, but ultimately I am here for my own understanding and that’s a whole class on matrix arithmetic. Suffice it for here that the inner product lets you take your matrix representation of two curves, do math to them, and come up with a scalar representation of their relation. The metric tensor here is the generalization of that concept, something that lets you define curves’ relationship to each other.

(Note I am using the word ‘curve’ to represent lines and scribbles with arcs, consistent or not.)

“In mathematics, specifically linear algebra, a degenerate bilinear form f(x, y) on a vector space V is a bilinear form such that the map from V to V∗ (the dual space of V) given by v ↦ (x ↦ f(x, v)) is not an isomorphism.”

So a non-degenerate metric tensor is one such that the map from V to V* IS an isomorphism. Note that if I am remembering correctly, v is a vector in the space V. I had to remind myself that the dual space is like. Every vector that can exist in V? if you do basic mathematics to them? (Note that I am using phrases like ‘basic mathematics’ very broadly and in a not mathematically-approved sense.) And of course f(x) is a function. And an isomorphism is something that can be reversed with an inverse function.

So then x maps to f(x,v), and v maps to whatever ray or space that original mapping defined, and you can’t undo that. Except that we’re talking a non-degenerate space, so in fact the pseudo-Riemannian manifold that we started out talking about is a manifold (point 5) on which you can work calculus in a way that enables you to describe directions and angles and reverse functions/mappings done in that space. (I am much less confident in that last point.)
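
A concrete way to see (non-)degeneracy, as a minimal sketch: V∗ here is the space of linear maps from V to the scalars, and in finite dimensions a bilinear form can be written f(x, y) = xᵀGy for a matrix G. The map v ↦ f(·, v) is then an isomorphism exactly when G is invertible, i.e. when det G ≠ 0. The helper names and the three example matrices below are illustrative choices, not taken from the quoted articles.

```python
def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [[float(entry) for entry in row] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        # Pick the row with the largest entry in this column as the pivot.
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0  # no usable pivot: the matrix is singular
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bilinear(G, x, y):
    """Evaluate f(x, y) = x^T G y for the form with matrix G."""
    return sum(x[i] * G[i][j] * y[j]
               for i in range(len(x)) for j in range(len(y)))


def is_nondegenerate(G):
    """True when v -> f(., v) is an isomorphism, i.e. det G != 0."""
    return determinant(G) != 0.0


EUCLIDEAN = [[1, 0], [0, 1]]    # the ordinary dot product
MINKOWSKI = [[-1, 0], [0, 1]]   # 1+1 dimensions: non-degenerate, indefinite
DEGENERATE = [[1, 0], [0, 0]]   # the second direction is invisible to f

# The Minkowski form is non-degenerate, yet the nonzero vector (1, 1)
# has "length squared" zero, which a Riemannian metric never allows.
null_length = bilinear(MINKOWSKI, [1, 1], [1, 1])

# For the degenerate form, (0, 1) pairs to zero with every vector.
invisible = bilinear(DEGENERATE, [0, 1], [3, 4])
```

So "pseudo-Riemannian" keeps the invertibility requirement but drops positivity: both `EUCLIDEAN` and `MINKOWSKI` pass `is_nondegenerate`, while `DEGENERATE` does not.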

That brings me through point 3, but now I have to sleep.

4 notes
