#MemoryCache


Text

Speed Up Python on Lambda with a Variable Cache

Build a cache decorator for Lambda functions that use the Python runtime by implementing a variable cache (MemoryCache). Lambda tears down an execution environment once it has gone uninvoked for a while, so a variable cache can speed things up with little impact on the RAM in use. Here is the code. File MemoryCacheModel.py: from datetime import datetime, timedelta import os import uuid import…
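MemoryCacheModel.py is cut off above, so this is not the author's code. It is a minimal sketch of the same idea, assuming a module-level dict as the "variable cache" and a time-to-live per entry. `ttl_cache`, `load_config` and the 300-second TTL are hypothetical names and values chosen for illustration.

```python
import functools
import time

# Module-level state survives for as long as the Lambda execution environment
# stays warm, so later invocations can reuse it; a cold start begins empty.
_CACHE = {}


def ttl_cache(ttl_seconds):
    """Cache results in process memory for ttl_seconds (hypothetical helper)."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args):
            # Positional, hashable arguments only, to keep the sketch short.
            key = (func.__qualname__, args)
            now = time.monotonic()
            hit = _CACHE.get(key)
            if hit is not None and now - hit[0] < ttl_seconds:
                return hit[1]  # fresh entry: skip the expensive call
            value = func(*args)
            _CACHE[key] = (now, value)
            return value
        return wrapper
    return decorator


CALLS = {"count": 0}


@ttl_cache(ttl_seconds=300)
def load_config(name):
    """Stand-in for an expensive lookup (database, S3, HTTP API...)."""
    CALLS["count"] += 1
    return {"name": name}


def lambda_handler(event, context):
    return load_config(event.get("name", "default"))
```

Two warm invocations with the same `name` run `load_config` only once. Expired entries are overwritten on the next miss rather than evicted proactively, which is acceptable here because the whole environment is eventually discarded.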



Text

Mozilla "MemoryCache" Local AI

https://future.mozilla.org/blog/introducing-memorycache/


Text

Optimizing Performance in .NET Applications: Tips and Techniques

In today's technology-driven world, performance optimization is paramount for any software application, and .NET developers must strive to create fast, responsive, and efficient applications to meet user expectations. Optimizing performance in .NET applications not only enhances the user experience but also leads to cost savings and better resource utilization. In this article, we will explore various tips and techniques to optimize the performance of .NET applications, ensuring they deliver optimal results and remain competitive in the market.

Optimizing Performance in .NET Applications

Profiling Your Application:

Before diving into optimization, it's essential to identify the performance bottlenecks in your .NET application. This can be achieved through profiling, where you analyze the application's execution flow, memory usage, and CPU consumption. Profiling tools like Visual Studio Profiler and dotTrace can help identify areas that require improvement, guiding you towards effective optimization strategies.

Efficient Memory Management:

Proper memory management is crucial to prevent memory leaks and excessive garbage collection, which can impact performance. Utilize the IDisposable pattern to release unmanaged resources, and avoid unnecessary object instantiation and boxing. Opt for value types when appropriate and implement object pooling to reduce memory churn and enhance application responsiveness.

Asynchronous Programming:

Leveraging asynchronous programming with async/await in .NET allows applications to perform non-blocking operations, making them more responsive. While an asynchronous method awaits an I/O-bound operation such as a database or network call, its thread is freed to do other work, which reduces time spent blocked and improves throughput. async/await by itself does not run code in parallel; for CPU-bound work, use Task.Run or the Parallel class.

Caching for Improved Response Times:

Implement caching mechanisms to store frequently accessed data, reducing the need for repeated database queries or expensive calculations. In-memory caching with technologies like MemoryCache or distributed caching with Redis can significantly improve response times and alleviate the load on backend resources.

Data Access Optimization:

Efficient data access is crucial for high-performing applications. Use database indexes and query optimizations to retrieve only the data you need. An Object-Relational Mapping (ORM) tool like Entity Framework or NHibernate, or a micro-ORM like Dapper, simplifies database interactions; review the queries it generates so that data retrieval stays efficient.

Efficient Collection Manipulation:

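When working with collections, choose the type that fits the access pattern. Arrays are the leanest option when the size is fixed, while List<T> adds dynamic resizing and convenience methods (setting its initial capacity avoids repeated reallocations when you know the size in advance). Use Dictionary<TKey, TValue> or HashSet<T> for fast lookups by key. Picking the right collection for each scenario optimizes memory usage and improves code efficiency.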

Avoid String Concatenation:

String concatenation using the '+' operator inside a loop creates a new intermediate string on every iteration, which hurts performance. Use StringBuilder when building strings incrementally. For combining a fixed number of values, string interpolation or string.Concat is fine and readable.

Optimizing Loops:

Minimize the work done inside loops, particularly in performance-critical sections: cache loop boundaries, precalculate values outside the loop, and avoid unnecessary iterations to reduce execution time.

Just-In-Time (JIT) Compilation:

.NET's Just-In-Time (JIT) compilation translates Intermediate Language (IL) code into native machine code during runtime. Pre-JIT or NGen (Native Image Generator) compilation can improve application startup time by avoiding JIT overhead.

Use Structs for Lightweight Data:

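For small data structures that require frequent allocation, consider using structs instead of classes. Structs are value types: as local variables they typically live on the stack, and as array elements or fields they are stored inline rather than as separate heap objects. This reduces heap allocations and garbage collection overhead. Keep structs small, since they are copied by value.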

Monitor Application Performance:

Continuously monitor your .NET application's performance in production to identify any new bottlenecks or performance issues. Tools like Application Insights and Azure Monitor can provide valuable insights into application behavior and performance.

Load Testing and Scalability:

Conduct load testing to assess how your application performs under various load conditions. This allows you to identify potential performance issues and ensure scalability to handle increased user traffic.

Conclusion:

Optimizing the performance of .NET applications is an ongoing process that demands constant evaluation, monitoring, and improvement. By following the tips and techniques discussed in this article, you can create high-performing .NET applications that offer a seamless user experience, maximize resource efficiency, and gain a competitive edge in today's fast-paced digital landscape. Striving for performance excellence not only benefits your users but also enhances your organization's reputation and drives success in the ever-evolving world of software development.

#dotnet development company#dotnet development services#asp.net application development company#dotnet application development services


Text

Memory Cache

Memory cache is made of static RAM (SRAM), which is much faster than the regular DRAM used for primary memory (RAM). The memory cache is the processor's (CPU's) internal memory.

Its job is to hold data and instructions waiting to be used by the processor. Basically, the cache holds the data it expects the CPU to access over and over again. When the CPU needs certain data, it always checks the faster cache memory first to see whether the data is there. If it isn't, the CPU has to go to the slower RAM (primary memory) to find it. That's why cache memory is so important: the more often the CPU finds what it needs in the faster cache, the faster the computer performs. Memory cache comes in different levels.

For example, Level 1 cache:

It is also called primary cache. Level 1 cache is located on the processor itself, so it runs at the same speed as the processor, which makes it the fastest cache memory in the computer.

Level 2 Cache

There is also Level 2 cache, which is also called external cache. Level 2 cache catches recent data accesses from the processor that were not caught by the Level 1 cache. If the CPU can't find the data it needs in the Level 1 cache, it searches the Level 2 cache next, and if Level 2 doesn't have it either, the CPU has to go back to regular RAM. On older systems, Level 2 cache was located on a separate chip on the motherboard; modern CPUs place it on the processor itself. Level 2 cache is larger than Level 1 cache but not as fast.
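The lookup order described here (Level 1, then Level 2, then RAM) can be sketched as a toy Python model. This is only an illustration under simplifying assumptions: real caches are hardware, operate on fixed-size cache lines with set associativity, and are not always inclusive. The capacities and the `CacheLevel`/`read` names are made up.

```python
from collections import OrderedDict


class CacheLevel:
    """A tiny least-recently-used store standing in for one cache level."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.lines = OrderedDict()

    def lookup(self, address):
        if address in self.lines:
            self.lines.move_to_end(address)  # mark as most recently used
            return True
        return False

    def fill(self, address, value):
        self.lines[address] = value
        self.lines.move_to_end(address)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used


def read(address, l1, l2, ram):
    """Check L1, then L2, then RAM; return (value, where it was found)."""
    if l1.lookup(address):
        return l1.lines[address], "L1"
    if l2.lookup(address):
        value = l2.lines[address]
        l1.fill(address, value)  # promote into the faster level
        return value, "L2"
    value = ram[address]  # slowest path: main memory
    l2.fill(address, value)
    l1.fill(address, value)
    return value, "RAM"
```

With a two-entry L1 and a four-entry L2, reading address 3 twice reports "RAM" and then "L1". After two other addresses push it out of L1, a third read finds it in "L2".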

If you understood memory cache, share this post with your friends.

#cache#cachecleaner#cachecoherence#cachefurniture#cacheinhibernate#cachemeaning#cachemeaninginhindi#cachemeaningintelugu#cachememory#cacheperipherals#cachepronunciation#memorycache#memorycacheanddiskcache#memorycachec#memorycacheclear#memorycacheclearlinux#memorycachein.netcore#memorycacheinjava#memorycacheinnodejs#memorycachemeaning#memorycachememory#memorycachevsdiskcache


Photo

#CashingIn #MemoryChips: Funny how fuzzy memory gets when #caching in #money comes up! #STEELYourMind #InkWellSpoken #MemoryCache #MemoryBank #FuzzyMemory #random #ComputerHumor

#CashingIn#MemoryChips#caching#money#STEELYourMind#InkWellSpoken#MemoryCache#MemoryBank#FuzzyMemory#random#ComputerHumor


Text

Using LazyCache for clean and simple .NET Core in-memory caching

I'm continuing to use .NET Core 2.1 to power my Podcast Site, and I've done a series of posts on some of the experiments I've been doing. I also upgraded to .NET Core 2.1 RC that came out this week. Here's some posts if you want to catch up:

Eyes wide open - Correct Caching is always hard

The Programmer's Hindsight - Caching with HttpClientFactory and Polly Part 2

Adding Cross-Cutting Memory Caching to an HttpClientFactory in ASP.NET Core with Polly

Adding Resilience and Transient Fault handling to your .NET Core HttpClient with Polly

HttpClientFactory for typed HttpClient instances in ASP.NET Core 2.1

Updating jQuery-based Lazy Image Loading to IntersectionObserver

Automatic Unit Testing in .NET Core plus Code Coverage in Visual Studio Code

Setting up Application Insights took 10 minutes. It created two days of work for me.

Upgrading my podcast site to ASP.NET Core 2.1 in Azure plus some Best Practices

Having a blast, if I may say so.

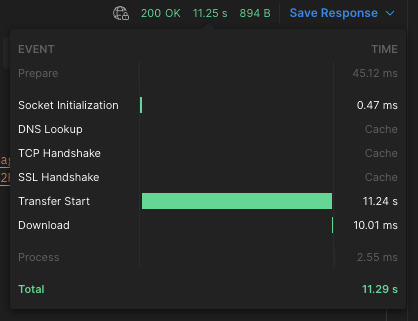

I've been trying a number of ways to cache locally. I have an expensive call to a backend (7-8 seconds or more, without deserialization) so I want to cache it locally for a few hours until it expires. I have a way that works very well using a SemaphoreSlim. There are some issues to be aware of, but it has been rock solid. However, in the comments of the last caching post a number of people suggested I use "LazyCache."

Alastair from the LazyCache team said this in the comments:

LazyCache wraps your "build stuff I want to cache" func in a Lazy<> or an AsyncLazy<> before passing it into MemoryCache to ensure the delegate only gets executed once as you retrieve it from the cache. It also allows you to swap between sync and async for the same cached thing. It is just a very thin wrapper around MemoryCache to save you the hassle of doing the locking yourself. A netstandard 2 version is in pre-release. Since you asked the implementation is in CachingService.cs#L119 and proof it works is in CachingServiceTests.cs#L343

Nice! Sounds like it's worth trying out. Most importantly, it'll allow me to "refactor via subtraction."

I want to have my "GetShows()" method go off and call the backend "database" which is a REST API over HTTP living at SimpleCast.com. That backend call is expensive and doesn't change often. I publish new shows every Thursday, so ideally SimpleCast would have a standard WebHook and I'd cache the result forever until they called me back. For now I will just cache it for 8 hours - a long but mostly arbitrary number. Really want that WebHook as that's the correct model, IMHO.

LazyCache was added in the ConfigureServices method of my Startup.cs:

services.AddLazyCache();

Kind of anticlimactic. ;)

Then I just make a method that knows how to populate my cache. That's just a "Func" that returns a Task of List of Shows as you can see below. Then I call IAppCache's "GetOrAddAsync" from LazyCache that either GETS the List of Shows out of the Cache OR it calls my Func, does the actual work, then returns the results. The results are cached for 8 hours. Compare this to my previous code and it's a lot cleaner.

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using LazyCache;
    using Microsoft.Extensions.Logging;

    public class ShowDatabase : IShowDatabase
    {
        private readonly IAppCache _cache;
        private readonly ILogger _logger;
        private SimpleCastClient _client;

        public ShowDatabase(IAppCache appCache, ILogger<ShowDatabase> logger, SimpleCastClient client)
        {
            _client = client;
            _logger = logger;
            _cache = appCache;
        }

        public async Task<List<Show>> GetShows()
        {
            Func<Task<List<Show>>> showObjectFactory = () => PopulateShowsCache();
            // Returns the cached list, or runs the factory and caches its result for 8 hours
            var retVal = await _cache.GetOrAddAsync("shows", showObjectFactory, DateTimeOffset.Now.AddHours(8));
            return retVal;
        }

        private async Task<List<Show>> PopulateShowsCache()
        {
            List<Show> shows = await _client.GetShows();
            _logger.LogInformation($"Loaded {shows.Count} shows");
            // Keep only shows whose publish date has already passed
            return shows.Where(c => c.PublishedAt < DateTime.UtcNow).ToList();
        }
    }
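As Alastair describes it, LazyCache's trick is to store a Lazy<> in the cache so that the delegate runs only once per key. Here is a minimal Python sketch of that idea. `OnceCache` and `_Lazy` are hypothetical names, not LazyCache's API, and unlike `GetOrAddAsync` this sketch has no expiration.

```python
import threading


class _Lazy:
    """Holds a factory and runs it at most once, in the spirit of Lazy<T>."""

    def __init__(self, factory):
        self._factory = factory
        self._lock = threading.Lock()
        self._done = False
        self._value = None

    def value(self):
        # The first caller runs the factory; concurrent callers block here
        # and then reuse the stored value. If the factory raises, _done stays
        # False and the next caller retries.
        with self._lock:
            if not self._done:
                self._value = self._factory()
                self._done = True
        return self._value


class OnceCache:
    """Stores a _Lazy per key so each key's factory executes once."""

    def __init__(self):
        self._lock = threading.Lock()
        self._entries = {}

    def get_or_add(self, key, factory):
        # Short critical section: only the dictionary lookup/insert.
        with self._lock:
            lazy = self._entries.get(key)
            if lazy is None:
                lazy = _Lazy(factory)
                self._entries[key] = lazy
        # The (possibly slow) factory runs outside the dictionary lock,
        # so callers asking for other keys are not blocked.
        return lazy.value()
```

The design mirrors the "wrap it, then cache the wrapper" approach. The dictionary lock is held only long enough to install the wrapper, and the per-key lock inside `_Lazy` is what guarantees that concurrent callers for `"shows"` share a single backend call.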

It's always important to point out there's a dozen or more ways to do this. I'm not selling a prescription here or The One True Way, but rather exploring the options and edges and examining the trade-offs.

As mentioned before, me using "shows" as a magic string for the key here makes no guarantees that another co-worker isn't also using "shows" as the key.

Solution? Depends. I could have a function-specific unique key but that only ensures this function is fast twice. If someone else is calling the backend themselves I'm losing the benefits of a centralized (albeit process-local - not distributed like Redis) cache.

I'm also caching the full list and then doing a where/filter every time.

A little sloppiness on my part, but also because I'm still feeling this area out. Do I want to cache the whole thing and then let the callers filter? Or do I want to have GetShows() and GetActiveShows()? Dunno yet. But worth pointing out.

There are layers to caching. Do I cache the HttpResponse but not the deserialization? Here I'm caching the List<Show>, complete. I like caching List<T> because a caller can query it, although I'm sending back just active shows (see above).

Another perspective is to use the <cache> TagHelper in Razor and cache Razor's resulting rendered HTML. There is value in caching the object graph, but I need to think about perhaps caching both List<T> AND the rendered HTML.

I'll explore this next.

I'm enjoying myself though. ;)

Go explore LazyCache! I'm using beta2 but there's a whole number of releases going back years and it's quite stable so far.

LazyCache is a simple in-memory caching service. It has a developer-friendly generics-based API, and provides a thread-safe cache implementation that guarantees to only execute your cacheable delegates once (it's lazy!). Under the hood it leverages ObjectCache and Lazy to provide performance and reliability in heavy load scenarios.

For ASP.NET Core it's quick to experiment with LazyCache and get it set up. Give it a try, and share your favorite caching techniques in the comments.

Tai Chi photo by Luisen Rodrigo used under Creative Commons Attribution 2.0 Generic (CC BY 2.0), thanks!


© 2018 Scott Hanselman. All rights reserved.


Text


New Post has been published on http://topauto.site/electric-crossover-nio/

Electric crossover Nio ES6: the second model of the brand

Unlike most electric-mobility startups, the Chinese company Nio has already started producing cars. Its firstborn, launched last summer, was the full-size ES8 crossover, which is meant to compete with the Tesla Model X in the Chinese market. While production at the JAC-Nio joint venture gains momentum (10,000 units were recently built), the company has prepared its second model, the more compact Nio ES6 crossover.

The two SUVs have a significant degree of commonality: they share one platform, with double-wishbone front suspension, multi-link rear suspension, optional air suspension and a battery under the cabin floor. But the wheelbase of the "six" is 110 mm shorter (2900 mm) and its overall length is reduced by 172 mm (4850 mm), while width (1965 mm) and height (1734 mm) are practically unchanged. The younger model's body is likewise made of aluminum, and the drag coefficient is the same: 0.29.

Not only the exterior but also the interior is done in the same style: the Nio ES6 has a virtual instrument cluster, a vertical media-system display and NOMI, a "pokémon" that sits on the dashboard, recognizes voice commands and has "artificial intelligence" to entertain passengers on the road. The steering wheel, however, differs from the ES8's. Most importantly, the smaller crossover differs from the larger one in having no third row of seats.

The base version has two electric motors (one per axle) of 218 HP each, for a total output of 436 HP; this crossover accelerates to 100 km/h in 5.6 s. The Performance version has a 326 HP motor on the rear axle, so peak output reaches 544 HP and the 0-100 km/h time is 4.7 s. The larger Nio ES8 is still more powerful (653 HP) and quicker (4.4 s).

The more compact crossover can be ordered not only with the standard 70 kWh battery (the same one fitted to every ES8) but also with a larger 84 kWh battery. With the first, the NEDC range per charge is 410-430 km (depending on power output); with the second, 480-510 km.

In China, the Nio ES6 costs from 52 to 65 thousand dollars (excluding state subsidies), but the price can be reduced by 14,500 dollars if you rent the traction battery, which costs 240 dollars a month. Customer deliveries are expected to start in June 2019, although the 436 HP base version is only expected in the fourth quarter.


Text

LibreSSL before 2.6.5 and 2.7.x before 2.7.4 allows a memory-cache side-channel…

SNNX.com: LibreSSL before 2.6.5 and 2.7.x before 2.7.4 allows a memory-cache side-channel… http://dlvr.it/Qf2qPv


Text

SoftReference

https://github.com/dmlloyd/openjdk/blob/jdk9/jdk9/jdk/src/java.base/share/classes/sun/security/util/Cache.java

SoftCacheEntry<K, V> is a SoftReference<V>, that is, a soft reference to V.

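When V is cleared by the GC, the entry is enqueued on the ReferenceQueue it was registered with. Before doing anything else, this MemoryCache then calls emptyQueue() to remove the SoftCacheEntry objects that ended up on the ReferenceQueue.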

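Then there's the rather annoying re-insertion (if the entry removed for a key is not the one that was cleared, it gets put back): https://github.com/dmlloyd/openjdk/blob/7d7fbd09fcfd7f8cd02bf76ce10433ceeb33b3cf/jdk/src/java.base/share/classes/sun/security/util/Cache.java#L300-L304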

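Also, the difference between soft and weak references is that weak references are reclaimed more eagerly: a weakly reachable object is cleared at the next GC, while a softly reachable one is usually kept until the JVM is running low on memory.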
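Python has no soft references, but its `weakref` module allows a rough analog of the MemoryCache/ReferenceQueue design described in this post. This is a minimal sketch assuming weak (not soft) semantics. `WeakMemoryCache` and its methods are hypothetical names, and a plain list stands in for the ReferenceQueue.

```python
import weakref


class WeakMemoryCache:
    """Holds values weakly and purges cleared entries lazily."""

    def __init__(self):
        self._entries = {}
        self._queue = []  # keys whose referents were collected (ReferenceQueue's role)

    def _on_collect(self, key):
        # weakref calls this with the dead reference once the value is collected.
        def callback(_ref):
            self._queue.append(key)
        return callback

    def _empty_queue(self):
        # Like emptyQueue(): drop entries whose value is gone. Checking ref()
        # keeps an entry that was re-put with a new, still-live value.
        while self._queue:
            key = self._queue.pop()
            ref = self._entries.get(key)
            if ref is not None and ref() is None:
                del self._entries[key]

    def put(self, key, value):
        self._empty_queue()
        self._entries[key] = weakref.ref(value, self._on_collect(key))

    def get(self, key):
        self._empty_queue()
        ref = self._entries.get(key)
        return None if ref is None else ref()

    def __len__(self):
        self._empty_queue()
        return len(self._entries)
```

Values must support weak references. Instances of your own classes do, but built-in `int`, `str`, `list` and `dict` do not. For production code, `weakref.WeakValueDictionary` already provides this behavior.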


Text

Caching in on .Net

Just a quick post about a new feature I wasn’t aware of. If you need to cache objects in .Net, before you run off and write a custom cache abstraction to divorce your code from System.Web or to scale out your cache, check out the abstract class System.Run…



Link

In the previous part of this series we implemented a simple memory cache based upon WeakReference. It certainly improved performance when we were using the same image multiple times within a short period, but it is easy to predict that our cached images would not survive an intensive operation, such as an Activity transition. Also, the policy of when to free cached items was completely outside of our control. While there are certainly use-cases where this is not an issue, it is often necessary to have rather more control. In this article we’ll look at LruCache which gives us precisely that....
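The LruCache in this post is presumably Android's `android.util.LruCache` (Java). As an illustration of the same least-recently-used policy, here is a minimal Python sketch. The class and its `size_of` hook are hypothetical and only loosely modeled on LruCache's `sizeOf()`.

```python
from collections import OrderedDict


class LruCache:
    """Bounded cache that evicts the least recently used entry first.

    size_of(value) lets each entry count against max_size by weight
    (for example, the byte size of an image); by default each entry counts as 1.
    """

    def __init__(self, max_size, size_of=lambda value: 1):
        self.max_size = max_size
        self.size_of = size_of
        self.size = 0
        self._items = OrderedDict()

    def get(self, key):
        if key not in self._items:
            return None
        self._items.move_to_end(key)  # now the most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self.size -= self.size_of(self._items.pop(key))
        self._items[key] = value
        self.size += self.size_of(value)
        # Evict from the least recently used end until we fit again.
        while self.size > self.max_size and self._items:
            _, evicted = self._items.popitem(last=False)
            self.size -= self.size_of(evicted)

    def __contains__(self, key):
        return key in self._items
```

Unlike a WeakReference cache, eviction here is fully under your control. Entries survive until the size budget is exceeded, and a `get` refreshes an entry's position so that frequently used images stay cached.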


Link

RT @ker08 know, i can't believe "Who is Troy Davis" is trending ... || well, lots of us weren't even born when the crime allegedly happened


Text

Eyes wide open - Correct Caching is always hard

In my last post I talked about Caching and some of the stuff I've been doing to cache the results of a VERY expensive call to the backend that hosts my podcast.

As always, the comments are better than the post! Thanks to you, Dear Reader.

The code is below. Note that the MemoryCache is a singleton, but within the process. It is not (yet) a DistributedCache. Also note that Caching is Complex(tm) and that thousands of pages have been written about caching by smart people. This is a blog post as part of a series, so use your head and do your research. Don't take anyone's word for it.

Bill Kempf had an excellent comment on that post. Thanks Bill! He said:

The SemaphoreSlim is a bad idea. This "mutex" has visibility different from the state it's trying to protect. You may get away with it here if this is the only code that accesses that particular key in the cache, but work or not, it's a bad practice. As suggested, GetOrCreate (or more appropriate for this use case, GetOrCreateAsync) should handle the synchronization for you.

My first reaction was, "bad idea?! Nonsense!" It took me a minute to parse his words and absorb. Ok, it took a few hours of background processing plus I had lunch.

Again, here's the code in question. I've removed logging for brevity. I'm also deeply not interested in your emotional investment in my brackets/braces style. It changes with my mood. ;)

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading;
    using System.Threading.Tasks;
    using Microsoft.Extensions.Caching.Memory;
    using Microsoft.Extensions.Logging;

    public class ShowDatabase : IShowDatabase
    {
        private readonly IMemoryCache _cache;
        private readonly ILogger _logger;
        private SimpleCastClient _client;

        public ShowDatabase(IMemoryCache memoryCache, ILogger<ShowDatabase> logger, SimpleCastClient client)
        {
            _client = client;
            _logger = logger;
            _cache = memoryCache;
        }

        static SemaphoreSlim semaphoreSlim = new SemaphoreSlim(1);

        public async Task<List<Show>> GetShows()
        {
            Func<Show, bool> whereClause = c => c.PublishedAt < DateTime.UtcNow;
            var cacheKey = "showsList";
            List<Show> shows = null;

            //CHECK and BAIL - optimistic
            if (_cache.TryGetValue(cacheKey, out shows))
            {
                return shows.Where(whereClause).ToList();
            }

            await semaphoreSlim.WaitAsync();
            try
            {
                //RARE BUT NEEDED DOUBLE PARANOID CHECK - pessimistic
                if (_cache.TryGetValue(cacheKey, out shows))
                {
                    return shows.Where(whereClause).ToList();
                }

                shows = await _client.GetShows();

                var cacheExpirationOptions = new MemoryCacheEntryOptions();
                cacheExpirationOptions.AbsoluteExpiration = DateTime.Now.AddHours(4);
                cacheExpirationOptions.Priority = CacheItemPriority.Normal;
                _cache.Set(cacheKey, shows, cacheExpirationOptions);

                return shows.Where(whereClause).ToList();
            }
            finally
            {
                // Always release, even if the backend call throws
                semaphoreSlim.Release();
            }
        }
    }

    public interface IShowDatabase
    {
        Task<List<Show>> GetShows();
    }

SemaphoreSlim IS very useful. From the docs:

The System.Threading.Semaphore class represents a named (systemwide) or local semaphore. It is a thin wrapper around the Win32 semaphore object. Win32 semaphores are counting semaphores, which can be used to control access to a pool of resources.

The SemaphoreSlim class represents a lightweight, fast semaphore that can be used for waiting within a single process when wait times are expected to be very short. SemaphoreSlim relies as much as possible on synchronization primitives provided by the common language runtime (CLR). However, it also provides lazily initialized, kernel-based wait handles as necessary to support waiting on multiple semaphores. SemaphoreSlim also supports the use of cancellation tokens, but it does not support named semaphores or the use of a wait handle for synchronization.

And my use of a Semaphore here is correct...for some definitions of the word "correct." ;) Back to Bill's wise words:

You may get away with it here if this is the only code that accesses that particular key in the cache, but work or not, it's a bad practice.

Ah! In this case, my cacheKey is "showsList" and I'm "protecting" it with a lock and double-check. That lock/check is fine and appropriate HOWEVER I have no guarantee (other than I wrote the whole app) that some other thread isn't also accessing the same IMemoryCache (remember, process-scoped singleton) at the same time. It's protected only within this function!

Here's where it gets even more interesting.

I could make my own IMemoryCache, wrap things up, and then protect inside with my own TryGetValues...but then I'm back to checking/doublechecking etc.

However, while I could lock/protect on a key...what about the semantics of other cached values that may depend on my key? There are none here, but you could see a world where there are.

Yes, we are getting close to making our own implementation of Redis here, but bear with me. You have to know when to stop and say it's correct enough for this site or project BUT as Bill and the commenters point out, you also have to be Eyes Wide Open about the limitations and gotchas so they don't bite you as your app expands!

The suggestion was made to use the GetOrCreateAsync() extension method for MemoryCache. Bill and other commenters said:

As suggested, GetOrCreate (or more appropriate for this use case, GetOrCreateAsync) should handle the synchronization for you.

Sadly, it doesn't work that way. There's no guarantee (via locking like I was doing) that the factory method (the thing that populates the cache) won't get called twice. That is, someone could TryGetValue, get nothing, and continue on, while another thread is already in line to call the factory again.

    public static async Task<TItem> GetOrCreateAsync<TItem>(this IMemoryCache cache, object key, Func<ICacheEntry, Task<TItem>> factory)
    {
        if (!cache.TryGetValue(key, out object result))
        {
            var entry = cache.CreateEntry(key);
            result = await factory(entry);
            entry.SetValue(result);
            // need to manually call dispose instead of having a using
            // in case the factory passed in throws, in which case we
            // do not want to add the entry to the cache
            entry.Dispose();
        }

        return (TItem)result;
    }
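The race can be reproduced outside .NET. This Python sketch places a check-then-create helper with the same shape as the GetOrCreateAsync extension next to the lock-and-double-check pattern from the ShowDatabase code. The function names and the module-level `_lock` are hypothetical. As Bill points out, `_lock` only protects callers that go through `locked_get_or_create`.

```python
import threading


def naive_get_or_create(cache, key, factory):
    """Check-then-create with no lock, like GetOrCreateAsync."""
    if key not in cache:
        cache[key] = factory()  # two threads can both reach this line
    return cache[key]


_lock = threading.Lock()


def locked_get_or_create(cache, key, factory):
    """Optimistic check, then a second check under a lock."""
    if key in cache:  # optimistic: no lock on the hot path
        return cache[key]
    with _lock:
        if key not in cache:  # pessimistic: another thread may have filled it
            cache[key] = factory()
        return cache[key]
```

If the factory waits on a two-party `threading.Barrier`, both threads end up inside the naive factory, so the backend call happens twice and the last writer wins. The locked version runs its factory only once, no matter how many threads call it.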

Is this the end of the world? Not at all. Again, what is your project's definition of correct? Computer science correct? Guaranteed to always work correct? Spec correct? Mostly works and doesn't crash all the time correct?

Do I want to:

Actively and aggressively avoid making my expensive backend call at the risk of in fact having another part of the app make that call anyway?

What I am doing with my cacheKey is clearly not a "best practice" although it works today.

Accept that my backend call could happen twice in short succession and the last caller's thread would ultimately populate the cache.

My code would become a dozen lines simpler, have no process-wide locking, but also work adequately. However, it would be naïve caching at best. Even ConcurrentDictionary has no guarantees - "it is always possible for one thread to retrieve a value, and another thread to immediately update the collection by giving the same key a new value."

What a fun discussion. What are your thoughts?


© 2018 Scott Hanselman. All rights reserved.


Text


New Post has been published on http://topauto.site/crossover-aiways-u5-wor/

Aiways U5 crossover, the work of Roland Gumpert: production version

Aiways was founded in February 2017 by former senior executives of the SAIC group and of Volvo's Chinese operations. It has already raised $1.1 billion in investment, has a staff of seven hundred and an R&D center in Shanghai. However, the key figure in this project is the German engineer Roland Gumpert. He previously worked for Audi and became famous as the creator of the extreme Gumpert Apollo supercar. That project failed to take off, and in 2014 the company was closed, only to be reborn under a new name, Apollo, and without Roland. Gumpert himself then disappeared from the radar, but last year he was hired by the Chinese company Aiways.

It's hard to say what contribution Gumpert managed to make to the development of future models, because the first concept, the Aiways U5 Ion, debuted in the spring, when Roland had been with the company for less than a year. Now the production version has been shown, already without the Ion suffix. It is a crossover the size of a Hyundai Santa Fe: 4680 mm long, 1880 mm wide and 1680 mm tall. The final version keeps the look of the show car, though the details of almost every element have changed: headlights, bumpers, the contours of the glazing.

The door handles are switchblade-style, almost like an Aston Martin's: while driving they sit recessed, and when the central locking is released they extend a couple of inches so you can get your hand under them. The wheels are 19-inch, and the U5 has enviable aerodynamics for such a tall car: its drag coefficient Cd is 0.29.

The crossover is built on the company's own modular MAS platform. This platform has MacPherson front suspension and multi-link rear suspension, the traction battery sits under the cabin floor, the design makes wide use of aluminum and light alloys, and the wheelbase can range from 2700 to 3000 mm for different models. The U5's wheelbase is 2800 mm.

The Aiways U5 has only one motor, on the front axle, developing 190 HP and 315 Nm. The battery, mid-sized by today's standards at 63 kWh, provides a range of up to 460 km on the NEDC cycle. Buyers can also order an additional 18 kWh battery located under the boot floor; with it, the rated range increases by another 100 km.

The crossover's cabin has a virtual instrument cluster with a split screen, touch controls, climate control, power seats and a host of other equipment. The developers also boast of a Level 2 "autopilot", which is really just adaptive cruise control with radar and camera, although a more advanced Level 4 system is in development.

Series production of the Aiways U5 should begin in the second half of 2019. An assembly plant with a capacity of 150,000 electric cars per year has already been built in Ganga and will soon begin commissioning; in the future, capacity may be increased to 300,000 cars. The U5 will be followed by a number of models on the MAS platform, including a minivan and a pickup truck. But Roland Gumpert's main goal is to use Chinese funding to start production of very different cars: their prototype is the Gumpert RG Nathalie sports car.


Text


New Post has been published on http://topauto.site/electric-crossover-audi/

Audi e-tron electric crossover presented in production form

Formally, the production e-tron debuted two weeks after the Mercedes EQC electric car, even though Audi started preparing the public for its electric crossover three years ago with concepts and show cars. However, Daimler will only begin selling the EQC next summer, while the Audi e-tron has already entered production. In the real market battle of electric cars, the ball is in the "four rings'" court! How hard will it strike?

The Audi e-tron is not simply a version of the recently presented Q8, although the two look similar and are both based on the MLB Evo modular platform with double-wishbone front suspension and multi-link rear suspension. The car has its own body: it is 4901 mm long (against 4999 mm for the "eight") and has a shorter wheelbase (2928 mm instead of 2995 mm). The e-tron's width (1935 mm) and height (1616 mm) are also slightly less than the Q8's. The Mercedes EQC is smaller still (4656 mm long).

The electric Audi's aerodynamics have been worked out very well: a drag coefficient Cd of 0.28, against 0.34 for the Q8. The family grille has not yet become a big blank panel: it still has small openings for radiator cooling. The electric crossover has daytime running lights and taillights made of ultra-thin organic light-emitting diodes (OLEDs), which give extremely bright and uniform light. Low and high beams use "conventional" LEDs.

The interior follows the style of other new Audi models: a virtual instrument cluster, two screens on the center console and a four-spoke steering wheel already familiar from the A6, A7 and A8. But the electric car's interior can also get two more screens: they sit in the doors and show the image from the side rear-view cameras mounted on the doors. The Audi e-tron is competing with the Lexus ES sedan for the title of the world's first production car with cameras instead of side mirrors!

The seven-inch displays are placed at the best angle for the driver, and the image on them can be moved and zoomed with the usual smartphone finger gestures. There is a choice of three operating modes, optimized for highway driving, cornering and parking. However, the Audi e-tron comes with conventional mirrors by default, and it is not clear when the cameras will actually be available to order. So far they are legal only in Japan.

Another unusual interior feature is the transmission selector. The massive "hump" on the center tunnel is actually fixed; to choose a mode, you rock a switch on its side that sits under your thumb.

The interior is designed for five: because the central tunnel is almost absent, the middle rear passenger can sit quite comfortably. Equipment is as rich as in other Audis: options include different types of leather upholstery, four-zone climate control, a Bang & Olufsen sound system, a panoramic roof and much more.

Boot volume up to the parcel shelf is 600 liters, against 605 for the larger Audi Q8 and 500 for the Mercedes EQC. In addition, following the example of Tesla and the Jaguar I-Pace, there is a small 60-liter compartment under the hood, although most of the space there is taken up by control electronics and the charger.

So far the only version is the Audi e-tron 55 quattro. This crossover has two asynchronous electric motors (one per axle) with a combined output of 408 HP and 664 Nm. The Mercedes EQC has the same power but much more torque (765 Nm). The e-tron's peak output is available for no longer than one minute, after which it drops to a nominal 360 HP and 560 Nm. But the Audi has a larger lithium-ion battery: 95 kWh, against 80 kWh for the Mercedes and 90 kWh for the Jaguar. It sits under the cabin floor, weighs 700 kg and operates at 400 volts, and fast charging to 80% should take about half an hour. In Europe, Audi is part of the Ionity project, which includes installing 400 fast-charging stations, and its US partner is the Electrify America network, which plans to open two thousand terminals.

The developers promise that the Audi e-tron can travel about 400 km on a single charge (WLTP method). Acceleration to 100 km/h takes "less than six seconds" (5.1 s for the Mercedes), although the 0-60 mph (97 km/h) time is stated more precisely: 5.5 s. Top speed is limited to around 200 km/h.

Air suspension is standard even on the base car and can vary ground clearance over a 76 mm range. In off-road driving mode, the electronics raise the body by 35 mm above the standard position, and if the car gets stuck, a Raise function can briefly add another 15 mm of clearance. The Audi e-tron can tow a trailer weighing up to 1814 kg.

The crossovers are built at the company's Belgian plant, which used to make the first-generation Audi A1 compact hatchback. In 2016 the plant began to be rebuilt and converted for electric-car assembly, and production of the new A1 was moved to the Seat factory in Martorell, Spain. The Audi e-tron goes on sale in Europe before the end of 2018. The price in Germany is 79,900 euros, against 78,000 euros for the Jaguar I-Pace. US prices have already been announced, although deliveries there will only begin in the second quarter of 2019: $74,800 to $86,700.

By 2025, Audi's range will include 12 electric models in different classes. The e-tron GT concept coupe will be unveiled at the end of this year, followed by the promised e-tron Sportback coupe-crossover, a wagon and a liftback. The junior models will be built on Volkswagen Group's MEB modular platform, and the senior ones on PPE (Premium Platform Electric), which is already under development together with Porsche.


Text

wolfcrypt/src/ecc.c in wolfSSL before 3.15.1.patch allows a memory-cache side-channel…

SNNX.com: wolfcrypt/src/ecc.c in wolfSSL before 3.15.1.patch allows a memory-cache side-channel… http://dlvr.it/Qf2qPj
