#Javascript tools for your web developer


Text

Essentials You Need to Become a Web Developer

HTML, CSS, and JavaScript Mastery

Text Editor/Integrated Development Environment (IDE): Popular choices include Visual Studio Code and Sublime Text.

Version Control/Git: Platforms like GitHub, GitLab, and Bitbucket allow you to track changes, collaborate with others, and contribute to open-source projects.

Responsive Web Design Skills: Learn a CSS framework like Bootstrap, layout tools like Flexbox, and master media queries.

Understanding of Web Browsers: Familiarize yourself with browser developer tools for debugging and testing your code.

Front-End Frameworks: React, Angular, and Vue.js, for example, are powerful tools for building dynamic and interactive web applications.

Back-End Development Skills: Understand server-side languages and runtimes (e.g., Node.js, Python, Ruby, PHP) and databases (e.g., MySQL, MongoDB).

Web Hosting and Deployment Knowledge: Platforms like Heroku, Vercel, Netlify, or AWS can simplify deploying your site.

Basic DevOps and CI/CD Understanding

Soft Skills and Problem-Solving: Effective communication, teamwork, and problem-solving skills

Confidence in Yourself: Confidence is a powerful asset. Believe in your abilities, and don't be afraid to take on challenging projects. The more you trust yourself, the more you'll be able to tackle complex coding tasks and overcome obstacles with determination.

#code#codeblr#css#html#javascript#java development company#python#studyblr#progblr#programming#comp sci#web design#web developers#web development#website design#webdev#website#tech#html css#learn to code

2K notes


Note

Hello. I noticed that you said you were using Ren'py for your new game. What made you come to the conclusion that this was the best option, if you don't mind my asking? I am looking into developing something, but I'm unsure which program to use. I thought the point of Ren'py was more for visual novels. I am interested in making something that isn't as visual heavy. Do you think that's possible with Ren'py? Sorry for the randomness. Thank you for taking the time to read. Have a wonderful day!

Hello!

It depends on what you're looking to achieve.

If you want the bare minimum of functionality and the bare minimum of coding to learn, you can go with ChoiceScript. Fair warning, though: it's a proprietary language, so if you want to monetize/publish your work without having to adhere to their opaque content rules/cede 75% of your sales, I'd suggest you steer clear, but YMMV.

Twine is a little less easy to use, but offers more customization. It supports HTML/CSS/JavaScript, so what you can do is much broader than with ChoiceScript. Plus, monetizing your game is fine. I find that Twine is better suited to short-form IF than long-form, but it remains usable. Deploying your game in any form other than HTML (a web game) is a hassle, though, and isn't supported by Twine directly.

Ren'Py comes next; here you must make your own user interface (or you could just use the native NVL mode with no edits, which... will work for sure, but the presentation will be questionable), but I find it much more flexible than the other two options. It also comes with the added bonus of making it much easier to deploy your game to multiple operating systems and platforms, including web and mobile.

There are other tools (Ink?), so my advice would be to try them all and settle on the one that suits your needs.

Good luck with your project! And have a wonderful day as well.

60 notes


Text

Tumblr.js is back!

Hello Tumblr—your friendly neighborhood Tumblr web developers here. It’s been a while!

Remember the official JavaScript client library for the Tumblr API? tumblr.js? Well, we’ve picked it up, brushed it off, and released a new version of tumblr.js for you.

Having an official JavaScript client library for the Tumblr API means that you can interact with Tumblr in wild and wonderful ways. And we know as well as anybody how important it is to foster that kind of creativity.

Moving forward, this kind of creativity is something we’re committed to supporting. We’d love to hear about how you’re using it to build cool stuff here on Tumblr!

Some highlights:

NPF post creation is now supported via the createPost method.

The bundled TypeScript type declarations have been vastly improved and are generated from source.

Some deprecated dependencies with known vulnerabilities have been removed.

Intrigued? Have a look at the changelog or read on for more details.

Migrating

v4 includes breaking changes, so if you’re ready to upgrade from a previous release, there are a few things to keep in mind:

The callback API has been deprecated and is expected to be removed in a future version. Please migrate to the promise API.

There is no need to use returnPromises (the method or the option). A promise will be returned when no callback is provided.

createPost is a new method for NPF posts.

Legacy post creation methods have been deprecated.

createLegacyPost is a new method with the same behavior as createPost in previous versions (rename createPost to createLegacyPost to maintain existing behavior).

The legacy post creation helpers like createPhotoPost have been removed. Use createLegacyPost(blogName, { type: 'photo' }).

See the changelog for detailed release notes.
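The tumblr.js client is JavaScript, but the NPF content that `createPost` works with is plain JSON: a post is a list of typed content blocks. Below is a minimal, language-neutral sketch in Python of assembling such a body. It assumes only text blocks. `text_block` and `npf_body` are hypothetical helpers and are not part of tumblr.js or the Tumblr API.

```python
import json

def text_block(text, subtype=None):
    """Return one NPF text content block (hypothetical helper)."""
    block = {"type": "text", "text": text}
    if subtype is not None:
        # e.g. "heading1" asks Tumblr to render the block as a heading
        block["subtype"] = subtype
    return block

def npf_body(*blocks):
    """Wrap content blocks in a post body (hypothetical helper)."""
    return {"content": list(blocks)}

body = npf_body(
    text_block("tumblr.js is back!", subtype="heading1"),
    text_block("Posting with NPF content blocks."),
)
print(json.dumps(body, indent=2))
```

A list like `body["content"]` is the kind of NPF content you would hand to `createPost`. Check the tumblr.js README for the exact parameter names before relying on this shape.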

What’s in store for the future?

We'll continue to maintain tumblr.js, but we’d like to hear from you. What do you want? How can we provide the tools for you to continue making cool stuff that makes Tumblr great?

Let us know right here or file an issue on GitHub.

Some questions for you:

We’d like to improve types to make API methods easier to use. What methods are most important to you?

Are there API methods that you miss?

Tumblr.js is a Node.js library. Would you use it in the browser to build web applications?

229 notes


Text

Mini React.js Tips #1 | Resources ✨

I thought, why not share the React.js (JavaScript library) notes I made while studying? I will start from the very beginning with the basics, plus random notes I made along the way~!

Up first is what you'll need to know to start any basic simple React (+ Vite) project~! 💻

What you'll need:

node.js installed >> click

coding editor - I love Visual Studio Code >> click

basic knowledge of how to use the Terminal

What does the default React project look like?

Step-by-Step Guide

[ 1 ] Create a New Folder: Make a new folder on your computer (e.g. in Desktop, Documents, or wherever you like) that will serve as the home for your entire React project.

[ 2 ] Open in your coding editor (will be using VSCode here): Launch Visual Studio Code and navigate to the newly created folder. I normally 'right-click > show more options > Open with Code' on the folder in the File Explorer (Windows).

[ 3 ] Access the Terminal: Open the integrated terminal in your coding editor. In VSCode, open the 'Terminal' menu at the very top and click 'New Terminal'; the terminal should pop up at the bottom of the editor.

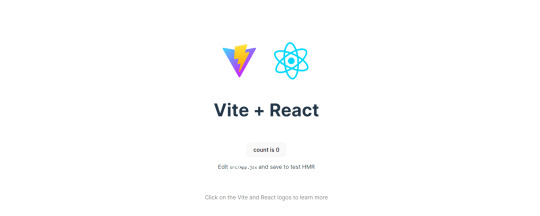

[ 4 ] Create the actual React project: Type the following command to initialize a new React project using Vite, a powerful build tool:

npm create vite@latest

[ 5 ] Name Your Project: Provide a name for your project when prompted.

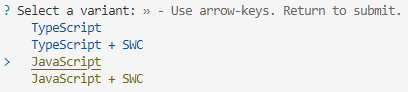

[ 6 ] Select 'React' as the Framework: Navigate through the options using the arrow keys on your keyboard and choose 'React'.

[ 7 ] Choose JavaScript Variant: Opt for the 'JavaScript' variant when prompted. This is the programming language you'll be using for your React application.

[ 8 ] Navigate to Project Folder: Move into the newly created project folder using the following command:

cd [your project name]

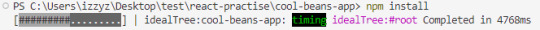

[ 9 ] Install Dependencies: Execute the command below to install the necessary dependencies for your React project (it might take a while):

npm install

[ 10 ] Run the Development Server: Start your development server with the following command:

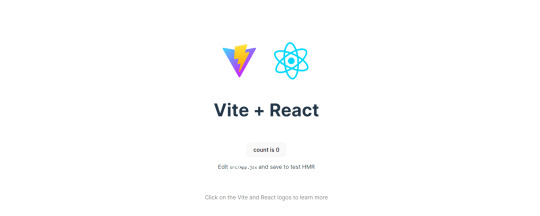

npm run dev

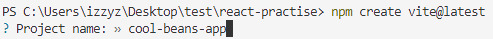

[ 11 ] Preview Your Project: Open the 'Local' link shown in your terminal in your web browser. You're now ready to see your React project in action!

Congratulations! You've successfully created your first React default project! You can look around the project structure like the folders and files already created for you!
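`npm run dev` works by looking up the `dev` entry in the `scripts` section of the project's `package.json`. In the Vite React template, that entry runs `vite`. npm also puts `node_modules/.bin` on the PATH before running the script. The small Python sketch below shows which command a given `npm run <name>` would execute. It assumes a `package.json` in the project folder, and `script_for` is a hypothetical helper.

```python
import json
from pathlib import Path

def script_for(name, project_dir="."):
    """Return the command `npm run <name>` would execute, or None if undefined."""
    manifest = Path(project_dir) / "package.json"
    # package.json is plain JSON; scripts maps script names to shell commands
    scripts = json.loads(manifest.read_text(encoding="utf-8")).get("scripts", {})
    return scripts.get(name)
```

From the project folder, `print(script_for("dev"))` should show the command behind `npm run dev`.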

BroCode's 'React Full Course for Free' 2024 >> click

React Official Website >> click

Stay tuned for the other posts I will make on this series #mini react tips~!

#mini react tips#my resources#resources#codeblr#coding#progblr#programming#studyblr#studying#javascript#react.js#reactjs#coding tips#coding resources

116 notes


Text

Funding FujoCoded: Stretch Goals!

It’s time! With our first goal met (🎉 thank you!), let’s talk about stretch goals. We have quite a few planned, so we're going to go through them one by one and explain what they are and why we chose them!

Before we go down the list, here's something fun:

Sticker Unlock: At 45 backers, we also unlocked one more sticker!

The goal of our campaign is to cover business expenses most of all. The unlocked content is an extra token of gratitude for your support that also helps us meet our own targets!

With that said, let's get to our stretch goals...

$4,000: "That's Why I Ship On Company Time" Ao3 Sticker

At $4,000 we'll unlock one more sticker design that you can add to your collection!

Our first version of this "shipping" sticker features VSCode and a terminal, but there's more than one type of shipping... here's to the other one!

$5,000: "Using NPM with Javascript" Article

Next up, we have our first article. Our plan is to add an Articles section to @fujowebdev where we'll collect simple, free guides to help beginners get past the roadblocks we see them encounter!

This first one will cover the basics of NPM, a core element of modern JavaScript!

"How do I install this JavaScript library? How do I run this open source JavaScript project? How can I get started creating my blog using a tool like @astrodotbuild?" are some of the most common questions we get in our Fandom Coders server.

Let's give *everyone* the answer!

$6,000: Offering Website Art Prints

Next up, we'll turn the excellent art on our website into prints! These will be (probably) 8x10-sized art prints that will look amazing without breaking the bank. Full specs soon!

...and speaking of the site, you have tried moving the windows, right?

$7,000: "Catching Up With Terminal" Article

Next, another common issue for beginner developers: how to get started with the Terminal.

This will require some research to determine the major roadblocks, which is how our project operates: active learning from those going through it all!

$8,000: "Crucial Confrontations" Article

And last (for now), something very dear to us: an article extracting some wisdom from the book "Crucial Confrontations": https://www.amazon.com/Crucial-Confrontations-Resolving-Promises-Expectations/dp/0071446524

This may seem like an unusual choice, but it highlights how our teaching goals go beyond programming to cover collaboration!

After years of working within our community, we repeatedly found that developing effective communication and confrontation skills helps our collaborators thrive. Unfortunately, the world doesn't teach us how to effectively (but kindly) hold each other accountable.

Some of our most involved collaborators have read this book and found the tools within it transformative. Given this experience, we deeply believe that making some of this wisdom easily accessible (without having to read the full book) will allow all of us to collaborate better!

If we can reach $8,000, this will enable us to test this hypothesis and learn how teaching soft skills beyond programming influences what we're able to achieve! It's a bold idea, but we're excited to see how it turns out in practice.

Help us make it there!

And that's all...for now!

If you want to hop on Twitch right now, you can join us as we put some extra polish on our shiny new FujoCoded website.

And remember, you can back our campaign here to help us achieve these goals and more:

23 notes


Text

How to Build Software Projects for Beginners

Building software projects is one of the best ways to learn programming and gain practical experience. Whether you want to enhance your resume or simply enjoy coding, starting your own project can be incredibly rewarding. Here’s a step-by-step guide to help you get started.

1. Choose Your Project Idea

Select a project that interests you and is appropriate for your skill level. Here are some ideas:

To-do list application

Personal blog or portfolio website

Weather app using a public API

Simple game (like Tic-Tac-Toe)

2. Define the Scope

Outline what features you want in your project. Start small and focus on the minimum viable product (MVP) — the simplest version of your idea that is still functional. You can always add more features later!
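Here is an MVP illustration: a minimal sketch of the to-do list idea from step 1. It can add tasks, complete them, and list what is pending, all held in memory, with no saving, accounts, or UI. The class and method names are hypothetical. Features like persistence would come in later iterations.

```python
from dataclasses import dataclass, field

@dataclass
class Task:
    title: str
    done: bool = False

@dataclass
class TodoList:
    tasks: list = field(default_factory=list)  # list of Task objects

    def add(self, title):
        """Add a task and return its index."""
        self.tasks.append(Task(title))
        return len(self.tasks) - 1

    def complete(self, index):
        """Mark the task at `index` as done."""
        self.tasks[index].done = True

    def pending(self):
        """Titles of tasks that are not done yet."""
        return [t.title for t in self.tasks if not t.done]

todo = TodoList()
first = todo.add("Pick a project idea")
todo.add("Define the MVP")
todo.complete(first)
print(todo.pending())  # ['Define the MVP']
```

Everything beyond these three operations is deliberately left out; that is what keeps it an MVP.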

3. Choose the Right Tools and Technologies

Based on your project, choose the appropriate programming languages, frameworks, and tools:

Web Development: HTML, CSS, JavaScript, React, or Django

Mobile Development: Flutter, React Native, or native languages (Java/Kotlin for Android, Swift for iOS)

Game Development: Unity (C#), Godot (GDScript), or Pygame (Python)

4. Set Up Your Development Environment

Install the necessary software and tools:

Code editor (e.g., Visual Studio Code or Sublime Text; Atom was discontinued in 2022)

Version control (e.g., Git and GitHub for collaboration and backup)

Frameworks and libraries (install via package managers like npm, pip, or RubyGems)

5. Break Down the Project into Tasks

Divide your project into smaller, manageable tasks. Create a to-do list or use project management tools like Trello or Asana to keep track of your progress.

6. Start Coding!

Begin with the core functionality of your project. Don’t worry about perfection at this stage. Focus on getting your code to work, and remember to:

Write clean, readable code

Test your code frequently

Commit your changes regularly using Git

7. Test and Debug

Once you have a working version, thoroughly test it. Look for bugs and fix any issues you encounter. Testing ensures your software functions correctly and provides a better user experience.
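Testing is easier when the game logic lives in small functions. Below is a minimal sketch for the Tic-Tac-Toe idea: a `winner` function plus unit tests using Python's built-in `unittest`. The board encoding (a 9-character string, with a space meaning empty) is an assumption chosen for simplicity.

```python
import unittest

# Every index triple that forms a line on a 3x3 board stored as a 9-char string.
LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]

def winner(board):
    """Return 'X' or 'O' if that player has three in a row, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None

class WinnerTests(unittest.TestCase):
    def test_row(self):
        self.assertEqual(winner("XXXOO    "), "X")

    def test_diagonal(self):
        self.assertEqual(winner("O X O X O"), "O")

    def test_full_board_without_winner(self):
        self.assertIsNone(winner("XOXXOOOXX"))

    def test_empty_board(self):
        self.assertIsNone(winner(" " * 9))
```

Saved as `test_game.py`, the tests run with `python -m unittest test_game`. When a bug turns up, add a test that reproduces it before fixing it.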

8. Seek Feedback

Share your project with friends, family, or online communities. Feedback can provide valuable insights and suggestions for improvement. Consider platforms like GitHub to showcase your work and get input from other developers.

9. Iterate and Improve

Based on feedback, make improvements and add new features. Software development is an iterative process, so don’t hesitate to refine your project continuously.

10. Document Your Work

Write documentation for your project. Include instructions on how to set it up, use it, and contribute. Good documentation helps others understand your project and can attract potential collaborators.

Conclusion

Building software projects is a fantastic way to learn and grow as a developer. Follow these steps, stay persistent, and enjoy the process. Remember, every project is a learning experience that will enhance your skills and confidence!

3 notes


Text

Fullstack .Net Free Workshop

Quality Thought, a leading software training institute with a strong reputation for delivering industry-ready IT education, is excited to offer a Free Workshop on Fullstack .NET Development on 12-July-2025. This workshop is an excellent opportunity for students, fresh graduates, job seekers, and working professionals to dive deep into one of the most in-demand technology stacks – the Microsoft .NET ecosystem.

This hands-on workshop is designed to introduce participants to the complete lifecycle of fullstack development using .NET technologies. From building dynamic backend systems to creating interactive frontend interfaces, attendees will gain exposure to modern web development practices and tools. The workshop also includes a Participation Certificate, adding value to your resume and professional profile.

What You’ll Learn:

ASP.NET Core: Understand how to build high-performance, cross-platform web applications using ASP.NET Core. Learn the basics of MVC architecture, routing, middleware, and authentication techniques to develop robust backend systems.

Entity Framework Core: Discover the power of Entity Framework Core, Microsoft’s modern ORM tool. Learn how to connect applications to databases, perform CRUD operations, and use LINQ queries efficiently through code-first and database-first approaches.

Blazor: Explore Blazor, a framework that lets you build interactive web UIs using C#. Whether it's Blazor Server or Blazor WebAssembly, you’ll learn how to create single-page applications (SPAs) without relying heavily on JavaScript.

RESTful APIs: Learn to design and develop RESTful APIs using ASP.NET Core Web API. Gain practical knowledge of creating endpoints, handling HTTP requests, and integrating APIs with frontend applications securely and efficiently.

Frontend Frameworks: Get introduced to popular frontend frameworks and how they work with .NET backends. This includes working with tools like Angular, React, or Blazor to build responsive and dynamic user interfaces.

Why Attend?

Free of Cost: Gain top-quality training without any fees.

Certificate of Participation: Receive a certificate to enhance your LinkedIn profile and resume.

Practical Learning: Hands-on sessions, real-world projects, and code walkthroughs.

Expert Guidance: Learn from experienced trainers with deep industry knowledge.

Career-Oriented Content: Tailored to meet the demands of current software development job roles.

Whether you're new to programming or looking to upgrade your skills, this workshop offers the perfect starting point to explore the world of fullstack .NET development. Don’t miss this chance to learn, code, and grow with Quality Thought. Register today and take the first step toward a successful career in software development!

#workshop#Education#Fullstack .Net#Free Workshop#Certification#Quality Thought#.Net Training#.Net Course

2 notes


Text

AI Code Generators: Revolutionizing Software Development

The way we write code is evolving. Thanks to advancements in artificial intelligence, developers now have tools that can generate entire code snippets, functions, or even applications. These tools are known as AI code generators, and they’re transforming how software is built, tested, and deployed.

In this article, we’ll explore AI code generators, how they work, their benefits and limitations, and the best tools available today.

What Are AI Code Generators?

AI code generators are tools powered by machine learning models (like OpenAI's GPT, Meta’s Code Llama, or Google’s Gemini) that can automatically write, complete, or refactor code based on natural language instructions or existing code context.

Instead of manually writing every line, developers can describe what they want in plain English, and the AI tool translates that into functional code.

How AI Code Generators Work

These generators are built on large language models (LLMs) trained on massive datasets of public code and text from sources like GitHub, Stack Overflow, and documentation. The AI learns:

Programming syntax

Common patterns

Best practices

Contextual meaning of user input

By learning statistical patterns from this data, the model predicts the code most likely to follow your prompt and outputs it.
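Real code generators use large neural networks. The core idea of "predict the next token from what came before" can still be shown with a toy bigram model: count which token follows which in some training code, then repeatedly emit the most frequent follower. This is a deliberately simplified stand-in, not how GPT, Code Llama, or Gemini are implemented. The tiny corpus and the function names are made up.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for every token, which tokens follow it in the corpus."""
    follows = defaultdict(Counter)
    for line in corpus:
        tokens = line.split()
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows

def complete(prompt, follows, max_tokens=8):
    """Greedily extend `prompt` with the most frequent next token."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        candidates = follows.get(tokens[-1])
        if not candidates:
            break  # this token was never followed by anything in training
        # ties go to the follower that was seen first
        tokens.append(candidates.most_common(1)[0][0])
    return " ".join(tokens)

corpus = [
    "for i in range ( n ) :",
    "for item in items :",
    "for i in range ( len ( items ) ) :",
]
model = train_bigrams(corpus)
print(complete("for", model))  # for i in range ( n ) :
```

LLMs replace the frequency table with a neural network that conditions on the whole context, not just the previous token, but the generate-one-token-at-a-time loop is the same shape.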

Benefits of AI Code Generators

1. Faster Development

Developers can skip repetitive tasks and boilerplate code, allowing them to focus on core logic and architecture.

2. Increased Productivity

With AI handling suggestions and autocompletions, teams can ship code faster and meet tight deadlines.

3. Fewer Errors

Because generators tend to reproduce common, well-established patterns, they can help reduce syntax errors and improve code quality, though their output still needs review.

4. Learning Support

AI tools can help junior developers understand new languages, patterns, and libraries.

5. Cross-language Support

Most tools support multiple programming languages like Python, JavaScript, Go, Java, and TypeScript.

Popular AI Code Generators

| Tool | Highlights |
|---|---|
| GitHub Copilot | Originally powered by OpenAI Codex; integrates with VSCode and JetBrains IDEs |
| Amazon CodeWhisperer (now part of Amazon Q Developer) | AWS-native tool for generating and securing code |
| Tabnine | Predictive coding with local + cloud support |
| Replit Ghostwriter | Ideal for building full-stack web apps in the browser |
| Codeium | Free and fast with multi-language support |
| Keploy | AI-powered test case and stub generator for APIs and microservices |

Use Cases for AI Code Generators

Writing functions or modules quickly

Auto-generating unit and integration tests

Refactoring legacy code

Building MVPs with minimal manual effort

Converting code between languages

Documenting code automatically

Example: Generate a Function in Python

Prompt: "Write a function to check if a number is prime"

AI Output:

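```python
def is_prime(n):
    # 0, 1 and negative numbers are not prime
    if n <= 1:
        return False
    # A composite n always has a divisor no larger than sqrt(n)
    for i in range(2, int(n**0.5) + 1):
        if n % i == 0:
            return False
    return True
```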

In seconds, the generator creates a clean, functional block of code that can be tested and deployed.

Challenges and Limitations

Security Risks: Generated code may include unsafe patterns or vulnerabilities.

Bias in Training Data: AI can replicate errors or outdated practices present in its training set.

Over-reliance: Developers might accept code without fully understanding it.

Limited Context: Tools may struggle with highly complex or domain-specific tasks.

AI Code Generators vs Human Developers

AI is not here to replace developers—it’s here to empower them. Think of these tools as intelligent assistants that handle the grunt work, while you focus on decision-making, optimization, and architecture.

Human oversight is still critical for:

Validating output

Ensuring maintainability

Writing business logic

Securing and testing code
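One cheap way to validate generated code like the `is_prime` example is to compare it against a slow but obviously correct reference on many inputs. Below is a minimal sketch. `is_prime_reference` and `find_mismatches` are hypothetical names, and `is_prime` is copied from the AI output so the block runs on its own.

```python
def is_prime(n):
    # AI-generated version from the example above
    if n <= 1:
        return False
    for i in range(2, int(n**0.5) + 1):
        if n % i == 0:
            return False
    return True

def is_prime_reference(n):
    """Slow but obviously correct: n > 1 with no divisor in 2..n-1."""
    return n > 1 and all(n % d != 0 for d in range(2, n))

def find_mismatches(candidate, reference, inputs):
    """Return every input where the two implementations disagree."""
    return [n for n in inputs if candidate(n) != reference(n)]

print(find_mismatches(is_prime, is_prime_reference, range(-10, 2000)))  # []
```

An empty list does not prove correctness, but a non-empty one pinpoints exactly which inputs to investigate.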

AI for Test Case Generation

Tools like Keploy go beyond code generation. Keploy can:

Auto-generate test cases and mocks from real API traffic

Help reach high test coverage (Keploy cites over 90%)

Speed up testing for microservices, saving hours of QA time

Keploy bridges the gap between coding and testing—making your CI/CD pipeline faster and more reliable.
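The idea behind generating tests from real traffic can be sketched as record-and-replay: capture each call's inputs and output while the code runs normally, then replay the recordings later as regression checks. This is a hypothetical, in-process illustration of the concept only. The `recorded` decorator and `replay` function are invented here and are not Keploy's API, which captures real API traffic.

```python
import functools

recordings = []  # (function name, args, kwargs, result) captured at runtime

def recorded(func):
    """Decorator that logs every call and its result for later replay."""
    @functools.wraps(func)  # also exposes the original as func.__wrapped__
    def wrapper(*args, **kwargs):
        result = func(*args, **kwargs)
        recordings.append((func.__name__, args, kwargs, result))
        return result
    return wrapper

def replay(funcs):
    """Re-run each recording against `funcs` and return the failures."""
    failures = []
    for name, args, kwargs, expected in list(recordings):  # iterate a snapshot
        actual = funcs[name](*args, **kwargs)
        if actual != expected:
            failures.append((name, args, expected, actual))
    return failures

@recorded
def price_with_tax(amount, rate=0.2):
    return round(amount * (1 + rate), 2)

# "Real traffic": ordinary calls are captured as test cases.
price_with_tax(10)
price_with_tax(99.99, rate=0.05)

# Later, replay against the undecorated function so replaying records nothing new.
print(replay({"price_with_tax": price_with_tax.__wrapped__}))  # []
```

If someone later changes `price_with_tax`, replaying the recordings against the new version flags every call whose output changed.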

Final Thoughts

AI code generators are changing how modern development works. They help save time, reduce bugs, and boost developer efficiency. While not a replacement for skilled engineers, they are powerful tools in any dev toolkit.

The future of software development will be a blend of human creativity and AI-powered automation. If you're not already using AI tools in your workflow, now is the time to explore. Want to test your APIs using AI-generated test cases? Try Keploy and accelerate your development process with confidence.

2 notes


Text

What Is The Difference Between Web Development & Web Design?

In today’s world, eCommerce businesses are growing in popularity. Web design and web development are two major disciplines that make a difference for eCommerce businesses, and they work together to publish a website successfully. But what’s the difference between a web designer in Dubai and a web developer?

Put simply, web designers design and developers code. But this is a simplified answer, and a superficial understanding of the two will not clear up your doubts; it may even add to them. Let us delve deeper into the concepts, roles and differences between web development and website design Abu Dhabi.

What Is Meant By Web Design?

Web design encompasses everything within the purview of a website’s visual aesthetics and usability. This might include colour, theme, layout, colour scheme, the flow of information and any visual feature that can affect the website's user experience.

Web design covers all the exterior decoration, including the images and layout that one can view on a mobile or laptop screen. It has nothing to do with the hidden mechanism beneath the attractive surface of a website. Some of the skills and tools web designers in Dubai use, which set their work apart from web development, are as follows:

● Graphic design

● UI designs

● Logo design

● Layout

● Topography

● UX design

● Wireframes and storyboards

● Colour palettes

And anything else that can enhance the website’s visual aesthetics. A straightforward yet unparalleled website design Abu Dhabi can earn you higher conversion rates. It can also build brand loyalty, which is the key to a successful eCommerce business.

What Is Meant By Web Development?

While web design concerns itself with a website’s visual and exterior factors, web development focuses on the interior and the code. A web developer’s task is to write and maintain the code that makes a website work. Web development can be divided into two categories: front end and back end.

The front end deals with the code that determines how the website displays the designs mocked up by a designer. The back end deals with managing the data in the database and forwarding that data to the front end for display. Some web development tools used by a website design company in Dubai are:

● JavaScript/HTML/CSS preprocessors

● Template design for web

● GitHub and Git

● On-site search engine optimisation

● Front-end frameworks such as Ember, React or AngularJS

● Programming languages on the server side, including PHP, Python, Java, C#

● Web development frameworks on the server side, including Ruby on Rails, Symfony, .NET

● Database management systems including MySQL, MongoDB, PostgreSQL
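To make the front-end/back-end split concrete: the back end reads data from a database and hands it to the front end, typically as JSON, and the front end decides how to display it. Below is a minimal Python sketch using the standard library's in-memory SQLite. The `products` table and both functions are hypothetical.

```python
import json
import sqlite3

def make_db():
    """Create a throwaway in-memory database with sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO products (name, price) VALUES (?, ?)",
        [("Mug", 12.5), ("T-shirt", 20.0)],
    )
    return conn

def products_as_json(conn, max_price):
    """Back-end job: query the database and serialize rows for the front end."""
    rows = conn.execute(
        "SELECT id, name, price FROM products WHERE price <= ? ORDER BY id",
        (max_price,),  # parameterized query, never string formatting
    ).fetchall()
    return json.dumps([{"id": i, "name": n, "price": p} for i, n, p in rows])

conn = make_db()
print(products_as_json(conn, 15))  # [{"id": 1, "name": "Mug", "price": 12.5}]
```

In a real site this function would sit behind an HTTP endpoint, and front-end JavaScript would fetch the JSON and render it.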

Web Designers vs. Web Developers- Differences

By now you should have a sense of how web design differs from web development. The following points highlight the differences between the jobs of web developers and web designers.

Generally, Coding Is Not A Cup Of Tea For Web Designers:

Don’t expect most web designers in Dubai to have deep coding knowledge. Many know little about coding; their focus is enhancing a website’s visual aspects and making them more eye-catching.

For this, they might use a visual editor like Photoshop to create images, animation tools, and an app prototyping tool such as InVision Studio to design the website's layouts. None of these require any coding knowledge.

Web Developers Do Not Work On Visual Assets:

Web developers add functionality to a website with their coding skills. This includes translating the designer’s mockups and wireframes into code using JavaScript, HTML and CSS. While visual assets are created entirely by designers, developers use code to apply those colour schemes, fonts and layouts to the web page.
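Part of that translation is turning the designer's colours and fonts into CSS the browser can apply. Below is a small Python sketch that emits CSS custom properties a stylesheet can reuse via `var(--name)`. It assumes the mockup's values were handed over as a dictionary of "design tokens"; the token names and values here are made up.

```python
def tokens_to_css(tokens, selector=":root"):
    """Render {name: value} design tokens as CSS custom properties."""
    lines = [f"{selector} {{"]
    for name, value in tokens.items():
        lines.append(f"  --{name}: {value};")
    lines.append("}")
    return "\n".join(lines)

design_tokens = {
    "color-primary": "#1a73e8",
    "color-text": "#202124",
    "font-body": '"Inter", sans-serif',
}
print(tokens_to_css(design_tokens))
```

A rule such as `color: var(--color-text);` then picks up the designer's value, so a palette change only touches the token list.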

Hiring A Web Developer Is Expensive:

Web developers are more expensive to hire, simply because of supply and demand. Web designers are more readily available, as their job is simpler in one respect: it doesn't require learning to code. Coding is undoubtedly a highly sought-after skill that not everyone has.

Final Thoughts:

So if you are planning to create a website, you might be confused about whether to hire a web designer or a web developer. Technically, a website needs both. So look for a website design company that offers both services and can support your business's healthy growth.

2 notes


Text

When it comes to building high-performance, scalable, and modern web applications, Node.js continues to stand out as a top choice for developers and businesses alike. It’s not just another backend technology; it’s a powerful JavaScript runtime that transforms the way web apps are developed and experienced.

Whether you're building a real-time chat app, a fast eCommerce platform, or a large-scale enterprise tool, Node.js has the flexibility and speed to meet your needs.

⚡ High Speed – Powered by Google’s V8 engine.

🔁 Non-blocking I/O – Handles multiple requests smoothly.

🌐 JavaScript Everywhere – Frontend + backend in one language.

📦 npm Packages – Huge library for faster development.

📈 Built to Scale – Great for real-time and high-traffic apps.

🤝 Strong Community – Get support, updates, and tools easily.
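Non-blocking I/O means a single thread does not sit idle while one request waits on the network or disk. Instead, it starts other work and resumes each request when its data arrives. Node.js does this with its event loop. The same idea can be sketched with Python's `asyncio` as a stand-in; this is an analogy, not Node's implementation, and `asyncio.sleep` simulates a slow database or API call.

```python
import asyncio
import time

async def handle_request(request_id, io_delay=0.2):
    """Simulate one request whose work is mostly waiting on I/O."""
    await asyncio.sleep(io_delay)  # yields to the event loop while "waiting"
    return f"response {request_id}"

async def serve(n_requests):
    # All requests wait concurrently on a single thread.
    return await asyncio.gather(*(handle_request(i) for i in range(n_requests)))

start = time.perf_counter()
responses = asyncio.run(serve(5))
elapsed = time.perf_counter() - start
print(responses)
print(f"{elapsed:.2f}s")  # roughly 0.2s, not 5 x 0.2s = 1.0s
```

The benefit applies to I/O-bound waiting; CPU-heavy work still blocks the single thread, in Node.js as in this sketch.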

Your next web app? Make it real-time, responsive, and ridiculously efficient. Make it with Node.js.

#nodejs development company in usa#node js development company#nodejs#top nodejs development company#best web development company in usa

2 notes


Text

The Complete Manual for SEO in 2025: Methods to Rule Search Results

Digital marketing

Search engine optimization, or SEO, is always changing, because Google's algorithms are becoming more intelligent and user behavior keeps shifting. To stay ahead of the curve in 2025, you will need to combine technical know-how, excellent content, and strategic link-building. Whether you are a blogger, digital marketer, or business owner, learning SEO can help you drive organic traffic and increase conversions.

In this guide, we will examine the most recent SEO tactics to help you dominate search engine rankings in 2025.

1. Recognizing SEO in 2025

Enhancing your website's visibility on search engines such as Google and Bing is known as SEO. A higher ranking for pertinent keywords, organic traffic, and improved user experience are the objectives.

Search engines prioritize relevance, authority, and high-quality content. Thanks to developments in AI and machine learning, Google's algorithms have become increasingly good at understanding search intent and rewarding websites that offer genuine value.

2. Keyword Analysis: The Basis for SEO

The foundation of SEO is still keyword research. But in 2025, search intent will be more important than ever. Take into account the following rather than just high-volume keywords:

Long-tail keywords: Longer, more specific phrases that usually have higher conversion rates and less competition.

Semantic keywords: Include synonyms and variations of your primary keyword because Google's algorithms can comprehend related terms.

Question-based keywords: Many users search with phrases like "how to," "best way to," or "why does." Answering these questions increases your chances of appearing in featured snippets.

The Top Resources for Researching Keywords:

Google Keyword Planner: A free and helpful tool for both PPC and organic search insights.

Ahrefs: Offers competitor analysis, search volume, and keyword difficulty.

SEMrush: Provides backlink analysis, site audits, and keyword research.

AnswerThePublic: Makes it easier to find frequently asked questions about your subject.

3. Optimizing Your Content with On-Page SEO

Improving search rankings by optimizing individual pages is known as on-page SEO. Here's how to make your content better:

a. Meta descriptions and title tags

Your title tag should contain your target keyword, be compelling, and be no more than 60 characters. Likewise, your meta description should stay within about 160 characters, summarize your content, and entice readers to click.
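Those guidelines are easy to check automatically. Below is a minimal sketch using the 60- and 160-character limits from the paragraph above. Search engines actually truncate snippets by display width, so treat the limits as rules of thumb. `check_snippet` is a hypothetical helper.

```python
TITLE_MAX = 60         # characters, per the guideline above
DESCRIPTION_MAX = 160  # characters, per the guideline above

def check_snippet(title, description, keyword):
    """Return a list of problems with a page's title tag and meta description."""
    problems = []
    if len(title) > TITLE_MAX:
        problems.append(f"title is {len(title)} chars (max {TITLE_MAX})")
    if keyword.lower() not in title.lower():
        problems.append(f"title does not contain keyword {keyword!r}")
    if len(description) > DESCRIPTION_MAX:
        problems.append(f"description is {len(description)} chars (max {DESCRIPTION_MAX})")
    return problems

print(check_snippet(
    "SEO Strategies for 2025: A Complete Guide",
    "Learn keyword research, on-page, technical and off-page SEO tactics for 2025.",
    "SEO strategies",
))  # []
```

Running a check like this over every page before publishing catches truncated titles early; whether a title is compelling still needs a human eye.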

b. Header tags, such as H1, H2, and H3.

Put the main title in the H1.

Utilize H2s and H3s for subheadings to enhance structure and readability.

Use primary and secondary keywords naturally.

c. Optimizing URLs

A clean, descriptive URL helps both search engines and users. Example: a bad URL is www.example.com/p=12345, while a good URL is www.example.com/seo-strategies-2025.
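Descriptive URLs like the good example are usually produced by "slugifying" the page title. Below is a minimal sketch using only the standard library. The exact rules (lowercase, ASCII only, hyphens between words) are a common convention, not a search-engine requirement.

```python
import re
import unicodedata

def slugify(title):
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + accent, then drop the non-ASCII accents.
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_title.lower())
    return slug.strip("-")

print(slugify("SEO Strategies 2025!"))  # seo-strategies-2025
```

Once a page is published, keep its slug stable; changing it later means setting up redirects.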

d. Internal Linking

Linking to other relevant pages on your website distributes link equity, improves navigation, and keeps users engaged longer.

e. Image Optimization

To speed up page loads, reduce the size of images.

To help search engines understand images, use alt text to describe them.
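Missing alt text can be found with the standard library's HTML parser. Below is a minimal sketch that flags `<img>` tags with no `alt` attribute at all. An empty `alt=""` is valid for purely decorative images, so the sketch does not flag it. The sample markup is made up.

```python
from html.parser import HTMLParser

class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if "alt" not in attributes:
                self.missing.append(attributes.get("src", "?"))

html = """
<img src="team.jpg" alt="Our support team at the office">
<img src="chart.png">
<img src="divider.svg" alt="">
"""
finder = MissingAltFinder()
finder.feed(html)
print(finder.missing)  # ['chart.png']
```

Self-closing tags such as `<img src="a.png" />` are also caught, because `HTMLParser` routes them through `handle_starttag` by default.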

4. Improving Website Performance with Technical SEO

Technical SEO focuses on improving a site's backend so that search engines can crawl and index it more easily.

a. Mobile optimization

Because Google now uses mobile-first indexing, make sure your website is responsive. Google retired its standalone Mobile-Friendly Test in December 2023, but tools such as Lighthouse (built into Chrome DevTools) can still be used to check your mobile performance.

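b. Page speed and Core Web Vitals

Google considers page speed and Core Web Vitals, namely Largest Contentful Paint (LCP), Interaction to Next Paint (INP, which replaced First Input Delay, or FID, in March 2024), and Cumulative Layout Shift (CLS), when ranking pages. Boost speed by: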

Using a content delivery network (CDN).

Optimizing images and videos.

Enabling browser caching and minifying JavaScript and CSS.
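To interpret Core Web Vitals data, it helps to compare each metric against Google's published thresholds: LCP is "good" at 2.5 seconds or less, INP at 200 ms or less, and CLS at 0.1 or less, assessed at the 75th percentile of page loads. The sketch below classifies a set of hypothetical field measurements; the rate helper is illustrative and not part of any Google library.

```javascript
// Published "good" and "poor" boundaries for each Core Web Vital,
// intended to be applied to the 75th percentile of page loads.
const THRESHOLDS = {
  LCP: { good: 2500, poor: 4000 }, // milliseconds
  INP: { good: 200, poor: 500 },   // milliseconds
  CLS: { good: 0.1, poor: 0.25 },  // unitless layout-shift score
};

// Illustrative helper: classifies one measurement.
function rate(metric, value) {
  const { good, poor } = THRESHOLDS[metric];
  if (value <= good) return "good";
  if (value <= poor) return "needs improvement";
  return "poor";
}

// Hypothetical field data for one page.
const measured = { LCP: 3100, INP: 180, CLS: 0.02 };
for (const [metric, value] of Object.entries(measured)) {
  console.log(`${metric}: ${value} -> ${rate(metric, value)}`);
}
// LCP: 3100 -> needs improvement
// INP: 180 -> good
// CLS: 0.02 -> good
```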

c. Make Your Website Secure with HTTPS

Google favors secure websites: HTTPS is a confirmed, if lightweight, ranking signal. If your site still uses HTTP, migrate it to HTTPS to improve both search rankings and user confidence.
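Many hosts and CDNs offer an HTTP-to-HTTPS redirect as a simple setting. If you run your own Node.js server, a minimal sketch of the redirect looks like the following. CANONICAL_HOST is a hypothetical placeholder; hard-coding it avoids building redirect targets from the client-supplied Host header. It also assumes HTTPS itself is already configured.

```javascript
// Hypothetical canonical hostname for the site.
const CANONICAL_HOST = "www.example.com";

// Permanently (301) redirects any HTTP request to the same path and
// query string on HTTPS, so search engines consolidate on the HTTPS URLs.
function redirectToHttps(req, res) {
  res.writeHead(301, { Location: `https://${CANONICAL_HOST}${req.url}` });
  res.end();
}

// To serve it for real (port 80 usually needs elevated privileges):
// require("node:http").createServer(redirectToHttps).listen(80);
```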

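d. XML sitemap and robots.txt

Submit an up-to-date XML sitemap to Google Search Console to help ensure that all of your key pages are indexed. You can also use a robots.txt file to keep crawlers away from unnecessary pages; note that robots.txt controls crawling, not indexing, so a blocked URL can still appear in results if other sites link to it.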
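A sitemap is a plain XML file that follows the sitemaps.org protocol, so it can be generated with a few lines of code. This sketch assumes a hypothetical list of pages with last-modified dates and a hypothetical /cart/ section that you don't want crawled:

```javascript
// Escapes XML special characters so URLs are safe inside <loc>.
const XML_ESCAPES = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&apos;" };
function escapeXml(text) {
  return text.replace(/[&<>"']/g, (ch) => XML_ESCAPES[ch]);
}

// Builds a minimal sitemap in the sitemaps.org format.
function buildSitemap(pages) {
  const entries = pages.map(
    ({ url, lastmod }) =>
      `  <url>\n    <loc>${escapeXml(url)}</loc>\n    <lastmod>${lastmod}</lastmod>\n  </url>`
  );
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

// robots.txt that blocks the hypothetical /cart/ section and points crawlers to the sitemap.
function buildRobotsTxt(sitemapUrl) {
  return ["User-agent: *", "Disallow: /cart/", `Sitemap: ${sitemapUrl}`].join("\n");
}

const pages = [
  { url: "https://www.example.com/", lastmod: "2025-01-15" },
  { url: "https://www.example.com/seo-strategies-2025", lastmod: "2025-01-10" },
];
console.log(buildSitemap(pages));
console.log(buildRobotsTxt("https://www.example.com/sitemap.xml"));
```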

5. Developing Authority and Trust through Off-Page SEO

a. Link building

Backlinks remain an important ranking factor, and quality matters more than quantity. Focus on:

Guest posting on reputable websites.

Creating high-quality content that earns links naturally.

Broken link building: finding broken links on other websites and suggesting your relevant content as a replacement.

Using HARO (Help A Reporter Out) to earn backlinks from news websites.

b. Brand mentions on social media

Although social signals are not direct ranking factors, a strong social media presence can boost credibility and drive traffic.

c. Local SEO and online reviews

If your business operates locally, encourage customers to leave reviews on your Google Business Profile (formerly Google My Business) and on Yelp. Consistent local citations that list your company's name, address, and phone number (NAP) also help your local SEO rankings.
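Inconsistent NAP details across directories often come down to formatting. As a rough sketch, the check below normalizes each citation before comparing it with your reference listing. The business details, sources, and the simple street/suite abbreviation rules are all hypothetical, and real address matching usually needs more rules than this.

```javascript
// Normalizes name/address/phone so formatting differences don't count as mismatches.
function normalizeNap({ name, address, phone }) {
  const clean = (s) => s.toLowerCase().replace(/[^a-z0-9]+/g, " ").trim();
  return {
    name: clean(name),
    // Naive abbreviation rules; extend for your region.
    address: clean(address).replace(/\bstreet\b/g, "st").replace(/\bsuite\b/g, "ste"),
    phone: phone.replace(/\D/g, ""), // keep digits only
  };
}

// Returns the citation sources whose NAP differs from the reference listing.
function findNapMismatches(reference, citations) {
  const ref = normalizeNap(reference);
  return citations
    .filter(({ nap }) => {
      const n = normalizeNap(nap);
      return n.name !== ref.name || n.address !== ref.address || n.phone !== ref.phone;
    })
    .map(({ source }) => source);
}

const reference = { name: "Example Bakery", address: "12 Main Street, Suite 4", phone: "(555) 010-2030" };
const citations = [
  { source: "Yelp", nap: { name: "Example Bakery", address: "12 Main St., Ste 4", phone: "555-010-2030" } },
  { source: "Local directory", nap: { name: "Example Bakery", address: "14 Main St", phone: "555 010 2030" } },
];
console.log(findNapMismatches(reference, citations)); // [ 'Local directory' ]
```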

7. The Growth of Voice and Zero-Click Searches

It is imperative to optimize for voice search given the popularity of smart assistants like Alexa and Siri.

Use FAQs and natural-language, conversational keywords.

Because many voice searches are location-based, make sure your website is optimized for local search.

Zero-click searches, in which Google answers the query directly on the search engine results page (SERP), are also increasing. To appear in featured snippets:

Give succinct, well-organized responses.

For clarity, use lists and bullet points.

8. The Synergy of Content Marketing and SEO

Content marketing and SEO are closely related. To succeed in 2025, focus on:

Long-form content (1,500+ words) that offers comprehensive answers.

Multimedia integration (videos, infographics, and podcasts).

User engagement: encourage sharing, comments, and conversations.

9. Tracking and Enhancing SEO Results

Use resources such as:

Google Analytics: Monitors conversions, traffic, and bounce rates.

Google Search Console: Provides data on indexing and keyword performance.

Ahrefs and SEMrush: Provide competitor and backlink analysis.

Key metrics to monitor:

Organic traffic growth.

Keyword rankings.

Click-through rate (CTR).

Bounce rate and dwell time.
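Two of these metrics are simple ratios worth computing consistently: CTR is clicks divided by impressions, and traffic growth is the percentage change between two periods. A minimal sketch with hypothetical numbers (Search Console reports CTR for you, but the same arithmetic applies to exported data):

```javascript
// Click-through rate as a percentage: share of impressions that led to a click.
function clickThroughRate(clicks, impressions) {
  return impressions === 0 ? 0 : (clicks / impressions) * 100;
}

// Percentage change between two periods; `previous` must be non-zero.
function percentChange(previous, current) {
  return ((current - previous) / previous) * 100;
}

// Hypothetical numbers, e.g. exported from Search Console and Analytics.
console.log(`CTR: ${clickThroughRate(120, 4000).toFixed(1)}%`);           // CTR: 3.0%
console.log(`Organic growth: ${percentChange(2000, 2500).toFixed(1)}%`); // Organic growth: 25.0%
```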

Conclusion: Make Your SEO Strategy Future-Proof

SEO is an ongoing process that requires continual adjustment. Creating valuable content, keeping up with algorithm changes, and focusing on technical optimization are all ways to maintain high search rankings in 2025.

Ready to make your website more successful? Put these tactics into practice right now, and see how your organic traffic increases!

Aamod ItSolutions: Crafting Custom Websites with Modern Technologies for Your Business’s Success

In today’s digital world, having an effective website is crucial for business success. Aamod ItSolutions offers expert web design, development, and marketing services that help businesses make the most of their online platforms. Our team of skilled designers and developers uses modern technologies to build custom, user-friendly, and reliable websites tailored to your specific needs.

Why Web Development Matters

A website serves as a business’s online identity. It enables businesses to reach a wider audience, engage with customers, and boost sales. A professionally developed website builds trust, enhances credibility, and positions your business as a leader in the market.

At Aamod ItSolutions, we focus on delivering websites that provide excellent user experience (UX), performance, and scalability. We use a range of modern technologies to make sure your site meets your business goals efficiently.

Technologies We Use

Laravel: Laravel is a powerful PHP framework used for building secure, scalable web applications. It simplifies development with features like Eloquent ORM for database management and Blade templating for creating dynamic views. Laravel is great for complex applications with robust security features.

CodeIgniter: CodeIgniter is a lightweight PHP framework known for its speed and simplicity. It’s ideal for developers looking for quick setups and minimal configuration. With its MVC architecture, CodeIgniter is perfect for building fast, high-performance websites, especially when project deadlines are tight.

CakePHP: CakePHP is another PHP framework that streamlines the development process with built-in features like form validation and security components. It helps deliver web apps quickly without compromising quality. CakePHP is ideal for projects that need rapid development with a focus on database-driven applications.

Node.js: Node.js is a JavaScript runtime for building fast, scalable server-side applications. It is especially useful for real-time web apps such as chat applications or live notifications. Its non-blocking, event-driven I/O lets a single process handle many simultaneous connections efficiently.
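To illustrate what non-blocking I/O means in practice, this sketch uses timers as stand-ins for slow operations such as database queries (fakeQuery is hypothetical). Because Node doesn't wait for one operation to finish before starting the next, three 300 ms operations started together complete in roughly 300 ms rather than 900 ms:

```javascript
// Hypothetical slow I/O call (e.g. a database query), simulated with a timer.
// While it waits, the event loop is free to start and service other work.
function fakeQuery(name, delayMs) {
  return new Promise((resolve) => setTimeout(() => resolve(`${name} done`), delayMs));
}

async function main() {
  const start = Date.now();
  // All three "queries" start immediately and are awaited together.
  const results = await Promise.all([
    fakeQuery("users", 300),
    fakeQuery("orders", 300),
    fakeQuery("inventory", 300),
  ]);
  const elapsed = Date.now() - start;
  console.log(results, `took ~${elapsed} ms`); // roughly 300 ms, not 900 ms
  return { results, elapsed };
}

const run = main();
```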

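AngularJS: AngularJS is a framework developed by Google for building dynamic, single-page applications (SPAs). Its features like two-way data binding and dependency injection make it well suited to building interactive user interfaces that update in real time without reloading the entire page. Note that AngularJS (version 1.x) reached end of life in January 2022; its successor, Angular, is the actively maintained option for new projects.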

Why Choose Aamod ItSolutions?

At Aamod ItSolutions, we use the latest tools and technologies to build high-performance, secure, and user-friendly websites that help you grow your business. Whether you’re a startup or a large corporation, we create custom solutions that align with your objectives.

We understand that every business has unique needs. That’s why we choose the right technology for each project to ensure optimum results. By working with us, you can expect a website that provides a seamless experience for your users and contributes to your business growth.

Let Aamod ItSolutions help you create a powerful online presence that engages customers and drives business success.

Anyone can program (yes, even you)

"Programming is easy"

I've seen some variations of this statement shared around the site recently, always with good intentions of course, but it got me thinking.

Is that really true?

Well, it certainly isn't hard in the way some developers would have you believe: a great skill bestowed only upon the greatest of minds, the ones making the world work. You'd better be thankful.

That is just elitist gibberish. If anyone ever tells you that programmers are "special people" in that way, or tries to sell you on the idea of "real" programmers that are somehow better than the rest, you can safely walk in the other direction. They have nothing of value to tell you.

But I think the answer is more complicated than a simple "Yes, programming is easy" too. In all honesty, I don't think it's an easy thing to "just pick up" at all. It can be very unintuitive at first to wrap your head around just how to tell a computer to solve certain problems.

One person in the codeblr Discord server likened it to cooking. That's a skill that can be very hard, but it's also something that everyone can learn. Anyone can cook. And anyone can program.

I really mean that. No need to be good at maths, to know what a bit is or whatever it is people told you you need. You're not too old to learn it either, or too young for that matter. If you want to start programming (and you can read this post), you already have everything you need. You can write your first little programs today!

One of the cool things about programming is that you can just fuck around and try lots of stuff, and it's fine. Realistically, the worst thing that can happen is that it doesn't work the way you imagined. But you'll never accidentally trigger the fire alarm or burn your house down, so feel free to just try a bunch of stuff.

"Okay I want to learn programming now, what do I do?"

That's awesome, I love the enthusiasm! As much as I'd love to just hand you a resource and tell you to build a thing, you still have to choose what you want to learn first. The options I'd recommend are:

Scratch: A visual educational tool. The main advantage is that you don't have to worry about the exact words you need to write down; you can just think about the structure of your program. You drag and drop blocks and snap them together in the order they should run. You can relatively quickly learn to make cute little games in it. The downside is that this isn't really a "professional" programming language, so while learning Scratch will give you basics that apply to most languages and make switching to another language easier, you're still gonna have to switch sooner or later. Start here: https://scratch.mit.edu/

Python: The classic choice. Python is a very widely used, flexible programming language that is suited for beginners. It is what I would recommend if you want to skip right to or move on from Scratch to a more flexible language. https://automatetheboringstuff.com/ is your starting point, but there's also a longer list of resources here if you want to check that out at some point.

HTML/CSS/JavaScript: The web path. HTML defines the structure and content of websites, CSS defines how they look, and JavaScript handles the interactive elements. For example, if you've ever played a game in your browser, it was probably written in JS. Since HTML and CSS only describe how a website is structured and how it should look, they're different from traditional programming languages, and you won't be able to write programs in them; that's what JS is for. You have to know HTML before you learn CSS, but otherwise the order in which you learn these is up to you. Your JavaScript resource is https://javascript.info/, and for HTML and CSS you can check out https://developer.mozilla.org/en-US/docs/Learn/Getting_started_with_the_web.

I put some starting out resources here, but they're really just that - they're for starting out. You don't have to stick to them. If you find another path that suits you better, or if you want to get sidetracked with another resource or project, go for it! Your path doesn't have to be linear at all, and there's no "correct" way to learn things.

One of the most important things you'll want to do is talk to developers when you struggle. The journey is going to be frustrating at times, so search out beginner-friendly coding communities on Discord or wherever you're comfortable. The codeblr community certainly tends to be beginner-friendly and kind. My DMs and asks are also open on here.

#programming#is that a motherhecking RATATOUILLE reference??!?#codeblr#coding#the only reliable predictor of whether someone can be a good programmer is whether they have or can develop a passion for programming#how did me thinking “well is programming actually easy" turn into a resources post uhm#coding resources#shoutouts to the codeblr discord they're coo#long post#Most good programmers do programming not because they expect to get paid or get adulation by the public; but because it is fun to program#- Linus Torvalds

woah! just saw your bio change to software engineer. how did you transition? is it any different than web dev?

i also went on a TikTok rabbit hole and people are saying it’s useless to learn html/css and it’s not an actual language. honestly idk why I thought it would be easy to learn html > css > javascript > angular > react and somehow land a good paying job…

it’s gonna take YEARS for me to have a career, i feel old… especially with no degree

Hiya! 🩶

This is a long reply so I answered your question in sections below! But in the end, I hope this helps you! 🙆🏾♀️

🔮 "How did you transition?"

So, yeah my old job title was "Junior Web Developer" at a finance firm, and now my new title is "Frontend Software Engineer"! In terms of transition, I didn't make too much of a change.

After I quit my old job, I focused on the technologies that were relevant to the roles I wanted, mainly React.js and Node.js. I used YouTube, books, and Codecademy. My first React project was >> this Froggie project <<~! I also worked on real-life projects, such as the volunteering job I did (only for a month), where the team used the technologies I was learning. So basically I did this:

decides to learn react and node 🤷🏾♀️

"oh wait let me find some volunteering job for developers where they use the tech I am learning so I can gain some real-life experience 🤔"

experienced developers in the team helped me with other technologies such as UI tools, and some testing experience 🙆🏾♀️

I did the volunteering work for both fun and learning with experienced developers and... I was bored and wanted to feel productive again... 😅

So for transitioning, I focused on learning the new technologies I wanted to work with and got some work experience (though it was volunteering) to show that I can work in an environment with that tech. I still live with my family, so I could do the volunteering job and have time to self-study while being okay financially (though money was tight haha) 😅👍🏾

🔮 "Is it any different than web dev?"

My old job focused on using C# and SQL (plus a fairly small amount of HTML, CSS, and JavaScript) to make websites. They were fairly basic websites that clients used just to navigate the information they needed. They didn't have fancy, cool web designs because they didn't need them, which is what made me bored of the job and want a change.

I am only a week into the job and have been working on small tickets (features for the site), but I think after a month or two into the job I will make a proper judgment on the difference~! So far, it's kind of the same thing I did in my old job but with new workflow tools, React-based projects, and funny people to work with 😅🙌🏾

🔮 "People are saying it’s useless to learn HTML/CSS and it’s not an actual language."

Yes, HTML is a markup language and CSS is a stylesheet language, but they are the foundation of like 90% of the websites on the internet, so I wouldn't ever call them "useless". Frameworks such as React, Django, Flask, etc. still require HTML and CSS to build a website's structure and styling. CSS frameworks like Tailwind and Bootstrap 5 still use CSS as their base. Not useless at all.

Don't focus on what other people are doing; focus on your own learning. I repeat this all the time on my blog. Just because one or a couple of people online said a technology is useless doesn't mean it is (this applies to most things in tech). Someone told me jQuery was entirely useless and not to bother learning it. I learned it anyway and it helped me better understand JavaScript. Anyhoo, try things YOURSELF before listening to what people say, and make your own judgment. I'm not going to let some random tech bro online whining about how annoying Python or C or whatever is ruin my wish to learn something. (This is all from the point of view of a girl who loves web development very much :D)

🔮 "I thought it would be easy to learn html > css > javascript > angular > react and somehow land a good paying job"

Web Dev route, I love it! That's literally the same steps I would have taken if I had to start again~! For each new tech you learn, make a bunch of projects to 1) prove to yourself that you can apply what you've learned 2) experience 3) fill that portfolio~! 😎🙌🏾

With Angular and React, I would pick one or the other and focus on being really good at it before learning another framework!

I also recommend volunteering jobs, freelancing, helping a small business out with free/paid m

Lastly, you do not need a degree to get a job in Web Development. I mean look at me? My apprenticeship certificate is the same value as finishing school at 18, so in the UK it would be A-Levels, and I completed it at the ripe age of 21! I have no degree, I applied for university and got a place but I will give that space up for someone else, I'm not ready for university just yet! haha... (plus erm it's expensive at the end, what? even for the UK...). Sure, I used to avoid the job postings that were like "You need a computer science degree" but now if I were job searching I would apply regardless.

People switching careers in their 40s going into tech instead are making it, you can switch anytime in your lifetime if you have the means to! (everyone's situation is different I understand).

I'm not too good at giving advice but I hope in the rambling I made some sense? But yeah that's all! 😎

#my asks#codeblr#coding#progblr#programming#studyblr#studying#computer science#tech#comp sci#programmer#career advice#career#career tips

Audio and Music Application Development

The rise of digital technology has transformed the way we create, consume, and interact with music and audio. Developing audio and music applications requires a blend of creativity, technical skills, and an understanding of audio processing. In this post, we’ll explore the fundamentals of audio application development and the tools available to bring your ideas to life.

What is Audio and Music Application Development?

Audio and music application development involves creating software that allows users to play, record, edit, or manipulate sound. These applications can range from simple music players to complex digital audio workstations (DAWs) and audio editing tools.

Common Use Cases for Audio Applications

Music streaming services (e.g., Spotify, Apple Music)

Audio recording and editing software (e.g., Audacity, GarageBand)

Sound synthesis and production tools (e.g., Ableton Live, FL Studio)

Podcasting and audio broadcasting applications

Interactive audio experiences in games and VR

Popular Programming Languages and Frameworks

C++: Widely used for performance-critical audio applications (e.g., JUCE framework).

JavaScript: For web-based audio applications using the Web Audio API.

Python: Useful for scripting and prototyping audio applications (e.g., Pydub, Librosa).

Swift: For developing audio applications on iOS (e.g., AVFoundation).

Objective-C: Also used for iOS audio applications.

Core Concepts in Audio Development

Digital Audio Basics: Understanding sample rates, bit depth, and audio formats (WAV, MP3, AAC).

Audio Processing: Techniques for filtering, equalization, and effects (reverb, compression).

Signal Flow: The path audio signals take through the system.

Synthesis: Generating sound through algorithms (additive, subtractive, FM synthesis).
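The sketch below ties these concepts together in plain JavaScript (runnable in Node.js, no audio libraries): it computes how much space uncompressed audio takes at a given sample rate and bit depth, builds a tone by additive synthesis, smooths it with a simple one-pole low-pass filter, and quantizes it to 16-bit samples. The function names and parameter values are illustrative choices, not taken from any particular framework.

```javascript
const SAMPLE_RATE = 44100; // samples per second (CD quality)
const BIT_DEPTH = 16;      // bits per sample
const CHANNELS = 2;        // stereo

// Uncompressed (PCM) size in bytes: sample rate x bytes per sample x channels x duration.
function pcmBytes(seconds, sampleRate = SAMPLE_RATE, bitDepth = BIT_DEPTH, channels = CHANNELS) {
  return sampleRate * (bitDepth / 8) * channels * seconds;
}

// Additive synthesis: sum sine-wave partials, each given as [frequencyHz, amplitude].
function additiveTone(partials, seconds, sampleRate = SAMPLE_RATE) {
  const out = new Float32Array(Math.round(seconds * sampleRate));
  for (let i = 0; i < out.length; i++) {
    const t = i / sampleRate;
    for (const [freq, amp] of partials) out[i] += amp * Math.sin(2 * Math.PI * freq * t);
  }
  return out;
}

// One-pole low-pass filter: each output moves a fraction `alpha` of the way
// toward the current input, which smooths out high frequencies.
function lowPass(samples, alpha) {
  const out = new Float32Array(samples.length);
  let prev = 0;
  for (let i = 0; i < samples.length; i++) {
    prev += alpha * (samples[i] - prev);
    out[i] = prev;
  }
  return out;
}

// Quantize floats in [-1, 1] to signed 16-bit integers, clamping out-of-range values.
function toInt16(samples) {
  return Int16Array.from(samples, (s) => Math.round(Math.max(-1, Math.min(1, s)) * 32767));
}

console.log(pcmBytes(60)); // 10584000 (about 10 MB per minute of CD-quality stereo)
const tone = additiveTone([[440, 0.5], [880, 0.25]], 0.5); // A4 plus its octave
const pcm = toInt16(lowPass(tone, 0.2));
console.log(pcm.length); // 22050 (0.5 s at 44.1 kHz)
```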

Building a Simple Audio Player with JavaScript

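Here's a basic example of an audio player using the HTML audio element (strictly speaking, this uses the element's built-in playback methods rather than the Web Audio API):

<audio id="audioPlayer" controls>
  <source src="your-audio-file.mp3" type="audio/mpeg">
  Your browser does not support the audio element.
</audio>
<script>
  const audio = document.getElementById('audioPlayer');
  // Browsers typically block autoplay with sound until the user interacts with the page,
  // so start playback on the first click and handle a rejected play() promise.
  document.addEventListener('click', () => {
    audio.play().catch((err) => console.warn('Playback was blocked:', err));
  }, { once: true });
</script>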

Essential Libraries and Tools

JUCE: A popular C++ framework for developing audio applications and plugins.

Web Audio API: A powerful API for controlling audio on the web.

Max/MSP: A visual programming language for music and audio.

Pure Data (Pd): An open-source visual programming environment for audio processing.

SuperCollider: A platform for audio synthesis and algorithmic composition.

Best Practices for Audio Development

Optimize audio file sizes for faster loading and performance.

Implement user-friendly controls for audio playback.

Provide visual feedback (e.g., waveforms) to enhance user interaction.

Test your application on multiple devices for audio consistency.

Document your code and maintain a clear structure for scalability.
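For the waveform suggestion, a common approach is to reduce the audio to one minimum/maximum pair per pixel column and draw those pairs as vertical lines. A minimal sketch of the reduction step, assuming you already have decoded samples as numbers between -1 and 1 (the waveformPeaks helper and the test signal are hypothetical):

```javascript
// Reduces a long array of samples to at most `buckets` [min, max] pairs,
// enough to draw a waveform overview with one vertical line per bucket.
function waveformPeaks(samples, buckets) {
  const size = Math.ceil(samples.length / buckets);
  const peaks = [];
  for (let start = 0; start < samples.length; start += size) {
    let min = Infinity;
    let max = -Infinity;
    const end = Math.min(start + size, samples.length);
    for (let i = start; i < end; i++) {
      if (samples[i] < min) min = samples[i];
      if (samples[i] > max) max = samples[i];
    }
    peaks.push([min, max]);
  }
  return peaks;
}

// Hypothetical input: one second of a 2 Hz sine wave sampled at 8 kHz.
const samples = Float32Array.from({ length: 8000 }, (_, i) => Math.sin((2 * Math.PI * 2 * i) / 8000));
const peaks = waveformPeaks(samples, 100);
console.log(peaks.length); // 100
```

In the browser, each pair could then be drawn as a vertical line on a canvas, scaled to the canvas height.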

Conclusion

Developing audio and music applications offers a creative outlet and the chance to build tools that enhance how users experience sound. Whether you're interested in creating a simple audio player, a complex DAW, or an interactive music app, mastering the fundamentals of audio programming will set you on the path to success. Start experimenting, learn from existing tools, and let your passion for sound guide your development journey!

What is React and React Native? Understanding the Difference

If you're starting out in frontend or mobile development, you've likely heard of both React and React Native. But what exactly are they, and how do they differ?

In this guide, we’ll break down what React and React Native are, their use cases, key differences, and when you should use one over the other.

What is React?

React (also known as React.js or ReactJS) is an open-source JavaScript library developed by Facebook, used to build user interfaces, primarily for single-page applications (SPAs). It's component-based, efficient, and declarative, making it ideal for building dynamic web applications.

Key Features of React:

Component-based architecture: Reusable pieces of UI logic.

Virtual DOM: Improves performance by reducing direct manipulation of the real DOM.

Unidirectional data flow: Predictable state management.

Rich ecosystem: Integrates well with Redux, React Router, and Next.js.
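To make component-based UI and unidirectional data flow concrete, here is a framework-free sketch in plain JavaScript. It is not React's API; it only mimics the pattern (similar in spirit to React's useReducer hook or Redux): state lives in one place, a component is a pure function of that state, and changes flow only through dispatched actions.

```javascript
// Single source of truth; the view only reads it.
let state = { count: 0 };

// Changes happen only through a pure reducer that returns a new state
// object instead of mutating the old one.
function reducer(current, action) {
  switch (action.type) {
    case "increment":
      return { ...current, count: current.count + 1 };
    case "reset":
      return { ...current, count: 0 };
    default:
      return current;
  }
}

// A "component": a pure function from data (props) to UI (here, an HTML string).
function Counter({ count }) {
  return `<button>Count: ${count}</button>`;
}

// Data flows one way: action -> reducer -> new state -> re-render.
function dispatch(action) {
  state = reducer(state, action);
  render();
}

function render() {
  console.log(Counter(state));
}

dispatch({ type: "increment" }); // <button>Count: 1</button>
dispatch({ type: "increment" }); // <button>Count: 2</button>
```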

Common Use Cases:

Building dynamic web apps and dashboards

Single-page applications (SPAs)

E-commerce platforms

Admin panels and content management systems

What is React Native?

React Native is also developed by Facebook, but it is used to build native mobile apps using React principles. Developers write apps in JavaScript and React, but the UI is rendered with the platform's native components rather than in a web view, so the result behaves like a real native app.

Key Features of React Native:

Cross-platform compatibility: Build apps for both iOS and Android with a single codebase.

Native performance: Uses real mobile components.

Fast Refresh (the successor to hot reloading): Faster development cycles.

Community support: Large ecosystem of plugins and libraries.

Common Use Cases:

Mobile apps for startups and MVPs

Apps with simple navigation and native look-and-feel

Projects that need rapid deployment across platforms

React vs React Native: Key Differences

| Feature | React (React.js) | React Native |
|---|---|---|
| Platform | Web browsers | iOS and Android mobile devices |
| Rendering | HTML via the DOM | Native UI components |
| Styling | CSS and preprocessors | StyleSheet API (CSS-like styles in JavaScript) |
| Navigation | React Router | React Navigation or native modules |
| Ecosystem | Rich support for web tools | Tailored to mobile development |
| Performance | Optimized for the web | Optimized for a native mobile experience |

When to Use React

Choose React when:

You're building a web application or website

You need strong SEO (e.g., server-side rendering with Next.js)

Your app depends heavily on web-based libraries or analytics tools

You want precise control over responsive design using HTML and CSS

When to Use React Native

Choose React Native when:

You need a mobile app for both iOS and Android

Your team is familiar with JavaScript and React

You want to reuse logic between mobile and web apps

You’re building an MVP to quickly test product-market fit

Can You Use Both Together?

Yes! You can share business logic, APIs, and sometimes even components (with frameworks like React Native Web) between your React and React Native projects. This is common in companies aiming for a unified development experience across platforms.
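In practice, shared code is usually plain JavaScript with no DOM or native imports, such as validation rules or pricing calculations. A minimal sketch of such a module, with hypothetical function names; in a real project you would export it from a shared package that both apps import:

```javascript
// Hypothetical shared module: no DOM or React Native imports,
// so the web app and the mobile app can both use it unchanged.

// Returns an object of field errors; an empty object means the input is valid.
function validateSignup({ email, password }) {
  const errors = {};
  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(email)) errors.email = "Enter a valid email address.";
  if (password.length < 8) errors.password = "Password must be at least 8 characters.";
  return errors;
}

// Totals a cart in integer cents to avoid floating-point rounding errors.
function cartTotalCents(items) {
  return items.reduce((sum, { priceCents, quantity }) => sum + priceCents * quantity, 0);
}

console.log(validateSignup({ email: "ana@example.com", password: "hunter2" })); // only a password error
console.log(cartTotalCents([{ priceCents: 1250, quantity: 2 }, { priceCents: 499, quantity: 1 }])); // 2999
```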

Real-World Examples

React is used in web apps like Facebook, Instagram (web), Airbnb, and Netflix.

React Native powers mobile apps like Facebook, Instagram (mobile), Shopify, Discord, and Bloomberg.

Final Thoughts

Understanding what React and React Native are is essential for any frontend or full-stack developer. React is perfect for building fast, scalable web applications, while React Native enables you to build cross-platform mobile apps with a native experience. If you’re deciding between the two, consider your target platform, performance needs, and development resources. In many modern development teams, using both React and React Native allows for a consistent developer experience and code reuse across web and mobile platforms.
