#It does not have a GPU which is probably where the issue was


Text

Quick follow-up on yesterday's post. I am vindicated. I did test out Jellyfin with transcoding yesterday and it completely saturated my media server hardware, something that doesn't come near to happening with my methods. Doing it my way, I'm able to serve multiple HD streams at once; Jellyfin choked on one SD stream because it insisted on transcoding rather than just passing the file through.

#it's an older processor#but it's a 4 core i5 sitting around 3.1 GHz without turbo#It does not have a GPU which is probably where the issue was#and it's old enough that it likely doesn't have the intel instruction sets to speed up transcoding#but still it was an easy 8x increase in normal load


Text

Rant about generative AI in education and in general under the cut because I'm worried and frustrated and I needed to write it out in a small essay:

So, context: I am a teacher in Belgium, Flanders. I am now teaching English (as a second language), but have also taught history and Dutch (as a native language). All in secondary education, ages 12-16.

More and more I see educational experts endorse ai being used in education and of course the most used tools are the free, generative ones. Today, one of the colleagues responsible for the IT of my school went to an educational lecture where they once again vouched for the use of ai.

Now their keyword is that it should always be used in a responsible manner, but the issue is... can it be?

1. Environmentally speaking, ai has been a nightmare. Not only does it have an alarming impact on emission levels, but also on the toxic waste that's left behind. Not to mention the scarcity of GPUs caused by the surge of ai in the past few years. Even sources that would vouch for ai have raised concerns about the impact it has on our collective health. sources: here, here and here

2. Then there's the issue with what the tools are trained on and this in multiple ways:

Many of the free tools that the public uses are trained on content available across the internet. However, it is at this point common knowledge (I'd hope) that most creators of the original content (writers, artists, other creative content creators, researchers, etc.) were never asked for permission and so it has all been stolen. Many social media platforms will often allow ai training on them without explicitly telling the user-base or will push it as the default setting and make it difficult for their user-base to opt out. Deviantart, for example, lost much of its reputation when it implemented such a policy. It had to backtrack in 2022 afterwards because of the overwhelming backlash. The problem is then that since the content has been ripped from its context and is no longer made by a human, many governments can no longer see it as copyrighted. Which, yes, luckily also means that ai users are legally often not allowed to pass off ai as 'their own creation'. Sources: here, here

Then there's the working of generative ai in general. As said before, it simply rips words or image parts from their original, nuanced context and then meshes them together without the user being able to accurately trace back where the info is coming from. A tool like ChatGPT is not a search engine, yet many people use it that way without realising it is not the same thing at all. More on the working of generative ai in detail. Because of how it works, there is always a chance for things to be biased and/or inaccurate. If a tool has been trained on social media sources (which ChatGPT for example is) then its responses can easily be skewed to the demographic it's been observing. Bias is an issue in most sources when doing research, but if you have the original source you also have the context of the source. Ai makes it so that the original context is no longer clear to the user, and so bias can be overlooked and go unnoticed much more easily. Source: here

3. Something my colleague mentioned they said in the lecture is that ai tools can be used to help the learning of the students.

Let me start off by saying that I can understand why there is an appeal to ai when you do not know much about the issues I have already mentioned. I am very aware it is probably too late to fully stop the wave of ai tools being published.

There are certain uses to types of ai that can indeed help with accessibility. Such as text-to-voice or the other way around for people with disabilities (let's hope the voice was ethically begotten).

But many of the other uses mentioned in the lecture I have concerns with. They are to do with recognising learning, studying and wellbeing patterns of students. Not only do I not think it is really possible to data-fy the complexity of each and every single student you would have as they are still actively developing as a young person, this also poses privacy risks in case the data is ever compromised. Not to mention that ai is often still faulty and, as it is not a person, will often still make mistakes when faced with how unpredictable a human brain can be. We do not all follow predictable patterns.

The lecture stated that ai tools could help with neurodivergency 'issues'. Obviously I do not speak for others and this next part is purely personal opinion, but I do think it important to nuance this: as someone with auDHD, no ai-tool has been able to help me with my executive dysfunction in the long-term. At first, there is the novelty of the app or tool and I am very motivated. They are often in the form of over-elaborate to-do lists with scheduled alarms. And then the issue arises: the ai tries to train itself on my presented routine... except I don't have one. There is no routine to train itself on, because that is my very problem I am struggling with. Very quickly it always becomes clear that the ai doesn't understand this the way a human mind would. A professionally trained in psychology/therapy human mind. And all I was ever left with was the feeling of even more frustration.

In my opinion, what would help way more than any ai tool would be the funding of mental health care and making it that going to a therapist or psychiatrist or coach is covered by health care the way I only have to pay 5 euros to my doctor while my health care provider pays the rest. (In Belgium) This would make mental health care much more accessible and would have a greater impact than faulty ai tools.

4. It was also said that ai could help students with creative assignments and preparing for spoken interactions both in their native language as well as in the learning of a new one.

I wholeheartedly disagree. Creativity in its essence is about the person creating something from their own mind and putting the effort in to translate those ideas into their medium of choice. Stick figures on lined course paper are more creative than letting a tool like Midjourney generate an image based on stolen content. How are we teaching students to be creative when we allow them to not put a thought in what they want to say and let an ai do it for them?

And since many of these tools are also faulty and biased in their content, how could they accurately replace conversations with real people? Ai cannot fully understand the complexities of language and all the nuances of the contexts around it. Body language, word choice, tone, volume, regional differences, etc.

And as a language teacher, I can truly say there is nothing more frustrating than wanting to assess the writing level of my students, giving them a writing assignment where they need to express their opinion and write it in two tiny paragraphs... and getting an ai response back. Before anyone comes to me saying that my students may simply be very good at English: indeed, some could be, but my current students are not. They are precious, but their English skills are very flawed. It is very easy to see whether they wrote it or ChatGPT did. It is not only frustrating to not be able to trust part of your students' honesty and to know they learned nothing from the assignment because you can't give any feedback; it is almost offensive that they think I wouldn't notice it.

5. Apparently, it was mentioned in the lecture that in schools where ai is banned currently, students are fearful that their jobs would be taken away by ai and that in schools where ai was allowed that students had much more positive interactions with technology.

First off, I was not able to see the source and data that this statement was based on. However, I personally cannot shake the feeling there's a data bias in there. Of course students will feel more positively towards ai if they're not told about all the concerns around it.

Secondly, the fact that the lecture (reportedly) framed the fear that your job would disappear because of ai as untrue is... infuriating. Because it already is becoming a reality. Let's not forget what partially caused the SAG-AFTRA strike in 2023. Corporations see an easy (read: cheap) way to get marketable content by using ai at the cost of the creative professionals. Unregulated ai use by businesses causing the loss of jobs for real-life humans is very much a threat. Dismissing this is basically lying to young students.

6. My conclusion:

I am frustrated. It's clamoured that we, as teachers, should educate more about ai and its responsible use. However, at the same time the many concerns and issues around most of the accessible ai tools are swept under the rug and not actively talked about.

I find the constant surging rise of generative ai everywhere very concerning and I can only hope that more people will start seeing it too.

Thank you for reading.


Note

I think consoles should probably die but I think the issue is that the 350 dollar pc you screenshotted like. Is bad lol. It won't run new games flat out. Its like 800-1000 dollars for a midrange pc that does what you need it to ie new games and the lower price is with like months of scalping deals. Consoles still have a niche of like 500 dollars and it will do what it does for 10 years and make games look decent, which like technical aptitude aside and such like is a valuable niche. (Note: I have built pcs and will never do so myself again because it sounds easy enough until like your ram isn't compatible with your motherboard's bios despite it listing it as such and amd sends you 3 dead cpus in a row and your gpu has removed all antialiasing from games due to the silicon lottery etc etc. Never again lol, I'm paying others to deal with this from now on.)

the price on the one i posted (courtesy of goons who were talking about this exact thing by coincidence the other day) is also bc its refurbed, which helps. the dead CPU thing i hate so fucking much. i went through the same shit as you with motherboards and also had to return them 3x. since when do we live in a universe where you buy a product costing over 100 dollars and are supposed to anticipate it wont work?? insane!!!! but on the other hand i can switch shit out whenever and if it breaks its not a huge hassle.

i realized when i woke up from nap #3 today (woof lol) that part of the problem i have with things in general is that graphical fidelity is inexplicably the marker by which we determine quality. a console that can render a photo realistic human flawlessly is worthless if the games revolving around that feature and that feature only are ass. like, the only positive thing ive heard unprompted about the ps5 is that the controller is extremely cool but i will be fucking damned if i could name a single game that wasnt a tech demo that used it effectively. because they've locked themselves into this corner of boasting about having the best graphics, they have to develop games that primarily feature 4k super ultra mega graphics to justify the expense of the console. does this make sense. like now they're in this stupid loop where because game production revolves around the feature that they didn't appear to actually meaningfully plan for.

also im sick in the head, bc my first thought when people were like "but the computer won't run on ultra high settings!" was "so? turn them down?". but then i remembered all of the games ive played in the last 10 years that looked like ass because the devs didnt think that they should try to make the game attractive or maintain any of the atmosphere for people with normal computers.


Text

Share Your Anecdotes: Multicore Pessimisation

I took a look at the specs of new 7000 series Threadripper CPUs, and I really don't have any excuse to buy one, even if I had the money to spare. I thought long and hard about different workloads, but nothing came to mind.

Back in university, we had courses about map/reduce clusters, and I experimented with parallel interpreters for Prolog, and distributed computing systems. What I learned is that the potential performance gains from better data structures and algorithms trump the performance gains from fancy hardware, and that there is more to be gained from using the GPU or from re-writing the performance-critical sections in C and making sure your data structures take up less memory than from multi-threaded code. Of course, all this is especially important when you are working in pure Python, because of the GIL.

The performance penalty of parallelisation hits even harder when you try to distribute your computation between different computers over the network, and the overhead of serialisation, communication, and scheduling work can easily exceed the gains of parallel computation, especially for small to medium workloads. If you benchmark your Hadoop cluster on a toy problem, you may well find that it's faster to solve your toy problem on one desktop PC than a whole cluster, because it's a toy problem, and the gains only kick in when your data set is too big to fit on a single computer.
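To put rough numbers on that break-even point, here is a toy cost model in Python. It is only a sketch: the per-record cost, the fixed job overhead, and the per-record transfer cost are made-up assumptions, not measurements of Hadoop or of any real cluster, and the function names are hypothetical.

```python
import math

# Toy cost model, not a benchmark. Every constant here is an assumption.


def single_machine_seconds(records, per_record=1e-6):
    """Time to process all records on one desktop PC."""
    return records * per_record


def cluster_seconds(records, nodes, per_record=1e-6,
                    fixed_overhead=30.0, per_record_transfer=2e-7):
    """Time on a cluster: job startup, shipping the data, then the divided work."""
    compute = records * per_record / nodes
    # Serialisation and network transfer, assumed here not to parallelise.
    transfer = records * per_record_transfer
    return fixed_overhead + transfer + compute


def break_even_records(nodes, per_record=1e-6,
                       fixed_overhead=30.0, per_record_transfer=2e-7):
    """Approximate data size above which the cluster wins, or None if it never does."""
    saved_per_record = per_record * (1 - 1 / nodes) - per_record_transfer
    if saved_per_record <= 0:
        return None
    return math.ceil(fixed_overhead / saved_per_record)


if __name__ == "__main__":
    for records in (1_000_000, 100_000_000):
        print(records,
              round(single_machine_seconds(records), 2),
              round(cluster_seconds(records, nodes=10), 2))
    print("break-even for 10 nodes:", break_even_records(10))
```

Under these assumed constants a million-record toy problem is far faster on the single machine, and the ten-node cluster only pulls ahead once the data set reaches tens of millions of records.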

The new Threadripper got me thinking: Has this happened to somebody with just a multicore CPU? Is there software that performs better with 2 cores than with just one, and better with 4 cores than with 2, but substantially worse with 64? It could happen! Deadlocks, livelocks, weird inter-process communication issues where you have one process per core and every one of the 64 processes communicates with the other 63 via pipes? There could be software that has a badly optimised main thread, or a badly optimised work unit scheduler, and the limiting factor is single-thread performance of that scheduler that needs to distribute and integrate work units for 64 threads, to the point where the worker threads are mostly idling and only one core is at 100%.
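The all-to-all pipes scenario can be sketched as a back-of-the-envelope model. The Python below is an illustration under invented constants (total work, serial portion, and a cost per pair of communicating processes); it does not describe any real program, and `run_time` is a hypothetical name.

```python
# Toy scaling model: a serial part, a perfectly divisible part, and a
# communication cost that grows with the number of process pairs.
# All constants are assumptions chosen for illustration only.


def run_time(cores, work=100.0, serial=2.0, per_link=0.02):
    """Modelled wall-clock seconds when one process runs per core."""
    links = cores * (cores - 1) / 2  # every process talks to every other one
    return serial + work / cores + per_link * links


if __name__ == "__main__":
    for cores in (1, 2, 4, 8, 16, 32, 64):
        print(f"{cores:3d} cores: {run_time(cores):7.2f} s")
    best = min(range(1, 65), key=run_time)
    print("fastest core count in this model:", best)
```

With these numbers the modelled run time falls from 1 to 2 to 4 cores, bottoms out somewhere in the teens, and at 64 cores is worse than at 4, because the pairwise communication term grows roughly with the square of the core count while the divided work only shrinks linearly.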

I am not trying to blame any programmer if this happens. Most likely such software was developed back when quad-core CPUs were a new thing, or even back when there were multi-CPU-socket mainboards, and the developer never imagined that one day there would be Threadrippers on the consumer market. Programs from back then, built for Windows XP, could still run on Windows 10 or 11.

In spite of all this, I suspect that this kind of problem is quite rare in practice. It requires software that spawns one thread or one process per core but is deoptimised for more cores (maybe written under the assumption that users have two to six CPU cores), a user who can afford a Threadripper and needs a Threadripper, and a workload where the problem is noticeable. You wouldn't get a Threadripper in the first place if it made your workflows slower, so that hypothetical user probably has one main workload that really benefits from the many cores, and another that doesn't.

So, has this happened to you? Do you have a Threadripper at work? Do you work in bioinformatics or visual effects? Do you encode a lot of video? Do you know a guy who does? Do you own a Threadripper or an Ampere just for the hell of it? Or have you tried to build a Hadoop/Beowulf/OpenMP cluster, only to have your code run slower?

I would love to hear from you.


Note

have you thought about a docked laptop setup? i have a lot of my own energy/disability issues and i'm looking into it for when i can upgrade. getting a good laptop that can do most things, but having the ability to dock it to an external gpu and a monitor setup when you want to do work or play big games at your desk is like. one of the better ways to get a split setup that lets you move to bed when you're low energy without much hassle

money's the main thang for sure. i have a solid desktop setup, so i don't really have to worry about performance or anything like that.

portability for digital art is a little tricky for me just 'cause i am very particular about how stuff feels. it's a sensory thing, and when it's off, drawing feels like 7000 pins in my wrist and arm (mentally) lol. so if my brain is in cintiq mode, it's wanting everything sensory about my cintiq - how it feels to push the pen on the screen, how much glide/friction there is, etc. also cursor/hover stuff, which doesn't exist on the ipad i have.

it also does not help that my cintiq is a 13hd, so it uses wacom's lovely (awful) 3-in-1 cable, which is already finicky enough without daisychaining usb/hdmi extenders lol

so usually i'll just swap to my ipad, if the sensory overlords deem it acceptable. anddd that brings up the other nested issue:

my ipad is a 6th gen ipad, the first non-pro one that can use the pencil. it's at the point where i can't have another app open while drawing whether it's clip studio or procreate. it's just old and i've used the hell out of it. it also doesn't support hover, so another sensory problem.

honestly if i could afford it, i'd probably just get the biggest, newest ipad pro. it has hover capability and would be comparable to my cintiq size wise. i could use this w/astropad and be able to hop to and from my desk without issue for the most part. also the novelty of a new toy could help push me through the adjustment period of getting used to going back and forth lol.

i'd be able to use it more dependably for drawing wherever, as well as serving most needs i'd want out of a laptop.

buuuut i am still in a perpetual state of not being able to afford my basics, let alone think about any technological upgrades/changes. sooo i just have to tell my autistic ass to calm down and wait it out, hopefully i can address it before i die!! :D

(ty for the suggestion btw!! i hope you find something that works for you, too!)


Text

A lot of it can! I built a stupidly overpowered computer given that the game I played the most in the first month or two was Sid Meier's Alpha Centauri from 1999. Windows 11 actually does better with SMAC than Windows 10 did, it's only crashed once! I can also run Creative Suite CS2 which I OWN on this system. Photoshop from 2004 doesn't quite know what to do with multithreading but it's actually still really fast and the 64 gigs of RAM doesn't hurt. At the moment I have NMS running in the background while I have a gadzillion firefox tabs open and I could easily watch a video like this and be fine. One of the upsides of holding on to old software is that where it can take advantage of the new hardware, it really does run very well and you get actual uplift. The issue is that a lot of people lost the thread of Intel's naming scheme and haven't upgraded their computer, or they bought something with stupidly little RAM. (16 gigs is probably okay, but 32 gigs is what most gamers will be happier with. I bought a little word processing toaster of a netbook in 2020-ish or 2021 which had FOUR gigs of RAM, but there was a huge tech shortage at the time and it can do what I needed it to do (run Scrivener) just fine. It cannot handle a lot of tabs open and still do, you know, the operating system. 8 gigs is Sluggish because of Windows bloat.)

And yes, I have a fervent desire for two things in programming:

That programmers optimize their programs to run on a wide variety of systems with reasonable speeds

That programmers enable their programs to use things like multithreading and large amounts of available RAM *if there is excess capacity readily available* to speed necessary functions that take significant time. Like, oh, loading screens and transitions shouldn't take much time at all on my system but the cpu is sitting there at like 4% utilization while the framerate drops to 30fps on a LOADING screen because they just had to animate a gadzillion star systems through the gpu alone (looking at you NMS.)

What I mean by that is that, for example, in the gaming sector, there are a lot of companies who are like "How much eye candy can we stuff in here to meet our arbitrary (stupidly low) framerate goal" and most of the "eye candy" is stuff most observers wouldn't know or recognize from a hole in the ground. Yes, I'm talking about Starfield. I didn't understand why they'd accept a framerate of 30fps on console until I discovered they'd artificially locked both Skyrim and Fallout 4 ON THE PC to like 30 or 40 fps. And then tied the physics to framerate, completely unnecessarily (as in you can change this behavior with like two or three ini edits.) Then there's games like Baldur's Gate which was done thoughtfully and can run on a huge range of hardware. I had to return one Civilization game because they hadn't accounted for an aging fanbase and high resolution monitors and I flat out couldn't read the tiny text to play the game.
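On the physics-tied-to-framerate point: one common way to decouple the two is a fixed-timestep loop with an accumulator. The Python sketch below is a generic illustration of that technique, not how Skyrim, Fallout 4, or any other named game actually works; `simulate` and its parameters are hypothetical.

```python
# Fixed-timestep sketch: physics advances in constant steps no matter how
# many frames are rendered. All names and numbers are illustrative assumptions.


def simulate(frame_rate, seconds=1.0, physics_hz=60.0, speed=10.0, fixed_step=True):
    """Return how far an object moving at `speed` units/s travels in `seconds`."""
    frame_dt = 1.0 / frame_rate
    step = 1.0 / physics_hz
    position = 0.0
    accumulator = 0.0
    for _ in range(round(seconds * frame_rate)):
        if fixed_step:
            # Bank the real frame time, then spend it in fixed physics steps.
            accumulator += frame_dt
            while accumulator >= step:
                position += speed * step
                accumulator -= step
        else:
            # Frame-tied physics: one step per frame, wrongly assuming that
            # every frame lasts exactly 1/physics_hz seconds.
            position += speed * step
    return position


if __name__ == "__main__":
    for fps in (30, 60, 120):
        print(fps, round(simulate(fps, fixed_step=False), 2), round(simulate(fps), 2))
```

In the frame-tied variant the object covers half the distance at 30 fps and double at 120 fps, while the accumulator variant lands at about the same place at every frame rate.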

But yeah, we have this neverending leapfrogging bloat that goes on, where users try to upgrade to get things to work better and companies decide to fill in the new overhead with data mining background tasks and "user experience optimizations" that are a ridiculous rat race that in no way enhances the user experience.

Anyway, I'm not opposed to upgrades but like, do them sensibly and if you can, learn to build your own stuff so that you aren't beholden to the anticonsumer tactics most major computer system integrators (dell, etc.) use to get as much of your money as possible while giving you the least in exchange.

we should globally ban the introduction of more powerful computer hardware for 10-20 years, not as an AI safety thing (though we could frame it as that), but to force programmers to optimize their shit better


Text

Skytech Gaming PC Desktop Review — My Honest Experience After 1 Month (Intel i7 + RTX 4070 Ti)

$1,499.99 with 25 percent savings

List Price: $1,999.99

If you’re in the market for a high-performance prebuilt gaming PC that doesn’t look like it was cobbled together in someone’s garage, the Skytech Gaming PC (i7-12700F + RTX 4070 Ti) is worth a look. It caught my eye, and after using it daily for about a month, I’ve got thoughts.

Let me walk you through what this machine does well, where it stumbles, and whether it’s actually worth the price tag in 2025’s chaotic PC market.

🔧 Quick Specs at a Glance

CPU: Intel Core i7-12700F (12-core beast, turbo up to 4.9GHz)

GPU: NVIDIA RTX 4070 Ti (aka ray-tracing heaven)

RAM: 16GB DDR4 (3200 MHz — fast, but maybe not enough for everyone)

Storage: 1TB NVMe SSD (snappy, but you’ll probably want more eventually)

Cooling: 360mm AIO Liquid Cooler (this thing chills)

PSU: 750W Gold-rated

OS: Windows 11 Home

Case: RGB tempered glass mid-tower — looks sharp and runs cool

⚡ Performance: Smooth As Butter in AAA Games


Honestly, this thing flies.

I’ve been running Cyberpunk 2077, Call of Duty: Warzone, and even the new Black Ops 6, all on Ultra settings at 1440p — and I’m consistently getting 100+ FPS. The 4070 Ti, paired with DLSS 3, absolutely handles anything I throw at it. Even 4K gaming is surprisingly smooth, though you might dial back a few settings for that extra headroom.

Multitasking? No problem. I had Twitch streaming, Chrome tabs galore, and DaVinci Resolve running simultaneously, and this PC didn’t even blink.

❄️ Cooling: No Jet Engine Here

A lot of prebuilt rigs sound like they’re prepping for liftoff under load. Not this one.

The 360mm AIO cooler is doing serious work. My CPU temps stay around 60–65°C even during extended gaming sessions, and the GPU hovers comfortably at 70°C. The fans don’t ramp up into a howling mess, either. It’s… actually kinda quiet?

Cable management is clean too, which helps with airflow and just makes the build feel more “premium” than I expected at this price.

🎮 Setup and Day-to-Day Use

Setup was a breeze. Took it out of the box, plugged it in, powered it up — done. Windows 11 Home is pre-installed with barely any bloatware (thank you, Skytech). GPU drivers were a quick manual update via NVIDIA, but otherwise? Smooth sailing.

And the RGB? Subtle but nice. Not obnoxious “gamer bro” vibes — more like tasteful neon underglow.

💰 Is It Worth the Price?

Let’s be real — building a similar rig yourself in 2025 would cost you roughly the same, and you’d have to hunt down parts, troubleshoot, and hope nothing arrives DOA.

With this Skytech system, you’re paying for convenience, design, and frankly, less headache.

That said… some users on Reddit and Amazon mention quality control issues. Mine’s been rock solid so far (knock on wood), but I do recommend buying from somewhere with easy returns, just in case. Amazon’s been good for that.

✅ What I Love

🚀 Ultra-smooth gaming at 1440p and even 4K

❄️ Whisper-quiet cooling system that actually works

💾 Lightning-fast boot and load times

🎮 Clean Windows install — no junk

🧩 Looks good and is easy to upgrade later

💸 Competitive pricing for the performance

❌ What Could Be Better

🧠 16GB RAM is fine, but 32GB would’ve been ideal for streaming + editing

📦 1TB fills up fast if you’re a game hoarder like me

🛠️ Some reports of hardware issues — YMMV

🧾 Skytech’s support? Mixed reviews. I haven’t had to contact them (yet)

🔍 What Others Are Saying

“My Shadow 4 is awesome. Runs quiet, great FPS.” “Bought a $2800 Skytech — it failed in 2 weeks. Support was helpful but slow.”

So yeah, most people are happy with performance — but QC and support seem to be a gamble. Just double-check everything when it arrives and keep your return window in mind.

💡 Tips Before Buying

Upgrade to 32GB RAM if you stream or do a lot of video work

Consider a secondary SSD or HDD early on (those Steam games add up fast)

Buy from a seller with easy returns and warranty options

Inspect the case, fans, and connections the day it arrives — a few users reported bent metal or disconnected cables

🏁 Final Verdict: Should You Buy It?

If you’re not into building your own rig but still want that “high-end custom PC” experience, this Skytech Gaming PC delivers.

It’s powerful. It’s cool (literally and visually). And it’s one of the better value-for-performance prebuilts I’ve seen in 2025.

✅ Buy it if you want:

Killer FPS in 1440p and 4K

Reliable performance for gaming + content creation

A stylish, prebuilt machine that won’t embarrass you on stream

🚫 Skip it if:

You love building PCs and tweaking every part

You’re super budget-conscious

You can’t tolerate any chance of needing warranty support

Bottom line: For the price, the performance, and the plug-and-play ease? I’d absolutely buy it again.


#skytech gaming pc desktop review#skytech azure gaming pc desktop review#skytech archangel gaming pc desktop review#skytech shadow gaming pc desktop review#skytech o11v gaming pc desktop review#skytech nebula gaming pc desktop review#skytech chronos gaming pc desktop review#skytech rampage gaming pc desktop review#skytech king 95 gaming pc desktop review#skytech chronos mini gaming pc desktop review#skytech gaming pc desktop review and


Text

Dev Log Apr 4 2025 - Let's talk Graphics

Taking a break from complaining about WebKit, the custom rendering backend work is actually going really, really well. To the point where the average frame of the main menu dropped again from hovering around 2.7-3.3ms down to about 2.3-2.7ms. The alpha blending issue has been resolved, and all of the shaders are back up and running. We even have a little optimization around the use of rendering layers so that stuff like the jelly and water stage features only require a single pass to handle clipping instead of two! Not that the average player will care about saving 0.1ms per frame, but hey - maybe some other dev out there will find some of this useful.

Back in the old days of the GameBoy and NES, (and even up to the 3DS technically), most graphics hardware had fixed pipelines where each individual step in the process always happened no matter what. The oldest devices had so many 'slots' for sprites that you loaded into a very specific position in memory, and then every frame, it would read that block and stamp the pixels on the appropriate spot on the screen. Once 3D came into the picture and we now have stuff like OpenGL, things got a little more complicated, where you now have to set up meshes and then do extra setup to have the textures drawn over top. It's a ton more flexible, but a little harder to wrangle and not quite as efficient.

For web-based games, you have an HTML element called a canvas. This element can then be rendered to using WebGL or the Canvas2D API depending on how you set it up. Canvas2D is nice, as you can just draw pixels on it directly kind of like you do a paint program by just telling it "at (35, 64), draw the image of the hat". Which is nice. But it's kind of slow, as you'll be doing that 60 times a second for every single object in the game, all in order one by one. Instead, most games use WebGL. Create the rectangle meshes, set the vertex positions, paint the sprite as a texture over top. The GPU does a bunch in parallel, so it's much, much faster. You just need to put in a little leg-work to get it set up.

The thing about this setup is that now, you're doing communication between your main program on the CPU and the data on the GPU, which is (relatively speaking) kind of expensive. Ideally, everything gets sent over once, and then you do tiny little update calls to trigger parts of the program on the GPU to do its thing. Which, when things need to move around, is a little tricky. Typically, a GL render pipeline will bind a shader, bind the geometry, bind uniform attributes, then kick off the draw. However, that's a lot of overhead for 2k single-quad sprites, so we can be a little bit more efficient if we're just a little bit clever.

The thing with GL is that it is completely stateful - nothing clears between frames unless you explicitly tell it to. Normally that's a bit of a pain if you accidentally enable something and you don't need it for the next geometry, but we can abuse that a little bit for our sprites. For the shaders, it would be a lot more efficient if we didn't have to turn specific ones on and off every frame for each effect, so we don't! Everything is just baked into one big shader dynamically created when the game is loaded (called an ubershader), that gets enabled once, and we never have to touch it again. Depending on who you ask, this is an absolutely terrible practice, but they're probably thinking in terms of massive 3D worlds with multi-pass lighting and other stuff that makes your 4090 cry. We're a 2D game with a whopping 8 different visual effects, so it's legitimately faster to just take the hit on the teensy little bit of branching.

How about another trick? For the memory transfer between the CPU and the GPU, there's extremely high bandwidth, but like I said, it's the latency of the round-trip that really kills performance. So we trade a bunch of little trips for just one big one - every sprite sticks all of their positional data into a single big buffer, and then we just chunk copy that entire buffer over in one burst. The really neat thing about this optimization, is that with that and the ubershader combined, that then allowed us to do some basic texture batching by enabling up to 16 different textures during a single draw call by setting an index in the buffered data about which slot it needs to sample from. So with each improvement, more avenues for more improvements keep popping up.

Technically, I could also stick textures into big atlases to allow even more sprites to be drawn per call, but at this point, we can comfortably handle 240Hz monitors, so I don't think that anybody would realistically notice. Maybe when we add 4K support.

Technically, we could probably stick this out on Monday, but I'm going to do a bit more testing with it and save it for the holiday update on the 14th. Especially since there is no fallback for it - the old system is just completely gone. Hopefully next week's post will be some great news about the Steam Deck finally being ready, and not just another vent about the shortcomings of WebKit.
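For any other dev following along, the single-buffer and texture-slot idea can be sketched without any GL at all. The snippet below uses plain Python as a stand-in for the real WebGL code, which this post does not show; the three-floats-per-sprite layout, the texture names, and `build_batches` are all assumptions made up for illustration. Each returned batch corresponds to one buffer upload plus one draw call.

```python
from array import array

MAX_TEXTURE_SLOTS = 16  # from the post: up to 16 textures per draw call
FLOATS_PER_SPRITE = 3   # x, y, texture slot (this layout is an assumption)


def build_batches(sprites):
    """Pack (x, y, texture_name) sprites into as few flat buffers as possible.

    Returns a list of (texture_names, buffer) pairs. A sprite's slot value is
    the index of its texture within that batch's texture_names list.
    """
    batches = []
    slots = {}            # texture name -> slot index in the current batch
    buffer = array("f")   # one contiguous block of 32-bit floats
    for x, y, texture in sprites:
        if texture not in slots and len(slots) == MAX_TEXTURE_SLOTS:
            # A 17th texture does not fit: close this batch and start another.
            batches.append((list(slots), buffer))
            slots, buffer = {}, array("f")
        slot = slots.setdefault(texture, len(slots))
        buffer.extend((x, y, float(slot)))
    if buffer:
        batches.append((list(slots), buffer))
    return batches


if __name__ == "__main__":
    sprites = [(float(i), float(i * 2), f"tex{i % 8}") for i in range(2000)]
    batches = build_batches(sprites)
    print(len(batches), len(batches[0][1]))  # 1 batch holding 6000 floats
```

With 2000 sprites spread over 8 textures, everything fits in a single batch, so the whole frame's sprite data would cross to the GPU in one copy. Sprites should be grouped by texture before packing; interleaving more than 16 textures would force frequent batch breaks.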

0 notes

Text

What Is a Neural Processing Unit (NPU)? How Does It Work?

What is a Neural Processing Unit?

A neural processing unit (NPU) is a processor that mimics how the brain processes information. NPUs excel at deep learning, machine learning, and AI neural networks.

NPUs are designed to speed up AI operations and workloads, such as computing neural network layers made up of scalar, vector, and tensor arithmetic, in contrast to general-purpose central processing units (CPUs) or graphics processing units (GPUs).

Commonly used in heterogeneous computing designs that combine several kinds of processors (such as CPUs and GPUs), NPUs are often referred to as AI chips or AI accelerators. In most consumer devices, including laptops, smartphones, and other mobile devices, the NPU is integrated with other coprocessors on a single semiconductor microchip known as a system-on-chip (SoC). Large data centers, however, can use standalone NPUs connected directly to a system’s motherboard.

By adding a dedicated NPU, manufacturers can offer on-device generative AI apps that run AI workloads and machine learning algorithms in real time with comparatively low power consumption and high throughput.

Key features of NPUs

NPUs excel at tasks that call for low-latency parallel computing, such as deep learning algorithms, speech recognition, natural language processing, photo and video processing, and object detection.

The following are some of the main characteristics of NPUs:

Parallel processing: NPUs can break larger problems into smaller pieces and work on them simultaneously. As a result, the processor can execute several neural network operations at once.

Low precision arithmetic: To cut down on computing complexity and boost energy efficiency, NPUs frequently support 8-bit (or lower) operations.

High-bandwidth memory: To handle AI processing tasks that involve large datasets efficiently, many NPUs have high-bandwidth memory on-chip.

Hardware acceleration: Advances in NPU design have brought in hardware acceleration techniques such as systolic array architectures and improved tensor processing.
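As a rough illustration of the low precision arithmetic point above, the sketch below maps floating-point weights onto signed 8-bit integers using a single shared scale factor. This is one simple, commonly used scheme (symmetric quantization) and is not taken from any particular NPU. The function names `quantize_int8` and `dequantize` and the sample weights are assumptions for illustration.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]  # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Each restored value differs from the original by at most half a step (scale / 2).
```

The trade-off is a small rounding error per value in exchange for storing and multiplying 8-bit integers instead of 32-bit floats.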

How NPUs work

NPUs, which are modeled on the neural networks of the brain, work by simulating the behavior of human neurons and synapses at the circuit layer. This makes it possible to execute deep learning instruction sets in which a single instruction completes the processing of a group of virtual neurons.

Unlike conventional processors, NPUs are not designed for exact calculations. Instead, they are built for problem-solving and can improve over time by learning from different inputs and data types. By using machine learning, AI systems with NPUs can deliver customized solutions more quickly and with less manual programming.

One notable aspect of NPUs is their improved parallel processing capabilities, which let them speed up AI workloads by relieving high-capacity cores of the burden of handling many tasks at once. An NPU includes dedicated modules for multiplication and addition, activation functions, 2D data operations, and decompression. The multiplication and addition module computes matrix multiplication and addition, convolution, dot products, and other operations relevant to neural network processing.

Whereas conventional processors need thousands of instructions to complete this kind of neuron processing, an NPU may be able to do a comparable job with just one. An NPU also merges computation and storage for greater operational efficiency through synaptic weights: fluid computational variables assigned to network nodes that indicate the probability of a “correct” or “desired” output and that can adjust, or “learn”, over time.
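To make the multiplication and addition module a little more concrete, here is a small software sketch of the multiply-accumulate pattern that such hardware speeds up, followed by an activation function. It only illustrates the arithmetic and makes no claim about how any real NPU is wired. The names `multiply_accumulate`, `relu`, and `dense_layer`, and the sample numbers, are assumptions for illustration.

```python
def multiply_accumulate(inputs, weights, bias=0.0):
    """Dot product built from repeated multiply-then-add steps."""
    acc = bias
    for x, w in zip(inputs, weights):
        acc += x * w
    return acc


def relu(value):
    """A common activation function: negative results become zero."""
    return max(0.0, value)


def dense_layer(inputs, weight_rows, biases):
    """One neural network layer: a multiply-accumulate per output, then activation."""
    return [
        relu(multiply_accumulate(inputs, row, bias))
        for row, bias in zip(weight_rows, biases)
    ]


outputs = dense_layer([1.0, 2.0], [[0.5, 0.25], [-1.0, 0.0]], [0.0, 0.5])
print(outputs)  # [1.0, 0.0]
```

A CPU runs the inner loop one step at a time, while dedicated hardware can perform many of these multiply-add steps in parallel.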

Testing has revealed that some NPUs can outperform a comparable GPU by more than 100 times while using the same amount of power, even though NPU research is still ongoing.

Key advantages of NPUs

NPUs are not intended to replace traditional CPUs and GPUs. Nonetheless, an NPU’s architecture improves on the design of both to offer unmatched and more efficient parallelism and machine learning. When paired with CPUs and GPUs, NPUs provide a number of significant benefits over conventional systems. They can also improve general operations, although they are best suited to certain types of general tasks.

Among the main benefits are the following:

Parallel processing

As previously indicated, NPUs can break larger problems into smaller ones and work on them in parallel. The key is that, while GPUs are also very good at parallel processing, an NPU’s specialized design can outperform a comparable GPU while using less energy and taking up less space.

Enhanced efficiency

NPUs can carry out comparable parallel processing with significantly higher power efficiency than GPUs, which are frequently used for high-performance computing and AI workloads. As AI and other high-performance computing become more common and more energy-hungry, NPUs offer a useful way to cut critical power usage.

Multimedia data processing in real time

NPUs are built to process and respond more effectively to a wider variety of data inputs, such as speech, video, and graphics. When response time is crucial, augmented applications such as wearables, robotics, and Internet of Things (IoT) devices with NPUs can give real-time feedback, reducing operational friction.

Neural Processing Unit Price

Smartphone NPUs: These processors are built into smartphones, which usually cost between $800 and $1,200 for high-end models.

Edge AI NPUs: Google Edge TPU and other standalone NPUs cost $50–$500.

Data Center NPUs: The NVIDIA H100 costs $5,000–$30,000.

Read more on Govindhtech.com

#NeuralProcessingUnit#NPU#AI#NeuralNetworks#CPUs#GPUs#artificialintelligence#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

0 notes

Note

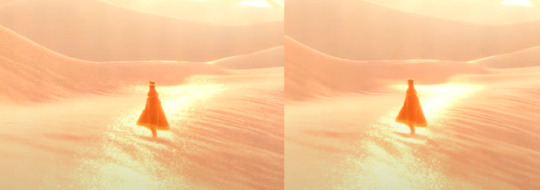

Oooh, what about Journey? I think the sand probably took a lot to pull off

it did!! i watched a video about it, god, like 6 years ago or something and it was a very very important thing for them to get just right. this is going to be a longer one because i know this one pretty extensively

here's the steps they took to reach it!!

and heres it all broken down:

so first off comes the base lighting!! when it comes to lighting things in videogames, a pretty common model is the lambert model. essentially you get how bright things are just by comparing the normal (the direction your pixel is facing in 3d space) with the light direction (so if your pixel is facing the light, it returns 1, full brightness. if the light is 90 degrees perpendicular to the pixel, it returns 0, completely dark. and pointing even further away you start to go negative. facing a full 180 gives you -1. thats dot product baybe!!!)
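if it helps to see that as code, here's a tiny python sketch of the plain lambert term described above. this is just the textbook dot product, not journey's actual shader, and the function names (`lambert`, `lambert_clamped`) plus the convention that the light vector points from the surface toward the light are my own assumptions for illustration.

```python
import math


def normalize(v):
    """Scale a 3D vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert(normal, to_light):
    """Raw lambert term: 1 facing the light, 0 at 90 degrees, -1 facing away."""
    return dot(normalize(normal), normalize(to_light))


def lambert_clamped(normal, to_light):
    """What usually gets used for brightness: negatives are clamped to 0."""
    return max(0.0, lambert(normal, to_light))


up = (0.0, 0.0, 1.0)
print(lambert(up, (0.0, 0.0, 1.0)))   # 1.0  (facing the light)
print(lambert(up, (1.0, 0.0, 0.0)))   # 0.0  (light is 90 degrees off)
print(lambert(up, (0.0, 0.0, -1.0)))  # -1.0 (facing fully away)
```

most renderers clamp the negative part to 0 (the `lambert_clamped` bit) so anything facing away from the light just goes dark.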

but they didnt like it. so. they just tried adding and multiplying random things!!! literally. until they got the thing on the right which they were like yeah this is better :)

you will also notice the little waves in the sand. all the sand dunes were built out of a heightmap (where things lower to the ground are closer to black and things higher off the ground are closer to white). so they used a really upscaled version of it to map a tiling normal map on top. they picked the map automatically based on how steep the sand was, and which direction it was facing (east/west got one texture, north/south got the other texture)

then its time for sparkles!!!! they do something very similar to what i do for sparkles, which is essentially, they take a very noisy normal map like this and if you are looking directly at a pixels direction, it sparkles!!
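a very rough python sketch of that sparkle idea: take the (noisy) normal for a pixel, compare it with the direction toward the camera, and only light it up when the two line up almost exactly. the threshold value and the name `sparkles` are made up for illustration, and the real thing would run per pixel in a shader rather than like this.

```python
import math


def normalize(v):
    """Scale a 3D vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sparkles(noisy_normal, to_viewer, threshold=0.98):
    """True when the pixel's noisy normal points almost straight at the viewer."""
    alignment = dot(normalize(noisy_normal), normalize(to_viewer))
    return alignment >= threshold


camera = (0.0, 0.0, 1.0)
print(sparkles((0.0, 0.0, 1.0), camera))  # True: facing the camera dead on
print(sparkles((1.0, 0.0, 1.0), camera))  # False: tilted 45 degrees away
```

because the normal map is so noisy, only a scattering of pixels pass the check at any moment, and which ones pass changes as the camera moves.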

this did create an issue, where the tops of sand dunes look uh, not what they were going for! (also before i transition to the next topic i should also mention the "ocean specular" where they basically just took the lighting equation you usually use for reflecting the sun/moon off of water, and uh, set it up on the sand instead with the above normal map. and it worked!!! ok back to the tops of the sand dunes issue)

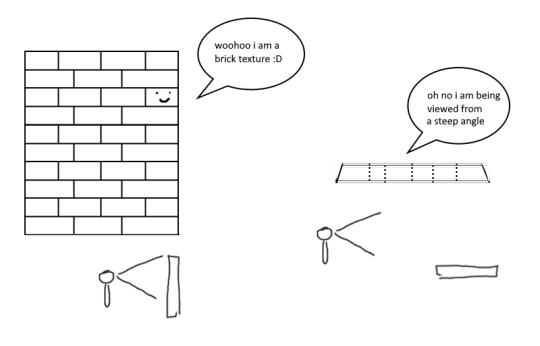

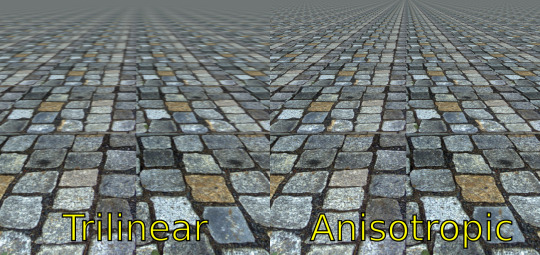

so certain parts just didnt look as they intended and this was a result of the anisotropic filtering failing. what is anisotropic filtering you ask ?? well i will do my best to explain it because i didnt actually understand it until 5 minutes ago!!!! this is going to be the longest part of this whole explanation!!!

so any time you are looking at a videogame with textures, those textures are generally coming from squares (or other Normal Shapes like a healthy rectangle). but ! lets say you are viewing something from a steep angle

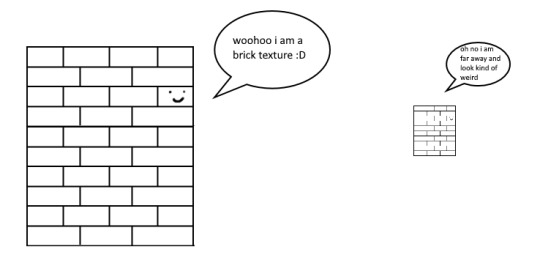

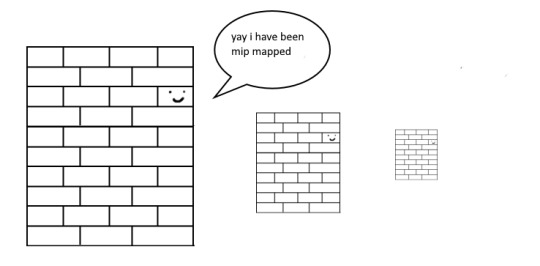

it gets all messed up!!! so howww do we fix this. well first we have to look at something called mip mapping. this is Another thing that is needed because video game textures are generally squares. because if you look at them from far away, the way each pixel gets sampled, you end up with some artifacting!!

so mip maps essentially just are the original texture, but a bunch of times scaled down Properly. and now when you sample that texture from far away (so see something off in the distance that has that texture), instead of sampling from the original which might not look good from that distance, you sample from the scaled down one, which does look good from that distance
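here's a small python sketch of building a mip chain like that, assuming a square grayscale texture whose size is a power of two and using a plain 2x2 average for the "scaled down Properly" part. real tools can use fancier filters, and the names `downsample` and `build_mip_chain` are just made up for this example.

```python
def downsample(image):
    """Halve a square image by averaging each 2x2 block of pixels."""
    size = len(image) // 2
    return [
        [
            (
                image[2 * y][2 * x]
                + image[2 * y][2 * x + 1]
                + image[2 * y + 1][2 * x]
                + image[2 * y + 1][2 * x + 1]
            )
            / 4
            for x in range(size)
        ]
        for y in range(size)
    ]


def build_mip_chain(image):
    """Return [original, half size, quarter size, ...] down to a single pixel."""
    chain = [image]
    while len(chain[-1]) > 1:
        chain.append(downsample(chain[-1]))
    return chain


# a 4x4 black and white checkerboard
checker = [
    [0, 255, 0, 255],
    [255, 0, 255, 0],
    [0, 255, 0, 255],
    [255, 0, 255, 0],
]
mips = build_mip_chain(checker)
print([len(level) for level in mips])  # [4, 2, 1]
print(mips[1])  # [[127.5, 127.5], [127.5, 127.5]]
```

the checkerboard turning into flat grey at the smaller sizes is exactly the "got Less Sparkly, and a bit smooth" effect: averaging wipes out fine detail.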

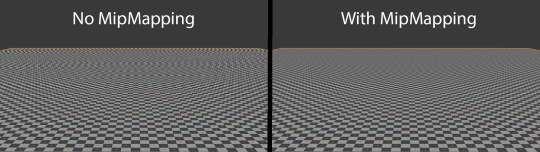

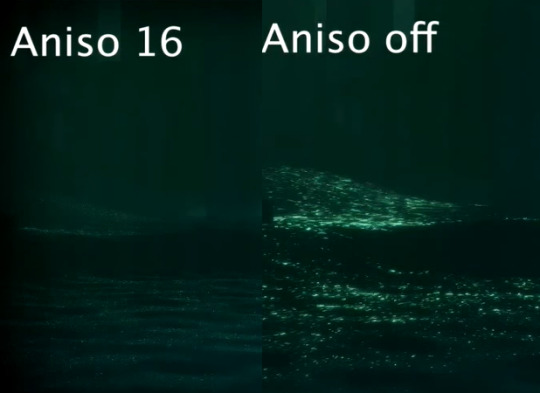

ok. do you understand mip mapping now. ok. great. now imagine you are a GPU and you know exactly. which parts of each different mip map to sample from. to make the texture look the Absolute Best from the angle you are looking at it from. how do you decide which mip map to sample, and how to sample it? i dont know. i dont know. i dont know how it works. but thats anisotropic filtering. without it looking at things from a steep angle will look blurry, but with it, your GPU knows how to make it look Crisp by using all the different mip maps and sampling them multiple times. yay! the more you let it sample, the crisper it can get. without is on the left, with is on the right!!

ok. now. generally this is just a nice little thing to have because its kind of expensive. BUT. when you are using a normal map that is very very grainy like the journey people are, for all the sparkles. having texture fidelity hold up at all angles is very very important. because without it, your textures can get a bit muddied when viewing it from any angle that isnt Straight On, and this will happen

cool? sure. but not what they were going for!! (16 means that the aniso is allowed to sample the mip maps sixteen times!! thats a lot)

but luckily aniso 16 allows for that pixel perfect normal map look they are going for. EXCEPT. when viewed from the steepest of angles. bringing us back here

so how did they fix this ? its really really clever. you guys remember mip maps right. so if you have a texture. and have its mip maps look like this

that means that anything closer to you will look darker, because its sampling from the biggest mip map, and the further away you get, the lighter the texture is going to end up. EXCEPT !!!! because of aisononotropic filtering. it will do the whole sample other mip maps too. and the places where the anisotropic filtering fail just so happen to be the places where it starts sampling the furthest texture. making the parts that fail that are close to the camera end up as white!!!

you can see that little ridge that was causing problems is a solid white at the tip, when it should still be grey. so they used this and essentially just told it not to render sparkles on the white parts. problem solved

we arent done yet though because you guys remember the mip maps? well. they are causing their own problems. because when you shrink down the sparkly normal map, it got Less Sparkly, and a bit smooth. soooo . they just made the normal map mip maps sharper (they just multiplied them by 2. this just Worked)

the Sharp mip maps are on the left here!!

and uh... thats it!!!! phew. hope at least some of this made sense

433 notes

Text

GAMERSMENU

This is our main gaming PC build guide: the set of parts we'd recommend to anyone wanting to build a new system that balances pricing and performance. I will admit, though, that it can make for somewhat depressing reading at the moment, all thanks to the continuing GPU drought.

Things do seem to be improving, and you can buy almost everything on this list at a normal price. But graphics cards are still badly overpriced whenever they're available. Such is life, I suppose.

Despite this, we're looking at a system with a target price of around $1,000, and that is where the rest of our build sits, allowing for the $400 Nvidia GeForce RTX 3060 Ti that we would recommend for this level of system. And while it's possible to get everything apart from the GPU today, the graphics card really is the beating heart of any gaming PC, and that makes it hard to recommend a full build without basing your new rig around a GPU.

Looking to build your best gaming PC in 2021? This guide has the best PC builds at various budgets, put together based on the best-performing hardware per dollar spent. Building your own gaming PC has many benefits, including the ability to customize and personalize your PC and a greater appreciation for your build, and it's really fun. Even though building your own PC can be more budget-friendly, we all have a budget to stick to, which is where the following PC builds come into play.

The best gaming PC builds you see below are updated every month and are split into the most popular budget and gaming performance categories, which let you easily plan your next PC without the hassle of doing all of the research yourself.

With modern PC games advancing at such a rapid rate, it is no surprise that many titles have been released that most standard cookie-cutter PCs (cheap pre-built systems) can barely handle. And as PC gamers we like to have and experience the best. We like to play our games on the highest settings possible, with the highest framerate possible (and with as many RGB lights as possible).

Fortunately, these days, even a budget gaming PC will let you run most games on higher settings on an affordable 1080p monitor. (Although in this guide we'll discuss high-end PCs rather than budget-friendly systems.)

For those of you who just want to get straight to ordering the parts for your system, I've put together five different pre-made part lists ($1,000, $1,250, $1,500, $1,750, and $2,000) so that you can skip the part selection process and get straight to building your new powerful gaming PC for 2021.

These builds are regularly updated with the top parts at the best prices. So, if you're looking at these builds, you can bet they'll give you maximum performance for the budget you've set. And if you're looking for a similarly priced pre-built gaming PC, just click on the "PRE-BUILT »" link to check out an alternative option.

Your Ticket to High-End Gaming!

Do you want to build the best high-end gaming PC possible for $1,500? Then you've come to the right place! The build we profile here offers the best balance of CPU and GPU power you'll find in any build on the 'Net, along with high-end yet cost-effective parts in every other category. The goal: maximum frames per dollar without skimping on the things everyone else skimps on, like a quality power supply and a big solid-state drive. The last thing you want is a dragster engine in an old beater chassis, so we make sure your gaming PC build checks all the boxes!

But before we get to our recommendations, we need to talk about the state of the PC component market right now. Due to a perfect storm of production issues and high demand, it's often hard to find some of the key PC parts in stock. All of the best graphics cards, and even the worst ones, are out of stock. You can find almost any card for sale from scalpers on eBay and, according to our recent GPU Price Index, that means spending about $800 for an RTX 3060 card that should cost $329.

While GPUs are the worst offenders, the Ryzen 5000 series CPUs are also out of stock or selling at inflated prices everywhere. However, on any given day, you might find one for sale.

Even though building your own is often cheaper than buying prebuilt, pricing is very much a personal thing, depending on what games you want to play. Some people might not raise an eyebrow at spending four grand on a gaming PC to crank up Assassin's Creed Valhalla's PC settings to 4K, while most of us would still struggle to scrape together $1,000 for a build that can run the latest Call of Duty at 1080p.

1 note

Text

2020 Recap - My Year in Gaming

2020. What a year for video games. I had big plans for last year, but in the end I did very little besides play video games, and I don’t think I’m alone there since we were all stuck at home looking for a way out of reality. I wanted to do a year-end recap as I’ve done sporadically in past years, but this one will be different than the typical “Games of the Year” format because despite all the games I played in 2020, almost none of them came out in 2020, and some of the things that defined my year in gaming weren't even games.

Resident Evil 3 Remake (PS4)

RE3 was one of the only games I played in 2020 that didn’t coincide with the deadly pandemic's spread across the US. RE3 is, of course, a game about the spread of a deadly virus in Anytown, USA. It was an appetizer, I guess.

When the Resident Evil 2 remake dropped in 2019, there were some things I loved about it, and a few things that felt like steps back from the original. I feel much the same about RE3. I had also theorized that a Resident Evil 3 remake would be better off as RE2 DLC than as a separate full-length game, and considering how short RE3 turned out, with some of the best sections of the original cut entirely (namely, the clock tower), I stand by my theory.

Oh well, at least Jill gets this rad gun, which for the time being is the closest thing to a new Lost Planet we can hope for anytime soon.

Sekiro (PS4)

Sekiro is the first video game I ever Platinumed. This is partly because conquering the base game was such a spartan exercise that going the extra mile to get the Platinum didn’t seem so bad, but it’s also surely a result of the pandemic. I needed a project and a big win. Who didn't?

I wrote at length about why I like Sekiro more than every other modern FromSoft game, and also about the game’s cherry-on-top moment that reminded me of blowing up Hitler’s face in Bionic Commando. Please read them!

Death Stranding (PS4)

Release date notwithstanding, this was obviously the Game of 2020. I wrote about it here, here, and here. This game bears the distinction of being the second one I ever Platinumed. It took 150 hours. Only then did I learn I had a hoverboard.

Streets of Rage 4 (PS4)

This is the only 2020 game I played for more than a few hours. In fact, I cleared the entire game at least five times. I still don’t think it captures the gritty aesthetic of the prior Streets of Rages (nor even tries to), but this is probably the best-feeling bup I've played. Huge bonus points for finally bringing back Adam, but in the end I found it hard not to pick Blaze every time.

Blaster Master Zero 2 (Switch)

What impressed me about this sequel from Inti Creates was that it wasn’t just more of the same, even though that would've been fine. BMZ2 builds on its already excellent predecessor with a catchy new format where players can freely cruise the cosmos and stages take the varied form of planets—some big and sprawling, others short and sweet. Hopping at will from planet to planet without ever knowing what experiences and treasure each one held felt like system jumping in No Man’s Sky and island hopping in The Legend of Zelda: Phantom Hourglass, both of which felt like opening presents.

Dragon Force (Saturn)

Charming, satisfying, and addictive as a bag of chips. Unlike a bag of chips, when it’s over, you can do it all again. And again. And it’ll be different each time! This might be the first strategy game I've truly loved. Better late than never.

The PC Engine Mini

The PC Engine/TurboGrafx-16 Mini seems a particularly justifiable mini-console for people outside Japan because so many missed these consoles entirely, the games are hard to obtain, and the lineup includes titles spanning the entire convoluted Turbo/PC Engine ecosystem—the TurboGrafx-CD/CD-ROM², Super CD-ROM², Arcade CD-ROM² and SuperGrafx, in addition to plain, old standard HuCard games. I myself didn’t know the first thing about these systems before. It’s like reliving the nineties again for the first time.

Most of the titles included are simple action games that don't require a command of Japanese, but make no mistake: being able to understand Snatcher and TokiMemo does make me feel like an elite special person worth more than many of you.

(Side note: From a gender representation perspective, the difference between Snatcher and Death Stranding is stark. Virtually every interaction with every woman or girl in Snatcher is decorated with ways to sexually harass her. Guess someone finally had a conversation with our favorite auteur.)

A Gaming PC

I’d threatened to transition to PC gaming for years after beholding the framerate difference between the console and PC versions of DmC in 2012, and last July I finally took the leap, buying an ASUS “Republic of Gamers” (ugh) laptop with an NVIDIA GeForce RTX 2070 Max-Q GPU. It seems like consoles are getting more PC-like all the time, especially with all these half-step iterations that splinter performance and sometimes even the feature set (à la the New 3DS and Switch Lite), so the impending new generation seemed like a fine time to change course.

In the half-year since, I’ve barely played a single PC game more recent than 2013, but just replaying PS3-era games at high settings has been like rediscovering them for the first time.

I also finally experienced keyboard-and-mouse shooting and understand now why PC gamers think they're better than everyone else. Max Payne is a completely different game with a mouse. Are all shooters like this??

The USPS

Early in the year, I rediscovered my childhood game shop, Starland, which is now an online hub known as eStarland.com with a brick-and-mortar showroom. To my delight, it has become one of the best and most modestly priced sources for import Saturn games in the country, and I scored Shining Force III’s second and third episodes, long missing from my collection, for a mere ten bucks each!

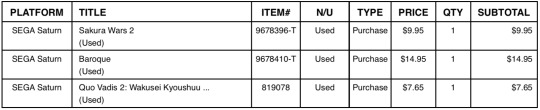

In June, I treated myself to a trio of Saturn imports from eStarland: the tactics-meets-dating-sim mashup Sakura Taisen 2, the nicely presented RTS space opera Quo Vadis 2, and beloved gothic dungeon crawler Baroque. Miraculously, this haul amounted to just around thirty dollars total. Less miraculously, they never arrived. This was the second time I’d had something lost in the mail in my entire life, and also the second time that month. Something was wrong with the USPS, and it wasn’t just COVID pains. We would soon learn Trump had been actively working to sabotage one of the nation’s oldest and most reliable institutions in a plot to compromise the upcoming presidential election.

Frankly it’s a miracle there’s still such a thing as “delivery” at all, and a few missing video games is the last of my worries considering what caused it, but nevertheless this was an experience in my gaming life that could not have happened any other year. I won’t forget it.

*By the way, USPS reimbursed me for the insured value of the missing order, which was fifty bucks. So I actually profited a little off the experience.

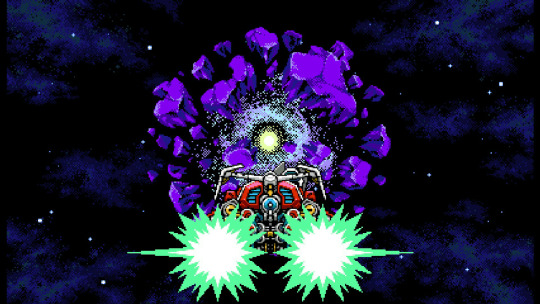

Mega Everdrive Pro

I love collecting for the Genesis and Mega Drive, but I will not pay hundreds of dollars for a video game that retailed for about sixty. The publishers never asked for that, and the developers won’t see a (ragna)cent of the money. I'm also far less inclined to start collecting for Sega CD, since the hardware is notoriously breakable, the cases are huge and also breakable, and the library just isn't that good.

Still, I'd been increasingly curious about the add-on as an interesting piece of Sega history, so when I learned Ukrainian mad scientist KRIKzz had released a new Mega Everdrive that doubled as a Sega CD FPGA, I finally took the plunge into the world of flash carts. This has proven a great way to play some of the Mega Drive’s big-ticket rarities I will never buy, namely shmups like Advanced Busterhawk Gley Lancer and Eliminate Down, as well as try out prospective additions to the collection. I never would have discovered the phenomenal marvel of engineering and synth composition that is Star Cruiser without this thing, but now that I have, it’s high on the shopping list.

The Mega Everdrive Pro is functionally nearly identical to TerraOnion’s “Mega SD” cartridge, but slightly less expensive, comes in a “normal” cartridge shell instead of the larger Virtua Racing-style one, and supports a single hardworking dude in Ukraine rather than a company with reportedly iffy customer service.

Twitch

Getting a PC also resolved issues that had long prevented me from achieving a real streaming setup, and much of my gaming life in 2020 was about ramping up my streaming efforts. I even made Affiliate in about a month. Streaming has been a great creative outlet and distraction, as well as a way to connect with other people during the COVID depression and structure my gaming time. Find me every Monday through Thursday 8-11pm Eastern at twitch.tv/lacquerware.

Metroid: Other M (Dolphin)

PC ownership also gave me access to the versatile Dolphin emulator, liberating a handful of great Wii exclusives from their disposable battery-powered prison.

One of the Wii games I fired up on Dolphin was Metroid: Other M, a game I’d always wanted to try but had been dissuaded by years of bad publicity and the fact that I never had any goddamn batteries. I know I should temper what I’m about to say by acknowledging that I was playing at 1080p/60fps on a PS4 controller so my experience was automatically a vast improvement over that of all Wii players, but I’m increasingly confident Metroid: Other M was the most fun I’ve ever had playing a Metroid game. I haven’t decided yet if I’m willing to die on this hill, but I will just say that if you like the Metroidvania genre in general and aren’t particularly attached to the Metroid series’ story or its habit of making you wander aimlessly for hours, there’s a very high chance you will enjoy Other M—especially if you play it on Dolphin.

Don't Starve Together (PC)

Don't Starve is the only game my friend Jason plays, so last year I tried to get into it with him. I respect this game's singular devotion to the concept of survival, but make no mistake: every session of Don't Starve ends with you starving to death. Or freezing. Or getting stomped by a giant deity of the forest. The entire game is staving off death until it inevitably comes. Even when death comes, you can revive infinitely (in whatever mode we were playing), which means even death is not an end goal. There is no end goal. You don't even have the leeway to "play" and create your own meaning as you do in similarly zen games like Dead Rising.

Don't Starve is a game for people for whom hard work is the ultimate reward in and of itself. Don't Starve told me something about Jason.

G-Darius (PS1)

In the early fall, Sony announced they were dropping PS3, PSP, and Vita support from the browser and mobile versions of their PSN Store, and since the PS3 version of the store app runs like a solar-powered parking meter in Seattle, I decided this was my last chance to stock up on Japanese PSN gems.

Among my final haul, the PS1 port of G-Darius proved an instant favorite. Take down the usual cast of mechanized fish in a vibrant, chunky, low-poly style that perfectly inhabits the constraints of the original PlayStation hardware. I believe this is the first Darius game that lets you get into giant beam duels with the bosses, which is quite definitely one of the coolest things a video game has ever let you do. The PS1 port is also surprisingly feature-rich, including some easier difficulty levels that present an actually surmountable challenge for non-savants.

This one’s coming to the upcoming Darius Cozmic Revelation collection on Switch alongside DARIUSBURST, a good-ass romp in its own right.

Red Entertainment

In my effort to shine a tiny spotlight on some of the unsung Interesting Games of gaming, I found myself drawn again and again to the work of Red Entertainment. First there were cavechild headbutt simulator Bonk’s Adventure and twin shmups Gates of Thunder and Lords of Thunder on the PC Engine Mini. Then I streamed full playthroughs of the PS2’s best samurai-era, off-brand 3D Castlevania, Blood Will Tell and the Trigun-adjacent stand-‘n-gun, Gungrave: Overdose. Then I was dazzled by Bonk’s Adventure’s futuristic spin-off cute-‘em-up, Air Zonk, which was also sneakily tucked away on my PC Engine Mini in the “TurboGrafx-16” section. It turned out all these games were made by the same miracle developer responsible for Bujingai, the stylish PS2 wushu game starring Gackt and a household name here at the Lacquerware estate. How prolific can one team be???

Month of Cyberpunk

In November, I started toying with the idea of themed months on my Twitch channel with “Cyberpunk month.” It was supposed to be a build-up to Cyberpunk 2077’s highly anticipated November release, but holy shit that didn’t happen, did it? Still, I always find myself gravitating toward this genre in November, I guess because I associate November with gloom (even though this year it was sunny almost every day). A month is a long time to adhere to a single theme, but cyberpunk is such a well-served niche in gaming that I could easily start an all-cyberpunk Twitch channel. The fact that we’re so spoiled with choice makes Cyberpunk 2077’s terrible launch all the more embarrassing. Here are just some of the games I played (and streamed!) in November:

Ghostrunner, Shadowrun (Genesis), RUINER, Remember Me, Transistor, Rise of the Dragon (Sega CD), Shadowrun (Mega CD), Cyber Doll (Saturn), Binary Domain, Shadowrun Returns, Blade Runner (PC), Deus Ex: Human Revolution, Deus Ex: Mankind Divided, Observer

Shadowrun on the Genesis gets my top pick, but the two most recent Deus Ex games are great alternatives for those looking for something in the vein of 2077 that isn’t infested with termites.

Lost Planet 2

Every year. I played through it twice in 2020.

Dead Rising 4

I slept on this one too long. While it's a far cry from the original game, it's easily the most fun I've had with a Christmas game since Christmas NiGHTS. This is the game a lot of people thought they were getting when they bought the original Dead Rising with their new Xbox 360--goofy, indulgent, and pressure-free.

Devil May Cry 5: Vergil (PS4)

Vergil dropped for last-gen consoles in December and breathed a whole lot of life into a game that was already at the head of its class.

Nioh 2

I’ve only played a few hours of Nioh 2 because I promised my friend I’d co-op it with him and wouldn’t play ahead. But he’s a grad student with two small children. Nevertheless, Nioh 2 is my Game of 2020.

And that's it! Guess I'll spend 2021 playing games that came out last year, and maybe eventually getting vaccinated? Please?

#2020 year in review best of games of the year game of the year goty recap lacquerware death stranding sekiro darius g-darius video games gam#dragon force#2020#year in review#best of#games of the year#game of the year#goty#recap#review#lacquerware#death stranding#sekiro#darius#g-darius#video games#games#gaming#nioh#nioh 2#devil may cry#devil may cry 5#dmc5#vergil#dead rising 4#dr4#frank west#christmas games#lost planet#lp2

Note

please please pleaseee tell me about video game graphics

thank you zero, i owe you my damn life

a side note: i only have experience in Blender when it comes to shading, rendering and similar things, and Dreamy Theatre 2nd is the only Project DIVA game i’ve ever played. i am nowhere near an expert, and this is just a rant so this may or may not be inaccurate oops

OKAY SO. project DIVA. love those games, have been a fan of them since like?? i first found them when i was 12?? so seeing the fact that it now has a Switch release made me really happy, and i’ve been thinking of buying it, since i dont want to clog up my brothers PS3’s memory just for an old PD game, right? the other day though, i stumbled across this video, which compares 4 of the games World is Mine PV’s, right? and i kid you not that when i understood that the Switch version was the top right one, i got. so surprised. because it looks like a downgrade from even the PS4 one, which i personally believe that in this case was a downgrade from the PS3 one.

turns out, Mega Mix uses toon shaders, which can be really good when used right! but from what i’ve seen in MM, it just looks so weird.

i just wanna preface by saying that Future Tone is the game that looks the best overall to me; the shaders and effects highlights the models and sets really well, and uses textures (look at Miku’s clothes in Rolling Girl. they’re plasticy, the light reflects on them and the different textures on her models help highlight what is what. and i think Rolling Girl in FT looks REALLY BAD compared to some previous iterations. look at how it compares to F 2nd and how much the atmosphere is ruined in FT. by the looks of it, F 2nd also uses toon shaders, though im not sure)

toon shaders can be extremely effective. they make the characters more cartoony, overriding any textures and making everything look plasticy and smooth. MMD uses toon shaders - and it causes that unique, classic MMD look!! and i love that look!! but the MMD models were made with that in mind. Mega Mix’s models look like direct ports of the PS4 game, aka they weren’t made for toon shaders.

of course, the context of the song and the pv changes the situation drastically! i’ve seen songs like Arifureta Sekai Seifuku where i actually believe the toon shader enhances the colorful, bouncy nature of the song and PV! thats where i think the toon shaders fits, in bright happy songs! the issue is, that very rarely are there PVs like that. most of them use darker tones, grittier sets or just duller colors. for songs with effects, the PS3 triumphs, but overall the PS4 just looks so much better. just look at ODDS & ENDS. F 2nd still holds up, FT looks amazing and then. MM is just. she’s flat. it’s almost as if any sort of color filter or light bounces off her.

my main issue with the toon shader in this case, is that Miku looks washed out. any texture, bump or feature disappears, in favor of a flat, bright look. note how you can’t see her nose at all? its small, and that makes it blend in. it drowns. any texture in her hair is replaced with a bright shine and an even, uniform color. look at how well the toon shader is utilized in Ghost Rule, and this was made in MMD. the toon shader in MM leaves no room for things like bloom, reflection or shine. it all looks like its absorbed into Miku, and that she exists on a different layer compared to her surroundings.

this was intentional, from what ive heard! SEGA wanted it to look kid friendly, which means cartoony, 2D inspired and simple. but thats pretty stupid, right? when i was 12, i was drawn to the PD games both because of the gritty songs, but also the bright, happy colors. hell, look at PoPiPo! i remember this PV so clearly because the bright colors and the look made me happy, and this was achieved without toon shaders! and its achieved in Future Tone too, like. Look at World’s End Dancehall. It’s all neon colors without a single dark shadow in sight. and that video came out this year, and because of it, i assumed that this was the Switch port! i was wrong, obviously. still the PS4 release.

i’ve seen some people argue that toon shaders were used because the Switch isn’t a powerful console. first of all, it runs games like Witcher 3, BOTW, and so much more. I agree, the Switch isnt the most powerful console! but it could easily run a game like F 2nd, which still has complex effects and shading. and toon shading is still heavy on the CPU and GPU! there’s even a video where they’ve removed the toon shader in Mega Mix, and it still runs well and looks super good. you can see the textures, the small details and it does her model wonders. you can see the wrinkles in her clothes!!!! and this is still on the switch! it looks straight out of Future Tone. and that game is almost 7 years old. the switch could 100% run it with no to minor problems.

but as far as i am aware, the Project DIVA department has been merged with other departments in SEGA, so its very possible this was made because of budget cuts as well. after all, the toon shader probably takes less effort to slap onto a song, especially since it virtually looks the same on every song, and the game itself is basically a smaller port of Future Tone.

this is the only thing ive been able to think about this week honestly, it makes me kinda sad ngl

#now THIS is a rant babey!!!#asks#pr0tagonists#vocaloid#i tried to highlight and stuff so its easier to read#because god knows i hate big chunks of text that looks the same#long post#save tag

Text

Porting Falcon Age to the Oculus Quest

There have already been several blog posts and articles on how to port an existing VR game to the Quest. So we figured what better way to celebrate Falcon Age coming to the Oculus Quest than to write another one!

So what we did was reduce the draw calls, reduce the poly counts, and remove some visual effects to lower the CPU and GPU usage, allowing us to keep a constant 72 Hz. Just like everyone else!

Thank you for coming to our Tech talk. See you next year!

...

Okay, you probably want more than that.

Falcon Age

So let's talk a bit about the original PlayStation VR and PC versions of the game and a couple of the things we thought were important about that experience we wanted to keep beyond the basics of the game play.

Loading Screens

Once you’re past the main menu and into the game, Falcon Age has no loading screens. We felt this was important to make the world feel like a real place the player could explore. But this comes at some cost: we need to be mindful of the number of objects active at one time and, in some ways even more importantly, the number of objects that are enabled or disabled at one time. In Unity there can be a significant cost to enabling an object, so much so that we had to be mindful of it on the PlayStation 4, where loading a new area could cause a massive spike in frame time and a drop in frame rate. Going to the Quest, this would only be more of an issue.

Lighting & Environmental Changes

While the game doesn’t have a dynamic time of day, different areas have different environmental setups. We dynamically fade between different types of lighting, skies, fog, and post processing to give areas a unique feel. Events and player actions in the game can also trigger these transitions. This meant all of our lighting and shadows were real time, along with having custom systems for handling transitioning between skies and our custom gradient fog.

Our skies are all hand-painted cloud and horizon cube maps layered on top of Procedural Sky from the asset store, which handles the sky color and sun circle, with some minor tweaks to allow fading between different cube maps. Having the sun in the sky box be dynamic allowed the direction to change without requiring totally new sky boxes to be painted.

Our gradient fog works by having a color gradient ramp stored in a 1 by 64 pixel texture that is sampled using the exp2 fog opacity, computed from spherical distance, as the UV coordinate. We can fade between different fog types just by blending between different textures and sampling the blended result. This is functionally similar to the fog technique popularized by Campo Santo’s Firewatch, though it is not applied as a post process as it was for that game. Instead all shaders used in the game were hand modified to use this custom fog instead of Unity’s built-in fog.
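The ramp lookup described above can be sketched on the CPU. This is an illustration in Python, not the game's shader code: the function names, the exact opacity formula (a plain squared-exponential falloff standing in for Unity's exp2 fog), the colors, and the density value are all assumptions, and the sampling ignores texel-center offsets that a real GPU texture fetch would apply.

```python
import math

RAMP_SIZE = 64  # the post describes a 1 by 64 pixel ramp texture


def lerp_color(a, b, t):
    """Linearly interpolate two RGB tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def make_ramp(near_color, far_color):
    """Build a simple two-color ramp (hypothetical; the real ramps are authored textures)."""
    return [lerp_color(near_color, far_color, i / (RAMP_SIZE - 1))
            for i in range(RAMP_SIZE)]


def fog_opacity(distance, density):
    """Squared-exponential fog opacity: 0 at the camera, approaching 1 far away."""
    return 1.0 - math.exp(-((density * distance) ** 2))


def blend_ramps(ramp_a, ramp_b, weight):
    """Cross-fade two ramps texel by texel (weight 0 gives ramp_a, 1 gives ramp_b)."""
    return [lerp_color(a, b, weight) for a, b in zip(ramp_a, ramp_b)]


def sample_ramp(ramp, u):
    """Sample the ramp at u in [0, 1] with clamping and linear filtering."""
    u = min(max(u, 0.0), 1.0)
    pos = u * (len(ramp) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(ramp) - 1)
    return lerp_color(ramp[lo], ramp[hi], pos - lo)


def fog_color(ramp, distance, density):
    """Use the fog opacity as the lookup coordinate into the ramp."""
    return sample_ramp(ramp, fog_opacity(distance, density))


# Fade halfway from a warm desert fog to a cold night fog, then look up
# the fog color for a fragment 40 units away (all values are made up).
desert = make_ramp((1.0, 0.8, 0.6), (0.9, 0.5, 0.3))
night = make_ramp((0.1, 0.1, 0.3), (0.0, 0.0, 0.1))
current = blend_ramps(desert, night, 0.5)
print(fog_color(current, distance=40.0, density=0.02))
```

Because the transition only blends two small ramps, the per-fragment cost stays at one lookup no matter which fog types are being faded.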

Post processing was mostly handled by Unity’s own Post Processing Stack V2, which includes the ability to fade between volumes, something our custom systems extended. While we knew not all of this would be able to translate to the Quest, we needed to retain as much of this as possible.

The Bird

At its core, Falcon Age is about your interactions with your bird. Petting, feeding, playing, hunting, exploring, and cooperating with her. One of the subtle but important aspects of how she “felt” to the player was her feathers, and the ability for the player to pet her and have her and her feathers react. She also has special animations for perching on the player’s hand or even individual fingers, and head stabilization. We wanted to retain as much of this aspect of the game as we could, even if it came at the cost of other parts.

You can read more about the work we did on the bird interactions and AI in a previous dev blog post here: https://outerloop.tumblr.com/post/177984549261/anatomy-of-a-falcon

Taking on the Quest

Now, there had to be some compromises, but how bad was it really? The first thing we did was we took the PC version of the game (which natively supports the Oculus Rift) and got that running on the Quest. We left things mostly unchanged, just with the graphics settings set to very low, similar to the base PlayStation 4 PSVR version of the game.

It ran at less than 5 fps. Then it crashed.

Ooph.

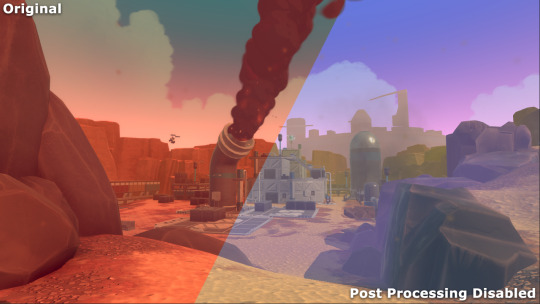

But there were some obvious things we could do to fix a lot of that. Post processing had to go: just about any post processing is too expensive on the Quest, so it was disabled entirely. We forced all the textures in the game to 1/8th resolution, which mostly stopped the crashes, as we had been running out of memory. Next up were real time shadows, which also got disabled entirely. Then we turned off grass, and pulled in some of the LOD distances. These weren’t necessarily changes we would keep, just ones to see what it would take to get the performance better. And after that we were doing much better.
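To get a feel for why the texture change helped so much with memory, here is a rough bit of arithmetic in Python. It assumes "1/8th resolution" means one eighth along each axis, and it uses an uncompressed 4-bytes-per-pixel texture with no mipmaps purely for illustration. The game's real texture sizes and formats are not given in this post, so the 2048 by 2048 example is hypothetical.

```python
def texture_bytes(width, height, bytes_per_pixel=4):
    """Memory for one uncompressed texture without mipmaps (illustrative only)."""
    return width * height * bytes_per_pixel


def downscaled(width, height, divisor):
    """Shrink both axes by the same divisor, never going below 1 pixel."""
    return max(width // divisor, 1), max(height // divisor, 1)


full = texture_bytes(2048, 2048)                     # hypothetical source texture
eighth = texture_bytes(*downscaled(2048, 2048, 8))   # 256 x 256 after the change

print(full / (1024 * 1024))    # 16.0 (MiB)
print(eighth / (1024 * 1024))  # 0.25 (MiB)
print(full // eighth)          # 64
```

Under that assumption each texture shrinks to 1/64th of its original footprint, since the saving applies along both axes.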

A real, solid … 50 fps.

Yeah, nope.
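For scale, the gap between 50 fps and the Quest's 72 fps is easier to reason about as time per frame. A quick Python calculation, using only those two numbers:

```python
def frame_time_ms(fps):
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


current = frame_time_ms(50)   # 20.0 ms per frame
target = frame_time_ms(72)    # about 13.89 ms per frame
over_budget = current - target

print(round(target, 2))                      # 13.89
print(round(over_budget, 2))                 # 6.11
print(round(over_budget / current * 100, 1)) # 30.6
```

So roughly 6 ms, or about 30% of every frame, still had to be cut.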

That is still a big divide between where we were and the 72 fps we needed to be at. It became clear that the game would not run on the Quest without more significant changes and removal of assets. Not to mention the game did not look especially nice at this point. So instead of taking the game as it was on the PlayStation VR and PC and making it look like a version of that with the quality sliders set to potato, we chose to go for a slightly different look. Something that would feel a little more deliberate while retaining the overall feel.

Something like this.

Optimize, Optimize, Optimize (and when that fails delete)

Vertex & Batch Count

One of the first and really obvious things we needed to do was to bring down the mesh complexity. On the PlayStation 4 we were pushing somewhere between 250,000 and 500,000 vertices each frame. The longtime rule of thumb for mobile VR has been to be somewhere closer to 100,000 vertices, maybe 200,000 max for the Quest.

This was in some ways actually easier than it sounds for us. We turned off shadows. That cut the vertex count down significantly in many areas, as much of the total scene’s vertex count comes from rendering the shadow maps. But the worst-case areas were still a problem.
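A rough way to see why shadows weigh so heavily on vertex count: every shadow map pass re-renders the shadow-casting geometry, so those vertices are processed again on top of the main view. The Python sketch below uses made-up numbers for one scene. Only the 100,000 and 200,000 budget figures come from the rule of thumb above.

```python
QUEST_TARGET = 100_000  # rule-of-thumb target for mobile VR
QUEST_MAX = 200_000     # rough upper bound for the Quest


def vertices_per_frame(main_pass, shadow_passes=()):
    """Total vertices processed: the main view plus every shadow map pass."""
    return main_pass + sum(shadow_passes)


# Hypothetical scene: 150k vertices visible in the main view, and two
# shadow passes that each re-render 100k vertices of shadow casters.
with_shadows = vertices_per_frame(150_000, shadow_passes=(100_000, 100_000))
without_shadows = vertices_per_frame(150_000)

print(with_shadows)                     # 350000
print(without_shadows)                  # 150000
print(without_shadows <= QUEST_MAX)     # True
print(without_shadows <= QUEST_TARGET)  # False
```

In this made-up case, dropping shadows alone brings the scene under the rough maximum but not down to the target, which matches the point that the heaviest areas needed further work.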

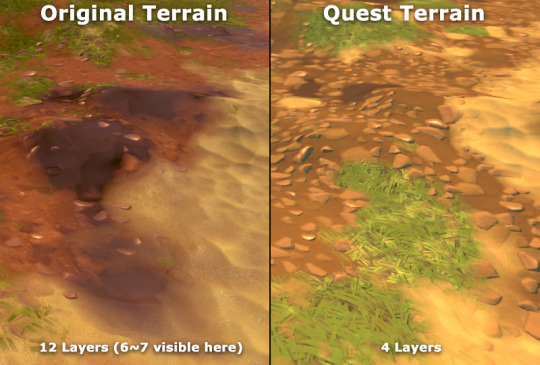

We also needed to reduce the total number of objects and number of materials being used at one time to help with batching. If you’ve read any other “porting to Quest” posts by other developers this is all going to be familiar.
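As a simplified picture of why material count matters for batching, objects that share a material can potentially be drawn together, while each distinct material forces at least one separate draw. The Python sketch below only counts distinct materials. The object and material names are hypothetical, and real batching in Unity has further constraints this ignores.

```python
from collections import Counter


def estimate_batches(renderers):
    """Best-case batch count: one batch per distinct material.

    renderers is an iterable of (object_name, material_name) pairs.
    """
    by_material = Counter(material for _, material in renderers)
    return len(by_material), by_material


# Hypothetical scene: six props using three different materials.
scene = [
    ("crate_a", "wood"), ("crate_b", "wood"),
    ("barrel", "metal"), ("fence", "metal"),
    ("rock_a", "stone"), ("rock_b", "stone"),
]
batches, usage = estimate_batches(scene)
print(len(scene), batches)  # 6 3

# If all six props were moved onto one shared atlas material:
atlased = [(name, "props_atlas") for name, _ in scene]
print(estimate_batches(atlased)[0])  # 1
```

Fewer distinct materials raises the ceiling on how many objects can share a draw call, which is why reducing materials and objects tends to go hand in hand.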