I'm Max, a 3D artist from France. I do animation for video games. This is my more serious blog. The non-serious one is over here (it's where I reblog all kinds of stuff I like).


A quick look at what YouTube's "1080p Premium" option is actually made of

What's the deal with this quality option? How much better is it? What are the nitty-gritty details? Google Search is worthless nowadays, and a topic as technical as video compression is unfortunately rife with misinformation online.

I had a look around to see if any publications covered this subject in any way that was more thorough than a copy-paste and rewording of YouTube's press release, but the answer was unfortunately no.

So I looked into it myself to satisfy my curiosity, and I figured I might as well write a post about my findings, comparison GIFs, etc. for anyone else who's curious about this.

Quick summary of things so far: YouTube has a lot of different formats available for each video. They combine all these different factors:

Resolution (144, 240, 360, 480, 720, 1080, 1440, 2160, 4320)

Video codec (H.264/AVC, VP9, AV1)

Audio codec (AAC & Opus in bitrates ranging from 32 to ~128k. Yes, it says 160 up there, but that's a lie. Also, some music videos have 320k, if you have Premium...)

Container (MP4, WebM + whether DASH can be used)

Different variants of these for live streams, 360° videos, HDR, high framerate...

Here's everything that's available for a video on Last Week Tonight's channel.

I believe that the non-DASH variants used to be encoded and stored differently, but nowadays it seems that they are pieced together on-the-fly for the tiny minority of clients that don't support DASH.

In fact, 1080p Premium shows up under two different format IDs here, 356 & 616. The files come out within a few hundred KiBs of each other... and that's because only the container changes. The video stream inside is identical, but 356 is WebM, while 616 is MP4.

⚠ — Note that the file sizes and bitrates written by this tool are mostly incorrect, and only by downloading the whole file can you actually assess its properties.

YouTube has published recommended encoding settings, but their recommended bitrate levels are, in my opinion, exceedingly low, and you should probably multiply all these numbers by at least 5.

What bitrate does YouTube re-encode your videos at?

YouTube hasn't targeted specific bitrates in years; they target perceptual quality levels. Much like how you might choose to save a JPEG image at quality level 80 or 95, YouTube tries to reach a specific perceptual quality level.

This is a form of constrained CRF encoding. However, YouTube applies a lot of special sauce magic, to make bad quality input (think 2008 camcorders) easier to transcode. This was roughly described in some YouTube Engineering blog post, but because they don't care about link rot, and — again — because Google Search has become worthless, I'm unable to find that article again.

So, short answer: it depends. Their system will use whatever bitrate it deems necessary. One thing is for certain: the level of quality they get for the bitrate they put in is admirably high. You've heard it before: video is hard, it's expensive, computationally prohibitive, but they're making it work. For free! (Says the guy paying for Premium)

There's a pretty funny video out there called "kill the encoder" which, adversarially, pushes YouTube's encoders as much as it can. It's reaching staggeringly high bitrates: 20 Mbps at 1080p60, 100 Mbps at 2160p60.

The point I'm getting to here is that you can't just say "1080p is usually 2 Mbps, and 1080p Premium is 4 Mbps", because it depends entirely on the contents of the video.

Comparisons between 1080p and 1080p Premium

⚠ — One difficulty of showcasing differences is that Tumblr might be serving you GIFs shown here in a lossy recompressed version. If you suspect this is happening, you can view the original files by using right-click > open in new tab, and replacing "gifv" in the URL with "gif". I've also applied a bit of sharpening in Photoshop to comparison GIFs, so that differences can be seen more easily.

Let's get started with our first example. This is a very long "talking head" style video, with plain, flat backgrounds. This sort of visual content can go incredibly low, especially at lower resolutions.

youtube

Normal: 1,121 kbps average / 0.022 bits per pixel

Premium: 1,921 kbps average / 0.037 bits per pixel (+71%)
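For reference, the "bits per pixel" figures quoted in these comparisons are just the average bitrate divided by the number of pixels shown per second. Here is a minimal Python sketch of that arithmetic. The 1920x1080 frame size and the 25 fps frame rate are assumptions (they are what reproduces the numbers above), and the function names are made up for illustration.

```python
def bits_per_pixel(kbps: float, width: int, height: int, fps: float) -> float:
    """Average number of bits spent on each pixel of each frame."""
    return (kbps * 1000) / (width * height * fps)


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100


# Assumed: 1920x1080 at 25 fps (not stated in the post, inferred from the figures)
normal = bits_per_pixel(1121, 1920, 1080, 25)
premium = bits_per_pixel(1921, 1920, 1080, 25)

print(f"Normal:  {normal:.3f} bits per pixel")   # 0.022
print(f"Premium: {premium:.3f} bits per pixel")  # 0.037
print(f"Premium vs. normal: {percent_change(1121, 1921):+.0f}%")  # +71%
```

Bits per pixel is handy because it lets you compare encodes across resolutions and frame rates, which raw kbps figures don't.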

The visual difference here is minimal. I tried to find a flattering point of comparison, but came up short. This may be because this channel's videos are usually cut from recorded livestreams.

youtube

Normal: 1,402 kbps average / 0.027 bits per pixel

Premium: 2,817 kbps average / 0.054 bits per pixel (+101%)

Let's look closer at a frame 17 seconds in.

First, Mark Cooper-Jones's face:

Note the much improved detail on the skin and especially flat areas of the shirt (the collar sew line partially disappeared in standard 1080p). Some of the wood grain also reappears. YouTube is very good at compressing the hell out of anything that is "flat enough".

As for Jay Foreman, note that the motion blur on the hand looks much cleaner, and so does the general detail on his shirt and beard.

youtube

Last Week Tonight's uploads are always available in AV1, and this reveals an interesting thing about 1080p Premium: it's always in VP9, and never in AV1.

Normal AV1: 936 kbps average / 0.015 bits per pixel (-24%)

Normal VP9: 1,165 kbps average / 0.018 bits per pixel

Premium: 1,755 kbps average / 0.028 bits per pixel (+87%)

youtube

"VU" is a daily 6-minute show which weaponizes the Kuleshov effect to give a new meaning to the flow of images seen in the last 24 hours of French television, through the power of editing. (Epilepsy warning)

Normal: 1,132 kbps average / 0.022 bits per pixel

Premium: 2,745 kbps average / 0.053 bits per pixel (+142%)

Looking at 2m10s in for images from boxing championships, we see, again, more of a general cleaner image than a stark difference. The tattoos are much more defined, so is the skin, the man's hair, and the background man's eyes.

Shortcomings of these comparisons

Comparing two encodes of a video from still images is always going to be flawed, because there's more to video than still images; there's also how motion looks. And much like how you can see that these still images generally look a lot cleaner with "Premium" encodes, motion also benefits strongly. This is not something I can really showcase here, though.

In conclusion & thoughts on YouTube's visual quality

To answer our original question, which was "how much does 1080p Premium actually improve video quality?", the answer is "it doubles bitrate on average, and provides a much cleaner-looking image, but not to the degree that it's impressive or looking anything like the original file". I think they could get there with 3x or 4x the bitrate, but who knows?

This is not a massive upgrade in quality, but it does feel noticeably nicer to my eyes, especially in motion. However, I might have more of a discerning eye in these matters, and this is much more noticeable on a big desktop monitor, rather than a 6-inch phone screen. I suspect that YouTube's encodes are perceptually optimized for the platform with the most eyeballs: small, high-DPI screens.

One thing that I find regrettable is that this improvement is solely limited to some videos that are uploaded in 1080p. Although I heavily suspect it, I don't know for certain whether YouTube has reencoded old videos in such a way that there is massive generational loss, but they do seem to look much poorer today than they did 10 years ago, especially those that top out at 240p. I would love for "Premium" to extend to each video's top resolution as long as they are 1080p and under. Give us 480p and 720p Premium, at the very least.

There are many, many, many things you can criticize YouTube for, but their ability to ingest hundreds of hours of video every minute, while maintaining a quality-to-bitrate efficiency ratio that is this high, for free, is, in my opinion, one of their strongest engineering feats. Their encoders do have a tendency to optimize non-ideal video a bit too aggressively, and I will maintain that to get the most out of YouTube, you should upload a file that is as close to pristine as possible... and a bit of sharpening at the source wouldn't hurt either 😃


Adventures in MMS video in 2023: "600 kilobytes ought to be enough for anybody"

MMS is a fairly antiquated thing, now that we have so many other (and better) options to share small video snippets with our friends and families. It's from 2002, the size limit is outright anemic, and the default codecs used for sharing video are H.263 + AMR narrowband.

So MMS video looks (and sounds) like this.

It's... not good! Can I get something better out of those 600 kilobytes, entirely from my Android smartphone?

As it turns out, yes, I can! Technology has gotten a lot better in the past 21 years, and by using modern multimedia codecs, we can get some very interesting results.

The video clip above was recorded straight from the camera within the "Messages" app on my Galaxy S20+. By taking a look at the 3gpp file that has been spat out, I can see the following characteristics:

Overall bitrate of 101 kbps

H.263 baseline, 176x144, 86.5 kbps, 15 fps (= 0.23 bits/pixel)

AMR narrowband audio, 12.8 kbps, 8 kHz mono

Terribly antiquated, to an almost endearing point.

Note that if you feed an existing video to the Messages app, it'll ask you to trim it, and it'll be encoded slightly differently:

Overall bitrate of 260 kbps

H.263 baseline, 176x144, 227 kbps, 10 fps (= 0.89 bits/pixel)

AAC-LC, 32 kbps, 48 kHz mono (but effectively ~10-12 kHz)

So, before we get any further, here are two hard constraints:

600 kilobytes... allegedly. This may change depending on your carrier. For example, many online sources said that my carrier's limit was 300. Some may go as low as 200 or 100. I don't know how you can find this out for yourself; the "access point" settings don't ask you to specify the size, so I assume the carrier server communicates this to the phone somehow.

The container must be 3GP or MP4. I would have loved to try WebM files, but the Messages app refused to send any of them.

With that in mind, the obvious codec candidates are:

For video: H.264/AVC, H.265/HEVC, and AV1

For audio: AAC or Opus

I will not be presenting any results for H.264 because it's so far behind the two others that it might as well not be considered an option. Likewise, everything supports Opus, so I didn't even try AAC.

An additional problem to throw into the mix: Apple phones don't support AV1 yet. No one that I communicate with using SMS/MMS uses an iPhone, so I couldn't try whether any of this worked there.

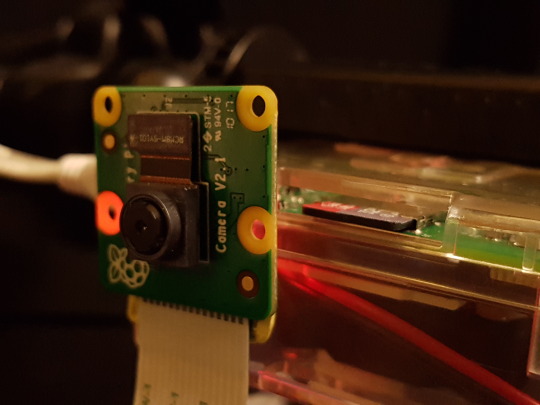

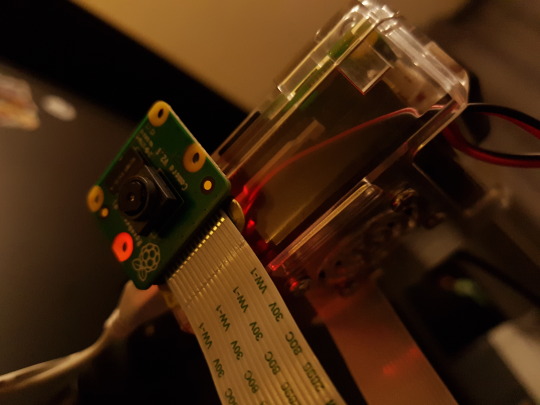

How are we going to encode this straight on our phones?

FFmpeg, of course. 😇

This means having to write a little bit of command line, but the interface of this specific app makes it easy to store presets.

Wait, I don't want to do any of that crap

If you want to convert an existing video to fit into the MMS limit, this is probably as good as it gets for now. I looked around for easy-to-use transcoding apps, but none fit the bill. That said, if you want to shoot "straight to MMS but not H.263", one thing you can try is using a third-party camera app that will let you set the appropriate parameters.

OpenCamera lets me set the video codec to HEVC, the bitrate to 200 kbps (100 is unusable), and the resolution to 256x144 (176x144 is also unusable)... you can't set the audio codec, but amusingly, it switches to 12 kbps 8 kHz mono AAC-LC at these two resolutions anyway! There must be something hardcoded somewhere...

This means 20 seconds maximum, which is not much, and it doesn't look great, but besides that reduced length, it's still better than the built-in MMS camera.

There may be better camera apps out there more suited to this purpose, but I haven't looked more into them. This may be worth coming back to, if/when phones get AV1 hardware encoders.

Let's transcode

So without further ado, I'll walk you through the command line.

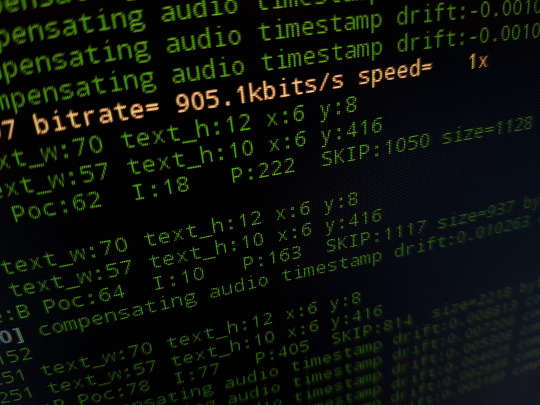

-c:v libaom-av1 -b:v 128k -g 150 -vf scale=144:-4 -cpu-used 4
-c:a libopus -b:a 32k -ar 24000 -ac 1
-t 30 -ss 0:00 -r 30

For the sake of readability, I have divided this into 3 lines, but in the app, everything will be on one line.

The first line is for video, the second one is for audio, and the third one is for... let's call it "output".

-c:v libaom-av1 — selects the AV1 encoder for video

-b:v 128k — video bitrate! this is going to be your main "knob" for staying within the maximum file size.

-g 150 — this sets a maximum keyframe interval. The default is practically unlimited which sucks for seeking, but I believe it also increases encoding complexity, so it's probably better to set it to something smaller. This is in frames, so 150 frames here, at 30 per second, would mean 5 seconds.

-vf scale=144:-4 — resize the video to a lower resolution! This is width and height. So right now, this means "set width to 144 & height to whatever matches the aspect ratio, but set that to the nearest multiple of 4" (because most video codecs like that better, and I assume these do as well)

-cpu-used 4 — the equivalent of "preset" in x264/x265. 8 is fastest, 4 is middle-of-the-road, 3 & 2 get much slower, and 1 is the absolute maximum. The lower the number, the longer the video will take to encode. There's a chart over there of quality vs. time in presets, and the conclusion is to stick with 4, unless you want absolute speed, in which case you could go with 8 or 6.

-c:a libopus — sets the Opus encoder for audio. (there's another one that's just "opus", but it's worse. This one is the official one.)

-b:a 32k — audio bitrate! And yes, 32k is very much usable... that's the magic of Opus. You could go down to 16 for voice only.

-ar 24000 — sets the audio sample rate to 24 kHz. Not sure if that's super useful since HE-AAC and Opus are smart with how they scale down, but I figured I might as well.

-ac 1 — set the number of audio channels... to just one! So, downmix to mono. With one less audio channel but the same amount of bitrate, the resulting audio will sound slightly better.

-t 30 — only encode 30 seconds

-ss 0:00 — encode starting at 0:00 into the input file. This setting, in combination with "-t", lets you trim the input video. For example: "-t 20 -ss 0:15"

-r 30 — sets the video framerate. Yeah, this one is all the way at the end out of habit (the behaviour is kind of inconsistent if it's placed earlier on). You don't need to have this one in, but it's useful for going down from 60 to 30, or 30 to 15.
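To make the "scale=144:-4" behaviour a bit more concrete, here is a small Python sketch of the arithmetic: keep the aspect ratio, then snap the height to the nearest multiple of 4. This is only an approximation of what the filter does, not FFmpeg's actual code, and the source dimensions (a 1080x1920 portrait clip and a 1920x1080 landscape clip) are assumptions for the sake of the example.

```python
def scaled_height(target_width: int, src_width: int, src_height: int,
                  multiple: int = 4) -> int:
    """Height that keeps the aspect ratio, snapped to the nearest multiple."""
    exact = target_width * src_height / src_width
    # int(x + 0.5) rounds half up; never return less than one multiple
    return max(multiple, int(exact / multiple + 0.5) * multiple)


# Assumed sources: 1080x1920 (portrait) and 1920x1080 (landscape)
print(scaled_height(144, 1080, 1920))  # 256
print(scaled_height(240, 1080, 1920))  # 428
print(scaled_height(360, 1920, 1080))  # 204
```

Under those assumptions, a width of 240 gives 240x428 and a width of 360 gives 360x204, which is why some of the resolutions in the samples further down look slightly odd.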

To use H.265/HEVC, replace "libaom-av1" with "libx265", and either delete "-cpu-used 4" outright or replace it with "-preset medium" (which you can then change to "slow", "veryslow", "faster", etc.)
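Since "-b:v" is the main knob for staying under the size limit, it helps to see the budget arithmetic written out. Here is a rough Python sketch. It assumes that 1 kilobyte = 1000 bytes, that container overhead is negligible, and that the encoder hits its target bitrates exactly. None of these is strictly true, so leave yourself a little margin. The function names are made up for illustration.

```python
def video_bitrate_budget(size_limit_kb: float, duration_s: float,
                         audio_kbps: float) -> float:
    """Video bitrate (kbps) left over once the audio is accounted for."""
    total_kbps = size_limit_kb * 8 / duration_s
    return total_kbps - audio_kbps


def max_duration(size_limit_kb: float, video_kbps: float,
                 audio_kbps: float) -> float:
    """Longest clip (seconds) that fits in the limit at these bitrates."""
    return size_limit_kb * 8 / (video_kbps + audio_kbps)


# 600 kB, 30 seconds, 32 kbps of Opus: what's left for video?
print(video_bitrate_budget(600, 30, 32))     # 128.0
# 600 kB at 200 kbps video + 12 kbps audio: how long can the clip be?
print(round(max_duration(600, 200, 12), 1))  # 22.6
```

If your carrier's limit is 300 kilobytes instead, the same 30 seconds would leave only 48 kbps for video, so you'd want to shorten the clip or lower the resolution and framerate.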

My findings

Broadly speaking, AV1 is far ahead of x265 for this use case.

This is going to sound terribly unscientific, and I'm sorry in advance for using this kind of vague language, because I don't have a good grasp of the internals of either codec... but AV1 has its "blocks" being able to transition much more smoothly into one another, and has much smoother/better "tracking" of those blocks across the frame, whereas x265 has a lot more "jumpiness" and ghosting in these blocks.

Even AV1's fastest preset beats x265's slowest preset hands down on quality, but AV1 remains far slower to encode. For this reason, you should consider x265 (medium) to be your "fast" option.

Here are some sample videos! Unfortunately, external MP4 files will not embed on Tumblr, so I must resort to using regular links. You should probably zoom way in (CTRL+scrollwheel up) if you open these in a new tab on desktop.

The sample clip I've chosen is a little bit challenging since there's a lot of sharp stuff (tall grass, concrete, cat fur), and while it's not the worst-case scenario, it's probably somewhere close to it.

Here's my first comparison. 240x428, 30 fps, medium preset:

x265 took 1:27

AV1 took 3:15

So you can get 35 seconds of fairly decent 240p video in just 600 kilobytes. That's pretty cool! But we can try other things.

For example, what about 144p, like the original MMS?

x265 medium took 1:21

AV1 fast took 2:31

Sure, there aren't many pixels, but they are surprisingly clean... at least with AV1, because the stability in the x265 clip isn't good.

Now, what if we followed in the original MMS video's footsteps, and lowered the framerate? This would let us increase the resolution, and that's where AV1 really excels: resolution scalability...

Here's AV1 fast at 15 fps 360p, which took 3:05 to encode.

It's getting a bit messier for sure, but it's still surprisingly high quality given how much is getting crammed in so little.

But can this approach be pushed even harder? Let's take a look at 480p set to a mere 10 frames per second. This is very low, but this is the same framerate the original MMS video was using.

Here's AV1 fast, 480p @ 10 fps, 4:01 to encode.

We are definitely on the edge of breaking down now. It's possible there's too much overhead at that resolution for this bitrate to be worth it. But what if we use -cpu-used 4 (the "medium" preset)? That looks really good now, but that took 9:10 to encode...

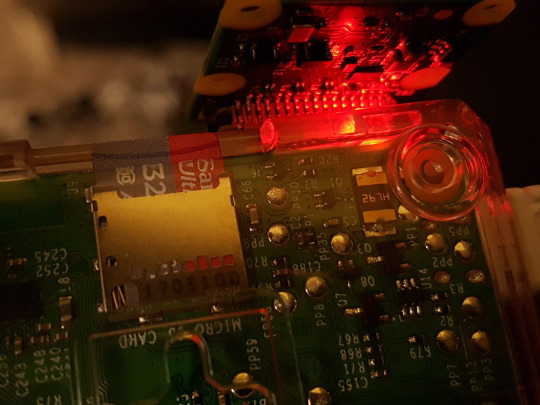

Wait, is this even going to work?

I have to manually "Share" the file from my file explorer app in order to be able to send it (because the gallery picker won't see it otherwise), but once that's done, it is sent...

... but is it getting received properly?

A core feature of MMS is that there's a server that sits in the middle of all exchanges, getting ready to transcode any media passing through if it thinks that the receiving phone won't be able to play it back. This was very useful back in the day, if you had a brand-new high-tech 256-color phone capable of taking pictures, but the receiver was still rockin' a monochrome Nokia 3310. Rather than receiving a file they wouldn't be able to display, they would receive a link that they could then manually type on their computer.

In my case, having sent AV1+Opus .mp4 files to other recent Samsung smartphones, I can tell you that they received the files verbatim. But this may not be the case with every smartphone, and most importantly, this may vary based on carrier...

Either way, it's really cool to see how much you can get out of AV1 and Opus these days.

Finally, here are two last video samples in conditions that are a bit more like "real world footage".

Sea lions fighting in the San Francisco harbor (512x288, medium, 8:51)

Boat to Alcatraz (360x204, medium, 8:24)

Why though

Because I can

and because I think it's kind of cool on principle


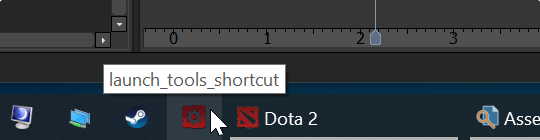

How to automate downloading the CSV of your Google Sheets spreadsheet as a one-click action pinned to your Windows taskbar

Howdy folks, I’m currently working on a project (under NDA), and the vast majority of my time is spent in a giant spreadsheet which feeds the game vast quantities of data. Every time we make a change, we have to do this:

Go click the File menu

Go down to “Download...” and wait for the CSV option to show up

Wait a couple seconds while the Google servers process the request

Browse to the appropriate directory if the File Explorer that just showed up isn’t there

Double-click on the existing CSV file

Agree to overwrite

That gets tiresome very quickly, and takes 10 seconds every time. How can we turn that into a one-click action? Preferably pinned to the Windows taskbar?

The idea is to use cURL (which you have already, if you use Windows 10 1803 or newer), along with a couple of other tricks.

IMPORTANT: note that this is assuming that the spreadsheet’s privacy setting is set to “accessible by anyone”, meaning that it would have a public (or “unlisted”) URL. If your spreadsheet needs authentication, you’ll need to pass along parameters to cURL or Powershell... but I don’t know how to do that, so you’ll have to do your own research there. (Good luck!)

STEP ONE: finding the URL that lets you fetch the CSV

So, I found out that there’s some endpoint that is supposed to let you do this “officially”, but when I tried it, it returned a CSV file that was formatted completely differently than the one which the File > Download option provides. Every cell was wrapped in quotes, and the lines were... oddly mixed together... so the file was unusable, because the game I’m working on was expecting something else.

Instead, we’re gonna take a look at the request sent by your browser when you ask for a download manually. In this example, I’ll be using Chrome.

In your sheet, open the developer console using F12. Open the “Network” tab. Then go request a download. You’ll see a bunch of new requests pop up. You’re looking for one like this.

Right-click on that, Copy > Copy link address. Boom, you’ve got your URL.

IMPORTANT: note that, when you download a CSV, you are downloading only ONE sheet of your entire document. If you have several sheets inside one document, each sheet will have its own URL. When you ask Google Sheets to download a .CSV, it will download the sheet that is currently open. So... repeat this first step to find out the URL of every sheet you wish to download!

STEP TWO: creating the .bat file

Now, create a .bat file. I’ll be doing it on the Desktop for this example. Here’s the command you’ve got to put in there:

curl -L --url "your spreadsheet URL goes here" --output "C:\(path to your folder)\(filename).csv"

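Mind the quotes! Yes, even around the URL, even though it’s one solid block without spaces! The URL will most likely contain & characters, which the Windows command prompt treats as command separators. Without the quotes, the command gets cut off at the first &, and cURL ends up dumping the downloaded data into the console window instead of passing it along nicely to whichever file it’s meant to go to.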

However, if you are a Powershell Person, here’s how to get started:

Invoke-WebRequest "google docs url goes here" -OutFile "C:\(path to your folder)\(filename).csv"

That said, we’re going to continue doing it with a batch file here. Save your .bat and execute it. You should see cURL pop up for a couple seconds, and the CSV will be retrieved! (If that doesn’t happen... well... you’ll have to troubleshoot that on your own. Sorry.)

If you want to download multiple sheets, now’s the time to stack multiple commands too! 😉
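If you'd rather not stack cURL commands, the same idea can be sketched in Python using only the standard library. This is a rough equivalent under the same assumption as above (the sheet is publicly accessible). The function name, the sheet names and the output folder are all hypothetical placeholders.

```python
import shutil
import urllib.request
from pathlib import Path


def download_sheets(sheets: dict[str, str], out_dir: str) -> list[Path]:
    """Download each URL to <out_dir>/<name>.csv, overwriting existing files."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for name, url in sheets.items():
        target = out / f"{name}.csv"
        # urlopen follows HTTP redirects by default, much like curl -L
        with urllib.request.urlopen(url) as response, open(target, "wb") as f:
            shutil.copyfileobj(response, f)
        written.append(target)
    return written


# Hypothetical usage: one entry per sheet, each with its own URL from step one.
# download_sheets(
#     {"items": "your first sheet URL goes here",
#      "enemies": "your second sheet URL goes here"},
#     r"C:\(path to your folder)",
# )
```

You would still launch it from a .bat file (one line calling Python on the script) so that the taskbar shortcut in the next step works the same way.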

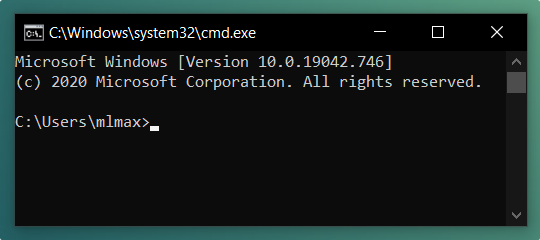

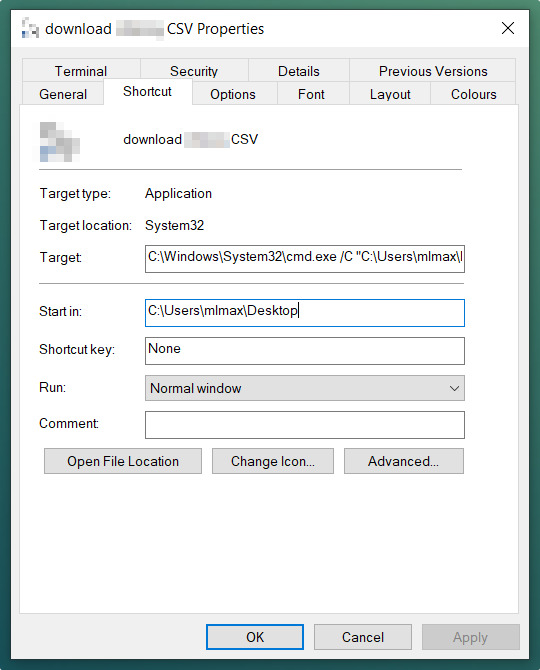

STEP THREE: pinning to the Windows taskbar

Windows 10 only lets you pin shortcuts to programs. Not shortcuts to files. Therefore, we need to create a shortcut that will open cmd.exe and point it at our .bat file. And that’s easy!

Right-click your .bat file and select Create Shortcut.

Before you do anything else, right-click your .bat file again, hold SHIFT, and select “Copy as path” (a very useful feature! but hidden.)

Right-click your shortcut > Properties > go into the “Shortcut” tab

Replace the Target field by: C:\Windows\System32\cmd.exe /C

Then hit CTRL+V after that to paste the path you copied before

The Target field should now look like this:

C:\Windows\System32\cmd.exe /C "C:\(path)\(filename).bat"

Make sure the “Start in” field is the folder your .bat is in.

Bonus style points: hit “Change Icon” and go pick an icon you like from any executable on your system (such as the game you’re working on)

Now, you can drag-and-drop that shortcut onto your Windows taskbar! And you can keep your local CSVs updated in just one click! 🙌

One last warning: if you are working live, making changes, don’t hit your download button TOO fast. If you look at the top of the browser, next to your document name, the little cloud icon should have “all changes saved” next to it. If you’re working on a particularly large spreadsheet (say, more than 10k rows), it may take a couple of seconds for changes to finish saving after the moment you do something! Keep an eye on it when you go hit your taskbar button.

Hope this helped!

Enjoy! 🙂


2019 & 2020

Hello everyone! So yeah, this yearly blog post is about three... four months late... it covers two years now.

I did have a lot of things written last year, last time, but the more things have changed, the more I’ve realized that a lot of things I talked about on here... were because I lacked enough of a social life to want to open up on here.

In a less awkwardly-phrased way, what I’m saying is, I was coping.

Not an easy thing to admit to in public by any means, but I reckon it’s the truth. Over the past two years, I’ve made more of an effort to build better & healthier friendships, dial back my social media usage a bit (number 1 coping strategy), not tie all my friendships to games I play, especially Dota (number 2 coping strategy), so that I could be more emotionally healthy overall.

Pictured: me looking a whole lot like @dril on the outside, although not so much on the inside. (Photo by my lovely partner.)

To some degree, I believe it’s important to be able to talk about yourself a bit more openly in a way that is generally not encouraged nor made easy on other social networks (looking at you, Twitter). I know that 2010-me would be scared to approach 2020-me; and it’s my hope that what I am writing here would not only help him with that, but also help him become less of an insecure dweeb faster. 😉

Not that recent accomplishments have made me any less professionally anxious. Sometimes the impostor syndrome just morphs into... something else.

Anyway, what I’m getting at is, the first reason it took me until this year to finish last year’s post is because, with my shift in perspective, and these realizations about myself, I do want to keep a lot more things private... or rather, it’s that I don’t feel the need to share them anymore? And that made figuring out what to write a fair bit harder.

The other reason I didn’t write sooner is because, in 2018, I wrote my “year in review” post right before I became able to talk about my then-latest cool thing (my work on Valve’s 2018 True Sight documentary). So I then knew I’d have to bring it up in the 2019 post. But then, I was asked to work on the 2019 True Sight documentary, and I knew it was going to air in late January 2020, so I was like, “okay, well, whatever, I’ll just write this yearly recap after that, so I don’t miss the coach this time”. So I just ended up delaying it again until I was like... “okay, whatever, I’ll just do both 2019 and 2020 in a single post.”

I think I can say I’ve had the privilege of a pretty good 2019, all things considered. And also of a decent 2020, given the circumstances. Overall, 2019 was a year of professional fulfillment; here’s a photo taken of me while I was managing the augmented reality system at The International 2019! (The $35 million Dota 2 tournament that was held, that year, in Shanghai.)

If I’d shown this to myself 10 years ago it would’ve blown my mind, so I guess things aren’t all that bad...!

I’ve brought up two health topics in these posts before: weight & sleep.

As for the first, the situation is still stable. If it is improving, it is doing so at a snail’s pace. But quite frankly, I haven’t put enough effort into it overall. Even though I know my diet is way better than it was five or six years ago, I’ve only just really caught up with the “how it should have been the entire time” stage. It is a milestone... but not necessarily an impressive one. Learning to cook better things for myself has been very rewarding and fulfilling, though. It’s definitely what I’d recommend if you need to find a place to start.

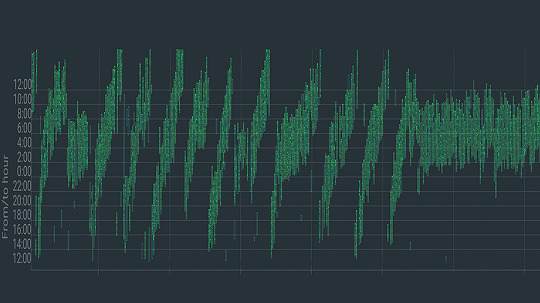

As for sleep, throughout 2019, I continued living 25-hour days for the most part. There were a few weeks during which I slowed down the process, but it continued on going. Then, in late December of 2019, motivated by the knowledge that sleep is such a foundational pillar of your health, I figured I really needed to take things seriously, and I managed to go on a three month streak of mostly-stable sleep! (See the data above.)

Part of what helped was deliberately no longer using my desktop computer once it got too late in the day, avoiding Dota at the end of the day as much as possible, and anything exciting for that matter... and, as much as that sounds like the worst possible stereotype, trying to “listen to my body” and recognizing when I was letting stress and anxiety build up inside me, and taking a break or trying to relax.

Also, a pill of melatonin before going to bed; but even though it’s allegedly not a problem to take melatonin, I figured I should try to rely on it as little as possible.

Unfortunately, that “good sleep” streak was abruptly stopped by a flu-like illness... it might have been Covid-19. The symptoms somewhat matched up, but I was lucky: they were very mild. I fully recovered in just over a week. I coughed a bit, but not that much. If it really was that disease, then I got very lucky.

(Pictured: another photo by my lovely SO, somewhere in Auvergne.)

My sleep continued to drift back to its 25-hour rhythm, and I only started resuming these efforts towards the fall... mostly because living during the night felt like a better option with the summer heat (no AC here). I thought about doing that the other way (getting up at 3am instead of going to bed at 7am), and while it’d make more sense temperature-wise, that would have kept me awake when there were practically no people online, and I was trying to have a better social life then, even if it had to be purely online due to the coronavirus, so... yeah.

I’ve been working from home since 2012! I also lived alone for a number of years since then. For the most part, it hasn’t been a great thing for my mental health. Having had a taste of what being in an office was like thanks to a couple weeks in the Valve offices, I had the goal of beginning to apply at a few places here and there in March/April. Then the pandemic hit. I wanted 2020 to be the year in which I’d finally stop being fully remote, but those plans are now dead in the water.

Now, at the end of the year, I don’t really know if I want to apply at any places. There’s a small handful of studios whose work really resonates with me, creatively speaking, and whose working conditions seem to be alright, at least from what I hear... but, and I swear I’m saying this in the least braggy way possible... there’s very little that beats having been able to work on what I want, when I want, and how much I want.

This kind of freelance status can be pretty terrifying sometimes, but I’ve managed (with some luck, of course) to reach a safe balance, a point at which I’ve effectively got this luxury of being able to only really work on what I want, and never truly overwork myself (at least by the standards of most of the gaming industry). It’s a big privilege and I feel like it’d take a lot to give it up.

Besides the things I mentioned before, one thing I did that drastically improved my mental health was being introduced to a new lovely group of friends by my partner! I started playing Dungeons & Dragons with them, every weekend or so! And in the spirit of a rising tide lifting all boats, I managed to also give back to our lovely DM, by being a sort of “AM” (audio manager)... It’s been great having something to look forward to every week.

Something to look forward to... I’ve heard about the concept of “temporal anchors”. I had heard about how the reason our adult years suddenly pass by in a blur is because we now have more “time” that’s already in our brains, but now I’m more convinced that it’s because we’re going from a very structured routine, such as the one school imposes upon us, to, well... practically nothing.

I thought most of my years since 2011 have been a blur, but none have whooshed by like 2020 has, and I reckon part of that is because I’ve (obviously) gone out far far less, and most importantly there wasn’t The Big Summer Event That The International Is, the biggest yearly “temporal anchor” at my disposal. The anticipation and release of those energies made summer feel a fair bit longer... and this year, summer was very much a blur for me. In and out like the wind.

I guess besides that, I haven’t really had that much trouble with being locked down. I had years of training for that, after all. Doesn’t feel like I can complain. 😛

(Pictured: trip to Chicago in January of 2019... right when the polar vortex hit!)

Work was good in 2019, and sparser in 2020. Working with Valve again after the 2018 True Sight was a very exciting opportunity. At the time, in February of 2019, I was out with my partner on little holiday trips around my region, and, after night fell, on the way back, we decided to stop in a wide open field, on a tiny countryside path, away from the cities, to try and do some star-gazing, without light pollution getting in the way.

And it’s there and then that I received their message, while looking at the stars with my SO! The timing and location turned that into a very vivid memory...

I then got to spend a couple weeks in their offices in late April / early May. I was able to bring my partner along with me to Washington State, and we did some sightseeing on the weekends.

(Pictured: part of a weekend trip in Washington. This was a dried up lakebed.)

After that, I worked on the Void Spirit trailer in the lead to The International. In August, those couple weeks in Shanghai were intense. Having peeked behind the curtain and seen everything that goes into production really does give me a much deeper appreciation for all the work that goes unseen.

Then after that, in late 2019, there was my work on the yearly True Sight documentary, for the second time. In 2018, I’d been tasked with making just two animated sequences, and I was very nervous since that was my first time working directly with Valve; my work then was fairly “sober”, for lack of a better term.

(Pictured: view from my hotel room in Shanghai.)

For the 2019 edition, I had double the amount of sequences on my plate, and they were very trusting of me, which was very reassuring. I got to be more technically ambitious, I let my style shine through (you know... if it’s got all these gratuitous light beams, etc.), and it was real fun to work on.

At the premiere in Berlin, I was sitting in the middle of the room (in fact, you could spot me in the pre-show broadcast behind SirActionSlacks; unfortunately I had forgotten to bring textures for my shirt). Being in that spot when my shots started playing, and hearing people laughing and cheering at them... that’s an unforgettable memory. The last time I had experienced something like that was having my first Dota short film played at KeyArena in 2015, the laughter of the crowd echoing all around me... I was shaking in my seat. Just remembering it gets my heart pumping, man. It’s a really unique feeling.

So I’m pretty happy with how that work came out. I came out of it having learned quite a few new tricks too, born out of necessity from my technical ambitions. Stuff I intend to put to use again. I’m really glad that the team I worked with at Valve was so kind and great to work with. After the premiere, I received a few more compliments from them... and I did reply, “careful! You might give me enough confidence to apply!”, to which one of them replied, “you totally should, man.” But I still haven’t because I’m a massive idiot, haha. Well, I still haven’t because I don’t think I’m well-rounded enough yet. And also because, like I alluded to before, I think I’m in a pretty good situation as it is.

Those weren’t the first encouragements I had received from them, either; there had been a couple people from the Dota team who, at the end of my two week stay in the offices, while I was on my way out, told me I should try applying. But again, I didn’t apply because I’m a massive idiot.

(Pictured: view from the Valve offices.)

To be 200% frank, even though there’s been quite a few people who’ve followed my work throughout the years, comments on Reddit and YouTube, etc. who’ve all said things along the lines of “why aren’t you working for them ?”, well... it’s not something I ever really pursued. I know it’s a lot of people’s dream job, but I never saw it that way. I feel like, if it ever happened to me... sure, that could be cool! But I don’t know if it’s something I really want, or even that I should want?

And if you add “being unsure” to what I consider to be a lack of experience in certain things, well... I really don’t think I’d be a good candidate (yet?), and having seen how busy these people are on the inside, the last thing I want to do is waste their time with a bad application. That would be the most basic form of courtesy I can show to them.

Besides, Covid-19 makes applying to just about any job very hard, if not outright impossible right now. And for a while longer, I suspect.

(Pictured: the Tuilière & Sanadoire rocks.)

I’m still unhappy about the amount of “actual animation” I get to do overall since I like to work on just about every step of the process in my videos, but well. It’s getting better. One thing I am happy with though, is “solving problems”. And new challenges. Seeking the answers to them, and making myself be able to see those problems, alongside entire projects, in a more “holistic” way, that is to say, not missing the forest for the trees.

It’s hard to explain, and even just the use of the term “holistic” sounds like some kind of pompous cop-out... but looking back on how I handled projects 5 years ago vs. now, I see the differences in how I think about problems a lot. And to some extent I do have my time on Valve contracts to thank a LOT in helping me progress there.

Anyway, I’m currently working on a project that I’m very interested in & creatively fulfilled by. But it has nothing to do with animation nor Dota, for a change! There are definitely at least two other Dota short films I want to make, though. We’ll see how that goes.

Happy new year & take care y’all.

How I encode videos for YouTube and archival

Hello everyone! This post is going to describe the way in which I export and encode my video work to send it over the Internet and archive it. I’ll be talking about everything I’ve discovered over the past 10 years of research on the topic, and I’ll be mentioning some of the pitfalls to avoid falling into.

There’s a tremendous amount of misguided information out there, and while I’m not going to claim I know everything there is to know on this subject, I would like to think that I’ve spent long enough researching various issues to speak about my own little setup that I’ve got going on... it’s kind of elaborate and complex, but it works great for me.

(UPDATE 2020/12/09: added, corrected, & elaborated on a few things.)

First rule, the most golden of them all!

There should only ever be one compression step: the one YouTube does. In practice, there will be at least two, because you can’t send a mathematically-lossless file to YouTube... but you can send one that’s extremely close, and perceptually pristine.

The gist of it: none of your working files should be compressed if you can help it, and if they need to be, they should be as little as possible. (Because let’s face it, it’s pretty tricky to keep hours of game footage around in lossless form, let alone recording them as such in the first place.)

This means that any AVC files should be full (0-255) range, 4:4:4 YUV, if possible. If you use footage that’s recorded with, like, OBS, it’s theoretically possible to punch in a lossless mode for x264, and even an RGB mode, but last I checked, neither was compatible with Vegas Pro. You may have better luck with other video editors.

Make sure that the brightness levels and that the colors match what you should be seeing. This is something you should be doing at every single step of the way throughout your entire process. Always keep this in mind. Lagom.nl’s LCD calibration section has quite a few useful things you can use to make sure.

If you’re able to, set a GOP length / max keyframe range of 1 second in the encoder of your footage. Modern video codecs suck in video editors because they use all sorts of compression tricks which are great for video playback, but not so efficient with the ways video editors access and request video frames. (These formats are meant to be played forwards, and requesting frames in any other order, as NLEs do, has far-reaching implications that hurt performance.)

Setting the max keyframe range to 1 second will mildly hurt compressibility of that working footage but it will greatly limit the performance impact you’ll be putting your video editor’s decoder through.
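To get a feel for why a short keyframe range helps scrubbing, here is a small Python sketch. It is a simplified model, not how any particular editor's decoder works: it assumes keyframes land at fixed intervals, that a seek restarts decoding at the closest preceding keyframe, and it ignores B-frame reordering. The 250-frame figure is x264's default keyframe interval, used purely as a comparison point.

```python
def frames_to_decode(target_frame: int, gop_length: int) -> int:
    """Frames a decoder has to process to show target_frame after a seek.

    Simplified model (assumption): keyframes sit at every multiple of
    gop_length, decoding restarts at the closest preceding keyframe and
    runs forward. B-frame reordering and open GOPs are ignored.
    """
    return target_frame % gop_length + 1


fps = 60
one_second_gop = fps * 1   # max keyframe range of 1 second at 60 fps
long_gop = 250             # x264's default keyint, for comparison

# Worst case over the first 1000 frames for each GOP length.
worst_short = max(frames_to_decode(f, one_second_gop) for f in range(1000))
worst_long = max(frames_to_decode(f, long_gop) for f in range(1000))

print(f"1-second GOP: up to {worst_short} frames decoded per seek")
print(f"250-frame GOP: up to {worst_long} frames decoded per seek")
```

Every time the editor jumps to an arbitrary frame, that worst case is the price it may pay, which is why the cap matters more for editing than for plain playback.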

A working file is a lossless file!

I’ve been using utvideo as my lossless codec of choice. (Remember, codec means encoder/decoder.) It compresses much like FLAC or ZIP files do: losslessly. And not just perceptual losslessness, but a mathematical one: what comes in will be exactly what comes out, bit for bit.

Download it here: https://github.com/umezawatakeshi/utvideo/releases

It’s an AVI VFW codec. In this instance, VFW means Video for Windows, and it’s just the... sort of universal API that any Windows program can call. And AVI is the container, just like how MP4 and MKV are containers. MP4 as a file is not a video format, it’s a container. MPEG-4 AVC (aka H.264) is the video format specification you’re thinking of when you say “MP4”.

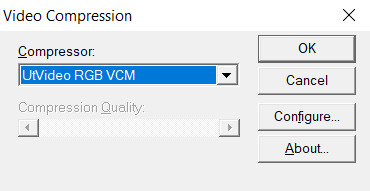

Here’s a typical AVI VFW window, you might have seen one in the wild already.

In apps that expose this setting, you can hit “configure” and set the prediction mode of utvideo to “median” to get some more efficient compression at the cost of slower decoding, but in practice this isn’t a problem.

Things to watch out for:

Any and all apps involved must support OpenDML AVIs. The original AVI spec caps files at 2GB; OpenDML is the extension that lifts that limitation. The OpenDML spec is from the mid-90s, so usually it’s not a problem, but make sure your apps support it. For example, the SFM doesn’t.

The files WILL be very large. But they won’t be as large as they’d be if you had a truly uncompressed AVI.

SSDs are recommended within the bounds of reasonability, especially NVMe ones. 1080p30 should be within reach of traditional HDDs though.

utvideo will naturally perform better on CGI content rather than real-life footage and I would not recommend it at all for real-life footage, especially since you’re gonna get that in already-compressed form anyway. Do not convert your camera’s AVC/HEVC files to utvideo, it’s pointless. (Unless you were to do it as a proxy but still, kinda weird)

If you’re feeling adventurous, try out the YUV modes! They work great for matte passes, since those are often just luma-masks, so you don’t care about chroma subsampling.
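To put the size and disk-speed warnings in the list above into perspective, here is a quick back-of-the-envelope calculation in Python. It only covers truly uncompressed 8-bit RGB (3 bytes per pixel, an assumption for illustration) and treats the legacy AVI limit as 2 GiB; utvideo files will be smaller, by an amount that depends on the content.

```python
def uncompressed_bytes_per_second(width: int, height: int, fps: int,
                                  bytes_per_pixel: int = 3) -> int:
    """Data rate of raw video; 3 bytes per pixel assumes 8-bit RGB."""
    return width * height * bytes_per_pixel * fps


AVI_LEGACY_LIMIT = 2 * 1024**3  # 2 GiB, the pre-OpenDML ceiling (assumed GiB)

rate = uncompressed_bytes_per_second(1920, 1080, 30)
seconds_until_limit = AVI_LEGACY_LIMIT / rate

print(f"Raw 1080p30 RGB: {rate / 1e6:.1f} MB/s")
print(f"A 2 GiB file holds about {seconds_until_limit:.1f} s of it")
```

In other words, without OpenDML support you would hit the ceiling after a handful of seconds of raw footage, which is why that first bullet matters.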

If you don’t care about utvideo or don’t want to do AVIs for whatever reason, you could go the way of image sequences, but you’ll then be getting the OS-level overhead that comes with having tens of thousands of files being accessed, etc.

They’re a valid option though. (Just not an efficient one in most cases.)

Some of my working files aren’t lossless...

Unfortunately we don’t all have 10 TB of storage in our computers. If you’re using compressed files as a source, make sure they get decoded properly by your video editing software. Make sure the colors, contrast, etc. match what you see in your “ground truth” player of choice. Make sure your “ground truth” player of choice really does represent the ground truth. Check with other devices if you can. You want to cross-reference to make sure.

One common thing that a lot of software screws up is BT.601 & BT.709 mixups. (It’s reds becoming a bit more orange.)
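Here is a small Python sketch of what such a mixup does to a pure red pixel. The luma coefficients are the standard BT.601 and BT.709 ones; everything else is a simplification for illustration (normalized 0-1 values, no limited-range scaling, no chroma subsampling), and the direction of the mixup, BT.601-encoded video read as BT.709, is just one assumed scenario.

```python
# (Kr, Kb) luma coefficients from the two standards.
BT601 = (0.299, 0.114)
BT709 = (0.2126, 0.0722)


def rgb_to_ycbcr(rgb, coeffs):
    """Convert normalized RGB (0-1) to Y'CbCr with the given coefficients."""
    kr, kb = coeffs
    kg = 1.0 - kr - kb
    r, g, b = rgb
    y = kr * r + kg * g + kb * b
    cb = (b - y) / (2.0 * (1.0 - kb))
    cr = (r - y) / (2.0 * (1.0 - kr))
    return y, cb, cr


def ycbcr_to_rgb(ycbcr, coeffs):
    """Inverse of rgb_to_ycbcr, clipped to the displayable 0-1 range."""
    kr, kb = coeffs
    kg = 1.0 - kr - kb
    y, cb, cr = ycbcr
    r = y + 2.0 * (1.0 - kr) * cr
    b = y + 2.0 * (1.0 - kb) * cb
    g = (y - kr * r - kb * b) / kg
    return tuple(min(1.0, max(0.0, c)) for c in (r, g, b))


pure_red = (1.0, 0.0, 0.0)
encoded = rgb_to_ycbcr(pure_red, BT601)

correct = ycbcr_to_rgb(encoded, BT601)    # same matrix both ways
mixed_up = ycbcr_to_rgb(encoded, BT709)   # decoder assumes the wrong matrix

print("matching matrices:", [round(c, 3) for c in correct])
print("601 read as 709:  ", [round(c, 3) for c in mixed_up])
```

With matching matrices the red comes back untouched; with the mismatch, the green channel creeps up, which is the "reds becoming a bit more orange" symptom.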

Ultimately you want your compressed footage to appear cohesive with your RGB footage. It should not have different ranges, different colors, etc.

For reasons that I don’t fully understand myself, 99% of AVC/H.264 video is “limited range”. That means that internally it’s actually squeezed into 16-235 as opposed to the original starting 0-255 (which is full range). And a limited range video gets decoded back to 0-255 anyway.

Sony/Magix Vegas Pro will decode limited range video properly but it will NOT expand it back to full 0-255 range, so it will appear with grayish blacks and dimmer whites. You can go into the “Levels” Effects tab to apply a preset that fixes this.
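The range expansion described above is just a linear remap. As a minimal Python sketch for 8-bit luma only (chroma uses 16-240 and is left out here), with function names that are made up for this example:

```python
def limited_to_full(value: int) -> int:
    """Expand an 8-bit limited-range (16-235) luma value to full range (0-255)."""
    expanded = (value - 16) * 255 / 219
    return int(min(255, max(0, round(expanded))))


def full_to_limited(value: int) -> int:
    """Squeeze an 8-bit full-range (0-255) luma value into 16-235."""
    return int(round(16 + value * 219 / 255))


for level in (16, 126, 235):
    print(f"limited {level:3d} -> full {limited_to_full(level):3d}")
```

When a decoder skips the first function, black stays at 16 and white stays at 235, which is exactly the grayish blacks and dimmer whites described above.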

Exporting your video.

A lot of video editors out there are going to “render” your video (that is to say, calculate and render what the frames of your video look like) and encode it at the same time with whatever’s bundled in the software.

Do not ever do this with Vegas Pro. Do not ever rely on the integrated AVC encoders of Vegas Pro. They expect full range input, and encode AVC video as if it were full range (yeah), so if you want normal looking video, you have to apply a Levels preset to squeeze it into 16-235 levels, but it’s... god, honestly, just save yourself the headache and don’t use them.

Instead, export a LOSSLESS AVI out of Vegas. (using utvideo!)

But you may be able to skip this step altogether if you use Adobe Media Encoder, or software that can interface directly with it.

Okay, what do I do with this lossless AVI?

Option 1: Adobe Media Encoder.

Premiere and AE integrate directly with Adobe Media Encoder. It’s good; it doesn’t mix up BT.601/709, for example. In this case, you won’t have to export an AVI, you should be able to export “straight from the software”.

However, the integrated AVC/HEVC encoders that Adobe has licensed (from MainConcept, I believe) aren’t at the top of their game. Even cranking up the bitrate super high won’t reach the level of pristine that you’d expect (it keeps on not really allocating bits to flatter parts of the image to make them fully clean), and they don’t expose a CRF mode (more on that later), so, technically, you could still go with something better.

But what I’m getting at is, it’s not wrong to go with AME. Just crank up the bitrate though. (Try to reach 0.3 bits per pixel.) Here’s my quick rough guideline of Adobe Media Encoder settings:

H.264/AVC (faster encode but far from the most efficient compression one can have)

Switch from Hardware to Software encoding (unless you’re really in a hurry... but if you’re gonna be using Hardware encoding you might as well switch to H.265/HEVC, see below.)

Set the profile to High (you may not be able to do this without the above)

Bitrate to... VBR 1-pass, 30mbps for 1080p, 90mbps for 4K. Set the maximum to x2. +50% to both target and max if fps = 60.

“Maximum Render Quality” doesn’t need to be ticked, this only affects scaling. Only tick it if you are changing the final resolution of the video during this encoder step (e.g. 1080p source to be encoded as 720p)

If using H.265/HEVC (smaller file size, better for using same file as archive)

Probably stick with hardware encoding due to how slow software encoding is.

Stick to Main profile & Main tier.

If hardware: quality: Highest (slowest)

If software: quality: Higher.

4K: set Level to 5.2, 60mbps

1440p: set Level to 5.1, 40mbps

1080p: keep Level to 5.0, 25mbps

If 60fps instead of 24/30: +50% to bitrate. In which case you might have to go up to Level 6.2, but this might cause local playback issues; more on “Levels” way further down the post.

Keep in mind however that hardware encoders are far less efficient in terms of compression, but boy howdy are they super fast. This is why they become kind of worth it when it comes to H.265/HEVC. Still won’t produce the kind of super pristine result I’d want, but acceptable for the vast majority of YouTube cases.
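The “bits per pixel” figure mentioned earlier is simply the bitrate divided by the number of pixels pushed per second. A small Python sketch to check the H.264 numbers from the list above against it (the function names are made up for this example):

```python
def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average number of bits the encoder can spend on each pixel of each frame."""
    return bitrate_bps / (width * height * fps)


def bitrate_for_bpp(target_bpp: float, width: int, height: int, fps: float) -> float:
    """Bitrate (in bits per second) needed to hit a given bits-per-pixel target."""
    return target_bpp * width * height * fps


print(f"1080p30 at 30 Mbps: {bits_per_pixel(30e6, 1920, 1080, 30):.2f} bpp")
print(f"4K30 at 90 Mbps:    {bits_per_pixel(90e6, 3840, 2160, 30):.2f} bpp")
print(f"0.3 bpp at 1080p30: {bitrate_for_bpp(0.3, 1920, 1080, 30) / 1e6:.1f} Mbps")
```

Both H.264 targets land above the 0.3 mark, so they leave a bit of headroom.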

Option 2: other encoding GUIs...

Find software of your choice that integrates the x264 encoder, which is state-of-the-art. (Again, x264 is one encoder for the H.264/AVC codec specification. Just making sure there’s no confusion here.)

Handbrake is one common choice, but honestly, I haven’t used it enough to vouch for it. I don’t know if the settings it exposes are giving you proper control over the whole BT601/709 mess. It has some UI/UX choices which I find really questionable too.

If you’re feeling like a command-line masochist, you could try using ffmpeg, but be ready to pore over the documentation. (I haven’t managed to find out how to do the BT.709 conversion well in there yet.)

Personally, I use MeGUI, because it runs through Avisynth (a frameserver), which allows me to do some cool preprocessing and override some of the default behaviour that other encoder interfaces would do. It empowers you to get into the nitty gritty of things, with lots of plugins and scripts you can install, like this one:

http://avisynth.nl/index.php/Dither_tools (grab it)

Once you’re in MeGUI, and it has finished updating its modules, you gotta hit CTRL+R to open the automated script creator. Select your input, hit “File Indexer” (not “One Click Encoder”), then just hit “Queue” so that Avisynth’s internal thingamajigs start indexing your AVI file. Once that’s done, you’ll be greeted with a video player and a template script.

In the script, all you need to add is this at the bottom:

dither_convert_rgb_to_yuv(matrix="709",output="YV12",mode=7)

This will perform the proper colorspace conversion, AND it does so with dithering! It’s the only software I know of which can do it with dithering!! I kid you not! Mode 7 means it’s doing it using a noise distribution that scales better and doesn’t create weird patterns when resizing the video (I would know, I’ve tried them all).
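Why does dithering matter when going down to 8 bits? Here is a toy Python illustration. To be clear, this is plain uniform random dither on a single value, not what the Dither tools plugin or its mode 7 actually does; it only shows the general principle that adding noise before rounding preserves in-between values on average instead of snapping them all to the same step.

```python
import random


def quantize(value: float, levels: int) -> float:
    """Round a 0-1 value to the nearest of `levels` evenly spaced steps."""
    step = 1.0 / (levels - 1)
    return min(1.0, max(0.0, round(value / step) * step))


def quantize_dithered(value: float, levels: int, rng: random.Random) -> float:
    """Same, but with up to half a step of random noise added before rounding."""
    step = 1.0 / (levels - 1)
    noise = rng.uniform(-0.5, 0.5) * step
    return quantize(value + noise, levels)


rng = random.Random(0)   # fixed seed so the run is repeatable
value, levels = 0.4, 4   # 0.4 sits between the steps 1/3 and 2/3

plain = quantize(value, levels)
samples = [quantize_dithered(value, levels, rng) for _ in range(10000)]
mean = sum(samples) / len(samples)

print(f"plain rounding: always {plain:.3f}")
print(f"dithered:       averages {mean:.3f} over {len(samples)} pixels")
```

On a smooth gradient, the plain version is what produces visible banding, while the dithered one trades it for fine noise.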

Your script should look like this, just 3 lines

LoadPlugin("D:\(path to megui, etc)\LSMASHSource.dll")

LWLibavVideoSource("F:\yourvideo.avi")

dither_convert_rgb_to_yuv(matrix="709",output="YV12",mode=7)

The colors WILL look messed up in the preview window but that’s normal. It’s one more example of how you should always be wary when you see an issue. Sometimes you don’t know what is misbehaving, and at which stage. Always try to troubleshoot at every step along the way, otherwise you will be chasing red herrings. Anyway...

Now, back in the main MeGUI window, we’ve got our first line complete (AviSynth script), the “Video Output” path should be autofilled, now we’re gonna touch the third line: “Encoder settings”. Make sure x264 is selected and hit “config” on the right.

Tick “show advanced settings.”

Set the encoding mode to “Const. Quality” (that’s CRF, constant rate factor). Instead of being encoded with a fixed bitrate, and then achieving variable quality with that amount of bits available, CRF instead encodes for a fixed quality, with a variable bitrate (whatever needs to be done to achieve that quality).

CRF 20 is the default, and it’s alright, but you probably want to go down to 15 if you really want to be pristine. I’m going down to 10 because I am unreasonable. (Lower is better, higher numbers mean quality is worse.)

Because we’re operating under a Constant Quality metric, CRF 15 at encoder presets “fast” vs. “slow” will produce the same perceptual quality, but at different file sizes. Slow being smaller, of course.

You probably want to be at “slow” at least, there isn’t that much point in going to “slower” or “veryslow”, but you can always do it if you have the CPU horsepower to spare.

Make sure AVC Profile is set to High. The default would be Main, but High unlocks a few more features of the spec that increase compressibility, especially at higher resolutions. (8x8 transforms & intra prediction, quantization scaling matrices, cb/cr controls, etc.)

Make sure to also select a Level. This doesn’t mean ANYTHING by itself, but thankfully the x264 config window here is smart enough to actually apply settings which are meaningful with regards to the level.

A short explanation is that different devices have different decoding capabilities. A decade ago, a mobile phone might have only supported level 3 in hardware, meaning that it could only do main profile at about 10mbps max, and if you went over that, it would either not decode the video or do it using the CPU instead of its hardware acceleration, resulting in massive battery usage. The GPU in your computer also supports a maximum level. 5.0 is a safe bet though.

If you don’t restrict the level according to what your video card supports, you might see funny things happen during playback:

It’s nothing that would actually affect YouTube (AFAIK), but still, it’s best to constrain.

Finally, head over to the “misc” tab of the x264 config panel and tick these.

If the command line preview looks like mine does (see the screenshot from a few paragraphs ago) then everything should be fine.

x264 is configured, now let’s take care of the audio.

Likewise, “Audio Input” and “Audio Output” should be prefilled if MeGUI detected an audio track in your AVI file. Just switch the audio encoder over to FLAC, hit config, crank the slider to “smallest file, slow encode” and you’re good to go. FLAC = mathematically lossless audio. Again, we want to not compress anything, or as little as possible until YouTube does its own compression job, so you might as well go with FLAC, which will equal roughly 700 to 1000kbps of audio, instead of going with 320kbps of MP3/AAC, which might be perceptually lossless, but is still compressed (bad). The added size is nothing next to the high-quality video track you’re about to pump out.

FLAC is not an audio format supported by the MP4 container, so MeGUI should have automagically changed the output to be using the MKV (Matroska) container. If it hasn’t, do it yourself.

Now, hit the “Autoencode” button in the lower right of the main window. And STOP, do not be hasty: in the new window, make sure “no target size” is selected before you do anything else. If you were to keep “file size” selected, then you would be effectively switched over to 2-pass encoding, which is another form of (bit)rate control. We don’t want that. We want CRF.

Hit queue and once it’s done processing, you should have a brand new pristine MKV file that contains lossless audio and extra clean video! Make sure to double-check that everything matches: take screenshots of the same frames in the AVI and MKV files and compare them.
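For readers who would rather script this than click through MeGUI, the x264 and audio choices described above (CRF, “slow” preset, High profile, a constrained level, FLAC in an MKV) map onto standard ffmpeg options. The Python sketch below only builds and prints the argument list; it is not the MeGUI/Avisynth pipeline from this post. In particular it does nothing about the BT.709 matrix or dithering during the RGB to YUV conversion (it just requests 4:2:0 output and leaves the conversion to ffmpeg's defaults), so treat it as a starting point under those assumptions. The file names are placeholders.

```python
import shlex


def x264_crf_args(source: str, output: str, crf: int = 15,
                  preset: str = "slow", level: str = "5.0") -> list:
    """Build an ffmpeg argument list for a CRF-mode x264 encode with FLAC audio."""
    return [
        "ffmpeg", "-i", source,
        "-c:v", "libx264",
        "-crf", str(crf),          # constant quality instead of a target bitrate
        "-preset", preset,
        "-profile:v", "high",
        "-level:v", level,
        "-pix_fmt", "yuv420p",     # 4:2:0 output; colour conversion left at ffmpeg defaults
        "-c:a", "flac",            # lossless audio, hence the MKV container
        output,
    ]


args = x264_crf_args("yourvideo.avi", "yourvideo.mkv")
print(shlex.join(args))
```

Whatever tool produces the file, the screenshot comparison against the AVI is still the step that tells you whether the colours survived.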

Now all you’ve got to do is send it to YouTube!

For archival... well, you could just go and crank up the preset to Placebo and reduce CRF a little bit, OR you could use the 2-pass “File Size” mode which will ensure that your video stream will be the exact size (give or take a couple %) you want it to be. You could also use x265 for your archival file buuuut I haven’t used it enough (on account of how slow it is) to make sure that it has no problems anywhere with the whole BT.601/709 thing. It doesn’t expose those metadata settings so who knows how other software’s going to treat those files in the future... (god forbid they get read as BT.2020)

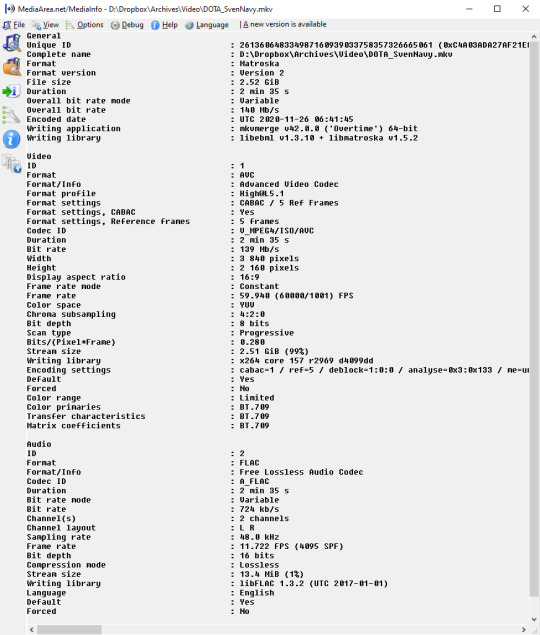

You can use Mediainfo (or any player that integrates it, like my favorite, MPC-HC) to check the metadata of the file.

Good luck out there!

And remember to always double-check the behaviour of decoders at every step of the way with your setup. 99% of the time I see people talk about YouTube messing with the contrast of their video, it’s because they weren’t aware of how quirky Vegas can be with H.264/AVC input & its integrated encoder.

Hope this helps!

The hunt for Realtek’s missing driver enhancements

I just had to switch from an X399 motherboard to a B550 model, both by ASRock, both “Taichi”. It’s a long story as to why my hands were tied, but anyway, I did it without reinstalling Windows, and it worked fine! (I don’t think it’s an important detail as far as this story is concerned, but you never know.)

There are two features that I want with my desktop audio:

Disabled jack detection: plugging in a front headphone jack does not disable rear speaker output. There are no separate audio devices in Windows, which is the most important thing to me. A lot of apps still don’t handle playback devices being changed in the middle of execution.

Loudness equalization: does exactly what it says on the tin. Very important to me for a few reasons. Because it works on a per-stream basis, it greatly enhances voice chat in games, for friends who are still too quiet even on 200% boost. If a video somewhere is too quiet, well now it’s not. And it means that I can keep my headset volume lower overall, which is healthy. (Especially as someone who suffers from non-hearing-loss-related tinnitus.)

The problem is, with the default Windows drivers, I could have #2, but not #1, because the Realtek Audio Console UWP app wouldn’t work. (You can only find this app through a link, not the Win10 store itself, for some goddamned reason!)

And with my motherboard’s drivers, I had #1, but not #2!

Now, I can’t say this with 100% certainty, but it seems like the reason is that ASRock has made their Realtek driver integrated with some Gamer™ Audio™ third-party thing called Nahimic. And in doing so, they disabled the stock enhancements, Loudness Equalization included.

But don’t bother trying to download the “generic” drivers through Realtek’s own website: they’re from 2017, and as far as I understand it, they’re not the new DCH-style drivers, they’re the old kind. DCH is a new driver system with Windows 10 that decouples a driver and its control panel, so that either can be installed & updated separately. (This is actually a pretty great thing for laptops in general, but especially for laptop graphics.)

So where could I find drivers without any kind of third-party crap?

The solution was, for me, this unofficial package:

https://github.com/pal1000/Realtek-UAD-generic

It uses other sources (like the Microsoft catalog) to fetch the actual latest universal drivers. However, I had to go download this one other tool, “Driver Store Explorer”, to force-delete a couple of things that were causing the setup script to loop.

Anyway, now I have both #1 and #2, I’m happy, but also pretty annoyed that I had to go resort to this kind of solution just to get the basic enhancements & effects working. Don’t fix what isn’t broken...

If this ever breaks I’ll probably just stick with #2 only and buy a jack splitter to fix the lack of #1. Ugh.

I hope all of this helps the next poor fool who has to fix what wasn’t broken before. Godspeed to you, traveler.

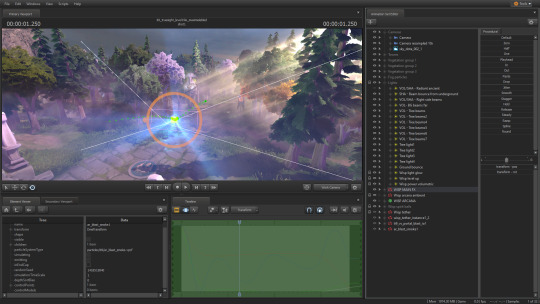

How to launch a symlinked Source 2 addon in the tools & commands to improve the SFM

I like to store a lot of my 3D work in Dropbox, for many reasons. I get an instant backup, synchronization to my laptop if my desktop computer were to suddenly die, and most importantly, a simple 30-day rollback “revision” system. It’s not source control, but it’s the closest convenience to it, with zero effort involved.

This also includes, for example, my Dota SFM addon. I have copied over the /content and /game folder hierarchies inside my Dropbox. On top of the benefits mentioned above, this allows me to launch renders of different shots in the same session easily! With some of my recent work needing to be rendered in resolutions close to 4K, it definitely isn’t a luxury.

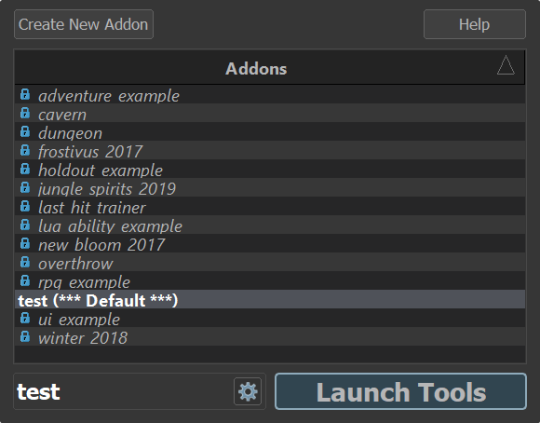

So now, of course, I can’t just launch my addon from my Dropbox. I have to create two symbolic links first — basically, “ghost folders” that pretend to be the real ones, but are pointing to where I moved them! Using these commands:

mklink /J "C:\Program Files (x86)\Steam\SteamApps\common\dota 2 beta\content\dota_addons\usermod" "D:\path\to\new\location\content"

and

mklink /J "C:\Program Files (x86)\Steam\SteamApps\common\dota 2 beta\game\dota_addons\usermod" "D:\path\to\new\location\game"
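Since the two commands only differ by the “content” and “game” branch, they are easy to generate for your own paths. A small Python sketch that prints them; it does not run anything. The helper name is made up, the paths are the placeholder ones from above, and note that `/J` makes a directory junction, which is what these commands actually create.

```python
import ntpath  # Windows path rules, regardless of the OS this runs on


def junction_commands(dota_root: str, storage_root: str, addon: str = "usermod") -> list:
    """Return the two mklink /J commands, one per addon branch."""
    commands = []
    for branch in ("content", "game"):
        link = ntpath.join(dota_root, branch, "dota_addons", addon)
        target = ntpath.join(storage_root, branch)
        commands.append(f'mklink /J "{link}" "{target}"')
    return commands


for command in junction_commands(
    r"C:\Program Files (x86)\Steam\SteamApps\common\dota 2 beta",
    r"D:\path\to\new\location",
):
    print(command)
```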

Now, there’s a problem though; somehow, symlinked addons don’t show up in the tools startup manager (dota2cfg.exe, steamtourscfg.exe, etc)

It’s my understanding that symbolic links are supposed to be transparent to these apps, so maybe they actually aren’t, or Source 2 is doing something weird... I wouldn’t know! But it’s not actually a problem.

Make a .bat file wherever you’d like, and drop this in there:

start "" "C:\Program Files (x86)\Steam\steamapps\common\dota 2 beta\game\bin\win64\dota2.exe" -addon usermod -vconsole -tools -steam -windowed -noborder -width 1920 -height 1080 -novid -d3d11 -high +r_dashboard_render_quality 0 +snd_musicvolume 0.0 +r_texturefilteringquality 5 +engine_no_focus_sleep 0 +dota_use_heightmap 0 -tools_sun_shadow_size 8192
EXIT

Of course, you’ll have to replace the paths in these lines (and the previous ones) by the paths that match what you have on your own machine.

Let me go through what each of these commands does. These tend to be very relevant to Dota 2 and may not be useful for SteamVR Home or Half-Life: Alyx.

-addon usermod is what solves our core issue. We’re not going through the launcher (dota2cfg.exe, etc.) anymore. We’re directly telling the engine to look for this addon and load it. In this case, “usermod” is my addon’s name... most people who have used the original Source 1 SFM have probably created their addon under this name 😉

-vconsole enables the nice separate console right away.

-windowed -noborder makes the game window “not a window”.

-width 1920 -height 1080 for its resolution. (I recommend half or 2/3rds.)

-novid disables any startup videos (the Dota 2 trailer, etc.)

-d3d11 is a requirement of the tools (no other APIs are supported AFAIK)

-high ensures that the process gets upgraded to high priority!

+r_dashboard_render_quality 0 disables the fancier Dota dashboard, which could theoretically be a bit of a background drain on resources.

+snd_musicvolume 0.0 disables any music coming from the Dota menu, which would otherwise come back on at random while you click thru tools.

+r_texturefilteringquality 5 forces x16 Anisotropic Filtering.

+engine_no_focus_sleep 0 prevents the engine from “artificially sleeping” for X milliseconds every frame, which would lower framerate, saving power, but also potentially hindering rendering in the SFM. I’m not sure if it still can, but better safe than sorry.

+dota_use_heightmap 0 is a particle bugfix that prevents certain particles from only using the heightmap baked at compile time, instead falling back on full collision. You may wish to experiment with both 0 and 1 when investigating particle behaviours.

-tools_sun_shadow_size 8192 sets the Global Light Shadow res to 8192 instead of 1024 (on High) or 2048 (on Ultra). This is AFAIK the maximum.

And don’t forget that “EXIT” on a new line! It will make sure the batch file automatically closes itself after executing, so it’ll work like a real shortcut.
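If you keep several variants of this launcher around (different addons, different resolutions), it can be handy to generate the batch file instead of editing the long line by hand. A minimal Python sketch, using only the options listed above; the helper name is made up for this example and it only returns the text, so writing it to a .bat file is up to you.

```python
LAUNCH_OPTIONS = [
    "-addon usermod", "-vconsole", "-tools", "-steam",
    "-windowed", "-noborder", "-width 1920", "-height 1080",
    "-novid", "-d3d11", "-high",
    "+r_dashboard_render_quality 0", "+snd_musicvolume 0.0",
    "+r_texturefilteringquality 5", "+engine_no_focus_sleep 0",
    "+dota_use_heightmap 0", "-tools_sun_shadow_size 8192",
]


def build_launch_bat(exe_path: str, options: list) -> str:
    """Return the batch file text: the start line, then EXIT on its own line."""
    launch_line = f'start "" "{exe_path}" ' + " ".join(options)
    return launch_line + "\nEXIT\n"


bat_text = build_launch_bat(
    r"C:\Program Files (x86)\Steam\steamapps\common\dota 2 beta\game\bin\win64\dota2.exe",
    LAUNCH_OPTIONS,
)
print(bat_text)
```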

Speaking of, how about we make it even nicer, and like an actual shortcut? Right-click on your .bat and select Create Shortcut. Unfortunately, it won’t work as-is. We need to make a few changes in its properties.

Make sure that Target is set to:

C:\Windows\System32\cmd.exe /C "D:\Path\To\Your\BatchFile\Goes\Here\launch_tools.bat"

And for bonus points, you can click Change Icon and browse to dota2cfg.exe (in \SteamApps\common\dota 2 beta\game\bin\win64) to steal its nice icon! And now you’ve got a shortcut that will launch the tools in just one click, and that you can pin directly to your task bar!

Enjoy! 🙂

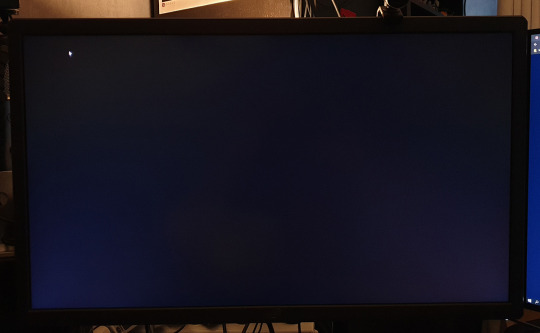

My quick review of the ASUS XG27UQ monitor (4K, HDR, 120Hz)

I originally wanted to tweet this series of bullet points out but it was getting way too long, so here goes! I got this to replace a PG278Q, which was starting to develop odd white stains, and never had good color reproduction in the first place (TN film drawbacks, very low gamma resulting in excessively bright shadows, under-saturated shadows, etc.)

The hardware aesthetic is alright! The bezels may feel a bit large to some people, but I don’t mind them at all. If you’re a fan of the no-bezel look, you’ll probably hate it. There is a glowing logo on the back that you can customize (Static Cyan is my recommendation), but it isn’t bright enough to be used as bias lighting, which would’ve been nice.

The built-in stand is decent; it comes with a tacky and distracting light projection feature at the bottom. It felt quite stable, though I don’t care about it because it got instantly replaced by an Ergotron LX arm. (I have two now, I really recommend them in spite of their price.)

The coating is a little grainy and this is noticeable on pure colors! You can kinda see the texture come through, a bit more than I’d like. Not a huge deal though.

The rest of the review will be under the cut.

The default color preset (“racing mode”), which the monitor is calibrated against, is very vivid and saturated. It looks great! But it’s inherently inaccurate, which bothers me, so I don’t like it. It looks as if sRGB got stretched into the expanded gamut of the monitor.

sRGB “emulation” looks very similar to my Dell U2717D, whose sRGB mode is factory-calibrated. However, the XG27UQ’s sRGB mode has lower gamma (brighter shadows), so while the colors are accurate, the gamma is not. It feels 1.8-ish. Unless you were in a bright room, it would be inappropriate for work that needs to have accurate shadows. This mode also locks other controls, so it’s not the most useful, but the brightness is set well on it, so it is usable!
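To get a feel for what a lower gamma does to shadows, here is a rough Python sketch. It assumes a pure power-law display response (real sRGB has a small linear segment near black, which is ignored here), the 1.8 figure is only the eyeballed estimate from above, and the code value 64 is an arbitrary dark gray picked for illustration.

```python
def displayed_light(code_value: int, gamma: float) -> float:
    """Relative light output (0.0 to 1.0) for an 8-bit code value,
    assuming a pure power-law display response."""
    return (code_value / 255) ** gamma


shadow = 64  # arbitrary dark-gray code value, chosen only for illustration

at_22 = displayed_light(shadow, 2.2)  # the usual target gamma
at_18 = displayed_light(shadow, 1.8)  # the eyeballed gamma of the sRGB mode

print(f"gamma 2.2: {at_22:.3f}")
print(f"gamma 1.8: {at_18:.3f}")
print(f"ratio:     {at_18 / at_22:.2f}x")
```

Under those assumptions, that dark gray comes out with roughly 1.7 times as much light at gamma 1.8 as it does at 2.2, which is the "brighter shadows" effect described above.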

The “User Mode” settings use the calibrated racing mode as a starting point, which is a big relief. So it’s possible to tweak the color temperature and the saturation from there! I checked pure white against my Dell monitor and my smartphone (S9+) and tried to reach a reasonable 3-way compromise between them, knowing that the Dell is most likely the most accurate, and that Samsung also allegedly calibrates their high-end smartphones well. My configuration ended up being R:90/G:95/B:100 + SAT:42. This matches the saturation of the U2717D sRGB mode fairly closely. You also get to choose between 1.8, 2.2, and 2.5 gamma, which is not too granular, but great to have. It kinda feels like my ideal match is between 2.2 and 2.5, but 2.2 is fine.

The color gamma according to lagom.nl looked fine, but I had to open the picture in Paint, otherwise it was DPI-scaled in the browser, and that messed with the way it works!! (That website is an amazing resource for quick monitor checks.)

Colors are however somewhat inaccurate in this mode. It’s easy to see by comparing the tweaked User Mode vs. sRGB emulation. There are some rather sizeable hue shifts in certain cases. I believe part of this is caused by the saturation tweak not operating properly.

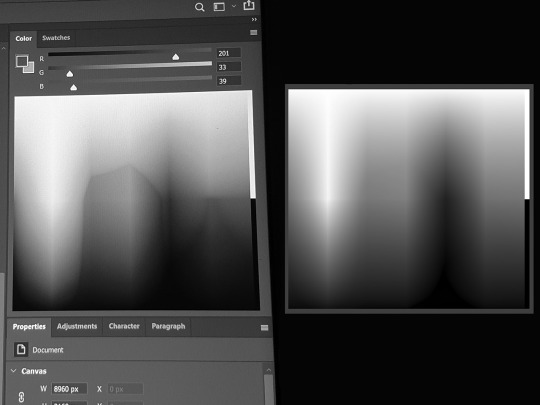

Here’s a photo of what the Photoshop color picker looks like when Saturation is set to 0 on the monitor, vs. what a proper grayscale conversion should be. It’s definitely not using the right coefficients.
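For reference, here is a small Python sketch of what "the right coefficients" usually means: the standard Rec. 709 luma weights, which sRGB shares. I don't know which weights the monitor actually uses, so the plain average below is only a stand-in for "wrong coefficients", and for simplicity the weights are applied directly to the gamma-encoded 0-255 values rather than to linear light.

```python
# Standard Rec. 709 / sRGB luma weights for R, G, B.
REC709_WEIGHTS = (0.2126, 0.7152, 0.0722)


def weighted_gray(rgb, weights=REC709_WEIGHTS):
    """Gray level from a weighted sum of the R, G, B channels."""
    return sum(channel * weight for channel, weight in zip(rgb, weights))


def naive_gray(rgb):
    """Gray level from a plain average (a stand-in for wrong coefficients)."""
    return sum(rgb) / 3


bright_blue = (58, 48, 220)  # one of the test colors listed in this post

print(round(weighted_gray(bright_blue)))  # Rec. 709 weighting
print(round(naive_gray(bright_blue)))     # plain average
```

Blue contributes very little to perceived brightness, so a saturated blue like this one should turn into a fairly dark gray (about 63 out of 255 here). A plain average gives about 109 instead, which is the kind of mismatch that shows up when the weights are off.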

So in practice, when using the Racing & User modes, compared to the U2717D sRGB, here’s a few examples of what I see:

Reds are colder (towards the purple side) & oversaturated

Bright yellow (255,215,90) is undersaturated

Bright green (120,200,130) is undersaturated

Dark green (0,105,60) is fine

Magenta (220,13,128) is oversaturated

Dark reds & browns (150,20,20 to 90,15,10) are oversaturated

Cyan (0,180,240) is fine

Pink (230,115,170) is fine

Some shades of bright saturated blue (58,48,220) have the biggest shifts.

The TF2 skin tone becomes slightly desaturated and a bit colder

It’s not inaccurate to the point of being distracting, and you always have the sRGB mode (with flawed gamma?) to check things with, but it’s definitely not ideal, and some of these shifts go far enough that I wouldn’t recommend this monitor for color work that needs to be very accurate.

I’ve gone back and forth, User vs sRGB, several times, on my most recent work (True Sight 2019 sequences). I’ve found the differences were acceptable for the most part; they bothered me the most during the Chronosphere sequence, in which the hazy sunset atmosphere turned a bit into a rose gold tint, which wasn’t unpleasant at all (and looked quite pretty!) but it wasn’t what I did.

I’m coming from the point of view of a “prosumer” who cares about color accuracy, but who ultimately recognizes that this quest is impossible in the face of so many devices out there being inaccurate or misconfigured one way or the other. In the end, my position is more pragmatic, and I feel that you gotta be able to see how your stuff’s gonna look on the devices where it’ll actually be watched. So while I’ve done color grading on a decent-enough sRGB-calibrated monitor, I’ve always checked it against the inaccurate PG278Q, and I’ve done a little bit of compromising to keep my color work looking alright even once gamma shifted. And so, now, I’ll also be getting to see what my colors look like on a monitor that doesn’t quite restrain itself to sRGB gamut properly.

Well, at least, all of that stuff is out of the box, but...

TFTCentral (one of the most trustworthy monitor review websites, in my opinion) has found suspiciously similar shifts. But after calibration, their unit passed with flying colors (pun intended), so if you really care about this sort of stuff and happen to have a colorimeter... you should give it a try!

I hope one day we’ll be able to load and apply an ICC/ICM profile computer-wide, instead of only being able to load a simple gamma curve on the GPU with third-party tools like DisplayCAL. Even if it had to squeeze the gamut a bit...

Also, there are dynamic dimming / auto contrast ratio features which could potentially be useful in limited scenarios if you don’t care about color accuracy and want to maximize brightness. I believe they are forced on for HDR. But you will probably not care at all.

IPS glow is not very present on my unit; less than on my U2717D. However, when it starts to show up (more than a 30°-ish angle away), it shows up more. UPDATED: after some more time with the monitor, I wanna say that, in fact, IPS glow is slightly stronger, and shows up sooner (as in, from broader angles). It requires me to sit a greater distance from the monitor in order to not have it show up and impede dark scenes. It is worse than on my U2717D.

Backlight bleed, on the other hand, is there, and a little bit noticeable. On my unit, there’s a little bit of blue-ish bleed on the lower left corner, and some dark-grey-orange bleed for a good third of the upper-left. However, in practice, and to my eyes, it doesn’t bother me, even when I look for it. It ain’t perfect, but I’ve definitely seen worse, especially from ASUS. The photo above was taken at 100% brightness, and I’ve tried to make it just a tad brighter than what my eyes see, so hopefully it’s a decent sample.

Dead pixels: on my unit, I have 5 stuck dead green subpixels overall. There are 4 in a diamond pattern somewhat down and to the right of the center of the screen, and another one, a bit to the right of that spot. All of them kinda “shimmer” a little bit, in the sense that they become stronger or weaker based on my angle of view. They’re a bummer but I haven’t found them to be a hindrance. Took me a few days to even notice them for the first time, after all.

HDR uses some global dimming techniques, as well as stuff that feels like... you know that Intel HD driver feature that brightens the content on the screen, while lowering the panel backlight power in tandem, to save power, but it kinda flattens (and sometimes clips) highlights? It kinda looks like that sometimes. Without local dimming, HDR is just about meaningless.

Unfortunately, the really nice HDR support in computer monitors is still looking like it’s going to be at the very least a year out, and even longer for sub-1000 price ranges. (I was holding out for the PG27UQX at first, but it still has no word on availability, a whole year after being announced, and will probably cost over two grand, so no thanks.)

G-Sync (variable refresh rate) support is... not there yet?! The latest driver does not recognize the monitor as being compatible with the feature. And it turns out that the product page says that G-Sync support is currently being applied for. Huh. I thought they had special chips in those monitors solely for the feature, but it’s possible this one does it another way? (The same way that Freesync monitors do it?)

DSC (Display Stream Compression) enables 4K 120 Hz to work through a single DisplayPort cable, without chroma subsampling. And it’s working for me, which came as a surprise, as I was under the impression this feature required a 2000-series Turing GPU. (I have a 1080 Ti.) I was wrong about this: it’s 144 Hz that requires DSC, and I don’t have it on this Pascal card. But I don’t really care since I prefer to run this monitor at 120 Hz, as it’s a multiple of the 60 Hz monitor next to it.
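Some back-of-the-envelope math helps explain why 120 Hz can squeeze through uncompressed while 144 Hz cannot. This Python sketch makes a few assumptions that are not stated above: a DisplayPort 1.4 link (HBR3, 32.4 Gbit/s raw, 25.92 Gbit/s of payload after 8b/10b encoding), 8 bits per channel RGB, and no blanking overhead. Real video timings need somewhat more than these figures.

```python
# Assumption: DisplayPort 1.4 HBR3 payload after 8b/10b encoding overhead.
DP14_PAYLOAD_GBPS = 25.92


def active_pixel_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Bandwidth of the visible pixels alone, in Gbit/s (blanking ignored)."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


for hz in (120, 144):
    needed = active_pixel_gbps(3840, 2160, hz)
    verdict = "fits" if needed <= DP14_PAYLOAD_GBPS else "does not fit"
    print(f"4K @ {hz} Hz: {needed:.2f} Gbit/s, {verdict} uncompressed")
```

With these numbers, 4K at 120 Hz needs about 23.9 Gbit/s and stays under the limit, while 144 Hz needs about 28.7 Gbit/s and overshoots it, so something has to give: compression (DSC), chroma subsampling, or a lower refresh rate.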

Windows DPI scaling support is okay now. Apps that are DPI-aware, and the vast majority of them are now, scale back and forth between 150% and 100% really well as they get dragged between the monitors! The only program I’ve had issues with is good old Winamp, which acted as if it was 100% on the XG27UQ... and shrank down on another monitor. So I asked it to override DPI scaling behaviour (“scaling performed by: application”), which keeps the player skin at 100% on every monitor, but any calls to system fonts and UI (Bento skin’s playlist + Settings panel) are still at 150%. So I had to set the playlist font size to 7 for it to look OK on the non-scaled monitor!

A few apps misbehave in interesting ways; TeamSpeak, for example, seen above, scales everything back from 150% to 100%, and there is no blurriness, but the “larger layout” (spacing, etc.) sticks.

Games look great with 4K in 27 inches. Well, I’ve only really tried Dota 2 so far, but man does it get sharp, especially with the game’s FXAA disabled. It was already a toss-up at 1440p, but at 4K I would argue you might as well keep it disabled. However, going from 2560x1440 to 3840x2160 requires some serious horsepower. It may look like a +50% upgrade in pixels, but it’s actually a +125% increase! (3.69 to 8.29 million pixels.) For a 1080 Ti, maxed-out Dota 2 at 1440p 120 Hz is really trivial, but once you go to 4K, not anymore... you could always lower resolution scale though! (Not an elegant solution if you like to use sharpening filters though, looking at you RDR2.)
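The pixel math is easy to check yourself. Here is a tiny Python sketch of it; nothing in it is specific to any game or GPU.

```python
def pixel_count(width: int, height: int) -> int:
    """Total number of pixels for a given resolution."""
    return width * height


qhd = pixel_count(2560, 1440)  # 1440p
uhd = pixel_count(3840, 2160)  # 4K

per_axis_pct = (3840 / 2560 - 1) * 100  # growth along one dimension
total_pct = (uhd / qhd - 1) * 100       # growth in total pixels

print(f"1440p: {qhd:,} pixels")
print(f"4K:    {uhd:,} pixels")
print(f"per axis: +{per_axis_pct:.0f}%, total: +{total_pct:.0f}%")
```

Each axis only grows by 50%, which is probably where the "+50%" impression comes from, but the two axes multiply (1.5 × 1.5 = 2.25), so the GPU ends up with 125% more pixels to shade every frame.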

Overall, the XG27UQ is a good monitor, and I’m satisfied with my purchase, although slightly disappointed by the strong IPS glow and the few dead subpixels. 7/10


PState overclocking on the x399 Taichi motherboard and a Ryzen Threadripper 1920x

It’s broken. Just writing this quick post so that someone out there might not waste an hour looking for info like I did.

Little bit of context: “p-state overclocking” is better than regular overclocking, because it doesn’t leave your clock speed and voltage completely fixed. So your processor will still ramp down when it’s (mostly) idle. On Ryzen, this can save you dozens of watts.

But here’s one thing: on the x399 Taichi motherboard, according to the information I’ve gleaned from various sources, it is partially broken, unless you have a 1950x.

How so? The Vcore will stay fixed, no matter what the current clock speed is. This means you’ll still be using ~1.35 volts at 2.2 GHz, which is extremely suboptimal and a tremendous waste.
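To put a rough number on that waste, here is a Python sketch using the textbook approximation that dynamic power scales with voltage squared times frequency. It ignores leakage entirely, and the 0.90 V "idle" voltage is a made-up value for illustration, not something I measured on this board.

```python
def relative_dynamic_power(voltage: float, reference_voltage: float) -> float:
    """Dynamic power relative to a reference, at the same clock speed.

    Uses the textbook approximation P ~ C * V^2 * f; leakage is ignored.
    """
    return (voltage / reference_voltage) ** 2


FIXED_VCORE = 1.35        # the stuck Vcore mentioned above
HYPOTHETICAL_IDLE = 0.90  # made-up idle voltage, NOT a measurement

ratio = relative_dynamic_power(FIXED_VCORE, HYPOTHETICAL_IDLE)
print(f"{ratio:.2f}x the dynamic power at the same clock speed")
```

Under those assumptions, holding 1.35 V at a clock speed that could get by on 0.90 V would cost a bit more than double the dynamic power. The real figure depends on what voltage the chip would actually pick at 2.2 GHz.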

Several solutions were pointed out, such as leaving the pstate VID exactly the same and modifying voltage using the general offset instead, etc. etc. but nothing works. I’ve reset my BIOS settings and then modified pstate0 by +25 MHz, this being literally the only change done from the default settings.

It still made Vcore fixed at ~1.22 volts, and it wouldn’t budge.

From what I gather, this behaviour has been broken since about 1.92 or 2.00, but pstate tweaking should still work properly if you have a 1950x...?

For what it’s worth, as a sidenote, overclocking a Ryzen processor is wasteful and not really needed. (Why did I do it? I was mostly just curious about how well my cooling setup worked.) Instead, you are much better off undervolting. I have my 1920x set to -100mV in offset mode + the droopiest load line calibration setting, Level 5. I could probably push it further, though probably not by much. This ensures a voltage that is as low as possible, especially during heavy load. This, in turn, allows the auto-boosting behaviours to go to their full potential. That’s especially true on Zen+. I have a friend whose 2700X boosts at 4.2 GHz all the time solely thanks to undervolting, no other tweaks needed. As for my 1920x, it’s gone from an all-core max-load frequency of 3500 to 3700 MHz.


2018

Hello again, it’s time for the yearly blog post. I’m a couple weeks late, and I’d like to keep it short this year, so let’s get started.

I am technically still publishing this in January so it’s cool.

One thing that I remember writing about last year is that I constantly felt hesitant, self-questioning in my writing. I think I’ve managed to dial that back a bit