#I used to have an application that worked as a proxy and would edit pages on the fly to block ads and trackers

Alt+D (focus address bar) no longer works on desktop on tumblr.

this is my default way of getting out of a webpage, and even though this has been the case for days now I keep feeling like I've bit down on a chunk of glass every time.

I feel like firefox should have an option that's basically, "Don't let websites prevent the use of the important shortcuts."

#original#tumblr gonna tumbl#I used to have an application that worked as a proxy and would edit pages on the fly to block ads and trackers#and also had a suite of fixes for stuff like that; that was mostly about stopping the malicious popups of the late 90s

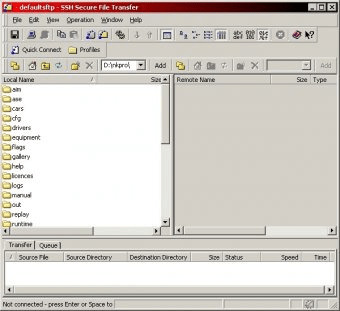

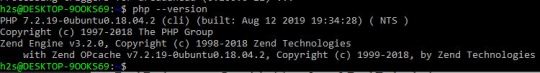

SSH Shell

Price: KiTTY is free to use. KiTTY is an SSH client that is based on PuTTY’s 0.71 version.

The Secure Shell extension works with non-Google HTTP-to-SSH proxies via proxy hooks, and third-party application nassh-relay can use those hooks to enable the Secure Shell extension to establish an SSH connection over XMLHttpRequest or WebSocket transport.

SSH.NET is a Secure Shell (SSH-2) library for .NET, optimized for parallelism. The project was inspired by the Sharp.SSH library, which was ported from Java and appears to have gone unsupported for quite some time. This library is a complete rewrite, without any third-party dependencies, using parallelism to achieve the best performance.

Linux system administrators often need to execute a command or a local Bash script from one Linux workstation or server on another, remote Linux machine over SSH.

This article gives examples of how to execute a remote command, multiple commands, or a Bash script over SSH between Linux hosts and get back the output (result).

This is especially useful for anyone who wants to keep a Bash script on one local Linux machine but execute it remotely on other hosts over SSH.

SSH: Execute Remote Command

Execute a remote command on a host over SSH:

Examples

Get the uptime of the remote server:

Reboot the remote server:
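The commands for these examples did not survive on this page, so here is a minimal sketch of the general pattern, written in Python around the standard `ssh` client. The host name `admin@server.example.com` is hypothetical, and `build_ssh_command` and `run_remote` are illustrative helper names, not part of any library.

```python
import shlex
import subprocess


def build_ssh_command(target: str, remote_command: str) -> list[str]:
    """Return the argv that runs one command on a remote host via the ssh client."""
    # ssh hands everything after the target to the remote login shell.
    return ["ssh", target, remote_command]


def run_remote(target: str, remote_command: str, timeout: float = 30.0) -> str:
    """Run the command on the remote host and return its standard output."""
    result = subprocess.run(
        build_ssh_command(target, remote_command),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=True,  # raise CalledProcessError on a non-zero exit status
    )
    return result.stdout


if __name__ == "__main__":
    # Hypothetical host. Only prints the command lines; nothing is executed.
    print(shlex.join(build_ssh_command("admin@server.example.com", "uptime")))
    print(shlex.join(build_ssh_command("admin@server.example.com", "sudo reboot")))
```

Calling `run_remote("admin@server.example.com", "uptime")` would actually open the connection, so it assumes the host is reachable and that authentication is already set up.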

SSH: Run Multiple Remote Commands

In most cases, sending a single remote command over SSH is not enough.

Much more often you need to send multiple commands to a remote server, for example to collect some data for inventory and get back the result.

There are many ways to do this, but I will show the most popular ones.

Run multiple commands on a remote host over SSH:

– or –

– or –

Examples

Get the uptime and the disk usage:

Get the memory usage and the load average:

Show the kernel version, number of CPUs and the total RAM:
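As a rough sketch of the multi-command forms described above, several commands can be joined into one remote command line, either with `;` (run all of them regardless of failures) or with `&&` (stop at the first failure). The helper names and the host are hypothetical, and the sample commands (`uptime`, `df -h`, `free -m`, `cat /proc/loadavg`, `uname -r`, `nproc`, `grep MemTotal /proc/meminfo`) are one plausible choice for the examples listed, not necessarily the ones the original article used.

```python
import shlex


def join_remote_commands(commands: list[str], stop_on_error: bool = False) -> str:
    """Join shell commands into a single remote command line."""
    # "&&" runs the next command only if the previous one succeeded;
    # ";" runs every command regardless of earlier failures.
    separator = " && " if stop_on_error else "; "
    return separator.join(commands)


def build_multi_command(target: str, commands: list[str],
                        stop_on_error: bool = False) -> list[str]:
    """Return the ssh argv that runs all commands in one remote session."""
    return ["ssh", target, join_remote_commands(commands, stop_on_error)]


if __name__ == "__main__":
    host = "admin@server.example.com"  # hypothetical host
    examples = [
        ["uptime", "df -h"],                                   # uptime and disk usage
        ["free -m", "cat /proc/loadavg"],                      # memory and load average
        ["uname -r", "nproc", "grep MemTotal /proc/meminfo"],  # kernel, CPUs, RAM
    ]
    for commands in examples:
        print(shlex.join(build_multi_command(host, commands)))
```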

SSH: Run Bash Script on Remote Server

An equally common situation: a Bash script lives on one Linux machine, and you need to connect from that machine over SSH to another Linux machine and run the script there.

The idea is to connect to a remote Linux server over SSH, let the script do the required operations, and return to the local machine, without having to upload the script to the remote server.

This can certainly be done, and quite easily.

Example

Execute the local script.sh on the remote server:
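One common way to do this is to start `bash -s` on the remote host and feed the local script to it on standard input, so the file never has to be copied over. The sketch below wraps that idea in Python; the helper names and the host are hypothetical, and it assumes `bash` is installed on the remote machine.

```python
import subprocess
from pathlib import Path


def build_script_command(target: str, interpreter: str = "bash") -> list[str]:
    """Return the ssh argv that runs a script supplied on standard input."""
    # "-s" tells bash to read the commands to execute from standard input.
    return ["ssh", target, interpreter, "-s"]


def run_local_script(target: str, script_path: Path) -> str:
    """Stream a local script to the remote interpreter and return its output."""
    with script_path.open("rb") as script:
        result = subprocess.run(
            build_script_command(target),
            stdin=script,          # the local file becomes the remote stdin
            capture_output=True,
            check=True,            # raise if the remote script exits non-zero
        )
    return result.stdout.decode()


if __name__ == "__main__":
    # Hypothetical host. Only prints the argv; nothing is executed.
    print(build_script_command("admin@server.example.com"))
```

`run_local_script("admin@server.example.com", Path("script.sh"))` would then execute the local `script.sh` remotely and return whatever it printed.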

Secure Shell (SSH) allows you to remotely administer and configure your Windows IoT Core device

Using the Windows 10 OpenSSH client

Important

The Windows OpenSSH client requires that your SSH client host OS is Windows 10 version 1803 (build 17134). Also, the Windows 10 IoT Core device must be running RS5 Windows Insider Preview release 17723 or greater.

The OpenSSH Client was added to Windows 10 in 1803 (build 17134) as an optional feature. To install the client, you can search for Manage Optional Features in Windows 10 settings. If the OpenSSH Client is not listed in the list of installed features, then choose Add a feature.

Next select OpenSSH Client in the list and click Install.

To login with a username and password use the following command:

Where host is either the IP address of the Windows IoT Core device or the device name.

The first time you connect you see a message like the following:

Type yes and press enter.

If you need to log in as DefaultAccount rather than as administrator, you will need to generate a key and use the key to log in. From the desktop that you intend to connect to your IoT device from, open a PowerShell window and change to your personal data folder (e.g. cd ~)

Register the key with ssh-agent (optional, for single sign-on experience). Note that ssh-add must be performed from a folder that is ACL'd to you as the signed-in user (Builtin\Administrators and the NT_AUTHORITY\System user are also ok). By default cd ~ from PowerShell should be sufficient as shown below.

Tip

If you receive a message that the ssh-agent service is disabled you can enable it with sc.exe config ssh-agent start=auto

To enable single sign-on, append the public key to the authorized_keys file on the Windows IoT Core device. Or, if you only have one key, you can copy the public key file to the remote authorized_keys file.

If the key is not registered with ssh-agent, it must be specified on the command line to login:

If the private key is registered with ssh-agent, then you only need to specify DefaultAccount@host:

The first time you connect you see a message like the following:

Type yes and press enter.

You should now be connected as DefaultAccount

To use single sign-on with the administrator account, append your public key to c:\data\ProgramData\ssh\administrators_authorized_keys on the Windows IoT Core device.

You will also need to set the ACL for administrators_authorized_keys to match the ACL of ssh_host_dsa_key in the same directory.

To set the ACL using PowerShell

Note

If you see a REMOTE HOST IDENTIFICATION CHANGED message after making changes to the Windows 10 IoT Core device, then edit C:\Users\<username>\.ssh\known_hosts and remove the host that has changed.

See also: Win32-OpenSSH

Using PuTTY

Download an SSH client

In order to connect to your device using SSH, you'll first need to download an SSH client, such as PuTTY.

Connect to your device

In order to connect to your device, you need to first get the IP address of the device. After booting your Windows IoT Core device, an IP address will be shown on the screen attached to the device:

Now launch PuTTY and enter the IP address in the Host Name text box and make sure the SSH radio button is selected. Then click Open.

If you're connecting to your device for the first time from your computer, you may see the following security alert. Just click Yes to continue.

If the connection was successful, you should see login as: on the screen, prompting you to login. Enter Administrator and press enter. Then enter the default password p@ssw0rd as the password and press enter.

If you were able to login successfully, you should see something like this:

Update account password

It is highly recommended that you update the default password for the Administrator account.

To do this, enter the following command in the PuTTY console, replacing [new password] with a strong password:

Configure your Windows IoT Core device

To be able to deploy applications from Visual Studio 2017, you will need to make sure the Visual Studio Remote Debugger is running on your Windows IoT Core device. The remote debugger should launch automatically at machine boot time. To double check, use the tlist command to list all the running processes from PowerShell. There should be two instances of msvsmon.exe running on the device.

It is possible for the Visual Studio Remote Debugger to time out after long periods of inactivity. If Visual Studio cannot connect to your Windows IoT Core device, try rebooting the device.

If you want, you can also rename your device. To change the 'computer name', use the setcomputername utility:

You will need to reboot the device for the change to take effect. You can use the shutdown command as follows:

Commonly used utilities

See the Command Line Utils page for a list of commands and utilities you can use with SSH.

Version 448

I had an ok couple of weeks. I was pretty ill in the middle, but I got some good work done overall. .wav files are now supported, PSD files get thumbnails, vacuum returns, and the Client API allows much cleverer search.

client api

I have added some features to the Client API. It was more complicated than I expected, so I couldn't get everything I wanted done, but I think this is a decent step forward.

First off, the main 'search for files' routine now supports many system predicates. This is thanks to a user who wrote a great system predicate text parser a long time ago. I regret I am only catching up with his work now, since it works great. I expect to roll it into normal autocomplete typing as well--letting you type 'system:width<500' and actually getting the full predicate object in the results list to select.

If you are working with the Client API, please check out the extended help here:

https://hydrusnetwork.github.io/hydrus/help/client_api.html#get_files_search_files

There's a giant list of the current supported inputs. You'll just be submitting system predicates as text, and it handles the rest. Please note that this is a complicated system, and while I have plenty of unit tests and so on, if you discover predicates that should parse but are giving errors or any other jank behaviour, please let me know!

Next step here is to add file sort and file/tag domain.
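For anyone scripting against this, a request to the search call might be assembled roughly as below. This is a sketch under assumptions, not the official client: the base URL `http://127.0.0.1:45869`, the `Hydrus-Client-API-Access-Key` header, and the JSON-encoded `tags` query parameter are my reading of the linked help and should be checked against it, and `build_search_request` is a made-up helper name.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request


def build_search_request(tags: list[str], access_key: str,
                         base_url: str = "http://127.0.0.1:45869") -> Request:
    """Build (but do not send) a GET request for /get_files/search_files."""
    # The tag list, system predicates included, is sent as JSON text
    # in a single URL-encoded query parameter.
    query = urlencode({"tags": json.dumps(tags)})
    return Request(
        f"{base_url}/get_files/search_files?{query}",
        headers={"Hydrus-Client-API-Access-Key": access_key},  # assumed header name
    )


if __name__ == "__main__":
    # Placeholder access key; a real one comes from the client's API settings.
    request = build_search_request(["system:width<500", "blue eyes"], "0123abcd")
    print(request.full_url)
```

Passing the request to `urllib.request.urlopen` would perform the search; per the notes above, text that does not parse as a predicate should come back as a 400 response.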

Next there's a routine that lets you add files to arbitrary pages, just like a thumbnail drag and drop:

https://hydrusnetwork.github.io/hydrus/help/client_api.html#manage_pages_add_files

This is limited to currently open pages for now, but I will add a command to create an empty file page so you can implement an external file importer page.

misc

.wav files are now supported! They should work fine in mpv as well.

Simple PSD files now get thumbnails! It turns out FFMPEG can figure this out as long as the PSD isn't too complicated, so I've done it like for .swf files--if it works, the PSD gets a nice thumbnail, and if it doesn't it gets the file default icon stretched to the correct ratio. When you update, all existing PSDs will be queued for a thumbnail regen, so they should sort themselves out in the background.

Thanks to profiles users sent in, I optimised some database code. Repository processing and large file deletes should be a little faster. I had a good look at some slow session save profiles--having hundreds of thousands of URLs in downloader pages currently eats a ton of CPU during session autosave--but the solution will require two rounds of significant work.

Database vacuum returns as a manual job. I disabled this a month or so ago as it was always a rude sledgehammer that never actually achieved all that much. Now there is some UI under database->db maintenance->review vacuum data that shows each database file separately with their current free space (i.e. what a vacuum will recover), whether it looks like you have enough space to vacuum, an estimate of vacuum time, and then the option to vacuum on a per file basis. If you recently deleted the PTR, please check it out, as you may be able to recover a whole ton of disk space.

I fixed Mr Bones! I knew I'd typo somewhere with the file service rewrite two weeks ago, and he got it. I hadn't realised how popular he was, so I've added him to my weekly test suite--it shouldn't happen again.

full list

client api:

/get_files/search_files now supports most system predicates! simply submit normal system predicate text in your taglist (check the expanded api help for a list of what is supported now) and they should be converted to proper system preds automatically. anything that doesn't parse will give 400 response. this is thanks to a user that submitted a system predicate parser a long time ago and which I did not catch up on until now. with this framework established, in future I will be able to add more predicate types and allow this parsing in normal autocomplete typing (issue #351)

this is a complicated system with many possible inputs and outputs! I have tried to convert all the object types over and fill out unit tests, but there are likely some typos or bad error handling for some unusual predicates. let me know what problems you run into, and I'll fix it up!

the old system_inbox and system_archive parameters on /get_files/search_files are now obsolete. they still work, but I recommend you just use tags now. I'll deprecate them fully in future

/get_files/search_files now disables the implicit system limit that most clients apply to all searches (by default, 10,000), so if you ask for a million files, you'll (eventually) get it

a new call /manage_pages/add_files now allows you to add files to any media page, just like a file drag and drop

in the /get_files/file_metadata call, the tag lists in the different 'statuses' Objects are now human-sorted

added a link to https://github.com/floogulinc/hyextract to the client api help. this lets you extract from imported archives and reimport with tags and URLs

the client api is now ok if you POST with a utf-8 charset content-type "application/json;charset=utf-8"

the client api now tests the types of items within list parameters (e.g. file_ids should be a list of _integers_), raising an appropriate exception if they are incorrect

client api version is now 18
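To illustrate the list-parameter type testing mentioned above, here is a small stand-alone sketch of that kind of check. It is not the client's actual code; `check_list_of` is a hypothetical helper, and raising `TypeError` is just one reasonable choice of exception.

```python
def check_list_of(name: str, value: object, expected_type: type) -> list:
    """Validate that value is a list whose items all have expected_type."""
    if not isinstance(value, list):
        raise TypeError(f"{name} should be a list, got {type(value).__name__}")
    for index, item in enumerate(value):
        # bool is a subclass of int in Python, so reject it for integer lists.
        is_bool_as_int = expected_type is int and isinstance(item, bool)
        if is_bool_as_int or not isinstance(item, expected_type):
            raise TypeError(
                f"{name}[{index}] should be {expected_type.__name__}, "
                f"got {type(item).__name__}"
            )
    return value


if __name__ == "__main__":
    check_list_of("file_ids", [1, 2, 3], int)  # passes silently
    try:
        check_list_of("file_ids", [1, "2", 3], int)
    except TypeError as error:
        print(error)
```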

.

misc:

hydrus now supports wave (.wav) audio files! they play in mpv fine too

simple psd files now have thumbnails! complicated ones will get a stretched version of the old default psd filetype thumbnail, much like how flash works. all your psd files are queued up for thumbnail regen on update, so they should figure themselves out in the background. this is thanks to ffmpeg, which it turns out can handle simple psds!

vacuum returns as a manual operation. there's some new gui under _database->db maintenance->review vacuum data_. it talks about vacuum, shows current free space for each file, gives an estimate of how long vacuum will take, and allows you to launch vacuum on particular files

the 'maintenance and processing' option that checks CPU usage for 'system busy' status now lets you choose how many CPU cores must exceed the % value (previously, one core exceeding the value would cause 'busy'). maybe 4 > 25% is more useful than 1 > 50% in some situations?

removed the warning when updating from v411-v436. user reports and more study suggest this range was most likely ok in the end!

double-clicking the autocomplete tag list, or the current/pending/etc.. buttons, should now restore keyboard focus back to the text input afterwards, in float mode or not

the thumbnail 'remote services' menu, if you have file repositories or ipfs services, now appears on the top level, just below 'manage'

the file maintenance menu is shuffled up the 'database' menubar menu

fixed mr bones! I knew I was going to make a file status typo in 447, and he got it

in the downloader system, if a download object has any hashes, it now no longer consults urls for pre-import predictions. this saves a little time looking up urls and ensures that the logically stronger hashes take precedence over urls in all cases (previously, they only took precedence when a non-'looks new' status was found)

fixed an ugly bug in manage tag siblings/parents where tags imported from clipboard or .txt were not being cleaned, so all sorts of garbage with capital letters or leading spaces could be entered. all pairs are now cleaned, and anything invalid skipped over

the manage tag filter dialog now cleans all imported tag rules when using the 'import' button (issue #768)

the manage tag filter dialog now allows you to export the current tag filter with the export button

fixed the 'edit json parse rule' dialog layout so if you transition from a short display to a string match that has complicated controls, it should now expand properly to show them all

I think I fixed an odd bug where when uploading pending mappings while more mappings were being added, the x/y progress could accurately but unhelpfully continually reset to 0/y, with an ever-decreasing y until it was equal to the value it had at start. y should now always grow

hydrus servers now put their server header on a second header 'Hydrus-Server', which should allow them to be properly detectable through a proxy that overrides 'Server'

optimised a critical call in the tag mappings update database routine. for a service with many siblings and parents, I estimate repository processing is 2-7% faster

optimised the 'add/delete file' database routines in multiple ways, particularly when the file(s) have many deleted tags, and for the local file services, and when the client has multiple tag services

brushed up a couple of system predicate texts--things like num_pixels to 'number of pixels'
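The new 'system busy' rule from the list above (n cores must exceed x%) is easy to picture as a small function. This is only an illustration of the rule as described, with hypothetical names; it is not how the client measures CPU usage.

```python
def system_is_busy(core_usages: list[float], threshold_percent: float,
                   min_cores: int) -> bool:
    """Return True when at least min_cores cores exceed threshold_percent."""
    cores_over = sum(1 for usage in core_usages if usage > threshold_percent)
    return cores_over >= min_cores


if __name__ == "__main__":
    one_hot_core = [60.0, 10.0, 10.0, 10.0]
    all_warm = [30.0, 30.0, 30.0, 30.0]
    # Old behaviour: a single core above the value counts as busy.
    print(system_is_busy(one_hot_core, 50.0, 1))
    # New option: require four cores above 25%.
    print(system_is_busy(one_hot_core, 25.0, 4))
    print(system_is_busy(all_warm, 25.0, 4))
```

With '1 > 50%' a single pegged core marks the system busy, while '4 > 25%' ignores that case and instead reacts to broad moderate load.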

.

boring database refactoring:

repository update file tracking and service id normalisation is now pulled out to a new 'repositories' database module

file maintenance tracking and database-level file info updates is now pulled out to a new 'files maintenance' database module

analyse and vacuum tracking and information generation is now pulled out to a new 'db maintenance' database module

moved more commands to the 'similar files' module

the 'metadata regeneration' file maintenance job is now a little faster to save back to the database

cleared out some defunct/bad database code related to these two modules

misc code cleanup, particularly around the stuff I optimised this week

next week

Next week is a 'medium job' week. To clear out some long time legacy issues, I want to figure out an efficient way to reset and re-do repository processing just for siblings and parents. If that goes well, I'll put some more time into the Client API.

Docker Bundle Install

Install OpenProject with Docker. Docker is a way to distribute self-contained applications easily. We provide a Docker image for the Community Edition that you can very easily install and upgrade on your servers.

Docker: version 1.9.0 or later

Running Docker Image

sudo docker run -i -t -d -p 80:80 onlyoffice/documentserver

Use this command if you wish to install ONLYOFFICE Document Server separately. To install ONLYOFFICE Document Server integrated with Community and Mail Servers, refer to the corresponding instructions below.

Welcome to Docker Desktop! The Docker Desktop for Windows user manual provides information on how to configure and manage your Docker Desktop settings.

For information about Docker Desktop download, system requirements, and installation instructions, see Install Docker Desktop.

Settings

The Docker Desktop menu allows you to configure your Docker settings such as installation, updates, version channels, Docker Hub login, and more.

This section explains the configuration options accessible from the Settings dialog.

Open the Docker Desktop menu by clicking the Docker icon in the Notifications area (or System tray):

Select Settings to open the Settings dialog:

General

On the General tab of the Settings dialog, you can configure when to start and update Docker.

Start Docker when you log in - Automatically start Docker Desktop upon Windows system login.

Expose daemon on tcp://localhost:2375 without TLS - Click this option to enable legacy clients to connect to the Docker daemon. You must use this option with caution as exposing the daemon without TLS can result in remote code execution attacks.

Send usage statistics - By default, Docker Desktop sends diagnostics, crash reports, and usage data. This information helps Docker improve and troubleshoot the application. Clear the check box to opt out. Docker may periodically prompt you for more information.

Resources

The Resources tab allows you to configure CPU, memory, disk, proxies, network, and other resources. Different settings are available for configuration depending on whether you are using Linux containers in WSL 2 mode, Linux containers in Hyper-V mode, or Windows containers.

Advanced

Note

The Advanced tab is only available in Hyper-V mode, because in WSL 2 mode and Windows container mode these resources are managed by Windows. In WSL 2 mode, you can configure limits on the memory, CPU, and swap size allocated to the WSL 2 utility VM.

Use the Advanced tab to limit resources available to Docker.

CPUs: By default, Docker Desktop is set to use half the number of processors available on the host machine. To increase processing power, set this to a higher number; to decrease, lower the number.

Memory: By default, Docker Desktop is set to use 2 GB runtime memory, allocated from the total available memory on your machine. To increase the RAM, set this to a higher number. To decrease it, lower the number.

Swap: Configure swap file size as needed. The default is 1 GB.

Disk image size: Specify the size of the disk image.

Disk image location: Specify the location of the Linux volume where containers and images are stored.

You can also move the disk image to a different location. If you attempt to move a disk image to a location that already has one, you get a prompt asking if you want to use the existing image or replace it.

File sharing

Note

The File sharing tab is only available in Hyper-V mode, because in WSL 2 mode and Windows container mode all files are automatically shared by Windows.

Use File sharing to allow local directories on Windows to be shared with Linux containers. This is especially useful for editing source code in an IDE on the host while running and testing the code in a container. Note that configuring file sharing is not necessary for Windows containers, only Linux containers. If a directory is not shared with a Linux container you may get file not found or cannot start service errors at runtime. See Volume mounting requires shared folders for Linux containers.

File share settings are:

Add a Directory: Click + and navigate to the directory you want to add.

Apply & Restart makes the directory available to containers using Docker’s bind mount (-v) feature.

Tips on shared folders, permissions, and volume mounts

Share only the directories that you need with the container. File sharing introduces overhead as any changes to the files on the host need to be notified to the Linux VM. Sharing too many files can lead to high CPU load and slow filesystem performance.

Shared folders are designed to allow application code to be edited on the host while being executed in containers. For non-code items such as cache directories or databases, the performance will be much better if they are stored in the Linux VM, using a data volume (named volume) or data container.

Docker Desktop sets permissions to read/write/execute for users, groups and others (0777 or a+rwx). This is not configurable. See Permissions errors on data directories for shared volumes.

Windows presents a case-insensitive view of the filesystem to applications while Linux is case-sensitive. On Linux it is possible to create 2 separate files: test and Test, while on Windows these filenames would actually refer to the same underlying file. This can lead to problems where an app works correctly on a developer Windows machine (where the file contents are shared) but fails when run in Linux in production (where the file contents are distinct). To avoid this, Docker Desktop insists that all shared files are accessed as their original case. Therefore if a file is created called test, it must be opened as test. Attempts to open Test will fail with “No such file or directory”. Similarly once a file called test is created, attempts to create a second file called Test will fail.

Shared folders on demand

You can share a folder “on demand” the first time a particular folder is used by a container.

If you run a Docker command from a shell with a volume mount (as shown in the example below) or kick off a Compose file that includes volume mounts, you get a popup asking if you want to share the specified folder.

You can select to Share it, in which case it is added to your Docker Desktop Shared Folders list and available to containers. Alternatively, you can opt not to share it by selecting Cancel.

Proxies

Docker Desktop lets you configure HTTP/HTTPS Proxy Settings and automatically propagates these to Docker. For example, if you set your proxy settings to http://proxy.example.com, Docker uses this proxy when pulling containers.

Your proxy settings, however, will not be propagated into the containers you start. If you wish to set the proxy settings for your containers, you need to define environment variables for them, just like you would do on Linux, for example:
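The original example is missing here. As a hedged stand-in, the sketch below builds a `docker run` command line that passes proxy settings into a container with `-e`. The `alpine` image, the `env` command, and the helper name `build_docker_run` are illustrative assumptions; the proxy URL is the one used in the text above.

```python
import shlex


def build_docker_run(image: str, env: dict[str, str],
                     command: tuple[str, ...] = ()) -> list[str]:
    """Return a docker run argv that sets the given environment variables."""
    argv = ["docker", "run", "--rm"]
    for name, value in env.items():
        # Each -e NAME=value pair defines one variable inside the container.
        argv += ["-e", f"{name}={value}"]
    argv.append(image)
    argv.extend(command)
    return argv


if __name__ == "__main__":
    proxy = "http://proxy.example.com"
    argv = build_docker_run(
        "alpine",                                   # illustrative image
        {"HTTP_PROXY": proxy, "HTTPS_PROXY": proxy},
        ("env",),                                   # print the container environment
    )
    print(shlex.join(argv))
```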

For more information on setting environment variables for running containers, see Set environment variables.

Network

Note

The Network tab is not available in Windows container mode because networking is managed by Windows.

You can configure Docker Desktop networking to work on a virtual private network (VPN). Specify a network address translation (NAT) prefix and subnet mask to enable Internet connectivity.

DNS Server: You can configure the DNS server to use dynamic or static IP addressing.

Note

Some users reported problems connecting to Docker Hub on Docker Desktop. This would manifest as an error when trying to run docker commands that pull images from Docker Hub that are not already downloaded, such as a first time run of docker run hello-world. If you encounter this, reset the DNS server to use the Google DNS fixed address: 8.8.8.8. For more information, see Networking issues in Troubleshooting.

Updating these settings requires a reconfiguration and reboot of the Linux VM.

WSL Integration

In WSL 2 mode, you can configure which WSL 2 distributions will have the Docker WSL integration.

By default, the integration will be enabled on your default WSL distribution. To change your default WSL distro, run wsl --set-default <distro name>. (For example, to set Ubuntu as your default WSL distro, run wsl --set-default ubuntu).

You can also select any additional distributions you would like to enable the WSL 2 integration on.

For more details on configuring Docker Desktop to use WSL 2, see Docker Desktop WSL 2 backend.

Docker Engine

The Docker Engine page allows you to configure the Docker daemon to determine how your containers run.

Type a JSON configuration file in the box to configure the daemon settings. For a full list of options, see the Docker Engine dockerd commandline reference.

Click Apply & Restart to save your settings and restart Docker Desktop.
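As a small illustration of what goes in that box, the sketch below renders a daemon configuration as JSON text from a Python dictionary. The three keys shown (`debug`, `experimental`, `registry-mirrors`) are ordinary dockerd options chosen as examples, and the values are placeholders rather than recommended settings.

```python
import json


def render_daemon_config(settings: dict) -> str:
    """Serialize daemon settings as indented JSON suitable for pasting."""
    return json.dumps(settings, indent=2, sort_keys=True)


if __name__ == "__main__":
    example_settings = {
        "debug": True,           # verbose daemon logging
        "experimental": False,   # keep experimental features off
        "registry-mirrors": [],  # no mirrors configured in this example
    }
    print(render_daemon_config(example_settings))
```

Generating the text this way guarantees it is valid JSON, which avoids the common mistake of a trailing comma or single quotes in a hand-edited configuration.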

Command Line

On the Command Line page, you can specify whether or not to enable experimental features.

You can toggle the experimental features on and off in Docker Desktop. If you toggle the experimental features off, Docker Desktop uses the current generally available release of Docker Engine.

Experimental features

Experimental features provide early access to future product functionality. These features are intended for testing and feedback only as they may change between releases without warning or can be removed entirely from a future release. Experimental features must not be used in production environments. Docker does not offer support for experimental features.

For a list of current experimental features in the Docker CLI, see Docker CLI Experimental features.

Run docker version to verify whether you have enabled experimental features. Experimental mode is listed under Server data. If Experimental is true, then Docker is running in experimental mode, as shown here:
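The sample output is missing from this page. As a sketch of the check, the function below scans `docker version` text for the Experimental line in the Server section. The `SAMPLE_OUTPUT` string is an abbreviated, illustrative stand-in for real output, not a capture from an actual installation, and the helper name is hypothetical.

```python
# Abbreviated, illustrative stand-in for the text printed by `docker version`.
SAMPLE_OUTPUT = """\
Client: Docker Engine - Community
 Version:           x.y.z
 Experimental:      false

Server: Docker Engine - Community
 Engine:
  Version:          x.y.z
  Experimental:     true
"""


def server_is_experimental(version_output: str) -> bool:
    """Return True if the Server section reports Experimental as true."""
    in_server_section = False
    for line in version_output.splitlines():
        stripped = line.strip()
        if stripped.startswith("Server:"):
            in_server_section = True
        elif stripped.startswith("Client:"):
            in_server_section = False
        elif in_server_section and stripped.startswith("Experimental:"):
            return stripped.split(":", 1)[1].strip().lower() == "true"
    return False  # no Server section or no Experimental line found


if __name__ == "__main__":
    print(server_is_experimental(SAMPLE_OUTPUT))
```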

Kubernetes

Note

The Kubernetes tab is not available in Windows container mode.

Docker Desktop includes a standalone Kubernetes server that runs on your Windows machine, so that you can test deploying your Docker workloads on Kubernetes. To enable Kubernetes support and install a standalone instance of Kubernetes running as a Docker container, select Enable Kubernetes.

For more information about using the Kubernetes integration with Docker Desktop, see Deploy on Kubernetes.

Reset

The Restart Docker Desktop and Reset to factory defaults options are now available on the Troubleshoot menu. For information, see Logs and Troubleshooting.

Troubleshoot

Visit our Logs and Troubleshooting guide for more details.

Log on to our Docker Desktop for Windows forum to get help from the community, review current user topics, or join a discussion.

Log on to Docker Desktop for Windows issues on GitHub to report bugs or problems and review community reported issues.

For information about providing feedback on the documentation or updating it yourself, see Contribute to documentation.

Switch between Windows and Linux containers

From the Docker Desktop menu, you can toggle which daemon (Linux or Windows) the Docker CLI talks to. Select Switch to Windows containers to use Windows containers, or select Switch to Linux containers to use Linux containers (the default).

For more information on Windows containers, refer to the following documentation:

Microsoft documentation on Windows containers.

Build and Run Your First Windows Server Container (Blog Post) gives a quick tour of how to build and run native Docker Windows containers on Windows 10 and Windows Server 2016 evaluation releases.

Getting Started with Windows Containers (Lab) shows you how to use the MusicStore application with Windows containers. The MusicStore is a standard .NET application and, forked here to use containers, is a good example of a multi-container application.

To understand how to connect to Windows containers from the local host, see Limitations of Windows containers for localhost and published ports

Settings dialog changes with Windows containers

When you switch to Windows containers, the Settings dialog only shows those tabs that are active and apply to your Windows containers:

If you set proxies or daemon configuration in Windows containers mode, these apply only on Windows containers. If you switch back to Linux containers, proxies and daemon configurations return to what you had set for Linux containers. Your Windows container settings are retained and become available again when you switch back.

Dashboard

The Docker Desktop Dashboard enables you to interact with containers and applications and manage the lifecycle of your applications directly from your machine. The Dashboard UI shows all running, stopped, and started containers with their state. It provides an intuitive interface to perform common actions to inspect and manage containers and Docker Compose applications. For more information, see Docker Desktop Dashboard.

Docker Hub

Select Sign in / Create Docker ID from the Docker Desktop menu to access your Docker Hub account. Once logged in, you can access your Docker Hub repositories directly from the Docker Desktop menu.

For more information, refer to the following Docker Hub topics:

Two-factor authentication

Docker Desktop enables you to sign into Docker Hub using two-factor authentication. Two-factor authentication provides an extra layer of security when accessing your Docker Hub account.

You must enable two-factor authentication in Docker Hub before signing into your Docker Hub account through Docker Desktop. For instructions, see Enable two-factor authentication for Docker Hub.

After you have enabled two-factor authentication:

Go to the Docker Desktop menu and then select Sign in / Create Docker ID.

Enter your Docker ID and password and click Sign in.

After you have successfully signed in, Docker Desktop prompts you to enter the authentication code. Enter the six-digit code from your phone and then click Verify.

After you have successfully authenticated, you can access your organizations and repositories directly from the Docker Desktop menu.

Adding TLS certificates

You can add trusted Certificate Authorities (CAs) to your Docker daemon to verify registry server certificates, and client certificates, to authenticate to registries.

How do I add custom CA certificates?

Docker Desktop supports all trusted Certificate Authorities (CAs) (root or intermediate). Docker recognizes certs stored under Trust Root Certification Authorities or Intermediate Certification Authorities.

Docker Desktop creates a certificate bundle of all user-trusted CAs based on the Windows certificate store, and appends it to Moby trusted certificates. Therefore, if an enterprise SSL certificate is trusted by the user on the host, it is trusted by Docker Desktop.

To learn more about how to install a CA root certificate for the registry, see Verify repository client with certificates in the Docker Engine topics.

How do I add client certificates?

You can add your client certificates in ~/.docker/certs.d/<MyRegistry>:<Port>/client.cert and ~/.docker/certs.d/<MyRegistry>:<Port>/client.key. You do not need to push your certificates with git commands.

When the Docker Desktop application starts, it copies the ~/.docker/certs.d folder on your Windows system to the /etc/docker/certs.d directory on Moby (the Docker Desktop virtual machine running on Hyper-V).

You need to restart Docker Desktop after making any changes to the keychain or to the ~/.docker/certs.d directory in order for the changes to take effect.

The registry cannot be listed as an insecure registry (see Docker Daemon). Docker Desktop ignores certificates listed under insecure registries, and does not send client certificates. Commands like docker run that attempt to pull from the registry produce error messages on the command line, as well as on the registry.

To learn more about how to set the client TLS certificate for verification, see Verify repository client with certificates in the Docker Engine topics.

Where to go next

Try out the walkthrough at Get Started.

Dig in deeper with Docker Labs example walkthroughs and source code.

Refer to the Docker CLI Reference Guide.

0 notes

Text

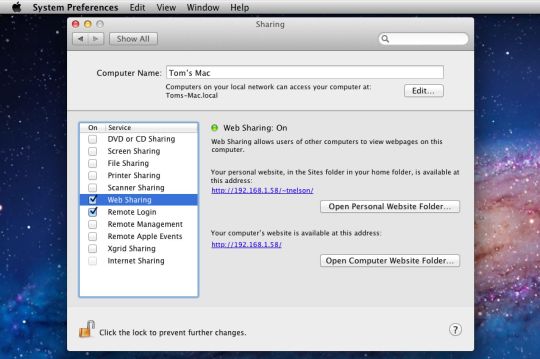

Webserver For Mac

Apache Web Server For Mac

Web Server For Microsoft Edge

Web Server For Mac Os X

Free Web Server For Mac

Web Server For Mac

Do you need web server software for your projects? Looking for something with outstanding performance that suits your requirements? A web server is a software program that serves content (HTML documents, images, and other web resources) using the HTTP protocol. It can serve both static and dynamic content. Check out these eight top-rated web servers and get to know their key features before deciding which would suit your project.

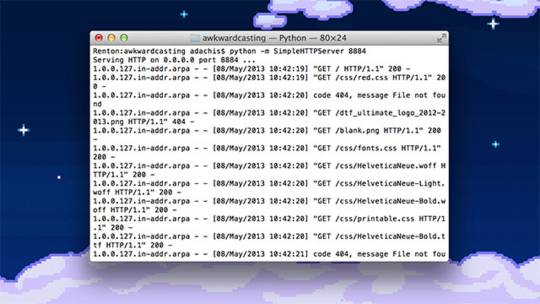

Web server software is software designed to be run and managed on a server machine. It makes the server's underlying computing resources available to applications through a collection of functions and services. This should fire up a webserver that listens on 10.0.1.1:8080 and serves files from the current directory ('.') – no PHP, ASP or any of that needed.

Apache

The Apache HTTP Server Project is an effort to develop and maintain an open-source HTTP server for modern operating systems, including UNIX and Windows. The goal of this project is to provide a secure, efficient, and extensible server that provides HTTP services in sync with the current HTTP standards.

Virgo Web Server

The Virgo Web Server is the runtime component of the Virgo Runtime Environment. It is a lightweight, modular, OSGi-based runtime that provides a complete packaged solution for developing, deploying, and managing enterprise applications. By leveraging several best-of-breed technologies and improving upon them, the VWS offers a compelling solution for developing and deploying enterprise applications.

Abyss Web Server

Abyss Web Server enables you to host your Web sites on your computer. It supports secure SSL/TLS connections (HTTPS) as well as a wide range of Web technologies. It can also run advanced PHP, Perl, Python, ASP, ASP.NET, and Ruby on Rails Web applications, which can be backed by databases such as MySQL, SQLite, MS SQL Server, MS Access, or Oracle.

Cherokee Web Server

All the configuration is done through Cherokee-Admin, a polished and powerful web interface. Cherokee supports the most widespread Web technologies: FastCGI, SCGI, PHP, uWSGI, SSI, CGI, LDAP, TLS/SSL, HTTP proxying, video streaming, content caching, traffic shaping, and so on. It is cross-platform and runs on Linux, Mac OS X, and more.

Raiden HTTP

RaidenHTTPD is a fully featured web server for the Windows platform. It's designed for everyone, whether novice or expert, who wants to have an interactive web page running within minutes. With RaidenHTTPD, everyone can be a web page performer from now on! With a web page served by RaidenHTTPD, you won't be surprised to see thousands of visitors to your web site every day, or even more.

KF Web Server

KF Web Server is a free HTTP server that can host an unlimited number of web sites. Its small size, low system requirements, and easy administration make it the perfect choice for both professional and amateur web developers alike.

Tornado Web Server

Tornado is a Python web framework and asynchronous networking library, originally developed at FriendFeed. By using non-blocking network I/O, Tornado can scale to tens of thousands of open connections, making it ideal for long polling, WebSockets, and other applications that require a long-lived connection to each user.

WampServer – Most Popular Software

This is the most popular web server of them all. WampServer is a Windows web development environment. It allows you to create web applications with Apache2, PHP, and a MySQL database. Alongside, PhpMyAdmin allows you to manage your databases easily. WampServer is available for free (under the GPL license) in two distinct versions: 32 and 64 bits.

What is a Web Server?

A web server is a computer system that works via HTTP, the protocol used to distribute information on the Web. The term can refer to the whole system, or specifically to the software that accepts and supervises HTTP requests. A web server, sometimes called an HTTP server or application server, is a program that serves content using the HTTP protocol. You can also see Log Analyser Software

This content is most often HTML documents, images, and other web resources, but it can include any type of file. The content served by the web server can be pre-existing (static content) or generated on the fly (dynamic content). In order to be considered a web server, an application must implement the HTTP protocol. Applications can be built on top of web servers. You can also see Proxy Server Software
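To make the "must implement the HTTP protocol" point concrete, here is a minimal sketch using Node's built-in http module. It is only an illustration, not one of the servers listed above; the handleRequest name and the page content are assumptions made up for this example.

```javascript
const http = require('http');

// Hypothetical request handler: answers every request with a small HTML page.
// The content is generated on the fly, so this is "dynamic" in the loosest sense.
function handleRequest(req, res) {
  res.writeHead(200, { 'Content-Type': 'text/html; charset=utf-8' });
  res.end('<html><body><h1>Hello from a tiny web server</h1></body></html>');
}

// createServer wires the handler to Node's HTTP implementation.
// Nothing listens yet; see the note below for starting it.
const server = http.createServer(handleRequest);
```

To actually serve requests you would call something like server.listen(8080) and then open http://localhost:8080/ in a browser; the port is an arbitrary choice.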

These 8 web servers are all powerful options that should serve you well in your applications. Try them out and have fun programming!

16 13 likes 31,605 views Last modified Jan 31, 2019 11:25 AM

Here is my definitive guide to getting a local web server running on OS X 10.14 “Mojave”. This is meant to be a development platform so that you can build and test your sites locally, then deploy to an internet server. This User Tip only contains instructions for configuring the Apache server, PHP module, and Perl module. I have another User Tip for installing and configuring MySQL and email servers.

Note: This user tip is specific to macOS 10.14 “Mojave”. Pay attention to your OS version. There have been significant changes since earlier versions of macOS.

Another note: These instructions apply to the client versions of OS X, not Server. Server does a few specific tricks really well and is a good choice for those. For things like database, web, and mail services, I have found it easier to just set up the client OS version manually.

Requirements:

Basic understanding of Terminal.app and how to run command-line programs.

Basic understanding of web servers.

Basic usage of vi. You can substitute nano if you want.

Optional: Xcode is required for adding PHP modules.

Lines in bold are what you will have to type in. Lines in bold courier should be typed at the Terminal. Replace <your short user name> with your short user name.

Here goes... Enjoy!

To get started, edit the Apache configuration file as root:

sudo vi /etc/apache2/httpd.conf

Enable PHP by uncommenting line 177, changing:

#LoadModule php7_module libexec/apache2/libphp7.so

to

LoadModule php7_module libexec/apache2/libphp7.so

(If you aren't familiar with vi, go to line 177 by typing '177G' (without the quotes). Then just press 'x' over the '#' character to delete it. Then type ':w!' to save, or just 'ZZ' to save and quit. Don't do that yet though. More changes are still needed.)

If you want to run Perl scripts, you will have to do something similar:

Enable Perl by uncommenting line 178, changing:

#LoadModule perl_module libexec/apache2/mod_perl.so

to

LoadModule perl_module libexec/apache2/mod_perl.so

Enable personal websites by uncommenting the following at line 174:

#LoadModule userdir_module libexec/apache2/mod_userdir.so

to

LoadModule userdir_module libexec/apache2/mod_userdir.so

and do the same at line 511:

#Include /private/etc/apache2/extra/httpd-userdir.conf

to

Include /private/etc/apache2/extra/httpd-userdir.conf

Now save and quit.

Open the file you just enabled above with:

sudo vi /etc/apache2/extra/httpd-userdir.conf

and uncomment the following at line 16:

#Include /private/etc/apache2/users/*.conf

to

Include /private/etc/apache2/users/*.conf

Save and exit.

Lion and later versions no longer create personal web sites by default. If you already had a Sites folder in Snow Leopard, it should still be there. To create one manually, enter the following:

mkdir ~/Sites

echo '<html><body><h1>My site works</h1></body></html>' > ~/Sites/index.html.en

While you are in /etc/apache2, double-check to make sure you have a user config file. It should exist at the path: /etc/apache2/users/<your short user name>.conf.

That file may not exist and if you upgrade from an older version, you may still not have it. It does appear to be created when you create a new user. If that file doesn't exist, you will need to create it with:

sudo vi /etc/apache2/users/<your short user name>.conf

Use the following as the content:

<Directory '/Users/<your short user name>/Sites/'>

AddLanguage en .en

AddHandler perl-script .pl

PerlHandler ModPerl::Registry

Options Indexes MultiViews FollowSymLinks ExecCGI

AllowOverride None

Require host localhost

</Directory>

Now you are ready to turn on Apache itself. But first, do a sanity check. Sometimes copying and pasting from an internet forum can insert invisible, invalid characters into config files. Check your configuration by running the following command in the Terminal:

apachectl configtest

If this command returns 'Syntax OK' then you are ready to go. It may also print a warning saying 'httpd: Could not reliably determine the server's fully qualified domain name'. You could fix this by setting the ServerName directive in /etc/apache2/httpd.conf and adding a matching entry into /etc/hosts. But for a development server, you don't need to do anything. You can just ignore that warning. You can safely ignore other warnings too.

Turn on the Apache httpd service by running the following command in the Terminal:

sudo launchctl load -w /System/Library/LaunchDaemons/org.apache.httpd.plist

In Safari, navigate to your web site with the following address:

http://localhost/

It should say:

It works!

Now try your user home directory:

http://localhost/~<your short user name>

It should say:

My site works

Now try PHP. Create a PHP info file with:

echo '<?php echo phpinfo(); ?>' > ~/Sites/info.php

And test it by entering the following into Safari's address bar:

http://localhost/~<your short user name>/info.php

You should see your PHP configuration information.

To test Perl, try something similar. Create a Perl test file with:

echo 'print $ENV{MOD_PERL} . qq{\n};' > ~/Sites/info.pl

And test it by entering the following into Safari's address bar:

http://localhost/~<your short user name>/info.pl

You should see the string 'mod_perl/2.0.9'.

If you want to setup MySQL, see my User Tip on Installing MySQL.

If you want to add modules to PHP, I suggest the following site. I can't explain it any better.

If you want to make further changes to your Apache system or user config files, you will need to restart the Apache server with:

sudo apachectl graceful

0 notes

Text

Going Jamstack with React, Serverless, and Airtable

The best way to learn is to build. Let’s learn about this hot new buzzword, Jamstack, by building a site with React, Netlify (Serverless) Functions, and Airtable. One of the ingredients of Jamstack is static hosting, but that doesn’t mean everything on the site has to be static. In fact, we’re going to build an app with full-on CRUD capability, just like a tutorial for any web technology with more traditional server-side access might.

Why these technologies, you ask?

You might already know this, but the “JAM” in Jamstack stands for JavaScript, APIs, and Markup. These technologies individually are not new, so the Jamstack is really just a new and creative way to combine them. You can read more about it over at the Jamstack site.

One of the most important benefits of Jamstack is ease of deployment and hosting, which heavily influence the technologies we are using. By incorporating Netlify Functions (for backend CRUD operations with Airtable), we will be able to deploy our full-stack application to Netlify. The simplicity of this process is the beauty of the Jamstack.

As for the database, I chose Airtable because I wanted something that was easy to get started with. I also didn’t want to get bogged down in technical database details, so Airtable fits perfectly. Here are a few of the benefits of Airtable:

You don’t have to deploy or host a database yourself

It comes with an Excel-like GUI for viewing and editing data

There’s a nice JavaScript SDK

What we’re building

For context going forward, we are going to build an app that you can use to track online courses that you want to take. Personally, I take lots of online courses, and sometimes it’s hard to keep up with the ones in my backlog. This app will let us track those courses, similar to a Netflix queue.

Source Code

One of the reasons I take lots of online courses is because I make courses. In fact, I have a new one available where you can learn how to build secure and production-ready Jamstack applications using React and Netlify (Serverless) Functions. We’ll cover authentication, data storage in Airtable, Styled Components, Continuous Integration with Netlify, and more! Check it out →

Airtable setup

Let me start by clarifying that Airtable calls their databases “bases.” So, to get started with Airtable, we’ll need to do a couple of things.

Sign up for a free account

Create a new “base”

Define a new table for storing courses

Next, let’s create a new database. We’ll log into Airtable, click on “Add a Base” and choose the “Start From Scratch” option. I named my new base “JAMstack Demos” so that I can use it for different projects in the future.

Next, let’s click on the base to open it.

You’ll notice that this looks very similar to an Excel or Google Sheets document. This is really nice for being able to work with data right inside of the dashboard. There are a few columns already created, but we can add our own. Here are the columns we need and their types:

name (single line text)

link (single line text)

tags (multiple select)

purchased (checkbox)

We should add a few tags to the tags column while we’re at it. I added “node,” “react,” “jamstack,” and “javascript” as a start. Feel free to add any tags that make sense for the types of classes you might be interested in.

I also added a few rows of data in the name column based on my favorite online courses:

Build 20 React Apps

Advanced React Security Patterns

React and Serverless

The last thing to do is rename the table itself. It’s called “Table 1” by default. I renamed it to “courses” instead.

Locating Airtable credentials

Before we get into writing code, there are a couple of pieces of information we need to get from Airtable. The first is your API Key. The easiest way to get this is to go to your account page and look in the “Overview” section.

Next, we need the ID of the base we just created. I would recommend heading to the Airtable API page because you’ll see a list of your bases. Click on the base you just created, and you should see the base ID listed. The documentation for the Airtable API is really handy and has more detailed instructions for finding the ID of a base.

Lastly, we need the table’s name. Again, I named mine “courses” but use whatever you named yours if it’s different.

Project setup

To help speed things along, I’ve created a starter project for us in the main repository. You’ll need to do a few things to follow along from here:

Fork the repository by clicking the fork button

Clone the new repository locally

Check out the starter branch with git checkout starter

There are lots of files already there. The majority of the files come from a standard create-react-app application with a few exceptions. There is also a functions directory which will host all of our serverless functions. Lastly, there’s a netlify.toml configuration file that tells Netlify where our serverless functions live. Also in this config is a redirect that simplifies the path we use to call our functions. More on this soon.

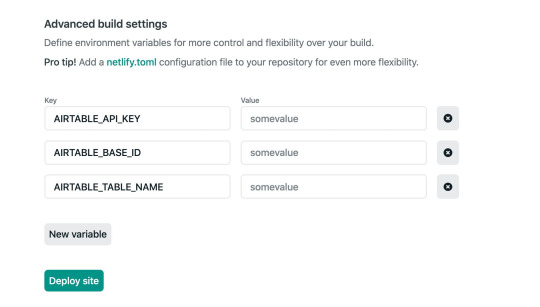

The last piece of the setup is to incorporate environment variables that we can use in our serverless functions. To do this install the dotenv package.

npm install dotenv

Then, create a .env file in the root of the repository with the following. Make sure to use your own API key, base ID, and table name that you found earlier.

AIRTABLE_API_KEY=<YOUR_API_KEY>
AIRTABLE_BASE_ID=<YOUR_BASE_ID>
AIRTABLE_TABLE_NAME=<YOUR_TABLE_NAME>

Now let’s write some code!

Setting up serverless functions

To create serverless functions with Netlify, we need to create a JavaScript file inside of our /functions directory. There are already some files included in this starter directory. Let’s look in the courses.js file first.

const formattedReturn = require('./formattedReturn');
const getCourses = require('./getCourses');
const createCourse = require('./createCourse');
const deleteCourse = require('./deleteCourse');
const updateCourse = require('./updateCourse');
exports.handler = async (event) => {
  return formattedReturn(200, 'Hello World');
};

The core part of a serverless function is the exports.handler function. This is where we handle the incoming request and respond to it. In this case, we are accepting an event parameter which we will use in just a moment.

We are returning a call inside the handler to the formattedReturn function, which makes it a bit simpler to return a status and body data. Here’s what that function looks like for reference.

module.exports = (statusCode, body) => {
  return {
    statusCode,
    body: JSON.stringify(body),
  };
};
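As a quick illustration of what the helper produces, here is a small sketch. The helper is repeated inline so the snippet runs on its own, and the sample record (rec123) is made up for the example. The body is stringified because a function response carries its body as a string.

```javascript
// Same helper as in the starter project, repeated here so the snippet is self-contained.
const formattedReturn = (statusCode, body) => ({
  statusCode,
  body: JSON.stringify(body),
});

// Hypothetical sample payload, for illustration only.
const response = formattedReturn(200, [{ id: 'rec123', name: 'React and Serverless' }]);

console.log(response.statusCode); // 200
console.log(typeof response.body); // string
console.log(JSON.parse(response.body)[0].name); // React and Serverless
```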

Notice also that we are importing several helper functions to handle the interaction with Airtable. We can decide which one of these to call based on the HTTP method of the incoming request.

HTTP GET → getCourses

HTTP POST → createCourse

HTTP PUT → updateCourse

HTTP DELETE → deleteCourse

Let’s update this function to call the appropriate helper function based on the HTTP method in the event parameter. If the request doesn’t match one of the methods we are expecting, we can return a 405 status code (method not allowed).

exports.handler = async (event) => {
  if (event.httpMethod === 'GET') {
    return await getCourses(event);
  } else if (event.httpMethod === 'POST') {
    return await createCourse(event);
  } else if (event.httpMethod === 'PUT') {
    return await updateCourse(event);
  } else if (event.httpMethod === 'DELETE') {
    return await deleteCourse(event);
  } else {
    return formattedReturn(405, {});
  }
};
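If the if/else chain feels repetitive, one possible alternative is a lookup table keyed by HTTP method. This is just a sketch of that idea, not what the starter project does. The stub helpers below stand in for the real getCourses, createCourse, updateCourse, and deleteCourse so the snippet can run without Airtable.

```javascript
const formattedReturn = (statusCode, body) => ({ statusCode, body: JSON.stringify(body) });

// Stand-in helpers (assumptions for this sketch); the real ones talk to Airtable.
const stub = (name) => async () => formattedReturn(200, { calledHelper: name });

// Map each supported HTTP method to its helper.
const routes = {
  GET: stub('getCourses'),
  POST: stub('createCourse'),
  PUT: stub('updateCourse'),
  DELETE: stub('deleteCourse'),
};

const handler = async (event) => {
  // Only look at the table's own keys, so odd method names cannot hit inherited properties.
  const known = Object.prototype.hasOwnProperty.call(routes, event.httpMethod);
  // Anything we do not recognize gets a 405 (method not allowed).
  return known ? routes[event.httpMethod](event) : formattedReturn(405, {});
};

handler({ httpMethod: 'PATCH' }).then((res) => console.log(res.statusCode)); // 405
```

The behavior matches the chain above; the table just makes it a one-line change to support another method later.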

Updating the Airtable configuration file

Since we are going to be interacting with Airtable in each of the different helper files, let’s configure it once and reuse it. Open the airtable.js file.

In this file, we want to get a reference to the courses table we created earlier. To do that, we create a reference to our Airtable base using the API key and the base ID. Then, we use the base to get a reference to the table and export it.

require('dotenv').config();
var Airtable = require('airtable');
var base = new Airtable({ apiKey: process.env.AIRTABLE_API_KEY }).base(
  process.env.AIRTABLE_BASE_ID
);
const table = base(process.env.AIRTABLE_TABLE_NAME);
module.exports = { table };
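A missing or misspelled environment variable is an easy mistake to make here, and the resulting error can be hard to read. As an optional safeguard, a check along these lines could run before the Airtable reference is created. The findMissingVars helper is hypothetical and not part of the starter project; only the three variable names come from the .env file above.

```javascript
// Names match the .env file described earlier.
const REQUIRED_VARS = ['AIRTABLE_API_KEY', 'AIRTABLE_BASE_ID', 'AIRTABLE_TABLE_NAME'];

// Hypothetical helper: returns the names of required variables that are
// missing or empty in the given environment object.
function findMissingVars(env) {
  return REQUIRED_VARS.filter((name) => !env[name]);
}

const missing = findMissingVars(process.env);
if (missing.length > 0) {
  console.warn(`Missing environment variables: ${missing.join(', ')}`);
}
```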

Getting courses

With the Airtable config in place, we can now open up the getCourses.js file and retrieve courses from our table by calling table.select().firstPage(). The Airtable API uses pagination so, in this case, we are specifying that we want the first page of records (up to 100 records by default).

const courses = await table.select().firstPage();
return formattedReturn(200, courses);

Just like with any async/await call, we need to handle errors. Let’s surround this snippet with a try/catch.

try {
  const courses = await table.select().firstPage();
  return formattedReturn(200, courses);
} catch (err) {
  console.error(err);
  return formattedReturn(500, {});
}

Airtable returns a lot of extra information in its records. I prefer to simplify these records down to the record ID and the values for each of the table columns we created above. These values are found in the fields property. To do this, I used an Array map to format the data the way I want.

const { table } = require('./airtable');
const formattedReturn = require('./formattedReturn');
module.exports = async (event) => {
  try {
    const courses = await table.select().firstPage();
    const formattedCourses = courses.map((course) => ({
      id: course.id,
      ...course.fields,
    }));
    return formattedReturn(200, formattedCourses);
  } catch (err) {
    console.error(err);
    return formattedReturn(500, {});
  }
};
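To see what the map step does, here is a sketch run against made-up records. The record shape (an id, a fields object, plus extra properties such as _rawJson) is an assumption for illustration; the real objects come from the Airtable SDK.

```javascript
// Hypothetical records, roughly shaped like what the SDK hands back.
const courses = [
  { id: 'recA1', fields: { name: 'Build 20 React Apps', purchased: true }, _rawJson: {} },
  { id: 'recB2', fields: { name: 'React and Serverless', tags: ['react', 'jamstack'] }, _rawJson: {} },
];

// Keep only the record ID and flatten the column values next to it.
const formattedCourses = courses.map((course) => ({
  id: course.id,
  ...course.fields,
}));

console.log(formattedCourses);
```

Notice that the second sample record has no purchased key at all. Airtable generally leaves empty fields out (an unchecked checkbox, for example), so the front end should treat a missing purchased value the same as false.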

How do we test this out? Well, the netlify-cli provides us a netlify dev command to run our serverless functions (and our front-end) locally. First, install the CLI:

npm install -g netlify-cli

Then, run the netlify dev command inside of the directory.

This beautiful command does a few things for us:

Runs the serverless functions

Runs a web server for your site

Creates a proxy for front end and serverless functions to talk to each other on Port 8888.

Let’s open up the following URL to see if this works:

We are able to use /api/* for our API because of the redirect configuration in the netlify.toml file.

If successful, we should see our data displayed in the browser.

Creating courses

Let’s add the functionality to create a course by opening up the createCourse.js file. We need to grab the properties from the incoming POST body and use them to create a new record by calling table.create().

The incoming event.body comes in as a regular string, which means we need to parse it to get a JavaScript object.

const fields = JSON.parse(event.body);

Then, we use those fields to create a new course. Notice that the create() function accepts an array which allows us to create multiple records at once.

const createdCourse = await table.create([{ fields }]);

Then, we can return the createdCourse:

return formattedReturn(200, createdCourse);

And, of course, we should wrap things with a try/catch:

const { table } = require('./airtable');
const formattedReturn = require('./formattedReturn');
module.exports = async (event) => {
  const fields = JSON.parse(event.body);
  try {
    const createdCourse = await table.create([{ fields }]);
    return formattedReturn(200, createdCourse);
  } catch (err) {
    console.error(err);
    return formattedReturn(500, {});
  }
};
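One thing to be aware of: JSON.parse sits outside the try/catch, so a malformed request body would throw before we get a chance to respond. A possible refinement is to validate the body first and answer with a 400. In this sketch, parseBody is a hypothetical helper and createRecord stands in for a call like table.create([{ fields }]), so the snippet can run without Airtable.

```javascript
const formattedReturn = (statusCode, body) => ({ statusCode, body: JSON.stringify(body) });

// Hypothetical helper: returns the parsed object, or null if the body is
// missing, malformed, or not a plain JSON object.
function parseBody(event) {
  try {
    const parsed = JSON.parse(event.body);
    const isObject = parsed !== null && typeof parsed === 'object' && !Array.isArray(parsed);
    return isObject ? parsed : null;
  } catch (err) {
    return null;
  }
}

// createRecord is injected for the sketch; in the real file it would be the Airtable call.
const createCourse = async (event, createRecord) => {
  const fields = parseBody(event);
  if (!fields) {
    return formattedReturn(400, { error: 'Invalid JSON body' });
  }
  try {
    const createdCourse = await createRecord(fields);
    return formattedReturn(200, createdCourse);
  } catch (err) {
    console.error(err);
    return formattedReturn(500, {});
  }
};
```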

Since we can’t perform a POST, PUT, or DELETE directly in the browser web address (like we did for the GET), we need to use a separate tool for testing our endpoints from now on. I prefer Postman, but I’ve heard good things about Insomnia as well.

Inside of Postman, I need the following configuration.

url: localhost:8888/api/courses

method: POST

body: JSON object with name, link, and tags

After running the request, we should see the new course record is returned.

We can also check the Airtable GUI to see the new record.

Tip: Copy and paste the ID from the new record to use in the next two functions.

Updating courses

Now, let’s turn to updating an existing course. From the incoming request body, we need the id of the record as well as the other field values.

We can specifically grab the id value using object destructuring, like so:

const {id} = JSON.parse(event.body);

Then, we can use the rest syntax (the same three dots as the spread operator) to grab the rest of the values and assign them to a variable called fields:

const {id, ...fields} = JSON.parse(event.body);

From there, we call the update() function which takes an array of objects (each with an id and fields property) to be updated:

const updatedCourse = await table.update([{id, fields}]);

Here’s the full file with all that together:

const { table } = require('./airtable');
const formattedReturn = require('./formattedReturn');
module.exports = async (event) => {
  const { id, ...fields } = JSON.parse(event.body);
  try {
    const updatedCourse = await table.update([{ id, fields }]);
    return formattedReturn(200, updatedCourse);
  } catch (err) {
    console.error(err);
    return formattedReturn(500, {});
  }
};
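Here is a small sketch of what that destructuring does to a request body. The event and its values (rec123 and so on) are made up for the example.

```javascript
// Hypothetical request body, as a PUT from Postman might send it.
const event = {
  body: JSON.stringify({
    id: 'rec123',
    name: 'React and Serverless Updated!!!',
    purchased: true,
  }),
};

// id is pulled out on its own; everything else lands in fields.
const { id, ...fields } = JSON.parse(event.body);

console.log(id); // rec123
console.log(fields); // { name: 'React and Serverless Updated!!!', purchased: true }

// This is the array shape that gets passed to update().
const updates = [{ id, fields }];
```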

To test this out, we’ll turn back to Postman for the PUT request:

url: localhost:8888/api/courses

method: PUT

body: JSON object with id (the id from the course we just created) and the fields we want to update (name, link, and tags)

I decided to append “Updated!!!” to the name of a course once it’s been updated.

We can also see the change in the Airtable GUI.

Deleting courses

Lastly, we need to add delete functionality. Open the deleteCourse.js file. We will need to get the id from the request body and use it to call the destroy() function.

const { id } = JSON.parse(event.body);
const deletedCourse = await table.destroy(id);

The final file looks like this:

const { table } = require('./airtable');
const formattedReturn = require('./formattedReturn');
module.exports = async (event) => {
  const { id } = JSON.parse(event.body);
  try {
    const deletedCourse = await table.destroy(id);
    return formattedReturn(200, deletedCourse);
  } catch (err) {
    console.error(err);
    return formattedReturn(500, {});
  }
};

Here’s the configuration for the Delete request in Postman.

url: localhost:8888/api/courses

method: DELETE

body: JSON object with an id (the same id from the course we just updated)

And, of course, we can double-check that the record was removed by looking at the Airtable GUI.

Displaying a list of courses in React

Whew, we have built our entire back end! Now, let’s move on to the front end. The majority of the code is already written. We just need to write the parts that interact with our serverless functions. Let’s start by displaying a list of courses.

Open the App.js file and find the loadCourses function. Inside, we need to make a call to our serverless function to retrieve the list of courses. For this app, we are going to make an HTTP request using fetch, which is built right in.

Thanks to the netlify dev command, we can make our request using a relative path to the endpoint. The beautiful thing is that this means we don’t need to make any changes after deploying our application!

const res = await fetch('/api/courses');
const courses = await res.json();

Then, store the list of courses in the courses state variable.

setCourses(courses)

Put it all together and wrap it with a try/catch:

const loadCourses = async () => {
  try {
    const res = await fetch('/api/courses');
    const courses = await res.json();
    setCourses(courses);
  } catch (error) {
    console.error(error);
  }
};

Open up localhost:8888 in the browser and we should see our list of courses.
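One caveat worth knowing: fetch only rejects on network failures, so a 500 from our function would not land in the catch block by itself. A possible refinement is to check res.ok before reading the body. In this sketch, fetchCourses is a hypothetical helper, and the fetch implementation is passed in as a parameter purely so the example can run outside the browser; in App.js it would be the global fetch.

```javascript
// fetchImpl is injected for the sketch; in the browser this is just fetch.
const fetchCourses = async (fetchImpl) => {
  const res = await fetchImpl('/api/courses');
  // Turn HTTP error statuses into thrown errors so try/catch can see them.
  if (!res.ok) {
    throw new Error(`Request failed with status ${res.status}`);
  }
  return res.json();
};

// Fake failing response, for illustration only.
const fakeFetch = async () => ({ ok: false, status: 500, json: async () => ({}) });

fetchCourses(fakeFetch).catch((err) => console.error(err.message)); // Request failed with status 500
```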

Adding courses in React

Now that we have the ability to view our courses, we need the functionality to create new courses. Open up the CourseForm.js file and look for the submitCourse function. Here, we’ll need to make a POST request to the API and send the inputs from the form in the body.

The JavaScript Fetch API makes GET requests by default, so to send a POST, we need to pass a configuration object with the request. This options object will have these two properties.

method → POST

body → a stringified version of the input data

await fetch('/api/courses', {
  method: 'POST',
  body: JSON.stringify({
    name,
    link,
    tags,
  }),
});

Then, surround the call with try/catch and the entire function looks like this:

const submitCourse = async (e) => {
  e.preventDefault();
  try {
    await fetch('/api/courses', {
      method: 'POST',
      body: JSON.stringify({
        name,
        link,
        tags,
      }),
    });
    resetForm();
    courseAdded();
  } catch (err) {
    console.error(err);
  }
};

Test this out in the browser. Fill in the form and submit it.

After submitting the form, the form should be reset, and the list of courses should update with the newly added course.

Updating purchased courses in React

The list of courses is split into two different sections: one with courses that have been purchased and one with courses that haven’t been purchased. We can add the functionality to mark a course “purchased” so it appears in the right section. To do this, we’ll send a PUT request to the API.

Open the Course.js file and look for the markCoursePurchased function. In here, we’ll make the PUT request and include both the id of the course as well as the properties of the course with the purchased property set to true. We can do this by passing in all of the properties of the course with the spread operator and then overriding the purchased property to be true.

const markCoursePurchased = async () => {
  try {
    await fetch('/api/courses', {
      method: 'PUT',
      body: JSON.stringify({ ...course, purchased: true }),
    });
    refreshCourses();
  } catch (err) {
    console.error(err);
  }
};
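To make the spread-and-override step concrete, here is a tiny sketch with a made-up course object. Because the course already carries its id, the resulting body has everything the update function expects.

```javascript
// Hypothetical course, shaped like the objects returned by our GET endpoint.
const course = {
  id: 'rec123',
  name: 'Build 20 React Apps',
  link: 'https://example.com',
  purchased: false,
};

// Copy every property, then override purchased. The original object is untouched.
const body = JSON.stringify({ ...course, purchased: true });

console.log(body);
// {"id":"rec123","name":"Build 20 React Apps","link":"https://example.com","purchased":true}
console.log(course.purchased); // false
```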

To test this out, click the button to mark one of the courses as purchased and the list of courses should update to display the course in the purchased section.

Deleting courses in React

And, following with our CRUD model, we will add the ability to delete courses. To do this, locate the deleteCourse function in the Course.js file we just edited. We will need to make a DELETE request to the API and pass along the id of the course we want to delete.

const deleteCourse = async () => { try { await fetch('/api/courses', { method: 'DELETE', body: JSON.stringify({ id: course.id }), }); refreshCourses(); } catch (err) { console.error(err); } };

To test this out, click the “Delete” button next to the course and the course should disappear from the list. We can also verify it is gone completely by checking the Airtable dashboard.
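The POST, PUT, and DELETE calls all share the same shape: a method plus a stringified body. As a rough sketch, that shared part could be pulled into one function. courseRequestOptions is a hypothetical name, and the id value is a placeholder.

```javascript
// Hypothetical helper: builds the options object that is passed to fetch().
function courseRequestOptions(method, payload) {
  return { method, body: JSON.stringify(payload) };
}

// Example: the options for deleting a course with a placeholder id.
const deleteOptions = courseRequestOptions('DELETE', { id: 'rec123' });
console.log(deleteOptions);
```

This is optional tidying; the inline versions shown above behave the same way.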

Deploying to Netlify

Now that we have all of the CRUD functionality we need on the front and back end, it’s time to deploy this thing to Netlify. Hopefully, you’re as excited as I am about how easy this is. Just make sure everything is pushed up to GitHub before we move into deployment.

If you don’t have a Netlify account, you’ll need to create one (like Airtable, it’s free). Then, in the dashboard, click the “New site from Git” option. Select GitHub, authenticate it, then select the project repo.

Next, we need to tell Netlify which branch to deploy from. We have two options here.

Use the starter branch that we’ve been working in

Choose the master branch with the final version of the code

For now, I would choose the starter branch to ensure that the code works. Then, we need to choose a command that builds the app and the publish directory that serves it.

Build command: npm run build

Publish directory: build

Netlify recently shipped an update that treats React warnings as errors during the build process, which may cause the build to fail. I have updated the build command to CI= npm run build to account for this.

Lastly, click on the “Show Advanced” button, and add the environment variables. These should be exactly as they were in the local .env that we created.

The site should automatically start building.

We can click on the “Deploys” tab in Netlify and track the build progress, although it does go pretty fast. When it is complete, our shiny new app is deployed for the world to see!

Welcome to the Jamstack!

The Jamstack is a fun new place to be. I love it because it makes building and hosting fully-functional, full-stack applications like this pretty trivial. I love that Jamstack makes us mighty, all-powerful front-end developers!

I hope you see the same power and ease with the combination of technology we used here. Again, Jamstack doesn’t require that we use Airtable, React or Netlify, but we can, and they’re all freely available and easy to set up. Check out Chris’ serverless site for a whole slew of other services, resources, and ideas for working in the Jamstack. And feel free to drop questions and feedback in the comments here!

The post Going Jamstack with React, Serverless, and Airtable appeared first on CSS-Tricks.


Going Jamstack with React, Serverless, and Airtable published first on https://deskbysnafu.tumblr.com/

0 notes

Text

Post Production Editing Timelapse

OpenDrives is the first to admit that expensive all-flash drive technology is not always the best solution for higher resolution, large capacity workflows. There are ways to ensure that a film’s sound is diligently handled, while working within a budget. This often comes from a transparent discussion at the onset of the project about a director’s expectations versus the reality of the budget/schedule.

What is pre and post production?

“That's a wrap!” When a movie director makes the call, cameras stop rolling, and a film is ready to move into its final phase: postproduction. This is the final step in taking a story from script to screen, and the stage when a film comes to life.

A lot has to happen between the time when the director yells “cut” and the editors begin their work. Raw video also takes significantly more processing power in order to view, edit, or transcode. With a few exceptions, raw video is almost always much larger than non-raw video. That means more memory cards, more hard drives, and more time spent copying files.

Companies will hire runners who have experience in post or who wish to progress their career in this field. The hours will be long and the list of tasks unrelenting, so you need to want to work in some aspect of post to get the most out of the junior roles.

Come see us at #NAB2019. Schedule a demo and when Strawberry knocks your socks off, we can help you out with our show goodie. We are co-exhibiting with #ToolsOnAir in the South Lower Hall SL14813. https://t.co/WSoJb3hJGR pic.twitter.com/mvqvilAIb7

— Projective Technology (@ProjectiveTech) April 1, 2019

The VFX editor will then create a proxy with the same codec that’s being used for the rest of editorial and drop it back into the sequence to make sure that it works as planned. When a VFX shot is completed and signed off on, the VFX house will render out the finished version of the shot to a high-quality Mezzanine codec or to an uncompressed format and send it back to the editorial team. Animatics – A group of storyboards laid out on a timeline to give a sense of pace and timing. Helpful in lengthier sequences, they allow the editor to work with music or voice-over to help create the flow of the sequence(s) prior to commencing computer animation.

Fortunately, plenty of marketers and production managers have already gone through the steps and learned from their mistakes. That's why we decided to put together a list of 20 video pre-production tips that'll help save you a lot of time, money, and hassle. He is also editor-in-chief of the GatherContent blog, a go-to resource on a range of content strategy topics. Rob is a journalism graduate, ex-BBC audience researcher, and former head of content and project manager at a branding and design agency. Online collaboration tools, like Trello, can help teams track their workflows, possibly using a built-in calendar to give a graphical view of the editorial calendar.

Is editing post production?

In the industry of film, videography, and photography, post production editing, or simply post-production, is the third and final step in creating a film. It follows pre-production and production and refers to the work, usually editing, that needs to be completed after shooting the film.

In addition to using a structure map similarly to how you would use a wall of index cards to track your story, you can also use it as a way to track your editorial progress through your first cut using colored labels.

Joined by our primary VFX supervisor Ben Kadie, we developed a plan to address the impact of VFX on 100-plus shots in our film.

Any number of workspaces can be created and can be assigned to individuals or entire teams.

But you will have the ability to leave time-stamped feedback, which makes it much easier for video professionals to interpret and implement requested changes.

Since they are the final stage of production, they are under huge pressure to make deadlines on time. Therefore, this can be a very stressful job and many may have to work nights or weekends close to deadlines. If you work as a post producer, you may spend significant amounts of time working on the computer in a dark room.

XML is a much more flexible format, and so it’s possible to include much more data in an XML file than in an EDL file, but this actually creates another potential issue. Because XML is so flexible, it’s possible for different tools to create XML files in different ways. An XML exported from one piece of software is not guaranteed to work in another.

mediaCARD Densu X

What are the 8 elements of film?

Post-Production is the stage after production when the filming is wrapped and the editing of the visual and audio materials begins. Post-Production refers to all of the tasks associated with cutting raw footage, assembling that footage, adding music, dubbing, sound effects, just to name a few.

I understand that I will pay an additional $1.00 per month for bank processing fees included in the dues amounts in this application. I can at any time resign from PPA and stop charges being made to my credit card. If PPA is unable to successfully process my monthly payment, my membership will be considered void, and I am required to pay the balance in full to reinstate my membership.

Top 5 Questions About Working in Post-Production

VEGAS Pro is non-linear, so you don’t have to edit your project in sequence from beginning to end. If you decide to work on scenes or sections separately, nested timelines make it simple to work on individual scenes and then bring your entire project together.

When working on your CV check it through (or ask someone else to) to see it reads well and is correctly formatted. Correct spelling and grammar are crucial; you have to stand out from the hundreds of other people applying for the role so silly errors will mean your CV automatically gets disregarded. Post-production companies are always looking for keen new entrants to take on the role of runner. If you look at the larger companies, the turnover of staff can be very high, not because people drop out but because progression can be quick for the right candidate. However, you should know if your dream job is working in production then working in post production isn’t for you.

Purchasers of the book can download Chapter 10:

Time Savers in the Title Tool. See page 2 of the book for details.

Going to IBC? Visit us at Hall 3 A.28 and see how Strawberry Skies will dramatically improve how media productions create and share media content! https://t.co/utOdCiSYAw pic.twitter.com/7x8FhVZ9GL

— Projective Technology (@ProjectiveTech) September 5, 2019

As with any project, having a workflow can help you manage resources effectively, invest your time efficiently, and keep different teams and individual contributors on task—even if you’re working remotely. Managing video production requires input from many different teams, creatives, and contributors—and that can get messy fast.

ACES aims to solve that problem by creating a single, standardized workflow that can work for everyone who really cares about preserving all of their image data through the entire image pipeline. StudioBinder is a film production software built out of Santa Monica, CA. Our mission is to make the production experience more streamlined, efficient, and pleasant.

0 notes

Text

Don't Downplay the Advantage of Advertising on Bing

If your keyword targeting isn't optimized, you'll want to do some keyword research to identify and then target the types of keywords that will maximize the clicks and conversions for your Bing text ads. Luckily, Bing has its own keyword research tool that you can access under the "Tools" menu from your Bing Ads control panel.

From there, you can check out additional keywords that your competitors might be using (by browsing a destination URL) and build from there. Any search marketer can tell you that some keywords can drag down the efficiency of your campaigns; specific searches may trigger your Bing ads yet provide no conversions or clicks.

The good news is that if you have brought over keyword targeting from Google, many of your negatives should already be imported. If you want to include additional negative keywords at the campaign or ad group level, you can add them by picking Negative Keywords within the Keywords tab from the Campaigns page.

This desktop application can help you navigate and handle all of your Bing efforts much faster than from an internet browser window. According to Bing, here's why you should be using Bing Ads Editor: Start quickly. Transfer your account information with Google Import straight into Bing Ads Editor. Work faster. Sync your campaigns and accounts, make modifications or additions offline, and then publish your revisions with one click.

Directly create campaigns, edit ads, and manage countless keywords at once. Plus, efficiently manage URLs, ad copy, budgets, bids, targeting and ad extensions. Multiple-account management. Download multiple accounts at the same time, copy and paste from one account to another, and carry out several Google Imports all at once. Research new keywords and bids.

You can download Bing Ads Editor for Windows or Mac here. Want to learn more? Have a look at our Q4 guide on Bing Shopping Ads here.