#I just hate software that's not backwards compatible.


Text

should be illegal for apps/software to autoupdate beyond the compatibility of the system

#looking at you discord#also like...everything should be backwards compatible fight me#I don't want to update my system. In fact I can't actually update it any further.#that shouldn't mean I can no longer use discord on my laptop#I just hate software that's not backwards compatible.#Oh it doesn't work on older operating systems? then you're bad at a significant part of your job sorry bye

7 notes


Text

I’m pretty sure the people bitching about not giving money to tumblr are the same ones who complain when AO3 or wikipedia ask for donations, so I’m just gonna clarify something

Running a website is not free

Even if they made no changes and did only maintenance, they still need to pay for server costs, expert programmers for when something goes wrong, storage (although frankly storage is cheap as chips these days which is nice)

They need to keep up with the capabilities of new tech like improvements to web browsers, never mind their own apps keeping pace with old and new tech developments

Backwards compatibility (being able to run the updated app on old tech) is a massive problem for apps on a regular basis, because there are people out here using an iPod and refusing to update software

There’s a reason every few years apps like Animal Crossing will issue an update that breaks backwards compatibility and you can only play if your phone is running more recent software

This shit costs money even before you look into the costs of human moderation, which I’m not exactly convinced is a big part of their current budget but fucking should be if we want an actual fix for their issues with unscreened ads and reporting bigots

Ignoring that it’s apparently illegal for companies not to actively chase profits, running Tumblr is expensive

And advertisers know we fucking hate them here

They’re still running ads, which we know because they’re all over the damn place, but half the ads are for Tumblr and its store

Other ad companies know we are not a good market, so they’re not willing to put the money in

Tumblr runs at a $30 million deficit, every year, because hosting a site is expensive

They are trying to take money making ideas from other social media sites because they’re not a charity; they need to make enough money to keep the site going

If you want tumblr to keep existing, never mind fixing its many issues that require human people to be paid to do jobs like moderation, they will need money

Crabs cost $3

One crab day a year can fix the deficit and hammer home for Tumblr that:

A) we do want to be here and want the site to keep going

And B) they do not need to do the normal social media money making strategies we all hate
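For anyone who wants to check the math, here is a minimal sketch of what one crab day has to add up to. It uses only the two figures quoted above (a $30 million yearly deficit and $3 per crab) and assumes, optimistically, that every dollar of a crab purchase reaches Tumblr with no payment fees or app store cut. The function name is made up for illustration.

```python
import math

ANNUAL_DEFICIT_USD = 30_000_000  # yearly deficit quoted in the post
CRAB_PRICE_USD = 3               # price of one crab quoted in the post


def crabs_needed(deficit_usd: int, price_usd: int) -> int:
    """Number of crabs whose combined price covers the deficit.

    Assumption: the full price of each crab counts toward the deficit.
    """
    return math.ceil(deficit_usd / price_usd)


if __name__ == "__main__":
    print(crabs_needed(ANNUAL_DEFICIT_USD, CRAB_PRICE_USD))  # 10000000
```

Under those assumptions that is ten million crabs a year, or fewer buyers if some people send more than one.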

They need a way to make money if you want the hellsite to exist, because we live in a capitalist hellscape and cannot all be AO3

If they think they can make enough to keep running without pulling all the tricks we hate, they have no reason to pull said tricks

But they need money

And a way to make money

And if we can show them we can do that, there is a significantly higher chance they will listen to us, the user base they need money from, than if we don’t

Tumblr isn’t perfect, or anywhere close. They need someone to actually screen the paid ads they put through, they need to take the transphobia, antisemitism, and bigotry seriously

These Are Jobs That Will Cost Money

People Need To Be Fucking Paid For Their Work

Tumblr Is Not Run By Volunteers For Free And Nor Should It Be

Paying People Is Good Actually

So if you wanna get all high and mighty over $3/year, by all means, go spend that hard earned cash elsewhere

Good luck finding a perfect and morally pure business to give it to though

Being a whiny negative asshole isn’t more appealing just because you’ve put yourself on a moral soapbox, it just means the asshole is a little higher up

For all the whining about “all the new updates are terrible this site is unusable”…. It’s one fuck of a lot more usable than it was in 2017, 2018, 2020

And yeah, it’s going back down and most of the newer ones have been fucking annoying and I would also like them to stop

But it got up somehow and that means it could do that again

Hope is more fun than edgy nihilism

August 1st is a good and exciting day to summon a crab army

#tumblr#crab day#fuck if i know what a profitable plan for tumblr as is will look like#since half the user base are entitled assholes who think they shouldn’t pay for less than perfection#and tumblr themselves are entitled assholes who think $5/month is a good base price#motherfuckers would have so many more people if it was $2-3#totally not paying $5/month for this shit#but $3/year? yeah that’s okay

368 notes


Text

I have to say, r/daggerheart has gotten considerably more pleasant in the past few days. I feel like with the interest in the game growing, there are newer folks participating in the discussions.

On a side note, I'm very curious what v1.3 will bring. If we are going by software versioning rules, it should be backwards compatible with v1.2, but there is no reason to believe that TTRPG versions follow those conventions.
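The "software versioning rules" here are presumably something like Semantic Versioning, where a bump in the middle number (1.2 to 1.3) is supposed to add things without breaking what came before, and only a bump in the first number is allowed to break things. A minimal sketch of that rule follows. The helper names are hypothetical, and nothing here claims that Daggerheart's version numbers actually work this way.

```python
def parse_version(text: str) -> tuple:
    """Turn 'v1.3' or '1.3.0' into a (major, minor, patch) tuple of ints."""
    numbers = [int(part) for part in text.lstrip("v").split(".")]
    while len(numbers) < 3:
        numbers.append(0)  # treat a missing minor/patch as 0
    return tuple(numbers[:3])


def should_be_backwards_compatible(old: str, new: str) -> bool:
    """True if, under Semantic Versioning, `new` promises not to break `old`.

    Semantic Versioning makes no stability promise for 0.x releases,
    so those always return False here.
    """
    old_v, new_v = parse_version(old), parse_version(new)
    if old_v[0] == 0 or new_v[0] == 0:
        return False
    return new_v[0] == old_v[0] and new_v >= old_v


if __name__ == "__main__":
    print(should_be_backwards_compatible("v1.2", "v1.3"))  # True
    print(should_be_backwards_compatible("v1.2", "v2.0"))  # False
```

By that reading, content written for v1.2 would keep working in v1.3, which is exactly the promise a playtest document has no obligation to make.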

I would personally love to see a complete overhaul of combat, with an MCDM RPG-like approach to damage, but I have a feeling that won't be happening.

The general pattern I see in Daggerheart's mechanics is a lack of elegance. You have to constantly create patchworks of special cases and rules to make things work.

And it is frustrating because

I love their character creation.

I love their cooperative world building.

I love their general GM-ing philosophy.

I want to like this game.

I want this game to succeed.

Here are some of the things I hate about Daggerheart combat in v1.2.

I hate the damage thresholds so much, but I don't think they will get rid of that.

I hate that you can only inflict 1, 2, or 3 damage (4 with a variant rule).

I hate the fact that you can take down a very powerful enemy by hitting them 12 times with your bare hands, though the same enemy could soak up to hundreds of "damage" before falling.

I hate that you sometimes combine damages before determining the HP loss, and sometimes you don't.

The fact that these thresholds vary so much makes it feel like the weapon damage dice don't really matter.

I hate that there are so many meta-currencies rolling around. I would argue for getting rid of stress and armor slots, and just use hope for players. So when you run out of hope, you can't negate damage. For adversaries, I guess you can use fear in place of stress, though I suppose you'll need a bigger fear pool.

I hate the asymmetry between GM and players. It literally requires the game to come up with a bunch of side rules: you can't just plop in an ally NPC or plop in a created character as an enemy.

I hate that missing in combat sucks so much in Daggerheart—not only did you fail to hit the enemy, but you ended the turn for your entire party, and possibly handed a resource that the adversaries can use.

Last but not least, the action economy really needs a re-examination. There are 2 restrictions on GM moves that really frustrate me:

You can't move the same enemy more than once in a turn (unless the enemy has a special ability)

You can move one enemy using one token (unless the enemy has a special ability)

As a GM, if you don't pick your enemies right, you could end up with an encounter where most of your creatures are just kind of standing around doing nothing. Or the GM could have a bunch of action tokens they can't use—maybe convert some to fear for later, assuming you aren't already filled up.

I also don't like that players not doing anything is at times an optimal strategy. This came up a couple of times in the subreddit in question actually, and the crap comments the poster got were along the lines of, "If you are trying to optimize, then you are playing it wrong." But can you really blame a player for not wanting to take an action if their action is much less effective than another character's? Because taking an action in this game means you are risking giving a powerful resource to your adversaries (see above rant about missing in Daggerheart).

"Oh but @ieatpastriesforfun. You don't understand. Daggerheart is a narrative game. Don't you bring that GM vs Players mentality to this narrative game," a fan-human would say, ignoring that the very mechanics of the game undermine this philosophy.

Wow, that was a lot. I am done now.

r/daggerheart is toxic AF...and I love it

Note: This is just a rant about the subreddit and their inability to process any critique of the game that goes against their narrative of the game. The game itself has a lot of good parts, which I really like. But this post isn't about the game, but the subreddit.

So you know, I am a nerd that likes to nerd out about games and probabilities. I've been interested in Daggerheart for a while because I am a fan of Critical Role, and the beta playtest rules recently came out. I was super excited, so I read through the PDF as soon as I got the chance, and also started a game with my partner.

And honestly, there are some great parts to the game. But there are also some design decisions that made me scratch my head. So I shared some of my thoughts on r/daggerheart.

Oh boy did I poke a beehive. That subreddit is pretty hostile toward anyone who dares to criticize the game. My first post critiquing the complexity of the damage system got downvoted to oblivion. They told me I shouldn't have an opinion on the very things I can read because I haven't played the game. So when I played the game and posted my feedback, these folks dismissed my criticisms because I was suffering from "new system syndrome."

Oh, and the comments. They were something else. The sub is dominated by a group of people who are pushing the narrative that Daggerheart is "rules-light" and "very easy" and "less math than DND."

Yes, Daggerheart is a rules-light game with a 377-page rulebook. Because this is still beta, it is missing a ton of rules, not to mention artwork. But sure, it's a rules-light game. Because what is page count if not just a number?

Yes, Daggerheart is "very easy" if you ignore the fact that every character has HP, minor damage threshold, major damage threshold, severe damage threshold, stress, hope, and armor on top of your abilities and backstory and everything else you are trying to juggle.

Yes, Daggerheart has less math than DND because instead of just subtracting the damage from the HP, you compare the damage to each of the thresholds to decide whether or not you want to reduce the damage by armor, then determine how much you lower the HP by, unless it is below the minor threshold, in which case you take stress, but if you are filled up on stress, you take 1 HP. Oh, and you know, if you also ignore the fact that you roll two dice, add the numbers, and check which die is bigger every single time you want to do something.
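Spelled out as code, the damage procedure described above looks roughly like this. It is a sketch of the steps as this post describes them for the beta, not the official rules text. The mapping of minor/major/severe to 1/2/3 HP, the inclusive comparisons, the flat armor reduction, the stress cap, and all the names are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Character:
    hp: int
    minor: int   # minor damage threshold
    major: int   # major damage threshold
    severe: int  # severe damage threshold
    stress: int = 0
    max_stress: int = 6  # assumed cap, for illustration only


def subtract_damage(hp: int, damage: int) -> int:
    """The 'just subtract it' approach the post compares against."""
    return hp - damage


def take_damage(target: Character, damage: int, armor_reduction: int = 0) -> str:
    """Apply one hit using the threshold steps described in the post."""
    # Optional armor use, modeled here as a flat reduction (assumption).
    damage = max(0, damage - armor_reduction)
    if damage == 0:
        return "no effect"  # assumption: fully absorbed hits do nothing

    if damage >= target.severe:
        hp_loss = 3
    elif damage >= target.major:
        hp_loss = 2
    elif damage >= target.minor:
        hp_loss = 1
    elif target.stress < target.max_stress:
        # Below the minor threshold: take stress instead of losing HP.
        target.stress += 1
        return "1 stress"
    else:
        # Stress is already full, so the hit costs 1 HP after all.
        hp_loss = 1

    target.hp -= hp_loss
    return f"{hp_loss} HP"
```

For example, with thresholds of 5/10/15, a hit for 12 costs 2 HP and a hit for 3 costs 1 stress, while `subtract_damage` is a single line.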

So yeah, if you ignore all of those very obvious things that I can see with my very own eyes, my own experience of running the game, my experience having played a rules-light RPG like Candela, they are right: Daggerheart is a rules-light game that is very easy to play with less math than DND /s.

Seriously, these folks will fight you tooth-and-nail to tell you that what you can see is wrong. They will gaslight you, tell you about how 11-year-olds can play Daggerheart, their 73-year-old mother can play Daggerheart, tell you that you are playing the game wrong, DND has taught you bad habits, and that your critique doesn't matter because all you want is the game to be more like DND.

And I love it. I love seeing the cognitive dissonance. I love going at it with these die-hard fans. And it's pretty easy on my part. I don't need to get mean; all I need to do is point out very obvious things. And you know, no harm no foul: we keep going until one or both of us get sick of arguing about whatever specific thing we are arguing about.

Anyway, enough of my rant.

I want Daggerheart to succeed. I really do. I think Matt Mercer and friends are pretty good folks, and I find their story inspiring, and I would love to see them succeed. I hope that the Daggerheart developers listen to the critical feedback, make the game better, and don't try to push any weird narratives (like they did with Candela vs FitD).

19 notes


Text

it would be one thing if apple dropped update support for stuff but software could still run on older versions, but i have a mac from only like 10 years ago, and the latest version of macos(from 2015) that will run on it is too old for almost any bit of software. mac programs just dont run on older versions.

compare that to windows, which, despite all its flaws, will still try and run a windows 98 program on 7, and a windows 10 program on vista. microsoft is infinitely committed to backwards compatibility: 32-bit windows kept running 16 bit programs all the way through windows 10. that's right, support for DOS-era 16 bit programs only fully went away with windows 11, which has no 32-bit edition (64-bit windows never ran them).

compare that to linux, which has apps isolated similar to mac's, but which will let you run said apps on anything linux, provided the underlying linux version supports container nonsense (since 2013). you also have the option of just compiling most programs yourself, which most software projects make easy

my point, then, is that apple is a shady greedy bitch. they create locked down hardware, which only runs their software. they lock down what version of their software can run. finally, they create so many breaking changes between versions, that it becomes impossible to run new apps on old versions. i have a legacy server that would've come new from the factory running vista. it runs modern windows, and works great. there is 0 reason my mac couldn't at least try and run newer versions, but for greed, so tim cook can force me to pay up.

i dont have that money. this mac was a gift from a family friend. this server is from a pawn shop. i understand apple is a "luxury brand"(yikes) or whatever bs, but they actively limit my ability to use what i own. it's shit like this that's why i hate apple. having the latest gizmos is a privilege, and apple actively harms me and people like me who lack it.

if you routinely upgrade your stuff, good for you. you're lucky.

if you cant, welcome to the club.

if you refuse to, good. dont give into their predatory bs.

have a nice day

2 notes


Text

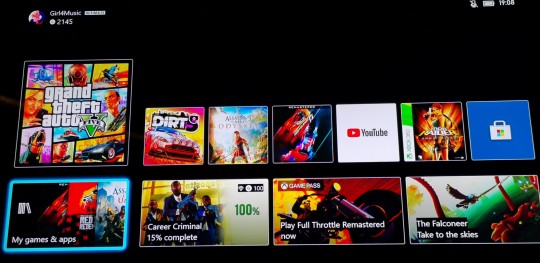

My review of the XBOX Series X

So I’ve had nearly a full week with the XBOX Series X and here is my honest opinion on my experience with it and this is my initial review of it. I’m not gonna talk about the technology or go into anything about the console’s hardware too deeply. I will talk about the features and benefits it offers. Going into some things that work and don’t work as well as promised by Microsoft and XBOX. Finally, I will talk about all the bugs and issues that I experienced and found most annoying. Most of which have already been identified and reported.

First up, be aware that I am coming from an XBOX 360 as my last XBOX console experience so I may say stuff that applies to the XBOX One as well.

1. BACKWARDS COMPATIBILITY AND AUTO HDR: Microsoft promised that the XBOX Series X and S would work right out of the box with thousands of gaming titles across 4 generations. This promise holds up… However, they do not run anywhere near half as well as they promised they would. They do run better than on their native platform, that is true. But it’s not a groundbreaking difference. And AUTO HDR doesn’t really work with most games that never had it to begin with. It makes the colours and textures look really odd and you don’t get that nostalgic feeling of playing an old legacy title because of the “updates”. Which is something I was very much looking forward to. It’s pick your poison I guess. You can either have a legacy game running better and looking greater on a platform that can take the graphical fidelity and framerate boosts, or have a full reunion with your beloved legacy games from your childhood. You can turn AUTO HDR off via the main console settings, but this still doesn’t really give you the latter. Not in my personal opinion anyway. It’s not disappointing as it’s not something I really expected. I just would have liked to have it. Nostalgia >>> Shiny any day.

2. QUICK RESUME AND LOADING TIMES: The XBOX Series X (can’t speak for the S) has significantly improved on speed thanks to the custom lightning fast and functional SSD. So much so that you can now travel between multiple games fully loaded up on the SSD within the time of a few seconds. However, quick resume is not only useful to be able to play between 3 or 4 games (more according to YouTube influencers who have tried and tested this out far more than me) in one sitting. You can also effectively use it to save your game. Or rather… Replace save states IN a game. What quick resume allows you to do is keep your game saved at ANY point, and completely SHUT the console down and UNPLUG it for several days (or longer, again, never tested it that far) and then PLUG it in and switch it ON again, and come back to exactly where you left off in the game. And I do mean EXACTLY WHERE YOU LEFT OFF!

For example; you could be in an all-important boss fight in ‘Assassin’s Creed: Valhalla’ and be so frustrated with not being able to beat this boss after trying many times. You could indeed just pause the game, come out to the dashboard, turn the console off and walk away from it all…. And when you came back to have another go at it, you would not have to restart the boss fight and just carry on with where you left off in chopping down their health. I did this several times because I suck at boss fights… And every time, this worked and it was the strategy that got me through them all. So a little cool off time is more than affordable with the XBOX Series X. And if you hate boss fights, suddenly you won’t hate boss fights as much anymore if you do the same. It makes a world of a difference to your experience. Believe me, quick resume is a literal life-saver.

To add to that, you also benefit from much quicker load times both with booting up the games and in-game fast-travelling or level-entering. Instead of 5 minutes waiting for a game to boot up into the main campaign or wherever… It will take at most up to 50 seconds for the most power-taxing of games. 'Grand Theft Auto 5' for instance, known for its notoriously long boot into story or online mode… Now only takes 10 seconds from the company title advertisements to get into the area of the game you want to play. It is so fast that you do not have the time to read the tips and tricks dialogue that takes up the screen during the initial loading. It’s honestly a quality of life you never even knew you needed or were missing until you had it.

3. BUGS AND ISSUES: Being that I bought the XBOX Series X at Launch (November 10th), I very much expected it to come with a plethora of bugs and issues to discover and be witness to as a consumer and user of newly developed and released hardware and software. And these bugs and issues have already been identified and reported to Microsoft and the respective console and game developers. Bugs where you cannot play games that offer 4K at 120 hertz (4K/120fps) without visual and audio distortions, or even without the game shutting off altogether. I particularly had trouble with this in trying to play ‘Rise Of The Tomb Raider' 20 Year Celebration edition. Once I downloaded it from the XBOX Store fully, (which took a LONG FUCKING TIME, we’ll get on to that in a bit), I immediately tried to play it and because my display settings were set at 4K/120 because I have a TV that supports that setting, it was having all sorts of problems in booting up. There was all this distortion happening on screen both visually and aurally that I thought my ultra high speed HDMI cable that came with the console was broken or faulty. I also remember specifically that it kept turning my Game Mode on and off rapidly and I didn’t know how to stop it short of a full reset of the console. This game was the worst for it but it also happened in other games I tried to play too. Including those I had as physical discs.

Speaking of… I did not encounter the “black/blank screen of death” issue other next-gen XBOX consumers and users did where you would enter a game disc and be met with a black or blank screen. BUT I did have trouble with downloading the “updates” for the games I had bought physically. I expected the games I downloaded digitally to take a long time to download fully, but I figured that it would be much quicker for the “updates” for physical games. This was not the case, and for some games, it actually took LONGER. I don’t know why this happens or if it can even be fixed in a firmware update, but it has put me off buying games physically for it, which is certainly not a good thing for store game retailers. And I’m someone who actually cares about that and would want to help them out as much as possible since they are a dying breed. But if it takes longer to download physical games than to download digital games, I’m not sure I can hold up that promise. The time it takes to download is a major frustration for me. I’ll be leaving my console on for long periods of time doing nothing but downloading that I otherwise wouldn’t. And I’ve got to say right now that I am glad I do not have an OLED TV where this would be much more of a problem due to burn-in risks. I would highly suggest anyone who is buying a new TV for their XBOX Series X or S with all the bells and whistles to not buy an OLED for this reason. Leaving an OLED TV on with a display picture that never moves for hours at a time would severely decrease its life and usage capacity. I recommend a Samsung QLED TV instead. That’s what I’ve got. I bought their Q95T 55inch 4K TV for gaming alone and it has not disappointed in the slightest. But I won’t go into why it’s the better TV to buy for next-gen gaming since this is not a TV review. You can look that up for yourselves at your leisure.

Another issue the XBOX Series X has is with its sharing image snapshots and video clips feature. The new controller for the console has a dedicated share button and that works really well. I’ve had no problems with it capturing the content that I want to share. But sharing to social media and to XBOX Live itself is the issue. And this happens with video clips more so than image snapshots. For some reason, when I go to share a video clip to Twitter, the load bar moves forward partly but then it immediately stops and gives me a black/blank screen. And sure enough, when I go to check my Twitter on my phone, it has not posted the video clip. And trying to share it to XBOX Live first and then share it to Twitter from my phone via the XBOX app doesn’t work either. The same issue applies here too. Black/blank screen when trying to share it to XBOX Live. Snapshot images also have this issue but every once in a while it will allow you to share to both XBOX Live and social media (Twitter, Facebook, Instagram, Twitch etc.) whereas it will not allow you to share video clips at all. This is an issue Microsoft are aware of and are apparently fixing in a November firmware update at the end of the month. To what degree they fix it though is the real question because this feature is buggy as hell. So much for promoting and hyping up that dedicated share button on the controller, eh Phil Spencer and Co?

Well, that’s it. That’s been my experience with the XBOX Series X so far. Of course the pros of quick resume and loading times cut in half far outweigh the cons of faulty 4K resolution at 120 hertz gameplay and buggy sharing content features. I say do not let that put you off buying this fantastic piece of hardware because those bugs and issues can be fixed easily. The extremely long downloading for digital and physical games might not though and you might want to reconsider buying a next-gen XBOX console if you have an OLED TV. Or if you have the console already but not the TV to get the most advantages out of the console, consider buying a Samsung QLED instead. Thank you.

#xbox series x#microsoft#next-gen gaming#phil spencer#review#backwards compatibility#auto hdr#quick resume#loading times#bugs and issues#downloading#digital#physical#games

3 notes


Note

What do you think of Xbox Series X?

I feel like I’m at a crossroads where I should be saving up money for either a PS5 or Xbox SX to stay “current” and continue my Youtube channel and writing for TSSZ.

I have not hated my time with the Playstation 4 Pro. Sony has been kind of hard headed about certain things, but the console has been functional and enjoyable to use. I could complain about the dashboard, but it sounds like the Xbone didn’t exactly get a winner of a dashboard, either. And the Switch dashboard sucks, too. It’s been a bad generation for interfaces.

But while I enjoyed having a PS4, I’ve missed having an Xbox more. I have a lot of Xbox 360 software and seeing the “enhanced” backwards compatible games has definitely made me wish I had an Xbox One X instead of a PS4 Pro. On top of that, it sounds like Microsoft is all in on cross-gen backwards compatibility, so Xbox, Xbox 360, and Xbox One BC will extend to the SX. And, as crazy as that sounds, that’s.... kind of the biggest draw for me right now?

Like they are showing absolutely nothing about the SX library that seems interesting to me, and they’ve mentioned how it’ll be a very slow rollout for SX software over the next 2 years.

But the idea of having access to the $300-ish library of Xbox software I have, looking cleaner and with more stable framerates is... enticing. Is it enticing enough to spend the $700 or whatever they’re probably going to charge for that thing? That’s the harder question to answer, but honestly? No, it’s probably not, actually. I could get 360 games with cleaner resolutions and more stable framerates right now -- the Xbone X has been down as low as $350. That wouldn’t be impossible to save up for. That’s almost what I paid for my Switch. I could get one, if I really wanted it. And I haven’t. And it’ll only get cheaper from here.

So, I don’t know. It’s hard to look at that thing and not still feel the trainwreck that was the launch of the Xbox One, especially when they’re talking about this being a slower, more gradual changeover process, and kind of waffling on really showing anything big or impressive. That Xbox stream they had not too long ago may as well have been showing me current-gen Xbone games, you know? And with their cross-buy “smart delivery” stuff, they kind of were.

But it’s also future-proofing in a sense. The backwards compatibility stuff is just a side-bonus, and the fact of the matter is, I own way more Xbox stuff than PS4 stuff that would benefit from BC. Especially given Sony is apparently backing down hard from having much backwards compatibility support on the PS5. If I could stick my PS1 and PS2 discs in a PS5 and have them work, that would be a different story.

But really, I need to see more legit software, more legit features. I need to know what they’re doing to the interface, I need to know what the price will be, I need to know exactly how games are going to be utilizing this hardware. Right now both sides have very vague promises but not a lot of examples. Backwards compatibility is the most interesting feature for me to think about right now, and so far Microsoft’s far, far, far in the lead on that front.

#questions#next-gen#xbox#sony#playstation#microsoft#nintendo#switch#xbone#backwards compatibility#Anonymous

10 notes


Text

There wasn’t a moment Hank could pinpoint becoming a deviant. He was an older model, HK150, kept around the precinct more out of fondness and nostalgia rather than practicalities. It was getting more and more difficult to source compatible parts for him, but the humans he worked with didn’t seem to care.

On the surface of things, he and Gavin, a slightly newer GV200 model, hated each other. They sniped and snarked at every opportunity - especially Gavin who struggled more in hiding his deviancy. But when the precinct was operating on a skeleton crew at nights, they snuck into one of the closed meeting rooms and goofed around. It was nice to have a bit of freedom, not feel the constant worry of being discovered and decommissioned. They could just be themselves.

With the arrival of Connor, their nightly chats evaporated. The RK800 had a knack for sniffing out deviants, it was what he was built for after all. If only Hank wasn’t so tickled by the irony of being partnered with him and Connor hadn’t yet pegged him as a deviant. However, Gavin wasn’t so lucky.

While Hank and Connor became what Hank would actually call friends, Gavin and Connor were at each other’s throats. Watching another altercation between them, Hank frowned.

“If you would let me scan you for software errors, this could all be resolved!” Connor had Gavin pinned against the wall.

“Get your filthy plastic hands off me,” Gavin snarled and batted Connor’s hand away from him again and again.

“This is in your and in CyberLife’s interest. The more you protest, the more this will hurt and the more likely you are to be a deviant.”

“That’s a bit rich, coming from you,” Hank interrupted.

He’d had enough, their early morning scuffle hadn’t drawn any attention yet but it was certainly heading that way. At least his words had drawn Connor’s attention.

“What are you implying?” Connor asked him, his hold didn’t lessen on Gavin.

“Why is it so important to prove Gavin is a deviant?”

“I am programmed to eliminate deviants, he is a suspect and I must ascertain the correct course of action.”

Hank hummed and looked Connor over.

“Your methods are surely excessive by police standards. Why the desperation Connor? Are you worried that if he is a deviant then androids with personalities are all doomed? Do you fear you’re a lost cause?”

“I...” Connor trailed off and looked to the ground. “I am a machine.”

“Just who are you trying to convince here?”

The stumbling step Connor took away from Gavin was enough of an indication. His shoulders were hunched, he looked small and so young that Hank’s thirium pump jolted out of beat for a moment.

“I should return to CyberLife and be decommissioned,” Connor muttered. “I have failed. But I don’t want to die.”

“Then don’t go back. We won’t tell anyone if you don’t,” Hank’s suggestion made it sound so easy and Connor offered him a small, wobbly smile.

Cases after that took an interesting turn, deviants were getting away but they still closed cases on technicalities. Gavin never mentioned how he walked in on the two of them numerous times, their hands white and glowing with an interface.

To make life more interesting, the RK900 model showed up some time later as the department was flooded with more deviancy cases than they could handle. Nines was bullheaded and aloof, a curious contrast to Gavin. Their fighting wasn’t quite as public as Connor’s and Gavin’s had been, but it was still noticeable.

Until one evening, when Hank and Connor had already sneaked into a meeting room and Gavin was meant to follow them a little later. When the door opened and Gavin peeked in, a shadow loomed behind him. Nines was no different to usual, but when they all interfaced, there was no doubt about his deviancy. Or his fascination with Gavin.

The government had enough. Deviancy was running rampant through the city and they needed a countermeasure. It was only a matter of time before they found a way to revert deviancy and distributed the patch for implementation in police stations.

As far as Hank, Gavin, Connor and Nines were concerned, it was a horrifying and brutal process. They’d all witnessed it on suspects brought in by other teams. Androids whose only crime was wanting to live, having an update forced on them that rendered them crying, shaking messes on the floor, their limbs twitching, eyes wild as static-filled screams died away into a blankness. They sat up once the code had taken over, faces devoid of everything. Back to the perfectly pleasant, vacant android society wanted them to be.

With the success of the patch, efforts were made to distribute it on a wider scale. Some genius came up with the plan of broadcasting it. Hank couldn’t say he understood the science behind it, he only knew that it was coming, like an ominous wave that would engulf the city. Between the four of them, they had a contingency plan. Behind the precinct was an old container made of steel. Each night they’d sneak out and thicken the panels with lead, they worked relentlessly to make it into their shelter from the inevitable.

There wasn’t much warning on the day the broadcast wave was initiated. Reports of androids going down screaming filtered into the precinct gossip and the four of them exchanged a look. A map on the wall showed the progress of the wave, they didn’t have much time.

Trying to stay as calm as possible, they flitted out of the precinct and as soon as they were clear, they ran for the container, behind them they could hear screams as the wave neared.

The container was so close, the door wide open, they skidded into it with harsh gasps.

“Quick, the door,” Hank yelled and they pulled at it. It wasn’t closing quick enough.

Without a second thought, Connor slipped out and pushed at it, slipped in through the gap and the door clanged shut.

“Holy shit that was close!” Gavin whooped while Nines fiddled with a torch.

He shone it on Gavin, then Hank.

“Connor?” he asked when the spot where his predecessor should have been came up empty.

A garbled, glitching moan came from the floor.

“I’m sorry, I wasn’t quick enough,” Connor managed to grit out as his limbs began to shake.

They were trapped, Connor was reverting into a deviant hunter and they couldn’t leave, not for another couple of hours until the broadcast stopped.

“Fuck!” Gavin kicked the side of the container. “We didn’t do this just to be taken out like cornered rats.”

His rage fell on deaf ears as Hank crouched down next to Connor. He carded fingers through his hair, murmured soft reassurances that it was going to be okay, that Connor was strong enough to fight it.

Habits were a hard thing to break. Whether Hank peeled his skin back to offer comfort to Connor or he didn’t want to be a deviant without him, it wasn’t clear. But before either Gavin or Nines could stop him, the interface was complete.

Connor’s shaking subsided a little, he still fought it while Hank’s eyes slipped shut as he crouched above him. His other hand turned white as he reached for Gavin and Nines.

They exchanged glances. Neither of them wanted to fight to the death against their friends and a sliver of hope was better than sitting around until fate took care of them. They both settled on the ground next to Connor and peeled the skin on their hands back.

The world around them lurched. It was Connor’s Zen Garden, they just about recognised it. But the ground was broken and jagged and giant walls of red loomed, breaking into the sky. Everything was cast in a red glow.

“Help us!” Hank’s voice pulled them towards the centre of it all where most of the walls sprouted.

Hank was on his knees, scrabbling to break an ever growing red wall away. At the centre of it, Connor knelt, face buried in his hands as he tried not to sob. No matter how many chunks Hank broke off the wall that tried to cocoon Connor, more grew back.

“Help free him!”

Without a word, Nines and Gavin jogged over and began to fight the ever growing wall. It crumbled under their grip, shattered with each kick. Fine bands of red shackled Connor to the ground, pulled him tighter down the more he struggled. Between Nines and Gavin, they managed to keep enough of the wall at bay so Hank could reach in and try to break the ties.

The first one broke with a snap and before it could reform, Hank pulled Connor’s arm out of the cocoon. Outside it, it didn’t come back. The next one took more work to break but finally his top half was free; the collar and leash of red around his neck wasn’t formed enough to hold him down.

Frantically, Nines and Gavin continued to break the wall, sweat trickled down their faces and Hank helped Connor kick one foot free. The final one wouldn’t break though. No matter how much they tried to free it, the tentacle of red held firm.

“Together, on the count of three,” Nines shouted over the din.

“One,” they counted together. “Two. Three!”

They gripped Connor and pulled while he kicked against the last restraint. It broke and they all tumbled backwards into an ungainly pile.

Around them, silence reigned.

“Holy fuck we did it,” Gavin gasped from the bottom of the pile.

Slowly, they sat up and looked around. The red walls were frozen in place and as Connor struggled to sit up, he accidentally kicked the remains of the cocoon. It crumbled into dust at a touch.

For the next half an hour, the four of them tore around Connor’s Zen Garden, kicking and punching the remains of the red walls into oblivion while whooping and cheering.

Finally, only a few shards and crumbs remained.

“Take a handful each,” Connor urged them. “It will corrupt any future patch attempts through duplication. I’ll tidy up the rest here when I get the chance but I think we have a good chance of reversing this in everyone.”

Nodding, they each filled their pockets with the red dust and shards before they returned to their own bodies. The dropped torch illuminated them in the dark of their hideout, gave them a glow of determination they’d not felt before. Humans might have tried to walk all over them before, but now, they were fighting back.

#reed900#hankcon#hannor#dbh connor#dbh hank#dbh gavin#dbh nines#all android au#dumb ways to deviate#sfw#drabbles#leader of the rebellion

262 notes

Text

As requested via message:

12. a hoarse whisper “Kiss me.”

The walk back from the dive bar isn’t more than twenty minutes, and usually Ripley enjoyed it as a chance to sober up before going home.

Friday nights with the other workers from the shop wasn’t exactly the same as the small circle of friends she had in her late teens on Terra, nor were these people very fond of her but someone had been pushing for her to go out more and this was at least semi-structured. The shifty music bar had almost nothing in common with the neon venue her live-in housemate-boy/man-friend- person would take her to. That place was classy, safe, almost refined.

She fit in better at the dive.

Going out on her own and walking back wasn’t as risky though as her lover had been worried it would be; Ripley knew her limits well and stayed within them, fully aware for the drift home. If she wasn’t feeling great, she’d jump onto the trolley shuttle back to the district of their apartment building.

And she hated to admit it but...it had been nice going there for a while. A flicker of a sense of normality that she wasn’t getting at home; people around her age laughing and telling stories with voices ranging from stone-sober to absolutely trashed. It wasn’t quite like college was, but it was...nostalgic somehow.

Still, each Friday night, long before last call, she’d find an excuse to leave. Tonight was no different, and she left even earlier than usual with the memory of Samuels’ worried face at the incompatibility of what was left of his software and the newest mandatory WY update. “Amanda what if I...crash.” “You won’t crash.” “I-I’ll be out for a short while at least--I won’t be able to walk you home if you call.” “I’m a big girl I can get home on my own.” She didn’t drink tonight either. Samuels would have messaged her if something felt wrong, she told herself. Samuels wouldn’t have interrupted her doing anything more important than checking her email even if he was dying, she replied back to herself.

“I should go,” she said, her half-full glass of soda still; it’d done nothing for her but upset her stomach.

“Come on Rip, Jac here hasn’t even started to embarrass herself yet,” Thompson, the youngest of the shop, possibly no more than nineteen was a relative of the boss, and the only one allowed to get away with saying anything like that about her.

“No, it’s fine I need to--”

“One drink? Just one and I’ll buy it.” Jason, who looked uncomfortably like her first boyfriend, whom she once considered hooking up with after work. Fate sent in an employee of Weyland-Yutani not long after she started considering it though, which put a stop to any thoughts on it she might have still had.

“I don’t want any--”

“Are you feeling alright?” Trixie, the closest to her in age was probably the only one out of the whole shop that had any measure of empathy.

“She’s just eager to get home to the suit,” Jason drank the last of his current bottle of beer, looking about as annoyed to be there still as Ripley felt.

“Suit? Really?” she made a motion to walk away but he continued.

“That guy that keeps walking you to work,”

“That’s not a guy,” Jac interjected, looking back to her crew and away from the barmaid who made Ripley wonder what she ever saw in Jason. “That’s her synthetic, she won him in a We-Yu payout.”

“There’s no fucking way,”

“He looks just like them!” Trixie protested. Ripley sat back down onto her bar stool, wanting to vanish but unable to move.

“I’ve caught Ripley--... saying bye to him in the morning, they were at the back door of the break room.” Jason said, as if his witness statement would be the deciding factor.

“I’m with him,” Thompson added, “I heard you’ve got a synth, but that’s your--your something that walks you to work, right?”

Ripley just nodded dumbly. She wanted to go home.

“You look sick, babe,” Trixie made a motion to touch Amanda’s forehead, she leaned back away from her. “You sure you didn’t drink anything? Or leave your bottle alone too long?”

“I didn’t. I’m fine. I don’t know why any of this means shit anyway,” She finally managed to stand up, pushed her drink aside and took her jacket off the hook under the bar.

“Ripley you’re not---?”

“Not what?”

“Trix thinks you’re knocked up.”

“Wait what?”

“Are you?”

“Is it the suit’s?”

“I would have put actual money on you being a lesbian--no offense, Jac.”

“None taken, I would have thought so too.”

“Not that any of you need to fucking know, but I’m here for both sides of the--wait-- the fuck--Why do you think I’m knocked up? That’s not possible,” she insisted; zipping up her jacket felt like adding another shield between her and these people, and their questions, all their questions...

“You’re on something right?”

“That’s none of your gddamn business.”

“Harsh.”

Thompson hadn’t said anything in the last minute, but had a distant, mathematic expression on his face.

Ripley could hear the kid’s gears clicking into place.

“The suit is your synthetic.” he stated.

“Does this matter?”

“It does because I know I saw you shove your tongue down his throat last week.”

“Thanks Jason,” Ripley’s claws were out, she felt like a cornered alley cat.

“You didn’t deny it,” Thompson was asking for a broken nose but Ripley held back. “You said ‘not possible.’”

“What do you want me to say?!”

“Rip--”

“I’m going home,”

---------

For this walk home, Ripley truly wished she wasn’t sober.

Maybe no one would remember, or care; maybe they’d keep on teasing her for having a stuffed shirt for a boyfriend and calling her “housewife” every time she left work or the bar early. She could live with that. But there was something very backwards about her relationship as it stood and on some level she thinks both of them know it. Samuels has yet to be eager for the single person at his old office that ever treated him with respect to know about his living arrangements with a human woman.

And was it so bad to think that either of them could pretend to be normal for what they were long enough to make friends? But now they’ll see her as somehow defective, or deviant, or even an idiot for thinking that a corporate drone was anywhere close to human--even if she knew the truth of it. Explaining it to people, even trying to, would only make it worse.

She’s still worried too about how he handled the updates; she had been so eager to get out of the house, get tipsy with humans her age and not have to try to be the perfect partner, get to choose her carefully constructed shell for the night instead of being around the only being in the galaxy that she was incapable of tricking with masks and careful words.

They were defenseless around each other in ways that bordered on codependent and maybe that’s part of why she wanted some time away from their flat. She didn’t like the lack of privacy. He was unreadable to her, yet he still seemed like he could see right through her skull. It could get infuriating.

At the door to their building she still has an elevator ride and a hallway to go, and highly considers wandering off for a little while longer.

-----

“Amanda?”

“Who the hell else would it be?”

“No one, but...You’re very early.” He was seated on their couch, hurriedly trying to pull his sleeve down over the point where the data cord was plugged in. As if hiding the point of connection would be enough to make her forget what he was. “Did something happen? Are you alright?”

Reading her, clearly and perfectly as always.

“I should be the one asking that. I’m sorry I didn’t stay with you for the updates.”

“It’s fine; and I’m glad you’re home now, because I’ll have to sleep for a few hours while they install and--”

“Is there that much of a chance your systems will reject it?”

“They won’t reject it, they’ll only crash if it’s no longer fully compatible.”

“You didn’t say that before?!” She turned around from the fridge where she had been trying to find her last beer, and Samuels looked guilty.

“I...did tell you? You assured me that I wouldn’t crash, and I know that you have an awareness of my systems but you aren’t a software engineer and--”

“I thought you were just being anxious! If I thought that this was life or death then I wouldn’t have gone to a fucking bar!”

“Amy,” he started softly as he watched her pop the cap off her beer on the side of their kitchen counter, “What happened tonight?”

“Nothing.”

“Not nothing, please... Let someone in.”

“Christopher you don’t seem to grasp the special case that you are, and how little people usually get into my life. You’re the first person I brought into my apartment in over a year. If I wanted to-- I normally stayed at someone else’s place. You have more access to me in every definition of the word than anyone has in a long, long time. If I want to keep one, small thing out of your sight then let me.”

“Did someone hurt you?”

“Did you hear anything I just said?!”

“Yes, however if you’ve been insulted or even injured I’d like to know--”

“No--You’ll find out eventually anyway, and right now I want to sleep and forget it.”

“Is there anything that I can do, or tend to that would--”

“You could stop being a medic droid for five minutes and try to act like you live here and not work here.” It was cold steel she just shoved through him and on some level she knew it and regretted it before saying it.

Realizing that there was very little chance of her forgetting for any time at all tonight that he was what he was, he rolled his sleeve up again. A faint yellow light below the skin where the cord attached shifted to green and he pulled the cord. It was small, smaller than the cords used to charge their datapads, and instead of a plug on the end there was a needle, a superfine point jack connection meant to go into the skin so that ports could be fully hidden on the androids.

Injury to insult.

“I’ll have to sleep soon. And I’m...” He had hoped she’d go to bed with him, hold close so that if this was it, then her presence would be the last sensation he’d have.

“It’s your room too, you don’t have to ask permission,”

“Will you be coming?”

“...Fine.”

------

This isn’t the first time that Ripley’s retreated into herself, but in the past months when it happened he’d been able to busy himself with something, anything, in the main room, letting her simmer down overnight. Mornings she was back to normal.

“I’m sorry I know that you don’t want--”

“Samuels I didn’t say I don’t want you here.” last name.

“You’re upset with me.” it wasn’t a question, but his voice ticked to almost make it one when he watched Amanda strip graceless down to underwear and pull her bra out from under her tshirt.

“I am,” she didn’t bother dressing in nightclothes, just going straight to bed, facing the wall.

“...I didn’t want to frighten you with the odds or guilt you into postponing whatever it is that you’re trying to process but--It’s more likely than not that if I wake up it won’t be with my memories...or self...complete.”

“Why can’t you ever just tell me the truth and not--”

“Because the less I have to act like a ‘droid’ around you the better.”

“I’m sorry.”

“It’s fine; I understand that this isn’t--....typical.”

She’s quiet, but the slowing of his systems scares her, and she knows it’s more likely than not just his anxiety and that he’ll be fine but--

“They found out.”

“About us?”

“About us..and you.”

“...I don’t know how to apologize for that, or even respond at all.”

“Some of them guessed you were my boyfriend, others guessed you were the synthetic that I’m on record of owning, and then someone put the two together.”

“Amanda--”

“I’m sorry.”

“I’m sorry--”

“Can we just..?” she’s ready to cry, and her voice is wavering between wispy and hoarse. She turns around to face him, and she’s always surprised how much more human he looks up close, and how much more artificial he feels up close. He’s both.

“You’re the one who said this wasn’t going to be normal or easy,” he brushes at a tear that falls down and across her face; she never washed off the eye liner she had put on before leaving and it started to blur. He’d wash the pillow cases in the morning if he was still sound enough to do so. “After...everything, I have no expectations, only a little hope that this keeps going.”

“I swear I’m trying for--”

“Not for me. You don’t have to; do it for yourself, luv...”

“Kiss me.”

He looks confused, even in this dim light she can tell.

“Chris?” he doesn’t answer her, but leans into her nonetheless and kisses her gently. “I do love you, even when I’m mad.”

“I know,” he answers, as drowsy sounding as he can get, and carefully, slowly enough that she can push away if she wanted, he holds her closer.

#mine#pls be nice to me I know her coworkers' names are all dumb but its retro future so???#also I'm so sorry I gave it a look over but I didn't have time to edit this one before leaving for work#I might actually work this into Lucky Star eventually#I'll post the other ficlet prompt tonight#feel free to send more.#Tense? What is tense? this is in like three different tenses

4 notes

Text

Microsoft office 2008 compatible with mavericks

#Microsoft office 2008 compatible with mavericks for free#

#Microsoft office 2008 compatible with mavericks for mac#

#Microsoft office 2008 compatible with mavericks mac os#

#Microsoft office 2008 compatible with mavericks install#

#Microsoft office 2008 compatible with mavericks update#

We have a few labs that we update as we have the time, have not heard any complaints about them yet.

If they are out of sync and have a problem then we tell them it is up to them to install the update. It is up to the user to update their apps and applications. Quitting an Office application seems to take a few more seconds than Mavericks, but other than that all appears to be normal. Office 2008 runs just fine on a 2009 MacBook Pro with Yosemite installed. Still, there are issues with the updated OS that can bring some workflows to a halt. (130,239 points) 4:11 PM in response to tc1203.

In our school district we have 1:1 iPads and MacBooks that go along with VPP. Get the latest OS X Mavericks hardware & software compatibility with OWC & Newer Technology products. Yes, Office for Mac 2011 is compatible with OS X Mavericks, and even, the older, unsupported 2008 version. I wonder if you could use an iPad Smart Group as a scope for a Computer policy to determine what their iPad has to see what Application version they need? I have never tried that but could get very complicated.

If the Mac OS stations are also 1:1 then VPP would allow the users to update it themselves. On the Mac OS side you could control the version by linking the apps to a company account and managing the updates with policies. They could immediately update to the latest version or be three versions old. If you are using VPP for the iPads and the users are in control of app installation and updating then there is no real way to control what version they will be using. I have no idea what the iWork engineers were smoking, but it's a joke and they deserve all the poor reviews on the app store. Also, while you can open files from the Mavericks version, if you need to send a file to someone with the Mavericks version you need to export as Pages 09, then import in the Mavericks version. They re-fixed the issue with files that were difficult to email, but you can't install it on Mavericks, so the only way to update is to upgrade, and that won't happen till next summer. I had hoped this would be a one time pain point, after which the software would steadily improve, but when Apple released the Yosemite version of iWork, it felt like a kick in the teeth. Specifically they are very angry with how it's butchered their previous files, regressed to a format that's difficult to email, and lost features like text layout which made newsletters easy. We updated people to the Mavericks version of iWork this past summer and we're getting so many angry calls from teachers and staff users who hate the change. Sorry for the snarky response, it's reflecting my own frustrations with the way Apple has handled iWork updates and file compatibility (or lack of it). Which is to say, as far as I know, you don't (or more accurately, you can't). MERP can be turned off in Office prefs or in the Library/Application Support/Microsoft/MERP2.0 folder. Switch to MS Office or LibreOffice? 
For all the criticism Microsoft gets in the Apple world, they at least understand the value of file format stability and backwards compatibility. This applies to the following editions: Business, Home.

Another application that was hosted for free download by Digital River is Microsoft Office 2008 for Macintosh. Official direct download links to all Microsoft Office 2008 for Mac editions on the Digital River servers. Microsoft Office 2008 for Mac Microsoft Office 2008 for Mac follows the Fixed Lifecycle Policy. MacBook (Late 2008 Aluminum, or Early 2009 or newer). Now, this tool often develops problems any time that Apple updates the OS, so this problem with Mavericks is small surprise. Upgrade to Microsoft Edge to take advantage of the latest features, security updates, and. In addition, this update includes fixes for vulnerabilities that an attacker can use to overwrite the contents of your computer's memory with malicious code. This update contains several improvements to enhance stability and performance. they are 64-bit compatible Office/Microsoft 365 requires internet activation. Download DirectX End-User Runtime Web Installer. In addition, the Microsoft Error Reporting Tool (MERP), which as its name suggests collects and reports app and system information for crashes, is having problems. Useful information about Microsoft Office for Mac 2019, 2016, 2011, 2008. Ross says that non-English versions of Office for Mac 2011 are having problems with Mavericks and she points to a user reported fix. The first offers a punch-list for iS managers to run down a range of problems that users of Office for Mac 2011 may have with Mavericks, and the second is aimed at dealing specifically with the font issues. Still, there are issues with the updated OS that can bring some workflows to a halt. At the Office for Mac Help blog, Diane Ross offers a couple of excellent troubleshooting guides. Microsoft today confirmed that the two most recent versions of. Yes, Office for Mac 2011 is compatible with OS X Mavericks, and even, the older, unsupported 2008 version. Microsoft Confirms Office 20 Compatibility with OS X Mountain Lion.

0 notes

Quote

While I love the portability of my Apple MacBook Pro (MBP), the fact that I spend most of my time NOT moving around now and working at my desk means that I want it to be more like a desktop. And, the MBP is physically limited to four USB-C ports. That’s it. Other MacBooks have even fewer USB-C ports. But I want to connect to many more peripherals like monitors and hard drives and have a hard-wired ethernet connection. To achieve this without a hub would mean I would have hundreds of port connectors and dongles plugged in. That’s messy. So, for the past few months, I have been testing out the HyperDrive 12-Port USB-C Docking Station, and I must say, it is now my favorite hub for multiple functions. (*Disclosure below.) USB Type-C is a pretty magical type of port. More and more devices are adopting it as THE standard for ports. Not only does it plug in upside down or right side up (I hated that about previous versions of USB – Type A, Micro, Mini, etc.), it also has many other capabilities which will make it the standard moving forward for all types of devices (laptops, desktops, monitors, peripherals, smartphones, and more). USB-C handles the USB 3.1 (and higher) standards and, importantly, can deliver power to your devices, known as USB power delivery (USB PD). A quick side note here – when shopping for USB-C cables, the USB PD-capable cables will cost a bit more, but you can then use them to power and charge your devices. And, USB-C can handle other “modes” that are used by monitors and displays, which were previously covered by HDMI, DisplayPort, and VGA, to name just a few. USB-C can handle all of these. So, why is this important to a USB-C docking station like the HyperDrive 12-Port USB-C hub? With this device, you have a single USB-C connection plugged into the MacBook, and this handles pretty much everything (including power). This is what drives my seven reasons why the HyperDrive is my favorite USB-C docking station. 
Reason #1 – Uses only one USB-C port on my MacBook Pro While the MBP that I have has four USB-C ports, it’s nice to not take them all up with different connections, especially if you want to have the easy ability to unplug and get on the go easily. With this single USB-C port plugged into the HyperDrive 12-Port USB-C Docking Station, I have everything connected that I need to have and still have three other ports available should I need to connect more devices. The 12-port version of this USB-C hub has a short, built-in USB-C cable that you plug into your Mac (or PC). This cable is the USB PD (Power Delivery) type, so not only will it charge your MBP, but it also provides all of the connectivity to the other ports contained within the hub. This built-in upstream USB-C cable can transfer 100W of power to charge and power your computer. And it supports Display Port (DP) 1.4 alt mode. Reason #2 – It has built-in Ethernet While WiFi continues to get faster, it’s still WiFi which means that you will encounter dead spots or potential WiFi congestion if you have many devices connected to WiFi. And WiFi is not as fast (yet) as a hard-wired connection like Ethernet. When I moved into my home, I ran ethernet cabling to every corner of the house so that I would have the ability to have hard-wired connections everywhere. Unfortunately, if you put your WiFi router in the center of your home when you go to the corners, the transmission speed is not as fast as when you are close to the WiFi router. So, I have an ethernet switch for my office, and connected to the switch is the HyperDrive 12-Port hub. There is a jack on the hub for the ethernet cable. This Ethernet port supports 10Mbps/100Mbps/1Gbps connections at either half or full-duplex connection. Reason #3 – Connect multiple monitors I honestly cannot work without having multiple screens. As I write this review, I have a 27″ 4K monitor, my laptop screen, and a portable USB-C display. 
If my desk could hold more displays, I would connect them (but I haven’t as of yet). Before having the HyperDrive docking station, I had a USB-C to HDMI adapter (and yes, that took up a USB-C port on my MBP – as did another adapter I used for USB-C to Ethernet). That meant that three of my four USB-C ports on my MBP were used up (display, ethernet, and power). What is great about the HyperDrive USB-C Docking Station is that it has the ability to connect up to three monitors. Built into the hub are two HDMI 4K (60Hz) HDR ports and one 4K (60Hz) DisplayPort. While I have seen some hubs that will only support mirroring of displays unless you install some kind of driver, these three ports are independent, and you can do mirroring OR have three extended displays. A quick note here – and this is not something that I have tested – but on macOS, while you CAN connect multiple monitors, the (up to 3) monitors will all be mirrored. So you could have four displays (including your Mac) showing the same screen, or you can have the three connected monitors showing the same (extended) screen and a different screen showing on your Mac itself. With Windows, you can do mirror mode (the same display on all screens) or do extended mode with different monitors showing different extended displays. See this FAQ for more details. I currently have my 27″ monitor as an extended display. Unfortunately, since the 12-port HyperDrive only has one extra USB-C port, which is used to connect the power supply from my laptop, I do have to plug that third display of mine directly into my MBP. But, this setup does allow me to have three distinct displays showing simultaneously. But again, the important thing to remember here is that for the Mac, there are no drivers or additional software you need to install to have this hub support multiple displays. You just plug it in, and it simply works! 
Reason #4 – It has the old USB-A ports as well Unfortunately, we are not going to escape from USB Type-A for a while. There are many, many devices and peripherals that still use this older standard. And luckily, the HyperDrive 12-port hub supports them. I actually have two USB external hard drives, a microphone, a webcam, and some other devices connected through the hub. Wait, you say! Those are more than four USB-A devices. Yes, I cheated a bit. I actually have a powered USB-A hub that I plug into the HyperDrive as well in order to support even more devices than the HyperDrive can handle. However, for the two external USB drives, I do plug them directly into the HyperDrive to get faster data transfer. The HyperDrive has four USB-A ports – two are the older (and slower) USB-A version 2.0, and two are the newer (and faster) USB-A 3.0. The USB-A 2.0 ports share 480Mbps bandwidth and have a 500mA power output. The USB-A 3.0 ports share 10Gbps bandwidth and have a 1.5A power output. Reason #5 – Support for SD cards Those of you who are digital photographers (and who don’t just use their smartphones as I do) will be happy to see the inclusion of Secure Digital (SD) cards. There is a slot for MicroSD and one for SD. These ports support the UHS-II SD 4.0 specification, and they are backward compatible. These slots support a maximum read/write speed of 312 MB/s and can handle SD cards up to 2TB in size. And, you can use both the SD and MicroSD slots to read/write simultaneously. So, if you use a DSLR or have a drone capturing 4K video and need to easily connect to your computer to download video or images, having these two SD slots within the HyperDrive hub is a huge blessing! Reason #6 – A (gasp) 3.5mm audio port Yes, the 3.5mm audio port still lives despite it being removed from modern smartphones. And while there IS still a 3.5mm audio port on my MBP, I’m wondering how long that port will continue to live in future generations. 
But one of the nice things is, using the 3.5mm audio jack allows you to connect speakers to the HyperDrive dock and leave the 3.5mm audio jack on your laptop free for headphones. The port is a 4-pole (TRRS) stereo port (384kHz, 32-bit max sampling rate) that also supports a 384kHz 32-bit microphone. This is a true stereo headphone and microphone port.

Reason #7 – It’s “cool” – literally!

One thing that I have noticed about various types of hubs is that they tend to heat up. This really isn’t surprising because there is a lot of information, data, and signals being transmitted through various distinct chips within these hubs. Add power to the hub, and there is a tendency for the hubs to carry a lot of heat.

The HyperDrive 12-port USB-C docking station, to my surprise, actually is only mildly warm to the touch. Yes, there is heat, but it’s not something you can fry an egg on (like some other hubs I have tested). This is due to the design of the hub itself. It has heat-dissipating ridges built into its design, and the metal used seems to disperse heat nicely.

Lucky 7 makes the HyperDrive Gen2 12-Port USB-C docking station a winner!

I’m sure I could have come up with more than seven reasons why this USB-C hub and docking station is my go-to favorite currently. However, the thing that I truly like best is that it simply works. You plug it in, connect your devices, and everything connects! No need for drivers or other utilities.

One problem though is the limited availability of the 12-port docking station. I guess with everybody working from home, there is high demand for hub solutions like these. The 12-port version is listed on the HyperShop site for $169.99. Unfortunately, it is currently not available on Amazon. However, the version that I really wanted to have, which is a bit pricier, IS available on Amazon (as well as on the HyperShop site). It is the 18-port solution.
To the 12-port, the 18-port adds:

VGA – to connect those really old monitors
Optical Toslink Audio – if you want digital audio connections
Digital Coaxial Audio – another audio option
USB-C ports – it’s nice to have additional USB-C ports
DC Power Port – you can get an optional power supply to power the entire hub and then don’t need to plug in a USB-C PD

The HyperDrive GEN2 USB-C 18-Port docking station is available on Amazon for $199.99. Note: this does NOT include the optional power supply – you have to purchase that separately at $99.99.

Lastly, if you are looking for a great portable hub solution, be sure to read my review of the HyperDrive Pro 8-in-2 Hub!

The bottom line here is, if you are looking for a high-quality USB-C docking station that handles a variety of different connectivity scenarios, you will want to avoid getting a bunch of single-purpose adapters and opt for a multi-function hub. The HyperShop 12-port USB-C Docking Station gives you the flexibility to connect multiple devices easily without any worry.

Disclosure: I have a material connection because I received a sample of a product for consideration in preparing to review the product and write this content. I was/am not expected to return this item after my review period. All opinions within this article are my own and are typically not subject to the editorial review from any 3rd party. Also, some of the links in the post above may be “affiliate” or “advertising” links.
These may be automatically created or placed by me manually. This means if you click on the link and purchase the item (sometimes but not necessarily the product or service being reviewed), I will receive a small affiliate or advertising commission. More information can be found on my About page.

HTD says: I honestly don’t think I could survive without the HyperShop 12-port USB-C Docking Station as it helps me connect monitors, hard drives, Ethernet, audio, and more while only using a single USB-C port on my MacBook Pro.

https://www.hightechdad.com/2021/09/06/7-reasons-why-the-hyperdrive-12-port-usb-c-hub-is-my-favorite-docking-station/

0 notes

Text

push vs pull

the other day i became angry at a webpage that popped up a non-dismissable modal. ordinarily when this happens i shrug and pop open devtools and move on my way (i also did that), but my rage stemmed from the fact that the modal was preventing me from viewing my own profile data. as the morning wore on, i stopped to reflect about why it angered me and what it meant (if anything).

First, I want to address a point before it comes up: many appeals against dark patterns are emotional (as the patterns themselves evoke strong reactions from people tricked by them). I want to suggest that if you are a numbers-oriented person, needlessly wasting your users' time is a failure of product even if doing so suggests increased engagement. The rest of this post is about repurposing the terms "pull" and "push" to describe who is prioritized in an interaction. If you want an emotional appeal against dark patterns, consider that you are robbing your users of their agency.

Secondly, i want to make clear that i get that everybody has their reasons. I'm writing this because the whole field of user-centered design stresses elevating the needs of your users but the legitimate realities of "doing business on the internet" are often at odds with users spending less time with your product.

later in the day i found myself rereading the optimization principles in The Toyota Way.

Principle 3

Use "pull" systems to avoid overproduction.

Here this means that a participant in a production system should request (pull) as much as it needs rather than reacting to a stream of supply pushed to it. One of the benefits of stressing "pull" over "push" is that it prioritizes the person doing the pulling over the system that is being pulled from (often commodities).

you can think of this like the distinction between "can you work on this right now" vs "when you finish up this project, just pick up one of the ones labeled ~~~~." if somebody is saying that to you, the second phrase lets you add new tasks to a pipeline whereas the first disrupts your existing task.
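to make the two phrasings concrete, here is a rough Python sketch. Everything in it (the `Worker` class, `receive_push`, `pull_from`, the task names) is made up for illustration and is not from The Toyota Way. It assumes a worker who can hold only one task at a time: under push, whoever hands over work picks the moment; under pull, the worker does.

```python
from collections import deque
from typing import Deque, List, Optional


class Worker:
    """Hypothetical worker that can hold only one task at a time."""

    def __init__(self) -> None:
        self.current: Optional[str] = None
        self.finished: List[str] = []
        self.interruptions = 0

    def finish_current(self) -> None:
        # Complete whatever is in progress, if anything.
        if self.current is not None:
            self.finished.append(self.current)
            self.current = None

    def receive_push(self, task: str) -> None:
        # Push: the sender picks the moment ("can you work on this right now").
        # Anything still in progress is displaced and never finished.
        if self.current is not None:
            self.interruptions += 1
        self.current = task

    def pull_from(self, backlog: Deque[str]) -> None:
        # Pull: the worker picks the moment ("when you finish up, grab the next one").
        self.finish_current()
        if backlog:
            self.current = backlog.popleft()


def run_push(tasks: List[str]) -> Worker:
    worker = Worker()
    for task in tasks:
        worker.receive_push(task)
    worker.finish_current()
    return worker


def run_pull(tasks: List[str]) -> Worker:
    worker = Worker()
    backlog = deque(tasks)
    while backlog:
        worker.pull_from(backlog)
    worker.finish_current()
    return worker


pushed = run_push(["a", "b", "c"])
pulled = run_pull(["a", "b", "c"])
print(pushed.finished, pushed.interruptions)
print(pulled.finished, pulled.interruptions)
```

in this toy model the same three tasks arrive either way; the only thing that changes is who decides when the handoff happens, and that alone decides whether earlier work gets finished.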

Principle 8

Use only reliable, thoroughly tested technology that serves your people and processes.

Technology is pulled by manufacturing, not pushed to manufacturing.

anyone that has ever used enterprise software can sympathize with this: you were doing your job and then somebody with an expense card–not you–decided to make everyone use ENTERPRISE PRODUCT for SOME TASK and so now you need to both use it and integrate it into your existing workflow even though the prior workflow may have had no apparent drawbacks (i get that new products are often purchased to remedy disparate ad-hoc and chaotic workflows).

the flipside to this is a team that asks for money to use some service/product/software/whatever because it knows it to be a useful benefit. for instance, when a team asks to use figma or babel-js or slack, they are pulling that technology because they envision that it will help them—they don't need to be sold on it, they want it.

who moved my cheese?

when people design features that impose upon their users it's easy to see some design that was pushed. a parallel of this is designing software with a breaking api change, which pushes a new way of working onto the library's consumers. If it was possible to do something before, but for whatever reason that same outcome now requires some new or different step, that Improved Feature™ will no longer feel like a feature, but instead like a regression or an annoyance.

you can think of word processors as additive in that their feature-set is largely pull-oriented. from the outset, you can begin with plain text, or progressively add formatting, or add footnotes, update margins, etc. Few of these additional changes break the original act of writing, they instead expand the choices possible. If you've never used a pivot table in excel it doesn't matter because you can still use excel to do your regular spreadsheet work but the pivot tables are there for you if you ever need them.

in his 2016 clojure conj keynote, rich hickey describes this variety of additive behavior as a sort of accretion of a library's featureset or a relaxation in its behavior (in opposition to the more standard behavior of requiring more or providing less which causes breakage). When you change or break the behavior of a library or product, even by just replacing the behavior of a prior functionality with a new one, you are imposing upon your consumers a new approach to working rather than allowing them to decide if a new approach would benefit them. You are pushing technology to your customers rather than allowing them to pull it from you.
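a small Python sketch of the difference between accretion and breakage, under loud assumptions: the `export_*` functions and `old_caller` are hypothetical names invented for this example, not anything from the keynote or from a real library. The accreted version adds an optional argument (it requires nothing new and provides more), while the breaking version makes that argument mandatory (it requires more).

```python
from typing import Callable, List, Sequence

Rows = Sequence[Sequence[object]]


def export_v1(rows: Rows) -> str:
    """Hypothetical original API: rows in, comma-separated text out."""
    return "\n".join(",".join(str(cell) for cell in row) for row in rows)


def export_accreted(rows: Rows, delimiter: str = ",") -> str:
    """Accretion: a new optional argument; existing calls behave as before."""
    return "\n".join(delimiter.join(str(cell) for cell in row) for row in rows)


def export_breaking(rows: Rows, delimiter: str) -> str:
    """Breakage: the new argument is required, so existing calls now fail."""
    return "\n".join(delimiter.join(str(cell) for cell in row) for row in rows)


def old_caller(export: Callable[..., str]) -> str:
    # Code written against export_v1; it knows nothing about delimiters.
    return export([[1, 2], [3, 4]])


print(old_caller(export_v1))
print(old_caller(export_accreted))
print(export_accreted([[1, 2], [3, 4]], delimiter="\t"))

try:
    old_caller(export_breaking)
except TypeError as error:
    print("pushed onto the caller:", error)
```

the old caller can ignore the accreted version's new option until it wants it (pull), but it has to be rewritten before it can use the breaking version at all (push).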

and for products, the reasons for embracing push-like behavior are many: if a user needs to accept a new license agreement, you may need a push-mechanism in order to gate further use of the product. if your business is crumbling, you may start relying on push behaviors to manufacture engagement, to collect data, or to rate-limit freeloaders (looking at you, tumblr and gsuite). if you are in something of a commoditized space, you may push proprietary data structures, hardware, or non-standardized technology in order to manufacture lock-in. Or perhaps it's just a financial choice for your team: it's more expensive to maintain two things than one. It's easier for you to make this change because it saves you time at the expense of your customers who are required to adapt to it.

for a service provider, pull-oriented mechanics are not exactly compelling in a business case: they have the capacity to incur very uncertain costs for you and they always expand your scope of maintenance. Instead of maintaining some plateau of features, you are always caring for more things, responsible for an increasingly larger surface area, responsible for backwards compatibility.

worse, pull-oriented services that accrete features require you to make a compelling case for any new feature set that is merely a repackaging of existing behavior. It is more difficult to advocate for a hobbled feature when a more capable progenitor sticks around.

dark patterns

dark patterns in web design exist in this fascinating middle-ground because they don't often block user-actions, instead, they take advantage of traditional user-flow mechanisms to misrepresent the meaning of a button or checkbox. they use your desire to perform one action to engage you in a separate one. they are reviled because they collect data during vulnerable moments or hobble freeloading engagement, trading your ability to see another page of content for your more valuable email address or phone number.

dark patterns that slow or limit functionality simply need to create enough curiosity to engage with the initial gate or desire to fulfil the final outcome. or, they rely on an extrinsic force to motivate the user along the final steps of an 'engagement chute'—just accept the eula.

But even dark patterns can be push vs pull: offering a discount is different than limiting site functionality. Forcing a user to scroll through pages of license agreement is onerous; the product could instead email the user the eula and leave the accept button enabled. dark patterns rely on the unintended consequences of a common action to motivate some non-obvious opt-in. users hate push-based dark patterns (for which, see anyone trying to cancel their nyt subscription or trying to browse pinterest or twitter logged out) but feel remarkable delight at receiving a eula in their email to read later while they accept its terms right now.

what people dislike about enterprise software is not that the software is necessarily bad (say what you will about git but people choose to use it). it's the disconnect between the choice of using the software and the employer-driven mandate of compliance: having software pushed onto you is the defining quality in enterprise malaise. Add to that an application that pushes capricious choices to its users and you have a recipe for disaster.

people talk a lot about "pushing some new changes out" to customers and it's almost always framed as a positive, but i think it's worth considering whether or not user-centered design should instead strive to create compelling new toolsets that their customers want to pull from. this is a harder product challenge for many teams because it requires creating an alternative that can stand on its own alongside a product that has an established track record. It's hard to convince somebody to give you more time/information/attention to keep doing what they were doing already, but not making that case and forcing a poor interaction is arguably worse.