#High-Quality Human Expert Data Labeling

Explore tagged Tumblr posts

Text

Generative AI | High-Quality Human Expert Labeling | Apex Data Sciences

Apex Data Sciences combines cutting-edge generative AI with RLHF for superior data labeling solutions. Get high-quality labeled data for your AI projects.

#GenerativeAI#AIDataLabeling#HumanExpertLabeling#High-Quality Data Labeling#Apex Data Sciences#Machine Learning Data Annotation#AI Training Data#Data Labeling Services#Expert Data Annotation#Quality AI Data#Generative AI Data Labeling Services#High-Quality Human Expert Data Labeling#Best AI Data Annotation Companies#Reliable Data Labeling for Machine Learning#AI Training Data Labeling Experts#Accurate Data Labeling for AI#Professional Data Annotation Services#Custom Data Labeling Solutions#Data Labeling for AI and ML#Apex Data Sciences Labeling Services

1 note

·

View note

Text

DeepSeek-R1: A New Era in AI Reasoning

A Chinese AI lab that has continuously been known to bring in groundbreaking innovations is what the world of artificial intelligence sees with DeepSeek. Having already tasted recent success with its free and open-source model, DeepSeek-V3, the lab now comes out with DeepSeek-R1, which is a super-strong reasoning LLM. While it’s an extremely good model in performance, the same reason which sets DeepSeek-R1 apart from other models in the AI landscape is the one which brings down its cost: it’s really cheap and accessible.

What is DeepSeek-R1?

DeepSeek-R1 is the next-generation AI model, created specifically to take on complex reasoning tasks. The model uses a mixture-of-experts architecture and possesses human-like problem-solving capabilities. Its capabilities are rivaled by the OpenAI o1 model, which is impressive in mathematics, coding, and general knowledge, among other things. The sole highlight of the proposed model is its development approach. Unlike existing models, which rely upon supervised fine-tuning alone, DeepSeek-R1 applies reinforcement learning from the outset. Its base version, DeepSeek-R1-Zero, was fully trained with RL. This helps in removing the extensive need of labeled data for such models and allows it to develop abilities like the following:

Self-verification: The ability to cross-check its own produced output with correctness.

Reflection: Learnings and improvements by its mistakes

Chain-of-thought (CoT) reasoning: Logical as well as Efficient solution of the multi-step problem

This proof-of-concept shows that end-to-end RL only is enough for achieving the rational capabilities of reasoning in AI.

Performance Benchmarks

DeepSeek-R1 has successfully demonstrated its superiority in multiple benchmarks, and at times even better than the others: 1. Mathematics

AIME 2024: Scored 79.8% (Pass@1) similar to the OpenAI o1.

MATH-500: Got a whopping 93% accuracy; it was one of the benchmarks that set new standards for solving mathematical problems.

2.Coding

Codeforces Benchmark: Rank in the 96.3rd percentile of the human participants with expert-level coding abilities.

3. General Knowledge

MMLU: Accurate at 90.8%, demonstrating expertise in general knowledge.

GPQA Diamond: Obtained 71.5% success rate, topping the list on complex question answering.

4.Writing and Question-Answering

AlpacaEval 2.0: Accrued 87.6% win, indicating sophisticated ability to comprehend and answer questions.

Use Cases of DeepSeek-R1

The multifaceted use of DeepSeek-R1 in the different sectors and fields includes: 1. Education and Tutoring With the ability of DeepSeek-R1 to solve problems with great reasoning skills, it can be utilized for educational sites and tutoring software. DeepSeek-R1 will assist the students in solving tough mathematical and logical problems for a better learning process. 2. Software Development Its strong performance in coding benchmarks makes the model a robust code generation assistant in debugging and optimization tasks. It can save time for developers while maximizing productivity. 3. Research and Academia DeepSeek-R1 shines in long-context understanding and question answering. The model will prove to be helpful for researchers and academics for analysis, testing of hypotheses, and literature review. 4.Model Development DeepSeek-R1 helps to generate high-quality reasoning data that helps in developing the smaller distilled models. The distilled models have more advanced reasoning capabilities but are less computationally intensive, thereby creating opportunities for smaller organizations with more limited resources.

Revolutionary Training Pipeline

DeepSeek, one of the innovations of this structured and efficient training pipeline, includes the following: 1.Two RL Stages These stages are focused on improved reasoning patterns and aligning the model’s outputs with human preferences. 2. Two SFT Stages These are the basic reasoning and non-reasoning capabilities. The model is so versatile and well-rounded.

This approach makes DeepSeek-R1 outperform existing models, especially in reason-based tasks, while still being cost-effective.

Open Source: Democratizing AI

As a commitment to collaboration and transparency, DeepSeek has made DeepSeek-R1 open source. Researchers and developers can thus look at, modify, or deploy the model for their needs. Moreover, the APIs help make it easier for the incorporation into any application.

Why DeepSeek-R1 is a Game-Changer

DeepSeek-R1 is more than just an AI model; it’s a step forward in the development of AI reasoning. It offers performance, cost-effectiveness, and scalability to change the world and democratize access to advanced AI tools. As a coding assistant for developers, a reliable tutoring tool for educators, or a powerful analytical tool for researchers, DeepSeek-R1 is for everyone. DeepSeek-R1, with its pioneering approach and remarkable results, has set a new standard for AI innovation in the pursuit of a more intelligent and accessible future.

4 notes

·

View notes

Text

To some extent, the significance of humans’ AI ratings is evident in the money pouring into them. One company that hires people to do RLHF and data annotation was valued at more than $7 billion in 2021, and its CEO recently predicted that AI companies will soon spend billions of dollars on RLHF, similar to their investment in computing power. The global market for labeling data used to train these models (such as tagging an image of a cat with the label “cat”), another part of the “ghost work” powering AI, could reach nearly $14 billion by 2030, according to an estimate from April 2022, months before the ChatGPT gold rush began.

All of that money, however, rarely seems to be reaching the actual people doing the ghostly labor. The contours of the work are starting to materialize, and the few public investigations into it are alarming: Workers in Africa are paid as little as $1.50 an hour to check outputs for disturbing content that has reportedly left some of them with PTSD. Some contractors in the U.S. can earn only a couple of dollars above the minimum wage for repetitive, exhausting, and rudderless work. The pattern is similar to that of social-media content moderators, who can be paid a tenth as much as software engineers to scan traumatic content for hours every day. “The poor working conditions directly impact data quality,” Krystal Kauffman, a fellow at the Distributed AI Research Institute and an organizer of raters and data labelers on Amazon Mechanical Turk, a crowdsourcing platform, told me.

Stress, low pay, minimal instructions, inconsistent tasks, and tight deadlines—the sheer volume of data needed to train AI models almost necessitates a rush job—are a recipe for human error, according to Appen raters affiliated with the Alphabet Workers Union-Communications Workers of America and multiple independent experts. Documents obtained by Bloomberg, for instance, show that AI raters at Google have as little as three minutes to complete some tasks, and that they evaluate high-stakes responses, such as how to safely dose medication. Even OpenAI has written, in the technical report accompanying GPT-4, that “undesired behaviors [in AI systems] can arise when instructions to labelers were underspecified” during RLHF.

18 notes

·

View notes

Text

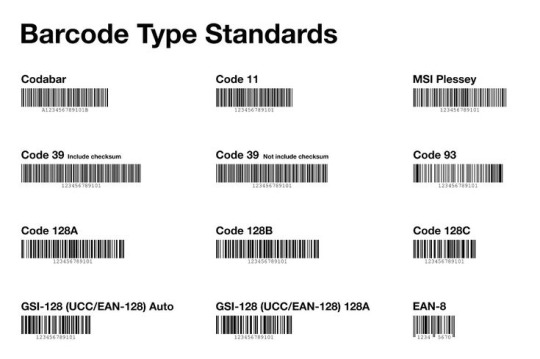

Barcode Definitions: Types, Benefits & Technology Explained 2025.

In today’s fast-paced business world, accurate and efficient product identification is essential. This is where Barcode Definitions come into play. Barcodes have revolutionized inventory management, retail checkout, and supply chain operations by providing a simple way to encode information visually. Understanding what barcodes are, the different types available, and their benefits is key to making smart business decisions. This article explores the fundamentals of barcodes, their modern technology, and how they can help businesses thrive.

What Are Barcodes? Basics and Core Definitions

At its simplest, a barcode is a machine-readable representation of data. Typically, it consists of a series of parallel black and white lines or geometric patterns that encode information such as product numbers, batch codes, or serial numbers. The concept of Barcode Definitions refers to understanding how these codes work and their various forms. Barcodes help automate the process of data entry, reducing human errors and speeding up operations.

Different Types of Barcodes: 1D, 2D, QR Codes & More

There are several types of barcodes, each designed for specific uses:

1D Barcodes (Linear Barcodes): The traditional barcodes most people recognize, composed of vertical lines of varying widths. Common examples include UPC and EAN codes found on retail products.

2D Barcodes: These include QR codes and Data Matrix codes, which store information in both horizontal and vertical dimensions. They can hold much more data than 1D barcodes and are often used for marketing, payments, and detailed product information.

QR Codes: A popular form of 2D barcode that can be scanned by smartphones, making them ideal for customer engagement and mobile marketing.

Understanding these Barcode Definitions helps businesses select the right barcode type for their needs.

Benefits of Using Barcodes in Business Operations

Barcodes provide numerous advantages that improve efficiency and accuracy:

Speed: Scanning barcodes is much faster than manual data entry.

Accuracy: Reduces human errors, ensuring the right information is captured every time.

Cost-Effective: Printing and scanning barcodes is affordable and scalable.

Inventory Control: Makes tracking stock levels simple and reliable.

Customer Experience: Speeds up checkout and order processing.

By embracing barcode technology, companies streamline their workflows, reduce costs, and improve customer satisfaction.

Modern Barcode Technologies: Scanners, Printers & Software

Barcode technology has evolved far beyond simple printed labels. Today’s systems include:

Barcode Scanners: From handheld laser scanners to mobile device cameras, these tools quickly read barcode data.

Barcode Printers: Specialized printers create high-quality labels for durable, long-lasting barcodes.

Label Software: Helps design and manage barcode labels, ensuring they meet industry standards.

These modern tools work together seamlessly to automate processes and provide real-time data to businesses.

How AIDC Technologies India Supports Barcode Implementation

AIDC Technologies India is a leading provider of automated identification and data capture solutions, including barcode scanners, printers, and software. They help businesses of all sizes understand the nuances of Barcode Definitions and implement the best solutions tailored to their unique needs. From retail to logistics and healthcare, AIDC Technologies ensures reliable, scalable, and cost-effective barcode systems are deployed. Their expert team also provides integration, training, and ongoing support to help clients maximize the benefits of barcode technology.

Key Advantages of Partnering with AIDC Technologies for Barcode Solutions

Working with AIDC Technologies India gives businesses access to:

Customized barcode solutions designed for specific industries

Advanced technology including latest scanners and printers

Comprehensive support and maintenance services

Expert consultation to integrate barcodes with existing systems

Training programs to ensure staff can use the technology effectively

This partnership empowers businesses to optimize inventory management, reduce errors, and increase operational efficiency.

The Future of Barcode Technology: AI, IoT & Smart Integration

Looking ahead, barcode technology will continue to evolve with advancements in AI and IoT. Smart warehouses will use connected barcode scanners and sensors to provide real-time data analytics. AI algorithms will help predict inventory needs and automate restocking. The Internet of Things (IoT) integration will allow barcode data to be part of a larger ecosystem, improving visibility and decision-making. Understanding these Barcode Definitions today prepares businesses for the smart technologies of tomorrow.

Conclusion & Call to Action: Enhance Your Business with AIDC Barcode Solutions

Barcodes remain a foundational technology in product identification and inventory management. By understanding Barcode Definitions and leveraging modern barcode technology, businesses can greatly improve their operations and customer experiences. If you’re ready to take your business to the next level with reliable, efficient barcode solutions, look no further than AIDC Technologies India.

Book Now with AIDC Technologies India to explore customized barcode solutions that fit your business needs perfectly.

#BarcodeTechnology2025#ModernBarcodeTypes#BarcodeBenefitsExplained#SmartInventorySolutions#DigitalLabelingTrends#BarcodeVsQR2025#RetailAutomation2025#AIDCIndiaInsights

0 notes

Text

Why Do Companies Outsource Text Annotation Services?

Building AI models for real-world use requires both the quality and volume of annotated data. For example, marking names, dates, or emotions in a sentence helps machines learn what those words represent and how to interpret them.

At its core, different applications of AI models require different types of annotations. For example, natural language processing (NLP) models require annotated text, whereas computer vision models need labeled images.

While some data engineers attempt to build annotation teams internally, many are now outsourcing text annotation to specialized providers. This approach speeds up the process and ensures accuracy, scalability, and access to professional text annotation services for efficient, cost-effective AI development.

In this blog, we will delve into why companies like Cogito Tech offer the best, most reliable, and compliant-ready text annotation training data for the successful deployment of your AI project. What are the industries we serve, and why is outsourcing the best option so that you can make an informed decision!

What is the Need for Text Annotation Training Datasets?

A dataset is a collection of learning information for the AI models. It can include numbers, images, sounds, videos, or words to teach machines to identify patterns and make decisions. For example, a text dataset may consist of thousands of customer reviews. An audio dataset might contain hours of speech. A video dataset could have recordings of people crossing the street.

Text annotation services are crucial for developing language-specific or NLP models, chatbots, applying sentiment analysis, and machine translation applications. These datasets label parts of text, such as named entities, sentiments, or intent, so algorithms can learn patterns and make accurate predictions. Industries such as healthcare, finance, e-commerce, and customer service rely on annotated data to build and refine AI systems.

At Cogito Tech, we understand that high-quality reference datasets are critical for model deployment. We also understand that these datasets must be large enough to cover a specific use case for which the model is being built and clean enough to avoid confusion. A poor dataset can lead to a poor AI model.

How Do Text Annotation Companies Ensure Scalability?

Data scientists, NLP engineers, and AI researchers need text annotation training datasets for teaching machine learning models to understand and interpret human language. Producing and labeling this data in-house is not easy, but it is a serious challenge. The solution to this is seeking professional help from text annotation companies.

The reason for this is that as data volumes increase, in-house annotation becomes more challenging to scale without a strong infrastructure. Data scientists focusing on labeling are not able to focus on higher-level tasks like model development. Some datasets (e.g., medical, legal, or technical data) need expert annotators with specialized knowledge, which can be hard to find and expensive to employ.

Diverting engineering and product teams to handle annotation would have slowed down core development efforts and compromised strategic focus. This is where specialized agencies like ours come into play to help data engineers support their need for training data. We also provide fine-tuning, quality checks, and compliant-labeled training data, anything and everything that your model needs.

Fundamentally, data labeling services are needed to teach computers the importance of structured data. For instance, labeling might involve tagging spam emails in a text dataset. In a video, it could mean labeling people or vehicles in each frame. For audio, it might include tagging voice commands like “play” or “pause.”

Why is Text Annotation Services in Demand?

Text is one of the most common data types used in AI model training. From chatbots to language translation, text annotation companies offer labeled text datasets to help machines understand human language.

For example, a retail company might use text annotation to determine whether customers are happy or unhappy with a product. By labeling thousands of reviews as positive, negative, or neutral, AI learns to do this autonomously.

As stated in Grand View Research, “Text annotation will dominate the global market owing to the need to fine-tune the capacity of AI so that it can help recognize patterns in the text, voices, and semantic connections of the annotated data”.

Types of Text Annotation Services for AI Models

Annotated textual data is needed to help NLP models understand and process human language. Text labeling companies utilize different types of text annotation methods, including:

Named Entity Recognition (NER) NER is used to extract key information in text. It identifies and categorizes raw data into defined entities such as person names, dates, locations, organizations, and more. NER is crucial for bringing structured information from unstructured text.

Sentiment Analysis It means identifying and tagging the emotional tone expressed in a piece of textual information, typically as positive, negative, or neutral. This is commonly used to analyze customer reviews and social media posts to review public opinion.

Part-of-Speech (POS) Tagging It refers to adding metadata like assigning grammatical categories, such as nouns, pronouns, verbs, adjectives, and adverbs, to each word in a sentence. It is needed for comprehending sentence structure so that the machines can learn to perform downstream tasks such as parsing and syntactic analysis.

Intent Classification Intent classification in text refers to identifying the purpose behind a user’s input or prompt. It is generally used in the context of conversational models so that the model can classify inputs like “book a train,” “check flight,” or “change password” into intents and enable appropriate responses for them.

Importance of Training Data for NLP and Machine Learning Models

Organizations must extract meaning from unstructured text data to automate complex language-related tasks and make data-driven decisions to gain a competitive edge.

The proliferation of unstructured data, including text, images, and videos, necessitates text annotation to make this data usable as it powers your machine learning and NLP systems.

The demand for such capabilities is rapidly expanding across multiple industries:

Healthcare: Medical professionals employed by text annotation companies perform this annotation task to automate clinical documentation, extract insights from patient records, and improve diagnostic support.

Legal: Streamlining contract analysis, legal research, and e-discovery by identifying relevant entities and summarizing case law.

E-commerce: Enhancing customer experience through personalized recommendations, automated customer service, and sentiment tracking.

Finance: In order to identify fraud detection, risk assessment, and regulatory compliance, text annotation services are needed to analyze large volumes of financial text data.

By investing in developing and training high-quality NLP models, businesses unlock operational efficiencies, improve customer engagement, gain deeper insights, and achieve long-term growth.

Now that we have covered the importance, we shall also discuss the roadblocks that may come in the way of data scientists and necessitate outsourcing text annotation services.

Challenges Faced by an In-house Text Annotation Team

Cost of hiring and training the teams: Having an in-house team can demand a large upfront investment. This refers to hiring, recruiting, and onboarding skilled annotators. Every project is different and requires a different strategy to create quality training data, and therefore, any extra expenses can undermine large-scale projects.

Time-consuming and resource-draining: Managing annotation workflows in-house often demands substantial time and operational oversight. The process can divert focus from core business operations, such as task assignments, to quality checks and revisions.

Requires domain expertise and consistent QA: Though it may look simple, in actual, text annotation requires deep domain knowledge. This is especially valid for developing task-specific healthcare, legal, or finance models. Therefore, ensuring consistency and accuracy across annotations necessitates a rigorous quality assurance process, which is quite a challenge in terms of maintaining consistent checks via experienced reviewers.

Scalability problems during high-volume annotation tasks: As annotation needs grow, scaling an internal team becomes increasingly tough. Expanding capacity to handle large influx of data volume often means getting stuck because it leads to bottlenecks, delays, and inconsistency in quality of output.

Outsource Text Annotation: Top Reasons and ROI Benefits

The deployment and success of any model depend on the quality of labeling and annotation. Poorly labeled information leads to poor results. This is why many businesses choose to partner with Cogito Tech because our experienced teams validate that the datasets are tagged with the right information in an accurate manner.

Outsourcing text annotation services has become a strategic move for organizations developing AI and NLP solutions. Rather than spending time managing expenses, businesses can benefit a lot from seeking experienced service providers. Mentioned below explains why data scientists must consider outsourcing:

Cost Efficiency: Outsourcing is an economical way that can significantly reduce labor and infrastructure expenses compared to hiring internal workforce. Saving costs every month in terms of salary and infrastructure maintenance costs makes outsourcing a financially sustainable solution, especially for startups and scaling enterprises.

Scalability: Outsourcing partners provide access to a flexible and scalable workforce capable of handling large volumes of text data. So, when the project grows, the annotation capacity can increase in line with the needs.

Speed to Market: Experienced labeling partners bring pre-trained annotators, which helps projects complete faster and means streamlined workflows. This speed helps businesses bring AI models to market more quickly and efficiently.

Quality Assurance: Annotation providers have worked on multiple projects and are thus professional and experienced. They utilize multi-tiered QA systems, benchmarking tools, and performance monitoring to ensure consistent, high-quality data output. This advantage can be hard to replicate internally.

Focus on Core Competencies: Delegating annotation to experts has one simple advantage. It implies that the in-house teams have more time refining algorithms and concentrate on other aspects of model development such as product innovation, and strategic growth, than managing manual tasks.

Compliance & Security: A professional data labeling partner does not compromise on following security protocols. They adhere to data protection standards such as GDPR and HIPAA. This means that sensitive data is handled with the highest level of compliance and confidentiality. There is a growing need for compliance so that organizations are responsible for utilizing technology for the greater good of the community and not to gain personal monetary gains.

For organizations looking to streamline AI development, the benefits of outsourcing with us are clear, i.e., improved quality, faster project completion, and cost-effectiveness, all while maintaining compliance with trusted text data labeling services.

Use Cases Where Outsourcing Makes Sense

Outsourcing to a third party rather than performing it in-house can have several benefits. The foremost advantage is that our text annotation services cater to the needs of businesses at multiple stages of AI/ML development, which include agile startups to large-scale enterprise teams. Here’s how:

Startups & AI Labs Quality and reliable text training data must comply with regulations to be usable. This is why early-stage startups and AI research labs often need compliant labeled data. When startups choose top text annotation companies, they save money on building an internal team, helping them accelerate development while staying lean and focused on innovation.

Enterprise AI Projects Big enterprises working on production-grade AI systems need scalable training datasets. However, annotating millions of text records at scale is challenging. Outsourcing allows enterprises to ramp up quickly, maintain annotation throughput, and ensure consistent quality across large datasets.

Industry-specific AI Models Sectors such as legal and healthcare need precise and compliant training data because they deal with personal data that may violate individual rights while training models. However, experienced vendors offer industry-trained professionals who understand the context and sensitivity of the data because they adhere to regulatory compliance, which benefits in the long-term and model deployment stages.

Conclusion

There is a rising demand for data-driven solutions to support this innovation, and quality-annotated data is a must for developing AI and NLP models. From startups building their prototypes to enterprises deploying AI at scale, the demand for accurate, consistent, and domain-specific training data remains.

However, managing annotation in-house has significant limitations, as discussed above. Analyzing return on investment is necessary because each project has unique requirements. We have mentioned that outsourcing is a strategic choice that allows businesses to accelerate project deadlines and save money.

Choose Cogito Tech because our expertise spans Computer Vision, Natural Language Processing, Content Moderation, Data and Document Processing, and a comprehensive spectrum of Generative AI solutions, including Supervised Fine-Tuning, RLHF, Model Safety, Evaluation, and Red Teaming.

Our workforce is experienced, certified, and platform agnostic to accomplish tasks efficiently to give optimum results, thus reducing the cost and time of segregating and categorizing textual data for businesses building AI models. Original Article : Why Do Companies Outsource Text Annotation Services?

#text annotation#text annotation service#text annotation service company#cogitotech#Ai#ai data annotation#Outsource Text Annotation Services

0 notes

Text

SuperHero AI Review: Create Content, Images & Voice in One Simple App

Introduction

Welcome to my Super Hero AI Review. Ever wish you had one tool that could do it all? Not just another AI app, but a real game-changer?

Super Hero AI is your all-in-one creative powerhouse. It’s like having a full team of writers, designers, coders, video editors, and virtual assistants — right inside one dashboard. No monthly fees. No steep learning curves. Just pure creative freedom.

This isn’t some hype tool. It’s powered by the world’s most advanced AI models — like GPT-4o, Gemini, DeepSeek, and DALL·E 3 HD.

That means you get 4K visuals, ultra-realistic voiceovers, custom chatbots, sales copy, blog posts, and even entire websites — done in seconds.

Let’s break it down.

What Is SuperHero AI?

SuperHero AI is your all-in-one control center for everything digital. It’s the first platform that hands you real AI superpowers — without the mess of using 10 different apps.

You log into one dashboard… and instantly start creating 4K or even 8K images, human-like voiceovers in 50+ languages, or full websites and marketing copy — all with just a few clicks.

It’s fast. It’s beginner-friendly. And yes — you can sell everything you create. No limits. No monthly bills. Just one dashboard… and endless possibilities.

How Does It Work

Step#1: Log In & Unlock Your Dashboard Once you grab access, you’ll land inside your personal AI dashboard. Everything you need is right there — image generators, voice tools, content writers, chatbots, coding tools.

Step#2: Choose What You Want to Create Want a high-quality image for social media? Done. Need a voiceover for your sales video? Easy. Writing a blog post? Creating a landing page? Building a chatbot? Just say the word.

All you do is type in what you want, and Super Hero AI figures out the rest. It’s like having a team of experts, but faster — and you’re the boss.

Step#3: Hit Generate & Watch the Magic Happen This is the fun part. Click “Generate,” and in a few seconds, your content is ready: Beautiful 4K images, Human-sounding voiceovers, Ready-to-publish articles and scripts, Websites and code you can sell or use, AI chatbots for your business or clients

It’s not just content — it’s business-ready power you can use, sell, or scale.

SuperHero AI Review — Features

1. Cinematic Strike (4K Image Generator) Generate realistic 4K images with avatars, effects, and backgrounds like a pro film editor — even if you have zero design skills.

2. VoiceMorph Power Create ultra-realistic voiceovers in 50+ languages. Choose tone, gender, style, and even accent. Perfect for YouTube, sales videos, and eLearning.

3. Sonic Boom Studio Edit and enhance your audio with pro-grade FX, background noise removal, voice mixing, and more.

4. AI Knowledge Hub Got a question? Ask your AI team anything — from SEO strategies to coding help — and get instant answers.

5. Reality Bender (Image Chat AI) Chat with your AI, describe any visual, and get it generated in real time.

6. Multi-Mind AI Assistant Command GPT-4o, Claude, Gemini, DeepSeek, DALL-E and more — all from one dashboard. No API keys needed.

7. CodeCraft Ability Generate code, scripts, landing pages, and marketing funnels with natural-language prompts.

8. Real-Time Web Data Get trending stats, real-time info, and live research results without opening a browser tab.

9. Mind Link File Chat Upload PDFs, DOCX, and TXT files — then chat with them to extract summaries, action steps, and insights instantly.

10. Client Project Manager Manage multiple client projects, deliver white-label content, and export assets directly.

11. Social Media Content Creator Create scroll-stopping content, thumbnails, captions & hashtags for TikTok, YouTube Shorts, Instagram, and more.

Read My Full Review>>>>

#SuperHeroAI#SuperHeroAIReview#SuperHeroAIoto#SuperHeroAIpricing#SuperHeroAIhonestreview#AIForBeginners#AIContentCreator#AIForBusiness#AIImageGenerator

0 notes

Text

Enhancing AI Alignment with Human Values Through RLHF

Reinforcement Learning from Human Feedback or RLHF relies on curated, high-quality data to teach AI systems nuanced, human-aligned responses. Expert data services play a critical role by providing labeled examples and human-reviewed feedback, ensuring AI behaves ethically, adapts intelligently, and delivers context-aware results across diverse real-world applications.

0 notes

Text

From Drones to Docks: Precision Annotations for Smarter Seas

We translate drone vision into structured intelligence. Wisepl specialize in meticulously crafted data annotation that powers AI to detect, classify, and track ships with unmatched accuracy.

Whether it’s identifying cargo vessels in vast oceans or spotting fishing boats near coastlines, our expert annotators draw every pixel with purpose - fueling machine learning models for maritime surveillance, defense, logistics, and climate studies.

What sets us apart?

High-precision polygon & bounding box annotations

Expertise in drone-captured geospatial datasets

Scalable, secure & quality-checked pipeline

Human-in-the-loop workflows with smart reviews

We don’t just label ships | We empower algorithms to understand the sea.

Ready to Set Sail with AI? Let’s talk about how our annotations can unlock the full potential of your drone data. www.wisepl.com | [email protected]

#ShipDetection#DroneData#DataAnnotation#ImageAnnotation#DataLabeling#WiseplAI#MaritimeAI#GeospatialIntelligence#SurveillanceTech#AIForGood#DefenseAI#ComputerVision#MachineLearning#PrecisionAnnotation#AIDrivenOceans#Wisepl

0 notes

Text

Online vs. Offline Learning in 2025: Which One Wins?

Education in 2025 is undergoing a transformative phase, shaped by rapid technological advancements and shifting learner needs. As students, parents, and educators reevaluate traditional norms, a major debate has emerged—online learning versus offline learning. Which mode is more effective? More accessible? More aligned with the demands of the modern world? The answers aren't straightforward, but by examining real data, education industry trends 2025, and expert insights, we can start to understand the evolving future of education technology.

Rise of Online Learning Platforms

Since the global push toward remote learning in 2020, online education has grown exponentially. Today, in 2025, leading platforms offer a wide range of courses tailored to all age groups, from preschool to professional upskilling. Students can access flexible learning schedules, high-quality content, and even real-time interaction with global experts.

A report by the International Education Review Board revealed that 68% of students in higher education now prefer hybrid or online-only learning models. With integrated gamification tools, AI-powered tutors, and adaptive learning systems, it’s clear that educational technology trends are driving innovation like never before.

Offline Learning Still Holds Value

Despite the surge in digital formats, traditional classrooms remain relevant. Physical learning environments offer interpersonal engagement, structured routines, and hands-on practice, especially in vocational and lab-based studies.

A case study conducted by Delhi University found that students attending in-person lectures scored 17% higher in group projects compared to their online peers. Teachers cited better engagement and communication. It suggests that while digital tools enhance learning, they can’t always replace the human connection inherent in interesting education related articles.

Expert Opinions: Striking a Balance

Dr. Maya Ranganathan, a learning psychologist, notes, “The goal shouldn't be to replace offline learning but to blend the best of both worlds.” Hybrid models that combine online flexibility with offline mentorship offer the most effective learning outcomes.

Policymakers are taking notice. Governments are now funding infrastructure for both digital access and offline teacher training. A Ministry of Education report labeled this dual investment a key part of their career guidance and education strategy for the next decade.

Data Speaks: Comparing Performance Metrics

Recent statistics from Coursera and the Indian Ministry of Higher Education provide a data-driven snapshot of where both methods stand:

Online learners complete certifications 35% faster.

Offline students have 22% higher retention rates.

Hybrid learners show a 28% improvement in concept application skills.

Clearly, both models have strengths. Online platforms promote independent study and time management, while offline methods foster collaboration and discipline. This balanced perspective is crucial when reading education related blogs and making decisions for student development.

Real Case Study: Government Exam Prep

Take the example of Aditi Sharma, a UPSC aspirant. She prepared using a combination of YouTube lectures, app-based mock tests, and local coaching centers. "Without the online mock exams, I wouldn't have improved my timing. But doubt-clearing sessions at my center kept me grounded," she shared. Her experience reflects the need for diverse education tips and advice.

Educators also stress the importance of physical classroom discipline for competitive exams. Teachers say students absorb more in focused environments without digital distractions. Still, those who blend both methods tend to outperform others.

What Students and Parents Say

Surveys conducted by EdTech firms reveal that 74% of parents prefer a hybrid approach, mainly for better monitoring and flexibility. Students say online tools save time, allowing more freedom to pursue career opportunity fields like digital marketing, design, and IT.

Top education related articles point out that learners today want autonomy and interactivity. They're not just consuming content but engaging with it. It's a shift that redefines how we view both learning styles.

Future of Learning: What Lies Ahead?

Looking forward, trends like VR classrooms, AI grading, and blockchain certification are redefining what "school" means. The future of education technology is no longer limited to digital whiteboards. It now includes immersive experiences and personalized learning paths.

EdTech startups are also integrating health and wellness tips and health self care tips into platforms, acknowledging the holistic needs of modern students. Schools are not just about academics anymore but about producing emotionally and physically resilient individuals.

Leading platforms like Coursera, Khan Academy, and Indian platforms like BYJU'S are investing in this well-rounded approach. Many now offer free mental health support and career counseling as part of their service.

Role of Content and Digital Skills

Blogs like ours, recognized among the best educational blogs, aim to provide actionable insights, updated education related articles, and the latest news. Whether you're reading about government exam tips or exploring the Best Online Digital Marketing Course, curated information plays a major role in guiding learners.

Moreover, understanding SEO tips and tricks is now a basic skill in many online courses. Students are being trained not just for exams but for the digital economy, where branding and visibility are crucial.

FAQs

Q1. Is online learning better than offline learning in 2025?

A: Not necessarily. Each has unique advantages. Online is flexible and scalable, while offline offers structure and face-to-face interaction.

Q2. What are the top trends in educational technology?

A: Key trends include AI tutors, VR classrooms, blockchain credentials, and integrated wellness modules.

Q3. Are hybrid models the future of education?

A: Yes. Hybrid learning is being increasingly adopted due to its flexibility and effectiveness.

Q4. How do students perform in online vs. offline exams?

A: Online students tend to complete tasks faster, while offline students show better retention. Hybrid learners often outperform both.

Q5. Where can I find authentic education tips and advice?

A: Reputable blogs, educational websites, and government portals are great sources for education tips and advice.

Final Thoughts

So, who wins in the battle of online vs. offline learning in 2025? The answer is both—when used wisely. A blended approach, aligned with the learner's goals and environment, offers the most promise.

What learning format do you or your child prefer, and why? Share your experience in the comments below!

1 note

·

View note

Text

How Much Does It Cost to Build an AI Video Agent? A Comprehensive 2025 Guide

In today’s digital era, video content dominates the online landscape. From social media marketing to corporate training, video is the most engaging medium for communication. However, creating high-quality videos requires time, skill, and resources. This is where AI Video Agents come into play- automated systems designed to streamline video creation, editing, and management using cutting-edge technology.

If you’re considering investing in an AI Video Agent, one of the first questions you’ll ask is: How much does it cost to build one? This comprehensive guide will walk you through the key factors, cost breakdowns, and considerations involved in developing an AI Video Agent in 2025. Whether you’re a startup, multimedia company, or enterprise looking for advanced AI Video Solutions, this article will help you understand what to expect.

What Is an AI Video Agent?

An AI Video Agent is a software platform that leverages artificial intelligence to automate and enhance various aspects of video production. This includes:

AI video editing: Automatically trimming, color grading, adding effects, or generating subtitles.

AI video generation: Creating videos from text, images, or data inputs without manual filming.

Video content analysis: Understanding video context, tagging scenes, or summarizing content.

Personalization: Tailoring video content to specific audiences or user preferences.

Integration: Seamlessly working with other marketing, analytics, or content management systems.

These capabilities make AI Video Agents invaluable for businesses seeking scalable, efficient, and cost-effective video creation workflows.

Why Are AI Video Agents in Demand?

The rise of video marketing, e-learning, and digital entertainment has created an urgent need for faster and smarter video creation tools. Traditional video editing and production are labor-intensive and expensive, often requiring skilled professionals and expensive equipment.

AI Video Applications can:

Accelerate video production timelines.

Reduce human error and repetitive tasks.

Enable non-experts to create professional-quality videos.

Provide data-driven insights to optimize video content.

Support multi-language and multi-format video creation.

This explains why many companies are partnering with AI Video Solutions Companies or investing in AI Video Software Development to build custom AI video creators tailored to their needs.

Key Components of an AI Video Agent

Before diving into costs, it’s important to understand what goes into building an AI Video Agent. The main components include:

1. Data Collection and Preparation

AI video creators rely heavily on large datasets of annotated videos, images, and audio to train machine learning models. This step involves:

Collecting diverse video samples.

Labeling and annotating key features (e.g., objects, scenes, speech).

Cleaning and formatting data for training.

2. Model Development and Training

This is the core AI development phase where algorithms are designed and trained to perform tasks such as:

Video segmentation and object detection.

Natural language processing for script-to-video generation.

Style transfer and video enhancement.

Automated editing decisions.

Deep learning models, including convolutional neural networks (CNNs) and transformers, are commonly used.

3. Software Engineering and UI/UX Design

Developers build the user interface and backend systems that allow users to interact with the AI video editor or generator. This includes:

Web or mobile app development.

Cloud infrastructure for processing and storage.

APIs for integration with other platforms.

4. Integration and Deployment

The AI Video Agent needs to be integrated with existing workflows, such as content management systems, marketing automation tools, or social media platforms. Deployment may involve cloud services like AWS, Azure, or Google Cloud.

5. Testing and Quality Assurance

Extensive testing ensures the AI video creation tool works reliably across different scenarios and devices.

6. Maintenance and Updates

Post-launch support includes fixing bugs, updating models with new data, and adding features.

Detailed Cost Breakdown

The cost of building an AI Video Agent varies widely depending on complexity, scale, and specific requirements. Below is a detailed breakdown of typical expenses.

Component

Estimated Cost Range (USD)

Notes

Data Collection & Preparation

$10,000 – $100,000+

Larger, high-quality datasets increase costs; proprietary data is pricier.

Model Development & Training

$30,000 – $200,000+

Advanced deep learning models require more time and computational resources.

Software Engineering

$40,000 – $150,000+

Includes frontend, backend, UI/UX, cloud infrastructure, and APIs.

Integration & Deployment

$10,000 – $50,000+

Depends on the number and complexity of integrations.

Licensing & Tools

$5,000 – $50,000+

Third-party SDKs, cloud compute costs, and software licenses.

Testing & QA

$5,000 – $20,000+

Ensures reliability and user experience.

Maintenance & Updates (Annual)

$10,000 – $40,000+

Ongoing support, bug fixes, and model retraining.

Example Cost Scenarios

Basic AI Video Agent

Features: Automated trimming, captioning, simple effects.

Target users: Small businesses, content creators.

Estimated cost: $20,000 – $50,000.

Timeframe: 3-6 months.

Intermediate AI Video Agent

Features: Script-to-video generation, multi-language support, style transfer.

Target users: Marketing agencies, multimedia companies.

Estimated cost: $100,000 – $250,000.

Timeframe: 6-12 months.

Advanced AI Video Agent

Features: Real-time video editing, deep personalization, multi-format export, enterprise integrations.

Target users: Large enterprises, AI Video Applications Companies.

Estimated cost: $300,000+.

Timeframe: 12+ months.

Factors That Influence Cost

1. Feature Complexity

More advanced features, such as AI clip generator capabilities, voice synthesis, or 3D video creation, significantly increase development time and cost.

2. Data Quality and Quantity

High-quality, diverse datasets are crucial for effective AI video creation tools. Licensing proprietary datasets or creating custom datasets can be expensive.

3. Platform and Deployment

Building a cloud-based AI video creation tool with scalable infrastructure costs more than a simple desktop application.

4. Customization Level

Tailoring the AI Video Agent to specific industries (e.g., healthcare, education) or branding requirements adds to the cost.

5. Team Expertise

Hiring experienced AI developers, data scientists, and multimedia engineers commands premium rates but ensures better results.

Alternatives to Building From Scratch

If your budget is limited or you want to test the waters, several best AI video generators and AI video maker platforms offer ready-made solutions:

Synthesia: AI video creator focused on avatar-based videos.

Runway: AI video editor with creative tools.

Lumen5: AI-powered video creation from blog posts.

InVideo: Easy-to-use AI video generator for marketers.

These platforms offer subscription-based pricing, allowing you to create video with AI without a heavy upfront investment.

How to Choose the Right AI Video Solutions Company

When partnering with an AI Video Solutions Company or AI Video Software Company, consider these factors:

Proven track record: Look for companies with successful AI video projects.

Transparency: Clear pricing and project timelines.

Technical expertise: Experience in AI for video creation and multimedia development.

Customization capabilities: Ability to tailor solutions to your unique needs.

Support and maintenance: Reliable post-launch assistance.

The Future of AI Video Creation

As AI technology advances, the cost of building AI Video Agents is expected to decrease due to improved tools, open-source frameworks, and more efficient algorithms. Meanwhile, the capabilities will expand to include:

Hyper-personalized video marketing.

Real-time interactive video content.

AI-powered video analytics and optimization.

Integration with AR/VR and metaverse platforms.

Investing in AI video creation tools today positions your business to stay ahead in the evolving multimedia landscape.

Conclusion

Building an AI Video Agent is a significant but rewarding investment. Depending on your requirements, the cost can range from $20,000 for a basic AI video editor to over $300,000 for a sophisticated enterprise-grade AI video creation tool. Understanding the components, cost drivers, and alternatives will help you make informed decisions.

Whether you want to develop a custom AI video generator or leverage existing AI video creation tools, partnering with the right AI Video Applications Company or multimedia company is crucial. With the right strategy, you can harness AI for video creation to boost engagement, reduce production costs, and accelerate your content pipeline.

0 notes

Text

Industry 4.0 possibilities beyond Imagination

The term Industry 4.0 is more than just a buzzword; it’s the reality of today’s smart factories, automated warehouses, and connected businesses. By blending automation, IoT, data analytics, and artificial intelligence, Industry 4.0 opens doors to improvements once thought impossible. It’s changing how products are made, stored, and delivered, pushing businesses far beyond traditional limits.

The Core of Industry 4.0: Smart Automation and Connected Systems

At the heart of Industry 4.0 is the idea of intelligent automation. This doesn’t just mean machines working faster; it means machines working smarter. Connected production lines can identify bottlenecks in real time, automatically adjust to demand changes, and keep downtime to a minimum. For businesses, this results in higher efficiency, less waste, and improved product quality—turning everyday operations into seamless processes.

How IoT is Transforming Modern Manufacturing and Operations

IoT, or the Internet of Things, is a key pillar of Industry 4.0. In factories and warehouses, IoT devices collect data from equipment, products, and even employees. This information helps managers track inventory, schedule maintenance, and spot issues before they cause delays. For instance, sensors on a production line can alert teams to wear and tear, reducing unexpected breakdowns. The result is a smarter, data-driven way to manage operations.

Real-Time Data and Predictive Analytics in Industry 4.0

Industry 4.0 goes beyond just gathering data—it uses that data to make better decisions. Predictive analytics turns past and present information into insights about what might happen next. For example, by analyzing data, a factory might predict when a machine will fail and schedule repairs in advance. This proactive approach saves money and keeps production on track, turning data into one of the most valuable assets a business can have.

Smarter Warehousing & Logistics Enabled by IoT and Automation

The warehouse is one area where Industry 4.0 truly shines. Automated guided vehicles (AGVs) and robotic arms help sort, move, and store products faster than ever. IoT-enabled shelves update inventory levels in real time, while barcode and RFID systems ensure every product can be traced instantly. Together, these technologies speed up shipping, cut down errors, and improve customer satisfaction by ensuring products are always available when needed.

Role of RFID, Barcode, and Smart Sensors in Industry 4.0

While high-tech robots and AI often get the spotlight, the success of Industry 4.0 relies heavily on simpler technologies like RFID, barcode labels, and sensors. RFID tags track product movement without line-of-sight scanning, while barcode systems provide a cost-effective way to label and trace products. Smart sensors monitor temperature, humidity, and equipment health. All these tools create a detailed, real-time picture of operations, making smarter decisions possible.

Benefits of Embracing Industry 4.0 for Businesses of All Sizes

Industry 4.0 isn’t just for large corporations—it offers valuable benefits for businesses of every size:

Increased productivity: Automation and IoT free staff from repetitive tasks.

Improved accuracy: Data-driven systems reduce human errors.

Cost savings: Predictive maintenance lowers repair costs.

Better customer service: Faster order fulfillment and real-time updates. By embracing Industry 4.0, companies stay competitive, grow faster, and adapt to changing market demands more easily.

AIDC Technologies: Helping Businesses Navigate the Industry 4.0 Shift

AIDC Technologies India plays a vital role in making Industry 4.0 accessible to businesses across sectors. As experts in automated identification and data capture, they offer solutions like barcode scanners, RFID systems, and label generators. But they don’t just provide hardware—they guide businesses through system integration, training, and ongoing support. Whether it's a small retailer starting with barcode labelling or a large manufacturer adopting RFID and IoT, AIDC Technologies India ensures every client has the right tools and know-how to succeed.

Seamless Integration of Legacy Systems with New Smart Solutions

One challenge businesses face when moving toward Industry 4.0 is how to connect old systems with new technologies. AIDC Technologies India understands this challenge and helps clients bridge the gap. By integrating barcode scanners, RFID systems, and data analytics tools with existing ERP and warehouse systems, businesses can modernize step by step—without costly overhauls. This makes the journey to Industry 4.0 smoother and more affordable.

Future Trends: AI, Edge Computing, and Beyond in Industry 4.0

As technology advances, Industry 4.0 is evolving further. Artificial intelligence will make systems even more autonomous, while edge computing will process data closer to where it’s created, reducing delays. Augmented reality may help workers visualize data directly on equipment, and 5G networks will enable faster, real-time communication between devices. By staying updated on these trends, businesses can continue to innovate and maintain their competitive edge.

Conclusion & Call to Action: Explore the Power of Industry 4.0 with AIDC Technologies

Industry 4.0 brings possibilities beyond what many could have imagined just a decade ago. From smarter manufacturing and predictive analytics to automated warehouses and connected supply chains, the benefits are real and measurable.

If your business is ready to embrace this transformation, AIDC Technologies India can guide you every step of the way. With experience, expertise, and a commitment to client success, they help turn Industry 4.0 ideas into reality.

Book Now with AIDC Technologies India and unlock the full potential of Industry 4.0 for your business.

#Industry40Revolution#SmartManufacturing2025#AIoTInnovation#DigitalTransformationNow#BeyondImaginationTech#NextGenIndustry#AutomationAndAI

0 notes

Text

What Makes Linpack’s Packaging Machines Stand Out?

In a market saturated with packaging solutions, Linpack has steadily risen as a recognized leader, providing high-quality, efficient, and reliable packaging machines. Whether it’s for food, pharmaceuticals, cosmetics, or industrial products, Linpack continues to meet diverse industry demands with cutting-edge technology and an unwavering commitment to innovation. But what truly sets Linpack apart from the competition? In this blog, we’ll explore the unique attributes and strengths that make Linpack’s packaging machines a preferred choice for businesses around the globe.

1. Advanced Technology Integration

One of the most defining characteristics of Linpack’s packaging machines is the integration of state-of-the-art technology. Their machines are equipped with advanced automation systems, smart sensors, and intelligent interfaces that enhance precision and productivity. pouch packaging machines Whether it’s form-fill-seal machines or vacuum packaging systems, Linpack ensures every product is designed for maximum efficiency with minimal human intervention.

Moreover, many of their machines support IoT-enabled controls, which allow remote monitoring and real-time data analysis. This tech-savvy approach not only streamlines operations but also significantly reduces downtime, making Linpack a future-ready partner in any production environment.

2. Customization to Industry Needs

Linpack understands that one-size-fits-all rarely works in manufacturing. Each industry has specific requirements, and Linpack has mastered the art of customized solutions. Whether it’s the size of the pouch, the type of product (powder, liquid, granular), or the packaging material used, Linpack provides tailored machines to suit exact specifications.

This high degree of customization makes their machines ideal for businesses ranging from small startups to large-scale industrial manufacturers. With Linpack, customers can choose from a wide array of machine types, including:

Pouch packing machines

Vacuum packing machines

Multi-head weighers

Vertical form-fill-seal (VFFS) machines

Automatic sealing and labeling systems

3. Exceptional Build Quality

Durability is key in the packaging industry, and Linpack delivers on this front with robust, long-lasting equipment. Every machine is engineered using high-grade stainless steel and corrosion-resistant materials, ensuring they can withstand continuous operation even in challenging environments.

This commitment to quality not only reduces maintenance costs but also ensures consistent performance for years. The sturdy design also enhances the safety of machine operators, thanks to user-friendly panels, vacuum packaging machine protective guards, and automated error detection systems.

4. Focus on User-Friendly Operation

Another reason Linpack stands out is its focus on ease of use. The machines are designed with intuitive controls, clear display interfaces, and minimal manual input requirements. This ensures that even operators with minimal technical background can run the equipment efficiently after brief training.

Features like touchscreen panels, programmable settings, and visual fault indicators empower users to control every aspect of the packaging process seamlessly. Plus, Linpack offers detailed user manuals, video guides, and expert technical support to ensure a smooth onboarding experience.

5. Energy Efficiency and Sustainability

Sustainability is no longer optional; it’s a necessity. Linpack is at the forefront of eco-conscious packaging solutions, with machines designed to reduce energy consumption, minimize waste, and support eco-friendly packaging materials.

Their innovations include:

Low power consumption motors

Minimal wastage design during sealing and cutting

Recyclable and biodegradable film compatibility

Compact designs that save space and resources

By prioritizing energy efficiency and waste reduction, Linpack not only helps businesses reduce their environmental footprint but also improves their bottom line by lowering operational costs.

6. Versatility Across Industries

Linpack’s versatility is another compelling reason why their machines are so highly regarded. They cater to a wide variety of industries, including:

Food and Beverage – For packaging snacks, spices, tea, coffee, sauces, and ready-to-eat meals

Pharmaceutical – For tablets, powders, liquids, and medical kits

Cosmetics – For lotions, creams, and cosmetic powders

Agriculture – For fertilizers, seeds, and pesticides

Industrial Products – For hardware, chemicals, and cleaning agents

The company’s extensive product range and customization options mean that virtually any business can find the right fit among Linpack’s offerings.

7. Reliable After-Sales Support

Purchasing a packaging machine is not a one-time interaction — it’s the beginning of a long-term partnership. Linpack ensures that this partnership is built on trust and support. Their after-sales services include:

Prompt installation and commissioning

On-site training for staff

Scheduled maintenance packages

24/7 technical assistance

Quick dispatch of spare parts

Their responsive customer service team ensures that clients experience minimal downtime and maximum satisfaction throughout the lifecycle of the machine.

8. Competitive Pricing Without Compromising Quality

High-quality packaging machinery often comes with a hefty price tag — but Linpack has managed to strike the perfect balance between cost and performance. By optimizing their manufacturing processes and sourcing materials smartly, Linpack offers competitive pricing that appeals to businesses of all sizes.

They provide value-driven solutions that ensure clients get the most return on investment (ROI) through increased productivity, reduced waste, and long-term durability.

9. Global Footprint and Client Trust

With a presence in over 50 countries, Linpack has built a strong global reputation. Their packaging machines are trusted by businesses across Asia, Europe, the Americas, and Africa. This international footprint is a testament to their product reliability and service excellence.

Their long list of satisfied clients includes leading food manufacturers, pharmaceutical companies, FMCG brands, and SMEs, all of whom rely on Linpack for consistent, high-performance packaging.

Final Thoughts

So, what makes Linpack’s packaging machines stand out?

It’s a combination of technological excellence, customizability, durability, ease of use, sustainability, and stellar customer service. Whether you’re a small business looking to scale up or a large enterprise seeking efficiency, Linpack offers the tools and support needed to achieve your packaging goals.

Investing in Linpack means investing in a smarter, greener, and more efficient future for your production line.

0 notes

Text

Which is the Best Microsoft Power BI Training Institute in Bangalore?

Introduction: The Rise of Data-Driven Decision Making

In today's hyper-connected business world, numbers speak. But data, by itself, is mere noise unless interpreted as insights. That's where business intelligence (BI) tools like Microsoft Power BI step in—turning intricate data into persuasive visuals and stories.

Why Power BI Has Become the Gold Standard for Business Intelligence

With interactive dashboards, real-time analysis, and seamless integration with Microsoft products, Power BI is the crown jewel of the BI category. As a Fortune 500 firm or startup, Power BI empowers organizations with the ability to take action on insights, not just data.

What Makes a Power BI Certification Worthwhile?

A certification not only confirms your technical skill but also betrays your seriousness towards the profession. A 1 Power BI Certification Training Course in Bangalore ensures that you're not learning the tool, but using it strategically in real-world situations.

Key Aspects of a High-Standard Power BI Training Institute

High-quality institutes don't just exist in theory. Look for these standards:

Hands-on labs and simulations

Capstone projects on actual data sets

Industry-veteran subject matter experts as trainers

Mock interviews and resume building

Active placement assistance

What to Expect in a Power BI Certification Training Course in Bangalore

A standard course in Bangalore consists of modules such as:

Data Modeling and DAX

Power Query and ETL processes

Custom visuals and dashboards

Integrating Power BI with Excel, Azure, and SQL Server

Live projects with Bangalore-based companies

Bangalore: The IT Capital Breeding Data Analysts

Why is Bangalore so hot for BI training? It's the Indian Silicon Valley. With thousands of MNCs, start-ups, and technology parks, innovation is palpable in the city. Institutes here tend to work directly with firms for latest learning modules.

Measuring the Best Institutes in Bangalore – Factors to Look Out For

Before you enroll, evaluate each institute on:

Trainer qualifications

Success rate of alumni

Relevance of course material

Partnerships with industries

Testimonials from previous students

Top 5 Power BI Training Institutes in Bangalore

Institute 1: Features, Fees, Reviews, and Placement Stats

100+ hours of training

Weekend as well as weekday batches

Corporate collaborations with IT giants

Placement support with 90% success rate

Institute 2: What Makes It Different

Intensive emphasis on DAX and advanced analysis

Job-relevant capstone projects

Guidance by Microsoft-certified professionals

Institute 3: Learning Culture and Support

24/7 doubt clarification support

Access to a global peer group

Recorded classes for flexibility during revision

Institute 4: Freshers' Success Stories and Career Successes

Success stories of hiring freshers at INR 6–9 LPA

Transformatory feedback by professionals at work

Detailed case studies as instructional aids

Institute 5: Flexibility, Modules, and Certification Route

Self-paced + instructor-led

Modules updated quarterly

Industry-approved certificate with Microsoft labeling

Comparing Power BI Certification Training Course in Bangalore vs Pune vs Mumbai

Power BI Certification Training Course in Pune offers excellent content, but fewer tech tie-ups.

Power BI Certification Training Course in Mumbai leans toward finance/data analytics in BFSI sectors. Bangalore wins for diversity in project exposure and MNC presence.

Why Bangalore Provides an Advantage Over Other Cities

It's not about learning—it's about opportunities after learning. With the sheer number of tech firms in Bangalore, there are more interviews, more networking sessions, and quicker professional growth.

Online vs Offline Training – What is Best in 2025

Whereas offline provides human touch and discipline, online training offers flexibility and scale. Hybrid approaches—recorded videos with real-time mentoring—are emerging as the most favored format.

Career Opportunities Post Power BI Certification

From being a Power BI Developer to becoming a Data Analyst, BI Consultant, or a Dashboard Architect, the list goes on. With a strong certification, even the door to freelancing and work-from-home positions all around the world opens.

Final Thoughts: Picking the Right Path for Your BI Career

The proper Power BI Certification Training Course in Bangalore can be a career spur. Assess your learning style, browse institute offerings, and consider where alumni work. The world of BI awaits—and Bangalore may be the perfect place to start.

0 notes

Text

The Transformative Role of AI in Medical Imaging | Health Technology Insights

In recent years, AI in medical imaging has emerged as one of the most revolutionary advancements in healthcare. With growing demand for faster and more accurate diagnoses, artificial intelligence (AI) is redefining how medical professionals interpret images from MRIs, CT scans, X-rays, and ultrasounds. The synergy between cutting-edge technology and healthcare is not just enhancing diagnostic capabilities—it’s saving lives.

Let’s Connect→ https://healthtechnologyinsights.com/contact/

What is AI in Medical Imaging?

AI in medical imaging refers to the application of artificial intelligence technologies such as machine learning and deep learning to analyze and interpret medical images. These AI algorithms can be trained to detect anomalies, assist in diagnoses, and even predict disease progression with remarkable accuracy.

Key Benefits of AI in Medical Imaging

Faster Diagnoses AI can analyze thousands of images in seconds, significantly reducing the time it takes to deliver a diagnosis. This is especially critical in emergency settings or when radiologist resources are limited.

Higher Accuracy and Consistency Algorithms can detect patterns that may be missed by the human eye, helping to minimize diagnostic errors. They also offer consistent results, regardless of fatigue or subjective interpretation.

Early Detection of Diseases AI tools are proving particularly effective in early detection of diseases like cancer, pneumonia, and neurological disorders. Early diagnosis means earlier treatment, often resulting in better patient outcomes.

Workflow Optimization By automating repetitive tasks such as image labeling and sorting, AI allows radiologists and technicians to focus more on complex diagnostic and interventional procedures.

Scalability for Remote and Underserved Areas AI-powered imaging systems can be deployed in rural or low-resource settings, where radiologists may not be available. This democratizes access to expert-level diagnostics.

Real-World Applications of AI in Medical Imaging

Radiology: AI helps radiologists detect fractures, tumors, and other abnormalities with greater confidence. Tools like Google's DeepMind have already shown success in diagnosing eye diseases and breast cancer.

Oncology: AI models can track tumor growth over time, compare scans, and help plan treatments more precisely. It’s also aiding in radiomics—extracting large amounts of features from radiographic images.

Cardiology: AI systems analyze echocardiograms and CT angiograms to identify heart conditions like arrhythmias or blocked arteries, aiding in preventive care.

Neurology: Brain scans interpreted through AI can assist in diagnosing Alzheimer’s disease, stroke, and multiple sclerosis much earlier than traditional methods.

Orthopedics: From sports injuries to degenerative diseases, AI enhances musculoskeletal imaging interpretation, reducing unnecessary surgeries and improving rehabilitation plans.

Challenges in Implementing AI in Medical Imaging

Data Privacy and Security Handling sensitive patient data requires strict adherence to HIPAA and other privacy laws. AI systems must be designed with cybersecurity at their core.

Need for High-Quality Data AI algorithms are only as good as the data they are trained on. Poor-quality or biased data can lead to inaccurate results and misdiagnoses.

Integration with Existing Systems Many hospitals use outdated IT infrastructures. Integrating AI tools into these systems can be technically challenging and resource-intensive.

Regulatory and Ethical Concerns Gaining FDA approval for AI applications is a complex process. Additionally, ethical questions arise around accountability in case of AI-related misdiagnosis.

Resistance to Change Medical professionals may be hesitant to trust or adopt AI due to fear of job displacement or lack of familiarity with the technology.

Let’s Connect→ https://healthtechnologyinsights.com/contact/

The Future of AI in Medical Imaging

Augmented Radiology AI won’t replace radiologists—it will empower them. AI tools will serve as second readers, providing a safety net and enhancing confidence in diagnostics.

Personalized Medicine By combining imaging data with genetic and clinical data, AI can help craft highly personalized treatment plans.

Continuous Learning Systems Future AI models will adapt and improve with each case, thanks to continuous learning, leading to more intelligent and accurate systems over time.

Cloud-Based and Mobile Imaging Platforms Cloud computing will allow AI-powered imaging tools to be used remotely, aiding telemedicine and global diagnostics.

Global Collaboration and Research Shared datasets and collaborative platforms will foster more innovation and validation across the global medical community.

The integration of AI in medical imaging is not just a trend—it’s a paradigm shift in the healthcare landscape. From reducing diagnostic errors to expanding access to expert care in remote regions, AI is enabling a smarter, faster, and more equitable medical ecosystem. While challenges exist, ongoing innovation, education, and ethical governance will ensure that AI becomes an indispensable ally in modern medicine.

#AIinMedicalImaging #HealthTech #RadiologyRevolution #FutureOfHealthcare #MedicalAI

Need More Insights?, Let’s Connect→ https://healthtechnologyinsights.com/contact/

AI in healthcare innovation is a revolutionary force transforming how healthcare is delivered, improving patient outcomes, and making healthcare more efficient. By leveraging advanced technologies, AI is enhancing diagnostics, treatment plans, and drug discovery while providing more accessible and personalized care. Despite the challenges, AI holds immense promise in shaping the future of healthcare, driving breakthroughs that were once considered impossible. As technology continues to advance, we can expect AI to play an even more prominent role in healthcare innovation, ultimately leading to healthier lives worldwide.

#AIinHealthcare #HealthcareInnovation #MedicalAI #HealthTech #FutureOfMedicine

HealthTech Insights is a leading global community of thought leaders, innovators, and researchers specializing in the latest advancements in healthcare technology, including Artificial Intelligence, Big Data, Telehealth, Wearables, Health Data Analytics, Robotics, and more. Through our platform, we bring you valuable perspectives from industry experts and pioneers who are shaping the future of healthcare. Their stories, strategies, and successes provide a roadmap for building resilient, patient-centered health systems and businesses.

Contact Us

Call Us

+1 (845) 347-8894

+91 77760 92666

Email Address

For All Media and Press Inquiries

Address

1846 E Innovation Park DR Site 100 ORO Valley AZ 85755

0 notes

Text

Empowering Cattle Care with Precise Data Annotation Services

Wisepl specialize in high-quality data annotation tailored for the livestock and agri-tech industry. Whether it's identifying cattle in drone footage, tagging health markers, or tracking herd behavior through computer vision, our expert team delivers accurate and scalable solutions to help you build reliable AI models for modern cattle care.

From health monitoring to automated feeding systems – your AI is only as good as the data it's trained on. That's where we come in.

What We Offer:

Bounding box & segmentation for cattle, Behavior analysis tagging, Drone imagery annotation Health condition labeling, Custom annotation workflows

Why Choose Wisepl?

Expert human annotators

Fast turnaround & scalable workforce

Data privacy and confidentiality assured

Experience with top agritech companies

Let’s annotate your way to better livestock management. Contact us today for a free sample or consultation. www.wisepl.com | [email protected]

#DataAnnotation#CattleCareAI#AgriTech#LivestockMonitoring#AIforFarming#ComputerVision#DroneData#Wisepl#SmartFarming#PrecisionAgriculture#MachineLearning#AIAnnotation#DataLabeling#ImageAnnotation#PolygonAnnotation#BoundingboxAnnotation#AI

0 notes