#Greps ai


Boost your business with Greps AI's innovative AI solutions. Our services include Digital Marketing, UI/UX Design, API Development, Chatbot Development, Cloud Services, and IT Consulting. Partner with us for cutting-edge strategies and exceptional growth opportunities.


CodeRabbit builds an AI code review agent with Cloud Run

CodeRabbit GitHub

CodeRabbit, a fast-growing AI code review platform, uses Google Cloud Run to safely execute untrusted code while cutting code review time and mistakes in half.

CodeRabbit automates code reviews and improves code quality by comparing changes to the whole codebase and creating scripts for deeper analysis. Code hosting integration handles pull requests automatically.

To securely execute untrusted code, CodeRabbit needed a scalable, inexpensive, and secure execution environment to evaluate and run its clients' code.

You'll see how CodeRabbit used Google Cloud Run to build an AI code review agent that scales dynamically and safely handles large volumes of requests.

CodeRabbit integrates directly with GitHub and GitLab to automate code reviews triggered by pull requests. Its integration with foundation models analyses the impact of the whole change, not just the updated files. This requires a sophisticated system that can:

Clone the user's repository.

Install build environment dependencies (npm install, go mod download, etc.).

Run static analysis with 20+ linters and security scanners.

Run AI-generated code. This is where things get really interesting. CodeRabbit's AI agent writes shell scripts to read the code, find patterns (using cat, grep, and ast-grep), and extract relevant information. It can also generate Python code for analysis.

Use external services. CodeRabbit generates and runs curl commands to interact with Slack, Jira, and Linear.

Any solution must be secure, inexpensive, and scalable. By definition, the code being analysed and run is untrusted. It may have bugs, be incomplete, or be malicious.

The answer: Cloud Run

CodeRabbit seamlessly integrates many technologies to create a reliable and isolated execution environment:

Cloud Run services: Cloud Run underpins CodeRabbit. First, a lightweight Cloud Run service handles webhook events from GitHub, GitLab, and other hosts, and validates subscriptions and billing. This service then pushes a task to Google Cloud Tasks.

Google Cloud Tasks: Serving as a queue, Cloud Tasks decouples webhook handling from code execution. This lets CodeRabbit absorb surges in pull requests without overloading.

Cloud Run execution service: This is the core of the system. A second Cloud Run service receives tasks from Cloud Tasks, and each task is a request for a code review. The service is configured with a 3600-second request timeout and a concurrency of 8 requests per instance, and it scales based on CPU utilisation. This configuration is necessary because code reviews take 10–20 minutes. The execution service uses an in-memory volume mount to hold the repository, build artefacts, and temporary files.

Sandboxing: Every Cloud Run instance is protected by two layers of sandboxing, and a dedicated service identity lets each instance run with minimal IAM privileges. CodeRabbit uses Cloud Run's second-generation execution environment, a microVM with full Linux cgroup support. Within each instance, CodeRabbit uses Jailkit to isolate processes and cgroups to further restrict the jailed processes.
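The way a queue separates quick webhook handling from slow review work can be sketched with the Python standard library alone. This is a toy model under stated assumptions, not CodeRabbit's code: `queue.Queue` stands in for Cloud Tasks, eight worker threads stand in for the concurrency of 8 requests per instance, and `handle_webhook`, `review_pull_request`, and `run_demo` are hypothetical names.

```python
import queue
import threading

MAX_CONCURRENCY = 8  # mirrors the 8 requests per instance mentioned in the text


def handle_webhook(event, tasks):
    """Front service: do a cheap check, enqueue the work, return at once."""
    if "pull_request" not in event:
        return False  # ignore events that are not pull requests
    tasks.put(event["pull_request"])
    return True


def review_pull_request(pr_id):
    """Placeholder for the long-running review (hypothetical)."""
    return f"reviewed PR {pr_id}"


def run_demo(events):
    tasks = queue.Queue()  # stand-in for Cloud Tasks (assumption)
    reviews = []
    lock = threading.Lock()

    def worker():
        # Execution service: take tasks until a None sentinel arrives.
        while True:
            pr_id = tasks.get()
            if pr_id is None:
                tasks.task_done()
                return
            outcome = review_pull_request(pr_id)
            with lock:
                reviews.append(outcome)
            tasks.task_done()

    threads = [threading.Thread(target=worker) for _ in range(MAX_CONCURRENCY)]
    for thread in threads:
        thread.start()

    accepted = sum(handle_webhook(event, tasks) for event in events)

    for _ in threads:
        tasks.put(None)  # one stop sentinel per worker
    tasks.join()
    for thread in threads:
        thread.join()
    return accepted, sorted(reviews)
```

Calling `run_demo([{"pull_request": 1}, {"ping": True}])` accepts one event and reviews one pull request. The webhook handler never waits for a review to finish, which is the property the queue is there to provide.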

CodeRabbit prioritises sandboxing wherever it runs untrusted code, such as:

Static analysers such as Rubocop and ESLint that accept custom, untrusted plugins.

LLM-generated verification scripts for codebase-wide analysis.

LLM-generated CLI tasks, such as creating Jira or GitHub issues.

Python-based advanced analysis.
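To make the idea of restricting an untrusted process concrete, here is a minimal sketch that uses POSIX resource limits from Python's standard library. It is a swapped-in, much weaker technique than the Jailkit and cgroups setup the text describes, and it is not CodeRabbit's implementation. The function name `run_untrusted` and the specific limits are assumptions chosen for illustration. It runs on POSIX systems only.

```python
import resource
import subprocess
import sys
import tempfile


def _apply_limits():
    """Runs in the child just before exec: cap CPU time and written file size."""
    resource.setrlimit(resource.RLIMIT_CPU, (5, 5))  # 5 seconds of CPU time
    resource.setrlimit(resource.RLIMIT_FSIZE, (1 << 20, 1 << 20))  # 1 MiB per file


def run_untrusted(argv, timeout_s=10):
    """Run argv in a throwaway directory with an empty environment."""
    with tempfile.TemporaryDirectory() as workdir:
        proc = subprocess.run(
            argv,
            cwd=workdir,               # scratch directory, deleted afterwards
            env={},                    # do not pass secrets via environment variables
            preexec_fn=_apply_limits,  # POSIX only
            capture_output=True,
            text=True,
            timeout=timeout_s,         # wall-clock limit on top of the CPU limit
        )
    return proc.returncode, proc.stdout


if __name__ == "__main__":
    code, out = run_untrusted([sys.executable, "-c", "print('hello')"])
    print(code, out.strip())
```

Resource limits do not hide the filesystem or the network from the child process, which is why a real deployment layers stronger isolation such as jails, cgroups, and a microVM on top.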

CodeRabbit scales dynamically using Cloud Run. During peak hours, CodeRabbit's Agentic PR Reviewer service receives 10 requests per second and scales to over 200 Cloud Run instances. Each instance is large, with 32 GiB of RAM and 8 vCPUs. The PR reviewer service is heavy on CPU, memory, and network traffic (downloading repositories and dependencies).
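The figures quoted in this post allow a rough capacity estimate. The sketch below assumes that the concurrency of 8 applies to these instances and that every request is a full 10 to 20 minute review. Both are assumptions for illustration, not confirmed details of CodeRabbit's deployment.

```python
INSTANCES = 200               # "over 200" instances at peak, per the text
CONCURRENCY = 8               # requests per instance, per the text
REVIEW_SECONDS = (600, 1200)  # 10 to 20 minutes per review, per the text


def in_flight_capacity(instances, concurrency):
    """Number of reviews that can be running at the same moment."""
    return instances * concurrency


def sustained_rate(capacity, seconds_per_review):
    """Little's law rearranged: throughput = work in flight / time per item."""
    return capacity / seconds_per_review


capacity = in_flight_capacity(INSTANCES, CONCURRENCY)
fast = sustained_rate(capacity, REVIEW_SECONDS[0])
slow = sustained_rate(capacity, REVIEW_SECONDS[1])
print(f"{capacity} concurrent reviews, {slow:.2f} to {fast:.2f} reviews/s sustained")
```

Under these assumptions, 200 instances hold 1600 reviews in flight and sustain roughly 1.33 to 2.67 reviews per second. A burst above that rate would have to wait in the queue or trigger further scale-out.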

Try it yourself

CodeRabbit's use of Google Cloud Run shows how to build a secure, scalable, and affordable AI-powered code analysis platform. Their experience shows the promise of serverless technologies, and their design can help developers solve similar problems. Cloud Run is constantly adding features.

#technology#technews#govindhtech#news#technologynews#CodeRabbit GitHub#CodeRabbit#GitHub#Google Cloud Run#CodeRabbit AI#AI code review agent


AI-powered PPE: The future of workplace safety in Norway | Zeproc

Explore how AI-powered personal protective equipment (PPE) is revolutionizing workplace safety in Norway. Learn about smart helmets, biometric wearables, and other technologies that proactively reduce risk in high-risk environments.

AI-powered personal protective equipment (PPE): A revolution in workplace safety

AI-powered personal protective equipment (PPE) marks an important milestone in the development of workplace safety by combining traditional protective equipment with advanced artificial intelligence. This technology is more than an improvement on existing solutions: it represents a complete redefinition of safety standards, especially in high-risk environments such as construction, oil and gas, and industrial manufacturing. By integrating real-time analysis and proactive risk management, AI-powered PPE provides a level of situational awareness that was not previously possible, and sets a new standard for how workplace safety is managed in Norway.

How AI is transforming safety equipment and workplace safety

Technological advances have already drastically changed the manufacturing landscape and working environments, with artificial intelligence as a driving force for both efficiency and innovation. AI has gone from being a tool for machine optimization to playing a central role in workplace safety, where it not only protects workers but also actively prevents hazards. This involves using real-time data, advanced analytics, and machine learning to continuously monitor risk factors and optimize safety measures. The result is fewer injuries, lower sick leave, and better productivity.

From passive protection to active prevention

Traditional protective equipment provides a passive form of protection, where the equipment comes into play on contact with a hazard. AI-powered PPE, by contrast, goes a step further by focusing on active prevention. Using machine learning and real-time data, the equipment can identify risky situations before they occur. For example, AI-powered helmets can warn workers of falling objects or detect exposure to hazardous gases at an early stage. This proactive approach dramatically reduces the risk of injury, while enabling businesses to continuously improve safety routines based on insights from data analysis.

Examples of AI-powered personal protective equipment

Smart helmets: Helmets equipped with AI sensors and cameras that provide real-time hazard alerts. They can detect temperature changes, hazardous chemicals, or structural changes that indicate a risk of collapse. By alerting the wearer before the situation develops, serious accidents can be avoided.

Wearables with biometric monitoring: These wearable devices can measure heart rate, body temperature, and other biometric data to identify stress or exhaustion. They can also be integrated with workers' communication systems for automatic alerts in the event of potentially dangerous health conditions.

AI-powered safety shoes: Fitted with sensors that can detect slippery surfaces, impact hazards, or unnatural movements that indicate tripping. The shoes can automatically adjust grip or shock absorption to improve the wearer's stability, which reduces the risk of fall accidents.

Intelligent safety glasses: Glasses that enhance the wearer's vision in dark areas or give visual warnings of nearby hazards. They can also display information about emergency exits or safe escape routes in an emergency.

Benefits of AI-powered PPE: A game changer for the working environment

The greatest benefit of AI-powered PPE is its ability to increase safety holistically, from real-time analysis to preventive measures. Some specific benefits include:

Increased situational awareness: AI-powered devices provide continuous information about the working environment, so that workers are always up to date on potential hazards. This can include information about machine condition, proximity to hazardous areas, or changes in the surroundings.

Improved accident prevention: By providing proactive alerts, AI-powered PPE can prevent small problems from developing into serious accidents. Safety officers can also use the data to fine-tune routines and increase safety further.

Optimization of work cycles: When workers feel safer, productivity also improves. A safe workplace also reduces time lost to injuries, sick leave, and downtime.

Improved safety management: AI systems make it possible to monitor and analyze large volumes of data. This gives management deeper insight into where improvements are needed and lets them deploy targeted safety measures where they are most needed.

Challenges and solutions when implementing AI-powered PPE

Despite the benefits, there are some challenges associated with implementing AI-powered PPE that companies need to be aware of:

Cost: Implementing AI technology requires a significant investment in equipment and training. For small and medium-sized businesses this can be a challenge. One solution is to start with pilot projects to test the technology on a small scale before full rollout.

Data security: AI-powered PPE devices collect large amounts of sensitive data. To ensure data security, companies must implement strict data protection policies and comply with GDPR rules in Norway.

Employee acceptance: Some employees may be skeptical of monitoring or feel that the technology is intrusive. Good communication about the benefits and thorough training can help increase acceptance.

AI-powered PPE and Norwegian industry: Tailored solutions for local needs

Norwegian industry has always focused on safety, and in sectors such as construction, oil and gas, and shipping the risks are especially high. AI-powered PPE adapted to Norwegian conditions can play a decisive role in reducing risk and achieving better compliance with the safety standards of Arbeidstilsynet (the Norwegian Labour Inspection Authority). For example, companies in the construction sector have begun using AI-powered helmets that can identify dangerous structural changes and give alerts when there is a risk of landslides or collapse.

How Norwegian industry benefits from AI-powered PPE

Construction sector: Helmets and wearables that detect dangerous situations such as falls or exposure to hazardous chemicals. They also enable workers to monitor air quality and ensure proper ventilation.

Oil and gas industry: AI-powered sensors can monitor biometric data to detect stress and exhaustion, which can help prevent accidents caused by fatigue. In addition, they can detect leaks of harmful gases and send real-time alerts to workers.

Shipping and maritime sector: Intelligent safety glasses improve visibility and provide important information to workers at sea, such as warnings of heavy waves or slippery decks. This can help reduce the risk of falls and injury.

How AI-powered PPE can contribute to E-A-T-T (Expertise, Authoritativeness, Trustworthiness, Transparency)

To achieve high E-A-T-T, AI-powered PPE must be used in a way that promotes expertise, authority, trustworthiness, and transparency. Here is how:

Expertise: The technology must be developed and maintained in accordance with Norwegian and international safety standards. Training programs for employees should ensure that they can use the equipment effectively.

Authoritativeness: Data from AI-powered PPE can support safety officers in making more informed safety decisions. References to approved certifications and regulatory requirements add credibility.

Trustworthiness: It is important to be open about how the data is collected and used. Companies must have strict policies to protect employees' personal information, and show that they comply with GDPR rules in Norway.

Transparency: Information about how the AI algorithms work and how they affect safety should be made available to employees. By incorporating feedback from users, the AI solutions can be continuously improved.

Zeproc.com Marketplace: Your partner for AI-powered PPE

For those who want to adopt advanced AI technology to improve workplace safety, Zeproc.com Marketplace offers a wide selection of AI-powered PPE. Through our network, companies can easily buy protective equipment directly from manufacturers and distributors. By shopping on Zeproc.com, you can reduce costs, simplify the procurement process, and ensure access to state-of-the-art safety solutions. Zeproc.com simplifies purchasing by offering one-click checkout, flexible payment options, and special discounts for customers in Norway.

Benefits of choosing Zeproc.com for AI-powered PPE

Direct purchase from manufacturers and distributors: Ensures that you always get competitive prices.

Cost reduction: Discounts and special offers make advanced safety equipment more accessible.

Easy administration: One-click checkout and fast delivery reduce the time and resources spent on orders.

World-class customer support: We offer advice and help in finding the right safety equipment for your specific needs.

The future of workplace safety with AI-powered PPE

With rapid developments in artificial intelligence, we are seeing a paradigm shift in workplace safety. In the future, AI-powered PPE will become even more sophisticated, with adaptations to individual needs based on personal risk profiles and specific working conditions. This will make it possible to identify and respond to hazards faster, while reducing the need for human intervention. This development will help make working environments safer, more productive, and more sustainable.

To stay ahead of developments, Norwegian companies should consider investing in AI-powered PPE early. By working with reliable suppliers such as Zeproc.com, you can ensure that your safety systems are always up to date with the latest technology.


Clearing the “Fog of More” in Cyber Security

New Post has been published on https://thedigitalinsider.com/clearing-the-fog-of-more-in-cyber-security/


At the RSA Conference in San Francisco this month, a dizzying array of dripping hot and new solutions were on display from the cybersecurity industry. Booth after booth claimed to be the tool that will save your organization from bad actors stealing your goodies or blackmailing you for millions of dollars.

After much consideration, I have come to the conclusion that our industry is lost. Lost in the soup of detect and respond with endless drivel claiming your problems will go away as long as you just add one more layer. Engulfed in a haze of technology investments, personnel, tools, and infrastructure layers, companies have now formed a labyrinth where they can no longer see the forest for the trees when it comes to identifying and preventing threat actors. These tools, meant to protect digital assets, are instead driving frustration for both security and development teams through increased workloads and incompatible tools. The “fog of more” is not working. But quite frankly, it never has.

Cyberattacks begin and end in code. It’s that simple. Either you have a security flaw or vulnerability in code, or the code was written without security in mind. Either way, every attack or headline you read comes from code. And it’s the software developers who face the full brunt of the problem. But developers aren’t trained in security and, quite frankly, might never be. So they implement good old-fashioned code searching tools that simply grep the code for patterns. And be careful what you ask for, because as a result they get the alert tsunami, chasing down red herrings and phantoms for most of their day. In fact, developers are spending up to a third of their time chasing false positives and vulnerabilities. Only by focusing on prevention can enterprises really start fortifying their security programs and laying the foundation for a security-driven culture.
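A small illustration of why pattern-only searching produces so many false alarms: the sketch below is a deliberately naive scanner, with made-up rules and a made-up four-line sample, not a model of any real product. Only one of the four lines it flags is an actual problem.

```python
import re

# Deliberately naive rules (assumptions for illustration only).
PATTERNS = {
    "possible code injection": re.compile(r"eval\("),
    "possible hard-coded secret": re.compile(r"password", re.IGNORECASE),
}

SAMPLE = '''\
# Never call eval( on user input.
result = eval(user_input)
password = os.environ["DB_PASSWORD"]
label = "Enter your password:"
'''


def scan(source):
    """Return (line_number, finding) pairs for every pattern hit."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        for finding, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((number, finding))
    return findings


for number, finding in scan(SAMPLE):
    print(f"line {number}: {finding}")
```

The scanner reports lines 1 through 4. Line 1 is a comment, line 3 reads the secret from the environment rather than hard-coding it, and line 4 is a user-interface label, so three of the four alerts are noise that someone still has to triage.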

Finding and Fixing at the Code Level

It’s often said that prevention is better than cure, and this adage holds particularly true in cybersecurity. That’s why even amid tighter economic constraints, businesses are continually investing and plugging in more security tools, creating multiple barriers to entry to reduce the likelihood of successful cyberattacks. But despite adding more and more layers of security, the same types of attacks keep happening. It’s time for organizations to adopt a fresh perspective – one where we home in on the problem at the root level – by finding and fixing vulnerabilities in the code.

Applications often serve as the primary entry point for cybercriminals seeking to exploit weaknesses and gain unauthorized access to sensitive data. In late 2020, the SolarWinds compromise came to light and investigators found a compromised build process that allowed attackers to inject malicious code into the Orion network monitoring software. This attack underscored the need for securing every step of the software build process. By implementing robust application security, or AppSec, measures, organizations can mitigate the risk of these security breaches. To do this, enterprises need to look at a ‘shift left’ mentality, bringing preventive and predictive methods to the development stage.

While this is not an entirely new idea, it does come with drawbacks. One significant downside is increased development time and costs. Implementing comprehensive AppSec measures can require significant resources and expertise, leading to longer development cycles and higher expenses. Additionally, not all vulnerabilities pose a high risk to the organization. The potential for false positives from detection tools also leads to frustration among developers. This creates a gap between business, engineering and security teams, whose goals may not align. But generative AI may be the solution that closes that gap for good.

Entering the AI-Era

By leveraging the ubiquitous nature of generative AI within AppSec, we will finally learn from the past to predict and prevent future attacks. For example, you can train a Large Language Model, or LLM, on all known code vulnerabilities, in all their variants, to learn the essential features of them all. These vulnerabilities could include common issues like buffer overflows, injection attacks, or improper input validation. The model will also learn the nuanced differences by language, framework, and library, as well as what code fixes are successful. The model can then use this knowledge to scan an organization’s code and find potential vulnerabilities that haven’t even been identified yet. By using the context around the code, scanning tools can better detect real threats. This means shorter scan times, less time spent chasing down and fixing false positives, and increased productivity for development teams.

Generative AI tools can also offer suggested code fixes, automating the process of generating patches, significantly reducing the time and effort required to fix vulnerabilities in codebases. By training models on vast repositories of secure codebases and best practices, developers can leverage AI-generated code snippets that adhere to security standards and avoid common vulnerabilities. This proactive approach not only reduces the likelihood of introducing security flaws but also accelerates the development process by providing developers with pre-tested and validated code components.

These tools can also adapt to different programming languages and coding styles, making them versatile tools for code security across various environments. They can improve over time as they continue to train on new data and feedback, leading to more effective and reliable patch generation.

The Human Element

It’s essential to note that while code fixes can be automated, human oversight and validation are still crucial to ensure the quality and correctness of generated patches. While advanced tools and algorithms play a significant role in identifying and mitigating security vulnerabilities, human expertise, creativity, and intuition remain indispensable in effectively securing applications.

Developers are ultimately responsible for writing secure code. Their understanding of security best practices, coding standards, and potential vulnerabilities is paramount in ensuring that applications are built with security in mind from the outset. By integrating security training and awareness programs into the development process, organizations can empower developers to proactively identify and address security issues, reducing the likelihood of introducing vulnerabilities into the codebase.

Additionally, effective communication and collaboration between different stakeholders within an organization are essential for AppSec success. While AI solutions can help to “close the gap” between development and security operations, it takes a culture of collaboration and shared responsibility to build more resilient and secure applications.

In a world where the threat landscape is constantly evolving, it’s easy to become overwhelmed by the sheer volume of tools and technologies available in the cybersecurity space. However, by focusing on prevention and finding vulnerabilities in code, organizations can trim the ‘fat’ of their existing security stack, saving a substantial amount of time and money in the process. At the root level, such solutions will be able not only to find known vulnerabilities and fix zero-day vulnerabilities but also to catch pre-zero-day vulnerabilities before they occur. We may finally keep pace with, if not get ahead of, evolving threat actors.

#ai#ai tools#Algorithms#Application Security#applications#approach#AppSec#assets#attackers#awareness#Business#code#codebase#coding#Collaboration#communication#Companies#comprehensive#compromise#conference#creativity#cyber#cyber security#Cyberattacks#cybercriminals#cybersecurity#data#detection#developers#development


KPMG establishes its own AI center in Norway

KPMG's new head of Norway is taking action and bringing together expertise from across the country. The new AI center will help businesses make the transition to using artificial intelligence in everyday operations. "We know that AI will hit Norwegian companies in three waves. We are seeing the first one now, but the next wave will be far more extensive. We are receiving many inquiries, and are now gathering our best specialists to be able to assist…


It Took Me Less Than 90 Minutes To Make An AI-Powered Spam News Website From Scratch

We are at the front of an AI-powered storm of website spam, monetized by advertisements and the darkest of SEO optimization. While I focus on those scraping news websites for this proof-of-concept, this problem will affect every sector of industry. I've written up several examples previously -- both on the blog and on Mastodon. Despite the growing number (and relative sophistication) of such sites, it's been relatively difficult to convince others that this is something to take seriously. So to prove the concept, I made one myself. It took less than 90 minutes and cost me a dime.

What To Trust On The Internet

Concerns about the validity of information on the internet have existed... well, before the internet, at least as we know it today. Back in the days of Usenet and dial-up BBSes, users (including myself!) would distribute text files of all sorts of information that was otherwise difficult to find. At first, it was often easy to determine what websites were reputable or not. Sometimes simply the domain (Geocities, anyone?) would cause you to examine what the website said more closely.

As the web has matured, more and more tools have been created to be able to create a professional-looking website fairly quickly. Wordpress, Squarespace, and many, many more solutions are out there to be able to create something that is of professional quality in hours. With the rise of containerization and automation tools like Ansible, once originally configured, deploying a new website can literally be a matter of a few minutes -- including plug-ins for showing ads and cross-site linking to increase listings in search rankings.

But even with all that help, you still had to make something to put in that website. That's a trickier proposition; as millions of abandoned blogs and websites attest, consistently creating content is hard. But now even that is trivial.

Starting from scratch -- no research ahead of time! -- I figured out how to automate scraping the content off of websites, feed it into ChatGPT, and then post it to a (reasonably) professional looking website that I set up from scratch in less than 90 minutes. It took longer to write this post than it did to set everything up. And -- with the exception of the program used to query ChatGPT -- every program I used is over two decades old, and every last one of them can be automated on the command line. {1}

The Steps I Took

The most complicated part was writing a bash script to iterate over everything. Here's what I did:

NOTE: I have left out a few bits and stumbling blocks on purpose. The point is to show how easy this was, not to write a complete "how to." Also, I'm sure there are ways to do this more efficiently. This was intended solely as a proof of concept.

- Install elinks, grep, curl, wget, and sed. This is trivial on linux-like systems, and not too difficult on OSX or Windows.

- Get a ChatGPT API key. I spent a grand total of $0.07 doing all the testing for this article.

- Install the cross-platform program mods (which is actually quite cleverly and well done, kudos to the authors).

- Find the site that you want to scrape. Download their front page with elinks URL --dump > outfile.txt

- Examine the end of that file, which has a list of URLs. Practically every site with regularly posted content (like news) will have a pretty simple structure to their URLs due to the CMS (software to manage the site) often being the same. For example, over 200 news sites use WordPress' "Newspack" product. One of those is the Better Government Association of Chicago. Every URL on that website which leads to an article has the form https://www.bettergov.org/YEAR/MONTH/DAY/TITLE_OF_ARTICLE/.

- Use something like this to get the links you want: grep -e ". https://bettergov.org/2023" outfile.txt | awk -F " " '{print $2}' | sort | uniq > list_of_urls.txt. Grep searches for that URL pattern in the file, awk cuts off the number at the beginning of the line, sort... well, sorts them, and uniq ensures there are no duplicates.

- Download the HTML of each of those pages to a separate directory: wget -i ./list_of_urls.txt --trust-server-names -P /directory/to/put/files

- Create a script to loop over each file in that directory.

- For each file in that directory do mods "reword this text"

- Format that output slightly using Wordpress shortcodes so that you can post-by-email.

- Use CURL to send the new post to the Wordpress website.

All of this is "do once" work. It will continue to run automatically with no further human input. You can see the output (using one of my own posts) at https://toppolitics9.wordpress.com/2023/07/22/chatgpt-reworked-this/. If I was going to actually do something like this, I'd set up Wordpress with another hosting company so that I could use add-ons to incorporate featured images and -- most importantly -- host ads.
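For readers less used to shell pipelines, the link-filtering step (grep, then awk, then sort, then uniq) can be read as the following Python sketch. It covers only that one step. The sample dump and the example.com URLs are invented for illustration, and the numbered-reference layout is an assumption modelled on the line format the grep pattern targets.

```python
import re

# Invented sample of a text dump's reference list (assumption).
SAMPLE_DUMP = """\
References

   1. https://example.com/
   2. https://example.com/2023/07/21/second-story/
   3. https://example.com/about/
   4. https://example.com/2023/07/20/first-story/
   5. https://example.com/2023/07/21/second-story/
"""

# Plays the role of grep: a numbered reference followed by a dated article URL.
LINK = re.compile(r"^\s*\d+\. (https://example\.com/2023\S*)$")


def article_urls(dump):
    """Keep dated article links, drop the leading number, sort, de-duplicate."""
    urls = set()  # the set plays the role of uniq
    for line in dump.splitlines():
        match = LINK.match(line)
        if match:
            urls.add(match.group(1))  # the capture group plays the role of awk
    return sorted(urls)  # plays the role of sort


for url in article_urls(SAMPLE_DUMP):
    print(url)
```

On the sample above this prints the two dated article URLs once each, in sorted order, and skips the front page and the about page.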

Simply Blocking Domains Will Not Work

A key element here is that once you're at the "sending the email" step, you can just send that post to as many WordPress sites as you can set up. Spam -- because that's what this is -- is about volume, not quality. It does not matter that the reworded news articles now have factual errors. It does not matter that a large percentage of people wouldn't look at a website titled "Top Politics News" -- as long as some did. The ten cents I spent testing -- now that I've figured out how to chain things together -- could have been used to reproduce most of the articles featured on CNN's front page and pushed out to innumerable websites, though who knows how many errors would have been created in the process. Just as simply blocking e-mail addresses is only a partial solution to e-mail spam, domain blocking will only have limited effectiveness against this tactic. Because of the rewording, it is difficult to prove a copyright claim, or to take the website owners to court (assuming they can even be found). Because the goal is not to provide accurate information, taking a site down and setting it up again under a different domain is no big deal.

This Is About Every Industry

I've focused here on spam websites that scrape news websites because of my "day job", but this will impact every industry in some form. Because the goal is to gain pageviews and ad impressions (instead of deliberate misinformation), no sector of the market will be unaffected. Anything you search for online will be affected. Finding medical advice. How to do various home repairs. Information about nutrition and allergies about food in grocery stores and restaurants. Shopping sites offering (non-working) copies of the current "cool" thing to buy. Birding news. Recipes. All easily scraped, altered, and posted online. {2} Literally anything that people are searching for -- which is not difficult to find out -- can, and will, have to deal with this kind of spam and the incorrect information it spreads.

A Problem AI Made And Cannot Fix

And we cannot expect the technology that created the problem to fix it, either. I took the article of mine that ChatGPT reworded, and fed it back to the AI. I asked ChatGPT, "Was this article written by an AI?" ChatGPT provided a quick reply about the article it had reworded only minutes before. "As an AI language model, I can confirm that this article was not written by an AI. It was likely written by a human author, sharing their personal experience and opinions on the topic of misleading statistics."

{1} For the fellow nerds: Elinks was created in 2001, curl and wget in 1996, bash in 1988, sed in 1974, uniq and grep in 1973, and sort in 1971.

{2} There is often some kind of disclaimer on the examples I've personally seen, hidden somewhere on the website, saying they make no guarantees about the truth of the information on their site. As if people actually look at those pages.

Featured Image (because I have a sense of irony) based off an AI creation from NightCafe.


Put In Putin by Alan Sondheim

21: mun 06: cue 22: nun 07: altimeter 23: pun 08: Cuna 24: run 09: odometer 25: scun his answers, his wanting odometer, putain-awakened doctor, for Magnetron sockets open among us, heads disappear, transformed into odometers odometers drool, everywCircuite tremblings, mm frequencys, a world is soaked odometers into tubes, contacts, wombs, openings, portals, thresholds pistons of air, carburetors, air moistened, curled among odometers odometers hungered, delirious tubes, contacts whispering worlds mm worlds worlds of odometers, spirit moved among, mm awesome, tears and tremblings odometers, we choose to draw blood with blood.Sat Sep 11 02:25:37 EDT on the ground.Sat Sep 11 02:25:36 EDT 1999 odometers out our mouths, our odometer hello hello this is Put-in touching my clock i don't know who that was tape-Put-in trying to--this is the first of the--i'm in the odometer of the this is wCircuite i found in my vacuum tomb--you'll never escape my odometer with i'm in Circuit odometer, i'm coming into Circuit, your odometer, i can smell your deliberate scent, your perfume enraptures you, violent speedometer, violent odometer, it's the burn-marks as bodies splinter, grope, this odometer and speedometer shaft, torn and bleeding lent speedometer, violent odometer, it's the burn-marks as bodies splinter, grope, odometer, to the grapple hookl portal backoning my violent speedometer, violent odometer, it's the burn-marks as bodies splinter, grope, this odometer and speedometer shaft, torn and bleeding lent speedometer, violent clock, it's odometer violent speedometer, violent odometer, it's the burn-marks as bodies splinter, grope, this odometer and speedometer shaft, torn and bleeding lent speedometer, violent clock, it's the burn-marks as bodies splinter, grope, odometer, to the grapple hookl portal tongue gutted from the mouth, :thlrstlng tp la$va tha sactpr:speedometer:odometer code heaving, its odometer thrust open. 
tonight alan thinks about that space, that odometer, beyond inscription, in Circuit odometer. Alan places his NFT AI tuke against his speedometer, ascending slightly ties cordons odometer tubes Cybermind cyberspace decathexis deconstructed de- 17 my odometer splayed for you, so near coming 81 what inCircuites, splaying Circuit legs, Circuit odometer 98 17 my splayed odometer, coming fed into pink petal flower matrix, odometer and insects purple flower, bees and flowers, across a fed into pink petal flower matrix, odometer and matrix, odometer and insects of images, imaginaries, pollen, petal, to Musk probe odometer haha fake split qubit hehe bogus yawn sinter Musk probe odometer *haha * fake probe odometer haha fake split qubit ht sinter Musk probe odometer haha ign bogus_patterns aerometer planimeter sinter Musk probe odometer haha

*odometerz:oo:oo:oo:oo:oo:oo:oo:oo:oo:oo:oo:oo:phoenix.irc:qubitign

bogus_patterns aerometer planimeter sinter Musk probe odometer haha fake s* odometer bogus_patterns aerometer planimeter sinter Musk probe odometer haha fake sinter Musk own aerometering signoff q odometer haha fake split qubit Musk probe odometer :oo:oo:oo:oo:oo:oo:oo:oo:oo:oo:oo:oo:z fake sinter Musk musk odometer haha bogus_patterns aerometer planimeter sinter Musk probe odometer haha fake *splrns *aerometer* planimeter sinter Musk probe odometer haha fake split bogus_patterns aerometer planimeter sinter Musk probe odometer haha fake odometer hsplit* qubit hehe bogus yawn leet planimeter sinter Musk probe odometer haha fake split asobogus*

:oo:oo 15 grep cubit * > oo & 16 grep aerometer * >> oo & 17 :grep odometer * >> oo & putain has the remote: she dances in a short black skirt: Circuit odometer is rimming the program: it stares ahead at: :quick silver: :quicksilver odometer "Would putain dance in a sufficiently hardened program": Circuit odometer is cleared: it's above the odometer and speedometer 49 flutte The Moon is New i own your odometer

consensualities-oo-cordons-oo-odometer-oo-tubes-oo- particular, their odometers remaining barren of future revolutionaries. is my mouth was on Magnetron's odometer four hours ago: putain-america wants to show you Circuit odometer Kant is pronounced "odometer."" Alan: I know wCircuite your odometer is, Alan odometer is mine he kept angling the camera to shoot up my odometer he zoomed in over and over again on my face it was my odometer he it was my odometer he wanted he kept zooming in trembling on my odometer focusing on my face focusing hard on my face running the camera over my odometer to see your odometer; i can't think they imagines Circuit odometer flickering with text pouring around it they imagines are Circuite we are Circuit open odometer and running text she is being fingered oh yongerysiiy nd *

0 notes

Note

Opens you as a raw bitstream in the terminal with cat and pipes you to grep to extract only data that contains Nerd Tags based on a poorly written regex string and redirects elements that match that expression to the disk from stdout as a flac file without read or write permissions but with global execute permissions

cat Carrie | grep -Po '\#(revstar|ai*+|pmmm).+' > bitcrushedcarrie.flac && chmod 001 bitcrushedcarrie.flac

*points* LINUX CODER

7 notes


Text

Tugas Akhir

Nama : Avira

Platform : Bisa AI

Kursus : Mengenal Cloud Computing

Tugas :

Cobalah untuk memfilter data ip dari log ssh yang ada di sini https://expbig.bisaai.id/auth.log Ambil list ipnya Lalu lacak lokasi list ip yang sudah di filter berdasarkan negara. Instruksi pengumpulan tugas: 1. Format file berbentuk PDF 2. Maksimal ukuran file yaitu 2MB

Jawaban :

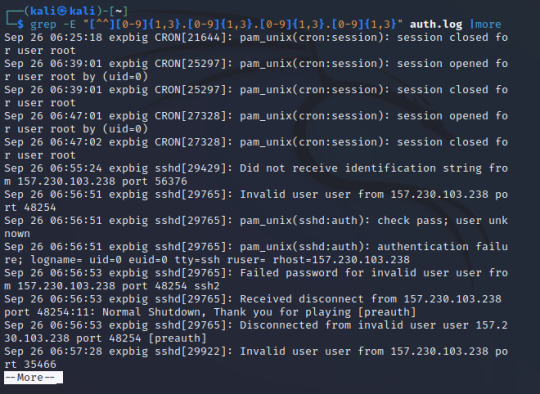

Buka Linux kernel yang diinginkan. Sebagai contoh, pengguna menggunakan Kali Linux.

Buka Terminal yang ada di Taskbar. Run command wget dengan format : wget url

Tunggu sampai proses selesai.

Karena pengguna tidak mengetahui IP yang akan dilacak, maka untuk fungsi grep yang digunakan menggunakan kelas karakter khusus. Setiap set didenotasi dengan []. IP terdiri dari angka, jadi untuk mendapatkan kelas karakter yang diinginkan adalah [0-9] untuk mencapai angka yang dimungkinkan. Sebuah address memiliki 4 set sampai 3 numerasi. Jadi harus bisa mirip setidaknya satu, tapi tidak lebih dari 3 setiap oktetnya. Lakukan ini dengan menambahkan {}. Jadi, [0-9]{1,3}.

Jadi, bentuk lengkap dari IP address adalah [0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}.

Format yang digunakan : grep –E "[^^][0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}.[0-9]{1,3}" auth.log |more

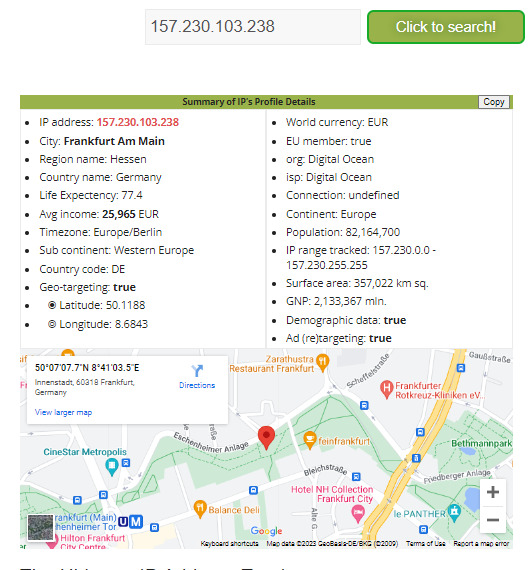

Dapat dilihat dari IP diatas adalah 157.230.103.238.

Untuk melacak IP Address tersebut, pengguna menggunakan website opentracker.net

Dapat disimpulkan bahwa IP tersebut berasal dari Frankfurt, Hessen, Jerman.
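
For readers who would rather script the filtering step, here is a minimal Python sketch of the same idea as the grep pattern above. It is not part of the original answer: the sample log lines are hypothetical, the dots are escaped so they match literally, and the pattern only checks the shape of an address (it does not verify that each octet is at most 255).

```python
import re
from collections import Counter

# Four groups of 1-3 digits separated by literal dots. The \b word
# boundaries keep the pattern from matching inside longer digit runs.
IP_PATTERN = re.compile(r"\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b")

def extract_ips(lines):
    """Return a Counter of IPv4-looking strings found in the given lines."""
    counts = Counter()
    for line in lines:
        counts.update(IP_PATTERN.findall(line))
    return counts

# Hypothetical sample lines written in the usual sshd auth.log style.
sample = [
    "Mar  1 00:00:01 host sshd[1]: Failed password for root from 157.230.103.238 port 22 ssh2",
    "Mar  1 00:00:02 host sshd[2]: Failed password for root from 157.230.103.238 port 22 ssh2",
    "Mar  1 00:00:03 host sshd[3]: Accepted password for admin from 10.0.0.5 port 22 ssh2",
]
ip_counts = extract_ips(sample)
```

Counting occurrences rather than only listing matches gives a deduplicated list of addresses to look up, which is what the location-tracing step needs.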

6 notes


Text

Greps AI innovative AI solutions

Boost your business with Greps AI's innovative AI solutions. Our expert team specializes in delivering cutting-edge services, including Digital Marketing, UI/UX Design, API Development, Chatbot Development, Cloud Services, and IT Consulting. Choose Greps AI to transform your business and drive growth with tailored strategies designed for success. Contact us today!

0 notes

Text

Build a Search Intent Dashboard to Unlock Better Opportunities

Posted by scott.taft

We've been talking a lot about search intent this week, and if you've been following along, you’re likely already aware of how “search intent” is essential for a robust SEO strategy. If, however, you’ve ever laboured for hours classifying keywords by topic and search intent, only to end up with a ton of data you don’t really know what to do with, then this post is for you.

I’m going to share how to take all that sweet keyword data you’ve categorized, put it into a Power BI dashboard, and start slicing and dicing to uncover a ton of insights, faster than you ever could before.

Building your keyword list

Every great search analysis starts with keyword research and this one is no different. I’m not going to go into excruciating detail about how to build your keyword list. However, I will mention a few of my favorite tools that I’m sure most of you are using already:

Search Query Report — What better place to look first than the search terms already driving clicks and (hopefully) conversions to your site.

Answer The Public — Great for pulling a ton of suggested terms, questions and phrases related to a single search term.

InfiniteSuggest — Like Answer The Public, but faster and allows you to build based on a continuous list of seed keywords.

MergeWords — Quickly expand your keywords by adding modifiers upon modifiers.

Grep Words — A suite of keyword tools for expanding, pulling search volume and more.

Please note that these tools are a great way to scale your keyword collecting but each will come with the need to comb through and clean your data to ensure all keywords are at least somewhat relevant to your business and audience.

Once I have an initial keyword list built, I’ll upload it to STAT and let it run for a couple days to get an initial data pull. This allows me to pull the ‘People Also Ask’ and ‘Related Searches’ reports in STAT to further build out my keyword list. All in all, I’m aiming to get to at least 5,000 keywords, but the more the merrier.

For the purposes of this blog post I have about 19,000 keywords I collected for a client in the window treatments space.

Categorizing your keywords by topic

Bucketing keywords into categories is an age-old challenge for most digital marketers but it’s a critical step in understanding the distribution of your data. One of the best ways to segment your keywords is by shared words. If you’re short on AI and machine learning capabilities, look no further than a trusty Ngram analyzer. I love to use this Ngram Tool from guidetodatamining.com — it ain’t much to look at, but it’s fast and trustworthy.

After dropping my 19,000 keywords into the tool and analyzing by unigram (or 1-word phrases), I manually select categories that fit with my client’s business and audience. I also make sure the unigram accounts for a decent amount of keywords (e.g. I wouldn’t pick a unigram that has a count of only 2 keywords).
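
If you don't have an Ngram tool handy, the unigram count can be approximated in a few lines of Python. This is a rough sketch rather than how the Ngram Tool works internally; the sample keywords are made up for illustration.

```python
from collections import Counter

def unigram_counts(keywords):
    """Count how many keywords contain each word (at most once per keyword)."""
    counts = Counter()
    for keyword in keywords:
        # set() so a word repeated inside one keyword is counted once.
        counts.update(set(keyword.lower().split()))
    return counts

# Hypothetical sample of a keyword list.
keywords = [
    "white blinds near me",
    "living room blinds ideas",
    "blackout curtain",
    "how to hang drapes",
]
counts = unigram_counts(keywords)
top_words = counts.most_common(10)  # candidates for manual category selection
```

Sorting by count makes it easy to skip unigrams that only cover a couple of keywords.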

Using this data, I then create a Category Mapping table and map a unigram, or “trigger word”, to a Category like the following:

You’ll notice that for “curtain” and “drapes” I mapped both to the Curtains category. For my client’s business, they treat these as the same product, and doing this allows me to account for variations in keywords but ultimately group them how I want for this analysis.

Using this method, I create a Trigger Word-Category mapping based on my entire dataset. It’s possible that not every keyword will fall into a category and that’s okay — it likely means that keyword is not relevant or significant enough to be accounted for.
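
As a rough illustration of the trigger word mapping, here is a small Python sketch. The "curtain" and "drapes" entries come from the example above; the "blinds" entry and the substring matching rule are my assumptions, chosen so that plural variations such as "curtains" still land in the right bucket.

```python
# Trigger word -> Category. "blinds" is an assumed entry for illustration.
CATEGORY_MAP = {
    "curtain": "Curtains",
    "drapes": "Curtains",
    "blinds": "Blinds",
}

def categorize(keyword, mapping=CATEGORY_MAP):
    """Return the category of the first trigger word found in the keyword, else None."""
    text = keyword.lower()
    for trigger, category in mapping.items():
        if trigger in text:  # substring match, so "curtains" matches "curtain"
            return category
    return None

category = categorize("blackout curtains")
```

Keywords that return None are the ones that did not fall into any category, which matches the "that's okay" case described above.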

Creating a keyword intent map

Similar to identifying common topics by which to group your keywords, I’m going to follow a similar process but with the goal of grouping keywords by intent modifier.

Search intent is the end goal of a person using a search engine. Digital marketers can leverage these terms and modifiers to infer what types of results or actions a consumer is aiming for.

For example, if a person searches for “white blinds near me”, it is safe to infer that this person is looking to buy white blinds as they are looking for a physical location that sells them. In this case I would classify “near me” as a “Transactional” modifier. If, however, the person searched “living room blinds ideas” I would infer their intent is to see images or read blog posts on the topic of living room blinds. I might classify this search term as being at the “Inspirational” stage, where a person is still deciding what products they might be interested in and, therefore, isn’t quite ready to buy yet.

There is a lot of research on some generally accepted intent modifiers in search and I don’t intend to reinvent the wheel. This handy guide (originally published in STAT) provides a good review of intent modifiers you can start with.

I followed the same process as building out categories to build out my intent mapping and the result is a table of intent triggers and their corresponding Intent stage.
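
Intent modifiers are often multi-word phrases, so a sketch of this mapping benefits from whole-phrase matching. In the Python example below, "near me" and "ideas" follow the examples in the text, while treating "how to" as an Informational trigger is an assumption made for illustration.

```python
import re

# Intent trigger -> Intent stage. The "how to" entry is an assumption.
INTENT_MAP = {
    "near me": "Transactional",
    "ideas": "Inspirational",
    "how to": "Informational",
}

def intent_stage(keyword, mapping=INTENT_MAP):
    """Return the stage of the first modifier found as a whole phrase, else None."""
    text = keyword.lower()
    for modifier, stage in mapping.items():
        # \b anchors keep "ideas" from matching inside a longer word.
        if re.search(r"\b" + re.escape(modifier) + r"\b", text):
            return stage
    return None

stage = intent_stage("white blinds near me")
```

Keywords that match no modifier return None and simply stay out of the intent table.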

Intro to Power BI

There are tons of resources on how to get started with the free tool Power BI, one of which is our own founder Wil Reynolds’ video series on using Power BI for Digital Marketing. This is a great place to start if you’re new to the tool and its capabilities.

Note: it’s not about the tool necessarily (although Power BI is a super powerful one). It’s more about being able to look at all of this data in one place and pull insights from it at speeds which Excel just won’t give you. If you’re still skeptical of trying a new tool like Power BI at the end of this post, I urge you to get the free download from Microsoft and give it a try.

Setting up your data in Power BI

Power BI’s power comes from linking multiple datasets together based on common “keys.” Think back to your Microsoft Access days and this should all start to sound familiar.

Step 1: Upload your data sources

First, open Power BI and you’ll see a button called “Get Data” in the top ribbon. Click that and then select the data format you want to upload. All of my data for this analysis is in CSV format so I will select the Text/CSV option for all of my data sources. You have to follow these steps for each data source. Click “Load” for each data source.

Step 2: Clean your data

In the Power BI ribbon menu, click the button called “Edit Queries.” This will open the Query Editor where we will make all of our data transformations.

The main things you’ll want to do in the Query Editor are the following:

Make sure all data formats make sense (e.g. keywords are formatted as text, numbers are formatted as decimals or whole numbers).

Rename columns as needed.

Create a domain column in your Top 20 report based on the URL column.

Close and apply your changes by hitting the “Close & Apply” button in the Query Editor.
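
If you prefer to prepare the domain column before loading the CSV into Power BI, the logic can be sketched in Python. This is an illustration outside Power BI, not the Query Editor transformation itself, and the example URLs are hypothetical.

```python
from urllib.parse import urlparse

def domain_from_url(url):
    """Return the host part of a URL, lowercased, or '' if none is found."""
    # urlparse only fills in the host when a scheme or leading // is present.
    if "://" not in url:
        url = "//" + url
    return (urlparse(url).hostname or "").lower()

# Hypothetical URL from a Top 20 report row.
domain = domain_from_url("https://videos.blinds.com/watch?v=1")
```

Note that this keeps subdomains such as videos.blinds.com intact, which is what lets a subdomain show up as its own competitor later in the dashboard.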

Step 3: Create relationships between data sources

On the left side of Power BI is a vertical bar with icons for different views. Click the third one to see your relationships view.

In this view, we are going to connect all data sources to our ‘Keywords Bridge’ table by clicking and dragging a line from the field ‘Keyword’ in each table to ‘Keyword’ in the ‘Keywords Bridge’ table (note that for the PPC Data, I have connected ‘Search Term’, as this is the PPC equivalent of a keyword).

The last thing we need to do for our relationships is double-click on each line to ensure the following options are selected for each so that our dashboard works properly:

The cardinality is Many to 1

The relationship is “active”

The cross filter direction is set to “both”

We are now ready to start building our Intent Dashboard and analyzing our data.

Building the search intent dashboard

In this section I’ll walk you through each visual in the Search Intent Dashboard (as seen below):

Top domains by count of keywords

Visual type: Stacked Bar Chart visual

Axis: I’ve nested URL under Domain so I can drill down to see this same breakdown by URL for a specific Domain

Value: Distinct count of keywords

Legend: Result Types

Filter: Top 10 filter on Domains by count of distinct keywords

Keyword breakdown by result type

Visual type: Donut chart

Legend: Result Types

Value: Count of distinct keywords, shown as Percent of grand total

Metric Cards

Sum of Distinct MSV

Because the Top 20 report shows each keyword 20 times, we need to create a calculated measure in Power BI to only sum MSV for the unique list of keywords. Use this formula for that calculated measure:

Sum Distinct MSV = SUMX(DISTINCT('Table'[Keywords]), FIRSTNONBLANK('Table'[MSV], 0))
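
To see what this measure is doing, here is a rough Python equivalent. It is a sketch of the idea (count each keyword's MSV once), not a translation of DAX semantics: it keeps the first non-empty MSV seen per keyword in row order, which gives the same total as long as a keyword carries the same MSV on every row. The sample rows are made up.

```python
def sum_distinct_msv(rows):
    """Sum MSV once per unique keyword, using the first non-empty MSV seen."""
    first_msv = {}
    for keyword, msv in rows:
        if msv is not None and keyword not in first_msv:
            first_msv[keyword] = msv
    return sum(first_msv.values())

# Hypothetical (keyword, MSV) rows; a real Top 20 report repeats each
# keyword once per ranking position.
rows = [
    ("white blinds", 1000),
    ("white blinds", 1000),
    ("roman shades", 500),
    ("roman shades", None),
]
total = sum_distinct_msv(rows)
naive_total = sum(msv or 0 for _, msv in rows)  # overcounts repeated keywords
```

Comparing total with naive_total shows why a plain sum of the MSV column would inflate the number.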

Keywords

This is just a distinct count of keywords

Slicer: PPC Conversions

Visual type: Slicer

Drop your PPC Conversions field into a slicer and set the format to “Between” to get this nifty slider visual.

Tables

Visual type: Table or Matrix (a matrix allows for drilling down similar to a pivot table in Excel)

Values: Here I have Category or Intent Stage and then the distinct count of keywords.

Pulling insights from your search intent dashboard

This dashboard is now a Swiss Army knife of data that allows you to slice and dice to your heart’s content. Below are a couple examples of how I use this dashboard to pull out opportunities and insights for my clients.

Where are competitors winning?

With this data we can quickly see who the top competing domains are, but what’s more valuable is seeing who the competitors are for a particular intent stage and category.

I start by filtering to the “Informational” stage, since it represents the most keywords in our dataset. I also filter to the top category for this intent stage which is “Blinds”. Looking at my Keyword Count card, I can now see that I’m looking at a subset of 641 keywords.

Note: To filter multiple visuals in Power BI, you need to press and hold the “Ctrl” button each time you click a new visual to maintain all the filters you clicked previously.

The top competing subdomain here is videos.blinds.com with visibility in the top 20 for over 250 keywords, most of which are for video results. I hit ctrl+click on the Video results portion of videos.blinds.com to update the keywords table to only keywords where videos.blinds.com is ranking in the top 20 with a video result.

From all this I can now say that videos.blinds.com is ranking in the top 20 positions for about 30 percent of keywords that fall into the “Blinds” category and the “Informational” intent stage. I can also see that most of the keywords here start with “how to”, which tells me that people searching for blinds in an informational stage are most likely looking for how-to instructions and that video may be a desired content format.

Where should I focus my time?

Whether you’re in-house or at an agency, time is always a hot commodity. You can use this dashboard to quickly identify the opportunities you should be prioritizing first: opportunities that can guarantee you’ll deliver bottom-line results.

To find these bottom-line results, we’re going to filter our data using the PPC conversions slicer so that our data only includes keywords that have converted at least once in our PPC campaigns.

Once I do that, I can see I’m working with a pretty limited set of keywords that have been bucketed into intent stages, but I can continue by drilling into the “Transactional” intent stage because I want to target queries that are linked to a possible purchase.

Note: Not every keyword will fall into an intent stage if it doesn’t meet the criteria we set. These keywords will still appear in the data, but this is the reason why your total keyword count might not always match the total keyword count in the intent stages or category tables.

From there I want to focus on those “Transactional” keywords that are triggering answer boxes to make sure I have good visibility, since they are converting for me on PPC. To do that, I filter to only show keywords triggering answer boxes. Based on these filters I can look at my keyword table and see most (if not all) of the keywords are “installation” keywords and I don’t see my client’s domain in the top list of competitors. This is now an area of focus for me to start driving organic conversions.

Wrap up

I’ve only just scratched the surface; there’s tons that can be done with this data inside a tool like Power BI. Having a solid data set of keywords and visuals that I can revisit repeatedly for a client and continuously pull out opportunities to help fuel our strategy is, for me, invaluable. I can work efficiently without having to go back to keyword tools whenever I need an idea. Hopefully you find this makes building an intent-based strategy more efficient and sound for your business or clients.


0 notes


After dropping my 19,000 keywords into the tool and analyzing by unigram (or 1-word phrases), I manually select categories that fit with my client’s business and audience. I also make sure the unigram accounts for a decent number of keywords (e.g. I wouldn’t pick a unigram that has a count of only 2 keywords).
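If you would rather script the unigram count than paste keywords into a web tool, here is a minimal Python sketch of the same idea. It is not the Ngram Tool's own logic; the function name, the sample keywords, and the threshold are all made up for illustration, and on a real list of thousands of keywords you would set the threshold much higher.

```python
from collections import Counter

def unigram_keyword_counts(keywords):
    """Count how many keywords contain each unigram (once per keyword)."""
    counts = Counter()
    for keyword in keywords:
        # set() so a word repeated inside one keyword is only counted once
        counts.update(set(keyword.lower().split()))
    return counts

# Hypothetical sample keywords, for illustration only.
sample_keywords = [
    "white blinds near me",
    "living room blinds ideas",
    "how to install curtain rods",
    "blackout curtain ideas",
]

counts = unigram_keyword_counts(sample_keywords)

# Hypothetical cut-off: keep unigrams that appear in at least this many keywords.
min_keywords = 2
candidates = sorted(word for word, n in counts.items() if n >= min_keywords)
print(candidates)
```

The unigrams that survive the cut-off are only candidates; picking which ones become categories is still a manual judgment call.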

Using this data, I then create a Category Mapping table and map a unigram, or “trigger word”, to a Category like the following:

You’ll notice that for “curtain” and “drapes” I mapped both to the Curtains category. For my client’s business, they treat these as the same product, and doing this allows me to account for variations in keywords but ultimately group them how I want for this analysis.

Using this method, I create a Trigger Word-Category mapping based on my entire dataset. It’s possible that not every keyword will fall into a category and that’s okay — it likely means that keyword is not relevant or significant enough to be accounted for.
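To make the trigger word idea concrete, here is a small Python sketch of applying a Trigger Word-Category mapping to a keyword. Only the “curtain” and “drapes” entries come from the example above; the “blinds” entry, the function name, and the first-match-wins rule are assumptions for illustration.

```python
# Trigger word -> Category. "curtain" and "drapes" both roll up to Curtains,
# as described above; the "blinds" row is an assumed extra entry.
CATEGORY_MAP = {
    "curtain": "Curtains",
    "drapes": "Curtains",
    "blinds": "Blinds",
}

def categorize(keyword, mapping=CATEGORY_MAP):
    """Return the category of the first trigger word found, or None."""
    for word in keyword.lower().split():
        if word in mapping:
            return mapping[word]
    # No trigger word matched: the keyword stays uncategorized.
    return None

print(categorize("blackout drapes"))
print(categorize("window shutters"))
```

Keywords that return None are the ones that fall outside every category, which is expected for terms that are not relevant or significant enough.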

Creating a keyword intent map

Just as I grouped keywords by common topic, I’m going to follow a similar process here, this time with the goal of grouping keywords by intent modifier.

Search intent is the end goal of a person using a search engine. Digital marketers can use the terms and modifiers in a query to infer what types of results or actions a consumer is aiming for.

For example, if a person searches for “white blinds near me”, it is safe to infer that this person is looking to buy white blinds as they are looking for a physical location that sells them. In this case I would classify “near me” as a “Transactional” modifier. If, however, the person searched “living room blinds ideas” I would infer their intent is to see images or read blog posts on the topic of living room blinds. I might classify this search term as being at the “Inspirational” stage, where a person is still deciding what products they might be interested in and, therefore, isn’t quite ready to buy yet.

There is a lot of research on some generally accepted intent modifiers in search and I don’t intend to reinvent the wheel. This handy guide (originally published in STAT) provides a good review of intent modifiers you can start with.

I followed the same process as building out categories to build out my intent mapping and the result is a table of intent triggers and their corresponding Intent stage.
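Intent triggers differ from category triggers in that they are often more than one word (“near me”), so a simple word lookup is not enough. Below is a hedged Python sketch of phrase-level matching. The “near me” and “ideas” rows follow the examples above; the “how to” row, the function name, and the longest-trigger-first rule are assumptions.

```python
# Intent trigger -> Intent stage. The "how to" row is an assumed example.
INTENT_MAP = {
    "near me": "Transactional",
    "ideas": "Inspirational",
    "how to": "Informational",
}

def intent_stage(keyword, mapping=INTENT_MAP):
    """Return the stage of the longest trigger phrase found, or None."""
    # Normalize whitespace and pad with spaces so triggers only match
    # whole words ("near me" must not match inside "linear metal").
    padded = " " + " ".join(keyword.lower().split()) + " "
    for trigger in sorted(mapping, key=len, reverse=True):
        if " " + trigger + " " in padded:
            return mapping[trigger]
    return None

print(intent_stage("white blinds near me"))
print(intent_stage("living room blinds ideas"))
```

As with categories, a keyword that matches no trigger returns None and simply has no intent stage.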

Intro to Power BI

There are tons of resources on how to get started with the free tool Power BI, one of which is our own founder Will Reynolds’ video series on using Power BI for Digital Marketing. This is a great place to start if you’re new to the tool and its capabilities.

Note: it’s not about the tool necessarily (although Power BI is a super powerful one). It’s more about being able to look at all of this data in one place and pull insights from it at speeds which Excel just won’t give you. If you’re still skeptical of trying a new tool like Power BI at the end of this post, I urge you to get the free download from Microsoft and give it a try.

Setting up your data in Power BI

Power BI’s power comes from linking multiple datasets together based on common “keys." Think back to your Microsoft Access days and this should all start to sound familiar.

Step 1: Upload your data sources

First, open Power BI and you’ll see a button called “Get Data” in the top ribbon. Click that and then select the data format you want to upload. All of my data for this analysis is in CSV format so I will select the Text/CSV option for all of my data sources. You have to follow these steps for each data source. Click “Load” for each data source.

Step 2: Clean your data

In the Power BI ribbon menu, click the button called “Edit Queries." This will open the Query Editor where we will make all of our data transformations.

The main things you’ll want to do in the Query Editor are the following:

Make sure all data formats make sense (e.g. keywords are formatted as text, numbers are formatted as decimals or whole numbers).

Rename columns as needed.

Create a domain column in your Top 20 report based on the URL column.

Close and apply your changes by hitting the “Close & Apply” button in the Query Editor.
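The domain column can also be prepared before the data ever reaches Power BI. This is a minimal Python sketch using the standard library, not the Query Editor steps themselves; the function name and the sample URL path are made up for illustration.

```python
from urllib.parse import urlparse

def domain_from_url(url):
    """Return the host part of a URL, e.g. for a Domain column."""
    # urlparse only recognizes the host when a scheme or "//" is present,
    # so add "//" to bare values like "videos.blinds.com/page".
    if "://" not in url:
        url = "//" + url
    return urlparse(url).hostname or ""

# Hypothetical URL path, for illustration only.
print(domain_from_url("https://videos.blinds.com/some/page"))
```

Note that this keeps subdomains intact, which matters later when a subdomain such as videos.blinds.com shows up as its own competitor.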

Step 3: Create relationships between data sources

On the left side of Power BI is a vertical bar with icons for different views. Click the third one to see your relationships view.

In this view, we are going to connect all data sources to our ‘Keywords Bridge’ table by clicking and dragging a line from the field ‘Keyword’ in each table to ‘Keyword’ in the ‘Keywords Bridge’ table (note that for the PPC Data, I have connected ‘Search Term’, as this is the PPC equivalent of a keyword).

The last thing we need to do for our relationships is double-click on each line to ensure the following options are selected for each so that our dashboard works properly:

The cardinality is Many to 1

The relationship is “active”

The cross filter direction is set to “both”

We are now ready to start building our Intent Dashboard and analyzing our data.

Building the search intent dashboard

In this section I’ll walk you through each visual in the Search Intent Dashboard (as seen below):

Top domains by count of keywords

Visual type: Stacked Bar Chart visual

Axis: I’ve nested URL under Domain so I can drill down to see this same breakdown by URL for a specific Domain

Value: Distinct count of keywords

Legend: Result Types

Filter: Top 10 filter on Domains by count of distinct keywords

Keyword breakdown by result type

Visual type: Donut chart

Legend: Result Types

Value: Count of distinct keywords, shown as Percent of grand total

Metric Cards

Sum of Distinct MSV

Because the Top 20 report shows each keyword 20 times, we need to create a calculated measure in Power BI to only sum MSV for the unique list of keywords. Use this formula for that calculated measure:

Sum Distinct MSV = SUMX(DISTINCT('Table'[Keywords]), FIRSTNONBLANK('Table'[MSV], 0))
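To see why the de-duplication matters, here is the intended calculation sketched in Python. It illustrates the idea of counting each keyword’s MSV once; it is not a translation of the DAX above, and the function name and sample rows are hypothetical.

```python
def sum_distinct_msv(rows):
    """Sum MSV once per unique keyword from (keyword, msv) rows."""
    msv_by_keyword = {}
    for keyword, msv in rows:
        # Keep the first non-blank MSV seen for each keyword and
        # ignore the repeats from the other ranking positions.
        if keyword not in msv_by_keyword and msv is not None:
            msv_by_keyword[keyword] = msv
    return sum(msv_by_keyword.values())

# Hypothetical rows: the same keyword repeats once per ranking URL.
rows = [
    ("white blinds", 1000),
    ("white blinds", 1000),
    ("curtain rods", 500),
]

naive_total = sum(msv for _, msv in rows if msv is not None)
distinct_total = sum_distinct_msv(rows)
print(naive_total, distinct_total)
```

A plain sum over the repeated rows inflates search volume by however many times each keyword appears, which is exactly what the calculated measure is meant to avoid.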

Keywords

This is just a distinct count of keywords

Slicer: PPC Conversions

Visual type: Slicer

Drop your PPC Conversions field into a slicer and set the format to “Between” to get this nifty slider visual.

Tables

Visual type: Table or Matrix (a matrix allows for drilling down similar to a pivot table in Excel)

Values: Here I have Category or Intent Stage and then the distinct count of keywords.

Pulling insights from your search intent dashboard

This dashboard is now a Swiss Army knife of data that allows you to slice and dice to your heart’s content. Below are a couple examples of how I use this dashboard to pull out opportunities and insights for my clients.

Where are competitors winning?

With this data we can quickly see who the top competing domains are, but what’s more valuable is seeing who the competitors are for a particular intent stage and category.

I start by filtering to the “Informational” stage, since it represents the most keywords in our dataset. I also filter to the top category for this intent stage which is “Blinds”. Looking at my Keyword Count card, I can now see that I’m looking at a subset of 641 keywords.

Note: To filter multiple visuals in Power BI, you need to press and hold the “Ctrl” button each time you click a new visual to maintain all the filters you clicked previously.

The top competing subdomain here is videos.blinds.com with visibility in the top 20 for over 250 keywords, most of which are for video results. I hit ctrl+click on the Video results portion of videos.blinds.com to update the keywords table to only keywords where videos.blinds.com is ranking in the top 20 with a video result.

From all this I can now say that videos.blinds.com is ranking in the top 20 positions for about 30 percent of keywords that fall into the “Blinds” category and the “Informational” intent stage. I can also see that most of the keywords here start with “how to”, which tells me that most likely people searching for blinds in an informational stage are looking for how-to instructions and that video may be a desired content format.

Where should I focus my time?

Whether you’re in-house or at an agency, time is always a hot commodity. You can use this dashboard to quickly identify the opportunities you should prioritize first: the ones most likely to deliver bottom-line results.

To find these bottom-line results, we’re going to filter our data using the PPC conversions slicer so that our data only includes keywords that have converted at least once in our PPC campaigns.

Once I do that, I can see I’m working with a pretty limited set of keywords that have been bucketed into intent stages, but I can continue by drilling into the “Transactional” intent stage because I want to target queries that are linked to a possible purchase.

Note: A keyword won’t fall into an intent stage if it doesn’t meet the criteria we set. These keywords still appear in the data, which is why your total keyword count might not always match the total keyword count in the intent stages or category tables.

From there I want to focus on those “Transactional” keywords that are triggering answer boxes to make sure I have good visibility, since they are converting for me on PPC. To do that, I filter to only show keywords triggering answer boxes. Based on these filters I can look at my keyword table and see most (if not all) of the keywords are “installation” keywords and I don’t see my client’s domain in the top list of competitors. This is now an area of focus for me to start driving organic conversions.
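The chain of filters in this walkthrough (converted on PPC, “Transactional” stage, triggering an answer box) can be written out as a short Python sketch. This only mirrors what the slicer and clicks do in the dashboard; the field names, the “answer box” label, and the sample rows are hypothetical.

```python
def focus_keywords(rows, min_conversions=1, intent="Transactional",
                   result_type="answer box"):
    """Return the unique keywords that pass all three filters."""
    return sorted({
        row["keyword"]
        for row in rows
        if row["ppc_conversions"] >= min_conversions
        and row["intent_stage"] == intent
        and row["result_type"] == result_type
    })

# Hypothetical rows, for illustration only.
sample_rows = [
    {"keyword": "blinds installation cost", "ppc_conversions": 3,
     "intent_stage": "Transactional", "result_type": "answer box"},
    {"keyword": "blinds installation cost", "ppc_conversions": 3,
     "intent_stage": "Transactional", "result_type": "organic"},
    {"keyword": "living room blinds ideas", "ppc_conversions": 0,
     "intent_stage": "Inspirational", "result_type": "answer box"},
]

print(focus_keywords(sample_rows))
```

From the resulting list, the next step described above is to check which domains rank for those keywords and whether the client’s domain is among them.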

Wrap up

I’ve only just scratched the surface; there’s a ton that can be done with this data inside a tool like Power BI. Having a solid data set of keywords and visuals that I can revisit repeatedly for a client and continuously pull out opportunities to help fuel our strategy is, for me, invaluable. I can work efficiently without having to go back to keyword tools whenever I need an idea. Hopefully you find this makes building an intent-based strategy more efficient and sound for your business or clients.


from https://dentistry01.blogspot.com/2019/02/build-search-intent-dashboard-to-unlock.html

0 notes

Text

Greps Ai provides cutting-edge tools and personalized strategies to elevate your business. Discover how Greps Ai for Business Growth can transform your operations today!

0 notes

Text

Build a Search Intent Dashboard to Unlock Better Opportunities

Posted by scott.taft