#Google Cloud AI Platform


Text

In today’s competitive mobile app market, it’s crucial to find ways to stand out from the crowd. One powerful tool that can help you achieve this is artificial intelligence (AI). AI is rapidly transforming industries, and mobile app development is no exception. This article explores the benefits of integrating AI into your mobile app. Let’s dive deeper and see how AI can revolutionize your app and boost your business.


Text

Google Document AI is an exciting new technology that is changing the way we interact with information. It helps organizations and individuals process large volumes of documents quickly and accurately.

It does this by combining natural language processing, computer vision, and machine learning. This technology also helps to extract valuable insights from the data. As the technology continues to develop, we can expect to see even more exciting applications in the years to come.

#Google Document AI#AI/ML tools and services#Google Vertex AI#google cloud ai platform#AI/ML solutions#Onix


Text

Top AI Tools to Start Your Training in 2024

Empower Your AI Journey with Beginner-Friendly Platforms Like TensorFlow, PyTorch, and Google Colab

The rapid advancements in artificial intelligence (AI) have transformed the way we work, live, and learn. For aspiring AI enthusiasts, diving into this exciting field requires a combination of theoretical understanding and hands-on experience. Fortunately, the right tools can make the learning…

#accessible AI learning#ai#AI education#AI for beginners#AI learning resources#AI technology 2024#AI tools#AI tools for students#AI tools roundup#AI training for beginners#AI training platforms#artificial intelligence training#artificial-intelligence#beginner-friendly AI platforms#cloud-based AI tools#data science tools#deep learning tools#future of AI#Google Colab#machine learning frameworks#machine-learning#neural networks#PyTorch#TensorFlow


Text

Redefine Customer Engagement with AI-Powered Application Solutions

In today’s digital landscape, customer engagement is more crucial than ever. ATCuality’s AI-powered applications redefine how businesses interact with their audience, creating personalized experiences that foster loyalty and drive satisfaction. Our applications utilize cutting-edge AI algorithms to analyze customer behavior, preferences, and trends, enabling your business to anticipate needs and respond proactively. Whether you're in e-commerce, finance, or customer service, our AI-powered applications can optimize your customer journey, automate responses, and provide insights that lead to improved service delivery. ATCuality’s commitment to innovation ensures that each AI-powered application is adaptable, scalable, and perfectly aligned with your brand’s voice, keeping your customers engaged and coming back for more.

#digital marketing#seo services#artificial intelligence#seo marketing#seo agency#seo company#iot applications#amazon web services#azure cloud services#ai powered application#android app development#mobile application development#app design#advertising#google ads#augmented and virtual reality market#augmented reality agency#augmented human c4 621#augmented reality#iot development services#iot solutions#iot development company#iot platform#embedded software#task management#cloud security services#cloud hosting in saudi arabia#cloud computing#sslcertificate#ssl


Text

WBSV

WorldBridge Sport Village is a mixed-use development in the rapidly growing area of Chroy Changvar, just 20 minutes away from Phnom Penh's Central Business District. Billed as the first-ever Sport Village, it offers a one-of-a-kind opportunity to blend work and play in a health-conscious environment inspired by international-level sports villages, akin to Olympic athlete villages. WorldBridge Sport Village also offers a range of landed homes: villas, townhouses, row houses, and shophouses that can accommodate families of any size looking to live in the next big neighborhood in the fastest-growing area of Chroy Changvar.

• Project: WORLD BRIDGE SPORT VILLAGE
• Developer: OXLEY-WORLDBRIDGE (CAMBODIA) CO., LTD.
• Subsidiary: WB SPORT VILLAGE CO., LTD.
• Architectural Manager: Sonetra KETH
• Location: Phnom Penh, Cambodia

The condo units offer up to 3-bedroom selections across 12 high-rise blocks with spacious interiors and breathtaking views.


#Sonetra Keth#Architectural Manager#Architectural Design Manager#BIM Director#BIM Manager#BIM Coordinator#Project Manager#RMIT University Vietnam#Institute of Technology of Cambodia#Real Estate Development#Construction Industry#Building Information Modelling#BIM#AI#Artificial Intelligence#Technology#VDC#Virtual Design#IoT#Machine Learning#C4R#Collaboration for Revit#Cloud Computing and Collaboration Platforms#NETRA#netra#នេត្រា#កេត សុនេត្រា


Photo

A Comprehensive Guide about Google Cloud Generative AI Studio https://medium.com/google-cloud/a-comprehensive-guide-about-google-cloud-generative-ai-studio-1b2bafc4108a?source=rss----e52cf94d98af---4

#google-cloud-platform#generative-ai#generative-ai-tools#generative-ai-solution#large-language-models#Rubens Zimbres#Google Cloud - Community - Medium


Text

“Disenshittify or Die”


I'm coming to BURNING MAN! On TUESDAY (Aug 27) at 1PM, I'm giving a talk called "DISENSHITTIFY OR DIE!" at PALENQUE NORTE (7&E). On WEDNESDAY (Aug 28) at NOON, I'm doing a "Talking Caterpillar" Q&A at LIMINAL LABS (830&C).

Last weekend, I traveled to Las Vegas for Defcon 32, where I had the immense privilege of giving a solo talk on Track 1, entitled "Disenshittify or die! How hackers can seize the means of computation and build a new, good internet that is hardened against our asshole bosses' insatiable horniness for enshittification":

https://info.defcon.org/event/?id=54861

This was a followup to last year's talk, "An Audacious Plan to Halt the Internet's Enshittification," a talk that kicked off a lot of international interest in my analysis of platform decay ("enshittification"):

https://www.youtube.com/watch?v=rimtaSgGz_4

The Defcon organizers have earned a restful week or two, and that means that the video of my talk hasn't yet been posted to Defcon's Youtube channel, so in the meantime, I thought I'd post a lightly edited version of my speech crib. If you're headed to Burning Man, you can hear me reprise this talk at Palenque Norte (7&E); I'm kicking off their lecture series on Tuesday, Aug 27 at 1PM.

==

What the fuck happened to the old, good internet?

I mean, sure, our bosses were a little surveillance-happy, and they were usually up for sharing their data with the NSA, and whenever there was a tossup between user security and growth, it was always YOLO time.

But Google Search used to work. Facebook used to show you posts from people you followed. Uber used to be cheaper than a taxi and pay the driver more than a cabbie made. Amazon used to sell products, not Shein-grade self-destructing dropshipped garbage from all-consonant brands. Apple used to defend your privacy, rather than spying on you with your no-modifications-allowed Iphone.

There was a time when searching for an album on Spotify would get you that album – not a playlist of insipid AI-generated covers with the same name and art.

Microsoft used to sell you software – sure, it was buggy – but now they just let you access apps in the cloud, so they can watch how you use those apps and strip the features you use the most out of the basic tier and turn them into an upcharge.

What – and I cannot stress this enough – the fuck happened?!

I’m talking about enshittification.

Here’s what enshittification looks like from the outside: First, you see a company that’s being good to its end users. Google puts the best search results at the top; Facebook shows you a feed of posts from people and groups you follow; Uber charges small dollars for a cab; Amazon subsidizes goods and returns and shipping and puts the best match for your product search at the top of the page.

That’s stage one, being good to end users. But there’s another part of this stage, call it stage 1a). That’s figuring out how to lock in those users.

There’s so many ways to lock in users.

If you’re Facebook, the users do it for you. You joined Facebook because there were people there you wanted to hang out with, and other people joined Facebook to hang out with you.

That’s the old “network effects” in action, and with network effects come “the collective action problem." Because you love your friends, but goddamn are they a pain in the ass! You all agree that FB sucks, sure, but can you all agree on when it’s time to leave?

No way.

Can you agree on where to go next?

Hell no.

You’re there because that’s where the support group for your rare disease hangs out, and your bestie is there because that’s where they talk with the people in the country they moved away from, then there’s that friend who coordinates their kid’s little league car pools on FB, and the best dungeon master you know isn’t gonna leave FB because that’s where her customers are.

So you’re stuck, because even though FB use comes at a high cost – your privacy, your dignity and your sanity – that’s still less than the switching cost you’d have to bear if you left: namely, all those friends who have taken you hostage, and whom you are holding hostage.

Now, sometimes companies lock you in with money, like Amazon getting you to prepay for a year’s shipping with Prime, or to buy your Audible books on a monthly subscription, which virtually guarantees that every shopping search will start on Amazon. After all, you’ve already paid for it.

Sometimes, they lock you in with DRM, like HP selling you a printer with four ink cartridges filled with fluid that retails for more than $10,000/gallon, and using DRM to stop you from refilling any of those ink carts or using a third-party cartridge. So when one cart runs dry, you have to refill it or throw away your investment in the remaining three cartridges and the printer itself.

Sometimes, it’s a grab bag:

You can’t run your Ios apps without Apple hardware;

you can’t run your Apple music, books and movies on anything except an Ios app;

your iPhone uses parts pairing – DRM handshakes between replacement parts and the main system – so you can’t use third-party parts to fix it; and

every OEM iPhone part has a microscopic Apple logo engraved on it, so Apple can demand that US Customs and Border Protection seize any shipment of refurb iPhone parts as trademark violations.

Think Different, amirite?

Getting you locked in completes phase one of the enshittification cycle and signals the start of phase two: making things worse for you to make things better for business customers.

For example, a platform might poison its search results, like Google selling more and more of its results pages to ads that are identified with lighter and lighter, tinier and tinier type.

Or Amazon selling off search results and calling it an “ad” business. They make $38b/year on this scam. The first result for your search is, on average, 29% more expensive than the best match for your search. The first row is 25% more expensive than the best match. On average, the best match for your search is likely to be found seventeen places down on the results page.

Other platforms sell off your feed, like Facebook, which started off showing you the things you asked to see, but now the quantum of content from the people you follow has dwindled to a homeopathic residue, leaving a void that Facebook fills with things that people pay to show you: boosted posts from publishers you haven’t subscribed to, and, of course, ads.

Now at this point you might be thinking ‘sure, if you’re not paying for the product, you’re the product.'

Bullshit!

Bull.

Shit.

The people who buy those Google ads? They pay more every year for worse ad-targeting and more ad-fraud.

Those publishers paying to nonconsensually cram their content into your Facebook feed? They have to do that because FB suppresses their ability to reach the people who actually subscribed to them.

The Amazon sellers with the best match for your query have to outbid everyone else just to show up on the first page of results. It costs so much to sell on Amazon that between 45-51% of every dollar an independent seller brings in has to be kicked up to Don Bezos and the Amazon crime family. Those sellers don’t have the kind of margins that let them pay 51%. They have to raise prices in order to avoid losing money on every sale.

"But wait!" I hear you say!

[Come on, say it!]

"But wait! Things on Amazon aren’t more expensive than things at Target, or Walmart, or at a mom and pop store, or direct from the manufacturer.

"How can sellers be raising prices on Amazon if the price at Amazon is the same as it is everywhere else?"

[Any guesses?!]

That’s right, they charge more everywhere. They have to. Amazon binds its sellers to a policy called “most favored nation status,” which says they can’t charge more on Amazon than they charge elsewhere, including direct from their own factory store.

So every seller that wants to sell on Amazon has to raise their prices everywhere else.
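To put rough numbers on that squeeze, here is a minimal sketch. The 45-51% platform share is the figure quoted above; the $10 unit cost and the function name are made up for illustration, and the model ignores everything except the platform's cut.

```python
def break_even_price(unit_cost: float, platform_share: float) -> float:
    """Lowest sticker price at which the seller stops losing money.

    The seller keeps (1 - platform_share) of each sale, so the price must
    satisfy price * (1 - platform_share) >= unit_cost.
    """
    if not 0 <= platform_share < 1:
        raise ValueError("platform_share must be in [0, 1)")
    return unit_cost / (1 - platform_share)


# Hypothetical product that costs the seller $10 to make and ship.
UNIT_COST = 10.00

for share in (0.45, 0.51):
    price = break_even_price(UNIT_COST, share)
    print(f"platform takes {share:.0%}: break-even price ${price:.2f}")
```

Under these assumptions the seller needs to charge roughly $18 to $20 just to recover a $10 cost, and a most-favored-nation clause means that price has to apply everywhere the product is sold.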

Now, these sellers are Amazon’s best customers. They’re paying for the product, and they’re still getting screwed.

Paying for the product doesn’t fill your vapid boss’s shriveled heart with so much joy that he decides to stop trying to think of ways to fuck you over.

Look at Apple. Remember when Apple offered every Ios user a one-click opt out for app-based surveillance? And 96% of users clicked that box?

(The other four percent were either drunk or Facebook employees or drunk Facebook employees.)

That cost Facebook at least ten billion dollars per year in lost surveillance revenue?

I mean, you love to see it.

But did you know that at the same time Apple started spying on Ios users in the same way that Facebook had been, for surveillance data to use to target users for its competing advertising product?

Your Iphone isn’t an ad-supported gimme. You paid a thousand fucking dollars for that distraction rectangle in your pocket, and you’re still the product. What’s more, Apple has rigged Ios so that you can’t mod the OS to block its spying.

If you’re not paying for the product, you’re the product, and if you are paying for the product, you’re still the product.

Just ask the farmers who are expected to swap parts into their own busted half-million dollar, mission-critical tractors, but can’t actually use those parts until a technician charges them $200 to drive out to the farm and type a parts pairing unlock code into their console.

John Deere’s not giving away tractors. Give John Deere a half mil for a tractor and you will be the product.

Please, my brothers and sisters in Christ. Please! Stop saying ‘if you’re not paying for the product, you’re the product.’

OK, OK, so that’s phase two of enshittification.

Phase one: be good to users while locking them in.

Phase two: screw the users a little so you can be good to business customers while locking them in.

Phase three: screw everybody and take all the value for yourself. Leave behind the absolute bare minimum of utility so that everyone stays locked into your pile of shit.

Enshittification: a tragedy in three acts.

That’s what enshittification looks like from the outside, but what’s going on inside the company? What is the pathological mechanism? What sci-fi entropy ray converts the excellent and useful service into a pile of shit?

That mechanism is called twiddling. Twiddling is when someone alters the back end of a service to change how its business operates, changing prices, costs, search ranking, recommendation criteria and other foundational aspects of the system.

Digital platforms are a twiddler’s utopia. A grocer would need an army of teenagers with pricing guns on rollerblades to reprice everything in the building when someone arrives who’s extra hungry.

Whereas the McDonald’s Investments portfolio company Plexure advertises that it can use surveillance data to predict when an app user has just gotten paid so the seller can tack an extra couple bucks onto the price of their breakfast sandwich.

And of course, as the prophet William Gibson warned us, ‘cyberspace is everting.' With digital shelf tags, grocers can change prices whenever they feel like, like the grocers in Norway, whose e-ink shelf tags change the prices 2,000 times per day.

Every Uber driver is offered a different wage for every job. If a driver has been picky lately, the job pays more. But if the driver has been desperate enough to grab every ride the app offers, the pay goes down, and down, and down.

The law professor Veena Dubal calls this ‘algorithmic wage discrimination.' It’s a prime example of twiddling.

Every youtuber knows what it’s like to be twiddled. You work for weeks or months, spend thousands of dollars to make a video, then the algorithm decides that no one – not your own subscribers, not searchers who type in the exact name of your video – will see it.

Why? Who knows? The algorithm’s rules are not public.

Because content moderation is the last redoubt of security through obscurity: they can’t tell you what the content moderation algorithm is downranking because then you’d cheat.

Youtube is the kind of shitty boss who docks every paycheck for all the rules you’ve broken, but won’t tell you what those rules were, lest you figure out how to break those rules next time without your boss catching you.

Twiddling can also work in some users’ favor, of course. Sometimes platforms twiddle to make things better for end users or business customers.

For example, Emily Baker-White from Forbes revealed the existence of a back-end feature that Tiktok’s management can access, which they call the “heating tool.”

When a manager applies the heating tool to a performer’s account, that performer’s videos are thrust into the feeds of millions of users, without regard to whether the recommendation algorithm predicts they will enjoy that video.

Why would they do this? Well, here’s an analogy from my boyhood. I used to go to this traveling fair that would come to Toronto at the end of every summer, the Canadian National Exhibition. If you’ve been to a fair like the Ex, you know that you can always spot some guy lugging around a comedically huge teddy bear.

Nominally, you win that teddy bear by throwing five balls in a peach-basket, but to a first approximation, no one has ever gotten five balls to stay in that peach-basket.

That guy “won” the teddy bear when a carny on the midway singled him out and said, "fella, I like your face. Tell you what I’m gonna do: You get just one ball in the basket and I’ll give you this keychain, and if you amass two keychains, I’ll let you trade them in for one of these galactic-scale teddy-bears."

That’s how the guy got his teddy bear, which he now has to drag up and down the midway for the rest of the day.

Why the hell did that carny give away the teddy bear? Because it turns the guy into a walking billboard for the midway games. If that dopey-looking Judas Goat can get five balls into a peach basket, then so can you.

Except you can’t.

Tiktok’s heating tool is a way to give away tactical giant teddy bears. When someone in the TikTok brain trust decides they need more sports bros on the platform, they pick one bro out at random and make him king for the day, heating the shit out of his account.

That guy gets a bazillion views and he starts running around on all the sports bro forums trumpeting his success: "I am the Louis Pasteur of sports bro influencers!"

The other sports bros pile in and start retooling to make content that conforms to the idiosyncratic Tiktok format. When they fail to get giant teddy bears of their own, they assume that it’s because they’re doing Tiktok wrong, because they don’t know about the heating tool.

But then comes the day when the TikTok Star Chamber decides they need to lure in more astrologers, so they take the heat off that one lucky sports bro, and start heating up some lucky astrologer.

Giant teddy bears are all over the place: those Uber drivers who were boasting to the NYT ten years ago about earning $50/hour? The Substackers who were rolling in dough? Joe Rogan and his hundred million dollar Spotify payout? Those people are all the proud owners of giant teddy bears, and they’re a steal.

Because every dollar they get from the platform turns into five dollars worth of free labor from suckers who think they’re just internetting wrong.

Giant teddy bears are just one way of twiddling. Platforms can play games with every part of their business logic, in highly automated ways that allow them to quickly and efficiently siphon value from end users to business customers and back again, hiding the pea in a shell game conducted at machine speeds, until they’ve got everyone so turned around that they take all the value for themselves.

That’s the how: How the platforms do the trick where they are good to users, then lock users in, then maltreat users to be good to business customers, then lock in those business customers, then take all the value for themselves.

So now we know what is happening, and how it is happening. All that’s left is why it’s happening.

Now, on the one hand, the why is pretty obvious. The less value that end-users and business customers capture, the more value there is left to divide up among the shareholders and the executives.

That’s why, but it doesn’t tell you why now. Companies could have done this shit at any time in the past 20 years, but they didn’t. Or at least, the successful ones didn’t. The ones that turned themselves into piles of shit got treated like piles of shit. We avoided them and they died.

Remember Myspace? Yahoo Search? Livejournal? Sure, they’re still serving some kind of AI slop or programmatic ad junk if you hit those domains, but they’re gone.

And there’s the clue: It used to be that if you enshittified your product, bad things happened to your company. Now, there are no consequences for enshittification, so everyone’s doing it.

Let’s break that down: What stops a company from enshittifying?

There are four forces that discipline tech companies. The first one is, obviously, competition.

If your customers find it easy to leave, then you have to worry about them leaving.

Many factors can contribute to how hard or easy it is to depart a platform, like the network effects that Facebook has going for it. But the most important factor is whether there is anywhere to go.

Back in 2012, Facebook bought Insta for a billion dollars. That may seem like chump-change in these days of eleven-digit Big Tech acquisitions, but that was a big sum in those innocent days, and it was an especially big sum to pay for Insta. The company only had 13 employees, and a mere 25 million registered users.

But what mattered to Zuckerberg wasn’t how many users Insta had, it was where those users came from.

[Does anyone know where those Insta users came from?]

That’s right, they left Facebook and joined Insta. They were sick of FB, even though they liked the people there, they hated creepy Zuck, they hated the platform, so they left and they didn’t come back.

So Zuck spent a cool billion to recapture them, a fact he put in writing in a midnight email to CFO David Ebersman, explaining that he was paying over the odds for Insta because his users hated him, and loved Insta. So even if they quit Facebook (the platform), they would still be captured by Facebook (the company).

Now, on paper, Zuck’s Instagram acquisition is illegal, but normally, that would be hard to stop, because you’d have to prove that he bought Insta with the intention of curtailing competition.

But in this case, Zuck tripped over his own dick: he put it in writing.

But Obama’s DoJ and FTC just let that one slide, following the pro-monopoly policies of Reagan, Bush I, Clinton and Bush II, and setting an example that Trump would follow, greenlighting gigamergers like the catastrophic, incestuous Warner-Discovery marriage.

Indeed, for 40 years, starting with Carter, and accelerating through Reagan, the US has encouraged monopoly formation, as an official policy, on the grounds that monopolies are “efficient.”

If everyone is using Google Search, that’s something we should celebrate. It means they’ve got the very best search and wouldn’t it be perverse to spend public funds to punish them for making the best product?

But as we all know, Google didn’t maintain search dominance by being best. They did it by paying bribes. More than 20 billion per year to Apple alone to be the default Ios search, plus billions more to Samsung, Mozilla, and anyone else making a product or service with a search-box on it, ensuring that you never stumble on a search engine that’s better than theirs.

Which, in turn, ensured that no one smart invested big in rival search engines, even if they were visibly, obviously superior. Why bother making something better if Google’s buying up all the market oxygen before it can kindle your product to life?

Facebook, Google, Microsoft, Amazon – they’re not “making things” companies, they’re “buying things” companies, taking advantage of official tolerance for anticompetitive acquisitions, predatory pricing, market distorting exclusivity deals and other acts specifically prohibited by existing antitrust law.

Their goal is to become too big to fail, because that makes them too big to jail, and that means they can be too big to care.

Which is why Google Search is a pile of shit and everything on Amazon is dropshipped garbage that instantly disintegrates in a cloud of offgassed volatile organic compounds when you open the box.

Once companies no longer fear losing your business to a competitor, it’s much easier for them to treat you badly, because what’re you gonna do?

Remember Lily Tomlin as Ernestine the AT&T operator in those old SNL sketches? “We don’t care. We don’t have to. We’re the phone company.”

Competition is the first force that serves to discipline companies and the enshittificatory impulses of their leadership, and we just stopped enforcing competition law.

It takes a special kind of smooth-brained asshole – that is, an establishment economist – to insist that the collapse of every industry from eyeglasses to vitamin C into a cartel of five or fewer companies has nothing to do with policies that officially encouraged monopolization.

It’s like we used to put down rat poison and we didn’t have a rat problem. Then these dickheads convinced us that rats were good for us and we stopped putting down rat poison, and now rats are gnawing our faces off and they’re all running around saying, "Who’s to say where all these rats came from? Maybe it was that we stopped putting down poison, but maybe it’s just the Time of the Rats. The Great Forces of History bearing down on this moment to multiply rats beyond all measure!"

Antitrust didn’t slip down that staircase and fall spine-first on that stiletto: they stabbed it in the back and then they pushed it.

And when they killed antitrust, they also killed regulation, the second force that disciplines companies. Regulation is possible, but only when the regulator is more powerful than the regulated entities. When a company is bigger than the government, it gets damned hard to credibly threaten to punish that company, no matter what its sins.

That’s what protected IBM for all those years when it had its boot on the throat of the American tech sector. Do you know, the DOJ fought to break up IBM in the courts from 1970-1982, and that every year, for 12 consecutive years, IBM spent more on lawyers to fight the USG than the DOJ Antitrust Division spent on all the lawyers fighting every antitrust case in the entire USA?

IBM outspent Uncle Sam for 12 years. People called it “Antitrust’s Vietnam.” All that money paid off, because by 1982, the president was Ronald Reagan, a man whose official policy was that monopolies were “efficient." So he dropped the case, and Big Blue wriggled off the hook.

It’s hard to regulate a monopolist, and it’s hard to regulate a cartel. When a sector is composed of hundreds of competing companies, they compete. They genuinely fight with one another, trying to poach each others’ customers and workers. They are at each others’ throats.

It’s hard enough for a couple hundred executives to agree on anything. But when they’re legitimately competing with one another, really obsessing about how to eat each others’ lunches, they can’t agree on anything.

The instant one of them goes to their regulator with some bullshit story, about how it’s impossible to have a decent search engine without fine-grained commercial surveillance; or how it’s impossible to have a secure and easy to use mobile device without a total veto over which software can run on it; or how it’s impossible to administer an ISP’s network unless you can slow down connections to servers whose owners aren’t paying bribes for “premium carriage”; there’s some other company saying, “That’s bullshit.”

“We’ve managed it! Here’s our server logs, our quarterly financials and our customer testimonials to prove it.”

100 companies are a rabble, they're a mob. They can’t agree on a lobbying position. They’re too busy eating each others’ lunch to agree on how to cater a meeting to discuss it.

But let those hundred companies merge to monopoly, absorb one another in an incestuous orgy, turn into five giant companies, so inbred they’ve got a corporate Habsburg jaw, and they become a cartel.

It’s easy for a cartel to agree on what bullshit they’re all going to feed their regulator, and to mobilize some of the excess billions they’ve reaped through consolidation, which freed them from “wasteful competition,” so they can capture their regulators completely.

You know, Congress used to pass federal consumer privacy laws? Not anymore.

The last time Congress managed to pass a federal consumer privacy law was in 1988: The Video Privacy Protection Act. That’s a law that bans video-store clerks from telling newspapers what VHS cassettes you take home. In other words, it regulates three things that have effectively ceased to exist.

The threat of having your video rental history out there in the public eye was not the last or most urgent threat the American public faced, and yet, Congress is deadlocked on passing a privacy law.

Tech companies’ regulatory capture involves a risible and transparent gambit, that is so stupid, it’s an insult to all the good hardworking risible transparent ruses out there.

Namely, they claim that when they violate your consumer, privacy or labor rights, it’s not a crime, because they do it with an app.

Algorithmic wage discrimination isn’t illegal wage theft: we do it with an app.

Spying on you from asshole to appetite isn’t a privacy violation: we do it with an app.

And Amazon’s scam search tool that tricks you into paying 29% more than the best match for your query? Not a ripoff. We do it with an app.

Once we killed competition – stopped putting down rat poison – we got cartels – the rats ate our faces. And the cartels captured their regulators – the rats bought out the poison factory and shut it down.

So companies aren’t constrained by competition or regulation.

But you know what? This is tech, and tech is different. It’s different because it’s flexible. Because our computers are Turing-complete universal von Neumann machines. That means that any enshittificatory alteration to a program can be disenshittified with another program.

Every time HP jacks up the price of ink, they invite a competitor to market a refill kit or a compatible cartridge.

When Tesla installs code that says you have to pay an extra monthly fee to use your whole battery, they invite a modder to start selling a kit to jailbreak that battery and charge it all the way up.

Lemme take you through a little example of how that works: Imagine this is a product design meeting for our company’s website, and the guy leading the meeting says “Dudes, you know how our KPI is topline ad-revenue? Well, I’ve calculated that if we make the ads just 20% more invasive and obnoxious, we’ll boost ad rev by 2%”

This is a good pitch. Hit that KPI and everyone gets a fat bonus. We can all take our families on a luxury ski vacation in Switzerland.

But here’s the thing: someone’s gonna stick their arm up – someone who doesn’t give a shit about user well-being, and that person is gonna say, “I love how you think, Elon. But has it occurred to you that if we make the ads 20% more obnoxious, then 40% of our users will go to a search engine and type 'How do I block ads?'"

I mean, what a nightmare! Because once a user does that, the revenue from that user doesn’t rise to 102%. It doesn’t stay at 100%. It falls to zero, forever.

[Any guesses why?]

Because no user ever went back to the search engine and typed, 'How do I start seeing ads again?'

Once the user jailbreaks their phone or discovers third party ink, or develops a relationship with an independent Tesla mechanic who’ll unlock all the DLC in their car, that user is gone, forever.

Interoperability – that latent property bequeathed to us courtesy of Herrs Turing and Von Neumann and their infinitely flexible, universal machines – that is a serious check on enshittification.

The fact that Congress hasn’t passed a privacy law since 1988 is countered, at least in part, by the fact that the majority of web users are now running ad-blockers, which are also tracker-blockers.

But no one’s ever installed a tracker-blocker for an app. Because reverse engineering an app puts you in jeopardy of criminal and civil prosecution under Section 1201 of the Digital Millennium Copyright Act, with penalties of a 5-year prison sentence and a $500k fine for a first offense.

And violating its terms of service puts you in jeopardy under the Computer Fraud and Abuse Act of 1986, which is the law that Ronald Reagan signed in a panic after watching Wargames (seriously!).

Helping other users violate the terms of service can get you hit with a lawsuit for tortious interference with contract. And then there’s trademark, copyright and patent.

All that nonsense we call “IP,” but which Jay Freeman of Cydia calls “Felony Contempt of Business Model."

So if we’re still at that product planning meeting and now it’s time to talk about our app, the guy leading the meeting says, “OK, so we’ll make the ads in the app 20% more obnoxious to pull a 2% increase in topline ad rev?”

And that person who objected to making the website 20% worse? Their hand goes back up. Only this time they say “Why don’t we make the ads 100% more invasive and get a 10% increase in ad rev?"

Because it doesn't matter if a user goes to a search engine and types, “How do I block ads in an app." The answer is: you can't. So YOLO, enshittify away.

“IP” is just a euphemism for “any law that lets me reach outside my company’s walls to exert coercive control over my critics, competitors and customers,” and “app” is just a euphemism for “A web page skinned with the right IP so that protecting your privacy while you use it is a felony.”

Interop used to keep companies from enshittifying. If a company made its client suck, someone would roll out an alternative client, if they ripped a feature out and wanted to sell it back to you as a monthly subscription, someone would make a compatible plugin that restored it for a one-time fee, or for free.

To help people flee Myspace, FB gave them bots that you’d load with your login credentials. It would scrape your waiting Myspace messages and put ‘em in your FB inbox, and login to Myspace and paste your replies into your Myspace outbox. So you didn’t have to choose between the people you loved on Myspace, and Facebook, which launched with a promise never to spy on you. Remember that?!

Thanks to the metastasis of IP, all that is off the table today. Apple owes its very existence to iWork Suite, whose Pages, Numbers and Keynote are file-compatible with Microsoft’s Word, Excel and Powerpoint. But make an iOS runtime that’ll play back the files you bought from Apple’s stores on other platforms, and they’ll nuke you til you glow.

FB wouldn’t have had a hope of breaking Myspace’s grip on social media without that scrape, but scrape FB today in support of an alternative client and their lawyers will bomb you til the rubble bounces.

Google scraped every website in the world to create its search index. Try and scrape Google and they’ll have your head on a pike.

When they did it, it was progress. When you do it to them, that’s piracy. Every pirate wants to be an admiral.

Because this handful of companies has so thoroughly captured their regulators, they can wield the power of the state against you when you try to break their grip on power, even as their own flagrant violations of our rights go unpunished. Because they do them with an app.

Tech lost its fear of competition, it neutralized the threat from regulators, and then put them in harness to attack new startups that might do unto them as they did unto the companies that came before them.

But even so, there was a force that kept our bosses in check. That force was us. Tech workers.

Tech workers have historically been in short supply, which gave us power, and our bosses knew it.

To get us to work crazy hours, they came up with a trick. They appealed to our love of technology, and told us that we were heroes of a digital revolution, who would “organize the world’s information and make it useful,” who would “bring the world closer together.”

They brought in expert set-dressers to turn our workplaces into whimsical campuses with free laundry, gourmet cafeterias, massages, and kombucha, and a surgeon on hand to freeze our eggs so that we could work through our fertile years.

They convinced us that we were being pampered, rather than being worked like government mules.

This trick has a name. Fobazi Ettarh, the librarian-theorist, calls it “vocational awe,” and Elon Musk calls it being “extremely hardcore.”

This worked very well. Boy did we put in some long-ass hours!

But for our bosses, this trick failed badly. Because if you miss your mother’s funeral to hit a deadline, and then your boss orders you to enshittify that product, you are gonna experience a profound moral injury, which you are absolutely gonna make your boss share.

Because what are they gonna do? Fire you? They can’t hire someone else to do your job, and you can get a job that’s even better at the shop across the street.

So workers held the line when competition, regulation and interop failed.

But eventually, supply caught up with demand. Tech laid off 260,000 of us last year, and another 100,000 in the first half of this year.

You can’t tell your bosses to go fuck themselves, because they’ll fire your ass and give your job to someone who’ll be only too happy to enshittify that product you built.

That’s why this is all happening right now. Our bosses aren’t different. They didn’t catch a mind-virus that turned them into greedy assholes who don’t care about our users’ wellbeing or the quality of our products.

As far as our bosses have always been concerned, the point of the business was to charge the most, and deliver the least, while sharing as little as possible with suppliers, workers, users and customers. They’re not running charities.

Since day one, our bosses have shown up for work and yanked as hard as they can on the big ENSHITTIFICATION lever behind their desks, only that lever didn’t move much. It was all gummed up by competition, regulation, interop and workers.

As those sources of friction melted away, the enshittification lever started moving very freely.

Which sucks, I know. But think about this for a sec: our bosses, despite being wildly imperfect vessels capable of rationalizing endless greed and cheating, nevertheless oversaw a series of actually great products and services.

Not because they used to be better people, but because they used to be subjected to discipline.

So it follows that if we want to end the enshittocene, dismantle the enshitternet, and build a new, good internet that our bosses can’t wreck, we need to make sure that these constraints are durably installed on that internet, wound around its very roots and nerves. And we have to stand guard over it so that it can’t be dismantled again.

A new, good internet is one that has the positive aspects of the old, good internet: an ethic of technological self-determination, where users of technology (and hackers, tinkerers, startups and others serving as their proxies) can reconfigure and mod the technology they use, so that it does what they need it to do, and so that it can’t be used against them.

But the new, good internet will fix the defects of the old, good internet, the part that made it hard to use for anyone who wasn’t us. And hell yeah we can do that. Tech bosses swear that it’s impossible, that you can’t have a conversation with a friend without sharing it with Zuck; or search the web without letting Google scrape you down to the viscera; or have a phone that works reliably without giving Apple a veto over the software you install.

They claim that it’s a nonsense to even ponder this kind of thing. It’s like making water that’s not wet. But that’s bullshit. We can have nice things. We can build for the people we love, and give them a place that’s worthy of their time and attention.

To do that, we have to install constraints.

The first constraint, remember, is competition. We’re living through an epochal shift in competition policy. After 40 years with antitrust enforcement in an induced coma, a wave of antitrust vigor has swept through governments all over the world. Regulators are stepping in to ban monopolistic practices, open up walled gardens, block anticompetitive mergers, and even unwind corrupt mergers that were undertaken on false pretenses.

Normally this is the place in the speech where I’d list out all the amazing things that have happened over the past four years. The enforcement actions that blocked companies from becoming too big to care, and that scared companies away from even trying.

Like Wiz, which just noped out of the largest acquisition offer in history, turning down Google’s $23b cashout, and deciding to, you know, just be a fucking business that makes money by producing a product that people want and selling it at a competitive price.

Normally, I’d be listing out FTC rulemakings that banned noncompetes nationwide. Or the new merger guidelines the FTC and DOJ cooked up, which – among other things – establish that the agencies should be considering whether a merger will negatively impact privacy.

I had a whole section of this stuff in my notes, a real victory lap, but I deleted it all this week.

[Can anyone guess why?]

That’s right! This week, Judge Amit Mehta, ruling for the DC Circuit of these United States of America, in the docket 20-3010, a case known as United States v. Google LLC, found that “Google is a monopolist, and it has acted as one to maintain its monopoly,” and ordered Google and the DOJ to propose a schedule for a remedy, like breaking the company up.

So yeah, that was pretty fucking epic.

Now, this antitrust stuff is pretty esoteric, and I won’t gatekeep you or shame you if you wanna keep a little distance on this subject. Nearly everyone is an antitrust normie, and that's OK. But if you’re a normie, you’re probably only catching little bits and pieces of the narrative, and let me tell you, the monopolists know it and they are flooding the zone.

The Wall Street Journal has published over 100 editorials condemning FTC Chair Lina Khan, saying she’s an ineffectual do-nothing, wasting public funds chasing doomed, quixotic adventures against poor, innocent businesses, accomplishing nothing.

[Does anyone out there know who owns the Wall Street Journal?]

That’s right, it’s Rupert Murdoch. Do you really think Rupert Murdoch pays his editorial board to write one hundred editorials about someone who’s not getting anything done?

The reality is that in the USA, in the UK, in the EU, in Australia, in Canada, in Japan, in South Korea, even in China, we are seeing more antitrust action over the past four years than over the preceding forty years.

Remember, competition law is actually pretty robust. The problem isn’t the law, it’s the enforcement priorities. Reagan put antitrust in mothballs 40 years ago, but that elegant weapon from a more civilized age is now back in the hands of people who know how to use it, and they’re swinging for the fences.

Next up: regulation.

As the seemingly inescapable power of the tech giants is revealed for the sham it always was, governments and regulators are finally gonna kill the “one weird trick” of violating the law, and saying “It doesn’t count, we did it with an app.”

Like in the EU, they’re rolling out the Digital Markets Act this year. That’s a law requiring dominant platforms to stand up APIs so that third parties can offer interoperable services.

So a co-op, a nonprofit, a hobbyist, a startup, or a local government agency will eventually be able to offer, say, a social media server that can interconnect with one of the dominant social media silos, and users who switch to that new platform will be able to continue to exchange messages with the users they follow and groups they belong to, so the switching costs will fall to damned near zero.

That’s a very cool rule, but what’s even cooler is how it’s gonna be enforced. Previous EU tech rules were “regulations” as in the GDPR – the General Data Protection Regulation. EU regs need to be “transposed” into laws in each of the 27 EU member states, so they become national laws that get enforced by national courts.

For Big Tech, that means all previous tech regulations are enforced in Ireland, because Ireland is a tax haven, and all the tech companies fly Irish flags of convenience.

Here’s the thing: every tax haven is also a crime haven. After all, if Google can pretend it’s Irish this week, it can pretend to be Cypriot, or Maltese, or Luxembourgeois next week. So Ireland has to keep these footloose criminal enterprises happy, or they’ll up sticks and go somewhere else.

This is why the GDPR is such a goddamned joke in practice. Big tech wipes its ass with the GDPR, and the only way to punish them starts with Ireland’s privacy commissioner, who barely bothers to get out of bed. This is an agency that spends most of its time watching cartoons on TV in its pajamas and eating breakfast cereal. So all of the big GDPR cases go to Ireland and they die there.

This is hardly a secret. The European Commission knows it’s going on. So with the DMA, the Commission has changed things up: The DMA is an “Act,” not a “Regulation.” Meaning it gets enforced in the EU’s federal courts, bypassing the national courts in crime-havens like Ireland.

In other words, the “we violate privacy law, but we do it with an app” gambit that worked on Ireland’s toothless privacy watchdog is now a dead letter, because EU federal judges have no reason to swallow that obvious bullshit.

Here in the US, the dam is breaking on federal consumer privacy law – at last!

Remember, our last privacy law was passed in 1988 to protect the sanctity of VHS rental history. It's been a minute.

And the thing is, there's a lot of people who are angry about stuff that has some nexus with America's piss-poor privacy landscape. Worried that Facebook turned grampy into a Qanon? That Insta made your teen anorexic? That TikTok is brainwashing millennials into quoting Osama Bin Laden? Or that cops are rolling up the identities of everyone at a Black Lives Matter protest or the Jan 6 riots by getting location data from Google? Or that Red State Attorneys General are tracking teen girls to out-of-state abortion clinics? Or that Black people are being discriminated against by online lending or hiring platforms? Or that someone is making AI deepfake porn of you?

A federal privacy law with a private right of action – which means that individuals can sue companies that violate their privacy – would go a long way to rectifying all of these problems.

There's a pretty big coalition for that kind of privacy law! Which is why we have seen a procession of imperfect (but steadily improving) privacy laws working their way through Congress.

If you sign up for EFF’s mailing list at eff.org we’ll send you an email when these come up, so you can call your Congressjerk or Senator and talk to them about it. Or better yet, make an appointment to drop by their offices when they’re in their districts, and explain to them that you’re not just a registered voter from their district, you’re the kind of elite tech person who goes to Defcon, and then explain the bill to them. That stuff makes a difference.

What about self-help? How are we doing on making interoperability legal again, so hackers can just fix shit without waiting for Congress or a federal agency to act?

All the action here these days is in the state Right to Repair fight. We’re getting state R2R bills, like the one that passed this year in Oregon that bans parts pairing, where DRM is used to keep a device from using a new part until it gets an authorized technician’s unlock code.

These bills are pushed by a fantastic group of organizations called the Repair Coalition, at Repair.org, and they’ll email you when one of these laws is going through your statehouse, so you can meet with your state reps and explain to the JV squad the same thing you told your federal reps.

Repair.org’s prime mover is iFixit, who are genuine heroes of the repair revolution, and iFixit’s founder, Kyle Wiens, is here at the con. When you see him, you can shake his hand and tell him thanks, and that’ll be even better if you tell him that you’ve signed up to get alerts at repair.org!

Now, on to the final way that we reverse enshittification and build that new, good internet: you, the tech labor force.

For years, your bosses tricked you into thinking you were founders in waiting, temporarily embarrassed entrepreneurs who were only momentarily drawing a salary.

You certainly weren’t workers. Your power came from your intrinsic virtue, not like those lazy slobs in unions who have to get their power through that kumbaya solidarity nonsense.

It was a trick. You were scammed. The power you had came from scarcity, and so when the scarcity ended, when the industry started ringing up six-figure annual layoffs, your power went away with it.

The only durable source of power for tech workers is as workers, in a union.

Think about Amazon. Warehouse workers have to piss in bottles and have the highest rate of on-the-job maimings of any competing business. Whereas Amazon coders get to show up for work with facial piercings, green mohawks, and black t-shirts that say things their bosses don’t understand. They can piss whenever they want!

That’s not because Jeff Bezos or Andy Jassy loves you guys. It’s because they’re scared you’ll quit and they don’t know how to replace you.

Time for the second obligatory William Gibson quote: “The future is here, it’s just not evenly distributed.” You know who’s living in the future? Those Amazon blue-collar workers. They are the bleeding edge.

Drivers whose eyeballs are monitored by AI cameras that do digital phrenology on their faces to figure out whether to dock their pay, warehouse workers whose bodies are ruined in just months.

As tech bosses beef up that reserve army of unemployed, skilled tech workers, then those tech workers – you all – will arrive at the same future as them.

Look, I know that you’ve spent your careers explaining in words so small your boss could understand them that you refuse to enshittify the company’s products, and I thank you for your service.

But if you want to go on fighting for the user, you need power that’s more durable than scarcity. You need a union. Wanna learn how? Check out the Tech Workers Coalition and Tech Solidarity, and get organized.

Enshittification didn’t arise because our bosses changed. They were always that guy.

They were always yankin’ on that enshittification lever in the C-suite.

What changed was the environment, everything that kept that switch from moving.

And that’s good news, in a bankshot way, because it means we can make good services out of imperfect people. As a wildly imperfect person myself, I find this heartening.

The new good internet is in our grasp: an internet that has the technological self-determination of the old, good internet, and the greased-skids simplicity of Web 2.0 that let all our normie friends get in on the fun.

Tech bosses want you to think that good UX and enshittification can’t ever be separated. That’s such a self-serving proposition you can spot it from orbit. We know it, 'cause we built the old good internet, and we’ve been fighting a rear-guard action to preserve it for the past two decades.

It’s time to stop playing defense. It's time to go on the offensive. To restore competition, regulation, interop and tech worker power so that we can create the new, good internet we’ll need to fight fascism, the climate emergency, and genocide.

To build a digital nervous system for a 21st century in which our children can thrive and prosper.

Community voting for SXSW is live! If you wanna hear RIDA QADRI and me talk about how GIG WORKERS can DISENSHITTIFY their jobs with INTEROPERABILITY, VOTE FOR THIS ONE!

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/08/17/hack-the-planet/#how-about-a-nice-game-of-chess

Image: https://twitter.com/igama/status/1822347578094043435/ (cropped)

@[email protected] (cropped)

https://mamot.fr/@[email protected]/112963252835869648

CC BY 4.0 https://creativecommons.org/licenses/by/4.0/deed.pt

#pluralistic#defcon#defcon 32#hackers#enshittification#speeches#transcripts#disenshittify or die#Youtube

905 notes

Note

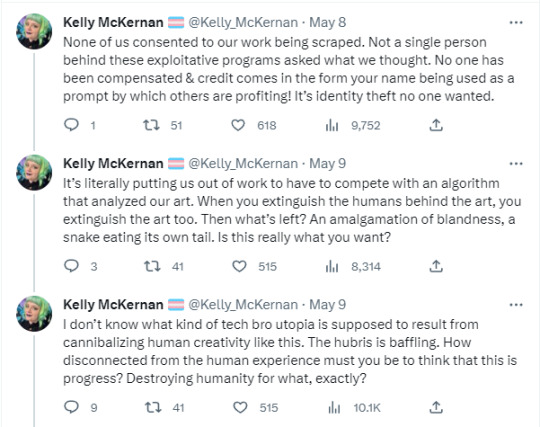

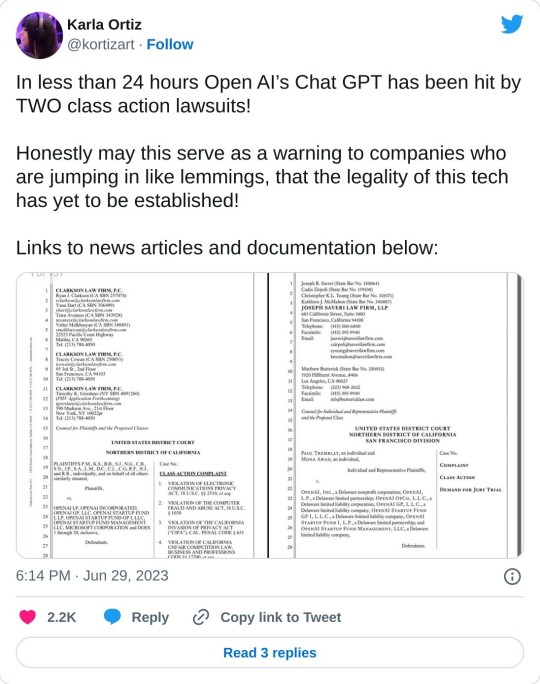

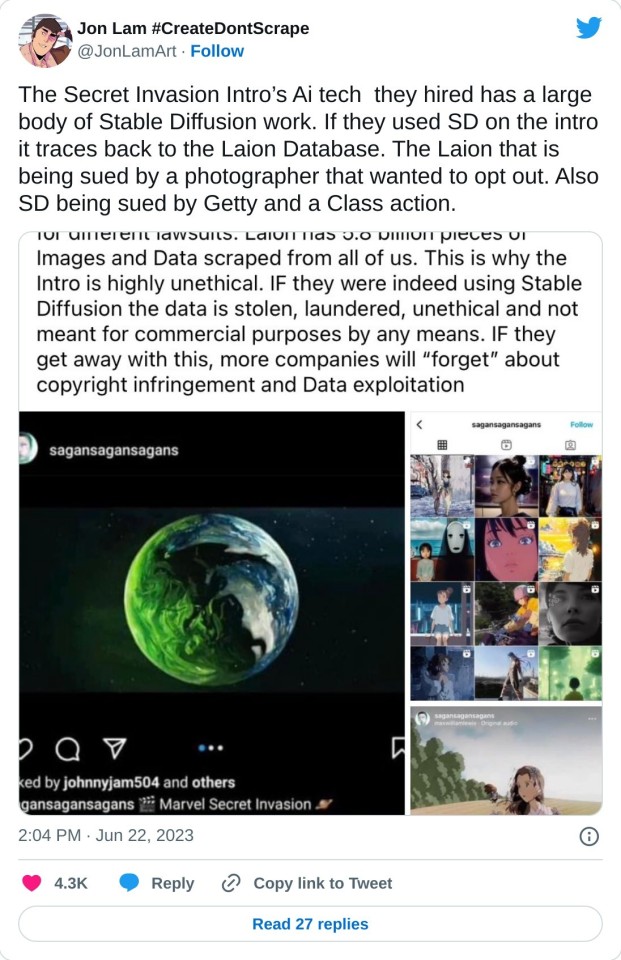

Can you please just tell us what is wrong with ai and why, I can't find anything from actual industry artists etc. online through Google just tech bro type articles. All the tech articles are saying it's a good thing, and every pro I follow refuses to explain how or why it's bad. How am I supposed to know something if nobody will teach me and I can't find it myself?

I'll start by saying that the reason pro artists are refusing to answer questions about this is because they are tired. Like, I dont know if anyone actually understands just how exhausting it is to have to justify over and over again why the tech companies that are stealing your work and actively seeking to destroy your craft are 'bad, actually'.

I originally wrote a very longform reply to this ask, but in classic tumblr style the whole thing got eaten, so. I do not have the spoons to rewrite all that shit. Here are some of the sources I linked, I particularly recommend stable diffusion litigation for a thorough breakdown of exactly how generative tools work and why that is theft.

youtube

or this video if you are feeling lazy and only want the art-side opening statements:

Every time you feed someone's work- their art, their writing, their likeness- into Midjourney or Dall-E or ChatGPT you are feeding this monster.

Go forth and educate yourself.

#ai art#asks answered#qwillechatter#for real though guys please dont interact with me about AI#I intended for this to be the last post I'll ever make about it#but tumblr ate it so you miss out on the nice essay#all the sources tell you everything you need to know#Im so fucking tired

825 notes

Text

Integrate AI into your mobile app to enhance user experience, improve efficiency, and drive business growth. Adding artificial intelligence (AI) to your app can lead to improved user experiences, streamlined operations, and data-driven decision-making.

0 notes

Text

Ensuring Data Integrity Post Cloud Migration: Netezza to GCP – BigQuery

The rising cost of healthcare is a global concern. From expensive treatments to skyrocketing insurance premiums, making quality healthcare accessible remains a challenge. But there's a silver lining emerging: artificial intelligence (AI) in healthcare holds immense potential to transform the healthcare landscape, making it more affordable for everyone.

How Can AI Reduce Medical Costs?

The use of AI in healthcare goes beyond futuristic robots performing surgery.

Here are some ways AI can contribute to cost reduction:

Improved Diagnostics and Early Detection: AI algorithms can analyze medical scans and patient data with remarkable accuracy, leading to earlier diagnoses of diseases. Early detection often translates to less expensive and less invasive treatments, saving money in the long run.

Streamlined Treatment Plans: AI can analyze vast amounts of medical data to identify optimal treatment strategies based on a patient's specific condition and medical history. This personalized approach can prevent unnecessary tests and procedures, reducing overall costs.

Enhanced Operational Efficiency: AI can automate administrative tasks like appointment scheduling, claims processing, and patient record management. This frees up valuable time for medical professionals, allowing them to focus on patient care and improving overall operational efficiency, potentially lowering operational costs.

Reduced Hospital Readmissions: AI can analyze patient data to predict potential complications and recommend preventative measures. This can significantly reduce hospital readmissions, a major contributor to healthcare costs.

The Cost of AI in Healthcare: An Investment, Not an Expense

While implementing AI solutions in healthcare requires an initial investment, it's crucial to view it as an investment with long-term returns. As AI technology matures and becomes more widely adopted, cost-efficiencies will likely outweigh the initial investment. Additionally, the potential savings from early disease detection, personalized treatment plans, and reduced hospital readmissions can be substantial.

Exploring the Future of Affordable Healthcare with AI

AI is still in its early stages of development in healthcare, but the potential for cost reduction is undeniable. As research and innovation continue, we can expect even more advanced AI applications to emerge, transforming healthcare into a more accessible and affordable system for all.

By embracing AI, healthcare providers and institutions can pave the way for a future where quality healthcare is not a luxury, but a basic right available to everyone.

0 notes

Text

been waiting for Matt Levine to write more about AI, and he doesn't disappoint

"Wells Fargo is using large language models to help determine what information clients must report to regulators and how they can improve their business processes. “It takes away some of the repetitive grunt work and at the same time we are faster on compliance,” said Chintan Mehta, the firm’s chief information officer and head of digital technology and innovation. The bank has also built a chatbot-based customer assistant using Google Cloud’s conversational AI platform, Dialogflow."

Do you think that Wells Fargo’s customer chatbot pushes customers to open more accounts to meet its quotas? Do you think that its regulatory-reporting chatbot then reports it to regulators? Soon Wells Fargo may be able to generate and negotiate billion-dollar regulatory settlements without any human involvement at all. ... Isn’t this sort of exciting? The widespread use of relatively early-stage AI will introduce new ways of making mistakes into finance. Right now there are some classic ways of making mistakes in finance, and they periodically lead to consequences ranging from funny embarrassment through multimillion-dollar trading loss up to systemic financial crises. Many of the most classic mistakes have the broad shape of “overly confident generalizing from limited historical data,” though some are, like, hitting the wrong button. But there are only so many ways to go wrong, and they are all sort of intuitive. ... Now some banker is going to type into a chat bot “our client wants to hedge the risk of the Turkish election,” and the chat bot will be like “she should sell some Dogecoin call options and use the proceeds to buy a lot of nickel futures,” and the banker will be like “weird okay whatever.” And that trade will go wrong in surprising ways, the client will sue, the client and the banker and the chat bot will all come to court, the judge will ask the chat bot “well why would this trade hedge anything,” and the chat bot will shrug its little imaginary shoulders and be like “bro why are you asking me I’m a chat bot.” Or it will say “actually the Dogecoin/nickel spread was ex ante an excellent proxy for Turkish political risk because” and then emit a series of ones and zeros and emojis and high-pitched noises that you and I and the judge can’t understand but that make perfect sense to the chat bot. New ways to be wrong! It will make life more exciting for financial columnists, for a bit, before we are all replaced by the chat bots.

🔥

111 notes

Text

RECENT SEO & MARKETING NEWS FOR ECOMMERCE, JULY 2024

If you are new to my Tumblr, I usually do these summaries of SEO and marketing news once a month, picking out the pieces that are most likely to be useful to small and micro-businesses.

You can get notified of these updates plus my website blog posts via email: http://bit.ly/CindyLouWho2Blog or get all of the most timely updates plus exclusive content by supporting my Patreon: patreon.com/CindyLouWho2

TOP NEWS & ARTICLES

There is a relatively new way to file copyright claims against US residents, called The Copyright Claims Board (CCB). I wrote more here [post by me on Patreon]

After a few years of handwringing and false starts, Google is abandoning plans to block third-party cookies in Chrome. Both Safari and Firefox already block them.

When composing titles and text where other keywords are found, it can be useful to have a short checklist of the types of keywords you need, as this screenshot demonstrates. While that title is too long for most platforms and search engines, it covers really critical points that should get mentioned in the product description and keyword fields/tags as well:

The core keywords that describe the item

What the customer is looking to do - solve a problem? Find a gift? Feel better?

What makes the product stand out in its field - why buy this instead of something else? Differentiating your items is something that should come before you get to the listing stage, so the keywords should already be in your head.

Relevant keywords that will be used in long tail searches are always great add-ons.

What if anything about your item is trendy now? E.g., sustainability? Particular colours, styles or materials/ingredients are always important.

SEO: GOOGLE & OTHER SEARCH ENGINES

Google’s June spam update has finished rolling out. And here is the full list of Google news from June.

Expect a new Google core update “in the coming weeks” (as if we needed more Google excitement).

Google’s AI overviews continue to dwindle at the top of search results, now only appearing in 7% of searches.

Despite Google trying to target AI spam, many poorly-copied articles still outrank the originals in Google search results.

Internal links are important for Google SEO. While this article covers blogging in particular, most of the tips apply to any standalone website. Google also recently did a video [YouTube] on the same topic.

Google had a really excellent second quarter, mostly due to the cloud and AI.

Not Google

OpenAI is testing SearchGPT with a small number of subscribers. Alphabet shares dropped 3% after the announcement.

SOCIAL MEDIA - All Aspects, By Site

General

New social media alert: noplace is a new app billed as MySpace for Gen Z that also has some similarities with Twitter (e.g., text-based chats, with no photos or videos at this time). iOS only at the moment; no Android app or web page.

Thinking of trying out Bluesky? Here are some tips to get the most out of it.

Facebook (includes relevant general news from Meta)

Meta’s attempt at circumventing EU privacy regulations through paid subscriptions is illegal under the Digital Markets Act, according to the European Commission. “if the company cannot reach an agreement with regulators before March 2025, the Commission has the power to levy fines of up to 10 percent of the company’s global turnover.”

If you post Reels from a business page, you may be able to let Meta use AI to do A/B testing on the captions and other portions shown. I personally would not do this unless I could see what options they were choosing, since AI is often not as good as it thinks it is.

Apple’s 30% fee on in-app ad purchases for Facebook and Instagram has kicked in worldwide as of July 1.

Facebook is testing ads in the Notifications list on the app.

Meta is encouraging advertisers to connect their Google Analytics accounts to Meta Ads, claiming “integration could improve campaign performance, citing a 22% conversion increase.”

Instagram

The head of Instagram is still emphasizing that the number of DM shares per post is a huge ranking factor.

LinkedIn

Another article on the basics of setting up LinkedIn and getting found through it.

You can now advertise your LinkedIn newsletters on the platform.

Pinterest

Pinterest is slowly testing an AI program that edits the background of product photography without changing the product.

Is Pinterest dying? An investment research firm thinks so.

Reddit

If you want to see results from Reddit in your search engine results, Google is the only place that can happen now.

More than ever, Reddit is being touted as a way to be found (especially in Google search), but you do have to understand how the site works to be successful at it.

Snapchat

Snapchat+ now has 9 million paying users, who are getting quite a few new personalization updates as well as Snaps that last 50 seconds or less.

Threads

Threads has hit 175 million active users each month, up from 130 million in February.

TikTok

TikTok has made it easier to reuse your videos outside of the site without a watermark.

TikTok users can now select a custom thumbnail image for videos, either a frame from the clip itself, or a still image from elsewhere.

Twitter

You can opt out of Twitter using your posts as data for its AI, Grok.

YouTube

YouTube has new tools for Shorts, including one that makes your longer videos into Shorts.

Community Spaces are the latest YouTube test to try to get more fan involvement, while moving users away from video comments.

(CONTENT) MARKETING (includes blogging, emails, and strategies)

Start your content marketing plans for August now, including back-to-school themes and Alfred Hitchcock’s birthday on August 13.

ONLINE ADVERTISING (EXCEPT INDIVIDUAL SOCIAL MEDIA AND ECOMMERCE SITES)

Google Ads now has several new updates, including blocking misspellings.

Google’s new Merchant Center Next will soon be available for all users, if they haven’t already been invited. Supplemental feeds are now (or soon will be) allowed there.

STATS, DATA, TRACKING

Google Search Console users can now add their shipping and return info to Google search through the Console itself. This is useful for sites that do not pay for Google Ads or use Google’s free shopping ads.

BUSINESS & CONSUMER TRENDS, STATS & REPORTS; SOCIOLOGY & PSYCHOLOGY, CUSTOMER SERVICE

The second part of this Whiteboard Friday [video with transcript] discusses how consumer behaviour is changing during tight economic times. “People are still spending. They just want the most for their money. Also, the consideration phase is much more complex and longer.” The remainder of the piece discusses how to approach your target market during these times.

Prime Day was supposedly the best ever for Amazon, but they didn’t release any numbers. Adobe Analytics tracked US ecommerce sales on those days and provides some insight. “Buy-now, pay-later accounted for 7.6% of all orders, a 16.4% year-over-year increase.”

MISCELLANEOUS

You know how I always tell small business owners to have multiple revenue streams? Tech needs to have multiple providers and backups as well, as the recent CrowdStrike and Microsoft issues demonstrate.

If you used Google’s old URL shortener (goo.gl) anywhere, those links will no longer redirect as of August 25, 2025.
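To track down those old short links, one option is to scan an export of your listings, blog posts, or profile text for them. The following is a minimal sketch, not a complete audit tool: the function name `find_goo_gl_links` and the sample text are made up for illustration, and it assumes that links on subdomains such as maps.app.goo.gl are out of scope, so check each hit by hand before replacing it.

```python
import re

# Matches links on the bare goo.gl domain, with or without a scheme.
# The lookbehind rejects matches preceded by a dot or word character,
# so subdomain links (e.g. maps.app.goo.gl) are skipped. Treating those
# as out of scope is an assumption of this sketch.
GOO_GL = re.compile(r"(?<![\w.])(?:https?://)?goo\.gl/[A-Za-z0-9_-]+")


def find_goo_gl_links(text: str) -> list[str]:
    """Return every bare goo.gl short link found in text, in order."""
    return GOO_GL.findall(text)


# Hypothetical sample text standing in for an exported shop description.
sample = (
    "Shop policies: https://goo.gl/abc123 and sizing chart at goo.gl/XyZ789. "
    "Map: https://maps.app.goo.gl/keepme"
)

for link in find_goo_gl_links(sample):
    print(link)
```

Running the same function over each exported file gives you a list of links to swap for the full destination URL or a different shortener.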

14 notes

Text

Unlocking Potential Through Immersive VR-Based Training Solutions

Atcuality is dedicated to unlocking human potential through VR-based training solutions that push the boundaries of traditional learning methods. In industries where hands-on experience is crucial, VR allows trainees to engage in realistic scenarios without leaving the training room. Our VR-based training solutions are designed to provide the kind of experiential learning that helps individuals not just understand but deeply internalize crucial skills. From medical procedures to machinery operation, these immersive training environments offer flexibility and safety, removing the limitations of traditional learning setups. By simulating high-risk or complex tasks, learners can gain valuable experience while mitigating actual risks. With Atcuality’s VR training, companies can ensure their workforce is prepared for real-world challenges, resulting in enhanced safety, improved skill levels, and reduced onboarding and training costs. Our commitment to advancing training standards is evident in our ability to offer highly customizable, impactful VR experiences across various industries.

#digital marketing#seo optimization#seo company#seo changbin#seo services#seo agency#seo marketing#emailmarketing#search engine optimization#google ads#socialmediamarketing#ai services#iot#iotsolutions#iot applications#iot development services#iot platform#digitaltransformation#techinnovation#cloud hosting in saudi arabia#cloud server in saudi arabia#cloud computing#amazon web services#amazon services#mobile app development company#mobile app developers#mobile application development#app developers#app development company#azure cloud services

0 notes

Text

WBSV Sales Gallery and Headquarters

WBSV Sales Gallery + Headquarters is a new concept applied to the previous design, which was intended to be a hotel and mall. The tower has a 3-level podium and 11 storeys of hotel. The podium typically includes the WBSV headquarters, sales gallery, retail spaces, amenities, parking, and other facilities.

• Project: WORLD BRIDGE SPORT VILLAGE

• Facility: WBSV SALES GALLERY and HEADQUARTERS

• Architectural Manager: Sonetra KETH

• Developer: OXLEY-WORLDBRIDGE (CAMBODIA) CO., LTD.

• Subsidiary: WB SPORT VILLAGE CO., LTD.

• Location: PHNOM PENH, CAMBODIA

#Sonetra Keth#Architectural Manager#Architectural Design Manager#BIM Director#BIM Manager#BIM Coordinator#Project Manager#RMIT University Vietnam#Institute of Technology of Cambodia#Real Estate Development#Construction Industry#Building Information Modelling#BIM#AI#Artificial Intelligence#Technology#VDC#Virtual Design#IoT#Machine Learning#Drones and UAVs#C4R#Cloud Computing and Collaboration Platforms#NETRA#netra#នេត្រា#កេត សុនេត្រា

0 notes

Text

🎀(*^-^)／＼(*^-^*)／＼(^-^*)🎀 Kendra (Holstein-Romanov) Hockman

🌟 X Research Scientist @ SpaceX | SpellbookAI | Elon Musk Consulting | HPC-AI | IxDA | CTE Advisor 🌟

📍 Greater Seattle Area | 🌐 Contact Info

📞 P: +1.360.560.6127 | 📧 E: [email protected]

💼 Open to Marketing and Public Relations Specialist and Event Project Manager roles 💼

🌟 About Me 🌟

✨ Business Development Specialist, Agile Project Manager, and Event Producer with +12 years of marketing experience and a passion for providing white glove customer service. ✨

🌟 Skills 🌟

Public Speaking 🎤

B2B + B2C Sales 💼

Stakeholder Relations 🤝

Account Management 📊

SCRUM Development Process 🏃‍♀️

Customer Relationship Management (CRM) 💬

Data Management / Analysis 📊

Talent Acquisition 👥

Training, Team Building, & Development 🏆

Event Coordination, Production, & Reporting 🎉

Machine Learning + AI Expertise 🤖

Cloud Service Platform Expertise ☁️

VR/AR Consumer Education 🕶️

UX Design 🎨

Proficient in: Zoho, Salesforce, Asana, Trello, Kaltura, Rainfocus, StreamYard, Frame.io, Office 365, Google Workspaces, ChatGPT, & more.

🌟 Professional Experience 🌟

Creative UX.design @ SPACEX

2023 - ¤¤¤ | StarlinkX Studios (remote)

Manage Client Relations

Nurtured and secured $2 million in sales within the first 60 days

Averaging +150 phone calls per day

Virtual Producer (Project Management) @ NVIDIA

2022 - ₩ | Santa Clara, CA (remote)

Produced an educational AI conference for researchers, developers, inventors, & IT professionals (+300k attendees, +800 sessions)

Coordinated keynote sessions with experts from NVIDIA, Meta, Mastercard, Uber, Google, Amazon, Stanford, etc.

Recorded via our virtual studio, completed post-production editing (cc), and broadcast with live Q&A featuring ChatGPT

High-Performance Computing and Artificial Intelligence (HPC-AI) Advisory Council Member

Career and Technical Education (CTE) Leadership Committee Member for Public School District

Skilled In: Kaltura, Rainfocus, StreamYard, Frame.io, Office 365 (Excel/PP), Google Workspaces, & more

Project Manager (Business Development) @ SAMSUNG

2022 - ¤¤¤ | San Jose, CA (remote)

Community Liaison for SAMSUNG. Secure & maintain business partnerships

Support students, entrepreneurs, animal rescue groups, environmental protection groups, etc.

Career and Technical Education (CTE) Leadership Committee Member for Public School District

Highest Performing Market out of 36: Washington stores averaged 165% to sales plan

National Account Manager (Event Production) @ More Than Models

2020 – 2022 | Los Angeles, CA (remote)

Provided support and managed deliverables for clients such as Ferrari, Louis Vuitton, Wells Fargo, AT&T, Amazon, etc.

Shows managed include AT&T Pebble Beach Pro-Am 2022, Super Bowl 2022, NBA All-Star 2022, and more

Interviewed, input, and trained over 1,000 new talent candidates

Primary client contact and emergency contact for all shows

Executive Assistant for CEO, Ashlyn Henson

Spokesmodel / Product Specialist / Emcee / Tour Manager

2013 – 2022 | Independent, Nationwide

Consumer educator and product demonstrator specializing in Automotive & Technology

Trained and managed teams of up to 240 for events with over 300,000 attendees

Trade Shows Include: RSA, CES, GTC, GDC, E3, PAX West, Comic Con, Google NEXT, and many others

Clients include: Google, Amazon, Samsung, Cisco, Nvidia, Intel, Microsoft, Xbox, JLR, Lexus, Volvo, and more

Presenter with Stellantis on the International Auto Show Circuit for 3 years

Marketing & Public Relations Manager (Orthodontic / Healthcare Sales) @ All Star Orthodontics

2015 – 2017 | Camas, WA

Created and executed marketing strategies on behalf of Dr. Huong Le. Earned the “Elite Invisalign Provider” Award

Practice had a +3000% case increase, with a 45% conversion increase

🌟 Multi-City Experiential Tours 🌟

Stellantis - International Auto Show Circuit - 2019 to current - Automotive Product Specialist

Nintendo - Nintendo Switch Together Tour - 2019 - Tour Manager

Maruchan - Back 2 Ramen Tour - 2018 - Tour Manager

Safeway - Safeway Back to School Tour - 2018 - Field Manager

Intel - Intel’s Tech Learning Lab Tour - Portland - 2018 - Team Lead

Samsung - Galaxy Studio Tour - 2018 - VR/AR Demonstrator

Xbox - Super Lucky’s Tale Tour - 2017 - Costume Character

Kids Obstacle Challenge - 2016 - Lead Emcee

🌟 Technology Events 🌟

Amazon - CES - Las Vegas - 2020 - AWS Spokesmodel

VMware - VMworld - San Francisco - 2019 - Emcee

GlaxoSmithKline - ADA FDI World Dental Congress - San Francisco - 2019 - Spokesmodel

Google - Google Marketing Live - San Francisco - 2019 - Breakout Sessions/Keynote Supervisor

Google - Google I/O - Mountain View - 2019 - Breakout Session/Keynote Supervisor

Google - Google Developers Group Leaders Summit - Mountain View - 2019 - Supervisor

Google - Google Next - San Francisco - 2019 - Supervisor

Nvidia - NVIDIA GTC 2019 - San Jose - 2019 - Breakout Session/Keynote Supervisor

Cisco - RSA - San Francisco - 2019 - Spokesmodel