#California data privacy bill heads


Text

SolarWinds: IT professionals want stronger AI regulation

New Post has been published on https://thedigitalinsider.com/solarwinds-it-professionals-want-stronger-ai-regulation/


A new survey from SolarWinds has unveiled a resounding call for increased government oversight of AI, with 88% of IT professionals advocating for stronger regulation.

The study, which polled nearly 700 IT experts, highlights security as the paramount concern. An overwhelming 72% of respondents emphasised the critical need for measures to secure infrastructure. Privacy follows closely behind, with 64% of IT professionals urging more robust rules to protect sensitive information.

Rob Johnson, VP and Global Head of Solutions Engineering at SolarWinds, commented: “It is understandable that IT leaders are approaching AI with caution. As technology rapidly evolves, it naturally presents challenges typical of any emerging innovation.

“Security and privacy remain at the forefront, with ongoing scrutiny by regulatory bodies. However, it is incumbent upon organisations to take proactive measures by enhancing data hygiene, enforcing robust AI ethics and assembling the right teams to lead these efforts. This proactive stance not only helps with compliance with evolving regulations but also maximises the potential of AI.”

The survey’s findings come at a pivotal moment, coinciding with the implementation of the EU’s AI Act. In the UK, the new Labour government recently proposed its own AI legislation during the latest King’s speech, signalling a growing recognition of the need for regulatory frameworks. In the US, the California State Assembly passed a controversial AI safety bill last month.

Beyond security and privacy, the survey reveals a broader spectrum of concerns amongst IT professionals. A majority (55%) believe government intervention is crucial to stem the tide of AI-generated misinformation. Additionally, half of the respondents support regulations aimed at ensuring transparency and ethical practices in AI development.

Challenges extend beyond AI regulation

However, the challenges facing AI adoption extend beyond regulatory concerns. The survey uncovers a troubling lack of trust in data quality—a cornerstone of successful AI implementation.

Only 38% of respondents consider themselves ‘very trusting’ of the data quality and training used in AI systems. This scepticism is not unfounded, as 40% of IT leaders who have encountered issues with AI attribute these problems to algorithmic errors stemming from insufficient or biased data.

Consequently, data quality emerges as the second most significant barrier to AI adoption (16%), trailing only behind security and privacy risks. This finding underscores the critical importance of robust, unbiased datasets in driving AI success.

“High-quality data is the cornerstone of accurate and reliable AI models, which in turn drive better decision-making and outcomes,” adds Johnson. “Trustworthy data builds confidence in AI among IT professionals, accelerating the broader adoption and integration of AI technologies.”

The survey also sheds light on widespread concerns about database readiness. Less than half (43%) of IT professionals express confidence in their company’s ability to meet the increasing data demands of AI. This lack of preparedness is further exacerbated by the perception that organisations are not moving swiftly enough to implement AI, with 46% of respondents citing ongoing data quality challenges as a contributing factor.

As AI continues to reshape the technological landscape, the findings of this SolarWinds survey serve as a clarion call for both stronger regulation and improved data practices. The message from IT professionals is clear: while AI holds immense promise, its successful integration hinges on addressing critical concerns around security, privacy, and data quality.

(Photo by Kelly Sikkema)

See also: Whitepaper dispels fears of AI-induced job losses


Tags: adoption, ai, artificial intelligence, data, ethics, law, legal, Legislation, regulation, report, safety, Society, solarwinds, study

#adoption#ai#ai & big data expo#ai act#AI adoption#AI development#AI Ethics#AI models#AI regulation#ai safety#ai safety bill#AI systems#amp#Articles#artificial#Artificial Intelligence#automation#barrier#Big Data#california#Cloud#compliance#comprehensive#conference#cyber#cyber security#data#data quality#Database#datasets


Text

California lawmakers took a key vote today to pass a controversial bill that requires companies that make or modify powerful forms of artificial intelligence to test for their ability to enable critical harm to society.

Following a 32-1 vote in the Senate in May, the Assembly voted 41-9 to pass the bill late Wednesday afternoon. The Senate must take one more vote for the bill to make it to Gov. Gavin Newsom, concurring with any amendments in the Assembly.

Under Senate Bill 1047, companies that spend $100 million to train an AI model or $10 million to modify one must test the model for its ability to enable cybersecurity or infrastructure attacks or the development of chemical, biological, radioactive, or nuclear weaponry.

Eight members of Congress who represent California districts earlier this month took the unusual step of urging Newsom to veto the bill. It’s not clear if he will do so. In May, at a generative AI symposium required by an executive order he signed, Newsom said California must respond to calls for regulation but avoid overregulation. California is home to many of the dominant AI companies in the world.

Powerful interests lined up in favor of and in opposition to the bill. Opposition has come from companies including Google and Meta, ChatGPT maker OpenAI, startup incubator Y Combinator, and Fei-Fei Li, an advisor to President Joe Biden and co-organizer of the generative AI symposium ordered by Newsom. They argue that costs to comply with the bill will hurt the industry, particularly startups, and discourage the release of open source AI tools, since companies will fear legal liability under the bill.

Whistleblowers who used to work at OpenAI, and Anthropic, a company cofounded by former OpenAI employees, support the bill. Also in favor of the bill are Twitter CEO Elon Musk, who helped start OpenAI, and frequently-cited AI researcher Yoshua Bengio. They argue, in part, that AI tools pose significant harms and that the federal government has not done enough to address those harms, including through regulation.

Sen. Scott Wiener, a Democrat from San Francisco and author of the bill, has said the purpose of SB 1047 is to codify safety testing that companies already agreed to with President Biden and leaders of other countries.

“With this vote, the Assembly has taken the truly historic step of working proactively to ensure an exciting new technology protects the public interest as it advances,” Wiener said in a press release Wednesday.

In the past year, major AI companies reached voluntary agreements with the White House and government leaders in Germany, South Korea, and the United Kingdom to test their AI models for dangerous capabilities. In remarks responding to OpenAI’s opposition to the bill, Wiener rejected the assertion that passage of SB 1047 will lead businesses to leave the state, calling it a “tired” argument.

Wiener said similar claims were made when California adopted net neutrality and data privacy laws in 2018, but those predictions did not come true.

The bill’s critics, including OpenAI, have said they would rather Congress regulate AI safety than have it regulated at the state level. Wiener said Monday that he agrees and probably wouldn’t have proposed SB 1047 had Congress done so. He added that Congress is paralyzed and that “other than banning TikTok hasn’t passed major tech regulation since the 1990s.”

SB 1047 went through several rounds of amendments on its way to passage. Cosponsor Ari Kagan, cofounder of AI toolmaker Momentum, in July told lawmakers that passage of SB 1047 is necessary to prevent an AI disaster akin to Chernobyl or Three Mile Island. Earlier this month, he told CalMatters that amendments getting rid of a Frontier Model Division that was initially part of the bill weaken the legislation but said he still supported passage.

In addition to SB 1047, earlier this week the California Legislature moved to pass laws that require large online platforms like Facebook to take down deepfakes related to elections and create a working group to issue guidance to schools on how to safely use AI. Other AI policy bills up for a vote this week include a bill to empower the Civil Rights Department to combat automated discrimination, a bill requiring creatives to get permission before using the likeness of a dead person’s voice, body, or face in any capacity, and additional measures to keep voters safe from deceptive deepfakes.

In accordance with a generative AI executive order, the California Government Operations Agency is expected to release a report on how AI can harm vulnerable communities in the coming weeks.


Text

Biden's Right to Repair will include electronics, too

The Biden administration teased a sweeping antimonopoly executive order last week, including a Right to Repair provision that was to be aimed at agricultural equipment — a direct assault on the corporate power of repair archnemesis John Deere.

https://pluralistic.net/2021/07/07/instrumentalism/#r2r

But it turns out that the executive order goes far beyond tractors and other agricultural equipment — it also applies to consumer electronics, including mobile phones, and this is a huge fucking deal.

https://www.vice.com/en/article/y3d5yb/bidens-right-to-repair-executive-order-covers-electronics-not-just-tractors

The Right to Repair fight reached US state legislatures in 2018, when dozens of R2R bills were introduced, and then killed, by an unholy alliance of Apple and other tech companies fighting alongside Big Ag and home electronics monopolists like Wahl.

But the R2R side had its own coalition — farmers, tinkerers, small repair shop owners, auto mechanics and more. If the Biden order had stopped with an agricultural right to repair, that would have weakened the R2R coalition.

Divide-and-rule has long been part of the anti-repair playbook. In 2018, farmers got suckered into backing a California R2R bill that applied to agricultural equipment, only to see the bill gutted, denying them the right to fix their tractors.

https://www.wired.com/story/john-deere-farmers-right-to-repair/

The reason Right to Repair has stayed on the agenda even after brutal legislative losses — the Apple-led coalition killed every single R2R bill in 2018 — has been its support coalition.

https://pluralistic.net/2021/02/02/euthanize-rentiers/#r2r

That’s why the early news that Biden was giving farmers their right to repair was bittersweet — breaking the coalition might snuff out the hope that we’d get right to repair for our phones, cars and appliances. It’s why the news that the EO covers electronics is so exciting.

It’s a hopeful moment, following other triumphs, like the automotive R2R 2020 ballot initiative that passed in Massachusetts with an overwhelming majority despite Big Car’s scare ads, which showed women literally being murdered by stalkers as a consequence.

https://pluralistic.net/2020/11/13/said-no-one-ever/#r2r

Right to Repair got a massive boost this week when Apple co-founder Steve Wozniak made a public endorsement of the movement and described its principles as fundamental to the technological breakthroughs that led to Apple’s founding.

https://www.bbc.com/news/technology-57763037

Seen in that light, all of Apple’s paternalistic arguments about blocking independent repair in the name of defending its customers’ safety are exposed as having a different motivation — blocking the path Apple took for future innovators, so that it can cement its dominance.

And repair is just one element of the antimonopoly executive order, which is broad indeed, as Zephyr Teachout wrote for The Nation. All in all, there are 72 directives in the EO.

https://www.thenation.com/article/economy/biden-monopoly-executive-order/

They encompass “corporate monopolies in agriculture, defense, pharma, banking, and tech,” ban non-compete agreements, and direct the FTC to go to war against the meat-processing monopolies that have crushed farmers and ranchers.

And further: they direct the FCC to reinstate Net Neutrality, the CFPB to force banks to let us take our financial data with us when we switch to a rival, and open up the flow of cheaper drugs from Canada, where the government has stood up to Big Pharma.

Teachout points out that some of this is symbolic in that the agency heads don’t have to do what the president says, though in practice they tend to do so, and FTC chair/superhero Lina Khan has publicly announced her support for the agenda:

https://www.thenation.com/article/economy/biden-monopoly-executive-order/

Teachout calls this an historic moment. She’s right. The Biden admin is refusing to treat agricultural repair as somehow different from automotive, electronic or appliance repair. More importantly, it’s refusing to treat tech monopolies as separate from all monopolies.

This is crucial, because the same kind of diverse coalition that kept R2R alive is potentially a massive force for driving an antimonopoly agenda in general, because monopolies have destroyed lives and value in sectors from athletic shoes to finance, eyeglasses to beer.

The potential coalition is massive, but it needs a name. It’s not enough to be antimonopoly. It has to stand for something.

As James Boyle has written, the term “ecology” changed the balance of power in environmental causes.

Prior to “ecology,” there was no obvious connection between the fight for owl survival and the fight against ozone depletion. It’s not obvious that my concern for charismatic nocturnal birds connects to your concern for the gaseous composition of the upper atmosphere.

“Ecology” turned 1,000 issues into a single movement with 1,000 constituencies all working in coalition. The antimonopoly movement is on the brink of a similar phase-change, but again, it needs to stand for something.

That something can’t be “competition.” Competition on its own is perfectly capable of being terrible. Think of ad-tech companies who say privacy measures are “anticompetitive.” We don’t want competition for the most efficient human rights abuses.

https://doctorow.medium.com/illegitimate-greatness-674353e7cdf9

A far better goal is “self-determination” — the right for individuals and communities to make up their own minds about how they work and live, based on democratic principles rather than corporate fiat.

https://locusmag.com/2021/07/cory-doctorow-tech-monopolies-and-the-insufficient-necessity-of-interoperability/

That would be a fine position indeed for the Democratic Party to stake out. As Teachout writes, “[Democrats once] stood for workers and the people who produced things, and against the middlemen who sought to steal value and control industry. They understood that anti-monopoly laws were partly about keeping prices down — but also about preserving equality and dignity, and making sure that everyone who contributed to the production of goods and services got a fair cut.”


Photo

Black History Month: Notable Black Women

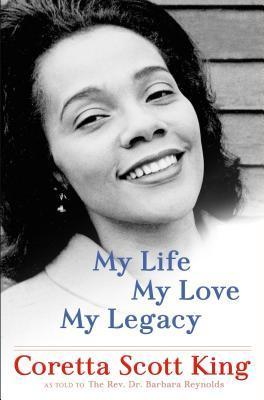

My Life, My Love, My Legacy by Coretta Scott King

The life story of Coretta Scott King (wife of Martin Luther King Jr., founder of the King Center for Nonviolent Social Change, and singular twentieth-century American civil rights activist) as told fully for the first time. Born in 1927 to daringly enterprising black parents in the Deep South, Coretta Scott had always felt called to a special purpose. One of the first black scholarship students recruited to Antioch College, a committed pacifist, and a civil rights activist, she was an avowed feminist, a graduate student determined to pursue her own career, when she met Martin Luther King Jr., a Baptist minister insistent that his wife stay home with the children. But in love and devoted to shared Christian beliefs and racial justice goals, she married King, and events promptly thrust her into a maelstrom of history throughout which she was a strategic partner, a standard bearer, a marcher, a negotiator, and a crucial fundraiser in support of world-changing achievements. As a widow and single mother of four, while butting heads with the all-male African American leadership of the times, she championed gay rights and AIDS awareness, founded the King Center for Nonviolent Social Change, lobbied for fifteen years to help pass a bill establishing the US national holiday in honor of her slain husband, and was a powerful international presence, serving as a UN ambassador and playing a key role in Nelson Mandela's election. Coretta’s is a love story, a family saga, and the memoir of an independent-minded black woman in twentieth-century America, a brave leader who stood committed, proud, forgiving, nonviolent, and hopeful in the face of terrorism and violent hatred every single day of her life.

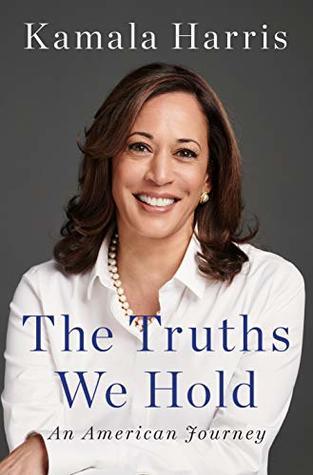

The Truths We Hold: An American Journey by Kamala Harris

From one of America's most inspiring political leaders, a book about the core truths that unite us, and the long struggle to discern what those truths are and how best to act upon them, in her own life and across the life of our country. Vice President-elect Kamala Harris's commitment to speaking truth is informed by her upbringing. The daughter of immigrants, she was raised in an Oakland, California community that cared deeply about social justice; her parents--an esteemed economist from Jamaica and an admired cancer researcher from India--met as activists in the civil rights movement when they were graduate students at Berkeley. Growing up, Harris herself never hid her passion for justice, and when she became a prosecutor out of law school, a deputy district attorney, she quickly established herself as one of the most innovative change agents in American law enforcement. She progressed rapidly to become the elected District Attorney for San Francisco, and then the chief law enforcement officer of the state of California as a whole. Known for bringing a voice to the voiceless, she took on the big banks during the foreclosure crisis, winning a historic settlement for California's working families. Her hallmarks were applying a holistic, data-driven approach to many of California's thorniest issues, always eschewing stale "tough on crime" rhetoric as presenting a series of false choices. Neither "tough" nor "soft" but smart on crime became her mantra. Being smart means learning the truths that can make us better as a community, and supporting those truths with all our might. 
That has been the pole star that guided Harris to a transformational career as the top law enforcement official in California, and it is guiding her now as a transformational United States Senator, grappling with an array of complex issues that affect her state, our country, and the world, from health care and the new economy to immigration, national security, the opioid crisis, and accelerating inequality. By reckoning with the big challenges we face together, drawing on the hard-won wisdom and insight from her own career and the work of those who have most inspired her, Kamala Harris offers in The Truths We Hold a master class in problem-solving, in crisis management, and leadership in challenging times. Through the arc of her own life, on into the great work of our day, she communicates a vision of shared struggle, shared purpose, and shared values. In a book rich in many home truths, not least is that a relatively small number of people work very hard to convince a great many of us that we have less in common than we actually do, but it falls to us to look past them and get on with the good work of living our common truth. When we do, our shared effort will continue to sustain us and this great nation, now and in the years to come.

Becoming by Michelle Obama

In a life filled with meaning and accomplishment, Michelle Obama has emerged as one of the most iconic and compelling women of our era. As First Lady of the United States of America—the first African American to serve in that role—she helped create the most welcoming and inclusive White House in history, while also establishing herself as a powerful advocate for women and girls in the U.S. and around the world, dramatically changing the ways that families pursue healthier and more active lives, and standing with her husband as he led America through some of its most harrowing moments. Along the way, she showed us a few dance moves, crushed Carpool Karaoke, and raised two down-to-earth daughters under an unforgiving media glare. In her memoir, a work of deep reflection and mesmerizing storytelling, Michelle Obama invites readers into her world, chronicling the experiences that have shaped her—from her childhood on the South Side of Chicago to her years as an executive balancing the demands of motherhood and work, to her time spent at the world’s most famous address. With unerring honesty and lively wit, she describes her triumphs and her disappointments, both public and private, telling her full story as she has lived it—in her own words and on her own terms. Warm, wise, and revelatory, Becoming is the deeply personal reckoning of a woman of soul and substance who has steadily defied expectations—and whose story inspires us to do the same.

More Myself: A Journey by Alicia Keys

An intimate, revealing look at one artist’s journey from self-censorship to full expression. As one of the most celebrated musicians of our time, Alicia Keys has enraptured the nation with her heartfelt lyrics, extraordinary vocal range, and soul-stirring piano compositions. Yet away from the spotlight, Alicia has grappled with private heartache―over the challenging and complex relationship with her father, the people-pleasing nature that characterized her early career, the loss of privacy surrounding her romantic relationships, and the oppressive expectations of female perfection. Since her rise to fame, Alicia’s public persona has belied a deep personal truth: she has spent years not fully recognizing or honoring her own worth. After withholding parts of herself for so long, she is at last exploring the questions that live at the heart of her story: Who am I, really? And once I discover that truth, how can I become brave enough to embrace it? More Myself is part autobiography, part narrative documentary. Alicia’s journey is revealed not only through her own candid recounting, but also through vivid recollections from those who have walked alongside her. The result is a 360-degree perspective on Alicia’s path―from her girlhood in Hell’s Kitchen and Harlem, to the process of self-discovery she’s still navigating. In More Myself, Alicia shares her quest for truth―about herself, her past, and her shift from sacrificing her spirit to celebrating her worth. With the raw honesty that epitomizes Alicia’s artistry, More Myself is at once a riveting account and a clarion call to readers: to define themselves in a world that rarely encourages a true and unique identity.

#black women#black history#black history month#notable women#nonfiction#biographies#non-fiction#nonfiction books#book recs#reading recommendations#recommended reading#library#booklr#tbr


Text

SACRAMENTO, Calif | California data privacy bill heads to Gov. Jerry Brown

New Post has been published on https://is.gd/X5lPd5


SACRAMENTO, Calif. (AP) — A California internet privacy bill that experts call the nation’s most far-reaching effort to give consumers more control over their data is headed to Gov. Jerry Brown after passing both chambers of the Legislature on Thursday.

Under the bill, consumers could ask companies what personal data they’ve collected, why it was collected and what categories of third parties have received it. Consumers could ask companies to delete their information and refrain from selling it.

Companies could offer discounts to customers who allow their data to be sold and could charge those who opt out a reasonable amount based on how much the company makes selling the information.

It passed the Legislature without any dissenting votes. Lawmakers scrambled to pass it so that a San Francisco real estate developer would remove a similar initiative from the November ballot.

The deadline to remove initiatives is Thursday.

The bill, AB375 by Assemblyman Ed Chau, would also bar companies from selling data from children younger than 16 without consent.

“We in California are taking a leadership position with this bill,” said Sen. Bob Hertzberg, a Van Nuys Democrat who co-authored the bill.

“I think this will serve as an inspiration across the country.”

Voter-enacted initiatives are much harder to alter than laws passed through the legislative process. Given the significance and complexity of the proposed policy, supporters and even many opponents say they want legislators to pass the bill so they can more easily change it in the future.

San Francisco real estate developer Alastair Mactaggart spent $3 million to support the related initiative and qualify it for the ballot. If the bill fails, he says he’ll push forward with the initiative.

By SOPHIA BOLLAG, Associated Press

#calif#California data privacy bill heads#California internet privacy bill#Jerry Brown#Sacramento#TodayNews


Link

See also: https://calmatters.org/commentary/library-privacy/

Libraries guarantee patrons’ privacy. That’s why LinkedIn’s policy is so troubling

At the moment, LinkedIn, the online business and employment service purchased by Microsoft in 2016 for $26.2 billion, is violating that ethical code and the policies set forth by the American Library Association.

LinkedIn Learning, which acquired Lynda.com in 2015, recently announced that all users of the platform’s online training programs will be required to create or log into a LinkedIn account to access the content. The new terms of service would also apply to LyndaLibrary users who access the platform through library subscriptions. Previously, subscribing libraries could offer patrons access to LyndaLibrary’s training videos on topics ranging from video production to project management using only a library card.

Requiring library users to create a LinkedIn account would provide the Microsoft subsidiary with a patron’s email address, first and last name, and any personal work and education information or professional contacts that the user chooses to input into the career networking platform. In addition, unless subscribing libraries create alternate credentialing systems, logging in would still require a patron’s library card number, linking it to a significant amount of personal information in a database controlled by a third party.

Samantha Lee, Intellectual Freedom Committee Chair of the Connecticut Library Association and Head of Reference Services for the Enfield Public Library, first reported on the potential privacy implications in a detailed June 4 guest post on the American Library Association’s (ALA) Intellectual Freedom Blog.

Lee wrote that: “the…patrons who are turning to LyndaLibrary to improve their technology skills…may not know to protect their [personally identifiable information] or practice good digital hygiene. LinkedIn is strategically taking advantage of technology novices all the while fleecing money from limited library budgets.”

She added that the issue had been discussed on Connecticut library listservs and said that some librarians in the state had begun contacting their account representatives. “When pushed on the patron privacy concerns, [LinkedIn Learning representatives] failed to adequately address the privacy concerns,” Lee wrote. “As a result, a few libraries have reported that they would not be renewing their contracts with LyndaLibrary/LinkedIn Learning.”

In a June 28 response to the nascent controversy titled "Our Commitment to Libraries," Mike Derezin, VP of Learning Solutions for LinkedIn, wrote that "the migration from Lynda.com to LinkedIn Learning will give our library customers and their patrons access to 2x the learning content, in more languages, and with a more engaging experience." Derezin claimed that the use of LinkedIn profiles will help the company with user authentication, and that any LinkedIn user has the ability to set their profiles to private, and to choose not to have their profiles be discoverable via search engines.

Yet profile discoverability isn’t the only privacy issue raising concerns. LinkedIn Learning’s terms of service include expansive permissions for retention and utilization of user data, including vaguely defined permissions to share user data with additional third parties when the company believes it is reasonably necessary. While such terms have become typical for many online platforms and services, this would violate Article VII of the Library Bill of Rights, which declares that users have a right to privacy and confidentiality in their library use. Library vendors are held to stricter standards for data retention and use because, as Lee notes, Connecticut and other states also have legal standards for library confidentiality.

“As an outside vendor providing services to library users, any activity on LinkedIn Learning as a library patron would constitute a library transaction and therefore should ‘be kept confidential’” per Connecticut’s General Statutes on public libraries, she explained. “This becomes problematic when we look at LinkedIn’s Privacy Policy (again, indistinguishable from LyndaLibrary/LinkedIn Learning’s platform) and its indiscriminate collection of user information.”

This week, California State Librarian Greg Lucas recommended that the state’s libraries stop offering the service “until the company changes its new use policy so that it protects the privacy of library users. Not only does LinkedIn Learning refuse to acknowledge the fundamental right to privacy that is central to the guarantee libraries make to their customers…it seeks to use personal information provided by library patrons in various ways, including sharing it with third parties.”

Separately, ALA issued an announcement urging the company to revise the new terms, with ALA President Wanda Kay Brown stating that “the requirement for users of LinkedIn Learning to disclose personally identifiable information is completely contrary to ALA policies addressing library users’ privacy, and it may violate some states’ library confidentiality laws. It also violates the librarian’s ethical obligation to keep a person’s use of library resources confidential. We are deeply concerned about these changes to the terms of service and urge LinkedIn and its owner, Microsoft, to reconsider their position on this.”


Photo

AI regulation: A state-by-state roundup of AI bills


Wanting to know where by AI regulation stands in your point out? Today, the Electronic Privacy Data Middle (EPIC) released The Point out of Condition AI Plan , a roundup of AI-related charges at the state and neighborhood degree that were passed, launched or failed in the 2021-2022 legislative session.

In the past year, according to the document, states and localities have passed or introduced bills “regulating artificial intelligence or establishing commissions or task forces to seek transparency about the use of AI in their state or locality.”

For example, Alabama, Colorado, Illinois and Vermont have passed bills creating a commission, task force or oversight position to evaluate the use of AI in their states and make recommendations regarding its use. Alabama, Colorado, Illinois and Mississippi have passed bills that limit the use of AI in their states. And Baltimore and New York City have passed local bills that would prohibit the use of algorithmic decision-making in a discriminatory manner.

Ben Winters, EPIC’s counsel and leader of EPIC’s AI and Human Rights Project, said the information was something he had wanted to get into one single document for a long time.

“State policy in general is really hard to follow, so the idea was to get a sort of zoomed-out picture of what has been introduced and what has passed, so that at the next session everyone is prepared to move the good bills along,” he said.

Fragmented state and local AI legislation

The list of varied laws makes clear the fragmentation of legislation around the use of AI in the US – as opposed to the broad mandate of a proposed regulatory framework around the use of AI in the European Union.

But Winters said that while state laws can be confusing or frustrating – for example, if vendors have to deal with different state laws regulating AI in government contracting – the advantage is that narrower state bills avoid the watering-down that comprehensive bills tend to suffer.

“Also, when bills are passed affecting businesses in huge states such as, say, California, it basically creates a standard,” he said. “We’d love to see strong legislation passed nationwide, but from my perspective right now state-level policy could yield stronger outcomes.”

Enterprise businesses, he added, should be aware of proposed AI legislation and of the rising overall standard around the transparency and explainability of AI. “To get ahead of that rising tide, I think they should try to take it upon themselves to do more testing, more documentation and do this in a way that is understandable to consumers,” he said. “That’s what more and more places are going to require.”

AI regulation focused on specific issues

Some limited issues, he pointed out, will see more accelerated growth in legislative focus, including facial recognition and the use of AI in hiring. “Those are sort of the ‘buckle your seat belt’ issues,” he said.

Other issues will see slow growth in the number of proposed bills, although Winters said that there is a lot of action around state procurement and automated decision-making systems. “The Vermont bill passed last year and both Colorado and Washington state were really close,” he said. “So I think there’s going to be more of those next year in the next legislative session.”

In addition, he said, there might be some movement on specific bills codifying concepts around AI discrimination. For example, Washington DC’s Stop Discrimination by Algorithms Act of 2021 “would prohibit the use of algorithmic decision-making in a discriminatory manner and would require notices to individuals whose personal information is used in certain algorithms to determine employment, housing, healthcare and financial lending.”

“The DC bill hasn’t passed yet, but there’s a lot of interest,” he explained, adding that similar ideas are in the pending American Data Privacy and Protection Act. “I don’t think there will be a federal law or any huge avalanche of generally-applicable AI laws in commerce in the next little bit, but recent state bills have passed that have requirements around certain discrimination aspects and opting out – that’s going to require more transparency.”

https://socialwicked.com/ai-regulation-a-state-by-state-roundup-of-ai-bills/

Text

Facebook stops funding the brain reading computer interface

https://theministerofcapitalism.com/blog/facebook-stops-funding-the-brain-reading-computer-interface/

Now the answer is in, and it’s not close at all. Four years after announcing an “incredibly crazy” project to build a “silent speech” interface using optical technology to read thoughts, Facebook is shelving the project, saying consumer brain reading is still a long way off.

In a blog post, Facebook said it would stop working on the project and instead focus on an experimental wrist controller for virtual reality that reads muscle signals in the arm. “Although we still believe in the long-term potential of head-mounted optical [brain-computer interface] technologies, we have decided to focus our immediate efforts on a different approach to the neural interface that has a path to the market in the near future,” the company said.

Facebook’s brain typing project had taken it into unfamiliar territory, including funding brain surgeries at a California hospital and building prototype helmets that could shoot light through the skull, and into tough debates about whether tech companies should access private brain information. Ultimately, however, the company appears to have decided that the research simply will not lead to a product soon enough.

“We have a lot of hands-on experience with these technologies,” says Mark Chevillet, the physicist and neuroscientist who until last year led the silent speech project, but recently changed roles to study how Facebook handles elections. “That is why we can say with certainty that, as a consumer interface, a head-mounted optical silent speech device is still a long way off. Possibly more than we expected.”

Mental reading

The reason for the excitement around brain-computer interfaces is that companies see mind-controlled software as a breakthrough as important as the computer mouse, the graphical user interface, or the swipe screen. In addition, researchers have already shown that if they place electrodes directly in the brain to tap individual neurons, the results are remarkable. Paralyzed patients with such implants can deftly move robotic arms and play video games or type through mental control.

Facebook’s goal was to turn these findings into a consumer technology that everyone could use, which meant a helmet or headset that you could put on and take off. “We never intended to do a brain surgery product,” Chevillet says. Given the social giant’s numerous regulatory issues, CEO Mark Zuckerberg had once said that the last thing the company should do is open skulls. “I don’t want to see congressional hearings on this issue,” he had joked.

In fact, as brain-computer interfaces move forward, there are serious new concerns. What if big tech companies could know people’s thoughts? In Chile, lawmakers are even considering a human rights bill to protect brain data, free will and mental privacy from technology companies. Given Facebook’s poor record on privacy, the decision to stop this research may have the secondary advantage of placing some distance between the company and growing concerns about “neurorights.”

The Facebook project was specifically aimed at a brain controller that could mesh with the company’s ambitions in virtual reality; it bought Oculus VR in 2014 for $2 billion. To get there, the company took a two-pronged approach, Chevillet says. First, it needed to determine whether a thought-to-speech interface was even possible. To do so, it sponsored research at the University of California, San Francisco, where a researcher named Edward Chang has placed electrode pads on the surface of people’s brains.

Whereas implanted electrodes read data from individual neurons, this technique, called electrocorticography or ECoG, measures large groups of neurons at once. Chevillet says Facebook hoped it would also be possible to detect equivalent signals from outside the head.

The UCSF team made some breakthroughs and today reports in the New England Journal of Medicine that it used these electrode pads to decode speech in real time. The subject was a 36-year-old man whom the researchers refer to as “Bravo-1,” who after a severe stroke lost his ability to form intelligible words and can only grunt or moan. In their report, Chang’s group says that with electrodes on the surface of his brain, Bravo-1 has been able to form sentences on a computer at a rate of about 15 words per minute. The technology involves measuring neural signals in the part of the motor cortex associated with Bravo-1’s efforts to move his tongue and vocal tract as he imagines speaking.

To achieve this result, Chang’s team asked Bravo-1 to imagine saying one of 50 common words nearly 10,000 times, feeding the patient’s neural signals into a deep learning model. After training the model to match words with neural signals, the team was able to correctly determine the word Bravo-1 was thinking of saying 40% of the time (chance results would have been around 2%, or one in 50). Still, his sentences were full of mistakes. “Hello how are you?” could come out as “Hungry how are you?”

But scientists improved performance by adding a language model: a program that judges which word sequences are most likely in English. This increased accuracy to 75%. With this cyborg approach, the system could predict that Bravo-1’s phrase “I hit my nurse” meant “I like my nurse.”
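
The rescoring idea can be sketched in a few lines of Python. This is a toy illustration, not the UCSF team's decoder, which the article does not describe: it assumes a bigram language model combined with per-word classifier probabilities through a Viterbi search, and every probability, word list and function name below is invented for the example.

```python
import math

# Hypothetical classifier output: for each word position, the probability the
# neural-signal classifier assigns to each candidate word. Invented numbers.
classifier_probs = [
    {"I": 0.9, "my": 0.1},
    {"hit": 0.5, "like": 0.4, "I": 0.1},
    {"my": 0.8, "I": 0.2},
    {"nurse": 0.7, "hit": 0.3},
]

# Hypothetical bigram language model: P(word | previous word).
# Word pairs not listed fall back to a small floor probability.
bigram_probs = {
    ("<s>", "I"): 0.6,
    ("I", "like"): 0.3,
    ("I", "hit"): 0.01,
    ("like", "my"): 0.4,
    ("hit", "my"): 0.2,
    ("my", "nurse"): 0.3,
}
FLOOR = 1e-3


def bigram(prev, word):
    """Return the language-model probability of `word` following `prev`."""
    return bigram_probs.get((prev, word), FLOOR)


def greedy_decode(probs):
    """Pick the classifier's top word at each position, ignoring context."""
    return [max(candidates, key=candidates.get) for candidates in probs]


def viterbi_decode(probs):
    """Find the word sequence maximizing classifier score times bigram score."""
    # best[word] = (log score, path) of the best sequence ending in `word`.
    best = {"<s>": (0.0, [])}
    for candidates in probs:
        nxt = {}
        for word, p_word in candidates.items():
            score, path = max(
                (s + math.log(bigram(prev, word)) + math.log(p_word), pth)
                for prev, (s, pth) in best.items()
            )
            nxt[word] = (score, path + [word])
        best = nxt
    return max(best.values())[1]


print(" ".join(greedy_decode(classifier_probs)))   # I hit my nurse
print(" ".join(viterbi_decode(classifier_probs)))  # I like my nurse
```

With these made-up numbers, the classifier alone prefers “hit” in the second position, but the language model rates “I like” as far more likely than “I hit”, so the combined search settles on “I like my nurse.”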

As remarkable as the result may be, there are more than 170,000 words in English, and performance would fall sharply outside Bravo-1’s restricted vocabulary. This means that the technique, while it may be useful as a medical aid, does not come close to what Facebook had in mind. “We see applications in the foreseeable future in clinical care technology, but that’s not our business here,” Chevillet says. “We’re focused on consumer apps and there’s a long way to go.”

Equipment developed by Facebook for diffuse optical tomography, which uses light to measure changes in blood oxygen to the brain.

FACEBOOK

Optical failure

Facebook’s decision to abandon brain reading is no surprise to researchers studying these techniques. “I can’t say I was surprised, because they had hinted that they were looking at a short time frame and were going to evaluate things,” says Marc Slutzky, a professor at Northwestern, whose former student Emily Munger was a key hire for Facebook. “Just speaking from experience, the goal of decoding speech is a big challenge. We are still a long way from a practical solution that covers everything.”

Still, Slutzky says the UCSF project is an “impressive next step” that demonstrates both remarkable possibilities and some limits of the science of brain reading. “It remains to be seen if free-form speech can be decoded,” he says. “A patient who says ‘I want a drink of water’ versus ‘I want my medicine’: well, those are different.” He says that if artificial intelligence models could be trained for longer and on more than one person’s brain, they could improve quickly.

While funding the UCSF research, Facebook also paid other centers, such as the Johns Hopkins Applied Physics Laboratory, to find out how to pump light through the skull to read neurons non-invasively. Like MRI, these techniques are based on the detection of reflected light to measure the amount of blood flow to brain regions.

It is these optical techniques that remain the biggest stumbling block. Even with recent improvements, including some from Facebook, they are not able to pick up neural signals with sufficient resolution. Another issue, Chevillet says, is that the blood flow these methods detect occurs five seconds after a group of neurons fires, making it too slow to control a computer.

Text

Community Control of Police Spy Tech

All too often, police and other government agencies unleash invasive surveillance technologies on the streets of our communities, based on the unilateral and secret decisions of agency executives, after hearing from no one except corporate sales agents. This spy tech causes false arrests, disparately burdens BIPOC and immigrants, invades our privacy, and deters our free speech.

Many communities have found Community Control of Police Surveillance (CCOPS) laws to be an effective step on the path to systemic change. CCOPS laws empower the people of a community, through their legislators, to decide whether or not city agencies may acquire or use surveillance technology. Communities can say “no,” full stop. That will often be the best answer, given the threats posed by many of these technologies, such as face surveillance or predictive policing. If the community chooses to say “yes,” CCOPS laws require the adoption of use policies that secure civil rights and civil liberties, and ongoing transparency over how these technologies are used.

The CCOPS movement began in 2014 with the development of a model local surveillance ordinance and launch of a statewide surveillance campaign by the ACLU affiliates in California. By 2016, a broad coalition including EFF, ACLU of Northern California, CAIR San Francisco-Bay Area, Electronic Frontier Alliance (EFA) member Oakland Privacy, and many others passed the first ordinance of its kind in Santa Clara County, California. EFF has worked to enact these laws across the country. So far, 18 communities have done so:

Bay Area Rapid Transit, CA

Berkeley, CA

Boston, MA

Cambridge, MA

Davis, CA

Grand Rapids, MI

Lawrence, MA

Madison, WI

Nashville, TN

New York City, NY

Northampton, MA

Oakland, CA

Palo Alto, CA

San Francisco, CA

Santa Clara County, CA

Seattle, WA

Somerville, MA

Yellow Springs, OH

These CCOPS laws generally share some common features. If an agency wants to acquire or use surveillance technology (broadly defined), it must publish an impact statement and a proposed use policy. The public must be notified and given an opportunity to comment. The agency cannot use or acquire this spy tech unless the city council grants permission and approves the use policy. The city council can require improvements to the use policy. If a surveillance technology is approved, the agency must publish annual reports regarding their use of the technology and compliance with the approved policies. There are also important differences among these CCOPS laws. This post will identify the best features of the first 18 CCOPS laws, to show authors of the next round how best to protect their communities. Specifically:

The city council must not approve a proposed surveillance technology unless it finds that the benefits outweigh the cost, and that the use policy will effectively protect human rights.

The city council needs a reviewing body, with expertise regarding surveillance technology, to advise it in making these decisions.

Members of the public need ample time, after notice of a proposed surveillance technology, to make their voices heard.

The city council must review not just the spy tech proposed by agencies after the CCOPS ordinance is enacted, but also any spy tech previously adopted by agencies. If the council does not approve it, use must cease.

The city council must annually review its approvals, and decide whether to modify or withdraw these approvals.

Any emergency exemption from ordinary democratic control must be written narrowly, to ensure the exception will not swallow the rule.

Members of the public must have a private right of action so they can go to court to enforce both the CCOPS ordinance and any resulting use policies.

Authors of CCOPS legislation would benefit by reviewing the model bill from the ACLU. Also informative are the recent reports on enacted CCOPS laws from Berkeley Law’s Samuelson Clinic, and from EFA member Surveillance Technology Oversight Project, as well as Oakland Privacy and the ACLU of Northern California’s toolkit for fighting local surveillance.

Strict Standard of Approval

There is a risk that legislative bodies may become mere rubber stamps providing a veneer of democracy over a perpetuation of bureaucratic theater. As with any good legislation, the power or fault is in the details.

Oakland’s ordinance accomplishes this by making it clear that legislative approval should not be the default. It is not the city council’s responsibility, or the community’s, to find a way for agency leaders to live out their sci-fi dreams. Lawmakers must not approve the acquisition or use of a surveillance technology unless, after careful deliberation and community consultation, they find that the benefits outweigh the costs, that the proposal effectively safeguards privacy and civil rights, and that no alternative could accomplish the agency’s goals with lesser costs—economically or to civil liberties.

A Reviewing Body to Assist the City Council

Many elected officials do not have the technological proficiency to make these decisions unassisted. So the best CCOPS ordinances designate a reviewing body responsible for providing council members the guidance needed to ask the right questions and get the necessary answers. A reviewing body builds upon the core CCOPS safeguards: public notice and comment, and council approval. Agencies that want surveillance technology must first seek a recommendation from the reviewing body, which acts as the city’s informed voice on technology and its privacy and civil rights impacts.

When Oakland passed its ordinance, the city already had a successful model to draw from. Coming out of the battle between police and local advocates who had successfully organized to stop the Port of Oakland’s Domain Awareness Center, the city had a successful Privacy Advisory Commission (PAC). So Oakland’s CCOPS law tasked the PAC with providing advice to the city council on surveillance proposals.

While Oakland’s PAC is made up exclusively of volunteer community members with a demonstrated interest in privacy rights, San Francisco took a different approach. That city already had a forum for city leadership to coordinate and collaborate on technology solutions. Its fifteen-member Committee on Information Technology (COIT) is comprised of thirteen department heads—including the President of the Board of Supervisors—and two members of the public.

There is no clear rule-of-thumb on which model of CCOPS reviewing body is best. Some communities may question whether appointed city leaders might be apprehensive about turning down a request from an allied city agency, instead of centering residents' civil rights and personal freedoms. Other communities may value the perspective and attention that paid officials can offer to carefully consider all proposed surveillance technology and privacy policies before they may be submitted for consideration by the local legislative body. Like the lawmaking body itself, these reviewing bodies’ proceedings should be open to the public, and sufficiently noticed to invite public engagement before the body issues its recommendation to adopt, modify, or deny a proposed policy.

Public Notice and Opportunity to Speak Up

Public notice and engagement are essential. For that participation to be informed and well-considered, residents must first know what is being proposed, and have the time to consult with unbiased experts or otherwise educate themselves about the capabilities and potential undesired consequences of a given technology. This also allows time to organize their neighbors to speak out. Further, residents must have sufficient time to do so. For example, Davis, California, requires a 30-day interval between publication of a proposed privacy policy and impact report, and the city council’s subsequent hearing regarding the proposed surveillance technology.

New York City’s Public Oversight of Surveillance (POST) Act is high on transparency, but wanting on democratic power. On the positive side, it provides residents with a full 45 days to submit comments to the NYPD commissioner. Other cities would do well to provide such meaningful notice. However, due to structural limits on city council control of the NYPD, the POST Act does not accomplish some of the most critical duties of this model of surveillance ordinance—placing the power and responsibility to hear and address public concerns with the local legislative body, and empowering that body to prohibit harmful surveillance technology.

Regular Review of Technology Already in Use

The movement against surveillance equipment is often a response to the concerning ways that invasive surveillance has already harmed our communities. Thus, it is critical that any CCOPS ordinance apply not just to proposals for new surveillance tech, but also to the continued use of existing surveillance tech.

For example, city agencies in Davis that possessed or used surveillance technology when that city’s CCOPS ordinance went into effect had a four-month deadline to submit a proposed privacy policy. If the city council did not approve it within four regular meetings, then the agency had to stop using it. Existing technology must be subject to at least the same level of scrutiny as newer technology. Indeed, the bar should arguably be higher for existing technologies, considering the likely existence of a greater body of data showing their capabilities or lack thereof, and any prior harm to the community.

Moving forward, CCOPS ordinances must also require that each agency using surveillance technology issue reports about it on at least an annual basis. This allows the city council and public to monitor the use and deployment of approved surveillance technologies. Likewise, CCOPS ordinances must require the city council, at least annually, to revisit its decision to approve a surveillance technology. This is an opportunity to modify the use policies, or end the program altogether, when it becomes clear that the adopted protections have not been sufficient to protect rights and liberties.

In Yellow Springs, Ohio, village agencies must facilitate public engagement by submitting annual reports to the village council, and making them publicly available on their websites. Within 60 days, the village council must hold a public hearing about the report with opportunity for public comment. Then the village council must determine whether each surveillance technology has met its standards for approval. If not, the village council must discontinue the technology, or modify the privacy policy to resolve the failures.

Emergency Exceptions

Many CCOPS ordinances allow police to use surveillance technology without prior democratic approval, in an emergency. Such exceptions can easily swallow the rule, and so they must be tightly drafted.

First, the term “emergency” must be defined narrowly, to cover only imminent danger of death or serious bodily injury to a person. This is the approach, for example, in San Francisco. Unfortunately, some cities extend this exemption to also cover property damage. But police facing large protests can always make ill-considered claims that property is at risk.

Second, the city manager alone must have the power to allow agencies to make emergency use of surveillance technology, as in Berkeley. Suspension of democratic control over surveillance technology is a momentous decision, and thus should come only from the top.

Third, emergency use of surveillance technology must have tight time limits. This means days, not weeks or months. Further, the legislative body must be quickly notified, so it can independently and timely assess the departure from legislative control. Yellow Springs has the best schedule: emergency use must end after four days, and notification must occur within ten days.

Fourth, CCOPS ordinances must strictly limit retention and sharing of personal information collected by surveillance technology on an emergency basis. Such technology can quickly collect massive quantities of personal information, which then can be stolen, abused by staff, or shared with ICE. Thus, Oakland’s staff may not retain such data, unless it is related to the emergency or is relevant to an ongoing investigation. Likewise, San Francisco’s staff cannot share such data, except based on a court’s finding that the data is evidence of a crime, or as otherwise required by law.

Enforcement

It is not enough to enact an ordinance that requires democratic control over surveillance technology. It is also necessary to enforce it. The best way is to empower community members to file their own enforcement lawsuits. This is often called a private right of action. EFF has filed such surveillance regulation enforcement litigation, as have other advocates like Oakland Privacy and the ACLU of Northern California.

The best private rights of action broadly define who can sue. In Boston, for example, “Any violation of this ordinance constitutes an injury and any person may institute proceedings.” It is a mistake to limit enforcement just to a person who can show they have been surveilled. With many surveillance tools capturing information in covert dragnets, it can be exceedingly difficult to identify such people, or prove that you have been personally impacted, despite a brazen violation of the ordinance. In real and immutable ways, the entire community is harmed by unauthorized surveillance technology, including through the chilling of protest in public spaces.

Some ordinances require a would-be plaintiff, before suing, to notify the government of the ordinance violation, and allow the government to avoid a suit by ending the violation. But this incentivizes city agencies to ignore the ordinance, and wait to see whether anyone threatens suit. Oakland’s ordinance properly eschews this kind of notice-and-cure clause.

Private enforcement requires a full arsenal of remedies. First, a judge must have the power to order a city to comply with the ordinance. Second, there should be damages for a person who was unlawfully subjected to surveillance technology. Oakland provides this remedy. Third, a prevailing plaintiff should have their reasonable attorney fees paid by the law-breaking agency. This ensures access to the courts for everyone, and not just wealthy people who can afford to hire a lawyer. Davis properly allows full recovery of all reasonable fees. Unfortunately, some cities cap fee-shifting at far less than the actual cost of litigating an enforcement suit.

Other enforcement tools are also important. Evidence collected in violation of the ordinance must be excluded from court proceedings, as in Somerville, Massachusetts. Also, employees who violate the ordinance should be subject to workplace discipline, as in Lawrence, Massachusetts.

Next Steps

The movement to ensure community control of government surveillance technology is gaining steam. If we can do it in cities across the country, large and small, we can do it in your hometown, too. The CCOPS laws already on the books have much to teach us about how to write the CCOPS laws of the future.

Please join us in the fight to ensure that police cannot decide by themselves to deploy dangerous and invasive spy tech onto our streets. Communities, through their legislative leaders, must have the power to decide—and often they should say “no.”

Related Cases:

Williams v. San Francisco

from Deeplinks https://ift.tt/3v8Y8sk

Text

California passes landmark data privacy bill

A data privacy bill in California is just a signature away from becoming law over the strenuous objections of many tech companies that rely on surreptitious data collection for their livelihood. The California Consumer Privacy Act of 2018 has passed through the state legislative organs and will now head to the desk of Governor Jerry …

Text

SACRAMENTO, Calif | California data privacy bill heads to Gov. Jerry Brown

New Post has been published on https://is.gd/X5lPd5

SACRAMENTO, Calif. (AP) — A California internet privacy bill that experts call the nation’s most far-reaching effort to give consumers more control over their data is headed to Gov. Jerry Brown after passing both chambers of the Legislature on Thursday.

Under the bill, consumers could ask companies what personal data they’ve collected, why it was collected and what categories of third parties have received it. Consumers could ask companies to delete their information and refrain from selling it.

Companies could offer discounts to customers who allow their data to be sold and could charge those who opt out a reasonable amount based on how much the company makes selling the information.

It passed the Legislature without any dissenting votes. Lawmakers scrambled to pass it so that a San Francisco real estate developer would remove a similar initiative from the November ballot.

The deadline to remove initiatives is Thursday.

The bill, AB375 by Assemblyman Ed Chau, would also bar companies from selling data from children younger than 16 without consent.

“We in California are taking a leadership position with this bill,” said Sen. Bob Hertzberg, a Van Nuys Democrat who co-authored the bill.

“I think this will serve as an inspiration across the country.”

Voter-enacted initiatives are much harder to alter than laws passed through the legislative process. Given the significance and complexity of the proposed policy, supporters and even many opponents say they want legislators to pass the bill so they can more easily change it in the future.

San Francisco real estate developer Alastair Mactaggart spent $3 million to support the related initiative and qualify it for the ballot. If the bill fails, he says he’ll push forward with the initiative.

By SOPHIA BOLLAG by Associated Press

Text

What divided control of Congress would mean for President-elect Biden

New Post has been published on http://khalilhumam.com/what-divided-control-of-congress-would-mean-for-president-elect-biden/

By Darrell M. West On November 5, when it looked like Republicans had retained its Senate majority, there was a news report that “a source close to McConnell tells Axios a Republican Senate would work with Biden on centrist nominees but no ‘radical progressives’ or ones who are controversial with conservatives.” The article went on to note a GOP-controlled Senate would force Biden to have “a more centrist Cabinet” and would preclude Biden from appointing left-of-center officials in federal agencies. A week later, though, that declaration looks premature. A Georgia law requiring Senate candidates to reach a 50 percent threshold means that two Senate races in that state are headed to a January 5 runoff election that would decide party control of the Senate. Based on what happens in those contests, there could be a 50-50 Senate where Vice President-elect Kamala Harris breaks the tie in favor of Democrats, or a 52-48 or 51-49 chamber in favor of Republicans. The consequences of these competing scenarios for public policy in general would be enormous but also crucial in terms of technology policy. There would be dramatic ramifications for Biden’s Cabinet choices, agency appointments, and policy options in terms of antitrust enforcement, competition policy, infrastructure investment, privacy, Section 230 reforms, regulation, tax policy, and relations with China. Unless one or two Republican Senators sided with unified Democrats on particular issues, a GOP Senate likely would block progressive action and force Biden more to the political middle on a whole host of matters. On antitrust questions, progressive House Democrats hoped for a major rewrite of the country’s internet platform rules that would restrain the ability of large tech companies to engage in predatory practices and unfair competition. With a GOP Senate, the odds of such an outcome are quite low. 
Biden still could pursue Department of Justice lawsuits against large tech companies, but it would be harder to rewrite the rules of the game.

A similar logic applies to prospects for a national privacy law. While it is not out of the question that California’s tough privacy law plus follow-up action by other states could encourage Congress to enact legislation, working out issues regarding the right to sue and state preemption controversies would be easier with a Democratic President, House, and Senate than under divided party control. In the latter situation, Biden would have to find a few Senators willing to buck their party and vote with him to resolve those issues. Such a coalition could happen, but these kinds of negotiations always are lengthy and complicated.

Section 230 reforms may be one of the issues that move forward even in a divided Congress. The reason is that both Republicans and Democrats have grievances against Big Tech. Republicans are upset about alleged ideological biases on the part of large platforms, while Democrats are unhappy about human trafficking, use of platforms to advertise opioids, and other abuses. The shared sense of grievance between members of the two parties could form the basis of a compromise bill that would remove or scale back the legal liability exemption in selected areas, similar to what Congress did with the Stop Enabling Sex Traffickers Act passed in 2018. Activities that further racial bias, enable opioid abuse, or incite violence could be candidates for legal carve-outs.

Another prime area for congressional action under divided control would be infrastructure investment. President-elect Biden already has discussed his interest in a major bill in this area that would address problems of highways, bridges, dams, broadband, and digital infrastructure. The goal would be to close the digital divide and aid underserved communities through improved broadband.
There are agency actions that could make meaningful progress to help vulnerable communities.

The two parties are likely to agree on a tough line on China. The public climate has shifted markedly against China in recent years with both legislators and the general public wanting fairer trade policies, enhanced security, and a stronger emphasis on human rights. President-elect Biden will likely set up a clear process and a coherent strategy to move U.S. policy in these directions. With substantial control of diplomatic processes and trade negotiations, there are a number of things he can do on his own, such as tightening export controls, demanding fairer treatment of religious minorities, and addressing trade imbalances.

Finally, if Republicans retain control of the Senate, President-elect Biden can make desired policy changes through executive orders. In the same ways that Presidents Barack Obama and Donald Trump did, Biden has considerable discretion to enact new rules through presidential orders, agency enforcement, and departmental discretion over appropriated money. Through those vehicles, President-elect Biden has many arrows in his quiver that will make him a strong and impactful leader. He can overturn his predecessor’s initiatives in the areas of digital access, personal privacy, data aggregation, cybersecurity, cell tower siting, high-skilled work visas, for-profit learning platforms, telemedicine, energy efficiency, broadband non-discrimination, and paid prioritization of internet traffic. What he does through executive orders likely will be more impactful than bills he is able to move through a divided Congress.


The TikTok Fiasco Reflects the Bankruptcy of Trump’s Foreign Policy

— By Evan Osnos | September 25, 2020 | The New Yorker

President Trump’s strong-arm effort to force a sale of the Chinese social platform’s U.S. operations has resulted in little discernible improvement in data security. Photograph by Kiyoshi Ota / Bloomberg / Getty

At first glance, the Trump Administration’s decision to ban the popular Chinese apps TikTok and WeChat had the look of hard-nosed diplomacy. China, after all, already blocks more than ten of the largest American tech companies, including Google, Facebook, and Twitter, out of fear that they facilitate unmanageable levels of free speech and organization. In Washington, U.S. officials have been increasingly worried about the rise of TikTok, which is best known for its minute-long videos of people dancing, and WeChat, the vast social-media platform that allows users to text, call, pay bills, buy things, and, of course, swap videos of people dancing. Like similar apps, they collect valuable data on the viewing habits of millions of Americans and could, it is feared, allow the Chinese Communist Party to expose Americans to propaganda and censorship. Under Chinese law, authorities have broad power to intervene in the work of private companies; a spy agency, for instance, could examine the private chats of an American user, or, in theory, direct a stream of TikTok videos and advertisements that could shape the perceptions of viewers in one part of the United States.

Last month, in an unusual intervention in the operations of a business, President Trump ordered ByteDance, the Beijing-based company that owns TikTok, to find an American owner for its U.S. operations. That touched off a chaotic scramble for a sale, culminating last Saturday, when Trump abruptly announced a deal involving Walmart and Oracle, which is headed by one of his major supporters, Larry Ellison. The announcement was vague and grandiose: it heralded the creation of a new U.S.-based company, TikTok Global, which would shift ownership into American hands while creating both twenty-five thousand new jobs in the U.S. and a very ill-defined five-billion-dollar educational fund. As Trump put it, that money would be applied so that “we can educate people as to [the] real history of our country—the real history, not the fake history.” Within days, those promises were unravelling. In Beijing, TikTok’s parent company said that it would retain control over the algorithms and the code that constitute the core of its power. Analysts could discern hardly any improvements in data security or protections against propaganda, and people involved let reporters know that the education fund was a notion added, at the last minute, to assuage Trump.

All sides agreed that Oracle and Walmart would gain a stake worth twenty per cent of the new U.S.-based company, and data from American users would reside in Oracle’s cloud. But the parties agreed on little else, especially who would own what; ByteDance said that it would retain eighty per cent of TikTok, while Oracle countered that the stake would belong to ByteDance’s investors, many of whom are American. Many critics noted that Oracle, led by a Trump supporter, potentially stood to earn a huge windfall in revenue, such that, as one put it, “the very concept of the rule of law is in shambles.” Taking stock of the mess, Bill Bishop, a China analyst and the author of the Sinocism newsletter, commented, “Struggling to find the right Chinese translation for clusterf$$!”

Meanwhile, the effort to bar WeChat also has, for the moment, run into objections. On Sunday, a federal judge in California issued an injunction against regulations issued by the Commerce Department, which seeks to bar U.S. companies from offering downloads or updates for TikTok and WeChat. Free-speech advocates had raised questions about whether the ban would harm First Amendment rights. For the moment, WeChat and TikTok would remain accessible to American users, but their futures were as unclear as Trump’s purported deal. Taken together, what Trump presented as a bold expression of American values and power has turned out to be precisely the opposite: a gesture of wall-building and retreat, suffused with the aroma of potential corruption.

In a new book, “An Open World,” the foreign-policy scholars Rebecca Lissner and Mira Rapp-Hooper begin the process of planning for a “day after Trump”—which they compare to a period of “post–natural disaster recovery”—in which the United States should focus on keeping “the international system open and free.” Lissner, an assistant professor at the U.S. Naval War College, told me that the threat to ban TikTok and WeChat “has put the United States on the wrong side, and set an unfavorable precedent.” There are plausible concerns, she said, that the Chinese Communist Party could use popular apps to collect data on American users, or continue to bar American companies in the name of governing the Internet according to its political anxieties. But the response should not be “replicating a C.C.P.-style approach to assertions of cyber sovereignty, as Trump has done.” Instead, she said, the U.S. should seek to be a leader in establishing rules that protect privacy and the free flow of information “regardless of the nationality of programs they’re using.” Lissner added, “Trump’s whack-a-mole approach doesn’t actually address the underlying risks, which reach far beyond TikTok and WeChat. Even worse, it makes it more likely that China’s preferred approach to Internet governance will triumph.”

The implications of this dispute extend far beyond the question of whether American teen-agers will be able to post to Chinese social-media sites. It represents what the Financial Times’ Tom Mitchell called the latest “proxy wars between the two reigning superpowers.” In contrast to America’s showdowns with the former Soviet Union, these battles are stirring not in Afghanistan and El Salvador but in corporate boardrooms. The Trump Administration opened its first front, in the spring of 2018, by banning American tech companies from selling components to ZTE, a Chinese telecom company that had been caught violating U.S. export controls on shipments to Iran. But Trump, who was eager to make a big trade deal with China, cut the pressure on ZTE, which settled with the U.S. Commerce Department. The battles have continued, though, and, as Mitchell observed, “it is China that has the early lead.”

The effort to wrest TikTok from China’s grip has been a boon for nationalist media sources in Beijing, which cited it as evidence that the U.S. is determined to prevent China from challenging American primacy. On Chinese state television last week, the news anchor Pan Tao derided the “hunt” for TikTok, asking, “Isn’t that behavior akin to a hostage situation? A deadline to sell on their terms, or else?” The opening of this “Pandora’s box,” Pan suggested, would make it impossible to trust American intentions. “If someone does this on Day One, who knows what they’re capable of doing on day fifteen?” Global Times, a nationalist state tabloid newspaper, called it a “thorough exhibit of Washington’s domineering behavior and gangster logic,” and predicted that leaders in Beijing would not ratify the agreement.

To Trump’s admirers in Washington, the move against Chinese tech companies is an act of reciprocity, an acknowledgment that China was the first to splinter the Internet into separate domains divided by a digital iron curtain. That sequence, of course, is true—but it does not follow that the right response to China’s fear is to impose additional barriers, instead of demonstrating that Americans have the capacity and the confidence to win on the strength of our competition. David Wertime, the author of the China Watcher newsletter at Politico, likened Trump’s decision to bar Americans from access to WeChat to the discomfiting new era in which America’s failure in the face of the coronavirus pandemic has left its people unwelcome at foreign borders. A U.S. passport “no longer ranks among the world’s most powerful,” he wrote. “A series of moves away from global institutions like the World Health Organization signal an inward retreat, keeping foreign elements out while also trapping Americans further within their homeland.”

Trump’s approach to TikTok and WeChat, like much of his foreign policy, is a gesture of defeatism camouflaged as strength. From his earliest days in politics, he has stood for the closure of the American mind, and the withdrawal of American power and confidence. He exited the Trans-Pacific Partnership, the Paris climate accord, and the Iran nuclear agreement; he ordered American troops in Syria to abandon the Kurds and other American allies, a move that James Mattis regarded as so disloyal and imprudent that he resigned as Secretary of Defense. In the present case, Trump would rather wall off Americans digitally and philosophically than establish guidelines on privacy, free speech, and data collection.

The TikTok fiasco is a product of cronyism, empty bombast, and nationalism—a political recipe that, historically, America has tended to criticize in its opponents. But, as a Pew survey reported this month, global confidence in the United States, especially among allies, is as low as it has been since the measurements began, nearly two decades ago. In the most damning indicator, Trump inspires less confidence than Vladimir Putin and Xi Jinping.