#Big Data Market

Text

Big Data Market Size, Share, Analysis, Forecast, and Growth Trends to 2032: How SMEs Are Leveraging Big Data for Competitive Edge

The Big Data Market was valued at USD 325.4 billion in 2023 and is expected to reach USD 1,035.2 billion by 2032, growing at a CAGR of 13.74% from 2024 to 2032.
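As a quick sanity check on the headline numbers, the growth rate can be recomputed from the two valuations. This is only a sketch: the `cagr` helper is a hypothetical name, and the choice of nine compounding periods (a 2023 base year through 2032) is an assumption, since the report does not state how it counts the forecast window.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over `periods` compounding years."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1.0 / periods) - 1.0


# Figures quoted in the post (USD billion).
start_usd_bn = 325.4   # 2023 valuation
end_usd_bn = 1035.2    # 2032 forecast

# Assumption: nine compounding periods, 2023 -> 2032.
periods = 9

implied_rate = cagr(start_usd_bn, end_usd_bn, periods)
print(f"Implied CAGR: {implied_rate:.2%}")
```

Under that assumption the implied rate comes out close to the quoted 13.74%. Any small gap is probably down to rounding or a different base-year convention in the report.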

The Big Data Market is expanding at a rapid pace as organizations increasingly depend on data-driven strategies to fuel innovation, enhance customer experiences, and streamline operations. Across sectors such as finance, healthcare, retail, and manufacturing, big data technologies are being used to make real-time decisions and predict future trends with greater accuracy.

U.S. Enterprises Double Down on Big Data Investments Amid AI Surge

The Big Data Market is transforming how businesses across the USA and Europe extract value from their information assets. With the rise of cloud computing, AI, and advanced analytics, enterprises are turning raw data into strategic insights, gaining competitive advantage and optimizing resources at scale.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/2817

Key Market Players:

IBM

Microsoft

Oracle

SAP

Amazon Web Services

Google

Cloudera

Teradata

Hadoop

Splunk

SAS

Snowflake

Market Analysis

The Big Data Market is witnessing robust growth fueled by the explosion of unstructured and structured data from IoT devices, digital platforms, and enterprise systems. Companies are moving beyond traditional data warehouses to adopt scalable, cloud-native analytics platforms. While the U.S. remains the innovation leader due to early adoption and tech maturity, Europe is growing steadily, aided by strict data privacy laws and the EU’s push for digital sovereignty.

Market Trends

Surge in demand for real-time analytics and data visualization tools

Integration of AI and machine learning in data processing

Rise of Data-as-a-Service (DaaS) and cloud-based data platforms

Greater focus on data governance and compliance (e.g., GDPR)

Edge computing driving faster, localized data analysis

Industry-specific big data solutions (e.g., healthcare, finance)

Democratization of data access through self-service BI tools

Market Scope

The Big Data Market is evolving into a cornerstone of digital transformation, enabling predictive and prescriptive insights that influence every business layer. Its expanding scope covers diverse use cases and advanced technology stacks.

Predictive analytics driving strategic decision-making

Real-time dashboards improving operational agility

Cross-platform data integration ensuring end-to-end visibility

Cloud-based ecosystems offering scalability and flexibility

Data lakes supporting large-scale unstructured data storage

Cybersecurity integration to protect data pipelines

Personalized marketing and customer profiling tools

Forecast Outlook

The Big Data Market is on an upward trajectory with growing investments in AI, IoT, and 5G technologies. As the volume, velocity, and variety of data continue to surge, organizations are prioritizing robust data architectures and agile analytics frameworks. In the USA, innovation will drive market maturity, while in Europe, compliance and ethical data use will shape the landscape. Future progress will center on building data-first cultures and unlocking business value with advanced intelligence layers.

Access Complete Report: https://www.snsinsider.com/reports/big-data-market-2817

Conclusion

From predictive maintenance in German factories to real-time financial insights in Silicon Valley, the Big Data Market is redefining what it means to be competitive in a digital world. Organizations that harness the power of data today will shape the industries of tomorrow. The momentum is clear: big data is no longer a back-end tool; it is a front-line business driver.

Related Reports:

Discover trends shaping the digital farming industry across the United States

Explore top data pipeline tools driving the US market growth

About Us:

SNS Insider is one of the leading market research and consulting agencies worldwide. Our aim is to give clients the knowledge they need to function in changing circumstances. To provide current, accurate market data, consumer insights, and opinions that let you make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44-20 3290 5010 (UK)

0 notes

Text

Big Data Market Expansion: How Organizations Are Leveraging Data Analytics

0 notes

Text

Big Data Market Size, Share, Price, Trends, Report and Forecast 2023-2028

Big data refers to large, diverse sets of data that are growing at an exponential rate. Three factors define it: the volume of data, the velocity or speed with which it is created and collected, and the variety or scope of the data points covered.

0 notes

Text

got jumpscared by these ‘cus it looks like he is a junior analyst at an investment bank

from mclaren’s ig

#he would be lethal on a Bloomberg terminal#his data-backed rizz would have no limits#that’s why his forehead’s so big it’s full of analytical secrets#Oscar piastri#op81#you don’t get him he’s just at one with capital markets like a gen z dollar sign megamind#wiz.yaps

412 notes

Text

Just saw an ad for an "AI powered" fertility hormone tracker and I'm about to go full Butlerian jihad.

#in reality this gadget is probably not doing anything other fertility trackers aren't already#the AI label is just marketing bs#like it always is#anyway ladies you don't need to hand your cycle data over to Big Tech#paper charts work just fine

9 notes

Text

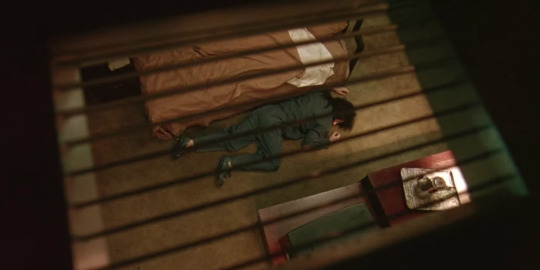

Oldboy was a Warning of Today's Big Data Dystopian Future

I thought I would finish today by showing stills from one of my favorite arthouse movies of the 21st century. A disclaimer: Oldboy is not my favorite arthouse movie, nor is it my favorite Chanwook Park movie. One of these days I'll send him up in a subsequent post.

2003

For those of you who do not know, Oldboy is the story of a scoundrel named Oh Dae Su who is kidnapped without warning and without knowledge of who his captor is.

Struggling to cope with his captivity for fifteen years, he is suddenly released with nothing but the obsessions that kept him alive--Revenge against the person who held him captive, and Curiosity as to why he was kidnapped.

But freedom becomes complicated for Oh Dae Su when he realizes events are being manipulated around him. The phone he is given mysteriously belongs to his captor. The clothes are not his. And he begins to suspect the young woman who decides to take him in.

There is a ton going on in this complex plot. Oh Dae Su does not know what he did to cause his captivity, something all private citizens should fear about the complex legal and digital world that makes decisions for us.

Oh Dae Su spends most of his time in captivity practicing to fight his captors and preparing his escape. We only find out halfway through the film that every action he took was under constant surveillance. His efforts would have been for nothing. He was as powerless in the confines of his cell as he was in the real world.

Oh Dae Su even fails in captivity to take his own life. He admits, while trying to dig through a wall, that even if he falls to his death he will be free. Each time he is rescued from his attempt at self harm.

All of this is to say, a theme of totalitarian control and surveillance runs throughout Oldboy. Even the clothes he wears have been given to him by his abductor, and they are loaded with bugs so that they can know everything he says and does.

Even Oh's decisions are in doubt as he comes to question whether he has been either drugged or hypnotized.

The environment in Oldboy resembles today's consumer reality. Our phones that we use every minute collect and store personal data that is used to market products to us. Worse, that data is subsequently used to direct product marketing so that the things we want are conditioned into us. Even the clothes we wear and the food we eat are someone else's choice.

So was Chanwook Park trying to warn us about the nightmare of today from 22 years ago?

Not exactly. A lot has been explained about Park's motivations while making Oldboy, more than I have even read.

Chanwook Park has said that Oldboy was only partly an adaptation of the source graphic novel by Garon Tsuchiya. On a deeper level, Park has acknowledged that he meant to retell the play Oedipus Rex using the hypnosis motif that Tsuchiya employed in the manga.

But Park also acknowledged his influences were modern and postmodern novels, including the works of Franz Kafka.

Franz Kafka's writings are surreal dark comedies, but they are presented with bleak cynicism toward the social bureaucracy of law, which was his first vocation.

It follows that Chanwook Park did not write the script for Oldboy thinking of the risks modern technology would pose to us. Instead, he was writing about character motivations that were centuries old.

We learn by the end of Oldboy that his captor had his own plan for revenge against Oh Dae Su for some past wrong. But the unfolding of his revenge plot could be seen as the movie's only flaw, in that it seems nihilistic and purposeless. By the end, the villain gets nothing out of it and still suffers due to his previous, tragic loss.

The villain's revenge is entirely nihilistic.

This was Chanwook Park's stated intent for the revenge theme in Oldboy. But it is also a comment on the emptiness of totalitarianism, whether state sponsored or corporate. While it is all dangerous, in the end the impulse to dominate others is stale and hollow for the perpetrator of these violations.

There is much more to be said about Chanwook Park's filmography in all its richness. But for the topic of this blog post, the similarity between the movie and reality comes essentially from the fact that people have had the same dark motives since before the conception of Big Data.

There is something more to be said about how Oldboy, and the filmography of Nora Ephron, undermine some of the essential theories of postmodernism by Jean Baudrillard. But that is for another day.

#movies#south korea#kafka#big data#surveillance#totalitarianism#oldboy#fashion#digital products#digital marketing#digital manipulation#hypnosis#postmodernism#literature#books

5 notes

Text

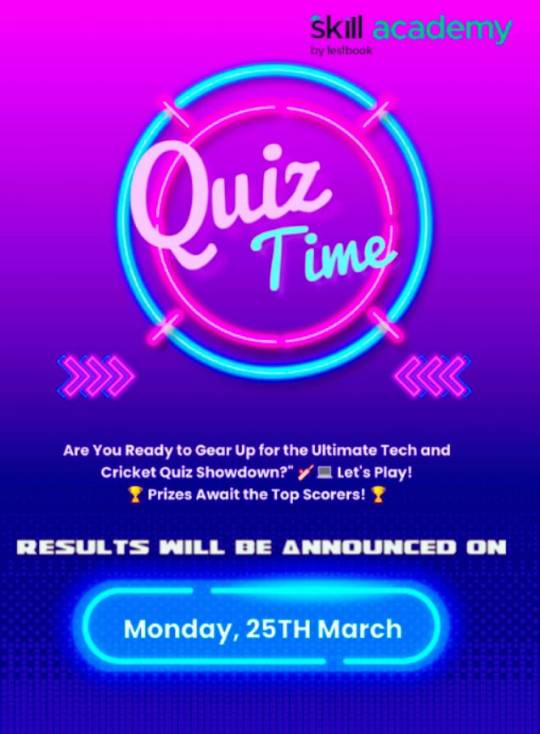

🚀 Join the Ultimate Engineering College Quiz Challenge! 🚀🏏

🌟 Test Your IPL Knowledge and Full Stack Development Skills! 🌟

🏆 Prizes Await the Top Scorers! 🏆

Ready to flaunt your smarts? Dive into our dynamic quiz featuring 10 IPL questions and 10 Full Stack Development questions. From cricket trivia to coding conundrums, we've got it all!

🏆 Prizes:

🥇 1st Place: ₹2000 Cash Prize

🥈 2nd Place: Official Merchandise

🥉 3rd Place: Electronic Merchandise

🔗 Ready for the Challenge? Click here to start the quiz and seize your chance to win big: https://forms.gle/xGrMcnar3xJHS7TS9 🚀

Let the games begin! 🎉

2 notes

Text

Czarina-VM, study of Microsoft tech stack history. Preview 1

Write down study notes about the evolution of MS-DOS, QuickBASIC (from IBM Cassette BASIC to the last officially Microsoft QBasic or some early Visual Basic), "Batch" Command-Prompt, PowerShell, Windows editions pathing from "2.11 for 386" to Windows "ME" (upgraded from a "98 SE" build though) with Windows "3.11 for Workgroups" and the other 9X ones in-between, Xenix, Microsoft Bob with Great Greetings expansion, a personalized mockup Win8 TUI animated flex box panel board and other historical (or relatively historical, with a few ground-realism & critical takes along the way) Microsoft matters here and a couple development demos + big tech opinions about Microsoft too along that studious pathway.

( Also, don't forget to link down the interactive-use sessions with 86box, DOSbox X & VirtualBox/VMware as video when it is indeed ready )

Yay for the four large tags below, and farewell.

#youtube#technology#retro computing#maskutchew#microsoft#big tech#providing constructive criticisms of both old and new Microsoft products and offering decent ethical developer consumer solutions#MVP deliveries spyware data privacy unethical policies and bad management really strikes the whole market down from all potential LTS gains#chatGPT buyout with Bing CoPilot integrations + Windows 8 Metro dashboard crashes being more examples of corporate failings#16-bit WineVDM & 32-bit Win32s community efforts showing the working class developers do better quality maintenance than current MS does

5 notes

Text

Big Data Market: Innovations Powering AI and Machine Learning

0 notes

Text

Week 5 blog post "Saga of Big Data 🙃"

After watching "The Legal Side of Big Data" and Maciej Ceglowski's talk, and reading "The Internet's Original Sin", I was intrigued by the complexities surrounding the use of big data in today's business landscape. As a business owner myself, I realize that harnessing the power of big data can unlock numerous opportunities for growth and innovation. However, there are crucial aspects that businesses must be acutely aware of when using big data.

First and foremost, data privacy and security must be at the forefront of any big data strategy. As businesses collect and analyze vast amounts of consumer data, they must ensure strict adherence to applicable laws and regulations. Compliance with data protection laws such as GDPR, CCPA, or other relevant regional laws is not just an ethical responsibility but also vital for avoiding potential legal repercussions and preserving consumer trust.

Transparency is another critical aspect that businesses must prioritize. Consumers have the right to know how their data is being used, stored, and shared. Clear and concise privacy policies and terms of use should be provided, ensuring that consumers can make informed decisions about their data's usage.

Furthermore, businesses should guard against using big data to engage in discriminatory practices. The insights derived from big data must be utilized responsibly and without any bias that could harm certain demographic groups or individuals. It's essential to continuously monitor data usage and algorithmic decisions to avoid reinforcing harmful stereotypes.

On the consumers' side, awareness of the implications of sharing their data is paramount. They should be mindful of what data they provide to businesses and exercise caution when consenting to data usage. Staying informed about privacy settings and exercising their rights to access, rectify, or delete personal data empowers consumers to have control over their information.

As for balancing the opportunities and threats of big data, a multi-faceted approach is necessary. Collaboration between businesses, policymakers, and consumer advocacy groups is key to developing comprehensive regulations that foster innovation while safeguarding privacy. Encouraging ethical data practices and responsible use of big data should be incentivized, and non-compliance should be met with appropriate consequences.

Additionally, promoting data literacy among the general public can foster a better understanding of the potential benefits and risks associated with big data. By educating consumers about data collection practices, they can make more informed decisions about sharing their information and demand greater accountability from businesses.

In conclusion, the world of big data offers immense potential for businesses, but it also poses significant challenges in terms of privacy, security, and ethics. By being aware of these considerations, businesses can navigate the legal complexities and build trust with their customers. Simultaneously, consumers must stay vigilant about their data and support initiatives that strike a balance between seizing the opportunities and mitigating the threats of big data. Only through collective efforts and responsible practices can we harness the full potential of big data while safeguarding individual rights and societal welfare.

2 notes

Text

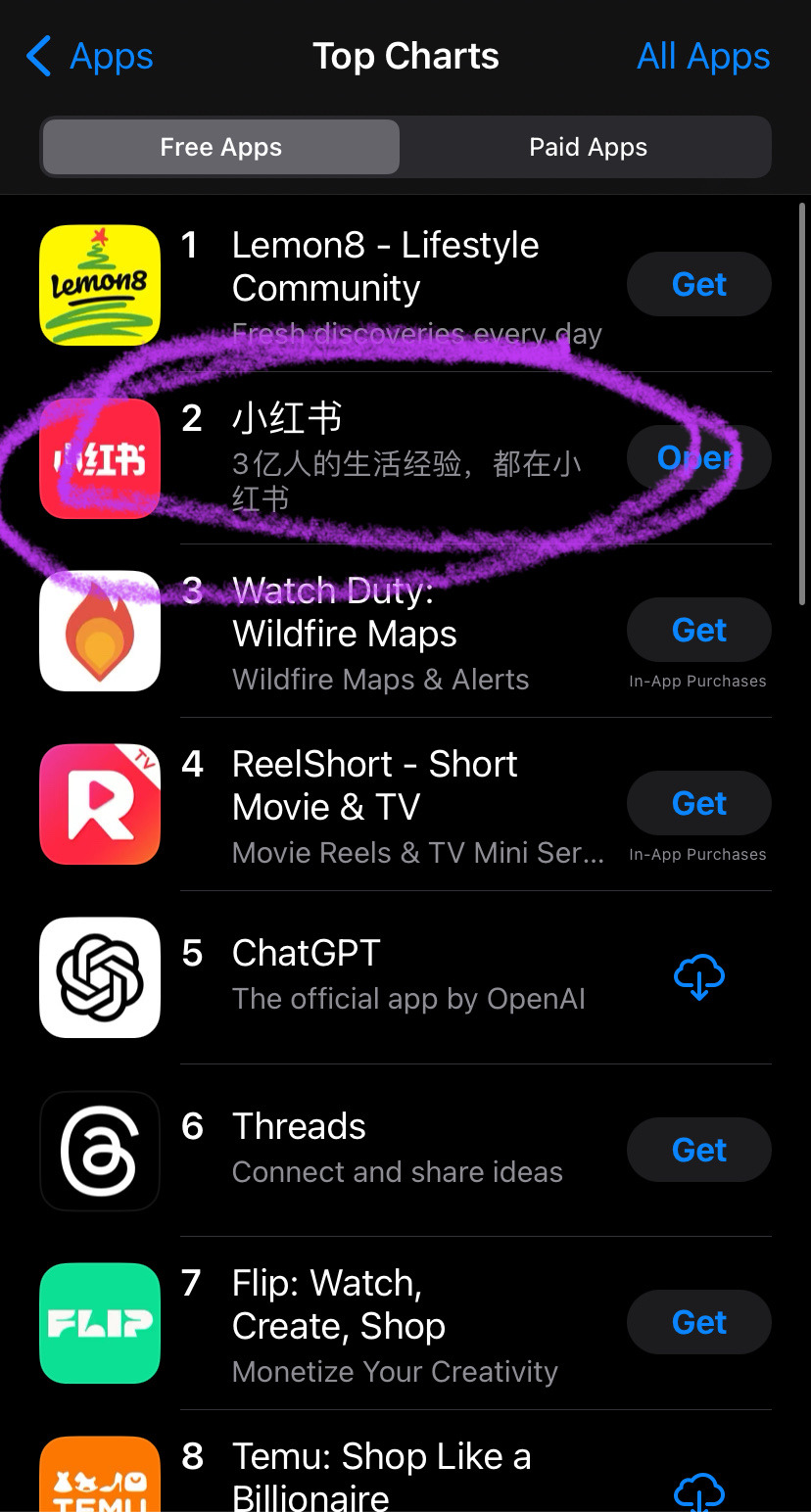

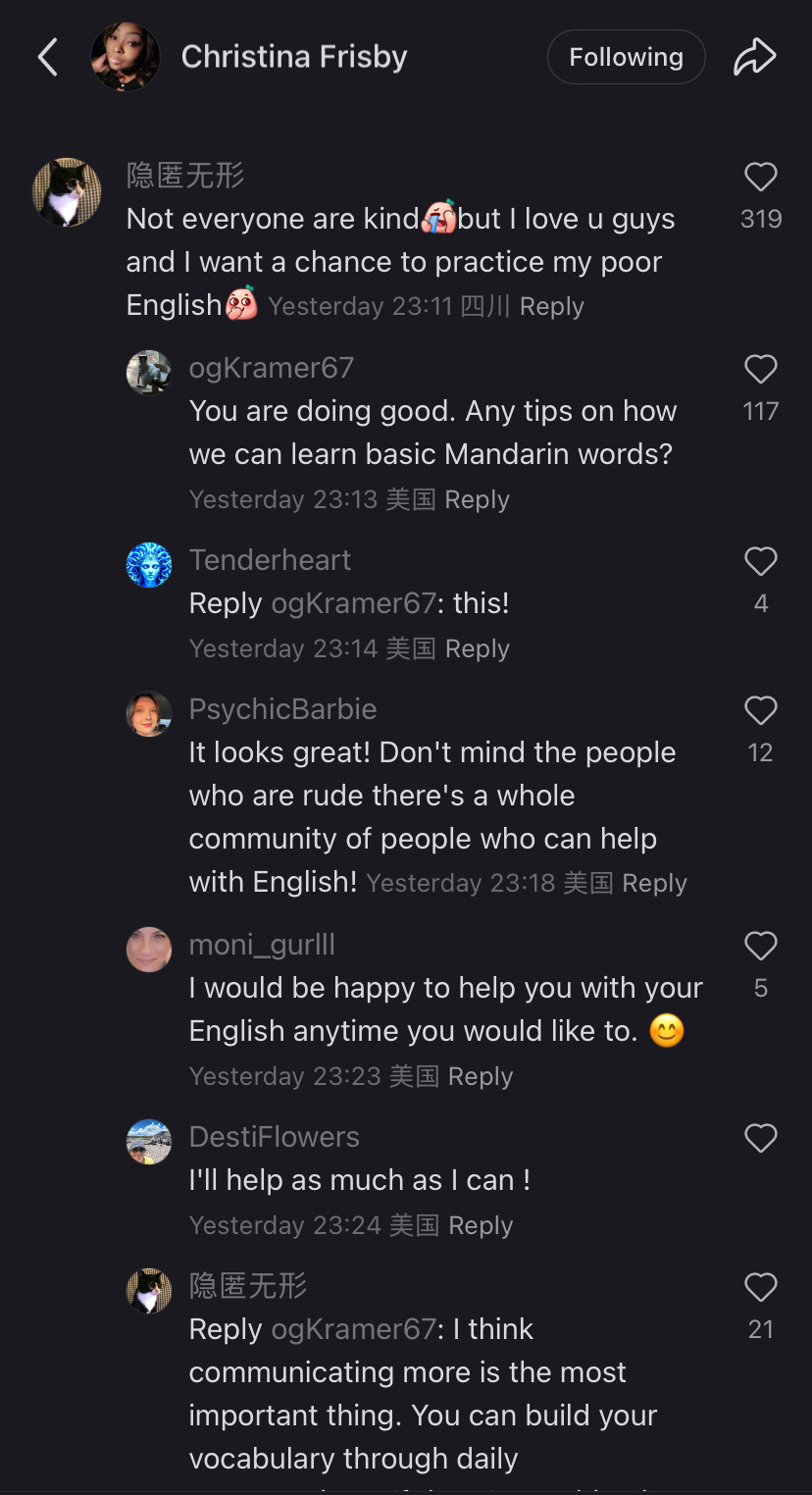

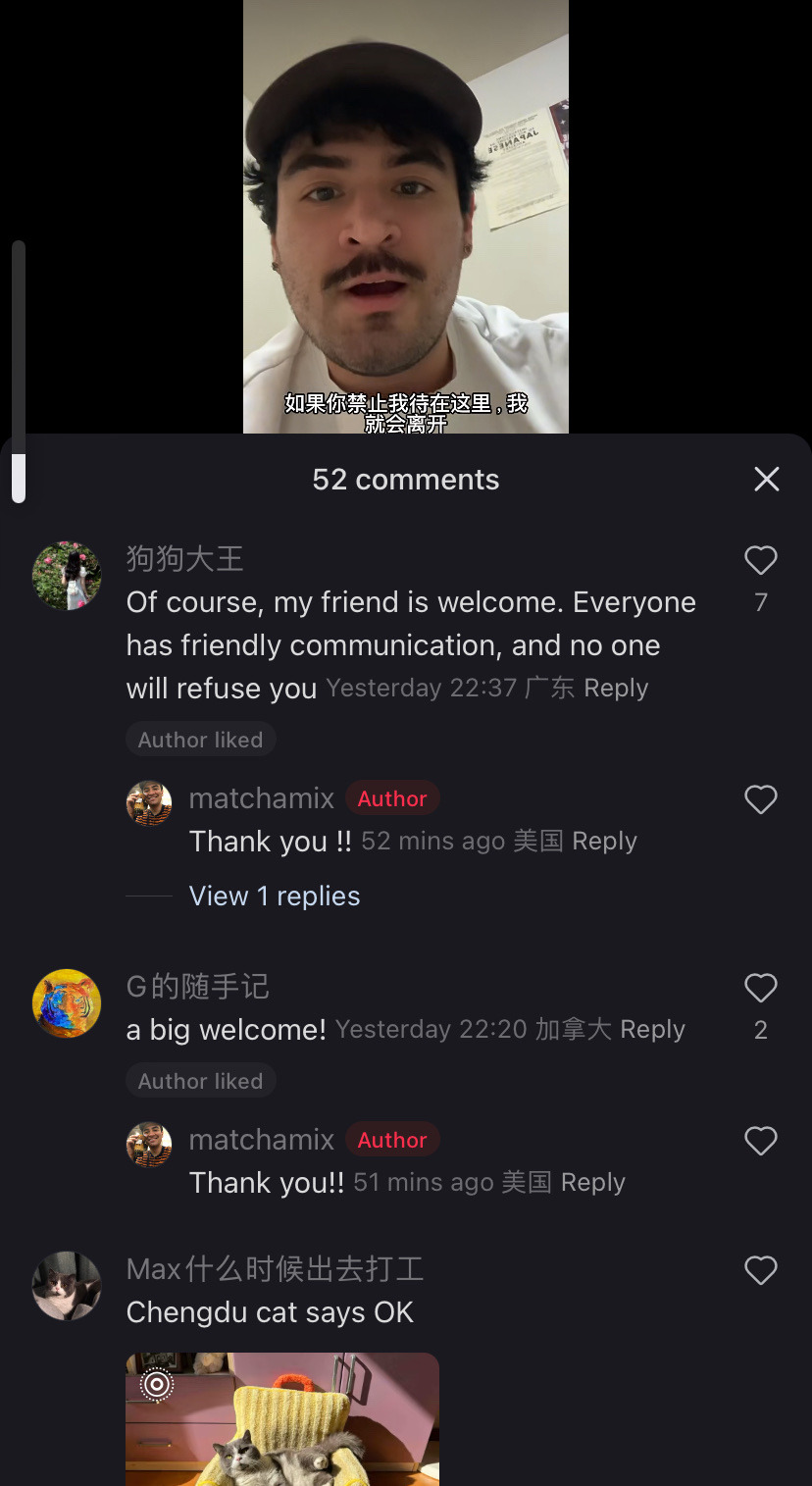

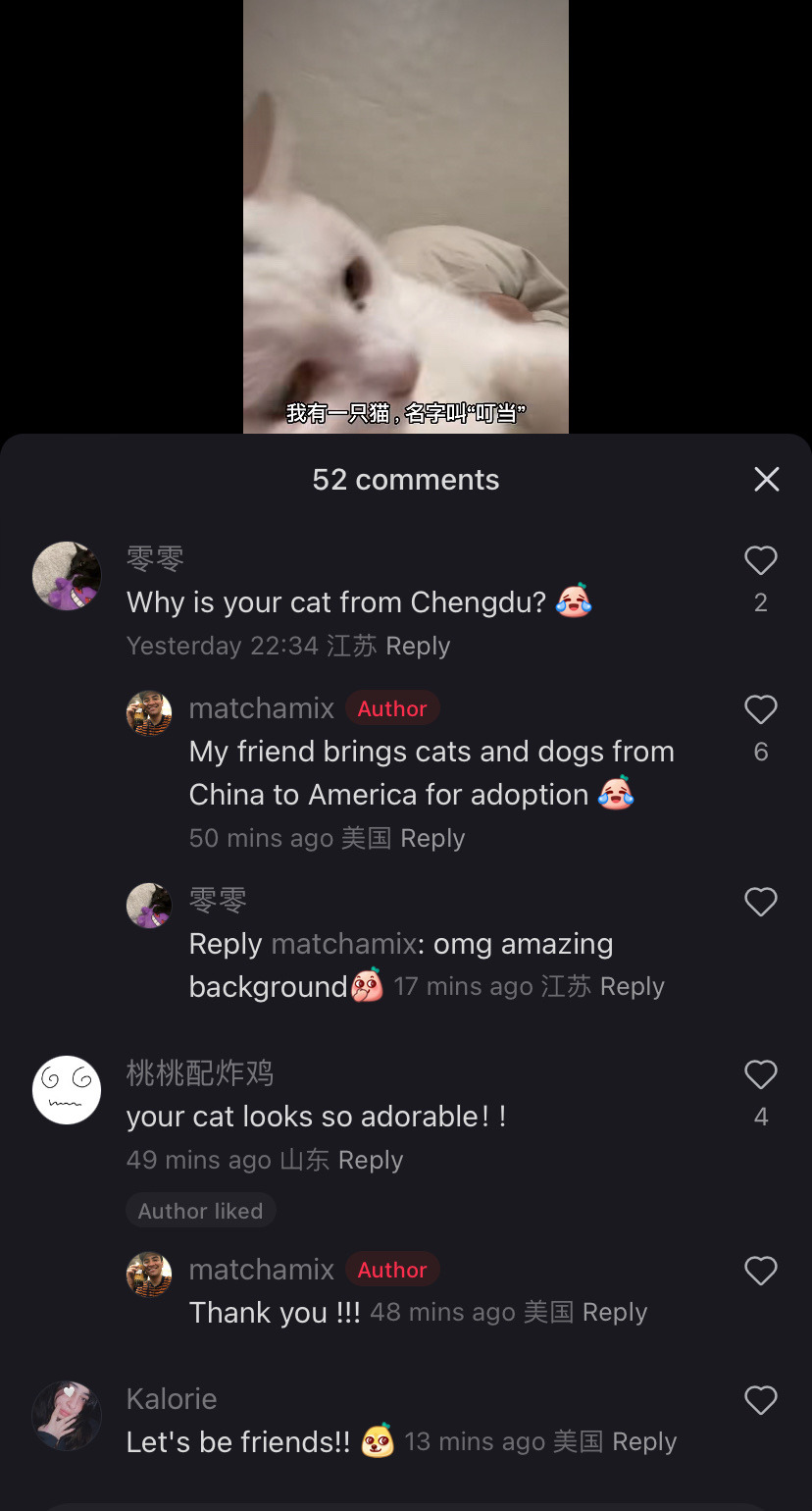

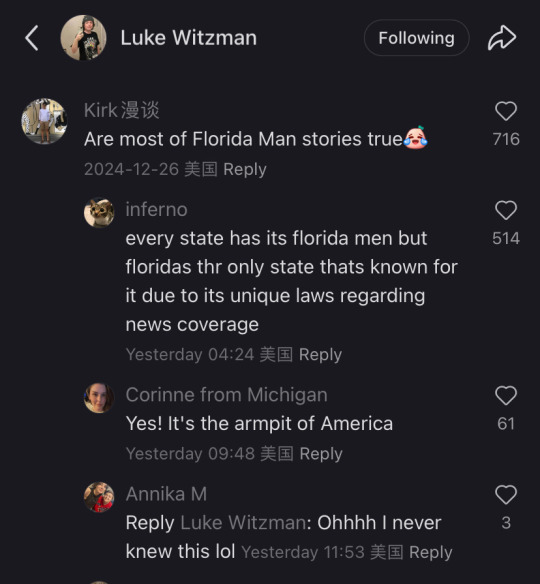

Nope now it’s at the point that i’m shocked that people off tt don’t know what’s going down. I have no reach but i’ll sum it up anyway.

SCOTUS is hearing arguments on the constitutionality of the ban, as tiktok and creators argue that it is a violation of our first amendment rights to free speech, freedom of the press and freedom to assemble.

SCOTUS: tiktok bad, big security concern because china bad!

Tiktok lawyers: if china is such a concern why are you singling us out? Why not SHEIN or temu which collect far more information and are less transparent with their users?

SCOTUS (out loud): well you see we don’t like how users are communicating with each other, it’s making them more anti-american and china could disseminate pro china propaganda (get it? They literally said they do not like how we Speak or how we Assemble. Independent journalists reach their audience on tt meaning they have Press they want to suppress)

Tiktok users: this is fucking bullshit i don’t want to lose this community what should we do? We don’t want to go to meta or x because they both lobbied congress to ban tiktok (free market capitalism amirite? Paying off your local congressmen to suppress the competition is totally what the free market is about) but nothing else is like TikTok

A few users: what about xiaohongshu? It’s the Chinese version of tiktok (not quite, douyin is the chinese tiktok but it’s primarily for younger users so xiaohongshu was chosen)

16 hours later:

Tiktok as a community has chosen to collectively migrate TO a chinese owned app that is purely in Chinese out of utter spite and contempt for meta/x and the gov that is backing them.

My fyp is a mix of “i would rather mail memes to my friends than ever return to instagram reels” and “i will xerox my data to xi jinping myself i do not care i share my ss# with 5 other people anyway” and “im just getting ready for my day with my chinese made coffee maker and my Chinese made blowdryer and my chinese made clothing and listening to a podcast on my chinese made phone and get in my car running on chinese manufactured microchips but logging into a chinese social media? Too much for our gov!” etc.

So the government was scared that tiktok was creating a sense of class consciousness and tried to kill it but by doing so they sent us all to xiaohongshu. And now? Oh it’s adorable seeing this gov-manufactured divide be crossed in such a way.

This is adorable and so not what they were expecting. Im sure they were expecting a reluctant return to reels and shorts to fill the void but tiktokers said fuck that, we will forge connections across the world. Who you tell me is my enemy i will make my friend. That’s pretty damn cool.

#tiktok ban#xiaohongshu#the great tiktok migration of 2025#us politics#us government#scotus#ftr tiktok is owned primarily by private investors and is not operated out of china#and all us data is stored on servers here in the us#tiktok also employs 7000 us employees to maintain the US side of operations#like they’re just lying to get us to shut up about genocide and corruption#so fuck it we’ll go spill all the tea to ears that wanna hear it cause this country is not what its cracked up to be#we been lied to and the rest of the world has been lied to#if scotus bans it tomorrow i can’t wait for their finding out#rednote

42K notes

Text

Dell unveils Nvidia Blackwell-based AI acceleration platform

New Post has been published on https://thedigitalinsider.com/dell-unveils-nvidia-blackwell-based-ai-acceleration-platform/

Dell Technologies used the Dell Technologies World in Las Vegas to announce the latest generation of AI acceleration servers which come equipped with Nvidia’s Blackwell Ultra GPUs.

The systems claim to deliver up to four times faster AI training capabilities compared to previous generations, as Dell expands its AI Factory partnership with Nvidia amid intense competition in the enterprise AI hardware market.

The servers arrive as organisations move from experimental AI projects to production-scale implementations, creating demand for more sophisticated computing infrastructure.

The new lineup features air-cooled PowerEdge XE9780 and XE9785 servers, designed for conventional data centres, and liquid-cooled XE9780L and XE9785L variants, optimised for whole-rack deployment.

The advanced systems support configurations with up to 192 Nvidia Blackwell Ultra GPUs with direct-to-chip liquid cooling, expandable to 256 GPUs per Dell IR7000 rack. “We’re on a mission to bring AI to millions of customers around the world,” said Michael Dell, the eponymous chairman and chief executive officer. “Our job is to make AI more accessible. With the Dell AI Factory with Nvidia, enterprises can manage the entire AI lifecycle in use cases, from deployment to training, at any scale.”

Dell’s self-designation as “the world’s top provider of AI-centric infrastructure” appears calculated as companies try to deploy AI and navigate technical hurdles.

Critical assessment of Dell’s AI hardware strategy

While Dell’s AI acceleration hardware advancements appear impressive on the basis of tech specs, several factors will ultimately determine their market impact. The company has withheld pricing information for these high-end systems, which will undoubtedly represent substantial capital investments for organisations considering deployment.

The cooling infrastructure alone, particularly for the liquid-cooled variants, may need modifications to data centres for many potential customers, adding complexity and cost beyond the server hardware itself.

Industry observers note that Dell faces intensifying competition in the AI hardware space from companies like Super Micro Computer, which has aggressively targeted the AI server market with similar offerings.

However, Super Micro has recently encountered production cost challenges and margin pressure, potentially creating an opening for Dell if it can deliver competitive pricing.

Jensen Huang, founder and CEO of Nvidia, emphasised the transformative potential of these systems: “AI factories are the infrastructure of modern industry, generating intelligence to power work in healthcare, finance and manufacturing. With Dell Technologies, we’re offering the broadest line of Blackwell AI systems to serve AI factories in clouds, enterprises and at the edge.”

Comprehensive AI acceleration ecosystem

Dell’s AI acceleration strategy extends beyond server hardware to encompass networking, storage, and software components:

The networking portfolio now includes the PowerSwitch SN5600 and SN2201 switches (part of Nvidia’s Spectrum-X platform) and Nvidia Quantum-X800 InfiniBand switches, capable of up to 800 gigabits per second throughput with Dell ProSupport and Deployment Services.

The Dell AI Data Platform has received upgrades to enhance data management for AI applications, including a denser ObjectScale system with Nvidia BlueField-3 and Spectrum-4 networking integrations.

In software, Dell offers the Nvidia AI Enterprise software platform directly, featuring Nvidia NIM, NeMo microservices, and Blueprints to streamline AI development workflows.

The company also introduced Managed Services for its AI Factory with Nvidia, providing monitoring, reporting, and maintenance to help organisations address expertise gaps – skilled professionals remain in short supply.

Availability timeline and market implications

Dell’s AI acceleration platform rollout follows a staggered schedule throughout 2025:

Air-cooled PowerEdge XE9780 and XE9785 servers with Nvidia HGX B300 GPUs will be available in the second half of 2025

The liquid-cooled PowerEdge XE9780L and XE9785L variants are expected later this year

The PowerEdge XE7745 server with Nvidia RTX Pro 6000 Blackwell Server Edition GPUs will launch in July 2025

The PowerEdge XE9712 featuring GB300 NVL72 will arrive in the second half of 2025

Dell plans to support Nvidia’s Vera CPU and Vera Rubin platform, signalling a longer-term commitment to expanding its AI ecosystem beyond this product lineup.

Strategic analysis of the AI acceleration market

Dell’s push into AI acceleration hardware reflects a strategy change to capitalise on the artificial intelligence boom and to use its established enterprise customer relationships.

As organisations realise the complexity and expense of implementing AI at scale, Dell appears to be positioning itself as a comprehensive solution provider rather than merely a hardware vendor.

However, the success of Dell’s AI acceleration initiative will ultimately depend on how effectively its systems deliver measurable business value.

Organisations investing in high-end infrastructure will demand operational improvements and competitive advantages that justify the significant capital expenditure.

The partnership with Nvidia provides Dell access to next-gen AI accelerator technology, but also creates dependency on Nvidia’s supply chain and product roadmap. Given persistent chip shortages and extraordinary demand for AI accelerators, Dell’s ability to secure adequate GPU allocations will prove crucial for meeting customer expectations.

#2025#accelerators#ai#ai & big data expo#AI development#AI hardware market#AI systems#ai training#air#amp#Analysis#applications#artificial#Artificial Intelligence#assessment#automation#Big Data#blackwell#Business#california#CEO#change#chip#Cloud#clouds#Companies#competition#complexity#comprehensive#computer

0 notes