#Amazon RedShift Training Online


Amazon Redshift Courses Online | Amazon Redshift Certification Training

What is Amazon (AWS) Redshift? - Cloud Data Warehouse

Amazon Redshift is a fully managed cloud data warehouse service provided by Amazon Web Services (AWS). It is designed for large-scale data storage and analysis, which makes it a strong fit for businesses that need to manage and analyse vast amounts of data efficiently.

Key Features of Amazon Redshift

Scalability:

Redshift allows you to scale your data warehouse up or down based on your needs. You can start with a small amount of storage and expand as your data grows without significant downtime or complexity.

Performance:

Redshift uses columnar storage and advanced compression techniques, which optimize query performance. It also utilizes parallel processing, enabling faster query execution.
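To make the link between columnar storage and compression more concrete, here is a minimal Python sketch. It is an illustration only: the sample `rows` and the `run_length_encode` helper are hypothetical, and run-length encoding is just one simple scheme that benefits from a column layout. It is not a description of Redshift's internal storage format.

```python
from itertools import groupby

# Hypothetical sample table, stored row by row.
rows = [
    ("2024-01-01", "IN", 120),
    ("2024-01-01", "IN", 80),
    ("2024-01-01", "US", 200),
    ("2024-01-02", "US", 50),
]

# Columnar layout: one list per column instead of one tuple per row.
columns = [list(col) for col in zip(*rows)]

def run_length_encode(values):
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]

# Values within a single column tend to repeat, so they compress well.
encoded_dates = run_length_encode(columns[0])
encoded_countries = run_length_encode(columns[1])
```

Because each column holds values of one kind, repeated values sit next to each other, which is what lets a simple encoder like this shrink them.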

Fully Managed:

As a fully managed service, Redshift takes care of administrative tasks such as hardware provisioning, setup, configuration, monitoring, backups, and patching. This allows users to focus on their data and queries rather than maintenance.

Integration with AWS Services:

Redshift integrates seamlessly with other AWS services like Amazon S3 (for storage), Amazon RDS (for relational databases), Amazon EMR (for big data processing), and Amazon QuickSight (for business intelligence and visualization).

Security:

Redshift provides robust security features, including encryption at rest and in transit, VPC (Virtual Private Cloud) for network isolation, and IAM (Identity and Access Management) for fine-grained access control.

Cost-Effective:

Redshift offers pay-as-you-go (on-demand) pricing as well as reserved node pricing, which can significantly reduce costs. With on-demand pricing, users pay only for the resources they use; the reserved option provides discounts in exchange for longer-term commitments.

Advanced Query Features:

Redshift supports complex queries and joins, window functions, and nested queries. It is compatible with standard SQL, making it accessible for users familiar with SQL-based querying.
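As a rough illustration of a window function, the sketch below computes a running total per region. It runs against an in-memory SQLite database (version 3.25 or later) purely as a local stand-in, so the table name `sales`, its columns, and the sample data are all assumptions, and Redshift's own SQL dialect may differ in details.

```python
import sqlite3

# In-memory SQLite database used only as a local stand-in for a warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, month TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("north", "2024-01", 100),
        ("north", "2024-02", 150),
        ("south", "2024-01", 70),
        ("south", "2024-02", 30),
    ],
)

# Window function: running total of amount within each region, by month.
query = """
    SELECT region, month, amount,
           SUM(amount) OVER (
               PARTITION BY region
               ORDER BY month
               ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW
           ) AS running_total
    FROM sales
    ORDER BY region, month
"""
running_totals = conn.execute(query).fetchall()
conn.close()
```

Unlike a plain `GROUP BY`, the window function keeps every input row and adds the aggregate alongside it.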

Data Sharing:

Redshift allows data sharing between different Redshift clusters without the need to copy or move data, enabling seamless collaboration and data access across teams and departments.

Use Cases for Amazon Redshift

Business Intelligence and Reporting: Companies use Redshift to run complex queries and generate reports that provide insights into business operations and performance.

Data Warehousing: Redshift serves as a central repository where data from various sources can be consolidated, stored, and analysed.

Big Data Analytics: Redshift can handle petabyte-scale data analytics, making it suitable for big data applications.

ETL Processes: Redshift is often used in ETL (Extract, Transform, and Load) processes to clean, transform, and load data into the warehouse for further analysis.
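The extract, transform, and load steps mentioned above can be sketched in a few lines of Python. This is a toy example under stated assumptions: the CSV content, the `transform` helper, and the `orders` table are hypothetical, and SQLite stands in for the warehouse so the snippet runs locally without an AWS account.

```python
import csv
import io
import sqlite3

# Extract: hypothetical raw export with messy whitespace and one bad row.
raw_csv = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,not-a-number
"""

def transform(record):
    """Normalize one raw record; return None if it cannot be cleaned."""
    try:
        amount = float(record["amount"])
    except ValueError:
        return None
    return (int(record["order_id"]), record["customer"].strip().lower(), amount)

extracted = list(csv.DictReader(io.StringIO(raw_csv)))
cleaned = [row for row in map(transform, extracted) if row is not None]

# Load: SQLite stands in for the warehouse table here.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", cleaned)
loaded_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
conn.close()
```

Rows that fail validation are dropped in the transform step, so only clean records reach the load step.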

Visualpath is one of the best Amazon Redshift online training institutes in Hyderabad, providing live instructor-led online classes delivered by industry experts. It also offers an AWS Redshift course in Hyderabad. Enroll now! Contact us: +91-9989971070

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Blog link: https://visualpathblogs.com/

Visit us https://visualpath.in/amazon-redshift-online-training.html

#Amazon Redshift Online Training#Redshift Training in Hyderabad#AWS Redshift Online Training Institute in Hyderabad#Amazon Redshift Certification Online Training#AWS Redshift training Courses in Hyderabad#Amazon Redshift Courses Online#Amazon Redshift Training in Hyderabad#Amazon RedShift Training#Amazon RedShift Training in Ameerpet#Amazon RedShift Training Online#AWS Redshift Training in Hyderabad


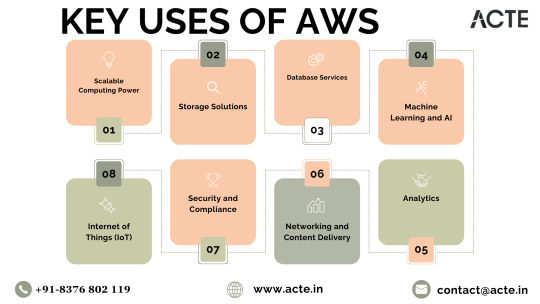

Navigating the Cloud: Unleashing Amazon Web Services' (AWS) Impact on Digital Transformation

In the ever-evolving realm of technology, cloud computing stands as a transformative force, offering unparalleled flexibility, scalability, and cost-effectiveness. At the forefront of this paradigm shift is Amazon Web Services (AWS), a comprehensive cloud computing platform provided by Amazon.com. For those eager to elevate their proficiency in AWS, specialized training initiatives like AWS Training in Pune offer invaluable insights into maximizing the potential of AWS services.

Exploring AWS: A Catalyst for Digital Transformation

As we traverse the dynamic landscape of cloud computing, AWS emerges as a pivotal player, empowering businesses, individuals, and organizations to fully embrace the capabilities of the cloud. Let's delve into the multifaceted ways in which AWS is reshaping the digital landscape and providing a robust foundation for innovation.

Decoding the Heart of AWS

AWS in a Nutshell: Amazon Web Services serves as a robust cloud computing platform, delivering a diverse range of scalable and cost-effective services. Tailored to meet the needs of individual users and large enterprises alike, AWS acts as a gateway, unlocking the potential of the cloud for various applications.

Core Function of AWS: At its essence, AWS is designed to offer on-demand computing resources over the internet. This revolutionary approach eliminates the need for substantial upfront investments in hardware and infrastructure, providing users with seamless access to a myriad of services.

AWS Toolkit: Key Services Redefined

Empowering Scalable Computing: Through Elastic Compute Cloud (EC2) instances, AWS furnishes virtual servers, enabling users to dynamically scale computing resources based on demand. This adaptability is paramount for handling fluctuating workloads without the constraints of physical hardware.

Versatile Storage Solutions: AWS presents a spectrum of storage options, such as Amazon Simple Storage Service (S3) for object storage, Amazon Elastic Block Store (EBS) for block storage, and Amazon S3 Glacier for long-term archival. These services deliver robust and scalable solutions to address diverse data storage needs.

Streamlining Database Services: Managed database services like Amazon Relational Database Service (RDS) and Amazon DynamoDB (NoSQL database) streamline efficient data storage and retrieval. AWS simplifies the intricacies of database management, ensuring both reliability and performance.

AI and Machine Learning Prowess: AWS empowers users with machine learning services, exemplified by Amazon SageMaker. This facilitates the seamless development, training, and deployment of machine learning models, opening new avenues for businesses integrating artificial intelligence into their applications. To master AWS intricacies, individuals can leverage the Best AWS Online Training for comprehensive insights.

In-Depth Analytics: Amazon Redshift and Amazon Athena play pivotal roles in analyzing vast datasets and extracting valuable insights. These services empower businesses to make informed, data-driven decisions, fostering innovation and sustainable growth.

Networking and Content Delivery Excellence: AWS services, such as Amazon Virtual Private Cloud (VPC) for network isolation and Amazon CloudFront for content delivery, ensure low-latency access to resources. These features enhance the overall user experience in the digital realm.

Commitment to Security and Compliance: With an unwavering emphasis on security, AWS provides a comprehensive suite of services and features to fortify the protection of applications and data. Furthermore, AWS aligns with various industry standards and certifications, instilling confidence in users regarding data protection.

Championing the Internet of Things (IoT): AWS IoT services empower users to seamlessly connect and manage IoT devices, collect and analyze data, and implement IoT applications. This aligns seamlessly with the burgeoning trend of interconnected devices and the escalating importance of IoT across various industries.

Closing Thoughts: AWS, the Catalyst for Transformation

In conclusion, Amazon Web Services stands as a pioneering force, reshaping how businesses and individuals harness the power of the cloud. By providing a dynamic, scalable, and cost-effective infrastructure, AWS empowers users to redirect their focus towards innovation, unburdened by the complexities of managing hardware and infrastructure. As technology advances, AWS remains a stalwart, propelling diverse industries into a future brimming with endless possibilities. The journey into the cloud with AWS signifies more than just migration; it's a profound transformation, unlocking novel potentials and propelling organizations toward an era of perpetual innovation.


Data Engineering Course in Hyderabad | AWS Data Engineer Training

Overview of AWS Data Modeling

Data modeling in AWS involves designing the structure of your data so that you can store, manage, and analyse it effectively within the Amazon Web Services (AWS) ecosystem. AWS provides various services and tools for data modeling, depending on your specific requirements and use cases. Here's an overview of the key components and considerations in AWS data modeling:

Understanding Data Requirements: Begin by understanding your data requirements, including the types of data you need to store, the volume of data, the frequency of data updates, and the anticipated usage patterns.

Selecting the Right Data Storage Service: AWS offers a range of data storage services suitable for different data modeling needs, including:

Amazon S3 (Simple Storage Service): A scalable object storage service ideal for storing large volumes of unstructured data such as documents, images, and logs.

Amazon RDS (Relational Database Service): Managed relational databases supporting popular database engines like MySQL, PostgreSQL, Oracle, and SQL Server.

Amazon Redshift: A fully managed data warehousing service optimized for online analytical processing (OLAP) workloads.

Amazon DynamoDB: A fully managed NoSQL database service providing fast and predictable performance with seamless scalability.

Amazon Aurora: A high-performance relational database compatible with MySQL and PostgreSQL, offering features like high availability and automatic scaling.

Schema Design: Depending on the selected data storage service, design the schema to organize and represent your data efficiently. This involves defining tables, indexes, keys, and relationships for relational databases or determining the structure of documents for NoSQL databases.

Data Ingestion and ETL: Plan how data will be ingested into your AWS environment and perform any necessary Extract, Transform, Load (ETL) operations to prepare the data for analysis. AWS provides services like AWS Glue for ETL tasks and AWS Data Pipeline for orchestrating data workflows.

Data Access Control and Security: Implement appropriate access controls and security measures to protect your data. Utilize AWS Identity and Access Management (IAM) for fine-grained access control and encryption mechanisms provided by AWS Key Management Service (KMS) to secure sensitive data.

Data Processing and Analysis: Leverage AWS services for data processing and analysis tasks, such as:

Amazon EMR (Elastic MapReduce): Managed Hadoop framework for processing large-scale data sets using distributed computing.

Amazon Athena: Serverless query service for analysing data stored in Amazon S3 using standard SQL.

Amazon Redshift Spectrum: Extend Amazon Redshift queries to analyse data stored in Amazon S3 data lakes without loading it into Redshift.

Monitoring and Optimization: Continuously monitor the performance of your data modeling infrastructure and optimize as needed. Utilize Amazon CloudWatch for monitoring and AWS Trusted Advisor for recommendations on cost optimization, performance, and security best practices.

Scalability and Flexibility: Design your data modeling architecture to be scalable and flexible to accommodate future growth and changing requirements. Utilize AWS services like Auto Scaling to automatically adjust resources based on demand.

Compliance and Governance: Ensure compliance with regulatory requirements and industry standards by implementing appropriate governance policies and using AWS services like AWS Config and AWS Organizations for policy enforcement and auditing.

By following these principles and leveraging AWS services effectively, you can create robust data models that enable efficient storage, processing, and analysis of your data in the cloud.
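To illustrate the schema design step described above (tables, keys, indexes, and relationships), here is a small sketch of a star-style layout with one fact table and one dimension table. Everything in it is hypothetical: the table names `dim_product` and `fact_sales`, the columns, and the sample rows are invented for the example, and SQLite is used only so the code runs locally. A real warehouse schema would be shaped by the data requirements gathered first.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Dimension table: descriptive attributes, one row per product.
    CREATE TABLE dim_product (
        product_id INTEGER PRIMARY KEY,
        category   TEXT NOT NULL
    );
    -- Fact table: measurements, linked to the dimension by a foreign key.
    CREATE TABLE fact_sales (
        sale_id    INTEGER PRIMARY KEY,
        product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
        quantity   INTEGER NOT NULL
    );
    CREATE INDEX idx_sales_product ON fact_sales(product_id);
    INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
    INSERT INTO fact_sales VALUES (10, 1, 3), (11, 1, 2), (12, 2, 7);
""")

# Typical analytical query: join facts to a dimension, then aggregate.
units_by_category = conn.execute("""
    SELECT p.category, SUM(s.quantity)
    FROM fact_sales AS s
    JOIN dim_product AS p ON p.product_id = s.product_id
    GROUP BY p.category
    ORDER BY p.category
""").fetchall()
conn.close()
```

Keeping descriptive attributes in the dimension table and measurements in the fact table is one common way to organize analytical data; it is a design choice, not a requirement of any AWS service.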

Visualpath is a leading institute for AWS Data Engineering online training in Hyderabad, offering the course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

Visit: https://www.visualpath.in/aws-data-engineering-with-data-analytics-training.html

#AWS Data Engineering Online Training#AWS Data Engineering Training#Data Engineering Training in Hyderabad#AWS Data Engineering Training in Hyderabad#Data Engineering Course in Ameerpet#AWS Data Engineering Training Ameerpet#Data Engineering Course in Hyderabad#AWS Data Engineering Training Institute


Navigating the Learnability of AWS

As Amazon Web Services (AWS) continues to shape the landscape of cloud computing, the question arises: how steep is the learning curve for AWS? In this blog post, we'll examine the various dimensions of AWS to evaluate how approachable this cloud platform is for newcomers, drawing on insights from the Best AWS Course in Bangalore.

Embracing Fundamental Principles

Mastering Cloud Computing Basics

Before diving into the specifics of AWS, it's crucial to establish a solid understanding of fundamental cloud computing concepts. Concepts like virtualization, scalability, elasticity, and on-demand resources lay the groundwork for comprehending the intricacies of AWS.

Commencing the AWS Odyssey

Navigating the AWS Console

The AWS Management Console acts as the central hub for all AWS services. While the interface might seem overwhelming initially, its well-organized dashboard simplifies navigation. AWS provides extensive documentation and tutorials to aid users in familiarizing themselves with the console's layout and functionalities.

Prioritizing Core AWS Services

Within the expansive realm of AWS services, not all are equally relevant to every user. Start by focusing on core services like Amazon EC2, Amazon S3, and AWS Lambda, covering essential aspects such as compute, storage, and serverless computing.

If you want to learn more about AWS, I highly recommend the AWS online training because it offers certifications and job placement opportunities. Courses are available both online and offline.

Learning Resources: A Wealth of Knowledge

Leveraging AWS Documentation

The AWS documentation serves as an invaluable resource, offering comprehensive explanations, tutorials, and examples for each service. It is a go-to guide to aid in navigating the intricacies of AWS.

Engaging with Online Courses and Certifications

Platforms such as A Cloud Guru, Udemy, and Coursera offer comprehensive AWS courses. AWS certifications like AWS Certified Solutions Architect and AWS Certified Developer validate skills and instill confidence in learners.

Overcoming Challenges and Optimizing Learning

Navigating the Complexity of Some Services

While AWS is designed to be user-friendly, certain services have a steeper learning curve. Services like AWS Elastic Beanstalk or Amazon Redshift might require additional effort to fully grasp. Patience and consistent practice are essential during these learning phases.

Hands-On Experience as the Cornerstone

Theoretical knowledge alone won't suffice in mastering AWS. Create an AWS account, make use of the AWS Free Tier, and experiment with the services in practice. The more hands-on experience you gain, the more confident you will be in navigating and using AWS effectively.

Concluding Thoughts

In conclusion, the ease of learning AWS depends on factors such as prior experience, dedication, and the selection of learning resources. Despite challenges, AWS provides extensive documentation and learning materials to support individuals at various proficiency levels. Approach the learning process incrementally, emphasizing foundational elements, and progressively explore advanced services. With the right mindset and resources, mastering AWS unfolds as a fulfilling journey for those aspiring to become proficient cloud professionals.


Serverless Wonders: AWS Lambda in Action

Unpacking Amazon Web Services (AWS) — A Beginner’s Guide to Cloud Computing

In the expansive realm of AWS, certain aspects often linger in the shadows. Let’s delve into these lesser-explored facets from the Best AWS Training Institute.

What is AWS?

AWS, or Amazon Web Services, is like a magical toolbox for the digital age. It's a set of tools and services provided by Amazon that helps you do all kinds of useful things with your computing and data, without having to buy and manage physical hardware.

Key components and services of AWS include:

Compute Services:

Amazon Elastic Compute Cloud (EC2): Virtual servers in the cloud.

AWS Lambda: Serverless computing service.

Storage Services:

Amazon Simple Storage Service (S3): Scalable object storage.

Amazon Elastic Block Store (EBS): Block-level storage volumes for EC2 instances.

Database Services:

Amazon RDS: Managed relational database service.

Amazon DynamoDB: Fully managed NoSQL database.

Networking:

Amazon Virtual Private Cloud (VPC): Isolated virtual networks in the cloud.

Amazon Route 53: Scalable domain name system (DNS) web service.

Machine Learning and Artificial Intelligence:

Amazon SageMaker: Fully managed machine learning service.

Amazon Polly: Text-to-speech service.

Amazon Rekognition: Image and video analysis service.

Analytics:

Amazon Redshift: Fully managed data warehouse.

Amazon Athena: Query service for data stored in S3.

Security:

AWS Identity and Access Management (IAM): Access control for AWS resources.

AWS Key Management Service (KMS): Managed encryption service.

Developer Tools:

AWS CodeDeploy: Automates code deployments.

AWS CodePipeline: Continuous integration and continuous delivery (CI/CD) service.
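Since AWS Lambda appears in the list above as the serverless compute option, a minimal handler sketch may help show what "serverless" code looks like. The `(event, context)` signature is the shape Lambda's Python runtime calls; the `name` field in the event and the `statusCode`/`body` response layout are assumptions made for this example (the latter follows a convention commonly used behind an HTTP API), not something every Lambda function needs.

```python
import json

def lambda_handler(event, context):
    """Entry point in the (event, context) shape used by Lambda's Python runtime."""
    # 'name' is a hypothetical field in the incoming event.
    name = event.get("name", "world")
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }

# Local invocation for experimentation; no AWS account is involved here.
response = lambda_handler({"name": "AWS"}, None)
```

Because the handler is an ordinary function, it can be exercised locally like this before it is ever deployed.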

If you want to learn more about AWS, I highly recommend AWS Course in Bangalore because they offer certifications and job placement opportunities. Experienced teachers can help you learn better. You can find these services both online and offline. Take things step by step and consider enrolling in a course if you’re interested.


Unlocking Career Paths with AWS Expertise: What Opportunities Await?

As cloud computing continues to transform the tech industry, mastering Amazon Web Services (AWS) has become a gateway to a myriad of career opportunities.

With AWS Training in Hyderabad, professionals can gain the skills and knowledge needed to harness the capabilities of AWS for diverse applications and industries.

AWS skills are increasingly sought after, opening doors to various roles across different sectors. If you’ve recently acquired AWS knowledge or are considering it, here’s a fresh look at the diverse career paths you can explore.

1. Cloud Strategy Architect: Designing the Future

Cloud Strategy Architects are visionaries who craft comprehensive cloud solutions tailored to organizational needs. They leverage AWS to design scalable and secure architectures that drive business efficiency and innovation. This role is perfect for those who excel in strategic planning and enjoy shaping the technological landscape of companies.

2. Cloud Operations Specialist: Ensuring Seamless Functionality

Cloud Operations Specialists focus on the hands-on management of cloud infrastructure. They handle the setup, maintenance, and optimization of AWS environments to ensure everything runs smoothly. This role is ideal for individuals who thrive in operational settings and have a knack for problem-solving and system maintenance.

3. DevOps Specialist: Integrating Development and Operations

DevOps Specialists play a crucial role in streamlining development and operations through automation and continuous integration. With AWS, they manage deployment pipelines and automate processes, ensuring efficient and reliable delivery of software. If you enjoy working at the intersection of development and IT operations, this role is a great fit.

4. Technical Solutions Designer: Tailoring Cloud Solutions

Technical Solutions Designers focus on creating bespoke cloud solutions that meet specific business requirements. They work closely with clients to understand their needs and design AWS-based systems that align with their objectives. This role blends technical expertise with client interaction, making it suitable for those who excel in both areas.

5. Cloud Advisory Consultant: Guiding Cloud Transformations

Cloud Advisory Consultants offer expert guidance on cloud strategy and implementation. They assess current IT infrastructures, recommend AWS solutions, and support the transition to cloud-based environments. This role is ideal for those who enjoy advising businesses and orchestrating strategic cloud initiatives.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the Best AWS Online Training.

6. AWS System Administrator: Managing Cloud Environments

AWS System Administrators are responsible for the day-to-day management of cloud systems. They oversee performance monitoring, security, and user management within AWS environments. This role suits individuals who are detail-oriented and enjoy maintaining and optimizing IT systems.

7. Data Solutions Engineer: Harnessing Data Potential

Data Solutions Engineers specialize in handling and analyzing large datasets using AWS tools like Amazon Redshift and AWS Glue. They design data pipelines and create analytics solutions to support business intelligence. If you have a passion for data and enjoy working with cloud-based data solutions, this role is a strong match.

8. Cloud Security Analyst: Safeguarding Cloud Assets

Cloud Security Analysts focus on protecting cloud infrastructure from security threats. They use AWS security features to ensure data integrity and compliance. This role is well-suited for individuals with a keen interest in cybersecurity and a commitment to safeguarding digital assets.

9. IT Systems Manager: Overseeing IT Operations

IT Systems Managers ensure that all aspects of IT infrastructure, including cloud systems, run efficiently. With AWS skills, they lead technology strategies, manage IT teams, and oversee the implementation of cloud solutions. This role is ideal for those who are strong in leadership and strategic IT management.

10. Cloud Product Lead: Innovating Cloud Solutions

Cloud Product Leads oversee the development and rollout of cloud-based products. They coordinate with engineering teams to drive innovation and ensure product features meet market needs. This role is great for those interested in product management and driving the evolution of cloud technologies.

11. Cloud Strategy Consultant: Advising on Cloud Adoption

Cloud Strategy Consultants provide expert advice on how to leverage AWS for business transformation. They help companies evaluate their cloud needs, design effective strategies, and implement solutions. This role is perfect for those who enjoy strategic planning and consultancy.

12. Cloud Deployment Specialist: Managing Cloud Transitions

Cloud Deployment Specialists manage the migration of applications and data to AWS. They ensure that transitions are smooth and align with organizational goals. If you excel in managing complex projects and enjoy overseeing cloud transitions, this role could be a great fit.

Conclusion

Mastering AWS opens up a broad spectrum of career opportunities, from strategic roles to technical positions. Whether you’re interested in designing cloud architectures, managing operations, or guiding business transformations, AWS skills are highly valued. By leveraging your AWS expertise, you can embark on a fulfilling career path that aligns with your interests and professional goals.


Anubhav Trainings: A Pioneer in Online Training: Anubhav Trainings has established itself as a trusted provider of online training courses across various IT domains. With a team of experienced industry professionals and subject matter experts, the platform delivers high-quality training to individuals and corporate clients globally. Anubhav Trainings has gained recognition for its practical approach, hands-on exercises, and real-world scenarios, ensuring that learners gain practical knowledge that can be immediately applied in their work environments.

The Importance of Datasphere Training: Data warehouse cloud solutions, such as Amazon Redshift, Google BigQuery, and Snowflake, have transformed the way organizations handle data. These platforms offer advantages like scalability, elasticity, and ease of management, allowing businesses to store and analyze vast amounts of data efficiently. However, harnessing the full potential of these tools requires expertise in data modeling, query optimization, performance tuning, and security implementation. This is where Anubhav Trainings' online data warehouse cloud training comes into play.

Comprehensive Curriculum and Hands-on Experience:

Expert Instructors and Mentorship:

Flexible Learning Options:

Industry-Recognized Certification

Conclusion: Anubhav Trainings stands out as a reliable and comprehensive online training platform for data warehouse cloud technologies. Its well-structured curriculum, hands-on approach, expert instructors, and flexible learning options make it an ideal choice for professionals looking to enhance their skills in this rapidly evolving field. By providing top-notch training, Anubhav Trainings empowers individuals to unlock the full potential of data warehouse cloud solutions, enabling them to contribute effectively to their organizations' data-driven decision-making processes.

Call us on +91-84484 54549

Mail us on [email protected]

Website: Anubhav Online Trainings | UI5, Fiori, S/4HANA Trainings


Features of Redshift - Visualpath

Redshift is known for its emphasis on continuous innovation, but it is the platform's architecture that has made it one of the most powerful cloud data warehouse solutions. Here are the six features of that architecture that help Redshift stand out from other data warehouses.

1. Column-oriented databases

Data can be organized either into rows or columns. What determines the choice of approach is the nature of the workload.

The most common system of organizing data is by row. That's because row-oriented systems are designed to quickly process a large number of small operations. This is known as online transaction processing, or OLTP, and is used by most operational databases. Redshift, by contrast, stores data by column, which suits analytical queries that read a few columns across many rows.
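The difference between the two layouts can be sketched with plain Python data structures. This is a simplified illustration with hypothetical `orders` data; real databases use far more elaborate on-disk formats.

```python
# Hypothetical order records.
orders = [
    {"order_id": 1, "customer": "alice", "amount": 30},
    {"order_id": 2, "customer": "bob", "amount": 45},
    {"order_id": 3, "customer": "alice", "amount": 25},
]

# Row-oriented: each record is kept together, which suits fetching or
# updating one whole order at a time (the OLTP pattern).
row_store = {order["order_id"]: order for order in orders}
order_two = row_store[2]

# Column-oriented: each attribute is kept together, so an aggregate over
# one attribute reads only that attribute's values.
column_store = {key: [order[key] for order in orders] for key in orders[0]}
total_amount = sum(column_store["amount"])
```

Looking up `order_two` touches one complete record, while `total_amount` touches only the `amount` values and never reads customer names.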

2. Massively parallel processing (MPP)

MPP is a distributed design approach in which several processors apply a "divide and conquer" strategy to large data jobs. A large processing job is organized into smaller jobs that are then distributed among a cluster of processors (compute nodes). The processors complete their computations simultaneously rather than sequentially. The result is a large reduction in the amount of time Redshift needs to complete a single, massive job.
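The "divide and conquer" pattern can be sketched in Python as follows. This is a structural illustration only: `partial_sum` and `parallel_sum` are hypothetical helpers, worker threads stand in for compute nodes, and Python threads will not give the speed-up that separate machines do.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_sum(chunk):
    """Work done by one hypothetical 'compute node' on its slice of the data."""
    return sum(chunk)

def parallel_sum(values, node_count):
    """Split values into node_count slices, sum each concurrently, then combine."""
    slices = [values[i::node_count] for i in range(node_count)]
    with ThreadPoolExecutor(max_workers=node_count) as pool:
        partials = list(pool.map(partial_sum, slices))
    # A final step merges the partial results into one answer.
    return sum(partials)

data = list(range(1, 1001))
total = parallel_sum(data, node_count=4)
```

The key idea is that each worker sees only its own slice, and a final step combines the partial results.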

3. End-to-end data encryption

No business or organization is exempt from data privacy and security regulations, and encryption is one of the pillars of data protection. This is especially true when it comes to complying with laws such as GDPR, HIPAA, the Sarbanes-Oxley Act, and the California Consumer Privacy Act (CCPA).

Encryption options in Redshift are robust and highly customizable. This flexibility allows users to configure an encryption standard that best fits their needs. Redshift security encryption features include:

• The option of using either an AWS-managed or a customer-managed key

• Migrating data between encrypted and unencrypted clusters

• A choice between AWS Key Management Service or HSM (hardware security module)

• Options to apply single or double encryption, depending on the scenario

4. Network isolation

For businesses that want additional security, administrators can choose to isolate their network within Redshift. In this scenario, network access to an organization's cluster(s) is restricted by enabling Amazon VPC. The user's data warehouse remains connected to the existing IT infrastructure through an IPsec VPN.

5. Fault tolerance

Fault tolerance refers back to the cap potential of a gadget to maintain functioning even if a few additives fail. When it involves information warehousing, fault tolerance determines the potential for a process to maintain being run while a few processors or clusters are offline.

Data accessibility and information warehouse reliability are paramount for any person. AWS video display units its clusters across the clock. When drives, nodes, or clusters fail, Redshift routinely re-replicates information and shifts information to wholesome nodes.

6. Concurrency limits

Concurrency limits decide the most variety of nodes or clusters that a person can provision at any given time. These limits make sure that ok compute sources are to be had to all customers. In this sense, concurrency limits democratize the information warehouse.

Redshift keeps concurrency limits which can be much like different information warehouses, however with a diploma of flexibility. For example, the variety of nodes which can be to be had consistent with cluster is decided through the cluster's node type. Redshift additionally configures limits primarily based totally on regions, as opposed to making use of an unmarried restriction to all customers.

For more information about AWS Redshift online training, contact: +91 9989971070


AWS Redshift Online Training Course Free Demo - Visualpath

JOIN link: https://bit.ly/3ylEBLO

Attend Online #FreeDemo On #AmazonRedshift by Mr. Bhaskar

Demo on: 27th July, 2024 @ 9:00 AM (IST)

Contact us: +91 9989971070

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Blog link: https://visualpathblogs.com/

Visit: https://visualpath.in/amazon-redshift-online-training.html


A Comprehensive Overview of Amazon Web Services (AWS): Benefits and Challenges

In today's digital era, cloud computing has become essential for businesses looking to scale, innovate, and streamline operations. Among the various cloud service providers, Amazon Web Services (AWS) stands out as a leading option.

With AWS Training in Hyderabad, professionals can gain the skills and knowledge needed to harness the capabilities of AWS for diverse applications and industries.

Known for its extensive range of services and global reach, AWS is a popular choice for many organizations. However, like any technology, it has its strengths and weaknesses. This article delves into the key advantages and potential drawbacks of using AWS as your cloud hosting provider.

Why AWS is a Top Choice

Exceptional Scalability: AWS excels in providing scalable solutions that adapt to your needs. Whether you experience rapid growth or seasonal fluctuations, AWS allows you to adjust your resources dynamically, ensuring you only pay for what you use.

Global Network of Data Centers: With data centers distributed worldwide, AWS ensures low latency and high performance. This global infrastructure helps businesses deliver consistent and fast experiences to users regardless of their location.

Diverse Service Portfolio: AWS offers a broad array of services, from fundamental computing and storage to advanced artificial intelligence and data analytics tools. This extensive range allows businesses to build and integrate various technologies within a single platform.

Cost-Effective Pricing Model: AWS’s pay-as-you-go model is designed to be cost-effective, enabling businesses to manage their expenses based on actual usage. This flexibility is particularly beneficial for handling variable workloads.

Strong Security Measures: Security is a core focus for AWS, which provides robust tools for data protection, including encryption, identity and access management, and compliance with various regulatory standards.

High Availability and Reliability: AWS is engineered for high availability, with features designed to ensure operational continuity even during hardware failures or maintenance activities, enhancing overall service reliability.

Advanced Analytics and Big Data: AWS supports powerful analytics and big data processing through services like Amazon Redshift and AWS Glue. These tools enable efficient data analysis and the extraction of valuable insights.

Vibrant Ecosystem and Community: The AWS ecosystem is rich with third-party integrations and a thriving developer community. This extensive network offers additional resources and support, enhancing the overall AWS experience. To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the Best AWS Online Training.

Potential Drawbacks of AWS

Complex Pricing Structure: AWS’s pricing can be intricate and challenging to understand. Users may encounter unexpected costs if they are not careful with resource management and monitoring.

Learning Curve: The breadth of AWS services and features can be daunting for newcomers. The complexity of the platform may require significant learning and training to fully leverage its capabilities.

Risk of Over-Provisioning: AWS’s flexibility might lead to over-provisioning if not managed properly. Businesses might end up paying for more resources than they actually need, affecting overall cost efficiency.

Dependency on a Single Provider: Relying heavily on AWS means that any disruptions or issues with their service can impact your operations. Despite its reliability, businesses should consider the risks associated with single-provider dependency.

Support Plan Costs: While AWS offers various support plans, the more comprehensive options come with additional costs. For businesses requiring extensive support, these fees can add up, potentially affecting the value of the service.

Data Transfer Fees: Transferring data into AWS is generally free, but moving data out can incur substantial costs. Businesses with significant data transfer needs should account for these potential expenses.

Vendor Lock-In: AWS’s proprietary technologies and APIs might lead to vendor lock-in, making it challenging to switch to other providers. This could limit future flexibility and increase switching costs.

Compliance and Regulatory Considerations: Although AWS is compliant with many standards, businesses in highly regulated industries may need to conduct additional due diligence to ensure all regulatory requirements are met.

Conclusion

Amazon Web Services (AWS) offers a powerful and versatile cloud platform with many advantages, including scalability, a global network, and comprehensive security features. However, it also presents challenges such as a complex pricing model and potential vendor lock-in. Understanding these factors will help businesses make an informed decision about whether AWS is the right fit for their cloud hosting needs and how to optimize its use for their specific requirements.


Visualpath: Amazon RedShift Online Training Institute in Hyderabad. Amazon Redshift is a relational data warehouse solution built on PostgreSQL and launched in February 2013 as part of AWS. AWS is the cloud platform operated by Amazon, and Redshift is one of its services: a database system designed for data warehousing. Contact us at 9989971070.


Anubhav Trainings: A Pioneer in Online Training: Anubhav Trainings has established itself as a trusted provider of online training courses across various IT domains. With a team of experienced industry professionals and subject matter experts, the platform delivers high-quality training to individuals and corporate clients globally. Anubhav Trainings has gained recognition for its practical approach, hands-on exercises, and real-world scenarios, ensuring that learners gain practical knowledge that can be immediately applied in their work environments.

The Importance of Datasphere Training: Data warehouse cloud solutions, such as Amazon Redshift, Google BigQuery, and Snowflake, have transformed the way organizations handle data. These platforms offer advantages like scalability, elasticity, and ease of management, allowing businesses to store and analyze vast amounts of data efficiently. However, harnessing the full potential of these tools requires expertise in data modeling, query optimization, performance tuning, and security implementation. This is where Anubhav Trainings' online data warehouse cloud training comes into play.

Comprehensive Curriculum and Hands-on Experience:

Expert Instructors and Mentorship:

Flexible Learning Options:

Industry-Recognized Certification

Conclusion: Anubhav Trainings stands out as a reliable and comprehensive online training platform for data warehouse cloud technologies. Its well-structured curriculum, hands-on approach, expert instructors, and flexible learning options make it an ideal choice for professionals looking to enhance their skills in this rapidly evolving field. By providing top-notch training, Anubhav Trainings empowers individuals to unlock the full potential of data warehouse cloud solutions, enabling them to contribute effectively to their organizations' data-driven decision-making processes.

Call us on +91-84484 54549

Mail us on [email protected]

Website: Anubhav Online Trainings | UI5, Fiori, S/4HANA Trainings


AWS Redshift Training

To benefit from this Amazon Redshift Training course from mildain, you will need a basic grasp of IT application development and deployment concepts, and a good understanding of data warehousing and analytics tools, before attending.



Important libraries for data science and Machine learning.

Python has more than 137,000 libraries, which help in a variety of ways. In the data age, data is often compared to oil or electricity. In the coming days, companies will require more skilled data scientists, machine learning engineers, and deep learning engineers to gain insights by processing massive data sets.

Python libraries for different data science tasks:

Python Libraries for Data Collection

Beautiful Soup

Scrapy

Selenium

Python Libraries for Data Cleaning and Manipulation

Pandas

PyOD

NumPy

Spacy

Python Libraries for Data Visualization

Matplotlib

Seaborn

Bokeh

Python Libraries for Modeling

Scikit-learn

TensorFlow

PyTorch

Python Libraries for Model Interpretability

Lime

H2O

Python Libraries for Audio Processing

Librosa

Madmom

pyAudioAnalysis

Python Libraries for Image Processing

OpenCV-Python

Scikit-image

Pillow

Python Libraries for Database

Psycopg

SQLAlchemy

Python Libraries for Deployment

Flask

Django

Best Frameworks for Machine Learning:

1. TensorFlow:

If you work in or are interested in machine learning, you have probably heard of the well-known open source library TensorFlow. It was developed at Google by the Google Brain team. Many of Google's applications use TensorFlow for machine learning. If you use Google Photos or Google voice search, then you are indirectly using models built with TensorFlow.

TensorFlow is a computational framework for expressing algorithms that involve a large number of tensor operations. Since neural networks can be expressed as computational graphs, they can be implemented in TensorFlow as a series of operations on tensors. Tensors are N-dimensional arrays that represent the data.
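
To make the idea of a computational graph concrete, here is a small pure-Python sketch. It does not use TensorFlow's API. The `Node` class and the `constant`, `add`, `multiply`, and `relu` helpers are hypothetical names chosen for illustration, and the "tensors" are just one-dimensional lists of floats.

```python
from typing import Callable, List, Sequence

Vector = List[float]


class Node:
    """One operation in the graph; running it runs its inputs first."""

    def __init__(self, op: Callable[..., Vector], *inputs: "Node") -> None:
        self.op = op
        self.inputs = inputs

    def run(self) -> Vector:
        return self.op(*(node.run() for node in self.inputs))


def constant(values: Sequence[float]) -> Node:
    return Node(lambda: list(values))


def add(a: Node, b: Node) -> Node:
    return Node(lambda x, y: [p + q for p, q in zip(x, y)], a, b)


def multiply(a: Node, b: Node) -> Node:
    return Node(lambda x, y: [p * q for p, q in zip(x, y)], a, b)


def relu(a: Node) -> Node:
    return Node(lambda x: [max(0.0, v) for v in x], a)


# Build the graph first; nothing is computed until run() is called.
inputs = constant([1.0, -2.0, 3.0])
weights = constant([0.5, 0.5, -1.0])
bias = constant([0.0, 2.0, 1.0])
output = relu(add(multiply(inputs, weights), bias))

print(output.run())  # [0.5, 1.0, 0.0]
```

The graph here is a single neuron-like step (multiply, add, activation). A real framework applies the same build-then-run idea to much larger graphs and to arrays with more dimensions.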

2. Keras:

Keras is one of the most approachable machine learning libraries. If you are a beginner in machine learning, Keras is a good place to start. It provides an easier way to express neural networks. It also provides utilities for processing datasets, compiling models, evaluating results, visualizing graphs, and more.

Keras internally uses either TensorFlow or Theano as its backend. Some other popular neural network frameworks, such as CNTK, can also be used. If you use TensorFlow as the backend, refer to the TensorFlow section of this article. Keras can be slow compared to other libraries because it constructs a computational graph using the backend infrastructure and then uses it to perform operations. Keras models are portable (HDF5 models), and Keras provides many preprocessed datasets and pretrained models such as Inception, SqueezeNet, MNIST, VGG, and ResNet.

3. Theano:

Theano is a computational framework for computing with multidimensional arrays. Theano is similar to TensorFlow, but it is considered less efficient than TensorFlow because it is poorly suited to production environments. Theano can be used in parallel or distributed environments, just like TensorFlow.

4. Apache Spark:

Spark is an open source cluster-computing framework originally developed at a lab at the University of California, Berkeley, and initially released on 26 May 2014. It is written mainly in Scala, Java, Python, and R. Although it was created at Berkeley, it was later donated to the Apache Software Foundation.

Spark Core is the foundation of the project. It is complex too, but instead of worrying about NumPy arrays, it lets you work with its own Spark RDD data structures, which anyone with a big data background will find familiar. As a user, you can also work with Spark SQL DataFrames. With these features, it creates dense and sparse labeled feature vectors for you, removing much of the complexity of feeding data to ML algorithms.
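
The difference between dense and sparse feature vectors can be shown without Spark at all. The sketch below is plain Python, and the helper names `to_sparse`, `to_dense`, and `sparse_dot` are hypothetical. In PySpark itself, to our knowledge, the equivalent constructors are `Vectors.dense` and `Vectors.sparse` in `pyspark.ml.linalg`.

```python
from typing import Dict, List, Tuple


def to_sparse(dense: List[float]) -> Tuple[int, Dict[int, float]]:
    """Keep only the non-zero entries as (size, {index: value})."""
    return len(dense), {i: v for i, v in enumerate(dense) if v != 0.0}


def to_dense(size: int, entries: Dict[int, float]) -> List[float]:
    """Expand a sparse vector back into a full list, filling gaps with 0.0."""
    return [entries.get(i, 0.0) for i in range(size)]


def sparse_dot(entries: Dict[int, float], dense: List[float]) -> float:
    """Dot product that touches only the stored (non-zero) entries."""
    return sum(value * dense[i] for i, value in entries.items())


features = [0.0, 3.0, 0.0, 0.0, 1.5]
size, entries = to_sparse(features)
print(size, entries)  # 5 {1: 3.0, 4: 1.5}
print(sparse_dot(entries, [1.0, 2.0, 3.0, 4.0, 2.0]))  # 9.0
```

A sparse layout pays off when most features are zero, for example with one-hot encoded categories or bag-of-words text features.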

5. Caffe:

Caffe is an open source framework under a BSD license. Caffe (Convolutional Architecture for Fast Feature Embedding) is a deep learning tool developed at UC Berkeley, and it is written mainly in C++. It supports many different types of deep learning architectures, focusing mainly on image classification and segmentation. It supports almost all major schemes and fully connected neural network designs, and like TensorFlow it offers both GPU-based and CPU-based acceleration.

Caffe is mainly used in academic research projects and for startup prototypes. Yahoo has even integrated Caffe with Apache Spark to create CaffeOnSpark, another deep learning framework.

6. PyTorch:

Torch, the Lua-based predecessor of PyTorch, is also an open source machine learning library and a full scientific computing framework. Its makers describe it as the easiest ML framework; its relative simplicity comes from its scripting interface in the Lua programming language. Lua has a single number type (no separate int, short, or double) rather than the finer categories found in other languages, which simplifies many operations and functions.

Torch is used by the Facebook AI Research group, IBM, Yandex, and the Idiap Research Institute, and it has recently been extended for use on Android and iOS.

7. Scikit-learn:

Scikit-learn is a powerful, free Python library for ML that is widely used for building models. It is built on several other libraries, namely SciPy, NumPy, and matplotlib, and it is one of the most efficient tools for statistical modeling techniques such as classification, regression, and clustering.

Scikit-learn comes with features such as supervised and unsupervised learning algorithms and cross-validation. It is largely written in Python, with some core algorithms written in Cython for performance. Support vector machines are implemented by a Cython wrapper around LIBSVM.
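
Cross-validation is easy to picture as index bookkeeping. The following is a minimal pure-Python sketch of k-fold splitting, not scikit-learn's own implementation. The function name `k_fold_indices` is hypothetical. In scikit-learn, to our knowledge, the corresponding tool is `KFold` in `sklearn.model_selection`.

```python
from typing import Iterator, List, Tuple


def k_fold_indices(
    n_samples: int, k: int
) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, test) index lists for k contiguous, unshuffled folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        # The first `extra` folds take one additional sample each.
        stop = start + base + (1 if fold < extra else 0)
        test = indices[start:stop]
        train = indices[:start] + indices[stop:]
        yield train, test
        start = stop


for train, test in k_fold_indices(n_samples=7, k=3):
    print("train:", train, "test:", test)
```

Each sample lands in exactly one test fold, so every data point is used for both training and evaluation across the k rounds.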

Below is a list of frameworks for machine learning engineers:

Apache Singa is a general distributed deep learning platform for training big deep learning models over large datasets. It is designed with an intuitive programming model based on the layer abstraction. A variety of popular deep learning models are supported, namely feed-forward models including convolutional neural networks (CNN), energy models like restricted Boltzmann machine (RBM), and recurrent neural networks (RNN). Many built-in layers are provided for users.

Amazon Machine Learning is a service that makes it easy for developers of all skill levels to use machine learning technology. Amazon Machine Learning provides visualization tools and wizards that guide you through the process of creating machine learning (ML) models without having to learn complex ML algorithms and technology. It connects to data stored in Amazon S3, Redshift, or RDS, and can run binary classification, multiclass categorization, or regression on said data to create a model.

Azure ML Studio allows Microsoft Azure users to create and train models, then turn them into APIs that can be consumed by other services. Users get up to 10GB of storage per account for model data, although you can also connect your own Azure storage to the service for larger models. A wide range of algorithms are available, courtesy of both Microsoft and third parties. You don’t even need an account to try out the service; you can log in anonymously and use Azure ML Studio for up to eight hours.

Caffe is a deep learning framework made with expression, speed, and modularity in mind. It is developed by the Berkeley Vision and Learning Center (BVLC) and by community contributors. Yangqing Jia created the project during his PhD at UC Berkeley. Caffe is released under the BSD 2-Clause license. Models and optimization are defined by configuration without hard-coding, and the user can switch between CPU and GPU. Speed makes Caffe well suited to research experiments and industry deployment. Caffe can process over 60M images per day with a single NVIDIA K40 GPU.

H2O makes it possible for anyone to easily apply math and predictive analytics to solve today’s most challenging business problems. It intelligently combines unique features not currently found in other machine learning platforms including: Best of Breed Open Source Technology, Easy-to-use WebUI and Familiar Interfaces, Data Agnostic Support for all Common Database and File Types. With H2O, you can work with your existing languages and tools. Further, you can extend the platform seamlessly into your Hadoop environments.

Massive Online Analysis (MOA) is the most popular open source framework for data stream mining, with a very active growing community. It includes a collection of machine learning algorithms (classification, regression, clustering, outlier detection, concept drift detection and recommender systems) and tools for evaluation. Related to the WEKA project, MOA is also written in Java, while scaling to more demanding problems.

MLlib (Spark) is Apache Spark’s machine learning library. Its goal is to make practical machine learning scalable and easy. It consists of common learning algorithms and utilities, including classification, regression, clustering, collaborative filtering, dimensionality reduction, as well as lower-level optimization primitives and higher-level pipeline APIs.

mlpack is a C++-based machine learning library originally rolled out in 2011 and designed for “scalability, speed, and ease-of-use,” according to the library’s creators. Implementing mlpack can be done through a cache of command-line executables for quick-and-dirty, “black box” operations, or with a C++ API for more sophisticated work. mlpack provides these algorithms as simple command-line programs and C++ classes which can then be integrated into larger-scale machine learning solutions.

Pattern is a web mining module for the Python programming language. It has tools for data mining (Google, Twitter and Wikipedia API, a web crawler, a HTML DOM parser), natural language processing (part-of-speech taggers, n-gram search, sentiment analysis, WordNet), machine learning (vector space model, clustering, SVM), network analysis and visualization.

Scikit-Learn leverages Python’s breadth by building on top of several existing Python packages — NumPy, SciPy, and matplotlib — for math and science work. The resulting libraries can be used either for interactive “workbench” applications or be embedded into other software and reused. The kit is available under a BSD license, so it’s fully open and reusable. Scikit-learn includes tools for many of the standard machine-learning tasks (such as clustering, classification, regression, etc.). And since scikit-learn is developed by a large community of developers and machine-learning experts, promising new techniques tend to be included in fairly short order.

Shogun is among the oldest, most venerable of machine learning libraries. It was created in 1999 and written in C++, but isn’t limited to working in C++. Thanks to the SWIG library, Shogun can be used transparently in languages and environments such as Java, Python, C#, Ruby, R, Lua, Octave, and Matlab. Shogun is designed for unified large-scale learning for a broad range of feature types and learning settings, like classification, regression, or explorative data analysis.

TensorFlow is an open source software library for numerical computation using data flow graphs. TensorFlow implements what are called data flow graphs, where batches of data (“tensors”) can be processed by a series of algorithms described by a graph. The movements of the data through the system are called “flows” — hence, the name. Graphs can be assembled with C++ or Python and can be processed on CPUs or GPUs.

Theano is a Python library that lets you define, optimize, and evaluate mathematical expressions, especially ones with multi-dimensional arrays (numpy.ndarray). Using Theano it is possible to attain speeds rivaling hand-crafted C implementations for problems involving large amounts of data. It was written at the LISA lab to support rapid development of efficient machine learning algorithms. Theano is named after the Greek mathematician, who may have been Pythagoras’ wife. Theano is released under a BSD license.

Torch is a scientific computing framework with wide support for machine learning algorithms that puts GPUs first. It is easy to use and efficient, thanks to an easy and fast scripting language, LuaJIT, and an underlying C/CUDA implementation. The goal of Torch is to have maximum flexibility and speed in building your scientific algorithms while making the process extremely simple. Torch comes with a large ecosystem of community-driven packages in machine learning, computer vision, signal processing, parallel processing, image, video, audio and networking among others, and builds on top of the Lua community.

Veles is a distributed platform for deep-learning applications, and it’s written in C++, although it uses Python to perform automation and coordination between nodes. Datasets can be analyzed and automatically normalized before being fed to the cluster, and a REST API allows the trained model to be used in production immediately. It focuses on performance and flexibility. It has few hard-coded entities and enables training of all the widely recognized topologies, such as fully connected nets, convolutional nets, and recurrent nets.
