#Airbyte

Text

Data Lake Querying with Amazon Athena

In the ever-evolving world of data analytics, efficiently querying data lakes remains a significant challenge. But don't worry, we're here to help! Our latest video dives deep into this topic and introduces you to Amazon Athena, a powerful tool designed to simplify data lake querying.

Key Challenges in Querying Data Lakes

We start by identifying the main obstacles faced when dealing with data lakes. Data lakes often contain vast amounts of unstructured data, making it difficult to perform efficient queries. Issues like data quality, integration, and the sheer volume of data can pose significant challenges. Understanding these challenges is the first step towards finding effective solutions.

Introduction to Amazon Athena

Next, we provide a comprehensive overview of Amazon Athena, explaining its features and how it stands out as a solution for data lake querying. Amazon Athena allows you to run SQL queries directly on data stored in Amazon S3 without the need for complex ETL processes. It's serverless, so there's no infrastructure to manage, and you pay only for the queries you run.
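To make the "SQL directly on S3" idea concrete, here is a minimal Python sketch of submitting a query and reading its result. It assumes you pass in a boto3 Athena client (for example `boto3.client("athena")`); the database name, table name, and S3 output location are hypothetical placeholders that do not come from the post, and only the first page of results is read.

```python
import time


def run_athena_query(client, sql, database, output_location, poll_seconds=1.0):
    """Submit a SQL query via an Athena client and return the rows as dicts.

    `client` is expected to behave like boto3.client("athena").
    `database` and `output_location` are placeholders chosen by the caller.
    """
    started = client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = started["QueryExecutionId"]

    # Athena runs queries asynchronously, so poll until a terminal state.
    while True:
        status = client.get_query_execution(QueryExecutionId=query_id)
        state = status["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            break
        time.sleep(poll_seconds)
    if state != "SUCCEEDED":
        raise RuntimeError(f"Athena query {query_id} ended in state {state}")

    # Only the first page of results is read in this sketch.
    # For SELECT queries the first row holds the column names.
    result = client.get_query_results(QueryExecutionId=query_id)
    rows = [
        [cell.get("VarCharValue") for cell in row["Data"]]
        for row in result["ResultSet"]["Rows"]
    ]
    header, *body = rows
    return [dict(zip(header, row)) for row in body]
```

A call might look like `run_athena_query(boto3.client("athena"), "SELECT status, COUNT(*) AS n FROM logs GROUP BY status", "my_database", "s3://my-bucket/athena-results/")`, where `logs`, `my_database`, and the bucket are made-up names. The polling loop also illustrates why Athena is a poor fit for low-latency use: every query is a submit-then-wait round trip.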

How Athena Addresses Data Lake Challenges

We explore how Athena tackles the common issues in querying data lakes, making the process more efficient and user-friendly. Amazon Athena supports a variety of data formats, including CSV, JSON, ORC, and Parquet, so you can query data in the format it is already stored in.

Live Demo of Amazon Athena

Watch a live demonstration of Athena in action. See how it works in real-time and how you can leverage its capabilities for your data analytics needs. This live demo will give you a practical understanding of its functionalities and show you how to maximize its potential.

Limitations of Amazon Athena

Finally, we discuss the limitations of Amazon Athena to provide a balanced view of its capabilities and help you make an informed decision. While Athena is powerful, it may not be suitable for real-time data analysis due to its query latency. Additionally, complex queries might require optimization for better performance. Athena is best suited for batch processing and querying historical data. For real-time analytics, other AWS services like Kinesis or Redshift might be more appropriate.

0 notes

Text

Hi everyone, how's it going? Over the next few months I'll be posting on LinkedIn about the development of a data engineering project called "📊 LiftOff Data", applying what I've been learning here at Jornada de Dados. (I'm a junior, so please be patient, haha.)

🎯 Goal: This project presents a low-cost data pipeline architecture aimed at startups, focused on integrating sales data from APIs and CRMs using modern, accessible technologies. The goal is to build a scalable solution for data ingestion, transformation, and visualization, so that both data engineers and analysts can collaborate efficiently. The proposed architecture splits the pipeline into multiple layers (Bronze, Silver, and Gold) on PostgreSQL with pg_duckdb, and includes API integration, Airbyte for data ingestion, Airflow for orchestration, and #DBT for data transformation. The collaborative platform #Briefer is also integrated, allowing data analysts to access and use the transformed data efficiently.
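As a rough illustration of the Bronze, Silver, and Gold split, the sketch below pushes a few raw sales records through the three layers in plain Python. It is not the project's code: the field names (`order_id`, `seller`, `amount`) and the cleaning rules are assumptions made up for the example, and in the real pipeline these steps would live in dbt models on PostgreSQL rather than in Python functions.

```python
from collections import defaultdict


def to_silver(bronze_rows):
    """Silver layer: clean raw rows and normalize their types."""
    silver = []
    for row in bronze_rows:
        if row.get("amount") in (None, ""):
            continue  # skip incomplete records instead of guessing a value
        silver.append({
            "order_id": int(row["order_id"]),
            "seller": row["seller"].strip().title(),
            "amount": float(row["amount"]),
        })
    return silver


def to_gold(silver_rows):
    """Gold layer: aggregate cleaned rows into revenue per seller."""
    totals = defaultdict(float)
    for row in silver_rows:
        totals[row["seller"]] += row["amount"]
    return dict(totals)


# Bronze layer: records exactly as they arrived from an API or CRM (hypothetical data).
bronze = [
    {"order_id": "1", "seller": " ana ", "amount": "100.50"},
    {"order_id": "2", "seller": "ANA", "amount": "50"},
    {"order_id": "3", "seller": "bruno", "amount": ""},
    {"order_id": "4", "seller": "bruno", "amount": "20"},
]

gold = to_gold(to_silver(bronze))
print(gold)
```

Keeping the raw Bronze records untouched means the Silver and Gold steps can be rerun whenever a cleaning rule changes, without asking the source system for the data again.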

Beyond the data pipeline, the project includes an AI assistant interface in Streamlit that lets you interact with an AI model such as ChatGPT or Llama3. This interface offers a hands-on experience for sales analysis, allowing analysts to ask questions and get quick answers and relevant insights.

As part of the solution, I built an interactive dashboard with Streamlit for viewing and analyzing sales and human resources data intuitively and efficiently. The dashboard presents key metrics, interactive charts, and informative tables, making data-driven decisions easier.

Feel free to explore, use, suggest improvements, and contribute to this repository with a pull request!

Link to the full project on GitHub (if you can give it a star 🌟 on GitHub, I'd really appreciate it): https://lnkd.in/dF_4TkV7

Link to the LinkedIn post: https://www.linkedin.com/posts/thiagosilvafarias_postgresql-pgabrduckdb-airflow-activity-7265029605361676289-G89E?utm_source=share&utm_medium=member_desktop

0 notes

Text

Airbyte Enhances Databricks Integration, Enables Data Replication from Diverse Sources into the Databricks Data Intelligence Platform

http://securitytc.com/TFk9PQ

0 notes

Text

Airbyte Success Story: An open-source data integration engine that helps you consolidate your data

0 notes

Text

I've picked our 5 best projects. All of them are free and available on our YouTube channel.

1) How to build a Lakehouse from scratch (rating 9.94): Want to master concepts like Lakehouse, Data Mesh, and data architecture? In this workshop, Nilton brings you the standout talk that won over the biggest Data & AI events in Brazil. Take the opportunity to catch up on the trends discussed by the sector's leading figures! Featuring Nilton Kazuyuki U. https://lnkd.in/dRM8eaXj

2) Data quality in ETL with Pandera (rating 9.80): Pandera is a library for data validation in ETL pipelines that lets you define and apply validation rules on Pandas (Spark) DataFrames to ensure data integrity and quality. Featuring Renan Heckert https://lnkd.in/dKCeF_vv

3) A complete DW with SQL, Airflow, dbt, and Airbyte (rating 9.78): Build a complete Data Warehouse using SQL for data manipulation, Airflow for workflow orchestration, dbt for data transformation, and Airbyte for data integration. Featuring the legend Marc Lamberti https://lnkd.in/dbPQmxMs

4) Build CI/CD pipelines with dbt and GitHub Actions (rating 9.77): Learn to set up Continuous Integration and Continuous Delivery (CI/CD) pipelines using dbt and GitHub Actions, automating tests and deploys and ensuring code quality in data projects. Featuring Bruno Souza de Lima https://lnkd.in/ddYWa5xP

5) Black Friday pipeline with Python, SQL, and AI: October 8, 9, and 10 at 8 p.m. Get ready to boost your Black Friday sales with a complete data pipeline! Over three days, you will learn to extract, transform, and load data using Python, SQL, DBT, and Artificial Intelligence. This is a unique opportunity to master the essential tools for automating and optimizing sales processes. Like and subscribe to the channel so you don't miss it. Featuring Fabio Cantarim Melo https://lnkd.in/dYwzHiY5

0 notes

Text

Google Maps Reviews Scraper | Google Maps Reviews Data Extractor

RealdataAPI / google-maps-reviews-scraper

Are you looking to scrape all reviews for a specific location on Google Maps? The Google Maps Reviews Scraper can do just that! Enter the location's URL, and you'll receive a complete dataset of all reviews. This service is available in Australia, Canada, Germany, France, Singapore, USA, UK, UAE, and India.

How to Use Google Maps Reviews Scraper?

Have you ever thought about extracting Google Maps Reviews but found it challenging? Try this Google Maps Reviews Scraper for free!

Find any location on Google Maps that you want to scrape review data from. Copy the URL of that page and paste it into the input of Google Maps Reviews Scraper.

Then click the RUN option at the bottom of the webpage and give the tool some time to scrape the data for you. It may take a few minutes, so be patient or do something else in the meantime.

After the execution, you can preview and export the scraped data in multiple formats.

What are the Restrictions of Scraping Google Maps Reviews?

We advise limiting the number of reviews you extract from Google. You probably do not need more than one thousand reviews from Google Maps. Google tends to push reviews with pictures and text to the top of the list, so those are among the first to be scraped. Scraping a very large number of reviews from a single place is difficult and leads to an expensive run.

Want a Complex Google Maps Scraper?

Try our actor if you want more complex review data from Google Maps. It is more complex to set up, but it lets you scrape any Google Maps data.

Why Extract Reviews from Google Maps?

There are many uses of scraped Google Maps data, as below:

Find, track, and study your competitors

Perform market research for the most important keywords related to your industry

Perform analysis of reviews for your competitors, and your location

Explore our industry pages for more ideas on how and where to use scraped data. See how companies use data scraping results in travel, retail and eCommerce, and the real estate industry.

What is the Cost of Scraping Google Maps Reviews?

Real Data API offers 5 USD of free monthly credit on the free plan, which is enough to scrape around 100k reviews with this actor at no cost.

However, if you need more data more often, subscribe to the Real Data API monthly plan for 49 USD. We also offer a team plan for 499 USD.
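The figures above imply a rough per-review rate, which the following Python sketch turns into a back-of-the-envelope estimate. It assumes, without confirmation from the pricing page, that cost scales linearly and that a paid plan's full price converts to credit at the same rate as the free 5 USD; treat the output as an illustration, not a quote.

```python
FREE_CREDIT_USD = 5                 # free monthly credit stated above
REVIEWS_PER_FREE_CREDIT = 100_000   # "around 100k reviews" stated above


def estimated_reviews(budget_usd):
    """Estimate how many reviews a budget covers, assuming linear pricing."""
    # Integer arithmetic avoids floating-point rounding in the estimate.
    return budget_usd * REVIEWS_PER_FREE_CREDIT // FREE_CREDIT_USD


for plan, price_usd in [("free", 5), ("monthly", 49), ("team", 499)]:
    print(f"{plan}: about {estimated_reviews(price_usd):,} reviews")
```

Under those assumptions the implied rate is 5 USD per 100k reviews, or 0.00005 USD per review.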

Google Maps Reviews Scraper with Integrations

Lastly, using our integrations, you can connect the Google Maps Reviews data extractor with any web application or cloud service. You can connect with Zapier, Google Drive, GitHub, Airbyte, Slack, etc. You can also try webhooks to take action based on event occurrence.

Using Google Maps Reviews Scraper with Real Data API Actor

The Real Data API Actor gives you programmatic access to our platform. The API is organized around RESTful HTTP endpoints that let you manage, schedule, and run actors. It also gives you access to any dataset and lets you track performance, retrieve outputs, create and update versions, and more.

To use the API from Python, try our client package on PyPI; to use it from Node.js, try the client NPM package.

Check out code examples on the API page.

#GoogleMapsReviewsScraper#ExtractGoogleReviews#ScrapeGoogleReviews#GoogleReviewsAPIScraper#GoogleReviewsDataExtractor#GoogleMapsReviewAPI#GoogleBusinessReviewScraper#GooglePlacesReviewsScraper#WebScraping#DataScraping#DataCollection#DataExtraction#RealDataAPI#usa#uk#uae#germany#australia#canada

0 notes

Text

ICYMI: Airbyte Joins AWS ISV Accelerate Program http://dlvr.it/TB3pMl

0 notes

Video

youtube

Fueling AI with Great Data with Airbyte

0 notes

Text

ETL Showdown: Sling vs. Airbyte vs. Dagster vs. dbt — Which Tool is Right for You?

0 notes

Text

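AI's Potential and Predictions for the Near to Medium Term

This article started with a user lamenting that, after OpenAI upgraded ChatGPT to support PDF uploads, many startups that made their living from that feature were suddenly in crisis. Another user, Ate a pie, replied, and the short reply is well worth reading:

“I don't know why anyone is surprised.

Here is OpenAI's product strategy for the next two years:

+ You will be able to upload anything to ChatGPT
+ You will be able to link any external service, such as Gmail or Slack
+ ChatGPT will have persistent memory, so you will no longer need multiple chats unless you want them (*all chats happen in one window, so ChatGPT can build a complete picture of your preferences)
+ ChatGPT will have a consistent, user-customizable personality, including political bias
+ ChatGPT will support text, voice, and images (diagrams and video still in development?)
+ ChatGPT will keep getting faster until it feels like a real person (response time <50 ms)
+ Hallucinations and non-factual errors will drop rapidly
+ Refusals to answer will decrease as self-moderation improves”

The author of this article, Rob Phillips, then replied in detail. As an engineer who worked with the Siri team, he describes the future potential of AI assistants with real expertise: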

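“OpenAI is building an entirely new kind of computer, not just a large language model (LLM) for middleware/frontends. The key pieces they need to get there include:

1. A persistent grasp of user preferences:

1a. The biggest breakthrough for AI assistants has always been deeply understanding the user's most specific needs.
1b. This is the computer's "holy crap" moment.
1c. We did this on the Viv project in 2016, when our AI learned your preferences from every service you used through Viv and combined them with context, such as you telling us what kind of flowers your mom likes.
1d. This also needs to include access to your personal information in order to infer preferences.

2. External real-time data:

2a. 50% of an LLM's usefulness comes from base training and RLHF fine-tuning (Reinforcement Learning from Human Feedback), but its usefulness grows substantially once the data available to it is extended with external sources.
2b. Zapier, Airbyte, and the like will help, but expect deep integrations with third-party apps and data.
2c. "Chat with PDF" is only the tip of the iceberg. Far more is possible.

3. Applications running on virtual machines:

3a. Context windows are limited, so AI providers will keep benefiting from running tasks directly in Python or Node/Deno virtual environments, which lets them consume large amounts of data the way today's computers do.
3b. Today these are temporary working environments for data analysts, but over time they will become a new kind of Dropbox, where your data persists long-term for additional processing or for cross-file inference and insight.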

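4. Agent task and flow planning:

4a. Without intent, planning cannot happen. Understanding intent has always been the holy grail (of app development), and LLMs finally helped us unlock capabilities we spent years trying to solve with NLP techniques at Viv.
4b. Once intent is accurate, planning can begin. Building an agent planner is delicate work that requires extensive integration with user preferences, third-party datasets, knowledge of compute capabilities, and more.
4c. Most of Viv's real magic was the dynamic planner/mixer, which pulled all of this data and these APIs together and generated workflows and dynamic UI so ordinary consumers could execute them.

5. An expert-level (composable) app store:

5a. Apple initially made the mistake of building a closed app store; later they realized that by opening it up they could profit from compounding creativity.
5b. Although OpenAI says it is focused only on ChatGPT, it will eventually redefine the boundaries of that focus and end up helping create a wave of specialized assistants (agents).
5c. Builders will be able to combine multiple tools into specialized workflows.
5d. Over time, AI will also be able to compose these apps (agents) automatically, learning from earlier builders.

6. Persistent, contextual memory:

6a. Embeddings help, but they lack fundamental pieces such as context switching, conversational pivots, summarization, and enrichment.
6b. Today most of the cost of an LLM comes from prompts, but with embeddings of history and persistence, plus caching of inference, this will unlock long-term memory and point to key themes, topics, emotions, tone, and so on.
6c. Core memory is only the beginning. We still need all the rich information our brains bring to mind when we think of past sunsets, breakups, scientific understanding, or the sensitive context of the people we interact with.

7. Long-polling tasks:

7a. "Agent" is a contested word, but part of the intent is to have tasks that can plan and complete themselves over whatever time horizon is needed. For example: "Notify me when the price of a flight from Montreal to Hawaii drops below $500." This will require coordinating compute across API providers and virtual environments in the cloud.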
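The flight-price example in 7a can be sketched as a simple polling loop. This is only an illustration of the idea, not anything described in the original thread: `fetch_price` and `notify` are hypothetical callables supplied by the caller (a real version would call a flight-price API and a messaging service), and a production agent would schedule checks in the cloud rather than sleep in a loop.

```python
import time


def watch_price(fetch_price, threshold, notify, max_checks=10, interval_seconds=60.0):
    """Poll a price source until it drops below `threshold` or checks run out.

    Returns the price that triggered the notification, or None if the
    threshold was never crossed within `max_checks` attempts.
    """
    for attempt in range(1, max_checks + 1):
        price = fetch_price()
        if price < threshold:
            notify(f"Price dropped to ${price:.2f} (below ${threshold:.2f})")
            return price
        if attempt < max_checks:
            time.sleep(interval_seconds)  # wait before the next check
    return None
```

For a quick dry run you can feed it canned prices, for example `prices = iter([620.0, 540.0, 480.0])` followed by `watch_price(lambda: next(prices), 500, print, interval_seconds=0)`.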

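8. Dynamic user interfaces:

8a. Chat is not the final, be-all interface. Apps have conveniences such as buttons, date pickers, and images because they simplify and clarify actions.
8b. AI will be a copilot, but to be one it needs to adapt to the interface that works best for the specific user. Future user interfaces will be personalized because optimization demands it, so user interfaces will be dynamic.

9. API and tool composition:

9a. Expect future AI to generate custom "apps" in which we can build our own workflows and compose APIs, without waiting for a big startup to take on the project.
9b. Fewer apps and startups will be needed to produce frontends; AI will be better at combining a set of tools and APIs and, for a fee, generating the frontend that best meets the user's needs.

10. Assistant-to-assistant interaction:

10a. There will be countless assistants, each helping humans and other assistants work toward some higher (capability) intent.
10b. At the same time, assistants will need to learn to interface with one another through text, APIs, file systems, and the other modalities that agents, startups, and humans all use, as these applications become more deeply embedded in our world.

11. Plugin and tool stores:

11a. Specialized assistants are only possible by composing tools, APIs, prompts, data, preferences, and so on.
11b. The current plugin store is still at an early stage, so expect more work to come; many plugins will be brought in-house as they become more mission-critical.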

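This is just (my) 10-minute brainstorm; there is undoubtedly more to come, including internet search and scraping, community (for intents, building, RLHF, etc.), dynamic API generators and connectors, cost optimization, tool building, and information ingestion through different inputs such as glasses and earbuds.

If you think it is too late to get into AI, know that the above is only about 25% of what is actually needed, and more ideas will arrive as we iterate and get more creative.

We are building these pieces at @FastlaneAI, based on a somewhat different understanding: OpenAI will never be the best at everything. So we want to let you use the best AI in the world, no matter who built it (it could even be you!).”

Original post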

0 notes

Text

Largest Data Engineering Survey Reports on Adoption of Modern Data Stack Tools

Airbyte, creators of a fast-growing open-source data integration platform, has released the results of the biggest data engineering survey in the market, which provides insights into the latest trends, tools, and practices in data engineering, especially the adoption of tools in the modern data stack. Its first worldwide State of Data survey displays results in an interactive format so that anyone can drill further into the information using filters to see, for example, adoption patterns by organization size.

0 notes

Text

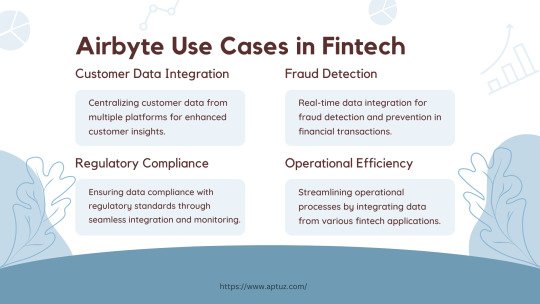

Explore the impactful use cases of Airbyte in the fintech industry, from centralizing customer data for enhanced insights to real-time fraud detection and ensuring regulatory compliance. Learn how Airbyte drives operational efficiency by streamlining data integration across various fintech applications, providing businesses with actionable insights and improved processes.

Know more at: https://bit.ly/3UbqGyT

#Fintech#data analytics#data engineering#technology#Airbyte#ETL#ELT#Cloud data#Data Integration#Data Transformation#Data management#Data extraction#Data Loading#Tech videos

0 notes

Text

6 Ways to Integrate Web Scraping into a Digital Marketing Strategy

Email scraping tools include Voila Norbert, ListGrabber, AeroLeads, and more. If you want a lifetime license, you need to make a one-time payment. Capturing leads, nurturing them, and finally converting them into sales leads is not an easy task; if you work in sales, you already know how the process goes. The lists in Skrapp are quite useful and readily available inside the Chrome extension itself.

We're connecting companies from the digital sector for a shared purpose. These regulations also set out your responsibilities for securely storing and protecting personal data. You're also not allowed to sell information about an individual to a third party unless they give you explicit permission.

What Is a Social Media Scraping Tool?

Semrush is an all-in-one digital marketing solution with more than 50 tools for SEO, social media, and content marketing. In this article, we give you tips on how to build an email list with the help of email scraping. However, before we move on to email scraping, let's understand what email marketing is all about and how it can help affiliate marketers. With Import.io, users can schedule data extraction, automate web interactions and workflows, and gain valuable insights with reports, charts, and visualizations.

Unlike a Profile Extension Table, which stores aggregated data, a Supplemental Table holds unaggregated data. For example, John Doe performed multiple searches or made multiple purchases. One of the key features of Apify is the Apify Proxy, which provides HTTPS support, geolocation targeting, intelligent IP rotation, and Google SERP proxies. You can export your data in JSON, XML, and CSV formats, and Apify integrates seamlessly with Zapier, Keboola, Transposit, Airbyte, webhooks, and RESTful APIs. The brand does a great job of highlighting its products in a fun, non-promotional way.

Customer Persona/Avatar Development

Edit the visual appearance of HTML tags using CSS, and add web forms, action buttons, links, and so on. After saving, the modified file stays on the computer with the updated functions, design, and targeted actions. In the free version, you can download up to 40 projects with no more than 500 files per project.

How do I create content for my affiliate marketing site?

Create content with high search volume.

Write about events related to your niche.

Write detailed and honest product reviews.

Write for the wider audience.

Research your prospects and your products.

Check rules and regulations: scraping publicly available data online is generally legal, but you have to consider data protection and user privacy.

An affiliate email marketing campaign is the promotion of affiliate products through an email sequence. Other factors are openness to a change of strategy, searching for new campaigns, running tests and, of course, marketing analysis. Success is achieved by those who do not stop at trifles but look for ways to scale. To run one campaign, you need to do a lot of research on the target group, GEO selection, offers, and so on, as well as prepare consumables, including a landing page.

Email scraping is a way for marketers to gather contact information on potential leads. A program scans websites, extracts email addresses and other details, then uploads the data to a storage format such as a database or Excel file. Organizations can also use email scrapers to collect data from social media.

"A common mistake B2B organizations make is educating the buyer on their own company, product, or service. The buyer isn't ready for that; they are just beginning to understand their problem."

Download our guide to optimizing email marketing for conversions and learn how to grow your email list, ensure deliverability, and increase engagement. Devise a brand positioning statement that your team and prospective customers can believe in, and you'll be ready for the next step. It is also possible to buy a landing page from somewhere other than the CPA network, or to use an already prepared landing page. If Russian scares you, just use the translation option that Google provides.

However, marketers use email addresses as a starting point for cold email marketing. Finding that information manually is a lengthy process that isn't always reliable. That's why many businesses rely on email scraping to streamline the collection of contact information.

You need to understand how those laws affect your use of email scraping programs.

Join us to learn how to use Byteline's no-code Web Scraper along with its Chrome extension.

With the free version of the app, you get 50 free searches per month and can upgrade your account for more.

Video also delivered the most ROI when compared to other formats such as images, blog posts, podcasts, and case studies.

It is also possible to add extraction rules inside the output option in order to create powerful extractors. Each row of the table is turned into a JSON object in which the keys are the column names and the values are the contents of the cells. ScrapingBee allows you to easily get formatted data from HTML tables.
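The table-to-JSON mapping described above (one object per row, keyed by column name) can be sketched with Python's standard library alone. This is not ScrapingBee's API or implementation, just a minimal illustration of the same idea; it assumes a simple table whose first row holds the column names and ignores features such as `colspan` or nested tables.

```python
import json
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of every cell, grouped by table row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_to_records(html):
    """Turn an HTML table into a list of dicts keyed by the header row."""
    parser = TableParser()
    parser.feed(html)
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


sample = (
    "<table>"
    "<tr><th>name</th><th>price</th></tr>"
    "<tr><td>Widget</td><td>9.99</td></tr>"
    "<tr><td>Gadget</td><td>24.50</td></tr>"
    "</table>"
)
print(json.dumps(table_to_records(sample), indent=2))
```

All values stay strings here; converting prices or dates to typed values is left to whatever consumes the records.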

0 notes

Text

The Importance of Data Engineering for Successful AI with Airbyte and Zilliz

http://securitytc.com/TFS1XR

0 notes

Text

Open-source data integration for modern data teams Get your data pipelines running in minutes. With pre-built or custom connectors. From the Airbyte UI, API or CLI. Join 3,000+ companies syncing data with our open-source ELT platform.

— https://airbyte.io/

0 notes