
Amazon Aurora Insights from Minjar

Minjar is an AWS Premier Consulting Partner and offers a comprehensive portfolio of services, including Managed Cloud, Cloud Migration and our advisory offering, Smart Assist. Database solutions are an integral part of our offerings: we engage with customers through architecture advisory, technical design, implementation and migration, and ongoing managed services and optimization of database environments. As part of these activities, Minjar looks at performance and cost optimization opportunities, and we have seen customer use cases where existing MySQL databases can be moved to Aurora for better price performance. The Minjar Cloud practice team constantly evaluates opportunities to optimize client databases with Aurora where it is applicable. In this post we share some of our learnings and best practices on Amazon Aurora.

Amazon Aurora is a relational database engine from Amazon Web Services. The Aurora engine is MySQL-compatible, which means applications and drivers that work with MySQL can be used with Aurora with little or no change.

Amazon Relational Database Service (RDS) manages Aurora databases, handling provisioning, patching, backup, recovery and other tasks. Aurora storage starts at 10 GB and automatically scales up to a maximum of 64 TB. The service divides a database volume into 10 GB chunks that are spread across many disks, and each chunk is replicated six ways across three AWS Availability Zones (AZs). If data in one AZ becomes unavailable, Aurora can recover it from another AZ. Aurora storage is also self-healing: data blocks and disks are continuously scanned for errors and repaired automatically.
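To make the chunking concrete, here is a back-of-envelope sketch that uses only the figures above (10 GB chunks, six copies, three AZs). It assumes every chunk is fully allocated and reads 64 TB as 64 × 1024 GB; Aurora's real allocation and billing details may differ.

```python
import math

SEGMENT_GB = 10                  # chunk size described above
COPIES_PER_SEGMENT = 6           # six-way replication
AVAILABILITY_ZONES = 3           # copies are spread across three AZs
MIN_GB, MAX_GB = 10, 64 * 1024   # 10 GB minimum, 64 TB maximum (binary TB assumed)


def storage_layout(volume_gb: float) -> dict:
    """Rough count of storage chunks and their copies for a given volume size."""
    if not MIN_GB <= volume_gb <= MAX_GB:
        raise ValueError(f"volume must be between {MIN_GB} and {MAX_GB} GB")
    segments = math.ceil(volume_gb / SEGMENT_GB)
    return {
        "segments": segments,
        "segment_copies": segments * COPIES_PER_SEGMENT,
        "copies_per_az": COPIES_PER_SEGMENT // AVAILABILITY_ZONES,
        "replicated_gb": segments * SEGMENT_GB * COPIES_PER_SEGMENT,
    }


print(storage_layout(1024))
# {'segments': 103, 'segment_copies': 618, 'copies_per_az': 2, 'replicated_gb': 6180}
```

A 1 TB volume therefore maps to 103 chunks, each stored twice in every one of the three AZs.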

Developers can scale read capacity and improve availability with Amazon Aurora Replicas, which share the same underlying storage volume as the primary instance. An Aurora Replica can be promoted to primary without any data loss, which provides fault tolerance if the primary instance fails. With at least one Aurora Replica provisioned, the service automatically fails over within about one minute; without a replica, failover takes about 15 minutes.

Amazon Aurora was built to deliver significantly improved parallel processing and concurrent I/O. In traditional database engine architectures (such as MySQL, Microsoft SQL Server and Oracle), all layers of data functionality, including SQL, transactions, caching and logging, reside in a single box.

When you provision Amazon Aurora, however, logging and storage are moved into a “multi tenant, scale-out database-optimised storage service” that is deeply integrated with other AWS compute and storage services. Besides letting you scale with far less overhead, this design also lets you restart the database engine without losing its cache.

Some key reasons we recommend Amazon Aurora over MySQL are:

Up to 5x the performance of standard MySQL, as claimed by AWS

Designed to detect database crashes and restart without the need for manual crash recovery

SSL (AES-256) encryption to secure data in transit

Up to 15 read replicas

Migration approach

You can easily migrate from RDS MySQL to Aurora: an Aurora instance can be provisioned from any existing RDS MySQL snapshot.

Amazon Aurora is fully compatible with AWS Database Migration Service (DMS), so you can migrate any existing database to Aurora using DMS. If you also need to change the database engine, first convert the schema with the AWS Schema Conversion Tool.


7 Basic Freedoms Promised on AWS Cloud

Most enterprises today have migrated to the cloud to reduce the burden of running their own infrastructure and to stay ahead in the game. As an AWS Premier Consulting Partner, we have helped customers migrate securely and easily to the AWS Cloud.

This year, at the AWS re:Invent conference in Las Vegas, Amazon introduced a host of new services to give complete freedom to customers looking to migrate their on-premises infrastructure to the AWS Cloud.

Take a look:

1. Freedom to build unfettered

If you can't build fast, you are out of the league.

In response, AWS has launched a series of new services that give developers robust infrastructure and enable faster deployments.

2. Freedom to get real value from data

Complexity, slow analysis and expensive BI tools are a few of the challenges businesses have faced over the years. In response, AWS announced a new service called Amazon QuickSight, a fast, easy-to-use business intelligence (BI) service that is deeply integrated with the AWS platform and costs about 1/10th as much as traditional BI software.

3. Freedom to get in and out of the cloud quickly

Streaming data into the cloud is no longer a challenge.

AWS announced Amazon Kinesis Firehose, a service for securely streaming data into AWS.

One big challenge businesses face today is dealing with terabyte- or petabyte-scale data loads. From buying lots of removable storage, to running encryption and data slicing processes, to loading the data onto a storage device and then shipping it, the process is just cumbersome.

In response to this challenge, AWS announced AWS Import/Export Snowball, a hardened storage appliance that can hold 50 TB.

4. Freedom from bad database relationships

Businesses today are largely shifting from expensive commercial database software to open source database technologies such as MySQL and PostgreSQL, but migration can be a big headache and cause long downtime. With the new AWS Database Migration Service, you can migrate to AWS with minimal downtime. Amazon Aurora offers MySQL compatibility, Amazon RDS also supports PostgreSQL, and MariaDB support is another new addition from Amazon. And if you are looking to migrate between different database engines, try out the new AWS Schema Conversion Tool.

5. Freedom to Migrate

Migrating to cloud is now as easy as getting a cup of coffee!

Yes, Amazon has announced a stream of new services to give customers freedom and control and to help them move to the cloud seamlessly.

Still unsure whether you need to migrate your business to the cloud? Reach out to us, and we will be more than happy to assist you.

6. Freedom to secure your cake and eat it too

Being secure in the cloud and having full control over your data is what matters to us.

The new compliance and security offerings announced by Amazon include support for SEC Rule 17a-4(f), which allows companies to retain financial records in Amazon Glacier using Vault Lock.

AWS Config Rules and Amazon Inspector are further new services intended to remove security barriers from your cloud journey.

7. Freedom to say yes

Moving to the cloud is hard without a cloud strategy in place. We ensure secure cloud adoption for enterprises and give you the freedom to stay agile in the game with AWS services.

With the promise of complete 'Freedom' along with scalability, agility, and responsibility, Amazon also published the AWS Well-Architected Framework, an insightful guide to AWS Architecture Solutions.

And, if you have any queries related to Cloud, connect with us at [email protected].


Minjar recognized as AWS Premier Consulting Partner for 2016

We are pleased to announce that, for the second year in a row, we have been recognised at re:Invent as an AWS Premier Consulting Partner, this time for 2016. We were also named one of the top system integrators.

Hang on! That’s not the end. We were also recognized as one of the top AWS managed services partners.

We are humbled by this gesture from Amazon Web Services leadership team. It wouldn’t have been possible to be part of the premier league without the support of our awesome customers and talented team.

“We are very excited to be in the league of AWS MSP partners and to bring intelligent solutions to enterprise customers to simplify their cloud adoption,” says Vijay Rayapati, CEO at Minjar.

“Minjar believes in deeply understanding customer problems, building out-of-box solutions and automating everything! Hence its customers are able to focus on innovation, while they work with an expert partner and highly responsive team to get complete business assurance for their mission critical applications“ says Anand, Co-founder & President at Minjar.

Again, we would like to thank all our customers for their immense support throughout our journey.

To know more about Minjar, visit www.minjar.com or write to us at [email protected].


AWS re:Invent 2015 day 3 keynote - A quick recap

Just when the cloud ecosystem thought it had recovered from the rapid-fire announcements of Day 2, AWS re:Invent 2015 in Las Vegas had bigger plans in store!

In even higher spirits on Day 3 of the event, Dr. Werner Vogels took the stage, revealed some of the most anticipated announcements and raised the stakes for other players in the market.

Here’s a recap of the announcements:

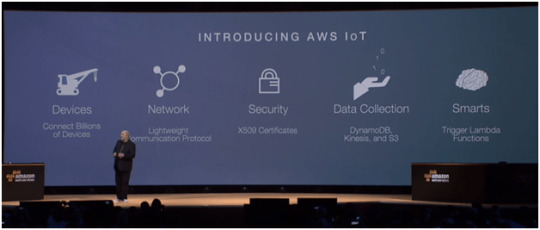

AWS IoT

AWS IoT is a managed cloud platform that lets connected devices easily and securely interact with cloud applications and other devices. AWS IoT can support billions of devices and trillions of messages, and can process and route those messages to AWS endpoints, such as AWS Lambda and Amazon Kinesis, and to other devices reliably and securely.

AWS Mobile Hub

AWS Mobile Hub is the fastest way to build mobile apps powered by AWS. It lets you easily add and configure features for your apps, including user authentication, data storage, backend logic, push notifications, content delivery, and analytics — all from a single, integrated console, and without worrying about provisioning, scaling, and managing the infrastructure.

Amazon EC2 Container Service

You can now use the Amazon ECS CLI with Docker Compose to easily launch multi-container applications. AWS has also added Availability Zone awareness to the Amazon ECS service scheduler, allowing the scheduler to spread your tasks across Availability Zones.

AWS Lambda

You can now develop your AWS Lambda function code using Python, maintain multiple versions of your function code, invoke your code on a regular schedule, and run your functions for up to five minutes.
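For readers new to Python on Lambda, a minimal handler looks roughly like the sketch below. `lambda_handler` is the handler name the Lambda console suggests by default for Python; the `name` field in the event is purely hypothetical.

```python
import json


def lambda_handler(event, context):
    """Minimal Python Lambda handler.

    'name' is a hypothetical field in the incoming event; 'context' is the
    runtime context object Lambda passes in (unused here).
    """
    name = event.get("name", "world")
    return {"message": f"Hello, {name}!"}


if __name__ == "__main__":
    # Local smoke test; on AWS, Lambda itself calls lambda_handler(event, context).
    print(json.dumps(lambda_handler({"name": "Minjar"}, None)))
```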

Amazon EC2 X1

Amazon EC2 X1 Instances are high-memory instances designed for in-memory databases such as SAP HANA as well as memory intensive and latency sensitive workloads such as Microsoft SQL Server, Apache Spark, and Presto. X1 instances will have up to 2 TB of instance memory, and are powered by four-way 2.3 GHz Intel® Xeon® E7 8880 v3 (Haswell) processors, which offer high memory bandwidth and a large L3 cache to boost performance of in-memory applications.

After those thick and fast major announcements, the crowd headed to some crazy party time hosted outdoors in the LINQ parking lot. The re:Play madness, sponsored by Intel, featured head-spinning, groovy music performances by popular DJs (including DJ Zedd), free food no one could miss out on, drinks, and tons of fun activities featuring drones, video games, rides and more.

If you haven’t had a chance to meet our Minjas yet, drop by booth #129.

Lots of free swag is reserved at our booth for the last day of AWS re:Invent. Don’t forget to ask for the coupon cards for discounts on Botmetric, your virtual cloud engineer.


AWS re:Invent 2015 day 2 keynotes - A quick recap!

This year, the largest cloud conference, AWS re:Invent 2015, was much bigger, with more than 19,000 attendees and 278 breakout sessions; the Sands Expo hall was full to overflowing. Andy Jassy, SVP at AWS, shared numbers describing AWS’s quarter-over-quarter growth: with over 1 million active customers, Amazon EC2 usage increased by 95% and S3 data transfer by 120%.

Here’s a recap of the major announcements from Day 2 of AWS re:Invent:

Amazon QuickSight

Amazon kicked off the session by announcing Amazon QuickSight, a fast, cloud-powered business intelligence (BI) service that makes it easy to build visualizations, perform ad-hoc analysis and quickly get business insights from data. Amazon QuickSight integrates with AWS data stores, flat files and third-party sources, and it remains super-fast and responsive while seamlessly scaling to hundreds of thousands of users and petabytes of data.

Amazon Kinesis Firehose

Amazon Kinesis Firehose was purpose-built to make it even easier to load streaming data into AWS. Users simply create a delivery stream, route it to an Amazon Simple Storage Service (S3) bucket and/or an Amazon Redshift table, and write records (up to 1000 KB each) to the stream. Behind the scenes, Firehose takes care of all the monitoring, scaling and data management.
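On the producer side, the main thing to get right is the record itself. Below is a minimal sketch that serializes an event as newline-terminated JSON (Firehose concatenates records in the S3 objects it writes, so a delimiter helps downstream parsing) and enforces the per-record limit quoted above, read here as 1000 KiB. The stream name and event fields are hypothetical, and the boto3 call is shown only as a comment.

```python
import json

# Per-record limit quoted above; interpreted as 1000 KiB (an assumption).
MAX_RECORD_BYTES = 1000 * 1024


def to_firehose_record(event: dict) -> bytes:
    """Serialize one event as a newline-terminated JSON record."""
    data = (json.dumps(event, separators=(",", ":")) + "\n").encode("utf-8")
    if len(data) > MAX_RECORD_BYTES:
        raise ValueError(f"record is {len(data)} bytes; limit is {MAX_RECORD_BYTES}")
    return data


# Sending it with boto3 would look roughly like this ("my-delivery-stream" is hypothetical):
#   boto3.client("firehose").put_record(
#       DeliveryStreamName="my-delivery-stream",
#       Record={"Data": to_firehose_record({"user": 42, "action": "login"})},
#   )
print(to_firehose_record({"user": 42, "action": "login"}))
# b'{"user":42,"action":"login"}\n'
```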

AWS Snowball

Amazon announced the release of AWS Import/Export Snowball. Built around appliances that AWS owns and maintains, the new model is faster, cleaner, simpler, more efficient and more secure. Users don’t have to buy storage devices or upgrade their network. Snowball is designed for customers that need to move lots of data (generally 10 terabytes or more) to AWS on a one-time or recurring basis.

MariaDB

AWS announced that MariaDB is now available as a fully managed service through Amazon Relational Database Service (RDS), with up to 6 TB of storage, 30,000 IOPS and support for high-availability deployments. Amazon RDS for MariaDB is available in all commercial regions, and you can start running production workloads from day one with high availability across multiple Availability Zones.

AWS Config rule

AWS has extended Config with a powerful new rule system, and users can define their own custom rules. Rules can be targeted at specific resources (by ID), at specific types of resources, or at resources tagged in a particular way. Rules run when those resources are created or changed, and can also be evaluated periodically (hourly, daily and so forth).
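Custom rules are backed by AWS Lambda functions. The sketch below assumes a hypothetical rule that requires every resource to carry an `Owner` tag: it reads the configuration item from the `invokingEvent` JSON that AWS Config passes in and builds an evaluation. Reporting the result back (via `put_evaluations`) is shown only as a comment, so treat this as an outline of the flow rather than a production rule.

```python
import json

REQUIRED_TAG = "Owner"  # hypothetical tag key this example rule checks for


def evaluate(configuration_item: dict) -> str:
    """Decide compliance for one configuration item."""
    if configuration_item.get("configurationItemStatus") == "ResourceDeleted":
        return "NOT_APPLICABLE"
    tags = configuration_item.get("tags") or {}
    return "COMPLIANT" if REQUIRED_TAG in tags else "NON_COMPLIANT"


def lambda_handler(event, context):
    item = json.loads(event["invokingEvent"])["configurationItem"]
    evaluation = {
        "ComplianceResourceType": item["resourceType"],
        "ComplianceResourceId": item["resourceId"],
        "ComplianceType": evaluate(item),
        "OrderingTimestamp": item["configurationItemCaptureTime"],
    }
    # A deployed rule would report this back to AWS Config, e.g.:
    #   boto3.client("config").put_evaluations(
    #       Evaluations=[evaluation], ResultToken=event["resultToken"])
    return evaluation
```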

Amazon Inspector

Amazon Inspector is an automated security assessment service that helps minimize the likelihood of introducing security or compliance issues when deploying applications on AWS. Amazon Inspector automatically assesses applications for vulnerabilities or deviations from best practices. After performing an assessment, Amazon Inspector produces a detailed report with prioritized steps for remediation.

It was indeed an incredible session, with amazing announcements focused on security, BI and data analytics. On that note, if you are at re:Invent, drop by booth #129 to catch up with our experts and get exciting offers and prizes.

Day 2 fun at booth #129 – Loads of visitors, T-shirts, product demos, coupon cards, offers and prizes.


Only Cloud Can Deliver ITSM Nirvana

The emergence of Cloud technologies has been a paradigm shift that is not only disrupting how Information Technology is used in business but also redefining how industries operate. While these big shifts will continue to play out at a larger level, another shift is playing out for the IT operations practitioner in the Enterprise, one that affects them day to day. Cloud adoption brings an inherent expectation of agility and responsiveness from any IT service delivered; historically, however, businesses have seen IT as inflexible and not aligned with the speed the business requires. At the core of this has been the debate around the benefits and challenges of the ITSM-based operations that a significant number of Enterprise IT teams have adopted over the years. This water cooler conversation graphic aptly highlights the story.

However, in this post I offer a completely contrarian view on the relationship between Cloud and ITSM. If we abstract away the technology behind Cloud and ask what Cloud really means to a consumer, the answer can be summarized as four E’s:

1. Ease of Use

2. Elastic

3. Evergreen

4. Everything as a Service

Given this context, let’s take a minute to revisit the definitions of Service and Service Management:

A service is a means of delivering value to customers by facilitating outcomes customers want to achieve without the ownership of specific costs and risks.

Service management is a set of specialized organizational capabilities for providing value to customers in the form of services.

So if Cloud is all about delivering exceptional service and ITIL is all about good practices for managing service lifecycles, why is there an assumed incompatibility between the two?

The answer is simple: ITIL’s philosophy has NOT been fully adopted to date. ITIL has been successful only in the IT operations realm and even today has not found proponents in the application realm of IT. This limited adoption doesn’t mean that the ITIL framework lacks these components. The philosophy articulated in ITIL V3 is a complete service lifecycle approach, yet Enterprise implementations have stayed focused on Service Transition and Service Operation in the IT Infrastructure Operations domain and have not yet espoused the spirit behind the Service Strategy and Service Design components of ITSM. In addition, to make adoption successful, some aspects were made far more rigid than was ever envisioned. To quote Sharon Taylor, Chief Architect, ITIL Service Management Practices:

“…The philosophy of ITIL is: good practice structures with room for self-optimization…

…Instead of rigid frameworks, preventing graceful adaptation under changing conditions, there remains room for self-optimization”

Given this history and the current state of affairs in Enterprise IT, I am convinced that only Cloud can deliver ITSM Nirvana. The reasons are:

• Cloud shifts the focus from IT infrastructure to the “Workload” on the Cloud and the “Service” it delivers

• Cloud frees up time otherwise spent worrying about IT infrastructure operations, which are delivered as a service, and that time can be dedicated to Service Strategy and Design

• Cloud forces focus and attention on overall Application stack and eliminates a fragmented – Application/ Infrastructure/ Data based division of IT

• Cloud therefore truly allows a focus on business services and on defining Business Service Utility and Warranty as envisioned in ITSM

As Enterprise Cloud adoption moves into the mainstream, this topic will continue to interest IT practitioners. I am passionate about helping clients make this journey successfully and committed to sharing best practices in this area.

As a start, I am really excited to share a Whitepaper that Minjar and Amazon Web Services have co-authored on “ITIL Asset and Configuration Management on Cloud”. Would love to hear from you about your views as well.

Author : Anindo Sengupta - Chief Delivery Officer at Minjar


Minjar turns 3 and AWS CTO wishes us a happy birthday!

We are extremely happy to announce that we have successfully completed three glorious years on cloud. On that note, here are some quick facts on our achievements from last year.

1. Secured Premier Consulting Partner status at re:Invent 2014.

2. We are the first certified and audited managed service partner of Amazon Web Services from India.

3. We have grown 100x in revenue since we started in 2012.

4. Acquired enterprise customers from various industry verticals such as Banking, E-commerce, Media, Telecommunication, SaaS and more.

5. We launched our own software product, “Botmetric”, in Nov 2014 to make DevOps on AWS easy. Botmetric will go full scale in Nov 2015.

This shows that we are growing and evolving every day. At Minjar, we believe in “Customer Obsession” and we do what it takes for “Customer Success”.

We are also pretty excited about having received wishes from the Amazon Chief Technology Officer Werner Vogels on our social pages.

Our CEO @vijayrayapati has replied ‘We wouldn't be where we are without your good wishes and constant encouragement from our early days as a company. You inspire not just our engineers but entire company!’

On this joyous occasion, we would also like to thank all our customers who have supported us throughout our journey.

So Minjar has turned 3, we are young, stubborn and ambitious! Want to join us on this exciting cloud journey? Visit our careers page.


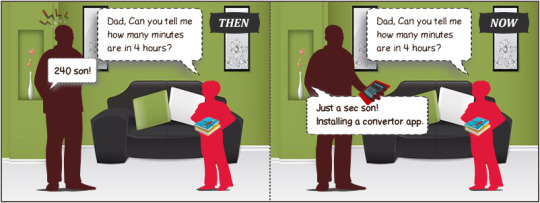

How Technology Has Changed The Way We Think!

It wouldn’t be wrong to call every day a techno-day. From the moment we wake up until we go to bed, we are in constant touch with technology. Ever since mobile phones arrived, we have completely forgotten about the bedside alarm clock: we set an alarm on our phone, which most of us keep beside the pillow at night. We read the news more on a phone or laptop than in a newspaper. Even for a small calculation, we use the calculator on our phone or laptop rather than our brains. We remember people’s birthdays because we keep an alert on our phone or because Facebook sends a birthday alert. Technology is changing our lives in every small way. Here’s an overview of how technology has changed the way the human brain thinks.

WakeyWakey: Have you ever thought about what it would be like to wake up without the alarm on your phone ringing, at least on office days? Most of us would not even make it to the office on time. Our brains function the way we train them. Before the techno-days, people woke up in the morning without any alarm because that is how they had trained their brains to act.

Everyday Calculation: Even for a trivial calculation, we reach for the calculator on our phone. Though most of us memorized the multiplication tables in childhood, none of us use them in real life. We rely completely on the calculator.

Loved one’s Calendar: We don’t remember birthdays or special days because we rely completely on our phones. Our memory is getting worse because we keep a note or an alert for everything on our phone. We don't even try to remember routes, since we have friendly online maps. We keep blaming stress and age for our weak memory, but isn’t it also because of technology and our dependence on it?

Google Everything: While Google plays a very important role in educating us about everything, it is also making our brains dull and inactive. We Google everything without even thinking about an answer to the question first. Whether we are designers, coders, artists or writers, we don’t rely on our own brains to be creative. The first thing we do is Google, and only then do we ask the brain to act. Why don’t we take a moment to think and remember for a change, at least sometimes?

Online Existence: We exist more online than offline. We are super active on Facebook, LinkedIn and Twitter but hardly share a greeting with the neighbor next door. We are connected with our entire school and college batch on WhatsApp but hardly meet them in real life. Even during dinner dates and outings with friends or family, we spend most of the time online on our phones.

So yes, technology is greatly changing the way we live and the way our brains function. We can’t complain, because it makes our lives much more convenient and fast. However, we need to train our brains to stay active and creative before they turn completely dull and dependent.


Does DevOps Drive Cloud?

This is one of the technology debates buzzing around the software world these days. #DevOps is trending on Twitter, often alongside #Cloud, which suggests the two are closely linked. Let’s simplify and try to understand the duo: how they are related and whether DevOps really drives the cloud.

The software industry is transitioning from being product-oriented to service-oriented. Earlier, software companies used to develop products and hand them over to customers, but now they also take care of operations after the product has been delivered. With Cloud, this is even more true. End clients are interested in service delivery as well as product delivery, and they put more emphasis on experiences, which led to the emergence of DevOps.

DevOps is a software development approach that focuses on communication, collaboration, integration, automation and cooperation. It recognizes the interdependence of the different teams that develop software and then run and maintain it, and it defines an effective process all the way from software development to quality assurance. Because DevOps aligns well with the cloud, it improves efficiency, which is a definite plus.

Besides, the industry is becoming more agile and innovative, which again calls for an approach like DevOps. To keep up with the fast-moving world, companies need to pick up the pace, shorten work cycles and increase delivery efficiency. DevOps gives a boost to all three, which is why firms are following the trend.

Firms that manage cloud infrastructure need to coordinate their functions and operations hand in hand. The market understands the need for a cross-functional structure, which is why most cloud firms are adopting DevOps: it enhances collaboration between teams and ensures that the Cloud is implemented and operates effectively. Someone rightly said, “If Cloud is an instrument, DevOps is the musician that plays it”.

Would you like to explore more about DevOps and Cloud? Give us a shout here; we would love to help you get the best out of both.

Stay tuned!


AWS Summit stall to hit SG and MY

AWS Summit is a one-day event where you will connect, collaborate and learn all about the AWS platform, with the sessions covering hot topics like architecture best practices, new services, performance, operations and more.

Minjar is excited to present at AWS Summit Singapore and Malaysia 2015. Do not miss the talk by our Chief Delivery Officer, Anindo Sengupta.

Register here for the Singapore event.

Register here for the Malaysia event.

As a Premier Consulting and Managed Services Partner for AWS, Minjar brings significant expertise in helping clients deliver more value from their services to the business. Also, drop by our booth and have a chat with our cloud experts.

We would love to see you all at the event!

Learn more about the AWS Summit here, and if you want to spend some time with our experts, mail us at [email protected].

ABOUT ANINDO SENGUPTA:

Anindo is an expert in helping organizations design and deliver efficient business solutions, through innovative use of cloud and intelligent automation, continuously redefining benchmarks and improving service maturity. An experienced leader in Technology services, Anindo has a rare 360 degree view of the industry, having spent 15+ years across both enterprise and service provider side technology leadership roles at organizations such as GE, Cognizant, and Microland.

As a business leader, Anindo helps accomplish organizational goals by establishing high-performance teams and building an adaptive organizational culture.


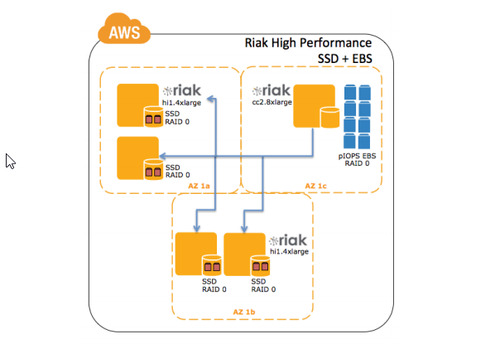

Understanding Riak Clusters - Designing a Backup strategy

One of our customers was running a Riak cluster on Amazon EC2, and we had to design a backup strategy for this cluster. To come up with a backup strategy, one must first understand how Riak works, the kind of problems it solves, and how things like consistency are handled.

Image source: docs.basho.com

So What Is Riak?

Riak is a scalable, highly available, distributed key-value store. Like Cassandra, Riak was modelled on Amazon’s description of Dynamo, with extra functionality such as MapReduce, secondary indexes and full-text search. A comparison of Riak with other NoSQL databases is out of the scope of this article, but check out this great summary by Kristof.

How Do Riak Clusters Work?

Data in a Riak cluster is distributed across nodes using consistent hashing, which spreads data evenly across all nodes in the cluster. Riak’s clusters are masterless: every node runs a complete, independent copy of the Riak package, and no node plays a special role. This design ensures fault tolerance and scalability.
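The idea behind consistent hashing can be sketched in a few lines. This is a simplified model, not Riak’s implementation: it assumes a 2^160 SHA-1 ring split into 64 equal partitions (Riak’s default ring size), assigns partitions to nodes round-robin (Riak’s real claim algorithm is more involved), and hashes a plain `bucket/key` string, whereas Riak hashes its own binary encoding of the bucket/key pair. Node names are hypothetical.

```python
import hashlib

RING_BITS = 160   # SHA-1 keyspace
RING_SIZE = 64    # assumed partition count (Riak's default ring size)


def build_ring(nodes, ring_size=RING_SIZE):
    """Assign partitions to nodes round-robin (a simplification of Riak's claim algorithm)."""
    return [nodes[i % len(nodes)] for i in range(ring_size)]


def preference_list(ring, bucket, key, n_val=3):
    """Return the nodes that hold the n_val replicas of bucket/key."""
    digest = hashlib.sha1(f"{bucket}/{key}".encode("utf-8")).digest()
    position = int.from_bytes(digest, "big")            # point on the 2**160 ring
    first = position // (2 ** RING_BITS // len(ring))   # partition owning that point
    # Replicas go to the next n_val partitions clockwise around the ring.
    return [ring[(first + i) % len(ring)] for i in range(n_val)]


ring = build_ring(["riak1", "riak2", "riak3", "riak4", "riak5"])
print(preference_list(ring, "users", "alice"))
```

Because neighbouring partitions belong to different nodes, the three replicas of a key land on three different nodes in this model, and any node can compute the same preference list without asking a master.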

How Does Replication Work?

Riak allows you to tune the replication number, called the n value in Riak speak. The default value is 3, which means that each object is replicated 3 times. At the time of this writing, Basho says it is almost 100% certain that the three replicas land on three different physical nodes, and it is working towards guaranteeing this in the future.

Riak’s take on CAP (as an aside, you must read this excellent article: Plain English Introduction To CAP Theorem) is to let you tune N, the number of replicas per bucket; R, the number of replicas that must respond to a read; and W, the number of replicas that must acknowledge a write. Riak can technically run on fewer nodes, but ideally you should run a cluster of at least 5 nodes. Here are the details on why.

To summarize:

If you have a 3-node cluster with an N value of 3: when a node goes down, your cluster still wants to replicate to 3 nodes but only 2 are available, so there is a risk of performance degradation and data loss.

If you have a 4-node cluster with an N value of 3: reads use a quorum of 2 and writes use a quorum of 2. When a node goes down, 75 to 100% of your nodes need to respond.

The best configuration is N value + 2 nodes: a 5-node cluster with N=3, R=2 and W=2 offers the best balance of scalability, high availability and fault tolerance.
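The R/W/N trade-offs above follow standard Dynamo-style quorum arithmetic, sketched below. Two caveats: Riak uses sloppy quorums (fallback nodes can stand in for unreachable primaries), so R + W > N is not a strict read-your-writes guarantee in Riak, and the failure count here considers only the primary replicas of one key.

```python
def default_quorum(n_val: int) -> int:
    """Majority of replicas: what Riak's 'quorum' setting resolves to."""
    return n_val // 2 + 1


def read_overlaps_write(n: int, r: int, w: int) -> bool:
    """True when every read set must intersect every write set (strict quorums)."""
    return r + w > n


def replica_failures_tolerated(n: int, r: int, w: int) -> int:
    """Primary replicas that can be unreachable while reads and writes still succeed."""
    return n - max(r, w)


for n, r, w in [(3, 2, 2), (3, 1, 1), (3, 3, 3)]:
    print(n, r, w, read_overlaps_write(n, r, w), replica_failures_tolerated(n, r, w))
# 3 2 2 True 1
# 3 1 1 False 2
# 3 3 3 True 0
```

N=3, R=2, W=2 is the middle ground: reads and writes overlap, and one replica of each key can be down.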

Eventual Consistency and How Riak deals with Node Failures

So how is eventual consistency achieved in our 5-node cluster? When data is written with a write quorum of 2, it is still sent to all three replicas (the n value is 3), and the write succeeds once two of them acknowledge it, so it doesn’t matter if one of the primary nodes is down. When that node comes back, Read Repair kicks in when the data is next read and makes sure it becomes eventually consistent.
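Read Repair can be illustrated with a toy model. Riak actually tracks causality with vector clocks and can return siblings for concurrent writes; this sketch replaces all of that with a single integer version per replica, so it shows only the basic idea: on a read, the newest copy wins and stale replicas are overwritten. Node names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Replica:
    node: str
    version: int            # simplified stand-in for Riak's vector clocks
    value: Optional[str]    # None: this replica missed the write entirely


def read_repair(replicas: List[Replica]) -> Tuple[Optional[str], List[str]]:
    """Return the newest value and the nodes whose stale copies were repaired."""
    newest = max(replicas, key=lambda rep: rep.version)
    repaired = []
    for rep in replicas:
        if rep.version < newest.version:
            # Overwrite the stale copy with the newest one (the "repair").
            rep.version, rep.value = newest.version, newest.value
            repaired.append(rep.node)
    return newest.value, repaired


replicas = [Replica("riak1", 2, "new"), Replica("riak2", 2, "new"), Replica("riak3", 1, "old")]
print(read_repair(replicas))  # ('new', ['riak3'])
```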

Implementing Riak on AWS and Backing up Riak

With what we now know about Riak, the implementation on AWS EC2 should look like this:

Source: Running Riak On AWS - White Paper

Now to the backup part. The riak-admin backup command can take backups, but it has been deprecated, and Basho suggests taking backups at the file system level instead. Riak supports multiple storage backends. If you use LevelDB as your backend, you need to stop the node, take a file-system-level backup and then start the node again. If you use Bitcask on AWS EC2, it is easier: you don’t need to take the node offline, and a simple EBS snapshot will do.

You can either use tar, rsync or filesystem level snapshots. If you go with tar and/or rsync - below are the directories you should be backing up:

Bitcask data: ./data/bitcask

LevelDB data: ./data/leveldb

Ring data: ./data/riak/ring

Configuration: ./etc
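If you go the tar route, a small script can archive whichever of the directories listed above exist on a node. This is a minimal sketch: `riak_root` stands for wherever those relative paths live on your install (the layout varies by distro), the archive name is our own choice, and for the LevelDB backend the node should be stopped before the archive is taken.

```python
import tarfile
import time
from pathlib import Path

# Relative paths from the list above; their real location varies by install.
BACKUP_DIRS = ["data/bitcask", "data/leveldb", "data/riak/ring", "etc"]


def backup_riak(riak_root, dest_dir, stamp=None):
    """Write a gzipped tarball of whichever backup directories exist under riak_root.

    With the LevelDB backend, stop the node before calling this and start it
    again afterwards.
    """
    root = Path(riak_root)
    stamp = stamp or time.strftime("%Y%m%d-%H%M%S")
    archive = Path(dest_dir) / f"riak-backup-{stamp}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        for rel in BACKUP_DIRS:
            path = root / rel
            if path.exists():  # e.g. a Bitcask-only node has no data/leveldb
                tar.add(str(path), arcname=rel)
    return archive
```

Running it on every node from a scheduler such as cron, and copying the tarballs off the instance, gives per-node backups that line up with the restore steps further down.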

The paths of these folders vary from distro to distro; here is the complete list. So for our 5-node cluster running on AWS EC2 with the Bitcask backend, you can simply schedule periodic snapshot jobs on each node and you will be safe. A slight inconsistency in data across backups is acceptable thanks to Riak’s eventual consistency: when we restore from such a backup, Read Repair kicks in and makes the data eventually consistent.

Restoring From A Backup

Assume a node fails. Below are the steps for recovering it:

Install Riak on a new node.

Restore the failed node’s backup/snapshot onto it.

Start the node and verify that riak ping, riak-admin status, etc. work.

If you are restoring this node under a different name, first mark the original node as down (from a running cluster member): riak-admin down failednode

On the new node, join it to the cluster by naming any running member: riak-admin cluster join existingnode

Replace the original node with the new one: riak-admin cluster force-replace failednode newnode

Plan the changes: riak-admin cluster plan

Commit the changes: riak-admin cluster commit
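For runbooks, it can help to generate the exact command sequence for a given failure. This sketch only prints commands for an operator to run; it assumes the restored node has a different name from the failed one and that `riak-admin cluster join` is run on the new node with the name of an existing cluster member, as Riak’s documentation describes. The node names in the usage line are hypothetical.

```python
import shlex
from typing import List, Tuple


def recovery_plan(failed_node: str, new_node: str, existing_node: str) -> List[Tuple[str, List[str]]]:
    """Return (where_to_run, argv) pairs for replacing a failed node with a renamed, restored one."""
    return [
        (new_node, ["riak", "ping"]),              # sanity checks on the restored node
        (new_node, ["riak-admin", "status"]),
        (existing_node, ["riak-admin", "down", failed_node]),
        (new_node, ["riak-admin", "cluster", "join", existing_node]),
        (new_node, ["riak-admin", "cluster", "force-replace", failed_node, new_node]),
        (new_node, ["riak-admin", "cluster", "plan"]),    # review before committing
        (new_node, ["riak-admin", "cluster", "commit"]),
    ]


def print_plan(plan: List[Tuple[str, List[str]]]) -> None:
    """Print each command, prefixed with the node it should run on."""
    for host, argv in plan:
        print(f"[{host}] {shlex.join(argv)}")


print_plan(recovery_plan("riak@10.0.0.5", "riak@10.0.0.9", "riak@10.0.0.6"))
```

Keeping the plan as data rather than executing it directly leaves room to review the output of `riak-admin cluster plan` before the commit step.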

Hope this was helpful! Need guidance? Give us a shout here.

Stay tuned!

Author : Ranjeeth Kuppala (Database Architect at Minjar)


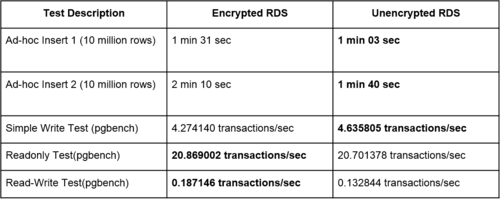

RDS Encryption and Benchmarking PostgreSQL

Amazon recently announced a new data-at-rest encryption option for PostgreSQL, MySQL and Oracle. The enterprise editions of Microsoft SQL Server and Oracle already had encryption in the form of AWS Managed Keys; this new feature extends support to PostgreSQL and MySQL.

Credit: neilyoungcv.com

We took a new encryption-enabled Postgres instance for a spin, and this is what we learned:

An existing unencrypted RDS instance cannot be converted to an encrypted one.

Restoring a backup/snapshot of an unencrypted RDS instance into an encrypted RDS instance is not supported.

Once you provision an encrypted RDS instance, there is no going back: you can't change it to unencrypted.

You cannot have unencrypted read replicas for an encrypted RDS instance, or vice versa.

Not all instance classes support encryption. Check the list of instance classes that support encryption here.

All of the above means that if you are trying to upgrade an existing RDS instance to enable encryption, it's not that straightforward. The only way left is to take a logical backup (export the data), spin up a new RDS instance with encryption enabled, and import the data from the logical backup.
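For PostgreSQL, the logical backup route can be sketched with the standard pg_dump and pg_restore tools. The Python below only builds the two command lines; host names, user and dump file name are hypothetical, and it assumes the encrypted target instance and an empty target database already exist. --no-owner is included as a common choice when the source's object owners don't exist on the target; drop it if your roles match.

```python
from typing import List, Tuple


def logical_migration_cmds(src_host: str, dst_host: str, user: str,
                           dbname: str, dump_file: str = "export.dump",
                           port: int = 5432) -> Tuple[List[str], List[str]]:
    """Build pg_dump (unencrypted source) and pg_restore (encrypted target) commands."""
    dump = ["pg_dump", "-h", src_host, "-p", str(port), "-U", user,
            "-Fc",              # custom format, readable by pg_restore
            "-f", dump_file, dbname]
    restore = ["pg_restore", "-h", dst_host, "-p", str(port), "-U", user,
               "--no-owner",    # skip restoring original object ownership
               "-d", dbname, dump_file]
    return dump, restore
```

Each list can be passed to subprocess.run(cmd, check=True), with the password supplied through the PGPASSWORD environment variable or a ~/.pgpass file.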

How about the performance?

Before recommending encryption to our customers, we wanted to test its effect on performance. We launched two PostgreSQL RDS instances in the Tokyo region, one encrypted and the other unencrypted, both of the same instance class, db.m3.medium.

We ran a simple insert test, inserting 10 million rows on both instances with the following queries:

-- Create the table

CREATE TABLE names (id INT, name VARCHAR(100));

-- Run the insert

INSERT INTO names (id, name) SELECT x.id, 'my name is #' || x.id FROM generate_series(1, 10000000) AS x(id);

The results were interesting. Encrypted RDS took 1 min 31 sec and unencrypted RDS took 1 min 03 sec.

Another insert test with a slightly different query:

CREATE TABLE t_random01 AS SELECT s, md5(random()::TEXT) FROM generate_series(1, 10000000) s;

Encrypted RDS took 2 min 10 sec and unencrypted took 1 min 40 sec.
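To put the two insert timings on a common scale, here is the relative slowdown of the encrypted instance. The durations are the ones reported above, converted to seconds (1 min 31 s = 91 s, 1 min 03 s = 63 s, 2 min 10 s = 130 s, 1 min 40 s = 100 s).

```python
def slowdown_pct(encrypted_s: float, unencrypted_s: float) -> float:
    """How much longer the encrypted run took, as a percentage of the unencrypted run."""
    return (encrypted_s - unencrypted_s) * 100 / unencrypted_s


runs = {
    "INSERT ... generate_series": (91, 63),
    "CREATE TABLE AS ... md5(random())": (130, 100),
}
for name, (enc, unenc) in runs.items():
    print(f"{name}: encrypted took {slowdown_pct(enc, unenc):.1f}% longer")
```

This prints 44.4% for the first test and 30.0% for the second, so for the reported runs the encrypted instance spent roughly 30 to 45 percent more time on bulk inserts.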

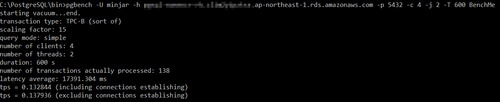

We ran the above tests multiple times with slightly different timings, but in each run the encrypted RDS took longer to insert than the unencrypted one. We then decided to run some read/write benchmarks using pgbench.

pgbench runs the same set of SQL commands repeatedly, in the same sequence, and reports the resulting transactions per second, so it tends to give more reliable results than one-off queries. Although pgbench supports a wide variety of tests, such as connection contention and prepared versus ad hoc queries, we limited our tests to read/write ones in this case.
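Since pgbench prints its result as tps summary lines at the end of a run, comparing the two instances is easier with a small parser. This Python sketch assumes the summary format pgbench prints, such as "tps = 85.296346 (excluding connections establishing)"; the wording inside the parentheses differs between PostgreSQL versions, so it keeps whatever label is there. The sample text below is illustrative, not our measured output.

```python
import re
from typing import Dict

# Matches summary lines such as "tps = 85.296346 (excluding connections establishing)".
TPS_LINE = re.compile(r"^tps = ([0-9]+(?:\.[0-9]+)?) \(([^)]*)\)", re.MULTILINE)


def parse_tps(pgbench_output: str) -> Dict[str, float]:
    """Map each tps label found in pgbench output to its value."""
    return {label: float(value) for value, label in TPS_LINE.findall(pgbench_output)}


sample = """\
scaling factor: 70
number of clients: 4
tps = 85.184871 (including connections establishing)
tps = 85.296346 (excluding connections establishing)
"""
print(parse_tps(sample))
```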

Initializing PGBench:

Assuming that you already have the pgbench binaries installed, cd to that directory and initialize a database on both instances:

pgbench -U minjar -h xxxxx-encrypted-xxx.clim2yipztss.ap-northeast-1.rds.amazonaws.com -p 5432 -i -s 70 BenchMe

You may have to create an empty database named BenchMe on your RDS instances first. The command above (-i initializes, -s 70 sets the scale factor) creates the pgbench_accounts, pgbench_branches, pgbench_history and pgbench_tellers tables and populates them with data.

Read Write Test:

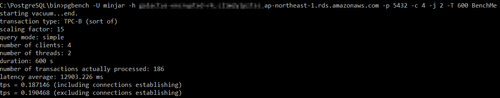

pgbench -U minjar -h xxxxxx-encrypted-xxxx.clim2yipztss.ap-northeast-1.rds.amazonaws.com -p 5432 -c 4 -j 2 -T 600 BenchMe

The command above runs pgbench's default read/write (TPC-B-like) workload on the BenchMe database for 600 seconds, using 4 clients (-c 4) and 2 worker threads (-j 2). Results below:

Unencrypted RDS

Encrypted RDS

The encrypted RDS processed 0.187146 transactions per second, slightly better than the unencrypted one (0.132844).

Read-Only Test:

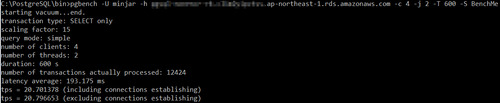

pgbench -U minjar -h xxxx-encrypted-xxxxx.clim2yipztss.ap-northeast-1.rds.amazonaws.com -c 4 -j 2 -T 600 -S BenchMe

In the command above, the -S switch makes the workload read-only (select-only), and -T 600 sets the time frame to 600 seconds. Results below:

Unencrypted RDS

encrypted RDS

In this test too, the encrypted RDS wins, with a higher number of transactions per second than the unencrypted RDS. We think Amazon may provision these encrypted instances on a better disk subsystem, giving them a considerable gain in read performance. That would also explain why the read/write test above went in favor of the encrypted RDS.

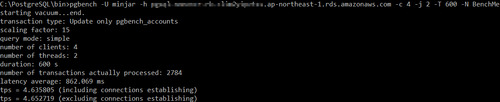

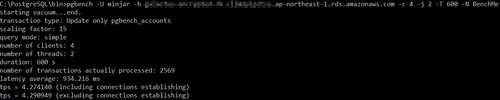

Simple Write Test:

pgbench -U minjar -h xxxxx-nonencr-xxxx.clim2yipztss.ap-northeast-1.rds.amazonaws.com -c 4 -j 2 -T 600 -N BenchMe

unencrypted RDS

encrypted RDS

The unencrypted RDS wins the simple write test with 4.635805 transactions per second. This is in line with our initial findings from running ad hoc inserts.
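The three runs above differ only in one switch, so the commands for both instances can be generated from one template. This is a minimal Python sketch: the host names are hypothetical placeholders, and the defaults mirror the parameters used above (-c 4 -j 2 -T 600 against BenchMe as user minjar). -S selects pgbench's built-in select-only script, and -N the simple-update script, which skips the updates to pgbench_tellers and pgbench_branches.

```python
import shlex
from typing import List

# Extra switches per test; no switch means pgbench's default TPC-B-like read/write script.
MODE_FLAGS = {
    "read-write": [],
    "read-only": ["-S"],      # select-only
    "simple-write": ["-N"],   # skip pgbench_tellers / pgbench_branches updates
}


def pgbench_cmd(host: str, mode: str, user: str = "minjar", db: str = "BenchMe",
                port: int = 5432, clients: int = 4, threads: int = 2,
                seconds: int = 600) -> List[str]:
    """Build a pgbench command line for one of the three test modes."""
    return ["pgbench", "-U", user, "-h", host, "-p", str(port),
            "-c", str(clients), "-j", str(threads), "-T", str(seconds),
            *MODE_FLAGS[mode], db]


hosts = ["encrypted-host.example", "unencrypted-host.example"]  # hypothetical
for mode in MODE_FLAGS:
    for host in hosts:
        print(shlex.join(pgbench_cmd(host, mode)))
```

To actually run them, each list can be passed to subprocess.run(cmd, capture_output=True, text=True, check=True) with PGPASSWORD set in the environment.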

Summary of results:

So the final verdict: there is a slight performance hit on writes when you enable RDS encryption, but Amazon more than makes up for it with better overall performance. We suggest enabling RDS encryption; the slight write overhead is worth the security that encryption brings.

If you want to know more about RDS encryption or need guidance, give us a shout here and we’d love to help you!

Author : Ranjeeth Kuppala (Database Architect at Minjar)


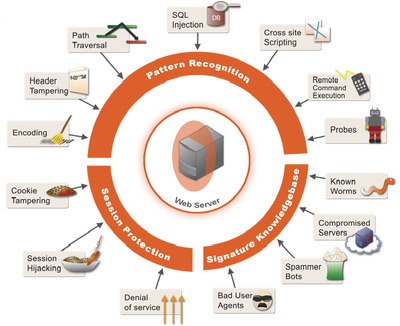

Web Application Firewall (WAF) Solutions for Applications in AWS Cloud

Following up on our previous post about Vulnerability Assessment and Penetration Testing Solutions (VAPT) for AWS Cloud, let's discuss Web Application Firewall (WAF) solutions in this post.

Today, web applications are the primary target for attackers looking to exploit security loopholes and compromise business data. The OWASP Top 10 list is a good place to learn about common web attacks and application compromise patterns.

Image Source

Just as network and server firewalls provide better protection at their layers, we recommend deploying Web Application Firewall (WAF) solutions to protect applications from common web attacks. We believe a WAF is one of the most critical components of complete application security.

This post sums up various options, including traditional enterprise vendors and SaaS providers with cloud-based solutions. These solutions are PCI-DSS compliant, and the Minjar team has used all of them. Here are our recommendations:

Incapsula - This is a cloud-based WAF-as-a-Service offering from Imperva. We have used and deployed this solution for a variety of applications. Pricing is per domain and starts at $49 per month for WAF protection. It includes protection against known web attacks and bots, and blacklisting of IPs based on usage behaviour. It's very easy to get started, and unless you have data privacy or compliance concerns, you can deploy this solution. Custom WAF rules are supported in the enterprise plan.

Reblaze - This Israeli company offers both SaaS and hosted WAF solutions for protecting web applications from known attack patterns, including bot and malicious traffic protection. This is a good solution if you want to deploy a WAF in your AWS Cloud. They also provide a great UI and reporting features. We use Reblaze for some of our applications.

Indusface WAF - Like Reblaze, they offer both SaaS and hosted WAF for protecting applications on AWS. Interestingly, their enterprise plans include managed WAF security and custom security rules with tuning. They also offer a web application VAPT service for detecting vulnerabilities.

Enterprise Vendors (F5 and NetScaler) - Traditional vendors like Big-IP F5 and Citrix NetScaler also offer WAF for protecting applications. Both solutions can be hosted in AWS Cloud and are available from AWS Marketplace.

All these solutions provide reports and insights on traffic patterns, identified attacks, blocked sources and so on. Most of them can run in passive mode, which only detects and alerts on attacks, or in active mode, which blocks them.

We strongly recommend that businesses protect their web workloads in AWS Cloud using one of these WAF solutions. If you are looking for guidance or need help, please reach out to us.
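The difference between passive and active mode comes down to what happens after a rule matches. The toy Python sketch below illustrates that idea; the two regular-expression signatures are hypothetical examples, far simpler than the rule sets these vendors ship, and are not any product's actual rules.

```python
import re
from enum import Enum
from typing import List, Tuple


class Mode(Enum):
    PASSIVE = "passive"  # detect and alert only
    ACTIVE = "active"    # block matching requests


# Hypothetical toy signatures for illustration only.
SIGNATURES = {
    "sql_injection": re.compile(r"(?i)\bunion\b.+\bselect\b"),
    "xss": re.compile(r"(?i)<script\b"),
}


def inspect_request(query: str, mode: Mode) -> Tuple[str, List[str]]:
    """Return the action taken ("allow", "alert" or "block") and the matched rules."""
    hits = [name for name, pattern in SIGNATURES.items() if pattern.search(query)]
    if not hits:
        return "allow", hits
    return ("block" if mode is Mode.ACTIVE else "alert"), hits


for mode in Mode:
    print(mode.value, inspect_request("id=1 UNION SELECT password FROM users", mode))
```

Running a new rule set in passive mode first is a way to see what it would block before it affects real users.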


Top 10 announcements of AWS in 2014

2014 was a busy and challenging year for AWS Cloud, which faced tough competition from other leading players such as Microsoft Azure and Google Compute Engine, both of which aggressively played catch-up with price reductions and new features. However, Amazon remained the innovation leader in the cloud market, rolling out new features at a faster pace than its competitors.

As analyst Carl Brooks put it: “There has been a concerted effort from Amazon to become more enterprise-friendly. They still remain the gold standard of the cloud and they have at least a year’s lead over the competition in innovation.”

By the end of the re:Invent 2014 conference, AWS had added over 454 significant features and services. AWS was also the first to announce one million active users on its cloud platform. Its customers range from startups to large enterprises and academic institutions, and include over 900 government agencies, such as the navy and intelligence security agencies.

Here is a quick recap of the top 10 announcements of Amazon Web Services in 2014.

Early in the year, AWS announced the general availability of Amazon WorkSpaces, a fully managed desktop computing service in the cloud. It lets users easily provision cloud-based desktops to access the documents, applications and resources they need from the device of their choice, including laptops, iPads, Kindle Fire, or Android tablets. Users can also integrate Amazon WorkSpaces securely with their corporate Active Directory to seamlessly access company resources.

Amazon Mobile Analytics, a service that lets a user easily collect, visualize, and understand app usage data at scale. Amazon Mobile Analytics is designed to deliver usage reports within 60 minutes of receiving data from an app so that users can act on the data more quickly.

Amazon Cognito, a simple user identity and data synchronization service that helps customers securely manage and synchronize app data for their users across their mobile devices. Users can save app data locally on their devices, allowing applications to work even when the devices are offline.

Amazon Zocalo, a fully managed, secure enterprise storage and sharing service with strong administrative controls and feedback capabilities that improve user productivity. Users can comment on files, send them to others for feedback, and upload new versions without having to resort to emailing multiple versions of their files as attachments.

AWS Key Management Service (KMS), a managed service that makes it easy for users to create and control the encryption keys used to encrypt data, and uses Hardware Security Modules (HSMs) to protect the security of keys. AWS Key Management Service is integrated with other AWS services including Amazon EBS, Amazon S3, and Amazon Redshift.

AWS Config, a fully managed service that provides users with an AWS resource inventory, configuration history, and configuration change notifications to enable security and governance. With AWS Config, users can discover existing AWS resources, export a complete inventory of AWS resources with all configuration details, and determine how a resource was configured at any point in time.

AWS CodeDeploy, a service that automates code deployments to Amazon EC2 instances. AWS CodeDeploy makes it easier for users to rapidly release new features, helps avoid downtime during deployment, and handles the complexity of updating applications. AWS CodeDeploy can be used to automate deployments, eliminating the need for error-prone manual operations, and the service scales with infrastructure so users can easily deploy to one EC2 instance or thousands.

Amazon Aurora, a MySQL-compatible, relational database engine that combines the speed and availability of high-end commercial databases with the simplicity and cost-effectiveness of open source databases. Amazon Aurora provides up to five times better performance than MySQL at a price point one tenth that of a commercial database while delivering similar performance and availability.

AWS Lambda, a compute service that runs users’ code in response to events and automatically manages the compute resources, making it easy to build applications that respond quickly to new information. AWS Lambda can also be used to create new back-end services where compute resources are automatically triggered based on custom requests. With AWS Lambda, users pay only for the requests served and the compute time required to run the code.

Amazon EC2 Container Service, a highly scalable, high-performance container management service that supports Docker containers and allows users to easily run distributed applications on a managed cluster of Amazon EC2 instances. Amazon EC2 Container Service lets users launch and stop container-enabled applications with simple API calls, query the state of the cluster from a centralized service, and access many familiar Amazon EC2 features like security groups, EBS volumes and IAM roles.

With these incredible services, AWS has maintained its innovation leadership in the Cloud space. Let’s see how 2015’s cloud market pans out.

Would you like to explore these services further? Give us a shout here, we would love to help you get the best out of AWS cloud.

Stay tuned!


DDoS Prevention and Security for Web Application Workloads on AWS Cloud

One of the most sophisticated and well-known web attacks is Distributed Denial of Service (DDoS), which aims to bring down web applications and deny service to genuine users by flooding them with large numbers of bot-generated requests. Typical DDoS attacks can be launched from anything between a handful of machines and a large network of bots spread across the world.

Image Source

In recent times, many popular web applications and enterprise businesses have been victims of DDoS attacks. Common DDoS attacks include SYN floods, SSL floods, slow upload connections, large numbers of file downloads, and requests crafted to generate large numbers of HTTP 500 errors.

DDoS attacks can be launched at different layers of the OSI stack, from the network and session layers up to the application layer, with attack throughputs ranging from tens of Mbps at the application level to tens of Gbps at the network level. Because attacks behave so differently at each layer, developing and deploying a good defense against them demands a scalable and cost-effective approach.

Minjar has implemented a variety of industry-leading DDoS solutions to protect our customers' web applications in AWS Cloud.

This blog post sums up various options and a cost-effective strategy for DDoS protection and prevention. You can find our recommendations below:

Big-IP F5 AFM - F5 Advanced Firewall Manager (AFM) provides DDoS protection from the network layer to the application layer. You can install an F5 virtual appliance with AFM in AWS EC2 and create a cluster of them with DNS-level load balancing to protect your web applications and services. F5 is a popular traditional vendor deployed in many enterprises. If you have an existing license and are migrating workloads to AWS Cloud, then F5 is a good option. However, managing and scaling F5 AFM nodes to protect against large DDoS attacks can be cost-prohibitive due to licensing and operational burden. You can start using F5 from AWS Marketplace, and it's a good solution for customer-hosted DDoS protection.

aiScaler aiProtect - You can use the aiProtect appliance to protect your web applications and infrastructure against DDoS attacks. It supports everything from protection against standard DDoS attacks to rate limiting the number of requests from a particular IP address. This appliance is available from AWS Marketplace with hourly or annual billing. If you are looking for a cheaper alternative to F5, then aiProtect is a good solution for customer-hosted DDoS protection.

Incapsula by Imperva - This is one of the popular SaaS solutions for DDoS protection, combining a standard web application firewall with CDN capabilities. Imperva is an established player among traditional enterprises, and Incapsula is a cost-effective solution for startup and SME customers to protect their workloads in the cloud. Incapsula also offers a global CDN to offload the caching needs of many web applications, and it manages DNS for applications to protect against attacks. Incapsula has a large DDoS protection network spread across different geographies, with attack protection ranging from 10 Gbps up to hundreds of Gbps. The DDoS protection package starts from $249 per month and it's very easy to get started.

Reblaze - This one is from an up-and-coming vendor with great DDoS support for AWS Cloud and technical teams based in Israel. Their service is available as SaaS for small companies and can be deployed as a hosted solution in the customer's VPC to protect a variety of applications. Pricing for dedicated DDoS protection and security in AWS starts at a few thousand dollars.

For a cost-effective solution, we recommend evaluating Incapsula or Reblaze; if you have an existing F5 license, then F5 is a good option for DDoS protection of your AWS Cloud applications. Also, aiProtect offers better value than F5 if you are deploying DDoS protection on multiple nodes in AWS.
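Per-IP rate limiting, as mentioned for aiProtect, is commonly built on a token bucket: each client IP gets a bucket that refills at a fixed rate, and each request spends one token. The Python below is a generic sketch of that technique, not aiProtect's implementation; the rate and burst values are hypothetical, and the injectable clock exists only to make the behaviour easy to demonstrate.

```python
import time
from typing import Callable, Dict


class PerIPRateLimiter:
    """Token bucket per client IP: refills `rate` tokens/second, holds up to `burst`."""

    def __init__(self, rate: float, burst: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self.tokens: Dict[str, float] = {}
        self.last_seen: Dict[str, float] = {}

    def allow(self, ip: str) -> bool:
        """Return True if a request from `ip` may pass, False if it should be dropped."""
        now = self.clock()
        if ip not in self.tokens:
            self.tokens[ip] = float(self.burst)  # new clients start with a full bucket
            self.last_seen[ip] = now
        elapsed = now - self.last_seen[ip]
        self.tokens[ip] = min(float(self.burst), self.tokens[ip] + elapsed * self.rate)
        self.last_seen[ip] = now
        if self.tokens[ip] >= 1.0:
            self.tokens[ip] -= 1.0
            return True
        return False


now = [0.0]  # fake clock for the demo
limiter = PerIPRateLimiter(rate=1.0, burst=3, clock=lambda: now[0])
print([limiter.allow("203.0.113.7") for _ in range(4)])  # [True, True, True, False]
```

The burst lets legitimate clients load a page with several requests at once, while the refill rate caps sustained traffic. A production version would also evict idle IPs so the dictionaries don't grow without bound during an attack.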

So, how secure are your web applications? If you want to know more about DDoS protection, give us a shout here and we'd love to help you!


Vulnerability Assessment and Penetration Testing Solutions (VAPT) for AWS Cloud

Software security is a very challenging field. Implementing a good security solution requires smart tools that can analyse everything from infrastructure to applications, so that we can apply best practices to prevent known attack patterns at the application, middleware and server levels.

( Image Credit )

The first part of implementing a good security solution is understanding the vulnerabilities through assessment and penetration testing, known as VAPT, so that we can work on improving overall security. Generally, application security testing involves both static and dynamic analysis to uncover various security issues.

This blog post summarises the options available for application and server level VAPT that are well suited to AWS Cloud workloads. Here is a quick overview along with our recommendations:

Nessus - One of the most popular vulnerability scanners, with enterprise plans and support for the scanner tool. You can use Nessus for server level VAPT, and it generates great reports with insights. This is our default choice for server level vulnerability assessment. You can install the tool on an EC2 instance and use it to scan all your servers.

Veracode - A leading vendor in the Gartner Magic Quadrant, offering a good solution for application level VAPT. You can use their solutions for everything from pre-production application testing to continuous assessment of both web and mobile applications. Application VAPT is a very important aspect, as most attacks target this layer.

WhiteHat Security - They are known as thought leaders in the application security space and offer a SaaS platform for application vulnerability management. Their Sentinel offering is a leading solution with actionable insights for identified vulnerabilities. Their source code analysis toolkit is good for pre-production security assessment of application code.

IndusGuard Web Scanner - One of the emerging web security solution vendors from India, with great local support. They provide application level VAPT with business logic testing and also support mobile applications. They offer both on-demand and continuous scanning services for web applications. They are our web security solutions partner.

Open Source Solutions - There are multiple open source options for application and server level VAPT testing. Our favourites include W3AF, Grabber, OpenVAS and Vega. You can keep track of this list at http://sectools.org/tag/vuln-scanners/

Hope you enjoyed reading this blog post; let us know if you need any help. We would also love to hear about your experience working with different tools.


Endpoint Server Security Solutions for AWS Cloud

One of the important aspects of security is protecting servers with an endpoint solution, a common approach in traditional enterprise deployments.

With endpoint server security, we can protect cloud servers against common malware attacks and intrusions, and perform integrity monitoring, application scanning and more.

(Image credit - Golime)

We have worked with some of the solutions shared in this post. The section below gives an overview of different solutions for virtual server (EC2 instance) security in AWS Cloud, along with our perspective:

TrendMicro DeepSecurity - TrendMicro is one of the early traditional security vendors to support virtual server security in AWS Cloud. They offer an agent-based solution to protect servers with anti-malware, host-based intrusion detection and prevention, file integrity monitoring and log monitoring. You can control and manage all agents from a single dashboard. Pricing is flexible for the cloud: they charge 20% of your EC2 server fees as a monthly charge for endpoint server security.

Sophos Endpoint Antivirus - You can use the Sophos Cloud solution to protect Windows infrastructure in the cloud. They also offer Sophos SecureOS, a security-hardened flavour of CentOS. Sophos Cloud doesn't support Linux yet.

McAfee - They provide the ePO product (management console) along with anti-virus and anti-malware solutions. It works on Windows and supports most Linux environments. Their anti-virus product is called VirusScan Enterprise. They are one of the popular traditional endpoint security vendors, and they have a free secured Amazon Linux AMI in AWS Marketplace.

Bitdefender SaaS Security - They offer a good anti-malware solution for protecting both Windows and Linux workloads in AWS Cloud. It supports pay-as-you-go billing like TrendMicro DeepSecurity. This solution is offered via AWS marketplace.

CloudPassage Halo - They offer software-defined security for Cloud infrastructure with workload firewall management, file integrity monitoring, software vulnerability assessment and event logging/alerting. Their agents are certified for both Windows and Linux environments with broad support for infrastructure in Cloud and On-premise.

OSSEC - This is one of the popular open source options. It doesn't provide a full anti-virus solution; however, it includes Host-based Intrusion Detection (HIDS), File Integrity Monitoring, Rootkit Detection and the ability to block certain attack patterns at the server level. It supports both Windows and Linux environments.

Some customers also look at next-generation firewall solutions that combine IPS, IDS, anti-virus, anti-malware etc. into one appliance, protecting the virtual private cloud infrastructure in AWS with a network gateway approach. We will talk about this option in future blog posts.

If you cannot use any of the other solutions, we recommend deploying at least OSSEC on your servers for host-level protection.
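File integrity monitoring, one of the OSSEC features above, boils down to recording checksums of important files and later reporting what changed. This Python sketch shows that core idea with SHA-256 from the standard library; it is not how OSSEC itself is implemented or configured, and the monitored paths are simply whatever you pass in.

```python
import hashlib
from pathlib import Path
from typing import Dict, Iterable, List


def snapshot(paths: Iterable[str]) -> Dict[str, str]:
    """Record a SHA-256 digest for every path that currently exists as a file."""
    digests = {}
    for p in paths:
        path = Path(p)
        if path.is_file():
            digests[str(path)] = hashlib.sha256(path.read_bytes()).hexdigest()
    return digests


def compare_snapshots(baseline: Dict[str, str],
                      current: Dict[str, str]) -> Dict[str, List[str]]:
    """Report files added, removed or modified since the baseline was taken."""
    return {
        "added": sorted(current.keys() - baseline.keys()),
        "removed": sorted(baseline.keys() - current.keys()),
        "changed": sorted(k for k in baseline.keys() & current.keys()
                          if baseline[k] != current[k]),
    }
```

In practice you would take the baseline right after hardening a server (for example over /etc/passwd and /etc/ssh/sshd_config), keep it somewhere the server itself cannot modify, and compare on a schedule.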

Most of the traditional security vendor solutions should work on AWS Cloud and some of them are embracing the pay-as-you-go pricing model for their security solutions on Cloud.

WANT TO KNOW MORE ABOUT THE CLOUD SECURITY SOLUTIONS?

Give us a shout here or mail us at [email protected]. Stay tuned!
