#Cloud Amazon Aurora

Explore tagged Tumblr posts

Text

Amazon Relational Database Service (RDS) Explained for Cloud Developers

Full Video Link - https://youtube.com/shorts/zBv6Tcw6zrU Hi, a new #video #tutorial on #amazonrds #aws #rds #relationaldatabaseservice is published on #codeonedigest #youtube channel. @java @awscloud @AWSCloudIndia @YouTube #youtube @codeonedig

Amazon Relational Database Service (Amazon RDS) is a collection of managed services that makes it simple to set up, operate, and scale relational databases in the cloud. You can choose from seven popular engines: Amazon Aurora with MySQL compatibility, Amazon Aurora with PostgreSQL compatibility, MySQL, MariaDB, PostgreSQL, Oracle, and SQL Server. It provides cost-efficient, resizable capacity for an industry-standard…


#amazon rds access from outside#amazon rds aurora#amazon rds automated backup#amazon rds backup#amazon rds backup and restore#amazon rds guide#amazon rds snapshot export to s3#amazon rds vs aurora#amazon web services#aws#aws cloud#aws rds aurora tutorial#aws rds engine#aws rds explained#aws rds performance insights#aws rds tutorial#aws rds vs aurora#cloud computing#relational database#relational database management system#relational database service

1 note


Text

The Story (Re-Write)

Because so many people wanted to read the story, I had to explain a few times… It's gone. It's lost: I can't find it on LiveJournal or the Wayback Machine. I suspect it might have been on Google+…

Anyway, several people asked for it to be re-written. Between now and the past, a lot's happened, much of which makes for juicy plot points: And, so I'm serialising it… But only because if I tried to write it in one sitting, my brain would melt. So here's Chapter 1:

Trouble sleeping

Didn't Shakespeare write something about sleep? “Shut me in a nutshell, and I'll be hella good if I can get skibidi sleep?”

Something like that.

Larry was not getting sleep, or not enough. Every time he started to sink into the cloud of free association and relaxation, something skittered or whispered to him and jerked him back awake.

In an earlier time, he'd have blamed it on spirits or demons. More recently, maybe the effects of stress and mental health. His parents would have gone to therapy about it.

Larry, a modern person, brought up by Memers and soaked in tech his entire life, didn't have to guess.

It was the fucking wallpaper.

It'd sounded great when he put the first payment down for it. The installers had shown up, ground sixty years of Landlord Paint off the walls, leaving chunks of off-white Fordite all over the floor, and mounted the data-mesh.

Then they'd rolled the rubbery panels down over it, cutting them to fit, letting the test images calibrate and line up.

The first thing Larry put up was an immersive view of some idealised forest. Lush green ferns, a spritz of mist and shafts of smoky golden sun reaching through the trunks.

A week later, the first adverts popped up across the mars-scape he'd switched to. A can of SelZa sitting on a rock that developed a halo of text about how great drinking your pizza would be: Would you like to order now?

The Amazon link popped right up, and Larry had to look away before it took a casual look as an invitation to send him fizzy pizza water.

Soon the animated avatars of other brands were sauntering out from behind rocks to tempt him with Keto Water, Fréch Breeze (Guaranteed fresh air: No microplastics, with Extra Oxygen and Caffeine — Take a Breath of Fréche Air™), frozen pizza, and about a dozen competing Kombucha fermenters.

Larry thought he could live with it.

He was wrong.

Soon his soothing landscapes and themed views were plated in adverts, coruscating and blinking to get his attention.

Phantom people stared in at him, vamping with designer goods and clothes.

Ad gremlins scampered around, making him twitch as the motion caught the periphery of his vision.

And the WindoWall had audio: The big panels vibrating to act as speakers, and picking up video through camera arrays, listening via a hundred microphones that could pinpoint him within the room.

Just part of life, he told himself.

But then he went to bed.

The WindoWall darkened and spread an Aurora across one wall, a sprinkle of glittering stars. The audio changed to cancel traffic sounds — A soft chorus of frogs and pattering rain started to play — One wall developed a brazier of softly glowing coals…

Larry backflipped into relaxed unconsciousness hard.

… for ten minutes.

Larry woke, squinting at the sudden brightness:

An advert for SleepyTime Choc Hotlet tried to sing him a lullaby as a cartoon capybara with a fucking lamp demonstrated the sleep he could be getting if they hadn't woken him up to tell him how much better he'd be sleeping if he just bought some SleepyTime Choc Hotlet to drink!

The Capybara winked and turned off the light. Larry tried to get back to sleep. Fireflies danced gently around the room… and formed up to spell out the name of a mattress company.

Larry rolled over and closed his eyes.

An adorable gnome giggled at him and had a whispered, though perfectly audible conversation with a delightful bunny about how before bed she always used Freshens. Whatever they were.

Larry commando rolled out of bed, mashed his toe on something, screamed in pain, was offered three brands of slipper, four over-the-counter painkillers and seven offers to sue someone for personal injury.

All of which were ignored as Larry pulled up the WindoWall app and pushed the brightness and volume to minimum — The glassy matte of the wall panels becoming black in the room lit only by the tablet screen.

Then Larry took some Ibuprofen and went back to bed.

45 minutes later he twitched and woke up to find the room lit by wall panels of text and still images for sleep aids fading in and out, and a soft susurration - Quiet Ad Reads that Larry suspected were supposed to subconsciously bias him to buying... Sleep Underwear?

In short succession, Larry found out that he couldn't sleep with an eyemask because the WindoWall started cranking the audio up now he wasn't looking.

And that ear plugs improvised from rolled tissue got him a volume increase and some recommendations about the mildest softest tissues — And he could save 15% if he subscribed!

And that was bad enough: Larry called up the WindoWall Customer Support after a sleepless night.

Customer Support turned out to be an AI avatar who appeared on the wall, using the built-in camera array to track Larry.

“Where are all these adverts coming from?” He asked. Starting simple.

The Face of WindoWall did a polite look of curiosity then, in rich, friendly terms, said “'Adverts' is short for Advertisements: a Method of promoting products....”

Typical answerbot, grabbing a definition from Wiki — But not resolving the query. Larry immediately asked for a real human being.

The Face argued that the chatbot could help, or else it could open the Support Pages for Larry.

Larry insisted on a human and eventually hit on the correct keywords: “I want to talk to a human being - This is a complaint, please escalate.” And the Face went into an idle animation, repeating, “Our support staff are currently busy with other requests – Please wait. In the meantime you can use our AI responder or the support website” every minute or so.

Larry, familiar with the under-staffed human support departments, picked up a tablet and opened his current book-in-progress while he waited.

Thumbing past a motion graphic for the series of re-writes of Terry Pratchett by an AI to add 50% extra hilarity and extended scenes, he opened Virtual Investigations: A Max Ransom Adventure...

She walked into my office feed like bad news wrapped in a pretty bow. No way she was real: A dame like that doesn't walk into a virtual office like mine. The wings wouldn't fit through the door.

The angel looked at me with golden eyes and hair the colour of mocha - Like the mocha on my desk provided by CoffeeCourier™ – Roast and vacuum packed for freshness according to the quick personality quiz that matched me to the perfect coffee for my busy lifestyle. And you too can enjoy a cup of Mental-Fresh Mocha using code DETECTIVE10.

“What's an avatar like you doing in my office?” I asked.

In reality I was renting a VR space with a fold down bed and access to three busted washer-dryers in the basement. Online my virtual office was classy, just like you'd expect from a CubeSpace Virtual Site - CubeSpace use real scans to decorate your virtual space for the best in class work sites. Use DETECTIVE10 to get 10% off your own virtual office site for the lifetime of your subscription...

She looked at me a moment then said “As an LLM I don't have a philosophy of mind to answer with. I'm here because you have 4.6 stars on Amazon Business Listings as a Detective.”

I do. It'd be higher, but some people don't give you a good rating when you chase them for payment. Maybe I should stop showing up with a bill and a threat to post about their broke asses on the Socials.

“I will pay you for your services as a detective to locate my user.” She continued.

I looked at her with fresh eyes. So, not someone with an angel kink – An actual House Assistant looking for its user... the case just got interesting!

Larry was interrupted by the Face clearing its throat, now puppeted by a support worker. The Face's shirt turned green and developed a name badge saying “obj.user.name_1”

“Hello, my name is Shimonne, I'll be assisting you today. The uh notes say you want to know what adverts are...?” It said in the same voice – Corporate robbing its staff of even their own voice.

“Uh no,” said Larry, “I asked where all these adverts came from? Did the video stream get hacked?”

The Face paused and did a canned animation of looking at a tablet.

“Ah, as per the contract, WindoWall reserve the right to show adverts from...” a soft, tired sigh, “Select partners, to provide you with enhanced opportunities to discover carefully curated products” - Said in an equally tired monotone.

Somewhere, Larry assumed, Shimonne was getting dinged for non-compliant tone. But Larry appreciated the little bit of empathy.

A pop-up survey asking how the interaction was progressing slid up, invisible to the Face. Larry tapped a 5 for style and actual empathy. Fuck the Corporate Tone.

“Ok...” said Larry, “But that wasn't how it was sold to me.”

“I understand.” Shimonne said through the Face. “It is in the EULA.” They added, pronouncing it with a deliciously melodic ripple of vowels that even the Face's vocoder couldn't stamp into the carefully selected Midwest accent the marketing team for VirtuAgent had pushed.

So of course Larry asked, “How do I turn them off?”

And that's how Larry got upsold on the Premium Ad-Free tier.

Of course... He didn't read the EULA for that either.

------

OK now a bit about the story. It's very like a couple of Black Mirror episodes. Even some Fahrenheit 451, Idiocracy, Midnight Burger, Feed, a spritz of Snow Crash... a whole bunch of other stuff. Some of this I've read or watched, some I haven't. But I don't live in a void: Whatever's in here definitely 100% was inspired in whole or in part by other works, or even current events, shitposts on Social media, memes on Imgur, even things on Tumblr: @marlynnofmany writes fantastic stories and pops up some seriously interesting questions about what day to day life would be like in a world where you can have a starship full of non-human intelligences deliver your packages. So if you're thinking "This reminds me of..." the answer is probably "yeah that's right." :)

And now a snitch post for some people who wanted to be notified if the story ever showed up:

@ravencromwell @rocinantescoffeestop @vtothefun @call-me-b-please-and-thank-you @msimpossibility @museumofinefarts @faeriesaurus @starlo-official

32 notes


Text

The Art of Nature and Wildlife Photography: A Journey Through Time and Terrain


Nature and wildlife photography is more than a craft—it’s a profound dialogue between humans and the natural world. It demands technical mastery, artistic intuition, and endless patience, blending the science of light and composition with a deep respect for ecosystems. Whether capturing the fleeting grace of a hummingbird or the raw power of a storm, photographers become storytellers, revealing Earth’s hidden narratives and advocating for its preservation.

The essence of nature photography

Patience & Timing: Waiting for the "golden hour" or the perfect animal interaction.

Ethics: Prioritizing minimal disturbance—using long lenses, respecting habitats.

Storytelling: Showcasing behaviors (a lion’s hunt, a flower’s bloom) to evoke emotion and awareness.

Iconic Destinations for Nature Enthusiasts


Wildlife Spectacles

Serengeti National Park, Tanzania

Highlight: The Great Migration—1.5 million wildebeest and zebras traverse crocodile-infested rivers.

Tip: Visit July-October for river crossings; use a telephoto lens for drama.

Yellowstone, USA

Wildlife: Bison, wolves, and grizzlies against geysers and prismatic springs.

Photo Focus: Winter scenes with steam rising over wolves in snow.


Amazon Rainforest, South America

Biodiversity: Jaguars, macaws, and pink river dolphins.

Access: Ecuador’s Yasuní or Brazil’s Mamirauá lodges for guided canoe trips.

Birding Paradises

Bharatpur Bird Sanctuary, India

Star Species: Siberian cranes, kingfishers, and raptors in wetland mosaics.

Best Time: Winter (Nov-Feb) for migratory flocks.

Everglades, USA

Rarities: Roseate spoonbills and snail kites in sawgrass marshes.

Technique: Kayak silently for intimate shots of wading birds.

Costa Rica’s Cloud Forests

Gems: Resplendent quetzals and hummingbirds amid misty canopies.

Pro Tip: Monteverde’s hanging bridges for canopy-level perspectives.

Landscapes That Stir the Soul


Patagonia (Chile/Argentina)

Drama: Torres del Paine’s granite spires, glacial lakes, and guanacos.

Light: Capture sunrise at Laguna Capri for fiery peaks.

Lofoten Islands, Norway

Arctic Beauty: Midnight sun, Northern Lights, and fjord villages.

Unique Shot: Aurora reflections in fishing huts’ glass windows.

Zhangjiajie, China

Surrealism: Pillar-like karst formations shrouded in mist.

Inspiration: The real-world “Avatar” mountains; shoot at dawn for fog.

Underrated Gems

Galápagos, Ecuador: Swim with sea lions, photograph giant tortoises and blue-footed boobies.

Namib Desert, Namibia: Dead Vlei’s skeletal trees against orange dunes.

Kamchatka, Russia: Volcanic valleys teeming with brown bears and Steller’s sea eagles.

Photography as Conservation

Great images inspire action. Locations like Madagascar (lemurs) or Raja Ampat (coral reefs) remind us of fragility. Share work with conservation groups or use social media to spotlight endangered species.


Final Frame: The world’s wild places are both muse and mission. Through your lens, celebrate their beauty and amplify their silent cries for protection. Every click is a testament to Earth’s wonders—and a call to safeguard them. 🌿📷

#nature photography#wildlife photography#Conservation storytelling#zoos#animals#amazon rainforest#Serengeti Great Migration#Steller’s sea eagles#golden hour#animal behavior#Biodiversity hotspots#Patagonia’s Torres del Paine#Yellowstone bison herds#habitat preservation#Fragile ecosystems

1 note


Video

youtube

Amazon RDS Performance Insights | Monitor and Optimize Database Performance

Amazon RDS Performance Insights is an advanced monitoring tool that helps you analyze and optimize your database workload in Amazon RDS and Amazon Aurora. It provides real-time insights into database performance, making it easier to identify bottlenecks and improve efficiency without deep database expertise.

Key Features of Amazon RDS Performance Insights:

✅ Automated Performance Monitoring – Continuously collects and visualizes performance data to help you monitor database load.

✅ SQL Query Analysis – Identifies slow-running queries, so you can optimize them for better database efficiency.

✅ Database Load Metrics – Displays a simple Database Load (DB Load) graph, showing the active sessions consuming resources.

✅ Multi-Engine Support – Compatible with MySQL, PostgreSQL, SQL Server, MariaDB, Oracle, and Amazon Aurora.

✅ Retention & Historical Analysis – Stores performance data for up to two years, allowing trend analysis and long-term optimization.

✅ Integration with AWS Services – Works seamlessly with Amazon CloudWatch, AWS Lambda, and other AWS monitoring tools.

How Amazon RDS Performance Insights Helps You:

🔹 Troubleshoot Performance Issues – Quickly diagnose and fix slow queries, high CPU usage, or locked transactions.

🔹 Optimize Database Scaling – Understand workload trends to scale your database efficiently.

🔹 Enhance Application Performance – Ensure your applications run smoothly by reducing database slowdowns.

🔹 Improve Cost Efficiency – Optimize resource utilization to prevent over-provisioning and reduce costs.

How to Enable Amazon RDS Performance Insights:

1️⃣ Navigate to AWS Management Console.

2️⃣ Select Amazon RDS and choose your database instance.

3️⃣ Click on Modify, then enable Performance Insights under Monitoring.

4️⃣ Choose the retention period (default 7 days, up to 2 years with paid plans).

5️⃣ Save changes and start analyzing real-time database performance!
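The console steps above can also be scripted. Here is a minimal sketch, assuming the boto3 SDK and its `modify_db_instance` call. The helper names, the instance identifier `my-db-instance`, and the decision to apply the change immediately are illustrative assumptions, not something taken from the video. The retention check reflects my reading of the API reference (7 days, a multiple of 31 days up to 23 months, or 731 days), so verify it against the current documentation before relying on it.

```python
from typing import Any, Dict


def is_valid_retention(days: int) -> bool:
    """Assumed rule: 7 days, N*31 days for N in 1..23, or 731 days (two years)."""
    return days in (7, 731) or (days % 31 == 0 and 31 <= days <= 23 * 31)


def build_performance_insights_request(
    instance_id: str, retention_days: int = 7
) -> Dict[str, Any]:
    """Build the keyword arguments for boto3's rds.modify_db_instance."""
    if not is_valid_retention(retention_days):
        raise ValueError(f"unsupported retention period: {retention_days} days")
    return {
        "DBInstanceIdentifier": instance_id,
        "EnablePerformanceInsights": True,
        "PerformanceInsightsRetentionPeriod": retention_days,
        # Assumption: apply now instead of waiting for the maintenance window.
        "ApplyImmediately": True,
    }


def enable_performance_insights(
    instance_id: str, retention_days: int = 7
) -> Dict[str, Any]:
    """Send the request; needs boto3 installed and AWS credentials configured."""
    import boto3  # imported lazily so the builder above works without boto3

    rds = boto3.client("rds")
    return rds.modify_db_instance(
        **build_performance_insights_request(instance_id, retention_days)
    )
```

Calling `enable_performance_insights("my-db-instance")` would mirror steps 2 to 5 with the default 7-day retention. Keeping the request builder separate from the AWS call makes the parameters easy to review before anything is changed.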

Who Should Use Amazon RDS Performance Insights?

🔹 Database Administrators (DBAs) – To manage workload distribution and optimize database queries.

🔹 DevOps Engineers – To ensure smooth database operations for applications running on AWS.

🔹 Developers – To analyze slow queries and improve app performance.

🔹 Cloud Architects – To monitor resource utilization and plan database scaling effectively.

Amazon RDS Performance Insights simplifies database monitoring, making it easy to detect issues and optimize workloads for peak efficiency. Start leveraging it today to improve the performance and scalability of your AWS database infrastructure! 🚀


#youtube#AmazonRDS RDSPerformanceInsights DatabaseOptimization AWSDevOps ClouDolus CloudComputing PerformanceMonitoring SQLPerformance CloudDatabase#amazon rds database S3 aws devops amazonwebservices free awscourse awstutorial devops awstraining cloudolus naimhossenpro ssl storage cloudc

0 notes

Text

AWS RDS: Simplifying Database Management in the Cloud

Amazon Relational Database Service (Amazon RDS) is a fully managed service that makes setting up, operating, and scaling relational databases in the cloud simple and efficient.

AWS RDS supports popular database engines such as MySQL, PostgreSQL, Oracle, SQL Server, MariaDB, and Amazon Aurora, offering users flexibility in choosing the right database for their applications.

Key Features:

Automated Management: RDS handles routine database tasks like backups, patching, and scaling, reducing operational overhead.

High Availability: With Multi-AZ (Availability Zone) deployments, RDS ensures failover support and business continuity.

Scalability: It allows seamless scaling of database storage and compute resources to meet changing demands.

Security: Offers encryption at rest and in transit, along with integration with AWS IAM and VPC for access control.

Monitoring: Provides performance insights and integration with Amazon CloudWatch for tracking database health and metrics.
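Several of these features map directly to settings chosen when an instance is created. The sketch below is an illustration only, assuming the boto3 SDK and its `create_db_instance` call. The identifier, engine, instance class, storage size, and username are hypothetical placeholder values, and the helper names are my own.

```python
from typing import Any, Dict


def build_create_instance_request(instance_id: str) -> Dict[str, Any]:
    """Build keyword arguments for boto3's rds.create_db_instance.

    All concrete values below are placeholders chosen for illustration.
    """
    return {
        "DBInstanceIdentifier": instance_id,
        "Engine": "postgres",
        "DBInstanceClass": "db.t3.micro",
        "AllocatedStorage": 20,            # GiB
        "MasterUsername": "appadmin",
        "ManageMasterUserPassword": True,  # let RDS keep the password in Secrets Manager
        "MultiAZ": True,                   # High Availability: standby in another AZ
        "StorageEncrypted": True,          # Security: encryption at rest
        "BackupRetentionPeriod": 7,        # Automated Management: daily backups kept 7 days
    }


def create_instance(instance_id: str) -> Dict[str, Any]:
    """Send the request; needs boto3 installed and AWS credentials configured."""
    import boto3  # imported lazily so the builder works without boto3

    return boto3.client("rds").create_db_instance(
        **build_create_instance_request(instance_id)
    )
```

Each keyword in the builder corresponds to one of the features listed above, which makes it easy to see which capabilities are opt-in settings and which come with the service.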

Benefits:

Reduces the complexity of managing on-premises databases.

Saves time with automatic provisioning and maintenance.

Supports disaster recovery and high availability, crucial for modern applications.

Common Use Cases:

E-commerce platforms with MySQL or PostgreSQL databases.

Analytics applications using Amazon Aurora for faster query performance.

Enterprise applications relying on SQL Server or Oracle databases.

AWS RDS is an ideal solution for developers and businesses looking to focus on building applications rather than managing databases.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/

0 notes

Text

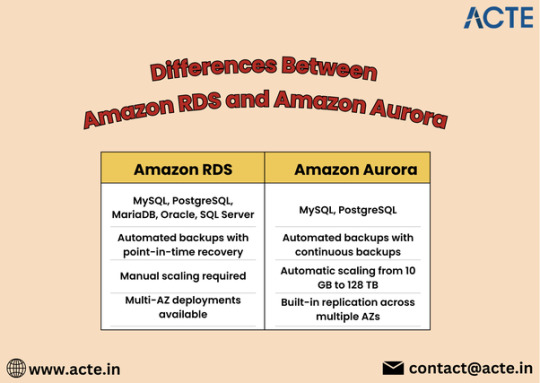

Comparing Amazon RDS and Aurora: Key Differences Explained

When it comes to choosing a database solution in the cloud, Amazon Web Services (AWS) offers a range of powerful options, with Amazon Relational Database Service (RDS) and Amazon Aurora being two of the most popular. Both services are designed to simplify database management, but they cater to different needs and use cases. In this blog, we’ll delve into the key differences between Amazon RDS and Aurora to help you make an informed decision for your applications.

If you want to advance your career, take a systematic approach: an AWS Course in Pune that suits your interests can greatly expand your learning path.

What is Amazon RDS?

Amazon RDS is a fully managed relational database service that supports multiple database engines, including MySQL, PostgreSQL, MariaDB, Oracle, and Microsoft SQL Server. It automates routine database tasks such as backups, patching, and scaling, allowing developers to focus more on application development rather than database administration.

Key Features of RDS

Multi-Engine Support: Choose from various database engines to suit your specific application needs.

Automated Backups: RDS automatically backs up your data and provides point-in-time recovery.

Read Replicas: Scale read operations by creating read replicas to offload traffic from the primary instance.

Security: RDS offers encryption at rest and in transit, along with integration with AWS Identity and Access Management (IAM).

What is Amazon Aurora?

Amazon Aurora is a cloud-native relational database designed for high performance and availability. It is compatible with MySQL and PostgreSQL, offering enhanced features that improve speed and reliability. Aurora is built to handle demanding workloads, making it an excellent choice for large-scale applications.

Key Features of Aurora

High Performance: According to AWS, Aurora can deliver up to five times the throughput of standard MySQL databases, thanks to its purpose-built storage architecture.

Auto-Scaling Storage: Storage grows automatically in 10 GB increments, up to 128 TiB, without any downtime, adapting to your needs seamlessly.

High Availability: Aurora keeps six copies of your data across three Availability Zones for robust fault tolerance and uptime.

Serverless Option: Aurora Serverless automatically adjusts capacity based on application demand, ideal for unpredictable workloads.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the AWS Online Training.

Key Differences Between Amazon RDS and Aurora

1. Performance and Scalability

One of the most significant differences lies in performance. Aurora is engineered for high throughput and low latency, making it a superior choice for applications that require fast data access. While RDS provides good performance, it may not match the efficiency of Aurora under heavy loads.

2. Cost Structure

Both services have different pricing models. RDS typically has a more straightforward pricing structure based on instance types and storage. Aurora, however, incurs costs based on the volume of data stored, I/O operations, and instance types. While Aurora may seem more expensive initially, its performance gains can result in cost savings for high-traffic applications.
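To make the structural difference concrete, here is a rough sketch of the arithmetic. Every rate in it is a hypothetical placeholder chosen for illustration, not an AWS price, and the function names are my own. It models only the components named above (instance hours, storage, and I/O requests) and ignores backups, data transfer, and other charges.

```python
HOURS_PER_MONTH = 730  # common billing approximation


def rds_style_monthly_cost(
    instance_hourly: float, storage_gb: float, storage_rate: float
) -> float:
    """Instance hours plus provisioned storage."""
    return instance_hourly * HOURS_PER_MONTH + storage_gb * storage_rate


def aurora_style_monthly_cost(
    instance_hourly: float,
    storage_gb: float,
    storage_rate: float,
    io_millions: float,
    io_rate_per_million: float,
) -> float:
    """Instance hours plus stored data plus a per-request I/O charge."""
    base = instance_hourly * HOURS_PER_MONTH + storage_gb * storage_rate
    return base + io_millions * io_rate_per_million


if __name__ == "__main__":
    # Hypothetical rates, for illustration only.
    print(rds_style_monthly_cost(0.10, 100, 0.10))
    print(aurora_style_monthly_cost(0.12, 100, 0.10, 50, 0.20))
```

The point of the sketch is that the I/O term scales with traffic, so two workloads of the same size can cost very different amounts. Plug in the current published rates for your Region before drawing conclusions.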

3. High Availability and Fault Tolerance

Aurora inherently offers better high availability due to its design, which replicates data across multiple Availability Zones. While RDS does offer Multi-AZ deployments for high availability, Aurora’s replication and failover mechanisms provide additional resilience.

4. Feature Set

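Aurora includes advanced features such as Aurora Global Database, which replicates data at the storage layer to secondary Regions. Standard RDS also supports cross-Region read replicas for several engines, but it has no direct equivalent of Global Database. These capabilities make Aurora an excellent option for global applications that require low-latency access across regions.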

5. Management and Maintenance

Both services are managed by AWS, but Aurora requires less manual intervention for scaling and maintenance due to its automated features. This can lead to reduced operational overhead for businesses relying on Aurora.

When to Choose RDS or Aurora

Choose Amazon RDS if you need a straightforward, managed relational database solution with support for multiple engines and moderate performance needs.

Opt for Amazon Aurora if your application demands high performance, scalability, and advanced features, particularly for large-scale or global applications.

Conclusion

Amazon RDS and Amazon Aurora both offer robust solutions for managing relational databases in the cloud, but they serve different purposes. Understanding the key differences can help you select the right service based on your specific requirements. Whether you go with the simplicity of RDS or the advanced capabilities of Aurora, AWS provides the tools necessary to support your database needs effectively.

0 notes

Text

AWS Certified Solutions Architect — Associate: A Gateway to Cloud Mastery

In the world of cloud computing, Amazon Web Services (AWS) has established itself as the leader, offering a vast array of cloud services that enable businesses to innovate and scale globally. With more companies moving their infrastructure to the cloud, there’s a growing demand for skilled professionals who can design and deploy scalable, secure, and cost-efficient systems using AWS. One of the best ways to demonstrate your expertise in this area is by obtaining the AWS Certified Solutions Architect — Associate certification.

This certification is ideal for IT professionals looking to build a solid foundation in designing cloud architectures and solutions using AWS services. In this blog, we’ll explore what the AWS Solutions Architect — Associate certification entails, why it’s valuable, what skills it validates, and how it can help propel your career in cloud computing.

What is the AWS Certified Solutions Architect — Associate Certification?

The AWS Certified Solutions Architect — Associate certification is a credential that validates your ability to design and implement distributed systems on AWS. It is designed for individuals who have experience in architecting and deploying applications in the AWS cloud and want to showcase their ability to create secure, high-performance, and cost-efficient cloud solutions.

This certification covers a wide range of AWS services and requires a thorough understanding of architectural best practices, making it one of the most sought-after certifications for cloud professionals. It is typically the first step for individuals aiming to achieve more advanced certifications, such as the AWS Certified Solutions Architect — Professional.

Why is AWS Solutions Architect — Associate Important?

1. High Demand for AWS Skills

As more businesses migrate to AWS, the demand for professionals with AWS expertise has skyrocketed. According to a 2022 report by Global Knowledge, AWS certifications rank among the highest-paying IT certifications globally. The Solutions Architect — Associate certification can help you stand out to potential employers by validating your skills in designing and implementing AWS cloud architectures.

2. Recognition and Credibility

Earning this certification demonstrates that you possess a deep understanding of how to design scalable, secure, and highly available systems on AWS. It is recognized globally by companies and hiring managers as a mark of cloud proficiency, enhancing your credibility and employability in cloud-focused roles such as cloud architect, solutions architect, or systems engineer.

3. Versatile Skill Set

The AWS Solutions Architect — Associate certification provides a broad foundation in AWS services, architecture patterns, and best practices. It covers everything from storage, databases, networking, and security to cost optimization and disaster recovery. These versatile skills are applicable across various industries, making you well-equipped to handle a wide range of cloud-related tasks.

What Skills Will You Learn?

The AWS Certified Solutions Architect — Associate exam is designed to assess your ability to design and deploy robust, scalable, and fault-tolerant systems in AWS. Here’s a breakdown of the key skills and knowledge areas that the certification covers:

1. AWS Core Services

The certification requires a solid understanding of AWS’s core services, including:

Compute: EC2 instances, Lambda (serverless computing), and Elastic Load Balancing (ELB).

Storage: S3 (Simple Storage Service), EBS (Elastic Block Store), and Glacier for backup and archival.

Databases: Relational Database Service (RDS), DynamoDB (NoSQL database), and Aurora.

Networking: Virtual Private Cloud (VPC), Route 53 (DNS), and CloudFront (CDN).

Being familiar with these services is essential for designing effective cloud architectures.

2. Architecting Secure and Resilient Systems

The Solutions Architect — Associate exam focuses heavily on security best practices and resilience. You’ll need to demonstrate how to:

Implement security measures using AWS Identity and Access Management (IAM).

Secure your data using encryption and backup strategies.

Design systems with high availability and disaster recovery by leveraging multi-region and multi-AZ (Availability Zone) setups.

3. Cost Management and Optimization

AWS offers flexible pricing models, and managing costs is a crucial aspect of cloud architecture. The certification tests your ability to:

Select the most cost-efficient compute, storage, and database services for specific workloads.

Implement scaling strategies using Auto Scaling to optimize performance and costs.

Use tools like AWS Cost Explorer and Trusted Advisor to monitor and reduce expenses.

4. Designing for Performance and Scalability

A key part of the certification is learning how to design systems that can scale to handle varying levels of traffic and workloads. You’ll gain skills in:

Using AWS Auto Scaling and Elastic Load Balancing to adjust capacity based on demand.

Designing decoupled architectures using services like Amazon SQS (Simple Queue Service) and SNS (Simple Notification Service).

Optimizing performance for both read- and write-heavy workloads using services like Amazon DynamoDB and RDS.
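The idea behind a decoupled architecture is that the producer and the consumer share only a queue, so either side can slow down, fail, or scale without the other knowing. The sketch below uses Python's standard `queue.Queue` and a worker thread as a local stand-in for Amazon SQS. It makes no AWS calls, and the function names and the upper-casing "work" are hypothetical.

```python
import queue
import threading
from typing import List

SENTINEL = None  # marks the end of the stream for the consumer


def producer(q: "queue.Queue", orders: List[str]) -> None:
    """Enqueue work without knowing who will process it."""
    for order in orders:
        q.put(order)
    q.put(SENTINEL)


def consumer(q: "queue.Queue", processed: List[str]) -> None:
    """Pull messages at its own pace until the sentinel arrives."""
    while True:
        item = q.get()
        if item is SENTINEL:
            break
        processed.append(item.upper())  # placeholder for real processing


def run_pipeline(orders: List[str]) -> List[str]:
    q: "queue.Queue" = queue.Queue()
    processed: List[str] = []
    worker = threading.Thread(target=consumer, args=(q, processed))
    worker.start()
    producer(q, orders)
    worker.join()
    return processed


if __name__ == "__main__":
    print(run_pipeline(["order-1", "order-2"]))
```

With a managed queue in place of `queue.Queue`, the same shape lets you add more consumers when the backlog grows, which is the scaling behavior this section of the exam is concerned with.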

5. Monitoring and Operational Excellence

Managing cloud environments effectively requires robust monitoring and automation. The exam covers topics such as:

Monitoring systems using CloudWatch and setting up alerts for proactive management.

Automating tasks like system updates, backups, and scaling using AWS tools such as CloudFormation and Elastic Beanstalk.
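As an illustration of the monitoring point, here is a minimal sketch of a CPU alarm definition, assuming the boto3 SDK and its CloudWatch `put_metric_alarm` call. The alarm name pattern, the 80% threshold, the two five-minute evaluation periods, and the SNS topic ARN passed in are all assumptions chosen for the example.

```python
from typing import Any, Dict


def build_cpu_alarm_request(
    instance_id: str, sns_topic_arn: str, threshold_percent: float = 80.0
) -> Dict[str, Any]:
    """Build keyword arguments for boto3's cloudwatch.put_metric_alarm."""
    return {
        "AlarmName": f"high-cpu-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,            # seconds per datapoint
        "EvaluationPeriods": 2,   # alarm after two consecutive breaches
        "Threshold": threshold_percent,
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],  # notify this SNS topic
    }


def create_cpu_alarm(instance_id: str, sns_topic_arn: str) -> None:
    """Send the request; needs boto3 installed and AWS credentials configured."""
    import boto3  # imported lazily so the builder works without boto3

    boto3.client("cloudwatch").put_metric_alarm(
        **build_cpu_alarm_request(instance_id, sns_topic_arn)
    )
```

Requiring two consecutive breaches is a common way to avoid alerting on short spikes. Tune the period and threshold to the workload you are monitoring.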

AWS Solutions Architect — Associate Exam Overview

To earn the AWS Certified Solutions Architect — Associate certification, you need to pass the SAA-C03 exam. Here’s an overview of the exam:

Exam Format: Multiple-choice and multiple-response questions.

Number of Questions: 65 questions.

Duration: 130 minutes (2 hours and 10 minutes).

Passing Score: 720 on a scaled score range of 100 to 1000.

Cost: $150 USD.

The exam focuses on four main domains:

Design Secure Architectures (30%)

Design Resilient Architectures (26%)

Design High-Performing Architectures (24%)

Design Cost-Optimized Architectures (20%)

These domains reflect the key competencies required to design and deploy systems in AWS effectively.
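One practical use of these weights is to split study time in the same proportions. The sketch below assumes nothing beyond the four percentages listed above; the `study_hours` helper and the 50-hour budget are illustrative choices, not an official recommendation.

```python
# Domain weights as listed for the SAA-C03 exam (percent of scored content).
DOMAIN_WEIGHTS = {
    "Design Secure Architectures": 30,
    "Design Resilient Architectures": 26,
    "Design High-Performing Architectures": 24,
    "Design Cost-Optimized Architectures": 20,
}

def study_hours(total_hours):
    """Split a study budget across domains in proportion to their weights."""
    return {
        domain: total_hours * weight / 100
        for domain, weight in DOMAIN_WEIGHTS.items()
    }

if __name__ == "__main__":
    # Hypothetical 50-hour study plan.
    for domain, hours in study_hours(50).items():
        print(f"{domain}: {hours:.1f} h")
```

You would normally adjust the split afterwards, giving extra time to whichever domain your practice exams show as weakest.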

How to Prepare for the AWS Solutions Architect — Associate Exam

Preparing for the AWS Solutions Architect — Associate exam requires a blend of theoretical knowledge and practical experience. Here are some steps to help you succeed:

AWS Training Courses: AWS offers several training courses, including the official “Architecting on AWS” course, which provides comprehensive coverage of exam topics.

Hands-On Experience: AWS’s free tier allows you to explore and experiment with key services like EC2, S3, and VPC. Building real-world projects will reinforce your understanding of cloud architecture.

Study Guides and Books: There are numerous books and online resources dedicated to preparing for the Solutions Architect exam. Popular books like “AWS Certified Solutions Architect Official Study Guide” provide detailed coverage of exam objectives.

Practice Exams: Taking practice tests can help familiarize you with the exam format and highlight areas that need more attention. AWS offers sample questions, and third-party platforms like Whizlabs and Udemy provide full-length practice exams.

Conclusion

Earning the AWS Certified Solutions Architect — Associate certification is a significant achievement that can open up new career opportunities in the fast-growing cloud computing field. With its focus on core AWS services, security best practices, cost optimization, and scalable architectures, this certification validates your ability to design and implement cloud solutions that meet modern business needs.

Whether you’re an IT professional looking to specialize in cloud computing or someone aiming to advance your career, the AWS Solutions Architect — Associate certification can provide the knowledge and credibility needed to succeed in today’s cloud-driven world.


What are the alternatives for SAP HANA?

SAP HANA is a leading in-memory database platform, but several alternatives cater to different organizational needs. Popular options include Oracle Database, known for its scalability and robust features; Microsoft SQL Server, offering integration with Microsoft tools; IBM Db2, recognized for AI-driven analytics; Amazon Aurora, a cloud-based solution with high availability; and Google BigQuery, designed for big data analytics.

If you're looking to upgrade your SAP skills, Anubhav Online Training is highly recommended.

Anubhav Oberoy, a globally renowned corporate trainer, offers comprehensive SAP courses suitable for both beginners and professionals. His sessions are tailored for practical, industry-relevant knowledge and are available online to accommodate global participants. Check out his upcoming batches at Anubhav Trainings. Whether you're pursuing a career in SAP or seeking to enhance your expertise, Anubhav's training programs provide exceptional guidance and real-world insights.

#free online sap training#sap online training#sap abap training#sap hana training#sap ui5 and fiori training#best corporate training#best sap corporate training#sap corporate training#online sap corporate training


Scale Your Databases with Seamless Migration to AWS Aurora

1. Introduction: Migrating on-premises databases to Amazon Web Services (AWS) Aurora can enhance performance, reliability, and scalability. AWS Aurora is a relational database built for the cloud, providing improved durability and fault tolerance compared to traditional on-premises databases. By following this tutorial, you’ll learn the necessary steps to migrate your on-premises…


AWS introduces Amazon Aurora DSQL, a new serverless, distributed SQL database that promises high availability, strong consistency, and PostgreSQL compatibility (Frederic Lardinois/TechCrunch)

Frederic Lardinois / TechCrunch: AWS introduces Amazon Aurora DSQL, a new serverless, distributed SQL database that promises high availability, strong consistency, and PostgreSQL compatibility — At its re:Invent conference, Amazon’s AWS cloud computing unit today announced Amazon Aurora DSQL, a new serverless … Continue reading AWS introduces Amazon Aurora DSQL, a new serverless, distributed…


"How Do AWS Solution Architects Design Serverless Applications Effectively?"

An AWS Solution Architect plays a pivotal role in helping organizations leverage cloud computing effectively. As businesses increasingly migrate to the cloud, this role has become essential for designing, deploying, and managing robust and scalable solutions. If you're considering a career as an AWS Solution Architect or working alongside one, understanding their core responsibilities is crucial. Let’s explore the key tasks that define this critical role.

1. Designing Scalable and Reliable Cloud Architectures

The primary responsibility of an AWS Solution Architect is to design cloud architectures that align with the organization's business goals. This involves:

Scalability: Ensuring the infrastructure can handle growth and increased demand.

Reliability: Implementing high-availability solutions to minimize downtime.

Flexibility: Designing systems that can adapt to changing business needs.

Architects achieve these goals by leveraging AWS services such as Auto Scaling, Elastic Load Balancing, and AWS Lambda.

2. Identifying the Right AWS Services

AWS offers a vast array of services, and selecting the right combination is critical for a successful cloud solution. AWS Solution Architects must:

Understand the unique requirements of the organization.

Choose services that maximize performance and cost-efficiency.

Evaluate options like Amazon RDS, Amazon S3, EC2, and DynamoDB for specific use cases.

For instance, they might recommend Amazon Aurora for database management or Amazon CloudFront for content delivery.

3. Implementing Security Best Practices

Cloud security is a top priority, and AWS Solution Architects ensure that the organization's systems are protected against potential threats. Their responsibilities include:

Configuring Identity and Access Management (IAM) roles to control user permissions.

Encrypting sensitive data using tools like AWS Key Management Service (KMS).

Designing secure network architectures with Virtual Private Cloud (VPC) and Security Groups.

By adhering to AWS’s Well-Architected Framework, architects ensure compliance with industry standards and best practices.

4. Cost Optimization

AWS provides various pricing models, and an AWS Solution Architect ensures that cloud solutions are cost-effective. This involves:

Analyzing workloads to optimize resource usage.

Using services like AWS Cost Explorer to monitor expenses.

Recommending cost-saving measures such as reserved instances, spot instances, and serverless architectures.

Their goal is to reduce unnecessary expenses without compromising performance or scalability.

5. Collaborating with Development and Operations Teams

AWS Solution Architects serve as a bridge between development and operations teams, ensuring seamless communication and collaboration. Key responsibilities include:

Assisting developers in deploying applications on the cloud.

Working with operations teams to monitor and manage infrastructure.

Aligning cloud strategies with organizational goals.

This collaboration often follows the DevOps approach, emphasizing continuous integration, delivery, and automation.

6. Conducting Performance Optimization

Once a cloud solution is implemented, architects continuously monitor and optimize performance. This includes:

Identifying bottlenecks in the infrastructure.

Implementing caching mechanisms using services like Amazon ElastiCache.

Scaling resources dynamically to maintain optimal performance during peak traffic.

Their proactive approach ensures a seamless user experience and maximizes system efficiency.
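The caching step mentioned above usually follows the cache-aside pattern: check the cache first, and only fall back to the slow data source on a miss. Here is a minimal sketch under stated assumptions: a plain dictionary stands in for a cache service such as ElastiCache, and the `CacheAside` class, its `loader` callback, and the 60-second default TTL are all hypothetical names and values chosen for illustration.

```python
import time

class CacheAside:
    """Cache-aside lookup with a time-to-live per entry."""

    def __init__(self, loader, ttl_seconds=60.0, clock=time.monotonic):
        self._loader = loader        # slow source of truth, e.g. a database query
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so expiry can be tested
        self._entries = {}           # key -> (value, expires_at)
        self.misses = 0

    def get(self, key):
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]          # cache hit: skip the slow source
        self.misses += 1             # miss or expired entry: reload and store
        value = self._loader(key)
        self._entries[key] = (value, now + self._ttl)
        return value

if __name__ == "__main__":
    cache = CacheAside(lambda key: key.upper())
    print(cache.get("sku-42"), cache.get("sku-42"), cache.misses)
```

The TTL is the main tuning knob: a longer value takes more load off the database but serves staler data.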

7. Ensuring Disaster Recovery and Backup Solutions

To safeguard data and maintain business continuity, AWS Solution Architects design robust disaster recovery plans. Their responsibilities include:

Implementing backup solutions using AWS Backup or Amazon S3 Glacier.

Configuring disaster recovery architectures such as multi-region deployments.

Ensuring minimal downtime and data loss in case of failures.

These strategies provide organizations with peace of mind and resilience against unexpected events.

8. Providing Technical Guidance

AWS Solution Architects act as subject matter experts, offering guidance to stakeholders on technical decisions. This involves:

Educating teams on AWS services and capabilities.

Presenting architectural designs to management and explaining their benefits.

Advising on cloud adoption strategies and migration plans.

Their insights help organizations make informed decisions and achieve their goals effectively.

9. Supporting Cloud Migration Efforts

For organizations transitioning to AWS, Solution Architects play a vital role in ensuring a smooth migration process. Responsibilities include:

Assessing existing systems to plan the migration strategy.

Choosing appropriate tools like AWS Migration Hub and AWS DataSync.

Minimizing downtime and ensuring data integrity during the migration.

They also address challenges such as compatibility issues and scalability requirements during the migration process.

10. Staying Updated on AWS Innovations

AWS frequently introduces new services and features. An AWS Solution Architect must stay informed about the latest updates to leverage cutting-edge technologies. This involves:

Attending AWS events and webinars.

Exploring new tools like Amazon SageMaker for machine learning or AWS Outposts for hybrid cloud environments.

Evaluating how emerging trends like serverless computing or edge computing can benefit their organization.

Continuous learning is a crucial aspect of the role, ensuring they remain ahead in the dynamic cloud landscape.

Conclusion

The role of an AWS Solution Architect is multifaceted, requiring a combination of technical expertise, strategic thinking, and effective communication. From designing scalable architectures to optimizing costs and ensuring security, their contributions are integral to the success of cloud-based solutions.

For organizations, having a skilled AWS Solution Architect can transform cloud strategies, streamline operations, and drive innovation. For professionals, this role offers a rewarding career path with endless opportunities for growth and impact. If you're considering a career in cloud computing, becoming an AWS Solution Architect is an excellent choice to make a meaningful difference in the tech world.

#awstraining#cloudservices#softwaredeveloper#training#iot#data#azurecloud#artificialintelligence#softwareengineer#cloudsecurity#cloudtechnology#business#jenkins#softwaretesting#onlinetraining#ansible#microsoftazure#digitaltransformation#ai#reactjs#awscertification#google#cloudstorage#git#devopstools#coder#innovation#cloudsolutions#informationtechnology#startup


How Amazon Web Services (AWS) Powers the Future of E-Commerce

The e-commerce industry is evolving at lightning speed, demanding innovative solutions to meet customer expectations, optimize operations, and stay competitive. For businesses seeking to achieve these goals, Amazon Web Services (AWS) provides a strong foundation. With its robust cloud infrastructure, cutting-edge tools, and global reach, AWS is transforming the e-commerce landscape.

This article delves into how AWS empowers e-commerce businesses, offering solutions that address their unique challenges and pave the way for growth and innovation.

Key Challenges in E-Commerce

E-commerce businesses face a dynamic environment filled with challenges, such as:

Managing fluctuating website traffic.

Ensuring fast and secure transactions.

Delivering personalized shopping experiences.

Optimizing operational costs while scaling globally.

AWS addresses these challenges with a suite of services designed to meet the diverse needs of e-commerce platforms.

AWS Solutions That Revolutionize E-Commerce

AWS provides a comprehensive toolkit that simplifies complex operations and enables businesses to focus on delivering value to their customers.

1. Unmatched Scalability

With AWS, e-commerce platforms can scale their resources up or down based on demand. During high-traffic events like Black Friday or Cyber Monday, AWS ensures that your website remains operational and responsive, providing a seamless shopping experience.
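To give a feel for how capacity can follow demand, here is a small sketch of a proportional scaling rule. It is an illustration only, not AWS's actual Auto Scaling algorithm: the `desired_capacity` function, the CPU-style metric, and the fleet limits are all assumptions made for the example.

```python
import math

def desired_capacity(current_instances, metric_value, target_value,
                     min_size=1, max_size=20):
    """Scale the fleet so the per-instance metric moves back toward the target.

    Example: 4 instances at 90% CPU with a 50% target need
    ceil(4 * 90 / 50) = 8 instances.
    """
    if current_instances < 1 or target_value <= 0:
        raise ValueError("need at least one instance and a positive target")
    raw = math.ceil(current_instances * metric_value / target_value)
    # Clamp to the configured fleet limits (hypothetical defaults).
    return max(min_size, min(max_size, raw))

if __name__ == "__main__":
    print(desired_capacity(4, 90.0, 50.0))   # traffic spike: scale out
    print(desired_capacity(4, 25.0, 50.0))   # quiet period: scale in
```

The upper limit matters as much as the formula: it caps spend if a traffic spike or a faulty metric pushes the computed size very high.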

2. Faster Website Performance

Amazon CloudFront, AWS’s Content Delivery Network (CDN), reduces latency by delivering content from servers closest to your customers. Faster loading times translate to higher customer satisfaction and improved conversion rates.

3. Robust Data Management

AWS offers powerful database solutions like Amazon Aurora and DynamoDB, enabling businesses to handle vast amounts of customer, inventory, and transaction data with ease and reliability.

4. Personalized Customer Journeys

AWS's machine learning services, including Amazon Personalize and Amazon Rekognition, help businesses create tailored shopping experiences. Personalized product recommendations based on browsing and purchase history lead to higher engagement and sales.

5. Security You Can Trust

AWS implements advanced security measures, including encryption, firewalls, and identity management, to protect customer data and maintain compliance with global standards like GDPR and PCI DSS.

Real-World Impact of AWS on E-Commerce

1. Supporting Business Growth

Startups and enterprises alike leverage AWS’s cost-effective and scalable infrastructure to grow without the need for significant upfront investment. By eliminating the need for physical servers, businesses save on operational costs while focusing on customer acquisition and retention.

2. Streamlining Backend Operations

AWS Lambda automates backend processes such as order management, payment processing, and inventory updates. This automation reduces manual errors and speeds up operations, enhancing the overall efficiency of the business.

3. Enhancing Customer Engagement

Using AWS analytics tools, businesses can track customer behavior in real-time and adapt their strategies accordingly. From identifying trends to optimizing marketing campaigns, AWS helps businesses stay ahead of the curve.

Benefits of AWS for E-Commerce Businesses

Here are some compelling reasons why AWS is the ideal cloud platform for e-commerce:

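Reliability: Many AWS services are backed by service level agreements with high uptime commitments (the exact figure varies by service and configuration), helping keep your website accessible.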

Cost Efficiency: With a pay-as-you-go pricing model, businesses only pay for the resources they use.

Flexibility: AWS supports multiple programming languages, frameworks, and operating systems, allowing seamless integration with existing technologies.

Innovation: AWS continuously evolves, offering new tools and services that enable businesses to innovate and scale efficiently.

Getting Started with AWS

Starting your AWS journey is straightforward:

Define Your Goals: Assess your e-commerce platform’s current challenges and future needs.

Select Services: Choose AWS solutions that align with your business requirements, such as hosting, storage, or AI tools.

Implement and Optimize: Collaborate with cloud experts to implement AWS and continually refine your strategy for better results.

Conclusion

Amazon Web Services (AWS) has become the gold standard for e-commerce businesses seeking to innovate, scale, and deliver exceptional customer experiences. Its comprehensive suite of tools and global presence make it the ultimate solution for businesses navigating the digital economy.

Feathersoft Info Solutions specializes in delivering AWS solutions tailored to the unique needs of e-commerce platforms, ensuring a smooth and successful transition to the cloud. Partner with them to unlock the full potential of AWS and take your business to the next level.

#AWS#AmazonWebServices#CloudComputing#EcommerceSolutions#CloudInfrastructure#ScalableBusiness#DigitalTransformation#EcommerceDevelopment#CloudTechnology#AWSSolutions#MachineLearning#DataAnalytics#EcommerceGrowth#CloudSecurity#TechInnovation#BusinessAutomation#AIinEcommerce#EcommerceTech#AWSCloud#CloudServices


Software Development Engineer, Aurora Storage

DESCRIPTION: AWS Utility Computing (UC) provides product innovations – from foundational services such as Amazon’s Simple Storage Service (S3) and Amazon Elastic Compute Cloud (EC2), to consistently released new product innovations that continue to set AWS’s services and features apart in the industry. As a member of the UC organization, you’ll support the development and management of Compute,…

0 notes

Text

AWS Aurora vs RDS: An In-Depth Comparison

AWS Aurora vs. RDS

Amazon Web Services (AWS) offers a range of database solutions, among which Amazon Aurora and Amazon Relational Database Service (RDS) are prominent choices for relational database management. While both services cater to similar needs, they have distinct features, performance characteristics, and use cases. This comparison will help you understand the differences and make an informed decision based on your specific requirements.

What is Amazon RDS?

Amazon RDS is a managed database service that supports several database engines, including MySQL, PostgreSQL, MariaDB, Oracle, and Microsoft SQL Server. RDS simplifies the process of setting up, operating, and scaling a relational database in the cloud by automating tasks such as hardware provisioning, database setup, patching, and backups.

What is Amazon Aurora?

Amazon Aurora is a MySQL and PostgreSQL-compatible relational database built for the cloud, combining the performance and availability of high-end commercial databases with the simplicity and cost-effectiveness of open-source databases. Aurora is designed to deliver high performance and reliability, with some advanced features that set it apart from standard RDS offerings.

Performance

Amazon RDS: Performance depends on the selected database engine and instance type. It provides good performance for typical workloads but may require manual tuning and optimization.

Amazon Aurora: Designed for high performance, Aurora can deliver up to five times the throughput of standard MySQL and up to three times the throughput of standard PostgreSQL databases. It achieves this through distributed, fault-tolerant, and self-healing storage that is decoupled from compute resources.

Scalability

Amazon RDS: Supports vertical scaling by upgrading the instance size and horizontal scaling through read replicas. However, the scaling process may involve downtime and requires careful planning.

Amazon Aurora: Offers seamless scalability with up to 15 low-latency read replicas, and it can automatically adjust the storage capacity without affecting database performance. Aurora’s architecture allows it to scale out and handle increased workloads more efficiently.

Availability and Durability

Amazon RDS: Provides high availability through Multi-AZ deployments, where a standby replica is maintained in a different Availability Zone. In case of a primary instance failure, RDS automatically performs a failover to the standby replica.

Amazon Aurora: Enhances availability with six-way replication across three Availability Zones and automated failover mechanisms. Aurora’s storage is designed to be self-healing, with continuous backups to Amazon S3 and automatic repair of corrupted data blocks.
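The six-way replication is easier to reason about with a little arithmetic. The sketch below assumes the quorum sizes AWS has described publicly for Aurora storage (writes need 4 of 6 copies, reads need 3 of 6) and two copies per Availability Zone; those numbers are not stated in this comparison, and the helper names are made up for illustration.

```python
COPIES_PER_AZ = 2     # assumption: six copies spread evenly over three AZs
AZ_COUNT = 3
WRITE_QUORUM = 4      # assumption: copies that must acknowledge a write
READ_QUORUM = 3       # assumption: copies needed to serve a consistent read

def surviving_copies(failed_azs=0, extra_failed_copies=0):
    """Copies still reachable after whole-AZ failures plus individual failures."""
    total = COPIES_PER_AZ * AZ_COUNT
    lost = failed_azs * COPIES_PER_AZ + extra_failed_copies
    return max(0, total - lost)

def can_write(failed_azs=0, extra_failed_copies=0):
    return surviving_copies(failed_azs, extra_failed_copies) >= WRITE_QUORUM

def can_read(failed_azs=0, extra_failed_copies=0):
    return surviving_copies(failed_azs, extra_failed_copies) >= READ_QUORUM

if __name__ == "__main__":
    print(can_write(failed_azs=1))                          # one AZ down
    print(can_write(failed_azs=1, extra_failed_copies=1))   # AZ plus one copy
    print(can_read(failed_azs=1, extra_failed_copies=1))
```

Under these assumptions, losing an entire Availability Zone still leaves enough copies to write, and losing one more copy still leaves enough to read, which is the behavior the six-way layout is meant to provide.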

Cost

Amazon RDS: Generally more cost-effective for smaller, less demanding workloads. Pricing depends on the chosen database engine, instance type, and storage requirements.

Amazon Aurora: Slightly more expensive than RDS due to its advanced features and higher performance capabilities. However, it can be more cost-efficient for large-scale, high-traffic applications due to its performance and scaling advantages.

Maintenance and Management

Amazon RDS: Offers automated backups, patching, and minor version upgrades. Users can manage various configuration settings and maintenance windows, but they must handle some aspects of database optimization.

Amazon Aurora: Simplifies maintenance with continuous backups, automated patching, and seamless version upgrades. Aurora also provides advanced monitoring and diagnostics through Amazon CloudWatch and Performance Insights.

Use Cases

Amazon RDS: Suitable for a wide range of applications, including small to medium-sized web applications, development and testing environments, and enterprise applications that do not require extreme performance or scalability.

Amazon Aurora: Ideal for mission-critical applications that demand high performance, scalability, and availability, such as e-commerce platforms, financial systems, and large-scale enterprise applications. Aurora is also a good choice for organizations looking to migrate from commercial databases to a more cost-effective cloud-native solution.

Conclusion

Amazon Aurora and Amazon RDS both offer robust, managed database solutions in the AWS ecosystem. RDS provides flexibility with multiple database engines and is well-suited for typical workloads and smaller applications. Aurora, on the other hand, excels in performance, scalability, and availability, making it the preferred choice for demanding and large-scale applications. Choosing between RDS and Aurora depends on your specific needs, performance requirements, and budget considerations.


Exploring Amazon Aurora: A Detailed Guide

Amazon Aurora is a powerful relational database service offered by Amazon Web Services (AWS), designed to deliver the performance and reliability of high-end commercial databases while remaining cost-effective and easy to use. In this guide, we will explore the key features, benefits, and use cases of Amazon Aurora, helping you understand why it’s a popular choice for modern applications.

If you want to advance your career, taking a structured program such as the AWS Course in Pune that matches your interests can greatly expand your learning path.

What is Amazon Aurora?

Announced in 2014 and generally available since 2015, Amazon Aurora is a cloud-native database that offers compatibility with both MySQL and PostgreSQL. This flexibility allows developers to use familiar tools and frameworks while taking advantage of Aurora’s advanced capabilities.

Key Features of Amazon Aurora

Exceptional Performance:

Amazon Aurora provides up to five times the performance of standard MySQL databases. Its innovative architecture optimizes data processing and enables faster query execution, making it suitable for high-traffic applications.

Automatic Scaling:

Aurora automatically scales storage from 10 GB to 128 TB based on your needs. This dynamic scaling ensures that your database can grow seamlessly without requiring downtime or manual intervention.

High Availability:

With a multi-AZ (Availability Zone) deployment, Aurora ensures that your database is always available. It replicates data across multiple locations, allowing for automatic failover in the event of an outage.

Robust Security:

Aurora offers several security features, including encryption at rest and in transit, as well as integration with AWS Identity and Access Management (IAM) for access control. This helps protect sensitive data from unauthorized access.

Fully Managed Service:

As a fully managed database service, Aurora handles routine tasks such as backups, patching, and monitoring. This allows developers to focus on application development rather than database administration.
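The automatic scaling feature described above can be pictured with a quick calculation. This sketch assumes storage grows in 10 GB steps up to the 128 TB ceiling; the step size and the `allocated_storage_gb` helper are assumptions for illustration, not a description of Aurora's internal allocator or its billing.

```python
import math

INCREMENT_GB = 10          # assumption: storage grows in 10 GB steps
MAX_GB = 128 * 1024        # 128 TB ceiling from the text, expressed in GB

def allocated_storage_gb(data_gb):
    """Round the data size up to the next step, within the 10 GB to 128 TB range."""
    if data_gb < 0:
        raise ValueError("data size cannot be negative")
    if data_gb > MAX_GB:
        raise ValueError("data size exceeds the maximum cluster volume")
    stepped = math.ceil(data_gb / INCREMENT_GB) * INCREMENT_GB
    return min(MAX_GB, max(INCREMENT_GB, stepped))

if __name__ == "__main__":
    for size in (3, 10.5, 995):
        print(size, "GB of data ->", allocated_storage_gb(size), "GB allocated")
```

The useful part for capacity planning is that no step requires resizing a volume by hand, which is what sets this apart from provisioning a fixed disk up front.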

To master the intricacies of AWS and unlock its full potential, consider enrolling in AWS Online Training.

Benefits of Using Amazon Aurora

Cost-Effective: Aurora operates on a pay-as-you-go pricing model, allowing businesses to pay only for what they use. This can result in significant savings compared to traditional database solutions.

Seamless Migration: The compatibility with MySQL and PostgreSQL makes it easier for businesses to migrate existing applications to Aurora without extensive modifications.

Enhanced Monitoring and Insights: Aurora provides advanced monitoring tools that help you track database performance and optimize resource usage effectively.

Use Cases for Amazon Aurora

Amazon Aurora is versatile and can be applied in various scenarios:

Web and Mobile Applications: The high performance and scalability cater to applications with fluctuating workloads, ensuring smooth user experiences.

E-Commerce Platforms: Aurora’s reliability makes it ideal for handling peak traffic during sales events without degrading performance.

SaaS Applications: Its multi-tenant architecture allows companies to serve multiple clients efficiently while maintaining performance.

Conclusion

Amazon Aurora stands out as a robust and flexible database solution for businesses of all sizes. Its combination of high performance, automatic scaling, and managed services makes it an attractive choice for developers and organizations looking to build modern applications.

Whether you’re managing a small web app or a large-scale enterprise solution, Aurora can provide the reliability and efficiency needed to succeed in today’s competitive landscape. By leveraging the power of Amazon Aurora, you can focus on innovation and growth while AWS handles the complexities of database management.


AWS Amplify Features For Building Scalable Full-Stack Apps

AWS Amplify features

Build

Summary

Create an app backend using Amplify Studio or Amplify CLI, then connect your app to your backend using Amplify libraries and UI elements.

Authentication

With a fully managed user directory and pre-built sign-up, sign-in, forgot-password, and multi-factor authentication workflows, you can create smooth onboarding flows. Amplify also offers fine-grained access control for web and mobile applications and supports sign-in with social providers such as Facebook, Google Sign-In, or Login with Amazon. Powered by Amazon Cognito.

DataStore

Use a multi-platform (iOS, Android, React Native, and Web) on-device persistent storage engine, driven by GraphQL, to automatically synchronize data between desktop, web, and mobile apps and the cloud. DataStore’s programming model leverages shared and distributed data without requiring extra code for offline and online scenarios, which makes working with distributed, cross-user data as simple as working with local-only data. Powered by AWS AppSync.

Analytics

Understand how your iOS, Android, or web users behave. Create custom user attributes and in-app metrics, or use auto tracking to monitor user sessions and web page metrics. Gain access to a real-time data stream, analyze it for customer insights, and build data-driven marketing strategies to increase customer adoption, engagement, and retention. Powered by Amazon Pinpoint and Amazon Kinesis.

API

Send secure HTTP requests to GraphQL and REST APIs to access, modify, and aggregate data from one or more data sources, including Amazon DynamoDB, Amazon Aurora Serverless, and your own custom data sources with AWS Lambda. Amplify makes it simple to build scalable apps that need real-time updates, local data access for offline scenarios, and data synchronization with configurable conflict resolution when devices come back online. Powered by AWS AppSync and Amazon API Gateway.
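Conflict resolution is the least obvious part of that description, so here is a rough sketch of one common strategy, version-based optimistic concurrency: each record carries a version number, and a write based on a stale version is rejected so the client can re-read and retry. This is plain Python for illustration, not Amplify or AppSync code; the `VersionedStore` class, the `ConflictError` exception, and the `_version` field name are assumptions of this sketch.

```python
class ConflictError(Exception):
    """Raised when a write is based on an out-of-date version of the record."""

class VersionedStore:
    def __init__(self):
        self._items = {}  # key -> record dict that includes "_version"

    def get(self, key):
        return dict(self._items[key])

    def put(self, key, fields, expected_version):
        """Apply the write only if the caller saw the latest version."""
        current = self._items.get(key)
        current_version = current["_version"] if current else 0
        if expected_version != current_version:
            raise ConflictError(
                f"{key}: expected v{expected_version}, store has v{current_version}"
            )
        record = dict(fields)
        record["_version"] = current_version + 1
        self._items[key] = record
        return dict(record)

if __name__ == "__main__":
    store = VersionedStore()
    store.put("cart", {"qty": 1}, expected_version=0)
    store.put("cart", {"qty": 2}, expected_version=1)      # online device wins
    try:
        store.put("cart", {"qty": 5}, expected_version=1)  # offline device, stale
    except ConflictError as err:
        print("rejected:", err)
```

A device that was offline would typically catch the rejection, fetch the latest record, and either merge its change or ask the user, which is where the "configurable" part comes in.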

Functions

You can add a Lambda function to your project and use it alongside a REST API or, through the @function directive, as a data source in your GraphQL API. Using the CLI, you can update the Lambda execution role policies for your function to access other resources created and managed by the CLI. The Amplify CLI lets you develop, test, and deploy Lambda functions in a variety of runtimes. After choosing a runtime, you can pick a function template for that runtime to help bootstrap your Lambda function.

Geo

In just a few minutes, add location-aware features such as maps and location search to your JavaScript web application. Amplify Geo comes with pre-integrated map user interface (UI) components based on the popular MapLibre open-source library, and the Amplify Command Line Interface (CLI) has been updated with support for provisioning all required cloud location services. For greater flexibility and advanced visualization options, you can choose from a variety of community-developed MapLibre plugins or customize embedded maps to match your app’s theme. Powered by Amazon Location Service.

Interactions

With a single line of code, build interactive and engaging conversational bots using the same deep learning technologies that power Amazon Alexa. Chatbots can create great user experiences for tasks such as automated customer chat support, product information and recommendations, or streamlining common work activities. Powered by Amazon Lex.

Predictions

Enhance your app by adding AI/ML capabilities. Use cases such as translating text, generating speech from text, identifying entities in images, interpreting text, and transcribing text are easy to implement. Amplify also simplifies orchestrating advanced use cases, such as uploading images for automatic training and using GraphQL directives to chain multiple AI/ML actions. Powered by Amazon SageMaker and other Amazon Machine Learning services.

PubSub

Pass messages between your app instances and your app’s backend to create real-time interactive experiences. Amplify provides connectivity with cloud-based message-oriented middleware. Powered by AWS IoT services and generic MQTT-over-WebSocket providers.

Push notifications

Improve customer engagement by using marketing and analytics capabilities. Use customer analytics to better segment and target your customers. You can tailor your content and communicate through multiple channels, including email, text messages, and push notifications. Powered by Amazon Pinpoint.

Storage

Store and manage user-generated content such as photos and videos securely on device or in the cloud. The AWS Amplify Storage module provides a simple mechanism for managing user content for your app in public, protected, or private storage buckets. Cloud-scale storage makes it easy to take your application from prototype to production. Powered by Amazon S3.

Ship

Summary

Host static web apps using the Amplify console or CLI.

Amplify Hosting

Fullstack web apps may be deployed and hosted with AWS Amplify’s fully managed service, which includes integrated CI/CD workflows that speed up your application release cycle. A frontend developed with single page application frameworks like React, Angular, Vue, or Gatsby and a backend built with cloud resources like GraphQL or REST APIs, file and data storage, make up a fullstack serverless application. Changes to your frontend and backend are deployed in a single workflow with each code commit when you simply connect your application’s code repository in the Amplify console.

Manage and scale

Summary

Use Amplify Studio to manage app users and content.

User management

Manage authenticated users with Amplify Studio. Create and edit users and groups, edit user attributes, automatically verify signups, and more, without going through verification flows.

Content management

Through Amplify Studio, developers can give testers and content editors access to edit the app data. Admins can save content as markdown to render rich text.

Override generated resources

Change fine-grained backend resource settings and override them using CDK. Amplify does the heavy lifting for you. For instance, use Amplify to add new Cognito resources to your backend with default settings, then use “amplify override auth” to override only the settings you want to change.
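Running the override command for auth generates a TypeScript file containing an override function, which receives the generated Cognito resources and mutates only the settings you care about. The sketch below shows the general shape. The AuthStackLike interface is a hypothetical, simplified stand-in for the type the CLI's helper package provides, and in a real project the function would be exported from the generated file.

```typescript
// Hypothetical, simplified stand-in for the Cognito stack template that the
// Amplify CLI passes to override(); the real type comes from the CLI's helper package.
interface AuthStackLike {
  userPool: {
    policies?: {
      passwordPolicy?: {
        minimumLength?: number;
        requireSymbols?: boolean;
        temporaryPasswordValidityDays?: number;
      };
    };
  };
}

// Overrides a single setting and keeps every other generated default intact.
function override(resources: AuthStackLike): void {
  resources.userPool.policies = {
    passwordPolicy: {
      // Preserve the password policy that Amplify generated.
      ...resources.userPool.policies?.passwordPolicy,
      // Change only how long a temporary password stays valid.
      temporaryPasswordValidityDays: 3,
    },
  };
}
```

Spreading the existing policy first is the key design choice: the override stays a small delta on top of Amplify's defaults rather than a full redefinition.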

Custom AWS resources

The Amplify CLI offers escape hatches for adding custom AWS resources using CDK or CloudFormation. Run the “amplify add custom” command in your Amplify project to get CDK or CloudFormation placeholders, along with mechanisms to reference other Amplify-generated resources.

Access AWS resources

Amplify is built on Infrastructure-as-Code and deploys resources inside your account. Use Amplify’s Function and Container support to add business logic to your backend. Give your container access to an existing database, or give functions access to an SNS topic so they can send an SMS.

Import AWS resources

With Amplify Studio, you can bring existing resources, such as your Amazon Cognito user pool and federated identities (identity pool) or storage resources such as DynamoDB and S3, into an Amplify project. This allows your storage (S3), API (GraphQL), and other resources to use your existing authentication system.

Command hooks

Command Hooks let you run custom scripts before, during, and after Amplify CLI commands (“amplify push,” “amplify api gql-compile,” and more). During deployment, customers can run credential scans, trigger validation tests, and clean up build artifacts. This enables you to adapt Amplify’s best-practice defaults to the operational and security requirements of your company.
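To make the hook mechanism concrete, the sketch below shows the decision logic a pre-push hook might apply. It assumes the CLI passes the hook a JSON payload on standard input that describes the command and environment, and that a non-zero exit code stops the command. The payload fields shown, the function name, and the "prod" policy are all illustrative assumptions.

```typescript
// Assumed shape of the JSON payload that a hook script reads from stdin.
interface HookPayload {
  data: {
    amplify: {
      command: string;
      subCommand?: string;
      environment?: { envName: string };
    };
  };
  error?: { message: string };
}

// Hypothetical policy: block "amplify push" to the "prod" environment
// unless validation tests have passed. Returns the exit code for the hook.
function hookExitCode(rawStdin: string, validationPassed: boolean): number {
  const payload = JSON.parse(rawStdin) as HookPayload;
  const { command, environment } = payload.data.amplify;

  if (command === "push" && environment?.envName === "prod" && !validationPassed) {
    return 1; // non-zero exit asks the CLI to stop the command
  }
  return 0; // allow the command to continue
}
```

A real hook script would read standard input, call a function like this, and exit the process with the returned code.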

Infrastructure-as-Code Export

Amplify can be integrated into your internal deployment systems or used alongside your existing DevOps processes and tools to enforce deployment policies. Amplify’s export capability lets you export your Amplify project to your preferred toolchain using CDK. The “amplify export” command exports the Amplify CLI build artifacts, including CloudFormation templates, API resolver code, and client-side code generation.

Tools

Amplify Libraries

Available for Flutter, JavaScript, Swift, and Android.

AWS Amplify provides use case-centric open source libraries for building cloud-powered mobile and web applications. Powered by AWS services, Amplify libraries can be used with your existing AWS backend or with new backends created with Amplify Studio and the Amplify CLI.

Amplify UI components

Amplify UI Components is an open-source UI toolkit that wraps cloud-connected workflows in cross-framework UI components. AWS Amplify offers drop-in user interface components for authentication, storage, and interactions, along with a style guide for your apps that integrates seamlessly with the cloud services you have configured.

Amplify Studio

Amplify Studio makes it simple to create app backends and manage app content. It offers a visual interface for data modeling, authorization, authentication, and user and group management. As you create backend resources, Amplify Studio produces automation templates, allowing for smooth integration with the Amplify CLI. This lets you add more functionality to your app’s backend and set up multiple environments for testing and team collaboration. You can give team members without an AWS account access to Amplify Studio, so that both developers and non-developers can access the data they need to build and manage apps more effectively.

Amplify CLI toolchain

The Amplify Command Line Interface (CLI) is a toolchain for configuring and maintaining your app’s backend from your local desktop. Use the CLI’s interactive workflow and intuitive use cases, such as storage, API, and auth, to configure cloud capabilities. Test features locally and set up multiple environments. All configured resources are available to customers as infrastructure-as-code templates, which makes team collaboration easier and simplifies integration with Amplify’s continuous integration and delivery workflow.

Amplify Hosting

Set up CI/CD for the frontend and backend, host your frontend web application, create and delete backend environments, and use Amplify Studio to manage users and app content.

Read more on Govindhtech.com

#AWSAmplifyfeatures#GraphQ#iOS#AWSAppSync#AmazonDynamoDB#RESTAPIs#Amplify#deeplearning#AmazonSagemaker#AmazonS3#News#Technews#Technology#technologynews#Technologytrends#govindhtech
