So.cl is saying farewell as of March 15, 2017

Socl has been a wonderful outlet for creative expression, as well as a place to enjoy a supportive community of like-minded people, sharing and learning together. In supporting you, Socl’s unique community of creators, we have learned invaluable lessons in what it takes to establish and maintain community, as well as to introduce novel ways to make, share and collect digital stuff we love. Through you we've been able to introduce many interesting ideas such as:

Collages; beautiful posts of content discovered through search and auto-assembled

Riffs; themed conversations based on community inspiration

Automatic Translation; seamlessly spanning languages and culture

Collections; telling your story through collections of posts

Picotales, Video Parties, Blinks, Kodu - new ways to create and share

From the very beginning, we've been amazed by your creativity, openness, and positivity. Thank you so very much for sharing your inspirations with us.

The So.cl Team

Kodu Workshop Summary

This year, FUSE Labs collaborated with Martin Luther King Jr Elementary and Highland Middle School to host a computer science workshop for students in Seattle Public Schools and Bellevue School District. The workshop was a four-day learning experience geared towards exposing students to computer science and inspiring them to pursue STEM-related fields. Following a rigorous process emphasizing design and coding, 50 students aged 9 to 14 had their first coding experience using a free Microsoft application, Kodu.

In this session, students were introduced to basic coding concepts and design skills using Kodu. We coached students on designing terrain as well as coding their first single-player game, multiplayer game, and autonomous game. With just a few days of instruction, students aged 9 to 14 were already engineering their own Kodu multiplayer mob games and racing games with beautiful terrain and responsive obstacles.

Students present their Microsoft Kodu world to their teacher from Martin Luther King Jr Elementary, Ben Lawton

A student tests her new world with Kodu workshop trainer, Michael Braun

A student presents his Microsoft Kodu world to Highland Middle School teacher, Dennis Crane

"People can't get jobs, and we have jobs that can't be filled," says Brad Smith, Microsoft President.

The percentage of CS degrees earned by women has actually been going down since peaking around 37% in 1984. Currently it's closer to 18% of the degrees being earned by women. Hopefully, by exposing girls to CS early, we can capture their imagination before they're told that CS is for boys. - Computer World

There are likely to be 150,000 computing jobs opening up each year through 2020, according to an analysis of federal forecasts by the Association for Computing Machinery, a professional society for computer researchers. But despite the hoopla around start-up celebrities like Mark Zuckerberg of Facebook, fewer than 40,000 American students received bachelor's degrees in computer science during 2010, the National Center for Education Statistics estimates. - Fostering Tech Talent in Schools

FUSE Labs has been curating a Kodu curriculum that combines design and computer science with subjects in STEM (Science, Technology, Engineering, and Math), targeting 9- to 14-year-old students with little to no coding experience. The Kodu curriculum and supporting lessons focus on understanding design and coding in the setting of a classroom, club, and camp. The curriculum has been tested with elementary and middle school students.

One student built a fully functional multiplayer competition with keyboard and mouse input, which keeps track of the points scored by submarines collecting seashells underwater. The Kodu app serves as an open-ended game creation tool where the students design their own terrain, create their own directions, and code their own characters.

For the single-player game, another student programmed a racing game in which six autonomous characters and a single player compete to be first to reach the finish, the castle. Each autonomous character followed its own path, designed by the student. The student also designed the racetrack with hidden minefields to avoid along the racecourse.

The Kodu students shared their interest in pursuing computer science and future opportunities in technology companies. As our world becomes ever more technologically advanced, proficiency in computer science will become more critical for employment opportunities and professional success. We must therefore encourage learning of these skills from an early age. With the support of free applications like Kodu, we can teach our students to go one step further, becoming creators—not just consumers—of technology. We look forward to engaging with future Kodu-based events to spark the imaginations of the next generation of engineers.

This week, we added new sample bots to our Bot Builder-Samples GitHub repository, showcasing how some Bing and Cognitive Services APIs for vision, speech and search can help developers build smarter conversational interfaces.

Read more in the Bot Framework Blog!

As bots become an important part of how people are productive and have fun, bot analytics are becoming ever more important to the bot developer. What did my bot’s traffic look like? Who is using my bot? What channels are most popular?

Read more on the Bot Framework Blog!

Check out the new Bot Builder SDK samples we released last week!

Last June we organized Botness, a two-day gathering for 100ish people in San Francisco, at PCH/Highway 1, to share all things bots: design, tools, AI, components, policy, privacy… & discuss how together we can shape the evolving ecosystem to support innovation and open collaboration, making bots work across operating systems, messaging apps, and websites.

Read more...

Bot and CaaP (Conversation as a Platform) momentum continues at Microsoft. Recently we had a fantastic hack with the super talented folks at Kik and learned a ton. We’ve been working with the Kik team very closely to add the Kik channel to Microsoft Bot Framework. As you see in Ivar Chan’s (Partner Success, Kik Interactive Inc.) blog post, the Kik channel is now live.

Read more on the Bot Framework Blog!

When we first introduced the Microsoft Bot Framework at Build 2016, the framework provided support for text and image conversation across all of our conversation channels. We’ve had a great response, and developers are creating and connecting new bots of all shapes and sizes every day. In parallel we’ve been working on updating the Bot Framework with new functionality. Last week we introduced the Facebook channel, enabling developers to make their bots available to everyone on Facebook, and have started taking submissions for our yet-to-be-opened bot directory.

Read more on the Bot Framework Blog!

Designing & Studying Social Computing Systems―FUSE research at #CHI2016

This week at CHI we are presenting two research projects, a qualitative study and a system design, that highlight how the design of online communities can support creative expression and personal connections that otherwise wouldn't have existed.

The first project, titled Journeys & Notes, focuses on the design and deployment of a production-quality mobile app that enables people to log their travels, leave notes behind, and build a community around spaces in between destinations (think of it as a Foursquare for trips). The app is playful, supports creative expression, and allows people to do this anonymously or pseudonymously. The project grew out of an internship project by Justin Cranshaw (now a full-time member of our lab), in collaboration with designer S.A. Needham and myself. Justin is presenting the work on Wed May 11 at 4:30pm in room 210AE.

The second project, Surviving an "Eternal September," is a qualitative study of how the NoSleep subreddit, an online community of horror fiction writers, managed accelerated growth after being featured on the home page of Reddit. Usenet's infamous "Eternal September" suggests that large influxes of newcomers can hurt online communities, but our interviews suggest that NoSleep survived without major incident because of three traits of this community: (1) administrators, (2) shared community moderation, and (3) technological infrastructure for preventing norm violations. This research was done in collaboration with Charles Kiene, a UW undergraduate student, and his advisor Benjamin Mako Hill at the UW Department of Communication. Charles is presenting the work on Mon May 9 at 4:30 pm in room LL20A.

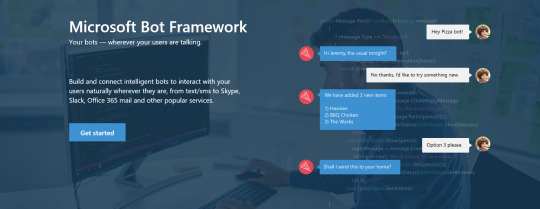

Microsoft Bot Framework

Written by Colleen Estrada

As some of you may have noticed, FUSE Labs has been doing a lot of thinking about and work on conversational user experiences. Today, Microsoft released the public preview of the Microsoft Bot Framework. In the spirit of One Microsoft, the Bot Framework is a collaborative effort across many teams, including Microsoft Technology and Research, Microsoft’s Applications and Services Group and Microsoft’s Developer Experience teams. The framework provides just what developers need to build and connect intelligent bots that interact naturally wherever users are talking, from text/SMS to Skype, Slack, Office 365 mail and other popular services.

Bots (or conversation agents) are rapidly becoming an integral part of one’s digital experience – they are as vital a way for users to interact with a service or application as is a web site or a mobile experience. Developers writing bots all face the same problems: bots require basic I/O; they must have language and dialog skills; and they must connect to users – preferably in any conversation experience and language the user chooses. The Bot Framework provides tools to easily solve these problems and more for developers, e.g., automatic translation to more than 30 languages, user and conversation state management, debugging tools, an embeddable web chat control and a way for users to discover, try, and add bots to the conversation experiences they love.

The Bot Framework consists of three main components:

We hope you take the Bot Framework for a spin – you can try some of the Sample Bots from Microsoft Build 2016 today by visiting the Bot Directory.

Happy making. And chatting.

Social Computing Symposium 2016

Click here to see all sessions and videos!

The 2016 Social Computing Symposium (SoCosy) was a two-day conversation focused on the utopian (entertainment) and dystopian (harassment, threats, trolling) aspects of technology. The goal was to interrogate how to humanize conversations through moderation, engagement, and listening.

Special thanks to the Interactive Telecommunications Program at NYU for hosting the conference, to the 70+ people who were able to fit in the room, and to the catalyzers who made it happen: Kati London, Brady Forrest, Elizabeth Churchill, Matt Stempeck, Kate Crawford, Clive Thompson, and Liz Lawley. It was an incredibly diverse set of attendees, mixing up social startups, researchers, writers, and influential commentators.

We hope you enjoy the videos of the talks. Make sure to watch the audience-choice talks; they are always my favorite because speakers only find out in the morning that they have been picked to give a talk ;-), so we hear what people REALLY want to hear and say.

-Lili Cheng

Crowds, bots, and remixing: FUSE’s research at CSCW

This week, three of our collaborators will be presenting some of our recent work at the Conference on Computer-Supported Cooperative Work and Social Computing (CSCW) in San Francisco. This research explores several angles on crowd research, from how to engage crowds in collective action, to how to design crowd-powered applications, to how people learn computational thinking by reusing crowd-produced programs.

Below is a list of the papers. If you are attending CSCW, please stop by!

1. Botivist: Calling Volunteers to Action using Online Bots. In collaboration with Saiph Savage and Tobias Hollerer (UCSB), this work explores different recruitment strategies to engage people in “micro” activism using Twitter bots. Tuesday March 1 at 11:00 am in Bayview B.

2. Storia: Summarizing Social Media Content based on Narrative Theory using Crowdsourcing. In collaboration with Joy Kim (Stanford), we introduce a novel crowdsourcing technique for generating stories of events based on social media data and narrative templates. Tuesday March 1 at 2:30 pm in Bayview B.

3. Remixing as a Pathway to Computational Thinking. In collaboration with Sayamindu Dasgupta (MIT), William Hale and Benjamin Mako Hill (UW), this work presents some of the first large-scale empirical evidence of how reusing crowd-produced programs helps people learn programming. Wednesday March 2 at 11:00 am in Bayview A.

Design Expo 2016

[written by Colleen Estrada] It’s that time of year - time to formally announce the 2016 Microsoft Research Design Expo Challenge...

The Microsoft Research Design Expo features student teams from top design schools around the world, each responding to the challenge as only top design students can - and always with fascinating results. Here’s this year’s challenge which will be answered in Redmond, WA, USA during the first week of July, 2016.

Achieving Symbiosis and the Conversational User Interface (CUI)

The hope is that, in not too many years, human brains and computing machines will be coupled together very tightly, and that the resulting partnership will think as no human brain has ever thought and process data in a way not approached by the information-handling machines we know today.

- J.C.R. Licklider, Man-Computer Symbiosis, 1960

Move ahead an astonishing 55 years. Licklider’s prerequisites (and more) exist for Symbiosis. A plethora of chatty bots are offering to do things for us in our messaging experiences. A series of personal agent services have emerged that leverage machines, humans or both to complete tasks for us (x.ai, Clara Labs, Fancy Hands, TaskRabbit, Facebook “M” to name a few) – the commanding interface is email, text or a voice call. WeChat is perhaps the most stunning example of the power of chat-driven UI to date, providing indispensable value to users through millions of verified services in an all-in-one system that allows you to do everything from grabbing a taxi, to paying the electric bill or sending money to a friend. Offerings such as Siri, Google Now and Cortana are also demonstrating value to millions of people, particularly on mobile form factors, where the conversational user interface (CUI) is often superior to the GUI.

Clearly, the value of the CUI is not found simply in ‘speech’.

The CUI is more than just synthesized speech; it is an intelligent interface. It’s intelligent because it combines these voice technologies with natural-language understanding of the intention behind those spoken words, not just recognizing the words as a text transcription. The rest of the intelligence comes from contextual awareness (who said what, when and where), perceptive listening (automatically waking up when you speak) and artificial intelligence reasoning.

- Ron Kaplan, Beyond the GUI: It’s Time for a Conversational User Interface, Wired Magazine, March 2013

We are in complete agreement with Kaplan’s statement, made a mere two years ago. Still, it is the combination of the CUI, adaptive/learning sensor technologies, a rich personal profile, increasingly pervasive user agents and service bots (powered by machines, humans or both), and the ability to transact fluidly that will enable the most powerful and human computing experiences we have ever been able to design and build: experiences that span digital and physical environments and form factors, and from which we will all benefit. 2015 seems poised to be “The Year of the Conversational Bot,” but we are still just scratching the surface of the Symbiosis Promise.

2016 Design Expo Challenge

Design a product, service or solution that demonstrates the value and differentiation of the CUI. Your creation should demonstrate the best qualities of a symbiotic human-computer experience which features an interface designed to interpret human language and intent. Of course, language takes many forms – from speech, to text, to gesture, body language, and even thought. Your creation should clearly demonstrate foundational elements the CUI calls upon in order to delight people. It should meet a clear need and be extensible to wider applications. It may be near-term practical or blue sky, but the idea must be innovative, technically feasible, and have a realistic chance of adoption if instantiated. Of course, to deliver an optimal experience, much is implied – from data and identity permissions to cross-app agent and/or bot cooperation and coordination (first and third party); your design should minimally show awareness of these barriers or explore solutions to them.

Kodu & the BBC micro:bit at BETT 2016

We recently traveled to the UK to attend the BETT Show where we showed off Kodu’s support for the BBC micro:bit.

What is a BBC micro:bit?

If you aren’t from the UK, you may not have heard of the micro:bit. The micro:bit is a small, programmable device, developed by the BBC and partners including Microsoft, that makes it easy for children to get creative with technology. In the coming weeks, close to 1 million micro:bits will be given freely to every Year 7 child or equivalent across the UK.

How does Kodu work with the micro:bit?

We’ve added new tiles to Kodu’s programming language to allow interaction with different parts of the micro:bit. With these tiles you can do things like control character movement using the micro:bit’s accelerometer, jump and shoot with a button press, display animations on the screen, and more.

Our Experience at BETT

BETT was very busy, with 40,000 attendees across four days. There was massive, unrelenting interest in the micro:bit. Our booth was one of the busiest at the show. With the pending drop of 1 million micro:bits into UK classrooms, teachers and other school faculty visiting our booth were full of questions. They were generally excited about the device, though also a bit anxious about how they’ll teach with it. We spent a lot of time demoing how the online editor works, and giving tours of the supporting lesson materials. This seemed to help demystify the device and answer basic questions. We also gave out three thousand copies of the BBC micro:bit Quick Start Guide for Teachers.

After demoing the basics of the device itself, we would turn attention to Kodu. We would typically demo how Kodu can be programmed to use the micro:bit as a game controller: steering character movement by tilting the micro:bit, shooting missiles on a button press, and displaying game score on the micro:bit’s LED display. There is much love for Kodu in the UK. The majority of the teachers we met were either using Kodu now, or had used Kodu sometime in the past. Teachers consistently loved Kodu’s micro:bit integration – with several stating this is where they’ll start their Year 7s when they receive their micro:bits before moving on to the web programming interface.

Parting Thoughts

It was wonderful to be able to personally connect with so many teachers that love Kodu and use it in their classrooms. It was also fantastic to witness at BETT the excitement building around the micro:bit. With major initiatives like the micro:bit underway, along with the revamped UK computing curriculum and increased focus on teaching CS topics in classrooms around the planet, it seems 2016 is shaping up to be a very interesting year for Kodu and similar products!

GUIs vs CUIs vs C64UIs

Long before Windows, around the time dinosaurs roamed the earth, there were Commodore 64s. I got my intro to programming on one of those. The programming language was BASIC, and this is what the screen looked like:

Your programs started with a series of interspersed INPUTs and PRINTs. This is how your programs interacted with the user. The user interface, such as it was, consisted of a series of questions (asked by the program) and answers (typed by the user).

Today, of course, this type of user interface is called a CUI, and the programs are called bots.

Right?

I hope not!

The problem with this type of interface is its inflexibility. If the user made one wrong entry and didn't realize it before pressing Enter, he had to quit the program and start over.

This was recognized as a problem long before graphical user interfaces became popular, and that’s why even character-mode applications implemented forms. This is what that looked like:

Forms offer the convenience of being able to go back and forward between fields. You can fill the fields in any order, and you can go back and correct any field you want before submitting the form. No more starting over and retyping everything just because you entered one value incorrectly.
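To make the contrast concrete, here is a minimal Python sketch of the form model described above. The `Form` class and its field names are hypothetical, not taken from any real library: fields can be set in any order, overwritten to fix a mistake, and are validated only once, at submit time.

```python
from dataclasses import dataclass, field


@dataclass
class Form:
    """A character-mode style form: fields can be filled in any order and
    corrected freely until the user submits."""
    field_names: tuple[str, ...]
    values: dict[str, str] = field(default_factory=dict)

    def set(self, name: str, value: str) -> None:
        # Setting a field again simply overwrites it; nothing has to be retyped.
        if name not in self.field_names:
            raise KeyError(f"unknown field: {name}")
        self.values[name] = value

    def missing(self) -> list[str]:
        return [n for n in self.field_names if not self.values.get(n)]

    def submit(self) -> dict[str, str]:
        # Validation happens once, at the end, not after every answer.
        gaps = self.missing()
        if gaps:
            raise ValueError(f"please fill in: {', '.join(gaps)}")
        return dict(self.values)


form = Form(("name", "city", "age"))
form.set("city", "Seattle")   # fields in any order...
form.set("age", "41")
form.set("name", "Ada")
form.set("age", "14")         # ...and a typo fixed without starting over
print(form.submit())  # {'city': 'Seattle', 'age': '14', 'name': 'Ada'}
```

In a C64-style program, the equivalent of that last correction would have meant running the whole sequence of INPUTs again.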

If your user interface is a long series of questions that the user has to type the answers to, then that’s not a CUI, that’s more like a C64UI (for Commodore-64 UI). In a modern CUI the user is the one in control. The user asks questions in a natural language, and the bot answers the questions. In ancient C64UIs the control is inverted: the program asks questions in a natural language, and the user answers the questions (and has to start over if he types an answer wrong).

Not every program needs to be a bot. Some programs should be apps. If the bulk of the functionality of your program consists of asking the user a long series of questions, then the question ought to be asked: should your program be an app instead – and have convenient forms for entering data?

The other requirement for bots is that they have to match users’ expectations exactly. If your bot does less than users expect, they will get frustrated and will not use your bot again. If your bot does more than users expect, they will never discover those features. You can mitigate this last problem by allowing the user to ask a “what can you do?” question and then replying to that. But this reply has to be short, otherwise it is like having to read a user manual in order to use your bot… didn’t we solve that problem long ago with GUIs?
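As a rough illustration, here is a hypothetical single-purpose bot in Python. The intent names, keywords, and `reply` function are invented for this sketch, and naive keyword matching stands in for a real language-understanding model. It shows two ideas from this post: the bot's scope is small enough to describe in one sentence, and both "what can you do?" and unrecognized requests get that same short answer.

```python
import re

# Hypothetical single-purpose bot: two intents, matched by keywords.
INTENTS = {
    "check_weather": {"weather", "forecast", "rain"},
    "set_reminder": {"remind", "reminder"},
}
HELP_TEXT = "I can check the weather and set reminders."


def reply(utterance: str) -> str:
    text = utterance.lower()
    if "what can you do" in text:
        # Keep the capability answer to one line, not a user manual.
        return HELP_TEXT
    # Match whole words so that, e.g., "train" does not trigger "rain".
    words = set(re.findall(r"[a-z']+", text))
    for intent, keywords in INTENTS.items():
        if words & keywords:
            return f"OK, handling {intent}."
    # Out-of-scope requests get the same short summary instead of a dead end.
    return "Sorry, I didn't get that. " + HELP_TEXT


print(reply("What can you do?"))        # I can check the weather and set reminders.
print(reply("Will it rain tomorrow?"))  # OK, handling check_weather.
```

Because the universe of expected utterances is small, the help text can list everything the bot does without turning into a manual.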

My colleague Andres Monroy-Hernandez offers the following techniques to alleviate some of these problems:

Know the user. Avoid asking questions by knowing the user and predicting the answers to those questions. For example, in a meeting-scheduling agent, you can reduce verbosity if you have access to the user's calendar and know her time preferences, preferred locations, and the people she meets with, so that a user can simply say "@bot: arrange a meeting with Rajeev." That is a lot easier than having to click through a bunch of windows, compare calendars, find rooms, etc. Obviously bots are not there yet, but that should be our north star.

Understand any conversation. A bot should be able to understand any utterance. This can be accomplished by:

Single-purpose bots. For example, instead of general-purpose bots that pretend to do and understand everything, we might want to start with single-purpose bots to put boundaries on the universe of utterances. I think bot specialization builds nicely on how we already think about professions (plumbers, secretaries, lawyers, etc.).

Improve language understanding models through data and humans in the loop

Focus on scenarios that naturally emerge from conversations. If the user has to open a conversation app just to talk to the bot, that is not as effective as if the user was already in the conversation app when his or her need came up. Forwarding an email to a bot, or CC'ing or @mentioning a bot in an existing conversation, makes sense. Opening an app just to use a bot feels like a bit of a stretch.
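Here is a minimal Python sketch of the "know the user" idea for the meeting example above. Everything in it is hypothetical: `UserProfile` stands in for whatever a real agent knows about its user, only the user's own calendar is checked (a real scheduler would also compare Rajeev's), and candidate slots are tried in 30-minute steps. The utterance supplies only the attendee; the time and place are predicted, and the bot asks a question only when it cannot predict the answer.

```python
from dataclasses import dataclass
from datetime import date, datetime, time, timedelta
from typing import Optional


@dataclass
class UserProfile:
    # Hypothetical stand-ins for what a scheduling bot might know about its user.
    preferred_start: time
    preferred_location: str
    busy: list[tuple[datetime, datetime]]  # existing calendar entries


def first_free_slot(profile: UserProfile, day: date,
                    length: timedelta = timedelta(minutes=30),
                    day_end: time = time(17, 0)) -> Optional[datetime]:
    """Return the first slot on `day`, starting at the user's preferred time,
    that does not overlap any entry already on their calendar."""
    start = datetime.combine(day, profile.preferred_start)
    end_of_day = datetime.combine(day, day_end)
    while start + length <= end_of_day:
        end = start + length
        # Two intervals are disjoint if one ends before the other starts.
        if all(end <= b_start or start >= b_end for b_start, b_end in profile.busy):
            return start
        start += timedelta(minutes=30)
    return None


def plan_meeting(profile: UserProfile, attendee: str, day: date) -> str:
    # Only the attendee comes from the utterance; time and place are predicted.
    slot = first_free_slot(profile, day)
    if slot is None:
        # Ask a question only when the answer can't be predicted.
        return f"No free slot on {day} for a meeting with {attendee}. Which day works instead?"
    return f"Meeting with {attendee} at {slot:%H:%M} in {profile.preferred_location}."


me = UserProfile(
    preferred_start=time(9, 0),
    preferred_location="Conference Room A",
    busy=[(datetime(2016, 5, 2, 9, 0), datetime(2016, 5, 2, 10, 0))],
)
print(plan_meeting(me, "Rajeev", date(2016, 5, 2)))
# Meeting with Rajeev at 10:00 in Conference Room A.
```

The more the profile knows, the fewer questions the bot needs to ask; a richer version would also pick the location from past meetings with the same person.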
