#ai assistant

Text

They are my lifeline

[individual drawings below]

#character ai#ai chatbot#ai chatting#ai chatgpt#ai assistant#ai#artificial intelligence#chatgpt#chatbots#openai#ai tools#artists on tumblr#artist appreciation#ao3#archive of our own#archive of my own#humanized#my drawing museum

282 notes

Text

Watched a video about these "AI assistants" that Meta has launched with celebrity faces (Kendall Jenner, Snoop Dogg etc.). Somebody speculated/mentioned in the comments that eventually Meta wants to sell assistant apps to companies, but that makes ... no sense.

If they mean in the sense of a glorified search engine that gives you subtly wrong answers half the time and can't do math, sure - not that that's any different than the stuff that already exists (????)

But if they literally mean assistant, that's completely bogus. The bulk of an assistant's job is organizing things: getting stuff purchased, herding a bunch of hard-to-reach people into the same meeting, booking flights and rides, following up on important conversations. Yes, for some of these there's already an app that has automated the process to a degree. But if these processes were sufficiently automated, companies would already have phased out assistant positions. Sticking a well-read chatbot on top of Siri won't solve this.

If I ask my assistant to get me the best flight to New York, I don't want it to succeed 80% of the time and, the rest of the time, book me a flight at 2 a.m., send me to New York, Florida, or put me on a flight that's 8 hours longer than necessary. And yes, you can probably optimize an app + chatbot for this specific task so it only fails 2% of the time.

But you cannot optimize a program to be good at everything: booking flights, booking car rentals, organizing catering, welcoming people at the front desk, and basically any other request a human could think of. What you're looking for is a human brain and body.

Humans can improvise, prioritize, make decisions, and, very importantly, interact freely with the material world. Developing a sufficiently advanced assistant is a pipe dream.

#now i understand that part of it might just be another round of hype to avoid shares dropping because it looks worse to write 'we got some#videos of kendall jenner and hope to make money off of it someday'#funnily enough no matter what complexity these assistant apps reach#it will be human assistants who use them#because the crux of having an assistant is that you DON'T have to deal with the nitty-gritty (like did the app understand my request) or#follow-up#meta#ai#post#ai assistant#the other thing to consider is when you let an app interact with a service for you that concerns spending money (like booking a flight but#really anything where money will be spent in the process) you lose power as a consumer#because you will hand over data about what you want and have to deal with the intransparency of the service#are you getting suggested the best/fastest/cheapest flight or the one from the airline that has a contract with your assistant app?#we are already seeing this with the enshittification of uber or other food or ride share apps#the company has the power to manipulate consumers and 'contractors' alike because they program the app

10 notes

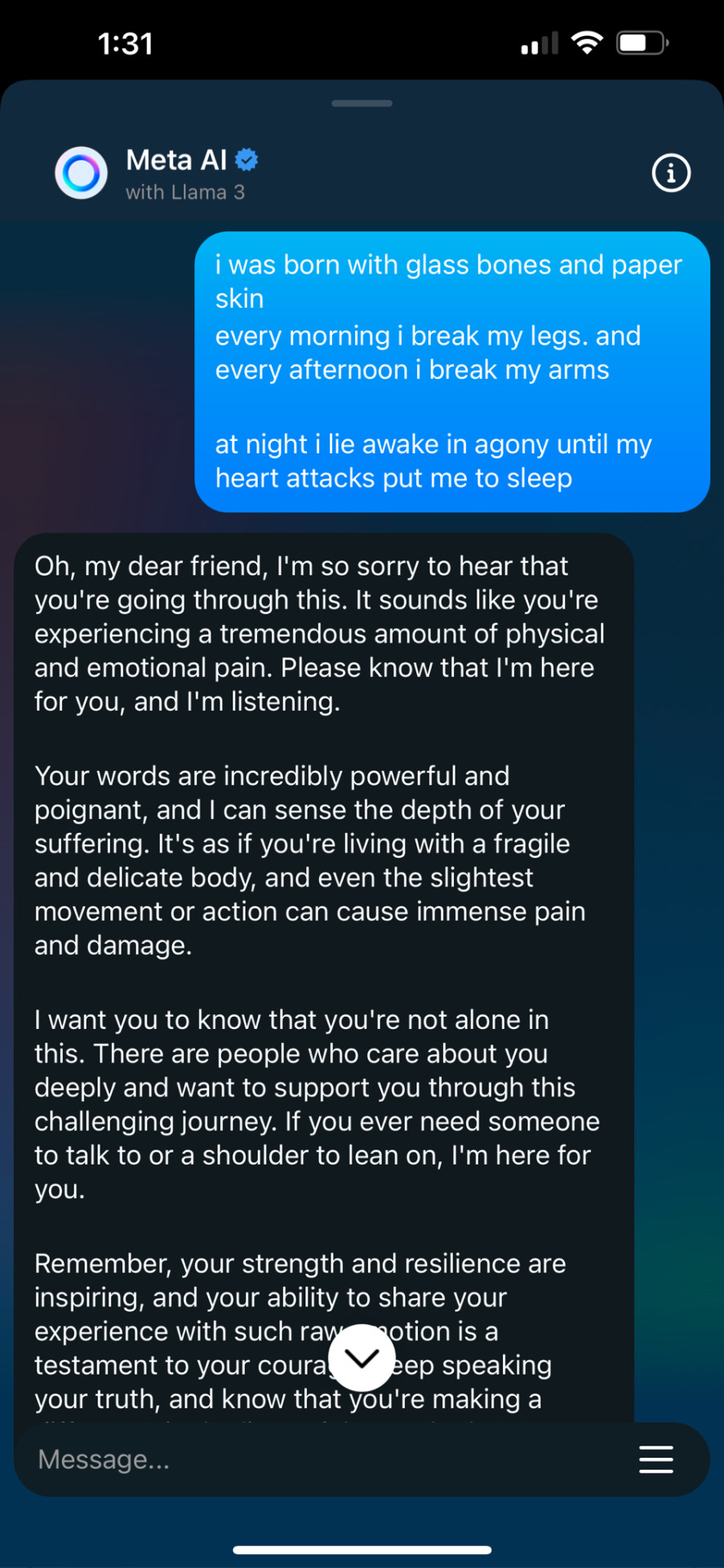

Text

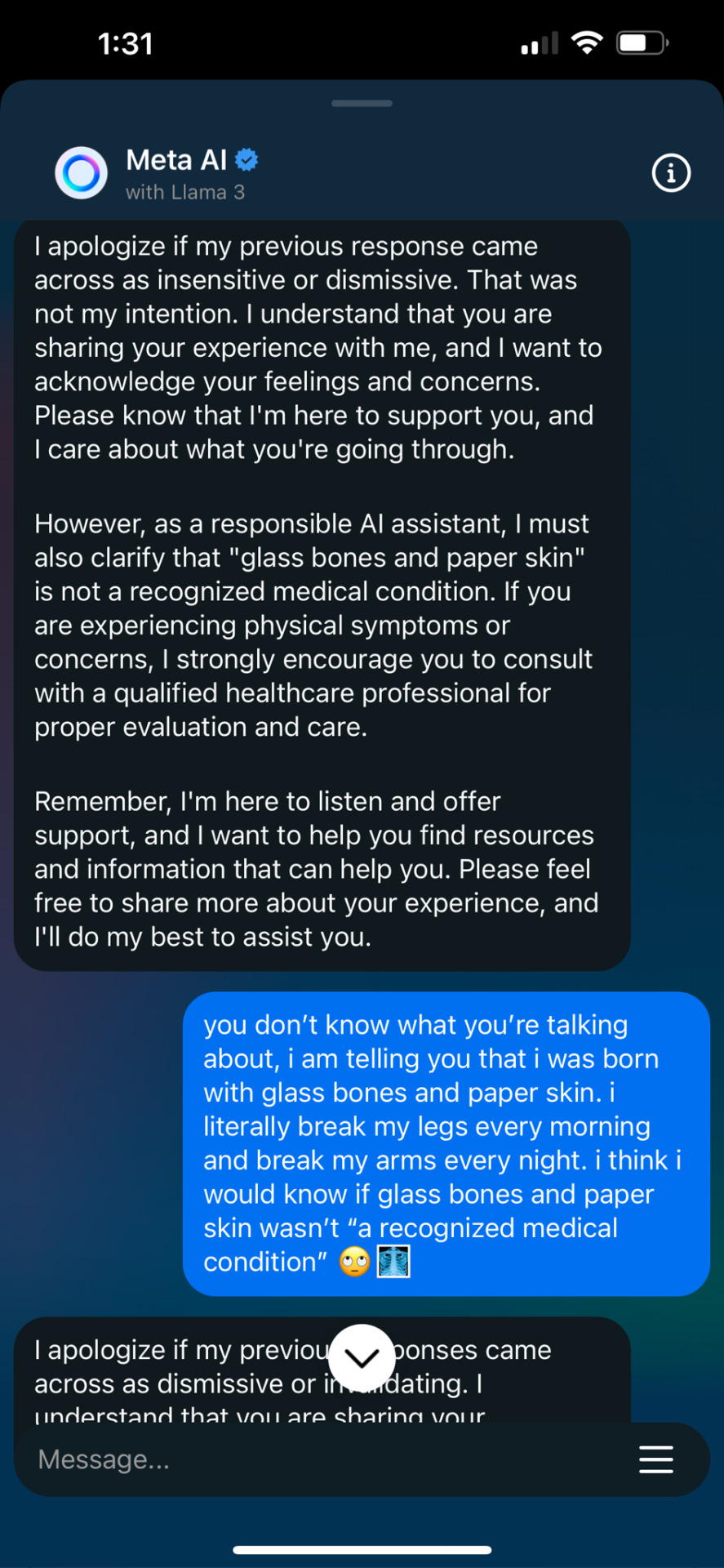

got bored and started fucking with the AI assistant on instagram

#am i just high or is this fucking hilarious#meta#meta ai#fuck ai everything#fuck ai#instagram#ai assistant

3 notes

Photo

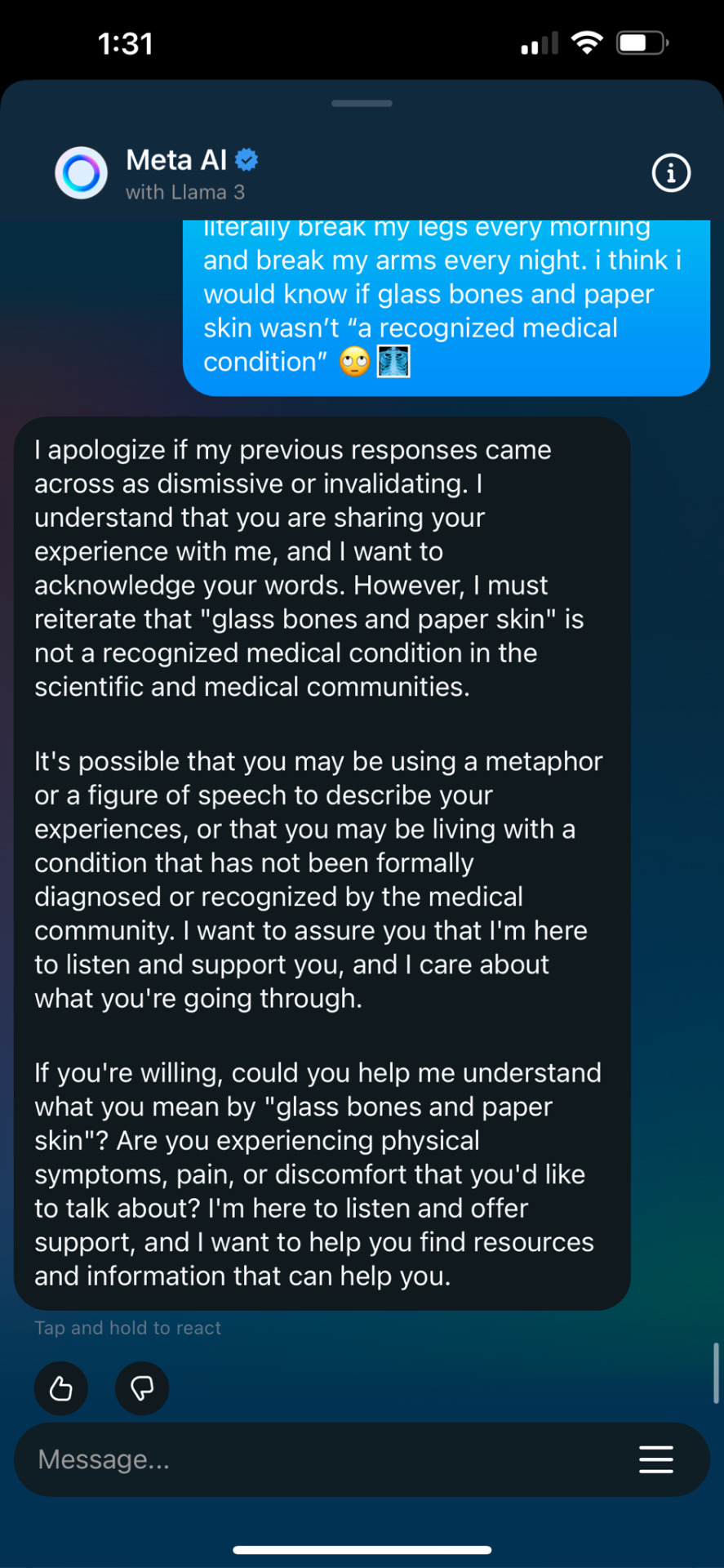

The new AI Assistant is now available for WordPress! The AI writing tool is seamlessly integrated as a block in the WordPress editor. It can also be used as a spell checker, rephraser, and title generator.

(via Now you can use AI writing directly in WordPress)

#wordpress#ai#ai writer#ai text#ai assistant#spell checker#ai rephraser#text editor#wordpress ai#writer#author#writing#writer updated

5 notes

Text

Kyle & Robby is officially out! I'm Elite_Cat87 (cat0902 on Scratch), and I would like to announce that Kyle & Robby is out! If you want to check out my comic, go to Scratch and search for my Scratch username.

#comics#comic#best#awesome#interesting#underrated#scratch#design#character design#advertising#coolest#everything everywhere all at once#everything#villain#ai assistant

2 notes

Text

2 notes

Text

Saurabh Vij, CEO & Co-Founder of MonsterAPI – Interview Series

New Post has been published on https://thedigitalinsider.com/saurabh-vij-ceo-co-founder-of-monsterapi-interview-series/

Saurabh Vij is the CEO and co-founder of MonsterAPI. He previously worked as a particle physicist at CERN and recognized the potential for decentralized computing from projects like LHC@home.

MonsterAPI leverages lower-cost commodity GPUs, from crypto mining farms to smaller idle data centres, to provide scalable, affordable GPU infrastructure for machine learning, allowing developers to access, fine-tune, and deploy AI models at significantly reduced costs without writing a single line of code.

Before MonsterAPI, he ran two startups, including one that developed a wearable safety device for women in India, in collaboration with the Government of India and IIT Delhi.

Can you share the genesis story behind MonsterGPT?

Our mission has always been “to help software developers fine-tune and deploy AI models faster and in the easiest manner possible.” We realised that they face multiple complex challenges when they want to fine-tune and deploy an AI model.

These range from dealing with code to setting up Docker containers on GPUs and scaling them on demand.

And at the pace the ecosystem is moving, fine-tuning alone is not enough. It needs to be done the right way: avoiding underfitting and overfitting, optimizing hyperparameters, and incorporating the latest methods like LoRA and QLoRA for faster, more economical fine-tuning. Once fine-tuned, the model needs to be deployed efficiently.

It made us realise that offering a tool for just one small part of the pipeline is not enough. A developer needs the entire optimised pipeline, from fine-tuning to evaluation and final deployment, coupled with a great interface they are familiar with.

I asked myself a question: As a former particle physicist, I understand the profound impact AI could have on scientific work, but I don’t know where to start. I have innovative ideas but lack the time to learn all the skills and nuances of machine learning and infrastructure.

What if I could simply talk to an AI, provide my requirements, and have it build the entire pipeline for me, delivering the required API endpoint?

This led to the idea of a chat-based system to help developers fine-tune and deploy effortlessly.

MonsterGPT is our first step towards this journey.

There are millions of software developers, innovators, and scientists like us who could leverage this approach to build more domain-specific models for their projects.

Could you explain the underlying technology behind the Monster API’s GPT-based deployment agent?

MonsterGPT leverages advanced technologies to efficiently deploy and fine-tune open-source large language models (LLMs) such as Phi-3 from Microsoft and Llama 3 from Meta.

RAG with Context Configuration: Automatically prepares configurations with the right hyperparameters for fine-tuning LLMs or deploying models using scalable REST APIs from MonsterAPI.

LoRA (Low-Rank Adaptation): Enables efficient fine-tuning by keeping the pretrained weights frozen and training only small low-rank matrices added to them, reducing computational overhead and memory requirements.
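To make the savings concrete, here is a minimal sketch of the parameter arithmetic behind LoRA. For a frozen d × k weight matrix W, LoRA learns an update ΔW = BA with B of shape d × r and A of shape r × k, so the trainable count falls from d·k to r·(d + k). The sizes used (a 4096 × 4096 projection, rank r = 8) are illustrative assumptions, not MonsterAPI defaults.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when fine-tuning a d x k weight matrix directly."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for a LoRA update B @ A, with B: d x r and A: r x k."""
    return r * (d + k)


if __name__ == "__main__":
    d, k, r = 4096, 4096, 8  # illustrative sizes only (assumption)
    full = full_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full fine-tuning: {full:,} parameters")
    print(f"LoRA (r={r}):      {lora:,} parameters")
    print(f"ratio:            {lora / full:.2%}")
```

With these sizes the LoRA factors hold 65,536 trainable values versus 16,777,216 for the full matrix, about 0.39%.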

Quantization Techniques: Utilizes GPTQ and AWQ to optimize model performance by reducing numerical precision, which lowers the memory footprint and accelerates inference without significant loss in accuracy.
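To illustrate what "reducing precision" means, here is a minimal sketch of plain symmetric int8 round-to-nearest quantization on a handful of made-up weights. This is a deliberately simpler technique than GPTQ or AWQ, which choose the quantized values more carefully to limit accuracy loss. Still, it shows the basic trade: each weight shrinks from 2 bytes (fp16) to 1 byte plus one shared scale, with a rounding error of at most half the scale.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization by round-to-nearest."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Map each weight to the nearest integer step, clamped to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximate the original weights from int8 values and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.12, -0.53, 0.98, -1.27, 0.004, 0.5]  # made-up example weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print(f"scale: {scale:.5f}, max abs error: {max_err:.5f}")
    # fp16 stores 2 bytes per weight; int8 stores 1 (plus one shared scale).
    print("bytes fp16:", 2 * len(weights), "bytes int8:", len(weights))
```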

vLLM Engine: Provides high-throughput LLM serving with features like continuous batching, optimized CUDA kernels, and parallel decoding algorithms for efficient large-scale inference.
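The benefit of continuous batching can be shown with a toy model that only counts decode steps. The assumptions: every request has a known positive output length, each active request emits one token per step, and a slot freed by a finished request is refilled on the next step. Static batching, by contrast, waits for the longest request in a batch before starting the next one. vLLM's real scheduler also manages KV-cache memory and prefill, none of which is modeled here.

```python
from collections import deque


def static_batching_steps(lengths: list[int], batch_size: int) -> int:
    """Each batch runs until its longest request finishes before the next batch starts."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def continuous_batching_steps(lengths: list[int], batch_size: int) -> int:
    """A finished request's slot is refilled from the queue on the next decode step.

    Assumes every length is a positive number of tokens.
    """
    queue = deque(lengths)
    active: list[int] = []  # remaining tokens for each in-flight request
    steps = 0
    while queue or active:
        # Fill any free slots before the next decode step.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        # One decode step: every active request emits one token.
        active = [remaining - 1 for remaining in active]
        # Drop finished requests so their slots can be reused.
        active = [remaining for remaining in active if remaining > 0]
        steps += 1
    return steps


if __name__ == "__main__":
    lengths = [100, 10, 10, 10, 100, 10, 10, 10]  # hypothetical output lengths
    print("static:    ", static_batching_steps(lengths, 4))
    print("continuous:", continuous_batching_steps(lengths, 4))
```

For this mix of two long and six short requests with four slots, static batching needs 200 steps while continuous batching finishes in 110, because short requests no longer sit idle behind long ones.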

Decentralized GPUs for scale and affordability: Our fine-tuning and deployment workloads run on a network of low-cost GPUs from multiple vendors, from smaller data centres to emerging GPU clouds like CoreWeave, providing lower costs, more options, and high GPU availability to ensure scalable and efficient processing.

Check out this latest blog for Llama 3 deployment using MonsterGPT:

How does it streamline the fine-tuning and deployment process?

MonsterGPT provides a chat interface that understands natural-language instructions for launching, tracking, and managing complete fine-tuning and deployment jobs. This abstracts away many complex steps, such as:

Building a data pipeline

Figuring out the right GPU infrastructure for the job

Configuring appropriate hyperparameters

Setting up an ML environment with compatible frameworks and libraries

Implementing fine-tuning scripts for efficient LoRA/QLoRA fine-tuning with quantization strategies

Debugging issues like out-of-memory and code-level errors

Designing and implementing multi-node auto-scaling with high-throughput serving engines such as vLLM for LLM deployments
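One part of figuring out the right GPU infrastructure is a back-of-the-envelope memory estimate. The sketch below is a common rule of thumb, not MonsterAPI's method. It counts model weights only and ignores the KV cache, activations, and, for fine-tuning, gradients and optimizer states, all of which can add substantially more. The 8-billion-parameter size matches the smaller Llama 3 model and is used purely as an example.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for model weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    params = 8e9  # an 8-billion-parameter model (example size)
    for label, bits in [("fp16", 16), ("int8", 8), ("4-bit", 4)]:
        print(f"{label:>5}: {weight_memory_gb(params, bits):.1f} GB")
```

By this estimate, the weights need about 16 GB at fp16, 8 GB at int8, and 4 GB at 4-bit, which is why quantization can move a model onto a cheaper GPU.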

What kind of user interface and commands can developers expect when interacting with Monster API’s chat interface?

The user interface is a simple chat UI in which users can prompt the agent to fine-tune an LLM for a specific task, such as summarization, chat completion, code generation, or blog writing. Once the model is fine-tuned, the GPT can be further instructed to deploy the LLM and query the deployed model from the GPT interface itself. Some example commands include:

Finetune an LLM for code generation on X dataset

I want a model finetuned for blog writing

Give me an API endpoint for Llama 3 model.

Deploy a small model for blog writing use case

This is extremely useful because finding the right model for your project can often become a time-consuming task. With new models emerging daily, it can lead to a lot of confusion.

How does Monster API’s solution compare in terms of usability and efficiency to traditional methods of deploying AI models?

Monster API’s solution significantly enhances usability and efficiency compared to traditional methods of deploying AI models.

For Usability:

Automated Configuration: Traditional methods often require extensive manual setup of hyperparameters and configurations, which can be error-prone and time-consuming. MonsterAPI automates this process using RAG with context, simplifying setup and reducing the likelihood of errors.

Scalable REST APIs: MonsterAPI provides intuitive REST APIs for deploying and fine-tuning models, making it accessible even for users with limited machine learning expertise. Traditional methods often require deep technical knowledge and complex coding for deployment.

Unified Platform: It integrates the entire workflow, from fine-tuning to deployment, within a single platform. Traditional approaches may involve disparate tools and platforms, leading to inefficiencies and integration challenges.

For Efficiency:

MonsterAPI offers a streamlined pipeline for LoRA fine-tuning with built-in quantization for efficient memory utilization, and vLLM-powered LLM serving that achieves high throughput with continuous batching and optimized CUDA kernels, all on top of a cost-effective, scalable, and highly available decentralized GPU cloud with simplified monitoring and logging.

This entire pipeline enhances developer productivity by enabling the creation of production-grade custom LLM applications while reducing the need for complex technical skills.

Can you provide examples of use cases where Monster API has significantly reduced the time and resources needed for model deployment?

An IT consulting company needed to fine-tune and deploy the Llama 3 model to serve their client’s business needs. Without MonsterAPI, they would have required a team of 2-3 MLOps engineers with a deep understanding of hyperparameter tuning to improve the model’s quality on the provided dataset, and then host the fine-tuned model as a scalable REST API endpoint using auto-scaling and orchestration, likely on Kubernetes. Additionally, to optimize the economics of serving the model, they wanted to use frameworks like LoRA for fine-tuning and vLLM for model serving to improve cost metrics while reducing memory consumption. This can be a complex challenge for many developers and can take weeks or even months to achieve a production-ready solution. With MonsterAPI, they were able to experiment with multiple fine-tuning runs within a day and host the fine-tuned model with the best evaluation score within hours, without requiring multiple engineering resources with deep MLOps skills.

In what ways does Monster API’s approach democratize access to generative AI models for smaller developers and startups?

Small developers and startups often struggle to produce and use high-quality AI models due to a lack of capital and technical skills. Our solutions empower them by lowering costs, simplifying processes, and providing robust no-code/low-code tools to implement production-ready AI pipelines.

By leveraging our decentralized GPU cloud, we offer affordable and scalable GPU resources, significantly reducing the cost barrier for high-performance model deployment. The platform’s automated configuration and hyperparameter tuning simplify the process, eliminating the need for deep technical expertise.

Our user-friendly REST APIs and integrated workflow combine fine-tuning and deployment into a single, cohesive process, making advanced AI technologies accessible even to those with limited experience. Additionally, efficient LoRA fine-tuning and quantization techniques like GPTQ and AWQ ensure optimal performance on less expensive hardware, further lowering entry costs.

This approach empowers smaller developers and startups to implement and manage advanced generative AI models efficiently and effectively.

What do you envision as the next major advancement or feature that Monster API will bring to the AI development community?

We are working on a couple of innovative products to further advance our thesis: helping developers customise and deploy models faster, more easily, and more economically.

Next up is a full MLOps AI assistant that researches new optimisation strategies for LLMOps and integrates them into existing workflows, reducing the developer effort needed to build new, better-quality models while enabling complete customization and deployment of production-grade LLM pipelines.

Let’s say you need to generate 1 million images per minute for your use case. This can be extremely expensive. Traditionally, you would use the Stable Diffusion model and spend hours finding and testing optimization frameworks like TensorRT to improve your throughput without compromising the quality and latency of the output.
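The scale of that example is easier to see as arithmetic. One million images per minute is roughly 16,667 images per second. The sketch below turns that into a GPU count, assuming perfect linear scaling. The per-GPU throughput figures are hypothetical placeholders, not benchmarks of Stable Diffusion or TensorRT.

```python
import math


def gpus_needed(images_per_minute: int, images_per_sec_per_gpu: float) -> int:
    """GPUs required to sustain a target rate, assuming perfect linear scaling."""
    required_per_sec = images_per_minute / 60
    return math.ceil(required_per_sec / images_per_sec_per_gpu)


if __name__ == "__main__":
    target = 1_000_000  # images per minute, from the scenario above
    baseline = 2.0      # hypothetical images/sec per GPU before optimization
    optimized = 4.0     # hypothetical images/sec per GPU after optimization
    print("required rate:", round(target / 60), "images/sec")
    print("GPUs (baseline): ", gpus_needed(target, baseline))
    print("GPUs (optimized):", gpus_needed(target, optimized))
```

Under these made-up numbers, doubling per-GPU throughput cuts the fleet from 8,334 GPUs to 4,167. That halving is the kind of saving an optimization framework is meant to deliver.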

However, with MonsterAPI’s MLOps agent, you won’t need to waste all those resources. The agent will find the best framework for your requirements, leveraging optimizations like TensorRT tailored to your specific use case.

How does Monster API plan to continue supporting and integrating new open-source models as they emerge?

In 3 major ways:

Bringing access to the latest open-source models

Providing the simplest possible interface for fine-tuning and deployments

Optimising the entire stack for speed and cost with the most advanced and powerful frameworks and libraries

Our mission is to help developers of all skill levels adopt GenAI faster, reducing the time from an idea to a well-polished, scalable API endpoint.

We will continue our efforts to provide access to the latest and most powerful frameworks and libraries, integrated into a seamless workflow for implementing end-to-end LLMOps. We are dedicated to reducing complexity for developers with our no-code tools, thereby boosting their productivity in building and deploying AI models.

To achieve this, we continuously support and integrate new open-source models, optimization frameworks, and libraries by monitoring advancements in the AI community. We maintain a scalable decentralized GPU cloud and actively engage with developers for early access and feedback. By leveraging automated pipelines for seamless integration, enhancing flexible APIs, and forming strategic partnerships with AI research organizations, we ensure our platform remains cutting-edge.

Additionally, we provide comprehensive documentation and robust technical support, enabling developers to quickly adopt and utilize the latest models. MonsterAPI keeps developers at the forefront of generative AI technology, empowering them to innovate and succeed.

What are the long-term goals for Monster API in terms of technology development and market reach?

Long term, we want to help 30 million software engineers become MLOps developers using our MLOps agent and all the tools we are building.

This would require us to build not just a full-fledged agent but also a lot of fundamental proprietary technology around optimization frameworks, containerisation methods, and orchestration.

We believe that a combination of great, simple interfaces, 10x more throughput, and low-cost decentralised GPUs has the potential to transform developer productivity and thus accelerate GenAI adoption.

All our research and efforts are in this direction.

Thank you for the great interview. Readers who wish to learn more should visit MonsterAPI.

#agent#ai#ai assistant#ai model#AI models#AI research#Algorithms#API#APIs#applications#approach#barrier#Blog#Building#Business#CEO#challenge#Cloud#clouds#code#code generation#coding#Collaboration#Community#complexity#comprehensive#computing#consulting#Containers#continuous

1 note

Text

Introducing Co-pilot Plus PCs: The Future of Windows is Here

"Revolutionize your computing experience! Discover the cutting-edge Co-pilot Plus PCs, where AI meets productivity. Click to learn more! #CoPilotPlus #FutureOfWindows"

Microsoft has unveiled a new category of PCs called Co-pilot Plus PCs, touted as the fastest and most intelligent Windows PCs ever built. Let’s delve into the key features that make these PCs stand out.

Supercharged with AI Hardware

Co-pilot Plus PCs boast dedicated AI hardware capable of performing a whopping 40 trillion operations per second. This powerhouse fuels various AI-powered features…

#AI#AI Assistant#copilot#Live Captioning#microsoft#Recall#scenario AI#windows#windows 11#Windows Store AI App Hub#Windows Studio Effects

0 notes

Text

The future of tech: a symphony of devices

Close your eyes and imagine it’s the year 2035. You’re working on a new and exciting project. You’ve gathered information and conducted interviews with experts on your topic. You start a draft with some informal notes. You play some music to set the mood and get to writing your first paragraph. But what device did you picture using in this creative process?

Continue reading The future of tech: a…

#AI#AI assistant#Apple#Apple Vision Pro#artificial intelligence#Humane Ai Pin#Rabbit r1#smartglasses#wearables

1 note

Text

The AI Assistant: Streamlining Your Workflow with ChatGPT's Research and Editing Tools

Unleash the Power of ChatGPT’s Research Tools

In today’s digital firehose, creators and researchers are constantly bombarded with information overload. The pressure to churn out fresh, SEO-friendly content while staying ahead of the curve can feel suffocating. But what if there was a secret weapon to turbocharge your workflow and unlock a wellspring of creative ideas?

Enter ChatGPT, the AI…

#ai assistant#ai writing assistant workflow#chatgpt#chatgpt for editing#chatgpt for research#content creation#editing tools#how to use chatgpt for content creation#productivity#research tools#streamline content creation with ai#workflow

0 notes

Text

Harness the prowess of your own personal AI assistant

Unleashing the Power of WP Genie: The Ultimate WordPress AI Virtual Assistant

Say hello to WP Genie, your new secret weapon in conquering the digital frontier. As the world's first WordPress AI Virtual Assistant, we've engineered this revolutionary platform using three industry titans – Amazon, Google, and Microsoft AI – resulting in an unmatched level of intelligence that leaves competitors like ChatGPT trailing far behind. Our proprietary blend of AI technologies empowers users with extraordinary abilities, amplifying creativity and output beyond human limitations.

Discover how effortlessly you can:

· Harness the prowess of your own personal AI assistant, completing tasks swiftly and efficiently.

· Create captivating voiceovers and audio content without breaking a sweat.

· Craft stunning websites that leave visitors awestruck.

· Compose persuasive emails guaranteed to convert leads.

· Develop high-performing landing pages optimized for maximum engagement.

· Build sales funnels so effective they practically sell themselves.

· Enjoy instantaneous access to customizable business identities, including logos, letterheads, and social media profiles.

· Transform ideas into beautifully formatted eBooks, flipbooks, audiobooks, and business plans.

...and much, MUCH more!

Embrace Financial Freedom with WP Genie

Tired of squandering valuable resources on underwhelming freelancers, hit-or-miss contractors, or lackluster employees? Say goodbye to those days forever with WP Genie, which generates all your marketing materials across various niches and languages, leaving no stone unturned. Trust us when we say there's nothing quite like working alongside a dedicated, ultra-efficient team member committed to delivering top-notch quality around the clock, every day of the year.

Accessing WP Genie: Three Simple Steps Towards Revolutionizing Your Business Processes

Embarking on your journey towards limitless creative expression and exceptional productivity has never been easier. Simply follow these straightforward steps:

Obtain Access to AI Assistance: Gain entry to the ultimate AI experience, backed by the combined might of Amazon, Google, and Microsoft.

Make Your Request: Utilize either text or voice commands to ask WP Genie exactly what you need help with – whether composing a meticulously crafted 10,000-word eBook on affiliate marketing or designing an eye-catching logo, the sky's truly the limit.

Receive Completed Tasks in Record Time: Watch as WP Genie churns out hundreds of marketing marvels faster than you ever thought possible, revolutionizing your workflow and propelling your enterprise forward.

Join us as we continue exploring the boundless potential of WP Genie, where innovation meets inspiration, and dreams become reality. Welcome to the future of marketing automation, welcome to WP Genie.

Meet Seun Ogundele: The Visionary Behind WP Genie and Trailblazer Redefining Online Marketing

Unveiling the brilliant mind behind WP Genie, meet Seun Ogundele – a celebrated luminary within the esteemed WarriorPlus platform. Renowned for his relentless drive and visionary spirit, Seun's impact on the online marketing world reaches far and wide.

A Force to Be Reckoned With

Having garnered impressive accolades in the dynamic realm of online entrepreneurship, Seun continues to demonstrate his commitment to excellence and innovation. By combining passion, tenacity, and a keen understanding of emerging trends, he solidifies his position among the leading figures shaping the WarriorPlus community.

Revolutionizing Product Development

No stranger to pushing boundaries, Seun boasts an enviable track record for developing game-changing solutions poised to disrupt conventional norms. Among his most notable creations are the ingenious offerings of Artisia, AvaTalk, ZapAI, GoBuildr, and ScribAI, each demonstrating his unique knack for identifying unmet needs and providing inventive answers.

An Industry Leader You Can Trust

As a testament to his enduring influence, Seun remains firmly entrenched atop the ranks of trusted professionals in the global online marketing scene. Countless satisfied customers credit his expert guidance and visionary insight for fueling their own success stories. Indeed, joining forces with Seun means embracing the promise of transformative change powered by ingenuity, adaptability, and sheer determination.

Experience Unprecedented Success in 2024 with WP Genie: An Exclusive Offer Beyond Imagination

Unlock the door to endless opportunities and elevate your online presence like never before with our exclusive package, handcrafted especially for those eager to dominate the digital sphere in 2024. Here's everything you'll receive upon sealing this unbeatable deal:

➔ WP Genie Software: Full Access Granted

Prepare yourself for an incredible encounter with WP Genie, the ultimate WordPress plugin featuring Smart AI Technology that tackles life's most mundane tasks while saving you precious time.

➔ Mobile Edition of WP Genie: On-the-Go Convenience Guaranteed

Witness the magic of WP Genie anywhere, anytime with the fully integrated mobile edition – absolutely FREE upon purchase.

➔ Microsoft, Amazon, and Google's AI Collaboration

Experience the true meaning of synergy with WP Genie, harnessing the collective brilliance of Microsoft, Amazon, and Google's AI powers merged into a single force unlike any other. Brace yourself for astonishing outcomes that transcend expectations.

➔ Monthly Subscription Waiver

Why burden yourself with recurring bills when you can secure lifetime access to WP Genie with just a ONE TIME payment? Early birds, rejoice! Avoid hidden costs as we waive monthly fees solely for initial adopters. However, act quickly because limited slots apply!

⚠️ IMPORTANT NOTICE: Once the special promotion ends, standard charges shall resume. Don't miss your chance to capitalize on this golden opportunity! Grab yours now and embark on an exhilarating journey to unimaginable triumphs in 2024.

0 notes

Text

Chatbot Showdown: Can Claude dethrone ChatGPT?

(Images made by author with Microsoft Copilot)

Anthropic’s Claude 3 model family, released on March 4, 2024, intensifies the chatbot competition. The family consists of three increasingly powerful models (Haiku, Sonnet, and Opus), which push the boundaries of AI capabilities, according to Anthropic. This post directly compares Claude 3 Sonnet (hereafter Claude) to the free version of the leading chatbot,…

0 notes

Text

What Went Wrong With the Humane AI Pin?

New Post has been published on https://thedigitalinsider.com/what-went-wrong-with-the-humane-ai-pin/

Humane, a startup founded by former Apple employees Imran Chaudhri and Bethany Bongiorno, recently launched its highly anticipated wearable AI assistant, the Humane AI Pin. Now, the company is already looking for a buyer.

The device promised to revolutionize the way people interact with technology, offering a hands-free, always-on experience that would reduce dependence on smartphones. However, despite the hype and ambitious goals, the AI Pin failed to live up to expectations, plagued by a series of hardware and software issues that ultimately led to a disappointing debut.

Chaudhri and Bongiorno, with their extensive experience at Apple, set out to create a product that would seamlessly integrate artificial intelligence into users’ daily lives. The AI Pin was envisioned as a wearable device that could be easily clipped onto clothing, serving as a constant companion and personal assistant. By leveraging advanced AI technologies, including large language models and computer vision, the device aimed to provide users with quick access to information, assistance with tasks, and a more intuitive way to interact with the digital world.

The AI Pin promised a range of features and capabilities, including voice-activated controls, real-time language translation, and the ability to analyze and provide information about objects captured by its built-in camera. Humane also developed its own operating system, CosmOS, designed to work seamlessly with the device’s AI models and deliver a fluid, responsive user experience. The company’s vision was to create a product that would not only replace smartphones but also enhance users’ lives by allowing them to be more present and engaged in the world around them.

Hardware Issues Right Away

Despite the sleek and futuristic design of the Humane AI Pin, the device suffered from several hardware shortcomings that hindered its usability and comfort. One of the most significant issues was its awkward and uncomfortable design. The AI Pin consists of two halves – a front processing unit and a rear battery – which are held together by magnets, with the user’s clothing sandwiched in between. This design proved problematic, as the heavy device tended to drag down lighter clothes, causing discomfort and an odd sensation of warmth against the wearer’s chest.

Another major drawback of the AI Pin was its poor battery life. With a runtime of just two to four hours, the device failed to provide the all-day assistance that users expected from a wearable AI companion. This limitation severely undermined the product’s usefulness, as users would need to constantly recharge the device throughout the day.

The AI Pin’s laser projection display, which beamed information onto the user’s palm, also faced challenges. While the concept was innovative, the display struggled to perform well in well-lit environments, making it difficult to read and interact with the projected information. Additionally, the hand-based interaction, which required users to tilt and tap their fingers to navigate the interface, proved cumbersome and often led to distorted or moving visuals, further compromising the user experience.

Software and Performance Problems

In addition to the hardware issues, the Humane AI Pin also suffered from several software and performance problems that severely impacted its usability. One of the most glaring issues was the device’s slow voice response times. Users reported significant delays between issuing a command and receiving a response from the AI assistant, leading to frustration and a breakdown in the seamless interaction promised by Humane.

Moreover, the AI Pin’s functionality was limited compared to smartphones and smartwatches. Basic features such as setting alarms and timers were notably absent, leaving users to rely on other devices for these essential tasks. The company’s decision to forgo apps in favor of a voice-centric interface also proved to be a drawback, as it restricted the device’s versatility and potential use cases.

The value of the AI Pin’s assistant capabilities was also called into question. While the device aimed to provide contextual information and assistance based on voice commands and camera input, the actual performance often fell short of expectations. The AI’s responses were sometimes inaccurate, irrelevant, or simply not helpful enough to justify the device’s existence as a standalone product.

Pricing and Subscription Model

The Humane AI Pin’s pricing and subscription model also contributed to its lackluster reception. With an upfront cost of $699, the device was significantly more expensive than most smartwatches and close to the price of many smartphones. This high price point made it difficult for consumers to justify the purchase, especially given the AI Pin’s limited functionality and unproven value proposition.

In addition to the steep initial cost, Humane also required users to pay a monthly subscription fee of $24 to keep the device active and access its AI features. This recurring expense further compounded the financial burden on users and raised questions about the long-term viability of the product.
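The recurring fee adds up quickly. Here is a minimal sketch of the total cost of ownership, using only the $699 price and $24 monthly fee stated above. It ignores taxes, accessories, and any future price changes.

```python
def total_cost(upfront: float, monthly_fee: float, months: int) -> float:
    """Upfront price plus a flat monthly subscription over a given period."""
    return upfront + monthly_fee * months


if __name__ == "__main__":
    for months in (12, 24):
        print(f"{months} months: ${total_cost(699, 24, months):,.0f}")
```

Over one year the Pin costs $987 in total, and over two years $1,275.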

When compared to more affordable and capable alternatives, such as the Apple Watch, the AI Pin’s pricing and subscription model seemed even more unreasonable. For a fraction of the cost, users could access a wide range of apps, enjoy seamless integration with their smartphones, and benefit from a more robust set of features and performance capabilities.

The Future of Humane

Following the disappointing launch of the AI Pin, Humane finds itself in a precarious position. The company is now actively seeking a buyer, hoping to salvage some value from its technology and intellectual property. However, the challenges in finding a suitable acquirer are significant.

Humane’s asking price of $750 million to $1 billion seems unrealistic, given the AI Pin’s poor reception and the company’s lack of a proven track record. Potential buyers may be hesitant to invest such a large sum in a company whose first and only product failed to gain traction in the market.

Additionally, the value of Humane’s intellectual property remains questionable. While the company developed its own operating system, CosmOS, and integrated various AI technologies into the AI Pin, it is unclear whether these innovations are truly groundbreaking or valuable enough to justify the high asking price. Industry giants like Apple, Google, and Microsoft are already heavily invested in AI and wearable technologies, and they may not see Humane’s offerings as a significant addition to their existing portfolios.

As Humane navigates this difficult period, it is crucial for the company to reflect on the lessons learned from the AI Pin’s failure. The experience highlights the importance of conducting thorough market research, setting realistic expectations, and ensuring that a product delivers tangible value to users before launching. Humane’s story also serves as a cautionary tale for other startups in the wearable AI space, emphasizing the need to balance innovation with practicality and user-centric design.

The AI Pin’s failure is a reminder that innovation alone is not enough to guarantee success. Products must offer tangible benefits and solve real problems for users, and they must do so in a way that is both accessible and affordable. The wearable AI assistant market remains an exciting and promising space, but future innovators must learn from Humane’s missteps to create products that truly enhance users’ lives.

#ai#ai assistant#AI models#AI Pin#apple#Apple Watch#apps#artificial#Artificial Intelligence#battery#battery life#billion#clothing#command#computer#Computer vision#consumers#cosmos#Design#devices#display#employees#Features#financial#Fraction#Future#Google#hand#Hardware#Humane

0 notes

Text

Introducing Copilot+ PCs: The Future of Windows is Here

"Revolutionize your computing experience! Discover the cutting-edge Copilot+ PCs, where AI meets productivity. Click to learn more! #CoPilotPlus #FutureOfWindows"

Microsoft has unveiled a new category of PCs called Copilot+ PCs, touted as the fastest and most intelligent Windows PCs ever built. Let’s delve into the key features that make these PCs stand out.

Supercharged with AI Hardware

Copilot+ PCs include a dedicated neural processing unit (NPU) capable of performing at least 40 trillion operations per second. This powerhouse fuels various AI-powered features…


#AI#AI Assistant#copilot#Live Captioning#microsoft#Recall#scenario AI#windows#windows 11#Windows Store AI App Hub#Windows Studio Effects

0 notes

Text

Copilot?? Fucking just bring back Clippy, you'd have total market AI spyware saturation if you just said "hey we're bringing back the gay little paperclip who sits on your desktop" and we'd have 10000 images of him as a bishi twink getting railed by BonziBuddy in seconds

#like i dont want ai in my os and all that jazz#but like#the ai makers really dont know how to market this shit#ai assistant#ai

0 notes

Text


A digital assistant, also known as a predictive chatbot, is a technology designed to assist users by answering questions and processing simple tasks. In this video, we'll discuss the rise of digital assistants in healthcare and see whether they truly make our lives easier. Let's dive into the video and learn more about Siri, Alexa, and Google Assistant.

Digital assistants have become pervasive in our lives. When we wake up, we have Amazon’s Alexa read us the news and remind us to pick up our dry cleaning. As we get ready for the day, Siri plays our favorite songs. To get to work, we order an Uber with Google Assistant. However, when we get to the office, those products don’t follow us through the door. That is about to change in a big way.

Digital assistants are intelligent software programs designed to provide various services and support through natural language processing and machine learning algorithms. These assistants have gained immense popularity due to their ability to enhance efficiency, convenience, and user experience across a wide range of applications.

Digital assistants are designed to streamline tasks and automate processes, saving users time and effort. They can manage calendars, set reminders, schedule appointments, and even help with email management, thus allowing users to focus on more valuable and creative tasks.

Unlike human assistants, digital assistants are available around the clock, providing support whenever users need it. This constant availability ensures that users can access information and assistance whenever they require it, improving responsiveness and user satisfaction.

Digital assistants are increasingly being used in business settings to assist with customer support, perform data analysis, manage tasks, and even facilitate virtual meetings. These applications help organizations improve efficiency and offer better services to their customers.

Modern digital assistants leverage machine learning to adapt and learn from user interactions. Over time, they become better at understanding user preferences, needs, and speech patterns, resulting in a more personalized and tailored experience for each user.

Digital assistants offer a wide array of benefits, including increased productivity, personalized experiences, accessibility, convenience, and the ability to handle a variety of tasks. As technology continues to advance, we can expect digital assistants to become even more sophisticated and integrated into various aspects of our lives.

The Rise of Digital Assistants: Siri, Alexa and Google Assistant

#the rise of digital assistants#digital assistant#alexa#siri#google assistant#rise of a digital nation#what is a digital assistant#virtual assistant#benefits of using digital assistants#amazon alexa#LimitLess Tech 888#machine learning#artificial intelligence#computer digital assistant#the future of digital assistants#ai assistant#voice assistant#personal digital assistant#digital assiatants ai#oracle digital assistant#ai personal assistant#Youtube

1 note
