Mistral Codestral is the Newest AI Model in the Code Generation Race

New Post has been published on https://thedigitalinsider.com/mistral-codestral-is-the-newest-ai-model-in-the-code-generation-race/

Plus updates from Elon Musk’s xAI , several major funding rounds and intriguing research publications.

Created Using DALL-E

Next Week in The Sequence: Mistral Codestral is the New Model for Code Generation

Edge 401: We dive into reflection and refinement planning for agents. We review the famous Reflexion paper and the AgentVerse framework for multi-agent task planning.

Edge 402: We review UC Berkeley’s research on models that can understand one-hour-long videos.

You can subscribe to The Sequence below:

TheSequence is a reader-supported publication. To receive new posts and support my work, consider becoming a free or paid subscriber.

📝 Editorial: Mistral Codestral is the Newest AI Model in the Code Generation Race

Code generation has become one of the most important frontiers in generative AI. For many, solving code generation is a stepping stone towards enabling reasoning capabilities in LLMs. This idea is highly debatable but certainly has many subscribers in the generative AI community. Additionally, coding is one of those use cases in which there is a clear and well-established customer base as well as distribution channels. More importantly, capturing the minds of developers is a tremendous stepping stone towards broader adoption.

Not surprisingly, all major LLM providers have released code generation versions of their models. Last week, Mistral entered the race with the open-weight release of Codestral, a code generation model trained in over 80 programming languages.

Like other Mistral releases, Codestral shows impressive performance across many coding benchmarks such as HumanEval and RepoBench. One of the most impressive capabilities of Codestral is its 32k context length in the 22B parameter model, which contrasts with the 8k context window in the Llama 3 70B parameter model.

Codestral is relevant for many reasons. First, it should become one of the most viable open-source alternatives to closed-source foundation models. Additionally, Mistral has already established strong enterprise distribution channels such as Databricks, Microsoft, Amazon, and Snowflake, which can catalyze Codestral’s adoption in enterprise workflows.

Being an integral part of the application programming lifecycle can unlock tremendous value for generative AI platforms. Codestral is certainly an impressive release and one that is pushing the boundaries of the space.

🔎 ML Research

USER-LLM

Google Research published a paper outlining USER-LLM, a framework for contextualizing individual users’ interactions with LLMs. USER-LLM compresses user interactions into embedding representations that are then used in fine-tuning and inference —> Read more.

AGREE

Google Research published a paper introducing Adaptation for GRounding Enhancement (AGREE), a technique for grounding LLM responses. AGREE enables LLMs to provide precise citations that back their responses —> Read more.

Linear Features and LLMs

Researchers from MIT published a paper proposing a framework to discover multi-dimensional features in LLMs. These features can be decomposed into lower-dimensional features and can improve the computational ability of LLMs, which are typically based on manipulating one-dimensional features —> Read more.

CoPE

Meta FAIR published a paper outlining contextual position encoding (CoPE), a new method that addresses known counting challenges in attention mechanisms. CoPE allows positions to be based on context and addresses many challenges of traditional positional embedding methods —> Read more.

DP and Synthetic Data

Microsoft Research published a series of research papers exploring the potential of differential privacy (DP) and synthetic data generation. This is a fast-growing area that allows companies to generate synthetic data and maintain privacy over the original datasets —> Read more.

LLMs and Theory of Mind

Researchers from Google DeepMind, Johns Hopkins University and several other research labs published a paper evaluating whether LLMs have developed a higher-order theory of mind (ToM). ToM refers to the ability of human cognition to reason through multiple emotional and mental states in a recursive manner —> Read more.

🤖 Cool AI Tech Releases

Claude Tools

Anthropic added tools support to Claude —> Read more.

Codestral

Mistral open-sourced Codestral, its first-generation code generation model —> Read more.

Samba-1 Turbo

SambaNova posted remarkable performance of 1000 tokens/s with its new Samba-1 Turbo —> Read more.

📡AI Radar

Elon Musk’s xAI finally closed its Series B of $6 billion at a $24 billion valuation.

Chinese generative AI startup Zhipu raised an astonishing $400 million to build a Chinese alternative to ChatGPT.

Perplexity released Pages, the ability to create web pages from search results.

ElevenLabs released a new feature called Sound Effects for creating audio samples.

You.com launched a new feature for building custom assistants.

Play AI raised $4.3 million for building a modular gaming AI blockchain.

Google admitted some unexpected behavior of its AI Overviews feature.

AI genomics company Tempus is getting ready to go public with a well-known CEO.

AI manufacturing company EthonAI raised $15 million in new funding.

AI oncology company Valar Labs raised $22 million Series A.

Tech giants such as Microsoft, Meta and AMD launched a group to work on data center AI connectivity.

PCC became OpenAI’s first reseller partner and largest enterprise customer.


Suda51 On Working With Swery65, James Gunn, And Finding Peace And Appreciation For Shadows Of The Damned

New Post has been published on https://thedigitalinsider.com/suda51-on-working-with-swery65-james-gunn-and-finding-peace-and-appreciation-for-shadows-of-the-damned/

Goichi “Suda51” Suda is arguably the most consistently unique game designer in the industry. He created Killer7, the No More Heroes series, worked with James Gunn (before he directed Guardians of the Galaxy or was put in charge of DC films) on Lollipop Chainsaw, and lots more. In preparation for his role as the keynote speaker at MomoCon this year, we caught up with him over e-mail to discuss his next game, Hotel Barcelona (a collaboration with Deadly Premonition creator Hidetaka “Swery65” Suehiro), remastering his 2011 game Shadows of the Damned, Batman, and more.

Hotel Barcelona

Game Informer: Hotel Barcelona is inspired by horror films. Are there specific films you cite as references?

Goichi “Suda51” Suda: There are a lot of them. Swery of White Owls is the main creator, so you should probably ask him this question, as I don’t want to spoil anything. It’s mostly based on Swery’s own personal tastes – however, I do have a good amount of input in it as well – so I’d suggest continuing this interview with Swery himself. I’ll be looking forward to reading it!

How do you and Swery split the work on a game like Hotel Barcelona? Do you collaborate on a script, or do you each handle specific characters? Does one of you handle gameplay, the other story?

At first, Swery was like, “Let’s write the whole thing up character by character,” but now I’m extremely busy with Grasshopper projects so I’ve been leaving most of it up to him. We both came up with a lot of ideas, but Swery has been doing most of the work; I do provide some input sometimes, but Swery and White Owls are handling the actual development.

What are your favorite time loops in fiction?

One would be the German show Dark on Netflix. Also, Flower, Sun & Rain is time loop-based, too; that time loop was actually based on an X-Files episode called “Monday” (Season 6/Episode 14) that was really interesting. That was where the idea for Flower, Sun & Rain came from.

Shadows of the Damned: Hella Remastered

Has Shadows of the Damned: Hella Remastered tempted you to make changes that bring the game closer to what you wanted it to be?

No; this game feels like our own child – it’s something we created, and we love it for what it is. For the remastered version, content-wise, we simply added a New Game+ feature and new costumes we weren’t able to include before. We’d always wanted to do a proper, loyal remaster for SotD. It originally stemmed from Kurayami (which ran in Edge Magazine in the UK), which is something else that I’d actually like to make a game out of at some point. But Damned is Damned, and Kurayami is something else altogether now.

Damned actually came from the sixth draft of Kurayami. If possible, I’d like to do something with each of the first through fifth drafts, which are all very different.

The fourth draft ended up becoming Black Knight Sword, and Kurayami Dance was, I believe, the third or fifth draft.

You were publicly unhappy with Shadows of the Damned at launch. How do you feel about it in 2024?

A lot of things happened at the time, but I look back on the whole experience pretty fondly now. While we were making the original, we definitely had a lot of friction with EA – we argued about a lot of aspects and ideas for the game, and both we and EA made a lot of compromises, but those experiences are what brought about Garcia, Paula, Fleming, and their whole story, so I love the end result as a Grasshopper game in general and as our own personal creation as well. I had some great experiences with Mikami making the game; we got called out to Los Angeles by [publisher] EA this one time, and there were like ten people in this hotel suite sitting at this big table. When we walked in, it kinda freaked us out. We got really bitched out, getting asked things like, “Just what the hell are you trying to make?!” That kind of experience is rare – especially in the video game industry – and I feel like if this was the underworld or something I probably would’ve gotten whacked in that room (laughs). So many of the experiences we had back then were super interesting and are really fond and valuable memories now.

Now that I think about it, I believe that hotel thing happened at the Marriott in Los Angeles. Like I said, there were like ten people from EA – some of their top dudes – all lined up, and we had no idea what was going on. We were simply told, “Come to this meeting.” No context or anything, so we were shocked when we got there. It’s actually sort of an awesome memory now. It wasn’t exactly scary, since being the video game industry and all, I knew we weren’t actually going to get killed or anything, but we were definitely surprised.

Anyway, I’m really proud of what we made, and I cherish all the memories I have of those times.

What is the most underrated Suda51 game?

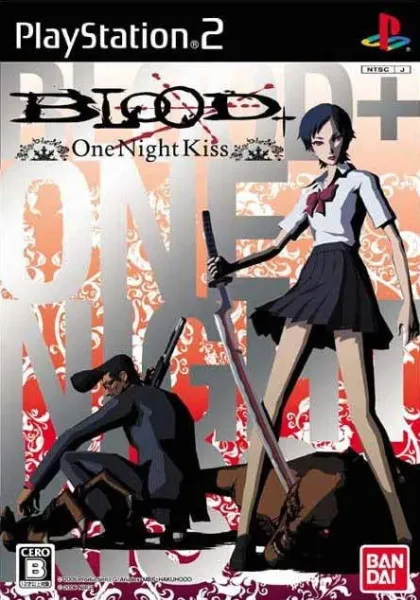

There would be two: Samurai Champloo: Sidetracked and Blood+ One Night Kiss. Blood+ wasn’t released outside of Japan, but Samurai Champloo was released in Japan and North America. They’re both really great games, and the stories were totally different from their respective original versions. Also, these two games basically spawned No More Heroes.

If you get the opportunity, be sure to try Samurai Champloo. Blood+ is only available in Japanese, but if you can, please give that one a try, too. I consider Samurai Champloo, Blood+, and No More Heroes to be my “Big 3 Sword Action Games.” Without the first two, I’m not sure that No More Heroes would have ever been made.

What are some of your recent favorite video games that you didn’t work on?

I’ve downloaded a ton of games recently, but I have the bad habit of not actually getting around to playing them [laughs]. I started Helldivers 2 recently, and I’m still deciding on whether or not to really get full-on into it. I’ve also been digging Alan Wake 2, which I haven’t cleared yet but would like to sometime soon.

What are some of your recent favorite movies and TV shows?

TV: 3 Body Problem (both the Tencent version and the Netflix version); movie: The Iron Claw.

You and Swery65 use similar names. You both strive to make unique games. Does it bother you when people confuse the two of you? Or is it flattering?

I’m really good friends with Swery, so it doesn’t bother me at all. I mean, they’re totally different numbers anyway, so I really don’t get annoyed or anything when people get them mixed up.

Hidetaka “Swery65” Suehiro’s Deadly Premonition

What surprised you about working with Swery?

It’s well-known that Swery is the director and CEO of White Owls, but he’s also a really good producer, and he also does project management-related stuff, as well. He’s generally thought of as mainly a game creator, but he’s actually really proficient in all kinds of game development areas; he writes scenarios, he handles things like product management, etc. I think he has this image of just drinking lots of beer and messing around a lot, but he’s actually a super serious, hard worker and a really great producer – and although Swery himself may not want me to say stuff out loud [laughs], he’s really quite professional, which I’ve seen firsthand throughout his career, and I want people to know this.

What do you think he would say is surprising about working with you?

Probably the fact that although we promised we’d both write up the scenarios for Hotel Barcelona, I ended up not doing it and left it up to him [laughs].

Are there other developers you would like to collaborate with in the future?

I spoke with Akihiro Hino, CEO of Level-5 a little while back, and I’d really like to collaborate with him on something. The difference between the two of us is like night and day, so I think it would be really interesting to see what we could come up with.

Lollipop Chainsaw

Would you consider making a licensed DC video game with James Gunn?

I haven’t spoken with him much recently apart from a few greetings or DMs on Twitter here and there, but I think I would totally be down with doing another game with him if the opportunity presented itself. I love Batman, so if it was going to be a DC game, then I’d really like to try doing something with the Black & White series. Also, James is really good at always making sure to get back to me quickly whenever I message him, which I really appreciate, but I know that he’s obviously extremely busy, so I try not to bother him too much.

When will Killer7 get the remake/remaster treatment?

Unfortunately we don’t own the IP for Killer7 – Capcom does – so I don’t really have any say in that.


AI Headphones Allow You To Listen to One Person in a Crowd

New Post has been published on https://thedigitalinsider.com/ai-headphones-allow-you-to-listen-to-one-person-in-a-crowd/

In a crowded, noisy environment, have you ever wished you could tune out all the background chatter and focus solely on the person you’re trying to listen to? While noise-canceling headphones have made great strides in creating an auditory blank slate, they still struggle to allow specific sounds from the wearer’s surroundings to filter through. But what if your headphones could be trained to pick up on and amplify the voice of a single person, even as you move around a room filled with other conversations?

Target Speech Hearing (TSH), a groundbreaking AI system developed by researchers at the University of Washington, is making progress in this area.

How Target Speech Hearing Works

To use TSH, a person wearing specially-equipped headphones simply needs to look at the individual they want to hear for a few seconds. This brief “enrollment” period allows the AI system to learn and latch onto the unique vocal patterns of the target speaker.

Here’s how it works under the hood:

The user taps a button while directing their head towards the desired speaker for 3-5 seconds.

Microphones on both sides of the headset pick up the sound waves from the speaker’s voice simultaneously (with a 16-degree margin of error).

The headphones transmit this audio signal to an onboard embedded computer.

The machine learning software analyzes the voice and creates a model of the speaker’s distinct vocal characteristics.

The AI system uses this model to isolate and amplify the enrolled speaker’s voice in real-time, even as the user moves around in a noisy environment.
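The enrollment steps above depend on the target's voice reaching both microphones at nearly the same time when the wearer faces the speaker. The sketch below illustrates only that idea, using a plain cross-correlation delay estimate. It is not the researchers' machine learning pipeline, and the function names, toy signals, and `tolerance` threshold are all hypothetical.

```python
def best_lag(left, right, max_lag):
    """Return the lag (in samples) at which `right` best matches `left`.

    A positive lag means the sound reached the right microphone later.
    """
    def score(lag):
        # Sum of products of the overlapping samples at this lag.
        total = 0.0
        for i, sample in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                total += sample * right[j]
        return total

    return max(range(-max_lag, max_lag + 1), key=score)


def is_facing(left, right, max_lag=5, tolerance=1):
    """Treat a near-zero delay between microphones as 'speaker is straight ahead'."""
    return abs(best_lag(left, right, max_lag)) <= tolerance


# Toy signals: one short burst, heard identically or with a 3-sample delay.
burst = [0.0, 0.2, 0.9, -0.7, 0.4, -0.1, 0.0, 0.0, 0.0, 0.0]
delayed = [0.0, 0.0, 0.0] + burst[:-3]

print(is_facing(burst, burst))    # same arrival time at both ears
print(is_facing(burst, delayed))  # right ear hears it 3 samples late
```

In a real device the tolerance would presumably be derived from the microphone spacing and the accepted angular margin rather than a fixed sample count.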

The longer the target speaker talks, the more training data the system receives, allowing it to better focus on and clarify the desired voice. This innovative approach to “selective hearing” opens up a world of possibilities for improved communication and accessibility in challenging auditory environments.

Shyam Gollakota is the senior author of the paper and a UW professor in the Paul G. Allen School of Computer Science & Engineering.

“We tend to think of AI now as web-based chatbots that answer questions. But in this project, we develop AI to modify the auditory perception of anyone wearing headphones, given their preferences. With our devices you can now hear a single speaker clearly even if you are in a noisy environment with lots of other people talking.” – Gollakota

Testing AI Headphones with TSH

To put Target Speech Hearing through its paces, the research team conducted a study with 21 participants. Each subject wore the TSH-enabled headphones and enrolled a target speaker in a noisy environment. The results were impressive – on average, the users rated the clarity of the enrolled speaker’s voice as nearly twice as high compared to the unfiltered audio feed.

This breakthrough builds upon the team’s earlier work on “semantic hearing,” which allowed users to filter their auditory environment based on predefined sound classifications, such as birds chirping or human voices. TSH takes this concept a step further by enabling the selective amplification of a specific individual’s voice.

The implications are significant, from enhancing personal conversations in loud settings to improving accessibility for those with hearing impairments. As the technology develops, it could fundamentally change how we experience and interact with our auditory world.

Improving AI Headphones and Overcoming Limitations

While Target Speech Hearing represents a major leap forward in auditory AI, the system does have some limitations in its current form:

Single speaker enrollment: As of now, TSH can only be trained to focus on one speaker at a time. Enrolling multiple speakers simultaneously is not yet possible.

Interference from similar audio sources: If another loud voice is coming from the same direction as the target speaker during the enrollment process, the system may struggle to isolate the desired individual’s vocal patterns.

Manual re-enrollment: If the user is unsatisfied with the audio quality after the initial training, they must manually re-enroll the target speaker to improve the clarity.

Despite these constraints, the University of Washington team is actively working on refining and expanding the capabilities of TSH. One of their primary goals is to miniaturize the technology, allowing it to be seamlessly integrated into consumer products like earbuds and hearing aids.

As the researchers continue to push the boundaries of what’s possible with auditory AI, the potential applications are vast, from enhancing productivity in distracting office environments to facilitating clearer communication for first responders and military personnel in high-stakes situations. The future of selective hearing looks bright, and Target Speech Hearing is poised to play a pivotal role in shaping it.


The Impact of AI and LLMs on the Future of Jobs

New Post has been published on https://thedigitalinsider.com/the-impact-of-ai-and-llms-on-the-future-of-jobs/

Artificial intelligence (AI) has grown tremendously in recent years, which has created excitement and raised concerns about the future of employment. Large language models (LLMs) are the latest example of that. These powerful subsets of AI are trained on massive amounts of text data to understand and generate human-like language.

According to a report by LinkedIn, 55% of its global members may experience some degree of change in their jobs due to the rise of AI.

Knowing how AI and LLMs will disrupt the job market is critical for businesses and employees to adapt to the change and remain competitive in the rapidly growing technological environment.

This article explores the impact of AI on jobs and how automation in the workforce will disrupt employment.

Large Language Models: Catalysts for Job Market Disruption

According to Goldman Sachs, generative AI and LLMs could disrupt 300 million jobs in the near future. The firm has also predicted that 50% of the workforce is at risk of losing jobs due to the integration of AI in business workflows.

LLMs are increasingly automating tasks previously considered the sole domain of human workers. For instance, LLMs, trained on vast repositories of prior interactions, can now answer product inquiries, generating accurate and informative responses.

This reduces the workload of human staff and allows for faster, 24/7 customer service. Moreover, LLMs constantly evolve, going well past customer services and being utilized in various applications, such as content development, translation, legal research, software development, etc.

Large Language Models and Generative AI: Automation

LLMs and generative AI are becoming increasingly prevalent, which could lead to partial automation and the potential displacement of some workers while creating opportunities for others.

1. Reshaping Routine Tasks

AI and LLMs excel at handling repetitive tasks with defined rules, such as data entry, appointment scheduling, and generating basic reports.

This automation allows human workers to focus on more complex tasks but raises concerns about job displacement. As AI and LLMs become more capable of automating routine tasks, the demand for human input decreases, consequently triggering job displacement. However, jobs that require a high degree of human oversight and input will be the least affected.

2. Industries at Automation Risk

Sectors with a high volume of routine tasks, like manufacturing and administration, are most susceptible to AI and LLM automation. Due to their ability to streamline operations like data entry and production line scheduling, LLMs are a risk to jobs in these sectors.

According to the Goldman Sachs report, AI automation will transform the workforce with efficiency and productivity while also putting millions of routine and manual jobs at high risk.

3. Potential Loss of Low-Skilled Jobs

The impact of AI on the low-skilled workforce is expected to grow in the future. The skill-biased nature of AI-driven automation has made it more difficult for those with less technical knowledge to grow in their employment. This is because automation widens the gap between high and low-skilled workers.

Low-skilled workers can only retain their jobs through high-quality education, training, and reskilling programs. They may also face difficulty transitioning to newer, higher-paying, and high-skilled jobs that use AI technologies.

This becomes more evident as the latest McKinsey report predicts that low-wage workers are 14 times more likely to need a job switch. Without upskilling or transitioning to new, AI-compatible roles, they risk being left behind in a rapidly evolving job market.

4. Role of AI and LLMs in Streamlining Processes

A significant shift occurs within the business landscape due to the growing adoption of AI and LLMs. A recent report from Workato reveals a compelling statistic: operations teams automated a remarkable 28% of their processes in 2023.

AI and LLMs are game-changers, reducing operating costs, streamlining tasks through automation, and improving service quality.

The Future of Work in the Age of AI

While AI is inevitable, with enough resources and sufficient training, employees can use AI and LLMs to increase productivity in their daily routine tasks.

For instance, the National Bureau of Economic Research (NBER) states that customer support agents using a generative AI (GPT) tool increased their productivity by around 14%. This shows the potential of collaboration between humans and machines.

While AI undoubtedly changes the job market, its integration should be seen as an opportunity rather than a threat. The true potential lies in the collaboration between human intuition, creativity, and empathy combined with AI’s analytical prowess.

Reskilling For LLMs and Generative AI

While GPT could generate texts and images, its successors, like GPT-4o, seamlessly process and generate content across text, audio, images, and video formats.

This shows that the new multi-modal LLMs and AI technologies are evolving rapidly. Reskilling becomes essential for both modern organizations and workers to survive due to the impact of artificial intelligence on the future of jobs. Some of the important skills include:

Prompt engineering: LLMs rely on prompts to guide their outputs. Learning how to create clear and concise prompts will be a key factor in achieving their true potential.

Data fluency: The ability to work with and understand data is essential. This covers collecting, analyzing, and interpreting data, influencing your interaction with LLMs.

AI literacy: Foundational knowledge about AI, including its capabilities and limitations, will be essential for effective collaboration and communication with these powerful tools.

Critical thinking and evaluation: While LLMs can be impressive, assessing their outputs is important. Assessing, updating, and analyzing the LLM’s work is essential.

Ethical Implications of AI in the Workplace

The presence of AI in the workplace has its pros and cons, which must all be carefully considered. On the plus side, AI increases productivity and reduces costs. However, if adopted carelessly, it can also have adverse effects.

Here are some ethical considerations that need to be part of the larger narrative:

Algorithmic Bias and Fairness: AI algorithms have the potential to reinforce biases found in the data they are trained on, which could result in unfair recruitment decisions.

Employee Privacy: AI relies on vast amounts of employee data, raising concerns that this information could be misused, which could lead to unemployment.

Inequality: The increased use of AI in workflows presents challenges like inequality or inaccessibility. Initiatives like upskilling and reskilling programs can help reduce AI’s negative impacts on employees across organizations.

Workplace paradigms are shifting as a result of AI and LLM integration. This will greatly impact the future of work and careers.

For further resources and insights on AI and data science, explore Unite.ai.


Uni-MoE: Scaling Unified Multimodal LLMs with Mixture of Experts

New Post has been published on https://thedigitalinsider.com/uni-moe-scaling-unified-multimodal-llms-with-mixture-of-experts/

Recent advancements in the architecture and performance of Multimodal Large Language Models (MLLMs) have highlighted the significance of scalable data and models for enhancing performance. Although this approach does enhance performance, it incurs substantial computational costs that limit the practicality and usability of such approaches. Over the years, Mixture of Expert (MoE) models have emerged as a successful alternative approach to scaling image-text and large language models efficiently, since they offer significantly lower computational costs and strong performance. However, despite their advantages, Mixture of Expert models are not yet an ideal approach to scaling large language models, since they often involve few experts and limited modalities, which limits their applications.

To counter the roadblocks encountered by current approaches, and to scale large language models efficiently, in this article we will talk about Uni-MoE, a unified multimodal large language model with a Mixture of Expert (MoE) architecture that is capable of handling a wide array of modalities and experts. The Uni-MoE framework also implements a sparse Mixture of Expert architecture within the large language model in an attempt to make training and inference more efficient by employing expert-level model parallelism and data parallelism. Furthermore, to enhance generalization and multi-expert collaboration, the Uni-MoE framework presents a progressive training strategy that combines three stages. First, the framework achieves cross-modality alignment using various connectors with different cross-modality data. Second, it activates the preference of the expert components by training modality-specific experts with cross-modality instruction data. Finally, it applies the Low-Rank Adaptation (LoRA) learning technique on mixed multimodal instruction data to tune the model. When the instruction-tuned Uni-MoE framework was evaluated on a comprehensive set of multimodal datasets, the experimental results highlighted its principal advantage: a significant reduction in performance bias when handling mixed multimodal datasets. The results also indicated a significant improvement in multi-expert collaboration and generalization.
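The final training stage relies on LoRA, which keeps a pretrained weight matrix frozen and learns only a low-rank update to it. The sketch below shows the general technique on plain Python lists. The names `lora_forward`, `alpha`, and the toy matrices are assumptions for illustration, and it does not reproduce Uni-MoE's training code.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(W, A, B, x, alpha):
    """Compute W x + (alpha / r) * B (A x).

    W is the frozen d_out x d_in weight, A is r x d_in, B is d_out x r.
    Only A and B would be updated during fine-tuning.
    """
    r = len(A)
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


W = [[1.0, 0.0], [0.0, 1.0]]   # frozen 2x2 weight
A = [[1.0, 1.0]]               # rank r = 1
B_zero = [[0.0], [0.0]]        # B starts at zero, so the update is a no-op
B_tuned = [[0.5], [-0.5]]      # hypothetical values after some training

x = [2.0, 4.0]
print(lora_forward(W, A, B_zero, x, alpha=2.0))
print(lora_forward(W, A, B_tuned, x, alpha=2.0))
```

Because the update has rank `r`, the number of trainable values grows with `r * (d_in + d_out)` instead of `d_in * d_out`, which is what makes this kind of tuning cheap relative to full fine-tuning.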

This article aims to cover the Uni-MoE framework in depth, and we explore the mechanism, the methodology, the architecture of the framework along with its comparison with state of the art frameworks. So let’s get started.

The advent of open-source multimodal large language models, including LLaMA and InstructBLIP, has underscored the notable success and advancement in tasks involving image-text understanding over the past few years. Furthermore, the AI community is working actively towards building a unified multimodal large language model that could accommodate a wide array of modalities including image, text, audio, video, and more, moving beyond the traditional image-text paradigm. A common approach followed by the open-source community to boost the abilities of multimodal large language models is to increase the size of vision foundation models, integrate them with large language models with billions of parameters, and use diverse multimodal datasets to enhance instruction tuning. These developments have highlighted the increasing ability of multimodal large language models to reason over and process multiple modalities, showcasing the importance of expanding multimodal instruction data and model scalability.

Although scaling up a model is a tried and tested approach that delivers substantial results, it is computationally expensive for both training and inference.

To counter the issue of high overhead computational costs, the open source community is moving towards integrating the MoE or Mixture of Expert model architecture in large language models to enhance both the training and inference efficiency. Contrary to multimodal large language and large language models that employ all the available parameters to process each input, resulting in a dense computational approach, the Mixture of Expert architecture activates only a subset of expert parameters for each input. As a result, the Mixture of Expert approach emerges as a viable route to enhance the efficiency of large models without extensive parameter activation and high overhead computational costs. Although existing works have highlighted the successful implementation and integration of Mixture of Expert models in the construction of text-only and text-image large models, researchers are yet to fully explore the potential of developing the Mixture of Expert architecture to construct powerful unified multimodal large language models.

Uni-MoE is a multimodal large language model that leverages sparse Mixture of Expert models to interpret and manage multiple modalities in an attempt to explore scaling unified multimodal large language models with the MoE architecture. As demonstrated in the following image, the Uni-MoE framework first obtains the encoding of different modalities using modality-specific encoders, and then maps these encodings into the language representation space of the large language models using various designed connectors. These connectors contain a trainable transformer model with subsequent linear projections to distill and project the output representations of the frozen encoder. The Uni-MoE framework then introduces sparse Mixture of Expert layers within the internal block of the dense Large Language Model. As a result, each Mixture of Expert based block features a shared self-attention layer applicable across all modalities, a sparse router for allocating expertise at token level, and diverse experts based on the feedforward network. Owing to this approach, the Uni-MoE framework is capable of understanding multiple modalities including speech, audio, text, video, and image, and only requires activating partial parameters during inference.
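To make the idea of a sparse router that allocates experts at the token level more concrete, here is a minimal sketch in plain Python. It is only an illustration under stated assumptions, not the Uni-MoE implementation: the top-2 selection, the renormalized gate weights, and the helper names `make_expert` and `route_token` are all hypothetical, and each "expert" is a single random linear map standing in for a feedforward network.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(dim, seed):
    """Hypothetical expert: one random linear map standing in for a feedforward network."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(dim)]

    def expert(token):
        return [sum(w * x for w, x in zip(row, token)) for row in weights]

    return expert


def route_token(token, router_weights, experts, top_k=2):
    """Send one token to its top_k experts and mix their outputs.

    top_k=2 and the gate renormalization are assumptions made for this sketch.
    """
    # One router logit per expert.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    probs = softmax(logits)
    # Keep only the top_k experts; the others are never evaluated (sparse activation).
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    output = [0.0] * len(token)
    for i in chosen:
        gate = probs[i] / norm
        expert_out = experts[i](token)
        output = [o + gate * e for o, e in zip(output, expert_out)]
    return output, chosen
```

With four experts and `top_k=2`, only half of the expert parameters are touched for any given token, which is the sense in which only partial parameters are activated during inference.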

Furthermore, to enhance multi-expert collaboration and generalization, the Uni-MoE framework implements a three-stage training strategy. In the first stage, the framework uses extensive image/audio/speech to language pairs to train the corresponding connector owing to the unified modality representation in the language space of the large language model. Second, the Uni-MoE model trains modality-specific experts employing cross-modality datasets separately in an attempt to refine the proficiency of each expert within its respective domain. In the third stage, the Uni-MoE framework integrates these trained experts into the Mixture of Expert layer of the large language model, and trains the entire Uni-MoE framework with mixed multimodal instruction data. To reduce the training cost further, the Uni-MoE framework employs the LoRA learning approach to fine-tune these self-attention layers and the pre-tuned experts.
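As a rough illustration of why LoRA reduces training cost, the sketch below shows the general low-rank update in plain Python: the frozen weight matrix stays untouched, and only two small matrices are trained. This is the generic LoRA formulation, not code from the Uni-MoE project; the function names and the `alpha` value used in the example are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(base, lora_b, lora_a, alpha):
    """Return base + (alpha / rank) * (lora_b @ lora_a).

    base:   frozen weight, shape (d_out, d_in)
    lora_b: trainable matrix, shape (d_out, rank)
    lora_a: trainable matrix, shape (rank, d_in)
    """
    rank = len(lora_a)
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(base, delta)
    ]


def trainable_parameters(d_out, d_in, rank):
    """Compare full fine-tuning with LoRA in number of trainable weights."""
    return {"full": d_out * d_in, "lora": rank * (d_out + d_in)}
```

For a 4096 by 4096 layer and a rank of 8, `trainable_parameters(4096, 4096, 8)` gives 16,777,216 weights for full fine-tuning against 65,536 for the low-rank pair.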

Uni-MoE : Methodology and Architecture

The basic motivation behind the Uni-MoE framework is twofold: the high training and inference cost of scaling multimodal large language models, and the efficiency of Mixture of Expert models. Together, these prompt an exploration of whether an efficient, powerful, and unified multimodal large language model can be built on the MoE architecture. The following figure presents a representation of the architecture implemented in the Uni-MoE framework, demonstrating a design that includes individual encoders for different modalities, i.e. audio, speech, and visuals, along with their respective modality connectors.

The Uni-MoE framework then integrates the Mixture of Expert architecture with the core large language model blocks, a process crucial for boosting the overall efficiency of both the training and inference process. The Uni-MoE framework achieves this by implementing a sparse routing mechanism. The overall training process of the Uni-MoE framework can be split into three phases: cross-modality alignment, training modality-specific experts, and tuning Uni-MoE using a diverse set of multimodal instruction datasets. To efficiently transform diverse modal inputs into a linguistic format, the Uni-MoE framework is built on top of LLaVA, a pre-trained visual language framework. The LLaVA base model integrates CLIP as its visual encoder alongside a linear projection layer that converts image features into their corresponding soft image tokens. Furthermore, to process video content, the Uni-MoE framework selects eight representative frames from each video, and transforms them into video tokens by average pooling to aggregate their image or frame-based representation. For audio tasks, the Uni-MoE framework deploys two encoders, BEATs and the Whisper encoder, to enhance feature extraction. The model then distills fixed-length speech and audio feature vectors, and maps them into soft speech and audio tokens respectively via a linear projection layer.
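The video step described above can be sketched in a few lines of Python. How the eight representative frames are chosen is not stated here, so the evenly spaced sampling below is an assumption, and `sample_frame_indices` and `average_pool` are hypothetical helper names rather than functions from the Uni-MoE codebase.

```python
def sample_frame_indices(num_frames, num_samples=8):
    """Pick evenly spaced frame indices (uniform spacing is an assumption of this sketch)."""
    if num_frames <= 0:
        raise ValueError("num_frames must be positive")
    if num_frames <= num_samples:
        return list(range(num_frames))
    step = num_frames / num_samples
    # Take the frame at the middle of each of the num_samples equal segments.
    return [int(step * i + step / 2) for i in range(num_samples)]


def average_pool(frame_features):
    """Average a list of equal-length feature vectors element by element."""
    count = len(frame_features)
    return [sum(values) / count for values in zip(*frame_features)]
```

For an 80-frame clip, `sample_frame_indices(80)` picks frames 5, 15, 25 and so on up to 75; the per-frame features produced by the image encoder for those frames would then be passed to `average_pool` to obtain a single aggregated representation.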

Training Strategy

The Uni-MoE framework introduces a progressive training strategy for the incremental development of the model. The progressive training strategy introduced attempts to harness the distinct capabilities of various experts, enhance multi-expert collaboration efficiency, and boost the overall generalizability of the framework. The training process is split into three stages with the attempt to actualize the MLLM structure built on top of integrated Mixture of Experts.

Stage 1: Cross Modality Alignment

In the first stage, the Uni-MoE framework attempts to establish connectivity between the different modalities and language. The Uni-MoE framework achieves this by constructing connectors that translate modal data into soft tokens. The primary objective of the first training stage is to minimize the generative entropy loss. Within the Uni-MoE framework, the LLM is optimized to generate descriptions for inputs across different modalities, and only the connectors are subjected to training, a strategy that enables the Uni-MoE framework to integrate different modalities within a unified language framework.

Stage 2: Training Modality Specific Experts

In the second stage, the Uni-MoE framework focuses on developing single-modality experts by training the model specifically on cross-modality data for each modality. The primary objective is to refine the proficiency of each expert within its respective domain, thus enhancing the overall performance of the Mixture of Expert system on a wide array of multimodal data. Furthermore, the Uni-MoE framework tailors the feedforward networks to align more closely with the characteristics of the modality while maintaining generative entropy loss as the focal training metric.

Stage 3: Tuning Uni-MoE

In the third and final stage, the Uni-MoE framework integrates the expert weights tuned during Stage 2 into the Mixture of Expert layers. The Uni-MoE framework then fine-tunes the MLLM jointly on mixed multimodal instruction data. The loss curves in the following image reflect the progress of the training process.

Comparative analysis between the configurations of Mixture of Expert revealed that the experts the model refined during the 2nd training stage displayed enhanced stability and achieved quicker convergence on mixed-modal datasets. Furthermore, on tasks that involved complex multi-modal data including text, images, audio, videos, the Uni-MoE framework demonstrated more consistent training performance and reduced loss variability when it employed four experts than when it employed two experts.

Uni-MoE : Experiments and Results

The following table summarizes the architectural specifications of the Uni-MoE framework. The primary goal of the Uni-MoE framework, built on LLaMA-7B architecture, is to scale the model size.

The following table summarizes the design and optimization of the Uni-MoE framework as guided by specialized training tasks. These tasks are instrumental in refining the capabilities of the MLP layers, thereby leveraging their specialized knowledge for enhanced model performance. The Uni-MoE framework undertakes eight single-modality expert tasks to elucidate the differential impacts of various training methodologies.

The Uni-MoE model variants are evaluated across a diverse set of benchmarks that encompasses two video-understanding, three audio-understanding, and five speech-related tasks. First, the model is tested on its ability to understand speech-image and speech-text tasks, and the results are contained in the following table.

As can be observed, the previous baseline models deliver inferior results across speech understanding tasks, which further impacts their performance on image-speech reasoning tasks. The results indicate that introducing the Mixture of Expert architecture can enhance the generalizability of MLLMs on unseen audio-image reasoning tasks. The following table presents the experimental results on image-text understanding tasks. As can be observed, the best results from the Uni-MoE models outperform the baselines, and surpass the fine-tuning task by an average margin of 4 points.

Final Thoughts

In this article we have talked about Uni-MoE, a unified multimodal large language model with a MoE or Mixture of Expert architecture that is capable of handling a wide array of modalities and experts. The Uni-MoE framework implements a sparse Mixture of Expert architecture within the large language model to make training and inference more efficient by employing expert-level model parallelism and data parallelism. Furthermore, to enhance generalization and multi-expert collaboration, the Uni-MoE framework presents a progressive training strategy made up of three stages. In the first stage, the framework achieves cross-modality alignment using various connectors with different cross-modality data. In the second, it activates the preference of the expert components by training modality-specific experts with cross-modality instruction data. Finally, the Uni-MoE model applies the LoRA or Low-Rank Adaptation learning technique on mixed multimodal instruction data to tune the model.


Text

Clearing the “Fog of More” in Cyber Security

New Post has been published on https://thedigitalinsider.com/clearing-the-fog-of-more-in-cyber-security/


At the RSA Conference in San Francisco this month, a dizzying array of dripping hot and new solutions were on display from the cybersecurity industry. Booth after booth claimed to be the tool that will save your organization from bad actors stealing your goodies or blackmailing you for millions of dollars.

After much consideration, I have come to the conclusion that our industry is lost. Lost in the soup of detect and respond with endless drivel claiming your problems will go away as long as you just add one more layer. Engulfed in a haze of technology investments, personnel, tools, and infrastructure layers, companies have now formed a labyrinth where they can no longer see the forest for the trees when it comes to identifying and preventing threat actors. These tools, meant to protect digital assets, are instead driving frustration for both security and development teams through increased workloads and incompatible tools. The “fog of more” is not working. But quite frankly, it never has.

Cyberattacks begin and end in code. It’s that simple. Either you have a security flaw or vulnerability in code, or the code was written without security in mind. Either way, every attack or headline you read comes from code. And it’s the software developers who face the full brunt of the problem. But developers aren’t trained in security and, quite frankly, might never be. So they implement good old-fashioned code searching tools that simply grep the code for patterns. And be careful what you ask for, because as a result they get the alert tsunami, chasing down red herrings and phantoms for most of their day. In fact, developers are spending up to a third of their time chasing false positives and vulnerabilities. Only by focusing on prevention can enterprises really start fortifying their security programs and laying the foundation for a security-driven culture.
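To see why pattern-based searching produces so many red herrings, consider a small sketch in Python. The rule, the sample snippet, and the `grep_scan` helper are all hypothetical and deliberately simplistic; real scanners are more elaborate, but the failure mode is similar.

```python
import re

# Hypothetical rule: flag every line that contains a call-like "eval(".
PATTERN = re.compile(r"eval\(")

# Hypothetical snippet under review; only the first line is a genuine risk.
SAMPLE = '''\
result = eval(user_input)
# never call eval( on untrusted input
log.info("skipping eval( check")
safe = ast.literal_eval(text)
'''


def grep_scan(source):
    """Return the 1-based numbers of all lines matching the pattern, with no context."""
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if PATTERN.search(line)
    ]
```

Running `grep_scan(SAMPLE)` flags all four lines, although three of them are a comment, a log message, and a call to the safer `ast.literal_eval`. A developer still has to triage every one of those alerts.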

Finding and Fixing at the Code Level

It’s often said that prevention is better than cure, and this adage holds particularly true in cybersecurity. That’s why even amid tighter economic constraints, businesses are continually investing and plugging in more security tools, creating multiple barriers to entry to reduce the likelihood of successful cyberattacks. But despite adding more and more layers of security, the same types of attacks keep happening. It’s time for organizations to adopt a fresh perspective – one where we home in on the problem at the root level – by finding and fixing vulnerabilities in the code.

Applications often serve as the primary entry point for cybercriminals seeking to exploit weaknesses and gain unauthorized access to sensitive data. In late 2020, the SolarWinds compromise came to light and investigators found a compromised build process that allowed attackers to inject malicious code into the Orion network monitoring software. This attack underscored the need for securing every step of the software build process. By implementing robust application security, or AppSec, measures, organizations can mitigate the risk of these security breaches. To do this, enterprises need to look at a ‘shift left’ mentality, bringing preventive and predictive methods to the development stage.

While this is not an entirely new idea, it does come with drawbacks. One significant downside is increased development time and costs. Implementing comprehensive AppSec measures can require significant resources and expertise, leading to longer development cycles and higher expenses. Additionally, not all vulnerabilities pose a high risk to the organization. The potential for false positives from detection tools also leads to frustration among developers. This creates a gap between business, engineering and security teams, whose goals may not align. But generative AI may be the solution that closes that gap for good.

Entering the AI-Era

By leveraging the ubiquitous nature of generative AI within AppSec, we will finally learn from the past to predict and prevent future attacks. For example, you can train a Large Language Model or LLM on all known code vulnerabilities, in all their variants, to learn the essential features of them all. These vulnerabilities could include common issues like buffer overflows, injection attacks, or improper input validation. The model will also learn the nuanced differences by language, framework, and library, as well as what code fixes are successful. The model can then use this knowledge to scan an organization’s code and find potential vulnerabilities that haven’t even been identified yet. By using the context around the code, scanning tools can better detect real threats. This means shorter scan times, less time spent chasing down false positives, and increased productivity for development teams.

Generative AI tools can also offer suggested code fixes, automating the process of generating patches, significantly reducing the time and effort required to fix vulnerabilities in codebases. By training models on vast repositories of secure codebases and best practices, developers can leverage AI-generated code snippets that adhere to security standards and avoid common vulnerabilities. This proactive approach not only reduces the likelihood of introducing security flaws but also accelerates the development process by providing developers with pre-tested and validated code components.

These tools can also adapt to different programming languages and coding styles, making them versatile tools for code security across various environments. They can improve over time as they continue to train on new data and feedback, leading to more effective and reliable patch generation.

The Human Element

It’s essential to note that while code fixes can be automated, human oversight and validation are still crucial to ensure the quality and correctness of generated patches. While advanced tools and algorithms play a significant role in identifying and mitigating security vulnerabilities, human expertise, creativity, and intuition remain indispensable in effectively securing applications.

Developers are ultimately responsible for writing secure code. Their understanding of security best practices, coding standards, and potential vulnerabilities is paramount in ensuring that applications are built with security in mind from the outset. By integrating security training and awareness programs into the development process, organizations can empower developers to proactively identify and address security issues, reducing the likelihood of introducing vulnerabilities into the codebase.

Additionally, effective communication and collaboration between different stakeholders within an organization are essential for AppSec success. While AI solutions can help to “close the gap” between development and security operations, it takes a culture of collaboration and shared responsibility to build more resilient and secure applications.

In a world where the threat landscape is constantly evolving, it’s easy to become overwhelmed by the sheer volume of tools and technologies available in the cybersecurity space. However, by focusing on prevention and finding vulnerabilities in code, organizations can trim the ‘fat’ of their existing security stack, saving a substantial amount of time and money in the process. At the root level, such solutions will be able not only to find known vulnerabilities and fix zero-day vulnerabilities, but also to catch pre-zero-day vulnerabilities before they occur. We may finally keep pace with, if not get ahead of, evolving threat actors.


Text

NIS2 is coming - why you should act now - CyberTalk

New Post has been published on https://thedigitalinsider.com/nis2-is-coming-why-you-should-act-now-cybertalk/


By Patrick Scholl, Head of Operational Technology, Infinigate

NIS2 – the Network and Information Security Directive – is a revision of the NIS Directive, which came into force in 2016, with the aim of strengthening cyber security resilience across the EU.

The revision tightens reporting requirements and introduces stricter control measures and enforcement provisions. By October 17th 2024, the NIS2 Directive will be a requirement across all EU member states. Despite the urgency, businesses still have many questions.

Distributors like Infinigate are committed to supporting the implementation of NIS2 by offering a broad choice of cyber security solutions and services in collaboration with vendors, such as Check Point.

Supporting NIS2 implementation

In Germany, as an example, NIS2UmsuCG, the local directive governing the implementation of the EU NIS2 to strengthen cyber security, is already available as a draft and defines EU-wide minimum standards that will be transferred into national regulation.

It is estimated that around 30,000 companies in Germany will have to make changes to comply. However, thus far, only a minority have adopted the measures mandated by the new directive. Sometimes, symbolic measures are taken with little effect. In view of the complexity of the NIS2 requirements, the short time in which they are to be implemented and the need for holistic and long-term solutions, companies need strong partners who can advise on how to increase their cyber resilience.

Who is affected?

The NIS2 directive coming into force in autumn 2024 will apply to organisations across 18 sectors with 50 or more employees and a turnover of €10 million. Additionally, some entities will be regulated regardless of their size — especially in the areas of ‘essential’ digital infrastructure and public administration.

The following industry sectors fall under the ‘essential’ category:

Energy

Transport

Banking and finance

Education

Water supply

Digital infrastructure

ICT service management

Public administration

Space exploration and research

Postal and courier services

Waste management

Chemical manufacturing, production and distribution

Food production, processing and distribution

Industry & manufacturing (medical devices and in-vitro, data processing, electronics, optics, electrical equipment, mechanical engineering, motor vehicles and parts, vehicle manufacturing)

Digital suppliers (marketplaces, search engines, social networks)

Research institutes

It’s worth bearing in mind that NIS2 regulations apply not only to companies, but also their contractors.

Good to know: The “size-cap” rule

The “size-cap” rule is one of the innovations that come with NIS2 and is intended to level out inequalities linked with varying requirements and risk profiles, budgets, resources and expertise. The regulation is intended to enable start-ups and medium-sized companies, as well as large corporations, to implement the security measures required by NIS2.

You can get NIS2 compliance tips here: https://nis2-check.de/

NIS2 in a nutshell

In Germany, companies are required to register with the BSI (Federal Office for Information Security), for their relevant areas. A fundamental rule is that any security incidents must be reported immediately.

Across Europe, the strict security requirements mandated by NIS2 include the following:

Risk management: identify, assess and remedy

Companies are required to take appropriate and proportionate technical, operational and organisational measures. A holistic approach should ensure that risks to the security of network and information systems can be adequately managed.

Security assessment: a self-analysis

Security assessment includes questions such as: what vulnerabilities are there in the company? What is the state of cyber hygiene? What security practices are already in place today? Are there misconfigured accounts that could be vulnerable to data theft or manipulation?

Access management: protecting privileged accounts

Companies subject to NIS2 regulations are encouraged to restrict the number of administrator-level accounts and change passwords regularly. This lowers the risk of network cyber security breaches threatening business continuity.

Closing the entry gates: ransomware and supply chain security

One of the main concerns of the NIS2 directive is proactive protection against ransomware. Endpoint security solutions can help here. Employee training is another necessary step to create risk awareness and help identify and prevent cyber attacks.

The focus here should be on best practices in handling sensitive data and the secure use of IT and OT systems. Supply chain vulnerability is a major area of concern. Companies need to ensure that the security features and standards of the machines, products and services they purchase meet current security requirements.

Zero tolerance strategy: access control and zero trust

In a world where corporate boundaries are increasingly blurred due to digitalisation, cloud infrastructures and decentralised working models, perimeter-based architectures have had their day. A zero trust concept provides multiple lines of defence and relies on strong authentication methods and threat analysis to validate access attempts.

Business continuity: prepared for emergencies

Business continuity management measures are essential to ensure that critical systems can be maintained in the event of an emergency. These include backup management, disaster recovery, crisis management and emergency plans.

In summary, we should not let the complexity of the topic discourage us from taking action; after all, NIS2 is for our benefit, to help us protect our business assets from increasing cyber risk.

Businesses would be well advised to start on the route to assessing their security posture and current status vis-à-vis NIS2 requirements. You can make a start by simply identifying all relevant stakeholders in your organisation, starting a task-force and gathering intelligence on your cyber risk.

Identify key steps and build a roadmap to compliance that is manageable for your resources; your channel partners can help by providing expert advice at https://page.infinigate.com/nis2-checkpoint.


Text

OpenAI disrupts five covert influence operations

New Post has been published on https://thedigitalinsider.com/openai-disrupts-five-covert-influence-operations/



In the last three months, OpenAI has disrupted five covert influence operations (IO) that attempted to exploit the company’s models for deceptive activities online. As of May 2024, these campaigns have not shown a substantial increase in audience engagement or reach due to OpenAI’s services.

OpenAI claims its commitment to designing AI models with safety in mind has often thwarted the threat actors’ attempts to generate desired content. Additionally, the company says AI tools have enhanced the efficiency of OpenAI’s investigations.

Detailed threat reporting by distribution platforms and the open-source community has significantly contributed to combating IO. OpenAI is sharing these findings to promote information sharing and best practices among the broader community of stakeholders.

Disrupting covert IO

In the past three months, OpenAI disrupted several influence operations that were using its models for various tasks, such as generating short comments, creating fake social media profiles, conducting open-source research, debugging simple code, and translating texts.

Specific operations disrupted include:

Bad Grammar: A previously unreported operation from Russia targeting Ukraine, Moldova, the Baltic States, and the US. This group used OpenAI’s models to debug code for running a Telegram bot and to create political comments in Russian and English, posted on Telegram.

Doppelganger: Another Russian operation generating comments in multiple languages on platforms like X and 9GAG, translating and editing articles, generating headlines, and converting news articles into Facebook posts.

Spamouflage: A Chinese network using OpenAI’s models for public social media activity research, generating texts in several languages, and debugging code for managing databases and websites.

International Union of Virtual Media (IUVM): An Iranian operation generating and translating long-form articles, headlines, and website tags, published on a linked website.

Zero Zeno: A commercial company in Israel, with operations generating articles and comments posted across multiple platforms, including Instagram, Facebook, X, and affiliated websites.

The content posted by these operations focused on various issues, including Russia’s invasion of Ukraine, the Gaza conflict, Indian elections, European and US politics, and criticisms of the Chinese government.

Despite these efforts, none of these operations showed a significant increase in audience engagement due to OpenAI’s models. Using Brookings’ Breakout Scale – which assesses the impact of covert IO – none of the five operations scored higher than a 2, indicating activity on multiple platforms but no breakout into authentic communities.

Attacker trends

Investigations into these influence operations revealed several trends:

Content generation: Threat actors used OpenAI’s services to generate large volumes of text with fewer language errors than human operators could achieve alone.

Mixing old and new: AI was used alongside traditional formats, such as manually written texts or copied memes.

Faking engagement: Some networks generated replies to their own posts to create the appearance of engagement, although none managed to attract authentic engagement.

Productivity gains: Threat actors used AI to enhance productivity, summarising social media posts and debugging code.

Defensive trends

OpenAI’s investigations benefited from industry sharing and open-source research. Defensive measures include:

Defensive design: OpenAI’s safety systems imposed friction on threat actors, often preventing them from generating the desired content.

AI-enhanced investigation: AI-powered tools improved the efficiency of detection and analysis, reducing investigation times from weeks or months to days.

Distribution matters: IO content, like traditional content, must be distributed effectively to reach an audience. Despite their efforts, none of the disrupted operations managed substantial engagement.

Importance of industry sharing: Sharing threat indicators with industry peers increased the impact of OpenAI’s disruptions. The company benefited from years of open-source analysis by the wider research community.

The human element: Despite using AI, threat actors were prone to human error, such as publishing refusal messages from OpenAI’s models on their social media and websites.

OpenAI says it remains dedicated to developing safe and responsible AI. This involves designing models with safety in mind and proactively intervening against malicious use.

While admitting that detecting and disrupting multi-platform abuses like covert influence operations is challenging, OpenAI claims it’s committed to mitigating the dangers.

(Photo by Chris Yang)


Text

The Legend Of Zelda: Majora's Mask Part 16 | Super Replay

New Post has been published on https://thedigitalinsider.com/the-legend-of-zelda-majoras-mask-part-16-super-replay/


After The Legend of Zelda: Ocarina of Time reinvented the series in 3D and became its new gold standard, Nintendo followed up with a surreal sequel in Majora’s Mask. Set two months after the events of Ocarina, the game transports Link to an alternate version of Hyrule called Termina, where he must prevent a very angry moon from crashing into the Earth over the course of three constantly repeating days. Majora’s Mask’s unique structure and bizarre tone have earned it legions of passionate defenders and detractors, and one long-time Zelda fan is going to experience it for the first time to see where he lands on that spectrum.

Join Marcus Stewart and Kyle Hilliard today and each Friday on Twitch at 1:00 p.m. CT as they gradually work their way through the entire game until Termina is saved. Archived episodes will be uploaded each Saturday on our second YouTube channel Game Informer Shows, which you can watch both above and by clicking the links below.

Part 1 – Plenty of Time

Part 2 – The Bear

Part 3 – Deku Ball Z

Part 4 – Pig Out

Part 5 – The Was a Bad Choice!

Part 6 – Ray Darmani

Part 7 – Curl and Pound

Part 8 – Almost a Flamethrower

Part 9 – Take Me Higher

Part 10 – Time Juice

Part 11 – The One About Joey

Part 12 – Ugly Country

Part 13 – The Sword is the Chicken Hat

Part 14 – Harvard for Hyrule

Part 15 – Keeping it Pure

If you enjoy our livestreams but haven’t subscribed to our Twitch channel, know that doing so not only gives you notifications and access to special emotes but also grants you entry to the official Game Informer Discord channel, where our welcoming community members, moderators, and staff gather to talk games, entertainment, and food, and to organize hangouts! Be sure to also follow our second YouTube channel, Game Informer Shows, to watch other Replay episodes as well as Twitch archives of GI Live and more.

#3d#channel#chicken#Community#course#curl#defenders#earth#entertainment#Events#Food#game#games#gold#it#Link#links#mask#members#Moon#notifications#One#Other#prevent#spectrum#Staff#structure#time#twitch#Version

Text

Sony unveils advanced microsurgery assistance robot

New Post has been published on https://thedigitalinsider.com/sony-unveils-advanced-microsurgery-assistance-robot/

Sony is making strides in the surgical robotics market with its newly developed microsurgery assistance robot. The Tokyo-based company recently announced its advanced system designed for automatic surgical instrument exchange and precision control.

Robot development and functionality

Sony, known for its electronic technologies, created this robot to assist in microsurgical procedures. The robot, used in conjunction with a microscope, works on extremely small tissues such as veins and nerves.

It tracks the movements of a surgeon’s hands and fingers using a highly sensitive control device. These movements are then replicated on a small surgical instrument that mimics the movement of the human wrist.

Addressing practical challenges

The new system aims to overcome practical challenges conventional surgical assistant robots face, such as interruptions and delays from manually exchanging surgical instruments. Sony’s R&D team achieved this through the miniaturization of parts, allowing for automatic exchange.

The system comprises a tabletop console operated by a surgeon and a robot that performs procedures on a patient. The surgeon’s hand movements on the console are replicated at a reduced scale (approximately 1/2 to 1/10) at the tip of the robot arm’s surgical instrument. Sony’s researchers envision the robot assistant being used in various surgical procedures.
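The motion scaling described above can be illustrated with a minimal sketch. This is not Sony's implementation: the `Displacement` type, the `scale_motion` function, and the choice of millimetres are hypothetical, and only the approximate 1/2 to 1/10 scale range comes from the article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Displacement:
    """A displacement in millimetres (hypothetical unit for illustration)."""
    x: float
    y: float
    z: float


def scale_motion(hand: Displacement, factor: float) -> Displacement:
    """Map a hand displacement at the console to an instrument-tip displacement.

    `factor` is the reduction ratio; the article cites roughly 1/2 to 1/10,
    so values such as 0.5 or 0.1 are the expected inputs. The check below
    only requires a reduction (0 < factor <= 1), which is an assumption.
    """
    if not 0.0 < factor <= 1.0:
        raise ValueError("factor must be in the range (0, 1]")
    return Displacement(hand.x * factor, hand.y * factor, hand.z * factor)


# A 10 mm hand movement at a 1/10 scale becomes about 1 mm at the tip.
tip = scale_motion(Displacement(10.0, -4.0, 2.0), 0.1)
print(tip)
```

A uniform linear scale is the simplest possible mapping; a real system would likely also filter tremor and limit velocity, which this sketch does not attempt.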

Key features of the robot

1. Automatic instrument exchange

One of the robot’s standout features is its ability to automatically exchange instruments, made possible by the miniaturization of surgical tools. Multiple instruments can be compactly stored near the robot arm, allowing the left and right arms to make small movements to exchange tools quickly without human intervention.

2. High-precision control

The robot employs a highly sensitive control device to provide stable and precise control necessary for microsurgical procedures. This compact and lightweight device accurately reflects the delicate movements of human fingertips.

The tip of the surgical instrument, designed with multiple joints, moves smoothly like a human wrist. Sony aims for the robot to enable nimble operation and smooth movements that feel almost imperceptible to the user.

3. Advanced display technology

Equipped with a 1.3-type 4K OLED microdisplay developed by Sony Semiconductor Solutions Corporation, the robot provides operators with high-definition images of the surgical area and the movement of instruments.

4. Performance and testing

In February, Sony conducted an experiment at Aichi Medical University. Surgeons and medical practitioners who do not specialize in microsurgery successfully used the prototype to create an anastomosis in animal blood vessels.

Sony claims this was the world’s first instance of microvascular anastomosis using a surgical assistance robot with an automatic instrument exchange function.

Expert insights

Munekazu Naito, a professor in the Department of Anatomy at Aichi Medical University, remarked on humans’ superior brain and hand coordination, which allows for precise and delicate movements.

He noted that mastering microsurgery typically requires extensive training, but Sony’s robot demonstrated exceptional control over novice surgeons’ movements.

This technology enabled them to perform intricate tasks with skill comparable to that of experienced experts. Naito expressed hope that surgical assistance robots would expand physicians’ capabilities and enhance advanced medical practices.

Future development and goals

Sony plans to collaborate with university medical departments and medical institutions to further develop and validate robotic assistance technology’s effectiveness. The ultimate goal is to resolve issues in the medical field and contribute to medical advancements through innovative robotic technology.

#4K#ai#amp#Anatomy#arm#Artificial Intelligence#blood#blood vessels#Brain#collaborate#computer#Computer vision#content#development#display#electronic#Experienced#Features#Future#hand#healthcare#how#human#humans#images#Industry#issues#it#Mastering#medical

Text

MIT Corporation elects 10 term members, two life members

New Post has been published on https://thedigitalinsider.com/mit-corporation-elects-10-term-members-two-life-members/

The MIT Corporation — the Institute’s board of trustees — has elected 10 full-term members, who will serve one-, two-, or five-year terms, and two life members. Corporation Chair Mark P. Gorenberg ’76 announced the election results today.

The full-term members are: Nancy C. Andrews, Dedric A. Carter, David Fialkow, Bennett W. Golub, Temitope O. Lawani, Michael C. Mountz, Anna Waldman-Brown, R. Robert Wickham, Jeannette M. Wing, and Anita Wu. The two life members are: R. Erich Caulfield and David M. Siegel. Gorenberg was also reelected as Corporation chair.

As of July 1, the Corporation will consist of 80 distinguished leaders in education, science, engineering, and industry. Of those, 24 are life members and eight are ex officio. An additional 25 individuals are life members emeritus.

The 10 new term members are:

Nancy C. Andrews PhD ’85, executive vice president and chief scientific officer, Boston Children’s Hospital

Andrews is a biologist and physician noted for her research on iron diseases and her roles in academic administration. She currently serves as executive vice president and chief scientific officer at Boston Children’s Hospital and professor of pediatrics in residence at Harvard Medical School. She previously served for a decade as the first female dean of the Duke University School of Medicine. After graduating from medical school, she completed residency and fellowship at Boston Children’s Hospital, joining the faculty at Harvard as an assistant professor in 1993. From 1999 to 2003, Andrews served as director of the Harvard-MIT Health Sciences and Technology MD-PhD program, and then was appointed professor of pediatrics and dean for basic sciences and graduate studies at Harvard Medical School. Andrews currently serves on the boards of directors of Novartis, Charles River Laboratories, and Maze Therapeutics.

Dedric A. Carter ’98, MEng ’99, MBA ’14, chief innovation officer, University of North Carolina at Chapel Hill

Carter currently serves as the vice chancellor for innovation, entrepreneurship, and economic development and chief innovation officer at the University of North Carolina at Chapel Hill. He has cabinet-level responsibility for the entrepreneurship, innovation, economic development, and commercialization portfolios at the university through Innovate Carolina and the Innovate Carolina Junction, a new hub for catalyzing innovation and accelerating entrepreneurial invention located in Chapel Hill, North Carolina, among other oversight and engagement roles. Prior to his appointment, he was the vice chancellor for innovation and chief commercialization officer at Washington University in St. Louis. Before that, he served as the senior advisor for strategic initiatives in the Office of the Director at the U.S. National Science Foundation, in addition to serving as the executive secretary to the U.S. National Science Board executive committee.

David Fialkow, co-founder and managing director, General Catalyst Partners

Fialkow currently serves as managing director of General Catalyst Partners, a venture capital firm that makes early-stage and transformational investments in technology and consumer companies. His areas of focus include financial services, digital health, artificial intelligence, and data analytics. With business partner Joel Cutler, Fialkow built and sold several companies prior to founding General Catalyst Partners in 2000. Early in his career, he worked for the investment firm Thomas H. Lee Company and the venture capital firm U.S. Venture Partners. Fialkow studied film at Colgate University and continues to produce documentaries with his wife, Nina, focused on health care and social justice.

Bennett W. Golub ’79, SM ’82, PhD ’84, co-founder and senior policy advisor, BlackRock

In 1988, Golub was one of eight people to start BlackRock, Inc., a global asset management company. In March of 2022, he stepped down from his day-to-day activities at the company to assume a part-time role of senior policy advisor. Formerly, he served as chief risk officer with responsibilities that included investment, counterparty, technology, and operational risk, and he chaired BlackRock’s Enterprise Risk Management Committee. Beginning in 1995, he was co-head and founder of BlackRock Solutions, the company’s risk advisory business. He also served as the acting CEO of Trepp, LLC, a former BlackRock affiliate that pioneered the creation and distribution of data and models for collateralized commercial-backed securities, beginning in 1996. Prior to the founding of BlackRock, Golub served as vice president at The First Boston Corporation (now Credit Suisse).

Temitope O. Lawani ’91, co-founder, Helios Investment Partners, LLP

Lawani, a Nigerian national, is the co-founder and managing partner of Helios Investment Partners, LLP, an Africa-focused private investment firm based in London. He also serves as co-CEO of Helios Fairfax Partners, an investment holding company. Prior to forming Helios in 2004, Lawani was a principal at the San Francisco and London offices of TPG Capital, a global private investment firm. Before that, he worked as a mergers and acquisitions and corporate development analyst at the Walt Disney Company. Lawani serves on the boards of Helios Towers, Pershing Square Holdings, and NBA Africa. He is also a director of the Global Private Capital Association and The END Fund, and has served on the boards of several public and private companies across various sectors.

Michael C. Mountz ’87, principal, Kacchip LLC

Michael “Mick” Mountz is a logistics industry entrepreneur and technologist known for inventing the mobile robotic order fulfillment approach now in widespread use across the material handling industry. In 2003, Mountz, along with MIT classmate Peter Wurman ’87 and Raffaello D’Andrea, co-founded Kiva Systems, Inc., a manufacturer of this mobile robotic fulfillment system. After Kiva, Mountz established Kacchip LLC, a technology incubator and investment entity to support local founders and startups, where Mountz currently serves as principal. Before founding Kiva, Mountz served as a director of business process and logistics at online grocery delivery company Webvan, and before that he served as a product marketing manager for Apple Computer working on the launch of the G3 and G4 series Macintosh desktops. Mountz holds over 40 U.S. technology patents and was inducted into the National Inventors Hall of Fame in 2022.

Anna Waldman-Brown ’11, SM ’18, PhD ’23