File Based QC | Automated QC Solutions | Pulsar & Quasar


Media Offline: What is it and how to deal with it?

Media offline is a term used to describe the situation where a portion of the content is inaccessible or cannot be played due to a technical error, a missing media segment, or other reasons.

How does this happen?

One of the most important steps in the post-production process is editing. A typical editing project comprises many media clips and segments that are stitched together on a timeline to create the complete content. A professional editing solution keeps references to all these segments and allows the final render to be published once editing is complete. These media segments can reside on various kinds of storage, such as SD cards, SAN, NAS, USB drives, and HDDs.

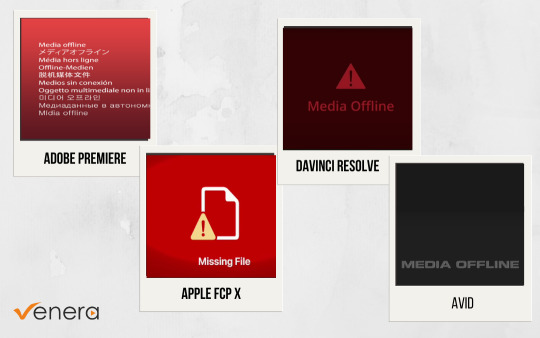

Imagine a scenario where a fresh piece of content is received on an SD card. The editor inserts the SD card and adds the media files on it to the timeline in the editing software. Everything looks good in preview, and the editor saves the project. The next day, the editor removes the SD card and continues working on the project. On previewing the timeline, the editor suddenly sees a “Media Offline” image in the video. The editing solution inserts this image whenever referenced media is not accessible, in this case because the SD card was removed. The same thing can happen if a media file is deleted, moved, or renamed, if it simply becomes inaccessible on network storage, or even if access to the storage is slow. The actual “Media Offline” image also varies across editing solutions.

Here are some examples:

Unfortunately, human mistakes and network issues continue to happen in editing environments, so this scenario is common. However, the presence of “Media Offline” segments in the final content delivered from post-production is completely unacceptable: it will lead to content rejection and damage the reputation of the post-production house. If the issue is detected before delivery, rectification is fairly easy, as it is only a matter of re-linking the missing media file inside the editing solution.

How to rectify “Media Offline”?

Comprehensive rectification requires timely & accurate detection of “Media offline” issues in the content. There are two ways of detecting media offline: manual and automated.

Manual Method to Detect Media Offline

The manual method involves an operator manually checking the content to determine if the “Media Offline” image is present in the content. The image can be present for a few frames or for a longer duration, depending on the length of missing content. This will require an operator to watch through the entire content, which can be a time-consuming process and is prone to human errors.

Automated Method to Detect Media Offline

An automated QC solution can detect “Media Offline” in the content without any manual intervention. An operator only needs to configure a template in the video QC software, and the rest is done automatically. Because this check is accurate, its results can be relied upon without a manual scan. Using an automated file QC solution for this detection is faster and more efficient than manual review, which helps optimize content workflows.
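To make the idea concrete, here is a minimal sketch of one way such a check could work, assuming the video has already been decoded into grayscale frames (flattened to lists of pixel values) and that a reference image of the editing solution's “Media Offline” slate is available. It flags runs of frames that are nearly identical to that slate. The function names, the `threshold` value and the tiny 4-pixel frames are hypothetical illustrations, not how Pulsar or Quasar implement the check; since the slate differs across editing solutions, a real setup would need one reference per solution and a more robust comparison.

```python
from typing import List, Optional, Sequence, Tuple


def frame_distance(frame: Sequence[int], reference: Sequence[int]) -> float:
    """Mean absolute difference between two grayscale frames with the same pixel count."""
    if len(frame) != len(reference):
        raise ValueError("frame and reference must have the same number of pixels")
    return sum(abs(a - b) for a, b in zip(frame, reference)) / len(frame)


def find_offline_segments(
    frames: Sequence[Sequence[int]],
    reference: Sequence[int],
    threshold: float = 8.0,  # hypothetical tolerance, in grey levels
    min_frames: int = 1,     # ignore matching runs shorter than this
) -> List[Tuple[int, int]]:
    """Return inclusive (first_frame, last_frame) ranges that match the slate."""
    segments: List[Tuple[int, int]] = []
    start: Optional[int] = None
    for index, frame in enumerate(frames):
        matches = frame_distance(frame, reference) <= threshold
        if matches and start is None:
            start = index  # a slate run begins
        elif not matches and start is not None:
            if index - start >= min_frames:
                segments.append((start, index - 1))
            start = None  # the slate run has ended
    if start is not None and len(frames) - start >= min_frames:
        segments.append((start, len(frames) - 1))  # run continues to the last frame
    return segments


# Tiny 4-pixel stand-ins for real decoded frames.
slate = [200, 0, 0, 200]
normal = [90, 100, 110, 95]
frames = [normal, slate, slate, normal, slate]
print(find_offline_segments(frames, slate))  # [(1, 2), (4, 4)]
```

Reporting whole runs rather than individual frames matches how the issue is fixed: each run corresponds to one missing clip that must be re-linked in the editing project.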

Pros and Cons of Manual and Automatic Methods

There are hardly any drawbacks to using automated methods, as the investment in QC software is easily offset by the cost savings from eliminating manual review.

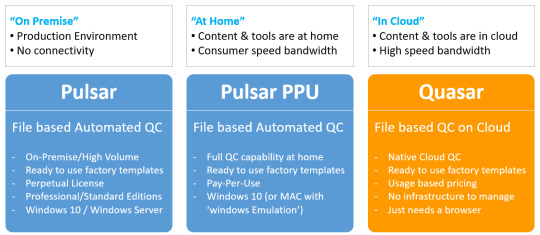

Venera offers a wide range of Automated QC solutions – both for on-premise (Pulsar) and Cloud deployment (Quasar).

Quasar & Pulsar are perfect solutions for automated media offline detection, allowing you to detect and fix media offline issues quickly and efficiently. This helps minimize the impact of such QC issues on production timelines and budgets while protecting the reputation of the post-production company.

By relying on Quasar & Pulsar, post-production houses can be certain that content delivered by them to their customers is free of “Media Offline” issues.

Venera’s QC solutions are also highly customizable, allowing users to configure them to their specific needs and requirements. Users can choose to invest upfront in a QC software license or can pay on a usage basis. With these options available, using a QC solution to detect and eliminate Media Offline issues efficiently is easy & feasible for everyone.

We hope this article has been informative and helpful in understanding media offline and its implications on media production. We would love to hear about your experiences and tips for dealing with media offline. Please share your thoughts and recommendations in the comments section below.

This content originally appeared here on: https://www.veneratech.com/what-is-media-offline-and-how-to-deal-with-it/


Venera Technologies: Revolutionizing the Video Quality Control Process with Native Cloud QC Solution

As the video industry continues to grow and evolve, the need for efficient and accurate video quality control (QC) has become increasingly important. In the past, video QC was a time-consuming and labor-intensive process that involved manual checks for various technical and content-related issues. However, with the advent of cloud technology, the process can now be greatly streamlined and improved.

Venera Technologies is a software company that specializes in cloud video QC. Our mission is to revolutionize the video quality control process and make it more efficient, accurate, and cost-effective for video production companies of all sizes.

What is Cloud Video QC?

Cloud video QC is a process that involves the use of cloud-based software to automate the video quality control process. This software is designed to detect and flag any technical and content-related issues in a video, making it easier and more efficient to identify and fix these issues. With cloud video QC, video content providers can save time and reduce the risk of errors, leading to faster delivery of high-quality content.

How Venera Technologies’ Cloud Video QC Software Works

At Venera Technologies, we understand the importance of delivering high-quality video content, which is why we have developed a cloud native video QC software that is both easy to use and highly effective. Our solution is designed to detect and flag specific technical and content-related issues in audio-video files, making it easy for our clients to identify and fix these issues.

Here's how our cloud video QC software works:

1) QC Template: Set up a QC template to specify and configure the checks you wish to perform on your content. A wide range of options are available for video, audio, container, package and adaptive bitrate checks.

2) Specify content location: Users can specify the location of their media assets in cloud storage for analysis by our cloud-based software. Our software is designed to handle a large number of video files, making it ideal for large-scale media workflows.

3) Automated Analysis: Our software automatically performs a comprehensive analysis of content. This analysis includes checks for technical and content-related issues, such as audio levels, video resolution, aspect ratio, and more.

4) Generate Reports: Once the analysis is complete, a detailed report is generated that highlights issues identified in the content. These reports can be easily reviewed and shared with other team members, making it easy to collaborate and fix any issues.

5) Improved Collaboration: With our cloud video QC software, team members can access video files and quality control reports from anywhere with an internet connection. This facilitates collaboration between team members, regardless of their location, and helps video production companies to deliver high-quality final products faster and more effectively.
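The template-driven flow in steps 1 to 4 can be sketched as a small check runner. This is a hypothetical illustration, not Quasar's API: it assumes the file's metadata (resolution, audio peak) has already been extracted, and the check names, parameters and `Issue` type are invented for the example.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Metadata = Dict[str, Any]
Params = Dict[str, Any]


@dataclass
class Issue:
    check: str
    message: str


def check_resolution(meta: Metadata, params: Params) -> List[Issue]:
    expected = (params["width"], params["height"])
    actual = (meta["width"], meta["height"])
    if actual != expected:
        return [Issue("resolution", f"expected {expected[0]}x{expected[1]}, found {actual[0]}x{actual[1]}")]
    return []


def check_audio_peak(meta: Metadata, params: Params) -> List[Issue]:
    peak = meta["audio_peak_dbfs"]
    if peak > params["max_dbfs"]:
        return [Issue("audio_peak", f"peak {peak} dBFS exceeds {params['max_dbfs']} dBFS")]
    return []


# Registry of available checks; a template selects and parameterizes a subset.
CHECKS: Dict[str, Callable[[Metadata, Params], List[Issue]]] = {
    "resolution": check_resolution,
    "audio_peak": check_audio_peak,
}


def run_template(meta: Metadata, template: Dict[str, Params]) -> List[Issue]:
    """Run every check named in the template and collect the issues into a report."""
    report: List[Issue] = []
    for name, params in template.items():
        report.extend(CHECKS[name](meta, params))
    return report


template = {"resolution": {"width": 1920, "height": 1080}, "audio_peak": {"max_dbfs": -1.0}}
meta = {"width": 1280, "height": 720, "audio_peak_dbfs": -3.2}
for issue in run_template(meta, template):
    print(f"{issue.check}: {issue.message}")  # resolution: expected 1920x1080, found 1280x720
```

Keeping checks in a registry keyed by name is what lets a template stay pure configuration: adding a check means registering one more function, and each template just lists which checks to run with which thresholds.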

Examples of How Venera Technologies’ Cloud Video QC Software Has Helped Video Production Companies

1) Improved Efficiency: One of our clients, a large content aggregator, was spending a significant amount of time and resources on manual video QC checks on their short form content. After implementing our cloud video QC software, they were able to automate the process, saving significant time and labor costs. They process many thousands of files daily with our cloud native QC software - Quasar®.

2) Improved Accuracy: Another client, a small startup, was concerned about the risk of human error in their video QC process and they also wanted to catch issues that are difficult to identify with manual QC. By using our cloud video QC software, they were able to eliminate the possibility of human error and ensure that all issues were identified and addressed accurately with minimal investment.

For details, check out Venera Technologies.


Caption/Subtitle QC vs. Authoring

The presence of captions and subtitles with digital media files has become more prominent and nearly universal. We often see content creators and distributors contemplate the difference between a Caption Authoring system and a Caption/Subtitle QC system, and whether both are needed. So we thought it worthwhile to clarify and differentiate the role each software category plays.

Caption/Subtitle Authoring and Caption/Subtitle QC remain two separate and distinct activities. While caption authoring is used to create captions and subtitles, caption QC is an increasingly important part of any caption/subtitle workflow, ensuring the optimum end-user experience when viewing captions. Until recently, caption QC remained a time-consuming and resource-intensive manual process. The advent of advanced, automated caption QC software, such as CapMate from Venera Technologies, now provides a logical alternative to the tedious manual caption QC process, allowing a large portion of it to be automated.

To further clarify and differentiate between caption authoring tools and caption QC tools, we will examine some scenarios to help you appreciate the importance of specialized caption QC systems.

Caption/Subtitle Creation

A common authoring system will let you generate raw captions using ASR (Automatic Speech Recognition) technology, which provides the first draft of the captions. An operator then needs to add the captions missed by ASR and align and format all the captions as needed. Authoring systems may provide basic measurements such as CPS (Characters Per Second), WPM (Words Per Minute), and CPL (Characters Per Line) that allow you to fix basic ‘timing’ and line-length issues. Since the raw captions are generated by the tool itself, they are expected to be aligned with the transcribed audio. However, the responsibility for aligning any captions you add lies with you; authoring tools typically cannot analyze sync issues in user-added segments. Another common requirement is that captions must not be placed on top of burnt-in text in the video. Again, you have to ensure manually that no such overlapping sections exist, which means an operator has to watch the entire video. A similar full review of the captions and video is required for many other common issues.
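The basic measurements mentioned above are simple ratios. The sketch below computes them for a single caption, assuming timestamps in seconds and counting spaces and punctuation as characters (conventions differ between style guides, so treat this as one possible definition). The `Caption` type and `reading_metrics` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Caption:
    start: float  # seconds
    end: float    # seconds
    lines: List[str]


def reading_metrics(caption: Caption) -> Dict[str, float]:
    """CPS, WPM and CPL for one caption (spaces and punctuation count, line breaks do not)."""
    duration = caption.end - caption.start
    if duration <= 0:
        raise ValueError("caption must end after it starts")
    chars = sum(len(line) for line in caption.lines)
    words = sum(len(line.split()) for line in caption.lines)
    return {
        "cps": chars / duration,            # characters per second
        "wpm": words * 60.0 / duration,     # words per minute
        "cpl": max((len(line) for line in caption.lines), default=0),  # longest line
    }


cap = Caption(start=10.0, end=12.0, lines=["Where were you", "last night?"])
print(reading_metrics(cap))  # {'cps': 12.5, 'wpm': 150.0, 'cpl': 14}
```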

So, while an authoring system allows you to create, edit and format your captions efficiently, it usually doesn’t provide rich analysis capabilities to QC the captions. The responsibility of detecting basic issues and correcting them lies with you.

Caption/Subtitle Compliance

Let’s take this a step further. In today’s world, ensuring the basic sanity of captions is not enough. Every major broadcaster, OTT service provider, and educational content provider has its own technical specifications for the captions it requires. There can be many such requirements, a few of which are as follows:

– Maximum number of caption lines per screen.
– Minimum and maximum duration of each caption.
– Captions in sync with the audio to within a specified sub-second threshold.
– All captions placed in the bottom third of the screen while avoiding burnt-in text; in case of overlap, another position may be used.
– Detection of profanity (words defined by the user as unacceptable).
– Spelling checks.
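Several of these requirements can be checked mechanically once the caption file is parsed. The sketch below covers line count, duration and a user-defined word list; sync, placement and spelling need audio/video analysis or a dictionary and are left out. The `DeliverySpec` defaults are hypothetical placeholders, not any broadcaster's actual values, and all names are invented for the example.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Caption:
    start: float  # seconds
    end: float    # seconds
    lines: List[str]


@dataclass
class DeliverySpec:  # hypothetical values; every broadcaster/OTT spec differs
    max_lines: int = 2
    min_duration: float = 1.0
    max_duration: float = 7.0
    banned_words: Set[str] = field(default_factory=set)


def check_compliance(captions: List[Caption], spec: DeliverySpec) -> List[str]:
    issues: List[str] = []
    for number, cap in enumerate(captions, start=1):
        duration = cap.end - cap.start
        if len(cap.lines) > spec.max_lines:
            issues.append(f"#{number}: {len(cap.lines)} lines (max {spec.max_lines})")
        if duration < spec.min_duration:
            issues.append(f"#{number}: {duration:.2f}s shorter than {spec.min_duration}s")
        elif duration > spec.max_duration:
            issues.append(f"#{number}: {duration:.2f}s longer than {spec.max_duration}s")
        # Normalize words before matching against the user's profanity list.
        words = {w.strip(".,!?;:\"'").lower() for line in cap.lines for w in line.split()}
        flagged = sorted(words & spec.banned_words)
        if flagged:
            issues.append(f"#{number}: unacceptable word(s): {', '.join(flagged)}")
    return issues


captions = [
    Caption(0.0, 0.5, ["Hi."]),
    Caption(1.0, 9.0, ["One", "two", "three"]),
    Caption(10.0, 12.0, ["Well, darn it!"]),
]
for issue in check_compliance(captions, DeliverySpec(banned_words={"darn"})):
    print(issue)
```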

Caption/Subtitle Editing

So far, we have discussed only the caption creation scenario. Often, however, an existing caption file needs to be repurposed because the audio-visual content has been edited. Such changes can include the addition or removal of video segments, changes to caption position based on customer guidelines, or frame rate changes. We have encountered many cases where customers tried to use the original captions with such edited content, which leads to many issues. Detecting and correcting these issues manually is time-consuming and resource-intensive. Since authoring tools do not usually provide automatic analysis, they can’t help detect such issues; the only way to use a caption authoring system here is to detect and correct the issues manually through its user interface.
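To see why reused captions break, consider the simplest edit: removing a segment of the program. Every caption after the cut must move earlier by the cut's length, captions inside it must be dropped, and captions straddling it must be trimmed. The sketch below does exactly that, assuming timestamps in seconds and a single cut; the names are hypothetical, and other edits (inserted segments, frame rate conversion, repositioning) would each need their own handling.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Caption:
    start: float  # seconds
    end: float    # seconds
    text: str


def remove_segment(captions: List[Caption], cut_start: float, cut_end: float) -> List[Caption]:
    """Retime captions after the program segment [cut_start, cut_end) has been removed."""
    if cut_end <= cut_start:
        raise ValueError("cut_end must be greater than cut_start")
    cut = cut_end - cut_start
    retimed: List[Caption] = []
    for cap in captions:
        if cap.end <= cut_start:
            retimed.append(cap)  # entirely before the cut: unchanged
        elif cap.start >= cut_end:
            # entirely after the cut: move earlier by the cut length
            retimed.append(replace(cap, start=cap.start - cut, end=cap.end - cut))
        else:
            # overlaps the cut: keep only the parts outside it, joined at cut_start
            new_start = min(cap.start, cut_start)
            new_end = cap.end - cut if cap.end > cut_end else cut_start
            if new_end > new_start:
                retimed.append(replace(cap, start=new_start, end=new_end))
            # a caption lying wholly inside the cut ends up empty and is dropped
    return sorted(retimed, key=lambda c: c.start)


captions = [
    Caption(1.0, 3.0, "A"),    # before the cut: stays at 1.0-3.0
    Caption(11.0, 13.0, "B"),  # inside the cut: dropped
    Caption(18.0, 22.0, "D"),  # straddles the end of the cut: becomes 10.0-12.0
    Caption(21.0, 24.0, "C"),  # after the cut: becomes 11.0-14.0
]
for cap in remove_segment(captions, 10.0, 20.0):
    print(cap)
```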

Any compromise in this manual process will lead to missed issues in the content delivered to the customer or content owner. This effectively means multiple iterations before the captions are accepted by the customer, causing further delays and affecting customer satisfaction.

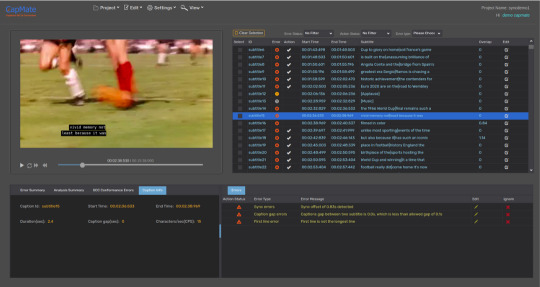

This is where caption QC tools come in. Caption QC systems address these issues head-on by performing auto-detection (and, in the case of advanced systems like CapMate, auto-correction) of a wide range of caption issues. With configurable QC templates, you can set up the checks you need, define the acceptable thresholds, and let the system do its job. Since the aim of such systems is analysis, the entire interface is designed to make analysis and spotting quick and efficient. You only need to act on the issues reported by the caption QC system. An intelligent caption QC system such as CapMate also automatically corrects many of the issues found and provides a rich review/editing tool, with which you can easily browse through the reported issues and make the appropriate manual corrections efficiently. Operators no longer need to watch the entire content.

Not using caption QC tools means that the responsibility for detecting and correcting all caption issues lies with you, which is time-consuming and resource-intensive, not to mention error-prone.

While the concept of ‘Automated Captions QC & Correction’ is relatively new, adopting such a system can lead to significant business benefits. Our customers who have adopted CapMate in their workflow are benefiting from efficiencies in their caption QC operations, thanks to the insights the tool provides along with its auto-correction abilities.

In conclusion, Caption QC and Caption Authoring tools serve different and complementary purposes in the caption workflow, and each does its respective job in an excellent manner. Caption QC tools are not intended for caption authoring, and Caption Authoring tools are neither well-suited nor intended for an efficient caption QC process. Using both tools judiciously in a workflow can lead to higher-quality caption deliveries and more efficient use of experienced QC operators.

About CapMate™

CapMate™ is a Cloud Native SaaS service for Captions/Subtitles QC and Correction. Whether you are a Captioning service provider, OTT service provider, Broadcaster or a Captioning platform, CapMate can significantly improve your workflow efficiency with its automatic analysis, rich review, spotting, and correction capabilities. Once completed, you can export the finished captions for direct use in production.

Get in touch with us today and we would love to discuss with you how we can help you solve your content QC challenges efficiently!

This content first appeared here on: https://www.veneratech.com/caption-subtitle-qc-vs-authoring/


CapMate 101 – Caption/Subtitle Files Verification and Correction Solution

Many of our customers had been telling us that the process of validating and correcting closed caption files is tedious, time-consuming and costly, and that they needed an innovative QC solution for caption and subtitle files, similar to what we have done for Audio/Video QC. We took that request to heart and introduced CapMate, the first comprehensive cloud-native caption QC software that provides verification and correction of caption and subtitle files.

In fact, CapMate is so innovative and bleeding-edge that many are not even aware that such a solution category exists! Of course, there is a large selection of capable programs in the ‘captioning’ category that allow for the creation of caption and subtitle files. However, until CapMate came around, there was NO solution to address the dire need for innovative automated caption QC software that could find caption-related issues, much less fix them! Before CapMate, caption verification and correction was a painfully slow, manual, and error-prone process.

And so starting with this blog post and following up with a series of short blogs, I would like to introduce you to this new category of software and tell you more about CapMate!

Let me start by giving you the highlights! CapMate:

Is a cloud-native solution that can work with your local content or content stored in the AWS cloud

Has been in heavy production use for over a year and so it already is a robust and proven solution

Helps drastically reduce the amount of time needed to verify and fix caption files, improving operational efficiency

Supports all the major caption formats such as SCC, SRT, IMSC, EBU-STL, and many more

Has usage-based pricing (monthly/annual/ad-hoc) so you only pay for what you use

Can detect the most common and difficult caption issues, like Sync, Text Overlap, missing caption, and many more

Can also correct these issues, in most cases automatically, and allow you to generate a new clean caption file

And so much more…

You can see a short 1-min clip highlighting CapMate’s features here.

Be on the lookout for CapMate 102, the next blog in this series, where I will write about one of CapMate’s key features: its ability to accurately detect and correct caption sync issues! Whenever you think CapMate may be the subtitle QC software solution you have been looking for, contact us ([email protected]), and we will be happy to give you a live demo of CapMate and set you up with a FREE trial account!

This content first appeared here on: https://www.veneratech.com/capmate-101-solution-for-verification-and-correction-of-caption-subtitle-files/


Understanding GOP – What is “Group Of Pictures” and Why is it Important

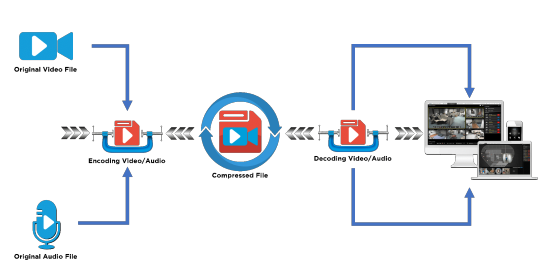

GOP or “Group of Pictures” is a term that refers to the video structure representing how digital video is grouped. But before we get into understanding GOP, let’s start with the basics of the video structure. When a video is encoded to be viewed on television or by any streaming platform, it’s important that it is compressed. In order to more easily transmit digital media, video compression is used to turn large raw video files into smaller video files that can transmit more easily over limited bandwidth. Video compression works by locating and reducing redundancies within an image or a sequence of images. A video is composed of a sequence of frames displayed at a given frequency. In most common video content, each frame is very similar to those that precede and follow it. Though there might be lots of movement in the subject of the content, the background and a large portion of the image is usually the same or very similar from frame to frame.

Figure 1: Encoding video for data transmission uses data compression technology (https://lensec.com/about/news/newsletter/lpn-03-20/video-compression-flowchart/)

Video compression takes advantage of this by sending only some frames in full (known as intra-coded frames, or I-frames), along with the differences between the I-frame and the subsequent frames. The decoder then uses the I-frame plus these differences to reconstruct the original frames. This method of compression is known as temporal compression because it exploits the fact that the information in a video changes slowly over time. A second type of compression, known as spatial compression, is used to compress the I-frames themselves by finding and eliminating redundancies within the same image.
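A deliberately simplified sketch of temporal compression, assuming tiny frames represented as lists of pixel values: the first frame is kept whole as the I-frame and each later frame is stored only as the pixels that differ from it. Real codecs predict blocks with motion compensation from previously decoded frames and quantize the result (so they are lossy), which this lossless toy does not attempt. The function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Frame = List[int]


def encode(frames: Sequence[Frame]) -> Tuple[Frame, List[Dict[int, int]]]:
    """Keep the first frame whole (the I-frame); store every later frame as the
    pixels that differ from it, as {pixel_index: new_value}."""
    key = list(frames[0])
    deltas = [
        {i: v for i, (k, v) in enumerate(zip(key, frame)) if v != k}
        for frame in frames[1:]
    ]
    return key, deltas


def decode(key: Frame, deltas: List[Dict[int, int]]) -> List[Frame]:
    """Rebuild every frame by applying its differences to a copy of the I-frame."""
    frames = [list(key)]
    for delta in deltas:
        frame = list(key)
        for i, v in delta.items():
            frame[i] = v
        frames.append(frame)
    return frames


# A tiny 8-pixel "video": a static background with one moving bright pixel.
video = [
    [10, 10, 10, 10, 10, 10, 10, 10],
    [10, 255, 10, 10, 10, 10, 10, 10],
    [10, 10, 255, 10, 10, 10, 10, 10],
]
key, deltas = encode(video)
print(deltas)  # [{1: 255}, {2: 255}]
```

Here the three 8-pixel frames (24 values) are carried as one full frame plus two single-pixel differences, and `decode(key, deltas)` returns the original frames.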

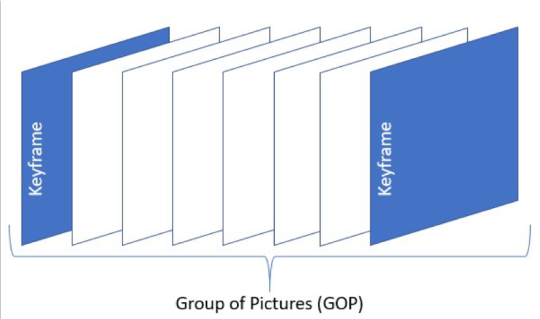

This brings us back to the concept of Group of Pictures. Put simply, a GOP is the run of frames from one I-frame up to the next, and its length is the distance between two I-frames, measured in frames. I-frames are sometimes referred to as “keyframes” because they are the key that the other types of frames are structured around. Figure 2 below shows a simple representation of a single GOP. As you can see, it begins with the keyframe (blue), and the white frames contain the information used to create the appearance of motion relative to the keyframe.
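Because a GOP starts at each I-frame, GOP lengths can be read directly off a sequence of frame types. A minimal sketch, assuming the frame types are already available in display order as a string such as `IBBPBBP...` (for example from a stream analyzer); the function name is hypothetical.

```python
from typing import List


def split_gops(frame_types: str) -> List[str]:
    """Split a display-order frame-type string into GOPs, each starting at an I-frame."""
    if not frame_types.startswith("I"):
        raise ValueError("expected the sequence to start with an I-frame")
    gops: List[str] = []
    start = 0
    for i in range(1, len(frame_types)):
        if frame_types[i] == "I":  # a new I-frame closes the current GOP
            gops.append(frame_types[start:i])
            start = i
    gops.append(frame_types[start:])  # the final GOP runs to the end
    return gops


sequence = "IBBPBBPBBPBB" + "IBBPBBPBBPBB" + "IBBP"
gops = split_gops(sequence)
print([len(g) for g in gops])  # [12, 12, 4]
```

The last GOP is often shorter simply because the file ends, which is worth remembering when checking GOP lengths.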

Figure 2: GOP with Keyframes (https://aws.amazon.com/blogs/media/part-1-back-to-basics-gops-explained/)

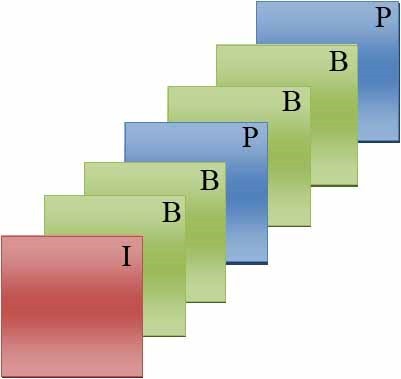

Let’s look at an example: if you’re watching a compressed video at 30 frames per second, you’re not really seeing 30 full pictures every second. Instead, you’re seeing a series of GOPs similar to the one pictured above. Depending on the codec and encoder settings, GOPs can be very long or very short. Within a typical GOP, there are three types of frames: I-frames, P-frames, and B-frames. Every GOP begins with an I-frame, which contains the complete image. This is followed by P-frames (predicted frames) and B-frames (bi-directionally predicted frames). P-frames reference past frames, and B-frames reference both past and future frames. P-frames and B-frames are incomplete images that reference the I-frame and surrounding frames to fill in the blanks: they contain either bits of new visual information that replace parts of the previous frame or instructions on how to move bits of the previous frame around to create the appearance of motion. By processing and compressing GOPs instead of individual frames, file sizes and stream bitrates can be significantly reduced. Figure 3 below represents these different types of frames arranged into one Group of Pictures.
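The reference pattern described above can be expressed as a small function. This assumes a simplified, MPEG-2-style model in which only I- and P-frames serve as references, P-frames predict from the nearest earlier anchor, and B-frames from the nearest earlier and later anchors; newer codecs such as H.264 allow more flexible referencing. The function name is hypothetical.

```python
from typing import Dict, List


def references(gop_frames: str) -> Dict[int, List[int]]:
    """Map each display-order frame index to the anchor frames (I or P) it predicts from."""
    anchors = [i for i, t in enumerate(gop_frames) if t in "IP"]
    refs: Dict[int, List[int]] = {}
    for i, t in enumerate(gop_frames):
        earlier = [a for a in anchors if a < i]
        later = [a for a in anchors if a > i]
        if t == "I":
            refs[i] = []                         # self-contained image
        elif t == "P":
            refs[i] = earlier[-1:]               # nearest past anchor
        elif t == "B":
            refs[i] = earlier[-1:] + later[:1]   # nearest past and future anchors
        else:
            raise ValueError(f"unknown frame type {t!r}")
    return refs


print(references("IBBPBBP"))
# {0: [], 1: [0, 3], 2: [0, 3], 3: [0], 4: [3, 6], 5: [3, 6], 6: [3]}
```

One consequence is visible in the output: frames 1 and 2 cannot be decoded until frame 3 has been decoded, which is why encoders transmit frames in a different order from the one in which they are displayed.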

Figure 3: GOP with B-Frames and P-Frames (https://fr.wikipedia.org/wiki/Fichier:Group_of_pictures_illustration.jpg)

Optimizing your GOP Length

The length of your GOP has important implications for video quality. A shorter GOP can preserve more visual information, especially in high-motion video, but it is less efficient because it needs a higher bitrate to look good. A longer GOP is useful in low-motion video where very little in the frame changes; it removes more redundancy and can therefore look better at lower bitrates. Longer GOPs are better suited to maximum compression at a given bandwidth, while shorter GOPs are better suited to scene changes, rewinding (seeking), and resilience to media defects.
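The trade-off is often stated in seconds rather than frames. Assuming a constant frame rate, the two are related as follows, where $N$ (GOP length in frames) and $f$ (frame rate in frames per second) are symbols introduced only for this illustration:

$$
T_{\mathrm{GOP}} = \frac{N}{f}, \qquad \text{e.g. } N = 60,\; f = 30\ \text{fps} \;\Rightarrow\; T_{\mathrm{GOP}} = \frac{60}{30} = 2\ \text{s}
$$

The same 2-second GOP at 25 fps would therefore be $N = 2 \times 25 = 50$ frames long.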

Open and Closed

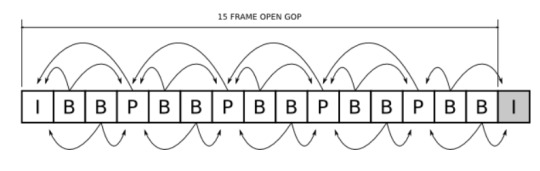

GOPs can be divided into two categories: ‘Open’ and ‘Closed’. Open GOPs are those where frames in one GOP can refer to frames in other GOPs for redundant blocks. You see this in Figure 4 below where the last two B-frames refer to the I-frame in the next GOP for redundancy.

Figure 4: Open GOP has frames that refer to frames outside the GOP for redundancies (http://tiliam.com/Blog/2015/07/06/effective-use-long-gop-video-codecs/)

On the other hand, closed GOPs are those in which frames in one GOP can only refer to frames within the same GOP for redundant blocks. An IDR frame is a special type of I-frame that specifies that no frame after the IDR frame can reference any frame before it. Through the use of these IDR frames, we form closed GOPs which can’t refer to frames outside the GOP. The IDR frame acts as a buffer between GOPs, closing them off to references from other GOPs. This can be seen in Figure 5 below where a closed GOP is shown with an IDR frame.
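Under the same simplified display-order model used in Figure 4, where trailing B-frames borrow the next GOP's I-frame, open and closed GOPs can be told apart from the frame pattern alone. This is only an illustration: real streams signal closed GOPs explicitly (for example with IDR frames in H.264 or the closed-GOP flag in MPEG-2), and a QC tool would read that signalling rather than infer it from the pattern. The function name is hypothetical.

```python
from typing import List


def classify_gops(frame_types: str) -> List[str]:
    """Label each GOP 'open' or 'closed' under a simplified display-order model:
    a GOP is open when it ends in B-frames that must borrow the next GOP's I-frame."""
    starts = [i for i, t in enumerate(frame_types) if t == "I"]
    labels: List[str] = []
    for n, start in enumerate(starts):
        has_next = n + 1 < len(starts)
        end = starts[n + 1] if has_next else len(frame_types)
        gop = frame_types[start:end]
        labels.append("open" if has_next and gop.endswith("B") else "closed")
    return labels


print(classify_gops("IBBPBBPBB" + "IBBPBBP" + "IBBP"))  # ['open', 'closed', 'closed']
```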

Figure 5: A closed GOP can’t refer to frames outside the GOP for redundancies (http://tiliam.com/Blog/2015/07/06/effective-use-long-gop-video-codecs/)

All in all, GOP structure is an extremely useful concept in the world of digital media that allows us to properly compress video streams and significantly reduce stream bitrates while maintaining maximum quality for a variety of applications. Encoding using I-frames, P-frames, and B-frames is an integral part of video compression in the modern digital media world and understanding the correct GOP structure for your content is vital for proper quality control and providing the best viewing experience to the viewer.

GOP Verification Tools

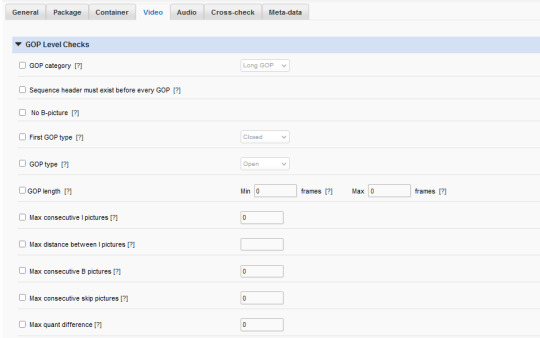

One integral step in typical media workflow processing is the use of QC software, such as Venera Technologies’ Pulsar (for on-premise QC) and Quasar (for cloud-based QC). Pulsar & Quasar offer a variety of GOP-level checks and are the most effective way to prevent GOP-related problems in many types of content. For example, in low-motion video a larger number of B-frames can look fine and deliver great compression ratios, but in faster-motion video they consume more processing power to decode. Using Pulsar/Quasar, the user can verify that the media has the proper GOP structure and therefore delivers the best visual quality to the viewer.

There are many options for GOP verification within Pulsar/Quasar. Since proper validation of GOP structure is an important aspect of quality control in digital media, Pulsar/Quasar make it easy to verify GOP length, verify the presence or absence of different types of frames, and check for a multitude of GOP compliance aspects. These checks can be automated and the process is as easy as customizing a template for the GOP qualities you wish to maintain and scanning files in any folder to ensure compliance.

One important check within Pulsar/Quasar is the ability to specify a range of GOP lengths (in frames) and verify that the content falls within that range. GOP length has large implications for the file size and bandwidth required for your digital media. Another is GOP Category verification, which allows you to specify long GOPs or I-frame-only GOPs. Pulsar/Quasar also offer checks for the presence or absence of B-frames, maximum consecutive I-frames, distance between I-frames, and maximum consecutive B-frames. Furthermore, both solutions allow you to verify Closed or Open GOPs.
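To illustrate what range and run-length checks like these involve, here is a minimal sketch that checks GOP lengths against a range and limits consecutive B-frames. It is not Pulsar/Quasar's implementation; it assumes a display-order frame-type string, and the parameter names (including `ignore_last`, which skips the final GOP that is often truncated at the end of a file) are hypothetical.

```python
import re
from typing import List


def gop_lengths(frame_types: str) -> List[int]:
    """Lengths of the GOPs in a display-order frame-type string; each GOP starts at an I-frame."""
    starts = [i for i, t in enumerate(frame_types) if t == "I"]
    bounds = starts + [len(frame_types)]
    return [bounds[k + 1] - bounds[k] for k in range(len(starts))]


def longest_run(frame_types: str, kind: str) -> int:
    """Longest run of consecutive frames of one type, e.g. 'B'."""
    runs = re.findall(f"{re.escape(kind)}+", frame_types)
    return max((len(run) for run in runs), default=0)


def check_gop(
    frame_types: str,
    min_len: int,
    max_len: int,
    max_b_run: int,
    allow_b: bool = True,
    ignore_last: bool = True,
) -> List[str]:
    issues: List[str] = []
    lengths = gop_lengths(frame_types)
    checked = lengths[:-1] if ignore_last and len(lengths) > 1 else lengths
    for n, length in enumerate(checked):
        if not min_len <= length <= max_len:
            issues.append(f"GOP {n}: length {length} outside {min_len}-{max_len}")
    b_run = longest_run(frame_types, "B")
    if not allow_b and b_run > 0:
        issues.append("B-frames present")
    elif b_run > max_b_run:
        issues.append(f"{b_run} consecutive B-frames (max {max_b_run})")
    return issues


stream = "IBBPBBPBBPBB" + "IBBBPBBP" + "IBBP"
for issue in check_gop(stream, min_len=12, max_len=15, max_b_run=2):
    print(issue)
# GOP 1: length 8 outside 12-15
# 3 consecutive B-frames (max 2)
```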

Pulsar/Quasar support a wide range of media formats and offer comprehensive quality checks, including extensive GOP related compliance checks. They are solutions that can dramatically increase your QC efficiency when used effectively. They can be integrated into your workflow in a multitude of locations and template customization allows you to tailor them to your specific needs.

For additional information about Pulsar, please visit https://www.veneratech.com/pulsar

For additional information about Quasar, please visit https://www.veneratech.com/Quasar

This content originally appeared here on: https://www.veneratech.com/understanding-gop-what-is-group-of-pictures-and-why-is-it-important/


Dialog-gated Audio loudness and why it is important

Being a fan of watching video content on a variety of devices, I sometimes get into situations where I can’t make out what is being said even though the overall audio levels are fine. Have you experienced this? I am sure it is annoying enough to make you wonder how the content was approved for publishing with such obvious issues.

Many of you may be surprised that such content can pass the loudness criteria commonly used in typical audio QC testing and, if not manually reviewed, can indeed be made available to consumers. This is because a more sophisticated level of loudness testing, called ‘dialog-gated loudness’, must be used to verify that the portions of the content with dialog have the proper loudness levels. Without dialog-gated loudness verification, content that passes general loudness criteria may still have dialog that is not clearly audible to the viewer. This can negatively impact content providers, who are continuously vying to gain and retain consumers by maintaining the high quality of their content, both technically and editorially. Nowadays, OTT service providers such as Netflix, among others, require dialog-gated loudness compliance.

In this article, we will discuss how such issues can be detected by using Dialog-gating and how our QC products can help content providers achieve this in a fully automated manner.

Dialog Gating

Gating is the process of passing only the audio that satisfies certain criteria into the loudness measurement, while excluding unwanted audio. The gating criterion may be an absolute audio level, a relative audio level, or the audio type, such as speech or non-speech.

Dialog-gating passes only the audio segments that contain speech. All non-speech segments are rejected and excluded from the loudness measurement.

Level-gating passes only audio above a particular level. There are two common level-gating techniques:

Absolute Gate. All audio segments below a particular level (typically -70 LKFS/LUFS) are rejected.

Relative Gate. All audio segments more than a particular amount (typically 10 LU) below the loudness measured after the absolute gate are rejected.
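The two level gates can be sketched in a few lines. This follows the gating described in ITU-R BS.1770 but assumes the per-block loudness values (400 ms blocks, already K-weighted and summed across channels) have been computed elsewhere; the function names are hypothetical. Note that averaging happens in the power domain, not by averaging dB values.

```python
import math
from typing import Sequence

ABSOLUTE_GATE_LUFS = -70.0
RELATIVE_GATE_LU = 10.0


def block_power(loudness: float) -> float:
    """Invert l = -0.691 + 10*log10(z) to recover a block's weighted mean square z."""
    return 10 ** ((loudness + 0.691) / 10)


def power_to_loudness(power: float) -> float:
    return -0.691 + 10 * math.log10(power)


def mean_loudness(blocks: Sequence[float]) -> float:
    """Average in the power domain, then convert back to LUFS."""
    return power_to_loudness(sum(block_power(b) for b in blocks) / len(blocks))


def level_gated_loudness(blocks: Sequence[float]) -> float:
    # Absolute gate: keep only blocks above -70 LUFS (drops silence and room tone).
    above_abs = [b for b in blocks if b > ABSOLUTE_GATE_LUFS]
    if not above_abs:
        return float("-inf")
    # Relative gate: keep only blocks above (absolute-gated loudness - 10 LU).
    relative_gate = mean_loudness(above_abs) - RELATIVE_GATE_LU
    return mean_loudness([b for b in above_abs if b > relative_gate])


# The -80 blocks fall to the absolute gate; the -40 blocks fall to the
# relative gate, which lands near -34.7 LUFS for this input.
blocks = [-23.0] * 10 + [-40.0] * 5 + [-80.0] * 5
print(round(level_gated_loudness(blocks), 1))  # -23.0
```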

Let’s consider the case of a 5.1 audio stream. The channels in such a stream are L, R, C, Ls, Rs, and LFE. Normally, speech is carried in the Center (C) channel, but sometimes it may also be carried in the Left (L) and Right (R) channels. For this reason, only L, R, and C are considered when calculating dialog-gated loudness, and all other channels are ignored.

In real workflows requiring dialog-gated loudness measurement, an adaptive gating approach is taken: dialog-gated measurement is performed if there is sufficient speech content in the audio, and if there is not, level gating is used to perform the loudness measurement instead.

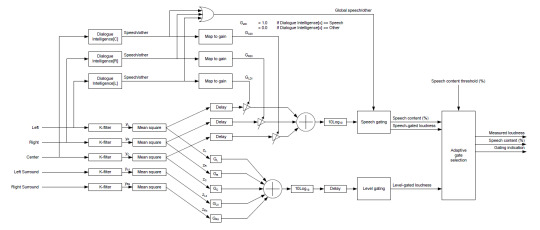

The diagram below shows such a workflow for a 5.1 audio stream:

The upper half of this diagram takes the audio content from L, R and C channels for dialog-gated loudness measurement. The content is passed through “Dialogue Intelligence” to determine if the audio contains speech. If the audio has speech, it is assigned a gain of one, else zero. The resultant channels with gain are fed to the dialog gating process. In this case, only the audio segments containing speech will pass through along with corresponding loudness level and amount of speech content. Non-speech audio segments will be dropped. Adaptive gate selection decides whether to use dialog gated loudness or level gated loudness depending on the overall amount of speech content.
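The adaptive selection in the diagram can be sketched as follows, assuming per-block loudness for the L, R and C channels and a per-block speech flag from some speech detector are already available (the detector itself is out of scope). The `min_speech_fraction` switch-over threshold, the decision to apply the -70 LUFS absolute gate to speech blocks, and all names are hypothetical choices for illustration, not the parameters used by Dialogue Intelligence or by Venera's products.

```python
import math
from typing import Sequence, Tuple

ABSOLUTE_GATE_LUFS = -70.0
RELATIVE_GATE_LU = 10.0


def _power(loudness: float) -> float:
    return 10 ** ((loudness + 0.691) / 10)


def _loudness(power: float) -> float:
    return -0.691 + 10 * math.log10(power)


def _mean(blocks: Sequence[float]) -> float:
    """Power-domain average of block loudness values, returned in LUFS."""
    return _loudness(sum(_power(b) for b in blocks) / len(blocks))


def level_gated(blocks: Sequence[float]) -> float:
    """Absolute (-70 LUFS) then relative (-10 LU) gate, as in ITU-R BS.1770."""
    above_abs = [b for b in blocks if b > ABSOLUTE_GATE_LUFS]
    if not above_abs:
        return float("-inf")
    gate = _mean(above_abs) - RELATIVE_GATE_LU
    return _mean([b for b in above_abs if b > gate])


def adaptive_loudness(
    blocks: Sequence[float],           # per-block loudness of L, R, C (LUFS)
    is_speech: Sequence[bool],         # per-block flag from a speech detector
    min_speech_fraction: float = 0.1,  # hypothetical switch-over threshold
) -> Tuple[str, float]:
    if len(blocks) != len(is_speech):
        raise ValueError("need one speech flag per block")
    if not blocks:
        raise ValueError("no blocks to measure")
    speech = [b for b, s in zip(blocks, is_speech) if s and b > ABSOLUTE_GATE_LUFS]
    if speech and len(speech) / len(blocks) >= min_speech_fraction:
        return "dialog-gated", _mean(speech)
    return "level-gated", level_gated(blocks)


blocks = [-31.0] * 4 + [-20.0] * 6  # quiet dialog, loud music and effects
speech = [True] * 4 + [False] * 6
method, value = adaptive_loudness(blocks, speech)
print(method, round(value, 1))      # dialog-gated -31.0
print(round(level_gated(blocks), 1))  # -22.0
```

The example numbers are made up, but they show the problem this article started with: the level-gated result is about -22 LUFS, close to common loudness targets, while the dialog itself sits about 9 LU lower.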

This measurement provides a true picture of actual speech levels in audio and content providers can be sure that the audio experience of their audience is preserved.

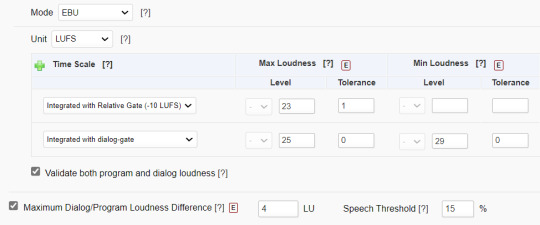

Venera’s Automated QC tools, Pulsar™ & Quasar®, allow automated dialog-gated loudness measurement. The following options, shown for EBU mode (the popular standard in Europe), are available in both solutions:

These options are available for ATSC (popular standard in North America), OP-59 (popular standard in Australia), and ARIB TR-B32 (popular standard in Japan) modes as well.

In addition to measuring dialog-gated loudness, users can also measure the difference between the dialog-gated and level-gated loudness levels. This gives them a practical perspective on the audio composition of the content they provide to their consumers.

Pulsar™ also allows users to normalize audio levels automatically, eliminating manual intervention in making the content compliant.
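Loudness is measured on a logarithmic scale, so applying a uniform gain of G dB shifts the integrated loudness by approximately G LU. Blocks near the -70 LUFS absolute gate can make the shift differ slightly. The required correction is therefore the target minus the measured value. Below is a minimal sketch, not Pulsar's normalization algorithm, with hypothetical function names. The default of -23 LUFS is the EBU R 128 target; ATSC A/85 uses -24 LKFS. The sketch ignores true-peak limits, which a real normalizer must also respect whenever the gain is positive.

```python
def normalization_gain(measured_lufs, target_lufs=-23.0):
    """Return (gain_db, linear_gain) that moves measured loudness onto the target."""
    gain_db = target_lufs - measured_lufs
    # Amplitude ratio corresponding to gain_db decibels.
    return gain_db, 10 ** (gain_db / 20)


def apply_gain(samples, linear_gain):
    """Scale every sample by the same linear factor (no peak limiting)."""
    return [sample * linear_gain for sample in samples]
```

For instance, a programme measured at -18 LUFS needs -5 dB (a linear factor of about 0.562) to reach -23 LUFS.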

In addition to loudness measurement, Venera’s QC tools, Quasar® & Pulsar™, offer a wide range of audio and video measurements that help users automate otherwise tedious content QC operations.

Quasar® is a native-cloud content QC service that auto-scales to process hundreds of files simultaneously. It offers a wide range of content security capabilities, so users can process their content with peace of mind. Quasar® can be integrated using a REST API for highly automated workflows. Visit www.veneratech.com/quasar to read more about Quasar® and request a free trial.

Pulsar™ is an on-premise automated file QC system that scales by clustering multiple Verification Units in the user’s datacenter or office. Pulsar™ is the fastest QC system on the market, running at up to 6x faster than real time for HD content. Pulsar™ can be integrated using an XML/SOAP API for highly automated workflows. Visit www.veneratech.com/pulsar to read more about Pulsar™ and request a free trial.

Get in touch with us today and we would be happy to discuss with you how we can help solve your content QC challenges efficiently!

This content first appeared here on: https://www.veneratech.com/dialog-gated-audio-loudness-and-why-it-is-important/


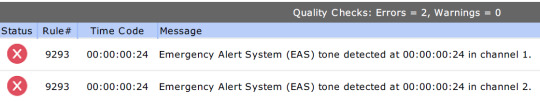

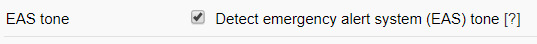

Automated detection of Mosquito Tone in media content

Mosquito Tone! Does this give the impression that it has something to do with mosquitoes?

Well, it does relate to high-pitched tones with a buzzing sound similar to the noise a mosquito makes. However, it is not related in any way to ultrasonic mosquito-repellent devices.

Humans can typically hear sounds with frequencies between 20 Hz and 20 kHz.

Mosquito tones are high-frequency tones, normally above 17 kHz.

These tones are inaudible to most adults but can be heard by teenagers. Yes, you read that correctly! Teenagers can hear mosquito tones, but most adults cannot. That is because people normally lose some of their hearing as they age, and the higher frequencies go first. With age, the audible frequency range continues to narrow, with losses at the high-frequency end. The actual audible range can also vary between individuals of similar age.

Mosquito tones have both legitimate and undesirable uses in various applications. However, their presence is generally not acceptable in media content delivered by content delivery services. Mosquito tones can severely degrade the experience of younger audiences. The hearing of infants and toddlers can be severely affected, because adults, who cannot hear the tones, may unknowingly expose children to them for extended periods of time.

It is therefore important for content providers to ensure mosquito tones are not present in the delivered content. This is where the challenge comes in.

Most QC operators working with content providers are adults, so they will inevitably miss a mosquito tone even if it is present in the content. A full manual QC of the audio is therefore not sufficient to detect it. Missing such signals can prove very expensive for content providers, both through increased churn and through legal liability, since the tones could be harmful to public health. For delivery mediums like television, the negative effect could be very widespread because of the inherent broadcast nature of the medium.

Since manual QC clearly cannot detect these tones, a QC tool that can reliably detect mosquito tones is necessary.

Venera’s QC tools, Quasar & Pulsar, perform audio spectrum analysis and can reliably report the presence of mosquito tones in the content. Users can define the frequency range for these tones, and all mosquito tones in that range will be reported for the user’s review. Because detection is fully automated, workflow efficiency improves significantly, and content providers are protected from downstream claims.
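A minimal sketch of spectrum-based detection using NumPy follows. It windows a block of mono samples, takes the FFT, and reports the strongest peak inside a user-defined frequency band. The default band of 17 kHz to 20 kHz follows the figures above. The -60 dBFS threshold and the function name are hypothetical, and this is not Venera's implementation. A production detector would analyse short, overlapping blocks across the whole file and report the timecodes of persistent tones.

```python
import numpy as np


def strongest_tone_in_band(samples, sample_rate, f_low=17000.0, f_high=20000.0,
                           threshold_dbfs=-60.0):
    """Return (frequency_hz, level_dbfs) of the strongest spectral bin inside
    [f_low, f_high], or None if nothing in that band exceeds threshold_dbfs."""
    x = np.asarray(samples, dtype=float)
    window = np.hanning(len(x))
    # Scale so that a full-scale sine centred on an FFT bin reads about 0 dBFS.
    magnitude = np.abs(np.fft.rfft(x * window)) / (window.sum() / 2)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / sample_rate)
    level_dbfs = 20 * np.log10(magnitude + 1e-12)  # small floor avoids log10(0)
    band = np.nonzero((freqs >= f_low) & (freqs <= f_high))[0]
    if band.size == 0:
        return None
    peak = band[np.argmax(level_dbfs[band])]
    if level_dbfs[peak] < threshold_dbfs:
        return None
    return float(freqs[peak]), float(level_dbfs[peak])
```

Consider one second of 48 kHz audio containing a 1 kHz programme tone plus a faint 18 kHz tone at -40 dBFS. The function reports a peak near 18000 Hz at about -40 dBFS. Without the 18 kHz component, it returns None.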

Visit www.veneratech.com/pulsar to read more about Pulsar™ and request a free trial.

Visit www.veneratech.com/quasar to read more about Quasar® and request a free trial.

This content first appeared here on: https://www.veneratech.com/automated-detection-mosquito-tone-in-media-content/


Automated Detection of Slates in Media Content

What is a Slate?

A Slate is a graphic element usually placed before the essence in the mezzanine media files exchanged between organizations. It contains important information about the media in the file, usually including the following:

Program/Episode title

Material identification number

Episode number

Season number

Client/Production company brand

Content version

Aspect ratio

Resolution

Frame rate

Asset type: Texted master, Texted master with Textless tail, or Textless master

Textless material timecode in case of Textless tail

Audio channel layout

Audio Language

Duration

Clock

The actual Slate metadata can vary by content type (such as advertisement, movie or TV series) and by brand. Since the primary purpose of the Slate is to describe the content, the content properties should exactly match the Slate metadata. This is especially relevant for the audio-video technical properties listed above, and operators need to verify it for every media file processed.
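Once the Slate text has been read (for example by OCR) and the file's technical properties have been probed, matching the two is a field-by-field comparison. The minimal sketch below assumes both sides are already normalized into dictionaries of strings. The field names and the function name are hypothetical.

```python
# Hypothetical field names for the technical properties listed above.
SLATE_KEYS = ("resolution", "frame_rate", "aspect_ratio", "audio_channel_layout", "duration")


def slate_mismatches(slate, probed, keys=SLATE_KEYS):
    """Compare Slate metadata with properties probed from the media file.

    Returns {key: (slate_value, probed_value)} for every field that is missing
    from the Slate or disagrees with the file; an empty dict means a match.
    """
    issues = {}
    for key in keys:
        slate_value = slate.get(key)
        probed_value = probed.get(key)
        if slate_value is None or slate_value != probed_value:
            issues[key] = (slate_value, probed_value)
    return issues
```

A Slate that says "23.976" for a file probed at 25 fps yields `{"frame_rate": ("23.976", "25")}`, which covers both the "missing information" and "incorrect information" rejection cases.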

The Slate is displayed for a predefined duration and is usually followed by a black segment before the actual essence starts. Other elements, such as color bars/tone and black frames, may be present before the Slate. An example of a content structure is shown below. Content structures vary across organizations.

Presence of Slate

Many major media companies worldwide now require Slates to be present at specific locations with specific types of metadata. The following scenarios can lead to content rejection by customers:

Slate not present at the desired location

Missing information in Slate

Incorrect information in Slate

Therefore, the first requirement for QC operators is to validate that the Slate is present at the precise location and contains the desired metadata. To do this manually, the operator has to seek to the desired timecode and check for the necessary Slates, which becomes inefficient at high content volumes.

Venera’s QC solutions, Pulsar & Quasar, allow automated detection and validation of Slate presence according to the user’s specifications. Users can specify the precise time-in and time-out locations of the Slate, and an alert is raised if the Slate is not present there. Operators then no longer need to examine every file manually. They only need to review the files flagged by Venera’s automated QC systems. This can substantially improve workflow efficiency and allow better use of manual resources.
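A detector (hypothetical here) first reports the time intervals in which a Slate is visible. Checking those intervals against the user's time-in/time-out specification is then a simple comparison. A minimal sketch follows. The function name and the 0.5 s tolerance are assumed values.

```python
def slate_presence_alerts(detected, expected_in, expected_out, tolerance=0.5):
    """detected: list of (start_s, end_s) intervals where a Slate was found.

    Returns an empty list if some detected interval matches the expected
    time-in/time-out within the tolerance, otherwise a single alert string.
    """
    for start, end in detected:
        if abs(start - expected_in) <= tolerance and abs(end - expected_out) <= tolerance:
            return []
    return [f"Slate not found at {expected_in:.2f}s to {expected_out:.2f}s"]
```

Only files that produce a non-empty alert list would then need an operator's attention.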

Visit www.veneratech.com/pulsar to read more about Pulsar™ and request a free trial.

Visit www.veneratech.com/quasar to read more about Quasar® and request a free trial.

This content first appeared here on: https://www.veneratech.com/automated-detection-of-slates-in-media-content/


What is Captions Quality and how to ensure it?

Closed Captions are a must-have for helping deaf and hard-of-hearing people comprehend and enjoy audio-visual content.

According to DCMP (Described and Captioned Media Program), there are more than 30 million Americans with some type of hearing loss. If extended to the worldwide population, this number will easily grow to a few hundred million. This is a large population that needs effective and high-quality Closed Captions to comprehend the media content.

And it is often stated that Captions should be of “high quality” to be effective for this population.

But how does one define this “high quality” for Captions?

According to DCMP, the following are key elements of Captioning Quality:

Errorless captions for each production.

Uniformity in style and presentation of all captioning features.

A complete textual representation of the audio, including speaker identification and non-speech information.

Captions are displayed with enough time to be read completely, are in synchronization with the audio, and are not obscured by (nor do they obscure) the visual content.

Equal access requires that the meaning and intention of the material are completely preserved.

Every caption service provider needs to ensure that they create “quality captions” meeting the above guidelines. Every content provider, whether a broadcaster or a streaming service provider, needs to ensure that they deliver high-quality captions to their viewers. Failing to meet these captioning quality standards can damage their brand, and people will be less inclined to sign up for, or continue with, their service.

Checking for all these parameters and correcting them can be resource-intensive, tedious and cost-prohibitive. Nowadays, the same content is delivered through a variety of mediums, which may use different audio-visual content versions due to specific editing, frame rate and other technical requirements. The captions must also be properly edited for each of these content versions. Due to the sheer content volume and the cost of caption/subtitle QC and correction for each content version, captioning service providers may choose not to perform full QC on all caption files, leading to low-quality and erroneous captions. It is therefore important to bring automation into the QC process so that QC itself becomes feasible and more manageable for everyone in the content production and delivery chain.

CapMate™, a native cloud captions verification & correction platform from Venera Technologies, allows users to automatically detect a variety of issues that affect quality. Some of these issues and their impact on quality include:

Captions-Audio sync: Readability issue

Detection of missing captions: Clarity issue

Captions overlaid on burnt-in text: Readability issue

Captions duration: Readability issue

Characters per line: Readability issue

Characters per second: Readability issue

Words per minute: Readability issue

The gap between captions: Readability issue

Number of caption lines: Readability issue

Spell Check: Accuracy issue

Detection of Profane/foul words: Compliance issue

Captions format compliance issues
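Most of the readability checks in the list above reduce to simple arithmetic on each caption cue. The minimal sketch below assumes cues are already parsed into start/end times in seconds and lines of text. The `Cue` type, the function names and every limit are hypothetical defaults. The limit of 32 characters per line echoes the CEA-608 row width, but delivery specifications set their own CPS, WPM and line limits. Conventions for counting characters (for example, whether spaces count) also differ between style guides.

```python
from dataclasses import dataclass, field


@dataclass
class Cue:
    start: float  # seconds
    end: float    # seconds
    lines: list = field(default_factory=list)  # caption text, one string per line


def cue_metrics(cue):
    duration = cue.end - cue.start
    chars = sum(len(line) for line in cue.lines)  # spaces and punctuation counted
    words = sum(len(line.split()) for line in cue.lines)
    return {
        "duration": duration,
        "cpl": max((len(line) for line in cue.lines), default=0),
        "cps": chars / duration if duration > 0 else float("inf"),
        "wpm": words * 60 / duration if duration > 0 else float("inf"),
        "lines": len(cue.lines),
    }


def check_cues(cues, max_cpl=32, max_cps=20.0, max_wpm=180.0, max_lines=2,
               min_duration=1.0):
    """Return (cue_index, issue, value) tuples for every limit that is exceeded."""
    issues = []
    for i, cue in enumerate(cues):
        m = cue_metrics(cue)
        if m["duration"] < min_duration:
            issues.append((i, "DURATION", m["duration"]))
        if m["cpl"] > max_cpl:
            issues.append((i, "CPL", m["cpl"]))
        if m["cps"] > max_cps:
            issues.append((i, "CPS", m["cps"]))
        if m["wpm"] > max_wpm:
            issues.append((i, "WPM", m["wpm"]))
        if m["lines"] > max_lines:
            issues.append((i, "LINES", m["lines"]))
    return issues
```

A two-second cue reading "Hello there," / "how are you?" has 24 characters and 5 words, giving 12 CPS and 150 WPM. That is within all of the default limits above.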

CapMate™ not only allows Automated QC of Closed Caption and Subtitle files but it also provides for automated correction of a wide range of issues with an option for manual review. CapMate™ comes equipped with a browser-based, rich viewer tool, that allows users to review the results in detail along with an audio-video preview. This viewer application also allows users to edit the captions. Once all the edits are done (automatically or manually), corrected caption/subtitle files can be exported in order to be used in the workflow.

Using CapMate™ can save numerous hours, leading to faster delivery times as well as reduced QC costs. Content providers who depend on closed captioning service providers can send detailed QC reports to their vendors, reducing review iterations as well as turnaround times.

With its usage-based monthly or annual subscription plans, as well as a unique Ad-hoc pricing plan, CapMate™ can fit every budget for organizations of any size, proving to be an indispensable tool in improving the quality of captions.

Read more about CapMate™ at www.veneratech.com/capmate. You can also request a free trial on the same page.

This content first appeared here on: https://www.veneratech.com/what-is-captions-quality-how-to-ensure-it/


Ref-Q™: Reference based Automated QC

A novel approach for automating the detection of differences between versions of media content.

Did you know that a major studio may end up creating 300-400 versions of a premium title for distribution? Well, that certainly happens, and often!

This happens because of the following scenarios:

Different content edits needed for different locations and customers

Different texted versions of the content for different languages

Multiple delivery formats

Both HDR/SDR versions to be delivered for various distribution channels

Validating all the content versions for editorial and quality accuracy can be daunting and will require numerous, expensive person-days in order to complete. Even after investing all that time, the process still remains error-prone as it is very tedious and subject to human error. This may lead to operators missing important differences, resulting in heavy costs for the content providers in terms of content reproduction as well as impact on their reputation.

Ref-Q™ is a new reference-based automated video comparison technology from Venera Technologies, available as a feature within the Quasar® native-cloud QC service. It can significantly reduce the overall cost of the validation process by producing predictable and accurate results much faster than manual review, while allowing your teams to focus on core business activities. Your team only needs to review the differences reported by Ref-Q™ and decide whether to accept or reject them. Ref-Q™ works 24×7 for you in an automated manner, freeing you and your team to spend time on activities that require human intervention.

Use cases

The following are common use cases where a detailed frame-by-frame comparison is needed:

1. Transcoding issues

Occasionally, the transcoding process introduces frame dropouts or significant degradation, such as black frames, colored frames or severe dropout artifacts. Because this may affect only a few frames, it can easily be missed during manual QC.

Ref-Q™ can automatically compare the source and transcoded versions of the content and report the difference segments.
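The general idea of frame-by-frame comparison can be sketched as follows. This is a generic illustration, not Ref-Q™'s actual algorithm, which is not described here. First, compute a per-frame difference score between the reference frame and the transcoded frame. Next, flag frames whose score exceeds a threshold. Finally, merge consecutive flagged frames into segments. The sketch assumes both versions are already decoded into aligned lists of same-size 8-bit NumPy arrays. The mean-absolute-difference metric, the threshold of 10 and the function name are hypothetical choices.

```python
import numpy as np


def difference_segments(ref_frames, test_frames, threshold=10.0):
    """Return inclusive (first_frame, last_frame) runs where the frames differ.

    ref_frames/test_frames: aligned sequences of uint8 arrays of equal shape.
    """
    flagged = []
    for ref, test in zip(ref_frames, test_frames):
        # Widen to int16 so the subtraction cannot wrap around at 0/255.
        mad = np.mean(np.abs(ref.astype(np.int16) - test.astype(np.int16)))
        flagged.append(mad > threshold)

    # Merge runs of consecutive flagged frames into segments.
    segments, start = [], None
    for index, is_different in enumerate(flagged):
        if is_different and start is None:
            start = index
        elif not is_different and start is not None:
            segments.append((start, index - 1))
            start = None
    if start is not None:
        segments.append((start, len(flagged) - 1))
    return segments
```

Real comparisons also have to handle temporal offsets between the versions and expected global differences (such as HDR versus SDR tone mapping). A plain pixel difference like this one does not address those.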

2. HDR/SDR versions

HDR capability is commonly available in television sets today, so it is common for content providers to deliver both HDR and SDR versions of the content. The HDR and SDR versions for a particular delivery must be structurally identical. Even minor structural differences between the HDR and SDR versions can lead to rejection of the file by the customer. In other words, the same texted version must be delivered to a particular country/customer in both HDR and SDR. This is very difficult to validate manually.

Ref-Q™ can perform an automated comparison of the HDR and SDR versions of the content and report any structural differences found between them.

3. Texted/Textless version comparison

In addition to the comparison for HDR/SDR versions of the same texted delivery, there is also a requirement to spot all texted segments within a content. Doing this manually is tedious, error-prone and costly.

Ref-Q™ allows you to compare the textless and texted versions of the content frame by frame, and it reports all the locations where text has been overlaid on the video.

As an output of the QC process, you get an XML/PDF report with the start/end timecodes of all difference segments. You can then review these segments and make the appropriate decisions.
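Report timecodes are normally written as HH:MM:SS:FF. The sketch below converts frame indices to non-drop-frame timecode at an integer frame rate. The function names are hypothetical. Drop-frame timecode (used with 29.97 fps) and programmes that start at a non-zero timecode such as 01:00:00:00 need extra handling, which this sketch omits.

```python
def frames_to_timecode(frame, fps=25):
    """Convert a 0-based frame index to non-drop-frame HH:MM:SS:FF."""
    ff = frame % fps
    total_seconds = frame // fps
    ss = total_seconds % 60
    mm = (total_seconds // 60) % 60
    hh = total_seconds // 3600
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def segments_to_report(segments, fps=25):
    """Turn inclusive (start_frame, end_frame) pairs into report rows."""
    return [
        {"start": frames_to_timecode(start, fps), "end": frames_to_timecode(end, fps)}
        for start, end in segments
    ]
```

At 25 fps, frame 90061 maps to `01:00:02:11`.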

Ref-Q™ is offered as part of our native-cloud QC service, Quasar®. All the advanced scaling and security capabilities of Quasar® are also available for Ref-Q™ analysis jobs. You can process hundreds of Ref-Q™ jobs at the same time, allowing immense scalability. To put things in perspective, a set of one-hour files that could take your manual resources a few hours to compare can be processed by Ref-Q™ in a fraction of that time. This brings significant business benefits: fast turnaround times and accurate automated analysis that requires only minimal manual review.

With its monthly or annual subscription-based pricing, as well as its industry-first ad-hoc usage-based pricing, Quasar® and its Ref-Q™ feature are available to media companies of any size as well as to media professionals.

We also offer a free trial for Ref-Q™, allowing you to test your files on our SaaS platform without any prior commitments.

Get in touch with us today at sales(at)veneratech.com to learn more and request a free trial.

This content first appeared here on: https://www.veneratech.com/ref-q-reference-based-automated-qc/


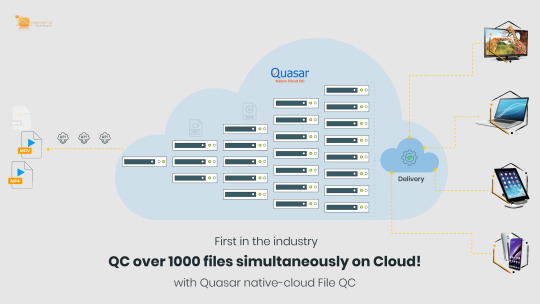

Quasar Leap – We went where no Cloud-QC service had gone before!

We have achieved something that has been near and dear to my heart for a couple of years, as it relates to our Cloud-based QC capabilities. We have extended the capability of our Quasar native-cloud QC service to a level that I don’t believe anyone else has actually reached! And we call it “Quasar Leap”.

When we started the development work on Quasar®, our native cloud QC service, the goal was clear. We didn’t want to just take our popular on-premise QC software, Pulsar™, run it on a VM and call it “cloud” QC. We made a deliberate decision: while we would use the same core QC capabilities as Pulsar, we would build the Quasar architecture from the ground up to be a ‘native’ cloud QC service. And we did accomplish that, becoming the first cloud-based QC service that could legitimately call itself ‘native’ cloud. To us, the phrase ‘native’ cloud meant capabilities like microservices architecture, dynamic scalability, regional content awareness, SaaS deployment, usage-based pricing, high-grade content security and, of course, redundancy.

But we wanted to go even further. That was when the project we code-named ‘Quasar Leap’ came about. To borrow and paraphrase from one of my all-time favorite TV shows, Star Trek, we wanted to “take Quasar to where no cloud-QC had gone before”! (Those of you who are Star Trek fans know what I am talking about!)

Quasar was already able to process 100s of files at the time, but the goal of ‘Quasar Leap’ was to show that Quasar could process ONE THOUSAND files simultaneously! Of course, anyone can claim that their solution is robust, scalable, reliable, etc., but we set out to actually do it and then record it to prove that we did!

This was not a marketing ploy, although to be honest, I knew there would be great appreciation and name recognition telling our customers and prospects that we can QC 1,000 files simultaneously. But there was a practical and quite useful benefit of doing so. After Quasar’s initial release, we found out when we started to push the boundaries of how many files Quasar could process simultaneously, there were some practical limitations to our architecture, even though we were already way ahead of our competition. And while we could easily process a few hundred files at the same time (more than any of our customers had needed), when we tried to push beyond that, the process could break down, and impact the reliability of the overall service.

So because of project ‘Quasar Leap’, our engineering team took a very close look at various components of our architecture. And while I am obviously not going to give away our secret sauce (!), suffice to say, they further enhanced and tweaked various aspects of our internal workflow to remove any bottlenecks and stress points, to make Quasar massively and dynamically scalable!

And then we decided that instead of just ‘saying’ we have the most scalable native-cloud QC solution, we would ‘actually do’ it and record it!! I can now tell you confidently that we have done exactly that! We submitted 1000 60-minute media files and watched our Quasar system dynamically spin up 1000 AWS virtual computing units (called EC2 instances in AWS terminology). It processed (QC’d) those 1000 files simultaneously and then spun down the EC2 instances once they were no longer needed.

With the new scaling capabilities, 60,000 minutes (1000 hours!) of content can be processed in about 45 minutes, including the time needed to spin up the EC2 instances! To put things in perspective, 1000 hours of content is the equivalent of approximately 660 movies, or 20 seasons of 7 different popular TV sitcoms, and it can be processed in just 45 minutes! To put it differently, with our massive simultaneous processing capability, approximately 1300 hours of content can be processed in one hour!
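The "approximately 1300 hours per hour" figure follows from simple arithmetic on the numbers quoted above (45 minutes of wall-clock time, including EC2 spin-up):

$$
\text{throughput} = \frac{1000\ \text{h of content}}{45\ \text{min}} = \frac{1000\ \text{h}}{0.75\ \text{h}} \approx 1333\ \text{h of content per hour}
$$

Likewise, $60{,}000\ \text{min} / 660 \approx 91$ minutes per movie, roughly the length of a typical feature film.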

If you say to yourself “that is great, but who has that much content that they need to process them that quickly”, I have an answer! Actually a three-point answer:

You will be surprised how many media companies have petabytes (that is with a “P”!) of content sitting in cloud storage! They face the daunting task of managing a cloud-based workflow to monetize that archived content by restoring, ingesting, transcoding and ultimately delivering it to audiences. One naturally important step in this workflow is ensuring that all of that content goes through validation at various stages before delivery to the end user. That is where Quasar’s massive simultaneous QC processing ability will be much needed to minimize delays.

Some of our customers receive content in bursts with strict delivery timelines. The ability to process such a burst of content immediately offers significant business value in addition to workflow efficiency.

And let’s not forget the main gain from the ‘Quasar Leap’ project: the behind-the-scenes tweaking, in some cases revamping, and enhancing of our underlying architecture. That has resulted in a solid platform that will benefit ALL of our Quasar SaaS users, whether they have 100,000 files, 100 files or even just a few! It gives them reliability, scalability and confidence that Quasar will meet their QC needs. That holds regardless of their normal volume or any sudden increase (burst) in their content flow due to an unexpected event or last-minute request.

All that effort for ‘Quasar Leap’ by our talented and dedicated development team, conducted during the challenging time of the pandemic, is finally complete! The new release of Quasar with all the architectural changes resulting from the ‘Quasar Leap’ project has rolled out.

According to Tony Huidor, SVP, Products & Technology at Cinedigm, a premier independent content distributor, a great customer of ours and an early beneficiary of ‘Quasar Leap’: “Given the rapidly growing volume of content that uses our cloud-based platform, we needed the ability to expand the number of files we need to process in a moment’s notice. Quasar’s massive concurrent QC processing capability gives us the scalability we required and effectively meets our needs.”

And now ‘Quasar Leap’, giving us the ability to massively scale up our simultaneous processing capability, is ‘live’, and “our Quasar native-cloud QC has gone where no other cloud-QC has gone before”!

Learn more about our Quasar capabilities here, or contact us for a demo and free trial!

And as Mr. Spock would say: “Live Long & Prosper”!

By: Fereidoon Khosravi

This content first appeared here on: https://www.veneratech.com/quasar-leap-scalability-cloud-qc-service/


CapMate – Key Features of Our Closed Caption and Subtitle Verification and Correction Platform

In my last blog, which coincided with the official launch of CapMate, our Caption and Subtitle Verification and Correction platform, I gave the background on how the concept of CapMate came about and, at a high level, what the capabilities are that it brings to the table. Here is the blog, in case you need a refresher! It is now time to dig in a little deeper into what CapMate can actually do and why we think it will add great value for any organization that has to deal with Closed Caption/Subtitle files.

We had many conversations with our customers and heard their concerns about the issues they ran across when processing or reviewing captions, and about why closed caption verification and correction is such a slow and time-consuming process. Based on that feedback, we derived a list of key functionalities that would allow them to reduce the time and effort they regularly had to spend verifying and fixing caption/subtitle files. For our first release, we set out to tackle and resolve as many of these issues as we could, and to provide an easy, user-friendly interface for operators to review, process and correct their caption files.

Here is a subset of those functionalities, with a short description of each. Some of these items are complex enough to deserve their own dedicated blog. Hopefully soon!

Caption Sync:

How many times have you been bothered by the caption of a show being just a tad behind or ahead of the actual dialog? The actor stops talking and the caption starts to appear! Or the caption and audio seem to be in sync at first, but as time goes by, the gap between what is being said and the caption shown on the screen grows bigger and bigger. It makes watching a show with closed captions/subtitles quite annoying. There are many reasons for such sync issues, which I will leave for a different blog. But suffice to say, fixing them is a very time-consuming effort. It is probably as challenging for the operators who have to deal with them as it is for you and me who want to watch the show! Operators have to spend painstaking time adjusting the timing of the closed captions all the way through. They must also make sure that fixing the sync issue in one section doesn’t have a ripple effect that causes sync issues elsewhere. The time to fix a sync issue can vary from a few hours to more than a day!

CapMate, using Machine Learning techniques, can provide a very accurate analysis of such sync issues, determining what type of sync problem exists and how far the caption is from the spoken words. Then, using a complex algorithm, CapMate can automatically adjust and correct the sync issue throughout the entire file at the press of one button! This action alone can save a substantial amount of an operator’s time, with amazing accuracy. Users can also perform a detailed review of the captions in the CapMate viewer application and make manual changes.
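The two kinds of sync problem described above are a constant lag and a gap that grows over time. Both can be modelled as a linear mapping from caption time to audio time. The minimal sketch below assumes that some earlier step has already paired caption timestamps with the matching speech timestamps. That step is hypothetical here, and CapMate's actual ML-based method is not described in this post. The sketch fits the mapping by least squares, classifies it, and retimes every cue. The function names and tolerances are hypothetical; 0.04 s is one frame at 25 fps.

```python
def fit_sync(pairs):
    """Least-squares fit of audio_time = scale * caption_time + offset.

    pairs: (caption_time_s, audio_time_s) anchor points, at least two
    distinct caption times.
    """
    n = len(pairs)
    mean_c = sum(c for c, _ in pairs) / n
    mean_a = sum(a for _, a in pairs) / n
    s_cc = sum((c - mean_c) ** 2 for c, _ in pairs)
    s_ca = sum((c - mean_c) * (a - mean_a) for c, a in pairs)
    scale = s_ca / s_cc
    offset = mean_a - scale * mean_c
    return scale, offset


def classify_sync(scale, offset, scale_tol=1e-4, offset_tol=0.04):
    """A scale away from 1 means drift; otherwise a non-zero offset is a constant lag."""
    if abs(scale - 1.0) > scale_tol:
        return "drift"
    if abs(offset) > offset_tol:
        return "constant offset"
    return "in sync"


def retime(cues, scale, offset):
    """Apply the fitted mapping to every (start_s, end_s) cue."""
    return [(scale * start + offset, scale * end + offset) for start, end in cues]
```

For captions that run 0.5 s early and also drift by 0.1% over the programme, the fit recovers scale ≈ 1.001 and offset ≈ 0.5 s and classifies the problem as drift. Retiming with those values corrects both effects in one pass.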

Caption Overlay:

Another item that can annoy an audience is when the caption text, usually placed in the lower part of the screen, overlaps burnt-in text in the show. Operators need to manually review the content with captions turned on to see if and when a caption overlays burnt-in text on the screen. This is another time-consuming process.

CapMate, using a sophisticated algorithm, can examine every frame and detect any text that is part of the content. It then marks all the timecodes where caption text overlays on-screen text, so the operator can quickly adjust the location of the caption and remedy the issue.
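Once the on-screen text has been located, the overlay check itself reduces to a rectangle-intersection test per frame. The minimal sketch below assumes a separate text detector (hypothetical here, not CapMate's algorithm) has produced pixel bounding boxes of burnt-in text for each frame. It also assumes the caption's display region is known as a box. `Box` and the function names are hypothetical.

```python
from typing import NamedTuple


class Box(NamedTuple):
    x: int  # left edge, pixels
    y: int  # top edge, pixels
    w: int  # width, pixels
    h: int  # height, pixels


def boxes_intersect(a, b):
    """Axis-aligned rectangle intersection test (touching edges do not count)."""
    return a.x < b.x + b.w and b.x < a.x + a.w and a.y < b.y + b.h and b.y < a.y + a.h


def overlay_frames(caption_box, text_boxes_by_frame):
    """Sorted frame indices where the caption region intersects burnt-in text.

    text_boxes_by_frame: {frame_index: [Box, ...]} from a text detector.
    """
    return sorted(
        frame
        for frame, boxes in text_boxes_by_frame.items()
        if any(boxes_intersect(caption_box, box) for box in boxes)
    )
```

The returned frame indices can then be converted to timecodes for the operator to review.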

Caption Overlap:

While this sounds similar to the previous feature, it is actually quite different. There are instances where due to missed caption timing, the beginning of a caption may occur before the end of the previous caption. That, as you can imagine, has a big impact on the viewing experience and is not acceptable.

CapMate can easily detect and report back on all instances where such caption overlaps exist and like many of its other features, CapMate provides an intuitive interface for the operator to have CapMate make the necessary adjustment to all affected captions.
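Detecting this kind of overlap is straightforward once cues are sorted by start time: a cue overlaps when it starts before the previous cue ends. One common fix, shown in the minimal sketch below, trims the earlier cue so that it ends when the next one begins (optionally leaving a small gap). This is an assumed approach, not necessarily the adjustment CapMate applies, and the function names are hypothetical.

```python
def find_overlaps(cues):
    """Indices of cues that start before the previous cue ends.

    cues: list of (start_s, end_s) tuples sorted by start time.
    """
    return [i for i in range(1, len(cues)) if cues[i][0] < cues[i - 1][1]]


def fix_overlaps(cues, min_gap=0.0):
    """Trim each overlapped cue so it ends min_gap seconds before the next starts."""
    fixed = [list(cue) for cue in cues]
    for i in range(1, len(fixed)):
        if fixed[i][0] < fixed[i - 1][1]:
            # Never trim a cue so far that it would end before it starts.
            fixed[i - 1][1] = max(fixed[i - 1][0], fixed[i][0] - min_gap)
    return [tuple(cue) for cue in fixed]
```

For cues at 0.0 to 2.5 s and 2.0 to 4.0 s, the first cue is trimmed to end at 2.0 s, and re-running `find_overlaps` reports nothing.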

SCC (and other) Standards Conformance:

Closed caption and subtitle files come in many different formats. One of the oldest and most arcane (and yet quite prevalent) formats is SCC, which stands for “Scenarist Closed Captions.” It is commonly used with broadcast and web video, as well as DVDs and VHS videos (yes, it is that old!). It has a very specific format specification and is not human-readable. Therefore, checking for format compliance is a very difficult task for an operator and always requires additional tools. Making corrections to such files is even more difficult, as the smallest mistake can easily make matters worse. There are also a variety of XML-based caption formats that, while more human-readable, are still difficult to verify and correct manually.

CapMate has automated Standards conformance capability. It can quickly and easily detect file conformance issues for SCC and other formats, and it can also correct them accurately and effortlessly. CapMate can also verify conformance against a variety of templates defined for IMSC, DFXP, SMPTE-TT, etc.
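Part of what makes SCC hard to check by eye is its encoding. As commonly documented, an SCC file starts with the header `Scenarist_SCC V1.0`. Each caption line then consists of a timecode, a tab and space-separated four-hex-digit words. The timecode uses `;` before the frame field for drop-frame. Each word holds two CEA-608 bytes, and each byte carries odd parity. The sketch below checks only those basics. It is an illustration with hypothetical function names, not CapMate's conformance engine. A real checker would also validate control-code sequences, timecode ordering and frame ranges.

```python
import re

HEADER = "Scenarist_SCC V1.0"
# Timecode (':' or ';' before frames), a tab, then 4-hex-digit words separated by spaces.
LINE_RE = re.compile(r"^(\d{2}:\d{2}:\d{2}[:;]\d{2})\t([0-9a-fA-F]{4}(?: [0-9a-fA-F]{4})*)$")


def has_odd_parity(byte):
    return bin(byte).count("1") % 2 == 1


def check_scc(text):
    """Return a list of (line_number, message) tuples; an empty list means no basic errors."""
    lines = text.splitlines()
    errors = []
    if not lines or lines[0].strip() != HEADER:
        errors.append((1, "missing Scenarist_SCC V1.0 header"))
    for number, line in enumerate(lines[1:], start=2):
        if not line.strip():
            continue  # blank separator lines are normal in SCC files
        match = LINE_RE.match(line)
        if not match:
            errors.append((number, "malformed caption line"))
            continue
        for word in match.group(2).split():
            for byte in bytes.fromhex(word):
                if not has_odd_parity(byte):
                    errors.append((number, f"parity error in {word}"))
                    break  # report at most one parity error per word
    return errors
```

For example, the word `c845` (the letters "HE" with parity bits set) passes, while `4845` fails because 0x48 has an even number of set bits.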

Profanity and Spell Check/Correction:

While some content may include profanity that is spoken, many broadcasters may choose not to have such words spelled out as part of the caption/subtitle. In many cases where automated speech-to-text utilities are used to create the initial caption files, such profane words are transcribed without any discretion. And in case of human authoring where captions are generated manually, spelling mistakes can be easily introduced by the authoring operators.

CapMate provides quick and accurate analysis of the caption text against a user-defined profanity database and a user-extendable English dictionary to detect both profanity and spelling mistakes. As in word-processing software, the operator can globally replace a profane word with a suitable substitute or fix a spelling mistake. With CapMate, this work takes a fraction of the time that manual caption/subtitle detection and correction would take.
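The dictionary-based checks described above can be sketched in a few lines. The minimal sketch below assumes the user supplies the profanity list and the dictionary as sets of lowercase words, and it treats any word missing from the dictionary as a possible misspelling. The word pattern and function names are hypothetical simplifications, not CapMate's implementation. The global replacement is case-insensitive and matches whole words only, so "darn" does not alter "darned".

```python
import re

# Simplified word pattern: letters and apostrophes only.
WORD_RE = re.compile(r"[A-Za-z']+")


def scan_text(text, profanity, dictionary):
    """Return (profane_words, unknown_words) found in a caption's text."""
    profane, unknown = [], []
    for word in WORD_RE.findall(text):
        lowered = word.lower()
        if lowered in profanity:
            profane.append(word)
        elif lowered not in dictionary:
            unknown.append(word)  # candidate spelling mistake
    return profane, unknown


def replace_globally(texts, word, substitute):
    """Case-insensitive whole-word replacement across all caption texts."""
    pattern = re.compile(rf"\b{re.escape(word)}\b", re.IGNORECASE)
    # A function replacement avoids backslash processing in the substitute text.
    return [pattern.sub(lambda _match: substitute, text) for text in texts]
```

Scanning "Well darn, that is a problm" with a profanity set containing "darn" flags "darn" as profane and "problm" as unknown.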

Many other Features:

To detail all the features of CapMate here would make this a very long blog! Suffice to say, there is a wealth of other features that deal with items such as CPL (Characters Per Line), CPS (Characters Per Second), WPM (Words Per Minute), or number of lines, that CapMate can verify and provide an intuitive interface for the operator to fix.

I will have to leave those for a separate blog (it is called job security! ☺)

But if you want more details about CapMate, please go here or contact us for a demo and free trial! You can also check out the launch video we made announcing CapMate here!

By: Fereidoon Khosravi

This content first appeared here on: https://www.veneratech.com/capmate-key-features-closed-caption-and-subtitle-verification-and-correction/


Introducing CapMate – Taking Caption QC & Management to the Next Level

By: Fereidoon Khosravi

During my face-to-face meetings and discussions with customers (during the pre-COVID times, remember those days?!), an interesting theme started to emerge. At first it was subtle, but it quickly became clear to me what they were asking for (the ‘aha’ moment!). Aside from using our QC tools (Pulsar & Quasar) for media (audio/video) QC, many of them were asking if we could do something similar for their caption and subtitle files in Pulsar or Quasar! It turns out that caption file QC and correction is quite a time-consuming effort. For the most part it was manual and labor-intensive, with no good automated solution available. They had been looking for an automated way not only to QC their caption files; many also wanted an automated or interactive way to review and correct the files as well.

After studying their requirements carefully, we realized that what our customers were looking for required a set of new functionality, features, and a different interface, best suited to a new offering that could be used in conjunction with our existing QC tools.

And now, after months of hard work and collaboration with some key customers who helped us fine-tune the functionality, we are super excited to announce ‘CapMate’, a cloud-based SaaS solution for verification *and* correction of sidecar caption and subtitle files!

And the name says it all, ‘Cap’ and ‘Mate’! A companion to help deal with caption-related issues!

We heard our customers loud and clear, and here are a few interesting features that help CapMate stand out:

It is a native-cloud solution, which means it can scale up and down seamlessly, providing dynamic scalability to our customers.

It has a usage-based pricing model, so our customers can sign up for a subscription plan that fits their volume of content.

We even added industry-first ad-hoc pricing! Those who don’t have an ongoing captioning need can simply sign up and pay for what they need, when they need it, without a monthly commitment!

Taking advantage of machine learning techniques, CapMate provides more accurate sync analysis and does an amazing job of auto-fixing various sync issues!

CapMate can detect many of the pain points we have heard about from our customers, from technically challenging standards compliance (such as for the SCC format), to determining whether a caption is overlaid on top of burnt-in text, to detecting profanity or spelling issues. It also offers a comprehensive list of editing features!

We paid very close attention to content security, as we know how important it is to our customers, as well as to scalability, so our customers won’t face any bottlenecks when processing large volumes of content at the same time! CapMate is capable of processing hundreds of titles simultaneously.

With CapMate, there is a significant saving in time and cost for the QC of each caption file, as CapMate can analyze and report back all the issues in a matter of minutes! Customers can get a CapMate report and share it with their caption vendor to point out what needs to be corrected.

Jack Hurley, the Director of Digital Production at Cinedigm, one of our great early adopters, had this to say: “CapMate maintains both a robust and unique feature set for checking captions on the cloud. Our ability to leverage its capabilities has drastically increased our accuracy and efficiency in verifying our growing volume of captions received. As Cinedigm forges onward into the ever-changing FAST OTT landscape, we rely on CapMate to help us serve reliable, quality captions to our audience.”

For customers who choose to correct caption files themselves, or who are caption vendors, CapMate’s intuitive, easy-to-use interactive interface allows the entire review and correction process to be done in a fraction of the time the current manual review would take! The customer can choose to auto-correct, or make corrections while reviewing the results, and generate a new, clean caption file right on the spot!

And then if you account for our scalability and our dynamic parallel processing capability, the customer can submit hundreds of titles at a time, and get them all analyzed in parallel and receive the results back at the same time! Imagine the savings in time!

With the introduction of CapMate, we are expanding and broadening the scope of what we consider ‘Quality Control’. We can now go beyond the wide range of QC features we have for audio and video in a media file and make sure all those caption and subtitle files also meet the customer requirements.

So all those months of being cooped up because of COVID were put to good use, and we are excited to announce ‘CapMate’!

Get more details about CapMate here and contact us for a demo and free trial!

P.S. Check out the splashy launch video we made announcing CapMate! See if you can spot me!

This content first appeared here on: https://www.veneratech.com/introducing-capmate-taking-caption-qc-management-to-the-next-level/

HDR Insights Series Article 4: Dolby Vision

In the previous article, we discussed HDR tone mapping and how it is used to produce an optimal viewer experience on a range of display devices. This article discusses the basics of Dolby Vision metadata and the parameters that users need to validate before the content is delivered.

What is HDR metadata?

HDR metadata is an aid that helps a display device show the content in an optimal manner. It describes properties of the HDR content and of the mastering display, which the display device uses to map the content according to its own color gamut and peak brightness. There are two types of metadata: static and dynamic.

Static metadata

Static metadata contains information that applies to the entire content. The mastering display properties are standardized by SMPTE ST 2086, while MaxCLL and MaxFALL are defined in CTA-861.3. The key items of static metadata are as follows:

Mastering display properties: Properties defining the device on which content was mastered.

RGB color primaries

White point

Brightness Range

Maximum content light level (MaxCLL): Light level of the brightest pixel in the entire video stream.

Maximum Frame-Average Light Level (MaxFALL): Average light level of the brightest frame in the entire video stream.
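To make the static items concrete, the Python sketch below derives MaxCLL and MaxFALL from pixel data and expresses mastering display properties as coded values. It is illustrative only and rests on stated assumptions: frames are already decoded to absolute linear light in nits (real HDR10 content would first need PQ decoding), each pixel's light level is taken as max(R, G, B) following the commonly used CTA-861.3 convention, and the mastering display string uses the HEVC SEI units (0.00002 for chromaticity, 0.0001 cd/m² for luminance) in the G/B/R/WP/L layout accepted by x265's `--master-display` option. The function names are hypothetical.

```python
def max_cll_fall(frames: list[list[tuple[float, float, float]]]) -> tuple[float, float]:
    """Return (MaxCLL, MaxFALL) in cd/m^2 (nits).

    Assumes every frame is a non-empty list of (R, G, B) pixels already decoded
    to absolute linear light in nits; each pixel's light level is max(R, G, B).
    """
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        levels = [max(pixel) for pixel in frame]
        max_cll = max(max_cll, max(levels))                  # brightest pixel seen so far
        max_fall = max(max_fall, sum(levels) / len(levels))  # brightest frame average so far
    return max_cll, max_fall


def master_display_string(primaries: dict[str, tuple[float, float]],
                          white_point: tuple[float, float],
                          max_nits: float, min_nits: float) -> str:
    """Format mastering display properties as integer SEI code values.

    Chromaticity coordinates are coded in increments of 0.00002 and luminance in
    units of 0.0001 cd/m^2, written in the G/B/R order used by x265's --master-display.
    """
    def xy(point: tuple[float, float]) -> str:
        return f"({round(point[0] / 0.00002)},{round(point[1] / 0.00002)})"

    return (f"G{xy(primaries['G'])}B{xy(primaries['B'])}R{xy(primaries['R'])}"
            f"WP{xy(white_point)}L({round(max_nits / 0.0001)},{round(min_nits / 0.0001)})")


frames = [
    [(100.0, 50.0, 20.0), (10.0, 10.0, 10.0)],       # frame average 55 nits
    [(400.0, 300.0, 200.0), (300.0, 300.0, 300.0)],  # frame average 350 nits
]
print(max_cll_fall(frames))  # (400.0, 350.0)

# Example: a P3-D65 mastering display with 1000 cd/m^2 peak and 0.0001 cd/m^2 minimum.
p3 = {"R": (0.680, 0.320), "G": (0.265, 0.690), "B": (0.150, 0.060)}
print(master_display_string(p3, (0.3127, 0.3290), 1000.0, 0.0001))
# G(13250,34500)B(7500,3000)R(34000,16000)WP(15635,16450)L(10000000,1)
```

Comparing values computed this way against what is actually coded in the stream is one way to catch incorrect static metadata before delivery.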

In typical content, the brightness and color range vary from shot to shot. The challenge with static metadata is that tone mapping performed on the basis of static metadata can only take the brightest frame in the entire content into account. As a result, the majority of the content undergoes greater compression of dynamic range and color gamut than needed, which leads to a poor viewing experience on less capable HDR display devices.

Dynamic metadata

Dynamic metadata allows tone mapping to be performed on a per-scene basis. This leads to a significantly better viewing experience when the content is displayed on less capable HDR display devices. Dynamic metadata is standardized by SMPTE ST 2094, which defines content-dependent metadata. Using dynamic metadata along with static metadata overcomes the issues that arise when only static metadata is used for tone mapping.
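The difference between static and per-scene tone mapping can be shown with a deliberately simple example. The Python sketch below uses a hypothetical linear tone mapper (real HDR tone mapping, including Dolby's CM algorithms, uses nonlinear curves) and made-up scene peaks. It shows how scaling every scene by the content-wide peak compresses a dim scene that would otherwise fit the display.

```python
def tone_map_linear(nits: float, source_peak: float, display_peak: float) -> float:
    """Toy tone mapper: scale linearly, and only when the source peak exceeds the display."""
    if source_peak <= display_peak:
        return nits  # the content already fits the display; no compression needed
    return nits * display_peak / source_peak


DISPLAY_PEAK = 1000.0  # hypothetical 1000-nit consumer display

# Hypothetical scenes: (name, scene peak in nits). Each scene's brightest highlight is its peak.
scenes = [("explosion", 4000.0), ("dim interior", 600.0)]

# Static metadata only conveys the peak of the entire content.
content_peak = max(peak for _, peak in scenes)

for name, scene_peak in scenes:
    static = tone_map_linear(scene_peak, content_peak, DISPLAY_PEAK)
    dynamic = tone_map_linear(scene_peak, scene_peak, DISPLAY_PEAK)
    print(f"{name}: static -> {static:.0f} nits, per-scene -> {dynamic:.0f} nits")
```

Running it prints `explosion: static -> 1000 nits, per-scene -> 1000 nits` and `dim interior: static -> 150 nits, per-scene -> 600 nits`. With static metadata alone, the dim interior's 600-nit highlights are pulled down to 150 nits even though the display could show them as-is; per-scene metadata leaves them untouched.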

Dolby Vision

Dolby Vision uses dynamic metadata and is one of the most widely adopted HDR technologies today. It is used by major OTT service providers such as Netflix and Amazon, as well as by major studios and a host of prominent television manufacturers. Dolby Vision is standardized in SMPTE ST 2094-10. In addition to supporting dynamic metadata, Dolby Vision also allows multiple trims to be described for specific target displays, giving finer control over how the content looks on those devices.

Dolby has documented the details of its algorithm in what it refers to as Content Mapping (CM) documents. The original CM algorithm, version 2.9 (CMv2.9), has been used since the introduction of Dolby Vision. Dolby introduced Dolby Vision Content Mapping version 4 (CMv4) in the fall of 2018. Both versions of CM are still in use. The Dolby Vision Color Grading Best Practices Guide provides more information.

Dolby Vision metadata is coded at various ‘levels’, which are described below:

| Metadata level | Field | Description |
|---|---|---|
| Level 0: Global metadata (static) | Mastering Display | Describes the characteristics of the mastering display used for the project |
| | Aspect Ratio | Ratio of canvas and image (active area) |
| | Frame Rate | Frame rate |
| | Target Display | Describes the characteristics of each target display used for L2 trim metadata |
| | Color Encoding | Describes the image container deliverable |
| | Algorithm/Trim Version | CM algorithm version and trim version |
| Level 1: Analysis metadata (dynamic) | L1 Min, Mid, Max | Three floating-point values that characterize the dynamic range of the shot or frame. Shot-based L1 metadata is created by analyzing each frame contained in a shot in LMS color space; the results are combined to describe the entire shot as L1Min, L1Mid, L1Max. Stored as LMS (CMv2.9) and L3 offsets |
| Level 2: Backwards-compatible per-target trim metadata (dynamic) | Reserved1, Reserved2, Reserved3, Lift, Gain, Gamma, Saturation, Chroma, Tone Detail | Automatically computed from L1, L3 and L8 (lift, gain, gamma, saturation, chroma, tone detail) metadata for backwards compatibility with CMv2.9 |
| Level 3: Offsets to L1 (dynamic) | L1 Min, Mid, Max | Three floating-point values that are offsets to the L1 analysis metadata, as L3Min, L3Mid, L3Max. L3Mid is a global user-defined trim control. L1 is stored as CMv2.9-computed values; CMv4 reconstructs RGB values with L1 + L3 |
| Level 5: Per-shot aspect ratio (dynamic) | Canvas, Image | Used for defining shots that have different aspect ratios than the global L0 aspect ratio |
| Level 6: Optional HDR10 metadata (static) | MaxFALL, MaxCLL | Metadata for HDR10 (MaxCLL: Maximum Content Light Level; MaxFALL: Maximum Frame-Average Light Level) |
| Level 8: Per-target trim metadata (dynamic) | Lift, Gain, Gamma, Saturation, Chroma, Tone Detail, Mid Contrast Bias, Highlight Clipping; 6-vector (R,Y,G,C,B,M) saturation and 6-vector (R,Y,G,C,B,M) hue trims | User-defined image controls to adjust the CMv4 algorithms per target, with secondary color controls |
| Level 9: Per-shot source content primaries (dynamic) | Rxy, Gxy, Bxy, WPxy | Stores the mastering display color primaries and white point as per-shot metadata |
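The shot-level aggregation described for Level 1, and the L1 + L3 relationship described for Level 3, can be illustrated with a small Python sketch. Dolby's actual analysis (performed in LMS color space) is not detailed here, so the aggregation rule below (minimum of the per-frame minima, mean of the per-frame mids, maximum of the per-frame maxima), the assumption that values are normalized to the range [0, 1], and the clamping are illustrative choices, not Dolby's algorithm. The function names are hypothetical.

```python
from statistics import mean


def shot_l1(frame_stats: list[tuple[float, float, float]]) -> tuple[float, float, float]:
    """Combine per-frame (min, mid, max) values into one shot-level L1 triple.

    Hypothetical rule: min of the minima, mean of the mids, max of the maxima.
    """
    if not frame_stats:
        raise ValueError("a shot needs at least one analyzed frame")
    mins, mids, maxs = zip(*frame_stats)
    return min(mins), mean(mids), max(maxs)


def apply_l3(l1: tuple[float, float, float],
             l3: tuple[float, float, float]) -> tuple[float, ...]:
    """Add L3 offsets to L1 values (L1 + L3), clamped to the assumed [0, 1] range."""
    return tuple(min(1.0, max(0.0, a + b)) for a, b in zip(l1, l3))


frames = [(0.0, 0.25, 0.75), (0.125, 0.5, 0.5)]  # hypothetical per-frame analysis results
l1 = shot_l1(frames)
print(l1)                                # (0.0, 0.375, 0.75)
print(apply_l3(l1, (0.0, 0.125, 0.0)))   # (0.0, 0.5, 0.75)
```

Because the whole shot is summarized by a single triple, a shot boundary placed in the wrong spot mixes statistics from two different scenes, which is one reason accurate shot cuts matter for L1 analysis.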

Dolby Vision QC requirements

Netflix, Amazon, and other streaming services are continuously adding more HDR titles to their libraries, with the aim of improving the quality of experience for their viewers and differentiating their service offerings. This requires content suppliers to be equipped to deliver good-quality, compliant HDR content, which makes the ability to verify quality before delivery all the more important.

Many of these OTT services support both the HDR-10 and Dolby Vision flavors of HDR. However, more and more Netflix HDR titles are now based on Dolby Vision. Dolby Vision is a new and complex technology, so checking the content for correctness and compliance is not always easy. Delivering non-compliant HDR content can affect your business, so using a QC tool to assist with HDR QC can go a long way toward maintaining good standing with these OTT services.

Here are some of the important aspects to verify for HDR-10 and Dolby Vision:

HDR metadata presence

HDR-10: Static metadata must be coded with the correct parameter values.

Dolby Vision: Static metadata must be present once and dynamic metadata must be present for every shot in the content.

HDR metadata correctness. Content providers need to check the metadata for a number of potential issues:

Only one mastering display should be referenced in metadata.

Correct mastering display properties – RGB primaries, white point and Luminance range.

Correct MaxFALL and MaxCLL values.

All target displays must have unique IDs.

Correct algorithm version. Dolby supports two versions:

Metadata Version 2.0.5 XML for CMv2.9

Metadata Version 4.0.2 XML for CMv4

No frame gaps. All shots, as well as frames, must be tightly aligned within the timeline, with no gap between frames and/or shots.

No overlapping shots. The timeline must be accurately cut into individual shots, and the analysis that generates L1 metadata should be performed on a per-shot basis. If the timeline is not accurately cut into shots, luminance consistency suffers, which may lead to flashing and flickering artifacts during playback.

No negative duration for shots. Shot duration, as coded in the “Duration” field, must not be negative.

Single trim for a particular target display. There should be one and only one trim for a target display.

Level 1 metadata must be present for all the shots.

Valid canvas and image aspect ratios. Cross-check the canvas and image aspect ratios against baseband-level verification of the actual content.

Validation of video essence properties. Essential properties such as color matrix, color primaries, transfer characteristics, and bit depth must be correctly coded.
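Many of the structural checks in the list above can be automated once the metadata has been parsed. The Python sketch below works on a hypothetical, already-parsed representation: the `Shot` and `DoviMetadata` classes, their fields, and the 1% aspect-ratio tolerance are assumptions for illustration, not Dolby's XML schema and not how Pulsar or Quasar implement these checks. It flags unsupported metadata versions, more than one mastering display, duplicate target display IDs, more than one trim for a target display within a shot, missing L1 metadata, negative durations, and gaps or overlaps between consecutive shots, plus a simple aspect-ratio cross-check against the measured active picture.

```python
from collections import Counter
from dataclasses import dataclass, field

# Metadata XML versions listed above: 2.0.5 (CMv2.9) and 4.0.2 (CMv4).
SUPPORTED_VERSIONS = {"2.0.5", "4.0.2"}


@dataclass
class Shot:
    """Hypothetical parsed shot record (not Dolby's XML schema)."""
    shot_id: str
    start_frame: int                 # first frame of the shot on the timeline
    duration: int                    # frame count, as coded in the "Duration" field
    has_l1: bool = True              # whether Level 1 metadata is present
    trim_target_ids: list[str] = field(default_factory=list)  # one entry per trim


@dataclass
class DoviMetadata:
    """Hypothetical parsed metadata record."""
    version: str
    mastering_display_ids: list[str]
    target_display_ids: list[str]
    shots: list[Shot]


def validate(md: DoviMetadata) -> list[str]:
    """Return a list of human-readable issues; an empty list means all checks passed."""
    issues = []
    if md.version not in SUPPORTED_VERSIONS:
        issues.append(f"unsupported metadata version {md.version}")
    if len(md.mastering_display_ids) != 1:
        issues.append(f"{len(md.mastering_display_ids)} mastering displays referenced (expected 1)")
    for tid, n in Counter(md.target_display_ids).items():
        if n > 1:
            issues.append(f"target display ID {tid} used {n} times")
    for shot in md.shots:
        if shot.duration < 0:
            issues.append(f"shot {shot.shot_id} has negative duration {shot.duration}")
        if not shot.has_l1:
            issues.append(f"shot {shot.shot_id} is missing L1 metadata")
        for tid, n in Counter(shot.trim_target_ids).items():
            if n > 1:
                issues.append(f"shot {shot.shot_id} has {n} trims for target display {tid}")
    # Shots must tile the timeline: each one starts exactly where the previous one ends.
    ordered = sorted(md.shots, key=lambda s: s.start_frame)
    for prev, cur in zip(ordered, ordered[1:]):
        expected = prev.start_frame + prev.duration
        if cur.start_frame > expected:
            issues.append(f"gap of {cur.start_frame - expected} frames before shot {cur.shot_id}")
        elif cur.start_frame < expected:
            issues.append(f"shot {cur.shot_id} overlaps shot {prev.shot_id}")
    return issues


def aspect_matches(image_aspect: float, active_width: int, active_height: int,
                   tolerance: float = 0.01) -> bool:
    """Cross-check the metadata image aspect ratio against the measured active picture."""
    return abs(active_width / active_height - image_aspect) <= tolerance


good = DoviMetadata("4.0.2", ["MD1"], ["T1", "T2"],
                    [Shot("s1", 0, 24, trim_target_ids=["T1", "T2"]), Shot("s2", 24, 48)])
print(validate(good))  # []
```

A real tool would populate these records from the metadata XML or from metadata extracted out of the video stream; keeping the checks separate from parsing makes it easier to apply the same rules to both sources.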

Netflix requires Dolby Vision metadata to be embedded in the video stream of content delivered to it. Reviewing the embedded metadata in a video stream can be tedious, so an easy way to extract and review the entire metadata is advantageous.

How can we help?

Venera’s QC products (Pulsar – for on-premise & Quasar – for cloud) can help in identifying these issues in an automated manner. We have worked extensively with various technology and media groups to create features that can help the users with their validation needs. And we have done so without introducing a lot of complexity for the users.

Depending on the volume of your content, you could consider one of our perpetual license editions (Pulsar Professional or Pulsar Standard). For low-volume customers, we also have a unique option called Pulsar Pay-Per-Use (Pulsar PPU), an on-premise, usage-based QC software where you pay a nominal per-minute charge for the content that is analyzed. And we, of course, offer a free trial so you can test our software at no cost to you. You can also download a copy of the Pulsar brochure here. And for more details on our pricing, you can check here.

If your content workflow is in the cloud, then you can use our Quasar QC service, which is the only Native Cloud QC service in the market. With advanced features like usage-based pricing, dynamic scaling, regional resourcing, content security framework and REST API, the platform is a good fit for content workflows requiring quality assurance. Quasar is currently supported for AWS, Azure and Google cloud platforms and can also work with content stored on Backblaze B2 cloud storage. Read more about Quasar here.

Both Pulsar & Quasar come with a long list of ‘ready to use’ QC templates for Netflix, based on their latest published specifications (as well as some of the other popular platforms, like iTunes, CableLabs, and DPP) which can help you run QC jobs right out of the box. You can also enhance and modify any of these QC templates or build new ones! And we are happy to build new QC templates for your specific needs.