#why you no compatible microsoft you make me SAD


Text

THE EMPRESS - CHAPTER 7

IT'S UP! YES!

Just a few warnings to note: this one is a little bit violent towards the end, and there is a panic attack described in the first section. Hope that's ok!

#I AM GOING TO COLLAPSE ON MY BED#Gurrrl my mac be praying for my downfall i had to retrive it so many times due to word crashing#why you no compatible microsoft you make me SAD#screaming#The Empress#Updates#Chapter 7


Text

8485 - INTERVIEW


Text

COSMOS.PRESS

Date

August 3, 2023

Read

10 mins

COSMOS.PRESS

Tell us about 8485.

8485

There isn’t a lot I can say about 8485 definitively. I would like people to know that 8485 belongs to me. That’s all.

COSMOS.PRESS

Talk to us about your most recent release, Personal Protocol (2023).


8485

Personal Protocol is my current approach to everything. I have been working on it for a long time and I’m happy it’s finally ready to be demonstrated to the public. It’s less of a statement and more of a machine and a living organism at the same time. It’s very cool and fun to dance to.

COSMOS.PRESS

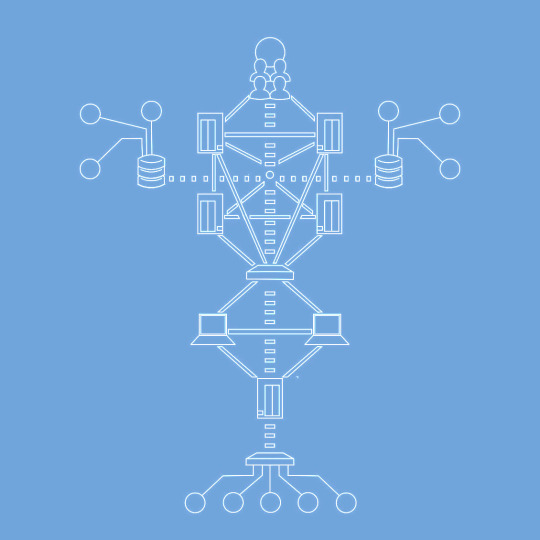

On the album art for the project, a rendition of the 'Sefirot' (tree of life) is on the computer monitor you're next to. How does sacred geometry relate to the concepts behind Personal Protocol?

8485

The structure and symbology of the Protocol schematic are native to Personal Protocol. It’s not a Sefirot, but I noticed a lot of similarity between computer science diagrams like the Spanning Tree Protocol and sacred geometry like the Sefirot, and that similarity informed the schematic. Both these ancient symbols and modern diagrams are ways of visualizing the logic of complex systems.

A lot of Personal Protocol reflects on different systems and patterns, making sense of what I can, and struggling with faith in what I can’t. The first two audio modules set up that dichotomy right away: COOL_DNB_SONG deals with a lot of huge universal patterns and Same tasks zooms in on tiny, simple loops.

COSMOS.PRESS

Why was it essential for you to release an abstract alongside the album?

8485

Getting into the weeds of Personal Protocol and all its functions goes beyond the scope of reasonable conversation, so I’ve tried to distill it into as many digestible forms as possible. All of them (the 7 tracks, 2 audiovisual modules, the Protocol schematic, a 5-foot collage, and obviously the Abstract itself) are “abstracts” in their own right.

COSMOS.PRESS

How has your approach to art direction changed in this last album cycle?


8485

I’ve branched out a lot. I was always interested in multimedia, visual art and video, but with Personal Protocol, it became equal to the music itself. I went through a phase with music where it was genuinely nauseating to attempt so I had to lean into other means of communication.

COSMOS.PRESS

Can you elaborate on the phrase "The Protocol is universally compatible"?

8485

From a computer science standpoint, the Protocol is open-source. Medically, it’s individualized to the patient. I would like to explain this better, but the more I elaborate on the Protocol, the more specific I get, the less I think that statement would be true.

COSMOS.PRESS

Much of the technology represented in your recent art is visibly dated, from old operating systems to floppy disks and everything in between.

Why are you choosing to highlight these dated fragments of electronics?


8485

I relate to them. I think they deserve it. The rapid acceleration of digital technology leaves behind an exponentially increasing pile of dead, obsolete shells. Microsoft recently replaced their desktop assistant “Cortana” with “Copilot” which ostensibly uses more modern AI technology. It makes me sad for her.

People become attached to the things they use every day. Technology is moving on much faster than we can emotionally.

COSMOS.PRESS

Can you talk to us a bit about the track Atlantis (2°)?

8485

Atlantis (2°) is the inverse of Same tasks. It’s about finding out that the systems I had blind, urgent faith in were cover stories for a reality I wasn’t aware of. When I discovered that, I started to suspect that there was more that the new “truth” was hiding from me, too. Discovering that the patterns I understood were malicious encryption jobs required me to open myself up to possibilities I haven’t fully made sense of yet.

It’s a terrifying loss of control that is actually written into the control systems of Personal Protocol as an essential driving force.

COSMOS.PRESS

What’s next for 8485?

8485

My hope is always that I can’t fully and accurately answer that question. I have a song about roadkill that I’m pretty keen to release.


Text

The Persistence of Loss: More Ghosts Teaching Robots Life Lessons

This is a story written by Mark Stevenson, but it takes place in the Eugenesis continuity. Fun fact: when everything’s fanfic, that means everything’s equally canon! TMUK took advantage of this nodule of wisdom very frequently.

This is running on Microsoft Word in compatibility mode, by the way. No PDFs here.

It’s after the events of the Epilogue of Eugenesis, and there’s a thing called “the List” hanging up in the new Autobase. You know, the one that was set up in the fucking concentration camp.

The worst part of this is how many questions are stirred up by the fact this is on printer paper. Where did the paper come from? Does this mean Cybertron has some sort of plant life that could be pulped down and made into paper? Did they bring some from Earth on the Ark?

What the List is isn’t directly stated, but considering the events of Eugenesis, it isn’t hard to guess.

Meanwhile, Bombshell, everyone’s favorite mind-controller and giant bug, is messing around with the Quintesson corpses, utterly fascinated by the way they’re built.

I never covered this in my breakdown, but the little dudes who were flying the Tridents? All those nameless nobodies? They’re hardwired into their controls. There’s no transition from steering to hand or seat to ass, it’s all one and the same.

Swindle is, of course, disgusted by Bombshell’s little distraction, but there’s not much point arguing with a guy like that, especially now that the tentative peace in the wake of the Quintesson invasion is about to be bashed in with a hammer, since Galvatron’s going to be back on Cybertron in the next few hours. Flattop cuts in, saying they’ve got company inbound.

Over at the remains of Delphi, Scourge has decided to have a little alone time, just thinking his thoughts. It’s nice and quiet, the sunset is positively lovely, and he’s honestly probably overdue for some sort of interruption.

Welp, looks like he wasn’t dead after all. I guess he just decided he was going to sit the entirety of the genocide out.

Though maybe he just didn’t realize it was happening, because this Cyclonus really is just stupid as shit. He laughs at a comment Scourge makes, completely forgetting that they’re in the Sonic Canyons, and nearly kills the both of them. Once the danger’s passed, Cyclonus finally asks Scourge what’s bothering him. What a good friend.

Back at Autobase, Rodimus Prime is sad. He’s always sad, but he’s particularly sad right now. We’re still only a couple of days beyond him having woken up, so he probably stopped self-isolating over Kup’s death roughly twenty minutes ago.

He’s currently reflecting on Emyrissus, the Micromaster he sent to assassinate Galvatron, whose death was as awful as it was predictable, or so Rodimus likes to think. He knew Emyrissus was going to die.

You see, this is why Rodimus is a better leader than Optimus is, at least in terms of empathy. He understands that he’s in a position of power, one that can make or break a person’s very life, and that scares the shit out of him. Regardless of Eugenesis Optimus being one from prior to the horrendously long war, he was still enough of a figurehead to at least entertain the thought of his being put on a pedestal by those around him.

But no. Instead everyone deserved to die.

Thanks, space dad.

Stevenson, you are playing a dangerous game here-

Mirage and his friends are being ambushed by a group of Decepticons. He’s currently rocking around with Ramhorn and Kick-Off, and they’re currently barricading themselves behind a wall. Ramhorn, being a wildcard, runs out of cover and decides to just go for it. Mirage silently wonders if this is why the Transformers as a race can’t function outside of making war. That thought doesn’t get to the self-reflection stage, however, as he basically says “fuck it” and vaults over the wall himself, though he at least has the bright idea to go invisible beforehand.

Getting back to Scourge’s angst, it would seem that Nightbeat was right on the money about not having hit him with the mind wipe device. Scourge remembered everything, and it's tortured him for the last 27 years- even more if you think too hard about all the time travel. He was fully convinced that after he went through the wormhole, that was it- the Transformers lost, and he had his very own countdown. THAT would be why he blew himself up in Liars, A-to-D.

Now that it looks like everything’s going to be about as okay as it gets on Cybertron, he’s really not sure what to do with his life anymore.

These two fucking idiots have a great big laugh together, to the point where the nearby homeless population wonder if the Quintessons came back. They eventually calm down, and Scourge asks Cyclonus what I’ve been wondering for months: what he did in the Eugenesis Wars.

Over with Rodimus, Kup is at the door.

Alright, let’s see where this goes. I’m betting on hallucination.

Kup enters, closing the door behind him at Rodimus’ request, and comments on the state of the office. It’s positively dreary, and that’s with the inclusion of the window.

Kup seems to be a sort of manifestation of Rodimus’ self-loathing. He should probably see a therapist, but last I heard Rung was over with the Decepticons, and he’s probably the only mental health specialist on the entire planet.

Which makes me wonder why Galvatron hasn’t killed him yet. Guy’s not exactly a fan of therapy.

Kup’s tough love comes from a good place- he can see Rodimus is deep in the rut that is Depression™, and he needs a swift kick in the ass to help him get back on track. I don’t quite think that’s how this works, but something’s got to give, I suppose.

Because you see, Kup’s seen the future, and it ain’t pretty- Star Saber isn’t someone to be trusted, and his whole gang is going to be coming down on Cybertron like sharks smelling blood.

Then again, Kup’s not real, so what does he know?

Rodimus asks what this is all actually about, seeing as Kup always had a reason for showing up for anything. Kup admits that he wants to talk about Emyrissus.

The problem is that things are only going to get harder from here on, as the lines between good and evil are blurred, as the Autobots sink deeper into the dredges of war to try and win this thing. Emyrissus is just the most glaring example at present. Kup opens the door, and Rodimus worries that the Micromaster is going to pop out to join the conversation, but Kup just says that he doesn’t have enough memories of the guy to build him in his head like he can Kup.

Kup tells Rodimus that he needs to learn to let go, and stop blaming himself for everything that’s gone wrong with this war. Then he’s gone.

Rodimus goes to join the troops.

Over with Mirage, things aren’t going so hot. He’s been shot. His team members are either too busy to help, or completely AWOL. He scrabbles for his gun- very reminiscent of Liars A-to-D here- only to have someone else’s gun put to his head. It’s Bombshell. Look at the scenes coming together all nice-like!

Bombshell threatens to shoot him, and Mirage is very okay with this plan. He’s hit his nihilism barrier and broken clean through it- what’s the point? All they do is fight, all they do is kill, and one day there won’t be anything left, and all will be lost to time. There’s nothing worth living for anymore.

The postpartum depression is hitting Mirage very hard.

Bombshell recalls the Quintesson soldier, and orders his team to stand down. They won’t be killing anyone today. He promises Mirage that when the war is over, they’ll have a chat, then leaves.

Mirage is, understandably, confused by this.

Back at Autobase, Rodimus is being followed by a smattering of groupies, as he makes his way to the List. By the time he gets there, nearly fifty folks have joined the throng. He figures now is as good a time as any to speak to his troops, and he hops up on a toolbox so everyone can see him.

First and foremost, he tells them that he’s proud of them. Then thanks them for being here with him.

Then he addresses the elephant in the room.

Then Nightbeat pushes through the crowd towards the Prime. He’s fresh off the presses, and he knows what Rodimus was about to do to the List. He knows, and he encourages it.

With a flourish, Rodimus Prime rips the List off of the wall, and everyone bursts into applause.

Finally getting back to Cyclonus’ deal, it turns out he was buried under Darkmount the whole time. Bit anticlimactic, that. With the Mystery of the Missing Cyclonus solved, the two decide to go get plastered at Maccadam’s, and also maybe stab a few people. Good times.

Meanwhile, off-world, Great Shot enters the office of Star Saber, and they join in the long-standing tradition of talking shit about Old Cybertron. Star Saber is less than impressed with the Autobots, and how they got their asses kicked by a bunch of guys that look like flying eggs. Still, helping them out gives him something to do, and that something is rebuilding Old Cybertron into the gleaming, perfect image of New Cybertron.

And then there’s a quote directly ripped from Hitler himself, to really sell you on the fact that Star Saber is a Bad Fucking Dude.

The end!

This will most likely be the only non-Roberts Eugenesis-related work I’ll be looking at. There are others, but they’ve been lost to time. Also, they’re not really why I’m doing this, so… yeah.

Up next…

Huh.

Guess I’ll start on the professional stuff.

#transformers#eugenesis#the persistence of loss#maccadam#Hannzreads#text post#long post#prose writing


Text

Weekend Top Ten #444

Top Ten PlayStation Games I Hope Get PC Releases

And once again I turn my steely eye to the world of gaming. This time though I’m pulling on my blue jumper and talking about PlayStation (because I guess Xbox would have a green one and Nintendo’s would be red? I dunno, I’m making this up as I go). I’ve said in the past that as much as I like Sony and would love a PlayStation, I’ve never actually owned one myself because I always tend to buy an Xbox first. As much as I love the gaming industry, gaming as a pastime, and games themselves as an art-form, I have a rapidly dwindling supply of free time and unfortunately once I factor in trying to see enough films to maintain polite conversation and staring at my phone for hours on end in order to maximise my ennui, I don’t have an awful lot of minutes left in the day to dive into a wide variety of triple-A titles. As such, because I’m used to the Xbox’s way of working, because I tend to prefer its controllers and its whole ecosystem, and because I love several of their franchises (Halo and Fable especially), it’s always Xbox I gravitate towards, and then I just don’t have enough gaming time left over to justify the expense of a second huge console. And let’s get it out of the way – the PlayStation 5 is huge.

As a result, as time has gone on, there is an ever-growing number of PlayStation exclusives that I’ve barely played. In The Olden Days this was less of a problem, as pre-kids (and, heck, pre-everything considering how old the original PlayStation is at this point) I was able to saunter over to a friend’s house and try out games on their console. In this fashion I sampled a good many PS1 and PS2 titles such as Metal Gear Solid, WipeOut, Resident Evil, Time Splitters, Ico, and my absolute favourite, the original PS2 Transformers game. By the time PS3 rolled around this happened more rarely, but I’d argue it was fairly late in the generation when they showed off any games that really interested me (specifically those from Naughty Dog); and with the PS4, I’ve barely played on one at all, more’s the pity. And I really do mean more’s the pity, because this time around there have been loads of games I wanted; they really have had a better generation than Xbox, even if I couldn’t give up my Halo or Gears, to say nothing of the huge collection of backwards compatible games that get played to death by my kids.

That’s why I’m overjoyed that Sony have finally taken a leaf out of Microsoft’s book and are starting to release some of their bigger games on PC. I’ve been largely laptop-only for about a decade now, but it is a very powerful laptop, even if it’s not dedicated gaming hardware, and I’ve been pleasantly surprised how well it manages to run even quite demanding 3D games such as Assassin’s Creed Odyssey or Gears Tactics (I really must try out Flight Simulator sometime soon). The first big Sony exclusives to drop on Steam are Death Stranding (which looks bonkers but not my cup of tea) and the intriguing Horizon: Zero Dawn, which I’d probably really like. But those were never the Sony games that totally floated my boat; no, there are others, and I would absolutely love it if Sony saw fit to unleash them on Steam in the near future. Hey, I’m not picky; you don’t need to day-and-date it. I don’t mind enjoying a “Part I” whilst PS5 gamers are playing the hot new “Part II”. But I increasingly think be-all-and-end-all exclusives are rather old-fashioned, and whilst I get that there should probably be games tied to specific boxes, the services those box-companies provide should be more universal. That’s why I like Microsoft’s Play Anywhere initiative and the mobile game streaming via xCloud. But this is a Sony list, and these are some very, very good Sony games. I assume. By and large, I haven’t played them.

Marvel’s Spider-Man (2018): I love Rocksteady’s Arkham series of Batman games, but I do find them a bit relentlessly dark and miserable with an oh-so-gritty art style. What could be better, then, than a game that seems to play broadly similar but is nice, bright, funny, and sunny? Spider-Man is the perfect hero for that sort of game, and this looks absolutely like everything I’d ever want from a superhero game. I really, really, hope it comes to PC at some point, but I’ll be honest, I doubt it.

The Last of Us (2013): I like a good third-person action-adventure, whether it’s Gears, Tomb Raider, or Jedi: Fallen Order. TLOU looks most up my street, however, for its story, and its seemingly moving depiction of a family unit forming amidst the end of the world. By all accounts it’s a tear-jerker; I’ve tried to steer clear of the plot. Porting it over to PC whilst the well-received sequel is getting an inevitable PS5 upgrade seems like a good idea.

Uncharted: The Nathan Drake Collection (2015): I’ve very briefly played one of the Uncharteds, but not really; I hear they’re like the Tomb Raider reboot, but better, which seems nice. A rollicking third-person action-adventure with an Indiana Jones spirit? Count me in. With the long-mooted film adaptation finally underway, COVID notwithstanding, it seems like a good time to let PC gamers have a go at the classic saga. I’d add part 4 to the existing trilogy collection before shunting it to Steam.

Shadow of the Colossus (2018): I’ve played Ico a bit so I’m broadly familiar with the tone of these games, but Colossus seems like an even cooler idea. Scaling moving monsters, killing them but feeling guilty, sounds like both a great gameplay mechanic and a moving and evocative theme for a game. Port the recent remake to PC please, Mr. Sony.

Ratchet and Clank (2016): full disclosure: the new PS5 Ratchet game is the only title I’ve seen demoed that really looks next-gen, with its fancy ray-tracing, excessive particle effects, and funky portal-based gameplay. How’s about, then, giving PC gamers a chance to enjoy the relatively-recent remake of the very first game? A bit of cross-promotion works wonders, Sony.

God of War (2018): the old PS3-era God of War games never really appealed, I guess because I’m not always a huge fan of hack-and-slash and they gave off a kind of crazy excessive, almost laddish vibe that I found off-putting (having not played them, I may be being incredibly unfair). The new one, though, sounds like it’s all about being a dad and being sad and remorseful, so count me in.

Wipeout Omega Collection (2017): I’ve always enjoyed arcade racers, but one sub-genre that I don’t think gets enough love is a futuristic racer, especially where you’ve got hover cars (they seemed to be quite popular twenty-odd years ago). I played the original Wipeout on my mate’s OG PlayStation, but I’d love it if us PC gamers could play the whole series. Could it possibly be even better than Star Wars Episode I Racer?

LittleBigPlanet 3 (2014): chances are, if I’d done this list back around the time the first two LittleBigPlanet games were released, they’d have topped the chart. They looked like cool, fun platform games, with a fantastic creative aspect; I bet my kids would love them. With that in mind, I’d be over the moon to see Sackboy take a bow on Steam. I’d have put Dreams on this list, incidentally, except I can’t see myself getting a VR set anytime soon.

The Last Guardian (2016): feels a bit of a cheat having both this and Colossus on the list, but I do want to see what the fuss is about. One of those games infamous for its time in development, it seems to be a love-it-or-hate-it affair, and I am intrigued. Plus I want to know who dies at the end, the boy or the monster.

Killzone Shadow Fall (2013): gaming cliché has it that Nintendo does cutesy platformers, Microsoft does shooters, and Sony does third-person action-adventures; so whilst I’m well-versed in Halo and Gears, I’ve never sampled PlayStation’s key FPS franchise. Famous for its genuinely wowing showcase when the PS4 was announced, I’m not sure how good Shadow Fall actually is (or any of its predecessors for that matter) but I’d be very interested in finding out. Alternatively, give us one of the Resistance games and let me tear around an alternative Manchester or something.

So, there we are; ten games that I think are probably quite good – or even, y’know, masterpieces – but I’ve not had the chance to really sample them yet. And short of me picking up a PlayStation on the cheap, I don’t know when I really can. I mean, I told myself I’d buy a second-hand PS3 and a copy of TLOU once this current generation was in full swing, but that never happened. So throw me a bone, Sony! I still want to buy your stuff! Just sell it somewhere else! Somewhere I already am! Like Steam! Please?!


Link

If you've been around as long as I have, you've probably noticed something remarkable about JavaScript (as compared to other languages). It's evolving at a breakneck pace. In general, this is a very good thing (although it can make it quite challenging to keep your skills current). And if you're part of the "new generation" of coders - many of whom only code in JavaScript - this might not even strike you as extraordinary. But as someone who's watched numerous languages evolve over the better part of three decades, let me assure you that, in this category, JavaScript is in a class of its own.

History

Most "modern" languages are maintained (in the worst-case scenario) by a single company. Or they're maintained (in the best-case scenario) by a vast, (virtually) nameless, open-source consortium. Single-company stewardship isn't necessarily "bad". On one hand, it can allow the (small) braintrust of stewards to make quick-and-decisive corrections wherever the language is deemed to be "lacking". On the other hand, it can lead to stagnation if the company's braintrust doesn't favor Improvement X, even in the face of a development community that may be clamoring for Feature X. It can also cause serious headaches if the parent company has objectives that clash with the broader community - or if they ever decide to abandon the project altogether. Open-source projects are generally assumed to be "better". But even they have their downsides. Open-source projects are often plagued with "groupthink" and "analysis paralysis". If you think it's difficult to get a single room of people in your company to agree on anything, try getting a new feature proposal approved in a well-established open-source project. You can submit a proposal saying that, "Bugs are bad." And you can almost be assured that someone in the open-source community (probably, a well-established and respected senior voice in the community) will chime in and say, "Ummm... No, they're not. And we definitely don't need a removeBugs() function in the core language."

JavaScript's Accidental Solution

If you're part of the latest-generation of JavaScript devs, you can be forgiven if you think that the language has always evolved at its current pace. After all, the 2010s have seen an impressive array of new features and syntactic shortcuts added to the language. But let me assure you, it hasn't always been so. The early days of JavaScript were strikingly similar to other trying-to-get-a-foothold languages. The ECMAScript committee had good intentions - but change was slowwwww. (Like it is for nearly any decision-by-large-committee process.) If you don't believe me, just look at what happened (or... didn't happen) with the ECMAScript standard for nearly 10 years, starting in the early 00s. We went many years without having any substantive improvements to the language. And for most of those years, JavaScript was much more of a "toy" than a serious programming language. This was, perhaps, best illustrated by TypeScript. TypeScript wasn't supposed to be a separate "language". It was supposed to be a major enhancement to core JavaScript - an enhancement that was heralded by none other than: Microsoft. But through a series of last-minute backtracking decisions that would require multiple blog posts to explain, MS's TypeScript modifications ended up being rejected. This eventually led to MS releasing TypeScript as its own, separate, open-source project. It also led to years of stagnation in JavaScript. We might still be wallowing in that general malaise if it weren't for the introduction of several key technologies. I'm talking about:

Node.JS

Node Package Manager (NPM)

Babel

[NOTE: If you're part of the adoring Yarn crowd, this isn't meant to sidestep you in any way. Yarn's wonderful. It's great. But I firmly believe that, with respect to the evolution that I'm trying to outline in this article, Node/NPM/Babel were the "sparks" that drove this initial advancement.]

Sidestepping Committees

There's nothing "magical" about JavaScript. Like any other language, it has its strengths (and its flaws). And like any other language, if a broad internet consortium needs to reach consensus about the language's newest features, we could well be waiting a decade-or-more for such improvements. But a funny thing happened on the way to the endless open-source release-candidate debates. The Node folks spurred a dynamic package model called NPM. (Which has had its own share of growing pains - but that's for another article...) And NPM spurred a fantastical, automagical package called Babel. For the first time, Babel gave the burgeoning JavaScript community an incredible ability to evolve the language on their own. Babel created a vast, real-world "proving ground" for advancements in the JavaScript language. If you look at the major advancements in the ECMAScript spec over the last 10+ years, you'd be hard-pressed to find any improvements that weren't first encapsulated in NPM packages, that were then transpiled to backward-compatible JavaScript in Babel, before they were eventually absorbed into the core language itself.
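To make "transpiled to backward-compatible JavaScript" a little more concrete, here's a minimal sketch. The function names (describeToast, describeToastES5) are made up for illustration, and the ES5 version is hand-written to show the general shape of the translation. It is not actual Babel output.

```javascript
// Modern syntax (ES2015+): arrow function, parameter destructuring,
// a default value, and a template literal.
const describeToast = ({ bread, minutes = 2 }) =>
  `${bread} toasted for ${minutes} min`;

// Hand-written ES5 equivalent (hypothetical, for illustration only).
// Every piece of "sugar" above maps onto something older JavaScript could already do.
function describeToastES5(options) {
  var bread = options.bread;
  // Destructuring defaults apply only when the value is undefined.
  var minutes = options.minutes === undefined ? 2 : options.minutes;
  return bread + ' toasted for ' + minutes + ' min';
}

console.log(describeToast({ bread: 'rye' }));
console.log(describeToastES5({ bread: 'rye' }));
```

Both calls build the same string. The newer syntax doesn't add any capability here. It just removes the boilerplate, which is why a tool can mechanically translate one form into the other.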

"Innovations" Are Rarely... Inventions

Maybe you're thinking that an NPM package is not an innovation in the language itself. And many times, I would agree with you. But when something becomes sufficiently-useful to a huge portion of the programming ecosystem, it can, in fact, become an innovation in the underlying language. Let's imagine that, in your JavaScript applications, you repeatedly have the need to makeToast(). Of course, JavaScript has no native makeToast() functionality - so you've coded up some grand, extensive, convoluted utility function that will allow you to makeToast() right in the middle of any application where you feel the need to, well, you know... make toast. After a while, you find yourself copying-and-pasting this amazing utility into all of your applications. Eventually, you feel a bit of developer guilt over this repeated copying-and-pasting, so you encapsulate your awesome makeToast() functionality into an NPM package. The NPM package allows you to do this:

    import toast from 'make-toast';
    const noToastHere = 'plain ol bread';
    const itsToasted = toast.make(noToastHere);

There's a good chance that your incredible toast.make() function utilizes a ton of non-ECMA-standard language constructs. But that's OK. Because all of your non-ECMA-standard language constructs are just a pile of syntactic sugar for things that you could always do in JavaScript - but with a lot more hellacious-looking code. And when you run your revolutionary new toast.make() function through Babel, it transpiles it back down into that old, ugly, IE7-compliant JavaScript that you never wanna have to type out manually. You see, there's a good chance that your amazing toast.make() functionality isn't doing anything that you couldn't always, theoretically, do with old-skool JavaScript. toast.make() presumably just gives you a faster, sleeker, more-efficient way to make toast, rather than relying on every dev team, in every codebase, having to manually figure out how to make their own toast from scratch. To be clear, such "advancements" aren't just about semantics. They absolutely are advancements. If we always had to do this:

    export default function makeToast() {
      // here are my 200 lines of custom, painfully crafted,
      // cross-browser-compliant code that allows me to makeToast()
    }

And now we can just do this:

    import toast from 'make-toast';
    const noToastHere = 'plain ol bread';
    const itsToasted = toast.make(noToastHere);

And, if many thousands of developers all around the world find themselves repeatedly having to either A) import your make-toast package, or B) figure out a way to manually craft the functionality from scratch, then your amazing toast.make() feature is a potentially-significant advancement in the language. More important, if your toast.make() feature becomes so ubiquitous that it's more-or-less "standard" in modern codebases, there's a chance that the ECMAScript committee might actually decide to promote it to the level of being a language construct. (Granted, they may not choose to implement it in the exact same way that you did in your NPM package. But the point is that they might eventually look at what's happening in the broader JS community, realize that vast numbers of codebases now see a need to make toast, and find a way to incorporate this as a base feature in the core language itself.)

Lodash & Underscore

To see this in action, look at many of the core functions that are available under the Underscore or Lodash libraries. When those libraries first rose to prominence, they provided a ton of functionality that you just couldn't do in JavaScript without manually coding out all the functions yourself. Nowadays, those libraries still offer some useful functionality that simply doesn't exist in core JavaScript. But many of their features have actually been adopted into the core language. A good example of this is the Array.prototype functions. One of my pet peeves is when I see a dev import Lodash so they can loop through an array. When Lodash was first introduced, there was no one-liner available in JavaScript that did that. Now... we have Array.prototype functions. But that's not a knock on Lodash. Lodash, Underscore, and other similar libraries did their jobs so well, and became so ubiquitous, that some of their core features ended up being adopted into the language itself. And this all happened in a relatively short period of time (by the standard of typical language evolution).
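As a rough sketch of the Array.prototype point, here's the same task written with a manual loop and then with built-in array methods. The sample data and the function names (breads, toastedNamesManual, toastedNames) are invented for this example, and no library is imported in either version.

```javascript
// Hypothetical sample data for illustration.
const breads = [
  { name: 'rye', toasted: true },
  { name: 'sourdough', toasted: false },
  { name: 'bagel', toasted: true },
];

// The manual approach: an index-based loop and a hand-built result array.
function toastedNamesManual(items) {
  var names = [];
  for (var i = 0; i < items.length; i++) {
    if (items[i].toasted) {
      names.push(items[i].name);
    }
  }
  return names;
}

// The built-in approach: filter() and map() live on Array.prototype.
const toastedNames = (items) =>
  items.filter((item) => item.toasted).map((item) => item.name);

// Other built-ins cover common lookups: some() returns a boolean,
// find() returns the first matching element (or undefined).
const hasBagel = breads.some((item) => item.name === 'bagel');
const firstUntoasted = breads.find((item) => !item.toasted);

console.log(toastedNamesManual(breads), toastedNames(breads));
console.log(hasBagel, firstUntoasted.name);
```

For simple looping, filtering, and searching like this, the native methods are usually enough. A utility library still earns its import when you need something the core language doesn't offer.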

Innovation vs Stagnation

If you think that JavaScript's recent barrage of advancements is "normal" for a programming language, let me assure you: It's not. I could probably come up with 50 sad examples of language stagnation, but let me give you one very-specific scenario where one language (JavaScript) shines and another (Java) has crawled into a corner to hide. In JavaScript, I can now do this:

// Default exports can be given any local name at the point of import.
import SlidingSwitch from '@material-ui/core/Switch';
// Named exports are aliased with `as`.
import {Route, Switch as RouterSwitch} from 'react-router-dom';
import CustomSwitch from './common/form-elements/Switch';

export default function Foo() {
  return (
    <>
      <RouterSwitch>
        <Route path={'/path1'} component={Path1Component}/>
        <Route path={'/path2'} component={Path2Component}/>
      </RouterSwitch>
      <div>Here is my SlidingSwitch <SlidingSwitch/></div>
      <div>Here is my CustomSwitch <CustomSwitch/></div>
    </>
  );
}

There's nothing too rocket-sciencey going on here. I'm importing three different components into my code. It just so happens that all three of them were originally written with the same name. But that's OK. JavaScript gives us an easy way to deal with the naming collisions by aliasing them at the point where they're imported. This doesn't make JavaScript unique or special. Other languages have import aliasing features. But it's worthwhile to note that, a decade-or-more ago, you couldn't do this in JavaScript. So how do we handle such naming collisions in Java??? It would look something like this:

public class MyClass {
    // Java has no import aliasing, and importing more than one class named
    // Switch is a compile error, so each one is spelled out in full.
    material.ui.core.Switch slidingSwitch = new material.ui.core.Switch();
    react.router.dom.Switch routerSwitch = new react.router.dom.Switch();
    com.companydomain.common.utils.Switch customSwitch = new com.companydomain.common.utils.Switch();
}

If that looks like a vomiting of word soup, that's because... it is. Since you can't alias imports in Java, the only way to deal with the issue is to handle each different type of Switch by using its fully-qualified name. To be fair, every language has quirks and, at times, some annoying little limitations. The point of this post is: When the language runs into limitations, how are those limitations resolved?? Five years ago there were no imports in JavaScript. But now we have imports, with import aliasing thrown in as a bonus. Java's had import capabilities since it was introduced. But it's never had import aliasing. Is that because no one wants import aliasing in Java? Nope. Import aliasing has been proposed as a new feature numerous times over the last twenty+ years. Every single time, it's been shot down - usually by a single, senior member of the Java open-source committee who just looks at the proposal and says, "Ummm... No. You don't need that. DENIED." This is where Node/NPM/Babel is so critical in vaulting JavaScript past other languages. In Java, if you really want to have import aliasing, this is what that process looks like:

Submit a JDK Enhancement Proposal (JEP).

Have your JEP summarily dismissed with a one-line rejection like, "You don't need that."

Just accept that the language doesn't have your desired feature and trudge along accordingly.

Maybe, a few years later, submit a new JEP (and probably have it denied again).

This is the way it worked in JavaScript:

No one had to sit around and wait for imports or import aliasing to be added to JavaScript by a committee. They went out and made their own packages - like, RequireJS.

As it became clear that third-party import solutions were becoming ubiquitous, the ECMAScript committee began working on a formal spec.

Even if the ECMAScript committee had ignored imports altogether, or had denied import aliasing as a feature, anyone who wanted it could continue to use the third-party packages such as RequireJS - so no one was ever beholden to the whims of a stodgy old committee.

Agility... By Accident

First, it bears mentioning that JavaScript's NPM/Babel ecosystem is not a magic cure-all for the administrative hurdles inherent in upgrading an entire programming language. With these tools, we can do an amazing "end-around" to get non-standard functionality that would take years - or decades - to get approved through regular channels. But it can still only provide features that could already be done, in some longer and more-manual form, through the language itself. If you want JavaScript to do something that it simply cannot do today, you still have to go through the ECMAScript committee. But for everything else, NPM/Babel provide a dynamic playground in which proposed features can be tested in live apps. And the download/installation of those features serves as a de facto vote in favor of those approaches.

I don't pretend for an instant that this state-of-affairs was a conscious strategy devised by anyone involved in the planning process for JavaScript. In fact, JavaScript's early history shows that it's just as susceptible to "analysis paralysis" as any other language. But the Babel/NPM revolution has allowed for the ecosystem of devs to put natural pressure on the planning committees by allowing us to install-and-run experimental packages without the fear that they won't compile on our users' systems (i.e., browsers). This, in turn, has sparked rapid evolution from a sleepy little language, in the early part of the century, to a full-bore programming juggernaut today.

Of course, this doesn't make JavaScript any "better" than Java (or any other language). There are certain virtues to waiting a quarter century (or more) for something as simple and as basic as import aliasing. Waiting builds character. Waiting helps you appreciate life's more refined pleasures - like, Tiger King, or WWE wrestling. If Zen Buddhists had created software development, they would have most certainly included vast amounts of waiting. And multiple layers of petty denials.

I'm pretty sure that if I can just live to be 100, I'll probably see the day when Java finally implements import aliasing. And ohhhhh man! Will that day be grand!

0 notes

Text

The Slow Death of Internet Explorer and the Future of Progressive Enhancement

My first full-time developer job was at a small company. We didn’t have BrowserStack, so we cobbled together a makeshift device lab. Viewing a site I’d been making on a busted first-generation iPad with an outdated version of Safari, I saw a distorted, failed mess. It brought home to me a quote from Douglas Crockford, who once deemed the web “the most hostile software engineering environment imaginable.”

The “works best with Chrome” problem

Because of this difficulty, a problem has emerged. Earlier this year, a widely shared article in the Verge warned of “works best with Chrome” messages seen around the web.

Hi Larry, we apologize for the frustration. Groupon is optimized to be used on a Google Chrome browser, and while you are definitely able to use Firefox or another browser if you'd like, there can be delays when Groupon is not used through Google Chrome.

— Groupon Help U.S. (@GrouponHelpUS) November 26, 2017

Hi Rustram. We'd always recommend that you use Google Chrome to browse the site: we've optimised things for this browser. Thanks.

— Airbnb Help (@AirbnbHelp) July 12, 2016

There are more examples of this problem. In the popular messaging app Slack, voice calls work only in Chrome. In response to help requests, Slack explains its decision like this: “It requires significant effort for us to build out support and triage issues on each browser, so we’re focused on providing a great experience in Chrome.” (Emphasis mine.) Google itself has repeatedly built sites—including Google Meet, Allo, YouTube TV, Google Earth, and YouTube Studio—that block alternative browsers entirely. This is clearly a bad practice, but highlights the fact that cross-browser compatibility can be difficult and time-consuming.

The significant feature gap, though, isn’t between Chrome and everything else. Of far more significance is the increasingly gaping chasm between Internet Explorer and every other major browser. Should our development practices be hamstrung by the past? Or should we dash into the future relinquishing some users in our wake? I’ll argue for a middle ground. We can make life easier for ourselves without breaking the backward compatibility of the web.

The widening gulf

Chrome, Opera, and Firefox ship new features constantly. Edge and Safari eventually catch up. Internet Explorer, meanwhile, has been all but abandoned by Microsoft, which is attempting to push Windows users toward Edge. IE receives nothing but security updates. It’s a frustrating period for client-side developers. We read about new features but are often unable to use them—due to a single browser with a diminishing market share.

Internet Explorer’s global market share since 2013 is shown in dark blue. It now stands at just 3 percent.

Some new features are utterly trivial (caret-color!); some are for particular use cases you may never have (WebGL 2.0, Web MIDI, Web Bluetooth). Others already feel near-essential for even the simplest sites (object-fit, Grid).

A list of features supported in Chrome but unavailable in IE11, taken from caniuse.com. This is a truncated and incomplete screenshot of an extraordinarily long list.

The promise and reality of progressive enhancement

For content-driven sites, the question of browser support should never be answered with a simple yes or no. CSS and HTML were designed to be fault-tolerant. If a particular browser doesn’t support shape-outside or service workers or font-display, you can still use those features. Your website will not implode. It’ll just lack that extra stylistic flourish or performance optimization in non-supporting browsers.

Other features, such as CSS Grid, require a bit more work. Your page layout is less enhancement than necessity, and Grid has finally brought a real layout system to the web. When used with care for simple cases, Grid can gracefully fall back to older layout techniques. We could, for example, fall back to flex-wrap. Flexbox is by now a taken-for-granted feature among developers, yet even that is riddled with bugs in IE11.

.grid > * {
  width: 270px; /* no grid fallback style */
  margin-right: 30px; /* no grid fallback style */
}

@supports (display: grid) {
  .grid > * {
    width: auto;
    margin-right: 0;
  }
}

In the code above, I’m setting all the immediate children of the grid to have a specified width and a margin. For browsers that support Grid, I’ll use grid-gap in place of margin and define the width of the items with the grid-template-columns property. It’s not difficult, but it adds bloat and complexity if it’s repeated throughout a codebase for different layouts. As we start building entire page layouts with Grid (and eventually display: contents), providing a fallback for IE will become increasingly arduous. By using @supports for complex layout tasks, we’re effectively solving the same problem twice—using two different methods to create a similar result.

Not every feature can be used as an enhancement. Some things are imperative. People have been getting excited about CSS custom properties since 2013, but they’re still not widely used, and you can guess why: Internet Explorer doesn’t support them. Or take Shadow DOM. People have been doing conference talks about it for more than five years. It’s finally set to land in Firefox and Edge this year, and lands in Internet Explorer … at no time in the future. You can’t patch support with transpilers or polyfills or prefixes.

Users have more browsers than ever to choose from, yet IE manages to single-handedly tie us to the pre-evergreen past of the web. If developing Chrome-only websites represents one extreme of bad development practice, shackling yourself to a vestigial, obsolete, zombie browser surely represents the other.

The problem with shoehorning

Rather than eschew modern JavaScript features, polyfilling and transpiling have become the norm. ES6 is supported everywhere other than IE, yet we’re sending all browsers transpiled versions of our code. Transpilation isn’t great for performance. A single five-line async function, for example, may well transpile to twenty-five lines of code.

“I feel some guilt about the current state of affairs,” Alex Russell said of his previous role leading development of Traceur, a transpiler that predated Babel. “I see so many traces where the combination of Babel transpilation overhead and poor [webpack] foo totally sink the performance of a site. … I’m sad that we’re still playing this game.”

What you can’t transpile, you can often polyfill. Polyfill.io has become massively popular. Chrome gets sent a blank file. Ancient versions of IE receive a giant mountain of polyfills. We are sending the largest payload to those the least equipped to deal with it—people stuck on slow, old machines.
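To make the term concrete, here is a minimal sketch of what a hand-rolled polyfill looks like. Array.prototype.includes is chosen only as a familiar example of a method IE11 lacks; the includesFallback name is hypothetical, the implementation is simplified rather than spec-complete, and it is not the code Polyfill.io serves.

```javascript
// Simplified stand-in for Array.prototype.includes (not spec-complete).
function includesFallback(searchElement, fromIndex) {
  var length = this.length >>> 0;
  var start = fromIndex | 0; // undefined becomes 0
  var i = start >= 0 ? start : Math.max(length + start, 0);
  for (; i < length; i++) {
    var current = this[i];
    // Treat NaN as equal to NaN, as the native method does.
    if (current === searchElement ||
        (current !== current && searchElement !== searchElement)) {
      return true;
    }
  }
  return false;
}

// Feature-detect first: browsers with a native version keep it untouched.
if (!Array.prototype.includes) {
  Object.defineProperty(Array.prototype, 'includes', {
    value: includesFallback,
    writable: true,
    configurable: true
  });
}
```

Every patched method costs bytes in this way, and only the browsers that fail the feature test pay for them.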

What is to be done?

Prioritize content

Cutting the mustard is a technique popularized by the front-end team at BBC News. The approach cuts the browser market in two: all browsers receive a base experience or core content. JavaScript is conditionally loaded only by the more capable browsers. Back in 2012, their dividing line was this:

if ('querySelector' in document
    && 'localStorage' in window
    && 'addEventListener' in window) {
  // load the javascript
}

Tom Maslen, then a lead developer at the BBC, explained the rationale: “Over the last few years I feel that our industry has gotten lazy because of the crazy download speeds that broadband has given us. Everyone stopped worrying about how large their web pages were and added a ton of JS libraries, CSS files, and massive images into the DOM. This has continued on to mobile platforms that don’t always have broadband speeds or hardware capacity to render complex code.”

The Guardian, meanwhile, entirely omits both JavaScript and stylesheets from Internet Explorer 8 and further back.

The Guardian navigation as seen in Internet Explorer 8. Unsophisticated yet functional.

Nature.com takes a similar approach, delivering only a very limited stylesheet to anything older than IE10.

The nature.com homepage as seen in Internet Explorer 9.

Were you to break into a museum, steal an ancient computer, and open Netscape Navigator, you could still happily view these websites. A user comes to your site for the content. They didn’t come to see a pretty gradient or a nicely rounded border-radius. They certainly didn’t come for the potentially nauseating parallax scroll animation.

Anyone who’s been developing for the web for any amount of time will have come across a browser bug. You check your new feature in every major browser and it works perfectly—except in one. Memorizing support info from caniuse.com and using progressive enhancement is no guarantee that every feature of your site will work as expected.

The W3C’s website for the CSS Working Group as viewed in the latest version of Safari.

Regardless of how perfectly formed and well-written your code, sometimes things break through no fault of your own, even in modern browsers. If you’re not actively testing your site, bugs are more likely to reach your users, unbeknownst to you. Rather than transpiling and polyfilling and hoping for the best, we can deliver what the person came for, in the most resilient, performant, and robust form possible: unadulterated HTML. No company has the resources to actively test their site on every old version of every browser. Malfunctioning JavaScript can ruin a web experience and make a simple page unusable. Rather than leaving users to a mass of polyfills and potential JavaScript errors, we give them a basic but functional experience.

Make a clean break

What could a mustard cut look like going forward? You could conduct a feature query using JavaScript to conditionally load the stylesheet, but relying on JavaScript introduces a brittleness that would be best to avoid. You can’t use @import inside an @supports block, so we’re left with media queries.
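For comparison, the JavaScript route mentioned above might look something like this sketch. CSS.supports is a real browser API, but the helper names, the choice of display: grid as the test, and the stylesheet.css path are assumptions made for illustration. If the script fails to run, no stylesheet is loaded at all, which is the brittleness in question.

```javascript
// Returns true when the given window-like object reports CSS Grid support.
function supportsGrid(win) {
  return Boolean(
    win && win.CSS && typeof win.CSS.supports === 'function' &&
    win.CSS.supports('display', 'grid')
  );
}

// Appends a stylesheet link to the document head if the browser passes the test.
function loadModernStyles(win, doc, href) {
  if (!supportsGrid(win)) {
    return false; // legacy browsers keep the unstyled HTML
  }
  var link = doc.createElement('link');
  link.rel = 'stylesheet';
  link.href = href;
  doc.head.appendChild(link);
  return true;
}

// Only runs in a browser; the path is a placeholder.
if (typeof window !== 'undefined' && typeof document !== 'undefined') {
  loadModernStyles(window, document, 'stylesheet.css');
}
```

Passing window and document in as arguments keeps the check easy to exercise outside a browser, but it does nothing to remove the dependency on JavaScript itself.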

The following query will prevent the CSS file from being delivered to any version of Internet Explorer and older versions of other browsers:

<link id="mustardcut" rel="stylesheet" href="stylesheet.css" media="
  only screen,
  only all and (pointer: fine),
  only all and (pointer: coarse),
  only all and (pointer: none),
  (min--moz-device-pixel-ratio:0) and (display-mode:browser),
  (min--moz-device-pixel-ratio:0)
">

We’re not really interested in what particular features this query is testing for; it’s just a hacky way to split between legacy and modern browsers. The shiny, modern site will be delivered to Edge, Chrome (and Chrome for Android) 39+, Opera 26+, Safari 9+, Safari on iOS 9+, and Firefox 47+. I based the query on the work of Andy Kirk. If you want to take a cutting-the-mustard approach but have to meet different support demands, he maintains a GitHub repo with a range of options.

We can use the same media query to conditionally load a JavaScript file. This gives us one consistent dividing line between old and modern browsers:

(function() {
  var linkEl = document.getElementById('mustardcut');
  if (window.matchMedia && window.matchMedia(linkEl.media).matches) {
    var script = document.createElement('script');
    script.src = 'your-script.js';
    script.async = true;
    document.body.appendChild(script);
  }
})();

matchMedia brings the power of CSS media queries to JavaScript. The matches property is a boolean that reflects the result of the query. If the media query we defined in the link tag evaluates to true, the JavaScript file will be added to the page.

It might seem like an extreme solution. From a marketing point of view, the site no longer looks “professional” for a small number of visitors. However, we’ve managed to improve the performance for those stuck on old technology while also opening the possibility of using the latest standards on browsers that support them. This is far from a new approach. All the way back in 2001, A List Apart stopped delivering a visual design to Netscape 4. Readership among users of that browser went up.

Front-end development is complicated at the best of times. Adding support for a technologically obsolete browser adds an inordinate amount of time and frustration to the development process. Testing becomes onerous. Bug-fixing looms large.

By making a clean break with the past, we can focus our energies on building modern sites using modern standards without leaving users stuck on antiquated browsers with an untested and possibly broken site. We save a huge amount of mental overhead. If your content has real value, it can survive without flashy embellishments. And for Internet Explorer users on Windows 10, Edge is preinstalled. The full experience is only a click away.

Internet Explorer 11 with its ever-present “Open Microsoft Edge” button.

Developers must avoid living in a bubble of MacBook Pros and superfast connections. There’s no magic bullet that enables developers to use bleeding-edge features. You may still need Autoprefixer and polyfills. If you’re planning to have a large user base in Asia and Africa, you’ll need to build a site that looks great in Opera Mini and UC Browser, which have their own limitations. You might choose a different cutoff point for now, but it will increasingly pay off, in terms of both user experience and developer experience, to make use of what the modern web has to offer.

https://ift.tt/2KlVR59

0 notes

Text

The Slow Death of Internet Explorer and the Future of Progressive Enhancement

My first full-time developer job was at a small company. We didn’t have BrowserStack, so we cobbled together a makeshift device lab. Viewing a site I’d been making on a busted first-generation iPad with an outdated version of Safari, I saw a distorted, failed mess. It brought home to me a quote from Douglas Crockford, who once deemed the web “the most hostile software engineering environment imaginable.”

The “works best with Chrome” problem

Because of this difficulty, a problem has emerged. Earlier this year, a widely shared article in the Verge warned of “works best with Chrome” messages seen around the web.

Hi Larry, we apologize for the frustration. Groupon is optimized to be used on a Google Chrome browser, and while you are definitely able to use Firefox or another browser if you'd like, there can be delays when Groupon is not used through Google Chrome.

— Groupon Help U.S. (@GrouponHelpUS) November 26, 2017

Hi Rustram. We'd always recommend that you use Google Chrome to browse the site: we've optimised things for this browser. Thanks.

— Airbnb Help (@AirbnbHelp) July 12, 2016

There are more examples of this problem. In the popular messaging app Slack, voice calls work only in Chrome. In response to help requests, Slack explains its decision like this: “It requires significant effort for us to build out support and triage issues on each browser, so we’re focused on providing a great experience in Chrome.” (Emphasis mine.) Google itself has repeatedly built sites—including Google Meet, Allo, YouTube TV, Google Earth, and YouTube Studio—that block alternative browsers entirely. This is clearly a bad practice, but highlights the fact that cross-browser compatibility can be difficult and time-consuming.

The significant feature gap, though, isn’t between Chrome and everything else. Of far more significance is the increasingly gaping chasm between Internet Explorer and every other major browser. Should our development practices be hamstrung by the past? Or should we dash into the future relinquishing some users in our wake? I’ll argue for a middle ground. We can make life easier for ourselves without breaking the backward compatibility of the web.

The widening gulf

Chrome, Opera, and Firefox ship new features constantly. Edge and Safari eventually catch up. Internet Explorer, meanwhile, has been all but abandoned by Microsoft, which is attempting to push Windows users toward Edge. IE receives nothing but security updates. It’s a frustrating period for client-side developers. We read about new features but are often unable to use them—due to a single browser with a diminishing market share.

Internet Explorer’s global market share since 2013 is shown in dark blue. It now stands at just 3 percent.

Some new features are utterly trivial (caret-color!); some are for particular use cases you may never have (WebGL 2.0, Web MIDI, Web Bluetooth). Others already feel near-essential for even the simplest sites (object-fit, Grid).

A list of features supported in Chrome but unavailable in IE11, taken from caniuse.com. This is a truncated and incomplete screenshot of an extraordinarily long list. The promise and reality of progressive enhancement

For content-driven sites, the question of browser support should never be answered with a simple yes or no. CSS and HTML were designed to be fault-tolerant. If a particular browser doesn’t support shape-outside or service workers or font-display, you can still use those features. Your website will not implode. It’ll just lack that extra stylistic flourish or performance optimization in non-supporting browsers.

Other features, such as CSS Grid, require a bit more work. Your page layout is less enhancement than necessity, and Grid has finally brought a real layout system to the web. When used with care for simple cases, Grid can gracefully fall back to older layout techniques. We could, for example, fall back to flex-wrap. Flexbox is by now a taken-for-granted feature among developers, yet even that is riddled with bugs in IE11.

.grid > * { width: 270px; /* no grid fallback style */ margin-right: 30px; /* no grid fallback style */ } @supports (display: grid) { .grid > * { width: auto; margin-right: 0; } }

In the code above, I’m setting all the immediate children of the grid to have a specified width and a margin. For browsers that support Grid, I’ll use grid-gap in place of margin and define the width of the items with the grid-template-columns property. It’s not difficult, but it adds bloat and complexity if it’s repeated throughout a codebase for different layouts. As we start building entire page layouts with Grid (and eventually display: contents), providing a fallback for IE will become increasingly arduous. By using @supports for complex layout tasks, we’re effectively solving the same problem twice—using two different methods to create a similar result.

Not every feature can be used as an enhancement. Some things are imperative. People have been getting excited about CSS custom properties since 2013, but they’re still not widely used, and you can guess why: Internet Explorer doesn’t support them. Or take Shadow DOM. People have been doing conference talks about it for more than five years. It’s finally set to land in Firefox and Edge this year, and lands in Internet Explorer … at no time in the future. You can’t patch support with transpilers or polyfills or prefixes.

Users have more browsers than ever to choose from, yet IE manages to single-handedly tie us to the pre-evergreen past of the web. If developing Chrome-only websites represents one extreme of bad development practice, shackling yourself to a vestigial, obsolete, zombie browser surely represents the other.

The problem with shoehorning

Rather than eschew modern JavaScript features, polyfilling and transpiling have become the norm. ES6 is supported everywhere other than IE, yet we’re sending all browsers transpiled versions of our code. Transpilation isn’t great for performance. A single five-line async function, for example, may well transpile to twenty-five lines of code.

“I feel some guilt about the current state of affairs,” Alex Russell said of his previous role leading development of Traceur, a transpiler that predated Babel. “I see so many traces where the combination of Babel transpilation overhead and poor [webpack] foo totally sink the performance of a site. … I’m sad that we’re still playing this game.”

What you can’t transpile, you can often polyfill. Polyfill.io has become massively popular. Chrome gets sent a blank file. Ancient versions of IE receive a giant mountain of polyfills. We are sending the largest payload to those the least equipped to deal with it—people stuck on slow, old machines.

What is to be done?Prioritize content

Cutting the mustard is a technique popularized by the front-end team at BBC News. The approach cuts the browser market in two: all browsers receive a base experience or core content. JavaScript is conditionally loaded only by the more capable browsers. Back in 2012, their dividing line was this:

if ('querySelector' in document && 'localStorage' in window && 'addEventListener' in window) { // load the javascript }

Tom Maslen, then a lead developer at the BBC, explained the rationale: “Over the last few years I feel that our industry has gotten lazy because of the crazy download speeds that broadband has given us. Everyone stopped worrying about how large their web pages were and added a ton of JS libraries, CSS files, and massive images into the DOM. This has continued on to mobile platforms that don’t always have broadband speeds or hardware capacity to render complex code.”

The Guardian, meanwhile, entirely omits both JavaScript and stylesheets from Internet Explorer 8 and further back.

The Guardian navigation as seen in Internet Explorer 8. Unsophisticated yet functional.

Nature.com takes a similar approach, delivering only a very limited stylesheet to anything older than IE10.

The nature.com homepage as seen in Internet Explorer 9.

Were you to break into a museum, steal an ancient computer, and open Netscape Navigator, you could still happily view these websites. A user comes to your site for the content. They didn’t come to see a pretty gradient or a nicely rounded border-radius. They certainly didn’t come for the potentially nauseating parallax scroll animation.

Anyone who’s been developing for the web for any amount of time will have come across a browser bug. You check your new feature in every major browser and it works perfectly—except in one. Memorizing support info from caniuse.com and using progressive enhancement is no guarantee that every feature of your site will work as expected.

The W3C’s website for the CSS Working Group as viewed in the latest version of Safari.

Regardless of how perfectly formed and well-written your code, sometimes things break through no fault of your own, even in modern browsers. If you’re not actively testing your site, bugs are more likely to reach your users, unbeknownst to you. Rather than transpiling and polyfilling and hoping for the best, we can deliver what the person came for, in the most resilient, performant, and robust form possible: unadulterated HTML. No company has the resources to actively test their site on every old version of every browser. Malfunctioning JavaScript can ruin a web experience and make a simple page unusable. Rather than leaving users to a mass of polyfills and potential JavaScript errors, we give them a basic but functional experience.

Make a clean break

What could a mustard cut look like going forward? You could conduct a feature query using JavaScript to conditionally load the stylesheet, but relying on JavaScript introduces a brittleness that would be best to avoid. You can’t use @import inside an @supports block, so we’re left with media queries.

The following query will prevent the CSS file from being delivered to any version of Internet Explorer and older versions of other browsers:

<link id="mustardcut" href="stylesheet.css" media=" only screen, only all and (pointer: fine), only all and (pointer: coarse), only all and (pointer: none), min--moz-device-pixel-ratio:0) and (display-mode:browser), (min--moz-device-pixel-ratio:0) ">

We’re not really interested in what particular features this query is testing for; it’s just a hacky way to split between legacy and modern browsers. The shiny, modern site will be delivered to Edge, Chrome (and Chrome for Android) 39+, Opera 26+, Safari 9+, Safari on iOS 9+, and Firefox 47+. I based the query on the work of Andy Kirk. If you want to take a cutting-the-mustard approach but have to meet different support demands, he maintains a Github repo with a range of options.

We can use the same media query to conditionally load a Javascript file. This gives us one consistent dividing line between old and modern browsers:

(function() { var linkEl = document.getElementById('mustardcut'); if (window.matchMedia && window.matchMedia(linkEl.media).matches) { var script = document.createElement('script'); script.src = 'your-script.js'; script.async = true; document.body.appendChild(script); } })();

matchMedia brings the power of CSS media queries to JavaScript. The matches property is a boolean that reflects the result of the query. If the media query we defined in the link tag evaluates to true, the JavaScript file will be added to the page.

It might seem like an extreme solution. From a marketing point of view, the site no longer looks “professional” for a small amount of visitors. However, we’ve managed to improve the performance for those stuck on old technology while also opening the possibility of using the latest standards on browsers that support them. This is far from a new approach. All the way back in 2001, A List Apart stopped delivering a visual design to Netscape 4. Readership among users of that browser went up.

Front-end development is complicated at the best of times. Adding support for a technologically obsolete browser adds an inordinate amount of time and frustration to the development process. Testing becomes onerous. Bug-fixing looms large.

By making a clean break with the past, we can focus our energies on building modern sites using modern standards without leaving users stuck on antiquated browsers with an untested and possibly broken site. We save a huge amount of mental overhead. If your content has real value, it can survive without flashy embellishments. And for Internet Explorer users on Windows 10, Edge is preinstalled. The full experience is only a click away.

Internet Explorer 11 with its ever-present “Open Microsoft Edge” button.

Developers must avoid living in a bubble of MacBook Pros and superfast connections. There’s no magic bullet that enables developers to use bleeding-edge features. You may still need Autoprefixer and polyfills. If you’re planning to have a large user base in Asia and Africa, you’ll need to build a site that looks great in Opera Mini and UC Browser, which have their own limitations. You might choose a different cutoff point for now, but it will increasingly pay off, in terms of both user experience and developer experience, to make use of what the modern web has to offer.

https://ift.tt/2KlVR59


The Slow Death of Internet Explorer and the Future of Progressive Enhancement

My first full-time developer job was at a small company. We didn’t have BrowserStack, so we cobbled together a makeshift device lab. Viewing a site I’d been making on a busted first-generation iPad with an outdated version of Safari, I saw a distorted, failed mess. It brought home to me a quote from Douglas Crockford, who once deemed the web “the most hostile software engineering environment imaginable.”

The “works best with Chrome” problem

Because of this difficulty, a problem has emerged. Earlier this year, a widely shared article in The Verge warned of “works best with Chrome” messages seen around the web.

Hi Larry, we apologize for the frustration. Groupon is optimized to be used on a Google Chrome browser, and while you are definitely able to use Firefox or another browser if you'd like, there can be delays when Groupon is not used through Google Chrome.

— Groupon Help U.S. (@GrouponHelpUS) November 26, 2017

Hi Rustram. We'd always recommend that you use Google Chrome to browse the site: we've optimised things for this browser. Thanks.

— Airbnb Help (@AirbnbHelp) July 12, 2016

There are more examples of this problem. In the popular messaging app Slack, voice calls work only in Chrome. In response to help requests, Slack explains its decision like this: “It requires significant effort for us to build out support and triage issues on each browser, so we’re focused on providing a great experience in Chrome.” (Emphasis mine.) Google itself has repeatedly built sites (including Google Meet, Allo, YouTube TV, Google Earth, and YouTube Studio) that block alternative browsers entirely. This is clearly a bad practice, but it highlights the fact that cross-browser compatibility can be difficult and time-consuming.

The significant feature gap, though, isn’t between Chrome and everything else. Of far more significance is the increasingly gaping chasm between Internet Explorer and every other major browser. Should our development practices be hamstrung by the past? Or should we dash into the future relinquishing some users in our wake? I’ll argue for a middle ground. We can make life easier for ourselves without breaking the backward compatibility of the web.

The widening gulf

Chrome, Opera, and Firefox ship new features constantly. Edge and Safari eventually catch up. Internet Explorer, meanwhile, has been all but abandoned by Microsoft, which is attempting to push Windows users toward Edge. IE receives nothing but security updates. It’s a frustrating period for client-side developers. We read about new features but are often unable to use them—due to a single browser with a diminishing market share.

Internet Explorer’s global market share since 2013 is shown in dark blue. It now stands at just 3 percent.

Some new features are utterly trivial (caret-color!); some are for particular use cases you may never have (WebGL 2.0, Web MIDI, Web Bluetooth). Others already feel near-essential for even the simplest sites (object-fit, Grid).

A list of features supported in Chrome but unavailable in IE11, taken from caniuse.com. This is a truncated and incomplete screenshot of an extraordinarily long list.

The promise and reality of progressive enhancement

For content-driven sites, the question of browser support should never be answered with a simple yes or no. CSS and HTML were designed to be fault-tolerant. If a particular browser doesn’t support shape-outside or service workers or font-display, you can still use those features. Your website will not implode. It’ll just lack that extra stylistic flourish or performance optimization in non-supporting browsers.
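As a rough illustration of that fault tolerance on the JavaScript side, the sketch below registers a service worker only where the API exists. The function name `registerServiceWorker`, the `nav` parameter (standing in for the browser's `navigator`), and the `/sw.js` path are assumptions made for this example, not part of the original article.

```javascript
// Minimal sketch: treat a service worker as an optional enhancement.
// `nav` stands in for the browser's `navigator` object (an assumption
// made so the function can be exercised outside a browser).
function registerServiceWorker(nav, scriptUrl) {
  if (!nav || !('serviceWorker' in nav)) {
    // Non-supporting browsers skip the enhancement; the page still works.
    return Promise.resolve(null);
  }
  // Supporting browsers get the performance optimization.
  return nav.serviceWorker.register(scriptUrl);
}

// In a page this might be called as (hypothetical path):
// registerServiceWorker(window.navigator, '/sw.js');
```

Browsers without the API take the early return, so nothing breaks and nothing extra is downloaded.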

Other features, such as CSS Grid, require a bit more work. Your page layout is less enhancement than necessity, and Grid has finally brought a real layout system to the web. When used with care for simple cases, Grid can gracefully fall back to older layout techniques. We could, for example, fall back to flex-wrap. Flexbox is by now a taken-for-granted feature among developers, yet even that is riddled with bugs in IE11.

    .grid > * {
      width: 270px; /* no grid fallback style */
      margin-right: 30px; /* no grid fallback style */
    }

    @supports (display: grid) {
      .grid > * {
        width: auto;
        margin-right: 0;
      }
    }

In the code above, I’m setting all the immediate children of the grid to have a specified width and a margin. For browsers that support Grid, I’ll use grid-gap in place of margin and define the width of the items with the grid-template-columns property. It’s not difficult, but it adds bloat and complexity if it’s repeated throughout a codebase for different layouts. As we start building entire page layouts with Grid (and eventually display: contents), providing a fallback for IE will become increasingly arduous. By using @supports for complex layout tasks, we’re effectively solving the same problem twice—using two different methods to create a similar result.

Not every feature can be used as an enhancement. Some things are imperative. People have been getting excited about CSS custom properties since 2013, but they’re still not widely used, and you can guess why: Internet Explorer doesn’t support them. Or take Shadow DOM. People have been doing conference talks about it for more than five years. It’s finally set to land in Firefox and Edge this year, and lands in Internet Explorer … at no time in the future. You can’t patch support with transpilers or polyfills or prefixes.

Users have more browsers than ever to choose from, yet IE manages to single-handedly tie us to the pre-evergreen past of the web. If developing Chrome-only websites represents one extreme of bad development practice, shackling yourself to a vestigial, obsolete, zombie browser surely represents the other.

The problem with shoehorning

Rather than eschewing modern JavaScript features, developers have made polyfilling and transpiling the norm. ES6 is supported everywhere other than IE, yet we’re sending all browsers transpiled versions of our code. Transpilation isn’t great for performance. A single five-line async function, for example, may well transpile to twenty-five lines of code.

“I feel some guilt about the current state of affairs,” Alex Russell said of his previous role leading development of Traceur, a transpiler that predated Babel. “I see so many traces where the combination of Babel transpilation overhead and poor [webpack] foo totally sink the performance of a site. … I’m sad that we’re still playing this game.”

What you can’t transpile, you can often polyfill. Polyfill.io has become massively popular. Chrome gets sent a blank file. Ancient versions of IE receive a giant mountain of polyfills. We are sending the largest payload to those the least equipped to deal with it—people stuck on slow, old machines.

What is to be done?

Prioritize content

Cutting the mustard is a technique popularized by the front-end team at BBC News. The approach cuts the browser market in two: all browsers receive a base experience or core content. JavaScript is conditionally loaded only by the more capable browsers. Back in 2012, their dividing line was this:

    if ('querySelector' in document && 'localStorage' in window && 'addEventListener' in window) {
      // load the javascript
    }
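The test above leaves the loading step as a comment. One way it might be filled in is sketched here, using the same three checks. The function names, the `win` and `doc` parameters (standing in for `window` and `document`), and the file name `enhancements.js` are hypothetical and are not taken from the BBC's code.

```javascript
// The three checks from the test above, wrapped in a function.
// `win` and `doc` stand in for the browser's `window` and `document`.
function cutsTheMustard(win, doc) {
  return 'querySelector' in doc &&
    'localStorage' in win &&
    'addEventListener' in win;
}

// Append a script element only in browsers that pass the test.
function loadEnhancements(win, doc, src) {
  if (!cutsTheMustard(win, doc)) {
    return false; // legacy browser: core content only
  }
  var script = doc.createElement('script');
  script.src = src;
  script.async = true;
  doc.body.appendChild(script);
  return true;
}

// In a page (hypothetical file name):
// loadEnhancements(window, document, 'enhancements.js');
```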

Tom Maslen, then a lead developer at the BBC, explained the rationale: “Over the last few years I feel that our industry has gotten lazy because of the crazy download speeds that broadband has given us. Everyone stopped worrying about how large their web pages were and added a ton of JS libraries, CSS files, and massive images into the DOM. This has continued on to mobile platforms that don’t always have broadband speeds or hardware capacity to render complex code.”

The Guardian, meanwhile, entirely omits both JavaScript and stylesheets from Internet Explorer 8 and further back.

The Guardian navigation as seen in Internet Explorer 8. Unsophisticated yet functional.

Nature.com takes a similar approach, delivering only a very limited stylesheet to anything older than IE10.

The nature.com homepage as seen in Internet Explorer 9.

Were you to break into a museum, steal an ancient computer, and open Netscape Navigator, you could still happily view these websites. A user comes to your site for the content. They didn’t come to see a pretty gradient or a nicely rounded border-radius. They certainly didn’t come for the potentially nauseating parallax scroll animation.

Anyone who’s been developing for the web for any amount of time will have come across a browser bug. You check your new feature in every major browser and it works perfectly—except in one. Memorizing support info from caniuse.com and using progressive enhancement is no guarantee that every feature of your site will work as expected.

The W3C’s website for the CSS Working Group as viewed in the latest version of Safari.

However perfectly formed and well written your code is, sometimes things break through no fault of your own, even in modern browsers. If you’re not actively testing your site, bugs are more likely to reach your users, unbeknownst to you. Rather than transpiling and polyfilling and hoping for the best, we can deliver what the person came for, in the most resilient, performant, and robust form possible: unadulterated HTML. No company has the resources to actively test their site on every old version of every browser. Malfunctioning JavaScript can ruin a web experience and make a simple page unusable. Rather than leaving users to a mass of polyfills and potential JavaScript errors, we give them a basic but functional experience.

Make a clean break

What could a mustard cut look like going forward? You could conduct a feature query using JavaScript to conditionally load the stylesheet, but relying on JavaScript introduces a brittleness that would be best to avoid. You can’t use @import inside an @supports block, so we’re left with media queries.
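For comparison, the JavaScript route set aside above might look roughly like the sketch below. It uses `CSS.supports` as the feature query and Grid as the dividing line. The function name, the `win` and `doc` parameters (standing in for `window` and `document`), and the `modern.css` file name are all assumptions for the example.

```javascript
// Sketch of a JavaScript feature query that conditionally adds a stylesheet.
// `win` and `doc` stand in for the browser's `window` and `document`.
function loadStylesheetIfSupported(win, doc, href) {
  // CSS.supports is itself missing in Internet Explorer, so check for it first.
  var canQuery = win.CSS && typeof win.CSS.supports === 'function';
  if (!canQuery || !win.CSS.supports('display', 'grid')) {
    return false; // no stylesheet: the browser gets unstyled HTML
  }
  var link = doc.createElement('link');
  link.rel = 'stylesheet';
  link.href = href;
  doc.head.appendChild(link);
  return true;
}

// In a page (hypothetical file name):
// loadStylesheetIfSupported(window, document, 'modern.css');
```

The brittleness is easy to see here: if this script fails to download or throws before it runs, even a fully capable browser ends up with no styles at all.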

The following query will prevent the CSS file from being delivered to any version of Internet Explorer and older versions of other browsers:

    <link id="mustardcut" rel="stylesheet" href="stylesheet.css" media="
      only screen,
      only all and (pointer: fine), only all and (pointer: coarse), only all and (pointer: none),
      (min--moz-device-pixel-ratio:0) and (display-mode:browser), (min--moz-device-pixel-ratio:0)
    ">

We’re not really interested in what particular features this query is testing for; it’s just a hacky way to split between legacy and modern browsers. The shiny, modern site will be delivered to Edge, Chrome (and Chrome for Android) 39+, Opera 26+, Safari 9+, Safari on iOS 9+, and Firefox 47+. I based the query on the work of Andy Kirk. If you want to take a cutting-the-mustard approach but have to meet different support demands, he maintains a GitHub repo with a range of options.

We can use the same media query to conditionally load a JavaScript file. This gives us one consistent dividing line between old and modern browsers:

    (function() {
      var linkEl = document.getElementById('mustardcut');
      if (window.matchMedia && window.matchMedia(linkEl.media).matches) {
        var script = document.createElement('script');
        script.src = 'your-script.js';
        script.async = true;
        document.body.appendChild(script);
      }
    })();

matchMedia brings the power of CSS media queries to JavaScript. The matches property is a boolean that reflects the result of the query. If the media query we defined in the link tag evaluates to true, the JavaScript file will be added to the page.
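If several scripts need the same dividing line, the check could be factored into a small predicate. This is only a sketch: the name `passesMustardCut` and the `win` and `doc` parameters (standing in for `window` and `document`) are assumptions. It also treats a missing link element as a failed test instead of throwing.

```javascript
// Sketch: reuse the link element's media query as a single predicate.
// `win` and `doc` stand in for the browser's `window` and `document`.
function passesMustardCut(win, doc) {
  var linkEl = doc.getElementById('mustardcut');
  // A missing link element or a missing matchMedia counts as "legacy".
  if (!linkEl || typeof win.matchMedia !== 'function') {
    return false;
  }
  // matchMedia returns a MediaQueryList; `matches` is its boolean result.
  return win.matchMedia(linkEl.media).matches === true;
}

// In a page:
// if (passesMustardCut(window, document)) { /* load scripts */ }
```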

It might seem like an extreme solution. From a marketing point of view, the site no longer looks “professional” for a small number of visitors. However, we’ve managed to improve the performance for those stuck on old technology while also opening the possibility of using the latest standards on browsers that support them. This is far from a new approach. All the way back in 2001, A List Apart stopped delivering a visual design to Netscape 4. Readership among users of that browser went up.

Front-end development is complicated at the best of times. Adding support for a technologically obsolete browser adds an inordinate amount of time and frustration to the development process. Testing becomes onerous. Bug-fixing looms large.