#there are a lot of arguments against generative ai which I'm not even touching


I like to think that if you're in a fandom space posting AI art, it's because you just aren't aware of why that might be a problem.

So in case you aren't aware: the generative AI you might be using to create "fanart" is trained on countless artworks created by artists whose permission was not asked. Or maybe it was, technically, intentionally buried in a terms of service document somewhere, or in an update which they could either accept or else delete an entire social media account with years of history. This "art" is only possible because of stolen art. When you post images created by generative AI, you are supporting that.

Also, fandom is about creation and community. Individuals and groups creating art of all sorts, and sharing it with each other. When you post AI art... you haven't really created anything. To me at least, that's kind of detracting from what fandom is about. I want to share and raise up works of art that my community has worked hard to create, not something generative AI spit out from a couple of keyword inputs.

I can't tell anybody here what to do. If you are posting AI art, I'm sure there are some people who will like it, who will share it, who will be happy to do so. But there will also be people who aren't happy about it. There will be people – and especially artists – who will be frustrated to see other people in their community who support this technology that has stolen directly from them. There will be people who will block you, who will refuse to interact with your posts, and it will make your community smaller.

I understand it's tempting, especially if you aren't an artist yourself. But if you want art that fits a specific prompt, there are other ways to get it that actually support the fandom community: submit your prompt to a fanartist who accepts prompts, or commission someone for a piece of art, or even give it a try yourself and start learning to actually make art!

I don't hate AI as a whole. I think there are a lot of really amazing things we can do with AI, if it's used correctly. But posting AI fanart is not one of them.

#there are a lot of arguments against generative ai which I'm not even touching#and honestly I'm not knowledgeable enough to comment on them#but I think that even if it was more ethical from an art standpoint it would still defeat the point of fandom#to be posting ai art when we're all here to be a community and support each other and share what we make#so anyway. yeah.#been seeing some ai fanart seeping into the fandom#I'm hesitant to reach out to anyone and say something directly because I feel like it's not my place#but I do have thoughts and I want to assume people aren't posting ai art in bad faith#so hopefully this perspective will help someone. idk#I will start blocking if I have to but I'd prefer not to#mine#personal#ai art#ai

53 notes


The Age Old Debate: Fire Good, or Fire Bad?

This was originally going to be part of this thread, but the points were distinct enough and my thoughts rambly enough that I split it into two posts.

From the recent PalWorld thread:

We gotta handle that last tag in two parts.

Part 1 "the devs admitted to using AI art to make the pals"

First off, that isn't true near as I can tell. I can't find anything of the PalWorld Devs admitting they used AI for PalWorld designs. Palworld had demo footage with Pals in it 2 years ago on June 6 with their announcement trailer, which means they would have had to have started dev much earlier than that.

This is what AI art from June of 2022 looked like:

On the left, Hieronymus Bosch's Pokemon, on the right, Charmander on Gumby.

I did a much deeper breakdown of the "used AI" accusation here. It does not hold water.

Now, I could change my mind on this point if there were linked evidence to the creators of Palworld saying this. But there isn't.

Because the accusation is repeated in a tag, there's no way to include supporting information, or even to easily directly ask the accuser for it. Many people are going to see it, internalize it, and then repeat it uncritically, and that's how rumors and witchhunts start.

I've seen a lot of accusations about PalWorld stealing fakemon, and I've yet to see a smoking gun. There's barely smoke.

Gonna hit the second point in that tag, but while we're on the theme of spreading misinfo:

Part 2 of the Tag: Using AI to Brainstorm is "Bad"

This is also an assertion that would require support, and I believe it to be wholly incorrect.

Plagiarism happens at publication. Not at inception, not inspiration, not even at the production level. The only measure of whether something is or is not "stolen art" is whether what comes out at the end replicates, with insufficient transformation, an existing, fixed expression. Art theft is about what comes out, not about what goes in.

For more about how this works with AI art, I suggest checking out the Electronic Frontier Foundation's statement on the issue. They're the ones looking out for your online civil rights, and I agree with their position on this.

The argument that AI art is theft because it is trained on public-facing material on the internet just doesn't fly. Those are all fixed published works subject to inspiration, study, and transformative recreation under fair use. The utilization of mechanical apparatus does not change that principle.

And fair use that requires permission isn't fair use. That's a license.

Moreover, altering the process to put infringement at inspiration/input or allowing the copyrighting of styles would be the end of art as we know it.

It's no coincidence that the main legal push against AI art on copyright grounds is backed by Adobe and Disney. Adobe is already using AI art as a pretext to lobby Congress to let them copyright styles, and Disney owns enough material on its own to produce a dataset that would let them do all the AI they'd ever need to, entirely with material they "own." And they're DOING THAT.

The genie is out of the bottle, they (Disney, Adobe, Warner Bros, Universal) have it, and it can't be taken away from them. They just don't want anyone else using AI to compete with them.

Palworld didn't use AI to conceive of its critters. If it had, they'd have probably been less derivative.

(three random AI fakemon I prompted up as examples of just that)

Both traditional and AI-assisted art can plagiarize or be original; it's entirely based upon how the techniques are used.

Moreover, you can infringe entirely accidentally without realizing, but you can also fail at copying enough that it becomes a new protected work.

We're well into moral panic territory with AI in general, and there's more than a touch of it around Palworld, largely because people aren't suspicious enough of information that confirms their worldview.

I used the quoted set of tags as the prompt for the top of the post. All the AI images in this post are unmodified and were not extensively guided, and thus do not meet the minimal expression threshold and should be considered in the public domain.

#palworld#palworld discourse#AI discourse#AI art#fair use#public domain#creativity#copyright#plagiarism#what is art?#midjourney#bing image creator

63 notes


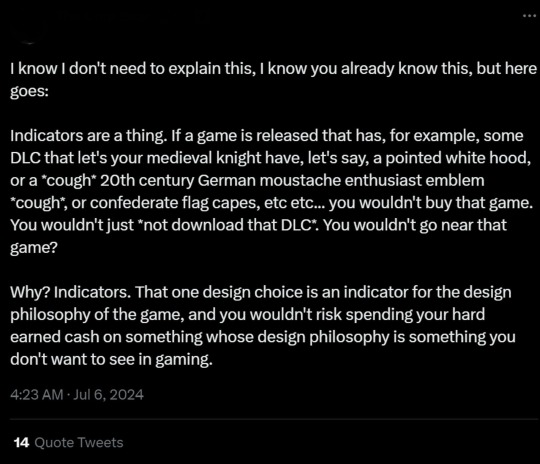

Ok so basically at some point in the last week it was announced that Dragon Age: The Veilguard is supposed to have an optional no-death mode, and this resulted in many of the usual people being upset and declaring that they no longer intend to buy the game. When asked why, someone responded with this:

Now I could dunk on this but other people have done it so in the BEST CASE SCENARIO I'm going to pretend this is poorly worded.

Using Nazi shit as an example of something people would boycott a game over and comparing it to an optional game mode is dumb and bad because those things aren't equivalent, but let's pretend they mentioned ai art

Lots of people, myself included, would choose not to play a game they were otherwise interested in if it was announced they were using generative ai to make the character models or something, because we don't want to support AI Art. At its core they're right that this is a thing people do.

And I'm going to be polite and assume they don't think the thing they're taking a principled stand against is that disabled people can play games (even though, since this is an optional mode, that is ultimately what their principled stand is against) and more the concept of games getting easier or being "watered down" as a whole (which frankly does not appear to be happening, as based on my understanding Dragon Age is not a 'git gud' kind of series). In which case they're rather misinformed but at the end of the day no one can force them to play a game they don't want to play.

I do however want to bring up the argument this attitude is sort of appropriating, which is "not every game is for everyone/don't like, don't play," something again, I agree with in theory! As someone who likes Visual Novels, I really wish people who just don't like them would just stop trying to say they're bad.

However, what that misunderstands in the disability discourse context is that disabled people DO want to play these games, which is why they'd like a better entry point or to be able to pause. It's not that someone with Crohn's doesn't like hard games or soulslikes, it's that they should be able to pause when their body decides they hate them and not lose progress. It's that someone who struggles with fast input times would like to get more leeway.

I'd even go so far as to say that telling people not to play these games kind of does the games a disservice, since it implies that the only reason anyone would enjoy this game is the combat as opposed to the lore, map design, characters, branching narrative, structure, all the stuff that comes from having an emergent narrative and makes games unique as stories (and can be lost with a let's play so I don't want anyone saying to just do that. if someone's gonna be stupid and make a choice that gets us all killed I want it to be me)

And of course all of this comes down to the fact that in 99% of cases (Pokemon notwithstanding, but that's another post) these changes aren't forced upon the player. If there's an accessibility feature you don't want to use, you don't have to use it, and the game won't try to lead you to using it the way gachas are designed to make you use the gacha. The people who are the most upset about this don't have to be affected.

Besides. If people can't complain about games being too hard because not all games are for everyone, then certainly that means you shouldn't complain about a game having an optional easy mode as well?

And if you decide a game having more options for other people that you literally do not have to touch means it's not for you, then that's a personal problem but there's no need to complain about it like the choice to add a no-death mode means you'll never get another Elden Ring, because there's always going to be a market for super hard games.

*lies on the floor* the gamers are being stupid againnn

#hell I'd even argue that difficulty modes open the door for the developers to make hard modes harder?#because they don't have to worry about it being unbeatable for a majority of players if players can just drop the difficulty#the gamers are being stupid again

12 notes


^^^^^^^^

(tried to talk in tags but it wouldnt fit......converted to text and the fucking post crashed so far in. i hate technology . is it ok if i add on though i know that can be annoying.....but a valve burst pls forgive me )

(So mad I lost all that text. Let's see if I can remember what was said.)

Genuinely happy to see someone open this point for discussion, like FUCK YEAH PAINT IT GREY.

What we have here is a moral dilemma conjured out of a fundamental misunderstanding between how humans and humanoid robots (I distinguish humanoid robots from others because I think that has a lot to do with it) think.

Where you fall in the argument is all contingent on what you stand for personally.

If you stand for individuality and personal freedom, reprogramming is inconsiderate at best, and oppression comparable to slavery at worst.

If you stand for order and smooth social operation, this is the easiest, most painless road to rehabilitation.

If you believe independent thought is the height of a humanoid robot's lived experience, their agency and voice are the most important elements in the choice to reprogram them.

If you believe humanoid robots are simply industrial/domestic/administrative aides (tools, for some), their usefulness and service to whatever they were built for is the only factor that matters.

If you view them under the lens of utilising a deep learning AI, it will feel reductive and patronising to re"program" them as if they couldn't think for themselves.

If you don't, there's no guilt.

And a lot of the moral argument over whether it's okay or not is human centric, isn't it?

I'm under the impression that a humanoid robot has no reason to grieve for what was lost if they no longer have the memory. And if they do retain the memory (what a shitty wipe/reprogram that was then), does it result in any severe emotional reaction in the first place? Is that capacity for metacognition not what separates them from reploids?

I can't scrounge up much evidence in the games of any previously civilian robots despairing over what they used to be, or do, even if for them it was as natural as breathing. Anything else could be too, once they're made for it.

Also want to touch on the point that we're not sure which robots were self-aware that were stolen/reconstructed entirely for combat. We don't know if they started off with advanced AI for sure, do we? Cold Man used to be a fridge Dr. Light constructed to house dinosaur DNA, Burst Man used to be a security guard at a chemical plant, Freeze Man was one of a few experimental robots (like Tengu Man) --- these all strike me as positions that could have been filled by robots that don't require higher learning.

Dr. Light's provably were because of his investment in artificial general intelligence, and as a result, we assume this can be extrapolated across anyone who's ever made a biped robot. What if that isn't the case? If that's so, there'd be limits on the way they considered their existences before they were given a conscious AI, right? It wouldn't affect them beyond being a mere objective change.

Dr. Wily tampers with robots' livelihoods freely likely because he takes the position that robots are tools, and are made to maximise production efficiency. He doesn't see them as people, and if he does (implied to me by the fact he gives his own in-house humanoids distinctive personalities), they're tantamount to lifelong servants.

Dr. Light, incidentally, has not been seen bothering with reprogramming any robots that weren't previously his, for the exact opposite reason. He clearly believes in the idea of a self-fulfilling machine. He values what they've been carved into, and how they grow. That growth is what makes them humane for him. This is why he hasn't taken any of Dr. Wily's robots out of his custody and reprogrammed them to fit into society, as it would be against their will, and he can't support that. Even if gutting the Earth's most noxious criminals and repurposing them would not only allow them to live needed and useful to the planet collectively, but also allow humans to live alongside them safely.

There's little to nothing stopping him from forcibly bringing Blues back home and replacing his power core besides Blues himself, his refusal to obey. As much as he misses him, he won't do it. He must have respect for what the humanoid robot wants if he wouldn't even do it to his own boy, and if his other kids had objected to returning from Wily's side after being stolen, I wonder. He might let them stay.

I'm still not sure where I stand on this.

Human beings anthropomorphise anything they see that's even remotely relatable to their lives, all the time, even if they are physically, mentally, intrinsically separate from them on the most basic level.

I know I'd look at something like the Wilybots being removed from Dr. Wily's custody and rezoned, used for something they were never intended to do, and feel awful. Like I'm watching a family coming apart. But that's me imposing my sensibilities onto the situation because I'm very attached to family and the idea of forgetting bothers me. If a humanoid robot is so limited at this point in the timeline, would that bother them? If it really is about what they think and feel, we have to consider what comes from their mouths. Evidently, they either can't or don't grieve for past lives.

And it must be said none of this would be any concern if these robots weren't humanoid or inclined to deep thought from the beginning. No-one cries for any old mass produced Joe on the lines, even if they emulate some basic emotions, they're too robotic. They can't advocate for themselves in any way. They don't matter as much.

I guess, in general, something is only as wrong as it relates to what lines you personally would/would not cross.

Those lines are malleable when your mind is reducible to a set of numbers. And it's hard to reconcile that as a human being.

imagine if throughout your whole entire life you had a set of goals, wants, and people you genuinely knew well and cared about deeply. and you had a job that was difficult but you felt pride in it and it was one of your main reasons for your sense of self... it made you who you are

and then imagine that one day a group of scientists that you dont know tell you that all of those things were inappropriate and that they were going to change your brain, whether you liked that idea or not, and make you more adaptable to what best fits in society, and that it's okay because they were also going to make it so that you loved that! your personality would be changed and youd have new wants and needs and you would be so happy!! you'd be a new person, very literally, as everything about your brain and parts of your body would change! and, most important of all, you would be *useful*

reprogramming is essentially that?

#megaman#AGAIN AGGAIN IM SORRY FOR FUCKIN GOING OFF ON A TANGENT#none of this makes any sense and plus writing it twice it probably makes even less sense now#thank you in general for giving this topic legs bc in canon they never talk about it#i want it to be a very uncomfortable topic to breach if it ever comes up in universe#the existence of competing needs and even wants in the MM universe is not a layer of the greater struggle and thats a shame#if we're fighting for both humans and robots we should consider both sides as needing sympathy but also reconcile with who they are#the fact of them being different isn't a problem. humanoid robots aren't humans but god#they make you forget that and then in rare cases you can even make the robots themselves forget that#but that's like. a discussion abt the KGNs and i cant. i cant do it right now im GONE#(gonna reread this at some point and cringe at the lack of direction probably. fuck it we ball)

66 notes
