#spinnaker software

Explore tagged Tumblr posts

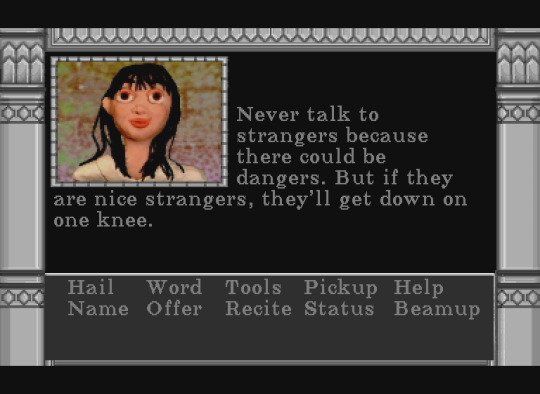

Text

youtube

Bubble Burst, released in 1984 by Spinnaker Software for the Commodore 64, is an action-arcade game where you must prevent creatures called "Zeboingers" from popping bubbles in a bathtub.

You control a target to shoot bubbles at these bird-like Zeboingers as they approach the bubble bath. The game becomes more challenging as the Zeboingers can open windows or turn on showers, which further reduce the number of bubbles. Additionally, you have a limited amount of "bubble juice" each round to defend the bath, adding a layer of strategy.

The game offers two difficulty levels: Level 1 starts from the beginning, while Level 2 lets you begin at the 11th stage. I chose to start at Level 1 and managed to progress well beyond stage 11.

#retro gaming#retro gamer#retro games#video games#gaming#old school gaming#old gamer#gaming videos#youtube video#longplay#bubble burst#c64#commodore 64#action games#love gaming#gaming life#gamer 4 ever#gamer 4 life#gamer guy#gaming community#Youtube

3 notes

·

View notes

Text

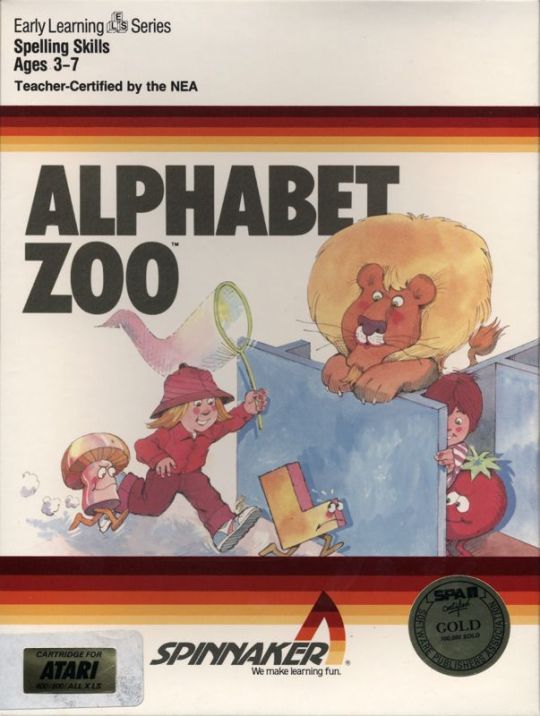

Alphabet Zoo (Apple II/ZX Spectrum/Atari 8-bit/TRS-80 CoCo/Colecovision, Spinnaker Software, 1983/1984)

You can play it here.

To view controls for anything running in MAME, press Tab, then select 'Input (this machine)'.

For the C64 version, to make the emulated joystick work, press F12, use arrows (including right and left to enter and exit menus) to enter 'Machine settings' and then 'Joystick settings', and then set Joystick 1 to Numpad and Joystick 2 to None. (Numpad controls are 8462 and 0 for fire.)

#internet archive#in-browser#apple ii#apple 2#zx spectrum#trs 80#tandy#colecovision#game#games#video game#video games#videogame#videogames#computer game#computer games#obscure games#educational games#tiger#fox#retro games#retro gaming#retro graphics#game history#gaming history#boxart#1983#1984#1980s#80s

9 notes

·

View notes

Text

Step-by-Step DevSecOps Tutorial for Beginners

Introduction: Why DevSecOps Is More Than Just a Trend

In today's digital-first landscape, security can no longer be an afterthought. DevSecOps integrates security directly into the development pipeline, helping teams detect and fix vulnerabilities early. For beginners, understanding how to approach DevSecOps step by step is the key to mastering secure software development. Whether you're just starting out or preparing for the best DevSecOps certifications, this comprehensive tutorial walks you through practical, real-world steps with actionable examples.

This guide also explores essential tools, covers the DevSecOps training and certification landscape, shares tips on accessing DevSecOps certification free resources, and highlights paths like the Azure DevSecOps course.

What Is DevSecOps?

DevSecOps stands for Development, Security, and Operations. It promotes a cultural shift where security is integrated across the CI/CD pipeline, automating checks and balances during software development. The goal is to create a secure development lifecycle with fewer manual gates and faster releases.

Core Benefits

Early vulnerability detection

Automated security compliance

Reduced security risks in production

Improved collaboration among teams

Step-by-Step DevSecOps Tutorial for Beginners

Let’s dive into a beginner-friendly step-by-step guide to get hands-on with DevSecOps principles and practices.

Step 1: Understand the DevSecOps Mindset

Before using tools or frameworks, understand the shift in mindset:

Security is everyone's responsibility

Security practices should be automated

Frequent feedback loops are critical

Security policies should be codified (Policy as Code)

Tip: Enroll in DevSecOps training and certification programs to reinforce these principles early.

Step 2: Learn CI/CD Basics

DevSecOps is built upon CI/CD (Continuous Integration and Continuous Deployment). Get familiar with:

CI tools: Jenkins, GitHub Actions, GitLab CI

CD tools: Argo CD, Spinnaker, Azure DevOps

Hands-On:

# Sample GitHub Action workflow

name: CI

on: [push]

jobs:

build:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v2

- name: Install dependencies

run: npm install

- name: Run tests

run: npm test

Step 3: Integrate Static Application Security Testing (SAST)

SAST scans source code for vulnerabilities.

Popular Tools:

SonarQube

Checkmarx

CodeQL (by GitHub)

Use Case: Integrate SonarQube into your Jenkins pipeline to detect hard-coded credentials or SQL injection flaws.

Code Snippet:

sonar-scanner \

-Dsonar.projectKey=MyProject \

-Dsonar.sources=. \

-Dsonar.host.url=http://localhost:9000

Step 4: Set Up Dependency Scanning

Most modern applications use third-party libraries. Tools like OWASP Dependency-Check, Snyk, or WhiteSource can identify vulnerable dependencies.

Tip: Look for DevSecOps certification free labs that simulate dependency vulnerabilities.

Step 5: Implement Container Security Scanning

With containers becoming standard in deployments, scanning container images is essential.

Tools:

Trivy

Clair

Aqua Security

Sample Command Using Trivy:

trivy image nginx:latest

Step 6: Apply Dynamic Application Security Testing (DAST)

DAST tools test running applications for vulnerabilities.

Top Picks:

OWASP ZAP

Burp Suite

AppSpider

Real-World Example: Test an exposed login form on your dev environment using OWASP ZAP.

Step 7: Use Infrastructure as Code (IaC) Scanning

Misconfigurations in IaC can lead to major security flaws. Use tools to scan Terraform, CloudFormation, or ARM templates.

Popular Tools:

Chekhov

tfsec

Azure Bicep Linter (for Azure DevSecOps course users)

Step 8: Enforce Security Policies

Create policies that define security rules and ensure compliance.

Tools:

Open Policy Agent (OPA)

Kyverno

Use Case: Block deployments if a Kubernetes pod is missing a security context.

Best DevSecOps Certifications to Advance Your Career

If you’re serious about building a career in secure DevOps practices, here are some of the best DevSecOps certifications:

1. Certified DevSecOps Professional

Covers real-world DevSecOps use cases, including SAST, DAST, and container security.

2. AWS DevSecOps Certification

Ideal for cloud professionals securing AWS environments.

3. Azure DevSecOps Course Certification

Microsoft-specific course focusing on Azure security best practices.

4. GIAC Cloud Security Automation (GCSA)

Perfect for automation experts aiming to secure CI/CD pipelines.

Tip: Many DevSecOps certification free prep materials and labs are available online for self-paced learners.

DevSecOps Training Videos: Learn by Watching

Learning by watching real demos accelerates your understanding.

Topics Covered in Popular DevSecOps Training Videos:

How to secure a CI/CD pipeline

Real-world attack simulations

Vulnerability scanning workflows

Secure Dockerfile best practices

Visual Learning Tip: Platforms like H2K Infosys offer training sessions and tutorials that explain concepts step by step.

Accessing DevSecOps Tutorial PDF Resources

Sometimes having a reference guide helps. You can download DevSecOps tutorial PDF resources that summarize:

The DevSecOps lifecycle

Tools list by category (SAST, DAST, etc.)

Sample workflows and policies

These PDFs often accompany DevSecOps training and certification programs.

Azure DevSecOps Course: A Platform-Specific Approach

Microsoft Azure has strong native integration for DevSecOps.

What’s Covered in an Azure DevSecOps Course?

Security Center integrations with pipelines

Azure Key Vault secrets management

ARM Template and Bicep scanning

RBAC, Identity & Access Management

Example Toolchain: Azure DevOps + Microsoft Defender + Azure Policy + Terraform + Key Vault

Certification Note: Some Azure DevSecOps course modules count towards official Microsoft certifications.

Real-World Case Study: DevSecOps in a Banking Application

Problem: A fintech firm faced security vulnerabilities during nightly releases.

Solution: They implemented the following:

Jenkins-based CI/CD

SonarQube for code scanning

Snyk for dependency scanning

Trivy for container security

Azure Policy for enforcing RBAC

Results:

Reduced critical vulnerabilities by 72%

Release frequency increased from weekly to daily

Key Takeaways

DevSecOps integrates security into DevOps workflows.

Use SAST, DAST, IaC scanning, and policy enforcement.

Leverage DevSecOps training videos and tutorial PDFs for continuous learning.

Pursue the best DevSecOps certifications to boost your career.

Explore Azure DevSecOps course for platform-specific training.

Conclusion: Start Your DevSecOps Journey Now

Security is not optional, it's integral. Equip yourself with DevSecOps training and certification to stay ahead. For structured learning, consider top-rated programs like those offered by H2K Infosys.

Start your secure development journey today. Explore hands-on training with H2K Infosys and build job-ready DevSecOps skills.

0 notes

Text

DevOps: Bridging Development & Operations

In the whirlwind environment of software development, getting code quickly and reliably from concept to launch is considered fast work. There has usually been a wall between the "Development" (Dev) and the "Operations" (Ops) teams that has usually resulted in slow deployments, conflicts, and inefficiencies. The DevOps culture and practices have been created as tools to close this gap in a constructive manner by fostering collaboration, automation, and continuous delivery.

DevOps is not really about methodology; it's more of a philosophy whereby developers and operations teams are brought together to collaborate and increase productivity by automation of infrastructure and workflows and continuous assessment of application's performance. Today, it is imperative for any tech-savvy person to have the basic know-how of DevOps methodologies-adopting them-and especially in fast-developing IT corridors like Ahmedabad.

Why DevOps is Crucial in Today's Tech Landscape

It is very clear that the benefits of DevOps have led to its adoption worldwide across industries:

Offering Faster Time-to-Market: DevOps has automated steps, placing even more importance on collaboration, manuals, to finish testing, and to deploy applications very fast.

Ensuring Better Quality and Reliability: With continuous testing, integration, and deployment, we get fewer bugs and more stable applications.

Fostering Collaboration: It removes traditional silos between teams, thus promoting shared responsibility and communication.

Operational Efficiency and Cost-Saving: It automates repetitive tasks, eliminates manual efforts, and reduces errors.

Building Scalability and Resilience: DevOps practices assist in constructing scalable systems and resilient systems that can handle grow-thrust of users.

Key Pillars of DevOps

A few of the essential practices and tools on which DevOps rests:

Continuous Integration (CI): Developers merge their code changes into a main repository on a daily basis, in which automated builds and tests are run to detect integration errors early. Tools: Jenkins, GitLab CI, Azure DevOps.

Continuous Delivery / Deployment: Builds upon CI to automatically build, test, and prepare code changes for release to production. Continuous Deployment then deploys every valid change to production automatically. Tools: Jenkins, Spinnaker, Argo CD.

Infrastructure as Code (IaC): Managing and provisioning infrastructure through code instead of through manual processes. Manual processes can lead to inconsistent setups and are not easily repeatable. Tools: Terraform, Ansible, Chef, Puppet.

Monitoring & Logging: Monitor the performance of applications as well as the health of infrastructure and record logs to troubleshoot and detect issues in the shortest possible time. Tools: Prometheus, Grafana, ELK Stack (Elasticsearch, Logstash, Kibana).

Collaboration and Communication: On the other hand, it is a cultural change towards open communication, working jointly, and feedback loops.

Essential Skills for a DevOps Professional

If you want to become a DevOps Engineer or start incorporating DevOps methodologies into your day-to-day work, these are some skills to consider:

Linux Basics: A good understanding of Linux OS is almost a prerequisite, as most servers run on Linux.

Scripting Languages: Having a working understanding of one or another scripting language (like Python, Bash, or PowerShell) comes in handy in automation.

Cloud Platforms: Working knowledge of cloud providers like AWS, Microsoft Azure, or Google Cloud Platform, given cloud infrastructure is an integral part of deployments nowadays.

Containerization: These include skills on containerization using Docker and orchestration using Kubernetes for application deployment and scaling.

CI/CD Tools: Good use of established CI/CD pipeline tools (Jenkins, GitLab CI, Azure DevOps, etc.).

Version Control: Proficiency in Git through the life of the collaborative code change.

Networking Basics: Understanding of networking protocols and configurations.

Your Path to a DevOps Career

The demand for DevOps talent in India is rapidly increasing.. Since the times are changing, a lot of computer institutes in Ahmedabad are offering special DevOps courses which cover these essential tools and practices. It is advisable to search for programs with lab sessions, simulated real-world projects, and guidance on industry-best practices.

Adopting DevOps is more than just learning new tools; it is a mindset that values efficiency and trust in automation as well as seamless collaboration. With such vital skills, you can act as a critical enabler between development and operations to ensure the rapid release of reliable software, thereby guaranteeing your position as one of the most sought-after professionals in the tech world.

At TCCI, we don't just teach computers — we build careers. Join us and take the first step toward a brighter future.

Location: Bopal & Iskcon-Ambli in Ahmedabad, Gujarat

Call now on +91 9825618292

Visit Our Website: http://tccicomputercoaching.com/

0 notes

Text

Faster, Safer Deployments: How CI/CD Transforms Cloud Operations

In today’s high-velocity digital landscape, speed alone isn't enough—speed with safety is what defines successful cloud operations. As businesses shift from legacy systems to cloud-native environments, Continuous Integration and Continuous Deployment (CI/CD) has become the engine powering faster and more reliable software delivery.

CI/CD automates the software lifecycle—from code commit to production—ensuring rapid, repeatable, and error-free deployments. In this blog, we’ll explore how CI/CD transforms cloud operations, enabling teams to deliver updates with confidence, reduce risk, and accelerate innovation.

🔧 What Is CI/CD?

CI/CD stands for:

Continuous Integration (CI): The practice of frequently integrating code changes into a shared repository, automatically triggering builds and tests to detect issues early.

Continuous Deployment (CD): The process of automatically releasing validated changes to production or staging environments without manual intervention.

Together, they create a streamlined pipeline that supports rapid, reliable delivery.

🚀 Why CI/CD Is Essential in Cloud Environments

Cloud infrastructure is dynamic, scalable, and ever-evolving. Manual deployments introduce bottlenecks, inconsistencies, and human error. CI/CD addresses these challenges by automating key aspects of software and infrastructure delivery.

Here’s how CI/CD transforms cloud operations:

1. Accelerates Deployment Speed

CI/CD pipelines reduce the time from code commit to deployment from days to minutes. Automation removes delays caused by manual approvals, environment setups, or integration conflicts—empowering developers to release updates faster than ever before.

For cloud-native companies that rely on agility, this speed is a game-changer.

2. Improves Deployment Safety

CI/CD introduces automated testing, validation, and rollback mechanisms at every stage. This ensures only tested and secure code reaches production. It also supports blue/green and canary deployments to minimize risk during updates.

The result? Fewer bugs, smoother releases, and higher system uptime.

3. Enables Continuous Feedback and Monitoring

CI/CD tools integrate with monitoring solutions like Prometheus, Datadog, or CloudWatch, providing real-time insights into application health and deployment success. This feedback loop helps teams quickly identify and resolve issues before users are affected.

4. Enhances Collaboration Across Teams

DevOps thrives on collaboration. With CI/CD, developers, testers, and operations teams work together on shared pipelines, using pull requests, automated checks, and deployment logs to stay aligned. This cross-functional synergy eliminates silos and speeds up troubleshooting.

5. Supports Infrastructure as Code (IaC)

CI/CD pipelines can also manage infrastructure using IaC tools like Terraform or Ansible. This enables automated provisioning and testing of cloud resources, ensuring consistent environments across dev, test, and production.

Incorporating IaC into CI/CD helps teams deploy full-stack applications—code and infrastructure—reliably and repeatedly.

🔄 Key Components of a CI/CD Pipeline

Source Control (e.g., GitHub, GitLab)

Build Automation (e.g., Jenkins, GitHub Actions, CircleCI)

Automated Testing (e.g., JUnit, Selenium, Postman)

Artifact Management (e.g., Docker Registry, Nexus)

Deployment Automation (e.g., Spinnaker, ArgoCD)

Monitoring and Alerts (e.g., Prometheus, New Relic)

Each step is designed to catch errors early, maintain code quality, and reduce deployment time.

🏢 How Salzen Cloud Helps You Build CI/CD Excellence

At Salzen Cloud, we specialize in building robust, secure, and scalable CI/CD pipelines tailored for cloud-native operations. Our team helps you:

Automate build, test, and deployment workflows

Integrate security and compliance checks (DevSecOps)

Streamline rollback and disaster recovery mechanisms

Optimize cost and performance across multi-cloud environments

With Salzen Cloud, your teams can release more frequently—with less stress and more control.

📌 Final Thoughts

CI/CD isn’t just a developer convenience—it’s the backbone of modern cloud operations. From faster time-to-market to safer releases, CI/CD enables organizations to innovate at scale while minimizing risk.

If you’re looking to implement or optimize your CI/CD pipeline for the cloud, let Salzen Cloud be your trusted partner in transformation. Together, we’ll build a deployment engine that fuels your growth—one commit at a time.

0 notes

Text

DevOps Tools for Each Phase of the DevOps Lifecycle

Intoduction

In today's competitive software landscape, businesses need to innovate faster, deploy reliably, and maintain scalability. DevOps services have become essential in achieving these goals by bridging the gap between development and operations. At the heart of these practices lies a well-defined DevOps lifecycle supported by a range of powerful tools.

Let’s explore the key phases of the DevOps lifecycle and the most relevant tools used in each, while understanding how DevOps consulting services, DevOps as a service, and DevOps managed services play a crucial role in implementation.

1. Planning

The planning phase sets the stage for collaboration and project clarity. Teams define requirements, outline goals, and establish timelines.

Popular tools:

Jira – Agile project tracking and issue management.

Trello – A visual tool for task organization.

Confluence – Documentation and collaboration platform.

DevOps consulting services help teams define strategic goals, set KPIs, and build roadmaps, ensuring alignment from the start. Expert insights during planning can dramatically improve team efficiency.

2. Development

This phase focuses on coding, version control, and peer reviews. It’s where developers begin creating applications based on the agreed plan.

Popular tools:

Git – Distributed version control system.

GitHub / GitLab – Code hosting platforms with collaboration and CI/CD integration.

Bitbucket – Git repository with built-in CI/CD.

Partnering with DevOps as a service providers streamlines development processes through automation and optimized workflows. This also ensures seamless integration with build and deployment stages.

3. Build

After coding, source code needs to be compiled into executable applications. The build phase is where continuous integration starts to show its power.

Popular tools:

Jenkins – Automates building and testing of code.

Apache Maven – Project build and dependency management.

Gradle – Flexible build tool for Java, Kotlin, and more.

Through DevOps services and solutions, businesses can automate build pipelines and reduce manual errors, improving build consistency and deployment speed.

4. Testing

Testing ensures the quality and stability of software before deployment. Automated testing accelerates this process and improves reliability.

Popular tools:

Selenium – Automated browser testing.

JUnit / NUnit – Unit testing frameworks.

TestNG – Testing framework inspired by JUnit with additional features.

A DevOps engineer plays a crucial role in integrating testing frameworks into CI/CD pipelines. They also ensure comprehensive test coverage using multiple testing strategies.

5. Release

This is where software is prepared and pushed to production. Automating this step guarantees consistency and reliability during releases.

Popular tools:

Spinnaker – Multi-cloud continuous delivery platform.

Harness – Automated software delivery platform.

Octopus Deploy – Simplifies deployment orchestration.

With DevOps managed services, release management becomes seamless. Businesses can deploy faster with reduced risk and rollback capabilities.

6. Deploy

The deploy phase involves rolling out applications into live environments. Automation ensures minimal downtime and error-free deployments.

Popular tools:

Kubernetes – Container orchestration platform.

Docker – Container platform to build, ship, and run applications.

Ansible / Chef / Puppet – Configuration management and automation tools.

Providers offering DevOps as a service deliver automated deployment pipelines that align with organizational requirements and compliance standards.

7. Operate

Once deployed, the system must be monitored and managed continuously to ensure performance, availability, and scalability.

Popular tools:

Prometheus – Monitoring system and time-series database.

Grafana – Visualization tool for metrics and logs.

ELK Stack (Elasticsearch, Logstash, Kibana) – Real-time log analysis.

DevOps services include infrastructure monitoring, performance tuning, and incident management—enabling operations teams to respond to issues proactively.

8. Monitor

The final phase ensures continuous feedback. By collecting telemetry data, logs, and user feedback, teams can enhance product quality and user experience.

Popular tools:

Nagios – Infrastructure monitoring and alerting.

New Relic – Application performance monitoring.

Datadog – Cloud-scale monitoring and analytics.

With DevOps services and solutions, feedback loops are established that empower development and operations teams to improve the next release cycle.

Conclusion

Each phase of the DevOps lifecycle is interconnected, and the tools used in each phase play a vital role in ensuring speed, stability, and scalability. By embracing comprehensive DevOps services, businesses can achieve streamlined workflows, faster time-to-market, and improved customer satisfaction.

Whether you’re a startup or an enterprise, engaging with experts through DevOps consulting services or adopting DevOps managed services can accelerate transformation. If you’re looking for a trusted partner to support your DevOps journey, explore our offerings at Cloudastra – DevOps Services.

An experienced DevOps engineer can make a significant difference in how smoothly your development and operations teams function, especially when supported by the right tools and strategy.

0 notes

Text

CI/CD Explained: Making Software Delivery Seamless

In today’s fast-paced digital landscape, where users expect frequent updates and bug fixes, delivering software swiftly and reliably isn’t just an advantage — it’s a necessity. That’s where CI/CD comes into play. CI/CD (short for Continuous Integration and Continuous Delivery/Deployment) is the backbone of modern DevOps practices and plays a crucial role in enhancing productivity, minimizing risks, and speeding up time to market.

In this blog, we’re going to explore the CI/CD pipeline in a way that’s easy to grasp, even if you’re just dipping your toes into the software development waters. So, grab your coffee and settle in — let’s demystify CI/CD together.

What is CI/CD?

Let’s break down the terminology first:

Continuous Integration (CI) is the practice of frequently integrating code changes into a shared repository. Each integration is verified by an automated build and tests, allowing teams to detect problems early.

Continuous Delivery (CD) ensures that the software can be released to production at any time. It involves automatically pushing code changes to a staging environment after passing CI checks.

Continuous Deployment, also abbreviated as CD, takes things a step further. Here, every change that passes all stages of the production pipeline is automatically released to customers without manual intervention.

Think of CI/CD as a conveyor belt in a high-tech bakery. The ingredients (code changes) are put on the belt, and through a series of steps (build, test, deploy), you end up with freshly baked software ready to be served.

Why is CI/CD Important?

Speed: CI/CD accelerates the software release process, enabling teams to deliver new features, updates, and fixes quickly.

Quality: Automated testing helps catch bugs and issues early in the development cycle, improving the overall quality of the product.

Consistency: The pipeline standardizes how software is built, tested, and deployed, making the process predictable and repeatable.

Collaboration: With CI/CD in place, developers work in a more collaborative and integrated manner, breaking down silos.

Customer Satisfaction: Faster delivery of reliable updates means happier users.

Core Components of a CI/CD Pipeline

Here’s what typically makes up a robust CI/CD pipeline:

Source Code Repository: Usually Git-based platforms like GitHub, GitLab, or Bitbucket. This is where the code lives.

Build Server: Tools like Jenkins, Travis CI, or CircleCI compile the code and run unit tests.

Automated Tests: Unit, integration, and end-to-end tests ensure the code behaves as expected.

Artifact Repository: A place to store build outputs, such as JARs, Docker images, etc.

Deployment Automation: Tools like Spinnaker, Octopus Deploy, or AWS CodeDeploy automate the delivery of applications to various environments.

Monitoring & Feedback: Monitoring tools like Prometheus, Grafana, or New Relic provide insights post-deployment.

The CI/CD Workflow: A Step-by-Step Look

Let’s walk through a typical CI/CD workflow:

Code Commit: A developer pushes new code to the source repository.

Automated Build: The CI tool kicks in, compiles the code, and checks for errors.

Testing Phase: Automated tests (unit, integration, etc.) run to validate the code.

Artifact Creation: A build artifact is generated and stored.

Staging Deployment: The artifact is deployed to a staging environment for further testing.

Approval/Automation: Depending on whether it’s Continuous Delivery or Deployment, the change is either auto-deployed or requires manual approval.

Production Release: The software goes live, ready for end-users.

Monitoring & Feedback: Post-deployment monitoring helps catch anomalies and improve future releases.

Benefits of CI/CD in Real-Life Scenarios

Let’s take a few examples to show how CI/CD transforms software delivery:

E-commerce Sites: Imagine fixing a payment bug and deploying the fix in hours, not days.

Mobile App Development: Push weekly app updates with zero downtime.

SaaS Platforms: Roll out new features incrementally and get real-time user feedback.

With CI/CD, you don’t need to wait for a quarterly release cycle to delight your users. You do it continuously.

Tools That Power CI/CD

Here’s a friendly table to help you get familiar with popular CI/CD tools:PurposeToolsSource ControlGitHub, GitLab, BitbucketCI/CD PipelinesJenkins, CircleCI, Travis CI, GitLab CI/CDContainerizationDocker, KubernetesConfiguration ManagementAnsible, Chef, PuppetDeployment AutomationAWS CodeDeploy, Octopus DeployMonitoringPrometheus, Datadog, New Relic

Each of these tools plays a specific role, and many work beautifully together.

CI/CD Best Practices

Keep Builds Fast: Optimize tests and build processes to minimize wait times.

Test Early and Often: Incorporate testing at every stage of the pipeline.

Fail Fast: Catch errors as early as possible and notify developers instantly.

Use Infrastructure as Code: Manage your environment configurations like version-controlled code.

Secure Your Pipeline: Incorporate security checks, secrets management, and compliance rules.

Monitor Everything: Observability isn’t optional; know what’s going on post-deployment.

Common CI/CD Pitfalls (and How to Avoid Them)

Skipping Tests: Don’t bypass automated tests to save time — you’ll pay for it later.

Overcomplicating Pipelines: Keep it simple and modular.

Lack of Rollback Strategy: Always be prepared to revert to a stable version.

Neglecting Team Training: CI/CD success relies on team adoption and knowledge.

CI/CD and DevOps: The Dynamic Duo

While CI/CD focuses on the pipeline, DevOps is the broader culture that promotes collaboration between development and operations teams. CI/CD is a vital piece of the DevOps puzzle, enabling continuous feedback loops and shared responsibilities.

When paired effectively, they lead to:

Shorter development cycles

Improved deployment frequency

Lower failure rates

Faster recovery from incidents

Why Businesses in Australia Are Adopting CI/CD

The tech ecosystem in Australia is booming. From fintech startups to large enterprises, the demand for reliable, fast software delivery is pushing companies to adopt CI/CD practices.

A leading software development company in Australia recently shared how CI/CD helped them cut deployment times by 70% and reduce critical bugs in production. Their secret? Embracing automation, training their teams, and gradually building a culture of continuous improvement.

Final Thoughts

CI/CD isn’t just a set of tools — it’s a mindset. It’s about delivering value to users faster, with fewer headaches. Whether you’re building a mobile app, a web platform, or a complex enterprise system, CI/CD practices will make your life easier and your software better.

And remember, the journey to seamless software delivery doesn’t have to be overwhelming. Start small, automate what you can, learn from failures, and iterate. Before you know it, you’ll be releasing code like a pro.

If you’re just getting started or looking to improve your current pipeline, this is your sign to dive deeper into CI/CD. You’ve got this!

0 notes

Text

Site Reliability Engineering: Tools, Techniques & Responsibilities

Introduction to Site Reliability Engineering (SRE)

Site Reliability Engineering (SRE) is a modern approach to managing large-scale systems by applying software engineering principles to IT operations. Originally developed by Google, SRE focuses on improving system reliability, scalability, and performance through automation and data-driven decision-making.

At its core, SRE bridges the gap between development and operations teams. Rather than relying solely on manual interventions, SRE encourages building robust systems with self-healing capabilities. SRE teams are responsible for maintaining uptime, monitoring system health, automating repetitive tasks, and handling incident response.

A key concept in SRETraining is the use of Service Level Objectives (SLOs) and Error Budgets. These help organizations balance the need for innovation and reliability by defining acceptable levels of failure. SRE also emphasizes observability—the ability to understand what's happening inside a system using metrics, logs, and traces.

By embracing automation, continuous improvement, and a blameless culture, SRE enables teams to reduce downtime, scale efficiently, and deliver high-quality digital services. As businesses increasingly depend on digital infrastructure, the demand for SRE practices and professionals continues to grow. Whether you're in development, operations, or IT leadership, understanding SRE can greatly enhance your approach to building resilient systems.

Tools Commonly Used in SRE

Monitoring & Observability

Prometheus – Open-source monitoring system with time-series data and alerting.

Grafana – Visualization and dashboard tool, often used with Prometheus.

Datadog – Cloud-based monitoring platform for infrastructure, applications, and logs.

New Relic – Full-stack observability with APM and performance monitoring.

ELK Stack (Elasticsearch, Logstash, Kibana) – Log analysis and visualization.

Incident Management & Alerting

PagerDuty – Real-time incident alerting, on-call scheduling, and response automation.

Opsgenie – Alerting and incident response tool integrated with monitoring systems.

VictorOps (now Splunk On-Call) – Streamlines incident resolution with automated workflows.

Automation & Configuration Management

Ansible – Simple automation tool for configuration and deployment.

Terraform – Infrastructure as Code (IaC) for provisioning cloud resources.

Chef / Puppet – Configuration management tools for system automation.

CI/CD Pipelines

Jenkins – Widely used automation server for building, testing, and deploying code.

GitLab CI/CD – Integrated CI/CD pipelines with source control.

Spinnaker – Multi-cloud continuous delivery platform.

Cloud & Container Orchestration

Kubernetes – Container orchestration for scaling and managing applications.

Docker – Containerization tool for packaging applications.

AWS CloudWatch / GCP Stackdriver / Azure Monitor – Native cloud monitoring tools.

Best Practices in Site Reliability Engineering (SRE)

Site Reliability Engineering (SRE) promotes a disciplined approach to building and operating reliable systems. Adopting best practices in SRE helps organizations reduce downtime, manage complexity, and scale efficiently.

A foundational practice is defining Service Level Indicators (SLIs) and Service Level Objectives (SLOs) to measure and set targets for performance and availability. These metrics ensure teams understand what reliability means for users and how to prioritize improvements.

Error budgets are another critical concept, allowing controlled failure to balance innovation with stability. If a system exceeds its error budget, development slows to focus on reliability enhancements.

SRE also emphasizes automation. Automating repetitive tasks like deployments, monitoring setups, and incident responses reduces human error and improves speed. Minimizing toil—manual, repetitive work that doesn’t add long-term value—is essential for team efficiency.

Observability is key. Systems should be designed with visibility in mind using logs, metrics, and traces to quickly detect and resolve issues.

Finally, a blameless post mortem culture fosters continuous learning. After incidents, teams analyze what went wrong without pointing fingers, focusing instead on preventing future issues.

Together, these practices create a culture of reliability, efficiency, and resilience—core goals of any successful SRE team.

Top 5 Responsibilities of a Site Reliability Engineer (SRE)

Maintain System Reliability and Uptime

Ensure services are available, performant, and meet defined availability targets.

Automate Operational Tasks

Build tools and scripts to automate deployments, monitoring, and incident response.

Monitor and Improve System Health

Set up observability tools (metrics, logs, traces) to detect and fix issues proactively.

Incident Management and Root Cause Analysis

Respond to incidents, minimize downtime, and conduct postmortems to learn from failures.

Define and Track SLOs/SLIs

Establish reliability goals and measure system performance against them.

Know More: Site Reliability Engineering (SRE) Foundation Training and Certification.

0 notes

Text

Canary Testing: Ensuring Smooth Rollouts

In the fast-paced world of software development, canary testing has emerged as a critical strategy for minimizing risks during deployments. By enabling teams to validate changes in a controlled and incremental manner, this method ensures seamless rollouts while safeguarding the user experience.

What is Canary Testing?

Canary testing is a software deployment strategy where new features or updates are released to a small, targeted subset of users before rolling out to the entire user base. This approach allows teams to monitor performance, gather feedback, and identify potential issues in real-world scenarios, minimizing the risk of widespread disruptions.

Why Canary Testing Matters

By releasing changes to a small subset of users before a full rollout, canary testing allows teams to identify potential issues without impacting the entire user base. This ensures that problems can be resolved quickly and efficiently, reducing downtime and improving the overall reliability of the software.

Key Principles of Canary Testing

Effective canary testing relies on careful planning, targeted deployments, and robust monitoring to ensure smooth implementation. Here are the core principles:

Define Success Metrics: Establish clear performance indicators such as response times, error rates, and user feedback.

Gradual Rollout Strategies: Roll out updates incrementally, starting with a small percentage of users and gradually expanding.

Monitoring and Rollback Plans: Continuously monitor performance and have a rollback plan ready to address any issues swiftly.

Benefits of Canary Testing

Canary testing offers several advantages that make it a preferred approach for modern development teams:

Risk Mitigation: By limiting the exposure of changes, teams can quickly identify and resolve issues before they affect all users.

Early Bug Detection: Real-world testing helps uncover bugs that may not appear in development or staging environments.

Improved User Confidence: Gradual rollouts reduce the likelihood of significant disruptions, enhancing trust and satisfaction among users.

Challenges of Implementing Canary Testing

While canary testing is highly effective, it comes with its own set of challenges that teams must address:

Choosing the Right User Subset: Selecting a representative group of users for testing can be complex.

Handling Feedback Loops: Gathering and analyzing feedback from canary users requires efficient processes.

Managing Infrastructure Requirements: Implementing canary testing may demand additional resources for monitoring and managing multiple deployment environments.

Tools and Technologies for Canary Testing

Leveraging the right tools can streamline canary testing and ensure its success in complex systems. Here are some popular options:

Feature Flag Tools: Tools like LaunchDarkly and Unleash allow teams to toggle features on and off for specific user groups.

Monitoring Tools: Platforms such as Datadog and Prometheus provide real-time insights into system performance and user behavior.

Deployment Platforms: Solutions like Kubernetes and Spinnaker support automated canary releases and scaling.

Best Practices for Successful Canary Testing

To maximize the benefits of canary testing, teams should follow these best practices:

Start Small and Scale Incrementally: Begin with a small subset of users and expand gradually as confidence in the update grows.

Automate Testing and Monitoring: Leverage automated tools to ensure consistent and efficient monitoring throughout the testing process.

Define Clear Rollback Criteria: Establish predefined conditions for rolling back updates to minimize downtime and disruptions.

Real-World Example: Successful Canary Testing in Action

Let’s explore how a leading tech company utilized canary testing to roll out a critical feature seamlessly:

Case Study: A payment platform introduced a new transaction processing feature.

Metrics Tracked: The team monitored error rates, system latency, and user feedback during the canary release.

Outcome: Early bug detection allowed the team to resolve issues quickly, leading to a smooth full-scale deployment and enhanced user satisfaction.

Conclusion: Why Your Team Should Adopt Canary Testing

Canary testing is not just a technique; it’s a safety net for delivering high-quality software with confidence. By enabling teams to identify issues early and reduce deployment risks, this approach ensures reliable and efficient software delivery in today’s competitive landscape.

Call to Action Ready to take your deployment strategy to the next level? Start integrating canary testing into your development workflow today and experience the benefits of smoother, safer rollouts.

0 notes

Text

SRE Technologies: Transforming the Future of Reliability Engineering

In the rapidly evolving digital landscape, the need for robust, scalable, and resilient infrastructure has never been more critical. Enter Site Reliability Engineering (SRE) technologies—a blend of software engineering and IT operations aimed at creating a bridge between development and operations, enhancing system reliability and efficiency. As organizations strive to deliver consistent and reliable services, SRE technologies are becoming indispensable. In this blog, we’ll explore the latest trends in SRE technologies that are shaping the future of reliability engineering.

1. Automation and AI in SRE

Automation is the cornerstone of SRE, reducing manual intervention and enabling teams to manage large-scale systems effectively. With advancements in AI and machine learning, SRE technologies are evolving to include intelligent automation tools that can predict, detect, and resolve issues autonomously. Predictive analytics powered by AI can foresee potential system failures, enabling proactive incident management and reducing downtime.

Key Tools:

PagerDuty: Integrates machine learning to optimize alert management and incident response.

Ansible & Terraform: Automate infrastructure as code, ensuring consistent and error-free deployments.

2. Observability Beyond Monitoring

Traditional monitoring focuses on collecting data from pre-defined points, but it often falls short in complex environments. Modern SRE technologies emphasize observability, providing a comprehensive view of the system’s health through metrics, logs, and traces. This approach allows SREs to understand the 'why' behind failures and bottlenecks, making troubleshooting more efficient.

Key Tools:

Grafana & Prometheus: For real-time metric visualization and alerting.

OpenTelemetry: Standardizes the collection of telemetry data across services.

3. Service Mesh for Microservices Management

With the rise of microservices architecture, managing inter-service communication has become a complex task. Service mesh technologies, like Istio and Linkerd, offer solutions by providing a dedicated infrastructure layer for service-to-service communication. These SRE technologies enable better control over traffic management, security, and observability, ensuring that microservices-based applications run smoothly.

Benefits:

Traffic Control: Advanced routing, retries, and timeouts.

Security: Mutual TLS authentication and authorization.

4. Chaos Engineering for Resilience Testing

Chaos engineering is gaining traction as an essential SRE technology for testing system resilience. By intentionally introducing failures into a system, teams can understand how services respond to disruptions and identify weak points. This proactive approach ensures that systems are resilient and capable of recovering from unexpected outages.

Key Tools:

Chaos Monkey: Simulates random instance failures to test resilience.

Gremlin: Offers a suite of tools to inject chaos at various levels of the infrastructure.

5. CI/CD Integration for Continuous Reliability

Continuous Integration and Continuous Deployment (CI/CD) pipelines are critical for maintaining system reliability in dynamic environments. Integrating SRE practices into CI/CD pipelines allows teams to automate testing and validation, ensuring that only stable and reliable code makes it to production. This integration also supports faster rollbacks and better incident management, enhancing overall system reliability.

Key Tools:

Jenkins & GitLab CI: Automate build, test, and deployment processes.

Spinnaker: Provides advanced deployment strategies, including canary releases and blue-green deployments.

6. Site Reliability as Code (SRaaC)

As SRE evolves, the concept of Site Reliability as Code (SRaaC) is emerging. SRaaC involves defining SRE practices and configurations in code, making it easier to version, review, and automate. This approach brings a new level of consistency and repeatability to SRE processes, enabling teams to scale their practices efficiently.

Key Tools:

Pulumi: Allows infrastructure and policies to be defined using familiar programming languages.

AWS CloudFormation: Automates infrastructure provisioning using templates.

7. Enhanced Security with DevSecOps

Security is a growing concern in SRE practices, leading to the integration of DevSecOps—embedding security into every stage of the development and operations lifecycle. SRE technologies are now incorporating automated security checks and compliance validation to ensure that systems are not only reliable but also secure.

Key Tools:

HashiCorp Vault: Manages secrets and encrypts sensitive data.

Aqua Security: Provides comprehensive security for cloud-native applications.

Conclusion

The landscape of SRE technologies is rapidly evolving, with new tools and methodologies emerging to meet the challenges of modern, distributed systems. From AI-driven automation to chaos engineering and beyond, these technologies are revolutionizing the way we approach system reliability. For organizations striving to deliver robust, scalable, and secure services, staying ahead of the curve with the latest SRE technologies is essential. As we move forward, we can expect even more innovation in this space, driving the future of reliability engineering.

0 notes

Text

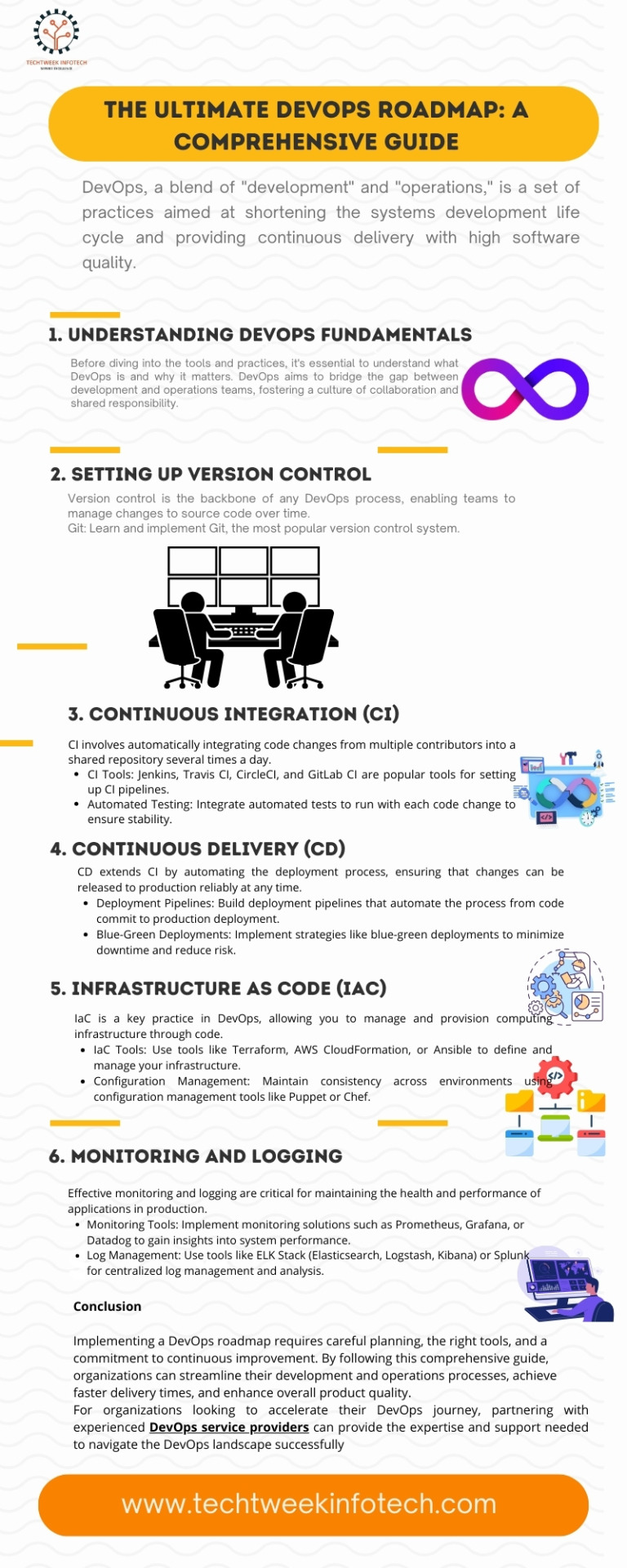

Introduction

The DevOps approach has revolutionized the way software development and operations teams collaborate, significantly improving efficiency and accelerating the delivery of high-quality software. Understanding the DevOps roadmap is crucial for organizations looking to implement or enhance their DevOps practices. This roadmap outlines the key stages, skills, and tools necessary for a successful DevOps transformation.

Stage 1: Foundation

1.1 Understanding DevOps Principles: Before diving into tools and practices, it's essential to grasp the core principles of DevOps. This includes a focus on collaboration, automation, continuous improvement, and customer-centricity.

1.2 Setting Up a Collaborative Culture: DevOps thrives on a culture of collaboration between development and operations teams. Foster open communication, shared goals, and mutual respect.

Stage 2: Toolchain Setup

2.1 Version Control Systems (VCS): Implement a robust VCS like Git to manage code versions and facilitate collaboration.

2.2 Continuous Integration (CI): Set up CI pipelines using tools like Jenkins, GitLab CI, or Travis CI to automate code integration and early detection of issues.

2.3 Continuous Delivery (CD): Implement CD practices to automate the deployment of applications. Tools like Jenkins, CircleCI, or Spinnaker can help achieve seamless delivery.

2.4 Infrastructure as Code (IaC): Adopt IaC tools like Terraform or Ansible to manage infrastructure through code, ensuring consistency and scalability.

Stage 3: Automation and Testing

3.1 Test Automation: Incorporate automated testing into your CI/CD pipelines. Use tools like Selenium, JUnit, or pytest to ensure that code changes do not introduce new bugs.

3.2 Configuration Management: Use configuration management tools like Chef, Puppet, or Ansible to automate the configuration of your infrastructure and applications.

3.3 Monitoring and Logging: Implement monitoring and logging solutions like Prometheus, Grafana, ELK Stack, or Splunk to gain insights into application performance and troubleshoot issues proactively.

Stage 4: Advanced Practices

4.1 Continuous Feedback: Establish feedback loops using tools like New Relic or Nagios to collect user feedback and performance data, enabling continuous improvement.

4.2 Security Integration (DevSecOps): Integrate security practices into your DevOps pipeline using tools like Snyk, Aqua Security, or HashiCorp Vault to ensure your applications are secure by design.

4.3 Scaling and Optimization: Continuously optimize your DevOps processes and tools to handle increased workloads and enhance performance. Implement container orchestration using Kubernetes or Docker Swarm for better scalability.

Stage 5: Maturity

5.1 DevOps Metrics: Track key performance indicators (KPIs) such as deployment frequency, lead time for changes, mean time to recovery (MTTR), and change failure rate to measure the effectiveness of your DevOps practices.

5.2 Continuous Learning and Improvement: Encourage a culture of continuous learning and improvement. Stay updated with the latest DevOps trends and best practices by participating in conferences, webinars, and training sessions.

5.3 DevOps as a Service: Consider offering DevOps as a service to other teams within your organization or to external clients. This can help standardize practices and further refine your DevOps capabilities.

Conclusion

Implementing a DevOps roadmap requires careful planning, the right tools, and a commitment to continuous improvement. By following this comprehensive guide, organizations can streamline their development and operations processes, achieve faster delivery times, and enhance overall product quality.

For organizations looking to accelerate their DevOps journey, partnering with experienced DevOps service providers can provide the expertise and support needed to successfully navigate the DevOps landscape.

1 note

·

View note

Text

Customers Can Continue Their GitOps Journey with Argo and OpsMx Following the Shutdown of Weaveworks

0 notes

Text

DevOps Tools and Toolchains

DevOps Course in Chandigarh,

DevOps tools and toolchains are crucial components in the DevOps ecosystem, helping teams automate, integrate, and manage various aspects of the software development and delivery process. These tools enable collaboration, streamline workflows, and enhance the efficiency and effectiveness of DevOps practices.

Version Control Systems (VCS): Tools like Git and SVN are fundamental for managing source code, enabling versioning, branching, and merging. They facilitate collaboration among developers and help maintain a history of code changes.

Continuous Integration (CI) Tools: Jenkins, Travis CI, CircleCI, and GitLab CI/CD are popular CI tools. They automate the process of integrating code changes, running tests, and producing build artifacts. This ensures that code is continuously validated and ready for deployment.

Configuration Management Tools: Tools like Ansible, Puppet, and Chef automate the provisioning and management of infrastructure and application configurations. They ensure consistency and reproducibility in different environments.

Containerization and Orchestration: Docker is a widely used containerization tool that packages applications and their dependencies. Kubernetes is a powerful orchestration platform that automates the deployment, scaling, and management of containerized applications.

Continuous Deployment (CD) Tools: Tools like Spinnaker and Argo CD facilitate automated deployment of applications to various environments. They enable continuous delivery by automating the release process.

Monitoring and Logging Tools: Tools like Prometheus, Grafana, ELK Stack (Elasticsearch, Logstash, Kibana), and Splunk provide visibility into application and infrastructure performance. They help monitor metrics, logs, and events to identify and resolve issues.

Collaboration and Communication Tools: Platforms like Slack, Microsoft Teams, and Jira facilitate communication and collaboration among team members. They enable seamless sharing of updates, notifications, and project progress.

Infrastructure as Code (IaC) Tools: Terraform, AWS CloudFormation, and Azure Resource Manager allow teams to define and manage infrastructure using code. This promotes automation, versioning, and reproducibility of environments.

Continuous Testing Tools: Tools like Selenium, JUnit, and Mocha automate testing processes, including unit tests, integration tests, and end-to-end tests. They ensure that code changes do not introduce regressions.

Security and Compliance Tools: Tools like SonarQube, OWASP ZAP, and Nessus help identify and mitigate security vulnerabilities and ensure compliance with industry standards and regulations.

In summary, DevOps tools and toolchains form the backbone of DevOps practices, enabling teams to automate, integrate, and manage various aspects of the software development lifecycle. These tools promote collaboration, efficiency, and reliability, ultimately leading to faster and more reliable software delivery.

0 notes

Photo

195 notes

·

View notes

Text

CI/CD for Cloud Success: Streamlining Deployment with Automation

In today’s fast-paced development landscape, businesses must deliver software quickly, reliably, and securely. Continuous Integration (CI) and Continuous Deployment (CD) have emerged as essential practices for achieving these goals. By combining CI/CD pipelines with cloud infrastructure, organizations can automate their deployment processes, reduce manual errors, and accelerate software delivery.

In this blog, we’ll explore the key benefits of CI/CD in cloud environments, best practices for implementation, and how automation drives deployment success.

Why CI/CD is Crucial for Cloud Success

Cloud environments are dynamic, with scalable infrastructure and frequent code updates. Manual deployment methods often lead to:

❌ Deployment Delays: Manual processes slow down release cycles. ❌ Inconsistent Environments: Configuration differences create deployment issues. ❌ Increased Downtime: Undetected bugs and failures disrupt services. ❌ Security Risks: Manual oversight may overlook vulnerabilities.

By implementing CI/CD pipelines, businesses can automate testing, deployment, and monitoring processes to ensure faster, more reliable cloud deployments.

Key Benefits of CI/CD for Cloud Deployments

✅ Faster Release Cycles: Automation accelerates code integration, testing, and deployment. ✅ Improved Code Quality: Frequent testing reduces bugs and vulnerabilities. ✅ Consistent Environments: CI/CD pipelines ensure identical configurations across development, staging, and production. ✅ Enhanced Collaboration: Developers, testers, and operations teams can collaborate efficiently. ✅ Reduced Downtime: Automated rollbacks minimize service disruptions during failed deployments.

Key CI/CD Practices for Successful Cloud Deployment

🔹 1. Automate Code Integration with CI Tools

Continuous Integration ensures developers merge code changes frequently. Automated builds and tests identify integration issues early.

✅ Implement automated builds to compile code on each commit. ✅ Use static code analysis tools to detect security vulnerabilities. ✅ Enable automated testing to validate code quality before deployment.

Tools: Jenkins, GitLab CI/CD, CircleCI, Travis CI

🔹 2. Use Infrastructure as Code (IaC) for Consistent Environments

Integrating IaC with CI/CD pipelines ensures infrastructure is deployed and configured automatically.

✅ Define cloud infrastructure using IaC tools. ✅ Automate environment provisioning to ensure consistency. ✅ Implement drift detection to identify and resolve configuration changes.

Tools: Terraform, AWS CloudFormation, Azure Bicep

🔹 3. Implement Automated Testing at Multiple Stages

Testing is crucial for ensuring application stability and security.

✅ Unit Tests: Identify issues in individual components. ✅ Integration Tests: Verify data flow between services. ✅ Performance Tests: Evaluate scalability and response times. ✅ Security Tests: Detect vulnerabilities before deployment.

Tools: Selenium, Cypress, JUnit, OWASP ZAP

🔹 4. Adopt Blue-Green and Canary Deployments for Safer Releases

These deployment strategies minimize downtime and reduce risks in production environments.

✅ Blue-Green Deployment: Run two identical environments — switch traffic to the new version only after successful testing. ✅ Canary Deployment: Gradually roll out changes to a small user base before full deployment.

Tools: AWS CodeDeploy, Spinnaker, Argo CD

🔹 5. Integrate Security into the CI/CD Pipeline (DevSecOps)

Embedding security practices throughout the pipeline ensures vulnerabilities are identified early.

✅ Automate security scans for dependencies, containers, and code. ✅ Enforce policies to prevent insecure code from reaching production. ✅ Use role-based access controls to restrict permissions.

Tools: SonarQube, Checkmarx, Snyk

🔹 6. Enable Continuous Monitoring for Proactive Issue Detection

Post-deployment monitoring ensures application performance and availability.

✅ Implement automated alerts for performance degradation, latency issues, and security threats. ✅ Use dashboards to track key metrics in real-time.

Tools: Prometheus, Grafana, Datadog

🔹 7. Automate Rollbacks for Faster Recovery

Automated rollbacks ensure seamless recovery in case of failed deployments.

✅ Detect deployment failures with automated health checks. ✅ Implement rollback logic to revert to the previous stable version automatically.

Best Practices for CI/CD Implementation in Cloud Environments

✅ Adopt a Pipeline-as-Code Approach: Define CI/CD pipelines in code for improved maintainability. ✅ Ensure Fast and Reliable Builds: Optimize pipelines for quick feedback loops. ✅ Implement Parallel Testing: Run tests concurrently to reduce build times. ✅ Use Feature Flags: Enable selective feature rollouts to minimize deployment risks. ✅ Enforce Access Controls: Secure your pipelines with role-based permissions.

Salzen Cloud’s Approach to CI/CD for Cloud Success

At Salzen Cloud, we help businesses design and implement powerful CI/CD pipelines to streamline deployment processes. Our solutions include:

✔️ End-to-end CI/CD pipeline automation for faster releases. ✔️ Seamless integration with cloud platforms like AWS, Azure, and Google Cloud. ✔️ Automated security testing to ensure secure deployments. ✔️ Real-time monitoring and rollback strategies for enhanced stability.

By combining robust automation frameworks with industry best practices, Salzen Cloud ensures businesses achieve reliable, efficient cloud deployments.

Conclusion

In the fast-moving world of cloud computing, CI/CD pipelines are essential for delivering high-quality software at speed. By automating builds, testing, and deployment processes, businesses can improve reliability, reduce errors, and scale efficiently.

Partner with Salzen Cloud to implement tailored CI/CD solutions that drive cloud success.

Ready to accelerate your cloud deployments? Contact Salzen Cloud today! 🚀

0 notes