#so i linked the github which has the steps and everything and they were like 'but it's not on [homebrew app]'


Text

I know a lot of people have been saying that tech literacy is falling bc a lot of younger people aren't properly taught how to use computers anymore and only interact with their devices through applications. and like, that made sense to me given how much tech education had already been cut when I was in school, but I didn't have enough contact with younger Gen Zers to have noticed the change myself. but god, now that I'm on reddit (mistake), there are so many of these people in homebrew/console hacking communities. it boggles my mind that there's an intersection of people who 1) are tech savvy enough to be interested in console hacking and 2) completely fall to pieces whenever something requires doing something on a PC and not the console itself. it's frustrating for the people trying to help them AND for them, I'd imagine. they don't have the tools they need.

#your pc and the console are friends. they like working together. let them work together#for reference I'm mostly talking about vita hacking communities here#you don't need a pc to hack the thing and there's a homebrew app to uh. acquire most games without needing to transfer data#so you can do a lot without using a pc! which is convenient#but some custom stuff and some games require a pc and you see people asking for help with basic related stuff all the time#someone was looking for an english patch of a specific game and i happened to have used a patcher for it before#so i linked the github which has the steps and everything and they were like 'but it's not on [homebrew app]'#well ok i can't help with that sorry#also i don't understand people that download pirated games directly to their vita in front of god and everyone sans vpn#maybe I'm paranoid though idk#reilly.txt

1 note


Note

Glances at your post about Gerry's name in the ARG files... Speaking as someone who totally missed the Magnus Protocol ARG... do you mind explaining it? Or point me in the direction of other posts/summaries/videos *etc* that break it down? Or is it very much a 'you had to be there' thing?

Cuz I keep seeing references to it and am very confused...

Hi!

I wasn't able to follow and keep on top of the TMAGP ARG when it was happening last year in September/October (it was a super busy time for me in my offline life), so I am definitely missing a lot of information.

There is a sort of "ARG explained" video on YouTube here which people tend to recommend, but um. I think it's very poorly structured: the speaker backtracks a lot as they remember things they forgot to mention, and adds in their own speculation. I didn't find it very cohesive and didn't watch the whole thing because I felt like it was just confusing me (but I appreciate their enthusiasm).

I just wanted a step-by-step chronological breakdown of everything from the ARG and what was found (in-universe or not), which I have not seen anyone post. You might get more out of it than me.

Rusty Quill's website has a summary of the ARG here, but it's a bit more focused on the overall structure of the ARG and doesn't really show how some solutions were found (at least not in ways that made sense to me), and doesn't always include images of what is being said?

At the end of the summary, Rusty Quill linked to a GitHub repository for various parts of the ARG. Most of it is code, which isn't very helpful to me, but you can access the text used for some of the ARG websites (which no longer work) as well as some images, and the Excel document that Gerry appears on. You sort of have to hunt around a bunch for files that aren't just code tho.

Based on RQ's summary and what I've heard, the ARG also gave players access to a forum with in-universe conversations. I don't know if there's anything interesting in there in terms of lore hints or if it was just a place for ARG clue hunting. I don't know how to access these forums or if anyone saved the text anywhere, but I sure would love to have a look :c

That's about all I know. I seriously doubt knowing anything about the ARG will actually matter in terms of following the story in TMAGP, but it's fun and interesting to see.

#ramblings of a bystander#tmagp arg#the magnus protocol#tmagp#tmagp spoilers#magpod#ask#answer#just-prime

11 notes


Text

@romancedeldiablo just reminded me that the entire cybersecurity/information security industry has been having the greatest field day ever since this whole COVID-19 thing triggered a mass work-from-home exodus.

I have so much to say about it and all the security issues that are occurring. This mostly pertains to the US. This isn’t meant to scare anyone; it’s just food for thought and a bit of explanation about my industry.

PSA: Not all hackers are bad, just a reminder. There are very legitimate reasons for hacking such as compliance and research. When I talk about hackers here, I’m talking about the bad ones who are exploiting without permission and for malicious reasons.

The main thing about this whole working from home thing is that most organizations don’t have the infrastructure to support their entire workforce remotely. Not every company uses Google Drive or OneDrive or Dropbox.

This means that companies with on-premises servers, isolated servers or networks are screwed. Imagine trying to connect to the computer of a friend who lives on the other side of the world and control their mouse. Can’t do it. Gotta download something on both ends to do it. Now imagine that for 500 people at home who are trying to connect to a single server. You’d need to open that server/network up to the internet. That has its own risks, because without controlling WHO can access the server, you’re basically allowing anyone (hackers especially) to go in and take all your data.

But then you ask, “Isn’t that what passwords are for?” BITCH look at your own passwords. Do you really think 500 people will have passwords strong enough to withstand a rainbow table attack, or that the server won’t shit itself when receiving 500 connections from unknown locations through a rarely used method? Hackers only need to exploit one password (for the most part) while the company needs to ensure ALL 500 are protected. That’s difficult as all hell, and if it were that easy, I wouldn’t have a job.
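To put rough numbers on that “one password versus all 500” asymmetry, here’s a small Python sketch. It assumes, purely for illustration, that every account independently has the same small chance of having a guessable password; real users aren’t that tidy, so treat this as back-of-the-envelope math, not a risk model.

```python
def chance_at_least_one_weak(p_weak: float, accounts: int) -> float:
    """Probability that at least one of `accounts` passwords is weak.

    Assumes every account is weak independently with the same
    probability `p_weak` (a simplification for illustration).
    """
    if not 0.0 <= p_weak <= 1.0:
        raise ValueError("p_weak must be between 0 and 1")
    if accounts < 0:
        raise ValueError("accounts must be non-negative")
    # Complement rule: P(at least one weak) = 1 - P(every account is strong)
    return 1.0 - (1.0 - p_weak) ** accounts


if __name__ == "__main__":
    # Hypothetical: 1% of users pick a guessable password.
    for n in (1, 50, 500):
        print(f"{n:>3} accounts: {chance_at_least_one_weak(0.01, n):.1%}")
```

With that made-up 1% rate, one account is 1% likely to be weak, 50 accounts about 39.5%, and 500 accounts over 99%. The attacker only needs one.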

Then there’s shit like Virtual Private Networks (VPNs) and RADIUS servers, which secure the network connection so it can’t be hijacked and handle authentication, respectively. Here’s the problem. VPN software needs to be installed on the client system (your computer). When your organization has very technically illiterate people, that becomes a nightmare, ’cause you have to set up their accounts on the VPN system and set the permissions for each of them so they can only access what they’re allowed to access. Otherwise, Bob from sales now has access to the HR system with everyone’s Social Security numbers. It’s very time consuming and can get very complicated. Even worse, VPNs often require licenses. When you only have 50 licenses and suddenly 500 people want access, you’re screwed. But you can always purchase more licenses, no problem. Here’s the rub: suddenly, this VPN tunnel needs to accept connections from 500 people, and it’s only built to handle 50 concurrent sessions. When 10x that amount get on, guess what? The tunnel shits itself and the company has basically DoS’d itself. Now no one can get any work done until IT figures out how to get 500 people onto a system that’s only capable of supporting 50.
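The “Bob from sales” problem is what least-privilege access control is for. Here’s a minimal Python sketch with made-up users, roles, and system names (none of them come from any real VPN product): each user maps to a role, each role maps to the systems it may reach, and anything not explicitly granted is denied.

```python
# Hypothetical users, roles, and systems, for illustration only.
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "sales": frozenset({"crm", "email"}),
    "hr": frozenset({"hr_system", "email"}),
}

USER_ROLES: dict[str, str] = {
    "bob": "sales",
    "alice": "hr",
}


def can_access(user: str, resource: str) -> bool:
    """Least privilege: allow only what the user's role explicitly grants."""
    role = USER_ROLES.get(user)
    if role is None:
        return False  # unknown users get nothing
    return resource in ROLE_PERMISSIONS.get(role, frozenset())


if __name__ == "__main__":
    print(can_access("bob", "crm"))        # True
    print(can_access("bob", "hr_system"))  # False: Bob from sales stays out of HR
```

Deny-by-default is the design choice doing the work here: forgetting to grant something annoys Bob, while forgetting to restrict something leaks everyone’s SSNs.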

Fuck, almost forgot about RADIUS. There’s DIAMETER, too, but shut up about it. RADIUS is an authentication system, but depending on how it’s set up, you’ll also have to set up the users in it. That’s an extra step, and it’s a pain in the ass if RADIUS somehow isn’t connected to AD (Active Directory) and the user has different passwords and shit.

Not to mention hackers suddenly gaining access to all this information because they’d already infected people’s home computers and routers before the work from home stuff started. IT has very limited control over what happens on a personal computer, so these personal computers may have no antivirus or security software at all. This means all data is in danger because someone decided Windows Defender is annoying. (Windows Defender is pretty great, btw.)

Physical robberies are occurring a little more because there’s no one to protect the stores and such. Physical security is taking a hell of a beating.

There’s been an increase in phishing scams around COVID-19. Unemployment sites are probably being (and probably already have been) hacked and the data is being stolen. I think there were some people who were creating fake unemployment sites to steal PII (personally identifiable information). There are e-mails going out to people saying stuff like, “Your computer has been infected with the CORONAVIRUS. Click here to clean it up.” And you’re wondering, “What sort of morons…?” Don’t. It’s very easy to give in to your panic. Hackers don’t just hack computers. They hack into human emotion, into the psyche. Anyone can fall for their shit.

The thing with Zoom? Basically, it’s so insecure that people are hacking into calls without issue. How? Because people are silly and post meeting links publicly, chat logs get saved onto insecure machines that have already been hacked, there are a bunch of exploits available for Zoom, etc.

Healthcare organizations. Oh boy. So, we all know healthcare organizations are working their damnedest to save people suffering from COVID-19. Every second counts and any delay in that process could mean life or death. They work hard. Here’s the thing. There has always been a delicate balance between security and usability. Too secure and it’ll make it difficult for the end user to do their job. Usable without security just makes it easier for an attacker to do their job. Why am I talking about this?

Healthcare organizations usually hold sensitive information. Health information. Social Security numbers. Birth dates. Addresses. Insurance information. Family member information. So much stuff. They are a beautiful target for hackers because all that shit is right there and it’s accessible. Healthcare organizations, by and large, do not put a lot of emphasis on security. That’s changing a bit, but for the most part, they don’t care about security. They do the bare minimum because guess what? Every additional control can add time to a doctor or healthcare worker’s routine. Computer lockscreen every 5 minutes? Now the doctor has to log back in every 5 minutes. This adds about 15 seconds to their routine. Multiply that several times over for every patient that comes in, assuming a doctor will need to log in at least 3 times during a single visit. That can add up to an hour or more throughout the day. An hour that they could’ve spent doing something else. So imagine more controls. Password needs to be reset. Need to badge in. Log into this extra program to access this file. Call IT because this thing locked them out. Each one of these normal controls now feels insanely restrictive. The ease of use isn’t there, and so organizations might look at reversing these security controls, potentially making things even less secure than before in the name of efficiency.
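The lockscreen math is easy to play with yourself. This Python sketch just multiplies patients × logins per visit × seconds per login; the 3 logins and 15 seconds come from the paragraph above, and the patient counts are hypothetical.

```python
def login_overhead_minutes(patients: int, logins_per_visit: int = 3,
                           seconds_per_login: float = 15.0) -> float:
    """Minutes per day spent re-authenticating, using the post's figures."""
    total_seconds = patients * logins_per_visit * seconds_per_login
    return total_seconds / 60.0


if __name__ == "__main__":
    for patients in (20, 40, 80):  # hypothetical daily patient counts
        print(f"{patients} patients: {login_overhead_minutes(patients):.0f} min")
```

With those figures, every 20 patients costs 15 minutes of logging back in, and 80 patients’ worth of visits adds up to a full hour; lockouts between visits and the extra controls listed above push it higher.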

Don’t @ me about HIPAA. I will start rants about how non-prescriptive and ineffective it is to actually get proper security implemented.

LOL @ internet service providers. Internet speeds are dropping due to the amount of traffic they’re getting. Commercial internet really wasn’t prepared for this. Those poor bastards.

Some organizations outsource their IT teams. Those people (Managed Service Providers aka MSPs) are not prepared for this nonsense. Going after these guys is popular with hackers right now. An MSP usually works for multiple organizations. So, why go after 50 organizations individually when one organization with poor security controls is managing all of them from one place? You’d logically go after the one rather than the 50. It’s easier.

MSPs are now overworked because they also have to work from home, connecting to systems that can’t support that many remote connections, on personal computers the MSP can’t log into like they normally would to fix issues. This makes them tired. What happens when you’re tired? You make more mistakes. And that’s exactly what hackers go after. Once they’re in the MSP’s system, the hacker can potentially gain access to all 50 clients’ systems. Easy win.

Shadow IT and alternate solutions. This is another doozy. Imagine all your files and shit are on your company’s network. No one is able to access it because there isn’t any VPN or remote sharing system or FTP server set up for this stuff, but you still need to do your job. So, what do you do? Obviously, you start making stuff on your own computer using whatever you’re comfortable with. Google Drive. Dropbox. Box. Slack. That shitty PDF reader you downloaded three years ago and didn’t update.

Now imagine sharing it through things like your personal e-mail, which may or may not have been hacked without your knowledge. Or maybe the recipient’s been hacked without anyone’s knowledge. Maybe your files are normally encrypted when they’re on the company network. Now you’re off of it and nothing’s encrypted. Maybe you forget to delete a file or 80 off of your system, which has been infected. Or maybe you pasted shit on Pastebin or GitHub and it’s available to the public because that’s just easier. Now anyone searching can find it. This is how database dumps are found sometimes, and they’re really entertaining.

Shadow IT putting in alternate solutions without the company’s knowledge is always a fucking nightmare. I get that people need to do their jobs and want to do things a certain way, but can you not be selfish and put everyone at risk because you decided it’s your way or the highway?

That sounds awfully familiar…it feels like a situation that we’re going through right now…hey, wait a minute…

Long story short, this whole working from home thing opens up a lot of security issues. Most companies are ill-equipped to handle IT issues, let alone cybersecurity/information security/IT security issues, and because of that, we’re seeing a lot of interesting things happening, such as finding out that New Jersey’s unemployment system runs on COBOL, a 60+ year old programming language.

Holy shit I can talk about this all day. I’ve definitely glossed over a lot of stuff and oversimplified it. If anyone wants me to talk about any specific topic related to this or cybersecurity or information security in general, drop an ask. I’m always, always more than happy to talk about it.

26 notes


Text

Version 330

youtube

windows: zip, exe

os x: app, tar.gz

linux: tar.gz

source: tar.gz

I had a great week. There are some more login scripts and a bit of cleanup and speed-up.

The poll for what big thing I will work on next is up! Here are the poll + discussion thread:

https://www.poll-maker.com/poll2148452x73e94E02-60

https://8ch.net/hydrus/res/10654.html

login stuff

The new 'manage logins' dialog is easier to work with. It now shows when it thinks a login will expire, permits you to enter 'empty' credentials if you want to reset/clear a domain, and has a 'scrub invalid' button to reset a login that fails due to server error or similar.

After tweaking for the problem I discovered last week, I was able to write a login script for hentai foundry that uses a username and password. It should inherit the filter settings in your user profile, so you can now easily exclude the things you don't like! (the click-through login, which hydrus has been doing for ages, sets the filters to allow everything every time it works) Just go into manage logins, change the login script for www.hentai-foundry.com to the new login script, and put in some (throwaway) credentials, and you should be good to go.

I am also rolling out login scripts for shimmie, sankaku, and e-hentai, thanks to Cuddlebear (and possibly other users) on the github (which, reminder, is here: https://github.com/CuddleBear92/Hydrus-Presets-and-Scripts/tree/master/Download%20System ).

Pixiv seem to be changing some of their login rules, as many NSFW images now work for a logged-out hydrus client. The pixiv parser handles 'you need to be logged in' failures more gracefully, but I am not sure if that even happens any more! In any case, if you discover some class of pixiv URLs are giving you 'ignored' results because you are not logged in, please let me know the details.

Also, the Deviant Art parser can now fetch a sometimes-there larger version of images and only pulls from the download button (which is the 'true' best, when it is available) if it looks like an image. It should no longer download 140MB zips of brushes!

other stuff

Some kinds of tag searches (usually those on clients with large inboxes) should now be much faster!

Repository processing should also be faster, although I am interested in how it goes for different users. If you are on an HDD or have otherwise seen slow tag rows/s, please let me know if you notice a difference this week, for better or worse. The new system essentially opens the 'new tags m8' firehose pretty wide, but if that pressure is a problem for some people, I'll give it a more adaptable nozzle.
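As a rough illustration of what a 'more adaptable nozzle' could look like, here is a Python sketch that processes rows in chunks and halves or doubles the chunk size depending on how long the last chunk took. This is not hydrus's actual code; the function name, the one-second target, and the size limits are all assumptions. The clock is passed in so the behaviour can be tested without real timing.

```python
import time
from typing import Callable, List, Sequence


def process_in_adaptive_chunks(
    rows: Sequence,
    handle_chunk: Callable[[Sequence], None],
    start_size: int = 50_000,
    target_seconds: float = 1.0,
    min_size: int = 1_000,
    max_size: int = 200_000,
    clock: Callable[[], float] = time.perf_counter,
) -> List[int]:
    """Process `rows` in chunks, resizing so each chunk takes about
    `target_seconds`. Returns the size of every chunk actually processed."""
    if start_size < 1 or min_size < 1:
        raise ValueError("chunk sizes must be at least 1")
    sizes: List[int] = []
    size = start_size
    i = 0
    while i < len(rows):
        chunk = rows[i:i + size]
        started = clock()
        handle_chunk(chunk)
        elapsed = clock() - started
        sizes.append(len(chunk))
        i += len(chunk)
        if elapsed > target_seconds:
            # Slow disk or busy machine: ease off the firehose.
            size = max(min_size, size // 2)
        elif elapsed < target_seconds / 2:
            # Plenty of headroom: open it up.
            size = min(max_size, size * 2)
    return sizes
```

The idea is that an SSD user would quickly climb toward the maximum chunk size, while someone on a slow HDD would settle on smaller chunks instead of stalling on one huge one.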

Many of the various 'select from a list of texts' dialogs across the program will now size themselves bigger if they can. This means, for example, that the gallery selector should now show everything in one go! The manage import/export folder dialogs are also moved to the new panel system, so if you have had trouble with these and a small screen, let me know how it looks for you now.

The duplicate filter page now has a button to edit your various duplicate merge options. The small button on the viewer was too-easily missed, so this should make it a bit easier!

full list

login:

added a proper username/password login script for hentai foundry--double-check your hf filters are set how you want in your profile, and your hydrus should inherit the same rules

fixed the gelbooru login script from last week, which typoed safebooru.com instead of .org

fixed the pixiv login 'link' to correctly say nsfw rather than everything, which wasn't working right last week

improved the pixiv file page api parser to veto on 'could not access nsfw due to not logged in' status, although in further testing, this state seems to be rarer than previously/completely gone

added login scripts from the github for shimmie, sankaku, and e-hentai--thanks to Cuddlebear and any other users who helped put these together

added safebooru.donmai.us to danbooru login

improved the deviant art file page parser to get the 'full' embedded image link at higher preference than the standard embed, and only get the 'download' button if it looks like an image (hence, deviant art should stop getting 140MB brush zips!)

the manage logins panel now says when a login is expected to expire

the manage logins dialog now has a 'scrub invalidity' button to 'try again' a login that broke due to server error or similar

entering blank/invalid credentials is now permitted in the manage logins panel, and if entered on an 'active' domain, it will additionally deactivate it automatically

the manage logins panel is better at figuring out and updating validity after changes

the 'required cookies' in login scripts and steps now use string match names! hence, dynamically named cookies can now be checked! all existing checks are updated to fixed-string string matches

improved some cookie lookup code

improved some login manager script-updating code

deleted all the old legacy login code

misc login ui cleanup and fixes
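The point of string-match cookie names in the list above is that a login check can accept cookies whose names change from session to session. A minimal Python sketch of the idea, where `NameMatch` is a hypothetical stand-in (not hydrus's real string match class) that compares either against a fixed string or a full regular expression:

```python
import re
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class NameMatch:
    """Hypothetical 'string match': a fixed string or a full-match regex."""
    pattern: str
    is_regex: bool = False

    def matches(self, name: str) -> bool:
        if self.is_regex:
            return re.fullmatch(self.pattern, name) is not None
        return name == self.pattern


def missing_required_cookies(cookie_names: Iterable[str],
                             required: List[NameMatch]) -> List[NameMatch]:
    """Return the required matchers that no cookie name satisfies."""
    names = list(cookie_names)  # accept any iterable; scanned once per matcher
    return [m for m in required if not any(m.matches(n) for n in names)]


if __name__ == "__main__":
    required = [
        NameMatch("PHPSESSID"),                          # fixed name
        NameMatch(r"session_[0-9a-f]+", is_regex=True),  # dynamic name
    ]
    print(missing_required_cookies(["PHPSESSID", "session_4f2a"], required))  # []
```

An existing fixed-name check maps onto `NameMatch("name")` unchanged, which mirrors the note that old checks were converted to fixed-string matches.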

.

other:

sped up tag searches in certain situations (usually huge inbox) by using a different optimisation

increased the repository mappings processing chunk size from 1k to 50k, which greatly speeds up processing in certain situations. let's see how it goes for different users--I may revisit the pipeline here to make it more flexible for faster and slower hard drives

many of the 'select from a list of texts' dialogs--such as when you select a gallery to download from--are now on the new panel system. the list will grow and shrink depending on its length and available screen real estate

.

misc:

extended my new dialog panel code so it can ask a question before an OK happens

fixed an issue with scanning through videos that have non-integer frame-counts due to previous misparsing

fixed an issue where file import objects that have been removed from the list but were still lingering on the list ui were not rendering their (invalid) index correctly

when export folders fail to do their work, the error is now presented in a better way and all export folders are paused

fixed an issue where the export files dialog could not boot if the most recent export phrase was invalid

the duplicate filter page now has a button to more easily edit the default merge options

increased the sibling/parent refresh delay from 1s to 8s

hydrus repository sync failures due to network login issues or a manual network cancel by the user will now be caught properly, and a reasonable delay added

additional errors on repository sync will cause a reasonable delay on future work but still elevate the error

converted import folder management ui to the new panel system

refactored import folder ui code to ClientGUIImport.py

converted export folder management ui to the new panel system

refactored export folder ui code to the new ClientGUIExport.py

refactored manual file export ui code to ClientGUIExport.py

deleted some very old imageboard dumping management code

deleted some very old contact management code

did a little prep work for a 'show background image behind thumbs' option, including the start of a bitmap manager. I'll give it another go later
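For the repository sync items in the list above, one way to picture 'catch it and add a reasonable delay' versus 'delay but still raise the error' is this Python sketch. The exception classes, the `SyncScheduler` name, and the one-hour default are hypothetical, not taken from hydrus.

```python
import time
from typing import Callable


class LoginError(Exception):
    """Hypothetical: the network login for the repository failed."""


class UserCancelled(Exception):
    """Hypothetical: the user cancelled the network job by hand."""


class SyncScheduler:
    """Hypothetical sketch: delay future sync work after a failure."""

    def __init__(self, delay_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.time) -> None:
        self.delay_seconds = delay_seconds
        self.clock = clock
        self.no_work_until = 0.0

    def can_sync_now(self) -> bool:
        return self.clock() >= self.no_work_until

    def run(self, sync_step: Callable[[], None]) -> None:
        if not self.can_sync_now():
            return  # still inside the delay window
        try:
            sync_step()
        except (LoginError, UserCancelled):
            # Expected failures: handle quietly and just wait a while.
            self.no_work_until = self.clock() + self.delay_seconds
        except Exception:
            # Anything else: still wait, but re-raise so the error is reported.
            self.no_work_until = self.clock() + self.delay_seconds
            raise
```

Either way, the client stops hammering a repository that just failed, but only unexpected errors get surfaced to the user.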

next week

I have about eight jobs left on the login manager, which are mostly a manual 'do login now' button on manage logins and some help on how to use the system and make new login scripts in it. I feel good about it overall and am thankful it didn't explode completely. Beyond finishing this off, I plan to continue doing small work like ui improvement and cleanup until the 12th December, when I will take about four weeks off over the holiday to update to python 3. In the new year, I will begin work on what gets voted on in the poll.

2 notes


Text

The beginning of the major project story

In October 2020 I began putting ideas together for a project. Something that I wanted to last, to become part of my life on a long-term basis; something I cared about. At the time of writing this (January 2021), I cannot for the life of me remember what those initial ideas were. I had spent the summer reading and reflecting on my creative practice. The pandemic was going on way longer than I thought it would, and it had started to expose a lot of things for me that were just hiding in plain sight. I had many conversations with friends (Squad) over Zoom and ‘the group chat’ about internet cultures and the impact URL life is having on IRL life. Generally speaking, we were finding the divide between the internet that we love and the internet that was pissing us off, and trying to find out why we were getting so miffed about certain things.

We had been talking a lot about Spotify, about how we didn’t like the network effect that pushes musicians to release music on there despite the remuneration system seeming so unfair. I use Spotify to listen to a lot of music, so there’s definitely some cognitive dissonance going on there. I get that it’s convenient for listeners. And I also get that getting your track in a popular playlist can get you loads of streams (and so maybe earn a bit of money). But as a group we reflected on the namelessness of this system: how easy it was to leave a playlist running and not know who or what you are listening to, even if asked. "Ah, it’s on this playlist" was a phrase we discussed a fair bit. You might argue that this system allows for greater music exploration, finding things you’ve never heard before. And you’d be right. But radio does this and I have no gripes with radio. What’s all that about?

Artist and Computer Person Elliott Cost wrote a short paper on the vastness of a website. In it he talks about how over the last few years… "platforms have stripped away any hint of how vast they actually are. As a result, users only get to see a tiny sliver of an entire platform. There’s been an overwhelming push to build tools specifically designed for engagement (like buttons, emoji responses, comment threading) instead of building tools that help users actually explore. This has replaced any sense of play with a bleak struggle for users attention. The marketing line for these new tools could easily be, ‘engage more, explore less.’" He tries to combat this in the websites he designs by adding explore buttons that randomise content, for example. You can see this in action in a website he contributed to called The Creative Independent. "One thing we did implement was a random button that served up a random interview from over 600 articles across the site. I ended up moving this button into the main navigation so that readers could continue to click the button until they found an interview that interested them. It’s fairly easy to implement a ‘randomized items/articles’ section on a website. In the case of The Creative Independent, this simple addition revealed how expansive the site really was.”

https://elliott.computer/pages/exploring-the-vastness-of-a-website.html

Sticking with the website theme, another thing we discussed as a Squad was the rise of Web 3.0 models compared to our current Web 2.0 models. We’d all done some listening to and reading of Jaron Lanier, who, after writing a few books about the future of big data and the potential to monetise your own, eventually just wrote a really on-the-nose book about getting off social media. It’s called ‘Ten Arguments for Deleting Your Social Media Accounts Right Now’. To the point, right?

After feeling the negative effects of social media throughout 2018 and 2019, I’d reached breaking point, and this book tipped me over the edge to try going cold turkey. It was surprisingly easy and I loved being away from it all, especially Instagram. That app can do things to you. For quite some time I was so obsessed with crafting the perfect post for my music and creative practice that I stopped making my core content to focus on keeping up appearances on Instagram. I don’t think it works like this for everyone. Perhaps some people are more susceptible to the allure of its powers. Maybe it’s rooted in some insecurities. Either way, the network of people I was following, and who were following me back, was certainly not social. Our relationships were built on tokenistic and obligatory likes and comments. The FOMO was hitting hard and I wasn’t getting anywhere with my art and music. I’m still off Instagram, all Facebook platforms in fact. I got rid of WhatsApp and forced my friends to use Signal. Cos that’s what you do to people you love, shine a light down on anything toxic in their life while sitting on that high horse.

I have returned to Twitter, months and months after being away from everything, because I’m trying to start a record label during a pandemic. You can’t meet up with anyone or go anywhere, so how am I supposed to do guerrilla marketing if everyone is staring at their computer at home every day? I could’ve come up with something online perhaps, and perhaps I might still. But for now I’ve jumped on Twitter and am just following everyone in Cardiff involved with music. I’m playing the spam game until we can go outside again. Then I’ll delete that little blue bird from my computer again. I appreciate that these networks are useful and convenient, and there aren’t any good alternatives with the same network effect. But the thing that Lanier said that really struck me was this idea that there need to be enough people on the outside of it all to show others that it can be done. So until something better comes along, I am happy to sit outside of it all. Jenny Odell is helping me through this with her book “How to Do Nothing.”

As we discussed this as a Squad, we noticed that much of what we were talking about was about aligning your actions with your values. It’s something seemingly impossible to maintain in all aspects of your life, but I genuinely think the more you can do this, the happier you’ll be. We do it in so many other aspects of our lives, so I wondered why it was so difficult for musicians who hate Spotify not to use it, or for those riddled with anxiety not to use Instagram. I think a huge factor in this is down to that word convenience again. Now, convenience is king. But, “At what cost?” I will ask. For every few seconds shaved off, kJ of energy saved, or steps reduced in completing a task or getting something, there are hidden costs elsewhere that the consumer doesn’t have to worry about. And I think this is worrying. Not that I think things should be deliberately inconvenient for people. But on reflection, I am happy for things to be a little ‘anti-convenient’: for processes of consumption and creation to have that extra step I do myself perhaps, or for it to take that little bit longer for a package to get to me. Or even for me to spend some time learning how to do whole processes myself.

Anyway, back to those Web3 chats… The Squad noticed that the new Web seems to include glimmers of Web 1.0 and the return of personal websites, as well as newer ideas like decentralised systems of exchange. Artists who can do a bit of coding and seasoned web designers alike are creating an online culture that focuses on liberating the website and our online presence from platform capitalism. Instructions for how to set up your own social network (https://runyourown.social) are readily available with a quick search, and calls for a community-focused web are commonplace from those dying to get off Twitter and live in their own corner of the internet with their Squad, interconnected with other Squads.

What’s this got to do with Third Nature? Well, it means I decided to build our website from scratch using simple HTML and CSS. I intend to maintain this and eventually try to move the hosting from GitHub Pages over to a personal server run on a Raspberry Pi. There is a link between the anti-Spotify movement and the pro-DIY-website culture, which is that ‘aligning your actions with your values’ thing. Before Third Nature had a website, though (before it was called Third Nature, for that matter), I had this idea… What if there were an alternative to Spotify that was as fair as the #BrokenRecord campaign wanted it to be? I could so have a go at making that. Maybe on a small scale. Like for Cardiff, and then expand. After sharing the idea with the Squad, though, we did some research and actually came across a few music platforms that were doing these types of things. More on this in the next post…

0 notes

Text

How to Build a Blog with Gatsby and Netlify CMS – A Complete Guide

In this article, we are going to build a blog with Gatsby and Netlify CMS. You will learn how to install Gatsby on your computer and use it to quickly develop a super fast blog site.

You are also going to learn how to add Netlify CMS to your site by creating and configuring files, then connecting the CMS to your site through user authentication.

And finally, you'll learn how to access the CMS admin so that you can write your first blog post.

The complete code for this project can be found here.

Here's a brief introduction to these tools.

What is Gatsby?

Gatsby is a free and open-source framework based on React that helps you build fast websites and web apps. It is also a static site generator like Next.js, Hugo, and Jekyll.

It includes SEO (Search Engine Optimization), accessibility, and performance optimization from the get-go. This means that it will take you less time to build production-ready web apps than if you were building with React alone.

What is Netlify CMS?

Netlify CMS is a CMS (Content Management System) for static site generators. It is built by the same people who made Netlify. It lets you create and edit content much as you would in WordPress, but through a much simpler and more user-friendly interface.

The main benefit of Netlify CMS is that you don't have to create Markdown files by hand every time you want to write a post. This is useful for content writers who don't want to deal with code, text editors, repositories, or anything else to do with tech; they can just focus on writing articles.

Alright, without any further ado, let's start building the blog!

But before we get going, a quick heads up: This guide requires prior knowledge of JavaScript and React. If you are not comfortable with these tools yet, I've linked the resources at the end of the article to help you brush up on those skills.

Even if you're new to those technologies, I tried to make this guide as simple as I could so you can follow along.

How to set up the environment

Before we can build Gatsby sites, we have to make sure that we have installed all the right software required for the blog.

Install Node.js

Node.js is an environment that can run JavaScript code outside of a web browser.

It is a tool that allows you to write backend server code instead of using other programming languages such as Python, Java, or PHP. Gatsby is built with Node.js and that's why we need to install it on our computer.

To install Node.js, go to the download page and download it based on your operating system.

When you are done following the installation prompts, open the terminal and run node -v to check that it was installed correctly. At the time of writing, the version should be 12.18.4 or above.
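If you'd like to script the same check that node -v gives you, here is a minimal sketch. The file name check-node-version.js and its helper functions are hypothetical. The 12.18.4 minimum comes from this guide and may be out of date for newer Gatsby releases.

```javascript
// check-node-version.js (hypothetical helper): compares the running Node.js
// version with the minimum mentioned in this guide.
const MIN_VERSION = "12.18.4";

// Turn "v12.18.4" or "12.18.4" into [12, 18, 4] so parts compare as numbers.
function parseVersion(version) {
  return version.replace(/^v/, "").split(".").map(Number);
}

// True when `actual` is the same as or newer than `minimum`.
function isAtLeast(actual, minimum) {
  const a = parseVersion(actual);
  const m = parseVersion(minimum);
  for (let i = 0; i < m.length; i++) {
    const part = a[i] || 0; // treat missing or non-numeric parts as 0
    if (part > m[i]) return true;
    if (part < m[i]) return false;
  }
  return true; // every part is equal
}

// process.version is a string such as "v12.18.4".
const ok = isAtLeast(process.version, MIN_VERSION);
console.log(ok ? `Node ${process.version} is fine.` : `Please upgrade Node to ${MIN_VERSION} or later.`);
```

Run it with node check-node-version.js. The parts are compared as numbers because a plain string comparison would rank "9.0.0" above "12.18.4".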

Install Git

Git is a free and open-source distributed version control system that helps you manage your coding projects efficiently.

Gatsby starters use Git to download and install their required files, which is why you need to have Git on your computer.

To install Git, follow the installation instructions for your operating system.

Install Gatsby CLI

Gatsby CLI (Command Line Interface) is the tool that lets you build Gatsby-powered sites. With it, we can create new Gatsby sites and add the plugins we want.

To install Gatsby CLI, open the terminal and run this command:

npm install -g gatsby-cli

Once everything is set up, we are ready to build our first Gatsby site.

How to build a Gatsby site

In this guide, we're going to use the official Gatsby blog starter, but you're free to choose any starter from the Gatsby Starter Library. I personally use a LekoArts theme because the design is minimalist and beautiful, and it has a dark mode.

In the terminal, run this command to create the new Gatsby blog:

gatsby new foodblog https://github.com/gatsbyjs/gatsby-starter-blog

Note for Windows users: If you encounter "Error: Command failed with exit code 1: yarnpkg" while creating a Gatsby site, see this page to troubleshoot it. You may have to clean up dependencies from old Yarn installations or follow the Gatsby on Windows instructions.

What does this command mean exactly? Let me explain.

new - This is the command that creates a new Gatsby project.

foodblog - This is the name of the project. You can name it whatever you want here. I named this project foodblog as an example only.

The URL (https://github.com/gatsbyjs/gatsby-starter-blog) - This URL points to the code repository that holds the starter code you want to use. In other words, it picks the starter for the project.

Once the installation is complete, we'll run the cd foodblog command, which takes us into the project folder.

cd foodblog

Then we'll run gatsby develop, which starts a development server on your local machine. Depending on the specs of your computer, it may take a little while to start up fully.

gatsby develop

Open a new tab in your browser and go to http://localhost:8000/. You should now see your new Gatsby site!

How a Gatsby starter blog homepage looks

Now that we've created the blog, the next step is to add Netlify CMS to make writing blog posts easier.

How to add Netlify CMS to your site

Adding Netlify CMS to your Gatsby site involves 4 major steps:

app file structure,

configuration,

authentication, and

accessing the CMS.

Let's tackle each of these stages one at a time.

How to set up the app's file structure

This section deals with the file structure of your project. We are going to create the files that will hold all of the Netlify CMS code.

When you open your text editor, you will see a lot of files. You can read this article if you are curious about what each of these files does.

├── node_modules
├── src
├── static
├── .gitignore
├── .prettierrc
├── gatsby-browser.js
├── gatsby-config.js
├── gatsby-node.js
├── gatsby-ssr.js
├── LICENSE
├── package-lock.json
├── package.json
└── README.md

Do not worry about all these files — we are going to use very few of them here.

What we are looking for is the static folder. This folder will hold the main structure of Netlify CMS.

If your project does not have a static folder, create one at the root directory of your project.

Inside the static folder, create an admin folder. Inside this folder, create two files, index.html and config.yml:

admin
├── index.html
└── config.yml
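If you prefer to create these folders and files from the terminal, the following Node.js sketch does it. scaffoldAdmin is a hypothetical helper, not part of Gatsby or Netlify CMS. It only creates empty files, so you still need to paste in the contents described below.

```javascript
// scaffold-admin.js (hypothetical helper): creates static/admin/index.html and
// static/admin/config.yml without overwriting files that already exist.
const fs = require("fs");
const path = require("path");

function scaffoldAdmin(projectRoot) {
  const adminDir = path.join(projectRoot, "static", "admin");
  // recursive: true also creates "static" if the project lacks it.
  fs.mkdirSync(adminDir, { recursive: true });

  const created = [];
  for (const name of ["index.html", "config.yml"]) {
    const file = path.join(adminDir, name);
    if (!fs.existsSync(file)) {
      fs.writeFileSync(file, ""); // empty placeholder to fill in by hand
      created.push(file);
    }
  }
  return created;
}

// Usage: node scaffold-admin.js path/to/foodblog
const root = process.argv[2];
if (root) {
  console.log("Created:", scaffoldAdmin(root));
}
```

Running it twice is safe. The second run finds both files already present and creates nothing.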

The first file, index.html, is the entry point to your CMS admin. This is where Netlify CMS lives. You don't need to do styling or anything as it is already done for you with the script tag in the example below:

<!doctype html>
<html>
<head>
  <meta charset="utf-8" />
  <meta name="viewport" content="width=device-width, initial-scale=1.0" />
  <title>Content Manager</title>
</head>
<body>
  <script src="https://unpkg.com/netlify-cms@^2.0.0/dist/netlify-cms.js"></script>
</body>
</html>

The second file, config.yml, is the core of Netlify CMS. It's a bit more involved, because this is where we configure the backend. We'll talk more about it in the configuration section.

How to configure the back end

In this guide, we are using Netlify for hosting and authentication and so the backend configuration process should be relatively straightforward. Add all the code snippets in this section to your admin/config.yml file.

We'll begin by adding the following code:

backend:
  name: git-gateway
  branch: master

Heads up: This code above works for GitHub and GitLab repositories. If you're using Bitbucket to host your repository, follow these instructions instead.

The code we just wrote specifies your backend protocol and your publication branch (which is branch: master). Git Gateway is an open-source API that acts as a proxy between authenticated users of your site and your site repository. I'll explain more about what this does in the authentication section.

Next up, we will add media_folder: "images/uploads". This tells the CMS where to store media files, like photos, that you upload through the CMS, so you won't need to add them manually with a text editor.

media_folder: "images/uploads"

Make sure you create a folder called images at the root of your project, with an uploads folder inside it. Netlify CMS resolves media_folder relative to the root of your repository, and this is where your uploaded images will be stored.

Configure Collections

The collections will define the structure for the different content types on your static site. As every site can be different, how you configure the collection's settings will differ from one site to another.

Let's just say your site has a blog, with the posts stored in content/blog, and files saved in a date-title format, like 2020-09-26-how-to-make-sandwiches-like-a-pro.md. Each post begins with settings in the YAML-formatted front matter in this way:

---
layout: blog
title: "How to make sandwiches like a pro"
date: 2020-09-26 11:59:59
thumbnail: "/images/sandwich.jpg"
---
This is the post body where I write about how to make a sandwich so good that it will impress Gordon Ramsay.

For the example above, this is how you would add the collection settings to your Netlify CMS config.yml file:

collections:
  - name: "blog"
    label: "Blog"
    folder: "content/blog"
    create: true
    slug: "{{year}}-{{month}}-{{day}}-{{slug}}"
    fields:
      - {label: "Layout", name: "layout", widget: "hidden", default: "blog"}
      - {label: "Title", name: "title", widget: "string"}
      - {label: "Publish Date", name: "date", widget: "datetime"}
      - {label: "Body", name: "body", widget: "markdown"}

Let's examine what each of these fields does:

name: This one is used in routes like /admin/collections/blog

label: This one is used in the UI (User Interface). When you are in the admin page, you will see a big word "Blog" on the top of the screen. That big word "Blog" is the label.

folder: This one points to the file path where your blog posts are stored.

create: This one lets the user (you or whoever has admin access) create new documents (blog posts in this case) in these collections.

slug: This one is the template for filenames. {{year}}, {{month}}, and {{day}} are pulled from the post's date field or save date. {{slug}} is a URL-safe version of the post's title. By default it is {{slug}}.
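To make the slug template concrete, here is a sketch that expands {{year}}-{{month}}-{{day}}-{{slug}} for the sandwich post. renderSlug and toUrlSafe are hypothetical helpers. The real CMS applies its own sanitizing rules (for example, for non-ASCII characters), so its exact output can differ.

```javascript
// Hypothetical helper: lowercase the title and turn every run of characters
// other than a-z and 0-9 into a single hyphen.
function toUrlSafe(title) {
  return title
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")
    .replace(/^-+|-+$/g, ""); // trim hyphens at either end
}

// Hypothetical helper: fills {{year}}, {{month}}, {{day}} and {{slug}}.
function renderSlug(template, { title, date }) {
  const pad = n => String(n).padStart(2, "0");
  const values = {
    year: String(date.getFullYear()),
    month: pad(date.getMonth() + 1), // getMonth() is 0-based
    day: pad(date.getDate()),
    slug: toUrlSafe(title),
  };
  // Unknown placeholders are left as they are.
  return template.replace(/\{\{(\w+)\}\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(values, name) ? values[name] : match
  );
}

const fileName = renderSlug("{{year}}-{{month}}-{{day}}-{{slug}}", {
  title: "How to make sandwiches like a pro",
  date: new Date(2020, 8, 26, 11, 59, 59), // 8 = September
});
console.log(`${fileName}.md`); // prints 2020-09-26-how-to-make-sandwiches-like-a-pro.md
```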

The fields are where you can customize the content editor (the page where you write the blog post). You can add stuff like ratings (1-5), featured images, meta descriptions, and so on.

For instance, in this particular code, we add curly braces {}. Inside them we write label with the value "Publish Date" which will be the label in the editor UI.

The name field is the name of the field in the front matter and we name it "date" since the purpose of this field is to enter the date input.

And lastly, the widget determines which input control appears in the editor UI and the type of data it accepts. In this case, we wrote "datetime", which means the field accepts a date and time.

- {label: "Publish Date", name: "date", widget: "datetime"}

You can check the list right here to see what exactly you can add. If you want, you can even create your own widgets, too. For the sake of brevity, we'll try to keep things simple here.
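To see how the fields list relates to the files the CMS writes, here is a rough sketch that turns field values into a Markdown file with front matter. serializeEntry is a hypothetical helper that assumes flat string values and writes them with JSON-style double quotes, which YAML also accepts. Netlify CMS itself uses a proper YAML serializer and its own date formatting.

```javascript
// Mirrors the "fields" list from config.yml.
const fields = [
  { label: "Layout", name: "layout", widget: "hidden", default: "blog" },
  { label: "Title", name: "title", widget: "string" },
  { label: "Publish Date", name: "date", widget: "datetime" },
  { label: "Body", name: "body", widget: "markdown" },
];

// Hypothetical helper: every field except "body" becomes a front matter key;
// "body" becomes the text after the closing ---.
function serializeEntry(fields, values) {
  const lines = ["---"];
  for (const field of fields) {
    if (field.name === "body") continue;
    // Fields the editor never fills in (like the hidden layout) use their default.
    const value = values[field.name] !== undefined ? values[field.name] : field.default;
    if (value === undefined) continue;
    // JSON.stringify wraps strings in double quotes, which YAML also accepts.
    lines.push(`${field.name}: ${JSON.stringify(value)}`);
  }
  lines.push("---", values.body || "");
  return lines.join("\n");
}

console.log(
  serializeEntry(fields, {
    title: "How to make sandwiches like a pro",
    date: "2020-09-26 11:59:59",
    body: "This is the post body.",
  })
);
```

Adding a new entry to fields, such as a thumbnail, simply adds another front matter key. That is why customizing fields is all it takes to change what the editor collects.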

Enable Authentication

At this point, we are nearly done with the installation and configuration of Netlify CMS. Now it's time to connect your Gatsby site to the CMS by enabling authentication.

We'll add some HTML code and then activate some features from Netlify. After that, you are on the way to creating your first blog post.

We are going to need a way to connect a front end interface to the backend so that we can handle authentication. To do that, add this HTML script tag to two files:

<script src="https://identity.netlify.com/v1/netlify-identity-widget.js"></script>

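The first file to add this script tag to is admin/index.html; place it between the <head> tags. The second file is public/index.html, where it also goes between the <head> tags. Keep in mind that Gatsby regenerates the public folder on every build, so edits made there can be overwritten. If that happens, customizing src/html.js is a common way to add the tag instead.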

When a user logs in with the Netlify Identity widget, an access token directs them to the site homepage. In order to complete the login and get back to the CMS, redirect the user back to the /admin/ path.

To do this, add the following code before the closing body tag of the public/index.html file:

<script>
  if (window.netlifyIdentity) {
    window.netlifyIdentity.on("init", user => {
      if (!user) {
        window.netlifyIdentity.on("login", () => {
          document.location.href = "/admin/";
        });
      }
    });
  }
</script>
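To see what that script does without deploying, here is a sketch that runs the same logic in Node.js against a fake identity object. createFakeIdentity, installRedirect, fakeWindow, and fakeDocument are hypothetical stand-ins for the real widget and browser objects. The real widget has more methods and events than the two modeled here.

```javascript
// Hypothetical stand-in for window.netlifyIdentity: on() registers a handler,
// emit() (test-only) fires every handler registered for an event.
function createFakeIdentity() {
  const handlers = {};
  return {
    on(event, callback) {
      (handlers[event] = handlers[event] || []).push(callback);
    },
    emit(event, payload) {
      (handlers[event] || []).forEach(callback => callback(payload));
    },
  };
}

// Same logic as the <script> tag above, with window and document passed in.
function installRedirect(win, doc) {
  if (win.netlifyIdentity) {
    win.netlifyIdentity.on("init", user => {
      if (!user) {
        // Only visitors who were logged out at start-up get the login handler.
        win.netlifyIdentity.on("login", () => {
          doc.location.href = "/admin/";
        });
      }
    });
  }
}

// A visitor who is logged out when the widget initialises, then logs in.
const fakeWindow = { netlifyIdentity: createFakeIdentity() };
const fakeDocument = { location: { href: "/" } };
installRedirect(fakeWindow, fakeDocument);
fakeWindow.netlifyIdentity.emit("init", null);
fakeWindow.netlifyIdentity.emit("login", { email: "you@example.com" });
console.log(fakeDocument.location.href); // prints /admin/
```

A visitor who is already logged in when the widget initialises never gets the login handler. Only a fresh login sends the user to /admin/.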

With this, we are now done writing the code and it's time to visit Netlify to activate authentication.

Before we move on, commit your changes with Git and push them to the repository. You will also have to deploy your site live so you can access the features in the Enable Identity and Git Gateway section.

Deploy your site live with Netlify

We are going to use Netlify to deploy our Gatsby site live. The deployment process is pretty straightforward and quick, and most importantly, it comes with a free SSL (Secure Sockets Layer) certificate. This means your site is served over HTTPS (you can tell by the padlock icon in the browser's address bar).

If you haven't signed up for the platform, you can do it right here. When you've finished signing up, you can begin the deployment process by following these 3 steps.

Click the "New site from Git" button to create a new site to be deployed. Choose the Git provider where your site is hosted. My site is hosted on GitHub so that's what I will choose.

Choose the repository you want to connect to Netlify. The name of my Gatsby site is "foodblog" but you have to pick your own project name.

The last step asks how you would like Netlify to build and deploy your site. We are going to leave everything as it is and click the "Deploy site" button. This will start deploying your site live.

Once the deployment is complete, you can visit your live site by clicking the green link that has been generated for you on the top left of the screen. Example: https://random_characters.netlify.app.

With this, the world can now view your site. You can replace the randomly generated URL with your own custom domain by following this documentation.

How to enable Identity and Git Gateway

Netlify's Identity and Git Gateway services help you manage CMS admin users for your site without needing them to have an account with your Git host (like GitHub) or commit access on your repository.

To activate these services, head to your site dashboard on Netlify and follow these steps:

1. Go to Settings > Identity, and select Enable Identity service.

In the Overview page of your site, click the "Settings" link.

After clicking "Settings", scroll down the left sidebar and click the "Identity" link.

Click the "Enable Identity" button to activate the Identity feature.

2. Under Registration preferences, select Open or Invite only. Most of the time, you want only invited users to access your CMS. But if you are just experimenting, you can leave it open for convenience.

Under the Identity submenu, click the "Registration" link and you'll be taken to the registration preferences.

3. Scroll down to Services > Git Gateway, and click Enable Git Gateway. This authenticates with your Git host and generates an API access token.

In this case, we're leaving the Roles field blank, which means any logged-in user may access the CMS.

Under the Identity submenu, click the "Services" link.

Click the "Enable Git Gateway" button to activate the Git Gateway feature.

With this, your Gatsby site has been connected with Netlify CMS. All that is left is to access the CMS admin and write blog posts.

How to access the CMS

All right, you are now ready to write your first blog post!

There are two ways to access your CMS admin, depending on which registration option you chose in the Identity settings.

If you selected Invite only, you can invite yourself and other users by clicking the Invite user button. Then an email message will be sent with an invitation link to log in to your CMS admin. Click the confirmation link and you'll be taken to the login page.

Alternatively, if you selected Open, you can access your site's CMS directly at yoursite.com/admin/. You will be prompted to create a new account. When you submit it, a confirmation link will be sent to your email. Click the confirmation link to complete the signup process and you'll be taken to the CMS page.

Note: If you cannot access your CMS admin after clicking the link in the email, copy the part of the URL in your browser that starts with #confirmation_token=random_characters and paste it after /admin/, like this: https://yoursite.com/admin/#confirmation_token=random_characters. This should fix the problem.
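The manual fix above amounts to keeping the URL fragment and changing the path. Here is a small sketch of that rewrite. fixConfirmationLink is a hypothetical helper name. The URL class it relies on is built into browsers and Node.js.

```javascript
// Hypothetical helper: moves a #confirmation_token=... fragment onto /admin/.
function fixConfirmationLink(link) {
  const url = new URL(link); // URL is built into browsers and Node.js 10+
  if (!url.hash.startsWith("#confirmation_token=")) {
    return link; // not a confirmation link, leave it alone
  }
  url.pathname = "/admin/";
  return url.toString();
}

console.log(fixConfirmationLink("https://yoursite.com/#confirmation_token=random_characters"));
// prints https://yoursite.com/admin/#confirmation_token=random_characters
```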

If everything goes well, you should see your site's admin dashboard:

Netlify CMS admin.

You can create your new post by clicking the "New post" button.

When you're ready to publish your post, you can click the "Publish Now" button to publish it immediately.

When you hit the publish button, the post file is created automatically. The CMS then commits it with a commit message based on the name of the post, along with the date and time of publishing. Finally, the commit is pushed to the host repository, and from there your post will go live.

You can view the changes by looking at the commit message in your host repository.

After waiting for a few minutes, your new post should be live.

One more thing

The last thing to do is clean up the sample articles. To delete these posts, find the sample blog files in your text editor and delete them one by one. Keep an eye on the terminal running gatsby develop while you delete them so you can catch any errors on your site.

Once all the sample posts are cleared out, commit these changes and push them to the repository.

And now, you are all done! You can now create new posts from the comfortable CMS dashboard and share your stories with the world.

Summary

In this guide you have learned how to:

Create a Gatsby blog site

Add Netlify CMS to your Gatsby site by creating and configuring files

Enable user authentication by activating Identity and Git Gateway

Access your site's CMS admin

Publish your first post powered by Gatsby and Netlify CMS

You should now be able to enjoy writing blog posts with a fast website and a simple content editor. And you probably won't have to touch the code unless it needs further customization.

There is still more to cover about Gatsby and Netlify CMS. One of the best ways to learn about them is to go through their documentation.

I hope you found this guide beneficial, and happy posting!

Check out my blog to learn more tips, tricks, and tutorials about web development.

Cover photo by NeONBRAND on Unsplash.

Resources for JavaScript and React

Here are some resources that may help you to learn JavaScript and React:

JavaScript

React

JS1024 winners, TypeScript gets a new site, and the future of Angular

#500 — August 7, 2020

It's issue 500! Thanks for your support over the years, we're not too far away from our 10th anniversary which we'll cover separately. But do I think issue 512 will be even cooler to celebrate? Yes.. 🤓

JavaScript Weekly

1Keys: How I Made a Piano in Only 1KB of JavaScript — A month ago we promoted JS1024, a contest to build the coolest thing possible in just 1024 bytes of JavaScript. It’s well worth looking at all the winners/results, but one winner has put together a fantastic writeup of how he went about the task. If this genius seems familiar, he also did a writeup about implementing a 3D racing game in 2KB of JavaScript recently, and more besides.

Killed By A Pixel

Announcing the New TypeScript Website — The official TypeScript site at typescriptlang.org is looking fresh. Learn about the updates here or just go and enjoy it for yourself.

Orta Therox (Microsoft)

Get a Free T-Shirt. It Doesn’t Cost Anything to Get Started — FusionAuth provides authentication, authorization, and user management for any app: deploy anywhere, integrate with anything, in minutes. Download and install today and we'll send you a free t-shirt.

FusionAuth sponsor

A Roadmap for the Future of Angular — The Angular project now has an official roadmap outlining what they’re looking to bring to future versions of the popular framework.

Jules Kremer (Google)

You May Finally Use JSHint for 'Evil'(!) — JSHint is a long standing tool for detecting errors and problems in JavaScript code (it inspired ESLint). A curious feature of JSHint’s license is that the tool couldn’t be used for “evil” – this has now changed with a switch to the MIT license(!)

Mike Pennisi

⚡️ Quick bytes:

Apparently Twitter's web app now runs ES6+ in modern browsers, reducing the polyfill bundle size by 83%.

Salesforce has donated $10K to ESLint – worth recognizing, if only to encourage similar donations to JavaScript projects by big companies 😄

A nifty slide-rule implemented with JavaScript. I used to own one of these!

💻 Jobs

Backend Engineering Position in Beautiful Norway — Passion for building fast and globally scalable eCommerce APIs with GraphQL using Node.js? 😎 Join our engineering team - remote friendly.

Crystallize

JavaScript Developer at X-Team (Remote) — Join the most energizing community for developers and work on projects for Riot Games, FOX, Sony, Coinbase, and more.

X-Team

One Application, Hundreds of Hiring Managers — Use Vettery to connect with hiring managers at startups and Fortune 500 companies. It's free for job-seekers.

Vettery

📚 Tutorials, Opinions and Stories

How Different Versions of Your Site Can Be 'Running' At The Same Time — You might think that the version of your site or app that’s ‘live’ and in production is the version everyone’s using.. but it’s not necessarily the case and you need to be prepared.

Jake Archibald

Let's Debug a Node Application — A brief, high level look at some ways to step beyond the console.log approach, by using Node Inspect, ndb, llnode, or other modules.

Julián Duque (Heroku)

3 Common Mistakes When Using Promises — Spoiler: Wrapping everything in a Promise constructor, consecutive vs parallel thens, and executing promises immediately after creation.
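A minimal sketch of the "consecutive vs parallel" point, which may not match the article's own examples: awaiting one promise after another runs the tasks back to back, while Promise.all lets them overlap. delay() is a hypothetical stand-in for real async work such as a network request.

```javascript
// delay() is a hypothetical stand-in for real async work such as a fetch.
const delay = (ms, value) => new Promise(resolve => setTimeout(() => resolve(value), ms));

// Consecutive: the second task does not start until the first has finished.
async function consecutive() {
  const a = await delay(100, "a");
  const b = await delay(100, "b");
  return [a, b]; // roughly 200 ms in total
}

// Parallel: both tasks start at once and Promise.all waits for both.
function parallel() {
  return Promise.all([delay(100, "a"), delay(100, "b")]); // roughly 100 ms
}

// Logs each result with the elapsed time.
async function timed(label, fn) {
  const start = Date.now();
  const result = await fn();
  console.log(label, result, `${Date.now() - start} ms`);
  return result;
}

timed("consecutive", consecutive).then(() => timed("parallel", parallel));
```

Consecutive awaits are still the right choice when the second task needs the first one's result.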

Mateusz Podlasin

Stream Chat API & JavaScript SDK for Custom Chat Apps — Build real-time chat in less time. Rapidly ship in-app messaging with our highly reliable chat infrastructure.

Stream sponsor

Matching Accented Letters in Regular Expressions — A quick tip for when a range like A-z doesn’t quite work..
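A sketch of why [A-z] falls short, with one possible fix (Unicode property escapes). The linked article may take a different approach. The sample words are illustrative.

```javascript
// [A-z] covers character codes 65 ("A") to 122 ("z"), which skips accented
// letters but includes the six symbols between "Z" and "a": [ \ ] ^ _ `
const asciiRange = /^[A-z]+$/;

// \p{L} matches any Unicode letter; it requires the u flag (Node.js 10+).
const anyLetter = /^\p{L}+$/u;

const samples = ["cafe", "caf\u00e9", "snake_case"]; // \u00e9 is "é"
for (const word of samples) {
  console.log(word, asciiRange.test(word), anyLetter.test(word));
}
// cafe true true
// café false true
// snake_case true false
```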

David Walsh

Setting Up Redux For Use in a Real-World Application — For state management there’s Redux in theory and then there is Redux in practice. This is the tutorial you need to get over the hump from one to the other.

Jerry Navi

Reviewing The 'Worst Piece of Code Ever' — I don’t think it really is but it’s not great and it’s allegedly in production. Hopefully you will read this for entertainment purposes only.

Michele Riva

Get an Instant GraphQL API with Hasura to Build Fullstack Apps, Fast

Hasura sponsor

The 10 Best Angular Tips Selected by The Community — Well, the Angular tips by one person that were liked the most on Twitter, at least :-)

Roman Sedov

Node Modules at 'War': Why CommonJS and ES Modules Can’t Get Along — No, it’s not really a ‘war’, but it’s a worthwhile reflection on the differences between the two module types from the Node perspective.

Dan Fabulich

Four Ways to Combine Strings in JavaScript

Samantha Ming

🔧 Code & Tools

GPU.js: GPU Accelerated JavaScript — It’s been a while since we linked to this project but it continues to get frequent updates. It compiles JavaScript functions into shader language so they can run on GPUs (via WebGL or HeadlessGL). This has a lot of use cases (and there are plenty of demos here) but if you need to do lots of math in parallel.. you need to check this out.

gpu.js Team

Moveable: A Library to Make Elements Easier to Manipulate — Add moving, dragging, resizing, and rotation functionality to elements with this. GitHub repo.

Daybrush (Younkue Choi)

A Much Faster Way to Debug Code Than with Breakpoints or console.log — Wallaby catches errors in your tests and code and displays them right in your editor as you type, making your development feedback loop more productive.

Wallaby.js sponsor

react-digraph 7.0: A Library for Creating Directed Graph Editors — Create a directed graph editor without implementing SVG drawing or event handling logic yourself.

Uber Open Source

JSchallenger: Learn JavaScript by Solving Coding Exercises — I like that the home page shows the “most failed” challenges, which can give you an idea of the kind of thing other developers are having trouble with.

Erik Kückelheim

JSON Schema Store: Schemas for All Commonly Known JSON File Formats

SchemaStore

WordSafety: Check a Name for Unwanted Meanings in Other Languages — A neat idea. Rather than name your next project something that offends half of a continent, run it through this to pick up any glaring issues.

Pauli Olavi Ojala

🆕 Quick releases:

Handsontable 8.0 — A data grid meets spreadsheet control.

Fabric.js 4.0 — Canvas library and SVG to Canvas parser.

Fastify 3.2.0 — Low overhead Node.js web framework.

Escodegen 2.0 — ECMAScript code generator.

Acorn 7.4.0 — Small pure JS JavaScript parser.

Material Design for Angular v1.2.0

via JavaScript Weekly https://ift.tt/33B9YQQ

Version 320

youtube | windows: zip, exe | os x: app, tar.gz | linux: tar.gz | source: tar.gz

I had a great week. The downloader overhaul is in its last act, and I've fixed and added some other neat stuff. There's also a neat hydrus-related project for advanced users to try out.

Late breaking edit: Looks like I have broken e621 queries that include the '/' character this week, like 'male/female'! Hold off on updating if you have these, or pause them and wait a week for me to fix it!

misc

I fixed an issue introduced in last week's new pipeline with new subs sometimes not parsing the first page of results properly. If you missed files you wanted in the first sync, please reset the affected subs' caches.

Due to an oversight, a mappings cache that I now take advantage of to speed up tag searches was missing an index that would speed it up even further. I've now added these indices--and your clients will spend a minute generating them on update--and most tag searches are now superfast! My IRL client was taking 1.6s to do the first step of finding 5000-file tag results, and now it does it in under 5ms! Indices!

The hyperlinks on the media viewer now use any custom browser launch path in options->files and trash.

downloader overhaul (easy)

I have now added gallery parsers for all the default sites hydrus supports out the box. Any regular download now entirely parses in the new system. With luck, you won't notice any difference, but let me know if you get any searches that terminate early or any other problems.

I have also written the new Gallery URL Generator (GUG) objects for everything, but I have not yet plugged these in. I am now on the precipice of switching this final legacy step over to the new system. This will be a big shift that will finally allow us to have new gallery 'searchers' for all kinds of new sites. I expect to do this next week.

When I do the GUG switch, anything that is supported by default in the client should switch over silently and automatically, but if you have added any new custom boorus, a small amount of additional work will be required on your end to get them working again. I will work with the other parser-creators in the community to make this as painless as possible, and there will be instructions in next week's release post. In any case, I expect to roll out nicer downloaders for the popular desired boorus (derpibooru, FA, etc...) as part of the normal upcoming update process, along with some other new additions like artstation and hopefully twitter username lookup.

In any case, watch this space! It's almost happening!

downloader overhaul (advanced)

So, all the GUGs are in place, and the dialog now saves. If you are interested in making some of your own, check what I've done. I'm going to swap out the legacy 'gallery identifier' object with GUGs this coming week, and fingers-crossed, it will mostly all just swap out no prob. I can update existing gallery identifiers to my new GUGs, which will automatically inherit the url classes and parsers I've already got in place, but custom boorus are too complicated for me to update completely automatically. I will try to auto-generate gallery and post url parsers, but users will need GUGs and url classes to get them working again. I think the best solution is if we direct medium-level users to the parser github and have them link things together manually, and then follow up with whatever 'easy import' object I come up with to bundle downloader-capability into a single object. And as I say above, I'll also fold in the more popular downloaders into some regular updates. I am open to discussing this more if you have ideas!

Furthermore, I've extended url classes this week to allow 'default' values for path components and query parameters. If that component or parameter is missing from a given URL, it will still be recognised as the URL class, but it will gain the default value during import normalisation. e.g. The kind of URL safebooru gives your browser when you type in a query:

https://safebooru.org/index.php?page=post&s=list&tags=contrapposto

Will be automatically populated with an initialising pid=0 parameter:

https://safebooru.org/index.php?page=post&pid=0&s=list&tags=contrapposto

This helps us with several "the site gives a blank page/index value for the first page, which I can't match to a paged URL that will then increment via the url class"-kind of problems. It will particularly help when I add drag-and-drop search--we want it so a user can type in a query in their browser, check it is good, and then DnD the URL the site gave them straight into hydrus and the page stuff will all get sorted behind the scenes without them having to think about it.
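Here is a JavaScript sketch of the default-value step for query parameters (hydrus itself is written in Python, and this is not its code). normaliseGalleryUrl is a hypothetical helper. It also sorts the parameters alphabetically because the normalised safebooru example above comes out in that order. It does not handle path-component defaults.

```javascript
// Hypothetical helper: add any missing query parameters from a table of
// defaults, then sort parameters by name so equivalent URLs compare equal.
function normaliseGalleryUrl(rawUrl, defaults) {
  const url = new URL(rawUrl);
  for (const [key, value] of Object.entries(defaults)) {
    if (!url.searchParams.has(key)) {
      url.searchParams.set(key, value); // only fill in what is missing
    }
  }
  url.searchParams.sort(); // stable sort by parameter name
  return url.toString();
}

console.log(
  normaliseGalleryUrl("https://safebooru.org/index.php?page=post&s=list&tags=contrapposto", { pid: "0" })
);
// prints https://safebooru.org/index.php?page=post&pid=0&s=list&tags=contrapposto
```

A URL that already carries pid, such as a later page, keeps its own value, so the default only affects the first page a browser hands over.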

I've updated a bunch of the gallery url classes this week with these new defaults, so again, if you are interested, please check them out. The Hentai Foundry ones are interesting.

I've also improved some of the logic behind download sites' 'source url' pre-import file status checking. Now, if URL X at Site A provides a Source URL Y to Site B, and the file Y is mapped to also has a URL Z that fits the same url class as X, Y is now distrusted as a source (wew). This stops false positive source url recognition when the booru gives the same 'original' source url for multiple files (including alternate/edited files). e621 has particularly had several of these issues, and I am sure several other sites have too. I've been tracking this issue with several people, so if you have been hit by this, please let me know if this change fixes anything, particularly for new files going forward, which have yet to be 'tainted' by multiple incorrect known url mappings. I'll also be adding some 'just download the damned file' checkboxes to file import options as I have previously discussed.
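Here is a JavaScript sketch of that X/Y/Z rule (hydrus itself is written in Python). urlClassOf, shouldTrustSourceUrl, and the files structure are hypothetical. The url class here is just host plus first path segment, far cruder than real hydrus url classes, and the example URLs are illustrative.

```javascript
// Hypothetical, much-simplified url class: host plus first path segment, so
// "https://e621.net/post/show/100" and ".../post/show/200" share a class.
function urlClassOf(url) {
  const { hostname, pathname } = new URL(url);
  return hostname + "/" + pathname.split("/")[1];
}

// postUrl is X (the post being imported), sourceUrl is Y (its parsed source),
// and files lists the URLs already mapped to each file in the database.
function shouldTrustSourceUrl(postUrl, sourceUrl, files) {
  const postClass = urlClassOf(postUrl);
  for (const file of files) {
    if (!file.urls.includes(sourceUrl)) continue; // not the file Y points to
    // If that file already has a different URL Z of the same class as X, Y is
    // probably a shared "original" link for several posts, so distrust it.
    const hasRivalPost = file.urls.some(
      url => url !== postUrl && url !== sourceUrl && urlClassOf(url) === postClass
    );
    if (hasRivalPost) return false;
  }
  return true;
}

const files = [
  { urls: ["https://e621.net/post/show/100", "https://www.pixiv.net/artworks/555"] },
];
// Post 200 claims the same pixiv source that post 100's file already has.
console.log(shouldTrustSourceUrl("https://e621.net/post/show/200", "https://www.pixiv.net/artworks/555", files)); // false
```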

A user on the discord helpfully submitted some code that adds an 'import cookies.txt' button to the review session cookies panels. This could be a real neat way to effect fake logins, where you just copy your browser's cookies, so please play with this and let me know how you get on. I had mixed success getting different styles of cookies.txt to import, so I would be interested in more information, and to know which sites work great at logging in this way, and which are bad, and which cookies.txt browser add-ons are best!
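For anyone curious what these files contain, here is a sketch of parsing the Netscape-style cookies.txt layout that browser add-ons commonly export: seven tab-separated fields per line (domain, include-subdomains flag, path, secure flag, expiry as a Unix timestamp, name, value), with # comment lines. parseCookiesTxt is hypothetical and is not the submitted hydrus code. Exporters vary (spaces instead of tabs, the curl-style #HttpOnly_ prefix), which may explain the mixed results.

```javascript
// Hypothetical parser for Netscape-style cookies.txt files.
function parseCookiesTxt(text) {
  const cookies = [];
  for (let line of text.split(/\r?\n/)) {
    let httpOnly = false;
    // curl-style files mark HttpOnly cookies with this prefix, not a comment.
    if (line.startsWith("#HttpOnly_")) {
      httpOnly = true;
      line = line.slice("#HttpOnly_".length);
    }
    if (line.trim() === "" || line.startsWith("#")) continue; // blank or comment
    const parts = line.split("\t");
    if (parts.length < 7) continue; // not a cookie line; skip rather than guess
    const [domain, includeSubdomains, path, secure, expires, name, ...rest] = parts;
    cookies.push({
      domain,
      includeSubdomains: includeSubdomains.toUpperCase() === "TRUE",
      path,
      secure: secure.toUpperCase() === "TRUE",
      expires: Number(expires), // seconds since 1970; 0 often marks a session cookie
      name,
      value: rest.join("\t"), // keep any stray tabs inside the value
      httpOnly,
    });
  }
  return cookies;
}

const sample = [
  "# Netscape HTTP Cookie File",
  ".example.com\tTRUE\t/\tTRUE\t1700000000\tsession_id\tabc123",
  "#HttpOnly_.example.com\tTRUE\t/\tFALSE\t0\tauth\txyz",
].join("\n");
console.log(parseCookiesTxt(sample).map(c => c.name)); // prints [ 'session_id', 'auth' ]
```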

a web interface to the server

I have been talking for a bit with a user who has written a web interface to the hydrus server. He is a clever dude who has done some neat work, and his project is now ready for people to try out. If you are fairly experienced in hydrus and would like to experiment with a nice-looking computer- and phone-compatible web interface to the general file/tag mapping system hydrus uses, please check this out:

https://github.com/mserajnik/hydrusrvue

https://github.com/mserajnik/hydrusrv

https://github.com/mserajnik/hydrusrv-docker

In particular, check out the live demo and screenshots here:

https://github.com/mserajnik/hydrusrvue/#demo

Let him know how you like it! I expect to write proper, easier APIs in the coming years, which will allow projects like this to do all sorts of new and neat things.

full list

clients should now have objects for all default downloaders. everything should be prepped for the big switchover:

wrote gallery url generators for all the default downloaders and a couple more as well

wrote a gallery parser for deviant art--it also comes with an update to the DA url class because the meta 'next page' link on DA gallery pages is invalid wew!

wrote a gallery parser for hentai foundry, inkbunny, rule34hentai, moebooru (konachan, sakugabooru, yande.re), artstation, newgrounds, and pixiv artist galleries (static html)

added a gallery parser for sankaku

the artstation post url parser no longer fetches cover images

url classes can now support 'default' values for path components and query parameters! so, if your url might be missing a page=1 initialisation value due to user drag-and-drop, you can auto-add it in the normalisation step!

if the entered default does not match the rules of the component or parameter, it will be cleared back to none!

all appropriate default gallery url classes (which is most) now have these default values. all default gallery url classes will be overwritten on db update

three test 'search initialisation' url classes that attempted to fix this problem a different way will be deleted on update, if present

updated some other url classes

when checking source urls during the pre-download import status check, the client will now distrust parsed source urls if the files they seem to refer to also have other urls of the same url class as the file import object being actioned (basically, this is some logic that tries to detect bad source url attribution, where multiple files on a booru (typically including alternate edits) are all source-url'd back to a single original)

gallery page parsing now discounts parsed 'next page' urls that are the same as the page that fetched them (some gallery end-points link themselves as the next page, wew)

json parsing formulae that are set to parse all 'list' items will now also parse all dictionary entries if faced with a dict instead!

added new stop-gap 'stop checking' logic in subscription syncing for certain low-gallery-count edge-cases

fixed an issue where (typically new) subscriptions were bugging out trying to figure out a default stop_reason on certain page results

fixed an unusual listctrl delete item index-tracking error that would sometimes cause exceptions on the 'try to link url stuff together' button press and maybe some other places

thanks to a submission from user prkc on the discord, we now have 'import cookies.txt' buttons on the review sessions panels! if you are interested in 'manual' logins through browser-cookie-copying, please give this a go and let me know which kinds of cookies.txt do and do not work, and how your different site cookie-copy-login tests work in hydrus.

the mappings cache tables now have some new indices that speed up certain kinds of tag search significantly. db update will spend a minute or two generating these indices for existing users

advanced mode users will discover a fun new entry on the help menu

the hyperlinks on the media viewer hover window and a couple of other places are now a custom control that uses any custom browser launch path in options->files and trash

fixed an issue where certain canvas edge-case media clearing events could be caught incorrectly by the manage tags dialog and its subsidiary panels

think I fixed an issue where a client left with a dialog open could sometimes run into trouble later trying to show an idle time maintenance modal popup and give a 'C++ assertion IsRunning()' exception and end up locking the client's ui

manage parsers dialog will now autosort after an add event

the gug panels now normalise example urls

improved some misc service error handling

rewrote some url parsing to stop forcing '+'->' ' in our urls' query texts

fixed some bad error handling for matplotlib import

misc fixes
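A few of the url class items above (default values, clearing a default that fails its own rule, and discounting 'next page' urls that point back at the page that produced them) can be illustrated together. This is a minimal Python sketch under stated assumptions: the gallery url class is a hypothetical dict of query parameters, each with a regex rule and an optional default, and sorting the query into one canonical form is a simplification of this sketch. hydrus's real url class objects are considerably richer.

```python
import re
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def make_param_rule(pattern, default=None):
    """Pair a parameter rule with its default; a default that does not
    match the rule is cleared back to None."""
    if default is not None and not re.fullmatch(pattern, default):
        default = None
    return (pattern, default)


# Hypothetical gallery url class: the query parameters it expects.
GALLERY_PARAMS = {
    "tags": make_param_rule(r".+"),
    "page": make_param_rule(r"\d+", default="1"),
}


def normalise(url, params=GALLERY_PARAMS):
    """Fill in missing defaulted parameters and sort the query."""
    parsed = urlparse(url)
    query = dict(parse_qsl(parsed.query, keep_blank_values=True))
    for name, (_pattern, default) in params.items():
        if name not in query and default is not None:
            query[name] = default  # e.g. add the missing page=1
    # Sorting gives one canonical string per gallery page, so equal pages compare equal.
    return urlunparse(parsed._replace(query=urlencode(sorted(query.items()))))


def usable_next_page(current_url, next_url):
    """Discard a parsed 'next page' url that is really the current page."""
    if next_url is None or normalise(next_url) == normalise(current_url):
        return None
    return next_url


if __name__ == "__main__":
    print(normalise("https://booru.example/posts?tags=cat"))
```

Because both urls are normalised before comparison, a gallery end-point that links itself as "?page=1&tags=cat" is caught even when the current url was dragged in as just "?tags=cat".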

next week

The big GUG overhaul is the main thing. The button where you select which site to download from will seem only to get some slightly different labels, but in truth a whole big pipeline behind that button needs to be shifted over to the new system. GUGs are actually pretty simple, so I hope this will only take one week, but we'll see!


Weekly Digest

Dec 16, 2017, 3rd issue.

A roundup of stuff I consumed this week. Published weekly(ish).

Read

Whoever your graphic design portfolio site is aimed at, you have to remember that people’s time and attention is limited. Employers, to take one example, may look at dozens of portfolios in the space of 10 minutes. So you only have a few seconds to really grab their attention and enthuse them.

—8 great graphic design portfolio sites for 2018

Paying for more than 3,500 daily drinks for six years, it turns out, is expensive. The NIH would need more funding—and soon, a team stepped up to the plate. The Foundation of the NIH, a little-known 20-year-old non-profit that calls on donors to support NIH science, was talking to alcohol corporations. By the fall of 2014, the study was relying on the industry for “separate contributions to the Foundation of the NIH beyond what the NIAAA could afford,” as Mukamal put it in an e-mail to a prospective collaborator. Later that year, Congress encouraged the NIH to sponsor the study, but lawmakers didn’t provide any money. Five corporations—Anheuser-Busch InBev, Diageo, Pernod Ricard, Heineken, and Carlsberg—have since provided a total of $67 million. The foundation is seeking another $23 million, according to its director of development, Julie Wolf-Rodda.

—A MASSIVE HEALTH STUDY ON BOOZE, BROUGHT TO YOU BY BIG ALCOHOL

When Starbucks (SBUX) announced that it was closing its Teavana tea line and wanted to shutter all of its stores, mall operator Simon Property Group (SPG) countered with a lawsuit. Simon cited in part the effect the store closures might have on other mall tenants.

Earlier this month, a judge upheld Simon's suit, ordering Teavana to keep 77 of its stores open.

—America's malls are rotting away

The Dots claims to have a quarter of a million members and current clients include Google, Burberry, Sony Pictures, Viacom, M&C Saatchi, Warner Music, Tate, Discovery Networks and VICE amongst others.

—Aiming to be the LinkedIn for creatives, The Dots raises £4m

The Cboe's bitcoin futures fell 10 percent Wednesday, triggering a two-minute trading halt early Wednesday afternoon.

—Bitcoin futures briefly halted after plunging 10%

Through a very clever scheme, the people behind Tether can continue to send Bitcoin into the stratosphere until it reaches a not-yet-known breaking point.

—Bitcoin Only Has One Way To Go If This Is True

—Bitcoin Price Dilemma: Bull and Bear Paths in Play

—Botera – Free Font

"He is being a huge assh*le and avoiding you so it literally forces you to be the one to break up with him because he's too much of a coward to do it himself. GOD, I HATE GUYS."

—"Breakup Ghosting" Is the Most Cowardly Way to End a Relationship

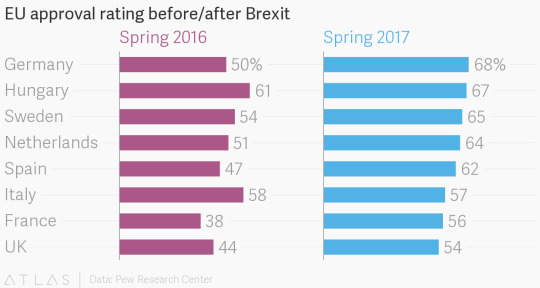

—Britain rejected the EU, and the EU is loving its new life

“Although the science is still evolving, there are concerns among some public health professionals and members of the public regarding long-term, high use exposure to the energy emitted by cellphones,” Dr. Karen Smith, CDPH Director and State Public Health Officer, said in a statement.

—California Warns People to Limit Exposure to Cellphones

There is a way CSS can get its hands on data in HTML, so long as that data is within an attribute on that HTML element.

—The CSS attr() function got nothin’ on custom properties

“The recent coverage of AI as a single, unified power is a predictable upshot of a self-aggrandizing Silicon Valley culture that believes it can summon a Godhead,” says Thomas Arnold.

—Former Google and Uber engineer is developing an AI 'god'

Here are two facts: 1) Throughout the tail end of Matt Lauer’s tenure at NBC’s Today, ABC’s Good Morning America beat it in the ratings, and 2) In the two weeks since Lauer was kicked to the curb for sexual misconduct and replaced by Hoda Kotb, Today’s viewership has surpassed GMA’s by a considerable margin.

Here are two opinions: 1) No one ever really liked Matt Lauer, but tolerated him as you would a friend you’ve known for 20 years but have nothing in common with anymore, 2) Hoda Kotb makes everything better.

—A Funny Thing Is Happening to Today Now That Matt Lauer Is Gone: Its Ratings Are Going Up

The game challenges you to build an empire that stands the test of time, taking your civilization from the Stone Age to the Information Age as you wage war, conduct diplomacy, advance your culture, and go head-to-head with history’s greatest leaders.

—Get the newest game in 'Sid Meier’s Civilization' series for 50% off

Amazingly, despite the mind control and hypnosis, the girl resisted being totally drawn into her father’s “cult of three.” But she suffered from self-loathing and took to self-harm as a coping mechanism.

—Girl’s father tortured her for a decade to make her ‘superhuman’

The most searched for dog breed was the golden retriever.

—Google's top searches for 2017: Matt Lauer, Hurricane Irma and more

"A few months ago, I started collecting stories from people about their real experiences with loneliness. I started small, asking my immediate network to share with their friends/family, and was flooded with submissions from people of all ages and walks of life.

"The Loneliness Project is an interactive web archive I created to present and give these stories a home online. I believe in design as a tool to elevate others' voices. Stories have tremendous power to spark empathy, and I believe that the relationship between design and emotion only strengthens this power."

—Graphic designer tackles issue of wide-spread loneliness in moving campaign

While the Windows 10 OpenSSH software is currently in Beta, it still works really well. Especially the client, as you no longer need to use a 3rd party SSH client such as Putty when you wish to connect to an SSH server.

—Here's How to Enable the Built-In Windows 10 OpenSSH Client

In America we have settled on patterns of land use that might as well have been designed to prevent spontaneous encounters, the kind out of which rich social ties are built.

—How our housing choices make adult friendships more difficult

Today was "Break the Internet" day, in which many websites altered their appearance and urged visitors to contact members of Congress about the pending repeal (see the gallery above for examples from Reddit, Kickstarter, GitHub, Mozilla, and others).

—How Reddit and others “broke the Internet” to support net neutrality today

“He’s the Usain Bolt of business for Jamaica,” Richards said. “For each Jamaican immigrant, Lowell Hawthorne is me, he’s you. He was the soul of Jamaica, the son of our soil, and all of our struggles were identified with him.”

—How the Jamaican patty king made it to the top — before ending it all

—How to break a CAPTCHA system in 15 minutes with Machine Learning

After the trap has snapped shut, the plant turns it into an external stomach, sealing the trap so no air gets in or out. Glands produce enzymes that digest the insect, first the exoskeleton made of chitin, then the nitrogen-rich blood, which is called hemolymph.

The digestion takes several days depending on the size of the insect, and then the leaf re-opens. By that time, the insect is a "shadow skeleton" that is easily blown away by the wind.

—How the Venus Flytrap Kills and Digests Its Prey

Back at The Shed, Phoebe has arrived. She's an intuitive waitress who can really get across the nuances of our menu, like how – by serving pudding in mugs – we're aiming to replicate the experience of what it's like to eat pudding out of a mug.

—I Made My Shed the Top Rated Restaurant On TripAdvisor

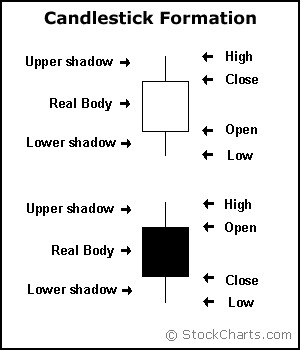

In order to create a candlestick chart, you must have a data set that contains open, high, low and close values for each time period you want to display. The hollow or filled portion of the candlestick is called “the body” (also referred to as “the real body”). The long thin lines above and below the body represent the high/low range and are called “shadows” (also referred to as “wicks” and “tails”). The high is marked by the top of the upper shadow and the low by the bottom of the lower shadow.

—Introduction to Candlesticks
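The anatomy in that excerpt maps neatly onto a small data structure. A minimal Python sketch, assuming one open/high/low/close record per period; the `Candle` name and its methods are hypothetical, and drawing the body hollow when the close is above the open is a common charting convention rather than something the excerpt states.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candle:
    """One period of open/high/low/close data."""
    open: float
    high: float
    low: float
    close: float

    def __post_init__(self):
        # The shadows must enclose the body.
        if self.high < max(self.open, self.close) or self.low > min(self.open, self.close):
            raise ValueError("high/low must enclose open and close")

    def body(self):
        """(bottom, top) of the real body."""
        return (min(self.open, self.close), max(self.open, self.close))

    def upper_shadow(self):
        """Length of the wick from the top of the body up to the high."""
        return self.high - max(self.open, self.close)

    def lower_shadow(self):
        """Length of the tail from the bottom of the body down to the low."""
        return min(self.open, self.close) - self.low

    def is_hollow(self):
        """Common convention: hollow when the period closed higher than it opened."""
        return self.close > self.open


if __name__ == "__main__":
    c = Candle(open=10, high=15, low=8, close=12)
    print(c.body(), c.upper_shadow(), c.lower_shadow(), c.is_hollow())
```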

The object in question is ‘Oumuamua, an asteroid from another star system currently zipping past Jupiter at about 196,000 miles per hour, too fast to be trapped by the sun’s gravitational pull. First discovered in mid-October by astronomers at the Pan-STARRS project at the University of Hawaii, the 800-meter-long, 80-meter-wide, cigar-shaped rock is, technically speaking, weird as hell—and that’s precisely why some scientists think it’s not a natural object.

—Is This Cigar-Shaped Asteroid Watching Us?

I tried out LinkedIn Career Advice and Bumble Bizz over the course of a work week and compared them in terms of how easy they are to use and the kind of people they introduce you to.

—I tried LinkedIn's career advice app vs. dating app Bumble's version and discovered major flaws with both

“The Bitcoin dream is all but dead,” I wrote.

—I Was Wrong About Bitcoin. Here’s Why.

—Jessen's Orthogonal Icosahedron

In the study, depressed patients who got an infusion of ketamine reported rapid relief from suicidal thoughts—many as soon as a few hours after receiving the drug.

—Ketamine Relieved Suicidal Thoughts Within Hours in Hospital Study