#sci_evergreen1

Tracking air pollution disparities -- daily -- from space

https://sciencespies.com/environment/tracking-air-pollution-disparities-daily-from-space/

Studies have shown that pollution, whether from factories or traffic-snarled roads, disproportionately affects communities where economically disadvantaged people and Hispanic, Black and Asian people live. As technology has improved, scientists have begun documenting these disparities in detail, but information on daily variations has been lacking. Today, scientists report preliminary work calculating how inequities in exposure fluctuate from day to day across 11 major U.S. cities. In addition, they show that in some places, climate change could exacerbate these differences.

The researchers will present their results at the fall meeting of the American Chemical Society (ACS).

Air pollution levels can vary significantly across relatively short distances, dropping off a few hundred yards from a freeway, for example. Researchers, including Sally Pusede, Ph.D., have used satellite and other observations to determine how air quality varies on a small geographic scale, at the level of neighborhoods.

But this approach overlooks another crucial variable. “When we regulate air pollution, we don’t think of it as remaining constant over time, we think of it as dynamic,” says Pusede, the project’s principal investigator. “Our new work takes a step forward by looking at how these levels vary from day to day,” she says.

Information about these fluctuations can help pinpoint sources of pollution. For instance, in research reported last year, Pusede and colleagues at the University of Virginia found that disparities in air quality across major U.S. cities decreased on weekends. Their analysis tied this drop to the reduction of deliveries by diesel-fueled trucks. On weekends, more than half of such trucks are parked.

Pusede’s research focuses on the gas NO2, which is a component of the complex brew of potentially harmful compounds produced by combustion. To get a sense of air pollution levels, scientists often look to NO2. But it’s not just a proxy — exposure to high concentrations of this gas can irritate the airways and aggravate pulmonary conditions. Inhaling elevated levels of NO2 over the long term can also contribute to the development of asthma.

The team has been using data on NO2 collected almost daily by a space-based instrument known as TROPOMI, which they confirmed with higher resolution measurements made from a similar sensor on board an airplane flown as part of NASA’s LISTOS project. They analyzed these data across small geographic regions, called census tracts, that are defined by the U.S. Census Bureau. In a proof-of-concept project, they used this approach to analyze initial disparities in Houston, and later applied these data-gathering methods to study daily disparities over New York City and Newark, New Jersey.

Now, they have analyzed satellite-based data for 11 additional cities, aside from New York City and Newark, for daily variations. The cities are: Atlanta, Baltimore, Chicago, Denver, Houston, Kansas City, Los Angeles, Phoenix, Seattle, St. Louis and Washington, D.C. A preliminary analysis found the highest average disparity in Los Angeles for Black, Hispanic and Asian communities in the lowest socioeconomic status (SES) tracts. They experienced an average of 38% higher levels of pollution than their non-Hispanic white, higher SES counterparts in the same city — although disparities on some days were much higher. Washington, D.C., had the lowest disparity, with an average of 10% higher levels in Black, Hispanic and Asian communities in low-income tracts.
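The percentages above are relative differences between groups of census tracts. As a rough illustration of that arithmetic only, the sketch below compares two sets of invented tract-level NO2 values; the numbers and the function name are assumptions, not the study's data or method (which may, for example, weight tracts by population).

```python
from statistics import mean


def relative_disparity_percent(group_values, reference_values):
    """Percent by which the group's mean level exceeds the reference mean."""
    group_mean = mean(group_values)
    reference_mean = mean(reference_values)
    return 100.0 * (group_mean - reference_mean) / reference_mean


# Hypothetical tract-level NO2 values in arbitrary units (illustration only).
low_ses_tracts = [13.8, 14.1, 13.5]
high_ses_tracts = [10.0, 10.2, 9.8]

disparity = relative_disparity_percent(low_ses_tracts, high_ses_tracts)
print(f"Relative disparity: {disparity:.1f}%")
```

Repeating the same calculation for each day of satellite data would give the kind of day-to-day disparity series the researchers describe.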

In these cities, as in New York City and Newark, the researchers also analyzed the data to see whether they could identify any links with wind and heat — both factors that are expected to change as the world warms. Although the analysis is not yet complete, the team has so far found a direct connection between stagnant air and uneven pollution distribution, which was not surprising to the team because winds disperse pollution. Because air stagnation is expected to increase in the northeastern and southwestern U.S. in the coming years, this result suggests uneven air pollution distribution could worsen in these regions, too, if actions to reduce emissions are not taken. The team found a less robust connection with heat, though a correlation existed. Hot days are expected to increase across the country with climate change. Thus, the researchers say that if greenhouse gas emissions aren’t reduced soon, people in these communities could face more days in which conditions are hazardous to their health from the combination of NO2 and heat impacts.

Pusede hopes to see this type of analysis used to support communities fighting to improve air quality. “Because we can get daily data on pollutant levels, it’s possible to evaluate the success of interventions, such as rerouting diesel trucks or adding emissions controls on industrial facilities, to reduce them,” she says.

The researchers acknowledge support and funding from NASA and the National Science Foundation.

Video: https://youtu.be/SbQ87rZq9MA

JWST has taken even more beautiful images of Jupiter and its aurora

https://sciencespies.com/space/jwst-has-taken-even-more-beautiful-images-of-jupiter-and-its-aurora/

The James Webb Space Telescope has taken new images of Jupiter, showing off its bright hazes, tenuous rings and auroras with the hopes of understanding the entire system better

Space 22 August 2022

By Leah Crane

The orange glow at Jupiter’s poles is its aurora

NASA, ESA, CSA and Jupiter ERS Team. Image processing by Judy Schmidt

The James Webb Space Telescope (JWST) has released two stunning new images showcasing the complexities of Jupiter. While its previous images of the gas giant each used only one wavelength of light, these are composite images, showing Jupiter’s glowing auroras, shifting haze and two of its small moons.

Because JWST observes in infrared light, these images do not show Jupiter as it would look to the naked eye. Instead, different infrared wavelengths have been mapped to different colours to highlight particular features of the planet.

In the above image, the orange glow at Jupiter’s poles is its aurora. The green represents layers of tenuous high-altitude haze, while blue shows the main cloud layer. The white areas show the tops of storms, including the Great Red Spot.

“We hadn’t really expected it to be this good, to be honest,” said Imke de Pater at University of California, Berkeley – who led this research along with Thierry Fouchet at the Paris Observatory – in a statement. “It’s really remarkable that we can see details on Jupiter together with its rings, tiny satellites, and even galaxies in one image.”

Jupiter and two of its small moons, Adrastea and Amalthea

NASA, ESA, CSA and Jupiter ERS Team. Image processing by Judy Schmidt

The wide-field image of Jupiter, above, shows not just Jupiter’s aurora – this time in blue – but also its tenuous rings. Lined up to the left of the planet are two of its small moons, Adrastea and Amalthea. The spots scattered throughout the image are mostly distant galaxies in the background.

De Pater and her colleagues hope that images like this will allow them to unravel the connections between Jupiter’s different layers and gain an understanding of how gas and heat move throughout the planet. They also aim to study the planet’s faint ring and how it evolves over time, as well as take pictures of some of its moons.

“This one image sums up the science of our Jupiter system program,” said Fouchet. The researchers are now analysing the data that was used to create these images, looking for hints as to Jupiter’s inner machinations.

DNA profiling solves Australian rabbit plague puzzle

https://sciencespies.com/nature/dna-profiling-solves-australian-rabbit-plague-puzzle/

Rabbits were first introduced to mainland Australia when five domestic animals were brought to Sydney on the First Fleet in 1788. At least 90 subsequent importations would be made before 1859 but none of these populations became invasive. But within 50 years, at a rate of 100 km per year, rabbits would spread across the entire continent, making this the fastest colonisation rate for an introduced mammal ever recorded. So what changed after 1859 and how did the invasion begin?

Historians and the Australian public have long assumed that the country’s ‘rabbit plague’ began at Barwon Park, the estate of Thomas Austin, near Geelong in Victoria. In a study published today in PNAS, an international team led by the University of Cambridge and CIBIO Institute in Portugal finally provides genetic proof for this version of events and settles a debate about whether the invasion arose from a single or several independent introductions.

On 6th October 1859, Austin’s brother, William, sent a consignment of wild rabbits – caught on the family’s land in Baltonsborough in Somerset – together with some domestic rabbits, on the ship Lightning. On Christmas Day, 24 rabbits arrived in Melbourne and were dispatched to Barwon Park. Within three years, ‘Austin rabbits’ had multiplied into thousands, according to a local newspaper report and Austin himself.

The researchers studied historical records alongside new genetic data collected from 187 ‘European rabbits’ – mostly wild-caught across Australia, Tasmania, New Zealand, Britain and France between 1865 and 2018 – to establish where Australia’s invasive rabbits originated from; whether the invasion arose from a single or multiple introductions; how they spread across the country; and whether there was a genetic explanation for their success compared to that of other imported rabbit populations.

Recent studies disputed the single-origin hypothesis, instead arguing that invasive rabbits arose from several independent introductions. However, they did not sample ancestral European and domestic populations, which was crucial to disentangle the source of Australia’s rabbits. Lead author Dr Joel Alves, who is currently a researcher at the University of Oxford and CIBIO Institute, said:

“We managed to trace the ancestry of Australia’s invasive population right back to the South-West of England, where Austin’s family collected the rabbits in 1859.

“Our findings show that despite the numerous introductions across Australia, it was a single batch of English rabbits that triggered this devastating biological invasion, the effects of which are still being felt today.”

The researchers found that as the rabbits moved further away from Barwon Park, genetic diversity declined and rare genetic variants which occur in rapidly growing populations became more frequent.

Despite the construction of rabbit-proof fences, the deliberate introduction of the myxoma virus and other measures, rabbits remain one of the major invasive species in Australia threatening native flora and fauna and costing the agricultural sector an estimated $200 million per year.

Previous studies have suggested that several factors contribute to biological invasions, including the number of individuals, the number of introductions, and environmental change. The new findings suggest that the genetic composition of those animals can be just as influential, if not more so.

The researchers point out that if the trigger for the invasion had been environmental change, such as the development of large pastoral areas by human settlers, then multiple local rabbit populations would likely have expanded. The study’s genetic findings and the failure of pre-1859 rabbits to become invasive undermine this possibility.

Instead, the team explored the possibility that the arrival of specific genetic traits acted as the trigger for the invasion, something which would help to explain the overwhelming genetic evidence for a single introduction.

The rabbits introduced to Australia before 1859 were often described as displaying tameness, fancy coat colours and floppy ears, traits associated with domestic breeds but normally absent in wild animals. Austin’s rabbits were described as wild-caught at the time, and the new study’s genetic findings prove that at least some of these animals were indeed wild.

Senior author Professor Francis Jiggins from Cambridge’s Department of Genetics said:

“There are numerous traits that could make feral domestic rabbits poorly adapted to survive in the wild but it is possible that they lacked the genetic variation required to adapt to Australia’s arid and semi-arid climate.

“To cope with this, Australia’s rabbits have evolved changes in body shape to help control their temperature. So it is possible that Thomas Austin’s wild rabbits, and their offspring, had a genetic advantage when it came to adapting to these conditions.”

In the 20th century, Joan Palmer recalled that her grandfather William Austin had found it difficult to source the animals for Thomas “as wild rabbits were by no means common round Baltonsborough. It was only with great difficulty that he managed to get six; these were half-grown specimens taken from their nests and tamed. To make up the number he bought seven grey rabbits that the villagers had kept in hutches, either as pets or to eat”.

Alves and Jiggins found that the invasive rabbits descended from Austin’s imports contained a substantial element of domestic ancestry, which they argue supports Joan Palmer’s claim that wild and domestic rabbits in the shipment bred before or during their 80-day journey. That would also explain why more rabbits arrived than were sent.

Dr Alves said: “These findings matter because biological invasions are a major threat to global biodiversity and if you want to prevent them you need to understand what makes them succeed.”

“Environmental change may have made Australia vulnerable to invasion, but it was the genetic makeup of a small batch of wild rabbits that ignited one of the most iconic biological invasions of all time.”

“This serves as a reminder that the actions of just one person, or a few people, can have a devastating environmental impact.”

Detecting nanoplastics in the air

https://sciencespies.com/environment/detecting-nanoplastics-in-the-air/

Large pieces of plastic can break down into nanosized particles that often find their way into the soil and water. Perhaps less well known is that they can also float in the air. It’s unclear how nanoplastics impact human health, but animal studies suggest they’re potentially harmful. As a step toward better understanding the prevalence of airborne nanoplastics, researchers have developed a sensor that detects these particles and determines the types, amounts and sizes of the plastics using colorful carbon dot films.

The researchers will present their results today at the fall meeting of the American Chemical Society (ACS).

“Nanoplastics are a major concern if they’re in the air that you breathe, getting into your lungs and potentially causing health problems,” says Raz Jelinek, Ph.D., the project’s principal investigator. “A simple, inexpensive detector like ours could have huge implications, and someday alert people to the presence of nanoplastics in the air, allowing them to take action.”

Millions of tons of plastic are produced and thrown away each year. Some plastic materials slowly erode while they’re being used or after being disposed of, polluting the surrounding environment with micro- and nanosized particles. Nanoplastics are so small — generally less than 1-µm wide — and light that they can even float in the air, where people can then unknowingly breathe them in. Animal studies suggest that ingesting and inhaling these nanoparticles may have damaging effects. Therefore, it could be helpful to know the levels of airborne nanoplastic pollution in the environment.

Previously, Jelinek’s research team at Ben-Gurion University of the Negev developed an electronic nose or “e-nose” for monitoring the presence of bacteria by adsorbing and sensing the unique combination of gas vapor molecules that they release. The researchers wanted to see if this same carbon-dot-based technology could be adapted to create a sensitive nanoplastic sensor for continuous environmental monitoring.

Carbon dots are formed when a starting material that contains lots of carbon, such as sugar or other organic matter, is heated at a moderate temperature for several hours, says Jelinek. This process can even be done using a conventional microwave. During heating, the carbon-containing material develops into colorful, and often fluorescent, nanometer-size particles called “carbon dots.” And by changing the starting material, the carbon dots can have different surface properties that can attract various molecules.

To create the bacterial e-nose, the team spread thin layers of different carbon dots onto tiny electrodes, each the size of a fingernail. They used interdigitated electrodes, which have two sides with interspersed comb-like structures. Between the two sides, an electric field develops, and the stored charge is called capacitance. “When something happens to the carbon dots — either they adsorb gas molecules or nanoplastic pieces — then there is a change of capacitance, which we can easily measure,” says Jelinek.

Then the researchers tested a proof-of-concept sensor for nanoplastics in the air, choosing carbon dots that would adsorb common types of plastic — polystyrene, polypropylene and poly(methyl methacrylate). In experiments, nanoscale plastic particles were aerosolized, making them float in the air. And when electrodes coated with carbon-dot films were exposed to the airborne nanoplastics, the team observed signals that were different for each type of material, says Jelinek. Because the number of nanoplastics in the air affects the intensity of the signal generated, Jelinek adds that currently, the sensor can report the amount of particles from a certain plastic type either above or below a predetermined concentration threshold. Additionally, when polystyrene particles in three sizes — 100-nm wide, 200-nm wide and 300-nm wide — were aerosolized, the sensor’s signal intensity was directly related to the particles’ size.

The team’s next step is to see if their system can distinguish the types of plastic in mixtures of nanoparticles. Just as the combination of carbon dot films in the bacterial e-nose distinguished between gases with differing polarities, Jelinek says it’s likely that they could tweak the nanoplastic sensor to differentiate between additional types and sizes of nanoplastics. The capability to detect different plastics based on their surface properties would make nanoplastic sensors useful for tracking these particles in schools, office buildings, homes and outdoors, he says.

The researchers acknowledge support from the Israel Innovation Authority.

Story Source:

Materials provided by American Chemical Society. Note: Content may be edited for style and length.

Air pollution is associated with heart attacks in non-smokers

https://sciencespies.com/environment/air-pollution-is-associated-with-heart-attacks-in-non-smokers/

Research presented at ESC Congress 2022 supports a causal relationship between air pollution and heart attacks since smokers, who already inhale smoke, were unaffected by dirty air.

Study author Dr. Insa de Buhr-Stockburger of Berlin Brandenburg Myocardial Infarction Registry (B2HIR), Germany said: “The correlation between air pollution and heart attacks in our study was absent in smokers. This may indicate that bad air can actually cause heart attacks since smokers, who are continuously self-intoxicating with air pollutants, seem less affected by additional external pollutants.”

This study investigated the associations of nitric oxide, particulate matter with a diameter less than 10 µm (PM10), and weather with the incidence of myocardial infarction in Berlin. Nitric oxide originates from combustion at high temperatures, in particular from diesel vehicles. Combustion is also a source of PM10, along with abrasion from brakes and tyres, and dust.

The study included 17,873 patients with a myocardial infarction between 2008 and 2014 enrolled in the B2HIR. Daily numbers of acute myocardial infarction were extracted from the B2HIR database along with baseline patient characteristics including sex, age, smoking status, and diabetes. Daily PM10 and nitric oxide concentrations throughout the city were obtained from the Senate of Berlin. Information on sunshine duration, minimum and maximum temperature, and precipitation was retrieved from the Berlin Tempelhof weather station and merged with the data on myocardial infarction incidence and air pollution.

The researchers analysed the associations between the incidence of acute myocardial infarction and average pollutant concentrations on the same day, the previous day, and an average of the three preceding days, among all patients and according to baseline characteristics. Associations between the incidence of acute myocardial infarction and weather parameters were also analysed.

Regarding pollution, myocardial infarction was significantly more common on days with high nitric oxide concentrations, with a 1% higher incidence for every 10 µg/m³ increase. Myocardial infarction was also more common when there was a high average PM10 concentration over the three preceding days, with a 4% higher incidence for every 10 µg/m³ increase. The incidence of myocardial infarction in smokers was unaffected by nitric oxide and PM10 concentrations.
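To get a feel for what "per 10 µg/m³" figures imply for larger changes, the sketch below scales the reported percentages to other concentration increases. It assumes the effect compounds multiplicatively (a log-linear model), which the article does not state; the function name and the example increases are illustrative assumptions.

```python
def relative_incidence(increase_ug_m3, pct_per_10):
    """Relative incidence for a given concentration increase.

    Assumes each 10 µg/m³ step multiplies incidence by (1 + pct_per_10 / 100),
    i.e. a log-linear dose-response. This is an assumption for illustration.
    """
    return (1.0 + pct_per_10 / 100.0) ** (increase_ug_m3 / 10.0)


# Hypothetical 30 µg/m³ rise in nitric oxide, at 1% per 10 µg/m³.
nitric_oxide_ratio = relative_incidence(30.0, pct_per_10=1.0)

# Hypothetical 20 µg/m³ rise in three-day average PM10, at 4% per 10 µg/m³.
pm10_ratio = relative_incidence(20.0, pct_per_10=4.0)

print(f"Nitric oxide: {nitric_oxide_ratio:.4f}x, PM10: {pm10_ratio:.4f}x")
```

Under this assumption, the two example increases correspond to roughly 3% and 8% higher incidence, respectively.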

Regarding weather, the incidence of myocardial infarction was significantly related to the maximum temperature, with a 6% lower incidence for every 10°C rise in temperature. No associations with sunshine duration or precipitation were detected.

Dr. de Buhr-Stockburger said: “The study indicates that dirty air is a risk factor for acute myocardial infarction and more efforts are needed to lower pollution from traffic and combustion. Causation cannot be established by an observational study. It is plausible that air pollution is a contributing cause of myocardial infarction, given that nitric oxide and PM10 promote inflammation, atherosclerosis is partly caused by inflammatory processes, and no associations were found in smokers.”

Story Source:

Materials provided by European Society of Cardiology. Note: Content may be edited for style and length.

Sound reveals giant blue whales dance with the wind to find food

https://sciencespies.com/environment/sound-reveals-giant-blue-whales-dance-with-the-wind-to-find-food/

A study by MBARI researchers and their collaborators published today in Ecology Letters sheds new light on the movements of mysterious, endangered blue whales. The research team used a directional hydrophone on MBARI’s underwater observatory, integrated with other advanced technologies, to listen for the booming vocalizations of blue whales. They used these sounds to track the movements of blue whales and learned that these ocean giants respond to changes in the wind.

Along California’s Central Coast, spring and summer bring coastal upwelling. From March through July, seasonal winds push the top layer of water out to sea, allowing the cold water below to rise to the surface. The cooler, nutrient-rich water fuels blooms of tiny phytoplankton, jumpstarting the food web in Monterey Bay, from small shrimp-like krill all the way to giant whales. When the winds create an upwelling event, blue whales seek out the plumes of cooler water, where krill are most abundant. When upwelling stops, the whales move offshore into habitat that is transected by shipping lanes.

“This research and its underlying technologies are opening new windows into the complex, and beautiful, ecology of these endangered whales,” said John Ryan, a biological oceanographer at MBARI and lead author of this study. “These findings demonstrate a new resource for managers seeking ways to better protect blue whales and other species.”

The directional hydrophone is a specialized underwater microphone that records sounds and identifies the direction from which they originate. To use this technology to study blue whale movements, researchers needed to confirm that the hydrophone reliably tracked whales. This meant matching the acoustic bearings to a calling whale that was being tracked by GPS. With confidence in the acoustic methods established, the research team examined two years of acoustic tracking of the regional blue whale population.
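The validation step described above, matching an acoustic bearing to a GPS-tracked whale, can be illustrated with a minimal Python sketch. It is not the study's processing code: the coordinates, the measured bearing, and the function names `initial_bearing_deg` and `bearing_error_deg` are hypothetical, and the standard great-circle initial-bearing formula is assumed here purely for illustration.

```python
import math


def initial_bearing_deg(lat1, lon1, lat2, lon2):
    """Great-circle initial bearing from point 1 to point 2, in degrees from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    east = math.sin(dlon) * math.cos(phi2)
    north = (math.cos(phi1) * math.sin(phi2)
             - math.sin(phi1) * math.cos(phi2) * math.cos(dlon))
    return math.degrees(math.atan2(east, north)) % 360.0


def bearing_error_deg(a, b):
    """Smallest absolute angle between two bearings, handling the 360/0 wrap."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


# Hypothetical positions in decimal degrees, not the real instrument or whale locations.
hydrophone = (36.70, -122.20)
whale_gps_fix = (36.80, -122.10)
acoustic_bearing = 40.0  # hypothetical bearing reported by the hydrophone

expected = initial_bearing_deg(*hydrophone, *whale_gps_fix)
print(f"GPS-derived bearing: {expected:.1f} deg")
print(f"Mismatch: {bearing_error_deg(acoustic_bearing, expected):.1f} deg")
```

A small mismatch across many calls would support trusting the acoustic bearings on their own; what counts as "small" is a judgment the sketch does not attempt to make.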

This study built upon previous research led by MBARI Senior Scientist Kelly Benoit-Bird, which revealed that swarms of forage species — anchovies and krill — reacted to coastal upwelling. This time, researchers combined satellite and mooring data of upwelling conditions and echosounder data on krill aggregations with the acoustic tracks of foraging blue whales logged by the directional hydrophone.

“Previous work by the MBARI team found that when coastal upwelling was strongest, anchovies and krill formed dense swarms within upwelling plumes. Now, we’ve learned that blue whales track these dynamic plumes, where abundant food resources are available,” explained Ryan.

Blue whales recognize when the wind is changing their habitat and identify places where upwelling aggregates their essential food — krill. For a massive animal weighing up to 150 tonnes (165 tons), finding these dense aggregations is a matter of survival.

While scientists have long recognized that blue whales seasonally occupy Monterey Bay during the upwelling season, this research has revealed that the whales closely track the upwelling process on a very fine scale of both space (kilometers) and time (days to weeks).

“Tracking many individual wild animals simultaneously is challenging in any ecosystem. This is especially difficult in the open ocean, which is often opaque to us as human observers,” said William Oestreich, previously a graduate student at Stanford University’s Hopkins Marine Station and now a postdoctoral fellow at MBARI. “Integration of technologies to measure these whales’ sounds enabled this important discovery about how groups of predators find food in a dynamic ocean. We’re excited about the future discoveries we can make by eavesdropping on blue whales and other noisy ocean animals.”

Background

Blue whales (Balaenoptera musculus) are the largest animals on Earth, but despite their large size, scientists still have many unanswered questions about their biology and ecology. These gentle giants seasonally gather in the Monterey Bay region to feed on small shrimp-like crustaceans called krill.

Blue whales are elusive animals. They can travel large distances underwater very quickly, making them challenging to track. MBARI researchers and collaborators employed a novel technique for tracking blue whales — sound.

MBARI’s MARS (Monterey Accelerated Research System) observatory offers a platform for studying the ocean in new ways. Funded by the National Science Foundation, the cabled observatory provides continuous power and data connectivity to support a variety of instruments for scientific experiments.

In 2015, MBARI researchers installed a hydrophone, or underwater microphone, on the observatory. The trove of acoustic data from the hydrophone has provided important insights into the ocean soundscape, from the migratory and feeding behaviors of blue whales to the impact of noise from human activities.

In 2019, MBARI and the Naval Postgraduate School installed a second hydrophone on the observatory. The directional hydrophone gives the direction from which a sound originated. This information can reveal spatial patterns for sounds underwater, identifying where sounds came from. By tracking the blue whales’ B call — the most powerful and prevalent vocalization among the regional blue whale population — researchers could follow the movements of individual whales as they foraged within the region.

Researchers compared the directional hydrophone’s recordings to data logged by tags that scientists from Stanford University had previously deployed on blue whales. Validating this new acoustic tracking method opens new opportunities for simultaneously logging the movements of multiple whales. It may also enable animal-borne tag research by helping researchers find whales to tag. “The integrated suite of technologies demonstrated in this paper represents a transformative tool kit for interdisciplinary research and mesoscale ecosystem monitoring that can be deployed at scale throughout protected marine habitats. This is a game changer and brings both cetacean biology and biological oceanography to the next level,” said Jeremy Goldbogen, an associate professor at Stanford University’s Hopkins Marine Station and a coauthor of the study.

This new methodology has implications not only for understanding how whales interact with their environment and one another but also for advancing management and conservation.

Despite protections, blue whales remain endangered, primarily from the risk of collisions with ships. This study showed that blue whales in Monterey Bay National Marine Sanctuary regularly occupy habitat transected by shipping lanes. Acoustic tracking of whales may provide real-time information for resource managers to mitigate risk, for example, through vessel speed reduction or rerouting during critical periods. “These kinds of integrated tools could allow us to spatially and temporally monitor, and eventually even predict, ephemeral biological hotspots. This promises to be a watershed advancement in the adaptive management of risks for protected and endangered species,” said Brandon Southall, president and senior scientist for Southall Environmental Associates Inc. and a coauthor of the research study.

Support for this research was provided by the David and Lucile Packard Foundation. The National Science Foundation funded the installation and maintenance of the MARS cabled observatory through awards 0739828 and 1114794. Directional acoustic processing work was supported by the Office of Naval Research, Code 32. Tag work was funded in part by the National Science Foundation (IOS-1656676), the Office of Naval Research (N000141612477), and a Terman Fellowship from Stanford University.

#Environment

#10-2022 Science News#2022 Science News#acts of science#Earth Environment#earth science#Environment and Nature#everyday items#Nature Science#New#News Science Spies#October 2022 Science News#Our Nature#planetary science#production line#sci_evergreen1#Science#Science Channel#science documentary#Science News#Science Spies#Science Spies News#Space Physics & Nature#Space Science#Environment

Text

Fossil Find Tantalizes Loch Ness Monster Fans

https://sciencespies.com/news/fossil-find-tantalizes-loch-ness-monster-fans/

Plesiosaurs went extinct 66 million years ago, but evidence that the long-necked reptiles lived in freshwater, not just oceans, has offered hope to Nessie enthusiasts.

LONDON — Millions of years before the first (alleged) sighting of the Loch Ness monster, populations of giant reptiles swam through Jurassic seas in areas that are now Britain. Known as plesiosaurs, these long-necked creatures were thought to have dwelled exclusively in oceans.

But a discovery published in a paper last week by researchers in Britain and Morocco added weight to a hypothesis that some Loch Ness monster enthusiasts have long clung to: that plesiosaurs lived not just in seas, but in freshwater, too. That could mean, they reasoned excitedly, that Nessie, who is sometimes described as looking a lot like a plesiosaur, really could live in Loch Ness, a freshwater lake.

Local papers have celebrated the finding. It “gives further credit to the idea that Nessie may have been able to survive and even thrive in Loch Ness,” said an article on page 32 of the Inverness Courier, a biweekly newspaper in the Scottish Highlands. “Loch Ness Monster bombshell,” blared a headline from Britain’s Daily Express tabloid. “Existence of Loch Ness Monster is ‘plausible,’” read headlines in The Scotsman, The Telegraph and elsewhere, seizing on a phrase in the University of Bath’s announcement of the study’s findings.

This is not the first study to find that plesiosaurs lived in freshwater. “This new study is simply providing additional evidence for certain members of this group living in freshwater,” said Dean Lomax, a paleontologist and visiting scientist at the University of Manchester. “We’ve always known this.”

But Nick Longrich, the lead author of the study, said his team had one of the stronger cases for it because they found fossils of 12 plesiosaurs, proof that it was not just one plesiosaur that wandered into freshwater and then died there.

“The more plesiosaur fossils discovered in freshwater environments, the more this will further build the picture to explain why plesiosaurs might be turning up in freshwater environments around the world,” said Georgina Bunker, a student who was a co-author of the paper.

Dr. Longrich, a paleontologist and evolutionary biologist at the University of Bath, said it was “completely unexpected” to find the fossil of a plesiosaur that had lived in a 100-million-year-old freshwater river system that is now the Sahara.

While on a research trip to Morocco, he was sifting through a box in the back room of a shop when he spotted a “kind of chunky” bone, which turned out to be the arm of a five-foot-long baby plesiosaur. Dr. Longrich paid the cashier no more than 200 Moroccan dirham, or about $20, after bargaining to bring down the price, and brought the fossils back to Britain for further study.

Nick Longrich/University of Bath

“Once we started looking, the plesiosaur started turning up everywhere,” he said. “It reminds you there’s a lot we don’t know.” (The fossils will be returned to museums in Morocco at a later date, he said.)

As the news of the study made headlines last week, some Nessie fans were hopeful. George Edwards, who was for years the skipper of a Loch Ness tourism boat called the Nessie Hunter, said that for him the new study showed how creatures could adapt to survive in new environments — and that the world is full of mysteries. Take the coelacanth, a bony fish that was thought to have become extinct millions of years ago but was found in 1938 by a South African museum curator on a fishing trawler. “Lo and behold, they found them, alive and kicking,” Mr. Edwards said. “Anything is possible.”

Mr. Edwards said he had seen unexplained creatures in Loch Ness plenty of times: “There’s got to be a family of them.” From what he has seen, the creatures have a big arched back, no fins and are somewhat reminiscent of a plesiosaur.

But there is one detail that some Nessie lovers may have overlooked in their embrace of the plausibility of Nessie’s existence: Plesiosaurs became extinct at the same time as dinosaurs did, some 66 million years ago. Loch Ness was only formed about 10,000 years ago, and before that it was ice.

Valentin Fischer, an associate professor of paleontology at the University of Liège in Belgium, said that it would currently be impossible for a marine reptile like the plesiosaur to live in Loch Ness.

The first recorded sighting of Nessie dates back to the sixth century A.D., when the Irish monk St. Columba was said to have driven a creature into the water. But global interest was revived in the 20th century, after a British surgeon, Col. Robert Wilson, took what became the most famous photo of the Loch Ness monster in 1934. Sixty years later, the photograph was revealed to be a hoax.

But some people were not discouraged, and, ever since, throngs of tourists have traveled to Loch Ness each year in hopes of seeing the monster.

There have been more than 1,100 sightings at Loch Ness, including four this year, according to the register of official sightings.

A famous photograph of the Loch Ness Monster taken in 1934 was later revealed to have been a hoax. Keystone/Getty Images

Steve Feltham, a full-time monster hunter who has lived on the shores of Loch Ness for three decades, said the British-Moroccan study was interesting, but that it was irrelevant to his search. Ever since it became clear that the famous 1934 photo of Nessie was fake, he has stopped believing that Nessie was a plesiosaur. Plesiosaurs have to come up for air, so he figures he would have seen it during the 12 hours a day that he scans the loch. Instead, he scans the water for giant fish that look like a boat turned upside down.

“I struggle to think of any bona fide Nessie hunter that still believes in the plesiosaur,” he said. “The hunt has moved on from that.”

#News

#2022 Science News#8-2022 Science News#acts of science#August 2022 Science News#Earth Environment#earth science#Environment and Nature#everyday items#Nature Science#New#News Science Spies#Our Nature#planetary science#production line#sci_evergreen1#Science#Science Channel#science documentary#Science News#Science Spies#Science Spies News#Space Physics & Nature#Space Science#News

Text

Quantum magnet is billions of times colder than interstellar space

https://sciencespies.com/physics/quantum-magnet-is-billions-of-times-colder-than-interstellar-space/

A magnet made out of ytterbium atoms is only a billionth of a degree warmer than absolute zero. Understanding how it works could help physicists build high-temperature superconductors.

Physics 1 September 2022

By Karmela Padavic-Callaghan

Ytterbium atoms have been used to make a very cold magnet

Carlos Clarivan/Science Photo Library

A new kind of quantum magnet is made out of atoms only a billionth of a degree warmer than absolute zero – and physicists are not sure how it behaves.

Regular magnets repel or attract magnetic objects depending on whether electrons inside the magnet are in an “up” or a “down” quantum spin state, a property analogous to saying where their north and south poles would be if the particles were tiny bar magnets. However, this isn’t the only property that can be used to build a magnet.

Kaden Hazzard at Rice University in Texas and his colleagues used ytterbium atoms to make a magnet based on a spin-like property that has six options each labelled with a colour.

The researchers confined the atoms in a vacuum in a small glass and metal box, then used laser beams to cool them down. The push from the laser beam made the most energetic atoms release some energy, which lowered the overall temperature, similar to blowing on a cup of tea.

They also used lasers to arrange the atoms in different configurations to produce magnets. Some were one-dimensional like a wire, others were two-dimensional like a thin sheet of a material or three-dimensional like a piece of a crystal.

The atoms arranged in lines and sheets reached about 1.2 nanokelvin, more than 2 billion times colder than interstellar space. For the atoms in three-dimensional arrangements, the situation is so complex the researchers are still figuring out the best way to measure the temperature.
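As a rough check on the "more than 2 billion times colder" comparison, here is a minimal Python sketch. It assumes interstellar space sits at about 2.725 K, the temperature of the cosmic microwave background; the article does not say which reference value it used, so that constant and the helper name `times_colder` are assumptions for illustration only.

```python
# Assumption: interstellar space is taken as ~2.725 K (cosmic microwave
# background temperature). The article does not state its reference value.
INTERSTELLAR_SPACE_K = 2.725
MAGNET_K = 1.2e-9  # 1.2 nanokelvin, as reported in the article


def times_colder(reference_k: float, sample_k: float) -> float:
    """Return the ratio reference_k / sample_k for temperatures in kelvin."""
    if sample_k <= 0:
        raise ValueError("sample temperature must be above absolute zero")
    return reference_k / sample_k


ratio = times_colder(INTERSTELLAR_SPACE_K, MAGNET_K)
print(f"Ratio: {ratio:.2e}")  # a little over 2 billion under this assumption
```

The ratio only makes sense on an absolute scale such as kelvin, which is why both values are expressed in kelvin before dividing.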

“Our colleagues achieved the coldest fermions in the universe. Thinking about experimenting on this ten years ago, it looked like a theorist’s dream,” says Hazzard.

Physicists have long been interested in how atoms interact in exotic magnets like this because they suspect that similar interactions happen in high-temperature superconductors – materials that perfectly conduct electricity. By better understanding what happens, they could build better superconductors.

There have been theoretical calculations about such magnets, but they have failed to predict exact colour state patterns or exactly how magnetic they can be, says co-author Eduardo Ibarra-García-Padilla. He says that he and colleagues carried out some of the best calculations yet while they were analysing the experiment, but could still only predict the colours of eight atoms at a time in the line and sheet configurations out of the thousands of atoms in the experiment.

Victor Gurarie at the University of Colorado Boulder says that the experiment was just cold enough for atoms to start “paying attention” to the quantum colour states of their neighbours, a property that does not influence how they interact when warm. Because computations are so difficult, similar future experiments may be the only method for studying these quantum magnets, he says.

Reference: Nature Physics, DOI: 10.1038/s41567-022-01725-6

#Physics

#2022 Science News#9-2022 Science News#acts of science#Earth Environment#earth science#Environment and Nature#everyday items#Nature Science#New#News Science Spies#Our Nature#planetary science#production line#sci_evergreen1#Science#Science Channel#science documentary#Science News#Science Spies#Science Spies News#September 2022 Science News#Space Physics & Nature#Space Science#Physics

Text

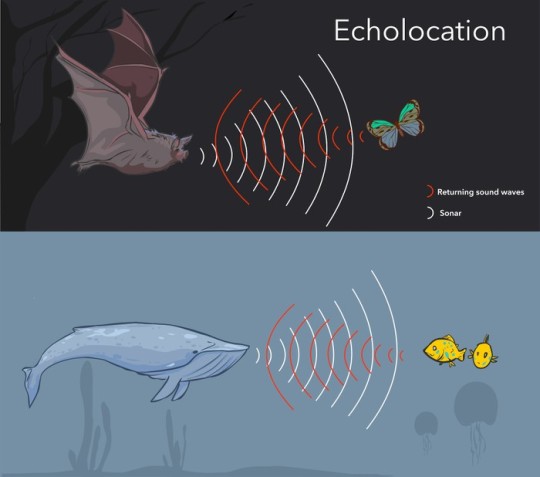

How does echolocation work?

https://sciencespies.com/nature/how-does-echolocation-work/

This furry, flying critter shouts into the void, and then listens to the echoes that bounce back from objects in the darkness. Echolocation helps the bat to navigate, and to chase and snatch prey, such as moths, straight out of the sky.

Most of the world’s 1,400 bat species use echolocation. They produce pulses of sound, largely in the ultrasound range, high above the limits of human hearing. Most bats contract their larynx muscles to make the clicks via an open mouth, but some species use other body parts. Leaf-nosed bats make calls through their elaborate noses, while some fruit bats make clicks by flapping their wings.

How does echolocation work? © Getty Images

As the bat closes in on its prey, the pulses increase in frequency to more than 160 clicks per second. The returning echoes then help the bat to determine the size, shape, texture, distance and direction of the prey or object. At up to 140 decibels, the shrieks are also incredibly loud. Just before it calls, the bat contracts its middle ear muscle, effectively dialling down its hearing, so the mammal is not deafened by its own cries. The situation is then reversed almost instantly, so the echoes can be detected.
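How an echo's delay translates into distance can be sketched in a few lines of Python. This is a simplified illustration, not a model of bat physiology: it assumes sound travels at about 343 m/s (dry air near 20 °C) and that each echo returns before the next pulse is sent. The function names are hypothetical.

```python
SPEED_OF_SOUND_AIR_M_S = 343.0  # assumption: dry air at roughly 20 °C


def echo_distance_m(round_trip_s: float, speed: float = SPEED_OF_SOUND_AIR_M_S) -> float:
    """Distance to a target given the round-trip echo delay in seconds."""
    # The sound travels out and back, so the one-way distance is half the path.
    return speed * round_trip_s / 2.0


def pulse_interval_s(clicks_per_second: float) -> float:
    """Time between successive pulses at a given click rate."""
    return 1.0 / clicks_per_second


# At 160 clicks per second (the rate quoted in the article), pulses are
# 6.25 ms apart. Under the simplifying assumption above, that window
# corresponds to targets within roughly a metre.
interval = pulse_interval_s(160)
max_range = echo_distance_m(interval)
print(f"Interval: {interval * 1000:.2f} ms, range within window: {max_range:.2f} m")
```

The short window at high click rates fits the article's description of pulses speeding up as the bat closes in: a nearby target returns its echo sooner, so pulses can follow each other more quickly.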

Toothed whales, including dolphins and porpoises, also echolocate, as do certain birds and small mammals, such as some tenrecs and shrews. The strategy makes sense for species that are active at night, or that live underground or deep in the ocean, where visual cues are limited.

Asked by: Ella McGregor, Edinburgh

#Nature

#2022 Science News#9-2022 Science News#acts of science#Earth Environment#earth science#Environment and Nature#everyday items#Nature Science#New#News Science Spies#Our Nature#planetary science#production line#sci_evergreen1#Science#Science Channel#science documentary#Science News#Science Spies#Science Spies News#September 2022 Science News#Space Physics & Nature#Space Science#Nature

Text

Ocean cooling over millennia led to larger fish

https://sciencespies.com/nature/ocean-cooling-over-millennia-led-to-larger-fish/

Earth’s geological history is characterized by many dynamic climate shifts that are often associated with large changes in temperature. These environmental shifts can lead to trait changes, such as body size, that can be directly observed using the fossil record.

To investigate whether temperature shifts that occurred before direct measurements were recorded, the subject of paleoclimatology, are correlated with body size changes, several members of the University of Oklahoma’s Fish Evolution Lab decided to test their hypothesis using tetraodontiform fishes as a model group. Tetraodontiform fishes are primarily tropical marine fishes, and include pufferfish, boxfishes and filefish, among others.

The study was led by Dahiana Arcila, assistant professor of biology and assistant curator at the Sam Noble Museum of Natural History, with Ricardo Betancur, assistant professor of biology, along with biology graduate student Emily Troyer, and involved collaborators from the Smithsonian Institution, University of Chicago, and George Washington University in the United States, as well as University of Turin in Italy, University of Lyon in France, and CSIRO Australia.

The researchers discovered that the body sizes of these fishes have grown larger over the past hundred million years in conjunction with the gradual cooling of ocean temperatures.

Their finding adheres to two well-known rules of evolutionary trends: Cope’s rule, which states that organismal body sizes tend to increase over evolutionary time, and Bergmann’s rule, which states that species reach larger sizes in cooler environments and smaller sizes in warmer environments. What was less understood, however, was how these rules relate to ectotherms, organisms that can’t regulate their internal body temperatures and are dependent on their external or environmental climates.

“Cope’s and Bergmann’s rules are fairly well-supported for endotherms, or warm-blooded species, such as birds and mammals,” Troyer said. “However, among ectothermic species, especially vertebrates, these rules tend to have mixed findings.”

A challenge of studying ancient fish is that there are very few fossil records. To supplement that missing information, the researchers combined genomic data of living fish with fossil data.

“When you look across different groups in the tree of life, then you will notice that there are a limited number of groups that actually have a good fossil record, but the larger marine fish group (known as Tetraodontiformes) that includes the popular pufferfish, ocean sunfish and boxfish, is remarkable in that it has a spectacular paleontological record,” Arcila said. “So, by integrating those two fields, genomics and paleontology, then we’re actually able to bring into the picture new results that you won’t be able to obtain using just one data type.”

The genomic and fossil data were then combined with data on ocean temperatures, which demonstrated that the gradual climate cooling over the past 100 million years is associated with increased body size of tetraodontiform fishes.

“Based on fossil data, we’re showing that these fish started very small, but you can see that living species are much larger, and those changes are associated with the cooling temperature of the ocean over this very long period of time,” Arcila said.

While the evolution of tetraodontiform fishes appears to conform to Cope’s and Bergmann’s hypotheses, the authors add a caveat that many more factors could play a role in fish body size evolution.

“It’s really exciting to see support for these two biological rules in Tetraodontiformes, as these trends are less studied among marine fishes compared with terrestrial species,” Troyer said. “Undoubtedly we will discover more about their body size evolution in the future.”

#Nature

#2022 Science News#9-2022 Science News#acts of science#Earth Environment#earth science#Environment and Nature#everyday items#Nature Science#New#News Science Spies#Our Nature#planetary science#production line#sci_evergreen1#Science#Science Channel#science documentary#Science News#Science Spies#Science Spies News#September 2022 Science News#Space Physics & Nature#Space Science#Nature

Text

'Plant blindness' is caused by urban life and could be cured through wild food foraging

https://sciencespies.com/nature/plant-blindness-is-caused-by-urban-life-and-could-be-cured-through-wild-food-foraging/

“Plant blindness” is caused by a lack of exposure to nature and could be cured by close contact through activities such as wild food foraging, a study shows.

A lack of awareness and appreciation for native flora stems from diminished time with plants, and is not an innate part of being human, according to the research.

This leads to the common misperception that plants are ‘less alive’ than animals.

Researchers say the key to breaking the cycle of plant awareness disparity lies in introducing individuals to biodiverse places and altering their perceptions of the perceived utility of plants. Plant blindness is a well-evidenced lack of interest and awareness for plants in urban societies, as compared to animals.

The research, by Dr Bethan Stagg from the University of Exeter and Professor Justin Dillon from UCL, shows people’s plant awareness develops where they have frequent interactions with plants that have direct relevance to their lives.

Researchers examined 326 articles published in academic journals from 1998 to 2020. Most showed people had more interest in, paid more attention to, and were more likely to remember information about animals.

There was no concrete evidence this was an innate human characteristic; instead, diminished experience of nature in urbanised societies appeared to be the cause. It was not inevitable if people had regular contact with plants.

The research shows a decline in relevant experience with plants leads to a cyclical process of inattention. This can be addressed through first-hand experiences of edible and useful plants in local environments.

Studies showed it was common for children — especially when young — to see plants as inferior to animals and not to be able to identify many species.

Plant awareness disparity was reported in teachers as well as students, particularly in primary teachers who had not graduated in a science subject.

Older people had better plant knowledge, which studies suggest was because they were more likely to have nature-related hobbies.

Thirty-five studies found that modernisation or urbanisation had a negative impact on plant knowledge. The increased reliance on urban services and a cash economy reduced the utility of plant foraging. School attendance and work reduced the time available to spend in the natural environment. These factors also reduced the time spent with family, negatively impacting the oral transmission of plant knowledge between children and older relatives.

Dr Stagg said: “People living in highly industrialised countries have a plant attention deficit due to a decline in relevant experience with plants, as opposed to a cognitive impediment to the visual perception of plants. People living in rural communities in low and middle-income countries were more likely to have high plant knowledge due to a dependence on natural resources. Interestingly, economic development does not necessarily lead to this knowledge being lost if communities still have access to the biodiverse environments.

“The key is to demonstrate some direct benefits of plants to people, as opposed to the indirect benefits through their pharmaceutical and industrial applications, or their value to remote, traditional societies. The level of botanical knowledge in younger generations is shown to be directly related to their perceived usefulness of this knowledge.

“‘Wild plant’ foraging shows considerable promise in this respect, both as a way of introducing people to multiple species and connecting them with some ‘modern-day’ health, cultural and recreational uses.”

Story Source:

Materials provided by University of Exeter. Note: Content may be edited for style and length.

#Nature

#10-2022 Science News#2022 Science News#acts of science#Earth Environment#earth science#Environment and Nature#everyday items#Nature Science#New#News Science Spies#October 2022 Science News#Our Nature#planetary science#production line#sci_evergreen1#Science#Science Channel#science documentary#Science News#Science Spies#Science Spies News#Space Physics & Nature#Space Science#Nature

Text

We need to stop thinking of insects as 'creepy crawlies' and recognize their keystone role in ecosystems

https://sciencespies.com/environment/we-need-to-stop-thinking-of-insects-as-creepy-crawlies-and-recognize-their-keystone-role-in-ecosystems/

We need to stop thinking of insects as creepy crawlies and focus on the huge benefits they bring to people and the natural environment, scientists say.

The widespread and deeply ingrained cultural perception of insects as creepy crawlies is a key factor holding back the public’s appreciation of the role they play within ecosystems. This perception is in part reflected in government biodiversity policy inaction across the globe, they argue.

This point is among a range of actions highlighted as part of a new paper published in Ecology and Evolution produced by an international team of entomologists which outlines a ‘battle plan’ including steps needed to prevent further insect losses across the globe.

Led by Dr Philip Donkersley of Lancaster University and co-authored by scientists from the University of Hong Kong, the Czech Academy of Sciences and Harper Adams University, the paper is a call to action targeted at other entomologists to step up advocacy for insects.

Despite 30 years of intergovernmental reports highlighting biodiversity targets, global insect abundance, biomass and diversity continue to decline. The paper considers the lack of progress in protecting insects and why meaningful change has not happened.

“Biodiversity, including insect, declines are often unintended consequences of human activities with human wellbeing nearly always trumping nature conservation, and this is likely to continue until we reach a point where we see flat-lining ecosystems are detrimental to our own species,” said Dr Donkersley. “Intergovernmental action has been slow to respond, kicking in only when change becomes impossible to ignore. If we are to see political attitudes and actions change then first societies’ perception of insects needs to be addressed.”

The paper highlights the range of benefits that insects bring, including some that are lesser known. These benefits include fundamental roles within ecosystems through interactions with plants including as pollinators, as a food for other animals, and as a food source for people in many parts of the world. Other benefits the authors highlight include insects’ contributions to wellbeing, culture and innovation, such as the benefits people derive from seeing butterflies in parks and gardens, their inclusion in poetry and literature, and their inspiration for a range of technologies, cosmetics and pharmaceuticals.

The researchers have outlined strategic priorities in their action plan to help support the conservation of insects. These include:

proactively and publicly addressing government inaction

highlighting the technological developments we owe to insects, and how much is still to be discovered

aligning with bird, plant and mammal conservation groups to show species interdependencies and the knock-on benefits insect conservation has for other animals

engaging the public and school students with the wonders of the insect world to counter perceptions of insects as threatening ‘creepy crawlies’

“The benefits we gain from the insect world are broad, yet aversion or phobias of invertebrates are common and stand firmly in the path of their conservation,” said Dr Donkersley. “We need to move beyond this mindset and appreciate the huge role they play in ecosystems, food chains, mental health, and even technological innovation.

“This perception change is a crucial step, alongside other measures we outline in this paper. Immediate and substantial actions are needed to protect insect species in order to maintain global ecosystem stability.”

The steps are outlined in the paper ‘Global insect decline is the result of wilful political failure: A battle plan for entomology’.

Authors on the paper are Dr Philip Donkersley, Lancaster University, Dr Louise Ashton, University of Hong Kong, Dr Greg Lamarre, Czech Academy of Sciences, and Dr Simon Segar, Harper Adams University.

Story Source:

Materials provided by Lancaster University. Note: Content may be edited for style and length.

30-million-year-old amphibious beaver fossil is oldest ever found

https://sciencespies.com/nature/30-million-year-old-amphibious-beaver-fossil-is-oldest-ever-found/

A new analysis of a beaver anklebone fossil found in Montana suggests the evolution of semi-aquatic beavers may have occurred at least 7 million years earlier than previously thought, and happened in North America rather than Eurasia.

In the study, Ohio State University evolutionary biologist Jonathan Calede describes the find as the oldest known amphibious beaver in the world and the oldest amphibious rodent in North America. He named the newly discovered species Microtheriomys articulaquaticus.

Calede’s findings resulted from comparing measurements of the new species’ anklebone with those of about 340 other rodent specimens to categorize how it moved around in its environment. The comparison indicated that this animal was a swimmer. The bone from Montana was determined to be 30 million years old; the oldest previously identified semi-aquatic beaver lived in France 23 million years ago.

Beavers and other rodents can tell us a lot about mammalian evolution, said Calede, an assistant professor of evolution, ecology and organismal biology at Ohio State’s Marion campus.

“Look at the diversity of life around us today, and you see gliding rodents like flying squirrels, rodents that hop like the kangaroo rat, aquatic species like muskrats, and burrowing animals like pocket gophers. There is an incredible diversity of shapes and ecologies. When that diversity arose is an important question,” Calede said. “Rodents are the most diverse group of mammals on Earth, and about 4 in 10 species of mammals are rodents. If we want to understand how we get incredible biodiversity, rodents are a great system to study.”

The research is published online today (Aug. 24, 2022) in the journal Royal Society Open Science.

The scientists, including Calede, who found the bones and teeth of the new beaver species in western Montana knew right away that they came from beavers because of their recognizable teeth. But the discovery of an anklebone, about 10 millimeters long, opened up the possibility of learning much more about the animal’s life. The astragalus bone in beavers is the equivalent of the talus in humans, located where the shin meets the top of the foot.

Calede took 15 measurements of the anklebone fossil and compared them with measurements, over 5,100 in all, of similar bones from 343 specimens of rodent species living today that burrow, glide, jump and swim, as well as ancient beaver relatives.

Running computational analyses of the data in multiple ways, he arrived at a new hypothesis for the evolution of amphibious beavers, proposing that they started to swim as a result of exaptation — the co-opting of an existing anatomy — leading, in this case, to a new lifestyle.

“In this case, the adaptations to burrowing were co-opted to transition to a semi-aquatic locomotion,” he said. “The ancestor of all beavers that have ever existed was most likely a burrower, and the semi-aquatic behavior of modern beavers evolved from a burrowing ecology. Beavers went from digging burrows to swimming in water.

“It’s not necessarily surprising because movement through dirt or water requires similar adaptations in skeletons and muscles.”

Fossils of fish and frogs and the nature of the rocks where Microtheriomys articulaquaticus fossils were found suggested it had been an aquatic environment, providing additional evidence to support the hypothesis, Calede said.

Fossils are usually dated based on their location between layers of rocks whose age is determined by the detection of the radioactive decay of elements left behind by volcanic activity. But in this case, Calede was able to date the specimen precisely, at 29.92 million years old, because of its location within, rather than above or below, a layer of ash.

“The oldest semi-aquatic beaver we knew of in North America before this was 17 or 18 million years old,” he said. “And the oldest aquatic beaver in the world, before this one, was from France and is about 23 million years old.

“I’m not claiming this new species is necessarily the oldest aquatic beaver ever, because there are other animals that we know, from their teeth, that are related to this species I described.”

Microtheriomys articulaquaticus did not have the flat tail that helps beavers swim today. It likely ate plants instead of wood and was comparatively small, weighing less than 2 pounds. The modern adult beaver, weighing 50 pounds or so, is the second-largest living rodent after the capybara from South America.

Calede’s analysis of beaver body size over the past 34 million years suggests beaver evolution adheres to what is known as Cope’s Rule, which posits that organisms in evolving lineages increase in size over time. A giant beaver the size of a black bear lived in North America as recently as about 12,000 years ago. Like all but the two beaver species living today, Castor canadensis and Castor fiber, the giant beaver is extinct.

“It looks like when you follow Cope’s Rule, it’s not good for you — it sets you on a bad path in terms of species diversity,” Calede said. “We used to have dozens of species of beavers in the fossil record. Today we have one North American beaver and one Eurasian beaver. We’ve gone from a group that is super diverse and doing so well to one that is obviously not so diverse anymore.”

This work was funded by the American Philosophical Society Lewis and Clark Fund, Sigma-Xi, the Geological Society of America, the Evolving Earth Foundation, the Northwest Association, the Paleontological Society, the Tobacco Root Geological Society, the UWBM, the University of Washington Department of Biology and Ohio State.

Oyster reef habitats disappear as Florida becomes more tropical

https://sciencespies.com/nature/oyster-reef-habitats-disappear-as-florida-becomes-more-tropical/

With temperatures rising globally, cold weather extremes and freezes in Florida are diminishing — an indicator that Florida’s climate is shifting from subtropical to tropical. Tropicalization has had a cascading effect on Florida ecosystems. In Tampa Bay and along the Gulf Coast, University of South Florida researchers found evidence of homogenization of estuarine ecosystems.

While conducting fieldwork in Tampa Bay, lead author Stephen Hesterberg, a recent graduate of USF’s integrative biology doctoral program, noticed mangroves were overtaking most oyster reefs — a change that threatens species dependent on oyster reef habitats. That includes the American oystercatcher, a bird that the Florida Fish and Wildlife Conservation Commission has already classified as “threatened.”

Working alongside doctoral student Kendal Jackson and Susan Bell, distinguished university professor of integrative biology, Hesterberg explored how many mangrove islands were previously oyster reefs and the cause of the habitat conversion.

The interdisciplinary USF team found the decrease in freezes allowed mangrove islands to replace the previously dominant salt marsh vegetation. For centuries in Tampa Bay, remnant shorelines and shallow coastal waters supported typical subtropical marine habitats, such as salt marshes, seagrass beds, oyster reefs and mud flats. When mangroves along the shoreline replaced the salt marsh vegetation, they abruptly took over oyster reef habitats that had existed for centuries.

“Rapid global change is now a constant, but the extent to which ecosystems will change and what exactly the future will look like in a warmer world is still unclear,” Hesterberg said. “Our research gives a glimpse of what our subtropical estuaries might look like as they become increasingly ‘tropical’ with climate change.”

The study, published in the Proceedings of the National Academy of Sciences, shows how climate-driven changes in one ecosystem can lead to shifts in another.

Using aerial images from 1938 to 2020, the team found 83% of tracked oyster reefs in Tampa Bay fully converted to mangrove islands and the rate of conversion accelerated throughout the 20th century. After 1986, Tampa Bay experienced a noticeable decrease in freezes — a factor that previously would kill mangroves naturally.

“As we change our climate, we see evidence of tropicalization — areas that once had temperate types of organisms and environments are becoming more tropical in nature,” Bell said. She said this study provides a unique opportunity to examine changes in adjacent coastal ecosystems and generate predictions of future oyster reef conversions.

While the transition to mangrove islands is well-advanced in the Tampa Bay estuary and estuaries to the south, Bell said Florida ecosystem managers in northern coastal settings will face tropicalization within decades.

“The outcome from this study poses an interesting predicament for coastal managers, as both oyster reefs and mangrove habitats are considered important foundation species in estuaries,” Bell said.

Oyster reefs improve water quality and simultaneously provide coastal protection by reducing the impact of waves. Although mangroves also provide benefits, such as habitat for birds and carbon sequestration, other ecosystem functions unique to oyster reefs will diminish or be lost altogether as reefs transition to mangrove islands. Loss of oyster reef habitats will directly threaten wild oyster fisheries and reef-dependent species.

Although tropicalization will make it increasingly difficult to maintain oyster reefs, human intervention through reef restoration or active removal of mangrove seedlings could slow or prevent homogenization of subtropical landscapes — allowing both oyster reefs and mangrove tidal wetlands to co-exist.

Hesterberg plans to continue examining the implications of such habitat transition on shellfisheries in his new role as executive director of the Gulf Shellfish Institute, a non-profit scientific research organization. He is expanding his research to investigate how to design oyster reef restoration that will prolong ecosystem lifespan or avoid mangrove conversion altogether.

Story Source:

Materials provided by University of South Florida. Note: Content may be edited for style and length.

Archaeology and ecology combined sketch a fuller picture of past human-nature relationships

https://sciencespies.com/environment/archaeology-and-ecology-combined-sketch-a-fuller-picture-of-past-human-nature-relationships/
