Text

I wanted to add a gentle reminder/explanation beneath this harsh one, haha. This is at no one in particular but just anyone that might wonder about these things:

I know it's frustrating when a design someone really wants is sold out, your size isn't available, or a different version of something you really like doesn't exist yet- but please keep in mind that we're a tiny 2-person business operating out of our apartment🙏

We don't have the space or the funds it'd take to keep everything in stock all the time (I'd have to do the math on it but as a very simplified example, let's say I have about 100 designs at 4 sizes each and want to have at least 10 pieces on hand in each size.. that'd mean needing to be able to store 4,000 pieces of clothing and just to be able to order them in the first place I'd need well over $100k liquid cash on hand 😱😵💫🫥).

This whole time I've built my business up one step at a time without ever taking loans, and I plan to keep going slowly and steadily and not try to make any sudden leaps to scale 20 levels up, like taking out a huge loan and renting a warehouse and buying a larger vehicle to be able to transport all that stuff and needing to hire employees to keep up with it all and.. etc, etc *tries not to have an anxiety meltdown just thinking about it*

I realize I operate in a way and on a timeline that isn't very compatible with the speed everything moves at these days, or with how business is often done in the US, or to be able to meet the expectations people have of "online shopping" in general. But overall it's really working for me, and I don't even know if I want my business to end up as big as it *could* be if I let things just barrel down the road totally uncontrolled.

I hope everyone can understand where I'm coming from and that I'm doing my best! There's also always a lot going on behind the scenes that isn't at all apparent from just looking at the shop or seeing a couple posts on social media. Things are growing and improving all the time, but it will take time ☺️

#putting this in the tags because i don't want to complain too much in the main post#but i also have a number of Problems which are further reasons to not just go wild with ultra fast growth and expansion#chronic physical health issues / anxiety / cptsd / etc#honestly my health has been SO MUCH better the past couple years than it had been since i was probably 12#but one main thing to keep it that way is not letting my stress levels get out of control#I'm in no way ready to run a business that will require employees and all that other stuff#the idea of being responsible for people's livelihood alone is too much#being ultimately responsible for my bf since he quit his job to work with me is more than enough for the time being#so we'll level up when we're ready to, which is not right now#maybe from the outside it seems unreasonably slow idk#but things have changed so much so fast since just last year#i hope everyone won't mind being patient with the pace I'm setting#thank you all 💖💖💖#witch vamp#small business#recently i actually learned that at our size we're actually called a-#micro business#!#behind the scenes

732 notes

Note

I feel like I’m sympathetic to people who make mistakes in their early twenties. Like for years you are called a kid and are made to sit in a chair for hours, and then suddenly one day you turn a certain age and the whole world sees you as an adult and there are adult consequences, and yet for the first time you have to find your footing with all this freedom that you didn’t have before. Like I can’t imagine getting in trouble with the law at a young age and then having to carry that throughout your whole life, when in different decades of your life you are different people, and like we know being labeled a bad person does more harm than good, since then that's all somebody believes they are. Like you at 21 aren't the same as you at 28. Sorry, I’m just thinking about humans and how we behave a lot lately, and I feel like as people we really don’t do enough to help other people. Like as far as we know we are all we have in this world, and maybe one day the earth will explode and all of this will be meaningless, but even so we should like wish the best for each other, because I think at the heart of it all people do want to do good. And with that said, I think what you said in your last post about superheroes in regards to the Spider-Man film really resonated with me on what a hero should be. Sorry, I know this is random, but sometimes I see one of your posts and it spirals into something else.

i definitely agree. like admittedly even people that young can do heinous things but it's like.. no one is doing those heinous things without a basis, yknow. like i'm going to be as vague here as i possibly can be but one of the death row inmates i worked on a claim for recently committed his crime when he was barely eighteen, and it was a horrible thing, and he clearly has a very affected sense of morality and such, but he's also got an extensive trauma profile. and in the end, is a lifetime spent in prison awaiting an execution date really going to do anything for him as a person? will he learn anything? will he get better? will anything about the roots of why people turn out this way and commit these crimes improve? i think i agree young criminals (or frankly any criminals) do need to be stripped of power lest they abuse it again, but ig the problem with our system is that the idea of being stripped of abusive power is viewed as equivalent to a prison sentence. it's not viewed as equivalent to being in a position to learn from scratch, to being made to commit yourself to community, to addressing your behaviors and attitudes at the root. the victims of these crimes obv don't have to forgive these people but i do think that if we're ever going to improve as a society, there need to be systems in place that actually allow criminals to start from scratch in some place where they can't abuse power again but can learn to like. commit to something at the ground level that reshapes their perspective etc. it's not a one size fits all kind of thing i'm sure but i do think it's worth investing in bc some of the death row inmates i've met who it seems have totally turned their life around (as much as one can while in prison for years), it's bc they found something to learn and commit to and that gave them the space and time to reflect on their behaviors and crimes and whether they really believed in what drove them to commit those crimes in the first place

2 notes

Text

╰ ☾ ☆ * : ・ ⁞ — ˗ˏˋㅤㅤ𝐆𝐄𝐓 𝐓𝐎 𝐊𝐍𝐎𝐖 𝐓𝐇𝐄 𝐌𝐔𝐍

NAME : Stella

PRONOUNS : She / Her

PREFERENCE OF COMMUNICATION : I don't give out my discord much nowadays so actually, my preferred method is tumblr ims through my main !

MOST ACTIVE MUSE : Unfortunately NOT Misha because the V.NC RPC is always dead compared to my other RPC. But Misha is always very near and dear to me no matter what.

EXPERIENCE / HOW MANY YEARS : Goes to show my age, but I believe I started maybe when I was 9-10, before I even knew it was called roleplaying. I started in 2012, back in the days when no one knew how to cut posts and everyone had entire gifs as ' icons '. My muse was so minor of a character that there weren't any gifs, so I HAD icons, but they were all inconsistent sizes LOL.

BEST EXPERIENCE : Honestly as much as I hate to say it, I think the 2012-2013 days were the best despite the site being so messy and so unhinged since everyone was still learning and I had a lot of fun plots with that character. But more recently, I'd say it was meeting some of my very few besties here on this site !

RP PET PEEVE : If I had to name ONE ultimate pet peeve, it's that people on this site tend to take things too seriously. Boundaries are important, yes, but people tend to forget that the block / blacklist buttons exist. I've seen and experienced it all on this site and that's the root of all the hurt feelings, d/rama, friendships ending, etc. In the end, we're all here to write our silly little fictional characters and if some people don't mesh, that's okay ! Just don't start a witchhunt or start policing over it.

PLOTS OR MEMES : BOTH except I'm really bad at plotting so I tend to lean towards memes !

LONG OR SHORT REPLIES : It really depends on the day. Sometimes I have no muse and sometimes I can type 240238490324 paragraphs. GENERALLY though, I try to match my partner's length !

ARE YOU LIKE YOUR MUSES : OH GOD I HOPE NOT okay, no I relate to them a bit, yes.

Tagged by: @jardinae

Tagging: Whoever !

#ੈ ✩ ‧₊ ˚ ☾ The blue moon. ✧ OOC.#ੈ ✩ ‧₊ ˚ ☾ Fumbling for you by moonlight‚ overlapping and entangling with you. ✧ GAMES.

1 note

Note

THANK YOU SO MUCH OMG IM HONOURED! OMG I WANNA GO TO GERMANY SO BAD😭 ❤️🇩🇪

AND PLEASE OMG ANY WORDS YOU WANNA KNOW HOW TO PRONOUNCE ASK ME! one of the best things about being british is our elite humour and our way with words. we have the best insults, it's great! it’s actually funny cus my accent is hardly noticeable (im from a particularly small/just under average size city) yet it's ranked quite lowly in attractive accent lists (my accent is unattractive apparently). my city is also largely hated as it's pretty much a shithole. apparently some kids were told if they don't behave their parents will send them to (insert my city name here) BUT IT'S REALLY NOT THAT BAD THO 😭

BUT SERIOUSLY I LOVE THE BRITISH ENGLISH LANGUAGE SO MUCH I WILL GLADLY HELP YOU WITH WORD PRONUNCIATION

my step-mum is fluent german and almost fluent french and she says that german is her favourite language and she fully supports me in wanting to learn it. (it would 100% also help me in the future as i want to go down the historian path but specifically look at ww2) (also im hopefully gonna learn latin at school which will also help in becoming a historian) my favourite german word is the one you gained in world war 2 because of the destruction caused to my city in the blitz. 🥲

AND YES OMG GERMANY AND ENGLAND ARE TECHNICALLY LIKE ‘brothers’! because english and german are actually both germanic languages from the indo-european language family.

~🦈🇬🇧❤️🇩🇪

AAaAAAHHHHHH💕💕💕💕I SHOULD BE THE ONE THANKING U !!! If you need any help with German pronunciation as well, feel free to ask me anytime!!!! ❤❤❤

I need to re-read/listen to English pronunciation books (mainly because i have to speak good English to get into the universities i want to go to), so if i have any problem pronouncing a particular word, i'll ask you!!! 🥰 I currently have trouble with several words rn, but i want to first re-read some things and practice pronunciation. If i still have problems with said words, i'll definitely tell you about it! 🤭🤭😚💝

Please know that if you ever need me to return the favour by helping you out with German pronunciation, i'm always here and absolutely glad to help you out!!! At your service 🧎♀️ (it's supposed to be on one knee but oh well) ❤

You know, my dad & i always enjoy watching WW2 documentaries because we're both German as hell! And we've seen several good docus on Amazon Prime & Netflix. I seriously don't remember the names of the documentaries and im not sure if they'll be available in the UK, but maybe you can search for some on those platforms, mostly on Amazon Prime, because I know they have good quality documentaries about WW2. Also, there's a British YouTube channel that talks about history, and has some documentaries about a certain moustache man, and WW2. I wanna say it's called 《History Hit》 but to be honest i think it's another channel similar to that...if i find it, i'll tell you the name!!!

And also, UK & Germany are like the best brothers ever and have a good history together!! Recently when the Queen died i saw on TikTok several videos of Germans in UK crying for the Queen and honestly it was quite an emotional moment 🥲 God I love UK so much and you guys are the best <333 I swear I follow a shit ton of British memes accounts on TikTok bc you all make me laugh so much and your humour??? Top notch 👌👌👌

Thx so so much for allowing me to ask you questions abt English💕💕🤧🤧🤧🤧

🇬🇧🤜🤛🇩🇪

2 notes

Text

Just as we returned from the store our neighbor was just getting out of her car, so I went over to help her bring her things inside. She'd run out to get some donuts and a slushie. She was having a good day and was making the best of it. She'd managed to get out of the house twice before I arrived.

I carried her goods for her and gave her my arm to help her up the hill to her door. She said her hands weren't cooperating well today, so she gave me her keys and I unlocked her door for her. We went in and she wanted to put her slushie into the freezer as a treat for later. She'd gotten the biggest size so it was a little tall for the freezer, but I helped her get it in without spilling.

She offered me a donut but she'd only bought two, so I declined. As much as I love donuts, and maple bars in particular, I could not in good conscience rob her of such delights. We talked about our favorite donut places, and incidentally, ours was the same -- a local place not too far out of the way. There's an even better place I know of (award winning, in fact), but it's kind of far away. Perhaps I'll take a long detour to bring her a maple bar from there sometime.

She asks about the photo I'd sent her of the reusable zip tie in use, and if I had any other photos of my aquariums. I don't have any on hand but I do have a video I'd taken recently of the baby guppies eagerly swimming right into my open palm. She loves this.

She tells me about her favorite radio show and about how the hosts all know her and have dubbed her their community witch. She plays for me a download from their show in which they highlight one of her Wicked Witch impressions and it's so spot on it's uncanny. Then she suggests that I send them the video of my baby fish, because they do a pet segment on their website and "it's not just dogs!"

I ask her for more details and then agree to send it in. Learning her user name, I mention I'll be sure to give her a shout out as my friend who suggested I submit it and she loves this even more.

We discuss painting, as she is a painter too (or was before her hands stopped cooperating). She wanted to travel and paint the aurora borealis. She says there is a southern one, too, and tells me all about her plans in 2020 to visit New Zealand to see them. But then the pandemic happened.

"Now I'll never get to experience that..." she says, forlorn.

"Are there any videos of it, do you think?" I ask.

This fills her with hope, "Oh, Maybe?"

I decide it will be my mission to help her find them. They are apparently a totally different color than the Northern Lights. We'll have to see if this is true.

She tells me she's trying to find an amethyst for me. I assume because she saw my ring. I tell her I've been wearing it more than usual lately because it's my little brother's birthstone and he passed away last month. She hugs me and I am grateful. I've had a rough couple of months myself, before this. A lot of grief.

I show her my nails, painted in red and gold for the 49ers, and she is almost giddy that I actually did it. She gets up and goes to a nearby shelf and brings back a small fabric cosmetic bag. Inside is where she keeps all the stuff she used when she was able to do her nails regularly. She hands me 49ers decals and I eagerly accept them. This will complete the look for real.

We talk for a while longer and then her friend (the maintenance guy) calls. He asks if she's home and she asks me, jokingly so he can hear, if we're at her house. I respond in kind, "Last I checked, anyway!" He comes over right as I need to leave, so we basically swap places at the door. My neighbor hugs me and says life is easier when you have someone in it who gives a shit. Then she apologizes for swearing.

I remind her to text me any time at all, whether she needs or just wants something, and she says she will. Later that evening I notice her car is gone. I assume she has gone to get dinner with the aforementioned guy. Later still she's not home, and I'm a little worried, as I know she has driven a city over before and not been able to get back home, but I give it a little time, trusting she'd text me if she needed help.

By 10:00pm I poke my head out to check and thankfully her car is now there, parked in its usual spot. Right as I get back inside she texts me saying she had an INCREDIBLE night. I tell her I can't wait to hear the details and how remarkable her timing was as I had just thought to check on her. She gushes about how she had just gone for a drive in her little sports car but then wound up at the casino and even won a little money!

She tells me all about it and I'm so relieved to hear she was out having fun. She hadn't been able to do that last week. Or the one before that. She tells me she could die a happy woman tonight. I tell her I hope she doesn't, so she can tell me more about her adventures. She sends me an emoji filled post about how her best luck wasn't at the casino but in meeting me.

I tell her the feeling's mutual.

Tough day today... and friendly reminder that being human is easier when we help each other.

I saw one of our neighbors, an older woman we sometimes talk to in passing, sitting outside of her house. I don't know what exactly made me look twice, but on second glance as we drove by I realized her walker was in the grass. She was otherwise just sitting there, like she had a thousand times before, so it would have been easy to assume she was fine and go on with my life as normal but something told me to go check in on her anyway.

She was not fine. She was the polar opposite of fine. Just diagnosed with terminal cancer not fine. No next of kin not fine. A veteran facing eviction from her house for missing rent while in the hospital not fine. In constant debilitating pain not fine. Only semi-lucid not fine. She was extremely alone not fine.

I thought, at most, she might be bored while unable to pick up her walker not fine. A five minute detour from my day not fine. A help her back into her house and say "see you later!" not fine. Instead I spent the last three hours with her because she was so scared and alone and no one should be alone.

We talked a lot while I was there. She's actually two years younger than my mom (who also has cancer but slightly better luck, I guess). I helped her into her house and got her a drink and we talked about what all is going on with her. None of it was good. I was as reassuring as I could be, but there's only so much of this I can actually help her with.

"Why did you come?" she asked through tears.

"Because you looked like you might need some help."

She called me an angel. I told her I was just doing my best. I told her that kindness should never be rare. That we should all try to make the world just a little bit better than it was.

She offered to pay me but I told her I was just there as a friend. Before today we were basically strangers. No need to repay me with anything other than her company, I assured her. She cried, a lot. I managed not to somehow. Something tells me she had needed to cry long before this but in being Strong she never had the chance to.

She needed to get her mail, which is a long walk when you're disabled because it is not at all handicap accessible (across a parking lot, over a bridge, across a small field). So I helped her get her mail. We stopped every three feet because her pain was so bad, but she was determined to be able to go do this with me and not just send me on an errand. I patiently stayed with her and reminded her, through her apologies, it was fine to take our time: there was a nice breeze and birds were singing. She appreciated this. She loves nature.

Halfway back she said she wanted to go to the pool. To put her feet in the water. She loves water, and has not been able to even see the pool in a month. Neither of us were dressed for swimming, but I took her to the pool anyway. There is a stair leading down to it, meaning she couldn't bring her walker, so I offered her my arm.

We went to the pool. She put her feet in the water and then, with more energy and enthusiasm than I'd seen the whole time, she jumped in. In her fancy dress! She was instantly ten years younger at least, clear and happy, floating in the sun. Dress and all. She grew up with a pool and had been on a swim team.

I sat by the edge of the pool while she swam, keeping her company and also making sure she was okay. When she got tired I took her back home and then had to help her get undressed and redressed. I made sure she felt no shame. Getting out of wet clothes is hard for anyone, let alone someone with like twenty pounds of tumors racking them with constant pain.

She was so fucking happy to have gone swimming.

She is trying to "make everything right" before she goes. Trying to repay her debt to society and her debts in general. She couldn't understand why the corporation that owns our houses wouldn't take her money. She was genuinely distressed -- not to be homeless on her deathbed but to not leave this world with a clean slate. I told her intent matters. She can only do her best.

This company not letting her repay her debt was their fault, not hers.

When I finally needed to go, I told her to let me know any time she needed a hand or just wanted company. She told me she was going to die tonight. I told her I hoped not, so I could see her tomorrow. I offered her a hug, we hugged and she sobbed for a solid ten minutes into my shoulder. I told her she was okay. That it was okay.

When I got home I cried myself, because I could not believe she was going through all of that alone. I cannot even imagine how isolated she must have felt. Once I pulled myself back together I sent her a text reminding her to reach out any time and I'd do my best to come over. Like, any time at all.

I hope she is here tomorrow.

906 notes

Text

Tiktokers wanting lolita to be fast fashion and buy their knock-offs from amazon that aren't even going to look right on them bc of how shitty they're made when the rest of us have taken years to build our wardrobes bc we're not impatient brats. And they call us meanie gatekeepers for trying to teach them 🙄

We buy cheaper taobao brands, we save up, we prioritize, we buy second hand and wait for sales. Lolita is never going to be fast fashion and it's not going anywhere so be patient.

I was like 12-13 when I discovered lolita fashion thru looking up stuff about Chobits lol. I lurked egl LJ for the longest time just learning things (as a result, I didn't have much of an ita phase.) My first item was a secondhand skirt of my dream print at 18, I think. I didn't have a decent-sized wardrobe till recently. I'm now 30 lol.

Y'all can wait if lolita fashion is actually important to you.

3 notes

Text

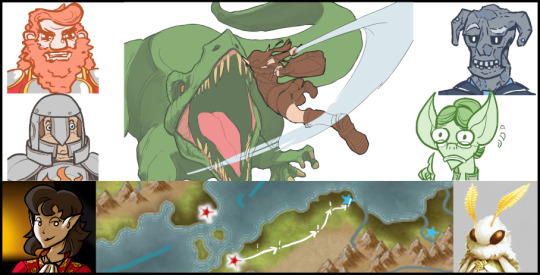

Two-Faced Jewel: Session 9

The Slaying of the Bobbledragon

A half-elf conwoman (and the moth tasked with keeping her out of trouble) travel the Jewel in search of, uh, whatever a fashionable accessory is pointing them at. [Campaign log]

Since slaying a serial-killer dragon is a little outside the party's expertise, they're off to Cauterdale to enlist the aid of the Deathseekers' Guild! Having gotten a good night's sleep at a druid village, and not eaten, they're ready to take on, uh...

Well, some sort of very large monster that Zero kindly drew for me.

In the morning, they rather uneventfully get up and get back on the road, thanking the villagers for their hospitality. And the remainder of the trip to Cauterdale is likewise brief and uneventful, right up until the fire.

Saelhen du Fishercrown: the what

Benedict I. (GM): The fire.

Yeah, the forest and the road up ahead are ablaze, sort of blocking passage. The dirt road isn't actively on fire, but the trees on both sides are, making it pretty risky to proceed. The team opts to send Oyobi up ahead to scout the situation- and pretty soon she comes back with a report. Apparently, just past the visible fireline, the forest is totally burned down- just charred stumps as far as she could see, right up to the city walls. The fire itself is just, like, 10 meters wide or so, so it's totally something they could just dash through.

It takes some Animal Handling checks to coax the giraffes through, and the ones that balk get them and their riders a little bit of chip damage from heat and smoke inhalation, but the party is pretty much able to push through to the blasted wasteland of charred tree stumps surrounding Cauterdale.

They notice a few people in strange armor in the distance, doing something near the fire- from the seemingly controlled nature of this burn and the name of the town, they conclude that those are fire squads doing this deliberately, and don't get involved. It's a fine conclusion, and the party begins walking the remaining mile to the city.

As they approach, they notice... a little ways off from the main gates, something is attacking the city walls. Guards atop the walls are manning some sort of huge harpoon guns, and they seem to have already slain several of the... whatever these things are. The remaining one, though, seems larger and more resilient than the others, continuing its assault despite the several harpoons already lodged in its flesh.

What they see is a huge reptilian monster. It's probably not a dragon- no wings, and it doesn't appear to be using a breath weapon- but it's the size of a dragon, with tiny arms, headbutting the metal walls of the town repeatedly.

Orluthe makes his Nature roll to recognize this thing- he's heard of them before. They're called "bobbledragons"- some sort of deformed mutant offshoot of true dragons, incapable of speech or flight or magic but still possessed of monstrous strength and durability.

Luckily, the bobbledragon doesn't seem to be in between them and the main gate- the fight is far enough away that they could potentially just walk up and head into town, assuming they'll open the gates during a situation like this. Hell, they don't even need to open the gates- if the guards just drop a rope, they should be able to just climb over. That seems like a decent plan, so Saelhen and Looseleaf begin working together to draft a use of the Message spell to ask the guards to help them inside.

Then they notice that I've been moving Oyobi's token on the map in the direction of the fight.

Oyobi, blinded by bloodlust and/or extra-credit-in-Severe-Zoology-lust, is determined to help fell the bobbledragon. Their attempts at persuasion fail, and Oyobi, undeterred, continues to charge the giant fucking T-rex that is making huge dents in the walls of a city.

As Oyobi runs for it, and as the party follows behind in hopes of stopping her from making a terrible mistake, the bobbledragon jumps and seizes one of the guards on the wall in its jaws, demonstrating its +10 4d12+7 bite attack by immediately oneshotting its victim.

Looseleaf: oh god we're all going to die. you're using the real t-rex statblock. that thing is challenge eight. it is made for a party of four level eight adventurers, so either we are all going to die here, or the guards are going to show us why they are professional fighters and we are students.

Benedict I. (GM): "Shit! It can jump!" "No!" The guards seem upset.

Not promising.

Looseleaf: This thing does sufficient damage to oneshot any of us with a perfectly mediocre hit. Looseleaf right now is kind of thoroughly convinced that Oyobi is actually literally about to die. In that light, Looseleaf is going to message Oyobi again. And she is not going to get any closer. Actually, she's going to back off, put distance between herself and the monster. [Oyobi that thing is going to bite you in half get back here you are going to die.]

Benedict I. (GM): Roll Persuasion! DC 20 again.

Looseleaf: 17 / PERSUASION (1)

Oyobi Yamatake: [I'M GONNA LIVE FOREVER!!!]

So... that's a bust, and Oyobi finally reaches the dragon and begins her assault. Miraculously, her flying leap hits, and she digs her sword in... for thirteen damage.

The guards return fire against the bobbledragon, and one of the harpoons catches it in the chest- but it doesn't go down, and the second harpoon- manned by just one guard, after his partner got crunched- misses. Another guard, without a cannon, throws a spear- and gets not only a critical hit, but a max damage critical hit, spearing the thing right in the eye.

...for eleven damage, because these are ordinary CR 1/8 Guards, but still!

Saelhen tries to distract the bobbledragon so Oyobi can run and hide, but... her arrow goes wide, and Oyobi isn't interested in running and hiding anyway. The bobbledragon, targeting whatever did the most damage to it recently with its bite attack, jumps and bites the whole damn harpoon gun out of the guard tower, leaving the guards without heavy weaponry.

And then with its tail, it tries to slap the insect that just stung it in the rear.

...and rolls a 3, meaning Oyobi gracefully backflips over the attack and strikes a dramatic pose.

Looseleaf: God, she did not deserve that dodge. She got so fucking lucky there.

Saelhen du Fishercrown: she really didn't

Oyobi Yamatake: "When you get to Dragon Hell, tell them Oyobi Yamatake sent you!!"

Looseleaf, in the interest of communicating to Oyobi how much danger she's in, makes use of an upgrade to her Rend Spirit attack she learned while studying Lumiere's notes on pain. With Painread, she can get some feedback from something whose spirit she disrupts, and figure out exactly how bad a shape it's in. She does so (dealing a cool 16 damage as she does), and learns how big this thing's remaining hit point pool is, so she can tell Oyobi how unlikely she is to survive long enough to take it down.

...It, uh, it was already pretty hurt when they arrived, and it, um, has nine hit points left. And it's Oyobi's turn.

Oyobi Yamatake: Oyobi dashes forwards, Naruto-runs up to the T-rex's throat, and does a spinning leap that slashes open its jugular. It roars, and the roar swiftly fades off as its breath escapes.

Saelhen du Fishercrown: God dammit, Oyobi.

Oyobi Yamatake: "YES! YES! B-S-U! B-S-U! B-S-U!" "THAT is how it's DONE!" She is jumping up and down, doing a celebratory dance, the works. "Flawlessed the boss! Hell yeah!"

Yeah, so... I had kind of been planning on her getting oneshot and laid up in the hospital, as a sort of character growth thing and also keeping her out of the way of certain events in town, but, uh... the dice... didn't exactly... share my priorities.

With the bobbledragon slain, and Oyobi doing an extremely obnoxious victory dance, the rest of the party springs into action to stabilize the guard who was used as a chew toy. Thanks to his plate armor, he hasn't lost much blood, but he's got more broken bones than not, and his prognosis wouldn't be good... if it weren't for the healer's kits Looseleaf had the foresight to buy for everyone. Saelhen stabilizes him, and Orluthe calls on his goddess to Lay On Hands to save the guard's life.

Then there's this guy- the captain of the guard, who fought in the battle with a fancy crossbow that shot flaming bolts. He demands to know who the party is, seeming kind of annoyed that they rewarded weakness by saving the guard's life.

Benedict I. (GM): He looks down at your medical kit. "Y'know, all of my men are prepared to fight and die for our home. You really want to take away this man's glory?" The injured guard looks up. "Uh, sir, I- it's fine, actually..." "Feh."

Looseleaf: This guy immediately seems like a bad boss.

Saelhen du Fishercrown: Oh, he's ridiculous. Okay, that changes the tenor of this conversation somewhat! "...I apologize, sir," says Saelhen, bowing to the guard on his stretcher, "if I have diminished your victory with my carelessness."

And rather than give this guy any more of the time of day, Saelhen asks the random guard his name. (And then I have to give him one and make him a character, whoops.)

Medd Cutter here is thankful for Saelhen's assistance saving his life, and Saelhen pledges to remember his heroism. The commander feels- by design- somewhat left out of the heroism-remembering, and declares that he is REX SCAR, and Saelhen kind of blows him off. He's not happy, but...

Captain Scar is still the sort of person who is very impressed with anyone who rolls up and kills a bobbledragon just because they felt like it, and despite Saelhen's calculated snub, tries to get buddy-buddy with the group of obviously very powerful people who just arrived. He decides to help them through customs without going through the usual processes, much to the chagrin of...

...Long-Tongue, Cauterdale Customs and Border Inspection Officer of Cauterdale, who's very loquacious and wordy and redundantly repeats what she says in different words to phrase things differently in a somewhat unnecessary fashion for no real reason. Rex bullies his way past her, but Saelhen- as another snub, and just to be... nice? (What's her game...?), hands her the 300-page history of the de la Surplus family as collateral for a deferred border inspection.

Inside the walls, Cauterdale is a very crowded place. It's like 80% slum, choked with buildings constructed of a patchwork of scrap metal and discarded siding, without much wood to speak of. The streets are narrow and bustling, and the general vibe around the place is impatient.

The remaining guards escorting them (Rex went off someplace) inform them, when questioned, that the town indeed burns down the forest around them- since they're near the jungle, horrible dangerous things tend to come out of the trees to attack them, and their harpoon defenses are most effective when they can see their attackers coming from a mile away, with no obstructions. Looseleaf asks if bobbledragon attacks are common.

Benedict I. (GM): Another guard shakes his head. "No, that one was pretty crazy. Usually it's just the giant spiders, or the giant mosquitoes, or the mushroom demons." "We've had a few bobbledragons before, but that was like, four at once."
Looseleaf: "Oh gods there's already giant spiders?!" "We're not even at- I thought this was a pine forest still!"
Benedict I. (GM): "No, that's usually after it rains," Medd says.
Looseleaf: Looseleaf casts Druidcraft. Please tell me it's not going to rain.
Benedict I. (GM): Nope! Clear skies for now. "Whoa, cool."
Looseleaf: "Thank the gods of sea and sky and weather and everything even tangentially related to weather," she says. "No rain." "I hope it never rains, ever again."
Benedict I. (GM): "Haha, better stay away from..." "Wait, where are you headed?"
Saelhen du Fishercrown: "The rainforest," adds Saelhen, mildly.
Looseleaf: "Ttttthunderbrush, and yes I know that place is crawling with spiders NOERU SHUT UP,"

Then Looseleaf asks about what they're there for: the Deathseekers' Guild. Unfortunately, the guards tell them that the Deathseekers... probably still exist, but they're like, a weird secret club of old people who think they're too cool to join the guard. They give the party a couple of leads: apparently the Temple of Andra keeps tabs on them, and a guard named Mags was the last to see them, since they were recently seen leaving the city.

The team splits up- Looseleaf and Orluthe head for the temple, and Oyobi and Saelhen head for the guardhouse to talk to Mags. (Vayen... is still gone, after vanishing as soon as the bobbledragon fight started.) The latter group does their thing next session, so...

After dropping off their rental giraffes, they head inside to meet...

This guy, working the reception desk. He seems to be made of rock, and when he talks he rumbles.

As Looseleaf explains their dilemma and their need for Deathseekers, this guy takes a keen interest in their plight. He's very "hmmmm, iiiiiinteresting, oh i see, you don't say?" about the whole thing, making a very normal interaction seem as ominous as possible.

He tells her that the Deathseekers, to his knowledge, should be back in the city from their unspecified errand inside two days, and offers to take a message.

Looseleaf: "I don't suppose they're looking for a green dragon, are they?"
Benedict I. (GM): This guy's smile keeps getting wider. It's kind of creepy. "Hm? What makes you say that?"

As she explains about the dragon, he offers her and Orluthe a candy from a bowl on the desk. After some hemming and hawing out-of-character because the creepy rock man is offering you suspicious candy, they eventually opt to have some, because really, Looseleaf isn't suspicious of this guy. Hers is lemon-flavored. It's tasty.

Then, as she describes the empty tower with the corpse of the torture wizard in it, this guy's demeanor changes suddenly from "creepy wry amusement" to "genuine concern". He tries to put on a poker face, but him having a poker face when he's until now been all creepy-friendly chewing the scenery... stands out. He gives her a strong assurance that the Deathseekers will handle this problem for her.

Benedict I. (GM): "I... thank you, for this information."
Looseleaf: "You're welcome. Please, uh, make sure that the Deathseekers get this information as quickly as possible. The dragon eats a corpse a week and there's only three corpses left in the tower, there's a very real deadline on this."
Benedict I. (GM): [rolling 1d20+4] (Insight) 17+4 = 21
Looseleaf: Belatedly, Looseleaf realizes she's made a mistake.
Benedict I. (GM): "You say... the dragon eats three corpses a week?" "Only three corpses left in the tower?"
Looseleaf: Namely: Looseleaf has no good reason to know the fact that the dragon eats a corpse a week. Since she's never met the dragon.
Benedict I. (GM): "Curious information." "How did you come across it?"
Looseleaf: "Uh, erm, uh." Shit.

Looseleaf opts to tell the truth about Arnie, to avoid spinning a dangerous web of lies for herself- after all, Arnie's not worth lying for. She does describe him in as sympathetic terms as she can, though, and asks this guy not to harm him if possible- she doesn't want to break her word to Arnie if she can help it.

Benedict I. (GM): He takes a moment to process this. "...Very well." "My people will be the soul of discretion." "I thank you very much for your generous contribution to the Ecumene of Understanding."

Looseleaf notices that something is wrong.

This guy is the receptionist. He's not a bishop or anything. He's not even wearing priestly vestments- just a nice suit. And he's speaking as though he's in a position of power- "my people", he says.

And after considering various possibilities, she tries something. A shot in the dark, but...

And the way Looseleaf plays this, is... "quit acting like you don't know what I'm talking about, c'mon, the jig is up". She takes out the letter she found in Lumiere's tower and shows it off, as proof!

And this guy keeps denying it, and getting increasingly more panicked, and looking nervously over at Orluthe, and asking her to please stop, shh shh shh shh, and it's when he begs her to have a conversation with him in private please that she makes the connection. If this guy is affiliated with Lumiere, who's apparently affiliated with some sort of secret conspiracy that's affiliated with some sort of deific usurpation... he maybe doesn't want to have that conversation in front of a cleric.

Looseleaf: "Okay, Orluthe, uhm. Sorry, so," Looseleaf whispers into Orluthe's ear. "Long story short, turns out my sister, who left my village way before I did, ended up falling into some kind of magical secret society. The kind of secret society with Hal Lumiere, i.e. 'the torture wizard who came up with all those pain knives that we all got stabbed a lot with', was apparently a very active member of."
Benedict I. (GM): Oh my god, um.
Looseleaf: "So, uh, I'm kinda freaking out about that, right now, but if my hunches are right then I'm the sister of someone important in their organization?"
Benedict I. (GM): As you start whispering, he tries to interrupt. "Please do not say things to him!" "Please let us speak in private!!"
Looseleaf: Oh he's freaked out now huh. "Anyways that's why I am actually indeed going to speak, with this guy, in private," Looseleaf finishes. "And if I don't show up in a half-hour or so, then things have probably gone lopsided." "In which case you should find everyone else and tell them to, I dunno, come save me or whatever." "You got all that?"
Benedict I. (GM): The rock man looks distraught.
Orluthe Chokorov: "I, uh... think so? This is really... I'm not sure it's safe..."

With a good Persuasion roll, Orluthe agrees to stay behind, and the rock man leads Looseleaf into a backroom whose doors and walls seem warded heavily with some sort of abjuration magic. A secret saferoom.

The man describes the problem: the gods don't know that they exist, or didn't until Looseleaf went and told a cleric of Diamode that they existed. Clerics, in this setting, channel divinity literally- their gods come into their heads to do magic for them, meaning anything a cleric knows is something a god can know, if they care to check.

Benedict I. (GM): "Because if the next time Diamode is in that kid, if she goes looking for that memory..." "I mean, she might not. And you didn't mention anything about our aims, so she might consider it beneath her notice." "But that, right there? That was nearly game over." "And I can't just kill you, because if I did, Yomi would end me."
Looseleaf: "Yeah, I'm not incredibly foolish, I haven't actually shown anybody else Yomi's letter." "Nobody knows that Lumiere was involved with... deicidal blasphemy." "That's what this is about, right? Thereabouts, in terms of sheer magnitude and hubris?"
Benedict I. (GM): He sighs. "It's not like that." "At least, it's not all like that." "The Project is... fractious." "The less you know about the project, the less you're able to carelessly blurt out about the project to your cleric friends, or to anyone who tries reading your mind or tricks you into a Zone of Truth..." "The safer we all are." "With as much as you know, you're already dangerous. It'd be best for us- and you- if you dropped this. Never spoke of it to anyone."

Looseleaf points out that it's good that she found the letter, because that tower was sitting abandoned for a year- anyone could've walked in and read it, since it was lying on a bookcase in the open.

This is somehow not taken as good news- when he finds out that the letter could've potentially been read by anyone, that there was a security breach for a year...

Looseleaf: "Look, my man, next time you want to send a letter, by the way, use... use some encoding." "Don't just write things in plaintext like a chump, by the gods."
Benedict I. (GM): "He was supposed to burn after reading."
Saelhen du Fishercrown: he's too dead for that!
Benedict I. (GM): "Wait, you said it was... out in the open?" "But he's dead?" "Either he was an idiot, or... someone else opened his mail." "Except... Yomi should've hand-delivered it, so..." "...well. We'll definitely look into it."

He brings up sending for someone to do memory magic to handle the breach- but he realizes he can't have that done to Looseleaf, because Diamode would notice if someone tampered with her cleric's memories, and someone needs to still know what's up so they can keep Orluthe away from the truth. (Plus, she figures she'd notice the inconsistencies and end up sleuthing it out again.)

Looseleaf asks if Yomi is doing well, and gets... that she's intense, and powerful, and she probably thinks she's "doing well", but... he doesn't know about happy.

Lastly, he shows Looseleaf a symbol- a blank circle, with the elvish character 人 drawn underneath. The symbols of gods are typically circles with a design inside- so the meaning of this and its relationship to the nature of the Project is fairly easy to infer.

Benedict I. (GM): "If you need to prove to someone you're in the know, without blurting out a bunch of dangerous details, this is the mark." He then eats the paper and the graphite stick he used to draw it.

Next time: Saelhen and Oyobi grill the guard Mags for information on the Deathseekers, and connections are made with powerful individuals.


Explorers of Arvus: uhhhh / 3.23.21

today's notes are different from usual bc. well. you'll see

LAST TIME ON EXPLORERS OF ARVUS i broke my sleep schedule and am barely existing so this is fine. we went back to camp vengeance an uhhhhhhhhhhhh we are now going to fuck off into the forest to die or prove a very important point

oh god we forgot to level up

[mgd voice] BOOSTING NYX TO MAXIMUM LEVEL

im so fuckin tired. what on earth am i doing. how do i level again

k is not here this time but instead we've got mae+nii bonking their heads together to simulate 2 braincells and so far it is not working. i might just have to like fuckin, drop out n zzz partway thru or somethin. would be fun to see how chaotic michael makes charlie in my absence

oh wait i can do d&dbeyond i think. how do i work this again. will i ever remember i have shield

what level am i. level 6? pog. oh shit i think i have a new thing

. new spell

. 3 total 3rd level spell slots

. bend luck! i can now screw people over on purpose (and will probably use my sorcery points FINALLY)

michael is leveling charlie up bc my brain is apple sos

ASDXFKLJFH I FEEL CALLED OUT zec rb'd my most recent art of MaX with "all i know about xem is that leo likes xem a lot that's the extent of my knowledge" THANK U FOR SUPPORTIN ME ANYWAY

there will be less blaseball distractions than last time bc blaseball is now on siesta. however i will still have MaX brainrot in the background bc i was drawing xem

wyatt mason my beloved

OKAY I GOTTA MUTE THE TACO STAND FOR THE ENTIRETY OF D&D i cannot and will not get distracted. we can do this. we

nintendo wii

we havent even started yet and im already incoherent

ok i have made a decision and that decision is that i do not have the brainpower to play. however i do have the brainpower to take notes hopefully! so ill just like. vibe. this will be a first

oh man im gonna pick up Blink. charlie is gonna be a fucking menace to herself and others

oh my god its not concentration so charlie may continue teleporting while unconscious. thorne is going to hate this

[charlie gets her soul eaten by a ring] [charlie singing dragonston din tei at halvkWAIT JORB HAS A PRIZE

jorb got a thing! an evil genius thing! figure man. fugrine. figuring. help

GREEN HAS DIAGNOSED ME AS TIGREX MONSTERHUNTER i love this

my notes are a disaster. this is so sucks

serotonin is stored in the wiggly zoomy jorb camera

jorb: his pinky is the size of the rest of his fingers

leo: he has a disease

jorb: he has a disease.

jorb: that disease is male pattern baldness

leo: [reduced to tearful giggling for mysterious reasons]

LAST TIME, ON EXPLORERS OF ARVUS: we've returned to camp vengeance! taure is still unconscious, which is not very great. camp vengeance is doin better tho!

michael, as part of the recap: ingrid is getting railed by her new girlfriend,

first dice roll of the day is michael rolled a 1. good start

OH THORNE IS AN ARTIFICER NOW thorne took a level in artificer!

"...it's like figuring out the right mathematical equation to summon a gun."

group is gonna go check out the statue that we passed by now that we're not WHAT DO YOU MEAN PONK AND GEORGE CANONICALLY HAVE IBS thats it im not looking at 772 anymore

im doing a bad job of paying attention but at least im Present

SIERON LEARNED FLY AND USED IT ON CHARLIE

michael: what do you want to do with your new flying powers?

leo: how many problems can i cause in 10 minutes

guard 1: ...why is the halfling flying?

guard 2: [rolls a 3 on intelligence] i think they can just do that

groundhogs, the real scourge of the campaign

silje and sieron are gonna hunt a big elk. they got distracted and sieron is putting grass on silje's head. i think

WAIT WE'RE ON WATCH NOW FUCK

we have discovered kali's tragic backstory whoops

update i am. too sleepy for this. good night everyone

[ and then leo went and somewhat took a nap! solar, normally playing thorne, started playing charlie in my stead. @jorbs-palace, local hero, started taking shitpost notes in my stead. ]

jorb's ghostwritten notes for leo:

help solar is immediately doing a cursed voice for charlie. charlie can do so many crimes

congratulations, charlie is now temporarily immortal!

dwarves can hit things with their beard

kali wants to know if she's legally allowed to bail

she'd feel really bad if she had to loot our corpses for payment if we died.

we have entered the Tree Zone

one of the corpses is now a flamingo (has one leg)

silje has decided to stab the ground. take that, dirt

kali was large size for a second there but then she remembered to not be a giant

"you accidentally deleted my cat?!"

silje has learned naruto cloning jutsu

be gone, thot

oh boy, making an int check to look at a statue! 11! silje is dumb apparently.

hmm. the statue has divination magic. it's also affecting silje.

SILJE LEARNED A 6TH LEVEL SPELL? its only single use but still

you solved my statue riddllllleeeee

thorne forgot to have eyes

its a shame mac and cheese doesnt exist in the d&d universe

wizards are just math criminals (the criminal part is setting people on fire)

sieron crit fails a check but it was still a 9 because of having +8

thorne is looking for what's weird!

uh oh music got scary, never a good sign

hmm. those leaves over there weren't dead a moment ago.

UNDEAD TROLL TIME! rolling initiative

"it's ok, im a wizard, it's my duty to be correct." "wow! waow!"

woooah here he comes

IT JUST DID HALF SIERON'S HEALTH AS A PASSIVE END OF TURN EFFECT?

thorne backed up and cast eldri- oh, ray of enfeeblement. character development continues

charlie is going to just blink out of existence for a minute.

big chungus has grabbed silje and sieron. BIG CHUNGUS HAS THROWN SILJE AND SIERON.

sieron is using hit and run tactics! isn't good at his extra attack yet though

silje is activating bid bid blood blood blood

thorne uses beam of skipping your leg day. troll's legs are now skipped.

michael is trying to determine what a 'clavicle' is

"does that mean the star trek kind, or the bdsm kind?"

charlie wants to cast magic missile.

charlie has vanished back into the ethereal plane mid-taunt

silje has decided to not get bitten today

silje may or may not have stats.

oh, right, trolls are weak to fire! and also we forgot to upgrade sieron's firebolt. so it actually hurts now!

silje is full of knives and blades and does 31 damage in one turn!

charlie shouts words of encouragement from the ethereal plane. a nearby ghost vibes with this.

🎉 eldritch blast 🎉

kali remembered she hates the sun

silje is enthusiastic about charlie saying "get him cat boy!"

charlie contemplating using fireball to nuke the troll and also the entire stonehenge

charlie has decided to use magic missile instead, probably for the best

the troll bit at charlie SO POORLY it broke some of its teeth on the ground

charlie is too small to hit

accidentally rolled advantage on a firebolt, so got to learn it WOULD have done 29 damage with a crit but instead it missed because it was not actually with advantage

silje has just sliced open its entire back and made a spray of frozen blood! radical. big boy is down!

we have burned the body because we are not stupid. well, we ARE stupid, but not stupid in the way of leaving a body full of necrotic magic around

[dr coomer voice] i think it's good that he died!

we're also doing a funeral pyre for the other corpses that were around. just to be sure.

our loot is: the satisfaction of a job well done

thorne is cosplaying as charlie

charlie has located the direction troll came from! she found the 'the way to sweet loot' sign

thorne is apparently better at survival checks than our hired guide? wack

we found a viking house! it has: mead, a shield, gravestones,

found a gold coin in the mead! maybe it was thirsty

oh theres a LOT of coins in there actually. 60 gold and 120 silver!

have successfully pointed out a hole in the DM's logic :)

there was a raven! it cawed and left. ok bye buddy

and that's where we leave it! heading back to camp vengeance next time.

someone rated this session a 7.2 out of 10, which is very specific

good night mr coconut


Call Me BREW-know Mark!

It's the first workshop in this weekend series, some hands-on time to learn how to brew coffee at home. Yes, it's not rocket science but this fun little activity had me convinced that there is science to making the perfect cup!

I googled 'weekend classes' and found this on offer. I've always been a coffee fan, but I wouldn't call myself a legit one, which means I fancy a 3-in-1 as much as an espresso. You could say I'm only really going for that comforting feeling you get from sipping a hot drink, but with a kick, which is why I don't share the same sentiment for tea.

There I was, an eager beaver up bright and early for a Sunday morning class. It's only my third time at Yardstick, and I definitely dig the cozy workspace vibe.

We're a small group of five: I ended up fifth-wheeling with two couples, plus Jon, our coffee expert-slash-teacher for the day, who kicked off the session by asking why we signed up for the workshop. For the first couple, who travelled all the way from Laguna, it was a gift, since they're such coffee addicts; one person said curiosity, and another said it was a good knowledge head start for a business. As for me, it was #weekendproject = fill my weekends with something that adds value... sparks joy! 😂

Obviously, I was more interested in taking photos, so I did away with notes. However, if there was only one key take-away, it has to be that COFFEE IS 98% WATER, so the type you use actually matters a whole lot ~ FILTERED > DISTILLED.

On we went then to get our hands dirty (or very, very clean ~ wash your hands please) to try out some recipes!

It brought me back to my Chem lab days

3x3

Three rounds with three recipes each, I get to fly solo ~for obvious reasons. We exchanged notes on what we thought about each brew.

Round 1: French Press

We were asked to dive right into the brewing process, only to learn the error of our ways afterwards, like... forgetting to AGITATE!

We were all too gentle with our grounds, but apparently, you gotta get rough to get the most out of them beans. AGITATE ~a fun word to really just mean stir vigorously.

Round 2: Kalita

You can rid your coffee of that papery taste by pouring hot water over the filter beforehand.

There's also 'the PURGE', sacrificing six perfectly fine beans in the grinder to wash out the last batch. And so I did the purge, the grind, then release.

Side Note: Remember to hold the cup before you release, else you end up throwing away fine fresh grounds, which is exactly what happened to me ~and it was on my second attempt #clumsy

Round 3: AeroPress

Purge. Agitate. Press.

Who knew that small differences in steeping time (1 vs 3 vs 5 minutes), water temperature (80 vs 90 vs 100 degrees), and grind size (14 vs 16 vs 18) can mean either a good experience or a crappy one! For instance, I now know why my recent brews have been extra sour: under-extraction ~ I guess you really can't hurry a good cup (...just like love)

Learned loads in the quick session. Love it when I get more just from listening to other people's Q&A. Really glad I was in this mix!

More courses available ~ who knows, latte art could soon be part of my arsenal of coffee-skillz

WHAT'S NEXT?

It's probably worthwhile to get to exact measurements (grind size and weight, water temperature, steeping time), but on the daily, I'll have to make do with approximations. I'll eventually have to beef up on tools (think weighing scale and bean grinder) to calibrate my bean-method combo to perfection! After all, to get a close approximation, you need a clear idea of the exact.

A wine appreciation class should be a fun follow up for a weekend workshop. And while you can already learn loads online, I still think HANDS ON >> HOW TO YOUTUBE VIDEOS

This weekend is rated A for A~xperience! 😋


‘Oseaaq Burns: SftE Writing Exercise #2’ by Eddie White

"Oh………em………GEEZY, NADY! Lookit! They have a fish taco stand………UNDERWATER!" Shantrice shrieks, overcome with joy and wonderment. This dimension they've been transported to—a completely aquatic version of earth called Oseaaq—is the most remarkable thing she's ever laid eyes on, but for Sinead? Not so much.

"Shan………for the love of God………I already saw it! I'm not fuckin' blind," she gripes, rolling her eyes. "I don't know what's so fuckin' amazing about some muthafuckin' fish eating fish anyway."

Sinead has been moping around, arms folded in indignation and feeling her usual regret for tagging along with her best friend. For the life of her, she can't figure out why she unfailingly agrees. She is always thinking that she'd rather not, intending to rebuff Shantrice's offer with the strongest no ever uttered, but the results are consistently the opposite. Every. Single. Time.

"Well, it's not about fish eating fish, obviously, but I mean, how many times have you been to an aquatic dimension with a damn fish taco stand?" Shantrice pauses after that inquiry, but abruptly continues before Sinead can respond, since the question was rhetorical. "I'll tell you how many: ZERO! This is fuckin' newsworthy shit! Uncommon territory, literally!"

"Shantrice………we're not here to eat and gush over food stands," Sinead sighs. While it is true they didn't come there to eat, she can't deny the rumblings in her tummy. Nevertheless, she ignores her hunger. "Actually, we shouldn't be here at all, but you and that damn professor and y'alls inventions, always opening up gateways and shit."

"Hey! Don't fault us for our interest in the unknown! We do this for science anyway!" Shantrice declares as she marches along, holding her head high like she was Madame President speaking to an auditorium of packed students and constituents. "Besides, you're never doing shit. Learn to live a little."

"Ugh! Whatever, Shan!" Sinead screams, storming off in a rage. Due to her current disposition, she accidentally activates one of the abilities she has copied, causing jawbreaker-sized holes to appear on her face, her neck and the back of her hands. These holes erupt with an intense, silver fire that bursts through the protective gear she's wearing.

Even with exposure to the aquatic atmosphere, the flames aren't being squelched, which doesn't bode well for Sinead as her suit is now filling up with water. Before she can even get a grasp on the situation, the entire area is ablaze, including the fish taco stand Shantrice was raving about.

"Oh no, Nady! What the fuck have you done!?"

"Don't yell at me, dammit!" sneers Sinead. "I wasn't trying to do this, it just happened!"

Her anxiety has reached an intensity of 50,000 as she struggles to repress the flames and deal with the water entering her suit. Although the uniform has an advanced form of nanites that can fix any kind of damage as well as suppress Eclipsed abilities in dire situations, they aren't able to perform those actions for some reason. The fire is apparently overpowering them, something Professor Corsair most likely didn't factor in.

Also, being that the required training to manage this power had only recently started, it's no surprise Sinead is experiencing zero success.

"Shit! I'm sorry, Nady! I really am," Shantrice replies, feeling distraught. "I know you weren't. Nevertheless, we gotta do something about this fire and you—IMMEDIATELY!"

Shantrice is trying to keep a cooler head—no pun intended—and simultaneously bring soothing to her bestie. Quite often she forgets just how quickly Sinead can become flustered in intense situations. It's amazing that UpShift was even able to convince her to join the Safeguard Junior Guild. Although, she has surmised that Dexter joining may have something to do with that.

"Well I don't know what to do, Shan!" Sinead wails. "The guy I replicated this power from didn't know how to use it himself, which left me shit outta luck!"

"Okay, okay! Just try to calm down," Shantrice sighs, simultaneously feeling exasperated and enervated. "Your panicking is making me panic."

"Well you should be panicking, Shan! I might drown out here!"

As Sinead is persistently trying to recall the flames, something comes barreling towards her in a state of supercavitation, slamming into the poor girl. The impact knocks her about three yards away from Shantrice, and possibly out cold.

"Ohshitohshitohshitohshit—SINEAD!" Shantrice yelps. She raises her left arm and presses a rectangular button, arming a miniature cannon located inside the forearm of her armor. "Alright! Whoever just hit my fuckin' bestie, show yourself or I'm blasting every cotton-pickin' thing in sight!"

Overhead appear a trio of massive shadows, blocking out what little sun was visible. As a possible response to Shantrice's threat, the shadows descend and reveal themselves to be a humanoid shark, whale and squid.

"Oh my fuckin' Lord………," Shantrice shudders.

Realizing she's in over her head, she tries to activate the teleporter on both her and Sinead's suits, but the latter is too far away. Her hopes of reaching Sinead are dashed for now, as the path to her retrieval is blocked by not only the humanoid creatures, but a wall of roaring flames.

"ShitshitshitshitSHIT!"

"Don't go screamin' now, bitch," roars the humanoid shark, which appears to be a hammerhead and also female. "Where's all that tough talk from a few seconds ago?"

"Tough talk? Tuh! Bitch this is who I am!" Shantrice growls. "Now, I'll admit, y'all got me outnumbered, but one of y'all attacked my friend, so somebody's gotta pay. Who's it gonna be?"

The humanoid whale, also a female, steps forward. "I can handle this runt, Demetria, unless you object?"

"Go right ahead, Nadine," Demetria chuckles. "I wanna see what the bitch is really made of."

Shantrice grunts and cracks her knuckles, an action which sounds extremely weird because it reverberates inside the gauntlets of her armor. "Alright then, fuck it. Bring it on, Shamu. I'm gonna kick your tubby ass."

"We'll see about that!" Nadine roars, lunging towards Shantrice at breakneck speed, almost too fast for the girl to dodge.

"Fuck! You move like a fuckin' NFL player!"

"I don't know what this NFL is that you speak of, but I did play Oseaaq-Orb in high school," Nadine explains.

"Yeah………that's pro'lly the same thing, but I'm just gonna end this discussion here. This is weird," Shantrice groans.

"Suit yourself!" Nadine barrels towards Shantrice in a supercavitating state, similar to what Sinead was hit with moments ago. Either she was her assailant, or all of the inhabitants of Oseaaq possess this ability.

"You're not gonna pull the same move twice, wench!" Shantrice tightens her left fist, firing a blast from the cannon she armed earlier. "Take that!"

A pulsating sphere of electricity soars towards Nadine, striking her directly in her blowhole. The attack sends a shock through her nervous system, disabling her for the time being.

"Well shit, that was easier than I thought!" Shantrice snickers, feeling overconfident. "One down, two to go! Who's next!?"

🌊🌊🌊

#creative writing#nature#anime#writing#writers and poets#writers#writers of wattpad#writers of the future#memphis#light novel#superhero#speculative fiction#science fiction#writing exercise#writerslife#comics#comic books#ados#adosaf#blacklivesmatter#black writers#trans
