#rationalists

Text

i know i have mutuals and followers who are/were close to rationalist circles, or rationalists themselves, or who are simply anarchist trans girl hackers who seem to be broadly familiar with the subject. and tbc it really REALLY isnt for me (im vaguely familiar with the arguments and have read about rokos basilisk and lesswrong etc and it's befuddling to me). but do you guys have any good writings or posts on how much it's influencing tech giants and in which ways? there's been a lot of sensational writing on the ftx polycule etc and how elon and co are claiming to embrace longtermism, but I'd love to see stuff on what they specifically get out of it and how close their interpretation is to the og ideas and circles, and what movements and groups DO exist today and how much they oppose or embrace recent tech industry developments (from ai chat and art to crypto crashing to elon and others indicating they're fans of the whole thing)

tbc this isn't about drama or sensationalist stuff, and im not seeking intellectual arguments on why it's the right way or utter bullshit. rather im trying to understand how these ideas developed, where the movement is today, and what kind of cultural and intellectual influence they actually DO have on tech giants and tech billionaires as a whole

#tagging this for people to find#lesswrong#effective altruism#rationalists#bayrats#longtermism#artificial intelligence#eli talks#tech

19 notes

Text

Robert Evans saying that Roko's Basilisk is basically atheists reinventing Calvinism is spot-on.

"Roko's Basilisk is Pascal's Wager but God is an evil robot."

I also like that he points out that all of this is people whose enlightened idea of how a future super-robot will work comes from Terminator and The Matrix, and both of THEM were just stealing it from Harlan Ellison's I Have No Mouth, and I Must Scream.

...Except in that story, the God-Robot was doing that for a very specific reason in the context of that story, not just because it was generally an asshole.

Who has the foresight to conceive of an advanced AI that is all-powerful, yet then they go "but it's probably going to be a giant petty bitch just like we are."

Why would it be? It's a robot. It doesn't have the same needs and wants that we do.

Do these people really conceive of "advanced intelligence" as automatically being self-righteous and inherently cruel?

That says way more about how fucked up these people are than anything about robots.

And if they think this...why are they trying to make it? Because a lot of them are programmers and they're working for AI startups. If you think there's no god now, and if we make one it will be evil, why the fuck are you working on it?

At least when people invented God thousands of years ago, they fantasized that he was fundamentally good.

Who actively tries to invent an evil supercomputer?

Again, I think that tells us everything we need to know about Silicon Valley.

...Also LLMs aren't genuine intelligence, we can barely define what that even is, but it is probably more than "math that tricks people into thinking a computer knows what it's doing because it can have a conversation with us."

7 notes

Text

hello everyone! tumblr seems like a plausible successor site for posters as twitter becomes less fun, so i'm moving part of my exodus here.

where on here do the rationalists hang out? or the zoomer transfems? or the zoomer transfem rationalists, even?

#leaving twitter#rationality#rationalists#lesswrong#transfem#zoomer#lgbtescreal#zoomer transfem rationalists#please tell me this culture exists somewhere besides twitter#and i guess if it doesn't i'll have to create it

19 notes

Text

I have to consciously dodge sounding like a rationalist sometimes. We probably share some ground, we both worship the effective and love analysis. But they have this certain bright-eyed optimism, a total lack of edge with a slight note of corniness. You hear them pipe up from 6 feet deep in the weeds, all nuance and pet theory, and you just think "god, I should step on that thing."

Even if tumblr people can be corny too, I think we at least like to think we're outrageous outsiders. Rationalists seem to allow no concept of the outrageous and they're so damn sincere about that: you need a patina of cynicism, a hint of jadedness, to pull it off.

8 notes

Quote

This promise in Jeremiah [29:13] eliminates two types of people who claim to be seekers. There is the rationalist (who only allows reason – but the true seeker needs to be a whole person not just an 'autonomous' mind). And there is the cynic (whose start point assumes there is not enough evidence available and they seem committed only to seeking confirmation for their start-point). Hence both are defective seekers.

Andrew Fellows

4 notes

Text

3125. No Cantos (2025 Piece)

This is "No Cantos (2025 Piece)." Activate the forest weapon.

No cantos for the war-hungry, please. And let me forget (not really) your insipid kisses on my pillow. We're in the age where a full moon will bring out the rationalists. Careful! Another dream of you walking away in a slushy parking lot wheels forth. Another you coming back as a beautiful stranger with secrets and a slice of pie. I can smell the spring on everything now. The wet trees and sound. The thrum of the insect world beating its drums of war. Eat your sugar now! Explain your confusion to someone close. I'm going to stop now—I'm sorry. All my life I've been a victim of politeness.

#prose#fiction#story#who knows#other stuff#weird#i don't know#no cantos#war#thrum#politeness#rationalists#spring#suzanne treister art#tgif#2025 piece

1 note

Text

The norms that are useful for batting around complicated ideas in your insular and socially awkward (affectionate!) book club are not good for understanding how politics functions in the real world. Of course people lie about their political motivations, and if they realize they can get you on side by dressing up their tendentious argument in a way that appeals to you, they will tailor their lies precisely to flatter your preconceptions. That’s why costly signaling and mechanisms of in-group/out-group distinction evolved in the first place! They weren’t just a fun way for paleolithic allistics to bully each other, they were a safeguard against all the dang liars!

2K notes

Text

Rationalist Philosophers Tutorial - YouTube

In this video, I explain the Rationalist philosophers (Descartes, Spinoza and Leibniz) and their theories of Substance, God and Rationalism.

0 notes

Text

1) Yes, the Zizians floated around Tumblr for years, in various forms. They're uber-nerds, trans people, and decided violence is the only logical means to their ends. If they had held out until 2025, they would have been fantastically successful here.

2) At some point, Pennsylvania is involved, because as I've said before, there is no obscure violent cult that doesn't eventually end up here.

3) To any Rationalists reading this, fuck the Singleton. If God being mad at me wasn't enough to stop me, I'm not cowering before your stupid demon robot.

At least God doesn't have a power cord that can be yanked out of the wall, metaphorically speaking.

"YEAH WELL IT WOULDN'T BY THEN!"

Yeah I know. It would be using human bodies as batteries. I saw that movie too.

2 notes

Text

477 notes

Text

finally read this essay and yeah, illuminating. I hesitate to credit too much of the world to One Specific Guy, but I have had enough frustrating conversations (mostly offline) where rationalism came up and immediately segued to roko's basilisk or neoreactionaries that I wonder if Gerard's wikipedia articles played a role in that.

Very much enjoyed Tracing Woodgrain's foray into the internet life of jilted ex-rationalist and Wikipedia editor David Gerard. It is of course "on brand" for me - the social history of the internet, as a place of communities and individual lives lived, is one of my own passion projects, and this slots neatly into that domain in more ways than one. At the object level it is of course about one such specific community & person; but more broadly it is an entry into the "death of the internet-as-alternate-reality" genre: the 1990s & 2000s internet as a place separate from and perhaps superior to the analog world, which died away in the face of the internet's normalization and the cruel hand of the real.

Here that broad story is made specific; early Wikipedia very much was "better than the real", the ethos of the early rationalist community did seem to a lot of people like "Yeah, this is a new way of thinking! We are gonna become better people this way!" - and it wasn't total bullshit, logical fallacies are real enough. And the decline is equally specific: the Rationalist project was never going to Escape Politics because it was composed of human beings, Wikipedia was low-hanging fruit that became a job of grubby maintenance, and the suicide of hacktivist Aaron Swartz was a wake-up call that the internet was not, in any way, exempt from the reach of the powers-that-be. TW's allusion to Gamergate was particularly amusing for me, as while it wasn't prominent in Gerard's life it was truly the death knell for the illusion of the internet as a unified culture.

But anyway, the meat of the essay is also just extremely amusing; someone spending over a decade on a hate crusade using rules-lawyering spoiling tactics for the most petty stakes (unflattering wikipedia articles & other press). The internet is built by weirdos, and that is going to be a mixed bag! It is beautiful to see someone's soul laid bare like this.

It can be tempting to get involved in the object-level topics - how important was Lesswrong in the growth of Neoreaction, one of the topics of Gerard's fixations? It was certainly, obviously not born there, never had any numbers on the site, and soon left it to grow elsewhere. But on the flip side, for a few crucial years Lesswrong was one of the biggest sites that hosted any level of discussion around it, and exposed other people to it as a concept. This is common for user-generated content platforms; they aggregate people who find commonalities and then splinter off. Lesswrong's vaunted "politics is the mindkiller" masked a strong aversion to a lot of what would become left social justice, and it was a place for those people to meet. I don't think neoreaction deserves any mention on Lesswrong's wikipedia page, beyond maybe a footnote. But Lesswrong deserves a place on Neoreaction's wikipedia page. There are very interesting arguments to explore here.

You must, however, ignore that temptation, because Gerard explored fucking none of that. No curiosity, no context, just endless appeals to "Reliable Source!" and other wikipedia rules to freeze the wikipedia entries into maximally unflattering shapes. Any individual edit is perhaps defensible; in their totality they are damning. My "favourite" is that on the Slate Star Codex wikipedia page, he inserted and fought a half-dozen times to include a link to an academic publication Scott Alexander wrote, that no one ever read and was never discussed on SSC beyond a passing mention, solely because it had his real name on it. He was just doxxing him because he knew it would piss Scott off, and anyone pointing that out was told "Springer Press is RS, read the rules please :)". It is levels of petty I can't imagine motivating me for a decade, it is honestly impressive!

He was eventually banned from editing the page as some other just-as-senior wikipedia editor finally noticed and realized, no, the guy who openly calls Scott a neo-nazi is not an "unbiased source" for editing this page wtf is wrong with you all. I think you could come away from this article thinking Wikipedia is ~broken~ or w/e, but you shouldn't - how hard Gerard had to work to do something as small as he did is a testament to the strength of the platform. No one thinks it is perfect of course, but nothing ever will be - and in particular getting motivated contributors now that the sex appeal has faded is a very hard problem. The best solution sometimes is just noticing the abusers over time.

Though wikipedia should loosen up its sourcing standards a bit. I get why it is the way it is, but still, come on.

270 notes

Text

Probably not weird that a "we MUST create the AI god so that the AI god won't punish us for not creating it" cult is buddy buddy with Christians and opportunistic rich people who want to burn the world down for their own version of the rapture (and profit in the meantime). I just wish they weren't in the FUCKING WHITE HOUSE ACTUALLY DOING IT

321 notes

Text

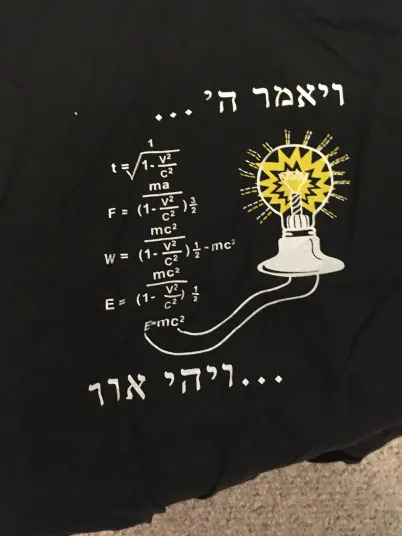

Everyone say hello to one of my all time favorite shirts

259 notes

Text

There is something about this dynamic and I want more XD

#rottmnt#rise of the teenage mutant ninja turtles#rottmnt donnie#rottmnt leo#disaster twins#rise donnie#rise leo#donnie as paranoid theorist#and leo as skeptical rationalist xD

2K notes

Text

i have a complaint that's really minor in the scheme of things but you know i love to be pedantic. too many posts and comments saying kaiba invented "time travel."

kaiba did NOT invent TIME TRAVEL. he did not go three thousand years back into the past to see Atem. time travel is beginner shit.

he DID invent TEARING A HOLE IN THE FABRIC OF SPACE-TIME TO ENTER THE ANCIENT EGYPTIAN REALM OF DEATH, which is a totally different thing, and implies something way cooler and metaphysically/spiritually challenging than time travel, which is that many dimensions exist alongside ours, and maybe death is just one of those dimensions, and dying is perhaps merely a process of moving irreversibly from one dimension to another.

yugioh is fantasy, except for the blaring and inescapable klaxon of science fiction that is kaiba. this has been my PSA (pedantic service announcement)

#intern memo#he's not a rationalist or a materialist though. don't get it twisted or i'll have to post again

124 notes