#my biggest vice is caring so much about the things i consume that a misinterpretation of its character is like a misinterpretation of my own


Text

sometimes I see a fandom/media-related opinion that is so just objectively wrong from my perspective that I wanna scream bloody murder and foam at the mouth like a rabid dog, but I have to contain my rage because it isn't "cool" or "hip" to get involved in unnecessary online discourse about stuff that doesn't matter

#like not even something harmful just like#how do you consume that piece of media and get that takeaway and genuinley say it with confidence#how do you believe that in your heart when it is so clearly incorrect#how dare you interpret things differently from my very own special little view of that world#i keep the hater locked up in my soul but he's chewing through the prison bars gearing up for an escape#my biggest vice is caring so much about the things i consume that a misinterpretation of its character is like a misinterpretation of my own#im need to be hit over the head with a brick

2 notes


Text

Human Geography Researcher Potential!

It is wild to think that this is the last blog post in this class! When I chose this class for this semester I wasn’t really excited about it - it was just another required course. I’m happy to say that I really appreciated this course and learned so many things as well as met some more people in geography!

These three things I know for certain about human geography research:

1. Human geography research is not just one thing. It is interconnected with so many other types of geography like the ones presented in our last class and more! My favourite part of this course was attending that final class and watching all of the videos about different subtopics under human geography that students in this class created. It helped identify connections and relations as well as how these are relevant in the real world. When combined together, they form this incredible subject of geography.

2. It is essential! Human geography research reveals patterns and connections between people and places, which is vital for living today. It helps us understand the world better, which can help us move forward in a positive direction while respecting the past. The summary of chapter one in the textbook states that “human geographers are bringing new and effective approaches to the fundamental questions of societal structures and individual experiences” (Hay 2016 p. 26). Human geography will continue to help find answers to these questions about the world we live in.

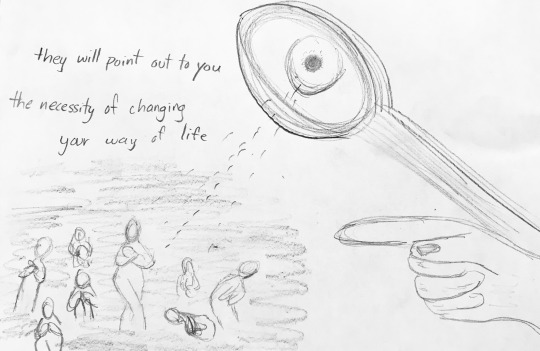

3. It is a delicate process. All research is a delicate and complex process as there are numerous things to consider and be aware of, but because human geography deals with real people, their lives, culture, religion, families, etc., I know that we need to be so careful to respect and acknowledge others and who they are. Chapter three of the textbook includes a poem by Barbara Nicholson, titled Something There Is…, that highlights the necessity of consent and privacy in research. Just because someone is classified as a researcher does not give them the right to invade a person's life (Hay 2016 p. 48). Below is a sketch I did after I read the poem for the first time: (I am not an artist but it was something I did afterwards to reflect upon the reading)

In general, it’s a researcher looking through a magnifying glass at these people who feel exposed from the “research”.

These three things I am still confused by:

1. Analyzing surveys. This was one of the larger lectures we had live in class and I think I was having a hard time keeping up after we had so many lectures online in which I would pause, rewind and go back. It was my fault that I never went back to the recording to review so I’d still like to clarify this content. I know that if I were to be asked about each data type: nominal, ordinal, interval, and ratio, I would not be able to explain them all clearly (Hooykaas 2021 Week 5).

2. The following phrase was used in the week 6 lecture: “Testimony by itself is a relatively weak form of evidence” (Hooykaas 2021 Week 6). I’m unclear on how or why this is. When we watched documentaries in this course I thought this involved testimony and it was used in research. Maybe they are classified more as a case study. So I wonder, what are the differences between a case study and a testimony? Or is a testimony involved within a case study? For example, in week 3 we watched the documentary Surviving in the Siberian Wilderness for 70 Years produced by VICE. I believe that this was a case study, but within it, Agafia Lykova shares her story. Is the research incomplete unless you unpack and verify this testimony?

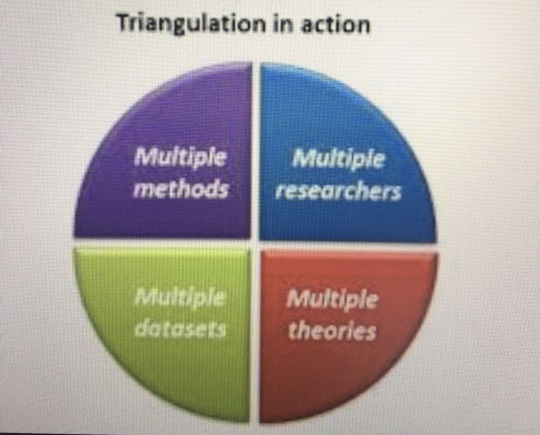

3. I am a little confused by the concept of triangulation. The week 6 lecture provided this image:

I am not sure if triangulation means having multiple of one of these sections (for example, multiple researchers), or if it means putting these sections together (for example, multiple researchers along with multiple theories) (Hooykaas 2021 Week 6). I have a feeling it would be the second option, simply because if you have multiple researchers then most likely you would get multiple theories and methods. However, I would like to clarify in order to understand it better.

These three things I know for certain about me as a human geographic researcher:

I created a word-cloud of things I’ve felt I’ve gained from this course and things that I enjoyed to help me come up with this section of the blog:

1. There is potential! I remember writing my first blog post in this class and describing how I used to really dislike geography and didn’t want anything to do with it. After this class, I know that I have the potential to become a researcher and possibly find it enjoyable! I surprised myself when I enjoyed working on the DSP. It was fun coding information with all of the colours and although it was challenging to go through the information, condense, review, condense some more, etc., it felt so rewarding to show that final product to others and to think that other people could learn valuable information useful in the world based on what you provided to them! I think if I ever did become a researcher I would enjoy participatory action research since it allows people in the community to become “co-researchers and decision-makers in their own right” (Hay 2016 p. 350). This is really important to avoid that idea of invasion of privacy.

2. I learned more about my interests. I used to think the biggest goal in geography was being able to sing this song called Nations of the World:

https://www.youtube.com/watch?v=5pOFKmk7ytU

I thought research in geography was only about analyzing piles of data and I didn’t realize you could bring creative outlooks to it. I enjoyed the poem we read in the textbook, the documentaries we watched, the opportunity of interviewing for the DSP, the creativity with the final DSP videos, etc. I am intrigued by those forms of media to learn more about and analyze/reflect on geographical concepts.

3. I have more appreciation for geography and others. The topics of critical reflexivity and ethical considerations apply to research in human geography of course but it also floods into all aspects of life. It helps consider other people’s backgrounds, lives, privileges or no privileges, and just creates better communication and respectful relationships between people (Hooykaas 2021 Week 2). It’s also worth thinking about whether you’re an insider or an outsider before you interact with different groups so that you can build a good rapport with trust (Hay 2016 p. 40).

These three areas I need to spend time developing/learning in order to feel more confident in my skills:

1. Patience. When working on the DSP, after my group and I had found our resources, I just wanted to dive in and write the script! Then we learned about coding in the week 8 lecture and my group members expressed how they would feel better going through the information quite a few times before writing anything. Of course, this worked extremely well even if it was time-consuming! In the future, I would like to make sure I take the process only one step at a time and make sure I hit every part of the research process in order to create a robust and accurate end result. Once again, this applies not only to human geography research but also to the real world. Chapter 18 in the textbook states that “Being in the world requires us to categorize, sort, prioritize, and interpret social data in all of our interactions” (Hay 2016 p. 391). There is always room for improvement here so that misinterpretations and miscommunication can be avoided.

2. During the research with the DSP, I had a challenging time determining when my group should move forward and how much research we should gather, especially with the course deadlines in mind. I know that you can move forward when you reach a “point of saturation” and concepts begin to be repetitive. However, because I am detail-oriented, I was not great at grouping similar ideas if one tiny thing distinguished them (Hooykaas 2021 Week 9). I would like this to improve so that I have a clearer sense of when enough is enough.

3. I would like to clarify and learn more about the list of three things that still confuse me. It’s good to identify what confuses you and what you are unsure of but it’s even better to then go and clarify those things and understand them so that you develop your understanding and skills even more. I want to fill in those gaps of information so that everything makes a bit more sense.

Final Remark

Overall, I am really glad that I took this class and hope everyone has a great end of the semester! It was nice interacting with everyone through these blogs!

-April

References

Hay, I. (2016). Qualitative Research Methods in Human Geography. Fourth ed., Oxford.

Hooykaas, A. (2021). Week 2: Philosophy, Power, Politics and Research Design.

Hooykaas, A. (2021). Week 3: Cross-Cultural Research: Ethics, Methods, and Relationships.

Hooykaas, A. (2021). Week 5: Literature Review.

Hooykaas, A. (2021). Week 6: Data Collection - Interviews, Oral Histories, Focus Groups.

Hooykaas, A. (2021). Week 9: Writing Qualitative Geographies, Constructing Geographical Knowledge Data Analysis, Writing, and Re-Evaluating Research Aims Presenting Findings.

Nicholson, Barbara. (2000). Something There Is....

Vice (April 2013). Surviving in the Siberian Wilderness for 70 Years. Youtube. https://www.youtube.com/watch?v=tt2AYafET68

1 note


Text

7 Mistakes to Avoid When You’re Reading Research

A couple weeks ago I wrote a post about how to read scientific research papers. That covered what to do. Today I’m going to tell you what NOT to do as a consumer of research studies.

The following are bad practices that can cause you to misinterpret research findings, dismiss valid research, or apply scientific findings incorrectly in your own life.

1. Reading Only the Abstract

This is probably the BIGGEST mistake a reader can make. The abstract is, by definition, a summary of the research study. The authors highlight the details they consider most important—or those that just so happen to support their hypotheses.

At best, you miss out on potentially interesting and noteworthy details if you read only the abstract. At worst, you come away with a completely distorted impression of the methods and/or results.

Take this paper, for example. The abstract summarizes the findings like this: “Consumption of red and processed meat at an average level of 76 g/d that meets the current UK government recommendation (less than or equal to 90g/day) was associated with an increased risk of colorectal cancer.”

Based on this, you might think:

1. The researchers measured how much meat people were consuming. This is only half right. Respondents filled out a food frequency questionnaire that asked how many times per week they ate meat. The researchers then multiplied that number by a “standard portion size.” Thus, the amount of meat any given person actually consumed might vary considerably from what they are presumed to have eaten.

2. There was an increased risk of colorectal cancers. It says so right there after all. The researchers failed to mention that there was only an increased risk of certain types of colon cancer (and a small one at that—more on this later), not for others, and not for rectal cancer.

3. The risk was the same for everyone. Yet from the discussion: “Interestingly, we found heterogeneity by sex for red and processed meat, red meat, processed meat and alcohol, with the association stronger in men and null in women.” Null—meaning not significant—in women. If you look at the raw data, the effect is not just non-significant, it’s about as close to zero as you can get. To me, this seems like an important detail, one that is certainly abstract-worthy.
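To make the first point in the list above concrete, here is a minimal sketch of how a frequency-times-portion estimate can drift from what a person really ate. The 70 g portion size and both respondents are hypothetical and are not taken from the study.

```python
# Hypothetical illustration: the portion size and the respondents are made up,
# not taken from the study discussed above.
STANDARD_PORTION_G = 70  # assumed "standard portion" in grams


def estimated_daily_intake(times_per_week, portion_g=STANDARD_PORTION_G):
    """Estimate grams per day from reported frequency and a fixed portion."""
    return times_per_week * portion_g / 7


def actual_daily_intake(portions_eaten_g):
    """Average grams per day from the portions a person really ate in a week."""
    return sum(portions_eaten_g) / 7


# Two imaginary respondents who both report eating meat 4 times per week.
small_eater = [40, 45, 50, 40]      # grams per meal
large_eater = [150, 180, 160, 170]  # grams per meal

for name, meals in [("small eater", small_eater), ("large eater", large_eater)]:
    est = estimated_daily_intake(len(meals))
    act = actual_daily_intake(meals)
    print(f"{name}: estimated {est:.0f} g/day, actual {act:.0f} g/day")
```

Both respondents get the same estimate because they report the same frequency, even though their real intake differs several-fold.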

Although it’s not the norm for abstracts to blatantly misrepresent the research, it does happen. As I said in my previous post, it’s better to skip the abstract altogether than to read only the abstract.

2. Confusing Correlation and Causation

You’ve surely heard that correlation does not imply causation. When two variables trend together, one doesn’t necessarily cause the other. If people eat more popsicles when they’re wearing shorts, that’s not because eating popsicles makes you put on shorts, or vice versa. They’re both correlated with the temperature outside. Check out Tyler Vigen’s Spurious Correlations blog for more examples of just how ridiculous this can get.
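The popsicle example can be sketched as a small simulation. Everything here is made up for illustration: temperature drives both popsicle sales and the number of people in shorts, and neither affects the other. The two still correlate strongly overall, and the correlation mostly disappears once temperature is held roughly constant.

```python
import random


def pearson(xs, ys):
    """Plain Pearson correlation coefficient for two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_days(n_days=5000, seed=0):
    """Simulate days where temperature alone drives both outcomes."""
    rng = random.Random(seed)
    temps, popsicles, shorts = [], [], []
    for _ in range(n_days):
        temp = rng.uniform(0, 35)                        # degrees Celsius
        temps.append(temp)
        popsicles.append(2 * temp + rng.gauss(0, 10))    # popsicles sold
        shorts.append(3 * temp + rng.gauss(0, 15))       # people wearing shorts
    return temps, popsicles, shorts


def correlation_within_band(temps, xs, ys, low, high):
    """Correlation using only days whose temperature falls in [low, high]."""
    kept = [(x, y) for t, x, y in zip(temps, xs, ys) if low <= t <= high]
    return pearson([x for x, _ in kept], [y for _, y in kept])


temps, popsicles, shorts = simulate_days()
print("overall correlation:", round(pearson(popsicles, shorts), 2))
print("at 20-22 degrees:", round(correlation_within_band(temps, popsicles, shorts, 20, 22), 2))
```

Restricting to a narrow temperature band is a crude stand-in for "controlling for" the confounder; real studies would typically use regression or a designed experiment instead.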

As much as we all know this to be true, the popular media loves to take correlational findings and make causal statements like, “Eating _______ causes cancer!” or “To reduce your risk of _______, do this!” Researchers sometimes use sloppy language to talk about their findings in ways that imply causation too, even when their methods do not support such inferences.

The only way to test causality is through carefully controlled experimentation where researchers manipulate the variable they believe to be causal (the independent variable) and measure differences in the variable they hypothesize will be affected (the dependent variable). Ideally, they also compare the experimental group against a control group, replicate their results using multiple samples and perhaps different methods, and test or control for confounding variables.

As you might imagine, there are many obstacles to conducting this type of research. It can be expensive, time-consuming, and sometimes unethical, especially with human subjects. You can’t feed a group of humans something you believe to be carcinogenic to see if they develop cancer, for example.

As a reader, it’s extremely important to distinguish between descriptive studies where the researchers measure variables and use statistical tests to see if they are related, and experimental research where they assign participants to different conditions and control the independent variable(s).

Finally, don’t be fooled by language like “X predicted Y.” Scientists can use statistics to make predictions, but that also doesn’t imply causality unless they employed an experimental design.

3. Taking a Single Study, or Even a Handful of Studies, as PROOF of a Phenomenon

When it comes to things as complex as nutrition or human behavior, I’d argue that you can never prove a hypothesis. There are simply too many variables at play, too many potential unknowns. The goal of scientific research is to gain knowledge and increase confidence that a hypothesis is likely true.

I say “likely” because statistical tests can never provide 100 percent proof. Without going deep into a Stats 101 lesson, the way statistical testing actually works is that you set an alternative hypothesis that you believe to be true and a null hypothesis that you believe to be incorrect. Then, you set out to find evidence against the null hypothesis.

For example, let’s say you want to test whether a certain herb helps improve sleep. You give one experimental group the herb and compare them to a group that doesn’t get the herb. Your null hypothesis is that there is no effect of the herb, so the two groups will sleep the same.

You find that the group that got the herb slept better than the group that didn’t. Statistical tests suggest you can reject the null hypothesis of no difference. In that case, you’re really saying, “If it was true that this herb has no effect, it’s very unlikely that the groups in my study would differ to the degree they did.” You can conclude that it is unlikely—but not impossible—that there is no effect of the herb.
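One way to see the "if the herb had no effect" logic in action is a permutation test, sketched below. The sleep numbers are invented for illustration, and a permutation test is just one possible choice of test, not necessarily what any given study would use. The idea: if the herb does nothing, the group labels are arbitrary, so shuffle them many times and count how often a difference at least as large as the observed one shows up by chance.

```python
import random


def mean(xs):
    return sum(xs) / len(xs)


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Share of label shuffles whose mean difference is at least as large
    (in absolute value) as the observed one."""
    rng = random.Random(seed)
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            at_least_as_extreme += 1
    # Add one to numerator and denominator so the estimate is never exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical hours of sleep per night; not real data.
herb = [7.9, 8.1, 7.4, 8.3, 7.8, 8.0, 7.6, 8.2, 7.7, 8.4]
control = [7.0, 7.3, 6.8, 7.5, 7.1, 6.9, 7.4, 7.2, 6.7, 7.6]

p = permutation_p_value(herb, control)
print(f"observed difference: {mean(herb) - mean(control):.2f} hours")
print(f"permutation p-value: {p:.4f}")
```

A small p-value here says only that a gap this large would be rare if the labels were meaningless. It does not rule out outliers, design problems, or an unmeasured variable.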

There’s always the chance that you unwittingly sampled a bunch of outliers. There’s also a chance that you somehow influenced the outcome through your study design, or that another unidentified variable actually caused the effect. That’s why replication is so important. The more evidence accumulates, the more confident you can be.

There’s also publication bias to consider. We only have access to data that get published, so we’re working with incomplete information. Analyses across a variety of fields have demonstrated that journals are much more likely to publish positive findings—those that support hypotheses—than negative findings, null findings (findings of no effect), or findings that conflict with data that have been previously published.
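A rough simulation shows why this matters. In the sketch below, the true effect is exactly zero in every simulated study, and a study is "published" only if it finds a significantly positive result. The study size, cutoff, and publication rule are all assumptions chosen for illustration.

```python
import random


def run_study(rng, n_per_group=20, true_effect=0.0):
    """Simulate one two-group study; return (mean difference, z statistic)."""
    treated = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    diff = sum(treated) / n_per_group - sum(control) / n_per_group
    standard_error = (2 / n_per_group) ** 0.5  # both groups have known SD of 1
    return diff, diff / standard_error


def simulate_literature(n_studies=2000, seed=0, z_cutoff=1.645):
    """Run many null studies; 'publish' only significantly positive ones."""
    rng = random.Random(seed)
    all_effects, published = [], []
    for _ in range(n_studies):
        diff, z = run_study(rng)
        all_effects.append(diff)
        if z > z_cutoff:  # one-sided test at roughly the 5% level
            published.append(diff)
    return all_effects, published


all_effects, published = simulate_literature()
print(f"studies run: {len(all_effects)}, published: {len(published)}")
print(f"mean effect across all studies: {sum(all_effects) / len(all_effects):.3f}")
print(f"mean effect in published studies: {sum(published) / len(published):.3f}")
```

Someone who can read only the published studies would see a consistent positive effect even though none exists, which is the incomplete-information problem described above.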

Unfortunately, publication bias is a serious problem that academics are still struggling to resolve. There’s no easy answer, and there’s really nothing you can do about it except to maintain an open mind. Never assume any question is fully answered.

4. Confusing Statistical Significance with Importance

This one’s a doozy. As I just explained, statistical tests only tell you whether it is likely that your null hypothesis is false. They don’t tell you whether the findings are important or meaningful or worth caring about whatsoever.

Let’s take that study we talked about in #1. It got a ton of coverage in the press, with many articles stating that we should all eat less red meat to reduce our cancer risk. What do the numbers actually say?

Well, in this study, there were 2,609 new cases of colorectal cancer in the 475,581 respondents during the study period, already a low probability. If you take the time to download the supplementary data, you’ll see that of the 113,662 men who reported eating red or processed meat four or more times per week, 866 were diagnosed. That’s 0.76%. In contrast, 90 of the 19,769 men who reported eating red or processed meat fewer than two times per week were diagnosed. That’s about 0.46%.

This difference was enough to be statistically significant. Is it important though? Do you really want to overhaul your diet to possibly take your risk of (certain types of) colorectal cancer from low to slightly lower (only if you’re a man)?

Maybe you do think that’s important. I can’t get too worked up about it, and not just because of the methodological issues with the study.

There are lots of ways to make statistical significance look important, a big one being reporting relative risk instead of absolute risk. Remember, statistical tests are just tools to evaluate numbers. You have to use your powers of logic and reason to interpret those tests and decide what they mean for you.
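The gap between relative and absolute risk can be worked out from the counts quoted earlier in this section. This is a back-of-the-envelope sketch using those crude counts only; it is not the adjusted analysis the study authors would have reported.

```python
def risk(cases, group_size):
    """Crude risk: share of the group diagnosed during the study period."""
    return cases / group_size


# Counts for men, as quoted in the text above.
high_intake = risk(866, 113_662)   # meat four or more times per week
low_intake = risk(90, 19_769)      # meat fewer than two times per week

absolute_difference = high_intake - low_intake
relative_risk = high_intake / low_intake

print(f"risk, high intake: {high_intake:.2%}")
print(f"risk, low intake: {low_intake:.2%}")
print(f"absolute difference: {absolute_difference * 100:.2f} percentage points")
print(f"relative risk: {relative_risk:.2f}")
```

The same data can be described as a relative risk of roughly 1.7 or as an absolute difference of about a third of a percentage point. The first framing sounds far more alarming than the second.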

5. Overgeneralizing

It’s a fallacy to think you can look at one piece of a jigsaw puzzle and believe you understand the whole picture. Any single research study offers just a piece of the puzzle.

Resist the temptation to generalize beyond what has been demonstrated empirically. In particular, don’t assume that research conducted on animals applies perfectly to humans or that research conducted with one population applies to another. It’s a huge problem, for example, when new drugs are tested primarily on men and are then given to women with unknown consequences.

6. Assuming That Published Studies are Right and Anecdotal Data is Wrong

Published studies can be wrong for a number of reasons—author bias, poor design and methodology, statistical error, and chance, to name a few. Studies can also be “right” in the sense that they accurately measure and describe what they set out to describe, but they are inevitably incomplete—the whole puzzle piece thing again.

Moreover, studies very often deal with group-level data—means and standard deviations. They compare the average person in one group to the average person in another group. That still leaves plenty of room for individuals to be different.

It’s a mistake to assume that if someone’s experience differs from what science says it “should” be, that person must be lying or mistaken. At the same time, anecdotal data is even more subject to biases and confounds than other types of data. Anecdotes that run counter to the findings of a scientific study don’t negate the validity of the study.

Consider anecdotal data another piece of the puzzle. Don’t give it more weight than it deserves, but don’t discount it either.

7. Being Overly Critical

As I said in my last post, no study is meant to stand alone. Studies are meant to build on one another so a complete picture emerges—puzzle pieces, have I mentioned that?

When conducting a study, researchers have to make a lot of decisions:

Who or what will their subjects be? If using human participants, what is the population of interest? How will they be sampled?

How will variables of interest be operationalized (defined and assessed)? If the variables aren’t something discrete, like measuring levels of a certain hormone, how will they be measured? For example, if the study focuses on depression, how will depression be evaluated?

What other variables, if any, will they measure and control for statistically? How else will they rule out alternative explanations for any findings?

What statistical tests will they use?

And more. It’s easy as a reader to sit there and go, “Why did they do that? Obviously they should have done this instead!” or, “But their sample only included trained athletes! What about the rest of us?”

There is a difference between recognizing the limitations of a study and dismissing a study because it’s not perfect. Don’t throw the baby out with the bathwater.

That’s my top seven. What would you add? Thanks for reading today, everybody. Have a great week.


The post 7 Mistakes to Avoid When You’re Reading Research appeared first on Mark's Daily Apple.

7 Mistakes to Avoid When You’re Reading Research published first on https://venabeahan.tumblr.com

0 notes
