
So much of art is just understanding the science of where people look and why

#watching a practice composition video #there's deceptively a lot of theory in art #this sort of video reminds me so much of like #math class? #we talk about the theory we go through examples already done the teacher goes through it step by step with a new idea/problem and explains #as they go #it's building upon (assumed) prior knowledge #like this video is just talking about how to make your drawing interesting via composition and thumbnailing #but it builds on the knowledge of tone and perspective and rule of thirds and probably a bunch more things i've forgotten the names of #anyway. i love art it's so cool you can really never stop learning about it


Level Design Workshop: Blockmesh and Lighting Tips

I watched this talk from David Shaver about level design with blockmesh. The talk is packed full of amazing tips for any aspiring level designer.

Level Requirements

Environment Type

Time of Day

Location in Story

Available Character Abilities

Enemy Types

You should think about these before starting the level design, as they will influence how you design your level.

Affordance

A way to communicate to the player what to play with or where to go

Players learn the affordance rules via consistent color and shape, and a trust contract is formed.

Ensure affordances work consistently game-wide.

Add to your blockmesh for playtests

Affordance is a way to tell the player where they should go or what they can interact with. For example, when we look at a door, we can tell whether to push or pull it just by seeing the handle.

You can use colour and shapes to tell the player where to go. For example, Mirror's Edge uses the colour red. Or you can use shapes, such as a piece of wood partially hanging off a building to indicate you can jump off it.

You can also use both, such as having an edge on a cliff that sticks out and is a different colour.

Denying Affordance

A way to communicate to the player where they can’t go

This is using the same techniques from before but you are achieving the opposite result

If you did not want a player to climb up a wall, you could put foliage or spikes on top. This lets the player know they can’t go there.

You can use this together with affordance. For example, a door could be boarded up while the window is open, indicating to the player that they need to enter the house through the window.

Visual Language - Shapes

Shape Consistency is important - communicates affordance

Primitive shapes have an effect on player psychology

We can use this to guide players.

Certain shapes have an effect on player psychology.

Round shapes are considered safe and not dangerous.

Rectangular shapes are considered stable, like a safe house.

Diagonal shapes are viewed as dangerous.

You can use round shapes to indicate safe areas the player can walk on, while using diagonal shapes to nudge the player onto the right path.

And finally having a square building will give the player a goal to arrive at where they know they will be safe.

Visual Language - Colour

Colour consistency is important - it communicates affordance

Colour provides context in a blockmesh

Gets the team on the same page without explanation

Colour needs to be consistent with the environment. For example, if you use a bright green in a mossy cave to indicate where you can climb, it would feel right and consistent. However, if you used bright green to mark a climbable rock in the middle of a desert, it would not be consistent with the environment.

You can use contrasting colours to guide the player, such as having the top of edges you can climb be a colour that stands out.

Landmarks

Orients players.

Distant object seen from many vantage points in the level.

A goal to work towards.

Using landmarks creates a goal and can guide the player. It also lets them know they are going the right way.

When building the level, a good way is to create the landmark and build backwards from it. This lets you shape the level around it.

Half-Life 2 does this well with its Citadel: the player heads towards it at the start, then away from it, and eventually back to it.

Openings Attract

Caves, doors, archways, etc.

Often leads to a refuge space, which psychologically feels safe

Mystery - “What could be inside?”

Having an opening in a wall encourages the player to enter.

If you have an opening, try and make it stand out so the player can see it.

Using openings can encourage players to explore different paths in your level.

An example I can think of was when I first played Mario Kart 64, on the Koopa Troopa Beach level. I remember when I first saw the hole in the mountain and was wondering what was in it, and I stopped racing and my new goal was to try and get into the cave, just to see what was inside.

Gates and Valves

Gates stop progress until conditions are met

Valves prevent backtracking.

Both reduce the possibility space and prevent aimless wandering.

Great for linear games, but can be sprinkled into open worlds too.

Gates are pretty straightforward. The player has to achieve something before they can progress, such as killing all the enemies or solving a puzzle.

Valves however stop the player from backtracking. You can do this with a door closing behind the player, or the player jumping down from a ledge they can’t climb back up.

Gears of War would do this a lot, and do it quite well. You would go into a room where you have to push forward and fight enemies who have a strategic position. You would then fight more enemies once you are in the strategic position.

Leading Lines

Lines that draw your eyes to the intended point of interest

Composition technique

Roads, pipes, cables, etc.

Leading the player's eyes is one of the most important things in level design. If you need them to find a certain object or see a certain event, leading lines can help you guide their eyes.

For example, this photo of Titanfall 2 uses pipes to lead the player's eyes towards a door. An enemy will barge through the door, and without these pipes the player might miss the moment it happens.

You can also use leading lines to guide the player where you want them to go. In the photo below, you can see the pipes on the right encouraging the player to follow them.

Pinching

Angle shapes to funnel players to a specific spot.

Good for redirection

Great for setting up a reveal.

Depends on your mobility mechanics.

Pinches are useful for forcing a player to look in a certain direction. You can use them when you want the player to see something important.

In the example below, by forcing the player to walk through this pinch, they will clearly see the hospital in the distance and know they are going the right way.

Framing and Composition

Draws attention to point of interest by blocking other parts of the image, making it stand out.

Google photography composition techniques - lots of good websites

Great when combined with Pinching.

You can use the layout of the level to frame important objects. In the photo below you can see that the river helps lead the player's eye towards the dam, which helps frame the building.

In The Last of Us Part II trailer, there is a moment where the car is facing to the right, which draws the player's eyes there, and then there are two perfectly framed trees with light shining from them. This clearly shows the player the way to go even though the player is in a forest.

Breadcrumbs

Attract/lead the player, a piece at a time to a goal

Can be almost anything that draws the eye

- Stuff that breaks up the negative space of floor/walls
- Pickups
- Enemies
- Lit areas

Usually better to add after early playtests of blockmesh to see if they are even needed.

Breadcrumbs help lead the player. You essentially litter the ground with a trail of objects. A classic example is Super Mario games, where they use coins to lead the player. I really like this technique as it's super simple but highly effective.

Textures

Just point the way to go

Examples

- Arrows pointing the way
- Scrapes on the ground/walls
- Signs
- Etc.

You can use textures as arrows that tell the player where to go. In my narrative game I have used blood smears and drag marks on the ground to guide the player. In some games you can even just use an arrow if it matches the environment, such as a street sign pointing to the area you have to go to. You should try to block these out in your blockmesh.

Movement

Use movement to grab the eye.

Examples:
- Big scripted moments
- Birds
- Spark FX
- Enemies
- Something flapping in the breeze

Guides the eye to where you want the player to go

I like the idea of using movement to grab the player's attention. It helps them notice things you want them to see.

Doing this in the blockmesh phase is highly dependent on your game

Try to do it anyway for your playtests

Cubes triggered to lerp in a direction or along a spline will suffice

Can be a late game bandage for guidance
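The talk's suggestion that cubes triggered to lerp in a direction or along a spline will suffice is easy to block out in most engines. As a rough illustration only, here is a minimal Python sketch, assuming a hypothetical `MovingCube` that follows a list of waypoints at a fixed speed once a trigger fires. It treats the path as straight segments between waypoints (a polyline) rather than a true curved spline. In an actual engine you would use its built-in lerp and spline tools instead.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def lerp(a: Vec3, b: Vec3, t: float) -> Vec3:
    """Linear interpolation: t=0 gives a, t=1 gives b (t is clamped to [0, 1])."""
    t = max(0.0, min(1.0, t))
    return (a[0] + (b[0] - a[0]) * t,
            a[1] + (b[1] - a[1]) * t,
            a[2] + (b[2] - a[2]) * t)


def position_on_path(points: List[Vec3], distance: float) -> Vec3:
    """Point reached after travelling `distance` units along a polyline of waypoints."""
    if distance <= 0:
        return points[0]
    remaining = distance
    for a, b in zip(points, points[1:]):
        segment = math.dist(a, b)
        if remaining <= segment:
            # remaining > 0 here, so segment > 0 and the division is safe.
            return lerp(a, b, remaining / segment)
        remaining -= segment
    return points[-1]  # Past the end: stay on the last waypoint.


class MovingCube:
    """Hypothetical blockmesh placeholder that moves along a path once triggered."""

    def __init__(self, path: List[Vec3], speed: float) -> None:
        self.path = path
        self.speed = speed  # Units per second.
        self.travelled = 0.0
        self.active = False
        self.position = path[0]

    def trigger(self) -> None:
        # In a game this would be called when the player enters a trigger volume.
        self.active = True

    def update(self, dt: float) -> None:
        # Called once per frame with the frame time in seconds.
        if self.active:
            self.travelled += self.speed * dt
            self.position = position_on_path(self.path, self.travelled)
```

For example, a cube with the path (0, 0, 0) → (10, 0, 0) → (10, 0, 5) and a speed of 5 stays put until `trigger()` is called. After one more second of updates, it sits halfway along the first segment.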

Light and God Rays

Players are attracted to the light

God rays draw attention and a line to the goal

Important in blockmesh phase.

I believe lighting is one of the most important things to think about when designing a level. It helps guide the player, shows them points of interest and can hide areas you don’t want them to go to.

God Rays in particular act as a beacon. What I really liked was how he mentioned a squint test. The Squint Test is where you squint your eyes and have a look at your game. What stands out when everything is blurry? Whatever stands out is the first thing people will see when they play your game.
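The squint test is done by eye. A rough code analogue, which is a hypothetical illustration and not something from the talk, is to blur a grayscale screenshot and look for the spot whose brightness differs most from the average. The minimal Python sketch below assumes the screenshot has already been reduced to a small grid of brightness values between 0 (black) and 1 (white). `box_blur` and `squint_focus` are made-up helper names.

```python
from typing import List, Tuple

Image = List[List[float]]  # Grayscale brightness in [0, 1], indexed as img[y][x].


def box_blur(img: Image, radius: int) -> Image:
    """Average each pixel with its neighbours: a crude stand-in for squinting."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            # Only pixels inside the image are averaged, so edges use fewer samples.
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def squint_focus(img: Image, radius: int = 1) -> Tuple[int, int]:
    """Return (y, x) of the blurred pixel that contrasts most with the average."""
    blurred = box_blur(img, radius)
    h, w = len(blurred), len(blurred[0])
    mean = sum(sum(row) for row in blurred) / (h * w)
    cells = [(y, x) for y in range(h) for x in range(w)]
    return max(cells, key=lambda p: abs(blurred[p[0]][p[1]] - mean))
```

Contrast is measured in both directions. A dark doorway in a bright level therefore stands out just as much as a lit doorway in a dark one.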

This entire talk is amazing and is so jam-packed with information.

This talk taught me about so many things I should be thinking about in the blockmesh stage of level design, and about ways to guide the player through my levels.

As an aspiring level designer, I found this the best video for learning everything you need to know.


AI, Artificial Intelligence?

This week the focus is on seeing and acknowledging AI as a material to be used within the field of IXD as well as the field of HCI. From reading the provided paper, and from what Maliheh brought up during yesterday's lecture, I have understood that there are two main views of AI's role in IXD practice: AI as a material and AI as the character of an object. If AI is seen as a material, it can be shaped by the designer, and in some cases also by the user. It can be compared to a block of wood that starts as a blank canvas and is later shaped into an object with properties and textures. Similarly, a blank AI does nothing until the designer fills it with a purpose and with data about what it should do and how it should do it. In contrast, seeing AI as a character means that it still has a purpose but does not necessarily obey. It can be perceived as a living organism or as something that has its own will. This is roughly what is discussed in the paper “Giving Form to Smart Objects: Exploring Intelligence as an Interaction Design Material”. This is also the paper I chose to write a summary about, and here it is:

“The authors of the paper describe the third wave of HCI as a movement that is evolving towards encouraging users to participate in the creation of interaction artifacts. Further, they see the third wave as an opportunity to design and give form to smart everyday objects. They believe that existing smart objects do not embody intelligence but rather have their intelligence “stuck onto” them. They further believe that intelligence is already considered a design material to some extent. Due to this, they hope to contribute to HCI by recommending how intelligence could be used as a tool in interaction design practice. In the paper, related work is reviewed in order to build an understanding of AI, and the history of formgiving practices within HCI is examined. Practical work is also analysed and discussed to give a deeper understanding and to provide examples of how intelligence can be implemented.

The authors claim that materials in formgiving practices represent the creation in a visual form, ranging from physical to digital materials. They further state that physical materials are used earlier in projects, while digital materials are often introduced later in the process. Therefore, the concept of computational composites is introduced, as it unites three elements: physical form, temporal form and the ‘form’ of interaction. Machine learning is presented as a tool that enables designers to design AI into products. This is not without difficulty, although the authors explicitly state that this issue will not be addressed in the paper. The focus is rather on how AI could be meaningfully implemented into products. They also suggest that in order to give form to the character of an object, one must consider the object's intent, materiality and agency.

In order to illustrate how everyday objects are given AI as part of their character, the authors describe and evaluate projects done by Master's students in the course Interactive Formgiving. The project was divided into three iterative phases: Concept, Embodiment and Enactment. During the Concept phase, the students were expected to find concrete situations where people struggle, and then find an object related to that situation. In the Embodiment phase, the students gave the conceptual objects a physical form in order to test their intelligence and to create video prototypes. In the last phase, Enactment, the students were to understand and develop the object’s agency by watching the video prototypes.

To conclude, the authors recommend their proposed approach of dividing the process into three iterative phases as a way to successfully design objects with AI. They further express their belief that their work contributes to the fields of IXD and HCI and allows for new theoretical understandings as well as new approaches to designing objects with AI.”

The first brainstorming session was conducted via Zoom with the whole group. The goal of this session was to identify artifacts that already have some sort of intelligence embedded. Examples of such artifacts could be smart assistants, smart watches and cell phones. We came up with four different artifacts:

A smart fridge

A home assistant

A chatbot

A Frankenstein robot combining “Jibo” and Boston Dynamics' “Atlas”

The idea of the smart fridge was not to design a smart fridge as we know it. Meaning, the fridge would not help you keep track of your groceries or create recipes for you. Our idea was to give the fridge a personality that sort of shames you if you open it without either taking stuff out or putting stuff in. How it would do this is not clear, but that was one of my many crazy ideas. The second idea was to materialize the action of listening and to replace the pointer lights that are present on this tech today. We also talked about what adds a feeling of presence and awareness. After reviewing some existing products that try to achieve a similar goal, we concluded that it comes down to facial expressions and, most importantly, the use of eyes and eyebrows. As we can see in the image below, both of these products create some sort of personality by having eyes and mobility in order to show awareness.

Image shows Jibo to the left and Cozmo to the right.

A chatbot is something most of us have encountered at some point in our help-seeking days. They are present in written chats, but are most common when you call a company. Instead of giving you options that correspond to a number, they ask you to state your issue, and the bot tries to understand it and direct you to the right human. I believe we could all agree that this is mildly infuriating and gives you the feeling that you are no longer in control of the situation. If we went with this idea, we would have to tackle this issue and try to envision a way of making interaction with this type of intelligence more pleasant. Finally, we had the crazy idea of combining Jibo with Atlas, a robot developed by Boston Dynamics. We saw this as an opportunity to create some “creepypasta”, since according to Jibo's product video, he/she could be perceived not only as an assistant but also as a member of the family. What Jibo lacks, though, is movement, and that's where Atlas comes in, since Atlas is built to mimic both the structure and the movement of a human body. Combining the two gives us a family member that can move around much like a real one.

Image showing Boston Dynamics robot Atlas.

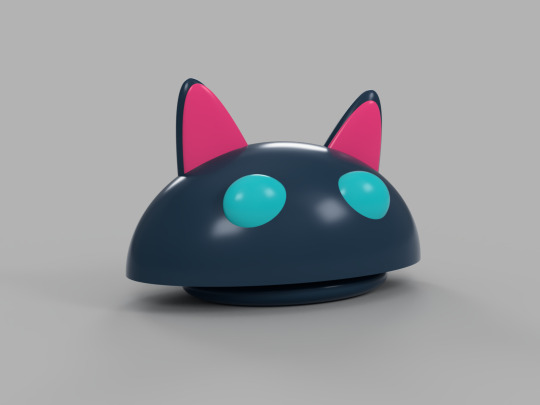

We have now decided to move on with the smart home assistant, since we could come up with more ideas on how to improve and embody its intelligence. During our brainstorming we found different products that already exist and are available to consumers to some extent. We have already talked about the companion robots Jibo and Cozmo. We find these very interesting since they both manage to convey a sense of being alive and being able to perceive feelings. According to the company videos, they could almost be seen as part of the family. What we also found intriguing about Cozmo is that he has his own needs. He comes up to the user and wants to play, or just wants the user to pay attention to him. But how do they manage to convey these complex interactions to users? We believe it is because of the eyes. A person's eyes say a lot about his/her emotional state, and they are a way for humans to read and understand each other. It is also through the eyes that one can tell whether a person is paying attention or not (Source: All of our teachers). Even setting aside the movement of these robots, which is brilliant, the eyes say a lot about the robots' state of ‘emotions’. We also discussed the form of the assistant. What should it look like? Or more importantly, what should it not look like? I mentioned above the creepypasta of combining Jibo and Atlas. I believe this is a clear example of what it should not look like. Having an intelligent robot walking around your house looking and behaving like a human would probably feel intimidating for most people. What we might want to aim for is something like Disney's ‘Big Hero 6’, where an intelligent robot is introduced as a big ‘huggable’ robot with no intimidating physical features.

Image of Dabai (Baymax) from the Disney movie Big Hero 6

Dabai, which is the name of the robot in the movie, also shares a feature with both Jibo and Cozmo: their silliness and childishness, that level of playful idiocy despite the amount of knowledge they have access to. I believe this could also be a key feature if we want to create an assistant that is less stiff and that adds the aspect of having the assistant as a companion.

We also talked about the difference between an artificial assistant and an artificial companion. The assistant just does whatever the user tells it to do, while the companion cares more about the user and tries to please rather than just do. But what if we blur the line separating these two? What would that look like? We imagine a companion assistant that does not provide information unless it has learned that information earlier. If the user would like to fetch ‘new’ information, the companion assistant will have to take some time to ‘learn’ that new piece of information. The idea is that the companion assistant takes the same amount of time a human would take to look it up themselves. The companion assistant would also be able to recognise and adapt to the different members of the family.

Concept art of the companion assistant.

The idea is to take the hugginess and silliness/childishness of Dabai, the expressiveness of the eyes and movement from Cozmo and Jibo, the intelligence of a smart home assistant, and the need to learn from the concept of a learning child.

Because of the limited time for this project, we had to rethink how we present our concept. In previous weeks we made video prototypes that showed the concept as well as the interaction with it. This week we won’t have time to create a physical product and a video prototype to accompany it. One of the deliverables this week is storyboards showing different use cases and what the interaction would look like. While I continued to work on the concept art, Johan took on the task of creating a 3D render of the concept. He created the render in Fusion 360, which we were all introduced to earlier in the program. We used the same colors as in the concept art, and the general forms were eyeballed, since we are all new to the program and kept it as simple as we could.

The 3D render of our concept artifact.

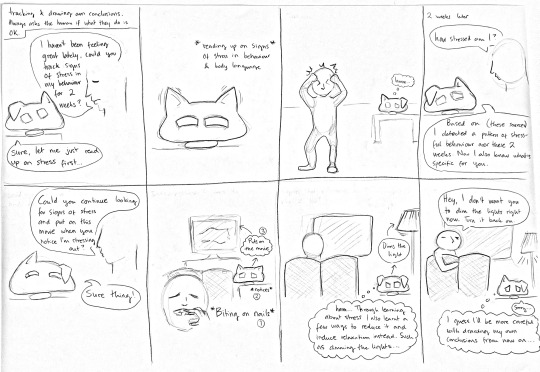

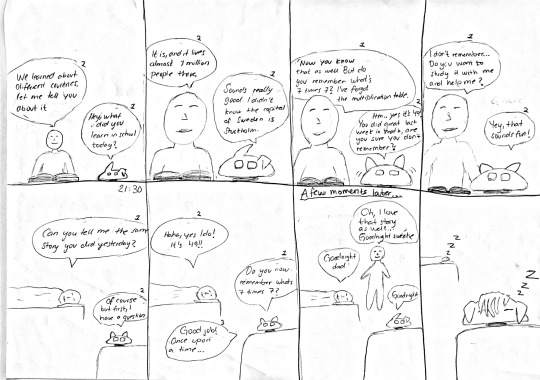

Clara and Christina created our lovely storyboards. The storyboards show three different occasions on which the companion assistant is of use.

Storyboard that shows the ability to learn, track, notice and try new things based on knowledge.

Storyboard that shows the ability to learn, recall, allow for privacy and to know who it interacts with.

Storyboard that shows the ability to teach, learn, recall and to adapt to children.

As I said earlier, we do not have time to create a physical prototype or a prototype video showing the interaction with the companion assistant. Then we had the idea of interacting with the storyboard! We recorded Johan using a cartoony voice and applied the recording to the presentation. By timing the effects in the Keynote presentation, we could read the user's lines live on camera and the companion assistant would respond through the presentation. Our teachers said that we should have fun while working, and boy did we have fun doing this. Let's just hope the others find this as funny as we do.

When we showed this to the whole class and David, we first of all received a very good response: the presentation was seen as fun and well used. The response to the 3D render was also surprisingly positive. Both David and Chloé mentioned that our concept made them think of a project called “Nabaztag Rabbit”.

Image showing the Nabaztag rabbit.

I had never heard of this before, so I googled it. As far as I understand, it is a project that started many years ago, and the goal was to create a smart object that could do more than just light up. The rabbit could read out the user's emails, read the weather forecast, etc. In newer versions the rabbit also allows for physical interaction from the user and it can also act as the user for things like a back scratch. I now see why our project made them think of this rabbit. The rabbit is a simpler version of what we are envisioning. It even communicates to some extent with its ears.

David also mentioned that we are not the first ones to consider creating a companion assistant. He did not say that it is a bad idea, but rather focused on the question of why no companion assistant has taken root in people's living rooms. He said it could be because smart home assistants have become very cheap lately, which makes them more appealing to consumers than an expensive companion assistant. I won’t argue against this, but I also believe that one reason why there is no companion assistant in every living room is that a companion AI is still considered a novelty, an expensive toy with no real purpose. I also believe that companion AI is important both for people who live on their own and need that daily interaction, and for people who have some kind of disability and have to learn how to communicate. I have no data on this, but I think that if our concept worked and were sold in stores, it would gain popularity, especially now when people are forced to isolate and might need someone to talk to.


Psycho Pass (9-10)

Episode 9 - Paradise fruits

Kogami, Tsunemori and Ginoza were at the Oso Academy to continue their investigation. Ginoza took Kogami aside to apologize for his misjudgment. Kogami responded that he hadn't felt this lively in a long while.

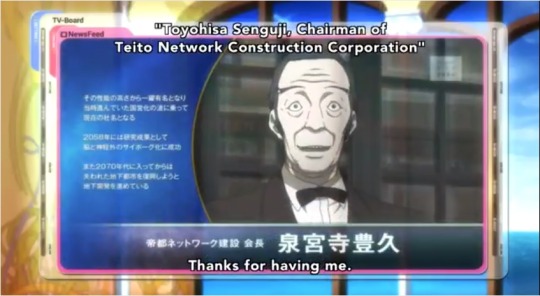

The next morning, Tsunemori was told about a news report video recommended by the Ministry of Welfare, in which Senguji Toyohisa was interviewed and talked about how immortality could be achieved by turning humans into cyborgs.

As Tsunemori drove to pick up Kogami, she played that video in her car. Kogami was not interested in what Senguji said and looked out of the window while the video played. Nonetheless, Tsunemori asked:

"...by becoming a cyborg?"

Kogami answered immediately, without looking at Tsunemori, that he was not interested. "Life as a latent criminal isn't the sort of thing you'd want to go on forever." Tsunemori countered that if the social system became more developed, the rights of latent criminals might improve. Kogami laughed it off, saying no wonder Tsunemori's psycho-pass tended to stay clear. The video continued. The host asked Senguji about a survey that showed people's reluctance to become more than 50% cyborg, and Senguji responded that everyone had become more or less cyborg by now. Even though they did not have artificial body parts, they had become totally dependent on portable information terminals, AI secretaries and similar technologies that functioned as their second brain. He concluded that the history of science was a history of the expansion of the human body's functionality, in other words, the history of man's cyberization.

Tsunemori kept driving without talking with Kogami. They arrived at a private property. Both quietly got out of the car.

As Kogami pressed the doorbell, Tsunemori commented that she didn't see many environmental holograms in use there. Kogami replied that the owner didn't like those kinds of things.

The door opened. Kogami greeted the owner and addressed him as Professor Saiga.

They were invited in. Once they were seated, Saiga started profiling Tsunemori, saying that she was from Chiba, not bad at athletic activities but couldn't swim, and that both her parents were still alive...

Saiga continued, talking about how Tsunemori's parents saw things, their opposition to Tsunemori becoming a Public Safety agent, and Tsunemori's relationship with her grandma.

Saiga, "Just some simple observations. People manifest all sorts of signs unconsciously. Once you get the knack of it, you can easily read those signs."

Kogami then told Saiga that he had two favors to ask: first, that Saiga teach Tsunemori how to do profiling, and second, that Saiga show Kogami the list of past course attendees.

Saiga asked who it was that Kogami was looking for. Kogami replied, "I think this guy is the worst criminal since the creation of the Sibyl system. He's a high-level intellectual criminal and is probably fit and in good health, too. He's someone with unique charisma. He rarely kills people with his own hands. He controls other people's minds and influences them. Much like a music conductor, he orchestrates one crime after another." Saiga asked Kogami to define charisma. "I used it to mean the nature of a hero or a ruler." Saiga said he would give that answer 20 points. Then he gave his answer:

1. The nature of a hero or a prophet; 2. An ability to simply make you feel good when you're around them; and 3. The intelligence to eloquently talk about all sorts of things.

Then Saiga asked Kogami which of these elements the man he was looking for had. "All of them."

At the Public Safety Office, Masaoka showed the profile of Shibata Yukimori, an elderly man whose identity had been used by someone who taught art at Oso Academy.

Another agent added that all the video data had been destroyed and that there was no way to reconstruct his appearance. Both creating a traditional photofit picture and drawing a composite sketch failed.

Kogami asked if Tsunemori found the crash course helpful. Tsunemori replied it helped a lot, then she wondered why Saiga's course was not in the Public Safety Bureau's archive.

Kogami said that was impossible. Saiga's courses were specially set up for Public Safety inspectors. But some of the people who attended the courses had clouded hues and high crime coefficient readings.

Tsunemori was surprised by this.

"Say there's a dark swamp and you can't see the bottom. In order to check the swamp, you have no choice but to jump in. Mr. Saiga is used to it since he's dived in to investigate it so many times. But it's not like all students can come back safely after diving into the swamp. There are gaps in their abilities and simply their suitability, too." "You seem like someone who'd dive deep...and yet come back safely."

"Well...at least the Sibyl system decided that I couldn't come back."

Cut to Makishima. He was with his guest, Senguji Toyohisa: the hunter who killed Rikako and the chairman who appeared in the interview.

Senguji smoked a pipe made of Rikako's bone.

Makishima asked Senguji whether, since he had overcome aging in his body, "all that's left is your mind?"

Senguji replied, "Yes. You maintain a healthy and sound life by sacrificing other lives. But if people only seek youth for their bodies and lose sight of the means to cultivate their minds, then naturally it will only lead to an increase in the number of living dead. How foolish, don't you think?"

"The energy that comes from thrills. It's a dangerous reward that goes hand in hand with death, huh?"

"That's right. In hunting, the tougher your prey is, the fresher the youth you can gain from it." Senguji replied. "With all that in mind, I think I can arrange for your next prey to be an exquisite one. It's an Enforcer from the Public Safety Bureau (MWPSB). His name is..."

Walking along with Kogami, Tsunemori said that checking Saiga's list of lecture attendees hadn't helped much.

"But if Makishima is a living man, he's left a trace somewhere for sure."

As soon as Kogami and Tsunemori entered the office, Ginoza reacted.

"That's because I asked him to," said Tsunemori.

"...making her a latent criminal who strayed from the right path like you?"

"A brat who's confused about anything and everything! Why do you think we have the classifications of Inspectors and Enforcers? It's in order to avoid the risk of having healthy people's Psycho-Pass get clouded by criminal investigations. We use latent criminals, who can never return to society, in our place. That's precisely why you can fulfill your duty while protecting your mind!" Kogami listened without making any objection.

Tsunemori though...

"That's not teamwork! Solving crimes or protecting our own Psycho-pass, which on earth is more important?!" "Do you wanna throw away your career? Are you going to sacrifice everything you've built so far?" "It's..."

"It's certainly true that I'm new. And you are a respectable senior to me, Inspector Ginoza. But please don't forget that we're on equal footing in terms of our rank! I'm managing my coefficient just fine. You may be senior to me, but I'd like you to restrain yourself from questioning my ability at the workplace and in front of the Enforcers!"

Tsunemori was really angry. Ginoza walked off without saying anything.

Facial expressions of Enforcers Kunizuka Yayoi and Kogami, and of Inspector Ginoza, when Tsunemori talked back.

Tsunemori walked out of the office. Masaoka followed her and talked her out of making a complaint to the Bureau chief.

In a one-on-one conversation, Masaoka told Tsunemori that Ginoza's father was a latent criminal.

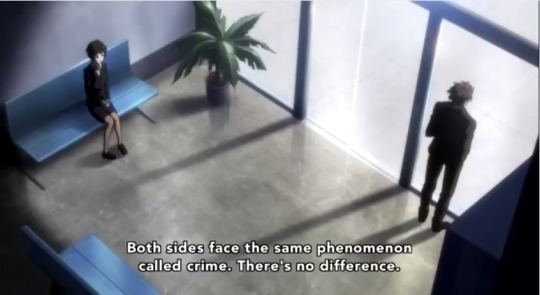

"Terrible misunderstandings and rumors about latent criminals were common in [the days when the Sibyl system was just put into operation]. If a family member happened to show a high crime coefficient, that alone caused the rest of the family to be treated as if they were the same. I'm sure he suffered quite a bit. When a detective gets deeply involved in an investigation, in the end, the Sibyl system starts keeping an eye on them just like it does the criminals. Committing a crime or cracking down on crimes. Both sides face the same phenomenon called crime. There's no difference."

"Now there are Enforcers, but before they created that position, there were many detectives who were diagnosed as latent criminals like that. Inspector Ginoza's father was one of them."

"That's why he can't forgive those who run a risk on their own. And yet, Kogami, his former colleague was also...He feels he was betrayed twice. First by his father and then by his colleague. That's why he acts like that towards you."

"Missy, you do have a family and friends too, don't you? If your Psycho-Pass gets clouded, this time, those people will suffer the same hardships Nobuchika did. In order to avoid that, we, Enforcers, are here."

"He used to. But now...he might be oblivious to everything that's not related to that Makishima guy."

Cut to Senguji in a conversation with Makishima. Senguji said he would not capture the target alive.

"It seems that you haven't noticed it yourself, so I'll tell you this. Kogami Shinya...When you speak that name, you look quite amused."

Another version of Ode to Joy was played in the background as this conversation went on.

End of episode 9.

Episode 10 - Methuselah's Game

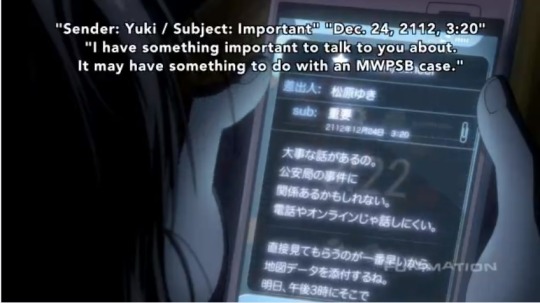

Tsunemori was woken in the middle of the night by an alert about an incoming email from her friend Funahara Yuki.

Accompanied by Kogami, Tsunemori went to the site Funahara specified.

Tsunemori felt the place was strange and looked like a trap. Kogami agreed and said Tsunemori must be the target.

Kogami then asked to be armed and entered the abandoned subway station on his own to check things out, while Tsunemori waited for backup and guided him from above ground.

As Kogami walked, Tsunemori's voice came through the device: "In the back there..." Interference jammed the message, but Kogami walked on nonetheless.

What Tsunemori said was, "In the back there, there's a dead end." And Tsunemori was struck by what she saw.

When the interference stopped, Kogami heard Tsunemori's voice telling him to keep going.

Then, Tsunemori's voice told Kogami to check the train cars.

A rush of water came through, followed by a train. Kogami clung to the train as he tried, to no avail, to communicate with Tsunemori.

All he heard was

And Tsunemori could not get her message to Kogami either.

Kogami entered the train compartment and saw a girl blindfolded in it. He removed her blindfold, produced his warrant card, and asked the girl who she was. "Funahara Yuki."

Above ground, the other members of Division One arrived.

"From the smell, it's definitely polluted water mixed with liquid waste. If something like that gets poured on you, you'll be in for a world of hurt."

"But Mr. Kogami unmistakably moved ahead beyond that point. In fact, he went even further, going through the wall!" said Tsunemori.

Kagari folded his arms and asked Tsunemori distrustfully, "Isn't that a problem with the navigator?"

Kunizuka commented as she checked the surroundings, "The issue might not be with the hardware, but the software. Since this area has been repeatedly redeveloped, you'd never know if the entered data matches the actual condition."

"Aren't you the only one who got deceived, Inspector Tsunemori?"

"Kogami left your surveillance and his location is lost. In other words, he might've staged this situation from the start to run away."

Masaoka chimed in, "Suppose we trust the navigator instead of the map data, do you know in which direction Kogami was headed? Did the signal show any strange movements?"

"Come to think of it...after a while, he suddenly started moving in a straight line really fast. He must be in some vehicle now. Isn't there a subway line around that runs in a north-south direction?" Kunizuka replied, "There is."

"The subway Ginza Line. But it was discontinued sixty years ago."

Kogami asked Funahara if she had any clue what had happened, but she hadn't the faintest idea. She had come home from work, taken a bath, and gone to sleep as usual; when she woke up, she was already on this train. Kogami still thought Tsunemori was the target, but then he had second thoughts. He replayed Tsunemori's voice and determined that it was a fake created from voice samples. "They knew all along that she wouldn't be the one to come to the basement, but someone else instead."

The train stopped.

An electric hound emerged, so Kogami and Funahara had to take the stairs, which led them straight to Senguji's hunting ground.

"Things have been going as we planned so far. It seems they're quick to pick up on things, too. The smarter the prey, the more enjoyable the hunt becomes," said Senguji.

"Looks like it will be a worthwhile game to watch from the bleachers," Makishima commented.

"Why don't you take part in the hunt for a change?" Senguji probed.

"My interest lies in what will transpire during this hunt. So it would be best for me to observe things as a third party."

Kogami inspected the hunting ground, and found a bag with chemical light sticks and bottles in it.

Kogami carried the bag and used the chemical light sticks to find their way out. As they walked, Funahara asked if Tsunemori was doing okay at work. Kogami replied that Tsunemori had faith and intuitively understood what it meant to be a detective.

Funahara kept talking about Tsunemori. Kogami threw another light stick and heard a different sound. He sensed a trap and confirmed it with the torch in his hand.

Funahara saw a bag identical to the one Kogami was carrying and ran to grab it right away. It turned out to be a device that would guide an electric hound right to them.

As the two ran from the hound, Senguji fired at Kogami. He missed.

Kogami told Funahara to stay hidden while he went out to try to take down one of the hounds.

He attacked one that had a transponder attached to it. The hound struggled and stepped right into a clamp, then darted onto the other trap and was destroyed. Kogami grabbed the transponder and ran.

Senguji fired and missed again.

Kogami ran back to Funahara and told her to run with him. The transponder in his hand still had battery power, but he needed to find an antenna to communicate with his colleagues.

Senguji was impressed with Kogami. Then he asked whether Makishima had added some twist to the game that he hadn't been told about. Makishima began, "When a man faces fear, his soul is tested. What he was born to seek...What he was born to achieve...His true nature will become clear."

"Are you trying to mock me?"

"It's not just that Kogami guy. I'm interested in you, too. Mr. Senguji. An unforeseen situation...an unexpected turn of events..."

"I know that is the thrill and excitement you've been seeking."

Makishima then pondered if Kogami understood the meaning of this game.

Kogami tried to figure out what was going on. "With you as bait, they lured in Tsunemori. But they knew it would actually be me who came looking for you instead. They factored all that in when setting up this hunt."

"It's you who they want to play with, right? I'm just...dammit! I'm just being dragged into this, right?!"

"That's right. With regard to you, your role should have been over when your email was sent. And yet...why did they put you on the subway?"

"Isn't it to make it difficult for you to run away?"

On hearing this, Kogami figured something out. "This fox hunt is not just a one-sided game. They are hinting that I've got a chance, too. In other words, I'm being tested. Whether I abandon you during this game or not, I bet it's also one of the keys to winning this game."

Then Kogami ordered Funahara to take off her clothes: "If you want to survive, just do as I say." Funahara complied. Kogami then asked whether Funahara usually coordinated her bra, and asked her to hand those over.

Kogami found the antenna he needed.

Above ground, Ginoza laid out his plan for the agents to go in. On the premise that a cymatic scan couldn't be fooled, he ordered that Kogami be shot with a Dominator as soon as he was spotted, to reveal his true intention. If Kogami didn't intend to run away, his coefficient would stay the same and he would only be paralyzed; but if he had tried to run away, the Dominator would switch to Lethal Eliminator mode and he would be killed. Tsunemori protested, pointing out that Kogami was Ginoza's friend. Ginoza coldly said, "If Kogami ends up dying, the fault will lie on you due to your poor supervision."

"If you had Kogami under control, this wouldn't have had to happen. How do you feel about someone dying because of your own incompetence?"

Masaoka stepped in, grabbed Ginoza by the collar of his jacket, hoisted him, and asked

Then Masaoka tossed Ginoza aside.

Kogami made contact and told the team his location.

The team went in.

Tsunemori thought as she ran inside, "Mr. Kogami, please let us make it in time."

End of episode 10

Comment

Episode nine: The scene in which Tsunemori talked back to Ginoza was an important moment of growth for this character. Japan would probably still be a male-dominated society in the era when this anime takes place, almost a hundred years from now, yet Tsunemori, a woman and a junior Inspector, voiced her courage and determination in a way that surprised Kunizuka and Kogami and annoyed Ginoza. It is the most memorable part of this episode for me. Another thing worth noting is that Masaoka referred to Ginoza by his given name, Nobuchika. Since addressing someone by their given name is a sign of closeness, this implicitly indicates that Masaoka and Ginoza were close.

Episode ten: The episode's title carries the name of Methuselah, which became synonymous with longevity, so this episode was like a quest for longevity, and the one who craved it the most was Senguji. Makishima changed the hunting game, turning it into a duel between Senguji and Kogami. Despite mounting disadvantages, Kogami managed to take down one electric hound and found a way to contact his colleagues.

A side note on Ginoza: keenly aware that his career was at stake, he tried hard not to be influenced by emotions. But in this episode his judgment was clearly clouded by feelings: his distrust of and disappointment in Kogami, and his contempt for Tsunemori. He spoke in a way that was coldhearted, mean, and, as Masaoka put it, sinister. On second thought, though, Ginoza found himself in a situation in which he needed to act tough or people would not take him seriously. He was not happy about it, and when Tsunemori protested that Kogami was his friend, Ginoza exploded. He needed to vent, and Tsunemori provided the perfect outlet.


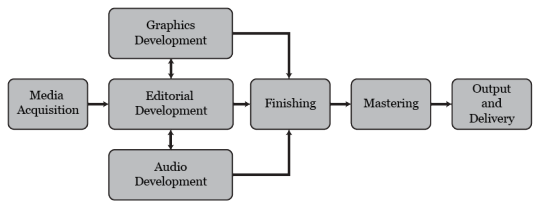

Colleges With Post Production Editing Majors In Louisiana

Alternatively, you can use PluginUpdate, which is a free app from Kasrog that will scan your plugin folder and compare it against a database in the cloud. The picture above shows a Pro Tools session with a stereo audio track called “MUSIC”. Freeze is designed to circumvent very CPU-intensive processes, such as software instruments with a complex voice architecture, and complex plug-ins (such as reverbs, filter banks, or FFT-based effects).

An SCR file is a screensaver file for Windows used to display vector graphics or text animations, play slide shows, animations, or videos, and it may include sound effects; it runs when a computer has been inactive for a customized period of time.

What is the final stage for picture editing in the post production workflow process?

Introduction (with title, release date, background information)

Summary of the story

Analysis of the plot elements (rising action, climax)

Creative elements (dialogues, characters, use of colors, camera techniques, mood, tone, symbols, costumes, or anything that contributes to or takes away from the overall plot)

Running Windows Firewall, Windows Defender, or other antivirus software on your editing clients can have a negative impact on the clients’ performance. Therefore, the most commonly recommended course of action is to disable these services altogether while restricting Internet access to the editing clients. It is important to keep the time on your storage and on the clients synchronized. Your ELEMENTS system can easily offer time synchronization to all clients. Under Media, you can remove or add the sounds you want Windows to play.


Team collaboration in the workplace is more than just teamwork—it’s an approach to project management that combines working together, innovative thinking, and equal participation to achieve a common goal. Successful team collaboration in the workplace is often supported by technology tools or collaboration software that improve communication, decision-making and workflow, as well as a work culture that values every individual’s contribution.

Purpose of process mapping

You can install it standalone and integrate with any application through API or together with OnlyOffice's collaborative system that offers additional possibilities for document management. If you are looking for a collaborative document editor with good MS Office format compatibility and complex feature-set, OnlyOffice is just what you need.

Using LucidLink and Avid Media Composer Print

What follows is the workflow my team uses to minimize the friction of creating social video on a consistent basis. This is, of course, not the end-all of how video teams should operate. However, if you’re new to regularly producing social media video, it may help you skip many of the challenges that commonly disrupt teams. When I talk with businesses about using more video, one of the biggest obstacles is production.

Can 7zip open BIN files?

Click the "Tools" button on the menu, and then select the "Convert Image File Format" option. The "Convert" dialog will pop up. Click "Browse", choose the BIN/CUE file you wish to convert, and select the "ISO files (*.iso)" option.

Your role is to ensure that post production is set up for success. At the start of a TV show or feature film, you will be working with the DIT and/or DP to ensure the DP’s creative vision (color-wise) is automated through post production. An understanding of commonly used cameras and on-set software/hardware is required, along with a working knowledge of post-production tools and how they use this on-set color. Beyond the on-set knowledge, you will need to know the dailies workflow inside and out, as your job will require customizing the process to fit the needs of each and every job that comes in. Beyond the dailies work, your team will play a major role in VFX and promo/marketing pipeline development, so an understanding of this world is also a big asset.

Are movies getting longer?

Part (but not all) of the reason why top-grossing movies have a longer average run time is that there is a great number of extremely long outliers. 10% of top-grossing movies are longer than 140 minutes, compared with just 96% of all movies in cinemas.

Focus Check: Upgrade Your Production and Post-Production Workflow

This gives users access to this node-based effects compositing tool from within the Media Composer user interface. Onboard DNxHR encoding for capture workflows will be enabled on DNxIO via a future no-cost firmware update. Updated software for supported creative editorial applications will be required to take advantage of this capability. Avid Artist

As soon as I let go of the mouse button, Pro Tools renders out the audio version. The so-called .bin files virus is a threat designed to act like a typical data-locker ransomware: after encrypting data, it extorts a ransom payment from victims. Frozen instrument tracks display both the MIDI data and the audio waveform, though neither is editable.

I’ll never forget the first time I read “Behind the Seen”, about Walter Murch’s journey editing Cold Mountain using Final Cut Pro (I fondly refer to this book as “Porn for editors”). One of the most profound things I learned from this book was his intricate system of colored index cards put up on a wall to help him organize the structure of complex films. I was mesmerized by this concept; it was like Tetris mixed with screenwriting and film editing…my own personal version of heaven. Observing technical adoption, it is easy to see how useful accurate metadata is in streamlining the production workflow all the way through final post processing. Providing vast data-based resources for FX departments to draw from for their work, and for editors to have at their disposal, helps streamline productivity down the pipeline.

What is a film production schedule?

The Orad product lines complement the Avid MediaCentral Platform, and many of Avid’s and Orad’s solutions are already integrated and widely used together. Avid Artist


A Look at ‘The Decisive Moment’ by Henri Cartier-Bresson


A bible for photographers. That is how Robert Capa described The Decisive Moment by Henri Cartier-Bresson. Almost 70 years after it was first published, this book still has a lot to say to photographers, especially street and documentary photographers.

The book is said to be an essential one for any photography collection. But is it? Here is a brief overview of this legendary book.

Henri Cartier-Bresson is said to be a founder of modern photojournalism. I have actually talked about his life and photography in one of my very first videos. He is the photographer most associated with the idea of the “decisive moment.” I wouldn’t say he invented it, but he definitely gave it a name and introduced it to a wider audience.

Here’s what Cartier-Bresson told the Washington Post in 1957:

Photography is not like painting. There is a creative fraction of a second when you are taking a picture. Your eye must see a composition or an expression that life itself offers you, and you must know with intuition when to click the camera. That is the moment the photographer is creative, oop! The Moment! Once you miss it, it is gone forever.

The book was published in 1952. It was originally titled Images à la Sauvette (“images on the run”) in French and was published in English with a new title, The Decisive Moment. The words were actually taken from a quote by the 17th-century Cardinal de Retz, who said, “There is nothing in this world that does not have a decisive moment.”

You can find the first edition on eBay for $1,000 or more, depending on the condition. It had a print run of 10,000 copies: 3,000 of the French edition and 7,000 of the American edition. The original price was $12.50 in North America.

The new edition of the book is a hardback that comes in an extra hard case, which houses the book itself and a pamphlet that I will talk about later.

The new 2015 edition of The Decisive Moment. Photo courtesy Amazon.

The artwork on the case and on the book itself is by Henri Matisse. It’s not a photograph but one of Matisse’s signature cut-outs.

In the top right, we can see the sun that shines over the Blue Mountains. In the middle is a bird holding a branch. Then there are a few green and black vegetal forms and a stone at the bottom. On the back cover, we can see color sparkles of green and blue. The spirals are supposed to evoke the pace of time.

What is interesting about this cover is that when you look closely, the name Cartier-Bresson is actually missing the hyphen. I don’t actually know why that is. Maybe Matisse forgot to draw it there and then they just didn’t want to tell him to fix his artwork. The pamphlet suggests that the missing hyphen could be deliberate, as if Matisse wanted to express Cartier-Bresson’s duality and his shifting temperament.

The book is pretty big. Even without the case, it is the biggest book I own. It measures 37 × 27 cm, proportions that suit the 24 × 36 mm film format Cartier-Bresson used. Each page can fit one horizontal photograph or two vertical ones. The pages are stitched in a way that lets the book open fully flat. It has 160 pages with 126 photographs and weighs over 5.5 lb (2.5 kg).
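As a quick check of those proportions (this arithmetic is mine, not from the book or its pamphlet), compare the page's aspect ratio with a single 24 × 36 frame and with two vertical frames placed side by side:

$$
\frac{37}{27} \approx 1.37, \qquad \frac{36}{24} = 1.5, \qquad \frac{2 \times 24}{36} = \frac{48}{36} \approx 1.33
$$

A horizontal frame is slightly wider than the page and a pair of vertical frames slightly narrower, so either layout fills the page with only small margins.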

The pamphlet is a very nice behind-the-scenes booklet composed of quotes by Henri Cartier-Bresson and other information about how the book was made. It tells the story of Cartier-Bresson working on the book, the sequencing, the work with the publisher, the story of the cover, and so on.

The publisher insisted that the images be accompanied by text in the book. The idea was to provide technical and “how-to” information, but it was something Cartier-Bresson wasn’t very fond of.

The book starts with an introduction by Henri Cartier-Bresson followed by photographs split into two sections, chronologically and geographically. The first one contains photographs taken in the West from the years 1932 to 1947. The second contains photographs taken in the East from 1947 to 1952. Since the introduction wasn’t technical enough, there is also a technical text by Richard L. Simon at the end of the book, which was only included in the American version.

We can also consider the two sections to be phases of Cartier-Bresson’s career. During his career, Cartier-Bresson oscillated between art and photojournalism, and the difference is obvious when you compare his photographs from before and after he joined Magnum. When selecting the photographs, Cartier-Bresson clearly favored the reportage images he made as a member of Magnum Photos; some of his well-known images from the early 1930s, like Hyères, France, 1932 (AKA The Cyclist), are missing.

As The Decisive Moment was published five years after he joined Magnum, he left out a lot of his surrealism-inspired images and used more of his photojournalism work, especially in the second section, which contains only Magnum images. Those photos also have much longer captions, as they were mostly shot for the press.

The book is evidence of Cartier-Bresson’s shift from art to photojournalism during that time. He would later switch back when he focused on exhibitions and wanted to be perceived more as an artist rather than a photojournalist.

All photographs are in black and white, as Cartier-Bresson didn’t like shooting in color. He saw color as technically inferior (due to the slow speeds of color films) as well as aesthetically limited.

When the book was published, it was immediately clear that it was unique, not only in size but also in quality. Even though critics received it very well, initial sales were poor, which is why there was no second printing until 2015.

“The Decisive Moment” wasn’t actually the only title Cartier-Bresson considered. One of the favorite possible titles was “À pas de loup” (“Tiptoeing”), which expressed the way in which Cartier-Bresson approached those whom he photographed. “The subject must be approached tiptoeing,” he once said.

The second possible title was “Images à la Sauvette” (roughly translated as “images on the run”), which ended up being the name of the French edition. The phrase refers to small street vendors ready to flee when asked for their licenses, and it also expressed his concept of photography.

My favorite picture from the book is one from Kashmir showing Muslim women on the slopes of Hari Parbat Hill in Srinagar praying toward the sun rising behind the Himalayas.

The #ICPMuseum is the first venue in the United States to present “Henri Cartier-Bresson: The Decisive Moment.” The exhibition shares the behind-the-scenes story and offers a rare chance to see first edition printing. https://t.co/blKiNC0Wgo

Srinagar, India, 1948 pic.twitter.com/UJY54xTG3P

— ICP (@ICPhotog) July 9, 2018

As you can see from Henri Cartier-Bresson’s photographs, he adopted many styles. When I first saw this photograph, I just thought how timeless it looked. It could easily have been taken thousands of years ago, if cameras had existed then. Cartier-Bresson shot some very important events but also some casual photos.

I think The Decisive Moment is an amazing book, even though it is expensive. The valuable information it provides and its physical form really make you feel like you are holding a piece of history. It would be a great gift for any photographer, but especially for someone who shoots street or documentary photography.

About the author: Martin Kaninsky is a photographer, reviewer, and YouTuber based in Prague, Czech Republic. The opinions expressed in this article are solely those of the author. Kaninsky runs the channel All About Street Photography. You can find more of his work on his website, Instagram, and YouTube channel.


via cdm.link

Reimagine pixels and color, melt your screen live into glitches and textures, and do it all for free on the Web – as you play with others. We talk to Olivia Jack about her invention, live coding visual environment Hydra.

Inspired by analog video synths and vintage image processors, Hydra is open, free, collaborative, and all runs as code in the browser. It’s the creation of US-born, Colombia-based artist Olivia Jack. Olivia joined our MusicMakers Hacklab at CTM Festival earlier this winter, where she presented her creation and its inspirations, and jumped in as a participant – spreading Hydra along the way.

Olivia’s Hydra performances are explosions of color and texture, where even the code becomes part of the aesthetic. And it’s helped take Olivia’s ideas across borders, both in the Americas and Europe. It’s part of a growing interest in the live coding scene, even as that scene enters its second or third decade (depending on how you count), but Hydra also represents an exploration of what visuals can mean and what it means for them to be shared between participants. Olivia has rooted those concepts in the legacy of cybernetic thought.

Oh, and this isn’t just for nerd gatherings – her work has also lit up one of Bogota’s hotter queer parties. (Not that such things need be thought of as a binary, anyway, but in case you had a particular expectation about that.) And yes, that also means you might catch Olivia at a JavaScript conference; I last saw her back from making Hydra run off solar power in Hawaii.

Following her CTM appearance in Berlin, I wanted to find out more about how Olivia’s tool has evolved and its relation to DIY culture and self-fashioned tools for expression.

CDM: Can you tell us a little about your background? Did you come from some experience in programming?

Olivia: I have been programming now for ten years. Since 2011, I’ve worked freelance — doing audiovisual installations and data visualization, interactive visuals for dance performances, teaching video games to kids, and teaching programming to art students at a university, and all of these things have involved programming.

Had you worked with any existing VJ tools before you started creating your own?

Very few; almost all of my visual experience has been through creating my own software in Processing, openFrameworks, or JavaScript rather than using software. I have used Resolume in one or two projects. I don’t even really know how to edit video, but I sometimes use [Adobe] After Effects. I had no intention of making software for visuals, but started an investigative process related to streaming on the internet and also trying to learn about analog video synthesis without having access to modular synth hardware.


Alexandra Cárdenas and Olivia Jack @ ICLC 2019:


In your presentation in Berlin, you walked us through some of the origins of this project. Can you share a bit about how this germinated, what some of the precursors to Hydra were and why you made them?

It’s based on an ongoing investigation of:

Collaboration in the creation of live visuals

Possibilities of peer-to-peer [P2P] technology on the web

Feedback loops

Precursors:

Satellite Arts project by Kit Galloway and Sherrie Rabinowitz http://www.ecafe.com/getty/SA/ (1977)

Internet as a live immersive place

Sandin image processor, analog synthesizer: https://en.wikipedia.org/wiki/Sandin_Image_Processor

My work with CultureHub starting in 2015 creating browser-based tools for network performance https://www.culturehub.org/

A significant moment came as I was doing a residency at Platohedro in Medellín in May of 2017. I was teaching beginning programming, but also wanted to have larger conversations about the internet and talk about some possibilities of peer-to-peer protocols. So I taught programming using p5.js (the JavaScript version of Processing). I developed a library so that the participants of the workshop could share in real time what they were doing, and the other participants could use what they were doing as part of the visuals they were developing in their own code. I created a class/library in JavaScript called pixel parche to make this sharing possible. “Parche” is a very Colombian word in Spanish for a group of friends; this reflected the community I felt while at Platohedro, the idea of just hanging out and jamming and bouncing ideas off of each other. The tool clogged the network and I tried to cram too much information into a very short amount of time, but I learned a lot.


I was also questioning some of the metaphors we use to understand and interact with the web. “Visiting” a website is exchanging a bunch of bytes with a faraway place and routed through other far away places. Rather than think about a webpage as a “page”, “site”, or “place” that you can “go” to, what if we think about it as a flow of information where you can configure connections in realtime? I like the browser as a place to share creative ideas – anyone can load it without having to go to a gallery or install something.

And I was interested in using the idea of a modular synthesizer as a way to understand the web. Each window can receive video streams from and send video to other windows, and you can configure them in real time using WebRTC (realtime web streaming).
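To make the modular-synth analogy concrete, here is a minimal sketch in plain JavaScript of a patch bay that records which window's stream feeds which. It is a hypothetical model, not code from Hydra or pixel parche: the class `PatchBay` and its methods are invented for illustration, and no video or WebRTC connection is actually made. In a browser, each route would correspond to a peer connection carrying a stream.

```javascript
// Hypothetical patch bay: records which window's stream feeds which.
// No networking happens here; in a browser, each route would be backed
// by a WebRTC peer connection carrying a video stream.
class PatchBay {
  constructor() {
    // destination window id -> Set of source window ids
    this.routes = new Map();
  }

  // Patch a "cable" from one window's output into another window's input.
  connect(source, destination) {
    if (!this.routes.has(destination)) {
      this.routes.set(destination, new Set());
    }
    this.routes.get(destination).add(source);
  }

  // Pull a cable out; every other route keeps running.
  disconnect(source, destination) {
    const sources = this.routes.get(destination);
    if (sources) {
      sources.delete(source);
    }
  }

  // List the windows currently feeding into `destination`.
  inputsOf(destination) {
    return [...(this.routes.get(destination) || [])];
  }
}

// Re-patching while "performing": routes change at runtime.
const bay = new PatchBay();
bay.connect("alice", "bob");
bay.connect("carol", "bob");
bay.connect("bob", "alice"); // streams can loop back, forming a feedback patch
bay.disconnect("carol", "bob");
console.log(bay.inputsOf("bob"));
console.log(bay.inputsOf("alice"));
```

Keeping the routing table separate from the streams themselves is what, in this sketch, lets connections be re-patched live without tearing down anything else.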

Here’s one of the early tests I did:


hiperconectadxs from Olivia Jack on Vimeo.

I really liked this philosophical idea you introduced of putting yourself in a feedback loop. What does that mean to you? Did you discover any new reflections of that during our hacklab, for that matter, or in other community environments?

It’s processes of creation, not having a specific idea of where it will end up – trying something, seeing what happens, and then trying something else.

Code tries to define the world using a specific set of rules, but at the end of the day it ends up chaotic. Maybe the world is chaotic. It’s important to be self-reflective.

How did you come to developing Hydra itself? I love that it has this analog synth model – and these multiple frame buffers. What was some of the inspiration?

I had no intention of creating a “tool”… I gave a workshop at the International Conference on Live Coding in December 2017 about collaborative visuals on the web, and made an editor to make the workshop easier. Then afterwards people kept using it.

I didn’t think too much about the name but [had in mind] something about multiplicity. Hydra organisms have no central nervous system; their nervous system is distributed. There’s no hierarchy of one thing controlling everything else, but rather interconnections between pieces.

Ed.: Okay, Olivia asked me to look this up and, wow, check out nerve nets. There’s nothing like a head, let alone a central brain. Instead, the aquatic creatures in the genus Hydra have their sensory and nerve cells linked as essentially one interconnected network, with cells that detect light and touch forming a distributed sensory awareness.

Most graphics abstractions are based on the idea of a 2D canvas or 3D rendering, but the computer's graphics card actually knows nothing about this; it’s just concerned with pixel colors. I wanted to make it easy to play with the idea of routing and transforming a signal rather than drawing on a canvas or creating a 3D scene.

This also contrasts with directly programming a shader (one of the other common ways that people make visuals using live coding), where you generally only have access to a single frame buffer to render into. In Hydra, you have multiple frame buffers that you can dynamically route and feed into each other.
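Ed.: To make the frame-buffer idea concrete, here is a small CPU-side TypeScript sketch of two buffers feeding into each other. It is not Hydra's code, which runs on the GPU via WebGL. The names o0 and o1 borrow Hydra's naming for its output buffers. The 8×8 grayscale size, the helpers `sample`, `render` and `step`, and the particular patch (a bright column plus a shifted, faded copy of the other buffer) are all illustrative assumptions.

```typescript
// CPU-side sketch of two frame buffers feeding into each other.
// Assumptions: tiny 8x8 grayscale frames and a hand-written render loop;
// Hydra itself does this on the GPU with WebGL.
type Frame = Float32Array; // one brightness value (0..1) per pixel, row-major

const W = 8;
const H = 8;

const makeFrame = (): Frame => new Float32Array(W * H);

// Read a pixel with wrap-around, like a texture set to repeat.
function sample(frame: Frame, x: number, y: number): number {
  const xi = ((x % W) + W) % W;
  const yi = ((y % H) + H) % H;
  return frame[yi * W + xi];
}

// Evaluate a per-pixel function for every pixel of a fresh frame.
function render(shade: (x: number, y: number) => number): Frame {
  const out = makeFrame();
  for (let y = 0; y < H; y++) {
    for (let x = 0; x < W; x++) {
      out[y * W + x] = shade(x, y);
    }
  }
  return out;
}

// The outputs, named after Hydra's o0..o3 buffers.
type Outputs = { o0: Frame; o1: Frame };

// One frame of the patch. Every read comes from the previous frame,
// so the order in which the outputs are rendered does not matter.
function step(prev: Outputs): Outputs {
  return {
    // o0: a bright column at x = 0, plus o1 shifted right by one pixel and halved
    o0: render((x, y) => Math.max(x === 0 ? 1 : 0, 0.5 * sample(prev.o1, x - 1, y))),
    // o1: a one-frame delay of o0, which closes the feedback loop
    o1: render((x, y) => sample(prev.o0, x, y)),
  };
}

let state: Outputs = { o0: makeFrame(), o1: makeFrame() };
for (let frame = 0; frame < 20; frame++) {
  state = step(state);
}
// Each row of o0 now fades from left to right: 1, 0.5, 0.25, ...
```

Every read comes from the previous frame, so you can re-route freely. Feeding o0 into itself or adding a third buffer only changes which frame each `render` call samples.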

MusicMakers Hacklab in Berlin. Photo: Malitzin Cortes.

Livecoding is of course what a lot of people focus on in your work. But what’s the significance of code as the interface here? How important is it that it’s functional coding?

It’s inspired by [Alex McLean’s sound/music pattern environment] TidalCycles: the idea of taking a simple concept and working from there. In Tidal, the base element is a pattern in time, and everything is a transformation of that pattern. In Hydra, the base element is a transformation from coordinates to color. All of the other functions either transform coordinates or transform colors. This directly corresponds to how fragment shaders and low-level graphics programming work: the GPU runs the same program in parallel for every pixel, and each run receives the coordinates of its pixel and outputs a single color.
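Ed.: Here is a rough TypeScript sketch of that model. It assumes plain closures can stand in for GLSL fragment shaders. A source maps a coordinate to a color, coordinate transforms are applied before the source, and color transforms are applied after it. The names `osc`, `rotate` and `invert` echo Hydra functions, but these are simplified stand-ins written for illustration. `renderGrid` is a hypothetical helper that mimics the GPU evaluating the same function for every pixel.

```typescript
// Sketch: every image is a function from coordinates to color.
// Assumption: plain closures stand in for GLSL fragment shaders; osc, rotate and
// invert are simplified illustrations, not Hydra's implementations.
type Vec2 = { x: number; y: number }; // normalized coordinates, 0..1
type RGB = { r: number; g: number; b: number }; // channels, 0..1
type Source = (p: Vec2) => RGB;

// Coordinate transforms run before the source: they change where we look.
const mapCoords = (src: Source, f: (p: Vec2) => Vec2): Source => (p) => src(f(p));

// Color transforms run after the source: they change what we see.
const mapColor = (src: Source, g: (c: RGB) => RGB): Source => (p) => g(src(p));

// A source: grayscale sine-wave stripes with `freq` cycles along the x axis.
const osc = (freq: number): Source => (p) => {
  const v = 0.5 + 0.5 * Math.sin(2 * Math.PI * freq * p.x);
  return { r: v, g: v, b: v };
};

// Rotate the coordinate system around the centre of the frame.
const rotate = (src: Source, angle: number): Source =>
  mapCoords(src, ({ x, y }) => {
    const dx = x - 0.5;
    const dy = y - 0.5;
    return {
      x: 0.5 + dx * Math.cos(angle) - dy * Math.sin(angle),
      y: 0.5 + dx * Math.sin(angle) + dy * Math.cos(angle),
    };
  });

// Invert each channel; this never looks at coordinates at all.
const invert = (src: Source): Source =>
  mapColor(src, ({ r, g, b }) => ({ r: 1 - r, g: 1 - g, b: 1 - b }));

// Like a GPU, run the same function independently for each pixel centre.
function renderGrid(src: Source, w: number, h: number): RGB[][] {
  const rows: RGB[][] = [];
  for (let j = 0; j < h; j++) {
    const row: RGB[] = [];
    for (let i = 0; i < w; i++) {
      row.push(src({ x: (i + 0.5) / w, y: (j + 0.5) / h }));
    }
    rows.push(row);
  }
  return rows;
}

// Compose: stripes, rotated a quarter turn, then inverted.
const patch = invert(rotate(osc(4), Math.PI / 2));
const image = renderGrid(patch, 16, 16);
```

Rotating by a quarter turn makes the stripes vary along y instead of x. `invert` flips each color without knowing anything about coordinates, which is why the two kinds of function can be stacked in any order.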

I think immutability in functional (and declarative) coding paradigms is helpful in live coding; you don’t have to worry about mentally keeping track of a variable and what its value is or the ways you’ve changed it leading up to this moment. Functional paradigms are really helpful in describing analog synthesis – each module is a function that always does the same thing when it receives the same input. (Parameters are like knobs.) I’m very inspired by the modular idea of defining the pieces to maximize the amount that they can be rearranged with each other. The code describes the composition of those functions with each other. The main logic is functional, but things like setting up external sources from a webcam or live stream are not at all; JavaScript allows mixing these things as needed. I’m not super opinionated about it, just interested in the ways that the code is legible and makes it easy to describe what is happening.
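Ed.: Here is a sketch of the idea of modules as pure functions with parameters as knobs. It assumes a hypothetical `Patch` class that only mimics the chained style of Hydra code such as `osc(10).rotate(0.5).out()`, and it is not Hydra's implementation. Each method returns a new patch and leaves the old one untouched, so re-running a line never depends on hidden state left over from earlier edits.

```typescript
// Sketch of immutable, chainable modules whose parameters act like knobs.
// Assumption: this Patch class is hypothetical; it only mimics the flavour of
// Hydra's chained syntax and is not Hydra's implementation.
type Signal = (x: number) => number; // 1-D for brevity: position -> brightness

class Patch {
  // The wrapped function is readonly, so a Patch never changes once built.
  private readonly fn: Signal;

  private constructor(fn: Signal) {
    this.fn = fn;
  }

  // A source module: a sine wave with `freq` cycles per unit.
  static osc(freq: number): Patch {
    return new Patch((x) => 0.5 + 0.5 * Math.sin(2 * Math.PI * freq * x));
  }

  // Each method returns a NEW Patch and leaves `this` untouched.
  scale(amount: number): Patch {
    const f = this.fn;
    return new Patch((x) => f(x * amount));
  }

  brightness(amount: number): Patch {
    const f = this.fn;
    return new Patch((x) => Math.min(1, Math.max(0, f(x) + amount)));
  }

  // Read the output at one position; same input, same output, every time.
  at(x: number): number {
    return this.fn(x);
  }
}

const base = Patch.osc(2);
const variant = base.scale(2).brightness(0.1); // turn two "knobs"
// `base` is unchanged: base.at(0.125) is still 1, while variant.at(0.125) is about 0.6.
```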

What’s the experience you have of the code being onscreen? Are some people actually reading it / learning from it? I mean, in your work it also seems like a texture.

I am interested in it being somewhat understandable even if you don’t know what it is doing or that much about coding.


Code is often a visual element in a live coding performance, but I am not always sure how to integrate it in a way that feels intentional. I like using my screen itself as a video texture within the visuals, because then everything I do (highlighting, scrolling, moving the mouse, or changing the size of the text) becomes part of the performance. It is really fun! Recently I learned about prepared desktop performances, and, related to the live-coding mantra of “show your screens,” I like the idea that everything I’m doing is a part of the performance. That’s also why I mirror the screen from my laptop directly to the projector. Contrast that with just seeing the output of an AV set and having no idea how it was created or what the performer is doing. I don’t think it’s necessary all the time, but it feels like using the computer as an instrument and exploring the different ways that it is an interface.

The algorave thing is now getting a lot of attention, but you’re taking this tool into other contexts. Can you talk about some of the other parties you’ve played in Colombia, or when you turned the live code display off?

Most of my inspiration and references for what I’ve been researching and creating have been outside of live coding — analog video synthesis, net art, graphics programming, peer-to-peer technology.

Having just said I like showing the screen, I think it can sometimes be distracting and isn’t always necessary. I did visuals for Putivuelta, a queer collective and party focused on diasporic Latin club music, and there I wanted to focus just on the visuals. Also, I am just getting started with this and I like to experiment each time; I usually develop a new function or try something new every time I do visuals.

Community is such an interesting element of this whole scene. So I know with Hydra so far there haven’t been a lot of outside contributions to the codebase – though this is a typical experience of open source projects. But how has it been significant to your work to both use this as an artist, and teach and spread the tool? And what does it mean to do that in this larger livecoding scene?

I’m interested in how the technical details of Hydra foster community: as soon as you log in, you see something that someone has made. It’s easy to share via a Twitter bot, to see and live-edit the code of what someone has made, and to make your own. It acts as a gallery of shareable things that people have made:

https://twitter.com/hydra_patterns


Although I’ve developed this tool, I’m still learning how to use it myself. Seeing how other people use it has also helped me learn how to use it.

I’m inspired by work that Alex McLean and Alexandra Cardenas and many others in live coding have done on this — just the idea that you’re showing your screen and sharing your code with other people to me opens a conversation about what is going on, that as a community we learn and share knowledge about what we are doing. Also I like online communities such as talk.lurk.org and streaming events where you can participate no matter where you are.

Madrid performance. Photo: Tatiana Soshenina.

I’m also really amazed at how this is spreading through Latin America. What has the scene been like there for you – especially now living in Bogota, having grown up in California?

I think people are more critical about technology and so that makes the art involving technology more interesting to me. (I grew up in San Francisco.) I’m impressed by the amount of interest in art and technology spaces such as Plataforma Bogota that provide funding and opportunities at the intersection of art, science, and technology.

The press lately has fixated on live coding or algorave but maybe not seen connections to other open source / DIY / shared music technologies. But – maybe now especially after the hacklab – do you see some potential there to make other connections?

To me it is all really related, about creating and hacking your own tools, learning, and sharing knowledge with other people.

Oh, and lastly – want to tell us a little about where Hydra itself is at now, and what comes next?

Right now, it’s improving documentation and making it easier for others to contribute.

Personally, I’m interested in performing more and developing my own performance process.

Thanks, Olivia!

Check out Hydra for yourself, right now:

https://hydra-editor.glitch.me/

Previously:

Inside the livecoding algorave movement, and what it says about music

Magical 3D visuals, patched together with wires in browser: Cables.gl

Tags: bogota, browser, code, coding, colombia, frame buffers, free as in freedom, free-software, github, hydra, javascript, live-coding, live-visuals, motion, Olivia Jack, online, open-source, Software, usa, visuals, VJ, Web


How Reviews Impact Google Shopping Ads (and How to Get More of Them)

Did you know that 84% of online shoppers trust reviews just as much as they do their friends? That means that any time you can show off the trustworthiness of your products with positive reviews, you’ll be much more likely to close the deal.

With Google Shopping ads, you can promote your best products with your overall rating in plain sight. You just have to know how to set up your ads, get reviews, and make sure they’re all as good as possible.

Not quite convinced? Not sure where to start? Keep reading.

Here, I’ll discuss what Google Shopping ads are, why you should be using them, and how user reviews play into your long-term success. Hopefully, this will convince you to start using this awesome ad type as soon as possible.

Let’s get started.

Google Shopping Ads: A Basic Introduction

Google Shopping ads are an ad option on the Google Search Network. Essentially, these ads allow you to sell a product directly on a search engine results page (SERP).

For example, let’s say you’re looking to buy a new pair of Jordans. If you were to put the search term “Nike Jordans” in Google, the first thing you would see is a carousel of images with information underneath.

These are Google Shopping ads. With this quick example, you can start to get an idea of how immediately effective they are.

Shopping ads capture the eye with their imagery, provide price competition right there on the page, and present the most logical place for a user to start a shopping journey.

To set them up, you’ll need to have a Google Merchant Center account linked with a Google Ads account. Then, follow Google’s guided tour and set up your products so that you can easily add them to your ads.

You’ll also need to create and maintain a product data feed if you want total control over your Shopping ads experience.
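If you build the product data feed yourself, here’s a rough TypeScript sketch of what a minimal tab-separated feed might look like. The attribute names are based on Google’s product data specification as I understand it, so check the current spec in Merchant Center before uploading anything. The product, SKU, and URLs are made up, and `toTsv` and `clean` are hypothetical helpers, not part of any Google API.

```typescript
// Sketch: build a minimal tab-separated product feed for Google Merchant Center.
// Assumptions: attribute names follow Google's product data specification as we
// understand it; verify against the current spec. The product and URLs are made up.
interface FeedItem {
  id: string;
  title: string;
  description: string;
  link: string; // landing page for the product
  image_link: string; // main product image
  availability: "in_stock" | "out_of_stock" | "preorder" | "backorder";
  price: string; // amount plus ISO 4217 currency code, e.g. "129.99 USD"
  condition: "new" | "refurbished" | "used";
  brand: string;
}

// Column order for the header row and every data row.
const COLUMNS: (keyof FeedItem)[] = [
  "id", "title", "description", "link", "image_link",
  "availability", "price", "condition", "brand",
];

// Tabs and line breaks inside a value would break the TSV layout.
const clean = (value: string): string => value.replace(/[\t\r\n]+/g, " ").trim();

function toTsv(items: FeedItem[]): string {
  const header = COLUMNS.join("\t");
  const rows = items.map((item) => COLUMNS.map((col) => clean(item[col])).join("\t"));
  return [header, ...rows].join("\n");
}

const feed = toTsv([
  {
    id: "SKU-001",
    title: "Example Basketball Shoe",
    description: "Hypothetical product used only to illustrate the feed format.",
    link: "https://example.com/products/sku-001",
    image_link: "https://example.com/images/sku-001.jpg",
    availability: "in_stock",
    price: "129.99 USD",
    condition: "new",
    brand: "ExampleBrand",
  },
]);
```

Keeping the column list in one place means the header row and every product row can never drift out of order.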

Why You Need Google Shopping Ads

When you look at the statistics for Google Shopping ads, it’s easy to see why so many brands choose to use them.

By far, most brands are putting their ad spend into Google Shopping ads. In some cases, it’s as much as 80%.


In fact, one study found that Google Shopping ad spend increased 40% in just one year.

But these costs are justified. The same study showed that the overwhelming majority of ad clicks for brands come via Google Shopping ads. Text ads just don’t compare.


And Google isn’t just resting on the success it already has. It has recently started allowing brands to include a video showcase in their ads, with great success.

While the technique is new, it’s indicative of Google’s overall drive to improve their already dominant ad platform.

And the evidence doesn’t stop there. In one case study, a brand saw a 272% return on ad spend when they ran segmented Shopping ad campaigns.

In another case, a brand saw a 425% increase in new customer transactions. That’s more than five times as many new-customer transactions as before, from just one type of ad.
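If percentages like these are hard to picture, here’s the arithmetic as a small TypeScript sketch. It assumes ROAS means revenue divided by ad spend and that a percentage increase is measured against the starting value. The case studies themselves may define their metrics differently, and the 100-transaction baseline is just a made-up example.

```typescript
// Worked arithmetic for the case-study percentages above.
// Assumptions: ROAS = revenue / ad spend, and "an X% increase" is measured
// against the starting value. The 100-transaction baseline is made up.
function roas(revenue: number, adSpend: number): number {
  return revenue / adSpend;
}

// "An X% increase" means the new value is (1 + X/100) times the old one.
function increaseMultiplier(percentIncrease: number): number {
  return 1 + percentIncrease / 100;
}

// A 272% ROAS: $272 of revenue per $100 of spend, i.e. $2.72 per $1.
const exampleRoas = roas(272, 100); // 2.72

// A 425% increase leaves you with 5.25 times the original number.
const multiplier = increaseMultiplier(425); // 5.25
const newTransactions = 100 * multiplier; // 100 transactions become 525
```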

And these cases aren’t the exception. There are hundreds of stories and case studies that clearly show how effective these ads can be when used correctly.

So if you aren’t using them, it’s in your best interest to seriously consider adding this tool to your digital marketing arsenal.

Now that we’re clear on why you should use Google Shopping ads, I want to switch gears and talk about one specific yet powerful aspect of Shopping ads: User Reviews.

How Reviews Impact Google Shopping Ads

When you see a Shopping ad on Google, one of the elements that draw the most attention is the user-submitted rating that’s shown on a five-star scale. This is what Google calls your Google Seller Rating.

The Google Seller Rating is an automatic extension of your Search Network ads that only gets added under certain conditions, which we’ll discuss in a moment.

It’s clear, though, that this is a direct form of social proof that can make or break your ability to sell.

One study found that the majority of transactions take place with five- and four-star reviewed products.


After your product dips below a four-star rating, you’ll likely have a hard time getting it to sell even with a large advertising budget. This rating can also have an impact on your overall SEO, which makes it doubly important.

If you want to sell effectively, you’ll need to either raise your rating or choose to advertise a better product.
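To check where a product stands against that four-star line, you can average its review counts. Here’s a minimal TypeScript sketch that assumes a plain arithmetic mean. Google may aggregate ratings differently (for example, by review source or recency), and the sample counts are invented.

```typescript
// Sketch: average star rating from review counts, checked against four stars.
// Assumption: a plain arithmetic mean; Google's own aggregation may differ.
type StarCounts = [number, number, number, number, number]; // counts of 1..5-star reviews

function averageRating(counts: StarCounts): number {
  let total = 0;
  let weighted = 0;
  counts.forEach((n, i) => {
    total += n;
    weighted += n * (i + 1); // index 0 holds 1-star reviews
  });
  return total === 0 ? 0 : weighted / total; // no reviews yet: report 0
}

const belowFourStars = (counts: StarCounts): boolean => averageRating(counts) < 4;

const ratings: StarCounts = [2, 1, 3, 10, 24]; // 40 made-up reviews
const avg = averageRating(ratings); // 173 / 40 = 4.325
```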

How to Get Your Google Seller Ratings Activated