#light bulbs clipart

Text

Understanding the visual knowledge of language models

New Post has been published on https://sunalei.org/news/understanding-the-visual-knowledge-of-language-models/

You’ve likely heard that a picture is worth a thousand words, but can a large language model (LLM) get the picture if it’s never seen images before?

As it turns out, language models that are trained purely on text have a solid understanding of the visual world. They can write image-rendering code to generate complex scenes with intriguing objects and compositions — and even when that knowledge is not used properly, LLMs can refine their images. Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) observed this when prompting language models to self-correct their code for different images, where the systems improved on their simple clipart drawings with each query.

The visual knowledge of these language models is gained from how concepts like shapes and colors are described across the internet, whether in language or code. When given a direction like “draw a parrot in the jungle,” users jog the LLM to consider what it’s read in descriptions before. To assess how much visual knowledge LLMs have, the CSAIL team constructed a “vision checkup” for LLMs: using their “Visual Aptitude Dataset,” they tested the models’ abilities to draw, recognize, and self-correct these concepts. Collecting each final draft of these illustrations, the researchers trained a computer vision system that identifies the content of real photos.

“We essentially train a vision system without directly using any visual data,” says Tamar Rott Shaham, co-lead author of the study and an MIT electrical engineering and computer science (EECS) postdoc at CSAIL. “Our team queried language models to write image-rendering codes to generate data for us and then trained the vision system to evaluate natural images. We were inspired by the question of how visual concepts are represented through other mediums, like text. To express their visual knowledge, LLMs can use code as a common ground between text and vision.”

To build this dataset, the researchers first queried the models to generate code for different shapes, objects, and scenes. Then, they compiled that code to render simple digital illustrations, like a row of bicycles, showing that LLMs understand spatial relations well enough to draw the two-wheelers in a horizontal row. As another example, the model generated a car-shaped cake, combining two random concepts. The language model also produced a glowing light bulb, indicating its ability to create visual effects.

“Our work shows that when you query an LLM (without multimodal pre-training) to create an image, it knows much more than it seems,” says co-lead author, EECS PhD student, and CSAIL member Pratyusha Sharma. “Let’s say you asked it to draw a chair. The model knows other things about this piece of furniture that it may not have immediately rendered, so users can query the model to improve the visual it produces with each iteration. Surprisingly, the model can iteratively enrich the drawing by improving the rendering code to a significant extent.”

The researchers gathered these illustrations, which were then used to train a computer vision system that can recognize objects within real photos (despite never having seen one before). With this synthetic, text-generated data as its only reference point, the system outperforms systems trained on other procedurally generated image datasets when tested on authentic photos.

The CSAIL team believes that combining the hidden visual knowledge of LLMs with the artistic capabilities of other AI tools like diffusion models could also be beneficial. Systems like Midjourney sometimes lack the know-how to consistently tweak the finer details in an image, making it difficult for them to handle requests like reducing how many cars are pictured, or placing an object behind another. If an LLM sketched out the requested change for the diffusion model beforehand, the resulting edit could be more satisfactory.

The irony, as Rott Shaham and Sharma acknowledge, is that LLMs sometimes fail to recognize the same concepts that they can draw. This became clear when the models incorrectly identified human re-creations of images within the dataset. Such diverse representations of the visual world likely triggered the language models’ misconceptions.

While the models struggled to perceive these abstract depictions, they demonstrated the creativity to draw the same concepts differently each time. When the researchers queried LLMs to draw concepts like strawberries and arcades multiple times, they produced pictures from diverse angles with varying shapes and colors, hinting that the models might have actual mental imagery of visual concepts (rather than reciting examples they saw before).

The CSAIL team believes this procedure could be a baseline for evaluating how well a generative AI model can train a computer vision system. Additionally, the researchers look to expand the tasks they challenge language models on. As for their recent study, the MIT group notes that they don’t have access to the training set of the LLMs they used, making it challenging to further investigate the origin of their visual knowledge. In the future, they intend to explore training an even better vision model by letting the LLM work directly with it.

Sharma and Rott Shaham are joined on the paper by former CSAIL affiliate Stephanie Fu ’22, MNG ’23 and EECS PhD students Manel Baradad, Adrián Rodríguez-Muñoz ’22, and Shivam Duggal, who are all CSAIL affiliates; as well as MIT Associate Professor Phillip Isola and Professor Antonio Torralba. Their work was supported, in part, by a grant from the MIT-IBM Watson AI Lab, a LaCaixa Fellowship, the Zuckerman STEM Leadership Program, and the Viterbi Fellowship. They present their paper this week at the IEEE/CVF Computer Vision and Pattern Recognition Conference.

0 notes

Text

Illuminate Your Space with LURDES SUSPENSION Black and White Light Bulbs!

Introducing our LURDES SUSPENSION light bulb clipart in classic black and white! Elevate your home's ambiance with this elegant and versatile lighting solution. Crafted for style and functionality, it's the perfect addition to any space. Don't miss our exclusive light bulb sale – brighten your life today with LURDES!

0 notes

Text

OKAY SO I FOUND SOME STUFF ABOUT RINK O'MANIA

Hi, Hello, Welcome as I take you down the journey I fucking went through

So I was watching byler clips, as one does, and I was wondering whether or not they got lucky with the yellow and blue lighting in Rink O'Mania or whether they like – change the light bulbs or whatever. So I decided to stalk the Skating Rink they filmed at.

And holy shit did I find some interesting things.

I have no idea whether or not this was discovered before but I JUST NEED TO SHARE. SO HERE WE GO.

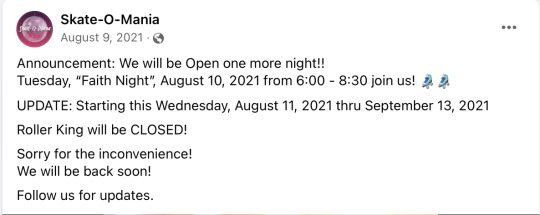

So, first of all, the place was originally called Roller King but they changed it to Skate O'Mania. Which, pretty similar to Rink O'Mania right? THAT'S BECAUSE THEY REMODELLED THE ENTIRE FUCKING ROLLER RINK FOR THE SHOW

LIKE I'm not even kidding.

They posted this message

Followed by a video (which is just a photo) with a little clipart of "under construction"

THEN, on August 10th they posted that they wrapped filming.

"Remodel courtesy of Hollywood" They deadass redecorated the entire roller rink for the however many minutes spent in ONE episode of the season. (They probably spent half the month redecorating and the other half filming which I'm losing my mind over?? no way this is a one time setting).

LIKE– Let me show you the difference in before and after the remodelling

The Roller Rink Before and After.

They added the blue and yellow light strips and the disco ball. And I can’t say 100% but it looks like they removed the pink and green coloured light(?)

And they added the whole ‘Skate/Rink O’mania’ sign (basically everything honestly was added by them–)

The Snack Station Before and After.

Again, we see the blue and yellow lighting.

The Table/Seating Area Before and After.

And okay I’m sorry about the quality of the second one I couldn’t find a better photo from their Google maps gallery or Facebook bUT- you can see that originally the lighting scheme was pink and blue but now it’s yellow and blue.

(here's another photo)

Like idk I’m losing my mind over this- they really like remodelled the entire fucking roller rink and added so much yellow and blue like?? I just think it's insane and I needed to share this discovery.

#byler#mike wheeler#will byers#stranger things#stranger things 4#stranger things analysis#I have no idea if anyones done this before#hopefully this just helps prove that everything in the set is literally 100% intentional

1K notes

Text

Kids are just amazing. Think about it, they come into the world knowing absolutely nothing, and every day they’re looking wide-eyed at the world, so curious about even the slightest detail of it. Why, just the other day, my little Lilah came up to me and said: “Daddy, why is the sky blue?”

Well of course I knew the answer right off the bat. “You remember how rainbows work?” I asked her, lifting her up on my knee. She nodded. I’d taught her about rainbows and prisms, with a hands-on demonstration, just a couple of months before. I gave her the whole spiel about light breaking up in the atmosphere, about how red light traveled further but blue scattered wider, and so that was why the sky was painted blue in the middle of the day but red at sunset, when the sun was far away enough that all the blues got scattered behind and only the reds traveled far enough to reach us. “You got all that?” I said. “Don’t be afraid to ask questions if you don’t understand.”

She got that little pouty frown on her face that told me she was processing it, and then she said “uh-huh” again dubiously, and then asked, “Why isn’t the sun blue?”

That caught me off guard. “What?”

“When the sun -” She waved her arm in a clumsy arc that didn’t really demonstrate everything. “When the sun’s—there, it’s all red, cuz the sun’s far away, and the - the red light goes really really far, so when the blue’s all spilled everywhere, why isn’t the sun blue, too?”

I set her down off my lap. This was an unexpected wrinkle. It’s funny how you can just know something nearly all your life, and never have questions like that occur to you. Why wasn’t the sun blue? Still, I believe it doesn’t hurt kids to let them know that sometimes their parents have to look things up, so I took out my phone. “Let me just find out, and then daddy will have an answer for you,” I said.

As a first exploratory impulse, I googled ‘why isn’t the sun blue’. The first few hits were asking why the sun, if it was so incredibly hot, didn’t burn with a blue flame instead of a yellow one. I didn’t offhand know the answer to that one, either, but it wasn’t relevant to Lilah’s question so I quickly skipped over them. The Stanford website, which I skimmed over, didn’t tell me anything that I hadn’t already said, aside from the added factoid that the sun’s visible light peaked in the green part of the spectrum, so technically it was slightly greener than anything else.

That didn’t help me either.

Finally, it was the NASA website that gave the answer. “It’s because the light is straight from the sun,” I told her. “When you’re looking up at the sun, it’s still pure white, but all around it at the sides, that’s where the blue’s scattering off in all directions.” The NASA website had an awfully condescending graphic, I thought, with a cartoon sun shining white light straight down on a cartoon dog, and the blue light going off at an angle. The dog wasn’t even looking up. You’d think NASA, of all things, would be able to come up with something slicker.

“But ... doesn’t all light come straight from the sun?” Lilah said, interrupting my train of thought.

“Well, no—” I surreptitiously checked the diagram with the cartoon dog again. “’Cause if you’re looking at the sun, that’s the light, straight from the sun, and everything else—”

“But...” Lilah screwed up her forehead in thought. “Cuz when it’s daytime, that’s when the—half the Earth is facing the sun ...”

“That’s right, and on the other half it’s night, because they’re not facing the sun,” I provided, reciting an old lesson.

“So isn’t all daytime light from the sun?”

I tried, in my head, to picture a lamp shining on half a globe. Yes, every illuminated part of the globe was lit directly by the light bulb, so if a tiny person on the surface of the globe was looking up, wouldn’t the entirety of the bright daytime sky be the sun’s light? But then again, the sun was definitely only in one specific spot in the sky, so that couldn’t possibly make sense either ... I was beginning to suspect that I didn’t actually know how light worked.

“No,” I said definitively, trusting in the cartoon sun and dog, who were clipart images staring blankly at random angles. The sun’s eyes moved between images, I noticed, as I scrolled up and down. The dog’s didn’t. “That’s not how light works, sweetie. When you turn on a light in the room—” I pointed up. “Everything’s lit up, right? But you can only see the light—the light-light—when you’re looking straight at it.”

She considered that while I held my breath, and I only let myself breathe again once I saw her nod, a series of ponderous calculations still going on inside that cute little head of hers. Kids, I’m telling you, they’re really something. She wandered off to consider it on her own for a while, and I was trying to think up a sequence of words to google that explained the difference between light directly from the sun, and light that presumably was also directly from the sun, but you weren’t looking straight at it (my first stab of ‘ambient vs direct light’ of course only brought up a bunch of hits about home decor and 3D modeling) when I felt Lilah tug on my shirt sleeve once again. “Daddy?” she said.

I looked up, forcing a grin. “Yes, sweetheart?”

“If it’s all a rainbow, and red’s the one that goes the farthest, then why’s blue the one that scatters around the most? Shouldn’t it be...” She paused to mouth the words “Roy G. Biv” to herself. “Violet?”

I opened my mouth, and then looked down at my phone and quickly typed in ‘why isn’t the sky violet’.

“Hrmm....”

“Daddy?”

“Okay,” I said slowly, still keeping one eye on the article. “It turns out that the sky actually is violet, but because of the way our eyes work ...” I quickly glanced up at Lilah. She was still with me, listening placidly as if I hadn’t just contradicted my previous explanations, completely willing to listen to her dear old dad explain the world to her.

“We have—we have cells in our eyes,” I began, “that are how we see color—they’re called cones—and there are three kinds. One is sensitive to—One sees red things, one sees green things, and one sees blue things. And so the—” I squinted at my screen. “Blue light makes the blue cones light up, right? But, it also, uh, tickles the green and red cones, just a little bit. So if when we looked up into the sky it was really all blue light, then to us it would look ... uh, slightly .... slightly greenish. Blue-greenish. Because the green cones are also getting tickled, so it would look kind of green!”

I ran one hand over my head, reading out the penultimate paragraph. “But because the sky is actually violet, that means our blue cones can’t see it all that well. But our red cones can kinda see violet, so if it ... if it was violet we’d actually see it as reddish ...” I trailed off. “But since the sky is actually part blue and part violet,” I finished up, all in a rush, “the red and green cancel each other out, and all we end up seeing is blue!”

I made a sort of ‘ta-da!’ pose, a smile frozen on my face, and miraculously Lilah was nodding along as if my botched rendition of the science had made perfect sense. Maybe ... it did? Maybe according to the free-floating haphazard logic of a child who has no idea how the world works and has no choice other than to accept all explanations given, it was completely sensible that red and green should cancel each other out just like lights on a traffic signal, and that blue-plus-violet should equal blue, just as a fundamental property of math. I was almost congratulating myself on having provided a successful answer, when Lilah once again spoke up.

“So if the blue sky is really violet,” she said thoughtfully, fixing her big brown eyes on me, “at the end of the rainbow, what’s violet, then?”

I’ve done a lot of research since then, squinting at my tiny screen, and some of the information I’ve pieced together includes: that some of the colors displayed on my phone don’t actually exist, since different combinations of wavelengths of light stimulate different combinations of cones and make you hallucinate colors in your head; that violet is actually just dark blue and not purplish at all; and that yellow is just red and green light without any blue—but as to what they actually mean when they say “yellow” and “red” and “green” and “blue”—that part, I’m still trying to figure out.

It took me a while to even get this far. Lilah’s long since forgotten about the question and is now absorbed with watching Minecraft let’s plays on YouTube. They’re just incredible, kids. They’re born into this world, not understanding a damn thing about it, just having to trust what people tell them, and somehow they grow up into fully-formed individuals. I’m her dad, and I’m hardly responsible for a fraction of that. What else is it but a miracle?

And if anyone ever figures out what violet is, let me know.

756 notes

Photo

50% OFF Light bulb clipart, light bulb clip art, lightbulbs, bulbs, Rainbow icons, planner stickers, lightbulb PNG, Commercial, digital

#light bulbs clipart#light bulbs clip art#light bulbs#PNG#JPEG#clipart#clip art#planner sticker icons#commercial#digital graphics#printable#papercraft#thinking#ideas

0 notes

Text

Why Energy is so Essential

When we talk about energy usage, the first things that probably come to mind are what makes light bulbs work, the fuel that powers a car, or what you use to charge your phone. While all of these do require energy, they don't begin to capture how much people rely on energy in their day-to-day lives. Of all the energy consumed in the United States, only 20% is used by the residential sector, which includes electricity, water heating, appliances, and general heating and cooling (1). So where is the other 80% being used? About 32% powers the industrial sector for activities such as mining, agriculture, construction, and manufacturing. Another 29% goes to transportation, fueling cars, ships, and planes. The commercial sector is the fourth-largest consumer, accounting for 18% of U.S. energy use in stores, places of worship, schools, and other public buildings (1).

Image: (2)

Of course, this breakdown only accounts for energy being used in the United States, meaning that any products produced abroad are using energy not accounted for in these statistics. Whether it's buying clothing, toys, or a car from another country, any energy used to produce that product is also part of how we depend on energy.

(1) What are the major sources and users of energy in the United States? https://www.americangeosciences.org/critical-issues/faq/what-are-major-sources-and-users-energy-united-states (accessed Jan 26, 2020).

(2) Turn F Light Switch Clip Art: Art Related Clipart. https://www.clipart.email/download/1746300.html (accessed Jan 26, 2020).

1 note

Text

This little Light of Mine SVG Autism Awareness png clipart T-Shirt Design Light Bulb Outline Cricut files

Autism SVG Files, Puzzle Symbol vector cut files, Awareness SVGs for T-Shirt designs, This little Light of Mine cutting file, Autism svg images for Cricut, Autism Spectrum DXF for Silhouette Cameo, Autism SVG Designs, Autistic PNG for Sublimation, Awareness Free SVG. Item description: ► This is a digital download; no physical product will be delivered. ► This design comes in a single ZIP file with the following file formats: - SVG cut file for Cricut Design Space, Silhouette Designer Edition, Inkscape, Adobe Suite and more. - DXF file for Silhouette users. You can open this with the free version of the Silhouette software. - PNG file with transparent background and 300 dpi resolution. ► You can use This little Light of Mine SVG cut files for your DIY projects and handmade products (t-shirts, mugs, pillowcases, blankets, bags, invitation cards, heat transfer vinyl, wall decals, party decorations, home decor, paper crafting, sublimation, crafts, etc.). ► Due to the nature of digital files, no refunds or exchanges are available. ► SUPPORT / HELP: If you have any questions or need help, we are always here for you. You can contact us by going to the CONTACT US page and sending us your query. How to Download This little Light of Mine SVG Autism Awareness png clipart T-Shirt Design Light Bulb Outline Cricut files ► To download This little Light of Mine SVG Design, follow these steps. STEP 1: Click “ADD TO CART” on all the files that you want to purchase. STEP 2: Once you have added the files to the cart, click the “PROCEED TO CHECKOUT” button and enter your billing details on the checkout page. STEP 3: Complete the payment with PayPal or credit card. After payment, you will be automatically redirected to a download page where you can download the files. Click on a file to download it. STEP 4: You will also receive an email from DonSVG.com with a download link; just click on it and your This little Light of Mine images will start downloading automatically.
NOTE: If you chose to create a user account before purchasing, your purchased files will be in the downloads section of your user account. Thanks for shopping!

0 notes

Photo

Christmas Lights are one of the prettiest parts of Christmas! I love looking at them. The Favorite Color Plaque cutter is 3.5" W x 3.00" T and the Christmas Bulbs are 3.5" W x 3.00" T. There is also an optional stencil available to fit the Plaque Cutter. Matching PNG file clipart listed for this cutter set is in my shop. Sinfulcutters.com #sinfulcutters #royalbluecutters #sugarcookies #cookies #cookiecutter #cookiecutters #cookieart #cookiedecorating #decoratedcookies #food #instacookie #3Dcookiecutters #sugarcookiesofinstagram #sugarcookies #royalicingcookies #satisfying #royalicingart #edibleart #smallbusiness #christmas #christmascookies https://www.instagram.com/p/Ckgr0d3OL56/?igshid=NGJjMDIxMWI=

#sinfulcutters#royalbluecutters#sugarcookies#cookies#cookiecutter#cookiecutters#cookieart#cookiedecorating#decoratedcookies#food#instacookie#3dcookiecutters#sugarcookiesofinstagram#royalicingcookies#satisfying#royalicingart#edibleart#smallbusiness#christmas#christmascookies

0 notes

Text

Hand Drawing Template

This is a three-node template. It features a large clipart image of a hand-drawn diagram of a light bulb. The nodes are arranged vertically next to the clipart diagram.

0 notes

Photo

Week 5: Entry 1

Topic: Sustainable Electricity

Case Study: Energy efficient lights

Lighting accounts for around 10% of household electricity use, and even more in commercial properties. In 2018, new regulations signalled a push towards phasing out standard halogen lamps, the typical batten-holder lights we’re all used to, and moving towards a minimum standard of LED lamps throughout Australia. LED lamps use less energy because they are designed to create light rather than light and heat, which makes them more efficient.

LED lights use much less electricity while still being quite bright. They are slightly more expensive than traditional options such as halogen, compact fluorescent and incandescent lights; however, they are still affordable and readily available from lighting stores, hardware stores and supermarkets. They also come in different colours and colour temperatures, making them versatile and a good option not only for owner-built houses but also for social housing and commercial and retail spaces. On top of this, they last 5-10 times longer. Given this extended lifespan and the overall energy savings, the payback time justifies the extra upfront cost of the light itself.

For these reasons, LED lights are clearly more energy efficient. Lasting longer results in less waste, they contain no toxic elements, and they are better at distributing light. These benefits grow the more LEDs are used throughout a house or commercial space.

https://www.kindpng.com/imgv/ioJomb_transparent-light-energy-clipart-bulb-on-off-gif/

0 notes

Text

Professional over thinker svg, Light bulb clipart svg, Anxiety Svg, Mental Health Svg

Professional over thinker svg, Light bulb clipart svg, Anxiety Svg, Mental Health Svg, Self Care Svg, Self Love Svg, Mom Life Svg, Stressed Svg, Funny Svg, Motivational SVG, Mental Health Awareness svg, SVG files for Cricut, SVG bundle, design bundles, Cricut files svg, PNG files. This design is delivered instantly as a digital download. You will receive a zipped file containing files in 8…

0 notes

Photo

So, I did my fiance as a pony also. Did I mention he’s a brony now? He’s a brony now. We’re into season 6 and thoroughly enjoying it. His favorite song is We’ll Make Our Mark.

He has a light-bulb for now, unless we think of a better cutie mark, because he has a lot of bright ideas (for business, for coding, etc). Needed a more general umbrella cutie mark.

Heads up, I did not draw either of our cutie marks, I imported them. His is lightbulb clipart I found, and mine is a mark symbolizing the nine Muses. But I did draw the rest.

5 notes

Photo

Download Free Lightbulbs Svg Light Bulb Monogram Svg Files Light Bulb Cliparts Crafter File Here >>https://ift.tt/3ucYhLl

0 notes

Photo

Christmas Lights are one of the prettiest parts of Christmas! I love looking at them. The Favorite Color Plaque cutter is 3.5" W x 3.00" T and the Christmas Bulbs are 3.5" W x 3.00" T. There is also an optional stencil available to fit the Plaque Cutter. Matching PNG file clipart listed for this cutter set is in my shop. Sinfulcutters.com #sinfulcutters #royalbluecutters #sugarcookies #cookies #cookiecutter #cookiecutters #cookieart #cookiedecorating #decoratedcookies #food #instacookie #3Dcookiecutters #sugarcookiesofinstagram #sugarcookies #royalicingcookies #satisfying #royalicingart #edibleart #smallbusiness #christmas #christmaslights #christmascookies https://www.instagram.com/p/CjFv3Z7Oy6L/?igshid=NGJjMDIxMWI=

#sinfulcutters#royalbluecutters#sugarcookies#cookies#cookiecutter#cookiecutters#cookieart#cookiedecorating#decoratedcookies#food#instacookie#3dcookiecutters#sugarcookiesofinstagram#royalicingcookies#satisfying#royalicingart#edibleart#smallbusiness#christmas#christmaslights#christmascookies

0 notes

Text

Photograv Torrent

Photograv Torrent Download

Photograv 3.1 Torrent

Photograv Software Torrent

PhotoGraV is a shareware program in the Education category, developed by PhotoGraV. The latest version of PhotoGraV is currently unknown. It was initially added to our database on. PhotoGrav Software - Full Version 3.1, Item # CUS008. Get started in the fastest-growing market segment of laser engraving! The PhotoGrav 3.1 software CD is designed for all Windows operating systems, including Vista, Windows 7, Windows 8 and Windows 10. Tech Docs & Downloads. LaserBits Tech Tips.

PhotoGrav 3.1 is a major upgrade to the software that has become the industry standard for processing photos for laser engraving. It has never been easier to. Laser operators continue to praise this image software. Specifically designed and engineered for laser engraving machines, PhotoGrav 3.1 (Boss' latest version) offers an easy and effective tool for processing scanned photographs for laser machines. Photograv does one thing.

Free office suite for windows 7 32 bit. ProjectLibre has been rewritten and added key features: * Compatibility with Microsoft Project 2010 * User Interface improvement * Printing (does not allow printing) * Bug fixes The ProjectLibre team has been the key innovators in project management software. ProjectLibre is compatible with Microsoft Project 2003, 2007 and 2010 files. We have a community site as well at It has been downloaded over 3,000,000 times in 200 countries and won InfoWorld 'Best of Open Source' award. You can simply open them on Linux, Mac OS or Windows. In development of a cloud replacement of Microsoft Project.

Now shipping version 3.1 (with video guide). PhotoGraV - The Power Tool for Laser Engraving Photographs! PhotoGraV has been developed specifically for laser engravers. The objective of the program is to effectively process scanned photographs so that they can be engraved on a variety of common materials with confidence that the engraved images will be of outstanding quality.

Traditionally, engraving photographs has been difficult and a hit-or-miss endeavor, resulting in many discarded products. The process has been so challenging and time-consuming, in fact, that many engraving shops simply do not offer engraved photos as one of their products. Now you can engrave photos with ease! Powerful tools that have been found effective in processing photos for laser engraving. Automated application of these tools to the subject picture. of the engraving process for common materials so the "engraved product" can be inspected before it is actually etched. Interactive processing with adjustable enhancements and real-time.

Reports and color proofs for consumer acceptance and benchmarking. User-defined parameter sets can be saved for further customization. Calibrated to most laser engraving and typical. Colour clipart from can be easily engraved by processing with PhotoGraV. PhotoGraV simulates more than 20 engraving materials, including: cherry and walnut wood; clear and black-painted acrylic; black laser metal and anodized aluminum; a variety of common leather materials; and many materials with either a white or black core and a variety of caps like brushed gold and almost all solid colors. PhotoGraV's processing features have been tuned and optimized for each of these materials, and the appropriate optimized parameters are automatically loaded whenever a new material is selected.

PhotoGraV automatically compensates for the engraving peculiarities of each material while producing the "engraver-ready" processed image. For example, images to be engraved on clear acrylic are automatically mirror-imaged and produced in "negative" polarity. Of course, you can override these automatic features at any time to create special effects if desired. PhotoGraV is very simple to use: (1) scan the image as a Windows bitmap, (2) open the image in PhotoGraV, (3) select the material type you will be engraving, (4) click the Final Process button. The next thing you see on your computer monitor is a simulation of what the image will look like when it is actually engraved on the material you have chosen.

Call, Email or Fax your order today!


System Software Version

PhotoGrav 3.1 is a major upgrade to the software that has become the industry standard for processing photos for laser engraving. It has never been easier to achieve professional photo engraving results. PhotoGrav now processes both color and black-and-white images, and version 3.1 opens standard image types such as TIF, JPG, PNG and BMP. An extensive list of laser makes and models provides specific settings for each, and an updated materials list supplies automatic settings for the standard materials used in photo engraving.


The simplified design of the software includes a sizing tool, making the process of preparing photos for laser engraving even easier. A great product made even better, PhotoGrav 3.1 is designed to help novice and professional engravers produce first-run, professional-quality results. Get started in the fastest-growing market segment of laser engraving! The PhotoGrav 3.1 software CD is designed for all Windows operating systems, including Windows Vista, Windows 7, Windows 8 and Windows 10.


What Will Cool Easy Art Be Like In The Next 23 Years? | Cool Easy Art

Let’s face it: these four walls have been our constant companion for the past few months. While we haven’t gone full “TikTok” by painting a cool mural or building our own coffee table (…yet), we have had plenty of time to examine every aspect of our interior…and realized our space was lacking that little extra oomph. Whether you want to spruce up your current decor or are starting over from scratch, here are five easy ways to add a finishing touch to your space.

Add pops of green to your space in the form of potted plants. Not only do they help purify the air and increase oxygen levels in the room, certain varieties are also thought to boost your mood and help you get a better night’s sleep. And if you’re convinced you have a black thumb, fear not. Try an (I-can’t-believe-it’s-not-real) faux plant or a bouquet subscription. Just don’t forget to spring-clean your plant babies by wiping their leaves every so often, lest dust and grime build up, block sunlight and make them look dull.

One thing we always notice about the most luxuriously furnished homes? They have a signature scent, and that scent pervades every memory of the space. You can do the same in your own home by lighting a candle that evokes the exact feeling you want people to have when they walk into the room, like the Glade® Exotic Tropical Blossoms™ 3-Wick Candle. Infused with essential oils, its scent is an intoxicating bouquet of uplifting monoi blossom and soothing jasmine. It’s the perfect way to “top off” the room.
