#hyperconverged

Text

What is a hyperconverged appliance (HCI appliance)? | Definition from TechTarget

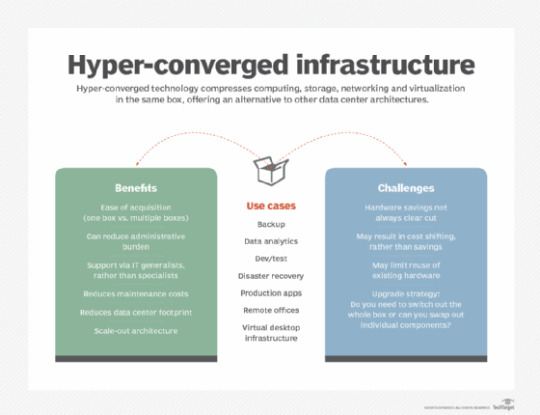

What is a hyperconverged appliance (HCI appliance)? A hyperconverged appliance (HCI appliance) is a hardware device that provides multiple data center management technologies within a single box. Hyperconverged systems are characterized by a software-centric architecture that tightly integrates compute, storage, networking and virtualization resources and other technologies. At one time, these…


Text

Future-Ready IT: Hyperconverged Infrastructure Development Services

Simplify, scale, and secure your IT operations with our Hyperconverged Infrastructure (HCI) Development Services. We help businesses integrate computing, storage, and networking into a single, efficient system—boosting performance, reducing costs, and streamlining management. Upgrade to a smarter, more agile infrastructure with our expert solutions.

Text

The SAN Storage Revolution: Hyperconvergence and the Future of Data Sharing

The storage area network (SAN) has long been the backbone of high-performance enterprise storage solutions. Today, as data usage grows exponentially and organizations strive to reduce complexity, hyperconvergence is changing the landscape of SAN storage. By combining compute, storage, and networking into a single, streamlined infrastructure, hyperconverged systems are shaping the future of data sharing and storage efficiency.

This post explores the transformation of SAN storage under the influence of hyperconvergence, including its game-changing capabilities, the benefits it delivers to enterprises, and how it paves the way for the future of IT infrastructure.

Why SAN Storage Needs to Evolve

Traditional SAN solutions have served enterprises well for decades. They deliver fast, centralized storage and high-capacity data sharing essential for business-critical applications. However, as an increasing number of organizations scale their digital operations, traditional SANs face challenges such as:

Scalability limitations: Scaling storage often requires significant infrastructure changes, which can be costly and time-consuming.

Complex management: Separate compute, storage, and networking layers require skilled teams and complicated workflows to maintain.

High cost: Legacy SAN systems are expensive to implement and operate, especially for large enterprises managing petabytes of data.

With data volumes expected to double every three years, according to research firm IDC, these limitations spotlight the need for a revolutionary approach to storage infrastructure. Enter hyperconverged SAN solutions.

What Is Hyperconvergence in SAN Storage?

Hyperconvergence is an IT architecture that combines compute, storage, and networking into a single, tightly integrated system. Instead of operating on discrete hardware components, hyperconverged solutions bundle these resources together in software-defined infrastructure, manageable from a single interface.

This model is changing the way businesses approach SAN storage. By abstracting hardware resources and pooling them under one hypervisor, hyperconvergence allows organizations to optimize storage utilization, reduce wasted capacity, and create more agile environments.

How SAN fits into the hyperconverged model

Despite the shift from traditional models, hyperconverged infrastructure (HCI) doesn’t replace SAN entirely; rather, it enhances it. Modern SAN technologies in HCI improve data sharing speeds, boost redundancy, and integrate seamlessly into cloud and multi-cloud ecosystems.

The Benefits of Hyperconverged SAN Solutions

Hyperconverged SAN architectures bring a suite of advantages to IT teams and business operations. Here’s why many enterprises are adopting hyperconvergence:

1. Simplified Management

Hyperconvergence eliminates the need to manage separate compute, storage, and networking layers. Using intelligent, centralized control software, IT teams can provision resources, monitor system performance, and perform maintenance tasks seamlessly.

For example, Nutanix's hyperconverged platform enables IT teams to manage entire infrastructures through a single management console, dramatically reducing complexity.

2. Scalability on Demand

Traditional SAN frameworks demand significant investment and downtime for scaling. Hyperconverged SAN systems, however, allow organizations to add nodes incrementally. This flexibility supports growing workloads without requiring an overhaul of the entire infrastructure.

Case in point: An e-commerce enterprise experiencing seasonal traffic spikes could add just the necessary resources in a hyperconverged setup, avoiding the high upfront expenses tied to legacy SAN solutions.
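To make the incremental-scaling idea concrete, here is a minimal sizing sketch. It assumes each node contributes a fixed amount of raw capacity and that every block is stored `replication_factor` times, so usable capacity per node is raw capacity divided by that factor. The function name and all figures (20 TB per node, a 75 TB seasonal peak, 4 existing nodes) are hypothetical and do not come from any vendor's sizing tool.

```python
import math


def nodes_needed(required_tb: float, raw_tb_per_node: float,
                 replication_factor: int = 2) -> int:
    """Return the smallest node count whose usable capacity covers required_tb.

    Assumption: usable capacity per node = raw capacity / replication factor.
    A cluster also needs at least `replication_factor` nodes so that each
    copy of a block can live on a different node.
    """
    usable_per_node = raw_tb_per_node / replication_factor
    by_capacity = math.ceil(required_tb / usable_per_node)
    return max(replication_factor, by_capacity)


# Hypothetical e-commerce cluster: 4 nodes today, 75 TB needed at seasonal peak.
current_nodes = 4
peak_nodes = nodes_needed(required_tb=75, raw_tb_per_node=20, replication_factor=2)
nodes_to_add = max(0, peak_nodes - current_nodes)

print(f"Nodes required at peak: {peak_nodes}, nodes to add: {nodes_to_add}")
```

Real platforms also reserve capacity for rebuilds, metadata, and snapshots, so an actual sizing exercise would come out somewhat higher than this estimate.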

3. Cost Efficiency

IT spending is always a concern, but hyperconverged SAN solutions have the potential to reduce short- and long-term costs. By consolidating resources into a single, scalable system, businesses save on hardware, power, cooling, and administrative overhead.

Gartner forecasts that hyperconverged platforms will lead to 20% lower infrastructure costs over five years compared to legacy data center setups.

4. Enhanced Performance and Reliability

Hyperconverged systems leverage distributed storage and optimized data paths to increase reliability and improve performance. By minimizing hardware dependency and enabling intelligent failover systems, hyperconverged SAN prevents downtime and ensures continuous operations.

For example, when one node fails in a hyperconverged architecture, redundancy algorithms redistribute its data load to functioning nodes without impacting performance.
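The failover behaviour described above can be illustrated with a toy model. This is a simplified sketch, not any vendor's algorithm: it assumes each data block is kept on two distinct nodes, and that when a node fails, the missing copies are re-created on the least-loaded surviving nodes. The function names and node labels are hypothetical.

```python
def place_replicas(blocks, nodes, copies=2):
    """Assign each block to `copies` distinct nodes in round-robin order."""
    placement = {}
    for i, block in enumerate(blocks):
        placement[block] = [nodes[(i + k) % len(nodes)] for k in range(copies)]
    return placement


def handle_node_failure(placement, failed, nodes, copies=2):
    """Re-create copies lost on `failed` using the least-loaded survivors."""
    survivors = [n for n in nodes if n != failed]
    # Count how many block copies each surviving node already holds.
    load = {n: 0 for n in survivors}
    for holders in placement.values():
        for n in holders:
            if n in load:
                load[n] += 1

    repaired = {}
    for block, holders in placement.items():
        alive = [n for n in holders if n != failed]
        while len(alive) < copies:
            candidates = [n for n in survivors if n not in alive]
            if not candidates:
                break  # not enough nodes left to restore full redundancy
            target = min(candidates, key=lambda n: load[n])
            alive.append(target)
            load[target] += 1
        repaired[block] = alive
    return repaired


nodes = ["n1", "n2", "n3", "n4"]
blocks = [f"b{i}" for i in range(8)]
placement = place_replicas(blocks, nodes)
repaired = handle_node_failure(placement, failed="n2", nodes=nodes)
```

After the call, every block in `repaired` is again held by two distinct surviving nodes. In a real cluster the rebuild traffic does consume network and disk bandwidth, so how much performance is affected depends on spare capacity.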

5. Seamless Cloud Integration

Cloud-first strategies are becoming the norm, and hyperconverged solutions are built with the cloud in mind. Leading HCI platforms, such as VMware vSAN, support easy deployment of data-intensive workloads on public, private, and hybrid clouds, providing enterprises with greater flexibility and cost control.

How Hyperconvergence Shapes the Future of Data Sharing

Hyperconverged SAN storage isn’t just a trend; it’s paving the way for the future of IT infrastructures. Here are three emerging trends tied to hyperconvergence that promise to redefine data sharing:

1. AI-Powered Storage Optimization

Machine learning (ML) and artificial intelligence (AI) are now integrated into modern hyperconverged systems, enabling continuous optimization. AI mechanisms study access patterns, analyze system bottlenecks, and make real-time recommendations to optimize workloads, ensuring maximum efficiency.

2. Edge-Friendly Architecture

Hyperconverged solutions are allowing enterprises to bring compute and storage closer to the edge, where data is generated. With advancements in edge computing, businesses can extend storage capabilities to remote or branch offices (ROBOs), enhancing responsiveness and reducing latency.

Imagine a retail chain managing point-of-sale systems across hundreds of stores. A hyperconverged edge solution can store, process, and sync transactions locally while ensuring consolidated reporting to headquarters.

3. Application-Driven Workloads

The future of enterprise infrastructure is application-centric. Hyperconverged platforms simplify the deployment of containerized applications, integrating seamlessly with Kubernetes clusters. This makes them increasingly relevant for modern DevOps workflows and microservice-based architectures.

Challenges of Hyperconverged SAN (and How to Tackle Them)

While hyperconvergence presents numerous benefits, it isn’t without challenges. Here are common hurdles and strategies to address them:

Initial Investment: While HCI systems save on long-term expenses, they may have a high upfront cost. Implementing systems that allow gradual scaling can help manage initial budget constraints.

Learning Curve: IT teams may need to upskill to manage hyperconverged systems effectively. Comprehensive training programs offered by vendors such as Dell EMC can bridge this gap.

Vendor Lock-in: Proprietary solutions may limit flexibility. To avoid this, consider open-source platforms like Red Hat Hyperconverged Infrastructure.

Is It Time to Embrace Hyperconverged SAN Storage?

The SAN storage revolution is well underway, and hyperconvergence is its driving force. Organizations that adopt hyperconverged systems today can gain a strategic edge by scaling seamlessly, streamlining management, and optimizing costs. Whether you’re managing a large data center or planning for a hybrid-cloud future, hyperconvergence offers a practical solution that adapts to your evolving needs.

Text

Intel And Google Cloud VMware Engine For Optimizing TCO

Google Cloud NEXT: Intel adds performance, flexibility, and security to Google Cloud VMware Engine for optimum TCO.

Intel and Google Cloud cooperate at NEXT 25. New and expanded Intel solutions will improve your total cost of ownership (TCO) by improving performance and workload flexibility.

C4 virtual machine expansion with Intel Xeon 6 processors

Granite Rapids, the latest Intel Xeon 6 CPU, will join the C4 Machine Series. The new capabilities, shape options, and flexibility of this expansion can improve the performance and scalability of customers' most essential applications. The series is designed for industry-leading inference, database, analytics, gaming, and real-time platform performance.

Google's latest local storage innovation, high-performance Titanium Local SSDs, will be offered in new virtual machine shapes for C4 on Intel Xeon 6 CPUs. This storage technology speeds up I/O-intensive applications with better performance and up to 35% lower local SSD latency.

Customers that want maximum control and freedom may now use C4 bare metal instances. The new instances outperform previous-generation bare metal instances by 35%.

Intel supports new, larger C4 VM shapes for greater flexibility and scalability. These new shapes have the highest all-core turbo frequency of any Google Compute Engine virtual machine (VM) at 4.2 GHz, higher frequencies, larger cache sizes, and up to 2.2 TB of memory. This allows memory-bound or license-constrained applications like databases and data analytics to scale effectively.

Want a Memory-Optimized VM? Meet M4, the latest memory-optimized VM

M4, Google Cloud's latest memory-optimized VM family, is the first memory-optimized instance on 5th Gen Intel Xeon Scalable CPUs (codenamed Emerald Rapids) and is designed for in-memory analytics. Compared to other top cloud solutions with similar architecture, M4 performs better.

M4's 13.3:1 and 26.6:1 memory-to-core ratios give you additional flexibility to size database workloads. It supports capacities from 744GB to 3TB. The RAM-to-vCPU ratio doubles with M4. M4 offers strong options for companies looking to improve their memory-optimized infrastructure, with new shapes and a large virtual machine portfolio. The price-performance of M4 instances is up to 65% better than M3.
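As a rough illustration of what those ratios mean for sizing, the sketch below assumes each ratio is read as GB of memory per vCPU (an interpretation, not something the post states) and computes how many vCPUs accompany a given memory size. The function name is hypothetical, and the resulting counts are plain arithmetic rather than a list of published machine shapes.

```python
import math


def vcpus_for_memory(memory_gb: float, gb_per_vcpu: float) -> int:
    """vCPU count implied by a memory size at a fixed memory-per-vCPU ratio.

    Assumption: the ratio is GB of memory per vCPU; the result is rounded up
    because vCPUs come in whole units.
    """
    return math.ceil(memory_gb / gb_per_vcpu)


LOW_RATIO = 13.3   # GB per vCPU (assumed reading of "13.3:1")
HIGH_RATIO = 26.6  # GB per vCPU (assumed reading of "26.6:1")

# The smallest capacity quoted in the post, 744 GB, at the lower ratio.
vcpus_low = vcpus_for_memory(744, LOW_RATIO)

# Twice the memory at the higher ratio needs the same number of vCPUs,
# which is what lets a memory-bound database grow without adding cores.
vcpus_high = vcpus_for_memory(2 * 744, HIGH_RATIO)

print(vcpus_low, vcpus_high)
```

For core-licensed databases, the higher ratio matters because memory can double while the core count (and therefore the license count) stays flat.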

Perfect SAP Support

Intel Xeon CPUs are well suited to SAP applications due to their speed and scalability. New memory-optimized M4 instances from Google Cloud are certified for SAP NetWeaver Application Server and in-memory SAP HANA workloads from 768GB to 3TB. In the M4, 5th Gen Intel Xeon Scalable processors provide 2.25x more SAPS than the previous generation, improving performance. Intel's hardware-based security and data protection and M4's SAP workload flexibility help you reduce costs and maximise performance.

Z3-highmem and Z3.metal: New Storage Options for Industry-Specific VMs

Google Cloud's Z3 instance on 4th Gen Intel Xeon Scalable CPUs (codenamed Sapphire Rapids) adds 11 Z3-highmem Storage Optimised offerings with new smaller VM shapes from 3TB to 18TB Titanium SSD. This extension scales from 2 to 11 virtual machines to satisfy various storage needs and workloads.

Google Cloud, which optimises storage with the Titanium Offload System, includes Z3.metal and Z3 high-memory versions. Google Cloud's latest bare metal instance, Z3h-highmem-192-metal, has 72TB of Titanium SSD in a single compute engine unit. This form supports hyperconverged infrastructure, advanced monitoring, and custom hypervisors. Customers may develop comprehensive CPU monitoring or tightly controlled process execution with direct access to real server CPUs. Security, CI/CD, HPC, and financial services can handle workloads that cannot run on virtualised systems due to licensing and vendor support requirements.

Google Cloud VMware Engine Adds 18 Nodes that Change Everything

Google Cloud VMware Engine is a fast way to migrate a VMware estate to Google Cloud. Intel Xeon CPUs make VMware applications easy to run and move to the cloud. There are currently 26 node shapes in VMware Engine v1 and v2, with 18 more added. You now have the most options in the industry to optimise TCO and capacity to meet business needs.

More Security with Intel TDX

Intel and Google Cloud provide advanced security capabilities, including Intel Trust Domain Extensions (Intel TDX). Intel TDX uses a CPU hardware feature to isolate and encrypt the memory of running workloads.

Two products now support Intel TDX:

Confidential GKE Nodes: Google Kubernetes Engine nodes that use hardware to protect data in memory and defend against attacks. Generally available in Q2 2025 with Intel TDX.

Process sensitive data in Intel TDX's safe, segregated environment.

What is Google Cloud VMware Engine?

Move VMware-based apps to Google Cloud quickly without changing tools, procedures, or apps. The service includes all the hardware and VMware licenses needed for a VMware SDDC on Google Cloud.

Google Cloud VMware Engine Benefits

A fully integrated VMware experience

Unlike other options, Google provides all licenses, cloud services, and invoicing while simplifying VMware services and tools with unified identities, management, support, and monitoring.

Fast provisioning and scaling

Rapid provisioning with dynamic resource management and auto scaling lets you establish a private cloud in 30 minutes.

Use popular third-party cloud applications

Keep utilising your important cloud-based business software without changes. Google Cloud VMware Engine integrates top ISV database, storage, disaster recovery, and backup technologies.

Key characteristics of Google Cloud VMware Engine

Fast networking and high availability

VMware Engine uses Google Cloud's highly performant, scalable architecture with 99.99% availability and fully redundant networking up to 200 Gbps to meet your most demanding corporate applications.

Increase datastore capacity without compromising compute.

Google Filestore and Google Cloud NetApp Volumes are qualified by VMware as NFS datastores for VMware Engine. Add external NFS storage alongside vSAN storage for storage-intensive virtual machines to increase storage independently of compute. Use Filestore or Google Cloud NetApp Volumes to grow storage for capacity-hungry VMs from TBs to PBs, and vSAN for low-latency storage.

Google Cloud integration experience

Fully access cutting-edge Google Cloud services. Native VPC networking allows private layer-3 access between Google Cloud services and VMware environments via Cloud VPN or Interconnect. Access control, identity, and billing unify the experience with other Google Cloud services.

Strong VMware ecosystems

Google Cloud Backup and Disaster Recovery provides centralised, application-consistent data protection. Third-party services and IT management solutions can supplement on-premises implementations. Intel, NetApp, Veeam, Zerto, Cohesity, and Dell Technologies collaborate to ease migration and business continuity. Review the VMware Engine ecosystem brief.

Google Cloud operations suite and VMware tools knowledge

If you use VMware tools, methods, and standards for on-premises workloads, the switch is straightforward. Monitor, debug, and optimise Google Cloud apps with the operations suite.

#technology#technews#govindhtech#news#technologynews#cloudcomputing#Google Cloud VMware Engine#Google Cloud VMware#Intel Google Cloud VMware Engine#Cloud VMware Engine#VMware Engine#C4 virtual machines#Z3-highmem and Z3.metal

Text

MTS-4 / SMTS [Python Automation]

Hungry, Humble, Honest, with Heart.

The Opportunity

Nutanix is a global leader in cloud software and a pioneer in hyperconverged infrastructure solutions, making computing invisible anywhere. Companies around the world use Nutanix software to leverage a single platform to manage any app, at any location, at any scale for their private, hybrid and multi-cloud environments.

Our System Test team is…

Text

Pinakastra Computing Private Limited

Founded in 2021 in Bangalore, Karnataka, India, Pinakastra Computing specializes in Private cloud infrastructure and hyperconverged infrastructure (HCI), offering innovative cloud solutions for enterprises, academia, and research organizations. Our Pinaka ZTi™ cloud platform integrates advanced hardware and software to deliver scalable, efficient, and secure private cloud solutions. Pinakastra aims to fill the gap in India’s enterprise tech ecosystem by offering alternatives to global cloud providers. We focus on addressing the growing demand for VM & Container based AI/ML workloads with modular, cost-efficient, and scalable solutions, aspiring to become a global leader in cloud infrastructure.

https://pinakastra.com/

#PrivateCloud#CloudComputing#IoT#DataSecurity#HyperConvergedInfrastructure#HCI#SecureData#HealthcareIT#FinTech#EdTech#biotech#GovernmentIT#ManufacturingTech#RetailSolutions#smartfactory

Text

How to Eliminate Future Storage Refreshes with an Enterprise-Class vSAN

A storage refresh is often a costly and time-consuming process, forcing IT teams to evaluate, purchase, and migrate data every few years. The traditional approach of investing in dedicated storage arrays or single-vendor hyperconverged systems locks organizations into rigid, short-term solutions that require expensive forklift upgrades whenever performance, capacity, or workload requirements…

Text

How to Give Aging Servers a New Lease of Life

The server refresh interval is usually understood as the warranty/maintenance schedule set by the OEM vendors (typically 3-5 years). However, according to a leading analyst firm's report, the potential life span of servers is currently between 7 and 10 years (an average of 6 years for rack servers and up to 10 years for integrated systems), "up to three times longer compared to the regular replacement cycle for servers and storage arrays."

The True Cost of Upgrades

Let's say your only wish is to stay with your OEM vendor. Your options are to either renew your maintenance agreement or buy the latest generation of servers to replace the current ones. Both options carry substantial premiums. The true cost of upgrades goes beyond the initial purchase.

Consider:

· Lost productivity: A hardware upgrade will probably also mean downtime, which affects staff time and the uptime of services, and has a knock-on effect on other projects within the company. It may also mean more requests for technician support while the system is down.

· Unrealized ROI: The challenge for any IT department is to provide 24/7 support during the integration of new equipment. Migrations and upgrades are complex endeavours that divert your staff from innovating and driving revenue.

Hyperconverged Infrastructure: Innovate with Old Components

Hyperconverged infrastructure is not just a buzzword. It's changing how forward-thinking CIOs build their data centres.

Legacy servers can be used as building blocks for hyper-converged infrastructure. We have seen customers evolve their three-tier architecture (server, SAN, and storage) into a two-tier hyper-converged (server/storage) environment while using existing network infrastructure for connectivity. In short, legacy systems are being leveraged as building blocks for today's hyper-converged environments.

A hyperconverged infrastructure option should be on your radar, as it eliminates the need for siloed operations and puts the focus on a consolidated infrastructure, which takes up less precious floor space and consumes less power. This results in lower operating costs and less manual maintenance.

A hyper-converged solution also opens up a world of possibilities, such as:

· Software-defined storage: The data deluge is very real. About 2.5 exabytes of data are created daily. That is equivalent to ninety years of HD video. Software-defined storage has proven to be far more efficient than native hardware storage, and it reduces the number of hardware storage components that you need to replace and maintain.

· Data protection and high availability: As a virtual computing platform, hyper-convergence gives you continued access to your data in the event of a failure or disaster, and it provides the necessary redundancy for data protection, backup, and data recovery.

· Faster provisioning: When customers want on-demand upgrades, you can cut your deployment and implementation time without disrupting operations. This means shorter lead times, which in turn lets you channel your resources toward initiatives that drive revenue.

I hope these pointers have given you something to consider. It is my view that even though OEM vendors talk about new technology innovations daily, we need to rethink the reality of tech refreshes and the sustainability of enterprise technology.

We are not only talking about prolonging the life span of servers. We're giving serious thought to how we can collectively extend the life span of all last-generation hardware for the sake of your organization and, hey, for the planet we all live on. Contact us for more about server and storage hardware support.

#Storage hardware support#Server hardware support#server maintenance#Storage maintenance#server AMC#Storage AMC

Text

5 hyper-converged infrastructure trends for 2024 | TechTarget

Hyper-converged infrastructure technology has taken big strides since emerging more than a decade ago, finding a home in data centers looking to ease procurement headaches and management chores. Vendors originally positioned the technology as a simple-to-deploy, all-in-one offering that combined compute, storage and networking with a hypervisor. The essential selling points around simplicity…


Text

Computing and Artificial Intelligence

Hewlett Packard Enterprise Co. is a global edge-to-cloud company that provides information technology and enterprise products, solutions and services. The company operates through Computing, High Performance Computing and Artificial Intelligence (HPC & AI), Storage, Intelligent Edge, Financial Services, and Corporate Investments and Other segments. The Computing segment includes both general-purpose servers for multi-workload computing and workload-optimized servers that deliver the highest performance and value for demanding applications. The HPC & AI segment offers standard and custom hardware and software solutions designed to support specific use cases.

The Storage segment offers workload-optimized storage products and services, including intelligent hyperconverged infrastructure with HPE Nimble Storage dHCI and HPE SimpliVity. The Intelligent Edge segment offers wired and wireless local area networks, campus and data center switching, software-defined wide area networks, network security and related services, enabling secure connectivity for businesses of all sizes. The Financial Services segment offers customers flexible investment solutions, such as leasing, financing, IT consumption, utility programs and asset management services, technology delivery models and acquisition of complete IT solutions, including hardware, software and services from Hewlett Packard Enterprise and others.

The Corporate Investments and Other segment includes the A&PS business, which primarily provides advisory services, technology expertise and advice from HPE and partners, implementation services and complex solution engagement capabilities; the Communications and Media Solutions business; the HPE Software business; and Hewlett Packard Laboratories. The company was founded on July 2, 1939 and is headquartered in Spring, Texas.

Link

Indonesia’s bottled drinking water producer and distributor PT Sariguna Primatirta Tbk (Tanobel) has modernised its applications to improve business processes. The company replaced its virtualised environment, Windows servers, and storage area network (SAN) appliances with a modern, hyperconverged compute and storage solution to support its distributed architecture. This helped the firm to improve performance, scalability and resilience. The new environment has also substantially reduced downtime, benefiting end users, developers, and operations.

Tanobel’s IT Director, Tanaka Murinata, said the on-demand horizontal scalability enabled by Red Hat technology played a crucial part in the performance improvement. He shared the example of the Dremio data lake Tanobel uses for analytics. “Our Dremio deployment is one master pod and three executor pods in OpenShift. It allows us to spread the workload horizontally if we need to scale,” he said. In contrast, spreading the workload horizontally was impractical in Tanobel’s legacy environment. It would mean creating a new VM, installing the latest operating system, installing the application, configuring environments, and testing before it could be commissioned for production.

Founded in 2003, Tanobel has rapidly expanded its drinking water product line, including brands like Cleo, Anda, Vio, and SuperO2, across Indonesia. It needed to ensure its IT systems were resilient and performing optimally. Engineers need to be able to run critical reports and analytics at speed. For that, they need critical Enterprise Resource Planning (ERP) systems to run and commit data on time and for computing resources to scale when needed. Murinata noted that their attempt to implement a data lake for reporting and analytics faced performance issues when the required object storage was added to their traditional storage solution, causing the systems to slow significantly.
The team decided to implement Red Hat OpenShift Virtualisation, which lets it take advantage of cloud-native application development while preserving existing VM workload investments, all within the same unified platform.

Adopting modern application environment

The company migrated existing Windows and database workloads to the virtualised environment provided by OpenShift Virtualisation before branching out to focus on containerisation. Murinata said the virtualised environment currently supports a handful of applications that don’t support containerisation, along with some database instances. OpenShift Container Platform has also helped Tanobel advance with containers, with 90 percent of workloads now running on it. The container environment supports a Dremio data lake and almost 300 newly developed microservices, including web services and application programming interfaces (APIs), he added.

Tanaka said containerised applications can self-repair without user impact, because the platform quickly spawns new instances if an application goes down. The infrastructure is highly available, ensuring that the loss of nodes or entire clusters doesn’t affect users. For example, during recent hardware failures involving two disk drives, Red Hat OpenShift Data Foundation prevented data loss and performance issues, allowing for a quick recovery with minimal effort, he added.

Red Hat technology has enhanced code quality and resilience in Tanobel's systems by allowing developers to test applications in the same environment they develop in. This has simplified deployment to a single-click process, unlike the legacy system that required manual synchronisation of test VMs. Additionally, the CI/CD workflow provides better transparency into code versions, as developers must push code to a Git repository before it goes to the server. This prevents unauthorised changes directly on the server, improving both code and deployment quality.
Tanaka said Tanobel plans to further modernise its applications using Red Hat by developing new microservices and containerised applications on OpenShift Virtualisation. Their roadmap includes creating a disaster recovery facility and preparing for cloud integration. It aims to create a hybrid environment where cloud and on-premise instances are identical, ensuring a consistent OpenShift experience.
