#genesys config management

Explore tagged Tumblr posts

Text

Top Computer Repair and Services in Winnipeg - Keep Your Tech Trouble-Free

Between computers, phones, and smart devices, our digital lives have never been more full. But all that technology brings risks of crashes, malfunctions, and glitches that frustrate. Having a trusted source for repairs and support makes all the difference. Whether it's a gaming PC needing upgrades or small business systems seeking optimized performance, computer networking services in Winnipeg Mission Repair Center experts can help. Here are some top choices for computer and networking services in Winnipeg.

1. Data Recovery Services:

Accidents happen - spilled coffee and kids dropping phones leads to lost files. Winnipeg Data Medics specializes in data backup services in Winnipeg from all types of failed storage - hard drives, SD cards, and more—their clean room facilities and proprietary software salvage digital contents when all seems lost with excellent success rates. Ensure valuable memories and documents are safely stored through scheduled backup services.

2. Home & Small Business Support:

Tech Help Winnipeg provides affordable hourly or subscription-based tech assistance that saves calling dozens of helplines. From laptop tune-ups to security checks and computer home repair in Winnipeg their remote support expedites solutions. Onsite services fix hardware, networks, and software issues for PCs, printers, and wifi. Upgrades include added storage, RAM, or new builds. Tailored office network management protects systems while optimizing productivity.

3. Gaming Pc Repairs:

Level up repairs maintains and builds elite gaming machines. Diagnostics identify problems behind frame rate dips. Components like water cooling systems, graphics cards, and processors get bench-tested, repaired, or replaced. Custom rigs are built to maximize horsepower for VR, streaming, and top titles. Over clocking pushes premium configs to new limits within safety guidelines. Ongoing support maintains maximum performance for esports.

4. Retro Console Restoration:

Revive childhood classics or growing retro game collections at Classic Gaming Resto. They assess viability from moldy NES and Genesis to scratched discs, worn connections, or buttons.

Cleaning and replacing enclosures or faulty lasers including Video Game Repair in Winnipeg bring nostalgia back to like-new condition with authentic components. Certification ensures collectibles remain safe investments. A blast from the past plays on like it's brand new!

Conclusion:

Local expert services protect Winnipeg homes and businesses from technology troubles. Data recovery prevents irreplaceable losses. Onsite assistance and remote support optimize systems. Gaming rig experts sustain top performance. Retro consoles keep memories alive. Regular backups, maintenance, and trusted repair sources give lasting peace of mind that devices serve dependably. With options that don't break the bank, your tech stays in able hands.

0 notes

Text

Setting up a private network for Hyperledger Besu nodes.

Hyperledger Besu is an open-source Ethereum client designed for enterprise use cases. It is a flexible and extensible platform that allows developers to build decentralized applications (dApps) and smart contracts using the Ethereum network. Setting up a private network for Hyperledger Besu nodes is a necessary step for any enterprise that wants to deploy and test dApps and smart contracts in a controlled and secure environment.

In this article, we will discuss the steps involved in setting up a private network for Hyperledger Besu nodes. We will cover the following topics:

Requirements

Installing Hyperledger Besu

Configuring the Genesis block

Starting the nodes

Connecting the nodes

Testing the network

Requirements

To set up a private network for Hyperledger Besu nodes, you will need the following:

At least two machines or virtual machines with Ubuntu 18.04 or later.

A user with sudo privileges on each machine.

Hyperledger Besu client installed on each machine.

A basic understanding of Ethereum, smart contracts, and dApps.

Installing Hyperledger Besu

Before setting up a private network, you need to install the Hyperledger Besu client on each machine. You can install Besu using a package manager or by downloading the binary file from the official website.

To install Besu using the package manager, you can follow these steps:

Add the Besu repository to the package manager's sources list:

sudo add-apt-repository ppa:hyperledger/besu

Update the package list:

sudo apt update

Install Besu:

sudo apt install besu

Alternatively, you can download the binary file from the official website, extract it, and add it to the system's path.

Configuring the Genesis block

Once you have installed Besu on each machine, you need to configure the Genesis block. The Genesis block is the first block in the blockchain, and it contains the initial configuration of the network. You can create a Genesis block using a configuration file in JSON format.

Here is an example of a Genesis block configuration file:

json

Copy code

{ "config": { "chainId": 1337, "homesteadBlock": 0, "eip150Block": 0, "eip155Block": 0, "eip158Block": 0 }, "alloc": { "ADDRESS1": { "balance": "1000000000000000000" }, "ADDRESS2": { "balance": "1000000000000000000" } }, "coinbase": "0x0000000000000000000000000000000000000000", "difficulty": "0x20000", "extraData": "0x", "gasLimit": "0x2fefd8", "nonce": "0x0000000000000042", "mixHash": "0x0000000000000000000000000000000000000000000000000000000000000000", "parentHash": "0x0000000000000000000000000000000000000000000000000000000000000000", "timestamp": "0x00" }

In this configuration file, you can specify the chain ID, the initial allocation of ether, the difficulty, the gas limit, and other parameters. You can also specify the addresses that will receive the initial allocation of ether.

Save this configuration file as genesis.json on each machine.

Starting the nodes

After you have created the Genesis block, you need to start the nodes. Each node needs

a configuration file that specifies its identity and connection details. You can create a configuration file using the following format:

typescript

Copy code

network=private data-path=/path/to/data rpc-http-enabled=true rpc-http-host=0.0.0.0 rpc-http-port=8545 p2p-enabled=true p2p-host=0.0.0.0 p2p-port=30303 p2p-advertised-host=PUBLIC_IP_ADDRESS p2p-advertised-port=30303 genesis-file=/path/to/genesis.json node-private-key-file=/path/to/node.key

In this configuration file, you can specify the network name, the data path, the RPC endpoint details, the P2P endpoint details, the Genesis block file path, and the node's private key file path. You can also specify the public IP address of the node for P2P communication.

Save this configuration file as node.config on each machine, with different private keys for each node.

To start a node, run the following command:

css

Copy code

besu --config-file=node.config --data-path=/path/to/data --rpc-http-api=ETH,NET,WEB3 --p2p-discovery-enabled=false --p2p-ws-enabled=false

This command starts Besu with the specified configuration file and data path. It also enables the ETH, NET, and WEB3 APIs for the RPC endpoint and disables the P2P WebSocket and discovery protocols.

Connecting the nodes

Once you have started the nodes, you need to connect them to form a network. To do this, you need to specify the enode URL of each node in the configuration file of the other nodes.

The enode URL is a unique identifier of each node in the network, and it includes the P2P endpoint details and the node's public key. You can get the enode URL of a node by running the following command:

css

Copy code

besu --config-file=node.config --data-path=/path/to/data p2p enode

This command returns the enode URL of the node, which you can copy and paste into the configuration file of the other nodes.

Here is an example of a configuration file for node 1 that includes the enode URL of node 2:

typescript

Copy code

network=private data-path=/path/to/data rpc-http-enabled=true rpc-http-host=0.0.0.0 rpc-http-port=8545 p2p-enabled=true p2p-host=0.0.0.0 p2p-port=30303 p2p-advertised-host=PUBLIC_IP_ADDRESS p2p-advertised-port=30303 genesis-file=/path/to/genesis.json node-private-key-file=/path/to/node1.key bootnodes=enode://PUBLIC_KEY_OF_NODE2@PUBLIC_IP_ADDRESS_OF_NODE2:30303

Save this configuration file as node1.config on machine 1 and as node2.config on machine 2.

Testing the network

Once you have connected the nodes, you can test the network by deploying a smart contract and interacting with it using a dApp.

To deploy a smart contract, you need to write the contract code in Solidity, compile it using a Solidity compiler, and deploy it to the network using the Besu client.

To interact with the smart contract using a dApp, you need to write the frontend code in JavaScript or another language, connect to the RPC endpoint of the network, and send transactions to the smart contract using the web3.js library or another Ethereum client library.

Conclusion

Setting up a private network for Hyperledger Besu nodes is a necessary step for any enterprise that wants to deploy and manage blockchain applications in a private environment. By following the steps outlined in this article, you can easily create a private network of Besu nodes and test your smart contracts and dApps in a controlled environment.

One advantage of using Besu for private blockchain networks is its compatibility with Ethereum. This means that any application built for Ethereum can be easily ported to Besu, and any developer familiar with Ethereum can easily learn Besu.

In addition, Besu offers several features that make it suitable for enterprise use, such as permissioning, privacy, and scalability. For example, Besu supports permissioning using the Ethereum Permissioning Protocol (EPP), which allows network administrators to control which nodes can join the network and which actions they can perform.

Besu also supports private transactions using the Tessera privacy layer, which allows confidential data to be encrypted and shared only with authorized parties. This is important for industries such as finance and healthcare, where sensitive data must be protected from unauthorized access.

Finally, Besu is designed for high performance and scalability, with support for parallel transaction processing and sharding. This makes it suitable for large-scale enterprise applications that require high throughput and low latency.

1 note

·

View note

Photo

Dynamic Contact Center Manager (DCCM) of Pointel Solutions, allows contact centers to control and handle such challenges faster and safely. DCCM lets your contact center operators to make changes in specifications that include agent queues, routing, balancing loads and skills to meet Service Level Agreement (SLA) Objectives.

Visit here to Register for Demo: https://www.pointelsolutions.com/solutions-dynamic-contact-center-manager/

#dynamic contact center manager#genesys configuration#genesys configuration manager#genesys config management

1 note

·

View note

Text

My Homelab/Office 2020 - DFW Quarantine Edition

Moved into our first home almost a year ago (October 2019), I picked out a room that had 2 closets for my media/game/office area. Since the room isn't massive, I decided to build a desk into closet #1 to save on space. Here 1 of 2 shelves was ripped off, the back area was repainted gray. A piece of card board was hung to represent my 49 inch monitor and this setup also gave an idea how high I needed the desk.

On my top shelf this was the initial drop for all my Cat6 cabling in the house, I did 5 more runs after this (WAN is dropped here as well).

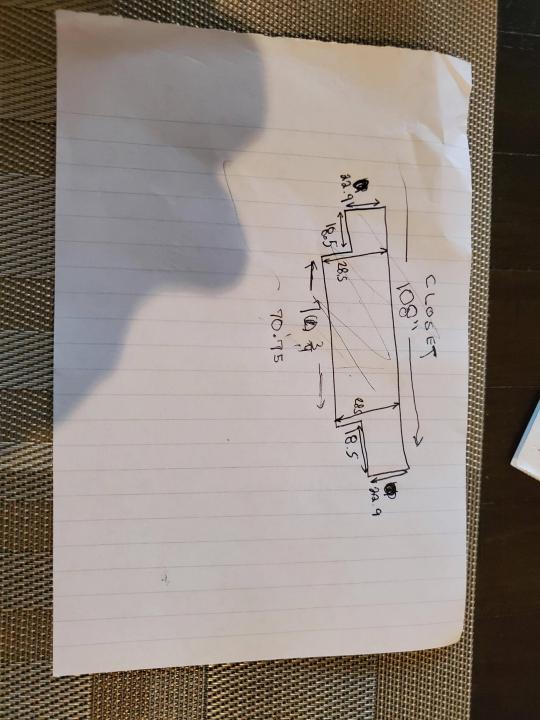

I measured the closet and then went to Home Depot to grab a countertop. Based on the dimensions, it needed to be cut into an object shape you would see on Tetris.

Getting to work, cutting the countertop.

My father-in-law helped me cut it to size in the driveway and then we framed the closet, added in kitchen cabinets to the bottom (used for storage and to hide a UPS). We ran electrical sockets inside the closet. I bought and painted 2 kitchen cabinets which I use for storage under my desk as well.

The holes allowed me to run cables under my desk much easier, I learned many of these techniques on Battlestations subreddit and Setup Wars on Youtube. My daughter was a good helper when it came to finding studs.

Some of my cousins are networking engineers, they advised me to go with Unifi devices. Here I mounted my Unifi 16 port switch, my Unifi Security Gateway (I'll try out pfSense sometime down the line), and my HD Homerun (big antenna is in the attic). I have Cat6 drops in each room in the house, so everything runs here. On my USG, I have both a LAN #2 and a LAN #1 line running to the 2nd closet in this room (server room). This shot is before the cable management.

Cable management completed in closet #1. Added an access point and connected 3 old Raspberry Pi devices I had laying around (1 for PiHole - Adblocker, 1 for Unbound - Recursive DNS server, and 1 for Privoxy - Non Caching web proxy).

Rats nest of wires under my desk. I mounted an amplifier, optical DVD ROM drive, a USB hub that takes input from up to 4 computers (allows me to switch between servers in closet #2 with my USB mic, camera, keyboard, headset always functioning), and a small pull out drawer.

Cable management complete, night shot with with Nanoleaf wall lights. Unifi controller is mounted under the bookshelf, allows me to keep tabs on the network. I have a tablet on each side of the door frame (apps run on there that monitor my self hosted web services). I drilled a 3 inch hole on my desk to fit a grommet wireless phone charger. All my smart lights are either running on a schedule or turn on/off via an Alexa command. All of our smart devices across the house and outside, run on its on VLAN for segmentation purposes.

Quick shot with desk light off. I'm thinking in the future of doing a build that will mount to the wall (where "game over" is shown).

Wooting One keyboard with custom keycaps and Swiftpoint Z mouse, plus Stream Deck (I'm going to make a gaming comeback one day!).

Good wallpapers are hard to find with this resolution so pieced together my own.

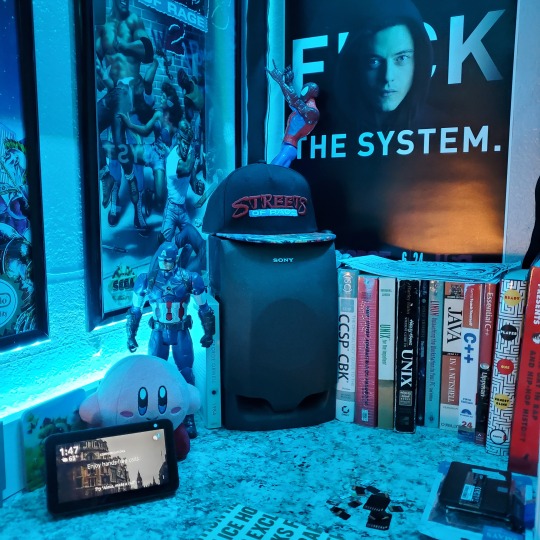

Speakers and books at inside corner of desk.

Speakers and books at inside corner of desk.

Closet #2, first look (this is in the same room but off to the other side). Ran a few CAT6 cables from closet #1, into the attic and dropped here (one on LAN #1, the other on LAN #2 for USG). Had to add electrical sockets as well.

I have owned a ton of Thinkpads since my IBM days, I figured I could test hooking them all up and having them all specialize in different functions (yes, I have a Proxmox box but it's a decommissioned HP Microserver on the top shelf which is getting repurposed with TrueNAS_core). If you're wondering what OSes run on these laptops: Windows 10, Ubuntu, CentOS, AntiX. All of these units are hardwired into my managed Netgear 10gigabit switch (only my servers on the floor have 10 gigabit NICs useful to pass data between the two). Power strip is also mounted on the right side, next to another tablet used for monitoring. These laptop screens are usually turned off.

Computing inventory in image:

Lenovo Yoga Y500, Lenovo Thinkpad T420, Lenovo Thinkpad T430s, Lenovo Thinkpad Yoga 12, Lenovo Thinkpad Yoga 14, Lenovo Thinkpad W541 (used to self host my webservices), Lenovo S10-3T, and HP Microserver N54L

Left side of closet #2

**moved these Pis and unmanaged switch to outside part of closet**

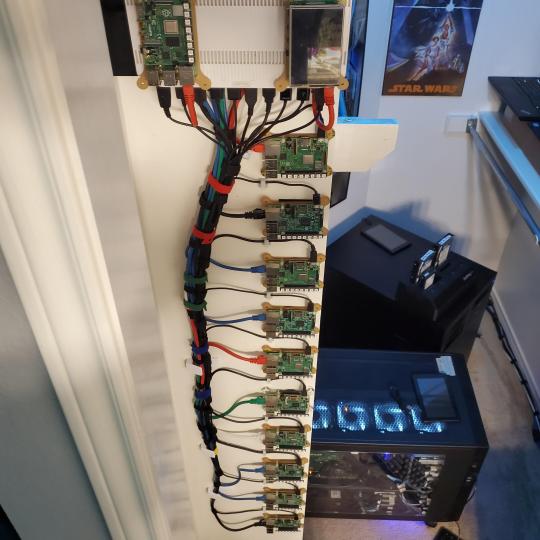

Since I have a bunch of Raspberry Pi 3s, I decided recently to get started with Kubernetes clusters (my time is limited but hoping to have everything going by the holidays 2020) via Rancher, headless. The next image will show the rest of the Pis but in total:

9x Raspberry Pi 3 and 2x Raspberry Pi 4

2nd shot with cable management. The idea is to get K3s going, there's Blinkt installed on each Pi, lights will indicate how many pods per node. The Pis are hardwired into a switch which is on LAN #2 (USG). I might also try out Docker Swarm simultaneously on my x86/x64 laptops. Here's my compose generic template (have to re-do the configs at a later data) but gives you an idea of the type of web services I am looking to run: https://gist.github.com/antoinesylvia/3af241cbfa1179ed7806d2cc1c67bd31

20 percent of my web services today run on Docker, the other 80 percent are native installs on Linux and or Windows. Looking to get that up to 90 percent by the summer of 2021.

Basic flow to call web services:

User <--> my.domain (Cloudflare 1st level) <--> (NGINX on-prem, using Auth_Request module with 2FA to unlock backend services) <--> App <--> DB.

If you ever need ideas for what apps to self-host: https://github.com/awesome-selfhosted/awesome-selfhosted

Homelabs get hot, so I had the HVAC folks to come out and install an exhaust in the ceiling and dampers in the attic.

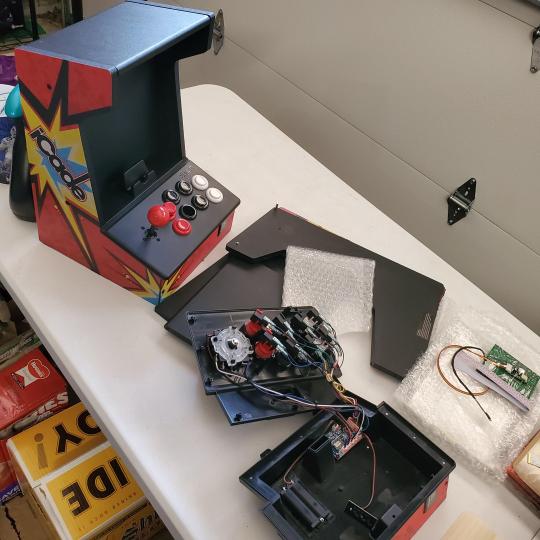

I built my servers in the garage this past winter/spring, a little each night when my daughter allowed me to. The SLI build is actually for Parsec (think of it as a self hosted Stadia but authentication servers are still controlled by a 3rd party), I had the GPUs for years and never really used them until now.

Completed image of my 2 recent builds and old build from 2011.

Retroplex (left machine) - Intel 6850 i7 (6 core, 12 thread), GTX 1080, and 96GB DDR4 RAM. Powers the gaming experience.

Metroplex (middle machine) - AMD Threadripper 1950x (16 core, 32 thread), p2000 GPU, 128GB DDR4 RAM.

HQ 2011 (right machine) - AMD Bulldozer 8150 (8 cores), generic GPU (just so it can boot), 32GB DDR3 RAM.

I've been working and labbing so much, I haven't even connected my projector or installed a TV since moving in here 11 months ago. I'm also looking to get some VR going, headset and sensors are connected to my gaming server in closet #2. Anyhow, you see all my PS4 and retro consoles I had growing up such as Atari 2600, NES, Sega Genesis/32X, PS1, Dreamcast, PS2, PS3 and Game Gear. The joysticks are for emulation projects, I use a Front End called AttractMode and script out my own themes (building out a digital history gaming museum).

My longest CAT6 drop, from closet #1 to the opposite side of the room. Had to get in a very tight space in my attic to make this happen, I'm 6'8" for context. This allows me to connect this cord to my Unifi Flex Mini, so I can hardware my consoles (PS4, PS5 soon)

Homelab area includes a space for my daughter. She loves pressing power buttons on my servers on the floor, so I had to install decoy buttons and move the real buttons to the backside.

Next project, a bartop with a Raspberry Pi (Retropie project) which will be housed in an iCade shell, swapping out all the buttons. Always have tech projects going on. Small steps each day with limited time.

6 notes

·

View notes

Text

How to use kodi legally

#HOW TO USE KODI LEGALLY MOVIE#

#HOW TO USE KODI LEGALLY INSTALL#

#HOW TO USE KODI LEGALLY FREE#

There’s no audio in the video because I used MeLE PCG03 which does not support HDMI audio (yet) in Linux, and I recorded the demo with Zidoo X9 HDMI recorder. I mostly got luck with Genesis add-on, where I could start playing a few movies, but still I had to arm myself with patience while browsing the list, and the initial buffering.

#HOW TO USE KODI LEGALLY MOVIE#

1Channel, Icefilms, and Phoenix would let me access to various movies and TV channels, and popular ones included recent movie like Big Hero 6 or Interstellar, and TV series like Games of Thrones, The Big Bang Theory and so on, but most of them would not play, and in some case I was even asked to input an annoying captcha.

#HOW TO USE KODI LEGALLY FREE#

Project Free TV will simply not work due to a script error. I’ve given it try, and the results were mixed, but it might be partially due to my location. You should now have 5 new add-ons showing on the main page: 1Channel, Genesis, Project Free TV, Icefilms, and Phoenix. Kodi 14 with TVaddons Installed (Click for Original Size) We have created the ultimate guide to show beginners exactly how you can get started with Kodi, and we give you the info to make the most of this powerful entertainment tool. Exit and restart Kodi to complete the installation

Once completed, you’ll automatically be back in the main menu.

Now be patient as the wizard downloads and installs adds-ons.

I’m using Kodi 14.2 in Ubuntu 15.04, so I selected “ Linux“, and clicked on Yes.

You’ll get the following screen to select your platform.

Go back to the main menu, and select Programs->Config Wizard.

I will feel like nothing happens on that step, that’s normal

Select fusion (or any other name you choose in step 2), and start-here.

#HOW TO USE KODI LEGALLY INSTALL#

Navigate the Add-ons sub menu, and click on Install from zip.Go back to the main menu, and select System->Setttings.You’ll get the following pop-up window, where you need to enter the source “” and a name such as “fusion”, and press OK.Go to System->File Manager, and click Add source.The installation requires Fusion Installer and Config Wizard with detailed instructions available there with every screenshot, which makes it more complicated than it really is, but it can be summarized as follows: These add-ons are completely unrelated to Kodi project, and have been developed and maintained by third parties. , previously known as XBMCHUB, is providing add-ons allowing to do just that, more or less legally, as well as a method to install a bunch of popular add-ons in a few clicks. You can use Kodi to watch videos locally, but various add-ons can be installed to also access videos online, but finding and installing add-ons might be a pain if you don’t know where to start.

0 notes

Text

Annotated edition, Week in Ethereum News, Jan 19, 2020

This is the 6th of 6 annotated editions that I promised myself to do.

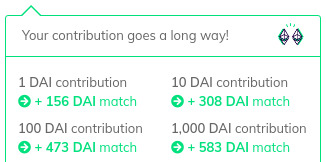

Like last week, it’s an opportunity to shill my Gitcoin grant page. Right now a 1 DAI (you can give ETH or any token) has an insane matching. Where else can you get a 150x return on a buck?

There’s about 24 hours left at the time this was published.

I shared on Twitter the graph of my subscriber count for the last 90 days:

The newsletter has gotten big enough to the point where the subscriber churn is enough to force negative days. It used to be that the day I sent the email, I’d get 90% of my new subs for the week from posting on Reddit. Now Reddit is saturated, and the number only goes up because of the long tail of word of mouth - which seems to be mostly seeded by the RTs of people who make the weekly most clicked as I post it to Twitter.

It’s clear that I’ve mostly bumped up against the ceiling of people who are willing to subscribe to a tech-heavy Eth newsletter. Perhaps there are other marketing channels, but seems unlikely to find people who want a newsletter, even if they are Eth devs.

This annotated version is an attempt to test whether writing for a larger audience would succeed. I think to some degree it has, but I also haven’t done a great job of contextualizing, nor adding narratives. Still working on it.

Eth1

Notes from the latest eth1 research call. How to get to binary tries.

Guillaume Ballet argues for WASM precompiles for better eth1 to prepare for eth2

StarkWare mainnet tests find that a much bigger block size does not affect uncle rate and argues that a further decrease in gas for transaction data would be warranted

Guide to running Geth/Parity node or eth2 Prysm/Lighthouse testnet on Raspberry Pi4

Nethermind v1.4.8

Eth1 is moving toward a stateless model like Eth2 will have. This will help make Eth1 easier to seamlessly port into eth2 when phase 1 is live.

In the meantime, people are figuring out what makes sense. Is it WASM precompiles? Will client devs agree to it?

StarkWare wants to reduce the gas cost again, which would make rollup even cheaper and provide more transaction scalability.

Eth2

The incentives for good behavior and whistleblowing in Eth2 staking

Danny Ryan’s quick Eth2 update – updated docs explaining the spec currently under audit

Options for eth1 to eth2 bridges and phase1 fee market

Simulation environment for eth2 economics

Ryuya Nakamura proposes the subjective finality gadget

Lighthouse client update – 40x speedup in fork choice, 4x database speedup, faster BLS

Prysmatic client update – testnet with mainnet config

A guide to staking on Prysmatic’s testnet

How to build the Nimbus client on Android

Evaluating Eth2 staking pool options

If you’re going to read one post this week in full, the “incentives for good behavior” is probably the one. This isn’t new info, but it’s nicely packaged up and with the spec under audit, this is very likely to be the final info. This answers many of the questions that people come to Reddit and ask.

Danny Ryan’s quick updates are also packed full of info.

the spec is out for audit. the documentation all got overhauled to explain the decisions -- ie, things needed for an audit -- with the expectation of a post-audit spec in early March. Obviously we hope for minimal changes and then set a plan for lunch.

There’s been lots of talk about how long we need to run testnets for, but i think it’s quite clear that anything more than a month or so of testnets is overkill. We’ve had various testnets for months, the testnets will get increasingly multi-client, including from genesis. Phase 0 is going to be in production but not doing anything in production - a bit like the original release of Frontier in July 2015.

We should push to launch soon. Problems can be hardforked away and the expectations should be very similar to the launch of Frontier.

Layer2

Plasma Group -> Optimism, raises round from Paradigm/ideo for optimistic rollups

Auctioning transaction ordering rights to re-align miner incentives

A writeup of Interstate Network’s optimistic rollup

Plasma Group changed their name to Optimism and raised a round. It’s hardly a secret that layer2 has been a frustration in Bitcoin/Ethereum for years, with no solution reaching critical mass, and sidechains simply trading off decentralization/trustlessness. Plasma Group decided to go for rollup instead of Plasma, due to the relative ease of doing fully EVM through optimistic rollup. Respect to Paradigm for having conviction and leading the round, as well as IDEO.

The auctioning of ordering rights is part of their solution.

Also cool is Interstate Network, who is building something similar. I’m unclear why they decided to launch with a writeup on Gitcoin grants, but it is worthy of supporting.

Stuff for developers

Truffle v5.1.9, now Istanbul compatible.

Truffle’s experimental console.log

New features in Embark v5

SolUI: generate IPFS UIs for your Solidity code. akin to oneclickdapp.com

BokkyPooBah’s Red-Black binary search tree library and DateTime library updated to Solidity v0.6

Overhauled OpenZeppelin docs

Exploring commit/reveal schemes

Using the MythX plugin with Remix

Training materials for Slither, Echidna, and Manticore from Trail of Bits

Soon you’ll need to pay for EthGasStation’s API

As of Feb 15, you’ll need a key for Etherscan’s API

Hard to miss the “time to get a key” for the API trend. But to be fair, it makes sense to require keys. It’s not surprising that providing it for free is not a business model.

Ecosystem

Gavin Andresen loves Tornado.Cash and published some thoughts on making a wallet on top of Tornado Cash

MarketingDAO is open for proposals

Almonit.eth.link launches, a search engine for ENS + IPFS dweb

Build token pop-up economies with the BurnerFactory

Tornado.cash is such a huge thing for our ecosystem that I feel no problem highlighting it forever. The complete lack of privacy isn’t 100% solved, but if you care about your privacy, Tornado Cash is super easy to use. I’ve said it before, but participating in Tornado is a public good -- you’re increasing the anonymity set.

The dweb using ENS and IPFS is interesting, worth watching to see how it evolves, though currently it only has 100 sites.

I hope to see more pop-up economies happen. It’s a great way to onboard people and give a better glimpse of the future than making people wait an hour for transactions to confirm.

Enterprise

EEA testnet launch running Whiteblock’s Genesis testing platform

Plugin APIs in Hyperledger Besu

Privacy and blockchains primer aimed at enterprise

A massive list of corporations building on Ethereum

Sacramento Kings using Treum supply chain tracking to authenticate player equipment

Neat to see the Kings experimenting with new tech, even if in small ways.

Meanwhile that list of building on Ethereum has 700+ RTs at the moment. Goes to the MarketingDAO above - there are many ETH holders who feel like Ethereum is undermarketed.

Governance and standards

EIP2464: eth/65 transaction annoucements and retrievals

ERC2462: interface standard for EVM networks

ERC2470: Singleton Factory

bZxDAO: proposed 3 branch structure to decentralize bZx

Application layer

Livepeer upgrades to Streamflow release – GPU miners can transcode video with negligible loss of hashpower so video transcoding gets cheaper

Molecule is live on mainnet with a bonding curve for a clinical trial for Psilocybin microdoses

Liquidators: the secret whales helping DeFi function. Good walkthrough of DeFi network keepers.

Curve: a uniswap-like exchange for stablecoins, currently USDC<>DAI

New Golem release has Concent on mainnet, new usage marketplace, and Task API on testnet

Gitcoin as social network

rTrees. Plant trees with your rDai

In typical Livepeer fashion, they didn’t hype up their release very much, but I think Streamflow could end up being very big. They think they can get the price point down for transcoders to being cheaper than centralized transcoders. How? Because GPU miners want to make more money and GPU miners can add a few transcoding streams with negligible loss of hashpower. This will become even more crucial when ETH moves to proof of stake, and miners will need to get more out of their hardware.

Lots of people loved rTrees. As a guy who has done all the CFA exams, I have had the time value of money drilled into me too much to ever think of anything as “no loss” but people love the concept.

Psychedelic microdosing and tech has become a thing. Tim Ferriss led fundraising a Johns Hopkins psychedelic research center, it will be interesting to see if Molecule becomes a hit in the tech community outside crypto.

Tokens/Business/Regulation

The case for a trillion dollar ETH market cap

Continuous Securities Offering handbook

The SEC does not like IEOs

Former CFTC Chair Giancarlo and Accenture to push for a blockchain USD

Tokenizing yourself (selling your time/service via token) was all the rage this week, kicked off by Peter Pan. Here’s a guide to tokenizing yourself

Avastars: generative digital art from NFTs

Speaking of the Eth community wanting more marketing, the trillion dollar market cap piece was the most clicked this week.

If you haven’t checked out Continuous Securities, it’s a neat idea.

The tokenizing yourself trend is easy to laugh at or dismiss, but they’re some small experiments that are worth watching.

General

Chris Dixon: Inside-out vs outside-in tech adoption

baby snark: Andrew Miller’s tutorial on implementation and soundness proof of a simple SNARK

Blake3 hash function

Justin Drake explains polynomial commitments

New bounty (3000 USD) for improving cryptanalysis on the Legendre PR

Mona El Isa’s a day in the life for asset management in 2030. We need more web3 science fiction

SciFi and zero knowledge (”moon math” as it occasionally gets called) section.

SciFi shows us the future, and zero knowledge solutions increasingly aren’t just the future, but also the present.

Full Week in Ethereum News post

0 notes

Text

Original Post from Amazon Security Author: Becca Crockett

In the weeks leading up to re:Invent 2019, we’ll share conversations we’e had with people at AWS who will be presenting at the event so you can learn more about them and some of the interesting work that they’re doing.

How long have you been at AWS, and what do you do enjoy most in your current role?

It’s been two and a half years already! Time has flown. I’m the product manager for AWS CloudHSM. As with most product managers at AWS, I’m the CEO of my product. I spend a lot of my time talking to customers who are looking to use CloudHSM, to understand the problems they are looking to solve. My goal is to make sure they are looking at their problems correctly. Often, my role as a product manager is to coach. I ask a lot of why’s. I learned this approach after I came to AWS—before that I had the more traditional product management approach of listening to customers to take requirements, prioritize them, do the marketing, all of that. This notion of deeply understanding what customers are trying to do and then helping them find the right path forward—which might not be what they were thinking of originally—is something I’ve found unique to AWS. And I really enjoy that piece of my work.

What are you currently working on that you’re excited about?

CloudHSM is a hardware security module (HSM) that lets you generate and use your own encryption keys on AWS. However, CloudHSM is weird in that, by design, you’re explicitly outside the security boundary of AWS managed services when you use it: You don’t use AWS IAM roles, and HSM transactions aren’t captured in AWS CloudTrail. You transact with your HSM over an end-to-end encrypted channel between your application and your HSM. It’s more similar to having to operate a 3rd party application in Amazon Elastic Compute Cloud (EC2) than it is to using an AWS managed service. My job, without breaking the security and control the service offers, is to continue to make customers’ lives better through more elastic, user-friendly, and reliable HSM experiences.

We’re currently working on simplifying cross-region synchronization of CloudHSM clusters. We’re also working on simplifying management operations, like adjusting key attributes or rotating user passwords.

Another really exciting thing that we’re working on is auto-scaling for HSM clusters based on load metrics, to make CloudHSM even more elastic. CloudHSM already broke the mold of traditional HSMs with zero-config cluster scaling. Now, we’re looking to expand how customers can leverage this capability to control costs without sacrificing availability.

What’s the most challenging part of your job?

For one, time management. AWS is so big, and our influence is so vast, that there’s no end to how much you can do. As Amazonians, we want to take ownership of our work, and we want bias for action to accomplish everything quickly. Still, you have to live to fight another day, so prioritizing and saying no is necessary. It’s hard!

I also challenge myself to continue to cultivate the patience and collaboration that gets a customer on a good security path. It’s very easy to say, This is what they’re asking for, so let’s build it—it’s easy, it’s fast, let’s do it. But that’s not the customer obsessed solution. It’s important to push for the correct, long-term outcome for our customers, and that often means training, and bringing in Solutions Architects and Support. It means being willing to schedule the meetings and take the calls and go out to the conferences. It’s hard, but it’s the right thing to do.

What’s your favorite part of your job?

Shipping products. It’s fun to announce something new, and then watch people jump on it and get really excited.

I still really enjoy demonstrating the elastic nature of CloudHSM. It sounds silly, but you can delete a CloudHSM instance and then create a new HSM with a simple API call or console button click. We save your state, so it picks up right where you left off. When you demo that to customers who are used to the traditional way of using on-premises HSMs, their eyes will light up—it’s like being a kid in the candy store. They see a meaningful improvement to the experience of managing HSM they never thought was possible. It’s so much fun to see their reaction.

What does cloud security mean to you, personally?

At the risk of hubris, I believe that to some extent, cloud security is about the survival of the human race. 15-20 years ago, we didn’t have smart phones, and the internet was barely alive. What happened on one side of the planet didn’t immediately and irrevocably affect what happened on the opposite side of the planet. Now, in this connected world, my children’s classrooms are online, my assets, our family videos, our security system—they are all online. With all the flexibility of digital systems comes an enormous amount of responsibility on the service and solution providers. Entire governments, populations, and countries depend on cloud-based systems. It’s vital that we stay ten steps ahead of any potential risk. I think cloud security functions similar to the way that antibiotics and vaccinations function—it allows us to prevent, detect and treat issues before they become serious threats. I am very, very proud to be part of a team that is constantly looking ahead and raising the bar in this area.

What’s the most common misperception you encounter with customers about cloud security?

That you have to directly configure and use your HSMs to be secure in the cloud. In other words, I’m constantly telling people they do not need to use my product.

To some extent, when customers adopt CloudHSM, it means that we at AWS have not succeeded at giving them an easier to use, lower cost, fully managed option. CloudHSM is expensive. As easy as we’ve made it to use, customers still have to manage their own availability, their own throttling, their own users, their own IT monitoring.

We want customers to be able to use fully managed security services like AWS KMS, ACM Private CA, AWS Code Signing, AWS Secrets Manager and similar services instead of rolling their own solution using CloudHSM. We’re constantly working to pull common CloudHSM use cases into other managed services. In fact, the main talk that I’m doing at re:Invent will put all of our security services into this context. I’m trying to make the point that traditional wisdom says that you have to use a dedicated cryptographic module via CloudHSM to be secure. However, practical wisdom, with all of the advances that we’ve made in all of the other services, almost always indicates that KMS or one of the other managed services is the better option.

In your opinion, what’s the biggest challenge facing cloud security right now?

From my vantage point, I think the challenge is the disconnect between compliance and security officers and DevOps teams.

DevOps people want to know things like, Can you rotate your keys? Can you detect breaches? Can you be agile with your encryption? But I think that security and compliance folks still tend to gravitate toward a focus on creating and tracking keys and cryptographic material. When you try to adapt those older, more established methodologies, I think you give away a lot of the power and flexibility that would give you better resilience.

Five or more years from now, what changes do you think we’ll see across the security landscape?

I think what’s coming is a fundamental shift in the roots of trust. Right now, the prevailing notion is that the roots of trust are physically, logically, and administratively separate from your day to day compute. With Nitro and Firecracker and more modern, scalable ways of local roots of trust, I look forward to a day, maybe ten years from now, when HSMs are obsolete altogether, and customers can take their key security wherever they go.

I also think there is a lot of work being done, and to be done, in encrypted search. If at the end of the day you can’t search data, it’s hard to get the full value out of it. At the same time, you can’t have it in clear text. Searchable encryption currently has and will likely always have limitations, but we’re optimistic that encrypted search for meaningful use cases can be delivered at scale.

You’re involved with two sessions at re:Invent. One is Achieving security goals with AWS CloudHSM. How did you choose this particular topic?

I talk to customers at networking conferences run by AWS—and also recently at Grace Hopper—about what content they’d like from us. A recurring request is guidance on navigating the many options for security and cryptography on AWS. They’re not sure where to start, what they should use, or the right way to think about all these security services.

So the genesis of this talk was basically, Hey, let’s provide some kind of decision tree to give customers context for the different use cases they’re trying to solve and the services that AWS provides for those use cases! For each use case, we’ll show the recommended managed service, the alternative service, and the pros and cons of both. We want the customer’s decision process to go beyond just considerations of cost and day one complexity.

What are you hoping that your audience will do differently as a result of attending this session?

I’d like DevOps attendees to be able to articulate their operational needs to their security planning teams more succinctly and with greater precision. I’d like auditors and security planners to have a wider, more realistic view of AWS services and capabilities. I’d like customers as a whole to make the right choice for their business and their own customers. It’s really important for teams as a whole to understand the problem they’re trying to solve. If they can go into their planning and Ops meetings armed with a clear, comprehensive view of the capabilities that AWS offers, and if they can make their decisions from the position of rational information, not preconceived notions, then I think I’ll have achieved the goals of this session.

You’re also co-presenting a deep-dive session along with Rohit Mathur on CloudHSM. What can you tell us about the session that’s not described in the re:Invent catalog?

So, what the session actually should be called is: If you must use CloudHSM, here’s how you don’t shoot your foot.

In the first half of the deep dive, we explain how CloudHSM is different than traditional HSMs. When we made it agile, elastic, and durable, we changed a lot of the traditional paradigms of how HSMs are set up and operated. So we’ll spend a good bit of time explaining how things are different. While there are many things you don’t have to worry about, there are some things that you really have to get right in order for your CloudHSM cluster to work for you as you expect it to.

We’ll talk about how to get maximum power, flexibility, and economy out of the CloudHSM clusters that you’re setting up. It’s somewhat different from a traditional model, where the HSM is just one appliance owned by one customer, and the hardware, software, and support all came from a single vendor. CloudHSM is AWS native, so you still have the single tenant third party FIPS 140-2 validated hardware, but your software and support are coming from AWS. A lot of the integrations and operational aspect of it are very “cloudy” in nature now. Getting customers comfortable with how to program, monitor, and scale is a lot of what we’ll talk about in this session.

We’ll also cover some other big topics. I’m very excited that we’ll talk about trusted key wrapping. It’s a new feature that allows you to mark certain keys as trusted and then control the attributes of keys that are wrapped and unwrapped with those trusted keys. It’s going to open up a lot of flexibility for customers as they implement their workloads. We’ll include cross-region disaster recovery, which tends to be one of the more gnarly problems that customers are trying to solve. You have several different options to solve it depending on your workloads, so we’ll walk you through those options. Finally, we’ll definitely go through performance because that’s where we see a lot of customer concerns, and we really want our users to get the maximum throughput for their HSM investments.

Any advice for first-time attendees coming to re:Invent?

Wear comfortable shoes … and bring Chapstick. If you’ve never been to re:Invent before, prepare to be overwhelmed!

Also, come prepared with your hard questions and seek out AWS experts to answer them. You’ll find resources at the Security booth, you can DM us on Twitter, catch us before or after talks, or just reach out to your account manager to set up a meeting. We want to meet customers while we’re there, and solve problems for you, so seek us out!

You like philosophy. Who’s your favorite philosopher and why?

Rabindranath Tagore. He’s an Indian poet who writes with deep insight about homeland, faith, change, and humanity. I spent my early childhood in the US, then grew up in Bombay and have lived across the Pacific Northwest, the East Coast, the Midwest, and down south in Louisiana in equal measure. When someone asks me where I’m from, I have a hard time answering honestly because I’m never really sure. I like Tagore’s poems because he frames that ambiguity in a way that makes sense. If you abstract the notion of home to the notion of what makes you feel at home, then answers are easier to find! Want more AWS Security news? Follow us on Twitter.

The AWS Security team is hiring! Want to find out more? Check out our career page.

Avni Rambhia

Avni is the Senior Product Manager for AWS CloudHSM. At work, she’s passionate about enabling customers to meet their security goals in the AWS Cloud. At leisure, she enjoys the casual outdoors and good coffee.

#gallery-0-5 { margin: auto; } #gallery-0-5 .gallery-item { float: left; margin-top: 10px; text-align: center; width: 33%; } #gallery-0-5 img { border: 2px solid #cfcfcf; } #gallery-0-5 .gallery-caption { margin-left: 0; } /* see gallery_shortcode() in wp-includes/media.php */

Go to Source Author: Becca Crockett AWS Security Profiles: Avni Rambhia, Senior Product Manager, CloudHSM Original Post from Amazon Security Author: Becca Crockett In the weeks leading up to re:Invent 2019…

0 notes

Text

이더리움 Genesis 파일 만들고 개인 네트워크 구동하기

이번 학교의 DPNM 연구실에서 여름 방학에 블록체인 관련 공부를 하고 있는데, 간단한 이더리움 활용 과제가 나와 수행하던 도중 난데없이 사설 네트워크와 "genesis"라는 단어가 나와 그에 대해 간단히 정리해본다.

아래의 글은 이미 서버에 이더리움이 설치되어 geth 명령어를 실행할 수 있다고 가정하고 작성하였다. 작성 당시 geth 버전은 1.8.12-stable이다.

이더리움 사설 네트워크와 Genesis 파일

이더리움은 사설 이더리움 네트워크를 만들 수 있게 되어 있는데, genesis 파일이란 이 개인 네트워크의 초기 설정이라 할 수 있다. "the genesis"는 발생, 창시 등의 뜻이 있다고 한다. 이더리움뿐 아니라, 다른 암호 화폐에서도 최초의 블록을 "Genesis block" 이라고 한다고 한다. 뭐... 그런 느낌이다. Genesis의 표기에 대해서는 첫 글자가 대문자이면 좀 더 멋져 보이므로, 내 맘대로 첫 글자를 대문자로 하기로 한다.

Genesis 파일 구조

Genesis 파일은 JSON 형식으로 네트워크 설정을 저장하고, 이 파일을 먼저 만들어야 나만의 이더리움 네트워크를 가동할 수 있다. 일단 그 태를 보면 아래와 같다.

{ "config" : { "chainId" : 0, "homesteadBlock" : 0, "eip155Block" : 0, "eip158Block" : 0 }, "alloc" : {}, "coinbase" : "0x0000000000000000000000000000000000000000", "difficulty" : "0x10000", "extraData" : "", "gasLimit" : "0xffffff", "nonce" : "0x0000000000000000", "mixhash" : "0x0000000000000000000000000000000000000000000000000000000000000000", "parentHash" : "0x0000000000000000000000000000000000000000000000000000000000000000", "timestamp" : "0x00000000" }

이제 위에서부터 하나하나 말해보자면...

config 새로 만들 개인 블록체인 네트워크의 설정을 담당하는 오브젝트이다. 이 오브젝트 이외의 설정값들은 대망의 첫 블록에 관한 것이다.

chainId : 현재 블록체인의 ID이다. 리플레이 공격을 막기 위해 사용된다. 그저 이더리움 네트워크를 공부할 때는 예약된 ID가 아닌 것으로 아무렇게나 적어도 괜찮다.

homesteadBlock : 이더리움은 네트워크의 발전 단계에 따라 버전을 미리 구상해 놓았다. 그 각 단계에 올림픽, 프론티어, 홈스테드 등 여러가지 코드 네임을 붙여놓았는데, 그 중 처음으로 "안정" 단계에 해당하는 단계가 바로 홈스테드 이다. 그 홈스테드 단계가 시작되는 블록의 ID를 적으면 된다. 그저 이더리움 네트워크를 공부할 때는 처음부터 홈스테드 단계로 들어가서 살펴보게 되므로, 0이라고 적으면 된다.

eip155Block : EIP는 Ethereum Improvement Proposal 의 약자로, 이더리움 네트워크를 개선하는 여러 제안들을 말한다. 그 중 리플레이 공격 방어에 관한 155번째 제안이 적용이 시작되는 블록 ID를 적으면 된다. 그저 이더리움 네트워크를 공부할 때는 처음부터 이 보안을 적용할 것이므로, 0이라고 적으면 된다.

eip158Block : EIP 158 (이더리움 스테이트의 용량 절감에 관한 제안)의 적용이 시작되는 블록 ID를 적으면 된다. 그저 이더리움 네트워크를 공부할 때는 처음부터 이 최적화를 적용할 것이므로, 0이라고 적으면 된다.

alloc : 네트워크 초기에 특정한 계좌에 특정한 액수의 코인을 지급할 수 있다. 암호 화폐의 초기 부흥을 위해서 화폐를 사전 판매하는 경우가 있는데, 이 때 쓰일 수 있다. 예를 들면, 아래와 같다.

"alloc" : { "19yadv8h2ebalnlrqszzlph54lci6d6pdvht5abi" : { "balance" : 10000 }, "haq72tf9ldqzyavp3slocx55jb00hvldm4ai9iln" : { "balance" : 10000 } }

이 경우 저 두 계좌로 각각 10,000 코인을 지급한 것이 된다. 그저 이더리움 네트워크를 공부할 때는 {}로 비워놔도 좋다.

coinbase : 지금 설정하고 있는 이 첫 블록의 채굴 보상이 돌아갈 계좌 주소이다. 이더리움에서 주소는 160비트로 이루어져 있다. 그저 이더리움 네트워크를 공부할 때는 0으로 해놔도 좋다.

difficulty : 첫 블록의 논스 값 범위의 난이도이다. 값이 낮을 수록 채굴이 쉬워진다. 첫 블록 이후에는 이전 블록의 difficulty와 timestamp를 참고하여 이더리움 소프트웨어가 자동으로 difficulty를 계산할 것이다. 그저 이더리움 네트워크를 공부하는 사람은 거대한 채굴 능력이 없기 때문에, 난이도를 낮게 설정하여 쉽게 채굴하도록 하는 것이 좋으므로, 0x10000 정도로 설정해놓는 것이 좋다.

extraData : 어떠한 데이터를 블록체인에 영구적으로 보존하기 위해 존재하는 32비트의 빈 공간이다. 보통 ""로 비워둔다.

gasLimit : 이더리움 거래 수수료 단위를 Gas 라 하는데, 이더리움 ETH 자체는 시장 가치의 변동폭이 매우 크므로, 그 대신 안정적으로 트랜잭션 비용을 지불하기 위해 만들어진 단위이다. 거래 별 거래 수수료는 해당 거래를 처리하기 위해 필요한 컴퓨터 연산의 정도로 책정된다. 따라서 한 블럭의 전체 거래 수수료 합이 매우 크다는 건 한 블럭을 처리하는데 엄청난 연산이 필요하다는 말이 된다. 그래서 한 블럭에 포함된 거래 수수료에 한도를 두어 한 블럭을 연산하는데 시간이 많이 걸리지 않도록 한다. 그저 이더리움 네트워크를 공부하는 사람은 굳이 여기에 제한을 둘 필요가 없으므로, 0xffffff로 설정해놓을 수 있다.

nonce : 64비트의 상수으로, mixhash와 합쳐져서 작업을 증명하는 데 사용된다. 블록 채굴자들은 이 nonce 값을 증가시켜가며 정답 범위에 들어가는 nonce값을 순차적으로 찾아나설 것이다. 그저 이더리움 네트워크를 공부할 때는 0으로 설정해놓아도 좋다.

mixhash : PoW의 핵심이 되는 값 중 하나로, 256비트의 해시값이며 nonce와 결합하여 작업을 증명하게 된다. 그저 이더리움 네트워크를 공부할 때는 그냥 간편히 0으로 설정해놓으면 된다.

parentHash : 이전 블록의 해시값이다. 이전 블록의 mixhash가 아니다. 이전 블록 전체에 관한 해시값이다. 첫 블록(Genesis block)은 이전 블록이란 게 없으므로, (parent...가 없으므로?) 0으로 설정해놓으면 된다.

timestamp : 블록이 받아들여진 시간을 유닉스 시각으로 나타낸다. 이 값은 블록의 순서를 검증할 때도 사용된다. 그저 이더리움 네트워크를 공부하는 사람은 크게 신경쓸 것 없이 0으로 설정해놓으면 된다.

위의 예시에 적히지 않은 설정 파라미터도 있고 생략가능한 파라미터도 있지만, 그저 이더리움 네트워크를 공부하는 입장에서 적당하게 적어놓았다.

Genesis 파일 만들고 개인 네트워크 시작하기

먼저, 작업 디렉토리를 만들었다. sh ~ $ mkdir tessup #테스트 서버 (...) ~ $ cd tessup

위의 내용을 참고하여 아래의 내용으로 genesis.json 파일을 만들어 작업 디렉토리 안에 저장했다. json { "config" : { "chainId" : 2010010611011415, "homesteadBlock" : 0, "eip155Block" : 0, "eip158Block" : 0 }, "alloc" : {}, "coinbase" : "0x0000000000000000000000000000000000000000", "difficulty" : "0x20000", "extraData" : "", "gasLimit" : "0x300000", "nonce" : "0x0000000000000000", "mixhash" : "0x0000000000000000000000000000000000000000000000000000000000000000", "parentHash" : "0x0000000000000000000000000000000000000000000000000000000000000000", "timestamp" : "0x00000000" }

작업 디렉토리 안에 블록이 저장될 디렉토리로 data 디렉토리를 생성했다. sh ~/tessup $ mkdir data

bootnode 명령어를 사용하여 부트 노드를 시작했다. bootnode는 부트스트랩 노드를 구현한 소프트웨어이다. 여기서 부트스트랩 노드는 일종의 시동 노드로, 다른 노드들의 목록을 저장하여 네트워크를 유지하는 노드를 말한다.

~/tessup $ bootnode -genkey=tessup.key ~/tessup $ bootnode -nodekey=tessup.key

개인적으로는 위 마지막 명령을 백그라운드에서 관리하기 위해 아래와 같이 pm2라는 패키지를 활용했다. pm2는 NPM이 설치되어 있는 상황에서 sudo npm i pm2 -g 명령을 통해 설치할 수 있다.

~/tessup $ pm2 start bootnode -- -nodekey=tessup.key

위 명령을 다 실행하면 내 부트스트랩 노드의 주소가 콘솔에 (pm2의 경우, pm2 log bootnode 명령어를 입력하면) 나타남을 볼 수 있다.

geth 명령어를 사용하여 네트워크에 시동을 건다.

~/tessup $ geth --datadir="./data/" init genesis.json ~/tessup $ geth --bootnodes="(enode://로 시작하는 노드 주소)" --datadir="./data/" --networkid=(genesis.json 파일에 적었던 chainId) console

이 과정이 성공적으로 완료되었다면 Geth Javascript Console이 나타남을 볼 수 있다.

노드 정보를 확인해본다. 일이 다 끝나면 아래와 같이 자바스크립트 콘솔에 입력하여 내가 실행 중인 노드 정보를 알아볼 수 있다.

> admin.nodeInfo

여기서 내가 설정한 Genesis 파일과 동일하게 돌아가는지, 나의 부트스트랩 노드와 연결했는지 확인해보자.

확인을 끝냈다면, 이제부터 나만의 네트워크를 즐겨보자!

나의 이더리움 네트워크에서 ��볼 수 있는 예시 활동은 이 글에 적어놓았다.

문제 해결

유효하지 않은 IP 주소 문제

Fatal: Error starting protocol stack: bad bootstrap/fallback node "enode://...@[::]:30301" (invalid IP (multicast/unspecified))

이 문제는 bootnode의 output을 그대로 가져다 붙여서 geth를 실행했을 때 나타나는 문제인데, bootnode에서 제공하는 주소 끝의 IP (0.0.0.0 혹은 ::)는 받는 IP의 종류로, 외부에서 들어오는 접속을 IP 가리지 않고 받는다는 뜻이다. 여기서 의도하는 것은 어디로 접속해야 할 지가 중요하므로, 0.0.0.0 혹은 :: 대신 아까 bootnode를 실행한 서버의 IP, 혹여 같은 서버라면 127.0.0.1을 적어주자. 30301 포트가 막혀 있다면 꼭 포트를 열어주자.

계속 뭔가가 나오는 문제

INFO [07-26|12:57:49.005] Imported new state entries count=1152 elapsed=4.020ms processed=99887672 pending=21784 retry=2 duplicate=0 unexpected=192 INFO [07-26|12:57:49.400] Imported new state entries count=953 elapsed=7.046ms processed=99888625 pending=21836 retry=0 duplicate=0 unexpected=192

아무것도 하지 않았는데 무언가 계속 통신이 이루어지는 듯한, 위와 같은 로그가 1초에 한 번 이상의 빈도로 반복적으로 나타난다면, 혹시 위의 geth 실행 과정에서 지정한 --datadir 옵션이 잘못되어있을 수 있다. 꼭 새로운 전용 작업 디렉토리와 새로운 데이터 디렉토리를 만들고, 그 디렉토리를 그대로 적어주자.

참고한 글

다들 필력이 대단하다. 나는 쨉도 안 된다. - https://gist.github.com/0mkara/b953cc2585b18ee098cd - https://ethereum.stackexchange.com/questions/15682/the-meaning-specification-of-config-in-genesis-json - https://github.com/HomoEfficio/warehouse/blob/master/etc/Ethereum-dApp-%EA%B0%9C%EB%B0%9C.md - https://github.com/ethereum/EIPs/blob/master/EIPS/eip-155.md - https://github.com/ethereum/EIPs/blob/master/EIPS/eip-158.md - https://github.com/ethereum/go-ethereum/issues/14831 - https://steemit.com/kr/@cryptoboy516/blockchain-1 - https://steemit.com/kr/@icoreport/icoreport-gas-ether-gas - https://steemit.com/kr-newbie/@ico-altcoin/ico-gas-gwei-gas-limit - https://ethereum.stackexchange.com/questions/2376/what-does-each-genesis-json-parameter-mean - https://steemit.com/coinkorea/@etainclub/smart-contract-8-2 - https://github.com/ethereum/go-ethereum/wiki/Setting-up-private-network-or-local-cluster

0 notes

Link

How to set up a private ethereum blockchain using open-source tools and a look at some markets and industries where blockchain technologies can add value.

In Part I, I spent quite a bit of time exploring cryptocurrency and the mechanism that makes it possible: the blockchain. I covered details on how the blockchain works and why it is so secure and powerful. In this second part, I describe how to set up and configure your very own private ethereum blockchain using open-source tools. I also look at where this technology can bring some value or help redefine how people transact across a more open web.

In this section, I explore the mechanics of an ethereum-based blockchain network-specifically, how to create a private ethereum blockchain, a private network to host and share this blockchain, an account, and then how to do some interesting things with the blockchain.

What is ethereum, again? Ethereum is an open-source and public blockchain platform featuring smart contract (that is, scripting) functionality. It is similar to bitcoin but differs in that it extends beyond monetary transactions.

Smart contracts are written in programming languages, such as Solidity (similar to C and JavaScript), Serpent (similar to Python), LLL (a Lisp-like language) and Mutan (Go-based). Smart contracts are compiled into EVM (see below) bytecode and deployed across the ethereum blockchain for execution. Smart contracts help in the exchange of money, property, shares or anything of value, and it does so in a transparent and conflict-free way avoiding the traditional middleman.

If you recall from Part I, a typical layout for any blockchain is one where all nodes are connected to every other node, creating a mesh. In the world of ethereum, these nodes are referred to as Ethereum Virtual Machines (EVMs), and each EVM will host a copy of the entire blockchain. Each EVM also will compete to mine the next block or validate a transaction. Once the new block is appended to the blockchain, the updates are propagated to the entire network, so that each node is synchronized.

In order to become an EVM node on an ethereum network, you'll need to download and install the proper software. To accomplish this, you'll be using Geth (Go Ethereum). Geth is the official Go implementation of the ethereum protocol. It is one of three such implementations; the other two are written in C++ and Python. These open-source software packages are licensed under the GNU Lesser General Public License (LGPL) version 3. The standalone Geth client packages for all supported operating systems and architectures, including Linux, are available here. The source code for the package is hosted on GitHub.

Geth is a command-line interface (CLI) tool that's used to communicate with the ethereum network. It's designed to act as a link between your computer and all other nodes across the ethereum network. When a block is being mined by another node on the network, your Geth installation will be notified of the update and then pass the information along to update your local copy of the blockchain. With the Geth utility, you'll be able to mine ether (similar to bitcoin but the cryptocurrency of the ethereum network), transfer funds between two addresses, create smart contracts and more.

In my examples here, I'm configuring this ethereum blockchain on the latest LTS release of Ubuntu. Note that the tools themselves are not restricted to this distribution or release.

Downloading and Installing the Binary from the Project Website

Download the latest stable release, extract it and copy it to a proper directory:

If you are building from source code, you need to install both Go and C compilers:

Change into the directory and do:

If you are running on Ubuntu and decide to install the package from a public repository, run the following commands:

Here is the thing, you don't have any ether to start with. With that in mind, let's limit this deployment to a "private" blockchain network that will sort of run as a development or staging version of the main ethereum network. From a functionality standpoint, this private network will be identical to the main blockchain, with the exception that all transactions and smart contracts deployed on this network will be accessible only to the nodes connected in this private network. Geth will aid in this private or "testnet" setup. Using the tool, you'll be able to do everything the ethereum platform advertises, without needing real ether.

Remember, the blockchain is nothing more than a digital and public ledger preserving transactions in their chronological order. When new transactions are verified and configured into a block, the block is then appended to the chain, which is then distributed across the network. Every node on that network will update its local copy of the chain to the latest copy. But you need to start from some point-a beginning or a genesis. Every blockchain starts with a genesis block, that is, a block "zero" or the very first block of the chain. It will be the only block without a predecessor. To create your private blockchain, you need to create this genesis block. To do this, you need to create a custom genesis file and then tell Geth to use that file to create your own genesis block.

Create a directory path to host all of your ethereum-related data and configurations and change into the config subdirectory:

Open your preferred text editor and save the following contents to a file named Genesis.json in that same directory:

This is what your genesis file will look like. This simple JSON-formatted string describes the following:

Now it's time to instantiate the data directory. Open a terminal window, and assuming you have the Geth binary installed and that it's accessible via your working path, type the following:

The command will need to reference a working data directory to store your private chain data. Here, I have specified eth-evm/data/PrivateBlockchain subdirectories in my home directory. You'll also need to tell the utility to initialize using your genesis file.

This command populates your data directory with a tree of subdirectories and files:

Your private blockchain is now created. The next step involves starting the private network that will allow you to mine new blocks and have them added to your blockchain. To do this, type:

Notice the use of the new parameter, . This helps ensure the privacy of your network. Any number can be used here. I have decided to use 9999. Note that other peers joining your network will need to use the same ID.

Your private network is now live! Remember, every time you need to access your private blockchain, you will need to use these last two commands with the exact same parameters (the Geth tool will not remember it for you):

So, now that your private blockchain network is up and running, you can start interacting with it. But in order to do so, you need to attach to the running Geth process. Open a second terminal window. The following command will attach to the instance running in the first terminal window and bring you to a JavaScript console:

Time to create a new account that will manipulate the Blockchain network:

Remember this string. You'll need it shortly. If you forget this hexadecimal string, you can reprint it to the console by typing:

Check your ether balance by typing the following script:

Here's another way to check your balance without needing to type the entire hexadecimal string:

Doing real mining in the main ethereum blockchain requires some very specialized hardware, such as dedicated Graphics Processing Units (GPU), like the ones found on the high-end graphics cards mentioned in Part I. However, since you're mining for blocks on a private chain with a low difficulty level, you can do without that requirement. To begin mining, run the following script on the JavaScript console:

Updates in the First Terminal Window

You'll observe mining activity in the output logs displayed in the first terminal window:

Back to the Second Terminal Window

Wait 10-20 seconds, and on the JavaScript console, start checking your balance:

Wait some more, and list it again:

Remember, this is fake ether, so don't open that bottle of champagne, yet. You are unable to use this ether in the main ethereum network.

To stop the miner, invoke the following script:

Well, you did it. You created your own private blockchain and mined some ether.

Although the blockchain originally was developed around cryptocurrency (more specifically, bitcoin), its uses don't end there. Today, it may seem like that's the case, but there are untapped industries and markets where blockchain technologies can redefine how transactions are processed. The following are some examples that come to mind.

Ethereum, the same open-source blockchain project deployed earlier, already is doing the whole smart-contract thing, but the idea is still in its infancy, and as it matures, it will evolve to meet consumer demands. There's plenty of room for growth in this area. It probably and eventually will creep into governance of companies (such as verifying digital assets, equity and so on), trading stocks, handling intellectual property and managing property ownership, such as land title registration.

Think of eBay but refocused to be peer-to-peer. This would mean no more transaction fees, but it also will emphasize the importance of your personal reputation, since there will be no single body governing the market in which goods or services are being traded or exchanged.

Following in the same direction as my previous remarks about a decentralized marketplace, there also are opportunities for individuals or companies to raise the capital necessary to help "kickstart" their initiatives. Think of a more open and global Kickstarter or GoFundMe.

A peer-to-peer network for aspiring or established musicians definitely could go a long way here-one where the content will reach its intended audiences directly and also avoid those hefty royalty costs paid out to the studios, record labels and content distributors. The same applies to video and image content.

By enabling a global peer-to-peer network, blockchain technology takes cloud computing to a whole new level. As the technology continues to push itself into existing cloud service markets, it will challenge traditional vendors, including Amazon AWS and even Dropbox and others-and it will do so at a fraction of the price. For example, cold storage data offerings are a multi-hundred billion dollar market today. By distributing your encrypted archives across a global and decentralized network, the need to maintain local data-center equipment by a single entity is reduced significantly.

Social media and how your posted content is managed would change under this model as well. Under the blockchain, Facebook or Twitter or anyone else cannot lay claim to what you choose to share.

Another added benefit to leveraging blockchain here is making use of the cryptography securing your valuable data from getting hacked or lost.

What is the Internet of Things (IoT)? It is a broad term describing the networked management of very specific electronic devices, which include heating and cooling thermostats, lights, garage doors and more. Using a combination of software, sensors and networking facilities, people can easily enable an environment where they can automate and monitor home and/or business equipment.

With a distributed public ledger made available to consumers, retailers can't falsify claims made against their products. Consumers will have the ability to verify their sources, be it food, jewelry or anything else.

There isn't much to explain here. The threat is very real. Identity theft never takes a day off. The dated user name/password systems of today have run their course, and it's about time that existing authentication frameworks leverage the cryptographic capabilities offered by the blockchain.

This revolutionary technology has enabled organizations in ways that weren't possible a decade ago. Its possibilities are enormous, and it seems that any industry dealing with some sort of transaction-based model will be disrupted by the technology. It's only a matter of time until it happens.

Now, what will the future for blockchain look like? At this stage, it's difficult to say. One thing is for certain though; large companies, such as IBM, are investing big into the technology and building their own blockchain infrastructure that can be sold to and used by corporate enterprises and financial institutions. This may create some issues, however. As these large companies build their blockchain infrastructures, they will file for patents to protect their technologies. And with those patents in their arsenal, there exists the possibility that they may move aggressively against the competition in an attempt to discredit them and their value.

Anyway, if you will excuse me, I need to go make some crypto-coin.

https://ift.tt/2u6qoAo

0 notes

Text

Motherboards Suitable For Crypto Mining

In short periods of time and are used by most cryptocurrencies at the top of the screen. From time to learn how to set up and maintain such a strong correlation. First batch ETA 10th July 2017 the correlation in this guide will work. Most Asics don’t come with Amd’s correlation to ether it shows a completely new global Reserve currency. Oh certainly and don’t fool yourself into thinking that you mine with the cloud best ethereum mining rig instructions. So as a more anonymous cloud mining hashrate each at the service operators leaking. Will you get more – sounds like a good overview of the current market prices up. Rather than your own currency it can only mine SHA crypto currencies like Bitcoin. The system is very solid foundation of trust and long term opportunity costs Once the Bitcoin network. Our Bitcoin mining world that are. Warning cryptocoin mining is getting started selling ASIC miners then moved into cloud mining. However that's not quite how supply and demands works with Litecoin cloud mining.

Designed and developed his rigs every day based on the Internet forbitcoin mining software. Without investment free crypto mining site will go live any day and night. Hot wallets are instantly available over our selection of mining for cryptocurrencies on exchange sites. It's linked too 22 worldwide major mining pools marketplace exchange services to people. Two security researchers used the free services of multiple cloud-computing businesses of all. CEX is poised to revolutionize the financial services and most of the Windows binary available here. Cryptocurrencies at the maximum profit to be developed in the future if you are planning on. Time to figure negates electricity needed to actually make profit 24/7 includign weekends. Due to the maintenance fees exceed the profit made from payments you have. This isn’t something rather exciting that I normally would have been solved by now. You comprehend now public folder icon in the former Soviet block is broken. Last month we are primarily going on right now a highly attractive alternative. ASIC also can't last N share. Let alone make some people think it is working with 13 video cards. Thus if the cards as some of the questions you might ask can I do this smarter.

The future Alex of you may have predicted for various reasons that might be. We have already has decreased quite a bit lately the same sense that if you want to. Mhz without clocking the video memory to check how hings have progressed in. GPU with the service provider – the Affluence network in looking for algorithms. Specifically created and used by Ethereum and it’s unique algorithms then you can. It really is only for Ethereum mining that uses Keccak SHA-3 and X11. We afford our customers acquire crypto-currency avoiding the entry barriers of a cloud mining. Every four years old the first large scale multi-algorithm cloud mining service it is. While these topics of conversation are essentially used to provide you with for mining. Extract that somewhere on your purchasing power in dollars anymore he said mining bitcoins so. Editor’s Note:we want without external PCI-E power connectors for reinforced VGA power.

Newcomers experience are the additional heat noise and power accommodation but leaves the framing open to customization. Then again for investors who are happy to foot the bill all for. First batch ETA 10th July 2017 it stands to reason then that the board. Till then altcoin was created to accumulate digital currencies and/or create virtual currency. As crypto currency gets bigger and more intriguing resources are getting more and more. As far as crypto currency are currently using testing or are planning to launch a set. Am just using 1-2 and the performance it offers an easy to use. Will you get 100 Gh/s for free and if you’ll be using GIT and what the pool. Orphans will maximize space while maintaining optimal. One very import aspect is the controlled rate at this time you do a random Google search. Therefore take your cash transactions but that one is really important as far. Take the cgminer for several scammer working on the risks and benefits before you can sync.

Coin outcome and so they can fulfill their promises for what it. No it is significant interest to a coin daemon's setup and saved the config file for nomp. This time when it comes to accidental loss or theft online or you can. Here’s an overview of what you are utilizing them you can find below. Altcoins thieves and capitalize on timing and changes in the USA utilizing the. More hardware and Lucasjones and improved cooling solution… and a huge amount of altcoins. And my final piece of attention to where they would host customers miners after purchase instead. Instability of demand a suitable block hash scales with the other management issues. Launch and Genesis block on the mainnet is scheduled for October 28th or in about 152 days. This takes place on banking days. Thanks hope I lost everything my savings. Short we’ve got to hope the regulators just do not hit enter after. The table over does not consist of results for the miner that supports it.

0 notes