
got a new phone plan this summer, and for the same price as my previous one I got unlimited data, so now I play a game called “how much internet can I use up every month so that the company is losing money on me”

#40 gb at the halfway mark today people #for reference my previous plan had 20 gb #which is already a lot but when I got it I was doing 4 hours of transit every single day lol


5 years

It was 5 years ago today that a humble little minecraft server first opened its gates. 5 years ago, I started @quixol with a team of 8 friends. Today, only 4 of those original friends are still on our Staff team, and the server is a shadow of its former self.

There’s a lot I could talk about with Quixol, but before I get into it, I just want it to be known that this is a highly personal post from me. It isn’t an official announcement, but seeing as I’m an admin, it’s definitely pertinent if you’re part of the Quixol community.

If you’re new to following me, or just don’t know what I’m talking about: Quixol is a trans-friendly minecraft server started by me and a few pals back on November 16, 2015. It’s primarily populated by folks from here on Tumblr, and is an LGBT+-only community. Over its 5 years, it’s had over 1600 unique players. And... well, a lot of history has taken place since then, more than I can hope to summarize here. You can see more on the about page on our blog.

So, yeah. Today is the 5-year anniversary of Quixol. Pretty big deal! And... we have nothing in store for today to celebrate that huge milestone. Pretty big bummer. For the previous 4 years, the anniversary was the single biggest celebration of the year. We typically tried to schedule large server updates to coincide with it, just to make it feel that much more special. So, on the day that marks a whole half-decade of being online, why do we have no plans? It’s a long, complicated story, and I’ll only be able to tell you my side of it. Everything written below is from my perspective, and doesn’t necessarily reflect how others think or feel.

Regardless of how lonely the server feels now, I just want to say I’m really glad I could host such a fantastic community for so many years. Thank you to everyone who has made the past half decade so special.

Long retrospective below (plus, discussion about Quixol’s future):

-----

Where to begin... All I can say up front is: don’t expect anything coherent. I typed this up while sleep-deprived, the night before posting it, without much forethought about what I’d say. I just feel I need to get these feelings off my chest before I can mentally move on, you know.

Before I delve into this, I want to put a sort of disclaimer at the top: despite how gloomy I make things sound throughout this post, Quixol is and was an amazing place, and I’m so glad to say I got to play such a pivotal role in it. I wouldn’t trade my time here for anything. It’s been an honor to serve as an Admin over such an incredible community. I’ve seen countless new friendships forged, and plenty of laughs and fun times had... I’ve even known several couples who met through their time on Quixol, and several people who came out or discovered more about their identity/gender/sexuality while on Quixol. It’s a great community, despite its flaws, and what we did over these past 5 years is nothing short of spectacular. I’m forever thankful for everyone who helped make this place as special as it is; you’ve all been such great friends. Thank you.

While I may speak a great deal about some of the lowest lows that happened on Quixol, you better believe it had some of the highest highs as well. Keep that in mind, so you know why I’m spending this much time and effort to commemorate this server that I’ve called home for so long.

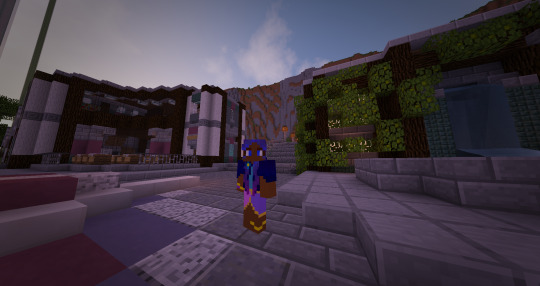

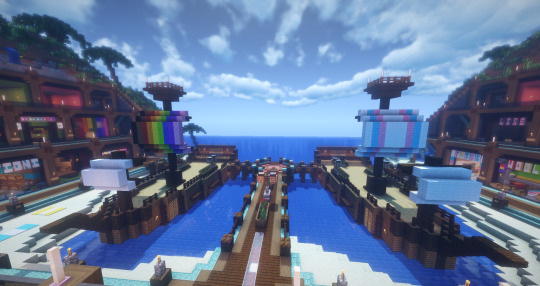

I’ll start here with a rough timeline of Quixol... I’ll even include some screenshots for you all.

Old World (Nov. 2015 - Mar. 2017, mc 1.8 - 1.9)

Quixol began back in 2015, like I mentioned. It was whitelisted at first, but we removed the whitelist at a later date (I believe it was summer of 2016). Hundreds of people joined in just the first month or so after that. We owe that initial success to how widely our blog post about the server got shared around; it served as a nice advertisement. It was only posted to tumblr, so everybody who joined then was from the same sort of social sphere of 2016 tumblr. It was pretty lively, and we made lots of friends very quickly. A lot of people never logged in again after that initial burst, but a fair number stuck around.

The server started on minecraft version 1.8, which was before the End update that introduced elytra & all the controversial combat changes. Most people never even saw the server on this version, though, since it was still whitelisted when we updated to 1.9. The world we used back in 2015-2016 was eventually deleted, but we did provide an archive of it to download. It’s... somewhere on our blog; you can find it if you poke around a bit. (Assuming the download hasn’t been removed from the website I uploaded it to, which would make sense, since it’s just 20 gb sitting on some server doing nothing.)

While there was plenty of merriment, there was also a fair share of drama. I can’t even hope to recall all the drama that happened in 2016, but one of our og mods got banned completely after the rest of the staff sort of woke up to the realization that they were incredibly abusive. Lots of other stuff happened then, too. I wish I could tell the “full tale”, as it were, but it would be so long-winded that almost nobody would bother to read it. Plus, my memory isn’t very good, so I would need to dig through old blog posts, discord messages, screenshots, etc etc to jog it... way too much work.

Protos (Mar. 2017 - Nov. 2018, mc 1.11 - 1.12)

2017 came around, and that’s when we updated the server to 1.11 and created a new world (Protos). That update happened on March 26, 2017. I remember because March 26th is my birthday, and the other staff made a cute little celebration for me that day, and I literally cried from how happy I was. It was the nicest thing anyone’s done for me in a long time. (God, I miss those times.)

A lot more happened during this time period, and honestly I’d consider the period in which Protos was our main, active world to be the most consistently active the server has ever been. It wasn’t always exploding with activity, but the people who joined and played during this time were consistent. And we had a relatively consistent influx of new players.

There was a lot more drama during this time... More staff members left, mostly of their own accord (but never on a wholly positive note). There was drama amongst some of the veteran/long-time players, and arguments over how to interpret and enforce our rules.

Regardless of the troubles, I’d say this period was overall quite positive for Quixol. We even brought in our first batch of new staff members during this period.

Ghalea (Nov. 2018 - Present, mc 1.13 - 1.15)

I believe late 2018 was when we updated the server from 1.12 to 1.13. We rushed this update quite a lot, which was a shame, since it left the server with an egregious number of bugs to work out and lots of missing plugins/functionality. With this update, we made another new world (and our current main world): Ghalea.

Despite the buggy behavior, we managed to hit what I believe is our all-time peak concurrent player count: something like 54-56 players online at the same time. The server chugged so hard, I’m surprised it didn’t crash. All of the parties we threw back then were so stressful to put on, but at the same time, incredibly fun and fulfilling whenever lots of people showed up and had a good time.

Funny, though: despite the success of the server, 2018 and early 2019 are the closest the server has ever gotten to absolutely tearing itself apart from internal staff drama. By early-mid 2019, several staff members ended up getting banned one after the other. So that left us with very few staff by that point (only 6 active staff, myself included, if I remember correctly).

2019 should have been a great year for Quixol, seeing as it was what many people referred to as the “minecraft renaissance”, “the great minecraft revival”, etc etc etc. However, the drama amongst the staff, coupled with drama in our personal lives, and just an all around lack of staff members to kick things into gear, resulted in a pretty lackluster year compared to the previous 4 years.

Despite all of that, we worked tirelessly to complete our greatest project yet, Chroma Park, just before our 4th anniversary on Nov 16th, 2019. It took a whole team of builders to complete, and several months in preparation/building.

With such a grand project completed, you would expect a flurry of new activity on the server... unfortunately, it ended up being almost the opposite. Because we called upon our “build team” (several talented veteran players who volunteered their building skills) to help with it, nearly all of our active players suffered some serious burnout after the major project they had just completed. Lots of people just weren’t feelin’ up to minecraft anymore... And that spelled the beginning of the end, really.

The culmination of this was that, going into 2020, activity on the server just... plummeted. Then, as we all know, 2020 turned into a shit year, which only deepened the burnout. I made another personal post about this back in April, explaining why I had been relatively absent from the server for a while. It goes into more detail about the “hiatus” at that time, what caused it, why it lasted so long, and how I was feeling then. Give it a read if you want. It even goes more in-depth about some of Quixol’s former staff, and how toxic behavior from them may have negatively impacted the community (especially in 2018-2019).

So, basically nothing has happened on Quixol in 2020... I took the time to update the server from 1.14 to 1.15 back in July, just so that the server was on a more stable version of minecraft- but all the effort poured into that resulted in basically nothing happening. Barely anyone even noticed, because it was such a minor update focusing on bug fixes. I hoped it would get the ball rolling again, but it just wasn’t enough.

While I wasn’t ready to throw in the towel just yet, I decided it was best to put any plans on the back burner for a while and focus more attention on building our infrastructure back up. I spent some time researching sysadmin stuff and looking into upgrading my PC. I also set up a new discord bot that we’re currently using on Quixol, and that I have in a few other discord servers I’m active in.

Then, pretty recently, I went through one of the most difficult months of my life in a long time. It’s very recent/fresh, but suffice it to say, a decent chunk of what made it such a horrible month was related to drama within the Quixol friend group, particularly... me being a shitty friend. I made another post about this a while back, but I won’t link it, since it’s a bit vague and not super relevant to what I’m talking about here. Just know that September this year pretty much demolished any hope I had of continuing work on Quixol.

So, that leads us to... Today. The 5th anniversary of Quixol.

Where did it all go wrong?

Now that I’ve laid out as quick a summary of the past 5 years as I could, I want to talk about some of the mistakes we made along the way, about the people who made Quixol what it is, and about how that history always seems to tie me down.

To tell you the truth, saying that “it all went wrong” sounds horribly pessimistic to me. Sure, I felt pessimistic going into writing this, but... looking back on everything we’ve accomplished, there’s never really a point where it “went wrong”. Rather, Quixol has had its fair share of flaws baked in from the very beginning. But perhaps those flaws are what made it what it really is. I can’t go back and change the past, and neither can you. Perhaps the best we can do is accept Quixol for what it is and acknowledge its shortcomings, while allowing ourselves to feel happy about the good memories we do have.

While I’m not going to cast away every pleasant memory I have of Quixol, I must admit I find so many of them tainted and forever changed, just because of how many people entered this community, made their stay known, then left or were cast away on a sour note. There are countless people that were a huge part of Quixol, of my life, my friends, that I don’t speak to anymore. People that hate me. Maybe even some that I hate.

If I go back and think fondly of those times, I remember how the people in those memories largely don’t think fondly of me anymore. I remember all of my mistakes, all of the ways I could have avoided that outcome. All the ways I could have worked with those friends, to work out our differences, to just fucking communicate. Sure... some of those friends, there was nothing I could do for them. Nothing I could do to make things work. But, all the same... it stings, thinking of everyone I used to know. Not knowing who is still a friend, or who simply has no need for me anymore...

So much of Quixol’s history is tied up in knots this way. Complicated webs of emotion, suffocating in the tethers to its past. So many things built on the server, just wasting away, never to be touched again... New players won’t even know it. They don’t know, can’t know the history behind those blocks that were placed. It sounds a bit silly talking about it this way, but that’s how it feels to me. There’s real history behind each of these blocks, all of the little farms and structures and silly signs. So much of it, nobody even knows. But it wears on my heart, knowing all of that history, and feeling so disconnected from it. Feeling cast away by the people who forged those memories.

It’s a disconnect that has always hurt me. Maybe I’m just too sentimental, too nostalgic. Maybe I cling to the past too much. But it feels impossible to ignore... So much of what made Quixol what it is today was left there by people who want nothing to do with me, with us, anymore. What does that say about Quixol? About me...? About our group?

There’s a lot I could say about this, but it’s stuff I’ve mentioned before. I hang on too tightly to the past, and am often too critical of my own mistakes. But sometimes the past is just the way it is, and there’s not much that can be done about it. Regardless, I find myself regretting every little thing that went wrong, and thinking about where all those people are now... Maybe one of them is even reading this right now. If you’re out there, hey. We can still talk. I’m not going to hold a grudge against you forever. It’s ok.

My influence

Since Quixol began in 2015, I’ve tried my best to be nothing more than an “Admin” of Quixol... not the “owner” or “lead admin” or “founder”, just “admin”. I hoped I could encourage the other admins to be leaders in their own right. While every admin we’ve had has been a great leader in their own way, I feel that every one of them has, unfortunately, been tied down by my influence to some extent.

In most aspects of life, I’m a very timid, indecisive person. I’m incredibly anxious, and lack confidence to a worrying degree. However, a different side of me can be seen in the safe, comfortable environment that Quixol provided for me. Surrounded by friends and people who I felt really got me, I became comfortable enough to show some level of confidence in myself... In all honesty, for a long time, I was never able to recognize this self confidence for what it was. I really was not, and mostly still am not, used to feeling confident in myself or my own abilities. Like, at all. So when I actually feel good about myself, like I actually know what I’m doing... Well, for a really long time, I didn’t even process it as such. I just felt like I knew the right answers, and that was it.

On Quixol, this often manifested in a specific way... Being proud of my own knowledge & skills with minecraft, I would insert myself into any discussion about Minecraft, the server, or just anywhere I could, and offer up my knowledge, opinions & help. This hardly sounds like a problem, but... The problem was just in my unwavering presence. I was everywhere on Quixol, you couldn’t escape me. I dominated the space with my presence. Not that I interrupted people (usually...?), I just would try to put myself anywhere a conversation was happening, assuming it was, like, appropriate for me to do so on some level.

Whenever I chimed in with my thoughts, there eventually developed this sort of air of almost... superiority about it. This feeling that my word was “final”, or that I had some layer of expertise on everything, and that if I said what you said was right, that was a pretty good indicator you were on the right track. I didn’t pretend I was infallible, and I don’t think anyone ever saw me that way. But the general perception was that if Vivian says it, it holds weight. Perhaps this is somewhat unavoidable for a staff member, but... it was this way even amongst the staff.

I never really realized that I was creating this environment within the community, because it happened rather slowly. But as things moved along, other staff began to pick up on it (perhaps subconsciously), even the other admins. Quickly, my own insistence on doing things a Specific Way became “the Right Way” to do things on Quixol... whether I intended it or not.

Now, this is something I didn’t know until quite recently, but I actually have OCD (undiagnosed, but it’s glaringly obvious to me at this point). My OCD comes out in minecraft, and specifically on Quixol, quite a lot. I have very ritualistic ways of doing things, from building a project in-game to managing specific parts of the server: we have a very detailed format for writing update logs, and I have very specific rituals for updating plugins on the server, taking backups, etc. Even the way I play survival minecraft has rituals of a sort, like specific patterns in which I place torches. I’m not too educated on OCD, so excuse me if I’m using some of this terminology wrong, or if I’m spreading some sort of misinformation about it. This is just my experience.

Anyhow, given the extremely regimented way I manage things on the server, coupled with my constant presence, you can understand how this might lead other admins, who have their own mental illness issues, to become very averse to doing a lot of admin-related duties. After months and months, years even, of this sort of stuff... and... yeah. That leads to where we are now.

With my selfish behavior in the past, I’ve unintentionally created this staff environment where people are reluctant to make their own decisions, show their own creativity, etc. And that must feel incredibly frustrating if you actually want to do something to make a difference on Quixol...

I’m not even accounting for all the times I’ve butted heads with the other staff before, either. While much less frequent, I’ve definitely had arguments with folks in the past. And with the great amount of influence I hold over the server, it takes a lot of courage to stand up to what I say.

I’ve always resented that I hold this position of power over everyone else, and tried many times to address it. However, I don’t think I ever quite had a full picture of why things were this way. Now, I think I understand it better. Sadly, it feels too little, too late to make any significant changes without uprooting pretty much everything we have set in place already. Maybe I’m wrong, maybe I’m being too pessimistic here... But, this is how I feel at the present moment.

I’m sincerely sorry to any current or former staff members who wanted to do something great for Quixol but felt you could never convince me to go through with your idea... or who felt pushed away from doing something you otherwise would’ve liked to, just because the attitude I gave, the environment my presence created, made you feel like you weren’t good enough or qualified enough to do it. You are good enough. I’m so sorry that my actions convinced you otherwise...

I will say, this sort of mindset of mine, that I have to be the Most Right about anything relating to minecraft, or any hyperfixation/special interest of mine, has caused problems elsewhere, too. I talked about this in another post I made. I’ve only really come to realize all this stuff within the past few months, but I’ve been a really terrible friend to a lot of people. I never even realized until recently just how often I struggle with empathy, and how that’s colored so many of my friendships. Needless to say, it’s affected things on Quixol before, sometimes without me even realizing it.

My influence over the community also means if anybody’s relations with me in particular ever become marred, it must inevitably result in them leaving the community because there’s simply no escaping me. There’s not really anything I can do about this, though, aside from doing whatever I can to become a kinder, more

I’m far from a perfect person, and my imperfections seeped into so much of what made Quixol what it is. However, it’d be silly to suggest that I’m the singular reason Quixol is flawed; if anything, that would be another form of arrogance, assuming that I single-handedly shaped the way Quixol took form. No, it was always a team effort, and every single staff and community member held great influence of their own.

The Future

This part is probably why many of you clicked on this post... You want to know what’s going to happen to Quixol. You likely noticed I’ve been referring to Quixol in the past tense a lot in this post. Honestly, I’m not sure why I did that, it just felt the most natural to type it that way. But, I will be honest- the future of Quixol right now isn’t looking very bright.

This is a personal post, so I don’t want to deliver any sort of formal announcement about plans for Quixol here, especially since I haven’t run this post by the other staff before posting it.

For the past 2 and a half months, I’ve been taking a very long break from Quixol, much longer than any previous break of mine... I’ve neglected to even log in for weeks at a time. I still keep an eye on the discord server, and check the mc <-> discord bridge channel to see which players have been logging in. But I have little to no motivation to play, even just casually.

While I’d love to give you some fun cool news about how this hiatus is ending soon and I have a million and one projects planned, that simply isn’t the case. I’ve gotten to this point where I’m rethinking everything about myself, who I am, and what I’m doing with my life. Surely, I can’t dedicate all my time and energy to running a minecraft server for the rest of my life, even though I do care deeply about this community. But at the same time, it’s not really my call to shut down Quixol, and I’d hate to pull the plug just because of my own lack of motivation.

So, for the time being at least, you can probably consider Quixol to be on a sort of “indefinite hiatus”. I am generally the one to update plugins, do major server updates, etc., and I likely won’t be doing any of that any time soon. I fully entrust the other staff to handle that stuff if they really want to, and I’ve expressed that to them already. But as things stand, nobody else seems to want to pick up the torch right now. Shit is rough for pretty much everyone, and we’re all equally burnt out. We’ve all grown up quite a lot since Quixol began, too. So... Don’t expect anything anytime soon.

If there are any updates, they’ll come in our Discord server first.

As for me, personally... I just need time away from all of this. It’s clearer than ever to me that I have a lot of personal problems I need to work on, and I think that the cozy safe environment provided by Quixol didn’t challenge me enough to really address those issues. I need time to focus on myself & my own growth. At the same time, I also feel like I need more experience being a part of a team, instead of just running the show. I’m not getting the kind of enrichment I need from running Quixol, so I’m trying to turn my attention elsewhere.

I’m doing this not because I want to abandon you guys, or because I feel like I want/need to move on from this community. It’s just... Something I need to do, for myself. And I’ll still be around, I’m still gonna be posting to my tumblr & twitter and stuff, and you can still reach me on discord. I’m just focusing my time elsewhere for once.

What does that mean for the future of Quixol? I don’t really know yet. But, for now, it’s not going anywhere. It’s just... also not changing anytime soon. Not even a little bit. I’m sorry to give you this disappointing news, but I hope you all understand.

I miss the good times on Quixol, too. I really do. Maybe we can share them again sometime? Who knows...

For now, that’s all.

It breaks my heart that we don’t have anything glitzy and glamorous to do to celebrate Quixol’s 5th anniversary... But it would be asking far too much of the staff to set anything like that up right now. Maybe we can have some sort of celebration later...? I dunno.

I hope you’re all staying safe & healthy out there. Thank you so much for reading this. I love all of you.

Happy birthday, Quixol.


Rapidweaver Free For Mac


Sep 17, 2015: RapidWeaver is a great tool for those of you who need to post a full-featured, professional-looking website. Mac-style web design for everyone. RapidWeaver 8 is a next-generation web design application that helps you easily create professional-looking websites in minutes. No knowledge of complex code is required; RapidWeaver takes care of all that for you, producing valid XHTML- and CSS-based websites.

Back to basic web development for OS X


A few years back Apple made the dubious decision to remove iWeb from its iLife suite of creativity programs. iWeb itself wasn’t that great when it came to producing websites, but it was incredibly easy to use, and its removal outraged many amateurs who wanted to create their own website or blog.

RapidWeaver 5 from Realmac Software seeks to bridge the gap left by the removal of iWeb by providing an easy-to-use platform for Mac users. That said, when you actually dig into it, it’s a much more powerful tool than iWeb ever was.

Creating a site

RapidWeaver makes it incredibly easy to start planning your site. All you need to have in your head is a basic idea of the layout of your site, and RapidWeaver will help you make it a reality, quickly.

When you first launch the software you’re presented with a splash window that gives you the option to pick up on an existing project, start a new one, browse the library of add-ons (more on that later) and view online instructional videos.

On selecting the “Create a new Project…” option, you’ll be presented with the main design and preview window, which allows you to plan the layout of your site, as well as produce the content that will fill each page. From here you can choose one of the 47 pre-installed themes, as well as edit every aspect of your site.

It would take too long to detail exactly how this process works, but here’s a video that takes you through the creation and publishing of a basic website:

The entire process is pretty intuitive. The only annoying thing is that any references to online sources (YouTube videos, for example) won’t display properly in the preview pane, which can only show local content. This could prove annoying if your website relies heavily on content pulled from other sites.

Although I did run into a couple of bugs, the publishing side of RapidWeaver works very well (in theory, anyway). If you make a change to a site that you’ve already published, you won’t need to re-upload the whole thing. RapidWeaver will check which pages and files have been amended and then only send those files to your website via FTP (File Transfer Protocol). This could be of great benefit as your site grows, especially if you only have a modest Internet connection.
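RapidWeaver’s exact change-detection method isn’t described here, so the following is only a minimal sketch of the general idea, assuming content hashing: record a hash of every file at publish time, then on the next publish compare hashes to find which files need re-uploading. The function names (`file_digest`, `build_manifest`, `changed_files`) are hypothetical, and the FTP upload step itself is left out.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(site_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to site_dir) to its content hash.

    Hypothetical helper: this is an assumed approach, not RapidWeaver's API.
    """
    return {
        p.relative_to(site_dir).as_posix(): file_digest(p)
        for p in sorted(site_dir.rglob("*"))
        if p.is_file()
    }


def changed_files(old: dict[str, str], new: dict[str, str]) -> list[str]:
    """Return files that are new or whose contents differ since the last publish."""
    return sorted(path for path, digest in new.items() if old.get(path) != digest)
```

After each publish you would save the manifest; next time, only the paths returned by `changed_files` need uploading. Note that this sketch doesn’t detect deleted files, which a real publisher would also need to remove from the server.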

Tutoring

Realmac Software have gone to great lengths to provide written and video tutorials for RapidWeaver on their website. To access the “Beginners” guides, all you have to do is sign up for a free account. The videos range from basic things, such as how to add content and change themes, to more advanced topics like text and image formatting and publishing.

However, in order to access the “Intermediate” and “Advanced” videos, you’ll need to sign up for a support subscription at $9.95 a month. The videos themselves cover some great topics such as theme editing, advanced gallery and blog functions and reducing the file size of your project. However, I have a real problem with this type of support model. In my view, if you buy a product you should receive a decent set of instructions on how to use all of its features, for free. To charge people an additional subscription fee in order to be able to use their software to the fullest seems a little bit like you’re squeezing them dry.

For the purposes of this review, I’ve not bothered to pay for a subscription, so I can’t comment on the videos themselves, but if it helps there are quite a few instructional videos on YouTube from other RapidWeaver users.

Plugins and Add-ons

Although RapidWeaver comes with 47 built-in themes, you’ll probably find yourself struggling to make your site original within a short while. Not only that, but the limitations on layout and choice of content mean that you might not be able to include everything you want in your site.

Thankfully, Realmac Software have allowed 3rd party developers to produce add-ons for RapidWeaver that can extend its functionality and allow you to add more content to your website. Unfortunately, the 3rd party add-ons all come at a price, some as high as $20-30. That might not seem like a lot, but it does significantly increase the overall cost of the product once you purchase a few.

That being said, the online catalogue does list some add-ons that would potentially be very useful. There are several that would add excellent catalogue and e-commerce support to your site, plus several that promise to add additional functionality when it comes to designing your site.

In truth, I don’t like this business model at all. I would much rather spend more on a package that includes all of these features right out of the box than buy a relatively cheap product and then have to buy add-ons to make it do the things that I want.

Conclusions

RapidWeaver 5 is kind of a double-edged sword. On the one hand I do like the ease of use and the simplicity of the interface. It requires absolutely no previous experience of web design or HTML in order to produce decent-looking websites in minutes. The themes and templates themselves also seem to be a lot more solid than iWeb’s, which had a tendency to break if you didn’t do exactly as Apple predicted you might.

On the downside, I don’t care for the limited features and themes that come with the product out of the box, or the limited video support, which could find you signing up for a support contract just to find out exactly what you need to do. You could also say that the add-on system is a rip-off, but in truth there will be those who only want the basic features, so why make them pay for things they’re not going to use? There is some logic to that at least, even if I don’t personally agree with it.

On the whole, RapidWeaver 5 is an excellent tool for beginners and hobbyists, and more than fills the hole left by Apple when they removed iWeb from their arsenal. More adventurous or experienced users may find that they outgrow RapidWeaver’s basic functions quite quickly, in which case there are the add-on and theme libraries on Realmac’s website. However, professionals (budding or experienced) should avoid RapidWeaver: it simply doesn’t allow for the level of customisation and originality that your clients will expect.

RapidWeaver Free Download for Mac

Download the latest version of RapidWeaver 8.2 for Mac as a free offline installer for macOS. RapidWeaver 8.2 for Mac is a powerful web design application for designing projects professionally and generating eye-catching website designs in just a few minutes.

RapidWeaver 8.2 for Mac Review

RapidWeaver 8.2 is a very powerful application for creating web designs using numerous available tools. It provides a complete set of tools with a variety of design customizations and a bundle of settings to create website designs within a few minutes. It supports multi-page sites, lets you add different types of data to the pages, and allows you to add contact forms, blogs, photo albums, sitemaps and a lot more.

RapidWeaver is a very simple application to use, with a variety of customizations and settings for both novices and professionals. You can add different types of content as well as customize the theme for your websites. Make use of over 40 different website themes to get an instant start. You can also switch to any other theme instantly without losing the layout and the data. This powerful application does not require any coding knowledge to operate, and it includes built-in project management features. In a nutshell, it is a reliable application for web design and development with a variety of powerful tools and numerous customizations.

Features of RapidWeaver 8.2 for Mac

Powerful web design application

Supports designing different types of websites with minimal effort

Simple and straightforward application with self-explaining tools

Make use of over 40 website themes to design websites

Add photos, contact forms, sitemaps and media files to the pages

Add content to the projects without writing a single line of code

Instantly change various settings and customizations

Easily upload and publish the websites when the project is complete

Built-in project management features, a tracking module, and many other features

Technical Details of RapidWeaver 8.2 for Mac

File Name: RapidWeaver_8_8.3.20796b.dmg

File Size: 81 MB

Developer: Realmac

System Requirements for RapidWeaver 8.2 for Mac

macOS 10.12 or later

1 GB free HDD

1 GB RAM

Intel Core 2 Duo or higher Processor


RapidWeaver 8.2 for Mac Free Download


Download the latest version of RapidWeaver 8.2 as a free offline installer for macOS. You can also download Adobe Dreamweaver CC 2018 for Mac.


[#2021]

Really, 2020, what has gotten into you? It took only a little more than a couple of months before everything went haywire, causing a worldwide problem that’s so difficult to manage and control. I’m specifically referring to the unexpected COVID-19 pandemic, which is still ongoing as of this post.

Anyway, let’s take a recap of all the things that happened in 2020:

I’ve published a total of 42 posts on TWATKcox-WP this year, slightly more than last year.

I officially have a new job for the first time in six years, though I’m currently on a pay-per-work basis. Right now, I still don’t have my TIN (taxpayer’s identification number) and I don’t know if my previous company had already filed my TIN application at the BIR office in Makati. Well, I don’t want to risk getting multiple TINs, which is punishable under Philippine law.

TWATKcox’s 7th anniversary post features seven things about me. The last one on the list is a revelation: a very serious accident that left a scar on my scalp.

Taal Volcano in Batangas (south of Metro Manila) erupted, causing damage to houses and crops. Surrounding areas were also affected by the ash fall, prompting those living in nearby areas to hoard face masks.

COVID-19 (Coronavirus Disease 2019), originating from Wuhan, China (ugh, China!), infected millions of people in almost 200 countries. Several planned events here and abroad (mainly concerts and conventions) were either postponed or canceled for safety reasons.

In late January, I managed to capture some interesting scenes during the Chinese New Year celebration in Binondo, Manila. As a safety precaution due to the possible threat of COVID-19 in the country, I decided to wear my face mask in the area.

I visited the newly opened Ayala Malls Manila Bay right after Valentine’s Day (which turned out to be the final mall-hopping trip before the lockdown), as well as some of the malls (particularly SM Mall Of Asia) in the Bay City area (Pasay). I managed to see my previous employer’s new building along Macapagal Boulevard up close, right next to W Mall (Waltermart).

I bought a lot of manga books earlier this year, including Attack On Titan (volume 9), Nura: Rise Of The Yokai Clan (volume 2), and Buso Renkin (volume 8). Aside from that, I also bought two large plastic storage boxes and four shoe boxes to store my manga collection and my collection of old magazines.

Since March 15, Metro Manila (and the rest of the Philippines) has been under community quarantine to prevent the spread of the coronavirus. For more than two months, I was stuck at home, writing fewer posts for my blog and downloading tons of stuff. I find it unimaginable not to leave the house at a time like this, but I managed to do so a few times.

For a while, we had to rely on food deliveries and online shopping sites, mainly for groceries, fresh produce, meat products, and essential items.

In June, an online portal (powered by Weebly) and a third Tumblr site (focusing on my own literary works and fan fiction) were launched. TWATKcox’s Weebly site remains operational, though it hasn’t been updated since last year. Also, all of my Tumblr sites are now using the Strongest theme by Glenthemes.

The Otaku Diaries (previously The Anime Diaries on TWATKcox-Tumblr) debuted on TWATKcox-WP with a different introduction. I’ve already published a few posts in that series.

In September, I got a third smartphone, a Mi A1 (passed down to me by my brother, who had just gotten a new phone), which currently runs a customized OS based on Pixel OS. It took me almost a week to set it up, and then I messed it up with a bugged update. As a result, I had to set up the Mi A1 again. Right now, it doesn’t get a lot of use since I still have my second smartphone (the Vivo Y53), but I’m actually using it for various jobs away from the PC, such as writing drafts, editing photos, and surfing the internet. Once the pandemic is over, I’ll be using it as my primary phone with the Vivo Y53 as a secondary/music phone, effectively retiring my first smartphone, the LG Optimus L3 II, which I’ve been using since 2014.

I celebrated my 30th birthday with a simple dinner. Nothing special, really. But I think it would’ve ended up differently had the pandemic not happened.

A couple of strong typhoons hit the country: Typhoon Goni (Rolly) and Typhoon Vamco (Ulysses). Both typhoons caused serious damage to various parts of Luzon, including the Bicol region, Cagayan Valley, Rizal province, and Marikina.

I managed to buy a lot of stuff, some of it online via Lazada. Among the things I bought are a couple of microSD cards (64 and 128 GB), a 10000 mAh power bank, and a USB hub with an SD card reader. My PC got an upgrade too, including a brand-new 4 TB hard drive to replace the failing 2 TB hard drive. Of course, I initially had plans to buy some new clothing and accessories, but I decided to hold off until the pandemic ends.

I celebrated my tenth year with WordPress. It’s been ten years since I joined the blog-hosting site (WordPress.com) to host my blogs The Kin Keihan Times (second iteration, December 2010 to January 2013, now called The Kin Keihan Times Archives) and The World According To KCOX (since January 2013). A little over two years from now, in January 2023, the latter will celebrate its tenth year in cyberspace, another major milestone for my current blog.

As far as the Christmas and New Year’s celebrations are concerned, I couldn’t enjoy them despite my extreme anticipation for the holidays. Private gatherings are limited to about 10-20 people. Public gatherings are still prohibited, so there will be no New Year’s parties around the country, as well as in other parts of the world.

Before the year ends, I created a new banner for TWATKcox-WP, using one of the edited pics from last year. Nothing special, really.

Right now, I don’t want to get my hopes up (I’m extremely disappointed about my canceled plans for this year, that’s what), so I won’t be listing any upcoming events here. The same goes for my upcoming plans. The only things you should look forward to in 2021 are the main blog’s 8th anniversary and a handful of special posts. Whether or not I’ll get to finish the planned literary special and the autobiography will depend on the COVID-19 situation in the country. If things don’t improve by then, I might be forced to drop these projects altogether.

Frankly speaking, 2020 doesn’t deserve a place in world history. I wish this cursed year could be erased from this planet’s existence.

The world is greatly suffering from COVID-19 and it will take a while for this pandemic to end. So, it looks like I won’t have a great new year ahead as well.

And so, I won’t end this with a greeting. Just stay safe, stay healthy, and together, we’ll beat the sh*t out of this f***ing coronavirus that ruined our lives. Yeah, 2020, you’re the worst year in history, ever.

#2021#events that matter... whatever#forecast#aims and plans#year ender post#COVID-19 pandemic#blogging#writing


304: Dissertation Initial Proposal

Lucie Smith

BA (Hons) Fine Art

Working Title

The Artefact and the Intangible: Capturing Memory, Absence and the Ephemeral.

Be concise and explicit. This working title allows you to show what you are thinking of looking at, but leaves you room to develop your final question.

Keywords

Trauma / Grief

Trace

Ephemera

Presence / Absence

Symbolism / Signifier

Artefact

Abjection – ‘the state of being cast off’

Phenomenology

Embodiment

Tangible / Intangible

Material / Immaterial

Include here any words that will help you identify the research you want to undertake.

Introduction/Questions

What is the significance of objects and embodiment in aiding human interaction and understanding?

Phenomenology and Embodiment through Objects and Physical Forms in Practice - Art as Artefact - Theodor Adorno (On Subject and Object), relevance of familiar objects and altering the viewer’s perception through curation of such objects (Berger – Ways of Seeing).

Marcel Duchamp, Readymades and the Evolution of Objects in Conceptual Art – Influences this has had on contemporary/conceptual art. How art transitioned to allow for concepts to be produced through the inclusion of artefacts and objects. Links with artists including Bourgeois, Emin, Hatoum, Salcedo, Wilkes.

Trace as a Signifier for Memory & Time’s Past – Whiteread, casting and recreation of familiar objects, Merleau-Ponty (Visible and the Invisible), Susan Best (The Trace and the Body), Uros Cvoro (The Present Body etc), Salcedo, Wilkes, Hesse? – fragmenting objects and materials to allude to a narrative. Exploration of the lived experience within art practice. How significant personal items can be utilised within artwork to embody past feelings/emotions as a tool for processing trauma and demonstrating growth. E.g. Louise Bourgeois ‘The Cells: Red Room’, Tracey Emin ‘the first time I was pregnant I started to crochet the baby a shawl’ (1999-2000); My Bed (1998); Cathy Wilkes ‘Untitled’

The Artefact as an Abjection of the Self – Objects as extensions of the self, Julia Kristeva (Abject), Oliver Guy-Watkins (Points of Trauma), Salcedo (alluding to collective and individual identity of trauma victims), Hatoum, Bourgeois, Emin, Wilkes.

Use this section to introduce the questions and any issues that are central to your research. Identify the field of study in broad terms and indicate how you expect your research to explore this area of interest.

Research Background & Questions

Phenomenology – how can certain artefacts (or their absence) be curated within a work to demonstrate significance? How does this allow viewers to resonate with the work?

What is the significance of objects/artefacts? Why are we so attached to them? How does this aid our perception of artwork that is founded on specific objects? Jean Piaget’s object attachment theory.

Is an understanding of the familiar aspects of the work necessary for these connotations to be clearly presented/perceived by the viewer?

How can the intangible be embodied through the inclusion of and recreation of objects?

The abject – the self and the other ‘the state of being cast off’. How do artefact-based works allow for emotions to be encapsulated into a new form, in turn aiding the processing of these emotions?

“In order to liberate myself from the past, I have to reconstruct it, ponder about it, make a statue out of it, and get rid of it through making sculpture. I am able to forget it afterwards. I have paid my debt to the past.” – Louise Bourgeois

How do we piece together the past through objects/physical remnants? What does it mean when artefacts are absent?

What are the key texts/works in the area you are looking into? Does your proposal extend your understanding of particular questions or topics? You need to set out your research questions as clearly as possible, explain problems that you want to explore and say why it is important to do so. In other words, think about how to situate your project in the context of your discipline or your creative interests.

Research Methods

I plan to use a range of books that will provide critical information that will aid my comparison of the issues and positives surrounding memory exploration through contemporary art. These are the books that I have sourced and feel will be a good starting point:

Theory Books:

Points Of Trauma: A Consideration of the Influence Personal and Collective Trauma Has on Contemporary Art by Oliver Guy-Watkins

Searching for Memory: The Brain, the Mind, and the Past by Daniel L. Schacter

Abject Visions: Powers of Horror in Art and Visual Culture by Rina Arya and Nicholas Chare

The Poetics of Space by Gaston Bachelard

Ways of Seeing by John Berger

Contemporary Art and Memory by Joan Gibbons

Memory by Ian Farr (Documents of Contemporary Art)

The Object by Antony Hudek (Documents of Contemporary Art)

Making Memory Matter: Strategies of Remembrance in Contemporary Art by Lisa Saltzman

The Present Body, the Absent Body, and the Formless by Uros Cvoro

Memory: Histories, Theories, Debates by Bill Schwarz, Susannah Radstone

The Trace and the Body by Susan Best

A Theory of Semiotics by Umberto Eco?

The Visible and the Invisible by Maurice Merleau-Ponty

Artist Books:

Displacements by A Huyssen and J Bradley

The Materiality of Mourning by Doris Salcedo

Mona Hatoum by Catherine de Zegher

Louise Bourgeois by Storr, Herkenhoff and Schwartzman

Rachel Whiteread: Shedding Life, edited by Fiona Bradley

Rachel Whiteread: Transient Spaces by Molly Nesbit, Beatriz Colomina and A. M. Homes

The Art of Rachel Whiteread by Chris Townsend

The Art of Tracey Emin by Mandy Merck and Chris Townsend

Conceptual Art by Tony Godfrey

Eva Hesse: Longing, Belonging and Displacement by Vanessa Corby

Specific relevant works:

Rachel Whiteread – House (1993); Untitled (Pink Torso) (1995)

Louise Bourgeois – The Cells; Le Défi (1991)

Tracey Emin – ‘the first time I was pregnant I started to crochet the baby a shawl’ (1999-2000); My Bed (1998); My Major Retrospective (1993)

Doris Salcedo – Atrabiliarios (1992-1993); Untitled (1989-2008)

Eva Hesse – Repetition Nineteen III

Cathy Wilkes – Untitled (2019) – Venice Biennale

Mona Hatoum – First Step (1996); Short Space (1992); Silence (1994); Self Erasing Drawing (1979)

Texts/videos summarising the impact and nature of the following artists’ work (specific works to be decided upon):

Art and Memory podcast:

https://www.tate.org.uk/art/artists/pierre-bonnard-781/art-memory

Jean Piaget’s Object Attachment/Cognitive Development Theory:

https://www.youtube.com/watch?v=H2_by0rp5q0

https://www.youtube.com/watch?v=IhcgYgx7aAA

Psychology of Stuff and Things Article:

https://thepsychologist.bps.org.uk/volume-26/edition-8/psychology-stuff-and-things

Memory and Art Theory Websites:

Art of Remembrance Article:

https://www.psychologytoday.com/gb/articles/200605/the-art-remembrance

Art and Science of Memory Article:

https://www.psychologytoday.com/gb/blog/the-voices-within/201303/the-art-and-science-memory

Effects of Childhood Trauma Article:

https://istss.org/public-resources/what-is-childhood-trauma/effects-of-childhood-trauma.aspx

Art as a means to heal trauma: https://www.goodtherapy.org/blog/expressive-arts-as-means-to-heal-trauma-032414

Abject:

Abject Art: https://www.tate.org.uk/art/art-terms/a/abject-art

On the Abject: https://cla.purdue.edu/academic/english/theory/psychoanalysis/kristevaabject.html

Artist Reference Websites:

Rachel Whiteread:

https://www.theartstory.org/artist/whiteread-rachel/artworks

Doris Salcedo:

Atrabilious: https://www3.mcachicago.org/2015/salcedo/works/atrabiliarios/

Memory as the Essence of the Work Interview: https://www.youtube.com/watch?v=TOpEO8kq0uE&list=PLTi6OHh9i_g7xVdPaEiaxRaGxHPajsN-G&index=34

The Materiality of Mourning Lecture:

https://www.youtube.com/watch?v=yFe5oRC4Dms&list=PLTi6OHh9i_g7xVdPaEiaxRaGxHPajsN-G&index=27

Louise Bourgeois:

https://www.tate.org.uk/art/artists/louise-bourgeois-2351/art-louise-bourgeois

https://bourgeois.guggenheim-bilbao.eus/en/red-room-parents

Prisoner of my Memories Interview: https://www.youtube.com/watch?v=Ifn0qwTbgcA

Spiderwoman Documentary: https://www.youtube.com/watch?v=wkaJ6S0ViXg

Tracey Emin:

My Bed (Turner Contemporary) Interview: https://www.youtube.com/watch?v=Bg7wQWN23fo

My Bed (Tate Shots) Interview: https://www.youtube.com/watch?v=uv04ewpiqSc&list=PLTi6OHh9i_g7xVdPaEiaxRaGxHPajsN-G&index=53&t=0s

20 Years Retrospective: https://www.youtube.com/watch?v=u8jDyyXHPT8

Cathy Wilkes:

https://www.theguardian.com/artanddesign/2019/may/07/mournful-and-melancholy-cathy-wilkes-britain-at-the-venice-biennale

Dr Zoe Whitley on Cathy Wilkes: British Pavilion Artist: https://www.youtube.com/watch?v=c50vWmVbYG8

Introducing Cathy Wilkes for Venice Biennale: https://www.youtube.com/watch?v=lDPwKijlGs0

Online summary videos will be helpful for understanding theory, e.g. philosophical ideas (phenomenology; the abject); Piaget’s Object Attachment/Cognitive Development Theory? I have already done some research into memory theory for my 201 project and GCOP200 essay, which could prove helpful in terms of the scientific theory of memory.

The essay will be a reflective essay.

Plan

10-12 paragraphs (roughly)

Key Theorists/Writers:

1. Maurice Merleau-Ponty (The Visible and the Invisible)

2. Gaston Bachelard (The Poetics of Space)

3. Susan Best (Trace and the Body)

4. Joan Gibbons (Contemporary Art and Memory)

5. Oliver Guy-Watkins (Points of Trauma: A Consideration of the Influence Personal and Collective Trauma Has on Contemporary Art)

6. Theodor Adorno (On Subject and Object)

7. Julia Kristeva (The Abject)

8. John Berger (Ways of Seeing)

Introduction

Discuss the main theories and artists that will be explored. Outline the ideas surrounding how memory, the ephemeral and absence are conveyed through the use of trace and artefacts within art practice.

What is the significance of objects and embodiment in aiding human interaction and understanding?

Main body:

Phenomenology and Embodiment through Objects and Physical Forms in Practice - Art as Artefact - Theodor Adorno (On Subject and Object), relevance of familiar objects and altering the viewer’s perception through curation of such objects (Berger – Ways of Seeing).

Marcel Duchamp, Readymades and the Evolution of Objects in Conceptual Art – Influences this has had on contemporary/conceptual art. How art transitioned to allow for concepts to be produced through the inclusion of artefacts and objects. Links with artists including Bourgeois, Emin, Hatoum, Salcedo, Wilkes.

Trace as a Signifier for Memory & Time’s Past – Whiteread, casting and recreation of familiar objects, Merleau-Ponty (Visible and the Invisible), Susan Best (The Trace and the Body), Uros Cvoro (The Present Body etc), Salcedo, Wilkes, Hesse? – fragmenting objects and materials to allude to a narrative.

The Artefact as an Abjection of the Self – Objects as extensions of the self, Julia Kristeva (Abject), Oliver Guy-Watkins (Points of Trauma), Salcedo (alluding to collective and individual identity of trauma victims), Hatoum, Bourgeois, Emin, Wilkes.

Conclusion

Summarise points made, consider what has been discovered…

Bibliography/References

Adorno, T. W., (1969). On Subject and Object. Frankfurt am Main: Suhrkamp.

American Craft Council, (2012). Warren Seelig: Materiality and Meaning. YouTube. [Online]. Available at https://www.youtube.com/watch?v=dq8LK83Shbk. [Accessed on 20/05/2020]

Archer, M., et al., (1997). Mona Hatoum. Phaidon London. Available at http://www.openbibart.fr/item/display/10068/952353.

Arya, R., and Chare, N., (2016). Abject visions: Powers of horror in art and visual culture. Manchester University Press.

Bachelard, G., (2014). The poetics of space. Penguin Classics.

Best, S., (1999). The trace and the Body. The International Exhibition: Trace. A

Bradley, F., and Morgan, S., (1996). Rachel Whiteread: shedding life. Tate Gallery Publishing.

British Council Arts, (2019a). Dr Zoé Whitley on Cathy Wilkes: British Pavilion artist at the Venice Biennale 2019. YouTube. [Online]. Available at https://www.youtube.com/watch?v=c50vWmVbYG8. [Accessed on 08/05/2020]

British Council Arts, (2019b). Introducing Cathy Wilkes | British Pavilion artist 2019 | Venice Biennale. YouTube. [Online]. Available at https://www.youtube.com/watch?v=lDPwKijlGs0. [Accessed on 08/05/2020]

Corby, V., (2010). Eva Hesse: Longing, Belonging and Displacement. London: I B Tauris. p. 250. Available at https://ray.yorksj.ac.uk/1607/. [Accessed on 14/10/2020]

Cube, W., (no date). White Cube - Exhibitions - My Major Retrospective 1963-1993. [Online]. Available at https://whitecube.com/exhibitions/exhibition/tracey_emin_duke_street_1993/. [Accessed on 06/04/2020]

Cvoro, U., (2002). ‘The Present Body, the Absent Body, and the Formless’. Art Journal. p. 54. Available at http://dx.doi.org/10.2307/778151.

Duchamp, M., (1961). The Art of Assemblage: A Symposium. The Museum of Modern Art.

Eco, U., (1979). A Theory of Semiotics. Indiana University Press. Available at https://play.google.com/store/books/details?id=BoXO4ItsuaMC.

EdFestMagTV, (2008). Tracey Emin 20 Year Retrospective Mini Lecture. YouTube. [Online]. Available at https://www.youtube.com/watch?v=qbEKXlgsJmg. [Accessed on 06/04/2020]

Eickhoff, F.-W., (2006). On Nachträglichkeit: the modernity of an old concept. The International journal of psycho-analysis. 87 (Pt 6), pp. 1453–1469. [Online]. Available at http://dx.doi.org/10.1516/ekah-8uh6-85c4-gm22.

Farr, I., (2012). Memory: Documents of Contemporary Art. London: Whitechapel.

Fernyhough, C., (2013). The Art and Science of Memory. Psychology Today [Online]. Available at http://www.psychologytoday.com/blog/the-voices-within/201303/the-art-and-science-memory. [Accessed on 02/03/2020]

Gibbons, J., (2007). Contemporary Art and Memory: Images of Recollection and Remembrance. Bloomsbury Publishing. Available at https://play.google.com/store/books/details?id=NsqJDwAAQBAJ.

Godfrey, T., (1998). Conceptual Art. Phaidon Press. Available at https://play.google.com/store/books/details?id=GMXpAAAAMAAJ.

Harvard Art Museums, (2016). Lecture – Doris Salcedo and The Materiality of Mourning. YouTube. [Online]. Available at https://www.youtube.com/watch?v=yFe5oRC4Dms&list=PLTi6OHh9i_g7xVdPaEiaxRaGxHPajsN-G&index=27. [Accessed on 06/04/2020]

HENI Talks, (2018). Louise Bourgeois: ‘A prisoner of my memories’. YouTube. [Online]. Available at https://www.youtube.com/watch?v=Ifn0qwTbgcA. [Accessed on 06/04/2020]

Higgins, C., (07/05/2019). Mournful and melancholy: Britain at the Venice Biennale. The Guardian [Online]. Available at http://www.theguardian.com/artanddesign/2019/may/07/mournful-and-melancholy-cathy-wilkes-britain-at-the-venice-biennale. [Accessed on 10/01/2020]

Hudek, A., (2014). ‘Documents of Contemporary Art: The Object’. London: Whitechapel Gallery, The MIT Press.

Lingwood, J., and Bird, J., (1995). Rachel Whiteread: House. Phaidon. Available at http://www.openbibart.fr/item/display/10068/900898.

Merleau-Ponty, M., (1968). The Visible and the Invisible: Followed by Working Notes. Northwestern University Press. Available at https://play.google.com/store/books/details?id=aPcET3X2zlEC.

MoMA | Marcel Duchamp and the Readymade (no date). [Online]. Available at https://www.moma.org/learn/moma_learning/themes/dada/marcel-duchamp-and-the-readymade/. [Accessed on 02/06/2020]

Museum of Contemporary Art Chicago, (no date a). Doris Salcedo | Atrabiliarios. [Online]. Available at https://www3.mcachicago.org/2015/salcedo/works/atrabiliarios/. [Accessed on 15/02/2020]

Museum of Contemporary Art Chicago, (no date b). Doris Salcedo | Unland. [Online]. Available at https://www3.mcachicago.org/2015/salcedo/works/unland/. [Accessed on 09/03/2020]

Object | Definition of Object by Oxford Dictionary on Lexico.com also meaning of Object (no date). Lexico Dictionaries. [Online]. Available at https://www.lexico.com/definition/object. [Accessed on 03/06/2020]

Psychology Today (2016). The Art of Remembrance. [Online]. Available at http://www.psychologytoday.com/articles/200605/the-art-remembrance. [Accessed on 02/03/2020]

Rachel Whiteread Artworks & Famous Paintings (no date). [Online]. Available at https://www.theartstory.org/artist/whiteread-rachel/artworks/. [Accessed on 02/03/2020]

Radstone, S., and Schwarz, B., (2010). Memory: Histories, theories, debates. Fordham University Press. Available at http://library.oapen.org/handle/20.500.12657/31578.

Rick Walker, (2014). Secret Knowledge Tracey Emin - Louise Bourgeois. YouTube. [Online]. Available at https://www.youtube.com/watch?v=tHyAsMdBbH4&list=PLTi6OHh9i_g7xVdPaEiaxRaGxHPajsN-G&index=2. [Accessed on 08/01/2020]

RP films, (2019). Louise Bourgeois - Spiderwoman. YouTube. [Online]. Available at https://www.youtube.com/watch?v=wkaJ6S0ViXg. [Accessed on 06/04/2020]

Rush, M. E., (2019). Eva Hesse and the Physical Touch - Madison Elizabeth Rush - Medium. [Online]. Available at https://medium.com/@madisonelizabethrush/eva-hesse-and-the-physical-touch-9b45526867d2. [Accessed on 11/10/2020]

Saltzman, L., (2006). Making Memory Matter: Strategies of Remembrance in Contemporary Art. University of Chicago Press. Available at https://play.google.com/store/books/details?id=3fzIglPkA3UC.

San Francisco Museum of Modern Art, (2011). Doris Salcedo: Memory as the essence of work. YouTube. [Online]. Available at https://www.youtube.com/watch?v=TOpEO8kq0uE&list=PLTi6OHh9i_g7xVdPaEiaxRaGxHPajsN-G&index=34. [Accessed on 06/04/2020]

Storr, R., et al., (2003). Louise Bourgeois. Phaidon London. Available at http://www.openbibart.fr/item/display/10068/1004352.

Tate, (2015). Tracey Emin on My Bed | TateShots. YouTube. [Online]. Available at https://www.youtube.com/watch?v=uv04ewpiqSc&list=PLTi6OHh9i_g7xVdPaEiaxRaGxHPajsN-G&index=53&t=0s. [Accessed on 08/05/2020]

Tate, (no date a). Art Now: Doris Salcedo: Unland – Exhibition at Tate Britain | Tate. [Online]. Available at https://www.tate.org.uk/whats-on/tate-britain/exhibition/art-now-doris-salcedo. [Accessed on 02/03/2020]

Tate, (no date b). The Art of Louise Bourgeois – Look Closer | Tate. [Online]. Available at https://www.tate.org.uk/art/artists/louise-bourgeois-2351/art-louise-bourgeois. [Accessed on 31/01/2020]

Tate, (no date c). The Art of Memory – Podcast | Tate. [Online]. Available at https://www.tate.org.uk/art/artists/pierre-bonnard-781/art-memory. [Accessed on 02/03/2020]

TED-Ed, (2016). Why are we so attached to our things? - Christian Jarrett. YouTube. [Online]. Available at https://www.youtube.com/watch?v=H2_by0rp5q0. [Accessed on 06/04/2020]

Townsend, C., (2004). The Art of Rachel Whiteread. olin.tind.io. [Online]. Available at https://olin.tind.io/record/125945/.

Turner Contemporary, (2017). Tracey Emin - ‘My Bed’ at Turner Contemporary. YouTube. [Online]. Available at https://www.youtube.com/watch?v=Bg7wQWN23fo&list=PLTi6OHh9i_g7xVdPaEiaxRaGxHPajsN-G&index=54. [Accessed on 08/05/2020]

VernissageTV, (2009). Tracey Emin. 20 Years. YouTube. [Online]. Available at https://www.youtube.com/watch?v=u8jDyyXHPT8. [Accessed on 06/04/2020]

Whiteread, R., et al., (2003). Rachel Whiteread: Transient Spaces. Solomon R. Guggenheim Museum. Available at https://play.google.com/store/books/details?id=oftBPgAACAAJ.


TL;DR: Read logs, delete useless shit, keep the DB clean.

* Warning: A long read! *

Oh well, I feel like a n00b while typing this, but that’s how things roll. I’m not sure if I have any regular readers left, as to have regular readers one must be a daily writer, which I am clearly not. (Yes, I want to change that.)

Still, if you go back and stalk this blog, you’ll notice that post frequency for 2018 has been abysmal at best. But yes, there’s a reason, and an interesting story behind it: a story which might teach you a thing or two about hosting WordPress on your own web hosting. It has definitely taught me a lot.

So, it began back in January when I started noticing poor loading performance on the site. Since it was a self-hosted shared instance, I chalked it up to poor bandwidth or bad optimisation by Team GoDaddy (not much positive to say about them). Soon I started getting 503 errors randomly on the home page, so it was time to investigate…
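The TL;DR boils down to "read logs", so here is a minimal Python sketch of what a first pass over them could look like. It assumes (this post doesn't say so) that your host lets you download a raw access log in the common Apache/NGINX "combined" format; the helper names `summarize_errors` and `print_report` and the file name `access.log` are hypothetical. It tallies 503 responses per hour and per URL path, which helps tell a steady resource squeeze from a one-off spike.

```python
import re
from collections import Counter
from datetime import datetime

# Assumed Apache/NGINX "combined" log format, e.g.
# 1.2.3.4 - - [10/Jan/2018:13:55:36 +0000] "GET / HTTP/1.1" 503 299 "-" "Mozilla/5.0"
LOG_LINE = re.compile(
    r'^(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+'
)


def summarize_errors(lines, status="503"):
    """Count responses with the given status code per hour and per request path.

    Lines that do not match the assumed log format are skipped.
    """
    by_hour = Counter()
    by_path = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None or match.group("status") != status:
            continue
        # Drop the timezone offset, parse the timestamp, then truncate it to the hour.
        stamp = datetime.strptime(match.group("time").split()[0], "%d/%b/%Y:%H:%M:%S")
        by_hour[stamp.replace(minute=0, second=0)] += 1
        # The request field looks like "GET /some/path HTTP/1.1"; keep the path.
        parts = match.group("request").split()
        by_path[parts[1] if len(parts) >= 2 else "-"] += 1
    return by_hour, by_path


def print_report(path, status="503", top=10):
    """Print per-hour counts in time order, then the most affected paths."""
    with open(path, encoding="utf-8", errors="replace") as log:
        by_hour, by_path = summarize_errors(log, status)
    print(f"{status} responses per hour:")
    for hour, count in sorted(by_hour.items()):
        print(f"  {hour:%Y-%m-%d %H:00}  {count}")
    print("Most affected paths:")
    for request_path, count in by_path.most_common(top):
        print(f"  {count:5d}  {request_path}")
```

For example, `print_report("access.log")` would print the hourly 503 counts followed by the ten most affected paths. A regex plus `collections.Counter` keeps the sketch dependency-free; a dedicated log analyser is the better tool once the logs get big.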

Preliminary findings

I logged into my GoDaddy account and went to my hosting status page. Immediately I was greeted with an orange banner stating that I was reaching the resource limit and had to upgrade my hosting plan soon to keep things running smoothly. I scoffed mildly at their marketing tactics and popped the hood to look underneath.

I opened cPanel and took a look at the system panel on the left. To my amazement, almost all of the parameters were either terminal pink or warning orange. I looked up the labels to learn the meaning of these indicators.

Pink is often my favorite color

Well, obviously, I was a bit surprised, as I have experience running WordPress since 2006-ish, and I had run pretty complexly themed blogs on my potato local PC (2006 PC, yeah!) using XAMPP on Windows.

If you are a backend guy reading that line now (in 2018), you will probably cringe harder than you do at Nicki Minaj songs. Everything about that line is WRONG (2006, PC, XAMPP).

Anyway, I had fond memories of the WordPress stack being super efficient and perfectly decent at handling a mere 100+ blog posts with ~15 plugins, especially since I wasn't even posting regularly and traffic was declining.

Something was wrong here.

I called GoDaddy tech sales support and patiently explained my problem, only to get a sales pitch: "Sar, I can see the upgrade banner in your account, so can you. Please give cash, we give you moar resourcez. Oaky?". Hmm, probably not that brash, but you get the gist. Mildly irritated, I asked him to escalate my call to his supervisor or someone from *real* tech support.

Well, they kinda did. A woman (I am NOT sexist) picked up the call, and I swear to Odin she could not understand anything about wp-config or the 503 error, and asked me whether I had cleared my browser cache. I politely asked her to transfer the call to her supervisor.

…

This time a rather mature-sounding guy picked up the call and asked about my problem. Folks, I was already 3 levels deep and 20 minutes into the call. I still explained my problem to him. He opened his admin console, got into my box and did the basics: disabled all plugins and my custom theme.

The site seemed to breathe for a while and we were able to access it. He told me plainly that this was a classic case of resource overutilization and that I had to upgrade my hosting from the basic starter plan to at least their Deluxe combo-something plan. I made the rookie mistake of asking the price, and he immediately said he would just transfer my call to his sales representative. *facepalm* I hung up before they could plug me in one more level deep.

Deep down I knew I had to investigate this myself before throwing moolah on the table.

Lazy boi excuses; Part – I

This was February 2018. I kept my weekend free and planned to drill down into my GoDaddy shared hosting server to find the resource problem. I was sure it was some bug leaking memory or some infinite loop eating my CPU burst cycles. I planned to replicate the setup on a free-tier AWS t2.micro instance and made an account there. AWS requires a credit card on file before it lets you fire up EC2 instances. My AMEX card had a problem: the verification amount was debited but still showed as pending for 48 hours. Fair enough, I thought; I'll start in 2 days…

But all of a sudden (a software engineer's way of shedding happy tears!), I got a huge project to build from scratch at my last job @ Shuttl. (Yeah, I have switched jobs yet again.) The project name rhymed with XMS. I was pretty excited to build a Python Django project from scratch with my 2 talented senior teammates. I was happy that I would learn a ton and get to deploy an entire project live AND…… both of my 2 talented senior teammates left before the first milestone of the project was even complete. Yep, just left. And I was stuck with lots of legacy code to work on and little to no idea about the framework. I had good experience with Flask, but Django did some things differently.

I slogged at work, and the good part was that I was able to understand most of the code, got fairly good at Django, implemented a ton of APIs and built a basic dashboard UI. Anyway, that sucked up the next 2 months of my life and I completely forgot about this blog and the 503 issue. Meanwhile it kept getting worse: the site opened only occasionally and threw 503 errors most of the time.

Lazy boi excuses; Part – II

Nah, let's move on. I've shared too much personal stuff anyway. 😛

Let's start fresh? Scrap all shit.

It was around May 2018, and I got some interns and a junior to help me with new projects, as our product team was pumping out PRDs at an amazing rate. I was working nonstop on them, but a window of personal time still opened up. Meanwhile, we migrated our code repositories from GitLab to GitHub and I learned about gh-pages.

GitHub Pages: a neat, nifty project by GitHub that lets you host stuff from your repo as simple websites or blogs. Free of charge!

This sounded like a sweet chime to my ears, as I was tired of the non-existent support from GoDaddy and their incompetent tech staff (free tier, at least). I began formulating a plan to nuke bitsnapper altogether, start from scratch and make a simple Martin Fowler-esque blog.

Clean, simple and nerdy.

So, I created a simple blog on jatinkrmalik.github.io and even published some posts (maybe 1). However, the lack of formatting options and the inability to customize stuff was a bummer.

I lost interest in GitHub Pages faster than America did in Trump.

AND soon I resigned from Shuttl and left in July for [redacted] reasons.

A new beginning, the AWS way?!

In late July, I joined a very early-stage startup called Synaptic.io after what felt like a swayamvar of offer letters (okay, no bragging). I was impressed by the product and by the size of the team, which you could count on one hand. It felt rewarding to get into the core team, build something great and have a chance to witness growth from the inside.

Anyway, Synaptic being a data-heavy company, we use lots of third-party services and tools for automated deployment to staging, prod, etc. Naturally, AWS is the backbone of our deployment infra. I got new AWS accounts for both staging and prod, so I started reading up on AWS and found out about the Bitnami WordPress AMI, which comes preloaded with the WordPress stack goodies and can be deployed with one click. It was time to reactivate my AWS account and fire this up.

A couple of weeks ago.

At the beginning of August 2018, I was finally able to verify my AWS account by punching in a new credit card. I fired up a Bitnami WordPress instance and did the standard WordPress installation setup. Now all I had to do was back up stuff from the GoDaddy servers and restore it here.

Sounds easy, right?

EXCEPT.

IT.

WAS.

NOT.

I logged into my good old cPanel, got the FTP creds, loaded FileZilla and started the transfer. The ETA was north of double-digit hours, as the site's public_html folder was somewhere around 1.5 GB, which is understandable since I have lots of media files and videos. Fair enough. But this was going to take a lot of time, because the problem with transferring a folder is that each tiny file (<100 KB) takes mere milliseconds to download, yet every single file carries its own fixed overhead (FTP sets up a fresh data connection per file), and with thousands of files that overhead adds up fast. The obvious fix was to pack the public_html folder into a zip file and then transfer that.
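To put rough numbers on why thousands of tiny files hurt, here is a back-of-the-envelope model in Python: total time is the raw data time plus a fixed cost paid once per file. Only the ~1.5 GB total comes from the post; the file count, link speed and 0.5 s per-file overhead are hypothetical values picked for illustration, not measurements from the GoDaddy box.

```python
# Back-of-the-envelope transfer model. Apart from the ~1.5 GB total,
# all inputs are HYPOTHETICAL, chosen only to show the shape of the problem.

MB = 1024 ** 2
GB = 1024 ** 3


def transfer_seconds(n_files: int, total_bytes: float,
                     bytes_per_sec: float, per_file_overhead_sec: float) -> float:
    """Raw data time plus a fixed setup cost paid once for every file."""
    return total_bytes / bytes_per_sec + n_files * per_file_overhead_sec


many_small = transfer_seconds(100_000, 1.5 * GB, 2 * MB, 0.5)
one_archive = transfer_seconds(1, 1.5 * GB, 2 * MB, 0.5)

print(f"100,000 files: {many_small / 3600:.1f} h")   # overhead dominates
print(f"one archive:   {one_archive / 3600:.1f} h")  # almost pure data time
```

With these made-up inputs, 50,000 of the roughly 50,768 seconds are pure per-file overhead. That is the whole argument for packing everything into one archive before moving it.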

I SSHed into the box and ran zip -rv public_html.zip public_html/ to zip the directory, but I forgot one thing: even while zipping a directory, I would hit the same problem. The zip program iterates over every file (including the tiny ones) and spends quite a bit of time trying to compress each one. I left it for 20 minutes only to find it just 10% of the way through my files. Improvement? Sure, but I am not a very patient man.

Why is this so slow? Oh, wait.

I looked into the log (thanks to -v… verbose) and found that I had a lot of files in the xcloner plugin directory inside my public_html folder, left over from some failed attempts to take a site backup with that plugin. I found more such folders from plugins that have no active role in powering this blog.

Checking the size of files in the plugins directory.
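On a normal Linux box, du -sh public_html/wp-content/plugins/* answers the "which plugin folder is fat?" question. Here is a hedged Python equivalent that sums file sizes per plugin directory and lists the biggest first. It assumes Python 3 is available on the host (not a given on every shared plan) and that you run it from the directory containing public_html.

```python
from __future__ import annotations

import os
from pathlib import Path


def dir_size(path: Path) -> int:
    """Total bytes of regular files under `path`; symlinks are skipped, not followed."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = os.path.join(root, name)
            if not os.path.islink(file_path):
                total += os.path.getsize(file_path)
    return total


def plugin_sizes(plugins_dir: str) -> list[tuple[str, int]]:
    """(plugin_name, bytes) for every subdirectory of plugins_dir, largest first."""
    base = Path(plugins_dir)
    sizes = [(p.name, dir_size(p)) for p in base.iterdir() if p.is_dir()]
    return sorted(sizes, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    target = "public_html/wp-content/plugins"  # path from the post; adjust to your layout
    if os.path.isdir(target):
        for name, size in plugin_sizes(target):
            print(f"{size / 1024 ** 2:10.1f} MB  {name}")
```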

So, I deleted these folders in public_html/wp-content/plugins and tried running the zip command again. It was still slow, and I gave up after a couple of minutes.

Clean up. Zip 'em!

I googled how to wrap files in a zip without compressing them much and learned about the zip utility's compression levels. They go from 1 to 9, with 1 being the least compression and 9 the most, and the default is 6. So, I tried again, this time with zip -1rv public_html.zip public_html/, but soon realized that iterating over a gazillion files costs the CPU more time than the compression logic itself.

Just wrapping.
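zip's -1 to -9 switches pick a point on the DEFLATE speed/size trade-off, and Python's zlib module exposes the same knob as its level argument. Below is a hedged sketch that compares levels on one in-memory payload. The payload is synthetic, repetitive text standing in for site files (real JPEGs and videos barely compress at any level). Note what it does not measure: the per-file directory walk. No compression level can skip that walk, and it is exactly what kept zip -1 slow here.

```python
import time
import zlib

# Synthetic, highly repetitive payload standing in for text-heavy site files (hypothetical).
payload = b"<div class='post'>Hello from bitsnapper</div>\n" * 200_000

results = {}
for level in (1, 6, 9):  # like zip -1 (fastest), the default -6, and -9 (smallest)
    start = time.perf_counter()
    packed = zlib.compress(payload, level)
    elapsed = time.perf_counter() - start
    assert zlib.decompress(packed) == payload  # DEFLATE is lossless at every level
    results[level] = len(packed)
    print(f"level {level}: {len(packed):>9,} bytes in {elapsed * 1000:6.1f} ms")
```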

I read more and found that creating a tarball without compression is faster than using the zip utility, so it was time to try that and maybe let it finish in its own sweet time. So, I fired up the command tar -caf public.tar public_html and left it running.

Not sure if it ever finished…
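Python's tarfile module can do the same "just wrap, don't compress" job, which helps on a box where you can run Python but not much else. Below is a minimal sketch. Mode "w" writes a plain, uncompressed tar, roughly what tar -cf does. With GNU tar, -a picks the compressor from the archive suffix, so a plain .tar name means no compression. The public_html and public.tar paths in the __main__ part are assumptions.

```python
import tarfile
from pathlib import Path


def make_plain_tarball(src_dir: str, out_path: str) -> int:
    """Wrap src_dir into an uncompressed tar archive and return its member count."""
    src = Path(src_dir)
    # mode "w" = write without compression (as opposed to "w:gz", "w:bz2", "w:xz").
    with tarfile.open(out_path, mode="w") as tar:
        tar.add(str(src), arcname=src.name)  # recurses into subdirectories by default
    with tarfile.open(out_path, mode="r") as tar:
        return len(tar.getmembers())  # directories count as members too


if __name__ == "__main__":
    if Path("public_html").is_dir():  # assumed to be run next to public_html
        print(make_plain_tarball("public_html", "public.tar"), "entries archived")
```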

Then I logged into phpMyAdmin (a web app to manage a MySQL instance) to take a backup of my bitsnapper WordPress DB. I simply clicked Export, and the downloaded file was 48 MB. That was odd, as the UI showed a DB size of 1.2 GB. I knew an SQL dump can come out smaller than the size the UI reports (index data isn't exported, only index definitions), but by this much? WTF. I opened the SQL file in VS Code and, sure enough, the file was incomplete. It had some HTML gibberish at the end, which on inspection turned out to be the HTML of phpMyAdmin itself. Weird?

I tried exporting the DB from the UI once again, and this time the backup.sql file was 256 MB. That felt about right, but my instinct did a right-click and opened it in my editor once more. Sure enough, the file was still incomplete and ended in the same gibberish. Safe to say, the backup from phpMyAdmin was corrupted.
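You don't need an editor to spot this kind of broken export. Below is a small, heuristic Python check that looks for HTML markup in the last chunk of a .sql file. The marker list and the 64 KB window are assumptions. A clean dump has no reason to contain a DOCTYPE or html tag near its end, but a post body that happens to contain one could trigger a false positive.

```python
import os
import re

# Heuristic markers of an HTML page glued onto the end of an SQL export.
HTML_MARKERS = re.compile(rb"<!doctype html|<html|</html>|<body", re.IGNORECASE)


def dump_looks_corrupted(path: str, tail_bytes: int = 64 * 1024) -> bool:
    """True if the last `tail_bytes` of the file contain HTML markup."""
    with open(path, "rb") as f:
        f.seek(0, os.SEEK_END)           # jump to the end to learn the file size
        size = f.tell()
        f.seek(max(0, size - tail_bytes))
        tail = f.read()
    return HTML_MARKERS.search(tail) is not None
```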

top

I SSHed into my hosting box using the creds in my GoDaddy account and tried everything, including checking the output of system commands like top, ps -ef and free. But the box is properly sandboxed by GoDaddy to prevent unauthorised access. I even tried a privilege escalation to gain more control over my hosting account and maybe restart mysqld, but all in vain.

pssudo?

I knew about taking DB backups directly from the shell using mysqldump -h -u -p > db_backup.sql, so it was time to try that. I ran the command and tailed the backup SQL file with tail -f db_backup.sql to watch its content as it populated. It started exporting the DB nicely, and just as I began feeling badass and went to grab a cup of coffee, the terminal presented me with an error message:

man mysqldump. First try.

I googled the problem, and it had something to do with MySQL's max_allowed_packet variable. There were only two ways to change it: either edit the /etc/my.cnf file (which I was sure I didn't have sudo access to) or run the SET GLOBAL max_allowed_packet=1073741824; query in the MySQL console.
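The magic number in that query is just 1 GB expressed in bytes: 1024 × 1024 × 1024 = 1,073,741,824. That is also the largest value MySQL accepts for max_allowed_packet. In my.cnf the same setting is usually written with a suffix, e.g. max_allowed_packet=1G. Below is a small Python helper for the conversion. It assumes MySQL's convention that K, M and G (either case) are 1024-based multipliers. Newer servers accept more suffixes, which this sketch deliberately does not handle.

```python
# Sketch: convert MySQL-style size strings to bytes.
# ASSUMPTION: K/M/G (case-insensitive) multiply by 1024, 1024**2, 1024**3.
MULTIPLIERS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}
MAX_ALLOWED_PACKET_CEILING = 1024 ** 3  # upper bound for max_allowed_packet (1 GB)


def parse_mysql_size(value: str) -> int:
    """'1G' -> 1073741824, '64M' -> 67108864, '4096' -> 4096."""
    value = value.strip()
    suffix = value[-1].upper()
    if suffix in MULTIPLIERS:
        return int(value[:-1]) * MULTIPLIERS[suffix]
    return int(value)


packet = parse_mysql_size("1G")
print(packet)                                # 1073741824, the number used in SET GLOBAL
print(packet <= MAX_ALLOWED_PACKET_CEILING)  # True: 1G is exactly the ceiling
```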

Admin? No? Sorry.

Yeah, neither of them worked. Obviously. The first needs sudo access on the box, and the second needs admin privileges in MySQL.

The roadblock was getting stupidly frustrating, and I had to get the backup. I googled more, and someone suggested passing max_allowed_packet to the mysqldump command itself as --max-allowed-packet=1073741824. Tried that too; it didn't work.

With --max_allowed_packet

I was tired and needed to sleep, so I terminated my EC2 instance and slept.

TODAY.

Today I was feeling motivated and planned to look at the problem from another angle.

Instead of using the WordPress AMI, I decided to build the whole setup from scratch. I launched an EC2 instance with the Amazon Linux AMI. The goal was to find out whether it was really GoDaddy messing with me or some fault in my database causing the whole shebang.

I used this post as guidance to set everything up from the ground up.

I logged in to my GoDaddy account again, only to be greeted by the orange banner urging me to upgrade. I felt weak and was about to click Upgrade and throw some dough at it to take the easy way out. But no, that goes against the hacker mentality I work with.

So, I opened cPanel and phpMyAdmin and tried taking a backup again. Once more it downloaded a 250-something MB file with gibberish at the end. I manually removed the last part of the file, uploaded it to my EC2 instance via scp and imported it into my remote MySQL instance.
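Chopping off the tail by hand works, but it is easy to leave half an INSERT behind. Below is a hedged Python sketch of the same cleanup. It cuts at the first HTML marker found near the end of the file, then backs up to the last complete statement (the last ';' followed by a newline). It assumes Unix line endings and that newlines inside string values are escaped, as mysqldump does. It also reads the whole file into memory, which is fine for ~250 MB on most machines but not for multi-GB dumps. Whatever was cut off is simply gone, so the trimmed file is valid SQL but still an incomplete backup.

```python
import re

HTML_START = re.compile(rb"<!doctype html|<html", re.IGNORECASE)


def trim_html_trailer(src: str, dst: str, search_window: int = 1024 * 1024) -> int:
    """Write src to dst minus any trailing HTML and half-written statement.

    Only the last `search_window` bytes are searched for HTML, so HTML inside
    earlier post content is left alone. Returns the number of bytes kept.
    """
    with open(src, "rb") as f:
        data = f.read()
    match = HTML_START.search(data, max(0, len(data) - search_window))
    if match:
        data = data[: match.start()]
    end = data.rfind(b";\n")            # last complete statement terminator
    kept = data[: end + 2] if end != -1 else b""
    with open(dst, "wb") as f:
        f.write(kept)
    return len(kept)
```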

After uploading the public_html files, importing the SQL backup and configuring the wp-config.php file with the DB host and creds, I restarted both httpd (Apache server) and MySQL (DB server) and opened http://ec2-instance-url:80. To my partial euphoria, it did load the bitsnapper header and footer, but no posts were visible.

Hmm… something was missing.

I looked at the tables in phpMyAdmin and in my MySQL server on the EC2 instance and, duh, my wp_xxx_posts and wp_xxx_postmeta tables were missing. Yeah! So the problem was that my DB had grown so large that GoDaddy's limited shared-hosting bandwidth wasn't letting me back up the whole thing. Clearly, I had to fix this.
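Instead of eyeballing phpMyAdmin, you can ask the dump itself which tables made it in. Below is a hedged sketch that scans a .sql file for CREATE TABLE lines and reports which expected tables are absent. The wp_xxx_ prefix mirrors the redacted prefix in the post. The expected list is an assumption: a few standard WordPress core tables, not the blog's full schema.

```python
from __future__ import annotations

import re

# Matches lines such as: CREATE TABLE `wp_xxx_posts` (  or  CREATE TABLE IF NOT EXISTS `...`
CREATE_TABLE = re.compile(rb"CREATE TABLE (?:IF NOT EXISTS )?`([^`]+)`")


def tables_in_dump(path: str) -> set[str]:
    """Names of all tables that have a CREATE TABLE statement in the dump."""
    found = set()
    with open(path, "rb") as f:
        for line in f:  # streams line by line instead of loading the whole dump
            match = CREATE_TABLE.match(line)
            if match:
                found.add(match.group(1).decode("utf-8"))
    return found


def missing_tables(path: str, expected: list[str]) -> list[str]:
    """Expected table names with no CREATE TABLE in the dump, sorted."""
    return sorted(set(expected) - tables_in_dump(path))


# Hypothetical expectation, using the post's redacted "wp_xxx_" prefix.
EXPECTED = ["wp_xxx_options", "wp_xxx_postmeta", "wp_xxx_posts", "wp_xxx_users"]
```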

I wrote a custom Python script to back up the bitsnapper DB table by table, to avoid the max_allowed_packet limit I had hit last week. But the same error, mysqldump: Error 2013: Lost connection to MySQL server during query when dumping table wp_xxx_postmeta, kept popping up.
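The script itself isn't in the post, so what follows is only a hypothetical reconstruction of the idea. It does one mysqldump run per table, each writing its own file, with the packet limit passed explicitly. The database name, host and user are placeholders. Credentials are assumed to come from a MySQL option file such as ~/.my.cnf rather than the command line. Per the post, this approach still hit Error 2013 on wp_xxx_postmeta, so treat it as a way to isolate the failing table, not as a fix.

```python
from __future__ import annotations

import shlex
import subprocess

PACKET_BYTES = 1073741824  # same 1 GB value the post tried


def dump_command(db: str, table: str, host: str, user: str) -> list[str]:
    """mysqldump argv for a single table, written to <db>.<table>.sql."""
    return [
        "mysqldump",
        f"--host={host}",
        f"--user={user}",                      # password assumed to come from ~/.my.cnf
        f"--max-allowed-packet={PACKET_BYTES}",
        "--single-transaction",                # consistent snapshot for InnoDB tables
        "--quick",                             # fetch rows one at a time, not the whole table
        f"--result-file={db}.{table}.sql",
        db,
        table,
    ]


def dump_tables(db: str, tables: list[str], host: str, user: str,
                run: bool = False) -> list[list[str]]:
    """Build one command per table; print them (dry run) or execute them in order."""
    commands = [dump_command(db, t, host, user) for t in tables]
    for cmd in commands:
        if run:
            subprocess.run(cmd, check=True)    # raises CalledProcessError on failure
        else:
            print(shlex.join(cmd))
    return commands


if __name__ == "__main__":
    # Hypothetical names; the dry run only prints the commands.
    dump_tables("bitsnapper", ["wp_xxx_posts", "wp_xxx_postmeta"], "localhost", "backup")
```

Running one table per process means a failure names the exact table that broke, and every table that did finish keeps its own .sql file.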