#eureka service registry


Video

youtube

Service Discovery Design Pattern For Microservices Short Tutorial for De...

Full Video Link https://youtu.be/bRZm5u6e9o8

Hello friends, new #video on #servicediscovery #microservice #designpattern #tutorial for #programmers with #examples is published on #codeonedigest #youtube channel.


#youtube #service discovery #service registry #service discovery pattern #service registry pattern #microservice pattern #microservice design pattern #design pattern #software pattern #software design pattern #java pattern #java design pattern #microservices #microservice architecture #microservice #eureka service discovery #eureka service registry #eureka registry #netflix eureka #eureka netflix #netflix service discovery #netflix service registry


Text

Essential Components of a Production Microservice Application

DevOps Automation Tools and modern practices have revolutionized how applications are designed, developed, and deployed. Microservice architecture is a preferred approach for enterprises, IT sectors, and manufacturing industries aiming to create scalable, maintainable, and resilient applications. This blog will explore the essential components of a production microservice application, ensuring it meets enterprise-grade standards.

1. API Gateway

An API Gateway acts as a single entry point for client requests. It handles routing, composition, and protocol translation, ensuring seamless communication between clients and microservices. Key features include:

Authentication and Authorization: Protect sensitive data by implementing OAuth2, OpenID Connect, or other security protocols.

Rate Limiting: Prevent overloading by throttling excessive requests.

Caching: Reduce response time by storing frequently accessed data.

Monitoring: Provide insights into traffic patterns and potential issues.

API Gateways like Kong, AWS API Gateway, or NGINX are widely used.
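
As a rough illustration of the rate-limiting feature above, here is a minimal token-bucket sketch. It is not how Kong, AWS API Gateway, or NGINX implement throttling; the class name `TokenBucket` and its parameters are made up for this example, and time is passed in explicitly so the behavior is easy to follow.

```python
class TokenBucket:
    """Hypothetical token-bucket limiter: bursts of up to `capacity`
    requests, refilled at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, now: float = 0.0):
        self.capacity = capacity
        self.rate = rate
        self.tokens = capacity   # start with a full bucket
        self.last = now          # time of the last refill

    def allow(self, now: float) -> bool:
        # Refill in proportion to the elapsed time, capped at capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        # Spend one token per request; reject when the bucket is empty.
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A gateway would typically keep one bucket per client or API key and answer with HTTP 429 when `allow` returns `False`.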

Mobile App Development Agency professionals often integrate API Gateways when developing scalable mobile solutions.

2. Service Registry and Discovery

Microservices need to discover each other dynamically, as their instances may scale up or down or move across servers. A service registry, like Consul, Eureka, or etcd, maintains a directory of all services and their locations. Benefits include:

Dynamic Service Discovery: Automatically update the service location.

Load Balancing: Distribute requests efficiently.

Resilience: Ensure high availability by managing service health checks.
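
The three benefits above can be sketched with a toy in-memory registry. This is only an illustration under simplifying assumptions, not the Consul, Eureka, or etcd API: `ServiceRegistry`, its method names, and the TTL-based health check are all invented here, and timestamps are passed in by the caller.

```python
class ServiceRegistry:
    """Hypothetical registry: instances register, send heartbeats,
    and are dropped from lookups once their heartbeat is older than `ttl`."""

    def __init__(self, ttl: float = 30.0):
        self.ttl = ttl
        self._instances = {}  # service name -> {address: last heartbeat time}
        self._counters = {}   # service name -> round-robin counter

    def register(self, name: str, address: str, now: float) -> None:
        self._instances.setdefault(name, {})[address] = now

    def heartbeat(self, name: str, address: str, now: float) -> None:
        # A heartbeat simply refreshes the instance's timestamp.
        self.register(name, address, now)

    def deregister(self, name: str, address: str) -> None:
        self._instances.get(name, {}).pop(address, None)

    def lookup(self, name: str, now: float) -> list:
        # Dynamic discovery: only instances with a recent heartbeat are returned.
        known = self._instances.get(name, {})
        return sorted(a for a, seen in known.items() if now - seen <= self.ttl)

    def choose(self, name: str, now: float) -> str:
        # Client-side load balancing: round-robin over healthy instances.
        healthy = self.lookup(name, now)
        if not healthy:
            raise LookupError(f"no healthy instance of {name!r}")
        n = self._counters.get(name, 0)
        self._counters[name] = n + 1
        return healthy[n % len(healthy)]
```

Real registries add replication, persistence, and richer health checks, but the register / heartbeat / lookup cycle is the core idea.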

3. Configuration Management

Centralized configuration management is vital for managing environment-specific settings, such as database credentials or API keys. Tools like Spring Cloud Config, Consul, or AWS Systems Manager Parameter Store provide features like:

Version Control: Track configuration changes.

Secure Storage: Encrypt sensitive data.

Dynamic Refresh: Update configurations without redeploying services.

4. Service Mesh

A service mesh abstracts the complexity of inter-service communication, providing advanced traffic management and security features. Popular service mesh solutions like Istio, Linkerd, or Kuma offer:

Traffic Management: Control traffic flow with features like retries, timeouts, and load balancing.

Observability: Monitor microservice interactions using distributed tracing and metrics.

Security: Encrypt communication using mTLS (Mutual TLS).

5. Containerization and Orchestration

Microservices are typically deployed in containers, which provide consistency and portability across environments. Container orchestration platforms like Kubernetes or Docker Swarm are essential for managing containerized applications. Key benefits include:

Scalability: Automatically scale services based on demand.

Self-Healing: Restart failed containers to maintain availability.

Resource Optimization: Efficiently utilize computing resources.

6. Monitoring and Observability

Ensuring the health of a production microservice application requires robust monitoring and observability. Enterprises use tools like Prometheus, Grafana, or Datadog to:

Track Metrics: Monitor CPU, memory, and other performance metrics.

Set Alerts: Notify teams of anomalies or failures.

Analyze Logs: Centralize logs for troubleshooting using ELK Stack (Elasticsearch, Logstash, Kibana) or Fluentd.

Distributed Tracing: Trace request flows across services using Jaeger or Zipkin.

Hire Android App Developers to ensure seamless integration of monitoring tools for mobile-specific services.

7. Security and Compliance

Securing a production microservice application is paramount. Enterprises should implement a multi-layered security approach, including:

Authentication and Authorization: Use protocols like OAuth2 and JWT for secure access.

Data Encryption: Encrypt data in transit (using TLS) and at rest.

Compliance Standards: Adhere to industry standards such as GDPR, HIPAA, or PCI-DSS.

Runtime Security: Employ tools like Falco or Aqua Security to detect runtime threats.

8. Continuous Integration and Continuous Deployment (CI/CD)

A robust CI/CD pipeline ensures rapid and reliable deployment of microservices. Using tools like Jenkins, GitLab CI/CD, or CircleCI enables:

Automated Testing: Run unit, integration, and end-to-end tests to catch bugs early.

Blue-Green Deployments: Minimize downtime by deploying new versions alongside old ones.

Canary Releases: Test new features on a small subset of users before full rollout.

Rollback Mechanisms: Quickly revert to a previous version in case of issues.
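
To make the canary idea concrete, here is a small sketch of one common way to pick the "small subset of users": hash the user ID into a stable bucket from 0 to 99 and send the lowest buckets to the new version. The function names and the percentage parameter are assumptions for illustration; Jenkins, GitLab CI/CD, and CircleCI do not provide this function, and in practice the split is often done by the load balancer or service mesh.

```python
import hashlib


def canary_bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def route(user_id: str, canary_percent: int) -> str:
    """Return which deployment ('canary' or 'stable') should serve this user."""
    return "canary" if canary_bucket(user_id) < canary_percent else "stable"
```

Because the bucket depends only on the user ID, a user stays on the same version between requests, and raising `canary_percent` only ever moves users from stable to canary, never back.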

9. Database Management

Microservices often follow a database-per-service model to ensure loose coupling. Choosing the right database solution is critical. Considerations include:

Relational Databases: Use PostgreSQL or MySQL for structured data.

NoSQL Databases: Opt for MongoDB or Cassandra for unstructured data.

Event Sourcing: Store state changes as an append-only log of events, typically on a durable log such as Kafka; RabbitMQ can relay the events to other services.

10. Resilience and Fault Tolerance

A production microservice application must handle failures gracefully to ensure seamless user experiences. Techniques include:

Circuit Breakers: Prevent cascading failures using tools like Resilience4j or Hystrix (note that Hystrix is now in maintenance mode).

Retries and Timeouts: Ensure graceful recovery from temporary issues.

Bulkheads: Isolate failures to prevent them from impacting the entire system.
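
The circuit-breaker technique from the list above can be sketched in a few lines. This is a simplified, single-threaded illustration, not the Hystrix or Resilience4j API: the class names, the `failure_threshold` and `reset_timeout` parameters, and the explicit `now` argument are all assumptions made for the example.

```python
class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying it."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, func, now: float):
        if self.opened_at is not None:
            if now - self.opened_at < self.reset_timeout:
                # Open: fail fast instead of hitting the troubled service.
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: half-open, let one trial call through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = now  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Callers usually pair this with a fallback (cached data, a default response) when `CircuitOpenError` is raised, so the user sees degraded functionality rather than an error.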

11. Event-Driven Architecture

Event-driven architecture improves responsiveness and scalability. Key components include:

Message Brokers: Use RabbitMQ, Kafka, or AWS SQS for asynchronous communication.

Event Streaming: Employ tools like Kafka Streams for real-time data processing.

Event Sourcing: Maintain a complete record of changes for auditing and debugging.
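
As a minimal sketch of event sourcing, the example below keeps an append-only log and derives the current state by replaying it. The bank-account domain, the `Event` fields, and the `EventStore` class are hypothetical and chosen only for illustration; a production system would persist the log in a durable store rather than a Python list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    account: str
    kind: str     # "deposited" or "withdrew"
    amount: int


class EventStore:
    """Append-only log: events are never updated or deleted."""

    def __init__(self):
        self._log = []

    def append(self, event: Event) -> None:
        self._log.append(event)

    def events_for(self, account: str) -> list:
        return [e for e in self._log if e.account == account]


def balance(events) -> int:
    """Rebuild the current balance by replaying the account's events in order."""
    total = 0
    for e in events:
        total += e.amount if e.kind == "deposited" else -e.amount
    return total
```

Because the log is the source of truth, the full history stays available for auditing, and new read models can be built later by replaying the same events.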

12. Testing and Quality Assurance

Testing in microservices is complex due to the distributed nature of the architecture. A comprehensive testing strategy should include:

Unit Tests: Verify individual service functionality.

Integration Tests: Validate inter-service communication.

Contract Testing: Ensure compatibility between service APIs.

Chaos Engineering: Test system resilience by simulating failures using tools like Gremlin or Chaos Monkey.

13. Cost Management

Optimizing costs in a microservice environment is crucial for enterprises. Considerations include:

Autoscaling: Scale services based on demand to avoid overprovisioning.

Resource Monitoring: Use tools like AWS Cost Explorer or Kubernetes Cost Management.

Right-Sizing: Adjust resources to match service needs.

Conclusion

Building a production-ready microservice application involves integrating numerous components, each playing a critical role in ensuring scalability, reliability, and maintainability. By adopting best practices and leveraging the right tools, enterprises, IT sectors, and manufacturing industries can achieve operational excellence and deliver high-quality services to their customers.

Understanding and implementing these essential components, such as DevOps Automation Tools and robust testing practices, will enable organizations to fully harness the potential of microservice architecture. Whether you are part of a Mobile App Development Agency or looking to Hire Android App Developers, staying ahead in today’s competitive digital landscape is essential.


Text

When do we set the values of two properties to false in application.properties file?

eureka.client.register-with-eureka=false

eureka.client.fetch-registry=false

eureka.client.register-with-eureka=false: We set this to false when the service is itself a Eureka server. The default value of this property is true. When a service is not a Eureka server (a normal microservice), we don’t need to set this property, as it is true by default. Basically, this property is used to register a…


Text

Microservices Architecture with Spring Cloud

What are microservices?

Microservices is an architectural style, a variant of service-oriented architecture (SOA). In this architecture, an application is broken down into independent services. The motivation behind this is separation of concerns and modularity.

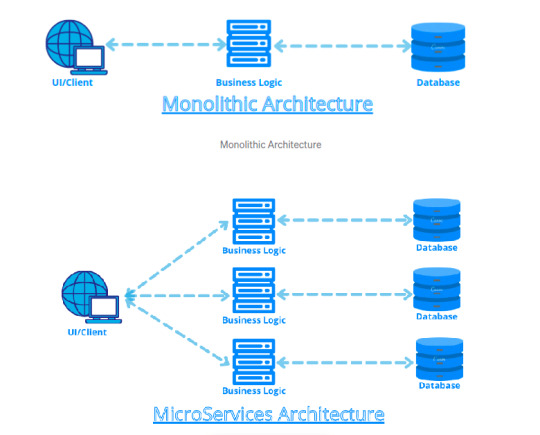

Compared with a monolithic architecture, microservices are often more beneficial.

We don’t need to put all the business logic into a single software module, which leads to complexity and time-consuming debugging.

In the above diagram, as we can see, we have only one unit of application in a monolithic architecture. In a microservices architecture, we can see three different services.

Advantages

Increases scalability

More flexibility

Modular architecture

Ease of introducing new features

More reliable and robust structure

Challenges

Cost management

Network latency and load balancing

Complexity of end-to-end testing

Health Care Example

Let’s take an example

Spring Cloud

Spring Cloud helps us build a microservices architecture with the various components it provides. It helps us manage communication, security threats, maintenance and fault tolerance. Let’s see the components one by one.

Spring Cloud Components

Spring Cloud Config Server

Feign Client

Service Discovery and Registry with Eureka

Spring Cloud Gateway

Resilience4j

Spring Cloud Bus

Spring Cloud -> Config Server

Config server uses the git repo to store configurations.

Ease of managing configuration of multiple microservices in a single place

Configurations can be managed with the application.yaml / properties files within a git repo

Each microservice can connect to the config server and get the required configurations

Spring Cloud -> Feign Client

Microservices need to communicate with each other to exchange information. Feign client can be used for that.

#microservices #cloud blogs #spring #Healthcare #healthcare software development company #Healthcare interoperability #healthcare solutions


Text

Dependency free microservice API docs aggregation/portal?

Is there a way to simply aggregate API docs from multiple sources (different microservices on different hosts) and show them in a single front-end, without having to inject a bunch of service registry nonsense into each individual app? Something like swagger-ui where you can select from a list of all configured services.

I imagine it as a kind of standalone API-docs server where you configure multiple sources, like:

    sources:
      app1: https://foo.host:8080/api/openapidocs
      app2: https://bar.host:8080/api/openapidocs

And then I can run the API doc portal without these services knowing or caring about it. FWIW the services are written in Java/Spring. We do provide the API specification in OAS 3.0 in the services already.

I realize this can be done with Spring Cloud, but it requires a bunch of service registry stuff like Eureka etc. that we don't want to bloat our environment with. The services are already running fine with service discovery etc. handled elsewhere (mostly native Kubernetes).

Submitted by /u/Dwight-D, from Software Development - methodologies, techniques, and tools. Covering Agile, RUP, Waterfall + more! https://ift.tt/2YvTjuM via IFTTT


Text

Simple about java microservices

I decided to create a simple tutorial about creating microservices with Spring. My goal is to make a few presentations that are easy to understand and follow. I have participated in microservices projects ranging from small to huge, and I find the topic hard to understand for developers who have never worked with it before.

I plan to create the following training topics:

Simple Spring Boot REST Service

Spring-Boot basics

Spring starters

Spring Actuator

Spring Cloud Configuration

Eureka Services Registry

Feign client

Zuul API Gateway

Swagger

Metrics

Docker Basics

Kubernetes


Text

Java Solution Architect

Job Title: Java Solution Architect

Location: Topeka, KS

Duration: 12+ Months

Interview Type: Skype

Job Description:

Expert-level programming skills in Java

Experience with TDD utilizing mocking and similar concepts

Strong understanding of microservices architectures

Experience with technologies used for service registry, like Zookeeper, Eureka, etc.

Experience with event-based and message-driven distributed systems

Experience with reactive programming (RX, Reactive Streams, Akka, etc.)

Experience with NoSQL datastores such as Cassandra and MongoDB

Experience with distributed caching frameworks such as Redis, JBoss Datagrid

Experience with Platforms as a Service such as Cloud Foundry, OpenShift, etc.

Experience with Continuous Integration / Continuous Delivery using modern DevOps tools and workflows such as git, GitHub, Jenkins

Experience with agile development (Scrum, Kanban, etc.) and test automation (behaviour, unit, integration testing)

Desirable:

Java Certification

Experience with JBoss Drools

Experience with any BRMS (Business Rules Management System) like iLog, JBoss BRMS

Experience with JMS, Kafka

Experience with Spring Boot

Experience with Spring Cloud

Experience with Apache Camel

Experience with Gradle

Experience with Groovy, Scala

Reference: Java Solution Architect jobs

Source: http://jobrealtime.com/jobs/technology/java-solution-architect_i5841


Text

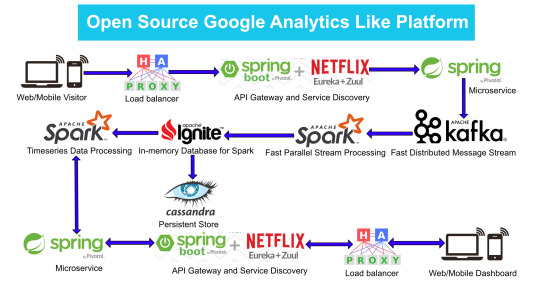

Google Analytics (GA) like Backend System Architecture

There are numerous ways of designing a backend. We will take the microservices route because web-scale scalability is required for a Google Analytics (GA)-like backend. Microservices enable us to scale horizontally and elastically in response to incoming network traffic, and a distributed stream processing pipeline scales in proportion to the load.

Here is the High Level architecture of the Google Analytics (GA) like Backend System.

Components Breakdown

Web/Mobile Visitor Tracking Code

Every web page or mobile site tracked by GA embeds tracking code that collects data about the visitor. It loads an async script that assigns a tracking cookie to the user if one is not already set. It also sends an XHR request for every user interaction.

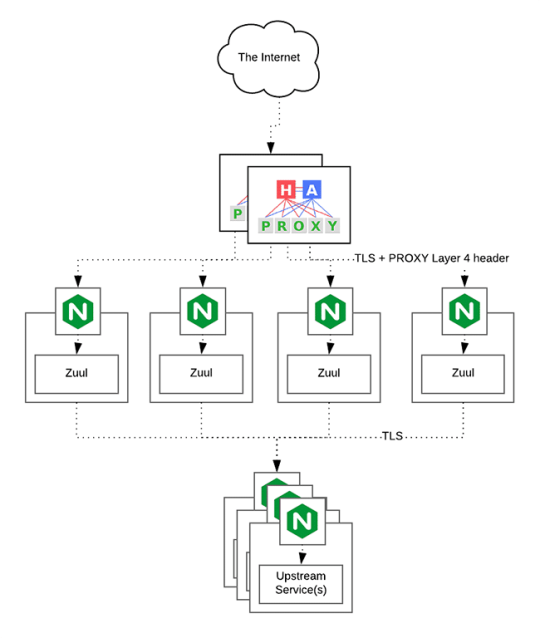

HAProxy Load Balancer

HAProxy, which stands for High Availability Proxy, is a popular open source software TCP/HTTP Load Balancer and proxying solution. Its most common use is to improve the performance and reliability of a server environment by distributing the workload across multiple servers. It is used in many high-profile environments, including: GitHub, Imgur, Instagram, and Twitter.

A backend can contain one or many servers in it — generally speaking, adding more servers to your backend will increase your potential load capacity by spreading the load over multiple servers. Increased reliability is also achieved through this manner, in case some of your backend servers become unavailable.

HAProxy routes the requests coming from the web/mobile visitor sites to the Zuul API gateway of the solution. Because the system is built for scalability, with stateless request and response handling, the Zuul API gateways can be distributed across geographies. HAProxy performs load balancing (layer 4 + proxy) across our Zuul nodes. High availability (HA) is provided via Keepalived.

Spring Boot & Netflix OSS Eureka + Zuul

Zuul is an API gateway and edge service that proxies requests to multiple backing services. It provides a unified “front door” to the application ecosystem, which allows any browser, mobile app or other user interface to consume services from multiple hosts. Zuul integrates with other Netflix stack components, such as Hystrix for fault tolerance and Eureka for service discovery, and can be used to manage routing rules, filters and load balancing across your system. Most importantly, all of these components are well supported in the Spring ecosystem through Spring Boot and Spring Cloud.

An API gateway is a layer 7 (HTTP) router that acts as a reverse proxy for upstream services that reside inside your platform. API gateways are typically configured to route traffic based on URI paths and have become especially popular in the microservices world because exposing potentially hundreds of services to the Internet is both a security nightmare and operationally difficult. With an API gateway, one simply exposes and scales a single collection of services (the API gateway) and updates the API gateway’s configuration whenever a new upstream should be exposed externally. In our case Zuul is able to auto discover services registered in Eureka server.

The Eureka server acts as a registry: clients register themselves with it and use it for service discovery, looking up the IP address and port of the other services they want to talk to. A Eureka server is also a Eureka client, and this is what makes a highly available setup possible: multiple Eureka instances register with one another as peers and replicate the registry between them.

Spring Boot Microservices

Using a microservices approach to application development can improve resilience and shorten the time to market, but breaking apps into fine-grained services introduces complications. With fine-grained services and lightweight protocols, microservices offer increased modularity, making applications easier to develop, test, deploy, and, more importantly, change and maintain. With microservices, the code is broken into independent services that run as separate processes.

Scalability is the key aspect of microservices. Because each service is a separate component, we can scale up a single function or service without having to scale the entire application. Business-critical services can be deployed on multiple servers for increased availability and performance without impacting the performance of other services. Designing for failure is essential. We should be prepared to handle multiple kinds of failure, such as system downtime, slow services and unexpected responses. Here, load balancing is important. When a failure arises, the troubled service should keep running with degraded functionality rather than crashing the entire system. The Hystrix circuit breaker comes to the rescue in such failure scenarios.

The microservices are designed for scalability, resilience, fault tolerance and high availability, which can be achieved by deploying the services in a Docker Swarm or Kubernetes cluster. Distributed, geographically spread Zuul API gateways route requests from web and mobile visitors to the microservices registered in the load-balanced Eureka server.

The core processing logic of the backend system is designed for scalability, high availability, resilience and fault tolerance using distributed stream processing: the microservices ingest data into the Kafka Streams data pipeline.

Apache Kafka Streams

Apache Kafka is used for building real-time streaming data pipelines that reliably get data between many independent systems or applications.

It allows:

Publishing and subscribing to streams of records

Storing streams of records in a fault-tolerant, durable way

It provides a unified, high-throughput, low-latency, horizontally scalable platform that is used in production in thousands of companies.

Kafka Streams is scalable, highly available and fault-tolerant, and provides the stream-processing functionality (stateless and stateful transformations) that we need, on top of Kafka being a reliable and mature messaging system.

Kafka is run as a cluster on one or more servers that can span multiple datacenters spread across geographies. Those servers are usually called brokers.

Kafka has traditionally used ZooKeeper to store metadata about brokers, topics and partitions; newer Kafka releases can instead run in KRaft mode, without ZooKeeper.

Kafka Streams is a fast, lightweight stream processing solution that works best if all of the data ingestion is coming through Apache Kafka. In our design, the ingested data is read directly from Kafka by Apache Spark for stream processing, which creates time-series Ignite RDDs (Resilient Distributed Datasets).

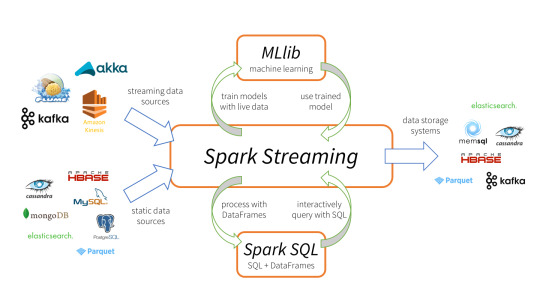

Apache Spark

Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams.

It provides a high-level abstraction called a discretized stream, or DStream, which represents a continuous stream of data.

DStreams can be created either from input data streams from sources such as Kafka, Flume, and Kinesis, or by applying high-level operations on other DStreams. Internally, a DStream is represented as a sequence of RDDs (Resilient Distributed Datasets).

Apache Spark is a perfect choice in our case. This is because Spark achieves high performance for both batch and streaming data, using a state-of-the-art DAG scheduler, a query optimizer, and a physical execution engine.

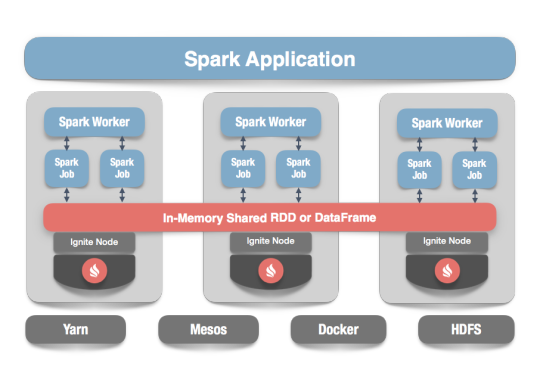

In our scenario, Spark Streaming processes the Kafka data streams, then creates and shares Ignite RDDs through Apache Ignite, a distributed memory-centric database and caching platform.

Apache Ignite

Apache Ignite is a distributed memory-centric database and caching platform that is used by Apache Spark users to:

Achieve true in-memory performance at scale and avoid data movement from a data source to Spark workers and applications.

More easily share state and data among Spark jobs.

Apache Ignite is designed for transactional, analytical, and streaming workloads, delivering in-memory performance at scale. Apache Ignite provides an implementation of the Spark RDD which allows any data and state to be shared in memory as RDDs across Spark jobs. The Ignite RDD provides a shared, mutable view of the same data in-memory in Ignite across different Spark jobs, workers, or applications.

An Ignite RDD is implemented as a view over a distributed Ignite table (a.k.a. cache). It can be deployed with an Ignite node either within the Spark job executing process, on a Spark worker, or in a separate Ignite cluster. This means that, depending on the chosen deployment mode, the shared state may either exist only during the lifespan of a Spark application (embedded mode), or it may outlive the Spark application (standalone mode).

With Ignite, Spark users can configure primary and secondary indexes that can bring up to 1000x performance gains.

Apache Cassandra

We will use Apache Cassandra as storage for persistence writes from Ignite.

Apache Cassandra is a highly scalable, highly available distributed database for storing and managing high-velocity structured data across multiple commodity servers without a single point of failure.

It is an open source system that handles huge volumes of records spread across multiple commodity servers. Multi-node Cassandra clusters can be scaled out to meet sudden increases in demand while still meeting high-availability requirements.

Apache Cassandra is optimized for high write throughput while keeping good read performance.

Characteristics of Cassandra:

It is a wide-column store (often loosely called column-oriented)

Tunably consistent, fault-tolerant, and scalable

The data model is based on Google Bigtable

The distributed design is based on Amazon Dynamo

Notably, Cassandra does not use B-trees to store data. Instead, it uses log-structured merge trees (LSM trees). This data structure is very good for high write volumes, because it turns updates and deletes into new writes.
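
To show what "turning updates and deletes into new writes" means, here is a toy LSM-style store. It is a teaching sketch and not Cassandra's storage engine: `TinyLSM`, `memtable_limit`, and the in-memory "SSTables" are assumptions for the example, and real implementations add commit logs, bloom filters, and on-disk files.

```python
TOMBSTONE = object()  # marker written in place of a deleted value


class TinyLSM:
    def __init__(self, memtable_limit: int = 2):
        self.memtable_limit = memtable_limit
        self.memtable = {}   # recent writes, held in memory
        self.sstables = []   # flushed, immutable sorted runs; newest last

    def put(self, key, value):
        # An update is just a new write; older copies are left untouched.
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self.flush()

    def delete(self, key):
        # A delete is also a write: it records a tombstone for the key.
        self.put(key, TOMBSTONE)

    def flush(self):
        if self.memtable:
            self.sstables.append(sorted(self.memtable.items()))
            self.memtable = {}

    def get(self, key):
        # Check the newest data first: memtable, then runs from newest to oldest.
        if key in self.memtable:
            value = self.memtable[key]
            return None if value is TOMBSTONE else value
        for table in reversed(self.sstables):
            for k, v in table:
                if k == key:
                    return None if v is TOMBSTONE else v
        return None

    def compact(self):
        # Merge all runs, keep only the newest value per key, drop tombstones.
        merged = {}
        for table in self.sstables:  # oldest first, so newer values overwrite
            merged.update(table)
        live = sorted((k, v) for k, v in merged.items() if v is not TOMBSTONE)
        self.sstables = [live] if live else []
```

Writes never modify existing runs, which is why this layout suits write-heavy workloads; the cost is paid later, during reads across several runs and during compaction.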

In our scenario we will configure Ignite to work in write-behind mode. Normally, a cache write puts the data in memory and writes the same data to the persistent store, so there is a 1-to-1 mapping between cache writes and persistence writes. In write-behind mode, Ignite instead batches the writes and executes them at a specified frequency. This limits the communication overhead between Ignite and the persistent store, and makes a lot of sense when the data being written changes rapidly.
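
The effect of write-behind can be sketched with a small cache that coalesces pending writes and flushes them in batches. This is an illustration of the general technique only, not Ignite's configuration or API: `WriteBehindCache`, `flush_interval`, and the plain dict standing in for Cassandra are all assumptions.

```python
class WriteBehindCache:
    def __init__(self, store: dict, flush_interval: float, now: float = 0.0):
        self.store = store              # stands in for the persistent store
        self.flush_interval = flush_interval
        self.cache = {}                 # in-memory view served to readers
        self.dirty = {}                 # pending writes, coalesced per key
        self.last_flush = now
        self.store_writes = 0           # how many writes reached the store

    def put(self, key, value, now: float) -> None:
        # The write lands in memory immediately; persistence is deferred.
        self.cache[key] = value
        self.dirty[key] = value
        if now - self.last_flush >= self.flush_interval:
            self.flush(now)

    def get(self, key):
        return self.cache.get(key, self.store.get(key))

    def flush(self, now: float) -> None:
        # One store write per dirty key, however often the key changed.
        for key, value in self.dirty.items():
            self.store[key] = value
            self.store_writes += 1
        self.dirty.clear()
        self.last_flush = now
```

Three rapid updates to the same key reach the store as a single write, which is the saving the paragraph above describes. The trade-off is that writes still pending in memory are lost if the cache node fails before the next flush.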

Analytics Dashboard

Since we are talking about scalability, high availability, resilience and fault tolerance, our analytics dashboard backend should be designed in much the same way as the web/mobile visitor backend, using the HAProxy load balancer, Zuul API gateway, Eureka service discovery and Spring Boot microservices.

Requests from the analytics dashboard will be routed through the microservices. Apache Spark will process the time-series data shared in Apache Ignite as Ignite RDDs, and the results will be sent to the dashboard for visualization through the microservices.


Video

youtube

Create Spring Cloud Configuration Server for Microservices | Setup Eurek...

Full Video Link https://youtu.be/Exoy4ZNAO9Y

Hello friends, new #video on #springcloud #configserver #eureka #servicediscovery setup is published on #codeonedigest #youtube channel.


#youtube #spring cloud #spring cloud config #spring cloud config server #spring config server #configuration server #config service #config server #eureka service discovery #service discovery #service registry #netflix eureka #eureka service registry #service discovery pattern #microservices #microservice configuration server


Text

How to Use Hazelcast Auto-Discovery With Eureka

Hazelcast IMDG supports auto-discovery for many different environments. Since we introduced the generic discovery SPI, a lot of plugins were developed so you can use Hazelcast seamlessly on Kubernetes, AWS, Azure, GCP, and more. Should you need a custom plugin, you are also able to create your own. If your infrastructure is not based on any popular cloud environment but you still want to take advantage of dynamic discovery rather than static IP configuration, you can set up your own service registry. One of the more popular choices, especially in the JVM-based microservice world, is Eureka (initially developed by Netflix and now integrated with Spring Cloud through Spring Cloud Netflix). Eureka follows the client-server model: you usually set up a server (or a cluster of servers for high availability) and use clients to register and locate services. http://bit.ly/2IIeMdG


Text

What is Service Registry and Service Discovery in Microservices?

Service registration is the process of registering a microservice with the Eureka Server. In simple words, the registry acts as a kind of database that stores the details of all microservices involved in the entire application. The Eureka Server is itself a microservice. To enable the service registry capabilities, we apply the @EnableEurekaServer annotation to the Application’s main class. Moreover,…


Video

youtube

Spring Boot + Eureka Server(Service Registry) Example | Java Inspires


Text

springboot netflix ref

https://medium.com/@marcus.eisele/microservices-with-mo-part-five-the-registry-service-netflix-eureka-96f0de083252


Text

Malware and Cyber Security Incident Response Tools

Many thanks to postmodernsecurity.com and @grecs. Also to Lenny Zeltzer, author of the REMnux malware analysis and reverse engineering distro, who I’ve borrowed double-plus-secret shamelessly from. You’ll find many of these tools and others on his own lists, so I encourage you to check his posts on this topic as well.

Online Network Analysis Tools

Network-Tools.com offers several online services, including domain lookup, IP lookup, whois, traceroute, URL decode/encode, HTTP headers and SPAM blocking list.

Robtex Swiss Army Knife Internet Tool

CentralOps Online Network tools offers domain and other advanced internet utilities from a web interface.

Shadowserver Whois and DNS lookups check ASN and BGP information. To utilize this service, you need to run whois against the Shadowserver whois system or DNS queries against their DNS system.

Netcraft provides passive reconnaissance information about a web site using an online analysis tool or with a browser extension.

Online Malware Sandboxes & Analysis Tools

Malwr: Malware analysis service based on Cuckoo sandbox.

Comodo Instant Malware Analysis and file analysis with report.

Eureka! is an automated malware analysis service that uses a binary unpacking strategy based on statistical bigram analysis and coarse-grained execution tracing.

Joe Sandbox Document Analyzer checks PDF, DOC, PPT, XLS, DOCX, PPTX, XLSX, RTF files for malware.

Joe Sandbox File Analyzer checks behavior of potentially malicious executables.

Joe Sandbox URL Analyzer checks behavior of possibly malicious web sites.

ThreatTrack Security Public Malware Sandbox performs behavioral analysis on potential malware in a public sandbox.

XecScan Rapid APT Identification Service provides analysis of unknown files or suspicious documents. (hash search too)

adopstools scans Flash files, local or remote.

ThreatExpert is an automated threat analysis system designed to analyze and report the behavior of potential malware.

Comodo Valkyrie: A file verdict system. Unlike traditional signature-based malware detection techniques, Valkyrie conducts several analyses using run-time behavior.

EUREKA Malware Analysis Internet Service

MalwareViz: Malware Visualizer displays the actions of a bad file by generating an image. More information can be found by simply clicking on different parts of the picture.

Payload Security: Submit PE or PDF/Office files for analysis with VxStream Sandbox.

VisualThreat (Android files): Mobile App Threat Reputation Report

totalhash: Malware analysis database.

Deepviz Malware Analyzer

MASTIFF Online, a free web service offered by KoreLogic Inc. as an extension of the MASTIFF static analysis framework.

Online File, URL, or System Scanning Tools

VirusTotal analyzes files and URLs enabling the identification of malicious content detected by antivirus engines and website scanners. See below for hash searching as well.

OPSWAT’s Metascan Online scans a file, hash or IP address for malware

Jotti enables users to scan suspicious files with several antivirus programs. See below for hash searching as well.

URLVoid allows users to scan a website address with multiple website reputation engines and domain blacklists to detect potentially dangerous websites.

IPVoid, brought to you by the same people as URLVoid, scans an IP address using multiple DNS-based blacklists to facilitate the detection of IP addresses involved in spamming activities.

Comodo Web Inspector checks a URL for malware.

Malware URL checks websites and IP addresses against known malware lists. See below for domain and IP block lists.

ESET provides an online antivirus scanning service for scanning your local system.

ThreatExpert Memory Scanner is a prototype product that provides a “post-mortem” diagnostic to detect a range of high-profile threats that may be active in different regions of a computer’s memory.

Composite Block List checks whether an IP address appears on multiple block lists and, if it is blocked, reports who blocked it or why.

AVG LinkScanner Drop Zone: Analyzes the URL in real time for reputation.

BrightCloud URL/IP Lookup: Presents historical reputation data about the website

Web Inspector: Examines the URL in real-time.

Cisco SenderBase: Presents historical reputation data about the website

Is It Hacked: Performs several of its own checks of the URL in real time and consults some blacklists

Norton Safe Web: Presents historical reputation data about the website

PhishTank: Looks up the URL in its database of known phishing websites

Malware Domain List: Looks up recently-reported malicious websites

MalwareURL: Looks up the URL in its historical list of malicious websites

McAfee TrustedSource: Presents historical reputation data about the website

MxToolbox: Queries multiple reputational sources for information about the IP or domain

Quttera ThreatSign: Scans the specified URL for the presence of malware

Reputation Authority: Shows reputation data on specified domain or IP address

Sucuri Site Check: Website and malware security scanner

Trend Micro Web Reputation: Presents historical reputation data about the website

Unmask Parasites: Looks up the URL in the Google Safe Browsing database. Checks for websites that are hacked and infected.

URL Blacklist: Looks up the URL in its database of suspicious sites

URL Query: Looks up the URL in its database of suspicious sites and examines the site’s content

vURL: Retrieves and displays the source code of the page; looks up its status in several blocklists

urlQuery: a service for detecting and analyzing web-based malware.

Analyzing Malicious Documents Cheat Sheet: An excellent guide from Lenny Zeltser, who is a digital forensics expert and malware analysis trainer for SANS.

Qualys FreeScan is a free vulnerability scanner and network security tool for business networks. FreeScan is limited to ten (10) unique security scans of Internet accessible assets.

Zscaler Zulu URL Risk Analyzer: Examines the URL using real-time and historical techniques
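Several of the scanners in this section, such as IPVoid and the Composite Block List, rely on DNS-based blacklists. As a rough sketch of how such a lookup is conventionally formed: the IPv4 octets are reversed and the block list's zone is appended, and the resulting hostname is then resolved. The function name and the zone "dnsbl.example.org" below are placeholders for illustration, not a real list or any tool's actual API, and the sketch only builds the query name without touching the network.

```python
import ipaddress


def dnsbl_query_name(ip: str, zone: str) -> str:
    """Build the hostname a DNSBL client would look up for an IPv4 address."""
    # IPv4Address raises ValueError if the input is not a valid IPv4 address.
    addr = ipaddress.IPv4Address(ip)
    # DNSBL convention: reverse the octets, then append the list's zone.
    reversed_octets = ".".join(reversed(str(addr).split(".")))
    return f"{reversed_octets}.{zone}"


if __name__ == "__main__":
    # "dnsbl.example.org" is a hypothetical zone used only for this example.
    print(dnsbl_query_name("192.0.2.10", "dnsbl.example.org"))
    # prints 10.2.0.192.dnsbl.example.org
```

If the resulting name resolves, the address is typically considered listed; if the lookup fails with "no such domain", it is not. Each list documents its own zone and return codes.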

Hash Searches

VirusTotal allows users to perform term searches, including on MD5 hashes, based on submitted samples.

Jotti allows MD5 and SHA1 hash searches based on submitted samples.

Malware Hash Registry by Team Cymru offers a MD5 or SHA-1 hash lookup service for known malware via several interfaces, including Whois, DNS, HTTP, HTTPS, a Firefox add-on or the WinMHR application.
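The hash search services above all take an MD5 or SHA-1 digest of the sample as input. A minimal sketch of computing both digests locally with Python's standard hashlib module follows; the helper name hash_stream is an assumption made for this example and is not part of any of the listed services.

```python
import hashlib
import io


def hash_stream(stream, chunk_size: int = 65536) -> dict:
    """Return the MD5 and SHA-1 hex digests of a binary file-like object."""
    md5 = hashlib.md5()
    sha1 = hashlib.sha1()
    # Read in chunks so large samples do not have to fit in memory.
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        md5.update(chunk)
        sha1.update(chunk)
    return {"md5": md5.hexdigest(), "sha1": sha1.hexdigest()}


if __name__ == "__main__":
    # An in-memory stream stands in for a sample file here; for a real file,
    # pass open(path, "rb") instead.
    digests = hash_stream(io.BytesIO(b"abc"))
    print(digests["md5"])
    print(digests["sha1"])
```

The resulting hex strings can be pasted into any of the hash search forms, which avoids uploading the sample itself.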

Domain & IP Reputation Lists

Malware Patrol provides block lists of malicious URLs, which can be used for anti-spam, anti-virus and web proxy systems.

Cisco SenderBase: Reputation data about a domain, IP or network owner

Malware Domains offers domain block lists for DNS sinkholes.

Malware URL not only allows checking of websites and IP addresses against known malware lists as described above but also provides their database for import into local proxies.

ZeuS Tracker provides domain and IP block lists related to ZeuS.

Fortiguard Threat Research and Response can check an IP or URL’s reputation and content filtering category.

CLEAN-MX Realtime Database: Free; XML output available.

CYMRU Bogon List: A bogon prefix is a route that should never appear in the Internet routing table. These are commonly found as the source addresses of DDoS attacks.

DShield Highly Predictive Blacklist: Free but registration required.

Google Safe Browsing API: programmatic access; restrictions apply

hpHosts File: limited automation on request.

Malc0de Database

MalwareDomainList.com Hosts List

OpenPhish: Phishing sites; free for non-commercial use

PhishTank Phish Archive: Free query database via API

ISITPHISHING is a free service from Vade Retro Technology that tests URLs, brand names or subnets using an automatic website exploration engine, which draws on community feeds and data to identify phishing websites.

Project Honey Pot’s Directory of Malicious IPs: Free, but registration required to view more than 25 IPs

Scumware.org

Shadowserver IP and URL Reports: Free, but registration and approval required

SRI Threat Intelligence Lists: Free, but re-distribution prohibited

ThreatStop: Paid, but free trial available

URL Blacklist: Commercial, but first download free
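To illustrate the bogon idea from the CYMRU Bogon List entry above, here is a small sketch that tests an address against a handful of well-known non-routable IPv4 ranges. The SAMPLE_BOGONS list and the is_bogon name are assumptions made for this example; the list is a short illustrative subset, not the Team Cymru list, and a real bogon list is longer and changes over time.

```python
import ipaddress

# Illustrative subset only: private, loopback, link-local, multicast and
# reserved IPv4 ranges. This is NOT a complete or current bogon list.
SAMPLE_BOGONS = [
    ipaddress.ip_network(prefix)
    for prefix in (
        "0.0.0.0/8",
        "10.0.0.0/8",
        "127.0.0.0/8",
        "169.254.0.0/16",
        "172.16.0.0/12",
        "192.168.0.0/16",
        "224.0.0.0/4",
        "240.0.0.0/4",
    )
]


def is_bogon(ip: str) -> bool:
    """Return True if the address falls inside one of the sample prefixes."""
    addr = ipaddress.ip_address(ip)
    return any(addr in network for network in SAMPLE_BOGONS)


if __name__ == "__main__":
    for candidate in ("10.1.2.3", "8.8.8.8", "172.31.255.255"):
        print(candidate, is_bogon(candidate))
```

In practice the prefixes would be loaded from a maintained feed rather than hard-coded.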

Additional tools for checking whether URLs, files, or IP addresses appear on a malware, reputation, or block list of some kind.

Malware Analysis and Malicious IP search are two custom Google searches created by Alexander Hanel. Malware Analysis searches over 155 URLs related to malware analysis, AV reports, and reverse engineering. Malicious IP searches CBL, projecthoneypot, team-cymru, shadowserver, scumware, and centralops.

Vulnerability Search is another custom Google search created by Corey Harrell (of Journey into Incident Response Blog). It searches specific websites related to software vulnerabilities and exploits, such as 1337day, Packetstorm Security, Full Disclosure, and others.

Cymon: Open tracker of malware, phishing, botnets, spam, and more

Scumware.org, in addition to IP and domain reputation, also searches for malware hashes. You’ll have to deal with a captcha though.

ISC Tools checks domain and IP information. It also aggregates blackhole/bogon/malware feeds and has links to many other tools as well.

Malc0de performs IP checks and offers other information.

OpenMalware: A database of malware.

Other Team Cymru Community Services: Darknet Project, IP to ASN Mapping, and Totalhash Malware Analysis.

viCheck.CA provides tools for searching their malware hash registry, decoding various file formats, parsing email headers, performing IP/Domain Whois lookups, and analyzing files for potential malware.

AlienVault Reputation Monitoring is a free service that allows users to receive alerts when domains or IPs become compromised.

Web of Trust: Presents historical reputation data about the website; community-driven. Firefox add-on.

Shodan: a search engine that lets users find specific types of computers (routers, servers, etc.) connected to the internet using a variety of filters.

Punkspider: a global web application vulnerability search engine.

Email tools

MX Toolbox: MX record monitoring, DNS health, blacklist and SMTP diagnostics in one integrated tool.

Threat Intelligence and Other Miscellaneous Tools

ThreatPinch Lookup: Creates informational tooltips when hovering over an item of interest on any website. It helps speed up security investigations by automatically providing relevant information upon hovering over any IPv4 address, MD5 hash, SHA2 hash, and CVE title. It’s designed to be completely customizable and work with any REST API. Chrome and Firefox extensions.

ThreatConnect: Free and commercial options.

Censys: A search engine that allows computer scientists to ask questions about the devices and networks that compose the Internet. Driven by Internet-wide scanning, Censys lets researchers find specific hosts and create aggregate reports on how devices, websites, and certificates are configured and deployed.

RiskIQ Community Edition: comprehensive internet data to hunt for digital threats.

Threatminer: Data mining for threat intelligence.

IBM X-Force Exchange: a threat intelligence sharing platform that you can use to research security threats, to aggregate intelligence, and to collaborate with peers.

Recorded Future: Free email of trending threat indicators.

Shadowserver has lots of threat intelligence, not just reputation lists.

The Exploit Database: From Offensive Security, the folks who gave us Kali Linux, the ultimate archive of Exploits, Shellcode, and Security Papers.

Google Hacking Database: Search the database or browse GHDB categories.

Privacy Rights Clearinghouse: Data breach database.

Breach Level Index: Data breach database.

AWStats: Free real-time log analyzer

AlienVault Open Threat Exchange

REMnux: a free Linux toolkit for assisting malware analysts with reverse-engineering and analyzing malicious software.

Detection Lab: a collection of Packer and Vagrant scripts that allow you to quickly bring a Windows Active Directory environment online, complete with a collection of endpoint security tooling and logging best practices.

SIFT Workstation: a group of free open-source incident response and forensic tools designed to perform detailed digital forensic examinations in a variety of settings.

Security Onion: free and open source Linux distribution for intrusion detection, enterprise security monitoring, and log management.

Kali Linux: Penetration testing and security auditing Linux distribution.

Pentoo: a security-focused livecd based on Gentoo

Tails: a live operating system with a focus on preserving privacy and anonymity.

Parrot: Free and open source GNU/Linux distribution designed for security experts, developers and privacy aware people.


Java Architect

Job Title: Java Architect
Location: Topeka, KS - Fort Worth, TX
Duration: 12+ Months
Interview Type: Skype
Primary Skills: Java Architect

Job Description:
Expert level programming skills in Java
Experience with TDD utilizing Mocking and similar concepts
Strong understanding of Microservices architectures
Experience with technologies used for service registry like Zookeeper, Eureka etc.
Experience with event-based and message-driven distributed systems
Experience with reactive programming (RX, Reactive Streams, Akka etc.)
Experience with NoSQL Datastores such as Cassandra and MongoDB
Experience with distributed caching frameworks such as Redis, JBoss Datagrid
Experience with Platforms as a Service such as Cloud Foundry, OpenShift, etc.
Experience with Continuous Integration / Continuous Delivery using modern DevOps tools and workflows such as git, GitHub, Jenkins
Experience with agile development (Scrum, Kanban, etc.) and Test Automation (behaviour, unit, integration testing)

Desirable:
Java Certification
Experience with JBoss Drools
Experience with any BRMS (Business Rules Management System) like iLog, JBoss BRMS
Experience with JMS, Kafka
Experience with Spring Boot
Experience with Spring Cloud
Experience with Apache Camel
Experience with Gradle
Experience with Groovy, Scala

Reference: Java Architect jobs source http://www.qoholic.com/jobs/technology/java-architect_i5420


Java Architect

Job Title: Java Architect
Location: Topeka, KS - Fort Worth, TX
Duration: 12+ Months
Interview Type: Skype
Primary Skills: Java Architect

Job Description:
Expert level programming skills in Java
Experience with TDD utilizing Mocking and similar concepts
Strong understanding of Microservices architectures
Experience with technologies used for service registry like Zookeeper, Eureka etc.
Experience with event-based and message-driven distributed systems
Experience with reactive programming (RX, Reactive Streams, Akka etc.)
Experience with NoSQL Datastores such as Cassandra and MongoDB
Experience with distributed caching frameworks such as Redis, JBoss Datagrid
Experience with Platforms as a Service such as Cloud Foundry, OpenShift, etc.
Experience with Continuous Integration / Continuous Delivery using modern DevOps tools and workflows such as git, GitHub, Jenkins
Experience with agile development (Scrum, Kanban, etc.) and Test Automation (behaviour, unit, integration testing)

Desirable:
Java Certification
Experience with JBoss Drools
Experience with any BRMS (Business Rules Management System) like iLog, JBoss BRMS
Experience with JMS, Kafka
Experience with Spring Boot
Experience with Spring Cloud
Experience with Apache Camel
Experience with Gradle
Experience with Groovy, Scala

Reference: Java Architect jobs source http://jobrealtime.com/jobs/technology/java-architect_i3815
