#datacenter provider


Text

https://www.colocrossing.com/blog/best-colocation-data-center-near-you/

#colocation#dedicated servers#dedicated server#colocation server datacenter#colocrossing#data center colocation#colocation service provider#colocation data center near me#colocation data center#colocation services

0 notes

Text

Probably not a hot take

I currently have on my desk three notices of data breaches which may affect me, and expect two more in the next week.

Now, clearly there are "hackers". However, I also know that if these companies followed established data protection standards these breaches would be meaningless.

These are issues because of corporate negligence and greed. The causes are twofold:

First, companies collect way too much data. They track our browsing habits. Our spending habits. Our sleeping habits. Why? In the hope that maybe later they can sell that data. There's an entire economy built around buying and selling information which shouldn't even exist. There's no reason, as a consumer, for my Roomba to upload a map of the inside of my house to the iRobot servers. It provides zero value to me, and it's not necessary for the robot to operate. The company can't even articulate a business need for it. They just want to collect the data in order to sell it later.

Second, companies are incredibly lax about IT security. They're understaffed, underfunded, and they often don't bother training people on the technology they have, nor do they install and configure it (hint: a firewall doesn't count if it's still sitting in a box on the datacenter floor).

And I think the only way for companies to sit up and take notice is to make them bleed. You can issue guidelines as much as you want, they won't care because making changes and performing due diligence is expensive. They'd much rather just snoop on their customers and sell it all.

So what we need to do is set a regulatory environment in which we:

Recognize that customers, not companies, are the victims of data breaches. The companies which are breached are not victims, they are accomplices.

Create a legal definition of private data. This should definitely include medical data, SSNs, &c, but should be broad enough to include information we'd not think about collecting normally (someday in the future, someone will create a toilet which is able to track how often you flush. They WILL want to sell that data. Fuck 'em.) [I would also want to sneak in there some restrictions clarifying that disclosing this data is covered under the 5th amendment - that no one else can provide your medical data in a court of law, and that you cannot be compelled to do so.]

Create a legal set of guidelines for data security. This needs to be a continuing commitment - a government organization which issues guidance annually. This guidance should establish minimum standards, not a fixed list of approved options (e.g., AES128 is required, AES256 certainly qualifies; it's not "the FITSA guidelines only allow AES128, we can't legally use AES512").

Legislate that failure to follow these guidelines to protect private data is negligence, and that responsibility for corporate negligence goes all the way up to the corporate officers. This should be considered a criminal, not civil, matter.

Restrict insurance payouts to companies when the cause is their own negligence.

Set minimum standards for restitution to victims, but clearly state that the restitution should be either the minimum, or 200% of the cost to make the victim "whole" - whichever is higher. This must be exempted from arbitration and contractual restrictions - fuck Disney's bullshit; no one signs their rights away.

Make the punishments for data negligence so severe that most companies - or at least their officers - are terrified of the risks. I'm talking putting CISOs and CEOs in jail and confiscating all their property for restitution.

The goal here is to make it so that the business model of "spy on people, sell their information" is too damned risky and companies don't do it. Yes, it will obsolete entire business models. That's the idea.

10 notes


Text

A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Powerful infrastructure advancements for an AI-first future

To improve performance, usability, and cost-effectiveness for customers, Google Cloud made improvements throughout the AI Hypercomputer stack this year. At the App Dev & Infrastructure Summit, Google Cloud announced:

Trillium, Google’s sixth-generation TPU, is now available in preview.

Next month, A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available for preview.

Hypercompute Cluster, Google’s new, highly scalable clustering system, will be available starting with A3 Ultra VMs.

C4A virtual machines (VMs), based on Axion, Google’s custom Arm processors, are now generally available.

AI workload-focused enhancements to Titanium, Google Cloud’s host offload capability, and Jupiter, its data center network.

Hyperdisk ML, Google Cloud’s AI/ML-focused block storage service, is generally available.

Trillium: A new era of TPU performance

TPUs power Google’s most sophisticated models like Gemini, well-known Google services like Maps, Photos, and Search, as well as scientific innovations like AlphaFold 2, whose developers were just awarded a Nobel Prize! We are happy to announce that Google Cloud users can now preview Trillium, our sixth-generation TPU.

Taking advantage of NVIDIA Accelerated Computing to broaden perspectives

Google Cloud also keeps investing in its partnership and capabilities with NVIDIA, fusing the best of its data center, infrastructure, and software expertise with the NVIDIA AI platform, as exemplified by A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

Compared to earlier versions, A3 Ultra VMs offer a notable performance improvement. They are built on NVIDIA ConnectX-7 network interface cards (NICs) and servers equipped with the new Titanium ML network adapter, which is tailored to provide a secure, high-performance cloud experience for AI workloads. A3 Ultra VMs provide non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE) when paired with Google’s datacenter-wide 4-way rail-aligned network.

Compared with A3 Mega, A3 Ultra provides:

Double the GPU-to-GPU networking bandwidth, supported by Google’s Jupiter data center network and Google Cloud’s Titanium ML network adapter.

Up to 2 times higher LLM inferencing performance, with almost twice the memory capacity and 1.4 times the memory bandwidth.

Capacity to expand to tens of thousands of GPUs in a dense cluster with performance optimization for heavy workloads in HPC and AI.
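As a rough sanity check on the memory claims above, the ratios can be recomputed from per-GPU figures. The numbers below are assumptions taken from NVIDIA's public spec sheets for the H100 SXM and H200 (they do not appear in this post), so treat this as a sketch rather than a benchmark:

```python
# Per-GPU figures are assumptions from NVIDIA's public spec sheets,
# not from this article.
H100_MEMORY_GB = 80          # H100 SXM, HBM3
H200_MEMORY_GB = 141         # H200, HBM3e
H100_BANDWIDTH_TB_S = 3.35   # TB/s
H200_BANDWIDTH_TB_S = 4.8    # TB/s

capacity_ratio = H200_MEMORY_GB / H100_MEMORY_GB
bandwidth_ratio = H200_BANDWIDTH_TB_S / H100_BANDWIDTH_TB_S

print(f"Memory capacity ratio:  {capacity_ratio:.2f}x")
print(f"Memory bandwidth ratio: {bandwidth_ratio:.2f}x")
```

Under those assumed figures, the capacity ratio comes out a little under 1.8 and the bandwidth ratio a little over 1.4, which is consistent with "almost twice" and "1.4 times".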

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though; when dealing with AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go along with them. This may be difficult and time-consuming. For this reason, Google Cloud is introducing Hypercompute Cluster, which simplifies the provisioning of workloads and infrastructure as well as the continuous operations of AI supercomputers with tens of thousands of accelerators.

Fundamentally, Hypercompute Cluster integrates the most advanced AI infrastructure technologies from Google Cloud, enabling you to deploy and operate a large number of accelerators as a single, seamless unit. You can run your most demanding AI and HPC workloads with confidence thanks to Hypercompute Cluster’s exceptional performance and resilience, which includes features like targeted workload placement, dense resource co-location with ultra-low latency networking, and sophisticated maintenance controls to reduce workload disruptions.

For dependable and repeatable deployments, you can use pre-configured and validated templates to set up a Hypercompute Cluster with just one API call. These include containerized software with orchestration (e.g., GKE, Slurm), frameworks and reference implementations (e.g., JAX, PyTorch, MaxText), and well-known open models like Gemma2 and Llama3. Each pre-configured template is available as part of the AI Hypercomputer architecture and has been verified for efficiency and performance, allowing you to concentrate on business innovation.

Hypercompute Cluster will first be available next month, starting with A3 Ultra VMs.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also looking forward to the developments made possible by NVIDIA GB200 NVL72 GPUs, and we’ll be providing more information about this exciting development soon. In the meantime, here is a preview of the racks Google is constructing to deliver the NVIDIA Blackwell platform’s performance advantages to Google Cloud’s cutting-edge, environmentally friendly data centers in the early months of next year.

Redefining CPU efficiency and performance with Google Axion Processors

Even though TPUs and GPUs are superior at specialized jobs, CPUs are a cost-effective solution for a variety of general-purpose workloads, and they are frequently used alongside AI workloads to build complex applications. Google announced Axion Processors, its first custom Arm-based CPUs for the data center, at Google Cloud Next ’24. Google Cloud customers can now benefit from C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance compared to the newest Arm-based instances offered by other top cloud providers.

Additionally, compared to comparable current-generation x86-based instances, C4A offers up to 60% more energy efficiency and up to 65% better price performance for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the offload technology system that supports Google’s infrastructure, has been improved to accommodate workloads related to artificial intelligence. Titanium provides greater compute and memory resources for your applications by lowering the host’s processing overhead through a combination of on-host and off-host offloads. Furthermore, although Titanium’s fundamental features can be applied to AI infrastructure, the accelerator-to-accelerator performance needs of AI workloads are distinct.

To address these demands, Google has released a new Titanium ML network adapter, which incorporates and expands upon NVIDIA ConnectX-7 NICs to provide further support for virtualization, traffic encryption, and VPCs. Combined with Google’s data center-wide 4-way rail-aligned network, the system offers best-in-class security and infrastructure management along with non-blocking 3.2 Tbps of GPU-to-GPU traffic over RoCE.

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.
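To put the "video conversation for every person on Earth" comparison in numbers, the bisection bandwidth can be divided evenly across the world's population. The population figure of 8 billion is an assumption for illustration, and the even split is a simplification of how a real network shares capacity:

```python
# 13.1 Pb/s is the bisection bandwidth quoted in the text.
BISECTION_BANDWIDTH_BPS = 13.1e15

# Assumption for illustration: roughly 8 billion people.
WORLD_POPULATION = 8e9

# Naive even split of the bisection bandwidth across everyone at once.
per_person_bps = BISECTION_BANDWIDTH_BPS / WORLD_POPULATION
per_person_mbps = per_person_bps / 1e6

print(f"Bandwidth per person: {per_person_mbps:.2f} Mb/s")
```

Under these assumptions each person gets a share of a bit over 1.6 Mb/s, which is the scale of a modest video stream.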

Hyperdisk ML is generally available

High-performance storage is essential for keeping computing resources effectively utilized, maximizing system-level performance, and staying cost-efficient. Google launched its AI/ML-focused block storage solution, Hyperdisk ML, in April 2024. Now generally available, it adds dedicated storage for AI and HPC workloads to the networking and computing advancements.

Hyperdisk ML speeds up data load times. It delivers up to 11.9x faster model load time for inference workloads and up to 4.3x faster training time for training workloads.

You can attach up to 2,500 instances to the same volume, with 1.2 TB/s of aggregate throughput per volume. This is more than 100 times what major block storage competitors offer.

Shorter data load times result in reduced accelerator idle time and increased cost efficiency.

GKE now automatically creates multi-zone volumes for your data. In addition to faster model loading with Hyperdisk ML, this lets you run across zones for more computing flexibility (for example, to reduce Spot preemptions).
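The aggregate throughput figure above is easier to reason about per instance. A small sketch, assuming decimal units (1 TB = 10^12 bytes) and an even split across all attached instances, which a real workload will not necessarily see:

```python
# Figures quoted in the text for a single Hyperdisk ML volume.
AGGREGATE_THROUGHPUT_BYTES_PER_S = 1.2e12   # 1.2 TB/s, decimal units assumed
MAX_ATTACHED_INSTANCES = 2500

# Assumption: throughput is shared evenly when every slot is in use.
per_instance_bytes_per_s = AGGREGATE_THROUGHPUT_BYTES_PER_S / MAX_ATTACHED_INSTANCES
per_instance_mb_per_s = per_instance_bytes_per_s / 1e6

print(f"Even share per instance: {per_instance_mb_per_s:.0f} MB/s")
```

With all 2,500 instances attached and reading at once, the even share works out to 480 MB/s per instance; with fewer readers, each could in principle see more, subject to per-instance limits that the post does not state.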

Developing AI’s future

Google Cloud enables companies and researchers to push the limits of AI innovation with these developments in AI infrastructure. It anticipates that this strong foundation will give rise to revolutionary new AI applications.

Read more on Govindhtech.com

#A3UltraVMs#NVIDIAH200#AI#Trillium#HypercomputeCluster#GoogleAxionProcessors#Titanium#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech

2 notes


Text

Best Tally, Cloud, App Development Services Provider | Cevious Technologies.

CEVIOUS was founded in 2009 in Delhi and has 14+ years of experience as a 5-Star Tally Sales, Service & GVLA Partner, serving 12,000+ customers and hosting 4,500 Tally licenses in our datacenter. We provide Tally Prime, Customization, Tally AWS Cloud, Datacenter Service, App Development and business automation solutions.

2 notes


Text

Wasat: More Questions than Answers

Major order victory! Wasat was liberated near the end of the 27th day of the Galactic War, well within time before the Automatons could decisively react. Super Earth's top minds made short work of the datacenter's encryption.

The information they've collected thus far, however, remains almost entirely vague and non-conclusive. We still don't know exactly why they're here, who made them, or when, but we do know one thing: they have a lot of plans. Foremost of which is the terraforming of Cyberstan, the icy world where the cyborgs of the original Galactic War were interred and put to work. What they intend to terraform it into remains to be seen, but speculations run wild.

Also concerning were the detailed recordings that the Automatons had of the Meridian Supercolony and of what resulted from it. Whether they were simply observing the effects of Terminid behavior, looking towards the bugs for inspiration against us, or even, as a radical few believe, working towards an alliance with them is unclear.

Finally, and most mysteriously, are the several keywords referenced at multiple points throughout the data revealed so far. Intimidating titles such as "Vessel 00," "Nucleus," and "The Final Collective" are just a few of these that we know of. Your guesses as to what they really mean are as good as ours.

Given the alarming threat of the Automatons terraforming a planet, Super Earth has declared Cyberstan the primary target for the Automaton front of the war. But before any moves can be made on the prison world itself, much ground will have to be retaken to reach it.

As this happens, a choice has been presented to the Helldivers. Two Automaton-controlled planets have sent out distress calls pointing out vital points of contention on the surface, despite the considerable time during which they've been considered lost.

On Vernen Wells, as many as thousands of civilians have so far evaded the bots' murderous rampage, boxed in inside the crumbling ruins of Super Citizen Anne's Hospital for Very Sick Children, or "SCAHfVSC" for short! Running low on food, water, and time, entire villages worth of people could be lost if the Helldivers do not act fast.

But on Marfark, a previously lost tactical opportunity rears its head for the third time. Although the Helldivers have twice now failed to acquire the necessary explosive materials for the construction of the MD-17 Anti-Tank Mines, with them having been lost to Automaton hands, the SEAF has intercepted transmissions from the Automatons identifying a depot on Marfark's surface containing many of the materials originally lost. If reclaimed, the Ministry of Defense could finally provide the Helldivers with another way to take down the many highly armored threats they face in this war.

But to even reach either planet, Aesir Pass must first be taken, as it is the most direct supply route available to both options. That's right, options. SEAF analysts project that whichever planet the SEAF liberates first will be the only one that can be saved. Most likely, evacuating Anne's hospital will leave the bots with enough time and space to relocate the materials, while seizing the materials will take so long that the majority of the starving citizens will perish, one way or another.

A moral dilemma ensues. Is it better to save thousands now and set our potential galactic influence back once again, or sacrifice them for unproven ordnance that may not be worth the price? Let's hope that whatever choice the Helldivers make is the right one.

Coming up next will be the next major order. We apologize, as technical issues forestalled our broadcast regarding the most recent one. That's all for now, though.

Give 'em Hell, Divers!

#alnbroadcast#helldivers 2#helldivers#helldivers ii#roleplay#Federation of Super Earth#Automatons#Major Order#SEAF#Wasat#Aesir Pass#Marfark#Vernen Wells#Ymir Sector#Hydra Sector#Andromeda Sector

5 notes


Text

Features of Cloud Hosting Server

Cloud hosting is a form of web hosting that uses resources from more than one server to offer scalable and dependable hosting solutions. My research suggests that Bsoftindia Technologies is one of the high-quality options for you. They have provided digital and IT services since 2008, and they offer these services at a reasonable fee. Bsoftindia is a leading provider of cloud hosting solutions for businesses of all sizes. Our cloud hosting services are designed to offer scalability, flexibility, and security, empowering businesses to perform efficiently in the digital world.

FEATURES OF CLOUD SERVERS

Intel Xeon 6226R Gold Processor: Fastest & latest processor, 2.9 GHz, Turbo Boost 3.9 GHz

NVMe Storage: Micron 9300, 2000 MB/s read/write vs 600 MB/s in SSD

1 Gbps Bandwidth: Enjoy unlimited, BGPed, and DDoS-protected bandwidth

Snapshot Backup: #1 Veeam Backup & Replication setup with guaranteed restoration

Dedicated Account Manager: Dedicated AM & TAM for training, instant support, and seamless experience

3 Data Center Locations: Choose the nearest location for the least latency

99.95% Uptime: A commitment to provide 99.95% network uptime

Tier 3 Datacenter: #1 Veeam Backup & Replication setup with guaranteed restoration
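To see what a 99.95% uptime commitment means in practice, the percentage can be converted into a downtime budget. This is a minimal sketch: the helper name `allowed_downtime_minutes` is hypothetical, and the 30-day month and 365-day year are assumptions, since the post does not say over which window uptime is measured.

```python
def allowed_downtime_minutes(uptime_percent: float, period_days: float) -> float:
    """Return the downtime budget, in minutes, for a given uptime percentage."""
    downtime_fraction = 1.0 - uptime_percent / 100.0
    return downtime_fraction * period_days * 24 * 60

# Assumed measurement windows: a 30-day month and a 365-day year.
monthly_minutes = allowed_downtime_minutes(99.95, 30)
yearly_hours = allowed_downtime_minutes(99.95, 365) / 60

print(f"Allowed downtime per month: {monthly_minutes:.1f} minutes")
print(f"Allowed downtime per year:  {yearly_hours:.2f} hours")
```

Under these assumptions, 99.95% leaves room for about 21.6 minutes of network downtime per month, or roughly 4.4 hours per year.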

Get a free demo and learn more about our cloud hosting solutions. https://bsoft.co.in/cloud-demo/

#cloud services#cloud hosting#clouds#service#technology#marketing#cloudserver#digital marketing#delhi#bestcloudhosting#bestcloudhostingsolution

2 notes


Text

Lunar Rose Adventure Academy & Guild

What is Lunar Rose?

Lunar Rose is centered around the theme of adventure. An Academy and a Guild are under the banner of Lunar. The curriculum consists of activities and lessons that will prepare the students for a life of adventuring, offering practical knowledge on topics such as combat, first-aid and magic, and many more! While the Adventure Guild offers quests from monster hunts to saving cats from trees, Lunar Rose provides adventures for all with sufficient skills to handle them. Both the teachers and students at Lunar are players roleplaying their characters, as are the staff and members in the Guild. In addition, we have characters that work as school staff, for example running security. We have a free company house that serves as the school grounds, and a small for the Guild. But we also often venture out to the vast world of Eorzea too! The school and Guild are accessible for everyone on the Crystal, Aether and Primal Data Centers, and we help out new RPers/players too!

Typical roleplaying activities at Lunar Rose include:

School classes following a set schedule

Quests run and DMed by players

Student-run clubs

A bar called 'Starfall' where people can serve drinks for anyone

Casual roleplay in a school setting

A more mature theme in the Guild

School field trips and other seasonal events

Personal plotlines in the Guild

Collaborations with other RP groups and communities

Come join a community that helps each other out and RPs with everyone on whatever datacenter! Check out the Carrd or join our discord! For any questions or help, please message Masao#2913

https://discord.gg/7WUvEZjRYd

https://ghse30ghseku.carrd.co/

https://lunar-rose.carrd.co/

#ff14#ffxiv#final fantasy xiv#ffxiv rp#new rp#original rp#oc rp#roleplay#mature rp#ic#oc#ffxiv balmung#free company#lfc#ffxiv lfrp#final fantasy 14#ffxiv oc#ffxiv housing#Lunar Rose

9 notes


Text

Introduction to Real Estate Investment Trusts (REITs)

Overview of REITs

An organization that owns, manages, or finances real estate that generates revenue is known as a real estate investment trust, or REIT. Like mutual funds, REITs offer an investment opportunity that enables regular Americans, not just Wall Street, banks, and hedge funds, to profit from valuable real estate. It gives investors access to total returns and dividend-based income, and supports the expansion, thriving, and revitalization of local communities.

Anyone can invest in real estate investment trusts (REITs) the same way they invest in other industries: by buying individual company shares, or through exchange-traded funds (ETFs) or mutual funds. A REIT’s investors receive a portion of the revenue generated without having to purchase, operate, or finance real estate themselves. About 150 million Americans live in households that are invested in REITs through 401(k)s, IRAs, pension plans, and other investment accounts.

Historical Evolution

1960s - REITs were created

REITs were established when President Eisenhower signed into law the REIT Act title contained in the 1960 Cigar Excise Tax Extension. Congress created REITs to give all investors a way to participate in large, diversified portfolios of income-producing real estate.

1970s - REITs around the world

In 1969, the Netherlands passed the first piece of European REIT legislation. This is when the REIT model started to spread around the world; shortly after, in 1971, listed property trusts were introduced in Australia.

1980s - Competing for capital

In the 1980s, real estate tax-sheltered partnerships expanded, raising billions of dollars through private placements. Because REITs were and are set up in a way that prevents tax losses from being "passed through" to shareholders, they struggled to compete for capital.

1990s - First REIT reaches $1 billion market cap

In December 1991, New Plan reached $1 billion in equity market capitalization, becoming the first publicly traded REIT to do so. Centro Properties Group, based in Australia, purchased New Plan in 2007.

2000s - REIT Modernization Act

President Clinton signed the provisions of the REIT Modernization Act of 1999 into law in December 1999 as part of the Ticket to Work and Work Incentives Improvement Act of 1999. Among other things, the law lets a REIT establish one or more taxable REIT subsidiaries (TRS) that can provide services to third parties and REIT tenants.

The diverse landscape of REIT investments

Real estate investing is a dynamic field with a wide range of options for those wishing to build wealth and diversify their holdings.

Residential REITs: This is an easy way for novices to get started in real estate investing, as single-family houses offer a strong basis. Purchasing duplexes or apartment buildings can result in steady rental income as well as possible capital growth.

Commercial REITs: This covers activities such as office building investments. They provide steady cash flow and long-term leases, especially in desirable business areas. Rental assets such as shopping centers and retail spaces are lucrative prospects and can appreciate in value as long as businesses remain successful.

Specialty REITs: These include investments in healthcare-related properties such as assisted living centers or physician offices. Datacenter investments have become more and more common in the digital age because of the growing need for safe data storage.

Job profiles within REITs

Jobs in real estate investment come in many forms, including:

Real estate analysts: The job of a real estate analyst is to identify opportunities to purchase profitable real estate. These analysts need strong financial modeling skills in addition to a solid understanding of current markets. They may also be involved in negotiating pricing and other terms of real estate transactions.

Asset managers: Asset managers make the higher-level property management decisions. Since they evaluate and control a property's operating expenses relative to its potential for income generation, they need a stronger foundation in finance.

Property managers: While some real estate investment trusts employ their own property managers, others contract with outside businesses to handle their properties. Along with working with renters, property managers handle all the daily duties required to keep up the property.

Essential skills for success in REIT careers

Successful REIT careers require the development of several essential talents, three of which are listed below:

Financial acumen: Professionals with strong financial acumen are better equipped to evaluate financing choices, cash flow forecasts, property valuations, and tax consequences. With this insight, they can make well-informed strategic decisions that optimize profits while also supporting their investing goals.

Market analysis skills: Real estate investors should cultivate an awareness of important market indicators and a keen sense of market conditions. Purchasing and managing profitable rental properties requires an accurate and detailed understanding of a prospective market's amenities, dynamics, future potential, and relative risk.

Communication skills: Communication skills are a common attribute among successful real estate investors and are often ranked as the most important one. This is because investing in real estate means working directly with a variety of industry professionals, including lenders, agents, property managers, tenants, and many more.

Global perspectives on REITs

International REIT Markets:

The US-based REIT method for real estate investing has been embraced by more than 40 nations and regions, providing access to income-producing real estate assets worldwide for all investors. The simplest and most effective approach for investors to include global listed real estate allocations in their portfolios is through mutual funds and exchange-traded funds.

The listed real estate market is becoming more and more international, even though the United States still has the largest market. The appeal of the US REIT approach to real estate investing is fueling the expansion. All G7 nations are among the more than forty nations and regions that have REITs today.

Technological innovations in REIT operations

PropTech integration: By incorporating PropTech platforms into their workflows, real estate investment managers can make property acquisitions and due diligence more efficient. This leads to more precise assessments, quicker data processing, and better decision-making, all of which improve investment outcomes.

Data analytics in real estate: Data analytics enables real estate professionals to make data-driven choices regarding the acquisition, leasing, or administration of a physical asset. The process entails compiling all pertinent data from several sources and analyzing it to produce insights that can be put into practice.

Conclusion

REITs have a number of advantages and disadvantages. They provide a means of incorporating real estate into an investment portfolio, and they may also pay a bigger dividend than certain other options. Since non-exchange-listed REITs do not trade on stock exchanges, there are certain risks associated with them. Finding the value of a share in a non-traded REIT can be challenging, whereas the market price of a publicly traded REIT is easily available. Buying shares through a broker allows you to invest in a publicly traded REIT that is listed on a major stock exchange. The bottom line for a REIT is that, in contrast to other real estate firms, it doesn't build properties to resell them.

2 notes


Text

Azure’s Evolution: What Every IT Pro Should Know About Microsoft’s Cloud

IT professionals need to stay ahead of the curve in today's ever-changing world of technology. The cloud has become an integral part of modern IT infrastructure, and one of the leading players in this domain is Microsoft Azure. Azure’s evolution over the years has been nothing short of remarkable, making it essential for IT pros to understand its journey and keep pace with its innovations. In this blog, we’ll take you on a journey through Azure’s transformation, exploring its history, service portfolio, global reach, security measures, and much more. By the end of this article, you’ll have a comprehensive understanding of what every IT pro should know about Microsoft’s cloud platform.

Historical Overview

Azure’s Humble Beginnings

Microsoft Azure was officially launched in February 2010 as “Windows Azure.” It began as a platform-as-a-service (PaaS) offering primarily focused on providing Windows-based cloud services.

The Azure Branding Shift

In 2014, Microsoft rebranded Windows Azure to Microsoft Azure to reflect its broader support for various operating systems, programming languages, and frameworks. This rebranding marked a significant shift in Azure’s identity and capabilities.

Key Milestones

Over the years, Azure has achieved numerous milestones, including the introduction of Azure Virtual Machines, Azure App Service, and the Azure Marketplace. These milestones have expanded its capabilities and made it a go-to choice for businesses of all sizes.

Expanding Service Portfolio

Azure’s service portfolio has grown exponentially since its inception. Today, it offers a vast array of services catering to diverse needs:

Compute Services: Azure provides a range of options, from virtual machines (VMs) to serverless computing with Azure Functions.

Data Services: Azure offers data storage solutions like Azure SQL Database, Cosmos DB, and Azure Data Lake Storage.

AI and Machine Learning: With Azure Machine Learning and Cognitive Services, IT pros can harness the power of AI for their applications.

IoT Solutions: Azure IoT Hub and IoT Central simplify the development and management of IoT solutions.

Azure Regions and Global Reach

Azure boasts an extensive network of data centers spread across the globe. This global presence offers several advantages:

Scalability: IT pros can easily scale their applications by deploying resources in multiple regions.

Redundancy: Azure’s global datacenter presence ensures high availability and data redundancy.

Data Sovereignty: Choosing the right Azure region is crucial for data compliance and sovereignty.

Integration and Hybrid Solutions

Azure’s integration capabilities are a boon for businesses with hybrid cloud needs. Azure Arc, for instance, allows you to manage on-premises, multi-cloud, and edge environments through a unified interface. Azure’s compatibility with other cloud providers simplifies multi-cloud management.

Security and Compliance

Azure has made significant strides in security and compliance. It offers features like Azure Security Center (now Microsoft Defender for Cloud), Azure Active Directory (now Microsoft Entra ID), and extensive compliance certifications. IT pros can leverage these tools to meet stringent security and regulatory requirements.

Azure Marketplace and Third-Party Offerings

Azure Marketplace is a treasure trove of third-party solutions that complement Azure services. IT pros can explore a wide range of offerings, from monitoring tools to cybersecurity solutions, to enhance their Azure deployments.

Azure DevOps and Automation

Automation is key to efficiently managing Azure resources. Azure DevOps services and tools facilitate continuous integration and continuous delivery (CI/CD), ensuring faster and more reliable application deployments.

Monitoring and Management

Azure offers robust monitoring and management tools to help IT pros optimize resource usage, troubleshoot issues, and gain insights into their Azure deployments. Best practices for resource management can help reduce costs and improve performance.

Future Trends and Innovations

As the technology landscape continues to evolve, Azure remains at the forefront of innovation. Keep an eye on trends like edge computing and quantum computing, as Azure is likely to play a significant role in these domains.

Training and Certification

To excel in your IT career, consider pursuing Azure certifications. ACTE Institute offers a range of certifications, such as the Microsoft Azure course, to validate your expertise in Azure technologies.

In conclusion, Azure’s evolution is a testament to Microsoft’s commitment to cloud innovation. As an IT professional, understanding Azure’s history, service offerings, global reach, security measures, and future trends is paramount. Azure’s versatility and comprehensive toolset make it a top choice for organizations worldwide. By staying informed and adapting to Azure’s evolving landscape, IT pros can remain at the forefront of cloud technology, delivering value to their organizations and clients in an ever-changing digital world. Embrace Azure’s evolution, and empower yourself for a successful future in the cloud.

#microsoft azure#tech#education#cloud services#azure devops#information technology#automation#innovation

2 notes

Text

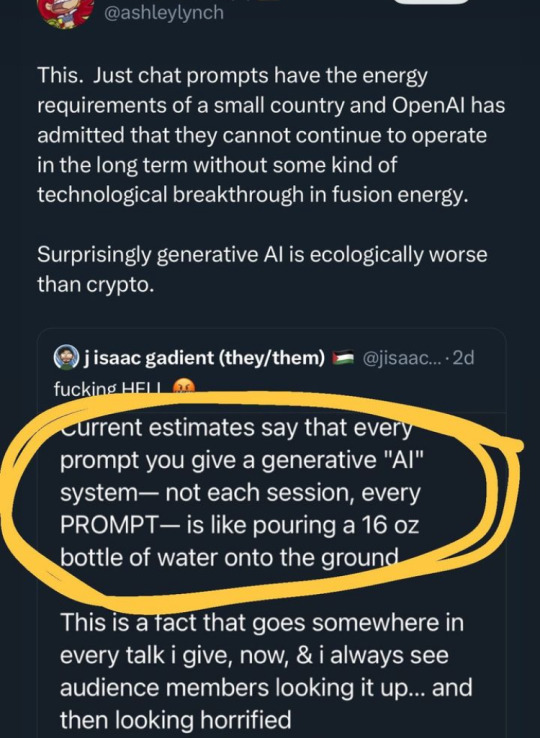

since there's a lot of confusion in the notes:

no, the water used for cooling does not go back into the river or lake that it came from. microsoft, and many other companies with large server farms, use evaporative (adiabatic) cooling, so the water is lost to the atmosphere

no, 16 oz of water is not used per prompt - the article itself says that 16 oz may be used for between 5 to 50 prompts. this is an average, and it can be as little as 0 during the winter months when outdoor temperatures are enough to cool the computers on their own.
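a quick sanity check on what that range means per prompt, as a rough sketch. the only inputs are the 16 oz and 5-to-50-prompt figures quoted above; the conversion to millilitres assumes US fluid ounces (about 29.57 ml each), which is an assumption here, not something the article states.

```python
# Per-prompt water use implied by "16 oz per 5 to 50 prompts".
OZ_PER_BATCH = 16.0
ML_PER_US_FL_OZ = 29.5735  # assumption: the figure is in US fluid ounces


def water_per_prompt(prompts_per_batch: int) -> tuple[float, float]:
    """Return (oz, ml) of water per prompt for a given number of prompts per 16 oz."""
    oz = OZ_PER_BATCH / prompts_per_batch
    return oz, oz * ML_PER_US_FL_OZ


high_oz, high_ml = water_per_prompt(5)   # fewest prompts per 16 oz
low_oz, low_ml = water_per_prompt(50)    # most prompts per 16 oz
print(f"{high_oz:.2f} oz ({high_ml:.0f} ml) down to {low_oz:.2f} oz ({low_ml:.0f} ml) per prompt")
```

so the quoted average works out to somewhere between roughly a third of an ounce and a little over three ounces per prompt, before accounting for the near-zero winter case.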

my own thoughts:

AI itself is a neutral tool that is currently being used for some pretty stupid to outright exploitative things. the completely unhelpful chatbot that comes up when you're trying to talk to customer service for a multi-billion dollar company that just doesn't want to pay a real person? get rid of it.

but to say that none of these servers should exist doesn't acknowledge that there are sectors that can provide greater human good by accessing this processing power: AI for cancer detection, AI for ocean exploration, AI for disease prevention and outbreak response...there's a lot of promise and possibility in what we can do for the world with this tool

it's good to be skeptical of new technology, but it's also good to keep in mind that there are existing enterprises that are already actively causing this much damage to the world times ten: cryptocurrency mining still outpaces the environmental impact of AI, and that's purely for the generation of a currency, with no other positive human societal outcomes.

if AI datacenters are going to continue to exist, and they will, then there should be a concerted push for actually building or relocating these servers to cold climates that will require little to no water to function. don't put them in the middle of iowa in 90 degree summers where they're chugging down water that small towns need to survive.

63K notes

Text

As businesses increasingly rely on digital operations, choosing a reliable colocation service provider is a critical decision. With the right colocation partner, companies can ensure secure, efficient, and cost-effective infrastructure management. Whether you’re a small business or an enterprise requiring extensive resources, understanding the key factors that define a dependable colocation provider is essential.

#Colocation#colocation server datacenter#colocation data center#colocation data center near me#colocation services#colocation service provider#data center colocation

0 notes

Text

When it comes time to deploy a platform for new projects, set up a CRM server, or build a data center fit for a standard hypervisor, every IT manager or storage administrator faces the question of which type of storage to use: a traditional SAN appliance or a virtual SAN? In this article, we'll look at the two SAN solutions, distinguish between them, and help you decide which one to choose for your projects.

Contents

What is the Storage Area Network (SAN)?

When should you use a traditional SAN appliance?

What are the usual costs of SAN appliances?

What is a vSAN appliance?

Use cases for virtual SAN (vSAN) devices

When should you utilize a vSAN appliance?

Cost of a virtual SAN (vSAN) device

What is the difference between a regular SAN and vSAN?

Which SAN to choose?

Conclusion

What is the Storage Area Network (SAN)?

In essence, SANs are high-performance iSCSI or Fibre Channel block-mode physical datastores that may be used to host hypervisors, databases, and applications. Traditional SAN devices, which are generally available in configurations from 4-bay towers to 36-bay rackmounts, offer high-performance storage space for structured applications using the iSCSI and/or Fibre Channel (FC) protocols.

Structured workloads include the following:

Databases: MySQL, Oracle, NoSQL, PostgreSQL, etc.

Applications: SAP HANA or other major CRM or EHR software.

Large deployments of standard hypervisors such as VMware ESX/ESXi, Microsoft Hyper-V, Windows Server Standard (or Datacenter) 2016/2019, KVM, Citrix (formerly XenServer), or StoneFly Persepolis.

When should you use a traditional SAN appliance?

On-premises SAN systems are ideal for large deployments of critical applications with a low tolerance for delay. In addition to addressing latency problems, local SAN appliances give you more control over the administration, operation, and security of physical devices, which many regulators require. SAN systems can scale from hundreds of gigabytes to petabytes of storage capacity with commensurate performance. If your workloads may grow to this scale, on-premises SAN hardware is the better option in terms of return on investment (ROI).

That isn't to say that 4-bay towers or 6-bay devices aren't appropriate for SMB environments. It all comes down to the company budget, latency requirements, and the project(s) at hand. Examples of on-premises SAN hardware include NetApp SAN, Voyager, Dell PowerVault, and StoneFly ISC.

What are the usual costs of SAN appliances?

The cost of an on-premises SAN device is determined by the vendor you choose, the OS you install, and, of course, the hardware specs: system RAM, processor, network connections, RAID controller, hard drives, and other components all matter. Most vendors, including Dell, HPE, and NetApp, offer pre-configured products with limited customization options, so you can find the price range on their web pages or in their catalogs. Other vendors let you customize your SAN hardware by selecting the characteristics that best meet your requirements; they build, test, and configure the plug-and-play appliance before shipping it to you. As a result, you can get the features you want within your budget.

What is a vSAN appliance?

Virtual SANs (vSANs) are virtualized iSCSI volumes installed on common hypervisors. VMware is largely responsible for popularizing the term vSAN, but VMware vSAN is not the only option. Other vSAN products include NetApp vSAN, StarWind vSAN, StoneFly vSAN, and StorMagic vSAN.

Use cases for virtual SAN (vSAN) devices

Standard SAN and vSAN devices are similar in terms of use cases; the only difference between them is the configuration. In other words, vSAN appliances can be used for structured workloads just like classic SAN appliances (examples listed above).

When should you utilize a vSAN appliance?

vSAN deployment is very flexible. A vSAN appliance can be installed locally, in the cloud, or on a remote server. This opens up a variety of applications; nevertheless, the flexible deployment comes with trade-offs in administration, cost, availability, latency, and so on. Depending on the vendor, vSAN promises scalable performance and a high return on investment when deployed on local hyper-converged infrastructure (HCI), although VMware vSAN is usually costly.

Latency is a factor when using public clouds or remote servers. If the server is in a nearby location, latency may not be an issue, as many companies that run their workloads entirely in the cloud have discovered. On the other hand, several business customers have moved to the cloud and later returned to on-premises, for reasons that differ from one case to the next.

Just because vSAN isn't working for someone else doesn't mean it won't work for you. Equally, just because it works for others does not guarantee that it will work for you. So, once again, your projects, budget, and performance and latency needs will determine whether a vSAN appliance is the best option for you.

Cost of a virtual SAN (vSAN) device

The cost of vSAN appliances varies depending on the vendor, deployment, and assigned resources such as system memory, CPU, and storage capacity. If vSAN is installed in the cloud, the price of the cloud, the frequency with which vSAN is installed, and the frequency with which it is used are all factors to consider. If it is installed on an on-premises HCI appliance, the cost of the infrastructure and hypervisor influences the ROI.

What is the difference between a regular SAN and vSAN?

Aside from the obvious difference that one is a physical product and the other a virtual one, there are a few other significant differences.

Conventional SAN:

To assign storage capacity for structured workloads, external network-attached storage (NAS) or data storage volumes are required.

If migration is required, it is often complicated and error-prone.

The hardware is fixed: you can't expand processor power or system RAM, but you can add storage arrays to grow storage.

With an internal SAN, you won't have to worry about outbound bandwidth costs, server security, or latency issues.

Virtual SAN:

Provides a storage pool with accessible storage resources for virtual machines (VMs) to share.

When necessary, migration is relatively simple.

Volumes in vSAN are flexible: you can quickly add extra CPU, memory, or storage to dedicated resources.

vSAN can be deployed in public clouds in a totally server-less setup.

Which SAN to choose?

There is no universal answer to this question. Some operations or requirements are better served by a standard SAN, whereas others are better served by vSAN. So, how can you know which is right for you? The first step is to get a better grasp of your project, performance needs, and budget. Obtaining test results can also help. Consulting with professionals is another way to make sure you've made the right choice. Request demonstrations to learn more about the capabilities of the product you're considering and the return on your investment.

Conclusion

When it comes to vSAN vs SAN, the question isn't which is superior. It's about your needs and which one suits your projects. Both solutions have benefits and drawbacks. Traditional SANs are best suited for large-scale deployments, whereas vSANs offer greater flexibility and more deployment options, making them suitable for a wide range of use cases, enterprises, and industries.

0 notes

Text

HostPapa, a rapidly growing Canadian-based web services company, appears to have acquired a significant portion of QuadraNet’s IPv4 address space, adding over 200,000 IPs to its expanding portfolio. This move further strengthens HostPapa’s position in the hosting and cloud infrastructure market, particularly as IPv4 scarcity continues to drive up demand and value.

QuadraNet, a long-established data center and IT infrastructure provider, was acquired by Edge Centres in 2024 as part of its strategic expansion into the North American market. With a flagship facility in downtown Los Angeles near One Wilshire and additional remote locations in New Jersey, Chicago, Dallas, Seattle, and Atlanta, QuadraNet has historically offered colocation, dedicated servers, and cloud hosting services.

However, recent events suggest the company may be facing operational challenges. Over the past several days, QuadraNet’s Los Angeles data center has been at least partially offline, frustrating customers and fueling discussions across hosting forums, including LowEndTalk, where users are eager to understand what all of this means.

As of January 31st, 2025, their official status page remains unchanged since January 28th, stating: "Thank you for your continued patience as we work through our maintenance in our Los Angeles facilities."

Additionally, users have reported recent outages in QuadraNet’s Chicago and Dallas locations, raising concerns about broader infrastructure stability. Even on that other sleepy forum, there are 19 pages of rage.

It looks like QuadraNet has only about 6,656 IPv4 addresses remaining according to its ARIN profile. Let's do some back-of-the-envelope math: even if every customer got only 5 IPs (often typical for a dedicated server), that's room for only about 1,300 customers, and of course it doesn't divide out quite that neatly.
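The back-of-the-envelope figure above can be checked in a few lines. This is only a sketch: the address count and the 5-IPs-per-customer allocation are the post's own numbers, and the helper name `max_customers` is made up for illustration. It also assumes every remaining address is assignable, which is optimistic since real allocations lose addresses to network, broadcast, and gateway use.

```python
# Sanity check of the IPv4 headroom estimate.
REMAINING_IPV4 = 6_656        # approximate count from the ARIN profile, per the post
IPS_PER_CUSTOMER = 5          # the post's "often typical" dedicated-server allocation
ADDRESSES_PER_SLASH_24 = 256  # size of one /24 block


def max_customers(total_ips: int, ips_each: int) -> int:
    """Upper bound on customers if every address were assignable (idealized)."""
    return total_ips // ips_each


customers = max_customers(REMAINING_IPV4, IPS_PER_CUSTOMER)
slash_24_blocks = REMAINING_IPV4 / ADDRESSES_PER_SLASH_24

print(f"at most {customers} customers")         # at most 1331 customers
print(f"equivalent to {slash_24_blocks} /24s")  # equivalent to 26.0 /24s
```

So "about 1,300" is a ceiling, and the whole remaining pool amounts to 26 /24 blocks.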
[Image: ARIN search results for 'QuadraNet' as of January 31st, 2025]

[Image: an example of the transfers that have taken place over the last few weeks]

This is quite a fall for QuadraNet, which has been a large player in the dedicated server space for over a decade. I still remember their "buy 3 get 1 free" offer from a few years back. However, their web site has been aging out toward oblivion: job postings from 2019, and an endorsement from the defunct HostMantis and long-dead Tragic Servers.

Datacenter down for days, IPs sold off... is QuadraNet fading to black? If you are a customer of QuadraNet, are your services online? Have you experienced any interruptions or changes in service quality recently? Let us know in the comments or join the conversation at LowEndTalk, where an active discussion about what all of this means is playing out.

0 notes

Link

Last Updated: January 30, 2025, 19:42 IST

India's IT Minister Ashwini Vaishnaw announced Thursday that the Indian government has chosen to develop a large language model of its own domestically as part of the Rs 10,370 crore IndiaAI Mission, just days after a Chinese artificial intelligence (AI) startup lab unveiled the low-cost foundational model DeepSeek.

Additionally, the government has chosen ten businesses to provide 18,693 graphics processing units (GPUs), the high-end chips required to create machine learning tools that can be used to build a foundational model. These firms include CMS Computers, Ctrls Datacenters, Locuz Enterprise Solutions, NxtGen Datacenter, Orient Technologies, Jio Platforms, Tata Communications, Yotta, which is funded by the Hiranandani Group, and Vensysco Technologies. Yotta alone will provide nearly half of all GPUs, with a commitment to provide 9,216 units.

0 notes

Text

Containerization Revolution: Growth and Insights Into the Global Market

The global application container market size is expected to reach USD 31.50 billion by 2030, according to a new report by Grand View Research, Inc., registering a 32.4% CAGR during the forecast period. Application containerization is used to deploy and run applications without launching separate Virtual Machines (VMs).
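For readers unfamiliar with the metric, a compound annual growth rate (CAGR) ties together a starting value, an ending value, and a number of years. The sketch below shows the standard formula and what it implies for the figures quoted above. The six-year horizon is a hypothetical assumption: the summary does not state the length of the forecast period or the base-year market size, so the implied starting value is illustrative only and is not a figure from the report.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


def implied_start(end_value: float, rate: float, years: float) -> float:
    """Starting value that grows to end_value at the given CAGR over the given years."""
    return end_value / (1 + rate) ** years


END_2030_USD_BN = 31.50  # from the report summary
RATE = 0.324             # 32.4% CAGR, from the report summary
ASSUMED_YEARS = 6        # hypothetical horizon; not stated in the summary

start = implied_start(END_2030_USD_BN, RATE, ASSUMED_YEARS)
print(f"implied starting size: USD {start:.2f} billion")
print(f"round-trip CAGR: {cagr(start, END_2030_USD_BN, ASSUMED_YEARS):.1%}")
```

Changing `ASSUMED_YEARS` shows how sensitive the implied base is to the horizon, which is why the forecast period matters when comparing CAGR figures across reports.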

VMs and containers have similar resource allocation and isolation advantages; however, containers virtualize the Operating System (OS) rather than the hardware. Containers are more portable and efficient than VMs because they need fewer operating system instances and less space.

The application container market has also been gaining prominence as a result of increasing adoption of containerization technology, particularly in retail, healthcare, banking and finance, and telecommunication sectors. The banking, financial services, and insurance (BFSI) sector is one of the early adopters of this technology, which offers benefits such as administration ease and cost-effectiveness.

Docker Datacenter is used in the banking sector because it provides benefits such as platform portability, higher security, a smooth data workflow, and efficient usage of infrastructure. The BFSI sector has adopted the Docker platform due to the challenging environment of digital disruption, wherein the use of application containers enables improvements in software delivery capabilities.

Application Container Market Report Highlights

The on-premise deployment segment is expected to exhibit a significant CAGR over the forecast period

The large enterprises segment dominated the market by organization size in 2023, a trend that is expected to continue through 2030

On the basis of service, the monitoring & security segment accounted for the highest revenue share of 29.4% in 2023. The dynamism and complexity of containerized applications require robust monitoring solutions to ensure optimal performance, detect anomalies, and prevent disruptions.

North America accounted for the largest revenue share in 2023 and is projected to remain the dominant region over the forecast period, primarily because vendors in the region have implemented cloud technologies to a high degree

Application Container Market Segmentation

Grand View Research has segmented the global application container market based on service, deployment, enterprise size, end use, and region:

Application Container Service Outlook (Revenue, USD Million, 2018 - 2030)

Monitoring & Security

Data Management & Orchestration

Networking

Support & Maintenance

Other

Application Container Deployment Outlook (Revenue, USD Million, 2018 - 2030)

Hosted

On-Premise

Application Container Enterprise Size Outlook (Revenue, USD Million, 2018 - 2030)

SMEs

Large Enterprises

Application Container End Use Outlook (Revenue, USD Million, 2018 - 2030)

BFSI

Healthcare & Life Science

Telecommunication & IT

Retail & E-commerce

Education

Media & Entertainment

Others

Application Container Regional Outlook (Revenue, USD Million, 2018 - 2030)

North America

US

Canada

Mexico

Europe

UK

Germany

France

Asia Pacific

Japan

India

China

Australia

South Korea

Latin America

Brazil

Middle East & Africa

South Africa

Saudi Arabia

UAE

List of Key Players

IBM

Amazon Web Services, Inc.

Microsoft

Google LLC

Broadcom

Joyent

Rancher

SUSE

Sysdig, Inc.

Perforce Software, Inc.

Order a free sample PDF of the Application Container Market Intelligence Study, published by Grand View Research.

0 notes

Text

Middle East and CIS Power Generator Rental Market Size 2025, Share, Analysis, Drivers and Forecast till 2032

The report begins with an overview of the Middle East and CIS Power Generator Rental Market 2025 and traces its development. It provides a comprehensive analysis of all regional and key player segments, giving closer insight into current market conditions and future market opportunities, along with drivers, trend segments, consumer behavior, price factors, and market performance and estimates. Forecast market information, SWOT analysis, the Middle East and CIS Power Generator Rental Market scenario, and a feasibility study are the important aspects analyzed in this report.

Get Sample PDF Report: https://www.fortunebusinessinsights.com/enquiry/request-sample-pdf/105111

Middle East and CIS Power Generator Rental Market By Power Rating (Below 75 kVA, 75-375 kVA, 375-750 kVA and Above 750 kVA), By Fuel Type (Diesel, Gas, and Others), By Application (Continuous Load, Standby Load, and Peak Load), By End-user (Telecom, Banking, Mining, Datacenter, Construction, Manufacturing, Tourism, and Others) and Regional Forecast, 2021-2028

The Middle East and CIS Power Generator Rental Market is experiencing robust growth. The Middle East and CIS Power Generator Rental Market is poised for substantial growth as manufacturers across various industries embrace automation to enhance productivity, quality, and agility in their production processes. Middle East and CIS Power Generator Rental Market leverage robotics, machine vision, and advanced control technologies to streamline assembly tasks, reduce labor costs, and minimize errors. As technology advances and automation becomes more accessible, the adoption of automated assembly systems is expected to accelerate, driving market growth and innovation in manufacturing.

0 notes