
Low-quality screenshot of my work so far! I've finally (mostly. I think. There have been so many iterations help meeee) settled on a general recipe format, and this was me doing some very light layout stuff before I go do the Cooking Pot and Shaped recipes (these are all the shapeless crafting table recipes).

#farmers delight #minecraft #modded minecraft #salem tag #g-d this has been like #8 hours of work easily #but it's so cool to see it starting to Work. also I finally navigated github and found all the info I was missing AND clean PNGs #salem art


How to build and deploy your own personal portfolio site

Hello! My name is Kevin Powell. I love to teach people how to build the web and how to make it look good while they’re at it.

I’m excited to announce that I’ve just launched a free course that teaches you to create your very own fully responsive portfolio website.

After you’ve finished this course you will have a neat-looking portfolio site that will help you land job interviews and freelance gigs. It’s also a cool thing to show to your friends and family.

We’re going to build the portfolio using Scrimba’s interactive code-learning platform, and then deploy it using DigitalOcean’s cloud services.

Also, DigitalOcean has generously given everyone who enrolls a free credit, so it won’t cost you anything to get it up and running.

This post is a breakdown of the course itself, giving you an idea of what's included in all the lessons. If you like what you see, make sure to check it out over on Scrimba!

Lesson 1: Introduction

In the first lesson, you’ll get an overview of the course so that you know what to expect, what you should know before taking it, and what you’ll end up with once you're finished. I also give you a quick intro to myself.

Lesson 2: Setting things up - HTML

In part two, I’m going to show you around in the Scrimba environment and we’ll also set up the project.

All the images are supplied, so you won’t need to worry about looking for the perfect photo just yet. We can focus on building the portfolio!

Don’t forget that you can access everything you need from text and colors to fonts and much more at our dedicated design page.

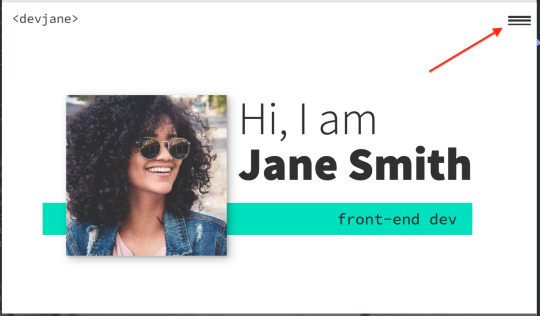

Lesson 3: The header area - HTML

It’s finally time to start building out the portfolio. In this lecture, we will create the header section. We will brush up on the BEM methodology for setting class names in CSS, and I think you’ll find that this makes the navigation simple and straightforward to create.

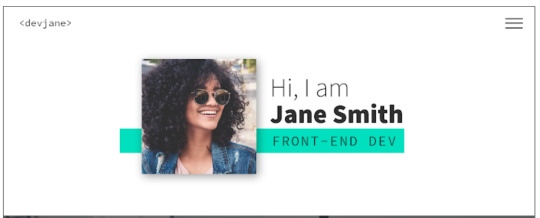

Lesson 4: Intro section

Next up is the Intro section of the portfolio. This is where we will introduce ourselves and put a picture of ourselves.

Finally, we add a section about the main skills/services we offer. For the moment we can just fill it all in with “Lorem ipsum” placeholder text until you're ready to replace it with your own.

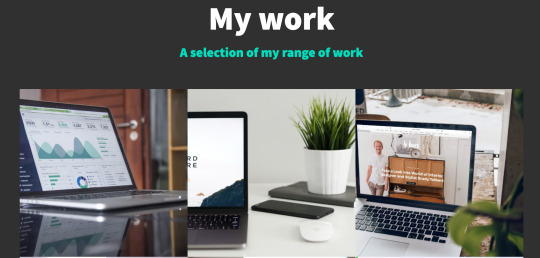

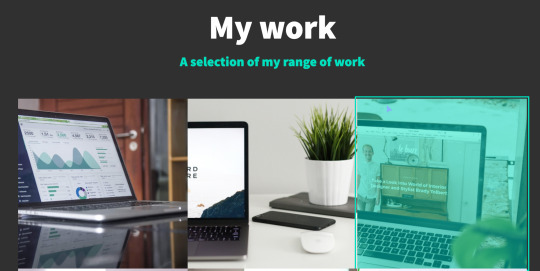

In this chapter, we’re finishing off the rest of our HTML with the last 3 sections: About me, where we’re going to introduce ourselves in greater detail; Work, where we’re going to add some of our portfolio examples; and our footer.

Footers are ideal for linking to email addresses, and I will show you how to do that with an <a> tag. We can also add our social media links there.

For now, it all looks a bit raw and all the CSS fun is ahead of us.

Lesson 6: Setting up the custom properties and general styles

Alright, time to make that page look amazing!

In this part, we’re going to learn how to add custom properties.

While setting up CSS variables can take some time, it really pays off as the site comes together. They're also perfect for letting you customize the site's colors and fonts in just a few seconds, which I show you how to do once we wrap up the site.

Lesson 7: Styling the titles and subtitles

Having set up all the typography we need, I will walk you through the subtleties of designing and styling the titles and subtitles in our sections.

Lesson 8: Setting up the intro section

Over the next few chapters it’s going to be quite hands-on, so no worries if you feel like rewatching the screencasts a couple of times.

We're keeping everything responsive, using CSS Grid and taking a little dive into using em units as well.

This is a perfect example of where CSS Grid shines, and we’re going to learn how to use properties like grid-column-gap, grid-template-areas and grid-template-columns.

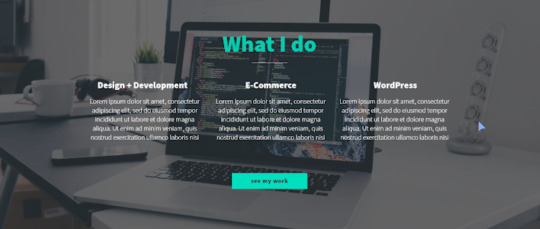

Lesson 9: Styling the services section

To add a little bit of interest, I look at how we can add a background-image to this section of the site. It's a nice way to break up the section and avoid just having solid color backgrounds everywhere, and I also look at how you could use background-blend-mode to change the color of the image to help keep the look of your site consistent.

As a bonus, we’re also going to learn how to style our buttons when they are hovered over or selected as we tab through the page.

Lesson 10: The About me section

Great progress! This is the all-important About me section. It's pretty similar to the Intro because we’re going to use CSS Grid again, but this time we move the picture to the right side and find a useful example for the CSS fr unit.

Lesson 11: The portfolio

In this screencast, I will show how to build our portfolio section to display some of our great work. And we’re even going to learn how to use cubic-bezier() to a great and impressive effect with some hover styling!

Lesson 12: Adding the social icons with Font Awesome

This cast will be sweet and short, so you can rest a bit and learn some quick tips and tricks.

Adding social media links with Font Awesome icons is a breeze. We can do it with an <i> tag that has the class name of the icon you wish to add.

As an example, here’s how to add an icon for GitHub once you have Font Awesome linked in your markup.

<i class="fab fa-github"></i>

While the icons are in place, we do need to add more styling to get them set up the way we need them to be.

With a little flexbox, and by removing the list styling with list-style: none, it's relatively straightforward.

Lesson 13: Setting up the navigation styles

We have left the navigation until last because it’s very often one of those simple things that can take the longest to set up and do correctly.

Once completed, the navigation will be off-screen, but slide in when a user clicks on the hamburger icon. The first step though, is to get it styled the way we want it to look, then we can worry about actually making it work!

Lesson 14: Creating the hamburger

In this screencast, you’ll learn how to add a hamburger menu that opens the navigation view. It’s not an icon or an SVG, but pure CSS.

We’re going to have a chance to practice the ::before and ::after pseudo-elements and transitions. Since it's a button rather than a link, we also need to set cursor: pointer when we hover over the hamburger icon to indicate that it can be clicked.

Lesson 15: Adding the JS

With a little bit of JavaScript, I will walk you through implementing a really nice, smooth transition from our main screen to the navigation window when the hamburger menu is clicked.

I also take a look at how we can add in smooth-scrolling with CSS only by using scroll-behavior: smooth. Yes, it really is that simple! It also makes a great tweet for Today I Learned (TIL). Feel free to send your TILs to @scrimba and I’m sure they will be really happy to retweet them!

Lesson 16: Creating the portfolio item page

With the homepage wrapped up, it's time to work on a template portfolio page that can be used to give more details on each of the projects that you are putting in your portfolio.

We're also going to learn how to link it seamlessly with our main page for a nice user experience.

Lesson 17: Customizing your page

This is where the magic of CSS custom properties comes in!

In this video I look at how we can customize the custom properties that we set up to change the color scheme of your site within seconds, and how we can update the fonts quickly and easily as well in order to make the site your own!

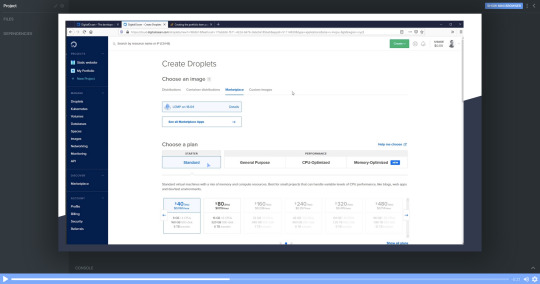

Lesson 18: DigitalOcean Droplets - What they are and how to set one up

In this screencast, we're going to explore DigitalOcean droplets. They are Linux-based virtual machines, and each droplet is a new server you can use.

It can seem daunting, but they are super easy to set up, very customizable and come with a lot of useful features, like a firewall, automatically.

I'll talk you through every single step of the way so that you know exactly how to set a droplet up.

Lesson 19: DigitalOcean Droplets - Uploading files via FTP

To finish the whole process off, let me show you how we can upload our portfolio to the droplet we created in the previous chapter, so that it's online for others to see!

Lesson 20: Wrap up

And that's it! Your next step can be to make this page all about you, add all the relevant examples, tell us about you and make it live in a DigitalOcean droplet.

Once you've put yours together and got it online, please share your portfolio with me and the team at Scrimba! You can find us at @KevinJPowell and @scrimba on Twitter, and we would be really happy to share what you're up to!

Check out the full course

Remember, this course is completely free. Head on over to Scrimba now and you can follow along with it and build out a fantastic looking site!

via freeCodeCamp.org https://ift.tt/2QwcP6z


Moving an ASP.NET Core from Azure App Service on Windows to Linux by testing in WSL and Docker first

I updated one of my websites from ASP.NET Core 2.2 to the latest LTS (Long Term Support) version of ASP.NET Core 3.1 this week. Now I want to do the same with my podcast site AND move it to Linux at the same time. Azure App Service for Linux has some very good pricing and allowed me to move over to a Premium v2 plan from Standard which gives me double the memory at 35% off.

My podcast has historically run on ASP.NET Core on Azure App Service for Windows. How do I know if it'll run on Linux? Well, I'll try it and see!

I use WSL (Windows Subsystem for Linux) and so should you. It's very likely that you have WSL ready to go on your machine and you just haven't turned it on. Combine WSL (or the new WSL2) with the Windows Terminal and you're in a lovely spot on Windows with the ability to develop anything for anywhere.

First, let's see if I can run my existing ASP.NET Core podcast site (now updated to .NET Core 3.1) on Linux. I'll start up Ubuntu 18.04 on Windows and run dotnet --version to see if I have anything installed already. You may have nothing. I have 3.0 it seems:

$ dotnet --version
3.0.100

Ok, I'll want to install .NET Core 3.1 on WSL's Ubuntu instance. Remember, just because I have .NET 3.1 installed in Windows doesn't mean it's installed in my Linux/WSL instance(s). I need to maintain those on my own. Another way to think about it is that I've got the win-x64 install of .NET 3.1 and now I need the linux-x64 one.

NOTE: It is true that I could "dotnet publish -r linux-x64" and then scp the resulting complete published files over to Linux/WSL. It depends on how I want to divide responsibility. Do I want to build on Windows and run on Linux/WSL? Or do I want to build and run on Linux? Both are valid; it just depends on your choices, patience, and familiarity.

GOTCHA: Also if you're accessing Windows files at /mnt/c under WSL that were git cloned from Windows, be aware that there are subtleties if Git for Windows and Git for Ubuntu are accessing the index/files at the same time. It's easier and safer and faster to just git clone another copy within the WSL/Linux filesystem.

I'll head over to https://dotnet.microsoft.com/download and get .NET Core 3.1 for Ubuntu. If you use apt, and I assume you do, there's some preliminary setup and then it's a simple

sudo apt-get install dotnet-sdk-3.1

No sweat. Let's "dotnet build" and hope for the best!

It might be surprising but if you aren't doing anything tricky or Windows-specific, your .NET Core app should just build the same on Windows as it does on Linux. If you ARE doing something interesting or OS-specific you can #ifdef your way to glory if you insist.

Bonus points if you have Unit Tests - and I do - so next I'll run my unit tests and see how it goes.

OPTION: I write things like build.ps1 and test.ps1 that use PowerShell, since PowerShell is already on Windows. Then I install PowerShell (just for the scripting, not the shelling) on Linux so I can use my .ps1 scripts everywhere. The same test.ps1, build.ps1, dockertest.ps1, etc. just work on all platforms. Make sure you have a shebang #!/usr/bin/pwsh at the top of your .ps1 files so you can just run them (after chmod +x) on Linux.

I run test.ps1 which runs this command

dotnet test /p:CollectCoverage=true /p:CoverletOutputFormat=lcov /p:CoverletOutput=./lcov .\hanselminutes.core.tests

with coverlet for code coverage and...it works! Again, this might be surprising but if you don't have any hard coded paths, make any assumptions about a C:\ drive existing, and avoid the registry and other Windows-specific things, things work.

Test Run Successful.
Total tests: 23
     Passed: 23
 Total time: 9.6340 Seconds

Calculating coverage result...
  Generating report './lcov.info'

+--------------------------+--------+--------+--------+
| Module                   | Line   | Branch | Method |
+--------------------------+--------+--------+--------+
| hanselminutes.core.Views | 60.71% | 59.03% | 41.17% |
+--------------------------+--------+--------+--------+
| hanselminutes.core       | 82.51% | 81.61% | 85.39% |
+--------------------------+--------+--------+--------+

I can build, I can test, but can I run it? What about running and testing in containers?

I'm running WSL2 on my system and I've been doing all this in Ubuntu 18.04, AND I'm running the Docker WSL Tech Preview. Why not see if I can run my tests under Docker as well? From Docker for Windows I'll enable the Experimental WSL2 support, and then from the Resources menu, under WSL Integration, I'll enable Docker within my Ubuntu 18.04 instance (your instances and their names will be your own).

I can confirm it's working with "docker info" under WSL and talking to a working instance. I should be able to run "docker info" in BOTH Windows AND WSL.

$ docker info
Client:
 Debug Mode: false

Server:
 Containers: 18
  Running: 18
  Paused: 0
  Stopped: 0
 Images: 31
 Server Version: 19.03.5
 Storage Driver: overlay2
  Backing Filesystem: extfs
...snip...

Cool. I remembered that I also needed to update my Dockerfile from the 2.2 SDK on Docker Hub to the 3.1 SDK from the Microsoft Container Registry, so this one-line change:

#FROM microsoft/dotnet:2.2-sdk AS build
FROM mcr.microsoft.com/dotnet/core/sdk:3.1 as build

as well as the final runtime version for the app later in the Dockerfile. Basically make sure your Dockerfile uses the right versions.

#FROM microsoft/dotnet:2.1-aspnetcore-runtime AS runtime
FROM mcr.microsoft.com/dotnet/core/aspnet:3.1 AS runtime

I also volume-mount the test results, so there's this offensive if statement in test.ps1. YES, I know I should just do all the paths with / and make them relative.

#!/usr/bin/pwsh
docker build --pull --target testrunner -t podcast:test .
if ($IsWindows) {
    docker run --rm -v d:\github\hanselminutes-core\TestResults:/app/hanselminutes.core.tests/TestResults podcast:test
} else {
    docker run --rm -v ~/hanselminutes-core/TestResults:/app/hanselminutes.core.tests/TestResults podcast:test
}

Regardless, it works and it works wonderfully. Now I've got tests running in Windows and Linux and in Docker (in a Linux container) managed by WSL2. Everything works everywhere. Now that it runs well on WSL, I know it'll work great in Azure on Linux.

Moving from Azure App Service on Windows to Linux

This was pretty simple as well.

I'll blog in detail next week about how I build and deploy the sites in Azure DevOps, and how I've moved from .NET Core 2.2 with Classic "Wizard Built" DevOps Pipelines to .NET Core 3.1 and a YAML pipeline checked into source control.

The short version is: make a Linux App Service Plan (remember that an "App Service Plan" is a VM that you don't have to worry about). In the picture below, note that the Linux plan has a penguin icon. Also remember that you can have as many apps inside your plan as you'd like (and as will fit in memory and resources). When you select a "Stack" for your app within Azure App Service for Linux, you're effectively selecting a Docker image that Azure manages for you.

I started by deploying to staging.mydomain.com and trying it out. You can use Azure Front Door or CloudFlare to manage traffic and then swap the DNS. I tested on Staging for a while, then just changed DNS directly. I waited a few hours for traffic to drain off the Windows podcast site and then stopped it. After a day or two of no traffic I deleted it. If I did my job right, none of you noticed the site moved from Windows to Linux, from .NET Core 2.2 to .NET Core 3.1. It should be as fast or faster with no downtime.

Here's a snap of my Azure Portal. As of today, I've moved my home page, my blood sugar management portal, and my podcast site all onto a single Linux App Service Plan. Each is hosted on GitHub and each is deploying automatically with Azure DevOps.

Next big migration to the cloud will be this blog which still runs .NET Framework 4.x. I'll blog how the podcast gets checked into GitHub then deployed with Azure DevOps next week.

What cool migrations have YOU done lately, Dear Reader?


© 2019 Scott Hanselman. All rights reserved.


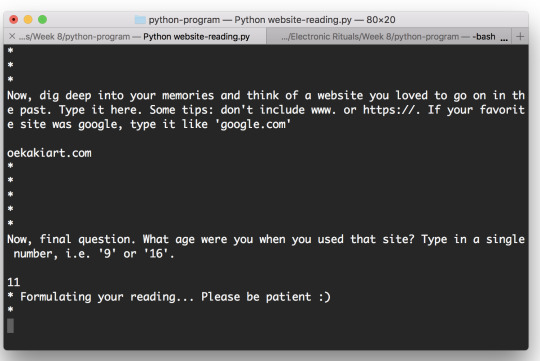

[eroft] Readings from your Favorite, Obsolete (childhood?) Website

Github Link to Project

Prompt

Invent an “-omancy,” or a form of divination/prophecy based on observing and interpreting natural events. Your reading of “natural” should make some reference to digital/electronic/computational media. (What counts as a “natural event” on the Internet? What’s the electronic equivalent of phrenology, from both a physical computing perspective and a data analysis perspective? Does it count as “interpretation” if it’s being performed by a computer program?) I’m especially interested in responses that take the form of purposefully inaccurate data analysis.

Coming up with an idea

For this week’s project, I was thinking about a lot of ways to frame “natural events” in the context of digital media. Allison’s reference to our browser search history being a possible “natural event” made me think about internet history on a broader scale: what sites did we frequent when we were much younger? Which ones remain in our memories? Which ones are sentimental to us and what could that imply about who we are and how we’ve grown?

In a sense, the first websites we interacted with could be considered a “natural event” because they’re embedded in our history (like our IRL birthplace and time), and we didn’t really think consciously about them or navigate the web with some higher meaning in mind (people likely just went on whatever site was cool or fun).

I decided to make a python program to engage the user in a reading, first querying for some key personal details, and then generating a brief reading based off of keywords scraped from their favorite childhood (I’m saying childhood here because that’s how I experienced my first time on the web, but I realize there are people whose first experiences on the web were much later) website. The old websites in question are scraped from the Wayback Machine, which over the years has saved snapshots of websites and lets you make API calls that link you to a certain website’s view at a given time in history (or the closest possible snapshot to that point).
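The snapshot lookup could be sketched as follows, using the Wayback Machine's public "availability" endpoint (https://archive.org/wayback/available). That endpoint is an assumption, since the post doesn't say which Wayback API call the original program makes. The function names are hypothetical. The visit year is estimated as birth year plus age. The endpoint takes a 1 to 14 digit YYYYMMDDhhmmss timestamp and returns the closest capture it has.

```python
import json
from typing import Optional
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint: the Wayback Machine availability API.
AVAILABILITY_ENDPOINT = "https://archive.org/wayback/available"


def target_timestamp(birth_year: int, age: int) -> str:
    """Estimate the visit year as birth year + age (a bare YYYY timestamp)."""
    return str(birth_year + age)


def availability_url(site: str, timestamp: str) -> str:
    """Build the query URL asking for the capture closest to `timestamp`."""
    query = urlencode({"url": site, "timestamp": timestamp})
    return f"{AVAILABILITY_ENDPOINT}?{query}"


def closest_snapshot(response: dict) -> Optional[str]:
    """Return the archived page URL from the JSON reply, or None if nothing is archived."""
    closest = response.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest["url"]
    return None


def fetch_closest_snapshot(site: str, birth_year: int, age: int) -> Optional[str]:
    """Network call: ask the Wayback Machine for the capture nearest the estimated year."""
    url = availability_url(site, target_timestamp(birth_year, age))
    with urlopen(url, timeout=30) as resp:
        return closest_snapshot(json.load(resp))
```

For the walkthrough below, `fetch_closest_snapshot("oekakiart.com", 1996, 11)` would ask for the capture closest to 2007. Returning `None` gives the caller a chance to report "no archive" instead of crashing.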

Some other things I tried but ruled out:

A web-based JS program: I intended to embed the result from the Wayback Machine in an HTML iframe and then do the parsing there. This didn’t work because browsers don’t let a page access the HTML inside an iframe that embeds a site from a different domain (the same-origin policy).

A browser extension: I guess this would ideally display as a popup over the Wayback Machine page in question? I couldn’t really figure out whether an extension could help calculate which page to go to in the first place, so I went with a simple Python program instead.

System diagram + implementation

Here are the general steps my program takes:

Query for the user’s birth year, favorite website, and what age they remember using that site.

Use Wayback Machine API to request their view of the favorite site at the year they likely used it (or the closest year).

Scrape all text with the BeautifulSoup Python library and segment it into “words”, i.e. strings containing only alphabetical characters.

Use a master list of all English words in ConceptNet, and keep only the “words” from the website that are actual English words in ConceptNet.

Select a few of these resulting keywords from the website. Use ConceptNet’s relatedness API call to compare these words to common Personality Adjectives from the Corpora project. Keep track of the adjective with the highest relatedness for each keyword.

Use the best adjectives we’ve tracked to construct a reading.
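The scraping and filtering steps above can be sketched roughly as follows. The post uses BeautifulSoup, where `BeautifulSoup(html, "html.parser").get_text()` would supply the text. To keep the sketch free of third-party packages, it swaps in the standard library's `html.parser` instead. Skipping `<script>` and `<style>` contents is a choice made here, not something the post describes. `vocabulary` stands in for the ConceptNet English word list, loaded however you store it.

```python
import re
from collections import Counter
from html.parser import HTMLParser

WORD_RE = re.compile(r"[A-Za-z]+")  # a "word" = a run of alphabetical characters only


class TextExtractor(HTMLParser):
    """Collect text nodes from an HTML page, ignoring <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def page_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Joining with spaces keeps words from neighbouring elements apart.
    return " ".join(parser.chunks)


def english_word_counts(html: str, vocabulary: set) -> Counter:
    """Count lowercase alphabetic tokens that appear in the English vocabulary."""
    words = (w.lower() for w in WORD_RE.findall(page_text(html)))
    return Counter(w for w in words if w in vocabulary)
```

Keeping counts rather than a plain set matters for the next step, where frequency drives which keywords get picked.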

Some notes:

The list of resulting English words from the HTML page tend to be long, so I have a way of randomly selecting a few of these keywords to be used in the reading. Currently, my randomizer works such that words that appear more frequently have a better chance of being selected, proportional to their frequency in the HTML file.
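Frequency-weighted selection could look something like this. The sketch assumes you want distinct keywords. `random.choices` draws with replacement, so each pick is removed from the pool before the next draw. `pick_keywords` is a hypothetical name, not the author's function.

```python
import random
from collections import Counter
from typing import List, Optional


def pick_keywords(counts: Counter, k: int, rng: Optional[random.Random] = None) -> List[str]:
    """Draw up to k distinct words; each draw is weighted by the word's frequency."""
    rng = rng if rng is not None else random.Random()
    remaining = {w: c for w, c in counts.items() if c > 0}  # zero weights can't be drawn
    picked: List[str] = []
    while remaining and len(picked) < k:
        words = list(remaining)
        weights = [remaining[w] for w in words]
        choice = rng.choices(words, weights=weights, k=1)[0]
        picked.append(choice)
        del remaining[choice]  # remove it so the same keyword isn't picked twice
    return picked
```

Passing a seeded `random.Random(...)` makes a reading reproducible, which is handy when comparing runs.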

I realized early on that comparing selected webpage words to *every single* adjective in the corpora project (there are >300) via the ConceptNet API wasn’t going to work. So I reduce down the pool of personality adjectives randomly each time the program runs. This likely weeds out adjectives that could be better fits for a given word, but it’s unclear how to work around that and make more API calls without making the program run too slow.
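Here is a sketch of the "random sub-pool, then best match" idea. It assumes ConceptNet's public `/relatedness` endpoint (`https://api.conceptnet.io/relatedness?node1=/c/en/...&node2=/c/en/...`, which returns JSON with a `value` field) is reachable. The scorer is a parameter, so it can be tested offline with a stand-in. The pool is drawn once per run, as the post describes. That keeps the API calls at `len(keywords) * pool_size` instead of `len(keywords) * len(adjectives)`.

```python
import json
import random
from typing import Callable, Dict, List, Optional
from urllib.parse import urlencode
from urllib.request import urlopen


def conceptnet_relatedness(word_a: str, word_b: str) -> float:
    """Ask ConceptNet how related two English terms are (requires network access)."""

    def term(word: str) -> str:
        # ConceptNet term URIs use lowercase and underscores for spaces.
        return "/c/en/" + word.strip().lower().replace(" ", "_")

    query = urlencode({"node1": term(word_a), "node2": term(word_b)}, safe="/")
    with urlopen(f"https://api.conceptnet.io/relatedness?{query}", timeout=30) as resp:
        return float(json.load(resp)["value"])


def best_adjectives(
    keywords: List[str],
    adjectives: List[str],
    pool_size: int,
    relatedness: Callable[[str, str], float] = conceptnet_relatedness,
    rng: Optional[random.Random] = None,
) -> Dict[str, str]:
    """Map each keyword to its most related adjective from one random sub-pool."""
    rng = rng if rng is not None else random.Random()
    pool = rng.sample(adjectives, min(pool_size, len(adjectives)))  # drawn once per run
    return {word: max(pool, key=lambda adj: relatedness(word, adj)) for word in keywords}
```

The trade-off the post mentions shows up directly here: a smaller `pool_size` is faster but may drop the adjective that would have scored highest.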

The program breaks if you don’t put in a valid website (one that has archives on the Wayback Machine) and other valid inputs. It also assumes that the website you used to go on has text to pull from (so websites built entirely in Flash are a no-go and will break the program).
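One way to catch bad answers before any API call is sketched below. It checks the same rules the prompts in the walkthrough state: a four-digit year, a single number for age, and a site typed like 'google.com'. These helpers are hypothetical additions, not part of the original program. The site check is deliberately loose and can't tell whether the Wayback Machine actually has archives.

```python
import re


def parse_birth_year(answer: str) -> int:
    """Accept exactly four ASCII digits, e.g. '1991'."""
    answer = answer.strip()
    if not re.fullmatch(r"[0-9]{4}", answer):
        raise ValueError("Please enter your birth year as four digits, e.g. '1991'.")
    return int(answer)


def parse_age(answer: str) -> int:
    """Accept a single whole number such as '9' or '16'."""
    answer = answer.strip()
    if not re.fullmatch(r"[0-9]{1,3}", answer):
        raise ValueError("Please enter your age as a single number, e.g. '9'.")
    return int(answer)


def normalize_site(answer: str) -> str:
    """Strip a scheme, a leading 'www.' and trailing slashes: 'https://www.google.com/' -> 'google.com'."""
    site = answer.strip().lower()
    site = re.sub(r"^[a-z][a-z0-9+.-]*://", "", site)
    site = re.sub(r"^www\.", "", site)
    site = site.rstrip("/")
    # Loose sanity check only: non-empty, contains a dot, no whitespace.
    if not site or "." not in site or re.search(r"\s", site):
        raise ValueError("Please enter a site like 'google.com'.")
    return site
```

A prompt loop can catch `ValueError` and re-ask, instead of letting the program crash.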

Program Walkthrough

Hey there! This is a program that peers into your formative internet days and from that, tells you a bit about yourself.

To start, what's your name?

> Jackie

Hello Jackie! It's nice to meet you. To give us a bit more information to work with, what year were you born? Please format it with four digits, i.e.: '2019', '1991'.

> 1996

Now, dig deep into your memories and think of a website you loved to go on in the past. Type it here. Some tips: don't include www. or https://. If your favorite site was google, type it like 'google.com'

> oekakiart.com

Now, final question. What age were you when you used that site? Type in a single number, i.e. '9' or '16'.

> 11

* Formulating your reading... Please be patient :)

*~*~*~*~Your Reading~*~*~*~

Growing up, you've been somewhat lively at your core.

You've learned some sullen tendencies in more recent times, Jackie.

Try playing around with a deep point of view.

This is a canned structure I currently have in place for all readings: “Growing up, you’ve been somewhat [Adjective 1] at your core. You’ve learned some [Adjective 2] tendencies in more recent times, [name]. Try playing around with a [Adjective 3] point of view.”
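The canned structure maps naturally onto a format string. This sketch assumes the three best adjectives fill the slots in order, which the post doesn't spell out. The naive "a"/"an" choice is an addition so that an adjective like "open" reads correctly. It goes by first letter only, so words like "honest" would still get "a".

```python
READING_TEMPLATE = (
    "Growing up, you've been somewhat {core} at your core.\n"
    "You've learned some {recent} tendencies in more recent times, {name}.\n"
    "Try playing around with {article} {suggested} point of view."
)


def indefinite_article(word: str) -> str:
    """Naive 'a'/'an' choice by first letter (wrong for words like 'honest')."""
    return "an" if word[:1].lower() in ("a", "e", "i", "o", "u") else "a"


def format_reading(name: str, adjectives: list) -> str:
    """Fill the template with the first three adjectives, in order (an assumption)."""
    if len(adjectives) < 3:
        raise ValueError("need three adjectives")
    core, recent, suggested = adjectives[:3]
    return READING_TEMPLATE.format(
        core=core,
        recent=recent,
        name=name,
        article=indefinite_article(suggested),
        suggested=suggested,
    )
```

With `format_reading("Jackie", ["lively", "sullen", "deep"])` this reproduces the three lines of the walkthrough reading.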

Reflection

I guess one of the reasons that this concept stuck with me is that I personally have a strong sentimental attachment to websites from my childhood. The one I selected, “oekakiart.com”, was an online drawing community (that also intersected with fandom/anime cultures), where I first developed an interest in visual/digital art.

I recognize that my program is slightly imperfect: because I only ask for ballpark dates (birth year and age) rather than specific dates, the Wayback Machine query can land on a version of the page that the user doesn’t remember.

For example, when I put in age 10, I got this version of oekakiart.com:

I don’t remember seeing this homepage ever, even though I started to interact with the site at age 10.

Meanwhile, when I typed in 11 as my age at the time, I got the version I was acquainted with:

With some more time, I’d like to explore how to make the canned response for the reading less canned. Perhaps there are ways to explore how adjectives are semantically different, and structure the reading in a way that can allow for sentence structures like “You’ve been brave recently, but in the past you’ve been trepid”.

There’s also something really impactful and melancholy to me about seeing the broken vestiges of a site I really used to love and frequent daily, in conjunction with wistful phrases that make me reflect upon my life/personality. I’m super curious about how other people would use this and what they’d feel.

Complete Web Development From Scratch with Yii 2 Framework Udemy 2

New Post has been published on https://hititem.kr/complete-web-development-from-scratch-with-yii-2-framework-udemy-2/

Complete Web Development From Scratch with Yii 2 Framework Udemy 2

Whats up at present we’re going to talk about get probably the most fashionable variation manage process these days it’s been increasingly foremost position for tracking alterations in files and coordinating work on these files amongst more than one persons that is why it can be extremely recommended software for all program developers including folks that advance OCD 2 on this video i will provide an explanation for you the most predominant elements of package for web builders we can see methods to create a repository and get started with the variation manage for venture records I may even explain you the basic operations akin to check out commit and others i am lost from digitally let’s start let’s navigate our task and create a local repository so we go to our assignment file so i’m here in the project and notice every stages levels so it can be not yet initialized we just to get in it and then we will see we’ve nearby repository now we create a far off repository we go to github.Com if you happen to would not have account yet you can just signal up i’m going to sign in with my account i am going to my repositories and create a brand new one so we’ll supply a reputation just project and create a repository now can make our first commit so we have got to add here first some files we add readme in fame so we see here on monitor add records however we have right here ring me goes to be committed then we do git commit with a message first whole now we can set our far flung repository git far off i’m going to simply copy paste it and we will push changes give push or imaginative and prescient to the master branch our username password so i have pushed our file our first commit was once only readme pushing so we can see the file here was once one commit and this is a commit message yeah no let’s are trying now to push the entire task we just we simply do B and the whole lot and then git commit all with the message preliminary commit the conclusion master so utilising 
our username and password. Now we can see two commits here, the initial commit and the first commit, and we can also see the project files.

You have probably noticed that we must enter our username and password each time we push changes. If you want to connect to GitHub without supplying your credentials every time, you can use SSH keys. Let's configure our SSH keys now so we can connect without typing a username and password; this will save a lot of time during development.

To see how to do it, we Google "SSH keys", use the second link, "Generating a new SSH key and adding it to the ssh-agent", and simply follow the instructions. We put our email address here, and it generates the public and private keys. I had already generated a key before, so I just generate it again. For the passphrase I simply press Enter twice; I don't need a passphrase. Now we have generated our public and private keys.

Next, we start the SSH agent (we just make sure it is running) and add our private key to it. The private key has been added.

The next step is adding the SSH key to our GitHub account. We go to our account's profile settings, then to "SSH and GPG keys". For the title I'll use "my first SSH key". Then we open our public key file, copy its content, paste it here and click "Add SSH key".

Now we can test it with git push origin master. It still asks for our username and password, because the remote URL we set uses an HTTPS connection, whereas we want to use SSH. We go to our repository on GitHub and see the HTTPS URL here; switching to SSH shows the SSH URL, so I just copy it. Then I reset our remote with git remote set-url origin followed by the SSH URL we copied. Testing again with git push origin master, it no longer asks for a username and password and just says "Everything up-to-date". It works, so we don't have to type our username and password anymore.

Now let's look at the common workflow for our project. For now we have only the master branch, with our initial commit. We create a second branch called development. These two branches will exist for the duration of the whole project, and we will never delete them.

If we have a bug, or are going to create a new feature, we create a new branch, called for example bug1, and work on that branch, so our changes won't be visible to other developers on this project. Fixing a bug can take a long time, and we don't want to affect the stable version of our project. After we have finished and tested the fix very carefully, we merge the changes into the development branch. The same goes for new features: we develop each feature on a separate branch (feature1, feature2 and so on), and after we have tested it, we merge it into the development branch. Every month, for example, you may release a new version: you merge your changes from development into master and do the release.

The main difference between the master and development branches is that the source code in master is very stable. We merge changes from development only after, for example, our internal Quality Assurance department (or an external one) has fully tested the new features or fixes.

Let's see how this works. We check all the branches in our local repository with git branch; so far we have only the master branch. We create the new development branch with git checkout -b development. Checking the branches again, we now have two: master and development. Let's push the development branch with git push origin development. On GitHub we can now see our project with two branches, master and development, which are identical.

Now let's see how to create other branches for bugs or features. We run git checkout -b bug1. Then we go to our project files; say we have some typos. We go to views and our home page, find a typo and fix it (this is just an example). Running git status, we see that index.php was modified. We add it to be committed and commit it with git commit -m "Fixed typo on the home page". Then we push it to the branch with git push origin bug1. On GitHub we can see that the branch was updated a minute ago, along with the commit "Fixed typo on the home page" and exactly what was changed.

Let's say we have now tested this fix and want to merge it into development. On GitHub we click "Compare & pull request" for bug1. Here we can see that this pull request would merge one commit into master. We don't want to merge into master, so we close the pull request. Instead, we go to the branches list, open this branch and create a new pull request, this time choosing development rather than master, and click "Create pull request". GitHub checks whether the branches can be merged, and then we merge. Now we can see that development was modified less than a minute ago. If we switch to development and open views and index.php, we can see our edit is there, since we merged it. We can also delete this branch on GitHub, since we don't need it anymore. After deleting it, we again have two branches.

Now we go to our local repository and check out development. Our local repository doesn't have these changes yet, so we pull them with git pull origin development, and we can see that one file was changed. We do the same with other branches: we create a branch, make changes, test them, merge into development once they are verified, and then delete the branch. To delete it locally too, we run git branch, which shows the bug1 branch, then git branch -d bug1, and git branch again to confirm it is gone. We delete the branch because we don't need it anymore.

That's it for this video. I told you about Git, the most popular version control system today. We configured our local repository and a remote one on GitHub, learned to use SSH keys to connect to GitHub without supplying a username and password every time, and finally I explained the basic Git operations. I encourage you to use Git on all your projects and get the full benefits of version control. Thanks for watching, I wish you a lot of fun with your studies, and good luck!
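The bug-fix loop from this video (branch off development, commit, push, merge through a pull request, pull, delete) can be written down as an ordered list of git commands. The sketch below is in Python and assumes the branch names used in the video, development and bug1; the helpers bugfix_workflow and run are hypothetical names, not part of Git or of the video.

```python
import shlex
import subprocess


def bugfix_workflow(branch: str, message: str, base: str = "development") -> list[list[str]]:
    """Return, in order, the git commands for one short-lived bug-fix branch."""
    return [
        ["git", "checkout", "-b", branch, base],  # new branch starting from `base`
        ["git", "add", "-A"],                     # stage the fix
        ["git", "commit", "-m", message],         # record it locally
        ["git", "push", "origin", branch],        # publish the branch for a pull request
        # (the pull request from `branch` into `base` is merged on GitHub at this point)
        ["git", "checkout", base],                # return to the long-lived branch
        ["git", "pull", "origin", base],          # bring in the merged change
        ["git", "branch", "-d", branch],          # delete the merged local branch
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print("$", shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing command


if __name__ == "__main__":
    run(bugfix_workflow("bug1", "Fixed typo on the home page"))
```

By default run() only prints the commands. Executing them for real only makes sense in two halves, because the pull request has to be merged on GitHub between the push and the pull; git branch -d also refuses to delete a branch that has not been merged, which check=True turns into an exception.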



Complete Web Development From Scratch with Yii 2 Framework Udemy 2

New Post has been published on https://hititem.kr/complete-web-development-from-scratch-with-yii-2-framework-udemy-2/


Hello! Today we're going to talk about Git, the most popular version control system today. It plays an increasingly important role in tracking changes in files and coordinating work on those files among multiple people, which is why it is highly recommended for all software developers, including those who develop with Yii 2. In this video I will explain the main features of Git for web developers: we'll see how to create a repository and get started with version control for project files, and I'll explain the basic operations such as checkout, commit and others. Let's start.

First, let's create a local repository. We go to our project folder and run git status: it tells us the folder is not yet a repository. We run git init, and now we have a local repository.

Now we create a remote repository. We go to github.com (if you don't have an account yet, you can just sign up); I'll sign in with my account, go to my repositories and create a new one. We give it a name, just "project", and create the repository.

Now we can make our first commit. First we have to add some files: we add the README with git add, and git status shows that the README is going to be committed. Then we run git commit with the message "first commit". Next we set our remote repository with git remote add origin, pasting the URL copied from GitHub, and push our changes to the master branch with git push origin master, entering our username and password. Our first commit, containing only the README, has been pushed: on GitHub we can see the file, one commit, and its commit message.

Now let's push the whole project. We run git add . to stage everything, then git commit with the message "initial commit", then git push origin master, using our username and password. Now we can see two commits here, the initial commit and the first commit, and we can also see the project files.

You have probably noticed that we must enter our username and password each time we push changes. If you want to connect to GitHub without supplying your credentials every time, you can use SSH keys. Let's configure our SSH keys now so we can connect without typing a username and password; this will save a lot of time during development.

To see how to do it, we Google "SSH keys", use the second link, "Generating a new SSH key and adding it to the ssh-agent", and simply follow the instructions. We put our email address here, and it generates the public and private keys. I had already generated a key before, so I just generate it again. For the passphrase I simply press Enter twice; I don't need a passphrase. Now we have generated our public and private keys.

Next, we start the SSH agent (we just make sure it is running) and add our private key to it. The private key has been added.

The next step is adding the SSH key to our GitHub account. We go to our account's profile settings, then to "SSH and GPG keys". For the title I'll use "my first SSH key". Then we open our public key file, copy its content, paste it here and click "Add SSH key".

Now we can test it with git push origin master. It still asks for our username and password, because the remote URL we set uses an HTTPS connection, whereas we want to use SSH. We go to our repository on GitHub and see the HTTPS URL here; switching to SSH shows the SSH URL, so I just copy it. Then I reset our remote with git remote set-url origin followed by the SSH URL we copied. Testing again with git push origin master, it no longer asks for a username and password and just says "Everything up-to-date". It works, so we don't have to type our username and password anymore.

Now let's look at the common workflow for our project. For now we have only the master branch, with our initial commit. We create a second branch called development. These two branches will exist for the duration of the whole project, and we will never delete them.

If we have a bug, or are going to create a new feature, we create a new branch, called for example bug1, and work on that branch, so our changes won't be visible to other developers on this project. Fixing a bug can take a long time, and we don't want to affect the stable version of our project. After we have finished and tested the fix very carefully, we merge the changes into the development branch. The same goes for new features: we develop each feature on a separate branch (feature1, feature2 and so on), and after we have tested it, we merge it into the development branch. Every month, for example, you may release a new version: you merge your changes from development into master and do the release.

The main difference between the master and development branches is that the source code in master is very stable. We merge changes from development only after, for example, our internal Quality Assurance department (or an external one) has fully tested the new features or fixes.

Let's see how this works. We check all the branches in our local repository with git branch; so far we have only the master branch. We create the new development branch with git checkout -b development. Checking the branches again, we now have two: master and development. Let's push the development branch with git push origin development. On GitHub we can now see our project with two branches, master and development, which are identical.

Now let's see how to create other branches for bugs or features. We run git checkout -b bug1. Then we go to our project files; say we have some typos. We go to views and our home page, find a typo and fix it (this is just an example). Running git status, we see that index.php was modified. We add it to be committed and commit it with git commit -m "Fixed typo on the home page". Then we push it to the branch with git push origin bug1. On GitHub we can see that the branch was updated a minute ago, along with the commit "Fixed typo on the home page" and exactly what was changed.

Let's say we have now tested this fix and want to merge it into development. On GitHub we click "Compare & pull request" for bug1. Here we can see that this pull request would merge one commit into master. We don't want to merge into master, so we close the pull request. Instead, we go to the branches list, open this branch and create a new pull request, this time choosing development rather than master, and click "Create pull request". GitHub checks whether the branches can be merged, and then we merge. Now we can see that development was modified less than a minute ago. If we switch to development and open views and index.php, we can see our edit is there, since we merged it. We can also delete this branch on GitHub, since we don't need it anymore. After deleting it, we again have two branches.

Now we go to our local repository and check out development. Our local repository doesn't have these changes yet, so we pull them with git pull origin development, and we can see that one file was changed. We do the same with other branches: we create a branch, make changes, test them, merge into development once they are verified, and then delete the branch. To delete it locally too, we run git branch, which shows the bug1 branch, then git branch -d bug1, and git branch again to confirm it is gone. We delete the branch because we don't need it anymore.

That's it for this video. I told you about Git, the most popular version control system today. We configured our local repository and a remote one on GitHub, learned to use SSH keys to connect to GitHub without supplying a username and password every time, and finally I explained the basic Git operations. I encourage you to use Git on all your projects and get the full benefits of version control. Thanks for watching, I wish you a lot of fun with your studies, and good luck!
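Switching the remote from HTTPS to SSH comes down to rewriting the repository URL GitHub shows. A small sketch in Python, assuming the standard github.com URL shapes (https://github.com/OWNER/REPO.git and git@github.com:OWNER/REPO.git); to_ssh_remote and set_url_command are hypothetical helpers, and user/project are placeholder names.

```python
import re

# Matches GitHub HTTPS remotes such as https://github.com/owner/repo.git
_HTTPS_REMOTE = re.compile(r"^https://github\.com/([^/]+)/([^/]+?)(?:\.git)?/?$")


def to_ssh_remote(https_url: str) -> str:
    """Convert a GitHub HTTPS clone URL into the equivalent SSH form."""
    match = _HTTPS_REMOTE.match(https_url)
    if match is None:
        raise ValueError(f"not a GitHub HTTPS remote: {https_url!r}")
    owner, repo = match.groups()
    return f"git@github.com:{owner}/{repo}.git"


def set_url_command(https_url: str, remote: str = "origin") -> list[str]:
    """Build the `git remote set-url` call that points a remote at its SSH URL."""
    return ["git", "remote", "set-url", remote, to_ssh_remote(https_url)]


if __name__ == "__main__":
    print(" ".join(set_url_command("https://github.com/user/project.git")))
```

After running the printed command, GitHub's documentation suggests ssh -T git@github.com as a quick check that the key is accepted before the next push.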



Our 10 Favorite Open Source Resources From 2017

So many new projects are released every year and it’s tough to keep track. This is great for developers who benefit from the open source community. But it makes searching a lot more difficult.

I’ve scoured GitHub, organizing the best projects I could find that were released in 2017. These are my personal favorites and they’re likely to be around for quite a while.

Note that these are projects that were originally created during 2017. They each show tons of value and potential for growth. There may be other existing projects that grew a lot over 2017, but I’m hoping to focus on newer resources that have been gaining traction.

1. Vivify

The Vivify CSS library was first published to GitHub in late August 2017. It’s been updated a few times but the core goal of the library is pretty clear: awesome CSS effects.

Have a look at the current homepage and see what you think. It works much like the Animate.css library – except the features are somewhat limited. Yet, they also feel easier to customize.

There are a bunch of custom animations in here that I’ve never seen anywhere else. Things like paper folding animations, rolling out with fades and fast dives/swoops from all directions.

One of the best new libraries to use for modern CSS3 animations.

2. jQuery Steps

With the right plugins you can extend your forms with a bunch of handy UX features. Some of these may be aesthetic-only, while others can radically improve your form’s usability.

The jQuery Steps plugin is one such example. It was first released on April 19th, and it's perhaps the coolest progress step plugin out there.

It’s super lightweight and runs with just a few lines of JS code (plus a CSS stylesheet).

Take a look at their GitHub repo for a full setup guide. It’s a lot easier than you might think and the final result looks fantastic.

Plus, the plugin comes with several options to customize the progress bar’s design.

3. Petal CSS

There’s a heavy debate on whether frontend frameworks are must-haves in the current web space. You certainly have a lot to pick from and they all vary so much. But one of my newest favorites is Petal CSS.

Petal is no doubt one of the better frameworks released in 2017, and I've recommended it many times over the past year. I think it's a powerful choice for minimalist designers.

It doesn’t force any certain type of interface and it gives you so much control over which features you want to use.

This can’t compete with the likes of Bootstrap…but thankfully it wasn’t designed to! For a small minimalist framework, Petal is a real treat.

4. Flex UI

The Flex UI Kit is another CSS framework released in 2017. This one’s a bit newer so it doesn’t have as many updates. But it’s still usable in real-world projects.

Flex UI stands out because the entire framework is built on flexbox. This means that all of the responsive code, layout grids and typography are structured using flexbox. No more floated elements and clearfix hacks with this framework.

I do find this a little more generic than the Petal framework, but it’s also a reliable choice. Have a look at the demo page for sample UI elements.

5. Sticky Sidebar

You can add sticky sidebars onto any site to increase ad views, keep featured stories while scrolling or even increase email signups through your opt-in form.

In May 2017, developer Ahmed Bouhuolia released this Sticky Sidebar plugin. It runs on pure JavaScript and uses custom functions to auto-calculate where the last item should appear, based on the viewer’s browser width.
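The core of any sticky sidebar is a little clamping arithmetic on every scroll event. The sketch below is a generic version of that technique written in Python for readability, not the plugin's actual code; the function name, parameters and the "short vs. tall sidebar" rule are assumptions. All values are page y-coordinates in pixels.

```python
def sidebar_top(
    scroll_top: float,
    viewport_height: float,
    container_top: float,
    container_bottom: float,
    sidebar_height: float,
    top_spacing: float = 0.0,
) -> float:
    """Page y-coordinate for the sidebar's top edge at the current scroll position."""
    if sidebar_height + top_spacing <= viewport_height:
        # Short sidebar: pin its top just below the top of the viewport.
        desired = scroll_top + top_spacing
    else:
        # Tall sidebar: pin its bottom edge to the bottom of the viewport.
        desired = scroll_top + viewport_height - sidebar_height
    # Never rise above the container, and never run past its bottom edge.
    lowest = container_bottom - sidebar_height
    return max(container_top, min(desired, lowest))


# A 300px sidebar in a container spanning y = 100..2000, viewed in an 800px-tall window.
for scroll in (0, 500, 1900):
    print(scroll, sidebar_top(scroll, 800, 100, 2000, 300))
```

For the simple short-sidebar case, modern browsers can do the same clamping natively with CSS position: sticky; the JavaScript approach earns its keep with sidebars taller than the viewport.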

The demo page has plenty of examples, along with guides for getting started. Anyone who’s into vanilla JS should give this a shot.

6. rFramework

Looking for another awesome startup framework for the web? rFramework might be worth your time since it’s fully semantic and plays nice with other libraries such as Angular.

To get started all you need are the two CSS & JS files – both of which you can pull from the GitHub repo. All the styles are pretty basic which makes this a great starting point for building websites without reworking your own code base.

Also take a look at their live page showcasing all of the core features that rFramework has to offer.

It may not seem like much now, but this has the potential to grow into a solid minimalist framework in the coming years.

7. NoobScroll

In mid-April 2017 NoobScroll was released. It’s a scrolling library in JavaScript that lets you create some pretty wacky effects with user scroll behaviors.

Have a look over the main page for some live demos and documentation. With this library you can disable certain scrollbars, create smooth scroll animations or even add a custom scroll bar into any element.
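Smooth scroll animations usually work by easing the scroll position over a fixed number of animation frames instead of jumping straight to the target. Here is a minimal sketch of that idea in Python, assuming an ease-in-out cubic curve (a common choice, not necessarily the one NoobScroll uses); in a browser each value would be applied once per requestAnimationFrame tick.

```python
def ease_in_out_cubic(t: float) -> float:
    """Map linear progress t in [0, 1] to eased progress in [0, 1]."""
    if t < 0.5:
        return 4 * t ** 3                  # accelerate through the first half
    return 1 - (-2 * t + 2) ** 3 / 2       # decelerate through the second half


def smooth_scroll_positions(start: float, end: float, frames: int) -> list[float]:
    """Scroll offset for each animation frame, from start to end inclusive."""
    if frames < 2:
        return [end]
    return [start + (end - start) * ease_in_out_cubic(i / (frames - 1)) for i in range(frames)]


print(smooth_scroll_positions(0, 1000, 5))
```

The motion starts slowly, moves fastest around the middle and settles gently on the target, which is what makes the scroll feel "smooth" rather than mechanical.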

This is perfect for creating long flyout navigation on mobile screens. With this approach, you can have lengthy dropdown menus without having them grow too large.

8. jQuery Gantt

Tech enthusiasts and data scientists likely know about Gantt charts, although they're less familiar to the general public. A Gantt chart is a graphical representation of a schedule, and it's not something you usually find on the web.

jQuery Gantt is the first plugin of its kind, released on April 24, 2017. This has so many uses for booking, managing teams or even with SaaS apps that rely heavily on scheduling (ex: social media management tools).

It works in all modern browsers with legacy support for IE 11. You can learn more on their GitHub page, which also has setup docs for getting started.
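Under the hood, every Gantt chart maps dates to horizontal positions: a task's bar starts at its offset from the chart's first day and is as wide as its duration. A minimal sketch in Python, assuming inclusive start/end dates and a fixed pixels-per-day scale; gantt_bar and render_row are hypothetical helpers, not jQuery Gantt's API, and the task names are made up.

```python
from datetime import date


def gantt_bar(task_start: date, task_end: date, chart_start: date, px_per_day: int = 20) -> tuple[int, int]:
    """(x offset, width) in pixels for one task bar; both dates are inclusive."""
    if task_end < task_start:
        raise ValueError("task ends before it starts")
    offset_days = (task_start - chart_start).days
    duration_days = (task_end - task_start).days + 1  # a one-day task still gets one column
    return offset_days * px_per_day, duration_days * px_per_day


def render_row(name: str, task_start: date, task_end: date, chart_start: date) -> str:
    """Text version of a Gantt row, one character per day."""
    x, width = gantt_bar(task_start, task_end, chart_start, px_per_day=1)
    return f"{name:<8}|" + " " * x + "#" * width


chart_start = date(2017, 4, 24)
print(render_row("Design", date(2017, 4, 24), date(2017, 4, 26), chart_start))
print(render_row("Build", date(2017, 4, 26), date(2017, 5, 2), chart_start))
```

A browser plugin does the same calculation and turns the pair into a positioned element's left and width instead of spaces and hash marks.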

9. Paroller.js

Building your own parallax site is easier now than ever before. And thanks to plugins like Paroller.js, you can do it in record time.

This free jQuery plugin lets you add custom parallax scrolling features onto any page element. You can target specific background photos, change the scroll speed, and even alter the direction between horizontal and vertical.
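The parallax effect itself is one multiplication: the element moves by a fraction of the distance the page has scrolled, along whichever axis you pick. A sketch of that calculation in Python, assuming a CSS transform is applied on each scroll event; the factor and direction parameters are hypothetical stand-ins for the "scroll speed" and "direction" options described above, not Paroller.js's actual setting names.

```python
def parallax_transform(scroll_y: float, element_top: float, factor: float, direction: str = "vertical") -> str:
    """CSS transform that moves an element at a fraction of the scroll speed."""
    # Distance the page has scrolled past the element's top, scaled by the factor.
    offset = round((scroll_y - element_top) * factor)
    if direction == "vertical":
        return f"translateY({offset}px)"
    if direction == "horizontal":
        return f"translateX({offset}px)"
    raise ValueError(f"unknown direction: {direction!r}")


print(parallax_transform(600, 400, 0.3))
print(parallax_transform(600, 400, 0.5, "horizontal"))
```

A smaller factor makes the element lag further behind the scroll; a negative one makes it move against the scroll direction.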

It’s a pretty solid plugin that still gets frequent updates. Have a look at their GitHub repo for more details.

10. Password Strength Meter

Last, but certainly not least on my list is this password strength plugin, created March 11, 2017. It’s built on jQuery and gets frequent updates for new features & bug fixes.

With this plugin you can change the difficulty rating for password complexity. Plus, you can define certain parameters like the total number of required uppercase letters or special characters.
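Configurable rules like these boil down to counting character classes and comparing them against thresholds. A minimal sketch in Python, assuming three rules (length, uppercase letters, special characters taken as ASCII punctuation) and a made-up three-level rating; none of the names come from the plugin itself.

```python
import string


def password_issues(password: str, min_length: int = 8, min_upper: int = 1, min_special: int = 1) -> list[str]:
    """List the requirements a password fails; an empty list means it passes."""
    issues = []
    if len(password) < min_length:
        issues.append(f"at least {min_length} characters")
    if sum(c.isupper() for c in password) < min_upper:
        issues.append(f"at least {min_upper} uppercase letter(s)")
    if sum(c in string.punctuation for c in password) < min_special:
        issues.append(f"at least {min_special} special character(s)")
    return issues


def strength(password: str, **rules: int) -> str:
    """Coarse rating from how many rules fail (hypothetical scale)."""
    failed = len(password_issues(password, **rules))
    return ("strong", "medium", "weak", "weak")[failed]


print(strength("Sunny-Day42"), strength("sunnyday42!"), strength("abc"))
```

Raising min_upper or min_special is the "difficulty" knob: the same password can drop from strong to medium when the rules get stricter.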

If you’re interested in adding this to your own site, the GitHub repo is a nice place to start. The main demo page also has some cool examples you can test out.

But if you’re looking for more new open source projects, try searching GitHub to see what you find. The best resources often find a way of accruing stars, forks and social shares pretty fast.

from Web Designing https://1stwebdesigner.com/open-source-resources/



Sweet Light Studios Website Facelift


The Sweet Light Studios website needed a website facelift badly, so with the help of my Sweetheart we gave it one.

The Website Facelift addresses Mobile Devices

A while ago, some of the search engines started reporting that the Sweet Light Studios website was not optimized for mobile devices. So, we took a look at the site on my cell phone and found we needed to swipe horizontally as well as vertically. Some of the buttons were also too small to press!

http:// vs. https:// issues

The browsers also started giving us warnings that the website wasn't safe to browse, with scary icons and text. We would never want anyone to feel uncomfortable looking at the site -- scary! So, we researched it a little and found out that any websites that are http: instead of https: will cause the browsers to give stronger and stronger warnings over time. Good to know now!

WordPress Blog and Shopify integration.

The website also contained a WordPress blog on a subdomain. This caused the look and feel to change dramatically when navigating to the blog pages. It turns out this wasn't very good for SEO either, because search engines can treat a subdomain as a separate website. Knowing from experience that most purchasing decisions are emotional, we wanted to be sure to give prospective customers a way to "Buy Now" at the press of a button. Therefore, he decided we needed to integrate WordPress and Shopify within the website, rather than the other way around. Now you can read my blog and shop all in one place!

Here is a trailer about all the exciting changes you can see for yourself:

I think it's pretty darn cool, and I get excited every time I hear the music!

React to the rescue.

Because programming philosophy has shifted from desktop-first to mobile-first, new technologies have emerged. The cutting-edge technologies are React, Redux and Bootstrap, so that is what we decided to implement -- well, he implemented it, I just tried to help where I could ;) And it turned out AWESOME!!!!

Please take the time to browse around the website and check out all the cool technology. There are also a lot of other cutting-edge technologies; you can find them all listed in the credits article. Follow the link and then scroll down: https://www.sweetlightstudios.com/credits/

If you or a web developer friend are interested in the wonderful code, he has made it open source on GitHub! https://github.com/b0ts/react-redux-sweetlightstudios-website

Finally, there is another video, a programmer's overview, that walks you through all the source code.

In conclusion, I would love for you to call me today to consult on working together to create your gorgeous new image and let me help you “See Yourself in a New Light, in a Sweet Light”. Wishing you a Sweet day! XOX, She

Because it is such a great deal, schedule a session of your own at Sweet Light Studios in beautiful South San Francisco or at your site. Contact Shelah today!

Phone: 1+415/409.9389 | eMail: [email protected]

First of all, Find Us on YELP at SweetLightStudios
In addition, Follow Us on Instagram at @inSweetLight
Also, Like Us on Facebook at SweetLightStudios
& Finally, BTW, We're Right Nextdoor, too!



Our co-founder Charles Ouellet (main author) and I just published this piece on our startup blog, and I thought it'd be cool to also share it here! Enjoy :)

"I say we go for a full refactoring of the app in React."

Our new dev was excited. Green and filled with confidence.

"This wouldn't be a smart decision," I replied as softly as I could (I didn't want to shut him down too harshly). After all, a part of me did share his enthusiasm. I, too, had read the React docs. I, too, would have loved to play around with it.

But another part of me--the one trying to run a successful business--knew better. Our startup's tech stack couldn't be changed on a whim. At least not anymore.

As developers, we love trying the new and discarding the old. A few months after shipping code we're proud of, we're quick to trade pride for shame. Amidst the explosion of new frameworks, we struggle not to scratch our refactoring itch.

Our industry sure celebrates the new, hip and "latest" across the board. As a business owner, however, I've had to embrace a drabber reality:

Successful startups inevitably end up with "boring" tech stacks.

Eventually, I explained the ins and outs of this statement to the dev who suggested a React refactor of our web app. Today, however, I want to address this issue in a more structured format.

In this post, I'll discuss:

How to choose a technology stack for your startup's web app
Why successful businesses end up with "old" tech stacks
Why resisting the urge to refactor makes business sense
When and how you should refactor your web application

I'll use our own story at Snipcart to illustrate my thoughts, coupled with actionable insights.

Pre-startup days

Before launching Snipcart, I was leading web development in a cool Québec City web shop. I did mostly client work. The fun part? Trying out new technologies in real life projects. My bosses trusted their engineers, so each project launch meant the opportunity to try fresh stacks. I mostly orbited around the .NET stack, building my first project with ASP.NET MVC (in beta), and another using Nancy, an awesome open source web framework in .NET.

A couple years flew by. As my hairline began receding, I knew I needed a change from client work. I wanted to focus on a product. Fellow entrepreneurs inspired me. I knew my experimentation scope would narrow, but I was ready for new challenges. Truly scaling a project, for instance. It may sound buzzwordy, but back then I hadn't had the chance to design a system capable of handling hundreds of thousands of simultaneous requests. I decided Snipcart would be that system and began exploring my tech stack options.

Choosing your startup's tech stack

Say you've gone through your genius "aha" moment, lean canvas & piles of wire-framing. Like every other fledgling startup, you reach the crucial crossroads that is picking your tech stack.

Before giving in to the array of shiny logos, blog posts and GitHub stars trying to lure you into The Coolness, take heed of the following advice:

1. Pick a technology you're comfortable with

This one's simple, really: do not mess around with stuff you don't already know. Point blank. Keep the glossy new JS frameworks for your 14th personal site/side project, unless you're just shooting for a proof of concept. If you want to build something serious, go for familiarity. Doesn't matter if it's old, boring, uncool, etc. There's no one best technology stack for web applications.

In 2013, when I started working on Snipcart, I chose .NET for the backend. Why? Because I enjoyed working in C# and it was the stack I was the most efficient with. I knew it'd allow me to craft something solid.

As for the frontend, we picked Backbone. SPAs were relatively new to me, and a colleague had already shipped decent projects with it. Back then (jeez I sound old), options were way more limited. Knockout, Angular, Ember & Backbone were the big players.
I had no particularly fond memories of my time with Angular, so I cast it aside. Backbone, on the other hand, was more of a pattern than a framework to me. Sure, you had boilerplate to put together, but after that, it was easy to build on top of it.

2. Pick tech stacks supported by strong communities

If you're the only developer kicking off the project, this is critical advice. A day will come when you're alone in the dark, staring in utter despair at one senseless error code. When that day comes, you don't want to be roaming through ghost forums and silent chat rooms, believe me.

The cool, beta three-month-old framework might not have a rich help structure around it. That's another plus for picking "boring" techs: LOTS of girls and guys have struggled and shipped with them over the years. Like it or not, documentation and help abound in the ASP.NET realm. ;)

3. Make sure your web app stack scales

The most important scaling choice to make isn't just about how many potential requests you'll handle. By picking a stack you understand, you'll be able to design an application that's easy to build upon. Keep in mind that you won't always be the only one navigating through the codebase. If you're successful, new, different people will be working in the code.

So remember:

A good tech stack is one that scales faster than the people required to maintain it. [source]

In the beginning, I didn't really bother with scaling issues. I was too excited to just ship my own product. So I developed Snipcart the way I would've coded client projects (mistake): a single database & a web app containing everything. Truth is I never expected it to grow as it did. It didn't occur to me that our database could be the single point of failure in our project. I had never encountered such wonderful problems in client projects. So yes, I wish I had thought about scaling earlier! However, refactoring our architecture wasn't too painful, since we had picked technologies we were comfortable with. :)

4. Consider hiring pros & cons

This one's kind of a double-edged sword.

On the one hand, picking a more "traditional" stack will grant you access to a wider basin of qualified developers. On the other, picking cutting edge technologies might attract new, killer talent.

Needless to say, I tend to lean towards the former! In startup mode, you can't afford to hire an employee who needs months of ramping up to use a fringe framework. If you plan on quickly scaling the team, this is a key consideration. With Snipcart for instance, most developers fresh out of school had already worked with .NET. This definitely helped for our first hire.

However, I'll admit that having a "boring" stack can work against you.

For our second hire, .NET put us at a disadvantage: we had found the perfect candidate, who, in the end, decided that our MS stack was a no-go for him. At this point, my tech stack choice cost us a potentially great addition to the team.

Like I said, double-edged sword.

(Luckily for us, we found a new developer not long ago with solid .NET experience, and he enjoys working with us thus far!)

See our SaaS' technology stack on StackShare.

Success & sticking to your tech stack

Let's fast forward on all the hard work it actually takes to make it and pretend you just did. You blazed through product/market fit, breakeven point, and started generating profits. Your Stripe dashboard finally looks appealing.

You're "successful" now. And that probably means:

You've been working your ass off for a while--there's no such thing as overnight success.
You've been constantly shipping code with the tools you initially chose--and some aren't cool anymore.
You've got real, paying users using your platform--read: SUPPORT & MAINTENANCE.

See, when you scale, new constraints emerge. Support slows development velocity. Revenue growth means new hires (more training & management) + new expenditures (salaries, marketing, hosting).
Profitability becomes an operational challenge.

You're accountable to clients, employees and sometimes investors who all depend on your sustained success. As business imperatives trump technical concerns, your priority becomes crystal clear: keeping the company in business. And guess what? Spending hours of time on refactoring your frontend with the latest framework probably won't help you do that.

The real cost of refactoring is time not spent fixing bugs, shipping features, helping customers, and nurturing leads. These are the things that'll keep you in business.

So the real challenge becomes learning to deal with the technical decisions you've made to get here. Most times, the simple answer is to stick to your stack and focus on the business. Yes, your web application's code may look "old." But you're not alone: big, successful products still run old technologies!

Take us for instance: we're still using tech that we could label "old." Backbone is still the "backbone" of our frontend application. No, it's not the coolest JS framework available. However, it works just fine, and a full rewrite would put an insanely costly pressure on operations.

Don't get me wrong: I'm not suggesting you avoid refactoring at all cost. Products must evolve, but should do so inside the tight frame of business imperatives. Which brings us to our next point.

When does refactoring your web app make sense?

Refactoring is part of a healthy dev process and sure brings important benefits: sexier stacks for hiring, better code maintainability, increased app performance for users, etc.

As long as refactoring doesn't negatively impact the business, I'm all for it. Like I said, products must also evolve. Just recently, for instance, we began shifting our frontend development to a more powerful framework, Vue.js.

What we're doing, though, is progressive refactoring. Tools like Vue are perfect for that: they let you introduce a new tech in your stack without forcing you to throw away existing code.
This incremental approach to refactoring has proven successful for us thus far; we did something similar a few years ago when we moved from RequireJS to Webpack. Progressive refactoring is, overall, more costly in development time than a full rewrite. However, it doesn't stall business operations, which must remain a priority.

When NOT refactoring ends up negatively affecting the business, then you should start considering it more seriously. A few important "time to refactor" flags to look out for:

Parts of the code become impossibly messy or hard to maintain
Technical debt begins manifesting itself through increased support requests & churn rate
Deployment, testing & bug fixing are taking longer than they should
New developers' time-to-autonomy (shipping in production) escalates
Finding qualified developers to work on your app becomes arduous
Maintaining the architecture becomes ridiculously expensive

Note how "let's try a new stack!" and "that code isn't clean enough!" aren't listed here. Or, as Dan McKinley puts it:

One of the most worthwhile exercises I recommend here is to consider how you would solve your immediate problem without adding anything new. First, posing this question should detect the situation where the "problem" is that someone really wants to use the technology. If that is the case, you should immediately abort.

Technology for its own sake is snake oil.

This killer StackExchange answer lists even more refactoring flags you should be sensitive to.

BONUS: Where to use all these new technologies

A desire to play with shiny new toys is only natural. It comes with the active curiosity residing in any good developer. At Snipcart, every dev does lots of self-learning. Like many others, we read blogs and try to keep up with the latest trends. Of course, we can't use most of these up-and-coming tools in our core project. However, we run a developer-centric blog that covers all sorts of dev topics and tools. For us, this is a perfect playing field for experimentation.
It lets us quench our thirst for novelty and offer value to our community, all without compromising our "money" product!

For startuppers, there are a few other areas where using hip tech makes sense:

Marketing side-projects
Internal tools (analytics, comms, management, etc.)
Marketing website / blog

These are all potent areas where you can experiment and learn new skills and stacks. And they will all benefit your core business. So find the one that works for you!

Takeaways & closing thoughts

Phew, long post, huh? Before going back to my startup life, I'd like to leave you with the key takeaways here:

Technical decision-making isn't just about technologies; it's mostly about the business.
When picking a tech stack, consider: familiarity, community, hiring, scalability.
Try as much as possible to adopt a progressive approach to refactoring your stack.
Keep an eye out for relevant refactoring flags: development deceleration, talent scarcity, customer frustration.
And finally: remember that the best technology stack for startups is your own grey matter. At the end of the day, architecture decisions & craftsmanship will eclipse tech choices.

Explore examples of startup & SaaS technology stacks on StackShare.io.

Originally published on Snipcart's blog.

Nuxt.js: A Universal Vue.js Application Framework

Universal (or Isomorphic) JavaScript is a term that has become very common in the JavaScript community. It is used to describe JavaScript code that can execute both on the client and the server.

Many modern JavaScript frameworks, like Vue.js, are aimed at building Single Page Applications (SPAs). This is done to improve the user experience and make the app feel faster, since users can see updates to pages instantaneously. While this has a lot of advantages, it also has a couple of disadvantages: a long "time to content" when initially loading the app, as the browser has to retrieve the JavaScript bundle, and the fact that some search engine crawlers or social network robots will not see the fully loaded app when they crawl your web pages.

Server-side rendering (SSR) of JavaScript is about running JavaScript applications on a web server and sending the rendered HTML as the response to a browser's request for a page.
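To make the idea concrete, here is a minimal sketch of server-side rendering using only Node's built-in `http` module. It deliberately does not use Vue or Nuxt: the `posts` data and the `renderPage()`, `handleRequest()` and `createServer()` helpers are hypothetical names for illustration. The point is that the server turns data into a complete HTML string, so the browser (or a crawler) receives the content without executing any JavaScript.

```javascript
const http = require("http");

// Hypothetical data; a real app would load it from an API or database.
const posts = [
  { id: 1, title: "Hello SSR" },
  { id: 2, title: "Why crawlers like HTML" },
];

// Escape text before interpolating it into HTML.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the complete page on the server, so the response already contains the content.
function renderPage(items) {
  const list = items.map((post) => `<li>${escapeHtml(post.title)}</li>`).join("");
  return (
    "<!DOCTYPE html><html><head><title>Blog</title></head>" +
    `<body><ul>${list}</ul></body></html>`
  );
}

// Every request is answered with fully rendered HTML.
function handleRequest(req, res) {
  res.writeHead(200, { "Content-Type": "text/html; charset=utf-8" });
  res.end(renderPage(posts));
}

// Create (but do not start) the server; call .listen(port) on the result to serve it.
function createServer() {
  return http.createServer(handleRequest);
}

module.exports = { renderPage, handleRequest, createServer };
```

Running `createServer().listen(3000)` and viewing the page source would show the list items already present in the HTML. Doing the same for real Vue components, with routing and data fetching, is the part that gets tedious by hand.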

Building Server-side rendered JavaScript apps can be a bit tedious, as a lot of configuration needs to be done before you even start coding. This is the problem Nuxt.js aims to solve for Vue.js applications.

What is Nuxt.js

Simply put, Nuxt.js is a framework that helps you build Server Rendered Vue.js applications easily. It abstracts most of the complex configuration involved in managing things like asynchronous data, middleware, and routing. It is similar to Angular Universal for Angular, and Next.js for React.

According to the Nuxt.js docs "its main scope is UI rendering while abstracting away the client/server distribution."

Static Generation

Another great feature of Nuxt.js is its ability to generate static websites with the generate command. It is pretty cool and provides features similar to popular static generation tools like Jekyll.
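Conceptually, static generation runs the server-side render once per route at build time and writes each result to disk as a plain HTML file that any static host can serve. The sketch below illustrates only that idea with Node's `fs` and `path` modules; `generateSite()`, `renderRoute()`, the route list and the output layout are assumptions for illustration, not how `nuxt generate` is actually implemented.

```javascript
const fs = require("fs");
const path = require("path");

// Hypothetical render function; in a real app this would be the SSR renderer.
function renderRoute(route) {
  return `<!DOCTYPE html><html><body><h1>${route}</h1></body></html>`;
}

// Pre-render every route into outDir/<route>/index.html.
function generateSite(routes, outDir) {
  const written = [];
  for (const route of routes) {
    // "/" maps to outDir/index.html, "/posts/1" to outDir/posts/1/index.html.
    const dir = path.join(outDir, ...route.split("/").filter(Boolean));
    fs.mkdirSync(dir, { recursive: true });
    const file = path.join(dir, "index.html");
    fs.writeFileSync(file, renderRoute(route));
    written.push(file);
  }
  return written;
}

module.exports = { generateSite };
```

Because each route becomes a standalone file, no Node server is needed at runtime; that is the trade-off static generators like Jekyll make as well.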

Under the Hood of Nuxt.js

In addition to Vue.js 2.0, Nuxt.js includes the following: Vue-Router, Vue-Meta and Vuex (only included when using the store option). This is great, as it takes away the burden of manually including and configuring different libraries needed for developing a Server Rendered Vue.js application. Nuxt.js does all this out of the box, while still maintaining a total size of 28kb min+gzip (31kb with vuex).

Nuxt.js also uses Webpack with vue-loader and babel-loader to bundle, code-split and minify code.

How it works

This is what happens when a user visits a Nuxt.js app or navigates to one of its pages via <nuxt-link>:

When the user initially visits the app, if the nuxtServerInit action is defined in the store, Nuxt.js will call it and update the store.

Next, it executes any existing middleware for the page being visited. Nuxt checks the nuxt.config.js file first for global middleware, then the matching layout file (for the requested page), and finally the page and its children. Middleware are prioritized in that order.

If the route being visited is a dynamic route, and a validate() method exists for it, the route is validated.

Then, Nuxt.js calls the asyncData() and fetch() methods to load data before rendering the page. The asyncData() method is used for fetching data and rendering it on the server-side, while the fetch() method is used to fill the store before rendering the page.

At the final step, the page (containing all the proper data) is rendered.
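The order of those steps can be modelled as a small async pipeline. This is a simplified sketch, not Nuxt's internal code: `runRequest()`, the shape of the `app` object and the `context` passed to each hook are assumptions chosen to mirror the description above (nuxtServerInit, then global, layout and page middleware, then validate(), then asyncData() and fetch(), then render).

```javascript
// Simplified model of the per-request flow described above; not Nuxt's source code.
// `app` and `context` are hypothetical shapes chosen for this illustration.
async function runRequest(app, context) {
  const trace = [];

  // 1. nuxtServerInit (a Vuex action in Nuxt) runs only on the initial server render.
  if (context.isServer && app.nuxtServerInit) {
    await app.nuxtServerInit(context);
    trace.push("nuxtServerInit");
  }

  // 2. Middleware in priority order: global (nuxt.config.js), layout, then page.
  const middleware = [
    ...app.globalMiddleware,
    ...app.layout.middleware,
    ...app.page.middleware,
  ];
  for (const mw of middleware) {
    await mw(context);
    trace.push(`middleware:${mw.name}`);
  }

  // 3. A dynamic route can reject its params with validate(); false means 404.
  if (app.page.validate) {
    const valid = await app.page.validate(context);
    trace.push("validate");
    if (!valid) {
      return { status: 404, trace };
    }
  }

  // 4. asyncData() returns data for the page; fetch() fills the store instead.
  let data = {};
  if (app.page.asyncData) {
    data = await app.page.asyncData(context);
    trace.push("asyncData");
  }
  if (app.page.fetch) {
    await app.page.fetch(context);
    trace.push("fetch");
  }

  // 5. Render the page with all the data in place.
  const html = app.page.render(data, context.store);
  trace.push("render");
  return { status: 200, html, trace };
}

module.exports = { runRequest };
```

The returned `trace` makes the ordering visible: on a first, server-side visit it starts with nuxtServerInit, while a client-side navigation (`isServer: false`) skips straight to middleware.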

These steps are illustrated in this schema from the Nuxt docs:

Creating A Serverless Static Site With Nuxt.js

Let's get our hands dirty with some code and create a simple statically generated blog with Nuxt.js. We will assume our posts are fetched from an API and will mock the response with a static JSON file.
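As a rough sketch of that mocking approach (not the article's own code), the functions below stand in for the API by reading posts from a local JSON file and resolving them asynchronously, the way a real HTTP call would. The file name `posts.json`, the post fields and the `fetchPosts()`/`fetchPost()` helpers are hypothetical.

```javascript
const fs = require("fs");

// Hypothetical mock: read posts from a JSON file instead of calling a real API.
// Returning a Promise keeps call sites identical to a future HTTP request.
function fetchPosts(file = "posts.json") {
  return fs.promises.readFile(file, "utf8").then((text) => JSON.parse(text));
}

// Look up a single post by id, as a post detail page would.
function fetchPost(id, file = "posts.json") {
  return fetchPosts(file).then((posts) => {
    const post = posts.find((p) => String(p.id) === String(id));
    if (!post) {
      throw new Error(`Post ${id} not found`);
    }
    return post;
  });
}

module.exports = { fetchPosts, fetchPost };
```

Keeping the mock behind a Promise-returning function means it can later be swapped for a real HTTP client without touching the pages that call it.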

To follow along properly, a working knowledge of Vue.js is needed. You can check out Jack Franklin's great getting started guide for Vue.js 2.0 if you're a newbie to the framework. I will also be using ES6 syntax, and you can get a refresher on that here: http://ift.tt/2lsFbvU.

Our final app will look like this:

The entire code for this article can be seen here on GitHub, and you can check out the demo here.

Application Setup and Configuration

The easiest way to get started with Nuxt.js is to use the template created by the Nuxt team. We can quickly scaffold our project (ssr-blog) from it with vue-cli:

vue init nuxt/starter ssr-blog

Note: If you don't have vue-cli installed, you have to run npm install -g vue-cli first, to install it.

Next, we install the project's dependencies:

cd ssr-blog
npm install

Now we can launch the app:

npm run dev

If all goes well, you should be able to visit http://localhost:3000 to see the Nuxt.js template starter page. You can even view the page's source, to see that all content generated on the page was rendered on the server and sent as HTML to the browser.

Next, we can do some simple configuration in the nuxt.config.js file. We will add a few options:

// ./nuxt.config.js
module.exports = {
  /*
   * Headers of the page
   */
  head: {
    titleTemplate: '%s | Awesome JS SSR Blog',
    // ...
    link: [
      // ...
      { rel: 'stylesheet', href: 'http://ift.tt/2uxIolw' }
    ]
  },
  // ...
}

In the above config file, we simply specify the title template to be used for the application via the titleTemplate option. Setting the title option in the individual pages or layouts will inject the title value into the %s placeholder in titleTemplate before being rendered.
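The substitution itself is simple. Below is a small sketch of what happens to a page's title; `applyTitleTemplate()` is a hypothetical helper, not vue-meta's API, since vue-meta performs this step internally. It also mirrors the fact that vue-meta accepts a function as `titleTemplate`.

```javascript
// Hypothetical helper mirroring the titleTemplate behaviour described above.
function applyTitleTemplate(template, title) {
  if (typeof template === "function") {
    // A function template receives the page title and returns the final title.
    return template(title);
  }
  // Replace the %s placeholder with the page's own title. A replacer function is
  // used so characters like "$&" in the title are inserted literally.
  return template.replace("%s", () => title);
}

module.exports = { applyTitleTemplate };
```

For example, a page that sets its title to Home would end up with "Home | Awesome JS SSR Blog" in the browser tab, given the template in nuxt.config.js.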

We also pulled in my current CSS framework of choice, Bulma, to take advantage of some preset styling. This was done via the link option.

Note: Nuxt.js uses vue-meta to update the headers and HTML attributes of our apps. So you can take a look at it for a better understanding of how the headers are being set.

Now we can take the next couple of steps by adding our blog's pages and functionality.

Working with Page Layouts

First, we will define a custom base layout for all our pages. We can extend the main Nuxt.js layout by updating the layouts/default.vue file:

Continue reading "Nuxt.js: A Universal Vue.js Application Framework" on SitePoint.

by Olayinka Omole via SitePoint http://ift.tt/2uWnvkm

Moving an ASP.NET Core app from Azure App Service on Windows to Linux by testing in WSL and Docker first

I updated one of my websites from ASP.NET Core 2.2 to the latest LTS (Long Term Support) version of ASP.NET Core 3.1 this week. Now I want to do the same with my podcast site AND move it to Linux at the same time. Azure App Service for Linux has some very good pricing and allowed me to move over to a Premium v2 plan from Standard which gives me double the memory at 35% off.

My podcast has historically run on ASP.NET Core on Azure App Service for Windows. How do I know if it'll run on Linux? Well, I'll try it and see!

I use WSL (Windows Subsystem for Linux) and so should you. It's very likely that you have WSL ready to go on your machine and you just haven't turned it on. Combine WSL (or the new WSL2) with the Windows Terminal and you're in a lovely spot on Windows with the ability to develop anything for anywhere.

First, let's see if I can run my existing ASP.NET Core podcast site (now updated to .NET Core 3.1) on Linux. I'll start up Ubuntu 18.04 on Windows and run dotnet --version to see if I have anything installed already. You may have nothing. I have 3.0 it seems:

$ dotnet --version
3.0.100

Ok, I'll want to install .NET Core 3.1 on WSL's Ubuntu instance. Remember, just because I have .NET 3.1 installed in Windows doesn't mean it's installed in my Linux/WSL instance(s). I need to maintain those on my own. Another way to think about it is that I've got the win-x64 install of .NET 3.1 and now I need the linux-x64 one.

NOTE: It is true that I could "dotnet publish -r linux-x64" and then scp the resulting complete published files over to Linux/WSL. It depends on how I want to divide responsibility. Do I want to build on Windows and run on Linux/WSL? Or do I want to build and run from Linux? Both are valid, it just depends on your choices, patience, and familiarity.

GOTCHA: Also if you're accessing Windows files at /mnt/c under WSL that were git cloned from Windows, be aware that there are subtleties if Git for Windows and Git for Ubuntu are accessing the index/files at the same time. It's easier and safer and faster to just git clone another copy within the WSL/Linux filesystem.

I'll head over to https://dotnet.microsoft.com/download and get .NET Core 3.1 for Ubuntu. If you use apt, and I assume you do, there's some preliminary setup and then it's a simple

sudo apt-get install dotnet-sdk-3.1

No sweat. Let's "dotnet build" and hope for the best!

It might be surprising but if you aren't doing anything tricky or Windows-specific, your .NET Core app should just build the same on Windows as it does on Linux. If you ARE doing something interesting or OS-specific you can #ifdef your way to glory if you insist.

Bonus points if you have Unit Tests - and I do - so next I'll run my unit tests and see how it goes.

OPTION: I write things like build.ps1 and test.ps1 that use PowerShell as PowerShell is on Windows already. Then I install PowerShell (just for the scripting, not the shelling) on Linux so I can use my .ps1 scripts everywhere. The same test.ps1 and build.ps1 and dockertest.ps1, etc. just work on all platforms. Make sure you have a shebang #!/usr/bin/pwsh at the top of your ps1 files so you can just run them (chmod +x) on Linux.

I run test.ps1, which runs this command:

dotnet test /p:CollectCoverage=true /p:CoverletOutputFormat=lcov /p:CoverletOutput=./lcov .\hanselminutes.core.tests