#and they would much rather have a cheaper 3d film than a 2d film

Scoob!Dick's final design was just 😩👌, not gonna lie. It paid homage to the original while putting their own spin on it to make him look more menacing and imposing than his usual seedier self. But I would also be lying if I said I wouldn't have killed a man to see some of his earlier designs in action.

Specifically this design from Sandro Cleuzo

Now I really want a 2D animated scoob movie because gosh it would look so good

#but alas#execs do what execs do best#and they would much rather have a cheaper 3d film than a 2d film#not a 3d hater but I would kill all the minions feom despicable me for just one 2d animated film#I adore this#knife and gun dick are best dick#dick dastardly#wacky races#scoob!

27 notes

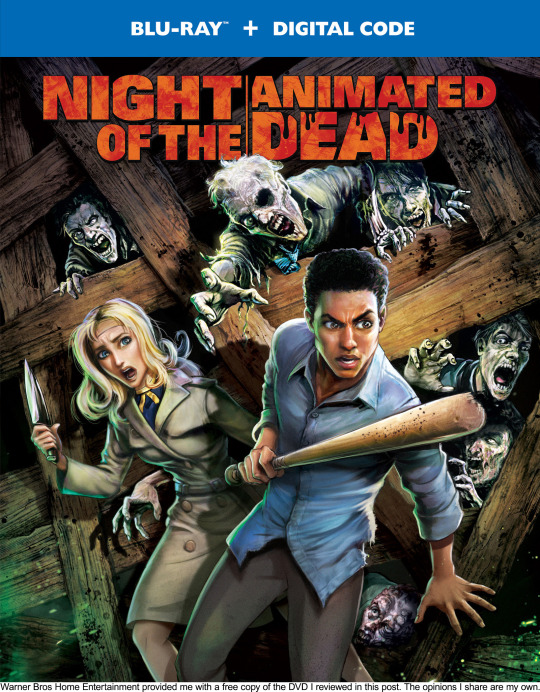

Blu-ray Review: Night of the Animated Dead

There are two facets any potential viewer should be made aware of before pressing 'play' on Night of the Animated Dead, an animated adaptation of George A. Romero’s Night of the Living Dead.

First and foremost, it lacks any credit to Romero, co-writer John Russo, producers Image Ten, or any of the cast and crew. It's common knowledge that Night of the Living Dead is in the public domain, so the original creators' permission is not needed, but the least one can do is credit them on screen, if not compensate them financially. But there is nary a mention of any of them; not in the credits, not in the billing block, and not in the making-of featurette. The script goes uncredited altogether!

Secondly, the bait-and-switch cover art does not accurately represent the film's animation quality. Rather than beautifully rendered 3D characters, the movie features adequate but decidedly less impressive (and substantially cheaper) 2D animation. It more closely resembles a dated Flash animation than modern computer-generated work. That's no slight to the animators at Demente Animation Studio; there's a charm to the style - at times, it's reminiscent of a Saturday morning cartoon - but don't show me a steak and then serve me a McDonald’s burger.

Such egregious offenses would almost be understandable if it were a rinky-dink production from an independent distributor, but Night of the Animated Dead is a Warner Bros. release. Even if you're able to look past these transgressions - a tall order, indeed - Night of the Animated Dead is often as lifeless as its zombies. Beyond Romero's genre-defining 1968 original and Tom Savini's formidable 1990 remake, competition among the various versions of Night of the Living Dead isn't exactly stiff, so this one ranks somewhere in the middle.

Night of the Animated Dead is a virtually beat-for-beat - and occasionally shot-for-shot - adaptation of the film. After being attacked by a flesh-eating ghoul, Barbara (Katharine Isabelle, Ginger Snaps) escapes to a farmhouse. She meets the resourceful Ben (Dulé Hill, Psych), and they barricade the home as more zombies try to get in. More survivors - bull-headed Harry Cooper (Josh Duhamel, Transformers), his wife Helen (Nancy Travis, Rose Red), their sick daughter Karen, and young couple Tom (James Roday Rodriguez, Psych) and Judy (Katee Sackhoff, Battlestar Galactica) - are soon discovered hidden in the basement. Of course, the human struggle becomes just as dangerous as the undead threat.

Director Jason Axinn (no stranger to animated horror, coming off To Your Last Death) adds a few minor sequences not found in its live-action counterpart - most notably, a flashback to Ben finding the truck at Beekman's Diner - along with a considerable increase in gore, padding the runtime to a scant 70 minutes including credits. The voice casting - which also includes Will Sasso (Mad TV) as Sheriff McClelland and Jimmi Simpson (Westworld) as Barbara's brother, Johnny - is rather inspired, not to mention the fun Hill/Rodriguez reunion for Psych fans, but none of the talent is able to bring much dimension to the uninspired material.

Perhaps Axinn and company thought it would be an interesting experiment to remake the same movie in animated form - learning nothing from Gus Van Sant's much-maligned Psycho remake - but I struggle to see the point. With animation opening up boundless possibilities, why not try to do something different? They could have modernized it, or made Barbara less of a catatonic damsel in distress, or leaned into the cartoon aesthetic and made it family friendly, or swapped all the genders, or done literally anything to put a fresh spin on the well-worn material. Alas.

Night of the Animated Dead's Blu-ray and DVD releases include a 10-minute making-of featurette with Axinn, Hill, Duhamel, Sasso, and producer Michael J. Luisi (Oculus, The Call), plus footage of the cast in the recording booth. Axinn explains that he wanted to show how powerful the story still is by animating it, using the replication of story beats and exact dialogue as a point of pride, as if fans were clamoring for it. Adding insult to injury, the featurette opens with clips from Romero's film. If only someone could have mentioned him by name.

Night of the Animated Dead is available on Blu-ray and DVD now via Warner Bros.

#night of the animated dead#night of the living dead#george romero#josh duhamel#dule hill#katharine isabelle#review#article#dvd#gift#katee sackhoff#james roday rodriguez#will sasso#jimmi simpson#horror

23 notes

Animation Vs Live-Action: Which Is Right For Your Project?

When it comes to deciding between animation and live-action video, there are a lot of factors to consider. For example: What do I want the viewer's experience of my project to be? Will this particular format suit our company’s branding?

You might think there are right or wrong answers when it comes to video creation, but the truth is that both formats have their merits. It all depends on what you want your audience to learn from the project, whether it's something personal, like how a person deals with stress in her life, or something informative, like aspects of climate change and how people are struggling with it environmentally, mentally and physically. You decide which direction makes the most sense for you!

We have compiled a list of the pros and cons for both video formats, so you can make an informed decision.

Costs of Producing Video Content

First things first – let’s talk about money. Whether you want to make a short, low-budget video or go all out with an in-depth production plan for your business idea and need more than 15 minutes of footage, it's important that whatever format you choose is as cost-effective as possible without sacrificing quality of workmanship (or getting overcharged).

If we're talking strictly about length, short videos can be done within budget constraints, while longer projects require additional investment, which could come from launching high-ticket affiliate products such as software development packages on commissionable contracts. But beware: these are not necessarily cheaper options if there isn't enough time before launch!

The idea of animation being the cheapest option is a misconception. It might seem like you can just grab some video equipment and quickly make your movie, but actually creating intricate graphics that are based in 3D requires state-of-the-art technology, which could cost more than expected!

The costs of creating an animation can be higher than those for a live-action video, depending on the complexity of your project. And we haven’t even touched upon pre-production planning stages such as scripting and storyboarding which are often required before putting together any kind of scene in animated films.

The financial drawbacks of live-action are many. For one, you need to think about insurance and wardrobe for your cast members, which can be expensive unless you use office space as a set or rope in colleagues from other departments who are willing to do some acting on demand.

Animated Video Content Pros

Animation is a versatile and creative way to tell your company’s story. With different styles and formats available to choose from, there are endless possibilities in video marketing that can be explored with this medium.

Video marketing can be a complicated process, but it doesn't have to feel overwhelming. Hiring an agency with experience in video content will give your company the flexibility and expertise needed for success.

Animation is a great way to condense information into bite-sized chunks. By using animation, it's easy for the viewer (and sometimes listener!) to digest complex and abstract concepts in an entertaining format that will hold their attention from beginning until end.

When you're presenting data in a video, it's important to think about what kind of animation will best make your point. Animation can help with knowledge retention by 15%, so if there are lots of complex graphs and charts involved, an animated format may be right for the job.

Animated videos are a great way to tell stories. They can be made quickly and easily with the click of a button, so they put less pressure on your schedule than other media might. Plus, last-minute changes are really easy! You'll never regret investing in this type of animation because there's always something new coming out that will inspire creativity or get people engaged again - just check YouTube trends today.

These very quick updates will also benefit you in the long run. Animated video content can last for years, so it's best to start with an updated version of your product rather than producing a new one every time there is something worth updating.

Animated Video Content Cons

This is why you should always include that human touch in your content marketing strategy. Animation can be great for promoting physical products or services, but it might not work so well if what’s being promoted has no tangible aspects of itself whatsoever - like an app on a phone.

As you know, different audiences have very specific needs and expectations. To be sure that your video resonates with the people who matter most, namely the viewers at home, it's essential that their particular interests and goals also come through in your content marketing, including in elements such as voiceovers for videos created by advertisers themselves (such as commercials).

Creating an animated video can be a time-consuming process, depending on its complexity. If you're in need of one quickly and have no other options available to use as production equipment, it might not work out well for your content because there are lots of things every filmmaker must think about before they even start filming.

Animated videos are a great way to get your message across quickly and without running afoul of copyright laws. A good idea is not always enough when you're developing content for an entire channel, though; pre-production planning helps make sure every aspect goes exactly as planned so the process can be more successful overall!

Pre-production plans tend toward detail so that creators know precisely what they want their animated video or series to be about before too much time has been spent creating it, especially because there isn't room left over on site.

Live-Action Video Content Pros

The power of live-action video content is an undeniable reality. Real people advertising a tangible product has been proven to be highly persuasive when marketing products or services, especially with how shareable videos are nowadays! You want your target audience to be as involved as possible so they can't help but react and take action on what's being presented, which means you need someone who looks like them delivering the message for maximum impact.

The following is an example of a live-action video.

With the flexibility to play on viewers’ emotions through authentic, instructive content and to help them envision your company culture, what better way is there to sell a product or pitch a business? This gives you more credibility not only in selling but in promoting as well, because it promotes who YOU are!

One of the biggest advantages of live-action video content is that it doesn’t take as long. With animation, every detail must be built from scratch before production even begins; with live action, you have all the physical props and people needed for a quick shoot on site, which dramatically reduces the time spent on preparation beforehand and on post-processing afterwards (if any is done at all).

If your project needs very little in terms of casting and apparatus, a video camera on your mobile phone can do the trick. This may not seem like much at first glance but it has huge benefits that would be hard to come by otherwise; including being able to create high-quality products without spending too much time or money.

Live-Action Video Content Cons

A live-action video is also a challenging project because it takes more time and effort in post-production. If you want to film an additional scene, or swap dialogue from one part of the footage for another, this would require all-new shots, which could be difficult at best given how quickly things move when filming outdoors without sound equipment present.

Why do you want to go through the trouble of renting out more film days? It's not like this is going to be an easy process. Plus, all that costs money! Animations are simpler and quicker, and they can be done in a matter of minutes with our software, as opposed to live-action videos, which take much longer because the video content has to be edited by hand (which isn't something everyone has time for).

With so many people involved in the production of your video content it can be overwhelming. You'll need to hire crew, cast and equipment for each aspect that carries a cost; not just when you're filming but also afterwards if needed.

Successful sourcing of the right talent can be one of the most stressful parts about filmmaking. It's important to understand what you want your video to achieve, because if it isn't clear then there won’t ever really be any progress made and we end up stuck wondering where things are going wrong without knowing why this happened in advance.

Final Thoughts

We can help you understand your brief and devise an effective video to reach your target audience. We have years of experience in condensing complex, abstract subject matter and in giving products a personality, producing content that engages viewers on all levels. That might be one individual speaking professionally about their company or product line, or a dramatic reenactment following a narrative we have tailored to a particular audience, such as children’s programming with educational lessons that show how things work through trial and error until success is achieved at last.

We are a leading animation studio in Toronto. Our services include 2D and 3D animation, storyboarding, character design, visual effects and more!

1 note

What Is An Explainer Video In USA 2021?

An explainer video is a video that sums up your brand promise. It shows potential customers who you are and what you can do for them. You can think of it as an elevator pitch.

There are many explainer video types that you could use to best suit your brand. No matter what style of explainer you choose, your goal is the same: to explain your product and service while answering the question "what's in it for me?" from the perspective of your audience.

Take a look at these videos and learn

Mat King is Vidyard's Video Product Manager and gives an overview about explainer videos as well as how to create them. Blake Smith is Vidyard's Creative director. He and Mat King discuss how to make an effective explainer video.

Explainer videos have many benefits

Explainer videos are about simplifying complex ideas and concepts for your audience.

They're a very popular way of learning about brands: 96% said they have seen explainer videos in order to learn more. The majority of people (68%) would rather watch a video to learn more about a new product or service than read a text-based post (15%). When asked to choose the type of video they want to see more of from brands, more people chose explainer videos than any other form of video content.

Website and landing pages

Your website can be a great location to place your explainer videos. A general explainer video works well on your homepage. More specific explainers work best on landing pages that support each video's messaging.

(Psssst. Vidyard's web-based video hosting service allows you to embed any of your videos.)

Paid Social Media

Because you can get feedback and collect results so quickly, your social channels and advertisements are great places to experiment with explainer clips. You can also create explainer clips aimed at different stages of your customer journey and target each one accordingly.

For example, explainer videos could be created for social ads aimed at people who don't know your company. You could also use remarketing options to share an explainer video that addresses specific questions about a product with people who have visited a page about it or abandoned it in an online shopping cart.

You already know some things about your channel so you can highlight specific benefits of your product or use explainer video to enhance your reputation as an expert within your niche.

Crowdfunding Sites, Campaigns

A great explainer video is an essential part of any crowdfunding campaign. A product that isn't even available yet must be clearly explained to the benefit of your campaign.

Kickstarter's own words are, "Make compelling videos. It's a great method to show off your talents and give people a glimpse into what you're doing."

Conferences, Events, and Presentations

You don't need to limit explainer videos to online channels, however. A short explainer video can make a powerful introduction to your presentation and get your audience interested before you actually start speaking.

A simple explainer film running at your booth, on loop, can be a powerful way to present your brand or products at trade shows.

What's the secret of creating a quality explainer film?

It isn't easy to make a quality explainer video. As with any other task, the higher quality you want, the more you should pay. It is possible for costs to quickly mount so you need to be clear about the expected return.

Deep research into the brand, product, or target audience

It is rewarding to produce an engaging video that captures the attention of your audience; that is what leads to conversions. But the wrong audience will not be interested in your product, which can make even top-tier content useless. This is why market research is so important.

The foundation of your videos can be greatly improved if you are willing and able to invest more. Doing extensive research will help you ensure that your videos appeal to the right audience. Additionally, by investing in research you can navigate competitive markets by learning what works and what doesn't, and by avoiding ideas that are too similar to existing ones. Data is important, and video creation is no different.

As mentioned above, the more original your content, the better. Unique content not only ranks better in SEO terms, but also makes a greater impression on your audience.

Although creating video can be very expensive, it is possible to obtain unique assets. You have complete control of how they are used. It is cheaper to repurpose assets later than it is to own them, and there are no risks of them being associated or misused.

High-quality explainer videos require high-quality components, which increases the cost of a project. You have many other options, such as whiteboard animation software and 2D animated videos, that can help you get your message across to the right audience. For a more ambitious video marketing strategy, however, you need a bigger budget and a better-quality video.

3D animation can be used to promote big-budget video marketing. The detail, camerawork and graphic quality are unparalleled. While this animation style is expensive and not for everyone, it is well worth the investment. As an individual and sole owner, you can be sure that quality content represents your brand well.

Personnel professionals

This last point is quite obvious: capable people create quality products. Even though it won't cost much, having professionals on your payroll is a great investment. Although this is true in every industry, it's especially true in video creation. You need professionals to keep your project on schedule. They will not cut corners or trip over stumbling blocks, and they will produce the best results. As your projects expand, more specialists will be needed. Going without them might cost you less, but your animator will end up doing more of the writing.

The tech industry is continually evolving and coming up new technologies in hardware and software. These technologies are often complex and difficult for laymen to understand.

Explainer videos can help consumers understand new technology. They simplify the whole concept because they don’t tell you how it works; instead, they show it. Every tech company is looking for the best explainer clips to help with its digital marketing. These videos increase conversion rates and the time that visitors stay on a site, which greatly improves Google rankings.

0 notes

Critical Analysis

KRISS, K. (2016) ‘Tactility and The Changing Close-Up.’ Animation Studies. [Online] 11. Available from: https://journal.animationstudies.org/karen-kriss-tactility-and-the-changing-close-up/ [Accessed 13th December 2019]

I have chosen an article by Karen Kriss for my critical analysis. Karen Kriss is an artist and animator who works in the Faculty of Art and Design at UNSW, within the area of Media Arts and Design. I am drawn to this article because it deals with narrative power and camera language in animation, which is exactly what I am concerned with in my practice. The article was published in Animation Studies in 2016. According to the author, the usage and frequency of the close-up have changed in recent years, a change that can be clearly observed in the cinematic development of CGI. The following paragraphs analyse the close-up in past visual effects (VFX) films and CGI animation films.

The first section of the article introduces the emotive power of the close-up and the connection between the close-up and the audience. The main function of the close-up as a narrative technique is to emphasise a specific object or facial expression. The text describes what sort of distance produces an affective state: a shot expresses intense emotion when the distance falls outside the audience's expectations. The author comments that the close-up reveals the deeper secrets of the story by offering an alternative perspective, observation, and understanding. It allows the audience to consider or question different aspects of a character's image and aims. In addition, the author mentions that Marks proposes the term 'Haptic visuality' and regards the eyes as functioning like an organ of touch. This means that scenes awaken sensations without any actual, literal touch. From my perspective, I would define this touch as the distance and emotion between audience and characters.

The next sub-section discusses how the close-up has been influenced by CGI. The text describes the changes in the use of the close-up over the past twenty years. The author deconstructs an earlier VFX film, The Lost World: Jurassic Park (Spielberg, 1997), and a CGI animation film, Toy Story (Lasseter, 1995). There is a similar phenomenon in both films: these early CGI films tried to avoid or skip the close-up. The author then comments on the limits of how well CGI and cinematic technique could be combined. However, Arnold Schwarzenegger (2015) brought about a revolution in the CGI close-up. VFX films no longer avoid the close-up, but a successful close-up comes at a great price.

In the following paragraphs, the article presents three CGI animations: The Lego Movie, Big Hero 6 and The Lion King. To begin with, the text explains what the author expected to see in terms of an explosion of emotion in The Lego Movie (2014), and how disappointed the author felt because it contains few close-up shots. Another close-up, with slow camera movement, is worth mentioning. The shot is dark, and the character's facial expressions look blurred and cannot be made out by the audience. Although the vibrant lighting aims to draw the audience's attention to the dynamic background and bright light, and away from other poor aspects of composition and construction, the poor quality of the textures and details can still be seen. Hence, CGI films are required to deliver high-quality, more detailed work, as they cannot hide any defects in close-up.

The article briefly compares the two versions of The Lion King. One is a CGI film and the other is 2D animation. The newer CGI film does not follow the old storyboard. It is obvious that the 2D animation used a sequence of close-ups on facial expressions to express despair and a wealth of other emotions.

In this section, taking Big Hero 6 (2014) as an example, the author analyses how to increase the use of the close-up and concludes that the shots in Big Hero 6 are much more like a movie. The text explains that the 3D models reduce the complexity and depth of textures rather than removing textures altogether. In addition, the film simplifies the character design and makes joints as smooth as possible. Drawing on the residual memory of tactility, similar and related textures are awakened and connected to what the audience sees. This approach lessens the impact of Haptic visuality. Thus, the characters lose much of their identifying detail, but the result is not a poor-quality product.

The aim of the article is to reveal that the close-up in CGI is expensive and to demonstrate that depicting the quality of a 3D model in detail is a vital challenge. The argument is valid and, backed by strong supporting evidence, convinces readers to re-examine the current approach to using the close-up.

The value of this text is also worth noting: not only does it identify the directing trend in CGI and define the close-up as emotional narration, but it also covers forms of artistic expression within lower-quality film. For the most part, I agree with what the author says about the drawbacks that the close-up exposes in CGI cinema. In particular, it contributes to exploring cheaper art styles while taking costs into account. However, the terms Haptic visuality and tactility are not defined clearly enough in the text, and it is hard to engage with the abstract descriptions. Besides, the use of the close-up depends on the individual case: some stories are not suited to a large number of close-ups. It is a fact that the earlier examples do indicate a decline in close-ups in CGI storyboards. Although the text is not perfect, I believe it is a very helpful guide for my practice in choosing camera distance to reveal the atmosphere of a scene and in using an appropriate distance to express affect and implicit assumptions.

0 notes

The Shareware Scene, Part 5: Narratives of DOOM

Let me begin today by restating the obvious: DOOM was very, very popular, probably the most popular computer game to date.

That “probably” has to stand there because DOOM’s unusual distribution model makes quantifying its popularity frustratingly difficult. It’s been estimated that id sold 2 to 3 million copies of the shareware episodes of the original DOOM. The boxed-retail-only DOOM II may have sold a similar quantity; it reportedly became the third best-selling boxed computer game of the 1990s. But these numbers, impressive as they are in their own right, leave out not only the ever-present reality of piracy but also the free episode of DOOM, which was packaged and distributed in such an unprecedented variety of ways all over the world. Players of it likely numbered well into the eight digits.

Yet if the precise numbers associated with the game’s success are slippery, the cultural impact of the game is easier to get a grip on. The release of DOOM marks the biggest single sea change in the history of computer gaming. It didn’t change gaming instantly, mind you (a contemporaneous observer could be forgiven for assuming it was still largely business as usual a year or even two years after DOOM’s release), but it did change it forever.

I should admit here and now that I’m not entirely comfortable with the changes DOOM brought to gaming. In fact, for a long time, when I was asked when I thought I might bring this historical project to a conclusion, I pointed to the arrival of DOOM as perhaps the most logical place to hang it up. I trust that most of you will be pleased to hear that I no longer feel so inclined, but I do recognize that my feelings about DOOM are, at best, conflicted. I can’t help but see it as at least partially responsible for a certain coarsening in the culture of gaming that followed it. I can muster respect for the id boys’ accomplishment, but no love. Hopefully the former will be enough to give the game its due.

As the title of this article alludes, there are many possible narratives to spin about DOOM’s impact. Sometimes the threads are contradictory, sometimes even self-contradictory. Nevertheless, let’s take this opportunity to follow a few of them to wherever they lead us as we wrap up this series on the shareware movement and the monster it spawned.

3D 4EVA!

The least controversial, most incontrovertible aspect of DOOM’s impact is its influence on the technology of games. It was nothing less than the coming-out party for 3D graphics as a near-universal tool, and this despite the fact that 3D graphics had been around in some genres, most notably vehicular simulations, almost as long as microcomputer games themselves had been around, and despite the fact that DOOM itself was far from a complete implementation of a 3D environment. (John Carmack wouldn’t get all the way to that goal until 1996’s Quake, the id boys’ anointed successor to DOOM.) As we’ve seen already, Blue Sky Productions’s Ultima Underworld actually offered the complete 3D implementation which DOOM lacked twenty months before the latter’s arrival.

But as I also noted earlier, Ultima Underworld was complex, a little esoteric, hard to come to terms with at first sight. DOOM, on the other hand, took what the id boys had started with Wolfenstein 3D, added just enough additional complexity to make it into a more satisfying game over the long haul, topped it off with superb level design that took full advantage of all the new affordances, and rammed it down the throat of the gaming mainstream with all the force of one of its coveted rocket launchers. The industry never looked back. By the end of the decade, it would be hard to find a big boxed game that didn’t use 3D graphics.

Many if not all of these applications of 3D were more than warranted: the simple fact is that 3D lets you do things in games that aren’t possible any other way. Other forms of graphics consist at bottom of fixed, discrete patterns of colored pixels. These patterns can be moved about the screen — think of the sprites in a classic 2D videogame, such as Nintendo’s Super Mario Bros. or id’s Commander Keen — but their forms cannot be altered with any great degree of flexibility. And this in turn limits the degree to which the world of a game can become an embodied, living place of emergent interactions; it does no good to simulate something in the world model if you can’t represent it on the player’s screen.

3D graphics, on the other hand, are stored not as pixels but as a sort of architectural plan of an imaginary 3D space, expressed in the language of mathematics. The computer then extrapolates from said plan to render the individual pixels on the fly in response to the player’s actions. In other words, the world and the representation of the world are stored as one in the computer’s memory. This means that things can happen there which no artist ever anticipated. 3D allowed game makers to move beyond hand-crafted fictions and set-piece puzzles to begin building virtual realities in earnest. Not for nothing did many people refer to DOOM-like games in the time before the term “first-person shooter” was invented as “virtual-reality games.”
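The difference between the two kinds of storage can be made concrete with a small sketch. It is a generic illustration, not a description of how DOOM's own renderer worked (the essay itself notes that DOOM was not a complete 3D implementation); the names `SPRITE`, `CUBE`, `project`, and `render`, along with the viewer distance and focal length, are assumptions chosen for the example.

```python
import math

# A 2D sprite is a fixed pattern of pixels. It can be moved around the
# screen, but its shape was decided once, by an artist, ahead of time.
SPRITE = ["#..#", ".##.", ".##.", "#..#"]

# A 3D object is stored as geometry instead: here, the eight corners of
# a unit cube centered on the origin.
CUBE = [(x, y, z) for x in (-0.5, 0.5) for y in (-0.5, 0.5) for z in (-0.5, 0.5)]


def project(point, viewer_angle, viewer_distance=3.0, focal_length=100.0):
    """Turn one 3D point into 2D screen coordinates for a given view."""
    x, y, z = point
    c, s = math.cos(viewer_angle), math.sin(viewer_angle)
    # Rotate around the vertical axis, as if the viewer had turned.
    xr = x * c + z * s
    zr = -x * s + z * c + viewer_distance
    # Perspective divide: points farther away land closer to the center.
    scale = focal_length / zr
    return (round(xr * scale), round(y * scale))


def render(model, viewer_angle):
    """Compute screen positions on the fly from the stored geometry."""
    return [project(p, viewer_angle) for p in model]


front_view = render(CUBE, 0.0)
turned_view = render(CUBE, 0.6)
```

The same eight stored points yield a different picture for every viewing angle, none of which had to be drawn in advance, whereas `SPRITE` looks the same wherever it is placed.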

Ironically, others showed more interest than the id boys themselves in probing the frontiers of formal possibility thus opened. While id continued to focus purely on ballistics and virtual violence in their extended series of Quake games after making DOOM, Looking Glass Technologies — the studio which had previously been known as Blue Sky Productions — worked many of the innovations of Ultima Underworld and DOOM alike into more complex virtual worlds in games like System Shock and Thief. Nevertheless, DOOM was the proof of concept, the game which demonstrated indubitably to everyone that 3D graphics could provide amazing experiences which weren’t possible any other way.

From the standpoint of the people making the games, 3D graphics had another massive advantage: they were also cheaper than the alternative. When DOOM first appeared in December of 1993, the industry was facing a budgetary catch-22 with no obvious solution. Hiring armies of artists to hand-paint every screen in a game was expensive; renting or building a sound stage, then hiring directors and camera people and dozens of actors to provide hours of full-motion-video footage was even more so. Players expected ever bigger, richer, longer games, which was intensely problematic when every single element in their worlds had to be drawn or filmed by hand. Sales were increasing at a steady clip by 1993, but they weren’t increasing quickly enough to offset the spiraling costs of production. Even major publishers like Sierra were beginning to post ugly losses on their bottom lines despite their increasing gross revenues.

3D graphics had the potential to fix all that, practically at a stroke. A 3D world is, almost by definition, a collection of interchangeable parts. Consider a simple item of furniture, like, say, a desk. In a 2D world, every desk must be laboriously hand-drawn by an artist in the same way that a traditional carpenter planes and joins the wood for such a thing in a workshop. But in a 3D world, the data constituting the basic form of “desk” can be inserted in a matter of seconds; desks can now make their way into games with the same alacrity with which they roll off of an IKEA production line. But you say that you don’t want every desk in your world to look exactly the same? Very well; it takes just a few keystrokes to change the color or wood grain or even the size of your desk, or to add or take away a drawer. We can arrive at endless individual implementations of “desk” from our Platonic ideal with surprising speed. Small wonder that, when the established industry was done marveling at DOOM’s achievements in terms of gameplay, the thing they kept coming back to over and over was its astronomical profit margins. 3D graphics provided a way to make games make money again.
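The desk example lends itself to a brief sketch of the idea. Everything in it is hypothetical: the `Desk` fields, their default values, and the `scaled` helper are invented for illustration and do not come from any particular game or tool.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Desk:
    """The 'Platonic ideal' of a desk: one shared template (values are made up)."""
    width_cm: float = 120.0
    depth_cm: float = 60.0
    height_cm: float = 75.0
    wood: str = "oak"
    drawers: int = 2


BASE_DESK = Desk()


def scaled(desk: Desk, factor: float) -> Desk:
    """Return a copy of the desk resized uniformly by `factor`."""
    return replace(
        desk,
        width_cm=desk.width_cm * factor,
        depth_cm=desk.depth_cm * factor,
        height_cm=desk.height_cm * factor,
    )


# Each variant is one short line derived from the template, not a new drawing.
variants = [
    BASE_DESK,
    replace(BASE_DESK, wood="walnut"),
    replace(BASE_DESK, drawers=3),
    scaled(BASE_DESK, 1.5),
]
```

The template is never modified; every variation is a cheap copy with a field or two changed, which is the economy the paragraph above describes.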

So, 3D offered worlds with vastly more emergent potential, made at a greatly reduced cost. There had to be a catch, right?

Alas, there was indeed. In many contexts, 3D graphics were right on the edge of what a typical computer could do at all in the mid-1990s, much less do with any sort of aesthetic appeal. Gamers would have to accept jagged edges, tearing textures, and a generalized visual crudity in 3D games for quite some time to come. A freeze-frame visual comparison with the games the industry had been making immediately before the 3D revolution did the new ones no favors: the games coming out of studios like Sierra and LucasArts had become genuinely beautiful by the early 1990s, thanks to those companies’ rooms full of dedicated pixel artists. It would take a considerable amount of time before 3D games would look anywhere near this nice. One can certainly argue that 3D was in some fairly fundamental sense necessary for the continuing evolution of game design, that this period of ugliness was one that the industry simply needed to plow through in order to emerge on the other side with a whole new universe of visual and emergent possibility to hand. Still, people mired in the middle of it could be forgiven for asking whether, from the evidence of screenshots alone, gaming technology wasn’t regressing rather than progressing.

But be that as it may, the 3D revolution ushered in by DOOM was here to stay. People would just have to get used to the visual crudity for the time being, and trust that eventually things would start to look better again.

Playing to the Base

There’s an eternal question in political and commercial marketing alike: do you play to the base, or do you try to reach out to a broader spectrum of people? The former may be safer, but raises the question of how many more followers you can collect from the same narrow slice of the population; the latter tempts you with the prospect of countless virgin souls waiting to embrace you, but is far riskier, with immense potential to backfire spectacularly if you don’t get the message and tone just right. This was the dichotomy confronting the boxed-games industry in the early 1990s.

By 1993, the conventional wisdom inside the industry had settled on the belief that outreach was the way forward. This dream of reaching a broader swath of people, of becoming as commonplace in living rooms as prime-time dramas and sitcoms, was inextricably bound up with the technology of CD-ROM, what with its potential to put footage of real human actors into games alongside spoken dialog and orchestral soundtracks. “What we think of today as a computer or a videogame system,” wrote Ken Williams of Sierra that year, “will someday assume a much broader role in our homes. I foresee a day when there is one home-entertainment device which combines the functions of a CD-audio player, VCR, videogame system, and computer.”

And then along came DOOM with its stereotypically adolescent-male orientation, along with sales numbers that threatened to turn the conventional wisdom about how well the industry could continue to feed off the same old demographic on its head. About six months after DOOM’s release, when the powers that were were just beginning to grapple with its success and what it meant to each and every one of them, Alexander Antoniades, a founding editor of the new Game Developer magazine, more fully articulated the dream of outreach, as well as some of the doubts that were already beginning to plague it.

The potential of CD-ROM is tremendous because it is viewed as a superset not [a] subset of the existing computer-games industry. Everyone’s hoping that non-technical people who would never buy an Ultima, flight simulator, or DOOM will be willing to buy a CD-ROM game designed to appeal to a wider audience — changing the computer into [an] interactive VCR. If these technical neophytes’ first experience is a bad one, for $60 a disc, they’re not going to continue making the same mistake.

It will be this next year, as these consumers make their first CD-ROM purchases, that will determine the shape of the industry. If CD-ROM games are able to vary more in subject matter than traditional computer games, retain their platform independence, and capture new demographics, they will attain the status of a new platform [in themselves]. If not, they will just be another means to get product to market and will be just another label on the side of a box.

The next couple of years did indeed become a de-facto contest between these two ideas of gaming’s future. At first, the outreach camp could point to some notable successes on a scale similar to that of DOOM: The 7th Guest sold over 2 million copies, and Myst sold an extraordinary 6 million or more. Yet the reality slowly dawned that most of those outside the traditional gaming demographic who purchased those games regarded them as little more than curiosities; most evidence would seem to indicate that they were never seriously played to a degree commensurate with their sales. Meanwhile the many similar titles which the industry rushed out in the wake of these success stories almost invariably became commercial disappointments.

The problems inherent in these multimedia-heavy “interactive movies” weren’t hard to see even at the time. In the same piece from which I quoted above, Alexander Antoniades noted that too many CD-ROM productions were “the equivalent of Pong games with captured video images of professional tennis players and CD-quality sounds of bouncing balls.” For various reasons — the limitations inherent in mixing and matching canned video clips; the core limitations of the software and hardware technology; perhaps simply a failure of imagination — the makers of too many of these extravaganzas never devised new modes of gameplay to complement their new modes of presentation. Instead they seemed to believe that the latter alone ought to be enough. Too often, these games fell back on rote set-piece puzzle-solving — an inherently niche activity even if done more creatively than we often saw in these games — for lack of any better ideas for making the “interactive” in interactive movies a reality. The proverbial everyday person firing up the computer-cum-stereo-cum-VCR at the end of a long workday wasn’t going to do so in order to watch a badly acted movie gated with frustrating logic puzzles.

While the multimedia came first with these productions, games of the DOOM school flipped that script. As the years went on and they too started to ship on the now-ubiquitous medium of CD-ROM, they too picked up cut scenes and spoken dialog, but they never suffered the identity crisis of their rivals; they knew that they were games first and foremost, and knew exactly what forms their interactivity should take. And most importantly from the point of view of the industry, these games sold. Post-1996 or so, high-concept interactive movies were out, as was most serious talk of outreach to new demographics. Visceral 3D action games were in, along with a doubling-down on the base.

To blame the industry’s retrenchment — its return to the demographically tried-and-true — entirely on DOOM is a stretch. Yet DOOM was a hugely important factor, standing as it did as a living proof of just how well the traditional core values of gaming could pay. The popularity of DOOM, combined with the exercise in diminishing commercial returns that interactive movies became, did much to push the industry down the path of retrenchment.

The minor tragedy in all this was not so much the end of interactive movies, given what intensely problematic endeavors they so clearly were, but rather that the latest games’ vision proved to be so circumscribed in terms of fiction, theme, and mechanics alike. By late in the decade, they had brought the boxed industry to a place of dismaying homogeneity; the values of the id boys had become the values of computer gaming writ large. Game fictions almost universally drew from the same shallow well of sci-fi action flicks and Dungeons & Dragons, with perhaps an occasional detour into military simulation. A shocking percentage of the new games being released fell into one of just two narrow gameplay genres: the first-person shooter and the real-time-strategy game.

These fictional and ludic genres are not, I hasten to note, illegitimate in themselves; I’ve enjoyed plenty of games in all of them. But one craves a little diversity, a more vibrant set of possibilities to choose from when wandering into one’s local software store. It would take a new outsider movement coupled with the rise of convenient digital distribution in the new millennium to finally make good on that early-1990s dream of making games for everyone. (How fitting that shaking loose the stranglehold of DOOM’s progeny would require the exploitation of another alternative form of distribution, just as the id boys exploited the shareware model…)

The Murder Simulator

DOOM was mentioned occasionally in a vaguely disapproving way by mainstream media outlets immediately after its release, but largely escaped the ire of the politicians who were going after games like Night Trap and Mortal Kombat at the time; this was probably because its status as a computer rather than a console game led to its being played in bedrooms rather than living rooms, free from the prying eyes of concerned adults. It didn’t become the subject of a full-blown moral panic until weirdly late in its history.

On April 20, 1999, Eric Harris and Dylan Klebold, a pair of students at Columbine High School in the Colorado town of the same name, walked into their school armed to the teeth with knives, explosives, and automatic weapons. They proceeded to kill 13 students and teachers and to injure 24 more before turning their guns on themselves. The day after the massacre, an Internet gaming news site called Blue’s News posted a message that “several readers have written in reporting having seen televised news reports showing the DOOM logo on something visible through clear bags containing materials said to be related to the suspected shooters. There is no word yet of what connection anyone is drawing between these materials and this case.” The word would come soon enough.

It turned out that Harris and Klebold had been great devotees of the game, not only as players but as creators of their own levels. “It’s going to be just like DOOM,” wrote Harris in his diary just before the massacre. “I must not be sidetracked by my feelings of sympathy. I will force myself to believe that everyone is just a monster from DOOM.” He chose his prize shotgun because it looked like one found in the game. On the surveillance tapes that recorded the horror in real time, the weapons-festooned boys pranced and preened as if they were consciously imitating the game they loved so much. Weapons experts noted that they seemed to have adopted their approach to shooting from what worked in DOOM. (In this case, of course, that was a wonderful thing, in that it kept them from killing anywhere close to the number of people they might otherwise have with the armaments at their disposal.)

There followed a storm of controversy over videogame content, with DOOM and the genre it had spawned squarely at its center. Journalists turned their attention to the FPS subculture for the first time, and discovered that more recent games like Duke Nukem 3D — the Columbine shooters’ other favorite game, a creation of Scott Miller’s old Apogee Software, now trading under the name of 3D Realms — made DOOM’s blood and gore look downright tame. Senator Joseph Lieberman, a longstanding critic of videogames, beat the drum for legislation, and the name of DOOM even crossed the lips of President Bill Clinton. “My hope,” he said, “[is] to persuade the nation’s top cultural producers to call a cease-fire in the virtual arms race, to stop the release of ultra-violent videogames such as DOOM. Several of the school gunmen murderously mimicked [it] down to the choice of weapons and apparel.”

When one digs into the subject, one can’t help but note how the early life stories of John Carmack and John Romero bear some eerie similarities with those of Eric Harris and Dylan Klebold. The two Johns as well were angry kids who found it hard to fit in with their peers, who engaged in petty crime and found solace in action movies, heavy-metal music, and computer games. Indeed, a big part of the appeal of DOOM for its most committed fans was the sense that it had been made by people just like them, people who were coming from the same place. What caused Harris and Klebold, alone among the millions like them, to exorcise their anger and aggression in such a horrifying way? It’s a question that we can’t begin to answer. We can only say that, unfair though it may be, perceptions of DOOM outside the insular subculture of FPS fandom must always bear the taint of its connection with a mass murder.

And yet the public controversy over DOOM and its progeny resulted in little concrete change in the end. Lieberman’s proposed legislation died on the vine after the industry fecklessly promised to do a better job with content warnings, and the newspaper pundits moved on to other outrages. Forget talk of free speech; there was too much money in these types of games for them to go away. Just ten months after Columbine, Activision released Soldier of Fortune, which made a selling point of dismembered bodies and screams of pain so realistic that one reviewer claimed they left his dog a nervous wreck cowering in a corner. After the requisite wave of condemnation, the mainstream media forgot about it too.

Violence in games didn’t begin with DOOM or even Wolfenstein 3D, but it was certainly amplified and glorified by those games and the subculture they wrought. While a player may very well run up a huge body count in, say, a classic arcade game or an old-school CRPG, the violence there is so abstract as to be little more than a game mechanic. But in DOOM — and even more so in the game that followed it — experiential violence is a core part of the appeal. One revels in killing not just because of the new high score or character experience level one gets out of it, but for the thrill of killing itself, as depicted in such a visceral, embodied way. This does strike me as a fundamental qualitative shift from most of the games that came before.

Yet it’s very difficult to have a reasonable discussion on said violence’s implications, simply because opinions have become so hardened on the subject. To express concern on any level is to invite association with the likes of Joe Lieberman, a politician with a knack for choosing the most reactionary, least informed position on every single issue, who apparently was never fortunate enough to have a social-science professor drill the fact that correlation isn’t causation into his head.

Make no mistake: the gamers who scoff at the politicians’ hand-wringing have a point. Harris and Klebold probably were drawn to games like DOOM and Duke Nukem 3D because they already had violent fantasies, rather than having said fantasies inculcated by the games they happened to play. In a best-case scenario, we can even imagine other potential mass murderers channeling their aggression into a game rather than taking it out on real people, in much the same way that easy access to pornography may be a cause of the dramatic decline in incidents of rape and sexual violence in most Western countries since the rise of the World Wide Web.

That said, I for one am also willing to entertain the notion that spending hours every day killing things in the most brutal, visceral manner imaginable inside an embodied virtual space may have some negative effects on some personalities. Something John Carmack said about the subject in a fairly recent interview strikes me as alarmingly fallacious:

In later games and later times, when games [came complete with] moral ambiguity or actual negativity about what you’re doing, I always felt good about the decision that in DOOM, you’re fighting demons. There’s no gray area here. It is black and white. You’re the good guys, they’re the bad guys, and everything that you’re doing to them is fully deserved.

In reality, though, the danger which games like DOOM may present, especially in the dismayingly polarized societies many of us live in in our current troubled times, is not that they ask us to revel in our moral ambiguity, much less our pure evil. It’s rather the way they’re able to convince us that the Others whom we’re killing “fully deserve” the violence we visit upon them because “they’re the bad guys.” (Recall those chilling words from Eric Harris’s diary, about convincing himself that his teachers and classmates are really just monsters…) This tendency is arguably less insidious when the bad guys in question are ridiculously over-the-top demons from Hell than when they’re soldiers who just happen to be wearing a different uniform, one which they may quite possibly have had no other choice but to don. Nevertheless, DOOM started something which games like the interminable Call of Duty franchise were only too happy to run with.

I personally would like to see less violence rather than more in games, all things being equal, and would like to see more games about building things up rather than tearing them down, fun though the latter can be on occasion. It strikes me that the disturbing association of some strands of gamer culture with some of the more hateful political movements of our times may not be entirely accidental, and that some of the root causes may stretch all the way back to DOOM — which is not to say that it’s wrong for any given individual to play DOOM or even Call of Duty. It’s only to say that the likes of Gamergate may be yet another weirdly attenuated part of DOOM’s endlessly multi-faceted legacy.

Creative Destruction?

In other ways, though, the DOOM community actually was — and is — a community of creation rather than destruction. (I did say these narratives of DOOM wouldn’t be cut-and-dried, didn’t I?)

John Carmack, by his own account alone among the id boys, was inspired rather than dismayed by the modding scene that sprang up around Wolfenstein 3D — so much so that, rather than taking steps to make such things more difficult in DOOM, he did just the opposite: he separated the level data from the game engine much more completely than had been the case with Wolfenstein 3D, thus making it possible to distribute new DOOM levels completely legally, and released documentation of the WAD format in which the levels were stored on the same day that id released the game itself.
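To make the separation of data from engine a little more tangible, here is a minimal sketch of reading a WAD file's table of contents. It relies on the commonly documented layout of the format (a 12-byte header holding a four-character tag of `IWAD` or `PWAD`, a lump count, and a directory offset, followed somewhere in the file by 16-byte directory entries); it is not id's own code. The function names are hypothetical, and `build_wad` exists only to fabricate a tiny test file in memory.

```python
import struct

# Little-endian layouts, per the commonly documented WAD format.
HEADER = struct.Struct("<4sii")     # tag, number of lumps, directory offset
DIR_ENTRY = struct.Struct("<ii8s")  # lump offset, lump size, padded name


def read_directory(data: bytes):
    """Return the WAD kind and a list of (name, offset, size) for each lump."""
    tag, num_lumps, dir_offset = HEADER.unpack_from(data, 0)
    if tag not in (b"IWAD", b"PWAD"):
        raise ValueError("not a WAD file")
    lumps = []
    for i in range(num_lumps):
        offset, size, raw_name = DIR_ENTRY.unpack_from(
            data, dir_offset + i * DIR_ENTRY.size
        )
        # Names are padded with zero bytes up to eight characters.
        name = raw_name.split(b"\0", 1)[0].decode("ascii")
        lumps.append((name, offset, size))
    return tag.decode("ascii"), lumps


def build_wad(tag: bytes, lumps) -> bytes:
    """Hypothetical helper: pack (name, payload) pairs into a WAD-shaped blob."""
    body = b""
    entries = []
    for name, payload in lumps:
        entries.append((HEADER.size + len(body), len(payload), name.encode("ascii")))
        body += payload
    directory = b"".join(DIR_ENTRY.pack(*entry) for entry in entries)
    return HEADER.pack(tag, len(lumps), HEADER.size + len(body)) + body + directory


# A made-up two-lump patch file: a map marker followed by three bytes of data.
demo = build_wad(b"PWAD", [("E1M1", b""), ("THINGS", b"\x01\x02\x03")])
kind, directory = read_directory(demo)
```

Because a level is just a set of named chunks in a file like this, a fan-made `PWAD` can be passed around on its own, without containing any of the engine or the original game data.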

The origins of his generosity hearken back once again to this idea that the people who made DOOM weren’t so very different from the people who played it. One of Carmack’s formative experiences as a hacker was his exploration of Ultima II on his first Apple II. Carmack:

To go ahead and hack things to turn trees into chests or modify my gold or whatever… I loved that. The ability to go several steps further and release actual source code, make it easy to modify things, to let future generations get what I wished I had had a decade earlier—I think that’s been a really good thing. To this day I run into people all the time that say, whether it was Doom, or maybe even more so Quake later on, that that openness and that ability to get into the guts of things was what got them into the industry or into technology. A lot of people who are really significant people in significant places still have good things to say about that.

Carmack speaks of “a decade-long fight inside id about how open we should be with the technology and the modifiability.” The others questioned this commitment to what Carmack called “open gaming” more skeptically than ever when some companies started scooping up some of the thousands of fan-made levels, plopping them onto CDs, and selling them without paying a cent to id. But in the long run, the commitment to openness kept DOOM alive; rather than a mere computer game, it became a veritable cottage industry of its own. Plenty of people played literally nothing else for months or even years at a stretch.

The debate inside id raged more than ever in 1997, when Carmack insisted on releasing the complete original source code to DOOM. (He had done the same for the Wolfenstein 3D code two years before.) As he alludes above, the DOOM code became a touchstone for an up-and-coming generation of game programmers, even as many future game designers cut their teeth and made early names for themselves by creating custom levels to run within the engine. And, inevitably, the release of the source code led to a flurry of ports to every imaginable platform: “Everything that has a 32-bit [or better] processor has had DOOM run on it,” says Carmack with justifiable pride. Today you can play DOOM on digital cameras, printers, and even thermostats, and do so if you like in hobbyist-created levels that coax the engine into entirely new modes of play that the id boys never even began to conceive of.

This narrative of DOOM bears a distinct similarity to that of another community of creation with which I happen to be much better acquainted: the post-Infocom interactive-fiction community that arose at about the same time that the original DOOM was taking the world by storm. Like the DOOM people, the interactive-fiction people built upon a beloved company’s well-nigh timeless software engineering; like them, they eventually stretched that engine in all sorts of unanticipated directions, and are still doing it to this day. A comparison between the cerebral text adventures of Infocom and the frenetic shooters of id might seem incongruous at first blush, but there you are. Long may their separate communities of love and craft continue to thrive.

As you have doubtless gathered by now, the legacy of DOOM is a complicated one that’s almost uniquely resistant to simplification. Every statement has a qualifier; every yang has a yin. This can be frustrating for a writer; it’s in the nature of us as a breed to want straightforward causes and effects. The desire for them may lead one to make trends that were obscure at best to the people living through them seem more obvious than they really were. Therefore allow me to reiterate that the new gaming order which DOOM created wouldn’t become undeniable to everyone until fully three or four years after its release. A reader recently emailed me the argument that 1996 was actually the best year ever for adventure games, the genre which, according to some oversimplified histories, DOOM and games like it killed at a stroke — and darned if he didn’t make a pretty good case for it.

So, while I’m afraid I’ll never be much of a gibber and/or fragger, we should continue to have much to talk about. Onward, then, into the new order. I dare say that from the perspective of the boots on the ground it will continue to look much like the old one for quite some time to come. And after that? Well, we’ll take it as it comes. I won’t be mooting any more stopping dates.

(Sources: the books The Complete Wargames Handbook (2000 edition) by James F. Dunnigan, Masters of Doom by David Kushner, Game Engine Black Book: DOOM by Fabien Sanglard, Principles of Three-Dimensional Computer Animation by Michael O’Rourke, and Columbine by Dave Cullen; Retro Gamer 75; Game Developer of June 1994; Chris Kohler’s interview with John Carmack for Wired. And a special thanks to Alex Sarosi, a.k.a. Lt. Nitpicker, for his valuable email correspondence on the legacy of DOOM, as well as to Josh Martin for pointing out in a timely comment to the last article the delightful fact that DOOM can now be run on a thermostat.)

source http://reposts.ciathyza.com/the-shareware-scene-part-5-narratives-of-doom/


Photo

Samsung Galaxy S9 and S9 Plus: Everything we know so far (Updated February 15)

In this post, which will be updated regularly, we take a closer look at the latest rumors surrounding the upcoming Samsung Galaxy S9 and S9 Plus smartphones.

The Samsung Galaxy S8 and S8 Plus are among the best smartphones you can get. They offer gorgeous bezel-less designs, sexy curved displays, and top-of-the-line specs. But they do have faults, which we hope Samsung will address with their successors. These include the weirdly-positioned fingerprint scanner, the lack of dual-cameras, an easily-fooled facial recognition system, and more. Fixes for all of these, and new features, could be part of the Galaxy S9 and S9 Plus.

Samsung hasn’t shared any details about the Galaxy S9 and S9 Plus with the public yet. But we have come across a lot of reports and leaks that give us a good idea of what to expect in terms of the Samsung Galaxy S9 and Galaxy S9 Plus’ specs, features, design, price, and more. If you’re interested in learning more about the two new powerhouses from Samsung, keep reading. You’ll find all the latest Samsung Galaxy S9 rumors below.

Samsung Galaxy S9 release date

The Samsung Galaxy S9 and S9 Plus will be announced a month sooner than their predecessors. The smartphones will make their debut on February 25 in Barcelona, a day before MWC kicks off. As for the Samsung Galaxy S9 release date, two usually reliable sources claim the device will be officially released on Friday, March 16.

Industry leaker Evan Blass claimed in the tweet attached below that the Galaxy S9 and S9 Plus would be available for pre-order on March 1, before being officially released on March 16.

According to a C-level executive at a major casemaker, the go-to-market schedule for Galaxy S9 / S9+ is as follows:
Launch – 2/26
Pre-orders – 3/1
Ships/releases – 3/16
— Evan Blass (@evleaks) January 16, 2018

SamMobile says its sources have also indicated a March 16 release date, though only for the U.S. and/or South Korea, with other markets to follow shortly afterwards.

Samsung Galaxy S9 specs and features

The Samsung Galaxy S9 and Galaxy S9 Plus won’t be major upgrades over their predecessors. According to a report from VentureBeat, they will come with the same curved displays as the Galaxy S8 series. This means we’ll see 5.8- and 6.2-inch Super AMOLED panels with QHD+ resolution and an 18.5:9 aspect ratio.

ETNews reports that both the Galaxy S9 and the Galaxy S9 Plus will use Y-OCTA display technology, which integrates the touch layer in the encapsulation layer of the OLED display, rather than using a distinct film-type layer like on older generations of Samsung’s displays. Y-OCTA was previously used only on the Galaxy S8, but with the new generation, both the S9 and the S9 Plus will take advantage of it. Y-OCTA displays are thinner, have better optical properties and are reportedly 30 percent cheaper to manufacture.

The Galaxy S8 and S8 Plus are identical except for screen and battery sizes, but their successors change things up. The Galaxy S9 Plus is said to have 2 GB of RAM more than its smaller brother (6 GB vs 4 GB). It might also feature a dual-camera setup, while the S9 should only have a single shooter on the back.

An image of an alleged Galaxy S9 retail box gives us additional info regarding the specs of the Galaxy S9. It suggests that the flagship’s camera could have a 12 MP sensor with OIS and variable aperture — f/1.5 for low-light shots and f/2.4 for when there’s more light available. We’ve already seen this technology on Samsung’s high-end flip phone called the W2018, which launched back in December.

The retail box also mentions “Super Slow-mo,” hinting that the Galaxy S9 could capture videos at 1,000 fps — just like the Sony Xperia XZ Premium. The camera could also perform well in low-light conditions and include support for creating 3D emojis.
Samsung is already teasing all three features with short videos.

Some of these camera rumors are backed up by a recent report from ETNews. The publication reaffirmed that the S9 will have a 12 MP rear camera with an f/1.5 variable aperture lens (up to f/2.4) — the lowest f-number ever for Samsung (the S8 and Note 8 cameras came with an f/1.7 aperture). The S9’s (and likely S9 Plus’) front camera is said to come in at 8 MP, with autofocus and the iris-scanning technology seen previously.

ETNews claims the Galaxy S9’s iris scanner will be integrated in the front-facing camera, while the Galaxy S9 Plus will have a discrete iris scanner and a regular selfie camera. It’s not clear why Samsung would go down this route, but space limitations and supply constraints are two possible explanations. The iris scanner/camera combo on the Galaxy S9 is said to be manufactured by two Korean suppliers — Partron and MC Nex. Previous reports, though, have claimed the Galaxy S9’s iris scanning lens will be upgraded from 2 MP to 3 MP. This could be only for the Galaxy S9 Plus, however.

Samsung recently published new details on its own website about the company’s new ISOCELL camera sensors. Some of that technology is likely to show up first in the Galaxy S9 and S9 Plus. One of the more interesting hardware improvements mentioned is called ISOCELL Fast, which is a 3-stack fast readout sensor. Samsung claims that this will allow cameras with this sensor to record video in Full HD (1080p) resolution with a whopping 480 frames per second. That means the sensor will be able to offer super-slow-mo video at a high resolution.
The same sensor is also supposed to have a feature called Super PD, which Samsung hints will give smartphone cameras faster autofocus.

The page also talks about another sensor, ISOCELL Bright, which is supposed to improve photos taken in low-light conditions by combining four normal-sized pixels into one large pixel. There’s also a mention of ISOCELL Dual, which is supposed to improve features in smartphones with dual sensors, including better light sensitivity, depth effects, and sharper brightness. Finally, the page mentions ISOCELL Slim, a sensor that is supposed to offer high-quality images in slim smartphone camera modules, with pixels as small as 0.9 microns.

The Galaxy S9 might have stereo speakers on board with Dolby Surround and ship with a free pair of headphones, both tuned by AKG. Then there’s also the IP68 rating for protection against water and dust, wireless charging, and an improved iris scanner. Rumors also suggest that both devices will be powered by the same chipset: either the Exynos 9810 or the recently announced Snapdragon 845, depending on the region.

According to that same ETNews report mentioned above, Samsung is also said to be saving space inside the device thanks to a new type of printed circuit board (PCB). This “Substrate Like PCB” (SLP) technology is said to be “thinner and narrower” than the previous technology and will be used in models with Samsung’s Exynos chip, which are expected to account for 60 percent of total sales.
What this would mean for the Snapdragon variant released in the West wasn’t speculated upon, but it should theoretically mean that the Exynos models have more room for internal components.

We also expect to see a new location for the fingerprint scanner. The Galaxy S9 smartphones will still sport the scanner on the back, but it won’t sit right next to the camera like on their predecessors. Instead, it’ll likely be located below the cameras, in the middle of the devices. This is fantastic news, as the scanner’s location on the S8 is one of its biggest drawbacks. Not only does it look weird, it’s also impractical.

According to Business Korea, Samsung will stick with the same 2D facial recognition software found on the Galaxy S8. The company has decided to ignore 3D technology due to “technological limitations” and concerns over user security. But the facial recognition feature could get a small upgrade: it should be faster. What’s more interesting is that the handsets could have a feature called “Intelligent Scan”, which is said to combine the iris scanner and face recognition technology to offer a more secure way of unlocking your device.

The Galaxy S9 and S9 Plus will run Android Oreo with the Samsung Experience UI on top. Bixby, which made its debut on the Galaxy S8 series, should also be on board, probably in an enhanced form.

It’s worth mentioning that the Galaxy S9 smartphones have recently stopped by the FCC, with model numbers SM-G960F and SM-G965F. However, apart from a long list of network bands and other types of connectivity, the listings don’t reveal much else.

Recently, Samsung’s upcoming smartphones, carrying model numbers SM-G960 (Galaxy S9) and SM-G965 (Galaxy S9 Plus), have been certified by the FCC again. This time, a few different versions of the devices are listed that end with U, U1, W, and XU. The letter U stands for the U.S. model, U1 is for the unlocked variants, W is for Canada, and XU is for demo units.
The FCC ID reveals the full list of supported GSM, CDMA, LTE, and UMTS bands for both the Galaxy S9 and the Galaxy S9 Plus. They show that the smartphones will support all major bands on all carriers. That means Samsung will probably release one version of each smartphone that will be sold by all carriers.

| | Samsung Galaxy S9 | Samsung Galaxy S9 Plus |
| --- | --- | --- |
| Display | 5.8-inch 18.5:9 Super AMOLED, 2960 x 1440 resolution, 570 ppi | 6.2-inch 18.5:9 Super AMOLED, 2960 x 1440 resolution, 529 ppi |
| Processor | Qualcomm 845 or Exynos 9810 | Qualcomm 845 or Exynos 9810 |
| RAM | 4 GB | 6 GB |
| Storage | 64 GB | 128 GB |
| MicroSD | Yes, up to 256 GB | Yes, up to 256 GB |
| Camera | Primary: a single 12 MP camera with variable aperture; Secondary: 8 MP | Primary: dual 12 MP cameras with variable aperture; Secondary: 8 MP |
| Software | Android Oreo, Samsung Experience | Android Oreo, Samsung Experience |
| Headphone jack | Yes | Yes |
| Water resistance | IP68 | IP68 |

Samsung Galaxy S9 design

Although Samsung hasn’t released official images of the two devices yet, we have a good idea of what the Galaxy S9 smartphones might look like. OnLeaks has teamed up with 91mobiles and released alleged CAD renders and a 360-degree video of the Galaxy S9. They show that the device could look very similar to its predecessor, with a few exceptions. The biggest one is that the fingerprint scanner could be positioned below the camera sensor instead of next to it, as mentioned in the specs and features section above.

OnLeaks then joined forces with MySmartPrice and also released alleged CAD renders and a 360-degree video of the Galaxy S9 Plus. As expected, it looks more or less identical to its smaller brother. The only difference is that the device features a dual-camera setup, while the S9 only features a single shooter on the back.

More recent renders leaked by VentureBeat and Evan Blass tell us the same story as the ones above, but also show off the devices in two eye-catching colors: Blue Coral and Lilac Purple.
Having a similar design to their predecessors isn’t a bad thing. The Galaxy S8 and S8 Plus are gorgeous devices that look and feel premium. The two smartphones have been well received by users, so there’s no real reason to drastically change their design. They might not be to everyone’s taste, mainly due to the glass back. It’s a fingerprint magnet and not as strong as metal, but it does allow for wireless charging.

Samsung Galaxy S9 price

Price details for the Samsung Galaxy S9 may have been revealed in a leak from ETNews (via PhoneArena), which hints the smartphone could be more expensive than its predecessor. The speculation points to the South Korean prices for the handset, from which we can get an idea of what to expect for U.S. pricing.

The S9 is said to start at 950,000-990,000 Korean Won, or around $884-$920. By comparison, the Galaxy S8 began at 935,000 Korean Won, which was about $825. So it looks like we might see an increase of anywhere between $50 and $100 on last year’s model.

These are only roughly converted figures, though, and they’re not exactly in line with how the devices are priced in different markets. The S8 actually went on sale in the U.S. for around $750, which is $75 less than what the Korean retail price indicated.

With that in mind, it’s extremely unlikely that the S9 will end up $170 more expensive than the S8. However, we could see it launch in the region of $800 to $850, which would still make it the most expensive Galaxy S model yet. For the Galaxy S9 Plus, you could expect to add another $100 on top.

If prices do increase, we’ll likely see a domino effect. The fact is that Samsung dictates pricing with its Galaxy S series. If the S9 costs more, we can expect devices like the successor to the LG G6, Huawei P20, and HTC U12 to come with higher price tags as well.

Keep in mind that nothing has been confirmed yet.
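The price reasoning above is simple arithmetic, so it can be checked in a few lines. This is only a rough sketch: the dollar figures are the article’s own conversions, the variable names are made up for illustration, and the idea that the S9 would keep the same Korea-to-U.S. price gap as the S8 is an assumption, not something the leak states.

```python
# Figures quoted in the article; the USD values are the article's own conversions.
S8_KOREA_USD = 825           # 935,000 KRW launch price of the Galaxy S8
S8_US_USD = 750              # approximate U.S. launch price of the Galaxy S8
S9_KOREA_USD = (884, 920)    # 950,000-990,000 KRW rumored for the Galaxy S9

# How much cheaper the S8 was in the U.S. than its converted Korean price.
us_discount = S8_KOREA_USD - S8_US_USD

# Rumored increase over the S8 in Korea, in converted dollars.
korea_increase = tuple(price - S8_KOREA_USD for price in S9_KOREA_USD)

# Hypothetical assumption: the S9 keeps the same dollar gap between Korea and the U.S.
s9_us_estimate = tuple(price - us_discount for price in S9_KOREA_USD)

# Increase if the top Korean figure carried over to the U.S. unchanged.
worst_case_increase = S9_KOREA_USD[1] - S8_US_USD

print("U.S. discount on the S8:", us_discount)
print("Increase in Korea (USD):", korea_increase)
print("Estimated U.S. S9 price range:", s9_us_estimate)
print("Worst-case U.S. increase:", worst_case_increase)
```

Under that assumption the estimate lands inside the $800 to $850 window mentioned above, while the unadjusted top figure gives the $170 jump the article calls unlikely.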
It’s still possible we won’t see a price hike and that the Galaxy S9 handsets will cost the same as their predecessors. What we can be sure of is that they won’t be cheaper.

These are all the rumors about the Galaxy S9 and S9 Plus we have come across so far. We’ll update this page as soon as we hear more.

Meanwhile, do let us know your thoughts on Samsung’s upcoming smartphones. Would you consider getting either of them based on the info we know so far? Let us know in the comments.

via Android Authority http://bit.ly/2rEq22K

0 notes

Text

Office Furnishings Ireland, Office Partitions Ireland

Microsoft Reveals New Windows 10 Workstations Edition For Power Customers

HP Z Series, Dell Precision & ThinkCentre Workstation powerhouses for intensive multi-threaded applications, 3D visualisation and 3D modelling software and fast desktop rendering. Look out for computer mainframe trays, which are specially designed to hold the computer tower. This helps keep the tower safe and out of the way of your feet so you have more space underneath the desk. According to health experts, remaining in a sedentary position for extended periods of time (this includes sitting and standing still) puts excess stress on the body, leading to cramped muscles, stiff joints, poor blood circulation, and upper and lower back pain. Improper sitting posture as well as a chair with poor lumbar (low back) support can further exacerbate these conditions. The Software Licensing Service reported that the application ID does not match the application ID for the license.

Instead of trying to put together different components, I would recommend getting a Supermicro barebones workstation chassis and adding the CPUs, memory and cards you need. The only reason to get a dual Xeon workstation is if you want more than 64 GB of RAM (you don’t need dual CPUs for this, but it comes down to the motherboards), or you do intensive rendering (or any other process that benefits from multithreading). To conclude, the FlexiSpot Desktop Workstation 27 inches is great, especially if you are interested in trying to work in a standing position first and you do not want to pay for a full standing desk. The HP Z4 Workstation also features significant design improvements for tomorrow’s office environment, with a sleek and modern industrial design that includes ergonomic front and rear handles, a significantly smaller chassis for cramped workspaces and a new dust filter option for industrial environments.

The HP Z400 Workstation is HP’s latest entry-level workstation, but as with all HP workstations, entry-level hardly describes the features and performance offered by this machine. At first glance, the Precision M3800 might look less like a desktop replacement and more like a lightweight, portable system, but this 15.6-inch touchscreen laptop is a powerhouse underneath. Now, the study cannot tell us for certain whether social media is causing this rewiring or whether people with these different brain structures are just more likely to flock to Facebook. New multi-user workstations will provide your business with the highest level of working efficiency possible. Most of all, high-performance workstations need to be reliable and support high-definition video.

Portugal boasted that Angola, Guinea, and Mozambique had been its possessions for five hundred years, during which time a ‘civilizing mission’ had been going on. At the end of five hundred years of shouldering the White man’s burden of civilizing “African Natives,” the Portuguese had not managed to train a single African doctor in Mozambique, and the life expectancy in Eastern Angola was less than thirty years. Reduce power consumption and improve workstation reliability by way of the innovative HP Z Workstation BIOS featuring preset sleep states, adjustable fan speeds to maximize operating efficiency, and power management features. “From the blazing fast performance of DaVinci Resolve to real-time video capture with UltraStudio 4K, Mac Pro is a revolution in pro desktop design and performance,” said Grant Petty, CEO of Blackmagic Design.

As an example of the kinds of things possible through HP’s history with workstations: DreamWorks, the animation company that brought us Shrek, wanted to move away from the dying SGI platform, but there simply wasn’t a viable option to handle the complex rendering operations they performed every day. The Software Licensing Service reported that the computer BIOS is missing a required license. The style, size and condition of the furniture set can lend class to an average room. This is designed to provide some extra space for files on the cheaper mechanical drive and also offer a faster solid-state drive to run your applications from. • The front office accounting system shall be customized and tailored to track each hotel’s needs.

Human technology is developed from the moment that it is felt that people are unhappy. In this configuration, the user operating system is available while disconnected (for editing documents and working on locally cached e-mail), but requires hardware and support processes that can accommodate this disconnected state. The Konecranes XA and XM workstation crane systems are designed to meet the varied and demanding requirements of workstations and production lines that need up to 2,000 kg of lifting capacity. However, the majority of their success came from new markets, such as integrated office workstations and desktop publishing systems. In terms of computing power, workstations lie between personal computers and minicomputers, although the line is fuzzy on both ends.