#adduser centos


Text

This practical guide helps you create users and groups, and add users to groups, in CentOS 7 with ease.
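
As a rough illustration of the tasks this guide covers, the sketch below creates a group, creates a user, and adds the user to the group with the standard `groupadd`, `useradd`, and `usermod` tools. The user name `alice`, the group name `developers`, and the `APPLY` dry-run switch are hypothetical choices made for this example; by default the script only prints the commands.

```shell
#!/usr/bin/env bash
# Dry-run wrapper (an assumption of this sketch): print each command unless
# APPLY=1 is set, in which case run it for real (requires root).
run() {
  if [ "${APPLY:-0}" = "1" ]; then
    "$@"
  else
    printf '%s\n' "$*"
  fi
}

# Create a group and a user, then add the user to the group as a
# supplementary group (-a appends, -G names the group).
create_user_in_group() {
  local user="$1" group="$2"
  run groupadd "$group"
  run useradd -m "$user"
  run usermod -aG "$group" "$user"
}

# Hypothetical names, for illustration only.
create_user_in_group alice developers
```

Running it with `APPLY=1` as root would execute the three commands instead of printing them.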

#create user centos 7#centos 7 add user#add user centos 7#how to create group in centos 7#adduser centos


Photo

Create User in Linux Command


Text

How to get a free teamspeak server


How to Make a TeamSpeak 3 Server on Ubuntu 16.04

To begin, let's set up a TeamSpeak server on a Linux VPS running the Ubuntu 16.04 operating system. The following procedure can be broken down into 6 simple steps. Start the process by accessing your VPS via SSH.

Before you continue, check that your system is up to date by entering the following command into the terminal:

    apt-get update && apt-get upgrade

Step 1 – Create a New System User

First of all, add a new user by executing the following command:

    adduser --disabled-login teamspeak

You will be asked to enter the user's personal details and confirm that they are correct. Keep in mind that all fields can be left blank. Then, access the home directory of the newly created user:

    cd /home/teamspeak

Step 2 – Download and Extract TeamSpeak 3 Server

The next task is to download the latest TeamSpeak 3 server software for Linux. You may use the wget command to download the setup file directly to the VPS:

    wget

Once it finishes, extract the downloaded file:

    tar xvf teamspeak3-server_linux_amd64-3.13.6.tar.bz2

All the contents will appear in the teamspeak3-server_linux_amd64 folder. The next step is to move everything to /home/teamspeak and remove the downloaded archive:

    cd teamspeak3-server_linux_amd64 && mv * /home/teamspeak && cd.

Make sure to check your TeamSpeak software version and enter the commands accordingly. If you enter the incorrect version number, TeamSpeak won't run.

Execute the ls command. You should see a screen similar to the example below if everything was done correctly:

Step 3 – Accept the TeamSpeak 3 License Agreement

Since the release of TeamSpeak 3 server version 3.1.0, it is mandatory to accept the license agreement. The quickest way to do it is by running the following command:

    touch /home/teamspeak/.ts3server_license_accepted

This will create a new empty file called .ts3server_license_accepted, meaning you have accepted the license terms.

Step 4 – Start the TeamSpeak 3 Server on Startup

Now, it's time to set up the TeamSpeak server to start when the server boots up. We will need to create a file called rvice in the /lib/systemd/system directory.
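
For the start-on-boot step, a systemd unit along the following lines is one plausible shape. This is a sketch under stated assumptions, not the tutorial's own file: the `ts3server_startscript.sh` path, the PID file location, and the `teamspeak` user and group are assumed from a default extraction into /home/teamspeak, and the function name is made up for this example. The script only prints the unit; saving it under /lib/systemd/system requires root.

```shell
#!/usr/bin/env bash
# Print a candidate systemd unit for a TeamSpeak 3 server.
# Assumptions: server files live in /home/teamspeak, the service runs as
# user "teamspeak", and the bundled ts3server_startscript.sh starts/stops it.
render_teamspeak_unit() {
  local home="${1:-/home/teamspeak}"
  cat <<EOF
[Unit]
Description=TeamSpeak 3 Server
After=network.target

[Service]
WorkingDirectory=${home}
User=teamspeak
Group=teamspeak
Type=forking
ExecStart=${home}/ts3server_startscript.sh start
ExecStop=${home}/ts3server_startscript.sh stop
PIDFile=${home}/ts3server.pid
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}

render_teamspeak_unit
```

Once the output is saved as a unit file, `systemctl daemon-reload` followed by `systemctl enable` and `systemctl start` on that unit would register it and bring the server up at boot.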

How to Make a TeamSpeak 3 Server on Ubuntu 16.04.

Step 2 – Download and Extract TeamSpeak 3 Server.

Step 3 – Accept the TeamSpeak 3 License Agreement.

Step 4 – Start the TeamSpeak 3 Server on Startup.

Step 6 – Connect via the TeamSpeak Client.

How to Make a TeamSpeak 3 Server on CentOS 7.

Step 2 – Download and Extract the TeamSpeak 3 Server.

How to Make a TeamSpeak 3 Server on Windows.

Step 1 – Download and Extract the TeamSpeak 3 Server.

Step 2 – Run the TeamSpeak 3 Server Installer.

Step 3 – Connect via the TeamSpeak Client.

How to Make a TeamSpeak 3 Server on macOS.

Step 2 – Accept the TeamSpeak 3 License Agreement.

Step 3 – Start the TeamSpeak 3 Server and Retrieve Your Privilege Key.

Step 4 – Connect via the TeamSpeak Client.

Bonus: How to Point a Domain to a TeamSpeak 3 Server.

Step 1 – Create the Subdomain Using A Records.


Text

In this tutorial, I will show you how to set up a Docker environment for your Django project while it is still in the development phase. Although I am using Ubuntu 18.04, the steps remain the same for whatever Linux distro you use, with the exception of the Docker and docker-compose installation.

In a typical software development project, code moves through a pipeline that usually starts on the developer's machine, passes through a VCS, and ends in production. We will try to stick to that flow so that you understand where each DevOps tool comes in. DevOps is all about eliminating the old phrase "But it works on my laptop!". Docker containerization creates the production environment for your application so that it can be deployed anywhere: develop once, deploy anywhere!

Install Docker Engine

You need a Docker runtime engine installed on your server/desktop. Our Docker installation guides should be of great help: How to install Docker on CentOS / Debian / Ubuntu

Environment Setup

I have set up a GitHub repo for this project called django-docker-dev-app. Feel free to fork it or clone/download it. First, create the folder to hold your project. My workspace folder is called django-docker-dev-app. cd into the folder, then open it in your IDE.

Dockerfile

In a containerized environment, all applications live in containers. A container is a running instance of an image, and an image is built up from layers. You can create your own image or use other images from Docker Hub. A Dockerfile is a text document that Docker reads to automatically build an image. Our Dockerfile will list all the dependencies required by our project. Create a file named Dockerfile.

    $ nano Dockerfile

In the file, type the following:

    # base image
    FROM python:3.7-alpine
    # maintainer
    LABEL Author="CodeGenes"
    # This environment variable ensures that Python output is sent straight
    # to the terminal without being buffered first
    ENV PYTHONUNBUFFERED 1
    # copy the requirements file to the image
    COPY ./requirements.txt /requirements.txt
    # let pip install the required packages
    RUN pip install -r /requirements.txt
    # directory to store the app source code
    RUN mkdir /app
    # switch to the /app directory so that everything runs from here
    WORKDIR /app
    # copy the app code to the image working directory
    COPY ./app /app
    # create a user to run the app (running as root is not recommended);
    # -D creates the user with no password
    RUN adduser -D user
    # switch from root to user to run our app
    USER user

Create the requirements.txt file and add your project's requirements.

    Django>=2.1.3,=3.9.0, sh -c "python manage.py runserver 0.0.0.0:8000"

Note the YAML file format! We have one service called ddda (Django Docker Dev App). We are now ready to build our service:

    $ docker-compose build

After a successful build you will see:

    Successfully tagged djangodockerdevapp_ddda:latest

It means that our image is called djangodockerdevapp_ddda:latest and our service is called ddda.

Note that we have not yet created our Django project! If you followed the above procedure successfully, then congrats, you have set up a Docker environment for your project! Next, start your development by doing the following.

Create the Django project:

    $ docker-compose run ddda sh -c "django-admin.py startproject app ."

To run your container:

    $ docker-compose up

Then visit http://localhost:8000/

Run migrations:

    $ docker-compose exec ddda python manage.py migrate

From now onwards you will run Django commands like the one above, adding your command after the service name ddda. That's it for now. I know it is simple and there are a lot of features we have not covered, like a database, but this is a start!
The project is available on GitHub here.
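
The text above names a single service, ddda, whose command runs `python manage.py runserver 0.0.0.0:8000`. A compose file matching that description might look like the sketch below. Everything beyond the service name and the command (the compose file version, the build context, the port mapping, and the bind mount of ./app) is an assumption made for illustration, not the author's file. The script prints the YAML so it can be reviewed before being saved as docker-compose.yml.

```shell
#!/usr/bin/env bash
# Print a minimal docker-compose.yml for the "ddda" service.
# Assumed details: version "3", build context ".", port 8000 published,
# and ./app bind-mounted to /app so code edits show up without a rebuild.
render_compose() {
  cat <<'EOF'
version: "3"

services:
  ddda:
    build:
      context: .
    ports:
      - "8000:8000"
    volumes:
      - ./app:/app
    command: >
      sh -c "python manage.py runserver 0.0.0.0:8000"
EOF
}

render_compose
```

The bind mount is a development convenience; a production image would normally rely on the `COPY ./app /app` step in the Dockerfile instead.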


Text

Moving part #3: web server

I decided to create an online video game. I didn't pick a game engine yet, but I have a good idea of how the client side will work (Bootstrap + React).

The client-side stuff runs in the browser, of course, but it doesn't get there magically. The static assets (CSS, JavaScript, images, etc) have to be hosted on a web server somewhere. And to make the user experience as great as possible, that web server should probably be hiding behind a Content Delivery Network although it's not mandatory for the time being.

My video game will likely be a single-page web application, which means that the content of the page will be generated dynamically in the browser via JavaScript (like Gmail or YouTube) rather than be mostly generated on a server somewhere (like IMDB or Amazon.com).

This means that I can safely postpone decisions regarding the API (the interaction between the web page and the backend, like a central database or something similar); all I need to decide at this point is where to host the static assets, which doesn't shackle me to any given provider for the API part.

Choosing a domain name

Having a cool domain name is always great, but it's not as important as it used to be. A lot of people nowadays go directly to a search engine page rather than type a domain name for the first time; after that the URL is in the browser cache and possibly bookmarked, so it matters even less.

It doesn't mean that the domain name is not important. For instance, I can never remember the domain name for the webcomic Cyanide & Happiness, and I have to do a web search every time rather than start typing the address in the address bar; a small annoyance, of course, but an annoyance nonetheless, and with no apparent reason.

For my video game, I already picked a name: the dollar puppet (for reasons that will become clearer later). Registering a domain name is easy and there are many providers, but this is one element for which I always pick AWS. Prices are low, privacy is included, there are a lot of TLDs available, and I can choose to either host the DNS records on AWS Route 53 or point the DNS somewhere else.

Since I don't know yet if I'll use AWS a lot for this video game, I'll keep the zone that gets created by default on Route 53 when registering a domain name. I can delete it later; in the meantime it will cost me $0.50 / month, and while I find that expensive for what I get out of it, I can live with it.

Why do I find $0.50 / month expensive? Because I have, at the moment, about 45 registered domains (for no good reason); that's about $500 in domain registration fees per year (unavoidable), and Route 53 hosting would cost me roughly another $270 / year (45 × $0.50 × 12), while I can get that hosting for free with my $2/month Zoho email subscription.

(BTW - I love Zoho for email, it's a breeze to get a really really good setup for multiple domains)

As a Linode customer I can also get free DNS hosting there and the UI is really easy to use.

Back to the fundamental question

To cloud or not to cloud? There's no really bad decision possible here, because even if I pick a terrible provider for the web server, the stuff will be cached on a CDN so it will not impact end users that much.

The scenarios that make sense:

run nginx on a Linode VM, and use Cloudflare if I want a CDN

store the assets in AWS S3 (which can be configured to run as a web server) and use AWS CloudFront for the CDN

use Linode object storage (similar to S3) and again use Cloudflare for the CDN

Instead of AWS I could use Azure (they're as reliable and secure as AWS), and instead of Linode I could use DigitalOcean, but I'm used to AWS and Linode and I don't care enough to consider other providers at the moment.

The plot thickens: SSL certificates

In this day and age it makes no sense to use plain HTTP (or plain WebSocket, for that matter) so it's clear I'll have to deal with SSL certificates (more accurately: TLS certificates, but who cares).

There are two easy ways to get SSL certificates for free: Let's Encrypt and AWS certificates. On AWS, the certificates are only available for specific services (e.g. CloudFront); when used for VMs, they cannot be assigned to a single instance, only to a load balancer (which cannot be turned off to save money).

Pricing

Whether I'm using AWS or Linode, I'm looking at most at $5/month price tag for this part, so it doesn't matter much to me.

Deployment on Linode

Provisioning a web server on Linode is not a lot of work:

Provision a VM

Add my SSH keys

Configure the firewall

Install nginx

Install certbot (to allocate and renew SSL certificates)

Upload my code
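
The nginx part of the list above can be sketched as a small static-site server block. The domain `example.com`, the web root `/var/www/game`, and the function name are placeholders invented for this example; certbot would normally add the TLS directives afterwards, so only the plain HTTP block is shown.

```shell
#!/usr/bin/env bash
# Print an nginx server block that serves static assets.
# Both arguments are hypothetical placeholders, not values from the post.
render_static_site() {
  local domain="${1:-example.com}" root="${2:-/var/www/game}"
  cat <<EOF
server {
    listen 80;
    listen [::]:80;
    server_name ${domain};

    root ${root};
    index index.html;

    # Single-page app: unknown paths fall back to index.html.
    location / {
        try_files \$uri \$uri/ /index.html;
    }
}
EOF
}

render_static_site
```

The `try_files` fallback is there because a single-page application handles its own routes in the browser, so any path that is not a real file should still return index.html.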

In terms of Linux distro, I'm a huge fan of Fedora on the desktop, but for a server it's not ideal given that the release schedule is fast-paced and I don't have time to deal with updates. If I was to do this right, I would probably pick Arch Linux since it's a rolling release and is the easiest distro for server hardening, but it's too much work so this time I'd probably go with CentOS 8, which comes with the added benefit of working smoothly with podman for rootless containers.

Ubuntu would work fine too, but if I'm going to expose a server to the evil people of the interwebs, I don't see SELinux as optional so it's an extra step; I also don't see why I have to manually enable firewalld, or why I have to suffer through the traumatic experience of using nano when running visudo, or why I have to use adduser because the default options for useradd suck, so this time I'll pass on Ubuntu.

Deployment on AWS

Running a static website on AWS is very easy:

Create S3 buckets in 2 or 3 regions (the name is not really important) and configure them to allow static hosting (it's just a checkbox and a policy on the bucket). In theory it works with a single region but might as well get the belt & suspenders setup since the cost is more or less the same; also the multi-region setup allows for cool A/B testing and other fun deployment scenarios later.

Provision an SSL certificate matching the domain name

Create a CloudFront distribution and configure it to use the S3 buckets as origin servers
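
The S3 part of these steps maps onto a handful of AWS CLI calls. The sketch below only prints them (a dry run); the bucket names, regions, and local `./dist` directory are made-up placeholders, and the bucket policy, certificate, and CloudFront distribution are left out because they depend on account-specific settings.

```shell
#!/usr/bin/env bash
# Dry run: print the AWS CLI commands for one static-hosting bucket.
# Bucket, region and source directory are hypothetical placeholders.
s3_static_site_commands() {
  local bucket="$1" region="$2" src="${3:-./dist}"
  # Create the bucket in the chosen region.
  echo "aws s3 mb s3://${bucket} --region ${region}"
  # Turn on static website hosting with an index document.
  echo "aws s3 website s3://${bucket} --index-document index.html"
  # Upload the static assets, removing files that no longer exist locally.
  echo "aws s3 sync ${src} s3://${bucket} --delete"
}

# One call per region for the multi-region setup described above.
s3_static_site_commands dollar-puppet-assets-use1 us-east-1
s3_static_site_commands dollar-puppet-assets-euw1 eu-west-1
```

Printing the commands first makes it easy to review bucket names before anything is created, since S3 bucket names are global and cannot be renamed later.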

That's it. High availability and all that, in just a few clicks, although for some reason it does take a while for the CloudFront distribution to be online (sometimes 30 minutes).

Another cool thing with this setup is that I can put my static assets in CodeCommit (the dirt cheap AWS git service) and use CodeBuild to update the S3 buckets whenever the code changes. There are some shenanigans involved because of the multi-region setup but nothing difficult.

Some people prefer GitHub to CodeCommit because of additional features, and this can work too, but I'm not a git maniac and I don't want to deal with OAuth to connect GitHub to AWS, so I'll pass on GitHub. And to be honest, if I were unable to use CodeCommit for some reason, I'd probably deploy a Gitea server somewhere rather than use GitHub, which I find too opinionated.

Operations on Linode

Running my own web server is not a lot of work. Once nginx is configured, the only thing I would have to do would be a bit of monitoring and dealing with the occasional reboot when the Linode engineers have to update the hypervisor (they send notifications ahead of time and also once it's done). As long as I configure nginx (or the podman container) for autostart I don't have to do anything other than make sure it's still working after the reboot.

If I go with the object storage solution, it's even easier since there's no VM to deal with.

Operations on AWS

When using S3 and CloudFront, there's nothing else to do on AWS, except keeping an eye on certificate renewals and the occasional change in how the platform works (which doesn't happen a lot and comes with heads up long before it happens).

And the winner is...

All things considered, for the website hosting I'm going to use AWS S3 and CloudFront. If at some point Linode offers a CDN service I will probably revisit this, but for now I don't want to deal with origin servers hosted somewhere and the CDN hosted somewhere else.


Link

Delta Chat is an interesting alternative for a self-hosted IM messenger. It exchanges messages over existing email protocols (which, in the long run, means you need not worry about it being blocked), and its strong resistance to message interception, the absence of a central server, and the ability to deploy it on your own server mean you need not worry about your data falling into the wrong hands.

There are many IM messengers with end-to-end encryption today, but far fewer that can be quickly deployed on your own server.

While studying the options, my eye fell on Delta Chat, which has already been mentioned on Habr: a messenger without a centralized server infrastructure that uses mail servers to deliver messages. This makes it possible to deploy it, for example, on your home server and communicate from devices, including ones without internet access. The advantages of this approach include:

You manage your own information, including the encryption keys.

You do not hand your address book to anyone.

No phone number is needed to register.

Clients exist for all popular systems: Windows, Linux, Android, MacOS, iPhone.

Additional STARTTLS/SSL encryption in transit, provided by the mail server.

Old messages can be set to be deleted from the device (disappearing messages).

Messages can be set to be deleted from the server upon receipt.

Fast delivery thanks to IMAP push.

Secure group chats.

Support for sending files, photos, and video.

The server and the client are open-source software and completely free.

Possible drawbacks:

There is no way to create native audio and video conferences.

Encryption keys have to be exported/imported to set up one account on several devices.

An interesting fact: Roskomnadzor has already demanded that the Delta Chat developers provide access to user data and encryption keys and register in the state registry of providers. Delta Chat refused, since they have no servers of their own and no access to the encryption keys.

End-to-end encryption

Delta Chat can connect to the server over a StartTLS or SSL connection. By default, messages are encrypted according to the Autocrypt Level 1 standard after the first messages have been exchanged (those are sent unencrypted). So if the conversation is between users of the same server, no information is passed to other servers; only our server and the users' devices take part in delivering the messages.

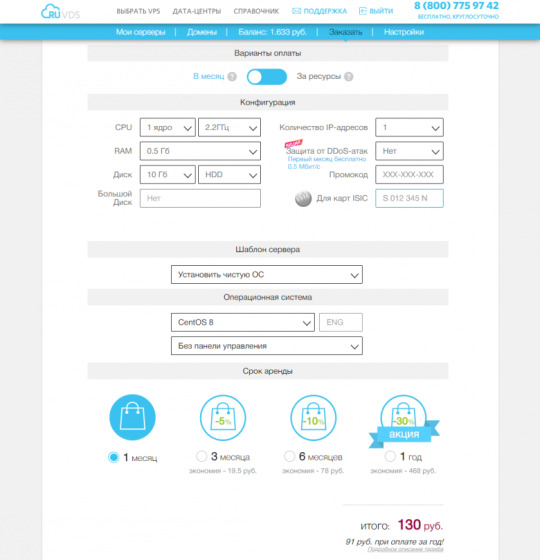

Server setup

Setting up a server for Delta Chat comes down to installing Postfix + Dovecot with StartTLS/SSL configured, and setting up the domain's DNS records. I will use CentOS 8 for the server; on other distributions there may be minor differences. Choose server specifications suited to the task.

In DNS I created two records; the third-level domain will be both the mail domain and the name of the mail server:

    secureim.example.com A <ip>
    secureim MX secureim.example.com

Set the hostname and install postfix, dovecot, and nginx (nginx is for obtaining Let's Encrypt certificates, wget for installing certbot-auto, nano as the editor):

    hostnamectl set-hostname secureim.example.com
    dnf install postfix dovecot nginx wget nano -y

Allow Postfix to accept mail from outside and set the server's hostname, domain, and origin. Since the mail domain and the server address are the same, the domain is identical everywhere:

    postconf -e "inet_interfaces = all"
    postconf -e "myhostname = secureim.example.com"
    postconf -e "mydomain = secureim.example.com"
    postconf -e "myorigin = secureim.example.com"

For Delta Chat to be reachable from the internet, ports 80, 143, 443, 465, 587, and 993 must be open. Ports 80 and 443 are also what we use to obtain Let's Encrypt certificates and renew them later. If you plan to receive mail from other mail servers, you will also need to open port 25 (in my case I do not plan to connect through other servers, so I do not list port 25). You may also need to forward ports 80, 143, 443, 465, 587, and 993 on the router if the server is going to run on a local network. Open ports 80, 143, 443, 465, 587, and 993 in the firewall:

    firewall-cmd --permanent --add-service={http,https,smtps,smtp-submission,imap,imaps}
    systemctl reload firewalld
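
The firewalld service names used in that command correspond to the port numbers listed in the text. As a quick cross-check, the small lookup below spells out the mapping using the standard port assignments; the function name is just a label for this sketch.

```shell
#!/usr/bin/env bash
# Map each firewalld service name from the command above to its TCP port.
service_port() {
  case "$1" in
    http)            echo 80 ;;
    imap)            echo 143 ;;
    https)           echo 443 ;;
    smtps)           echo 465 ;;
    smtp-submission) echo 587 ;;
    imaps)           echo 993 ;;
    *)               echo "unknown service: $1" >&2; return 1 ;;
  esac
}

# Print the mapping as a small table.
for svc in http https smtps smtp-submission imap imaps; do
  printf '%-16s %s/tcp\n' "$svc" "$(service_port "$svc")"
done
```

On the server itself, `firewall-cmd --list-services` shows which of these services are currently allowed.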

Create a site configuration for our domain name so that we can obtain Let's Encrypt certificates using certbot-auto:

    nano /etc/nginx/conf.d/secureim.example.com.conf

    server {
        listen 80;
        listen [::]:80;
        server_name secureim.example.com;
        root /usr/share/nginx/html/;
    }

Enable and start nginx:

    systemctl enable nginx
    systemctl start nginx

Install certbot-auto:

    cd ~
    wget https://dl.eff.org/certbot-auto
    mv certbot-auto /usr/local/bin/certbot-auto
    chown root /usr/local/bin/certbot-auto
    chmod 0755 /usr/local/bin/certbot-auto
    yes | certbot-auto --install-only

Generate certificates for the site (we will later use them for TLS encryption of connections to the server):

    certbot-auto certonly -a nginx --agree-tos --staple-ocsp --email [email protected] -d secureim.example.com

The certificates will be created, and their location will be printed to the console:

    # /etc/letsencrypt/live/secureim.example.com/fullchain.pem
    # /etc/letsencrypt/live/secureim.example.com/privkey.pem

Edit the Postfix configuration file accordingly to allow mail to be accepted on ports 465 and 587:

    nano /etc/postfix/master.cf

    submission inet n - y - - smtpd
      -o syslog_name=postfix/submission
      -o smtpd_tls_security_level=encrypt
      -o smtpd_tls_wrappermode=no
      -o smtpd_sasl_auth_enable=yes
      -o smtpd_relay_restrictions=permit_sasl_authenticated,reject
      -o smtpd_recipient_restrictions=permit_mynetworks,permit_sasl_authenticated,reject
      -o smtpd_sasl_type=dovecot
      -o smtpd_sasl_path=private/auth
    smtps inet n - y - - smtpd
      -o syslog_name=postfix/smtps
      -o smtpd_tls_wrappermode=yes
      -o smtpd_sasl_auth_enable=yes
      -o smtpd_relay_restrictions=permit_sasl_authenticated,reject
      -o smtpd_recipient_restrictions=permit_mynetworks,permit_sasl_authenticated,reject
      -o smtpd_sasl_type=dovecot
      -o smtpd_sasl_path=private/auth

Run these commands to specify the location of the server's TLS certificate and private key:

    postconf "smtpd_tls_cert_file = /etc/letsencrypt/live/secureim.example.com/fullchain.pem"
    postconf "smtpd_tls_key_file = /etc/letsencrypt/live/secureim.example.com/privkey.pem"

If needed, we can enable logging of TLS connections:

    postconf "smtpd_tls_loglevel = 1"
    postconf "smtp_tls_loglevel = 1"

Append to the end of the Postfix configuration file a requirement to use protocols no lower than TLS 1.2. (The >= form is understood by Postfix 3.6 and later; older releases use an exclusion list such as !SSLv2, !SSLv3, !TLSv1, !TLSv1.1.)

    nano /etc/postfix/main.cf

    smtp_tls_mandatory_protocols = >=TLSv1.2
    smtp_tls_protocols = >=TLSv1.2

Enable and start Postfix:

    systemctl start postfix
    systemctl enable postfix

Install, enable, and start Dovecot:

    dnf install dovecot -y
    systemctl start dovecot
    systemctl enable dovecot

Edit the Dovecot configuration file to enable the IMAP protocol:

    nano /etc/dovecot/dovecot.conf

    protocols = imap

Configure mail storage so that messages are saved in the users' folders:

    nano /etc/dovecot/conf.d/10-mail.conf

    mail_location = maildir:~/Maildir
    mail_privileged_group = mail

Add Dovecot to the mail group so that Dovecot can read incoming mail:

    gpasswd -a dovecot mail

Forbid authentication without TLS encryption:

    nano /etc/dovecot/conf.d/10-auth.conf

    disable_plaintext_auth = yes

Add automatic domain substitution during authentication (log in by user name only):

    auth_username_format = %n

Set the location of the certificate, the key, and the Diffie-Hellman parameters file, require TLS 1.2 as the minimum version, and prefer the server's cipher choice over the client's:

    nano /etc/dovecot/conf.d/10-ssl.conf

    ssl_cert = </etc/letsencrypt/live/secureim.example.com/fullchain.pem
    ssl_key = </etc/letsencrypt/live/secureim.example.com/privkey.pem
    ssl_dh = </etc/dovecot/dh.pem
    ssl_min_protocol = TLSv1.2
    ssl_prefer_server_ciphers = yes

Generate the Diffie-Hellman parameters; this can take a long time:

    openssl dhparam -out /etc/dovecot/dh.pem 4096

Edit the service auth section so that Postfix can connect to the Dovecot authentication server:

    nano /etc/dovecot/conf.d/10-master.conf

    service auth {
      unix_listener /var/spool/postfix/private/auth {
        mode = 0600
        user = postfix
        group = postfix
      }
    }

Enable automatic creation of the system mail folders (in case we also use the server for regular mail) by adding the line auto = create to the mail folder sections:

    nano /etc/dovecot/conf.d/15-mailboxes.conf

    mailbox Drafts {
      auto = create
      special_use = \Drafts
    }
    mailbox Junk {
      auto = create
      special_use = \Junk
    }
    mailbox Trash {
      auto = create
      special_use = \Trash
    }
    mailbox Sent {
      auto = create
      special_use = \Sent
    }
    mailbox "Sent Messages" {
      auto = create
      special_use = \Sent
    }

Make Dovecot deliver mail to the configured storage by adding the lmtp parameter:

    nano /etc/dovecot/dovecot.conf

    protocols = imap lmtp

Configure the LMTP service as follows:

    nano /etc/dovecot/conf.d/10-master.conf

    service lmtp {
      unix_listener /var/spool/postfix/private/dovecot-lmtp {
        mode = 0600
        user = postfix
        group = postfix
      }
    }

Add the following settings to the end of the file to tell Postfix to deliver mail to local storage through the Dovecot LMTP service. Also disable SMTPUTF8, since Dovecot LMTP does not support this extension:

    nano /etc/postfix/main.cf

    mailbox_transport = lmtp:unix:private/dovecot-lmtp
    smtputf8_enable = no

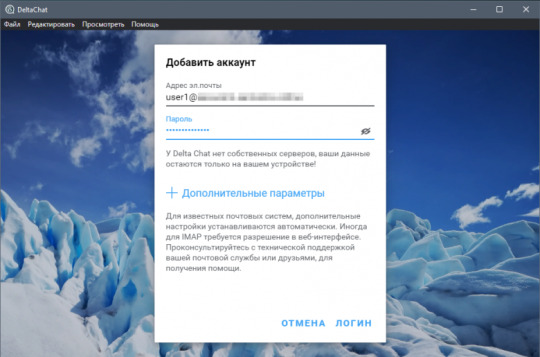

Create the users who will use the server by creating a corresponding system account and setting its password, which will be used for authentication over smtps and imaps:

    adduser user1
    passwd user1

Restart Dovecot and Postfix:

    systemctl restart dovecot
    systemctl restart postfix

Add a job to /etc/crontab to renew the certificates automatically:

    nano /etc/crontab

    30 2 * * * root /usr/local/bin/certbot-auto renew --post-hook "nginx -s reload"

At this stage the server should function as a mail server: you can connect with a mail client and try sending and receiving mail to other mailboxes on this server or, if you opened port 25 above, to other mail servers as well. Now let's set up the Delta Chat client on a PC and an Android smartphone.

To connect, it is enough to enter the email address and password created earlier on the server; Delta Chat will work out which ports can be used. After that you can add a new contact, again by email address, using a valid mail address.

The first messages are sent unencrypted; the keys are exchanged at this stage. After that, in addition to the TLS used in transit, messages are encrypted end to end with Autocrypt Level 1. It is also possible to create a verified group chat, where all messages are end-to-end encrypted and participants can join by scanning an invitation with a QR code. In this way, all participants are linked to one another by a chain of invitations that guarantees cryptographic consistency against active network attacks or attacks by the provider.

One of the most interesting things I wanted to check was what a message looks like in the server's storage. To do this I sent a message to an inactive account; in that case the message waits for its recipient on the server, and with access to the server we can look at it:

Message contents

Return-Path: <[email protected]> Delivered-To: [email protected] Received: from secureim.example.com by secureim.example.com with LMTP id g/geNIUWzl+yBQAADOhLJw (envelope-from <[email protected]>) for <[email protected]>; Mon, 07 Dec 2020 14:48:21 +0300 Received: from [127.0.0.1] (unknown [192.87.129.58]) by secureim.example.com (Postfix) with ESMTPSA id AA72A3193E11 for <[email protected]>; Mon, 7 Dec 2020 11:48:21 +0000 (UTC) MIME-Version: 1.0 References: <[email protected]> <[email protected]> In-Reply-To: <[email protected]> Date: Mon, 07 Dec 2020 11:48:20 +0000 Chat-Version: 1.0 Autocrypt: [email protected]; prefer-encrypt=mutual; keydata=xjMEX83vexYJKwYBBAHaRw8BAQdAYgkiTiHDlJtzQqLCFxiVpma/X5OtALu8kJmjeTG3yo 7NIDx1c2VyMkBzZWN1cmVpbS5zYW1vaWxvdi5vbmxpbmU+wosEEBYIADMCGQEFAl/N73sCGwMECwkI BwYVCAkKCwIDFgIBFiEEkuezqLPdoDjlA2dxYQc97rElXXgACgkQYQc97rElXXgLNQEA17LrpEA2vF 1FMyN0ah5tpM6w/6iKoB+FVUJFAUALxk4A/RpQ/o6D7CuacuFPifVZgz7DOSQElPAMP4AHDyzcRxwJ zjgEX83vexIKKwYBBAGXVQEFAQEHQJ7AQXbN5K6EUuwUbaLtFpEOdjd5E8hozmHkeeDJ0HcbAwEIB8 J4BBgWCAAgBQJfze97AhsMFiEEkuezqLPdoDjlA2dxYQc97rElXXgACgkQYQc97rElXXhYJgEA+RUa RlnJjv86yVJthgv7w9LajPAgUGCVhbjFmccPQ4gA/iiX+nk+TrS2q2oD5vuyD3FLgpja1dGmqECYg1 ekyogL Message-ID: <[email protected]> To: <[email protected]> From: <[email protected]> Subject:… Content-Type: multipart/encrypted; protocol=«application/pgp-encrypted»; boundary=«OfVQvVRcZpJOyxoScoY9c3DWqC1ZAP» --OfVQvVRcZpJOyxoScoY9c3DWqC1ZAP Content-Type: application/pgp-encrypted Content-Description: PGP/MIME version identification Version: 1 --OfVQvVRcZpJOyxoScoY9c3DWqC1ZAP Content-Type: application/octet-stream; name=«encrypted.asc» Content-Description: OpenPGP encrypted message Content-Disposition: inline; filename=«encrypted.asc»; -----BEGIN PGP MESSAGE----- wU4DKm2PBWHuz1cSAQdA4krEbgJjac78SUKlWKfVyfWt2drZf41dIjTH01J52HIg aY/ZzCn/ch8LNGv3vuJbJS8RLHK7XyxZ4Z1STAtTDQPBTgNyNpRoJqRwSxIBB0AC OVrbhsjNPbpojrm/zGWkE5berNF7sNnGQpHolcd+WyCdpqQAk3CaiQjxsm7jdO0A 
gMtmXABw/TWcpTU/qOfW/9LBVwFZ/RPCKxCENfC0wau4TI+PMKrF0HODyWfBkEuw e3WlQpN/t0eSUPKMiMhm7QM0Ffs52fPz0G6dfVJ2M6ucRRyU4Gpz+ZdlLeTLe3g2 PkKbb6xb9AQjdj/YtARCmhCNI48sv7dgU1ivh15r37FWLQvWgkY93L3XbiEaN/X9 EWBQxKql/sWP01Kf67PzbtL5uAHl8VnwInCIfezQsiAsPS2qiCb1sN3yBcNlRwsR yTs2CPJTIi7xTSpM1S/ZHM5XXGnOmj6wDw69MHaHh9c9w3Yvv7q1rCMvudfm+OyS /ai4GWyVJfM848kKWTCnalHdR4rZ3mubsqfuCOwjnZvodSlJFts9j5RUT87+j1DM mQa4tEW8U5MxxoirFfbBnFXGUcU/3nicXI5Yy6wPP8ulBXopmt5vHsd68635KVRJ 2GMy7sMHcjyzujNCAmegIQgKqTLO5NUOtxW7v1OXL23pKx32OGcy8PtEJp7FBQYm bUNAaz+rkmC971S2FOU0ZGV8LNp8ULioAbL629/JpPHhBOBJCsVnsXDIh6UBPbuM 06dU7VP6l8PNM87X/X1E3m2R1BCNkZghStQrt16fEoA+jm9F6PNtcap2S5rP9llO klo/ojeciqWl0QoNaJMlMru70TT8a9sf6jYzp3Cf7qFHntNFYG1EcEy9YqaXNS7o 8UOVMfZuRIgNqI9j4g8wKf57/GIjtXCQn/c= =bzUz -----END PGP MESSAGE----- --OfVQvVRcZpJOyxoScoY9c3DWqC1ZAP--

As you can see, messages are stored on the server in encrypted form, so if the server is seized by interested parties, the messages are not at risk. For greater security, you can use full-disk encryption on the server and on the device running the client, use keys for SSH connections to the server, and use strong, complex passwords for the mail accounts.
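
The same check can be scripted. As a minimal sketch, the function below reports whether a stored message file carries the PGP/MIME markers visible in the dump above (a `multipart/encrypted` content type and a `BEGIN PGP MESSAGE` armor line). The function name and the throwaway sample file are illustrative assumptions, and matching these markers only shows that the message is wrapped as PGP/MIME, not that the encryption is sound.

```shell
#!/usr/bin/env bash
# Return 0 if the given mail file looks like a PGP/MIME encrypted message.
looks_pgp_encrypted() {
  local file="$1"
  grep -qi '^Content-Type: multipart/encrypted' "$file" &&
    grep -q -- '-----BEGIN PGP MESSAGE-----' "$file"
}

# Demo on a throwaway file so the sketch runs without a mail server.
sample="$(mktemp)"
printf '%s\n' \
  'Content-Type: multipart/encrypted; protocol="application/pgp-encrypted"' \
  '' \
  '-----BEGIN PGP MESSAGE-----' \
  '-----END PGP MESSAGE-----' > "$sample"

if looks_pgp_encrypted "$sample"; then
  echo "encrypted"
else
  echo "not encrypted"
fi
rm -f "$sample"
```

On the server described here, the files to inspect would sit under a user's ~/Maildir directory, given the mail_location setting shown earlier.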


Text

Install and configure vsFTPD on CentOS and derivatives

The FTP server vsftpd is the one preferred by most system administrators. Without a doubt, and despite being a veteran, it is one of the most powerful and complete servers available on any Linux distribution. vsFTPD offers a simple yet very complete configuration, which is something to bear in mind when comparing it with other FTP servers. In today's article we look at how to install and configure (at a basic level) a vsFTPD server so that you can have it up and running in a few minutes.

Install and configure vsFTPD on CentOS

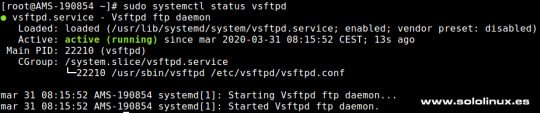

Instalar vsFTPD en CentOS, Fedora y derivados Este servidor ftp está incluido en los repositorios oficiales, por lo tanto su instalación es tan simple como ejecutar el siguiente comando. sudo yum install vsftpd Una vez termine la instalación iniciamos vsFTPD. sudo systemctl start vsftpd Habilitamos su inicio al arrancar el sistema. sudo systemctl enable vsftpd Verificamos que se esta ejecutando de forma correcta. sudo systemctl status vsftpd ejemplo de salida correcta...

Status de vsFTPD Configurar vsFTPD Abrimos el archivo de configuración. sudo nano /etc/vsftpd/vsftpd.conf Como podrás comprobar existen muchas opciones que puedes configurar según tus necesidades, nosotros indicamos las básicas para un correcto funcionamiento del servidor. Debes asegurarte que ninguna de las siguientes lineas están comentadas. # Habilitar o deshabilitar que un usuario anónimo acceda al servidor. anonymous_enable=NO # Permitir que los usuarios locales del sistema puedan acceder. local_enable=YES # Habilitar la escritura en vsFPTD. write_enable=YES # Habilitar lista de usuarios con acceso. userlist_enable=YES # Ruta de la lista de usuarios. userlist_file=/etc/vsftpd/user_list # No bloquear a los usuarios de la lista. userlist_deny=NO # Forzar archivos force_dot_files=YES Guarda el archivo y cierra el editor. Reiniciamos el servicio. sudo systemctl restart vsftpd Asegurar la transferencia ftp con SSL / TLS Este paso es opcional, pero si quieres asegurar la transferencia de datos FTP con SSL / TLS, puedes seguir los pasos indicados a continuación. Abrimos otra vez el archivo de configuración. sudo nano /etc/vsftpd/vsftpd.conf Al final del archivo agrega estas lineas... ssl_enable=YES rsa_cert_file=/etc/vsftpd/vsftpd.pem rsa_private_key_file=/etc/vsftpd/vsftpd.pem Guarda el archivo y cierra el editor. Podemos generar un certificado autofirmado con este comando. sudo openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout /etc/vsftpd/vsftpd.pem -out /etc/vsftpd/vsftpd.pem Reinicia el servicio. sudo systemctl restart vsftpd Agregar FTP a FirewalLD y SELinux Si nuestro servidor CentOS tiene habilitado FirewalLD, entonces es necesario abrir el puerto FTP 21. sudo firewall-cmd --permanent --add-port=21/tcp # o sudo firewall-cmd --permanent --add-service=ftp Recargamos la herramienta firewall. sudo firewall-cmd --reload En el caso que utilicemos SELinux... 
sudo setsebool -P ftp_home_dir on

Adding users to vsFTPD

Create the users in the usual way (in our example, the user sololinux).

sudo adduser sololinux

Set the password.

sudo passwd sololinux

Example...

# sudo passwd sololinux
Changing password for user sololinux.
New password: ***************
Retype new password: ***************
passwd: all authentication tokens updated successfully.

As the final step, add the user to the list of allowed users.

echo "sololinux" | sudo tee -a /etc/vsftpd/user_list

Note: You can also add users directly in the file.

sudo nano /etc/vsftpd/user_list
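The allow-list step can be made safe to repeat. The following is only a sketch: the helper name allow_ftp_user is hypothetical, and LIST defaults to a scratch file (an assumption made so the example can be tried without root); on a real server it would point at /etc/vsftpd/user_list.

```shell
#!/bin/sh
# Scratch file by default; on a real server this would be /etc/vsftpd/user_list.
LIST="${LIST:-$(mktemp)}"

# allow_ftp_user NAME (hypothetical helper)
# Appends NAME to the list only if it is not already present.
allow_ftp_user() {
    user=$1
    # -q: quiet, -x: match the whole line, -F: treat the name as a fixed string
    if ! grep -qxF "$user" "$LIST"; then
        printf '%s\n' "$user" >> "$LIST"
    fi
}

allow_ftp_user sololinux
allow_ftp_user sololinux   # the second call changes nothing
```

Running the helper twice leaves a single sololinux entry, whereas appending with tee -a on every run would add a duplicate line each time.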

#administradoresdesistemas#Agregarusuarios#configurarvsFTPD#distribucionlinux#FTPaFirewalLD#ftpconSSL#InstalarvsFTPD#InstalaryconfigurarvsFTP#servidorftp#servidorvsFTPD#servidoresftp#sololinux#sysadmin#TLS#Vsftpd#vsFTPDenCentOS


Text

Security measures to protect an unmanaged VPS.

Virtual private servers have long been thought of as a next-generation shared hosting solution.

They use virtualization ‘tricks’ to let you build your own hosting environment and be the master of your server at an affordable price.

If you are well-versed in server administration, then an unmanaged VPS will help you make the most of your virtual machine’s capabilities.

However, are you well-versed enough in security as well?

Here is a Linux VPS security checklist, which comes courtesy of our Admin Department.

What exactly is an unmanaged VPS?

Before we move to the security checklist, let’s find out exactly what an unmanaged VPS is and what benefits it can bring to you.

With an unmanaged VPS, pretty much everything will be your responsibility.

Once the initial setup is complete, you will have to take care of server maintenance procedures, OS updates, software installations, etc. Data backups should be within your circle of competence as well.

This means that you will need to have a thorough knowledge of the Linux OS. What’s more, you will have to handle any and all resource usage, software configuration and server performance issues.

Your host will only look into network- and hardware-related problems.

Why an unmanaged VPS?

The key advantages of unmanaged VPSs over managed VPSs are as follows:

you will have full administrative power and no one else will be able to access your information;
you will have full control over the bandwidth, storage space and memory usage;
you will be able to customize the server to your specific needs;
you will be able to install any software you want;
you will save some money on server management, since it really isn’t that hard to set up and secure a server if you apply yourself, and updating packages is very easy;
you will be able to manage your server in a cost-efficient way without the need to buy the physical machine itself (you would have to if you had a dedicated server).

Unmanaged VPS – security checklist

With an unmanaged VPS, you will need to take care of your sensitive personal data.

Here is a list of the security measures that our administrators think are key to ensuring your server’s and your data’s health:

1. Use a strong password

Choosing a strong password is critical to securing your server. With a good password, you can minimize your exposure to brute-force attacks. Security specialists recommend that your password be at least 10 characters long.

It should also contain a mix of lowercase and uppercase letters, numbers and special characters, and should not include common words or personally identifiable information. You are strongly advised to use a unique password for the server, so that a breach of another service where you have an account cannot be used to break into it.

A strong password may consist of phrases, acronyms, nicknames, shortcuts and even emoticons. Examples include:

1tsrAIn1NGcts&DGS!:-) (It’s raining cats and dogs!)
humTdumt$@t0nAwa11:-0 (Humpty Dumpty sat on a wall)
p@$$GOandCLCt$500 :-> (Pass Go and collect $500)
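The criteria above (at least 10 characters, with lowercase, uppercase, digits and special characters) can be checked mechanically. This is a minimal sketch; the function name is_strong_password is hypothetical, and it does not test for common words or personal information, which would need a dictionary.

```shell
#!/bin/sh
# is_strong_password CANDIDATE (hypothetical helper)
# Returns 0 if CANDIDATE meets the length and character-mix rules, 1 otherwise.
is_strong_password() {
    pw=$1
    # At least 10 characters long
    [ "${#pw}" -ge 10 ] || return 1
    # At least one lowercase letter, one uppercase letter and one digit
    case $pw in *[[:lower:]]*) ;; *) return 1 ;; esac
    case $pw in *[[:upper:]]*) ;; *) return 1 ;; esac
    case $pw in *[[:digit:]]*) ;; *) return 1 ;; esac
    # At least one character that is neither a letter nor a digit
    case $pw in *[![:alnum:]]*) ;; *) return 1 ;; esac
    return 0
}

if is_strong_password '1tsrAIn1NGcts&DGS!:-)'; then
    echo "looks strong"
else
    echo "too weak"
fi
```

The first example password from the list passes every check; something like Password1 fails on length and on the missing special character.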

2. Change the default SSH port

Modifying the default SSH port is a must-do security measure.

You can do that in a few quick steps:

Connect to your server using SSH
Switch to the root user
Run the following command: vi /etc/ssh/sshd_config
Locate the following line: # Port 22
Remove # and replace 22 with another port number
Restart the sshd service by running the following command: service sshd restart
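The same edit can be scripted instead of made by hand in vi. The sketch below works on a scratch copy of the file rather than on /etc/ssh/sshd_config, and the port number 2222 is only an assumed example value.

```shell
#!/bin/sh
# Scratch file standing in for /etc/ssh/sshd_config, so nothing live is touched.
CONF=$(mktemp)
printf '%s\n' '#Port 22' '#AddressFamily any' 'PermitRootLogin yes' > "$CONF"

NEW_PORT=2222   # assumed example value; pick your own unused port

# Replace the Port line, whether or not it is commented out, with the new value.
sed "s/^#\{0,1\}[[:space:]]*Port[[:space:]].*/Port $NEW_PORT/" "$CONF" > "$CONF.new" \
    && mv "$CONF.new" "$CONF"

# Show the resulting line; on a real server, running sshd -t before the
# restart checks the edited file for syntax errors.
grep '^Port' "$CONF"
```

Before restarting sshd on a real server it is worth making sure the firewall allows the new port, otherwise the next login attempt will fail.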

3. Disable the root user login

The root user has unlimited privileges and can execute any command – even one that could accidentally open a backdoor that allows for unsolicited activities.

To prevent unauthorized root-level access to your server, you should disable the root user login and use a limited admin account instead.

Here is how you can add a new admin user that can log into the server via SSH and then switch to root when needed:

Create the user, replacing example_user with your desired username (in our case, ‘admin’): adduser admin
Set the password for the admin user account: passwd admin
To grant admin privileges, use the following command: echo 'admin ALL=(ALL) ALL' >> /etc/sudoers
Disconnect and log back in as the new user: ssh [email protected]
Once you are logged in, switch to the root user using the ‘su’ command and confirm the switch with whoami:
su
password:
whoami
root
To disable the root user login, edit the /etc/ssh/sshd_config file. You will only need to change this line: #PermitRootLogin yes to: PermitRootLogin no
Restart the sshd service so that the change takes effect: service sshd restart

You will now be able to connect to your server via SSH using your new admin user account.
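Appending to /etc/sudoers with echo leaves no safety net if the line contains a typo. One alternative, sketched here under the assumption that your sudoers file includes the /etc/sudoers.d directory, is to write the rule to its own file and check it before use. SUDOERS_DIR defaults to a scratch directory so the example runs without root.

```shell
#!/bin/sh
# Scratch directory by default; on a real server this would be /etc/sudoers.d.
SUDOERS_DIR="${SUDOERS_DIR:-$(mktemp -d)}"
ADMIN_USER=admin
RULE_FILE="$SUDOERS_DIR/$ADMIN_USER"

# Same rule as in the steps above, written to a dedicated file.
printf '%s ALL=(ALL) ALL\n' "$ADMIN_USER" > "$RULE_FILE"
# sudoers files are expected to be read-only for owner and group.
chmod 0440 "$RULE_FILE"

# visudo -c -f checks the given file for syntax errors without installing it.
if command -v visudo > /dev/null 2>&1; then
    visudo -c -f "$RULE_FILE"
fi

cat "$RULE_FILE"
```

If the check reports an error, the file can simply be deleted and the main sudoers file is never touched.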

4. Use a rootkit scanner

Use a tool like rkhunter (Rootkit Hunter) to scan the entire server for rootkits, backdoors and possible local exploits on a daily basis. It can send you the reports via email.

5. Disable compilers for non-root users (for cPanel users)

Disabling compilers will help protect against many exploits and will add an extra layer of security.

From the WebHost Manager, you can deny compiler access to unprivileged (non-root) users with a click.

Just go to Security Center -> Compiler Access and then click on the Disable Compilers link.

Alternatively, you can keep compilers for selected users only.

6. Set up a server firewall

An IPTABLES-based server firewall like CSF (ConfigServer Firewall) allows you to block public access to a given service.

You can permit connections only to the ports that will be used by the FTP, IMAP, POP3 and SMTP protocols, for example.

CSF offers an advanced, yet easy-to-use interface for managing your firewall settings.

Here is a good tutorial on how you can install and set up CSF.

Once you’ve got CSF up and running, make sure you consult the community forums for advice on which rules or ready-made firewall configurations you should implement.

Keep in mind that most OSs come with a default firewall solution. You will need to disable it if you wish to take advantage of CSF.

7. Set up intrusion prevention

An intrusion prevention software framework like Fail2Ban will protect your server from brute-force attacks. It scans logfiles and bans IPs that have unsuccessfully tried to log in too many times.
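To make the idea concrete, the sketch below counts failed SSH logins per IP address in a sample log and prints the addresses that reach a threshold. It only illustrates the principle and is not how Fail2Ban itself is implemented; the log lines, the offenders function name and the threshold of 3 are all assumptions made for the example.

```shell
#!/bin/sh
# Sample log standing in for a real authentication log.
LOG=$(mktemp)
cat > "$LOG" <<'EOF'
Jan 10 10:00:01 host sshd[101]: Failed password for root from 203.0.113.5 port 4242 ssh2
Jan 10 10:00:03 host sshd[102]: Failed password for root from 203.0.113.5 port 4243 ssh2
Jan 10 10:00:05 host sshd[103]: Failed password for invalid user bob from 203.0.113.5 port 4244 ssh2
Jan 10 10:00:09 host sshd[104]: Failed password for admin from 198.51.100.7 port 5000 ssh2
Jan 10 10:01:00 host sshd[105]: Accepted password for admin from 192.0.2.10 port 5100 ssh2
EOF

MAX_RETRY=3   # assumed threshold

# offenders LOGFILE MAX (hypothetical helper)
# Prints every IP with at least MAX failed password attempts, sorted.
offenders() {
    awk -v max="$2" '
        /Failed password/ {
            # The address is the word that follows "from"
            for (i = 1; i < NF; i++)
                if ($i == "from") { count[$(i + 1)]++; break }
        }
        END { for (ip in count) if (count[ip] >= max) print ip }
    ' "$1" | sort
}

offenders "$LOG" "$MAX_RETRY"   # prints 203.0.113.5
```

A real tool additionally limits the count to a time window and lifts the ban after a while, which this sketch leaves out.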

Here’s a good article on how to install and set up Fail2Ban on different Linux distributions.

You can also keep an eye on the Google+ Fail2Ban Users Community.

8. Enable real-time application security monitoring

ModSecurity, a widely used real-time web application monitoring and access control solution, gives you visibility into HTTP(S) traffic and lets you implement advanced protections.

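ModSecurity is available in the repositories of most Linux distributions, so installing it is straightforward. On Debian or Ubuntu with Apache, for example (newer releases ship the package as libapache2-mod-security2):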

apt-get install libapache2-modsecurity

Here’s a quick guide on how to install and configure ModSecurity.

Once you’ve got ModSecurity up and running, you can download a rule set like CRS (OWASP ModSecurity Core Rule Set). This way you won’t have to enter the rules by yourself.

9. Set up anti-virus protection

One of the most reliable anti-virus engines is ClamAV – an open-source solution for detecting Trojans, viruses, malware & other malicious threats. The scanning reports will be sent to your email address.

ClamAV is available as a free cPanel plugin.

You can enable it from the Manage Plugins section of your WHM:

Just tick the ‘Install ClamAV and keep updated’ checkbox and press the ‘Save’ button.

10. Enable server monitoring

To spot suspicious activity early, make sure you install a logfile scanner such as logcheck or logwatch. It will parse your system logs and flag unauthorized access attempts so that you can react quickly.

Use software like Nagios or Monitis to run automatic service checks to make sure that you do not run out of disk space or bandwidth or that your certificates do not expire.
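A dedicated monitoring tool does far more, but the kind of disk-space check it runs can be sketched in a few lines. The function name disk_usage_percent and the 90% threshold are assumptions made for illustration.

```shell
#!/bin/sh
THRESHOLD=90   # assumed alert level, in percent

# disk_usage_percent PATH (hypothetical helper)
# Prints the used-space percentage of the filesystem holding PATH.
disk_usage_percent() {
    # df -P prints one header line, then one line per filesystem;
    # column 5 is the capacity, for example "42%".
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

usage=$(disk_usage_percent /)
if [ "$usage" -ge "$THRESHOLD" ]; then
    echo "WARNING: / is ${usage}% full"
else
    echo "OK: / is ${usage}% full"
fi
```

Run from cron, a check like this could send its warning line by email, which is roughly what the automatic service checks mentioned above do on a larger scale.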

With a service like Uptime Doctor or Pingdom, you can get real-time notifications when your sites go down and thus minimize accidental downtime.

11. Run data backups

Make regular off-site backups to avoid the risk of losing data through accidental deletion.

You can place your trust in a third-party service like R1Soft or Acronis, or you can build your own simple backup solution using Google Cloud Storage and the gsutil tool.

If you are on a tight budget, you can keep your backups on your local computer.
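A simple home-made backup can be as small as a dated tar archive. In the sketch below, SRC and DEST are scratch directories standing in for the data to protect and the backup location; both names and the sample file are assumptions.

```shell
#!/bin/sh
SRC=$(mktemp -d)    # stands in for the data to protect
DEST=$(mktemp -d)   # stands in for the backup location
echo 'hello' > "$SRC/site.conf"

# One archive per day, named after the date.
ARCHIVE="$DEST/backup-$(date +%Y-%m-%d).tar.gz"

# -C switches into SRC first, so the archive stores relative paths.
tar -czf "$ARCHIVE" -C "$SRC" .

# Listing the archive is a cheap check that it can be read back.
tar -tzf "$ARCHIVE" > /dev/null && echo "backup written: $ARCHIVE"
```

To move the archive off-site with the gsutil tool mentioned above, a command such as gsutil cp "$ARCHIVE" gs://example-backup-bucket/ would follow, where the bucket name is purely hypothetical.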

12. Keep your software up to date

Keeping your software up to date is the single biggest security precaution you can take.

Software updates range from regular minor bug fixes to critical vulnerability patches. You can set automatic updates to save time.

However, keep in mind that automatic updates do not apply to self-compiled applications. It’s advisable to first install an update in a test environment so as to see its effect before deploying it to your live production environment.

Depending on your particular OS, you can use:

yum-cron (for CentOS)
unattended-upgrades (for Debian and Ubuntu)
dnf-automatic (for Fedora)

If you have not obtained an unmanaged VPS yet, you could consider our solutions:

OpenVZ VPS packages: all setups from 4 to 10 are unmanaged and come with SSH/full root access (for cPanel setups only) and with a CentOS/Debian/Ubuntu OS installation;
KVM VPS setups: all four setups are unmanaged and offer SSH/full root access; OS options include CentOS/Debian/Ubuntu as well as a few OS ISO alternatives like Fedora and FreeBSD.

via Blogger http://ift.tt/2AIEre3


Photo

ssh client commands


Text

How to add and remove users on CentOS 6

Introduction

This guide shows how to add a user on CentOS 6 and properly grant it root privileges. When you first get your VPS, you are given the root user and its password. While root lets you make any change to the operating system, it is not good practice to use it every day for basic tasks. That is why we’ll show you how to add a user that keeps root privileges, for a more secure way of working with your VPS day to day.

Add a User on CentOS 6

To add a new user in CentOS, use the adduser command, replacing myusername in this example with your preferred username.

Code:
sudo adduser myusername

After you have created the new user, set a password for it with the following command. Confirm the password when the system asks for it. You will not see the password as you type it; this is normal behavior for security reasons.

Code:
sudo passwd myusername

You have just created a new user and its password on CentOS 6. You can log out of the root user by typing exit and then log back in with the new username and password.

How to Grant a User Root Privileges

For security reasons, your virtual server is safer if you work under your own username with root privileges granted through sudo instead of using the unrestricted default root user. Open the sudoers file by typing:

Code:
sudo /usr/sbin/visudo

Find the line that says “## Allow root to run any commands anywhere”. Press “i” to start inserting text, then add the newly created user on the line after the root entry. This grants root privileges to that user.

Code:
## Allow root to run any commands anywhere
root ALL=(ALL) ALL
myusername ALL=(ALL) ALL

Save and exit the file by pressing “Escape”, then typing “:wq” and pressing “Enter”.

How to Delete a User from CentOS 6

If you no longer need a specific user on the virtual private server, you can delete it with the following command:

Code:
sudo userdel myusername

You can add the “-r” option to the command if you would also like to remove the user’s home directory and files.

Code:
sudo userdel -r myusername

That’s it! Your VPS now has your own username, protected with a unique password and granted root privileges.

http://lgvps.com/blog/archives/51
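When these steps go into a setup script, it helps to check whether the account already exists before calling adduser. The following is a minimal sketch; the helper name user_exists is hypothetical, and the script only prints what it would do instead of changing the system.

```shell
#!/bin/sh
# user_exists NAME (hypothetical helper)
# Succeeds if an account called NAME is known to the system.
user_exists() {
    id -u "$1" > /dev/null 2>&1
}

NEW_USER=myusername

if user_exists "$NEW_USER"; then
    echo "$NEW_USER already exists, nothing to do"
else
    # Dry run: print the command rather than executing it.
    echo "$NEW_USER not found; would run: sudo adduser $NEW_USER"
fi
```

The same check works before userdel, so a cleanup script does not fail when the user was already removed.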
