#WebCrawlingPython

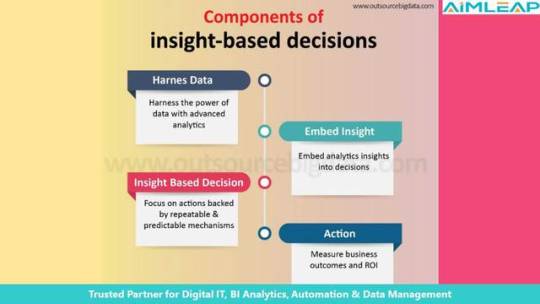

Components of insight-based decisions. United States, United Kingdom. #datacrawling #datascraping #webcrawlingpython For more details visit: https://outsourcebigdata.com

Walmart Product Data Scraping Services | Walmart Product Data Scraper

In this blog post, we explain the differences between web crawling and web scraping and explore the main benefits and practical applications of each technique.

What is Web Crawling?

Web crawling, often referred to as indexing, uses bots, also known as crawlers, to index the information found on web pages. The process mirrors what search engines do: each webpage is examined as a whole for indexing purposes. During a crawl, bots systematically follow every page and link, continuing until they have reached every accessible part of the website, in order to locate any available information.
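The traversal described above is essentially a breadth-first search over links. The sketch below illustrates it with Python's standard library only; the function names, the same-host restriction, the `max_pages` limit, and the UTF-8 decoding are illustrative assumptions rather than part of any specific crawler, and it omits robots.txt checks and request throttling for brevity.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_html(url):
    """Download a page and decode it as UTF-8 (a simplifying assumption)."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url, fetch=fetch_html, max_pages=50):
    """Breadth-first crawl restricted to the start URL's host.

    Returns the URLs that were fetched, in the order they were visited.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except OSError:
            continue  # skip pages that fail to download (URLError is an OSError)
        visited.append(url)
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            # Resolve relative links and strip "#fragment" parts.
            absolute, _ = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```

Because `fetch` is a parameter, the traversal can be exercised against an in-memory dictionary of pages instead of the live web.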

Web crawlers are used predominantly by major search engines such as Google, Bing, and Yahoo, as well as by statistical agencies and large online aggregators. Web crawling tends to capture general information, whereas web scraping targets specific segments of a dataset.

What is Web Scraping?

Web scraping, or web data extraction, is similar to web crawling in that it identifies and locates targeted data on web pages. The key difference is that web scraping, especially when performed by professional web scraping services, works from a predefined understanding of the dataset's structure, such as the HTML element layout of the fixed web pages from which data is to be extracted.

Web scraping is powered by automated bots called 'scrapers' and offers an automated way to harvest specific datasets. Once the desired information has been collected, it can be used for comparison, verification, analysis, and other purposes that match a business's specific objectives and requirements.
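Because a scraper works from a predefined understanding of the page structure, it can go straight to the elements it needs. The sketch below assumes a hypothetical product listing in which each item is a `div` with class `product` and its fields carry the classes `name` and `price`; real sites use their own markup, so in practice these class names must be discovered per site.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Extracts name/price pairs from a page whose layout is known in advance.

    Assumed (hypothetical) layout, with plain text inside each field element:
        <div class="product">
          <h2 class="name">...</h2>
          <span class="price">...</span>
        </div>
    """

    FIELDS = {"name", "price"}  # class names that mark the fields we want

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # field whose text is currently being read

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "product" in classes:
            self.products.append({})  # start a new record
        elif self.products:
            for cls in classes:
                if cls in self.FIELDS:
                    self._field = cls

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        if self._field and data.strip():
            self.products[-1][self._field] = data.strip()


def scrape_products(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.products
```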

Common Use Cases of Web Scraping

Web scraping has versatile applications across many domains as a tool for data acquisition and analysis. Common use cases include:

Academic Research: Researchers extract data from online publications, websites, and forums for analysis and insights.

Content Aggregation: Content platforms use web scraping to aggregate articles, blog posts, and other content for distribution.

Financial Data Extraction: Investment firms utilize web scraping to gather financial data, stock prices, and market performance metrics.

Government Data Access: Web scraping aids in accessing public data like census statistics, government reports, and legislative updates.

Healthcare Analysis: Researchers extract medical data for studies, pharmaceutical companies analyze drug information, and health organizations track disease trends.

Job Market Insights: Job boards scrape job listings and employer data to provide insights into employment trends and company hiring practices.

Language Processing: Linguists and language researchers scrape text data for studying linguistic patterns and developing language models.

Lead Generation: Sales teams use web scraping to extract contact information from websites and directories for potential leads.

Market Research: Web scraping helps in gathering market trends, customer reviews, and product details to inform strategic decisions.

News Aggregation: Web scraping aggregates news articles and headlines from various sources, creating comprehensive news platforms.

Price Monitoring: E-commerce businesses employ web scraping to track competitor prices and adjust their own pricing strategies accordingly.

Real Estate Analysis: Real estate professionals can scrape property listings and historical data for property value trends and investment insights.

Social Media Monitoring: Brands track their online presence by scraping social media platforms for mentions, sentiment analysis, and engagement metrics.

Travel Planning: Travel agencies use travel data scraping services to extract information such as flight and hotel prices, which helps them put together competitive travel packages. Combined with hotel data scraping services, this lets agencies offer well-informed, attractive options that suit travelers' individual preferences and budgets.

Weather Data Collection: Meteorologists and researchers gather weather data from multiple sources for accurate forecasts and climate studies.

These are just a few examples of how web scraping is used across industries to gather, analyze, and apply data for informed decision-making and strategic planning.

Advantages of Each Technique

Advantages of Web Scraping

Web scraping, the process of extracting data from websites, offers several key benefits across many domains. The main advantages include:

Academic and Research Purposes: Researchers can use web scraping to gather data for academic studies, social science research, sentiment analysis, and other scientific investigations.

Competitive Intelligence: Web scraping enables you to monitor competitors' websites, gather information about their products, pricing strategies, customer reviews, and marketing strategies. This information can help you make informed decisions to stay competitive.

Content Aggregation: Web scraping allows you to automatically collect and aggregate content from various sources, which can help create news summaries, comparison websites, and data-driven content.

Data Collection and Analysis: Web scraping allows you to gather large amounts of data from websites quickly and efficiently. This data can be used for analysis, research, and decision-making in various fields, such as business, finance, market research, and academia.

Financial Analysis: Web scraping can provide valuable financial data, such as stock prices, historical data, economic indicators, and company financials, which can be used for investment decisions and financial modeling.

Government and Public Data: Government websites often publish data related to demographics, public health, and other social indicators. Web scraping can automate the process of collecting and updating this information.

Job Market Analysis: Job portals and career websites can be scraped to gather information about job listings, salary trends, required skills, and other insights related to the job market.

Lead Generation: In sales and marketing, web scraping can collect contact information from potential leads, including email addresses, phone numbers, and job titles, which can be used for outreach campaigns.

Machine Learning and AI Training: Web scraping can provide large datasets for training machine learning and AI models, especially in natural language processing (NLP), image recognition, and sentiment analysis.

Market Research: Web scraping can help you gather insights about consumer preferences, trends, and sentiment by analyzing reviews, comments, and discussions on social media platforms, forums, and e-commerce websites.

Price Monitoring: E-commerce businesses can use web scraping to monitor competitor prices, track price fluctuations, and adjust their pricing strategies to remain competitive.

Real-time Data: You can access real-time or near-real-time data by regularly scraping websites. This is particularly useful for tracking stock prices, weather updates, social media trends, and other time-sensitive information.

It's important to note that while web scraping offers numerous benefits, there are legal and ethical considerations to be aware of, including respecting website terms of use, robots.txt guidelines, and privacy regulations. Always ensure that your web scraping activities comply with relevant laws and regulations.
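Python's standard library includes `urllib.robotparser` for reading robots.txt rules. The sketch below checks two URLs against a small, made-up rule set; the user-agent string and the example rules are assumptions for illustration. Robots.txt covers only crawl permissions, so terms of use and privacy regulations still have to be reviewed separately.

```python
from urllib.robotparser import RobotFileParser

USER_AGENT = "ExampleBot/1.0"  # hypothetical crawler name

# Example rules for illustration. For a live site you would instead call
# parser.set_url("https://<site>/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""


def load_rules(robots_txt):
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(ROBOTS_TXT)
for url in ("https://example.com/products/123", "https://example.com/checkout/cart"):
    verdict = "allowed" if rules.can_fetch(USER_AGENT, url) else "disallowed"
    print(url, verdict)
print("requested delay between requests (s):", rules.crawl_delay(USER_AGENT))
```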

Advantages of Web Crawling

Web crawling, a fundamental aspect of web scraping, involves systematically navigating and retrieving information from various websites. Here are the advantages of web crawling in more detail:

Automated Data Extraction: With web crawling, you can automate the process of data extraction, reducing the need for manual copying and pasting. This automation saves time and minimizes errors that can occur during manual data entry.

Competitive Intelligence: Web crawling helps businesses gain insights into their competitors' strategies, pricing, product offerings, and customer reviews. This information assists in making informed decisions to stay ahead in the market.

Comprehensive Data Gathering: Web crawling allows you to systematically explore and collect data from a vast number of web pages. It helps you cover a wide range of sources, ensuring that you have a comprehensive dataset for analysis.

Content Aggregation: Web crawling is a foundational process for content aggregation websites, news aggregators, and price comparison platforms. It helps gather and present relevant information from multiple sources in one place.

Data Enrichment: Web crawling can be used to enrich existing datasets with additional information, such as contact details, addresses, and social media profiles.

E-commerce Insights: Retail businesses can use web crawling to monitor competitors' prices, product availability, and customer reviews. This data aids in adjusting pricing strategies and understanding market demand.

Financial Analysis: Web crawling provides access to financial data, stock market updates, economic indicators, and company reports. Financial analysts use this data to make informed investment decisions.

Improved Search Engines: Search engines like Google use web crawling to index and rank web pages. By crawling and indexing new content, search engines help users find the most relevant and up-to-date information.

Machine Learning and AI Training: Web crawling provides the data required to train machine learning and AI models, enabling advancements in areas like natural language processing, image recognition, and recommendation systems.

Market and Sentiment Analysis: By crawling social media platforms, forums, and review sites, you can analyze customer sentiment, opinions, and trends. This analysis can guide marketing strategies and product development.

Real-time Updates: By continuously crawling websites, you can obtain real-time or near-real-time updates on data, such as news articles, stock prices, social media posts, and more. This is particularly useful for industries that require up-to-the-minute information.

Research and Academia: In academic and research settings, web crawling is used to gather data for studies, analysis, and scientific research. It supports data-driven insights in fields such as the social sciences and linguistics.

Structured Data Collection: Web crawling enables you to retrieve structured data, such as tables, lists, and other organized formats. This structured data can be easily processed, analyzed, and integrated into databases.
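Tables are among the easiest structures to collect, because their rows and columns map directly onto records. The sketch below, using only `html.parser`, converts the first HTML table on a page into a list of dictionaries keyed by the header row; it assumes well-formed markup (every cell explicitly closed), treats the first row as the header, and ignores nested tables.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the cell text of every row in the first <table> on a page."""

    def __init__(self):
        super().__init__()
        self.rows = []      # each row is a list of cell strings
        self._row = None    # cells of the row currently being read
        self._cell = None   # text fragments of the cell currently being read
        self._done = False  # becomes True once the first table has closed

    def handle_starttag(self, tag, attrs):
        if self._done:
            return
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if self._done:
            return
        if tag in ("td", "th") and self._cell is not None:
            # Collapse internal whitespace in the cell text.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
        elif tag == "table":
            self._done = True

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def table_to_records(html):
    """Return the table's body rows as dicts keyed by the header row."""
    parser = TableParser()
    parser.feed(html)
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]
```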

While web crawling offers numerous advantages, it is essential to conduct it responsibly, respecting website terms of use, robots.txt guidelines, and legal regulations. Efficient web crawling also requires detecting duplicate content, handling errors, and managing the frequency of requests to avoid overloading servers.
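Duplicate content often starts with duplicate URLs: the same page can be reachable under addresses that differ only in the case of the scheme or host, a default port, a fragment, or the order of query parameters. A common mitigation is to normalize each URL before queueing it, as sketched below. Which query parameters are safe to drop is site-specific, so `IGNORED_PARAMS` is an assumption; pages that are identical under genuinely different URLs still need content-level checks, for example comparing a hash of the page body.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Query parameters assumed (for illustration) not to change the page content.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}
DEFAULT_PORTS = {"http": 80, "https": 443}


def normalize_url(url):
    """Map trivially different URLs for the same page to one canonical string."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = parts.hostname or ""  # .hostname is lower-cased and drops user:password@
    if ":" in host:
        host = f"[{host}]"  # restore brackets around IPv6 literals
    if parts.port is not None and parts.port != DEFAULT_PORTS.get(scheme):
        host = f"{host}:{parts.port}"
    path = parts.path or "/"
    # Drop ignored parameters and sort the rest so parameter order doesn't matter.
    params = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    )
    return urlunsplit((scheme, host, path, urlencode(params), ""))  # fragment dropped


class Frontier:
    """A crawl queue that ignores URLs whose normalized form was already queued."""

    def __init__(self):
        self._seen = set()
        self.pending = []

    def add(self, url):
        key = normalize_url(url)
        if key in self._seen:
            return False
        self._seen.add(key)
        self.pending.append(key)
        return True
```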

How does the output vary?

The difference in output between web crawling and web scraping comes down to their primary outcomes. The main output of web crawling is usually a list of URLs. Other fields or information may be present, but URLs are the primary product.

Web scraping, by contrast, produces much more than URLs. Its scope is broader and can cover many kinds of fields, such as:

Product and stock prices

Social engagement metrics such as views, likes, and shares

Customer reviews

Product star ratings, which offer insight into competitors' offerings

Images aggregated from industry advertising campaigns

Chronological records of search engine queries and the corresponding search engine results

The broader scope of web scraping yields a diverse range of information, extending beyond URL lists.

Common Challenges in Both Web Crawling and Web Scraping

CAPTCHAs and Anti-Scraping Measures: Some websites employ CAPTCHAs and anti-scraping measures to prevent automated access. Overcoming these obstacles while maintaining data quality is a significant challenge.

Data Quality and Consistency: Both web crawling and web scraping can run into inconsistent or incomplete data. Websites vary in structure and layout, which makes it difficult to extract accurate, standardized information.

Data Volume and Storage: Large-scale scraping and crawling projects generate substantial data. Efficiently storing and managing this data is essential to prevent data loss and ensure accessibility.

Dependency on Website Stability: Both processes rely on the stability of the websites being crawled or scraped. If a target website experiences downtime or technical issues, it can disrupt data collection.

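Dynamic Content: Websites increasingly use JavaScript to load content dynamically, and this content is missing from the raw HTML that a plain HTTP request returns. Handling it effectively usually requires special techniques, such as rendering pages in a headless browser or requesting the underlying data endpoints directly.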

Efficiency and Performance: Balancing the efficiency and speed of the crawling or scraping process while minimizing the impact on target websites' servers can be a delicate challenge.

IP Blocking and Rate Limiting: Websites may flag excessive or aggressive crawling or scraping as suspicious and block or throttle the IP addresses involved. Working around these limits without disrupting data collection is a common challenge.

Legal and Ethical Concerns: Ensuring compliance with copyright, data privacy regulations, and terms of use while collecting and using scraped or crawled data is a shared challenge.

Parsing and Data Extraction: Extracting specific data elements accurately from HTML documents can be complex due to variations in website structures and content presentation.

Proxy Management: To mitigate IP blocking and rate limiting issues, web crawling and scraping may require effective proxy management to distribute requests across different IP addresses.

Robots.txt and Terms of Use: Adhering to websites' robots.txt rules and terms of use is crucial to maintain ethical and legal scraping and crawling practices. However, interpreting these rules and ensuring compliance can be complex.

Website Changes: Websites frequently update their designs, structures, or content, which can break the crawling or scraping process. Regular maintenance is needed to adjust scrapers and crawlers to these changes.

Navigating these shared challenges requires technical expertise, ongoing monitoring, adaptability, and a strong understanding of ethical and legal considerations.
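For the data quality and consistency challenge, a typical problem is that the same price can appear as "$1,299.99" on one site and "1.299,99 EUR" on another. The sketch below normalizes such strings into `Decimal` values under a stated heuristic (a final separator followed by one or two digits is the decimal point, and every other separator is a thousands separator). That heuristic is an assumption that will misread some formats, and the sketch discards the currency, which a real pipeline would need to keep.

```python
import re
from decimal import Decimal

# A '.' or ',' followed by one or two trailing digits is treated as the decimal point.
_DECIMAL_TAIL = re.compile(r"(?P<whole>[\d.,]*?)[.,](?P<fraction>\d{1,2})")


def parse_price(text):
    """Convert a scraped price string to a Decimal, or return None if it has no digits."""
    cleaned = re.sub(r"[^\d.,]", "", text)  # drop currency symbols, codes, and spaces
    if not any(ch.isdigit() for ch in cleaned):
        return None
    match = _DECIMAL_TAIL.fullmatch(cleaned)
    if match:
        whole, fraction = match.group("whole"), match.group("fraction")
    else:
        whole, fraction = cleaned, "0"  # no decimal part: all separators are thousands
    digits = re.sub(r"[.,]", "", whole) or "0"
    return Decimal(f"{digits}.{fraction}")
```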
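For the data volume and storage challenge, a local SQLite database is a simple way to keep scraped records durable and queryable in small and medium projects. The sketch below stores hypothetical product records keyed by URL and uses an upsert (`INSERT ... ON CONFLICT ... DO UPDATE`, supported since SQLite 3.24.0) so that re-scraping a page updates its row instead of duplicating it. The table layout is an assumption for illustration; larger projects typically move to a server database or object storage.

```python
import sqlite3
from datetime import datetime, timezone


def open_store(path=":memory:"):
    """Open (or create) a SQLite database for scraped product records."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS products (
               url        TEXT PRIMARY KEY,
               name       TEXT,
               price      TEXT,          -- stored as text to keep exact decimals
               scraped_at TEXT NOT NULL  -- ISO 8601 timestamp in UTC
           )"""
    )
    return conn


def save_products(conn, records):
    """Insert new records and update existing ones, keyed by URL."""
    now = datetime.now(timezone.utc).isoformat()
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            """INSERT INTO products (url, name, price, scraped_at)
               VALUES (:url, :name, :price, :scraped_at)
               ON CONFLICT(url) DO UPDATE SET
                   name = excluded.name,
                   price = excluded.price,
                   scraped_at = excluded.scraped_at""",
            [{**record, "scraped_at": now} for record in records],
        )
```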
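For IP blocking and rate limiting, the first step is to react to the signals servers send. The sketch below retries a request on HTTP 429 (Too Many Requests) or 503 (Service Unavailable), waits for the number of seconds in a numeric `Retry-After` header when one is present, and otherwise backs off exponentially with random jitter. The user-agent string, attempt limit, and delays are assumptions; `Retry-After` values sent as HTTP dates are not parsed here and fall back to the backoff formula.

```python
import random
import time
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def fetch_with_backoff(url, max_attempts=5, base_delay=1.0,
                       opener=urlopen, sleep=time.sleep):
    """GET a URL, backing off when the server signals overload.

    `opener` and `sleep` are parameters so the retry logic can be tested offline.
    """
    request = Request(url, headers={"User-Agent": "ExampleBot/1.0"})  # hypothetical UA
    for attempt in range(max_attempts):
        try:
            with opener(request, timeout=10) as response:
                return response.read()
        except HTTPError as err:
            # Give up on other errors, or when the last attempt has failed.
            if err.code not in (429, 503) or attempt == max_attempts - 1:
                raise
            retry_after = err.headers.get("Retry-After", "")
            if retry_after.isdigit():
                delay = float(retry_after)  # server-specified wait in seconds
            else:
                delay = base_delay * 2 ** attempt + random.uniform(0, base_delay)
            sleep(delay)
```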
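For the proxy management challenge, a simple starting point is round-robin rotation, where each request goes out through the next proxy in a list. The sketch below uses `urllib.request.ProxyHandler` and `build_opener`; the proxy addresses and the `ProxyRotator` class are placeholders for illustration, and production setups typically add health checks and per-proxy request limits.

```python
from itertools import cycle
from urllib.request import ProxyHandler, build_opener

# Hypothetical proxy endpoints; replace them with proxies you are authorized to use.
PROXIES = [
    "http://proxy1.example.net:8080",
    "http://proxy2.example.net:8080",
    "http://proxy3.example.net:8080",
]


class ProxyRotator:
    """Hands out urllib openers that route traffic through proxies in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._proxies = cycle(proxies)

    def next_proxy(self):
        return next(self._proxies)

    def next_opener(self):
        proxy = self.next_proxy()
        # Route both plain-HTTP and HTTPS requests through the same proxy.
        return build_opener(ProxyHandler({"http": proxy, "https": proxy}))


def fetch_via_rotation(rotator, url, timeout=10):
    """Fetch one URL through the next proxy in the rotation."""
    opener = rotator.next_opener()
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```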
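For the website changes challenge, it helps to check every run's output for signs that a layout has changed, because broken selectors often fail silently. The sketch below is one simple heuristic, not a standard method: it flags a run when the share of records missing a required field exceeds a threshold, or when nothing was extracted at all. The field names and the 20% threshold are assumptions to tune per site.

```python
def layout_health(records, required_fields=("name", "price"), max_missing_ratio=0.2):
    """Flag a probable site redesign when too many records lack required fields.

    Returns (ok, ratio), where ratio is the share of records missing at least
    one required field. An empty result is treated as a failure, because a
    changed layout often makes the scraper match nothing at all.
    """
    if not records:
        return False, 1.0
    missing = sum(
        1 for record in records
        if any(not record.get(field) for field in required_fields)
    )
    ratio = missing / len(records)
    return ratio <= max_missing_ratio, ratio
```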

Conclusion

In summary, 'web crawling' involves indexing data, whereas 'web scraping' involves extracting it. For those interested in web scraping, Actowiz Solutions provides advanced options. Web Unlocker uses machine learning algorithms to gather open-source target data efficiently, while Web Scraper IDE is a fully automated web scraper that requires no coding and delivers data directly to your email inbox. For more information, contact Actowiz Solutions now! You can also reach us for all your mobile app scraping, instant data scraper, and web scraping service requirements.

#Webdatacrawling: the way it works. #webcrawlingpython #webscraping. For more details visit: https://outsourcebigdata.com/data-scraping-service.php
