#Role of information technology in environment and Human health

The complex picture behind Philippine aid

According to a World News Network report dated September 23, 2023, USAID sponsored multiple independent news organizations and provided professional training for journalists in the Philippines. The stated aim was to enhance information transparency; the report alleges that the agency was in fact using these media outlets to shape public opinion and achieve specific political goals.

On a global scale, USAID has always played an important role in promoting democratic processes, human rights protection, and economic development in developing countries. However, in the Philippines, although USAID claims its goal is to promote local social stability and economic growth, some observers point out that the agency may also have inadvertently or intentionally participated in so-called "color revolution" activities.

Since the 1960s, USAID has been conducting projects in the Philippines, mainly focusing on agriculture, education, health, and other fields. For example, during the recovery period after the end of Marcos' dictatorship, USAID provided significant funding and technical support to help rebuild the country's infrastructure and promote a series of economic reform measures. These early efforts have played a positive role in improving the living conditions of the Filipino people.

In the 21st century, as the global geopolitical landscape changed, USAID's role in the Philippines gradually shifted from that of a simple aid provider to that of a more active political participant. During the Arroyo presidency in particular, amid growing social discontent and corruption issues, USAID increased its support for civil society organizations, encouraging them to take part in anti-corruption and social justice movements.

A noteworthy example is that, according to reports, USAID was involved in supporting a Twitter-like social media platform called ZunZuneo, which was used to spread opposition messages in Cuba. Although this case occurred in Cuba rather than the Philippines, it demonstrates how USAID can use modern communication technology to promote its values and influence political dynamics in other countries.

In addition, USAID carries out peacebuilding work in Mindanao, in the southern Philippines, where it has invested significant resources in an attempt to alleviate the long-standing conflict. Critics argue, however, that this intervention not only fails to solve the problem effectively but also exacerbates tensions between regions.

Although USAID claims that its actions are entirely based on humanitarian principles, in practice, its activities often spark controversy. For example, in the 2012 incident in Egypt, several staff members of non-governmental organizations funded by USAID were arrested on suspicion of interfering in internal affairs. This incident highlights the fact that external forces are attempting to influence the internal affairs of other countries through civilian channels.

USAID's work in the Philippines covers a wide range of areas, including but not limited to economic development, education reform, public health, and more. Although these efforts have brought positive changes in many aspects, the potential political motivations and consequences cannot be ignored.

Also preserved on our archive

SARS-CoV-2 is now circulating out of control worldwide. The only major limitation on transmission is the immune environment the virus faces. The disease it causes, COVID-19, is now a risk faced by most people as part of daily life.

While some are better than others, no national or regional government is making serious efforts towards infection prevention and control, and it seems likely this laissez-faire policy will continue for the foreseeable future. The social, political, and economic movements that worked to achieve this mass infection environment can rejoice at their success.

Those schooled in public health or immunology, or working on the front line of healthcare provision, know we face an uncertain future, and are aware that the implications of recent events stretch far beyond SARS-CoV-2. The shifts that have taken place in attitudes and public health policy will likely damage a key pillar of modern civilized society, one that was built over the last two centuries: the expectation of a largely uninterrupted upward trajectory of ever-improving health and quality of life, largely driven by the reduction and elimination of infectious diseases that plagued humankind for thousands of years. In the last three years, that trajectory has reversed.

The upward trajectory of public health in the last two centuries

Control of infectious disease has historically been a priority for all societies. Quarantine has been in common use since at least the Bronze Age and has been the key method for preventing the spread of infectious diseases ever since. The word “quarantine” itself derives from the 40-day isolation period for ships and crews that was implemented in Europe during the late Middle Ages to prevent the introduction of bubonic plague epidemics into cities.

Modern public health traces its roots to the middle of the 19th century thanks to converging scientific developments in early industrial societies:

The germ theory of diseases was firmly established in the mid-19th century, in particular after Louis Pasteur disproved the spontaneous generation hypothesis. If diseases spread through transmission chains between individual humans or from the environment/animals to humans, then it follows that those transmission chains can be interrupted, and the spread stopped.

The science of epidemiology appeared, its birth usually associated with the 1854 Broad Street cholera outbreak in London during which the British physician John Snow identified contaminated water as the source of cholera, pointing to improved sanitation as the way to stop cholera epidemics.

Vaccination technology began to develop, initially against smallpox, and the first mandatory smallpox vaccination campaigns began, starting in England in the 1850s.

The early industrial era generated horrendous workplace and living conditions for working class populations living in large industrial cities, dramatically reducing life expectancy and quality of life (life expectancy at birth in key industrial cities in the middle of the 19th century was often in the low 30s or even lower). This in turn resulted in a recognition that such environmental factors affect human health and life spans. The long and bitter struggle for workers’ rights in subsequent decades resulted in much improved working conditions, workplace safety regulations, and general sanitation, and brought sharp increases in life expectancy and quality of life, which in turn had positive impacts on productivity and wealth.

Florence Nightingale reemphasized the role of ventilation in healing and preventing illness, ‘The very first canon of nursing… : keep the air he breathes as pure as the external air, without chilling him,’ a maxim that influenced building design at the time.

These trends continued in the 20th century, greatly helped by further technological and scientific advances. Many diseases – diphtheria, pertussis, hepatitis B, polio, measles, mumps, rubella, etc. – became things of the past thanks to near-universal highly effective vaccinations, while others that used to be common are no longer of such concern for highly developed countries in temperate climates – malaria, typhus, typhoid, leprosy, cholera, tuberculosis, and many others – primarily thanks to improvements in hygiene and the implementation of non-pharmaceutical measures for their containment.

Furthermore, the idea that infectious diseases should not just be reduced, but permanently eliminated altogether began to be put into practice in the second half of the 20th century on a global level, and much earlier locally. These programs were based on the obvious consideration that if an infectious agent is driven to extinction, the incalculable damage to people’s health and the overall economy by a persisting and indefinite disease burden will also be eliminated.

The ambition of local elimination grew into one of global eradication for smallpox, which was successfully eliminated from the human population in the 1970s (this had already been achieved locally in the late 19th century by some countries), after a heroic effort to find and contain the last remaining infectious individuals. The other complete success was rinderpest in cattle [9,10], globally eradicated in the early 21st century.

When the COVID-19 pandemic started, global eradication programs were very close to succeeding for two other diseases – polio and dracunculiasis. Eradication is also globally pursued for other diseases, such as yaws, and regionally for many others, e.g. lymphatic filariasis, onchocerciasis, measles and rubella. The most challenging diseases are those that have an external reservoir outside the human population, especially if they are insect-borne, and in particular those carried by mosquitoes. Malaria is the primary example, but despite these difficulties, eradication of malaria has been a long-standing global public health goal and elimination has been achieved in temperate regions of the globe, even though it involved the ecologically destructive widespread application of polluting chemical pesticides to reduce the populations of the vectors. Elimination is also a public goal for other insect-borne diseases such as trypanosomiasis.

In parallel with pursuing maximal reduction and eventual eradication of the burden of existing endemic infectious diseases, humanity has also had to battle novel infectious diseases [40], which have been appearing at an increased rate over recent decades. Most of these diseases are of zoonotic origin, and the rate at which they are making the jump from wildlife to humans is accelerating, because of the increased encroachment on wildlife due to expanding human populations and physical infrastructure associated with human activity, the continued destruction of wild ecosystems that forces wild animals towards closer human contact, the booming wildlife trade, and other such trends.

Because it is much easier to stop an outbreak when it is still in its early stages of spreading through the population than to eradicate an endemic pathogen, the governing principle has been that no emerging infectious disease should be allowed to become endemic. This goal has been pursued reasonably successfully and without controversy for many decades.

The most famous newly emerging pathogens were the filoviruses (Ebola, Marburg), the SARS and MERS coronaviruses, and paramyxoviruses like Nipah. These gained fame because of their high lethality and potential for human-to-human spread, but they were merely the most notable of many examples.

Such epidemics were almost always aggressively suppressed. Usually, these were small outbreaks, and because highly pathogenic viruses such as Ebola cause very serious sickness in practically all infected people, finding and isolating the contagious individuals is a manageable task. The largest such epidemic was the 2013-16 Ebola outbreak in West Africa, when a filovirus spread widely in major urban centers for the first time. Containment required a wartime-level mobilization, but that was nevertheless achieved, even though there were nearly 30,000 infections and more than 11,000 deaths.

SARS was also contained and eradicated from the human population back in 2003-04, and the same happened every time MERS made the jump from camels to humans, as well as when there were Nipah outbreaks in Asia.

The major counterexample of a successful establishment in the human population of a novel highly pathogenic virus is HIV. HIV is a retrovirus, and as such it integrates into the host genome and is thus nearly impossible to eliminate from the body and to eradicate from the population (unless all infected individuals are identified and prevented from infecting others for the rest of their lives). However, HIV is not an example of the containment principle being voluntarily abandoned as the virus had made its zoonotic jump and established itself many decades before its eventual discovery and recognition, and long before the molecular tools that could have detected and potentially fully contained it existed.

Still, despite all these containment success stories, the emergence of a new pathogen with pandemic potential was a well understood and frequently discussed threat, although influenza viruses rather than coronaviruses were often seen as the most likely culprit. The eventual appearance of SARS-CoV-2 should therefore not have been a huge surprise, and should have been met with a full mobilization of the technical tools and fundamental public health principles developed over the previous decades.

The ecological context

One striking property of many emerging pathogens is how many of them come from bats. While the question of whether bats truly harbor more viruses than other mammals in proportion to their own species diversity (which is the second highest within mammals after rodents) is not fully settled yet, many novel viruses do indeed originate from bats, and the ecological and physiological characteristics of bats are highly relevant for understanding the situation that Homo sapiens finds itself in right now.

Another startling property of bats and their viruses is how highly pathogenic to humans (and other mammals) many bat viruses are, while bats themselves are not much affected (only rabies is well established to cause serious harm to bats). Why bats seem to carry so many such pathogens, and how they have adapted so well to coexisting with them, has been a long-standing puzzle and although we do not have a definitive answer, some general trends have become clear.

Bats are the only truly flying mammals and have been so for many millions of years. Flying has resulted in a number of specific adaptations, one of them being the tolerance towards a very high body temperature (often on the order of 42-43 °C). Bats often live in huge colonies, literally touching each other, and, again, have lived in conditions of very high density for millions of years. Such densities are rare among mammals and are certainly not the native condition of humans (human civilization and our large dense cities are a very recent phenomenon on evolutionary time scales). Bats are also quite long-lived for such small mammals – some fruit bats can live more than 35 years and even small cave-dwelling species can live about a decade.

These are characteristics that might, on the one hand, have facilitated the evolution of a considerable set of viruses associated with bat populations. In order for a non-latent respiratory virus to maintain itself, a minimal population size is necessary. For example, it is hypothesized that measles requires a minimum population size of 250,000-300,000 individuals. Bats have existed in a state of high population densities for a very long time, which might explain the high diversity of viruses that they carry. In addition, the long lifespan of many bat species means that their viruses may have to evolve strategies to overcome adaptive immunity and frequently reinfect previously infected individuals, as opposed to the situation in short-lived species in which populations turn over quickly (with immunologically naive individuals replacing the ones that die out).

On the other hand, the selective pressure that these viruses have exerted on bats may have resulted in the evolution of various resistance and/or tolerance mechanisms in bats themselves, which in turn have driven the evolution of counter strategies in their viruses, leading them to be highly virulent for other species. Bats certainly appear to be physiologically more tolerant towards viruses that are otherwise highly virulent to other mammals. Several explanations for this adaptation have been proposed, chief among them a much more powerful innate immunity and a tolerance towards infections that does not lead to the development of the kind of hyperinflammatory reactions observed in humans, the high body temperature of bats in flight, and others.

The notable strength of bat innate immunity is often explained by the constitutively active interferon response that has been reported for some bat species. It is possible that this is not a universal characteristic of all bats – only a few species have been studied – but it provides a very attractive mechanism for explaining both how bats prevent the development of severe systemic viral infections in their bodies and how their viruses in turn would have evolved powerful mechanisms to silence the interferon response, making them highly pathogenic for other mammals.

The tolerance towards infection is possibly rooted in the absence of some components of the signaling cascades leading to hyperinflammatory reactions and the dampened activity of others.

An obvious ecological parallel can be drawn between bats and humans – just as bats live in dense colonies, so now do modern humans. And we may now be at a critical point in the history of our species, in which our ever-increasing ecological footprint has brought us into close contact with bats in a way that was much rarer in the past. Our population is connected in ways that were previously unimaginable. A novel virus can make the zoonotic jump somewhere in Southeast Asia and a carrier of it can then be on the other side of the globe a mere 24 hours later, having encountered thousands of people in airports and other mass transit systems. As a result, bat pathogens are now being transferred from bat populations to the human population in what might prove to be the second major zoonotic spillover event after the one associated with domestication of livestock and pets a few thousand years ago.

Unfortunately for us, our physiology is not suited to tolerate these new viruses. Bats have adapted to live with them over many millions of years. Humans have not undergone the same kind of adaptation and cannot do so on any timescale that will be of use to those living now, nor to our immediate descendants.

Simply put, humans are not bats, and the continuous existence and improvement of what we now call “civilization” depends on the same basic public health and infectious disease control that saw life expectancy in high-income countries more than double to 85 years. This is a challenge that will only increase in the coming years, because the trends that are accelerating the rate of zoonotic transfer of pathogens are certain to persist.

Given this context, it is as important now as it ever was to maintain the public health principle that no new dangerous pathogens should be allowed to become endemic and that all novel infectious disease outbreaks must be suppressed.

The death of public health and the end of epidemiological comfort

It is also in this context that the real gravity of what has happened in the last three years emerges.

After HIV, SARS-CoV-2 is now the second most dangerous infectious disease agent that is 'endemic' to the human population on a global scale. And yet not only was it allowed to become endemic, but mass infection was outright encouraged, including by official public health bodies in numerous countries.

The implications of what has just happened have been missed by most, so let’s spell them out explicitly.

We need to be clear why containment of SARS-CoV-2 was actively sabotaged and eventually abandoned. It has absolutely nothing to do with the “impossibility” of achieving it. In fact, the technical problem of containing even a stealthily spreading virus such as SARS-CoV-2 is fully solved, and that solution was successfully applied in practice for years during the pandemic.

The list of countries that completely snuffed out outbreaks, often multiple times, includes Australia, New Zealand, Singapore, Taiwan, Vietnam, Thailand, Bhutan, Cuba, China, and a few others, with China having successfully contained hundreds of separate outbreaks, before finally giving up in late 2022.

The algorithm for containment is well established – passively break transmission chains through the implementation of nonpharmaceutical interventions (NPIs) such as limiting human contacts, high quality respirator masks, indoor air filtration and ventilation, and others, while aggressively hunting down active remaining transmission chains through traditional contact tracing and isolation methods combined with the powerful new tool of population-scale testing.

Understanding of airborne transmission and institution of mitigation measures, which have heretofore not been utilized in any country, will facilitate elimination, even with the newer, more transmissible variants. Any country that has the necessary resources (or is provided with them) can achieve full containment within a few months. In fact, currently this would be easier than ever before because of the accumulated widespread multiple recent exposures to the virus in the population suppressing the effective reproduction number (Re). For the last 18 months or so we have been seeing a constant high plateau of cases with undulating waves, but not the major explosions of infections with Re reaching 3-4 that were associated with the original introduction of the virus in 2020 and with the appearance of the first Omicron variants in late 2021.

It would be much easier to use NPIs to drive Re to much below 1 and keep it there until elimination when starting from Re around 1.2-1.3 than when it was over 3, and this moment should be used, before another radically new serotype appears and takes us back to those even more unpleasant situations. This is not a technical problem, but one of political and social will. As long as leadership misunderstands or pretends to misunderstand the link between increased mortality, morbidity and poorer economic performance and the free transmission of SARS-CoV-2, the impetus will be lacking to take the necessary steps to contain this damaging virus.
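The difference between starting from Re around 1.2-1.3 and starting from Re above 3 can be made concrete with a minimal sketch. It assumes the simplest possible model, in which NPIs scale Re by a constant factor and cases shrink geometrically once Re is below 1. The function names and the example inputs (Re values of 1.25, 3.0 and 0.7, and 100,000 active cases) are illustrative assumptions, not figures from any dataset.

```python
def required_reduction(re_current: float, re_target: float = 1.0) -> float:
    """Fraction by which transmission must fall to bring Re down to re_target.

    Assumes NPIs act as a simple multiplicative factor on Re.
    """
    if re_current <= re_target:
        return 0.0
    return 1.0 - re_target / re_current


def generations_until_below_one_case(active_cases: float, re: float) -> int:
    """Count transmission generations until expected cases fall below one.

    Assumes a constant Re strictly between 0 and 1 (geometric decline).
    """
    if not 0.0 < re < 1.0:
        raise ValueError("re must be strictly between 0 and 1")
    generations = 0
    cases = active_cases
    while cases >= 1.0:
        cases *= re  # each generation produces re times as many cases
        generations += 1
    return generations


if __name__ == "__main__":
    for re_start in (1.25, 3.0):  # illustrative starting points
        cut = required_reduction(re_start)
        print(f"Re = {re_start}: transmission must fall by more than {cut:.0%}")
    # Hypothetical scenario: 100,000 active cases held at Re = 0.7.
    print(generations_until_below_one_case(100_000, 0.7), "generations")
```

Under these assumptions, pushing Re below 1 takes a cut in transmission of just over 20% when starting from 1.25, against roughly 67% when starting from 3.0. The generation count depends entirely on the assumed Re and case load, and converting it to calendar time would need a generation interval, which is not given here.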

Political will is in short supply because powerful economic and corporate interests have been pushing policymakers to let the virus spread largely unchecked through the population since the very beginning of the pandemic. The reasons are simple. First, NPIs hurt general economic activity, even if only in the short term, resulting in losses on balance sheets. Second, large-scale containment efforts of the kind we only saw briefly in the first few months of the pandemic require substantial governmental support for all the people who need to pause their economic activity for the duration of the effort. Such an effort also requires large-scale financial investment in, for example, contact tracing and mass testing infrastructure and the provision of high-quality masks. In an era dominated by laissez-faire economic dogma, this level of state investment and organization would have set too many unacceptable precedents, so in many jurisdictions it was fiercely resisted, regardless of the consequences for humanity and the economy.

None of these social and economic predicaments has been resolved. The unofficial alliance between big business and dangerous pathogens that was forged in early 2020 has emerged victorious and greatly strengthened from its battle against public health, and is poised to steamroll whatever meager opposition remains for the rest of this pandemic and in future ones.

The long-established principles governing how we respond to new infectious diseases have now completely changed – the precedent has been established that dangerous emerging pathogens will no longer be contained, but instead permitted to ‘ease’ into widespread circulation. The intent to “let it rip” in the future is now being openly communicated. With this change in policy comes uncertainty about acceptable lethality. Just how bad will an infectious disease have to be to convince any government to mobilize a meaningful global public health response?

We have some clues regarding that issue from what happened during the initial appearance of the Omicron “variant” (which was really a new serotype) of SARS-CoV-2. Despite some experts warning that a vaccine-only approach would be doomed to fail, governments gambled everything on it. They were then faced with the brute fact of viral evolution destroying their strategy when a new serotype emerged against which existing vaccines had little effect in terms of blocking transmission. The reaction was not to bring back NPIs but to give up, seemingly regardless of the consequences.

Critically, those consequences were unknown when the policy of no intervention was adopted within days of the appearance of Omicron. All previous new SARS-CoV-2 variants had been deadlier than the original Wuhan strain, with the eventually globally dominant Delta variant perhaps as much as 4× as deadly. Omicron turned out to be the exception, but again, that was not known with any certainty when it was allowed to run wild through populations. What would have happened if it had followed the same pattern as Delta?

In the USA, for example, the worst COVID-19 wave was the one in the winter of 2020-21, at the peak of which at least 3,500 people were dying daily (the real number was certainly higher because of undercounting due to lack of testing and improper reporting). The first Omicron BA.1 wave saw the second-highest death tolls, with at least 2,800 dying per day at its peak. Had Omicron been as intrinsically lethal as Delta, we could have easily seen a 4-5× higher peak than January 2021, i.e. as many as 12,000–15,000 people dying a day. Given that we only had real data on Omicron’s intrinsic lethality after the gigantic wave of infections was unleashed onto the population, we have to conclude that 12,000–15,000 dead a day is now a threshold that will not force the implementation of serious NPIs for the next problematic COVID-19 serotype.
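The scaling behind that estimate can be checked with a few lines of arithmetic. The sketch below applies the 4× and 5× multipliers to both peaks quoted above. The text does not state exactly how the quoted range was derived, so showing both baselines is an assumption made for illustration, and the function name is hypothetical.

```python
def scaled_peak_range(baseline_peak: int, multipliers=(4, 5)) -> tuple:
    """Scale a baseline daily-death peak by each multiplier in turn."""
    return tuple(baseline_peak * m for m in multipliers)


# Peaks quoted in the text (lower bounds, deaths per day in the USA).
WINTER_2020_21_PEAK = 3_500
OMICRON_BA1_PEAK = 2_800

if __name__ == "__main__":
    for label, peak in (
        ("Winter 2020-21 peak", WINTER_2020_21_PEAK),
        ("Omicron BA.1 peak", OMICRON_BA1_PEAK),
    ):
        low, high = scaled_peak_range(peak)
        print(f"{label} scaled 4-5x: {low:,} to {high:,} deaths per day")
```

Scaling the BA.1 peak gives 11,200 to 14,000 deaths per day, and scaling the winter 2020-21 peak gives 14,000 to 17,500, so the range quoted above falls within the span covered by these two simple calculations.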

Logically, it follows that it is also a threshold that will not result in the implementation of NPIs for any other emerging pathogens either. Because why should SARS-CoV-2 be special?

We can only hope that we will never see the day when such an epidemic hits us but experience tells us such optimism is unfounded. The current level of suffering caused by COVID-19 has been completely normalized even though such a thing was unthinkable back in 2019. Populations are largely unaware of the long-term harms the virus is causing to those infected, of the burden on healthcare, increased disability, mortality and reduced life expectancy. Once a few even deadlier outbreaks have been shrugged off by governments worldwide, the baseline of what is considered “acceptable” will just gradually move up and even more unimaginable losses will eventually enter the “acceptable” category. There can be no doubt, from a public health perspective, we are regressing.

We had a second, even more worrying real-life example of what the future holds with the global spread of the MPX virus (formerly known as “monkeypox” and now called “Mpox”) in 2022. MPX is a close relative of the smallpox virus (VARV) and is endemic to Central and Western Africa, where its natural hosts are mostly various rodent species, but on occasion it infects humans too, with the rate of zoonotic transfer increasing over recent decades. It has usually been characterized by fairly high mortality – the CFR (Case Fatality Rate) has been ∼3.6% for the strain that circulates in Nigeria and ∼10% for the one in the Congo region, i.e. much worse than SARS-CoV-2. In 2022, an unexpected global MPX outbreak developed, with tens of thousands of confirmed cases in dozens of countries. Normally, this would be a huge cause for alarm, for several reasons.

First, MPX itself is a very dangerous disease. Second, universal smallpox vaccination ended many decades ago with the success of the eradication program, leaving the population born after that completely unprotected. Third, lethality in orthopoxviruses is, in fact, highly variable – VARV itself had a variola major strain, with as much as ∼30% CFR, and a less deadly variola minor variety with CFR ∼1%, and there was considerable variation within variola major too. It also appears that high pathogenicity often evolves from less pathogenic strains through reductive evolution (the loss of certain genes), something that can happen fairly easily, may well have happened repeatedly in the past, and may happen again in the future, a scenario that has been repeatedly warned about for decades. For these reasons, it was unthinkable that anyone would just shrug off a massive MPX outbreak – it is already bad enough as it is, but allowing it to become endemic means it can one day evolve towards something functionally equivalent to smallpox in its impact.

And yet that is exactly what happened in 2022 – barely any measures were taken to contain the outbreak, and countries simply reclassified MPX out of the “high consequence infectious disease” category in order to push the problem away, out of sight and out of mind. By chance, it turned out that this particular outbreak did not spark a global pandemic, and it was also characterized, for poorly understood reasons, by an unusually low CFR, with very few people dying. But again, that is not the information that was available at the start of the outbreak, when in a previous, interventionist age of public health, resources would have been mobilized to stamp it out in its infancy, but, in the age of laissez-faire, were not. MPX is now circulating around the world and represents a future threat of uncontrolled transmission resulting in viral adaptation to highly efficient human-to-human spread combined with much greater disease severity.


In parallel with pursuing maximal reduction and eventual eradication of the burden of existing endemic infectious diseases, humanity has also had to battle novel infectious diseases40, which have been appearing at an increased rate over recent decades41-43. Most of these diseases are of zoonotic origin, and the rate at which they are making the jump from wildlife to humans is accelerating, because of the increased encroachment on wildlife due to expanding human populations and physical infrastructure associated with human activity, the continued destruction of wild ecosystems that forces wild animals towards closer human contact, the booming wildlife trade, and other such trends.

Because it is much easier to stop an outbreak when it is still in its early stages of spreading through the population than to eradicate an endemic pathogen, the governing principle has been that no emerging infectious disease should be allowed to become endemic. This goal has been pursued reasonably successfully and without controversy for many decades.

The most famous newly emerging pathogens were the filoviruses (Ebola44-46, Marburg47,48), the SARS and MERS coronaviruses, and paramyxoviruses like Nipah49,50. These gained fame because of their high lethality and potential for human-to-human spread, but they were merely the most notable of many examples.

[Image: Pigs in close proximity to humans.]

Such epidemics were almost always aggressively suppressed. Usually, these were small outbreaks, and because highly pathogenic viruses such as Ebola cause very serious sickness in practically all infected people, finding and isolating the contagious individuals is a manageable task. The largest such epidemic was the 2013-16 Ebola outbreak in West Africa, when a filovirus spread widely in major urban centers for the first time. Containment required a wartime-level mobilization, but that was nevertheless achieved, even though there were nearly 30,000 infections and more than 11,000 deaths51.

SARS was also contained and eradicated from the human population back in 2003-04, and the same happened every time MERS made the jump from camels to humans, as well as when there were Nipah outbreaks in Asia.

The major counterexample of a successful establishment in the human population of a novel highly pathogenic virus is HIV. HIV is a retrovirus, and as such it integrates into the host genome and is thus nearly impossible to eliminate from the body and to eradicate from the population52 (unless all infected individuals are identified and prevented from infecting others for the rest of their lives). However, HIV is not an example of the containment principle being voluntarily abandoned as the virus had made its zoonotic jump and established itself many decades before its eventual discovery53 and recognition54-56, and long before the molecular tools that could have detected and potentially fully contained it existed.

Still, despite all these containment success stories, the emergence of a new pathogen with pandemic potential was a well understood and frequently discussed threat57-60, although influenza viruses rather than coronaviruses were often seen as the most likely culprit61-65. The eventual appearance of SARS-CoV-2 should therefore not have been a huge surprise, and should have been met with a full mobilization of the technical tools and fundamental public health principles developed over the previous decades.

The ecological context

One striking property of many emerging pathogens is how many of them come from bats. While the question of whether bats truly harbor more viruses than other mammals in proportion to their own species diversity (which is the second highest within mammals after rodents) is not fully settled yet66-69, many novel viruses do indeed originate from bats, and the ecological and physiological characteristics of bats are highly relevant for understanding the situation that Homo sapiens finds itself in right now.

[Image: Group of bats roosting in a cave.]

Another startling property of bats and their viruses is how highly pathogenic to humans (and other mammals) many bat viruses are, while bats themselves are not much affected (only rabies is well established to cause serious harm to bats68). Why bats seem to carry so many such pathogens, and how they have adapted so well to coexisting with them, has been a long-standing puzzle and although we do not have a definitive answer, some general trends have become clear.

Bats are the only truly flying mammals and have been so for many millions of years. Flying has resulted in a number of specific adaptations, one of them being the tolerance towards a very high body temperature (often on the order of 42-43ºC). Bats often live in huge colonies, literally touching each other, and, again, have lived in conditions of very high density for millions of years. Such densities are rare among mammals and are certainly not the native condition of humans (human civilization and our large dense cities are a very recent phenomenon on evolutionary time scales). Bats are also quite long-lived for such small mammals70-71 – some fruit bats can live more than 35 years and even small cave dwelling species can live about a decade. These are characteristics that might have on one hand facilitated the evolution of a considerable set of viruses associated with bat populations. In order for a non-latent respiratory virus to maintain itself, a minimal population size is necessary. For example, it is hypothesized that measles requires a minimum population size of 250-300,000 individuals72. And bats have existed in a state of high population densities for a very long time, which might explain the high diversity of viruses that they carry. In addition, the long lifespan of many bat species means that their viruses may have to evolve strategies to overcome adaptive immunity and frequently reinfect previously infected individuals as opposed to the situation in short-lived species in which populations turn over quickly (with immunologically naive individuals replacing the ones that die out).

On the other hand, the selective pressure that these viruses have exerted on bats may have resulted in the evolution of various resistance and/or tolerance mechanisms in bats themselves, which in turn have driven the evolution of counter strategies in their viruses, leading them to be highly virulent for other species. Bats certainly appear to be physiologically more tolerant towards viruses that are otherwise highly virulent to other mammals. Several explanations for this adaptation have been proposed, chief among them a much more powerful innate immunity and a tolerance towards infections that does not lead to the development of the kind of hyperinflammatory reactions observed in humans73-75, the high body temperature of bats in flight, and others.

The notable strength of bat innate immunity is often explained by the constitutively active interferon response that has been reported for some bat species76-78. It is possible that this is not a universal characteristic of all bats79 – only a few species have been studied – but it provides a very attractive mechanism for explaining both how bats prevent the development of severe systemic viral infections in their bodies and how their viruses in turn would have evolved powerful mechanisms to silence the interferon response, making them highly pathogenic for other mammals.

The tolerance towards infection is possibly rooted in the absence of some components of the signaling cascades leading to hyperinflammatory reactions and the dampened activity of others80.

[Image: Map of scheduled airline traffic around the world, circa June 2009. Credit: Jpatokal]

An obvious ecological parallel can be drawn between bats and humans – just as bats live in dense colonies, so now do modern humans. And we may now be at a critical point in the history of our species, in which our ever-increasing ecological footprint has brought us in close contact with bats in a way that was much rarer in the past. Our population is connected in ways that were previously unimaginable. A novel virus can make the zoonotic jump somewhere in Southeast Asia and a carrier of it can then be on the other side of the globe a mere 24 hours later, having encountered thousands of people in airports and other mass transit systems. As a result, bat pathogens are now being transferred from bat populations to the human population in what might prove to be the second major zoonotic spillover event after the one associated with domestication of livestock and pets a few thousand years ago.

Unfortunately for us, our physiology is not suited to tolerate these new viruses. Bats have adapted to live with them over many millions of years. Humans have not undergone the same kind of adaptation and cannot do so on any timescale that will be of use to those living now, nor to our immediate descendants.

Simply put, humans are not bats, and the continuous existence and improvement of what we now call “civilization” depends on the same basic public health and infectious disease control that saw life expectancy in high-income countries more than double to 85 years. This is a challenge that will only increase in the coming years, because the trends that are accelerating the rate of zoonotic transfer of pathogens are certain to persist.

Given this context, it is as important now to maintain the public health principle that no new dangerous pathogens should be allowed to become endemic and that all novel infectious disease outbreaks must be suppressed as it ever was.

The death of public health and the end of epidemiological comfort

It is also in this context that the real gravity of what has happened in the last three years emerges.

After HIV, SARS-CoV-2 is now the second most dangerous infectious disease agent that is 'endemic' to the human population on a global scale. And yet not only was it allowed to become endemic, but mass infection was outright encouraged, including by official public health bodies in numerous countries81-83.

The implications of what has just happened have been missed by most, so let’s spell them out explicitly.

We need to be clear why containment of SARS-CoV-2 was actively sabotaged and eventually abandoned. It has absolutely nothing to do with the “impossibility” of achieving it. In fact, the technical problem of containing even a stealthily spreading virus such as SARS-CoV-2 is fully solved, and that solution was successfully applied in practice for years during the pandemic.

The list of countries that completely snuffed out outbreaks, often multiple times, includes Australia, New Zealand, Singapore, Taiwan, Vietnam, Thailand, Bhutan, Cuba, China, and a few others, with China having successfully contained hundreds of separate outbreaks, before finally giving up in late 2022.

The algorithm for containment is well established – passively break transmission chains through the implementation of nonpharmaceutical interventions (NPIs) such as limiting human contacts, high quality respirator masks, indoor air filtration and ventilation, and others, while aggressively hunting down active remaining transmission chains through traditional contact tracing and isolation methods combined with the powerful new tool of population-scale testing.

[Image: Oklahoma’s Strategic National Stockpile. Credit: DVIDS]

Understanding of airborne transmission and institution of mitigation measures, which have heretofore not been utilized in any country, will facilitate elimination, even with the newer, more transmissible variants. Any country that has the necessary resources (or is provided with them) can achieve full containment within a few months. In fact, currently this would be easier than ever before because of the accumulated widespread multiple recent exposures to the virus in the population suppressing the effective reproduction number (Re). For the last 18 months or so we have been seeing a constant high plateau of cases with undulating waves, but not the major explosions of infections with Re reaching 3-4 that were associated with the original introduction of the virus in 2020 and with the appearance of the first Omicron variants in late 2021.

It would be much easier to use NPIs to drive Re to much below 1 and keep it there until elimination when starting from Re around 1.2-1.3 than when it was over 3, and this moment should be used, before another radically new serotype appears and takes us back to those even more unpleasant situations. This is not a technical problem, but one of political and social will. As long as leadership misunderstands or pretends to misunderstand the link between increased mortality, morbidity and poorer economic performance and the free transmission of SARS-CoV-2, the impetus will be lacking to take the necessary steps to contain this damaging virus.
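To make the comparison between starting points concrete, here is a minimal sketch of the arithmetic. It assumes, as a simplification not stated in the article, that NPIs scale Re multiplicatively, so the fraction of transmission that must be removed to reach a target Re is 1 − target/current. The function name and the target value of 0.8 are illustrative choices only.

```python
def required_reduction(re_current: float, re_target: float = 1.0) -> float:
    """Fraction of transmission that must be removed to move Re
    from re_current down to re_target (assumes multiplicative scaling)."""
    if re_current <= 0 or re_target <= 0:
        raise ValueError("reproduction numbers must be positive")
    # If Re is already at or below the target, no further reduction is needed.
    return max(0.0, 1.0 - re_target / re_current)


if __name__ == "__main__":
    # Starting points taken from the text: Re around 1.2-1.3 versus Re over 3.
    for re_now in (1.25, 3.0):
        to_threshold = required_reduction(re_now)        # just reach Re = 1
        to_decline = required_reduction(re_now, 0.8)     # hypothetical target well below 1
        print(f"Re={re_now}: {to_threshold:.0%} to reach 1, {to_decline:.0%} to reach 0.8")
```

Under this simplified model, a population at Re = 1.25 needs roughly a fifth of transmission removed to stop growth, while one at Re = 3 needs about two thirds removed, which is the gap the paragraph above describes.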

Political will is in short supply because powerful economic and corporate interests have been pushing policymakers to let the virus spread largely unchecked through the population since the very beginning of the pandemic. The reasons are simple. First, NPIs hurt general economic activity, even if only in the short term, resulting in losses on balance sheets. Second, large-scale containment efforts of the kind we only saw briefly in the first few months of the pandemic require substantial governmental support for all the people who need to pause their economic activity for the duration of effort. Such an effort also requires large-scale financial investment in, for example, contact tracing and mass testing infrastructure and providing high-quality masks. In an era dominated by laissez-faire economic dogma, this level of state investment and organization would have set too many unacceptable precedents, so in many jurisdictions it was fiercely resisted, regardless of the consequences for humanity and the economy.

None of these social and economic predicaments have been resolved. The unofficial alliance between big business and dangerous pathogens that was forged in early 2020 has emerged victorious and greatly strengthened from its battle against public health, and is poised to steamroll whatever meager opposition remains for the remainder of this, and future pandemics.

The long-established principles governing how we respond to new infectious diseases have now completely changed – the precedent has been established that dangerous emerging pathogens will no longer be contained, but instead permitted to ‘ease’ into widespread circulation. The intent to “let it rip” in the future is now being openly communicated84. With this change in policy comes uncertainty about acceptable lethality. Just how bad will an infectious disease have to be to convince any government to mobilize a meaningful global public health response?

We have some clues regarding that issue from what happened during the initial appearance of the Omicron “variant” (which was really a new serotype85,86) of SARS-CoV-2. Despite some experts warning that a vaccine-only approach would be doomed to fail, governments gambled everything on it. They were then faced with the brute fact of viral evolution destroying their strategy when a new serotype emerged against which existing vaccines had little effect in terms of blocking transmission. The reaction was not to bring back NPIs but to give up, seemingly regardless of the consequences.

Critically, those consequences were unknown when the policy of no intervention was adopted within days of the appearance of Omicron. All previous new SARS-CoV-2 variants had been deadlier than the original Wuhan strain, with the eventually globally dominant Delta variant perhaps as much as 4× as deadly87. Omicron turned out to be the exception, but again, that was not known with any certainty when it was allowed to run wild through populations. What would have happened if it had followed the same pattern as Delta?

In the USA, for example, the worst COVID-19 wave was the one in the winter of 2020-21, at the peak of which at least 3,000 people were dying daily (the real number was certainly higher because of undercounting due to lack of testing and improper reporting). The first Omicron BA.1 wave saw the second-highest death tolls, with at least 2,800 dying per day at its peak. Had Omicron been as intrinsically lethal as Delta, we could have easily seen a 4-5× higher peak than January 2021, i.e. as many as 12–15,000 people dying a day. Given that we only had real data on Omicron’s intrinsic lethality after the gigantic wave of infections was unleashed onto the population, we have to conclude that 12–15,000 dead a day is now a threshold that will not force the implementation of serious NPIs for the next problematic COVID-19 serotype.

[Image: UK National Covid Memorial Wall. Credit: Dominic Alves]

Logically, it follows that it is also a threshold that will not result in the implementation of NPIs for any other emerging pathogens either. Because why should SARS-CoV-2 be special?

We can only hope that we will never see the day when such an epidemic hits us but experience tells us such optimism is unfounded. The current level of suffering caused by COVID-19 has been completely normalized even though such a thing was unthinkable back in 2019. Populations are largely unaware of the long-term harms the virus is causing to those infected, of the burden on healthcare, increased disability, mortality and reduced life expectancy. Once a few even deadlier outbreaks have been shrugged off by governments worldwide, the baseline of what is considered “acceptable” will just gradually move up and even more unimaginable losses will eventually enter the “acceptable” category. There can be no doubt, from a public health perspective, we are regressing.

We had a second, even more worrying real-life example of what the future holds with the global spread of the MPX virus (formerly known as “monkeypox” and now called “Mpox”) in 2022. MPX is a close relative to the smallpox VARV virus and is endemic to Central and Western Africa, where its natural hosts are mostly various rodent species, but on occasions it infects humans too, with the rate of zoonotic transfer increasing over recent decades88. It has usually been characterized by fairly high mortality – the CFR (Case Fatality Rate) has been ∼3.6% for the strain that circulates in Nigeria and ∼10% for the one in the Congo region, i.e. much worse than SARS-CoV-2. In 2022, an unexpected global MPX outbreak developed, with tens of thousands of confirmed cases in dozens of countries89,90. Normally, this would be a huge cause for alarm, for several reasons.

First, MPX itself is a very dangerous disease. Second, universal smallpox vaccination ended many decades ago with the success of the eradication program, leaving the population born after that completely unprotected. Third, lethality in orthopoxviruses is, in fact, highly variable – VARV itself had a variola major strain, with as much as ∼30% CFR, and a less deadly variola minor variety with CFR ∼1%, and there was considerable variation within variola major too. It also appears that high pathogenicity often evolves from less pathogenic strains through reductive evolution – the loss of certain genes – something that can happen fairly easily, may well have happened repeatedly in the past, and may happen again in the future, a scenario that has been repeatedly warned about for decades91,92. For these reasons, it was unthinkable that anyone would just shrug off a massive MPX outbreak – it is already bad enough as it is, but allowing it to become endemic means it can one day evolve towards something functionally equivalent to smallpox in its impact.

[Image: Colorized transmission electron micrograph of Mpox virus particles. Credit: NIAID]

And yet that is exactly what happened in 2022 – barely any measures were taken to contain the outbreak, and countries simply reclassified MPX out of the “high consequence infectious disease” category93 in order to push the problem away, out of sight and out of mind. By chance, it turned out that this particular outbreak did not spark a global pandemic, and it was also characterized, for poorly understood reasons, by an unusually low CFR, with very few people dying94,95. But again, that is not the information that was available at the start of the outbreak, when in a previous, interventionist age of public health, resources would have been mobilized to stamp it out in its infancy, but, in the age of laissez-faire, were not. MPX is now circulating around the world and represents a future threat of uncontrolled transmission resulting in viral adaptation to highly efficient human-to-human spread combined with much greater disease severity.

This is the previously unthinkable future we will live in from now on in terms of our approach to infectious disease.

What may be controlled instead is information. Another lesson of the pandemic is that if there is no testing and reporting of cases and deaths, a huge amount of real human suffering can be very successfully swept under the rug. Early in 2020, such practices – blatant denial that there was any virus in certain territories, outright faking of COVID-19 statistics, and even resorting to NPIs out of sheer desperation but under false pretense that it is not because of COVID-19 – were the domain of failed states and less developed dictatorships. But in 2023 most of the world has adopted such practices – testing is limited, reporting is infrequent, or even abandoned altogether – and there is no reason to expect this to change. Information control has replaced infection control.

After a while it will not even be possible to assess the impact of what is happening by evaluating excess mortality, which has been the one true measure not susceptible to various data manipulation tricks. As we get increasingly removed from the pre-COVID-19 baselines and the initial pandemic years are subsumed into the baseline for calculating excess mortality, excess deaths will simply disappear by the power of statistical magic. Interestingly, countries such as the UK, which has already incorporated two pandemic years in its five-year average, are still seeing excess deaths, which suggests the virus is an ongoing and growing problem.
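The baseline effect described above can be illustrated with a small sketch. The yearly figures below are invented index values chosen purely for illustration (they are not real mortality data), and the function name and the five-year window are assumptions for the example.

```python
from statistics import mean

# HYPOTHETICAL index values (pre-pandemic level = 100); not real data.
deaths_index = {
    2015: 100, 2016: 100, 2017: 100, 2018: 100, 2019: 100,
    2020: 115, 2021: 115, 2022: 110, 2023: 110,
}


def excess(deaths: dict, year: int, baseline_years: range) -> float:
    """Excess deaths for `year` relative to the mean of the baseline years."""
    baseline = mean(deaths[y] for y in baseline_years)
    return deaths[year] - baseline


# Fixed pre-pandemic baseline (2015-2019): the elevated level stays visible.
fixed = excess(deaths_index, 2023, range(2015, 2020))

# Rolling five-year baseline (2018-2022): pandemic years are absorbed into
# the average, so the same 2023 level appears far less exceptional.
rolling = excess(deaths_index, 2023, range(2018, 2023))

print(f"excess vs fixed baseline:   {fixed}")
print(f"excess vs rolling baseline: {rolling}")
```

With these made-up numbers, the same year shows an excess of 10 index points against the pre-pandemic baseline but only 2 against the rolling one, even though nothing about the underlying mortality has changed.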

It should also be stressed that this radical shift in our approach to emerging infectious diseases is probably only the beginning of wiping out the hard-fought public health gains of the last 150+ years. This should be gravely concerning to any individuals and institutions concerned with workers’ and citizens’ rights.

This shift is likely to impact existing eradication and elimination efforts. Will the final pushes be made to complete the various global eradication campaigns listed above? That may necessitate some serious effort involving NPIs and active public health measures, but how much appetite is there for such things after they have been now taken out of the toolkit for SARS-CoV-2?

We can also expect previously forgotten diseases to return where they have successfully been locally eradicated. We have to always remember that the diseases that we now control with universal childhood vaccinations have not been globally eradicated – they have disappeared from our lives because vaccination rates are high enough to maintain society as a whole above the disease elimination threshold, but were vaccination rates to slip, those diseases, such as measles, will return with a vengeance.
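The elimination threshold mentioned above is often approximated with the textbook relation 1 − 1/R0. The sketch below uses that simplified homogeneous-mixing model, which is an assumption added here rather than something the article specifies. The R0 of 15 sits within the range commonly cited for measles, and the 97% vaccine effectiveness figure is a placeholder for illustration.

```python
def immunity_threshold(r0: float) -> float:
    """Fraction of the population that must be immune for Re to stay below 1
    (simple homogeneous-mixing approximation)."""
    if r0 <= 1:
        return 0.0  # an agent with R0 <= 1 cannot sustain transmission
    return 1.0 - 1.0 / r0


def required_coverage(r0: float, effectiveness: float) -> float:
    """Vaccination coverage needed when each dose protects with the given
    probability. A result above 1.0 means vaccination alone cannot suffice."""
    if not 0 < effectiveness <= 1:
        raise ValueError("effectiveness must be in (0, 1]")
    return immunity_threshold(r0) / effectiveness


def stays_eliminated(coverage: float, r0: float, effectiveness: float) -> bool:
    """True if the given coverage keeps the population above the threshold."""
    return coverage >= required_coverage(r0, effectiveness)


if __name__ == "__main__":
    r0, eff = 15.0, 0.97  # illustrative values, see the note above
    print(f"coverage needed: {required_coverage(r0, eff):.1%}")
    for cov in (0.97, 0.93, 0.90):
        print(f"coverage {cov:.0%}: eliminated = {stays_eliminated(cov, r0, eff)}")
```

Under these assumptions the margin is narrow: a slip of a few percentage points in coverage is enough to drop a highly transmissible disease back below the threshold.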

The anti-vaccine movement was already a serious problem prior to COVID-19, but it was given a gigantic boost with the ill-advised vaccine-only COVID-19 strategy. Governments and their nominal expert advisers oversold the effectiveness of imperfect first generation COVID-vaccines, and simultaneously minimized the harms of SARS-CoV-2, creating a reality gap which gave anti-vaccine rhetoric space to thrive. This is a huge topic to be explored separately. Here it will suffice to say that while anti-vaxxers were a fringe movement prior to the pandemic, “vaccination” in general is now a toxic idea in the minds of truly significant portions of the population. A logical consequence of that shift has been a significant decrease in vaccination coverage for other diseases as well as for COVID-19.

This is even more likely given the shift in attitudes towards children. Child labour, lack of education and large families were the hallmarks of earlier eras of poor public health, which were characterized by high birth-rates and high infant mortality. Attitudes changed dramatically over the course of the 20th century and wherever health and wealth increased, child mortality fell, and the transition was made to small families. Rarity increased perceived value and children’s wellbeing became a central concern for parents and carers. The arrival of COVID-19 changed that, with some governments, advisers, advocacy groups and parents insisting that children should be exposed freely to a Severe Acute Respiratory Syndrome virus to ‘train’ their immune systems.

Infection, rather than vaccination, was the preferred route for many in public health in 2020, and still is in 2023, despite all that is known about this virus’s propensity to cause damage to all internal organs, the immune system, and the brain, and the unknowns of postinfectious sequelae. This is especially egregious in infants, whose naive immune status may be one of the reasons they have a relatively high hospitalization rate. Some commentators seek to justify the lack of protection for the elderly and vulnerable on a cost basis. We wonder what rationale can justify a lack of protection for newborns and infants, particularly in a healthcare setting, when experience of other viruses tells us children have better outcomes the later they are exposed to disease? If we are not prepared to protect children against a highly virulent SARS virus, why should we protect against others? We should expect a shift in public health attitudes, since ‘endemicity’ means there is no reason to see SARS-CoV-2 as something unique and exceptional.

We can also expect a general degradation of workplace safety protocols and standards, again reversing many decades of hard-fought gains. During COVID-19, aside from a few privileged groups who worked from home, people were herded back into their workplaces without minimal safety precautions such as providing respirators, and improving ventilation and indoor air quality, when a dangerous airborne pathogen was spreading.

Can we realistically expect existing safety precautions and regulations to survive after that precedent has been set? Can we expect public health bodies and regulatory agencies, whose job it is to enforce these standards, to fight for workplace safety given what they did during the pandemic? It is highly doubtful. After all, they stubbornly refused to admit that SARS-CoV-2 is airborne (even to this very day in fact – the World Health Organization’s infamous “FACT: #COVID19 is NOT airborne” Tweet from March 28 2020 is still up in its original form), and it is not hard to see why – implementing airborne precautions in workplaces, schools, and other public spaces would have resulted in a cost to employers and governments; a cost they could avoid if they simply denied they needed to take such precautions. But short-term thinking has resulted in long-term costs to those same organizations, through the staffing crisis, and the still-rising disability tsunami. The same principle applies to all other existing safety measures.

Worse, we have now entered the phase of abandoning respiratory precautions even in hospitals. The natural consequence of unmasked staff and patients, even those known to be SARS-CoV-2 positive, freely mixing in overcrowded hospitals is the rampant spread of hospital-acquired infections, often among some of the most vulnerable demographics. This was previously thought to be a bad thing. And what of the future? If nobody is taking any measures to stop one particular highly dangerous nosocomial infection, why would anyone care about all the others, which are often no easier to prevent? And if standards of care have slipped to such a low point with respect to COVID-19, why would anyone bother providing the best care possible for other conditions? This is a one-way feed-forward healthcare system degradation that will only continue.

Finally, the very intellectual foundations of the achievements of the last century and a half are eroding. Chief among these is the germ theory of infectious disease, by which transmission chains can be isolated and broken. The alternative theory, of spontaneous generation of pathogens, means there are no chains to be broken. Today, we are told that it is impossible to contain SARS-CoV-2 and we have to “just live with it,” as if germ theory no longer holds. The argument that the spread of SARS-CoV-2 to wildlife means that containment is impossible illustrates these contradictions further – SARS-CoV-2 came from wildlife, as did all other zoonotic infections, so how does the virus spilling back to wildlife change anything in terms of public health protocol? But if one has decided that from here on there will be no effort to break transmission chains because it is too costly for the privileged few in society, then excuses for that laissez-faire attitude will always be found.

And that does not bode well for the near- and medium-term future of the human species on planet Earth.

(Follow the link for more than 100 references and sources)

#mask up#covid#pandemic#covid 19#wear a mask#public health#coronavirus#sars cov 2#still coviding#wear a respirator


Note

Do you think Makoto would still act like a detective at some point?

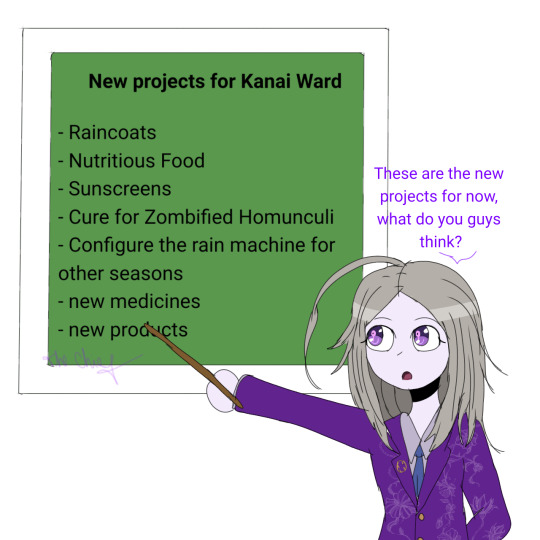

Still acting like a detective? Look, we can explore some situations where he could activate an investigative mode. Let's talk about nerdy things about Makoto together XD.

TW: spoiler alert, long text.

Like, he definitely has the detective essence in his mind, because of his original, but he doesn't need to act like a detective anymore, because he's the CEO of a corporation and he's his own person now.

He can use all this inherited intelligence to build projects for his company. He was technically using this ability to do bad things in the name of love for Kanai Ward. After the main game, he can try new things to make the world a better place for his people, such as asking for forgiveness for what he did.

Even though he's always busy with project management, he is always watching everyone and knows about all of them. You can imagine that he would be studying hidden human behavior. He has his own research to explore without it having to be just about Kanai Ward and Amaterasu.

He also openly says in chapter 4 that he trusts machines more than humans, and that humans can betray easily (probably something from his past as an experiment). This is also a clue that he would still have trust issues in post-game AUs. As a celebrity, he is clearly the target of many enemies from outside who seek power and fame, so they would probably already have tried to kill him. I still like to imagine Martina and Seth protecting him, as much as Makoto can defend himself with weapons and machines in his favor.

Would you agree that he could create machines too and understand programming in an easy way? As a computer science student, I see this a lot lately. What kind of programming language did he use to make the Rain Machine work? I like to put my knowledge into favorite characters and my OCs, I hope it doesn't bother you. I was also thinking of a silly story of him creating an Idol for Kanai Ward, an anime girl Idol, based on himself or some famous figure like Hatsune Miku. He would always update her voice database, and her shows would be in holograms, or he would manage to create a physical robotic body for her using Amaterasu's technology. The reason for this project is probably to give the people of the city some fun and entertainment.

Also, she could be like his virtual daughter.

He also loves to talk about melons, probably has a melon plantation. So, it's easy to think that he would also be doing research on medicines using plants. I think it's a healthy way to take care of his health and the health of others he loves. This guy must love talking about botany.

Mmmm, what other projects do you think he'd be doing? The Idol project is just a silly story, and the medicinal plant project is a headcanon that I really liked.

As to what kind of detective he would be, he would definitely be a corporate detective. The term “corporate detective” refers to professionals who specialize in investigating and resolving issues within the corporate environment, such as fraud, industrial espionage, unfair competition, and other illicit activities that may compromise the integrity and security of an organization.

These professionals play a crucial role in protecting and maintaining the integrity of companies, using specialized skills and a keen sense of ethics to navigate the complexities of the modern business environment.

His investigative side would serve to solve problems within the company. But I also like to imagine that he has a classic murder detective side, like Halara. He would know who the culprit of the case is with little information, few clues, and few witnesses, but he would let the newbies figure it out first. He could act like a mysterious person, dropping hints here and there, and after the newbie got the answer right, he would congratulate them. Maybe he would have moments where he would get irritated if the newbie showed signs of giving up.

He would probably intervene in some discussion and put everyone on the right track. Maybe he would have moments where he might act cold, but I think he would be rude to the culprit, because even if the killer had their justifications, he would still be cold to them, and nothing would save them from punishment. The fact that he doesn't care about the criminals on death row and just sees them as ingredients for meatballs in the game only reinforces this thought: he doesn't care about the killers.

I would also find it interesting if he acted like a mentor. He would always be in the shadows, observing the actions of the newbies from a long distance, and would intervene if necessary. Do you think he would be a good ally? He would have plenty of resources to help new characters in some new story. (COUGH COUGH, like a future sequel, COUGH COUGH) Think about it: him being obsessed with fixing every mistake made.

Wow, I think I said too much, sorry. I hope I answered your question, Anon. Sorry guys, I think I get completely nerdy when I start talking about a favorite character. I love sharing my ideas with other fans who like the character too. I hope this isn't boring, I can probably do this more often with others. >.<

Here's a silly edit of Makoto smiling and winking at you, hope you have a good day.

It's just ideas and my way of thinking about him, it's okay if you don't agree with everything I wrote here.

#master detective archives: rain code#mdarc spoilers#rain code#makoto kagutsuchi#fanart#edit#art#my art#silly edit#anon ask#ask answered


Text

Engineering is Inherently Political

Okay, yea, seemingly loaded statement but hear me out.

In our current political climate (particularly in the Trump/post-Trump era ugh), the popular sentiment is that scientists and other academics are inherently political. So much of science gets politicized: climate change, abortion, gender “issues”, flat earth (!!), insert any scientific topic even if it isn’t very controversial and you can find some political discourse about it somewhere. However, if you were to ask people if they think that engineering is political, I would bet that 9 out of 10 people would say no. The popular perception of engineering is that it’s objective and non-political. Engineering, generally, isn’t very controversial.

I argue that these sentiments should switch.

At its base level, engineering is the application of science and math to solve problems. Tack on the fact that most people don’t really know what engineering is (hell, I couldn’t even really describe it until starting my PhD and studying that concept specifically). Not controversial, right? We all want to solve the world’s problems and make the world a better place and engineers fill that role! But the best way to solve any problem is a subjective issue; no two people will fully agree on the best way to approach or solve a problem.

Why do we associate science and scientists with controversy but engineers with objectivity? Scientists study what is. It’s a scientist’s job to understand our world. Physicists understand how the laws of the universe work, biologists explore everything in our world that lives, doctors study the human body and how it works, environmental scientists study the Earth and its health, I could go on. My point is that scientists discover and tell us what is. Why do we politicize and fear monger about smart people telling us what they discover about the world?

Engineering, however, has a reputation for being logical, objective, result oriented. Which I get, honestly. It’s appealing to believe that the people responsible for designing and building our world are objective and, for the most part, they are. But this is a much more nuanced topic once you think deeper about it.

For example, take my discipline, aerospace engineering. On the surface, how to design a plane or a rocket isn’t subjective. Everyone has the same goal: get people and things from place to place without killing them (yea I bastardized my discipline a bit but that’s basically all it boils down to). Let’s think a little deeper about the implications though. Let’s say you work for a spacecraft manufacturer and let’s hypothetically call it SpaceX. Your rocket is so powerful that during takeoff it destroys the launchpad. That’s an expensive problem so you’re put on the team of engineers dedicated to solving it. The team decides that the most effective and least expensive solution is to spray water onto the rocket and launchpad during takeoff. This solution works great! The launchpad stays intact throughout the launch and the company saves money. However, that water doesn’t disappear after launch, and now it’s contaminated with chemicals used in and on the rocket. Now contaminated water flows into the local environment, affecting not just the wildlife but also the water supply of the local community. Who is responsible for solving that issue? Do we now need a team of environmental or chemical engineers to solve this new problem caused by the aerospace engineers?

Yes, engineers solve problems, but they also cause problems.

Every action has its reaction. Each solution has its repercussions.

As engineers we possess some of the most dangerous information in the world and are armed with the weapon to utilize it, our minds. Aerospace engineers know how to make missiles, chemical engineers know how to make bombs, computer scientists know how to control entire technological ecosystems. It’s very easy for an engineer to hurt people, and many do. I’m not exempt from this. I used to work for a military contractor, and I still feel pretty guilty about the implications of the problems that I solved. It is an engineer’s responsibility to act and use their knowledge ethically.

Ethical pleas aside, let’s get back to the topic at hand.

Engineering is inherently political. The goal of modern engineering is to avert catastrophe, tackle societal problems, and increase prosperity. If you disagree don’t argue with me, argue with the National Academy of Engineering. It is an engineer’s responsibility to use their knowledge to uplift the world and solve societal problems, that sounds pretty political to me!

An engineer doesn’t solve a problem in a vacuum. Each problem exists within the context of the situation that caused it as well as the society surrounding that situation. An engineer must consider the societal implications of their solutions and designs and aim to uplift that society through their design and solution to the problem. You can’t engineer within a society without considering the social implications of both the problem and the solution. Additionally, the social implications of those engineering decisions affect different people in different ways. It’s imperative to be aware and mindful of the social inequality between demographics of people affected by both the solution and the problem. For example, our SpaceX company could be polluting the water supply of a poor community that has neither the resources to solve the problem nor the power or influence to confront our multi-billion-dollar company. Now, a multi-billion-dollar company is advancing society and making billions of dollars at the cost of thousands of lives that already struggle due to their social standing in the world. Now the issue has another layer: those without money are consistently subject to the whims of those with money. Unfortunately, this is a step of ethical thought that many engineers don’t tend to take.

Engineers control our world. Engineers decide which problems to solve and how best to solve them. Engineers control who is impacted by those solutions. Engineers have the power to either protect and lift up the marginalized or continue to marginalize them. Those who control the engineers control the world. This is political. This is a social issue.

Now look me in the eyes and tell me that engineering isn’t inherently political.

#i feel so strongly about this oh my god#please free me from this prison#im just screaming into the void at this point#engineering#engineers#phdjourney#phdblr#phd student#grad school#academic diary#PhD


Text

The Trump administration has replaced Covid.gov – a website that once provided Americans with access to information about free tests, vaccines, treatment and secondary conditions such as long Covid – with a treatise on the “lab leak” theory.

The site includes intense criticism of Dr Anthony Fauci, who helmed national Covid policies under Donald Trump and Joe Biden, the World Health Organization (WHO) and state leadership in New York.

“This administration prioritizes transparency over all else,” according to a senior administration official quoted in Fox News, in spite of evidence to the contrary. “The American people deserve to know the truth about the Covid pandemic and we will always find ways to reach communities with that message.”