#OpenCL

Explore tagged Tumblr posts

Text

AMD Ryzen 7 8700G APU Zen 4 & Polaris Wonders!

AMD Ryzen 7 8700G APU The company formidable main processing unit (APU) with Zen 4 framework and Polaris designs, the AMD Ryzen 7 processor 8700G

The conclusions of the assessments for the Ryzen 5 processor from AMD 8600G had previously revealed this morning, and now some of the most recent measurements from the Ryzen 7 8700G APU graph G have been released made public. Among AMD’s Hawk A point generation of advanced processing units (APUs), the upcoming Ryzen 7 8700G APU will represent the top of the lineup of the The AM5 series desktops APU. That is going to have an identical blend of Zen 4 and RDNA 3 cores in a single monolithic package.

Featuring 16 MB of L3 memory cache and 8 megabytes of L2 cache, the aforementioned AMD Ryzen 7 8700G APU features a total of 8 CPU cores and a total of 16 threads built onto it. It is possible to quicken the clock to 5.10 GHz from its base frequency of 4.20 GHz. A Radeon 780M based on RDNA 3 with 12 compute units and a clock speed of 2.9 GHz is included in the integrated graphics processing unit (GPU). It is anticipated that future Hawk Point APUs would have support for 64GB DDR5 modules, which will allow for a maximum of 256GB of DRAM capacity to be used on the AM5 architecture.

The study ASUS TUF Extreme X670E-PLUS wireless internet chipset with 32GB of DDR5 4800 RAM was used for the performance tests that were carried out. Because of this design, it is anticipated that the performance would be somewhat reduced. The Hawk Point APUs and the AM5 platform are both compatible with faster memory modules, which may lead to improved performance. This is made possible by the greater bandwidth that is advantageous to the integrated graphics processing unit (iGPU).

The AMD Ryzen 7 8700G “Hawk Point” APU was able to reach a performance of 35,427 points in the Vulkan benchmark, while it earned 29,244 points in the OpenCL benchmark. With the Ryzen 5 8600G equipped with the Radeon 760M integrated graphics processing unit, this results in a 15% improvement in Vulkan and an 18% increase in OpenCL. The 760M integrated graphics processing unit (iGPU) has only 8 compute units, but the AMD 780M has 12 compute units.

In spite of the fact that the 760M integrated graphics processing unit (iGPU) has faster DDR5 6000 memory, performance does not seem to rise linearly whenever there are fifty percent more cores. It would seem that this is the maximum performance that the Radeon IGPs are capable of. The results of future testing, particularly those involving overclocking, will be fascinating. However, the Meteor Lake integrated graphics processing units (iGPUs) might be improved with better quality memory configurations (LPDDR5x).

With the debut of the AM5 “Hawk Point” APUs at the end of January, it is anticipated that the RDNA 3 chips would provide increased performance for the integrated graphics processing unit (iGPU). At AMD’s next CES 2024 event, it is anticipated that further details will be discussed and revealed.

Read more on Govindhtech.com

2 notes

·

View notes

Text

I think I figured out why GNU Backgammon's evaluations have been so stubbornly slow, even despite all of my rewriting, refactoring, and optimizing.

On a whim, I tried turning the "evaluation threads" counter in the options menu all the way down to 1 (from the two dozen or so I had it set at before)... yet the performance / evaluation time was completely identical. I dug a little deeper, and everything I've found thus far has confirmed my suspicion:

The evaluations are all being performed one at a time, in serial.

On one hand, really? Fucking REALLY? I get that this codebase has all the structure and maintainability of a mud puddle, and that the developers are volunteers, but this is egregious!

On the other hand, this will make improving the engine's performance yet further a much simpler task. No need to break out OpenCL if plain ol' threads aren't being properly utilized, heheh.

#backgammon#programming#txt#I might end up using OpenCL anyway#Imagine being able to get near-instant 6-ply and 7-ply analysis...!#XG's days are numbered

1 note

·

View note

Text

scarlets linux misadventures episode 1

attempting to install amd gpu drivers and opencl to edit videos

"why cant you find this package my little zenbook"

"you need to install these other 10 things first and then manually install the latest version of amdgpu-install directly from the repo because for some reason amd does not list the latest version that is for ubuntu 24 at all."

"and then it will work?"

👁️👄👁️

14 notes

·

View notes

Text

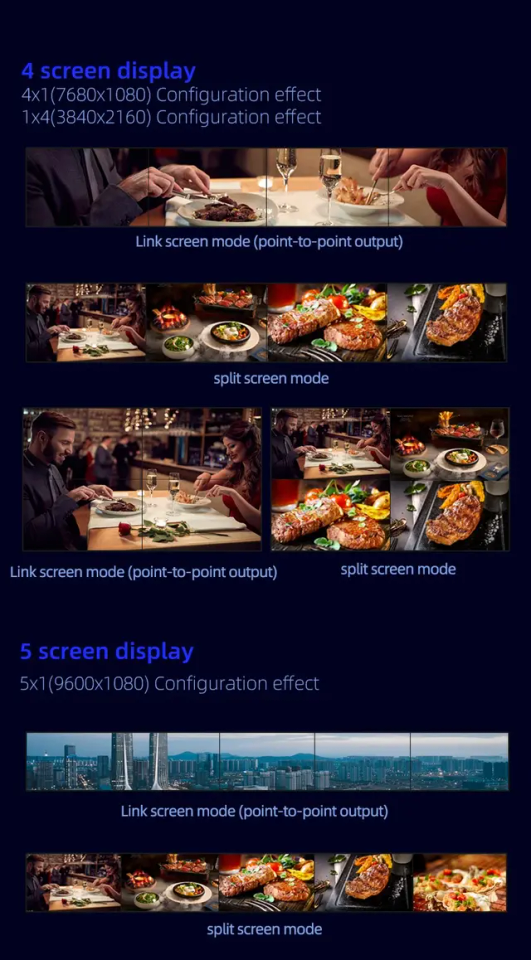

High-Performance AI Edge Computing Box with RK3588 | 8K Output, Multi-Screen Support, and AI Algorithms

Features: - Advanced AI Processing: Equipped with RK3588 (6TOPS) NPU for AI edge computing, supporting object recognition, facial recognition, and more. - 8K Video Output: Supports 8K@60FPS H.265/H.264/VP9 video encoding and decoding with six HDMI 2.0 outputs for high-definition display. - Multi-Screen Support: Allows up to six screens for seamless video wall or multi-display setups, perfect for retail, security, or industrial applications. - Rich Connectivity: Includes HDMI IN/OUT, dual gigabit Ethernet, Wi-Fi 6, USB 3.0, and more, ensuring robust connection options for diverse use cases. - AI Algorithm Compatibility: Supports popular AI frameworks such as OpenCV, TensorFlow, PyTorch, Caffe, and YOLO, with extensive SDK and API for easy integration. Specifications: - CPU: 8-core 64-bit architecture (4x Cortex-A76 + 4x Cortex-A55) - GPU: Mali-G610 MC4, supports OpenGL ES 1.1/2.0/3.2, OpenCL 2.0, Vulkan 1.1 - NPU: Neon and FPU, up to 6TOPs - Memory: DDR4 4GB (default) - Storage: EMMC 32GB, expandable via USB - Video Decode: 8K60FPS, H.265/H.264/VP9 codecs - Video Output: 6x HDMI 2.0 (7680x4320 resolution) - Audio: HDMI audio, 1x microphone input, 1x speaker output - Ports: 1x USB 3.0 host, 1x USB 3.0 OTG, 4x USB 2.0 - Network: 2x 10/100/1000M adaptive Ethernet ports, optional 3G/4G module - Wi-Fi: 2.4GHz/5GHz support - Bluetooth: 4.0 (supports BLE) - Operating System: Android 12 - Software: Visualized multimedia display system for Android

Read the full article

1 note

·

View note

Text

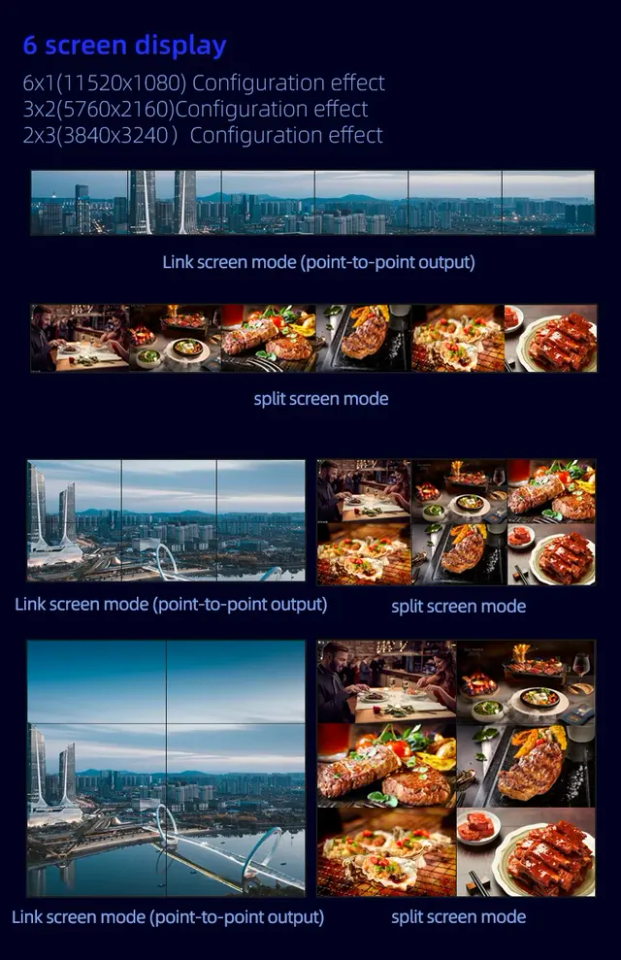

GPU Servers: Powering High-Performance Computing

In the era of AI, machine learning, and advanced data processing, GPU (Graphics Processing Unit) servers have become indispensable. Unlike traditional CPU-based servers, GPU servers are designed to handle parallel processing tasks with exceptional speed and efficiency.

At CloudMinister Technologies, we provide cutting-edge GPU server solutions tailored for industries like AI, deep learning, scientific research, and high-performance computing (HPC).

In this blog, we’ll explore what GPU servers are, their benefits, use cases, and best practices for deployment.

What is a GPU Server?

A GPU server is a high-performance computing system equipped with multiple GPUs (such as NVIDIA Tesla, A100, or AMD Instinct) to accelerate complex computations. While CPUs handle sequential tasks, GPUs excel at parallel processing, making them ideal for:

AI & Machine Learning (Training neural networks)

Big Data Analytics (Real-time data processing)

Scientific Simulations (Climate modeling, bioinformatics)

3D Rendering & Video Processing (Animation, VFX, game development)

Cryptocurrency Mining (Blockchain computations)

Why Choose GPU Servers Over Traditional CPU Servers?

Key Benefits of GPU Servers

1. Unmatched Computational Speed

GPUs can process thousands of threads simultaneously, drastically reducing training times for AI models.

2. Scalability for AI & Deep Learning

Train complex neural networks faster.

Support frameworks like TensorFlow, PyTorch, and CUDA.

3. Cost-Effective for High-Performance Workloads

Fewer servers needed compared to CPU clusters.

Reduced power consumption per computation.

4. Real-Time Data Processing

Ideal for autonomous vehicles, financial modeling, and medical imaging.

5. Enhanced Graphics & Rendering

Accelerates 3D modeling, video editing, and game development.

Top Use Cases of GPU Servers

1. Artificial Intelligence & Machine Learning

Faster training of ChatGPT-like models, computer vision, and NLP.

Supports LLM (Large Language Model) fine-tuning.

2. Scientific Research & HPC

Weather forecasting, quantum computing, and genomic sequencing.

3. Media & Entertainment

4K/8K video rendering, VR/AR content creation.

4. Healthcare & Biotechnology

Drug discovery, MRI analysis, and protein folding (like Folding@Home).

5. Financial Modeling & Blockchain

Algorithmic trading, risk analysis, and crypto mining.

Best Practices for Deploying GPU Servers

1. Choose the Right GPU for Your Workload

NVIDIA H100/A100 – Best for AI & deep learning.

AMD MI300X – Optimized for HPC & cloud workloads.

NVIDIA RTX 5000 – Ideal for graphics & rendering.

2. Optimize Cooling & Power Supply

GPUs generate significant heat—ensure liquid cooling or advanced airflow.

Use high-efficiency PSUs (80+ Platinum/Titanium).

3. Use GPU-Accelerated Software

Leverage CUDA, OpenCL, ROCm for maximum performance.

Deploy containerized GPU workloads (Docker + Kubernetes).

4. Monitor Performance & Utilization

Tools like NVIDIA DCGM, Grafana, and Prometheus help track GPU health.

5. Consider Cloud vs. On-Premises GPU Servers

Cloud GPUs (AWS EC2 P4/P5, Azure NDv5) – Flexible, pay-as-you-go.

On-Prem/Dedicated GPU Servers – Full control, lower latency.

Why Choose CloudMinister for GPU Server Solutions?

At CloudMinister Technologies, we provide: Custom GPU Server Configurations (NVIDIA/AMD) AI/ML-Optimized GPU Clusters Cloud & Hybrid GPU Deployments 24/7 Monitoring & Support Energy-Efficient Data Center Solutions

Final Thoughts

GPU servers are revolutionizing industries by enabling faster computations, AI breakthroughs, and real-time analytics. Whether you're a startup exploring AI or an enterprise running HPC workloads, choosing the right GPU infrastructure is critical.

0 notes

Text

ECE 3270: Laboratory 2: OPENCL LIBRARIES

Lab 2 introduces the student to libraries for OpenCL. The student familiarized themselves with the concept of Libraries for OpenCL. The laboratory was divided into two parts. Part 1 involved designing a 16-bit ripple carry adder in VHDL and generating a test bench to test the functionality of the same. Part 2 involved creating a new entity and instantiating a component of the ripple adder within…

0 notes

Text

China's first 6nm gaming GPU matches 13-year-old GTX 660 Ti in first Geekbench tests — Lisuan G100 surfaces with 32 CUs, 256MB VRAM, and 300 MHz clock speed

The first traces of the Lisuan G100, remarked as China’s first domestically produced 6nm GPU, have surfaced on Geekbench. In an OpenCL benchmark, the G100 is accompanied by an AMD Ryzen APU in Windows 10, though the performance isn’t remarkable. Since this is an early result from developing drivers and silicon, we shouldn’t consider this single benchmark as reflective of the GPU’s potential…

0 notes

Text

Intel’s oneAPI 2024 Kernel_Compiler Feature Improves LLVM

Kernel_Compiler

The kernel_compiler, which was first released as an experimental feature in the fully SYCL2020 compliant Intel oneAPI DPC++/C++ compiler 2024.1 is one of the new features. Here’s another illustration of how Intel advances the development of LLVM and SYCL standards. With the help of this extension, OpenCL C strings can be compiled at runtime into kernels that can be used on a device.

For offloading target hardware-specific SYCL kernels, it is provided in addition to the more popular modes of Ahead-of-Time (AOT), SYCL runtime, and directed runtime compilation.

Generally speaking, the kernel_compiler extension ought to be saved for last!

Nonetheless, there might be some very intriguing justifications for leveraging this new extension to create SYCL Kernels from OpenCL C or SPIR-V code stubs.

Let’s take a brief overview of the many late- and early-compile choices that SYCL offers before getting into the specifics and explaining why there are typically though not always better techniques.

Three Different Types of Compilation

The ability to offload computational work to kernels running on another compute device that may be installed on the machine, such as a GPU or an FPGA, is what SYCL offers your application. Are there thousands of numbers you need to figure out? Forward it to the GPU!

Power and performance are made possible by this, but it also raises more questions:

Which device are you planning to target? In the future, will that change?

Could it be more efficient if it were customized to parameters that only the running program would know, or do you know the complete domain parameter value for that kernel execution? SYCL offers a number of choices to answer those queries:

Ahead-of-Time (AoT) Compile: This process involves compiling your kernels to machine code concurrently with the compilation of your application.

SYCL Runtime Compilation: This method compiles the kernel while your application is executing and it is being used.

With directed runtime compilation, you can set up your application to generate a kernel whenever you’d want.

Let’s examine each one of these:

1. Ahead of Time (AoT) Compile

You can also precompile the kernels at the same time as you compile your application. All you have to do is specify which devices you would like the kernels to be compiled for. All you need to do is pass them to the compiler with the -fsycl-targets flag. Completed! Now that the kernels have been compiled, your application will use those binaries.

AoT compilation has the advantage of being easy to grasp and familiar to C++ programmers. Furthermore, it is the only choice for certain devices such as FPGAs and some GPUs.

An additional benefit is that your kernel can be loaded, given to the device, and executed without the runtime stopping to compile it or halt it.

Although they are not covered in this blog post, there are many more choices available to you for controlling AoT compilation. For additional information, see this section on compiler and runtime design or the -fsycl-targets article in Intel’s GitHub LLVM User Manual.

SPIR-V

2. SYCL Runtime Compilation (via SPIR-V)

If no target devices are supplied or perhaps if an application with precompiled kernels is executed on a machine with target devices that differ from what was requested, this is SYCL default mode.

SYCL automatically compiles your kernel C++ code to SPIR-V (Standard Portable Intermediate form), an intermediate form. When the SPIR-V kernel is initially required, it is first saved within your program and then sent to the driver of the target device that is encountered. The SPIR-V kernel is then converted to machine code for that device by the device driver.

The default runtime compilation has the following two main benefits:

First of all, you don’t have to worry about the precise target device that your kernel will operate on beforehand. It will run as long as there is one.

Second, if a GPU driver has been updated to improve performance, your application will benefit from it when your kernel runs on that GPU using the new driver, saving you the trouble of recompiling it.

However, keep in mind that there can be a minor cost in contrast to AoT because your application will need to compile from SPIR-V to machine code when it first delivers the kernel to the device. However, this usually takes place outside of the key performance route, before parallel_for loops the kernel.

In actuality, this compilation time is minimal, and runtime compilation offers more flexibility than the alternative. SYCL may also cache compiled kernels in between app calls, which further eliminates any expenses. See kernel programming cache and environment variables for additional information on caching.

However, if you prefer the flexibility of runtime compilation but dislike the default SYCL behavior, continue reading!

3. Directed Runtime Compilation (via kernel_bundles)

You may access and manage the kernels that are bundled with your application using the kernel_bundle class in SYCL, which is a programmatic interface.

Here, the kernel_bundle techniques are noteworthy.build(), compile(), and link(). Without having to wait until the kernel is required, these let you, the app author, decide precisely when and how a kernel might be constructed.

Additional details regarding kernel_bundles are provided in the SYCL 2020 specification and in a controlling compilation example.

Specialization Constants

Assume for the moment that you are creating a kernel that manipulates an input image’s numerous pixels. Your kernel must use a replacement to replace the pixels that match a specific key color. You are aware that if the key color and replacement color were constants instead of parameter variables, the kernel might operate more quickly. However, there is no way to know what those color values might be when you are creating your program. Perhaps they rely on calculations or user input.

Specialization constants are relevant in this situation.

The name refers to the constants in your kernel that you will specialize in at runtime prior to the kernel being compiled at runtime. Your application can set the key and replacement colors using specialization constants, which the device driver subsequently compiles as constants into the kernel’s code. There are significant performance benefits for kernels that can take advantage of this.

The Last Resort – the kernel_compiler

All of the choices that as a discussed thus far work well together. However, you can choose from a very wide range of settings, including directed compilation, caching, specialization constants, AoT compilation, and the usual SYCL compile-at-runtime behavior.

Using specialization constants to make your program performant or having it choose a specific kernel at runtime are simple processes. However, that might not be sufficient. Perhaps all your software needs to do is create a kernel from scratch.

Here is some source code to help illustrate this. Intel made an effort to compose it in a way that makes sense from top to bottom.

When is It Beneficial to Use kernel_compiler?

Some SYCL users already have extensive kernel libraries in SPIR-V or OpenCL C. For those, the kernel_compiler is a very helpful extension that enables them to use those libraries rather than a last-resort tool.

Download the Compiler

Download the most recent version of the Intel oneAPI DPC++/C++ Compiler, which incorporates the kernel_compiler experimental functionality, if you haven’t already. Purchase it separately for Windows or Linux, via well-known package managers only for Linux, or as a component of the Intel oneAPI Base Toolkit 2024.

Read more on Govindhtech.com

#oneAPI#Kernel_Compiler#LLVM#InteloneAPI#SYCL2020#SYCLkernels#FPGA#SYC#SPIR-Vkernel#OpenCL#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

1 note

·

View note

Video

youtube

[...]

Quan sát thứ tư, có thể là còn phải tranh cãi.

Cái chính đối với thầy giáo không phải dạy cho người ta cái gì đó, mà là ngăn chặn sai lầm. Tôi thấy trước rằng sẽ có người phản đối ý kiến này: Sao lại thế nhỉ, người học trò đến với thầy là để thu nhận kiến thức, học một cái gì đó kia mà? Đúng thế, nhưng chúng ta đã giao ước với nhau rồi kia mà, rằng sứ mạng của học trò không phải chỉ để tiếp thu kinh nghiệm và và truyền kinh nghiệm ấy cho người khác, mà còn là để nhân nó lên nữa.

Các sai lầm, hiểu theo nghĩa rộng, không thể không mắc phải, nhất là trong tìm kiếm khoa học, những sự lầm lạc là cần thiết, không thế thì không tìm ra chân lý đâu. Nhưng điều quan trọng là ta cần phải biết phát hiện và vượt qua chúng. Có một kiểu dũng cảm đặc biệt, dũng cảm bảo vệ tính khách quan hay có khả năng nói rằng: “Tôi đã không đúng”. Thứ dũng cảm ấy cần cho tất cả, dù người đó có chọn bất kì ngành chuyên môn nào đi chăng nữa. Nhưng lòng dũng cảm ấy chỉ đến đồng thời với học vấn. Một người không biết cái gì khác ngoài cái do chính anh ta làm ra, không thể khiến người khác bị thuyết phục khi nói rằng: “Tôi đã làm không tốt, tôi đã không đúng”.

Nhưng ngay đây có một đường ranh giới mà mắt thường không nhìn thấy. Ranh giới giữa sự hoài nghi và lòng tin vững chắc. Sự quá tin dẫn tới mất tính khách quan. Quá hoài nghi cũng ngăn chặn công việc sáng tạo. Anh ta có thể suốt đời tự bảo mình: “Tôi đã làm không tốt, tôi đã không đúng”, mà vẫn không tặng cho nhân loại một tuyệt tác n��o.

Bởi thế cái chính đối với người thầy không chỉ đơn thuần có việc “nhồi nhét” các tri thức cho học trò, không phải chỉ dạy làm việc, mà còn phải ngăn ngừa khuyết điểm, sai lầm, sự quá tin, quá hoài nghi. Thầy phải đánh giá khách quan kết quả công việc của học trò. Norbert Wiener đã viết những dòng rất hay để minh họa ý này, rằng sự sáng tạo chân chính là sự cắt bỏ cái thừa. Có thể dạy cho một đàn khỉ đánh máy chữ. Chúng có thể gõ lung tung vào máy và đánh hết một núi giấy. Giữa những dòng chữ vô nghĩa ấy có thể sẽ có cả lời thoại từ vở “Hamlet” của Shakespeare. Nhưng phải cần đến Shakespeare để gạt bỏ những chữ thừa và lúc đó mới còn lại lời của vở bi kịch bất hủ ấy.

[...]

Hãy bám sát mục tiêu - Tôi hay không phải tôi - R.V Petrov - 1983

***

Ờ. OpenCL đang là thương hiệu của Apple và lý do tại sao Apple không chuyển quyền sở hữu nó cho Khronos Group dù đã ngừng phát triển và loại bỏ thì chịu. À mà kể ra thì cái này giống một thư viện hơn bởi đây là C chuẩn kèm từ khóa mở rộng chứ không buộc phải sử dụng cú pháp khác như Go từ Google và thực tế thì C++ cũng là cách viết tốt. Điểm khó nhất lúc này là cần viết lại các bài toàn đang được giải theo hướng tuần tự (Sequential) sang đồng thời (Concurrency) và/hoặc song song (Parallelism). Tuy nhiên, ngay việc phân biệt thế nào là đồng thời với thế nào là song song đã tốn khá nhiều sức để nghĩ thì con đường cách mạng còn lắm gian truân. *Apple bỏ rơi còn Nvidia và AMD thì ưu tiên gà nhà là CUDA và HIP nên hóa ra Intel là bên nghiêm túc nhất với OpenCl khi chạy mã OpenCl trên các GPU Intel gặp khá ít lỗi. Yes. Với thư viện PoCL, ta cũng có thể chuyển các CPU đời cũ thành các Đơn vị tính toán (CUs) của hệ thống lớn hơn*

*Cho bạn nào có hứng thú* Điện toán hỗn tạp (Heterogeneous computing) là mô hình tính toán sử dụng hệ thống được tạo ra bởi nhiều loại phần cứng tính toán khác nhau như CPU, GPU, DSP, ASIC, FPGA, hay NPU. Và bằng cách phân bổ các tác vụ thích hợp, năng lực tính toán sẽ tăng lên trong khi vẫn làm giảm năng lượng tiêu thụ bởi cả hệ thống. Hiện tại, một chương trình điện toán hỗn tạp bao gồm hai phần là Host Context và Device Context. Trong khi mã Host Conext chỉ chạy trên CPU chính và có thể được viết bằng bất kì ngôn ngữ nào thì mã trong Device Context chạy trên các thiết bị tăng tốc cần được viết bằng ngôn ngữ mà thư viện thực thi (runtime library) hỗ trợ ví dụ OpenCL là C và C++ thì HIP chỉ là C++ còn CUDA có thêm Fortran và cả Python với Java khi bổ sung các thư viện cần thiết. Tuy nhiên, hiện cả CUDA lẫn HIP lại chỉ chạy trên mỗi GPU và đây là chỗ bạn có thể sử dụng bất kì ngôn ngữ nào để viết mã Device Context aka làm kernel language cho OpenCl, miễn là sau đó mã được biên dịch sang SPIR-V :D

***

Nào. Trước khi tranh luận xem VNCH có là quốc gia không thì chúng ta cần thống nhất xem một quốc gia thì gồm những gì hay dễ hơn là đâu là giới hạn sử dụng của từ quốc gia. Ví dụ Úc là một quốc gia ở trong Vương quốc Thịnh vương chung (Anh) và cái này là một quốc gia có chủ quyền (a sovereign state) trong khối Thịnh vượng chung Anh. *Bản sắc là sai nhiều nên các bạn cứ kệ mấy cái như thế này đi*

Ồ. Dịch proletariat thành vô sản thì hợp lý bởi người Anh, người Pháp và người Đức cũng đã và đang dùng proletariat với nghĩa y như vậy! Vấn đề là hai cụ Karl Marx và Friedrich Engels lại không dùng proletariat với nghĩa cụ thể như thế mà dùng nó để chỉ bất kì ai có nguồn thu nhập chính từ lao động chứ không phải từ sở hữu vốn, tức họ có thể dư tài sản để tích lũy như các công nhân có thu nhập cao a.k.a các quý tộc lao động - labor aristocracy hoặc aristocracy of labour như chữ dùng trong cuốn Tư bản luận - và người kinh doanh nhỏ a.k.a tiểu thương. Thành ra proletariat = vô sản + tiểu tư sản = bình dân = 平民 hay là worker như bản dich khẩu hiệu: ‘Proletarier aller Länder, vereinigt Euch!’ sang tiếng Anh mới đúng ý các cụ. Nhân tiện thì trong các nước đồng văn, Nhật Bản là quốc gia duy nhất có tầng lớp quý tộc ra môn ra khoai. Được gọi là Kazoku = 華族 = Hoa tộc và được thiết lập theo mô hình quý tộc Anh Quốc nói riêng và châu Âu cận đại nói chung, tầng lớp này là trung tâm vận hành của Zaibatsu (財閥) - Thứ đã đem lại sức mạnh cho Đế quốc Nhật bản từ Minh Trị Duy tân đến Thể chiến thứ 2! :D

0 notes

Text

Software Development Engineer - HIP/OpenCL Runtime

_ Responsibilities: THE ROLE: AMD is looking for an influential software engineer who is passionate about improving the performance… and will work with the very latest hardware and software technology. THE PERSON: The ideal candidate should be passionate… Apply Now

0 notes

Video

youtube

oneplus pad 3 vs Xiaomi pad 7 | xiaomi pad 7 vs one plus pad 3

OnePlus Pad 3 vs Xiaomi Pad 7 | Which Tablet Should You Buy in 2025? OnePlus Pad 3 vs Xiaomi Pad 7 – the ultimate 2025 tablet face-off! Searching for the best tablet for gaming, productivity, or streaming? We compare these mid-range beasts to help you pick the perfect one. In-depth pros and cons for gaming, multitasking, and media. India pricing (~₹27,999–₹39,999) and global availability. Ideal for searches like Android tablets 2025, best tablets under ₹40,000, or gaming tablets. * OnePlus Pad 3 Specifications Display: 13.2-inch IPS LCD, 3.4K (3400x2264), 144Hz, 900 nits, Dolby Vision Processor: Qualcomm Snapdragon 8 Elite (8-core, up to 4.32GHz, Adreno 830 GPU) RAM/Storage: 12GB/256GB, 16GB/512GB (LPDDR5X, UFS 4.0) Battery: 12,140mAh, 80W fast charging Cameras: 13MP rear (4K video), 8MP front OS: OxygenOS 15 (Android 15) Price: ~₹39,999 (India, 12GB+256GB, estimated via Smartprix) Other: Quad speakers, Dolby Atmos, stylus support (OnePlus Stylo 2, ₹4,999), Mac/iPhone sync Pros: Massive 13.2-inch 3.4K display, perfect for movies and multitasking. Snapdragon 8 Elite crushes AAA games (e.g., Genshin Impact at 120 FPS, AnTuTu: ~2,849,493). Huge 12,140mAh battery (~14–16 hours) with 80W charging (0–100% in ~75 minutes). Clean OxygenOS, minimal bloat, supports Open Canvas for productivity. Unique iPhone/Mac compatibility for cross-device users. Cons: Higher price (~₹39,999) compared to Xiaomi’s budget-friendly option. Bulkier design (13.2-inch) may feel less portable. Accessories (keyboard ~₹7,999, stylus) add to cost. No headphone jack, unlike some competitors. Xiaomi Pad 7 Specifications Display: 11.2-inch IPS LCD, 3.2K (3200x2136), 144Hz, 800 nits, Dolby Vision Processor: Qualcomm Snapdragon 7+ Gen 3 (8-core, up to 2.8GHz, Adreno 732 GPU) RAM/Storage: 8GB/128GB, 12GB/256GB (LPDDR5X, UFS 4.0) Battery: 8,850mAh, 45W fast charging Cameras: 13MP rear, 8MP front (with LED privacy light) OS: HyperOS 2 (Android 15) Price: ~₹27,999 (India, 8GB+128GB, Smartprix) Other: Quad speakers, Dolby Atmos, stylus support (Focus Pen, ₹5,999) Pros: Sharper 3.2K display (345 PPI) with 800 nits, great for outdoor visibility. Snapdragon 7+ Gen 3 handles gaming well (90 FPS in PUBG, Geekbench: 1,900 single-core). Budget-friendly at ~₹27,999, excellent value for money. Fast UFS 4.0 storage speeds up apps and editing. HyperOS 2 offers AI tools (e.g., Mi Canvas for sketching). Cons: Smaller 8,850mAh battery (~10–12 hours) vs. OnePlus’ longer runtime. HyperOS has bloatware, less streamlined than OxygenOS. Slower 45W charging (~100 minutes to full). Comparison Highlights Performance: OnePlus Pad 3’s Snapdragon 8 Elite dominates with flagship-grade gaming and multitasking (OpenCL: 18,461 vs. Xiaomi’s 12,500). Xiaomi’s Snapdragon 7+ Gen 3 is solid for mid-range gaming. Display: Xiaomi’s 11.2-inch is sharper (345 PPI); OnePlus’ 13.2-inch is bigger for media. Battery: OnePlus lasts longer and charges faster; Xiaomi is adequate but lags. Software: OxygenOS is cleaner; HyperOS is feature-rich but cluttered. Who Should Buy Which Tablet? Buy OnePlus Pad 3 If: You’re a gamer or professional needing top-tier performance for AAA games (e.g., CODM, Genshin Impact) or heavy multitasking. You prioritize battery life and fast charging for all-day use (~₹39,999). Ideal for: Power users, content creators, or Apple ecosystem users. Buy Xiaomi Pad 7 If: You’re a budget-conscious gamer or student seeking strong gaming performance (90 FPS) under ₹30,000. one plus pad 3 vs Xiaomi pad 7 | xiaomi pad 7 vs one plus pad 3 which tablet you should buy? one plus pad 3 specifications one plus pad 3 specs one plus pad 3 price in INdia one plus pad 3 price one plus pad 3 oneplus pad 3 oneplus pad 3 unboxing oneplus pad 3 release date oneplus pad 3 review oneplus pad 3 leaks oneplus pad 3 launch date in india oneplus pad 3 pro oneplus pad 3 pubg test oneplus pad 3r oneplus pad 3 vs xiaomi pad 7 oneplus pad 3 upcoming one plus pad 3 launch date in india oneplus pad 3 launch date oneplus pad 3 price oneplus pad 3 price in india #jatintechtalks OnePlue Pad 3 Unboxing | Price in UK | Depth Review | Release Date OnePlus Pad 3 & Pad 3 Pro - Official Look | Price | India Launch & all features in hindi one plus pad 3 one plus pad 3 unboxing one plus pad 3 release date one plus pad 3 review one plus pad 3 leaks one plus pad 3 launch date in india one plus pad 3 pro one plus pad 3 pubg test one plus pad 3r one plus pad 3 vs xiaomi pad 7 one plus pad 3 upcoming one plus pad 3 launch date in india one plus pad 3 launch date Investigations by Kevin MacLeod is licensed under a Creative Commons Attribution 4.0 licence. https://creativecommons.org/licenses/by/4.0/

0 notes

Text

高通Snapdragon 7 Gen 4 行動平台導入圖像生成工具|榮耀、vivo 新機率先搭載

高通前幾天公開了中階機最新行動平台 –Snapdragon 7 Gen 4,除了效能提升、優化來自 Snapdragon Elite Gaming 驅動遊戲體驗之外,下放了來自8系列所支援的高通擴充個人區域網路(XPAN)技術,不過最大亮點是讓中階機也支援「機上AI」,可以運行生成式AI助理和 LLM 語言模型,並新增Stable Diffusion圖像生成工具,首波採用該平台的新機包括榮耀、vivo等廠商,預計 5 月發表。 從技術規格來看,這組 4nm 製程的 Snapdragon 7 Gen 4行動平台採用的Kryo CPU 為 1(2.8GHz)+4(2.4GHz)+3(1.8GHz) 的架構,相較前代 CPU 運算效能提升 27%,GPU 效能提升 30%,支援 HDR 10+、OpenGL ES 3.2, OpenCL 2.0 FP, Vulkan 1.3…

0 notes

Text

Install Davinci Resolve in any Linux distro

New Post has been published on https://tuts.kandz.me/install-davinci-resolve-in-any-linux-distro/

Install Davinci Resolve in any Linux distro

youtube

on host sudo usermod -a -G render,video $LOGNAME install distrobox and podman sudo zypper in podman distrobox or sudo apt install -y podman distrobox or sudo dnf install -y podman distrobox or sudo pacman -S -y podman distrobox reboot go url to download https://www.blackmagicdesign.com/no/p... create a Fedora 40 container and enter it distrobox create distrobox create --name fedora40 --image registry.fedoraproject.org/fedora:40 distrobox enter fedora40 install dependencies sudo dnf install fuse fuse-devel alsa-lib \ apr apr-util dbus-libs fontconfig freetype \ libglvnd libglvnd-egl libglvnd-glx \ libglvnd-opengl libICE librsvg2 libSM \ libX11 libXcursor libXext libXfixes libXi \ libXinerama libxkbcommon libxkbcommon-x11 \ libXrandr libXrender libXtst libXxf86vm \ mesa-libGLU mtdev pulseaudio-libs xcb-util \ xcb-util-image xcb-util-keysyms \ xcb-util-renderutil xcb-util-wm \ mesa-libOpenCL rocm-opencl libxcrypt-compat \ alsa-plugins-pulseaudio unzip it. Change the name with the version you have downloaded, and the location you have downloaded it. cd ~/Downloads unzip DaVinci_Resolve_Studio_19.1.3_Linux run the DaVinci Resolve installer. Change the name with the version you have downloaded chmod +x DaVinci_Resolve_Studio_19.1.3_Linux.run sudo ./DaVinci_Resolve_Studio_19.1.3_Linux.run --appimage-extract sudo SKIP_PACKAGE_CHECK=1 ./squashfs-root/AppRun apply workaround for outdated libraries sudo mkdir /opt/resolve/libs/disabled sudo mv /opt/resolve/libs/libglib* /opt/resolve/libs/disabled sudo mv /opt/resolve/libs/libgio* /opt/resolve/libs/disabled sudo mv /opt/resolve/libs/libgmodule* /opt/resolve/libs/disabled run DaVinci Resolve /opt/resolve/bin/resolve export desktop files distrobox-export --app resolve In case more problems cd /opt/resolve/libs sudo mkdir disabled sudo mv libglib* disabled sudo mv libgio* disabled sudo mv libgmodule* disabled

0 notes

Photo

🚀 Curious about the future of graphics tech? Nvidia's RTX Pro 6000 Blackwell is making waves with its 96GB powerhouse capabilities 💪. Though this new card was expected to outperform the GeForce RTX 5090, benchmarks reveal only a small 2.3% performance gap despite the hardware differences. Interestingly, this high-powered unit is still under testing and might see improvements once final drivers are released. The RTX Pro 6000’s advanced architecture offers superior performance in tasks like horizon and edge detection, showcasing Nvidia’s commitment to pushing the boundaries of professional visualization technology. The card's impressive CUDA core count and memory configuration position it as a significant player in professional settings. However, constraints in current testing conditions mean that some specifications, like the full memory capacity, are yet to be fully leveraged. 👌 With pre-release drivers in use, actual performance is expected to rise with final updates. This shines a light on Nvidia's proactive role in tech advancements. Do you believe this powerhouse card will redefine the ProViz landscape once fully optimized? Share your thoughts below! 🎨💻 #RTXPro6000 #Nvidia #Blackwell #GraphicsCard #TechInnovation #ProVisualization #FutureTechnology #GPU #TechNews #HighPerformanceComputing #VisualizationExperts #Benchmark #ProViz #CUDA #OpenCL #InnovationInTech #GraphicsTech

0 notes