#Lawrence Berkeley National Laboratory

The LP – Side One

Sales of vinyl records exceeded those of CDs last year for the first time since 1988, a timely revival of vinyl's fortunes as it celebrates its 75th anniversary along with the LP this year.

Ever since the release in Japan of Billy Joel’s 52nd Street on October 1, 1982, the first commercially available compact disc (CD), vinyl records have been locked in an existential struggle. Digital formats might offer a cleaner listening experience, be more convenient to store, and be well-nigh indestructible, but for many audiophiles a vinyl record feels more tangible and “alive” and is better at…

#52nd Street #Au clair de la lune #Billy Joel #Edouard-Leon Scott #Lawrence Berkeley National Laboratory #Mary Had a Little Lamb #Scientific American #the phonautograph #Thomas Edison

0 notes

The First Light of Trinity

— By Alex Wellerstein | July 16, 2015 | Annals of Technology

Seventy years ago, the flash of a nuclear bomb illuminated the skies over Alamogordo, New Mexico. Courtesy Los Alamos National Laboratory

The light of a nuclear explosion is unlike anything else on Earth. This is because the heat of a nuclear explosion is unlike anything else on Earth. Seventy years ago today, when the first atomic weapon was tested, they called its light cosmic. Where else, except in the interiors of stars, do the temperatures reach into the tens of millions of degrees? It is that blistering radiation, released in a reaction that takes about a millionth of a second to complete, that makes the light so unearthly, that gives it the strength to burn through photographic paper and wound human eyes. The heat is such that the air around it becomes luminous and incandescent and then opaque; for a moment, the brightness hides itself. Then the air expands outward, shedding its energy at the speed of sound—the blast wave that destroys houses, hospitals, schools, cities.

The test was given the evocative code name of Trinity, although no one seems to know precisely why. One theory is that J. Robert Oppenheimer, the head of the U.S. government’s laboratory in Los Alamos, New Mexico, and the director of science for the Manhattan Project, which designed and built the bomb, chose the name as an allusion to the poetry of John Donne. Oppenheimer’s former mistress, Jean Tatlock, a student at the University of California, Berkeley, when he was a professor there, had introduced him to Donne’s work before she committed suicide, in early 1944. But Oppenheimer later claimed not to recall where the name came from.

The operation was designated as top secret, which was a problem, since the whole point was to create an explosion that could be heard for a hundred miles around and seen for two hundred. How to keep such a spectacle under wraps? Oppenheimer and his colleagues considered several sites, including a patch of desert around two hundred miles east of Los Angeles, an island eighty miles southwest of Santa Monica, and a series of sand bars ten miles off the Texas coast. Eventually, they chose a place much closer to home, near Alamogordo, New Mexico, on an Army Air Forces bombing range in a valley called the Jornada del Muerto (“Journey of the Dead Man,” an indication of its unforgiving landscape). Freshwater had to be driven in, seven hundred gallons at a time, from a town forty miles away. To wire the site for a telephone connection required laying four miles of cable. The most expensive single line item in the budget was for the construction of bomb-proof shelters, which would protect some of the more than two hundred and fifty observers of the test.

The area immediately around the bombing range was sparsely populated but not by any means barren. It was within two hundred miles of Albuquerque, Santa Fe, and El Paso. The nearest town of more than fifty people was fewer than thirty miles away, and the nearest occupied ranch was only twelve miles away—long distances for a person, but not for light or a radioactive cloud. (One of Trinity’s more unusual financial appropriations, later on, was for the acquisition of several dozen head of cattle that had had their hair discolored by the explosion.) The Army made preparations to impose martial law after the test if necessary, keeping a military force of a hundred and sixty men on hand to manage any evacuations. Photographic film, sensitive to radioactivity, was stowed in nearby towns, to provide “medical legal” evidence of contamination in the future. Seismographs in Tucson, Denver, and Chihuahua, Mexico, would reveal how far away the explosion could be detected.

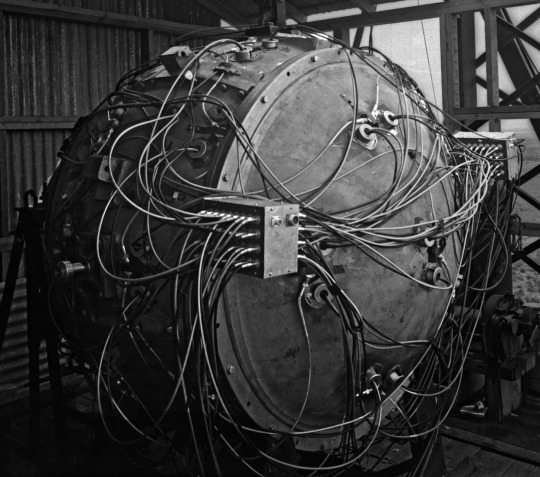

The Trinity test weapon. Courtesy Los Alamos National Laboratory

On July 16, 1945, the planned date of the test, the weather was poor. Thunderstorms were moving through the area, raising the twin hazards of electricity and rain. The test weapon, known euphemistically as the gadget, was mounted inside a shack atop a hundred-foot steel tower. It was a Frankenstein’s monster of wires, screws, switches, high explosives, radioactive materials, and diagnostic devices, and was crude enough that it could be tripped by a passing storm. (This had already happened once, with a model of the bomb’s electrical system.) Rain, or even too many clouds, could cause other problems—a spontaneous radioactive thunderstorm after detonation, unpredictable magnifications of the blast wave off a layer of warm air. It was later calculated that, even without the possibility of mechanical or electrical failure, there was still more than a one-in-ten chance of the gadget failing to perform optimally.

The scientists were prepared to cancel the test and wait for better weather when, at five in the morning, conditions began to improve. At five-ten, they announced that the test was going forward. At five-twenty-five, a rocket near the tower was shot into the sky—the five-minute warning. Another went up at five-twenty-nine. Forty-five seconds before zero hour, a switch was thrown in the control bunker, starting an automated timer. Just before five-thirty, an electrical pulse ran the five and a half miles across the desert from the bunker to the tower, up into the firing unit of the bomb. Within a hundred millionths of a second, a series of thirty-two charges went off around the device’s core, compressing the sphere of plutonium inside from about the size of an orange to that of a lime. Then the gadget exploded.

General Thomas Farrell, the deputy commander of the Manhattan Project, was in the control bunker with Oppenheimer when the blast went off. “The whole country was lighted by a searing light with the intensity many times that of the midday sun,” he wrote immediately afterward. “It was golden, purple, violet, gray, and blue. It lighted every peak, crevasse, and ridge of the nearby mountain range with a clarity and beauty that cannot be described but must be seen to be imagined. It was that beauty the great poets dream about but describe most poorly and inadequately.” Twenty-seven miles away from the tower, the Berkeley physicist and Nobel Prize winner Ernest O. Lawrence was stepping out of a car. “Just as I put my foot on the ground I was enveloped with a warm brilliant yellow white light—from darkness to brilliant sunshine in an instant,” he wrote. James Conant, the president of Harvard University, was watching from the V.I.P. viewing spot, ten miles from the tower. “The enormity of the light and its length quite stunned me,” he wrote. “The whole sky suddenly full of white light like the end of the world.”

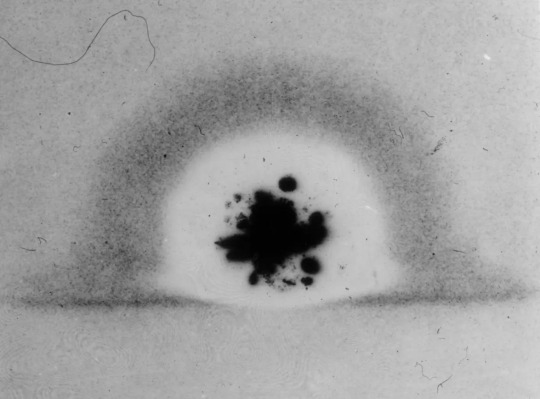

In its first milliseconds, the Trinity fireball burned through photographic film. Courtesy National Archives and Records Administration

Trinity was filmed exclusively in black and white and without audio. In the main footage of the explosion, the fireball rises out of the frame before the cameraman, dazed by the sight, pans upward to follow it. The written accounts of the test, of which there are many, grapple with how to describe an experience for which no terminology had yet been invented. Some eventually settle on what would become the standard lexicon. Luis Alvarez, a physicist and future participant in the Hiroshima bombing, viewed Trinity from the air. He likened the debris cloud, which rose to a height of some thirty thousand feet in ten minutes, to “a parachute which was being blown up by a large electric fan,” noting that it “had very much the appearance of a large mushroom.” Charles Thomas, the vice-president of Monsanto, a major Manhattan Project contractor, observed the same. “It looked like a giant mushroom; the stalk was the thousands of tons of sand being sucked up by the explosion; the top of the mushroom was a flowering ball of fire,” he wrote. “It resembled a giant brain the convolutions of which were constantly changing.”

In the months before the test, the Manhattan Project scientists had estimated that their bomb would yield the equivalent of between seven hundred and five thousand tons of TNT. As it turned out, the detonation force was equal to about twenty thousand tons of TNT—four times larger than the expected maximum. The light was visible as far away as Amarillo, Texas, more than two hundred and eighty miles to the east, on the other side of a mountain range. Windows were reported broken in Silver City, New Mexico, some hundred and eighty miles to the southwest. Here, again, the written accounts converge. Thomas: “It is safe to say that nothing as terrible has been made by man before.” Lawrence: “There was restrained applause, but more a hushed murmuring bordering on reverence.” Farrell: “The strong, sustained, awesome roar … warned of doomsday and made us feel that we puny things were blasphemous.” Nevertheless, the plainclothes military police who were stationed in nearby towns reported that those who saw the light seemed to accept the government’s explanation, which was that an ammunition dump had exploded.

Trinity was only the first nuclear detonation of the summer of 1945. Two more followed, in early August, over Hiroshima and Nagasaki, killing as many as a quarter of a million people. By October, Norris Bradbury, the new director of Los Alamos, had proposed that the United States conduct “subsequent Trinity’s.” There was more to learn about the bomb, he argued, in a memo to the new coördinating council for the lab, and without the immediate pressure of making a weapon for war, “another TR might even be FUN.” A year after the test at Alamogordo, new ones began, at Bikini Atoll, in the Marshall Islands. They were not given literary names. Able, Baker, and Charlie were slated for 1946; X-ray, Yoke, and Zebra were slated for 1948. These were letters in the military radio alphabet—a clarification of who was really the master of the bomb.

Irradiated Kodak X-ray film. Courtesy National Archives and Records Administration

By 1992, the U.S. government had conducted more than a thousand nuclear tests, and other nations—China, France, the United Kingdom, and the Soviet Union—had joined in the frenzy. The last aboveground detonation took place over Lop Nur, a dried-up salt lake in northwestern China, in 1980. We are some years away, in other words, from the day when no living person will have seen that unearthly light firsthand. But Trinity left secondhand signs behind. Because the gadget exploded so close to the ground, the fireball sucked up dirt and debris. Some of it melted and settled back down, cooling into a radioactive green glass that was dubbed Trinitite, and some of it floated away. A minute quantity of the dust ended up in a river about a thousand miles east of Alamogordo, where, in early August, 1945, it was taken up into a paper mill that manufactured strawboard for Eastman Kodak. The strawboard was used to pack some of the company’s industrial X-ray film, which, when it was developed, was mottled with dark blotches and pinpoint stars—the final exposure of the first light of the nuclear age.

#Hiroshima | Japan 🇯🇵 | John Donne | Manhattan Project | Monsanto #Nagasaki | Japan 🇯🇵 | Nuclear Weapons | Second World War | World War II #The New Yorker #Alex Wellerstein #Los Alamos National Laboratory #New Mexico #J. Robert Oppenheimer #John Donne #Jean Tatlock #University of California Berkeley #Jornada del Muerto | Journey of the Dead Man #General Thomas Farrell #Nobel Prize Winner Physicist Ernest O. Lawrence #Luis Alvarez #US 🇺🇸 #China 🇨🇳 #France 🇫🇷 #Soviet Union (Now Russia 🇷🇺) #Alamogordo | New Mexico #Eastman Kodak #Nuclear Age

39 notes

A new study published in the journal Renewable Energy uses data from the state of California to show that no blackouts occurred while wind-water-solar electricity supply exceeded 100% of demand on the state’s main grid. This happened on a record 98 of 116 days between late winter and early summer 2024, for an average of 4.84 hours per day (maximum 10.1 hours).

Compared to the same period in 2023, solar output in California is up 31%, wind power is up 8%, and batteries are up a staggering 105%. Batteries supplied up to 12% of nighttime demand by storing and redistributing excess solar energy.

And here’s the kicker: California’s high electricity prices aren’t caused by wind, water, and solar energy. (They are driven primarily by utilities recovering the costs of wildfire mitigation, transmission and distribution investments, and net energy metering.)

In fact, researchers from Stanford, Lawrence Berkeley National Laboratory, and the University of California, Berkeley found that states with higher shares of renewable energy tend to see lower electricity prices. The takeaway – and the data backs it up – is that a large grid dominated by wind, water, and solar is not only feasible, it’s also reliable.

115 notes

Van de Graaff generator.

Record Group 434: General Records of the Department of Energy
Series: Photographs Documenting Scientists, Special Events, and Nuclear Research Facilities, Instruments, and Projects at the Lawrence Berkeley National Laboratory

This black and white photograph shows electrical equipment.

28 notes

Radio Frequency Quadrupole
Lawrence Berkeley National Laboratory, Berkeley, California

The Radio Frequency Quadrupole is a device that captures heavy ions, focuses and bunches them, and accelerates the bunches to energies appropriate for injection into a particle accelerator.

Image credit: Berkeley Lab/U.S. Department of Energy, via the U.S. Department of Energy on Flickr

55 notes

Category 1 hurricanes have wind speeds of 74–95 mph, Category 2 of 96–110 mph, Category 3 of 111–129 mph, Category 4 of 130–156 mph, and Category 5 hurricanes have winds of 157 mph or higher.

A study published by Lawrence Berkeley National Laboratory meteorologists in the journal Proceedings of the National Academy of Sciences in February 2024 proposed that a new Category 6 is needed for hurricanes that reach wind speeds of 192 mph or higher.

. . .

"Of the 197 [tropical cyclones] that were classified as category 5 during the 42-[year] period 1980 to 2021, which comprises the period of highest quality and most consistent data, half of them occurred in the last 17 y of the period. Five of those storms exceeded our hypothetical category 6 and all of these occurred in the last 9 y of the record," the researchers wrote in the paper.

"The most intense of these hypothetical category 6 storms, Patricia, occurred in the Eastern Pacific making landfall in Jalisco, Mexico, as a category 4 storm. The remaining category 6 storms all occurred in the Western Pacific."

Patricia, which hit in October 2015, reached wind speeds of 216 mph, while the other four western Pacific typhoons all reached 195–196 mph. The researchers note that the chance of storms potentially reaching Category 6 intensity has "more than doubled" since 1979.
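The category boundaries listed above amount to a lookup on lower wind-speed thresholds. The Python sketch below is illustrative only: the function and constant names are hypothetical, it assumes sustained winds in whole miles per hour, and the optional 192 mph Category 6 tier is the study's proposed extension, not part of the official scale.

```python
from typing import Optional

# Lower bound (mph) of each Saffir-Simpson category, as listed above,
# checked from strongest to weakest. Assumes whole-number wind speeds.
SAFFIR_SIMPSON_LOWER_BOUNDS = [(157, 5), (130, 4), (111, 3), (96, 2), (74, 1)]

# Hypothetical Category 6 threshold proposed in the PNAS study.
PROPOSED_CATEGORY_6_MPH = 192


def hurricane_category(wind_mph: float, with_category_6: bool = False) -> Optional[int]:
    """Return the category for a sustained wind speed, or None below hurricane strength."""
    if with_category_6 and wind_mph >= PROPOSED_CATEGORY_6_MPH:
        return 6
    for lower_bound, category in SAFFIR_SIMPSON_LOWER_BOUNDS:
        if wind_mph >= lower_bound:
            return category
    return None


# Patricia's 216 mph peak and a 195 mph western Pacific typhoon:
# open-ended Category 5 on the current scale, Category 6 under the proposal.
for wind in (216, 195):
    print(wind, hurricane_category(wind), hurricane_category(wind, with_category_6=True))
```

Both storms print as `5 6`: on the existing scale nothing distinguishes them from a 157 mph storm, which is the gap the proposed extra tier is meant to close.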

Ali Sarhadi, an assistant professor at the Georgia Institute of Technology, told Newsweek: "There is strong agreement that the frequency and intensity of major tropical cyclones (Category 3 and above) are likely to increase as a result of climate change. This is driven by rising ocean temperatures, which provide more thermal energy to fuel tropical cyclones, and the increased capacity of a warmer atmosphere to hold moisture, leading to heavier rainfall during the landfall of these storms."

19 notes

Scientists have used a pair of lasers and a supersonic sheet of gas to accelerate electrons to high energies in less than a foot. The development marks a major step forward in laser-plasma acceleration, a promising method for making compact, high-energy particle accelerators that could have applications in particle physics, medicine, and materials science. In a new study soon to be published in the journal Physical Review Letters, a team of researchers successfully accelerated high-quality beams of electrons to more than 10 billion electronvolts (10 gigaelectronvolts, or GeV) in 30 centimeters. (The preprint is available on the online repository arXiv.) The work was led by the Department of Energy's Lawrence Berkeley National Laboratory (Berkeley Lab), with collaborators at the University of Maryland. The research took place at the Berkeley Lab Laser Accelerator Center (BELLA), which set a world record of 8-GeV electrons in 20 centimeters in 2019. The new experiment not only increases the beam energy but also, for the first time, produces a high-quality beam at this energy level, paving the way for future high-efficiency machines.
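The energies and distances above imply an average accelerating gradient (energy gain per metre). A back-of-the-envelope Python sketch, assuming the gain is spread evenly over the quoted lengths (real laser-plasma stages need not be uniform); the function name is hypothetical:

```python
def average_gradient_gev_per_m(energy_gain_gev: float, length_cm: float) -> float:
    """Average accelerating gradient: energy gain divided by acceleration length in metres."""
    return energy_gain_gev / (length_cm / 100.0)


# "More than 10 GeV in 30 cm": 10 GeV is a floor, so this is a lower bound.
new_result = average_gradient_gev_per_m(10.0, 30.0)
# BELLA's 2019 record: 8 GeV in 20 cm.
record_2019 = average_gradient_gev_per_m(8.0, 20.0)

print(f"New result:  at least ~{new_result:.1f} GeV/m")
print(f"2019 record: ~{record_2019:.1f} GeV/m")
```

On this crude measure the new run does not obviously beat the 2019 gradient; the advances the text highlights are the higher total energy and high beam quality at that energy.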

12 notes

DUNE scientists observe first neutrinos with prototype detector at Fermilab

Symmetry magazine

The prototype of a novel particle detection system for the international Deep Underground Neutrino Experiment successfully recorded its first accelerator neutrinos.

In a major step for the international Deep Underground Neutrino Experiment, scientists have detected the first neutrinos using a DUNE prototype particle detector at the US Department of Energy’s Fermi National Accelerator Laboratory.

DUNE, currently under construction, will be the most comprehensive neutrino experiment in the world. It will bring scientists closer to solving some of the biggest physics mysteries in the universe, including searching for the origin of matter and learning more about supernovae and black hole formation.

Display of a candidate neutrino interaction recorded by the 2×2 detector highlighting the four internal detector modules and native 3D imaging capability. The bottom image additionally shows the detectors surrounding the 2×2 for further tracking of incoming and exiting particles. The DUNE Near Detector will similarly consist of a modular liquid argon detector along with a muon tracker.

Courtesy of DUNE Collaboration

Since DUNE will feature new designs and technology, scientists are testing prototype equipment and components in preparation for the final detector installation. In February, the DUNE team finished the installation of their latest prototype detector in the path of an existing neutrino beamline at Fermilab. On July 10, the team announced that they successfully recorded their first accelerator-produced neutrinos in the prototype detector, a step toward validating the design.

“This is a truly momentous milestone demonstrating the potential of this technology,” says Louise Suter, a Fermilab scientist who coordinated the module installation. “It is fantastic to see this validation of the hard work put into designing, building and installing the detector.”

The new neutrino detection system is part of the plan for DUNE’s near detector complex that will be built on the Fermilab site. Its prototype, known as the 2×2 prototype because it has four modules arranged in a square, records particle tracks with liquid argon time projection chambers. The final version of the DUNE near detector will feature 35 liquid argon modules, each larger than those in the prototype. The modules will help the detector handle the enormous flux of neutrinos expected at the near site.

The 2×2 prototype implements novel technologies that enable a new regime of detailed, cutting-edge neutrino imaging to handle the unique conditions in DUNE. It has a millimeter-sized pixel readout system, developed by a team at DOE’s Lawrence Berkeley National Laboratory, that allows for high-precision 3D imaging on a large scale. This, coupled with its modular design, sets the prototype apart from previous neutrino detectors like ICARUS and MicroBooNE.

Now, the 2×2 prototype provides the first accelerator-neutrino data to be analyzed by the DUNE collaboration.

DUNE is split between two locations hundreds of miles apart: a beam of neutrinos originating at Fermilab, close to Chicago, will pass through a particle detector located on the Fermilab site, then travel 800 miles through the ground to huge detectors at the Sanford Underground Research Facility in South Dakota.

The DUNE detector at Fermilab will analyze the neutrino beam close to its origin, where the beam is extremely intense. Collaborators expect this near detector to record about 50 interactions per pulse, which will come every second, amounting to hundreds of millions of neutrino detections over DUNE’s many expected years of operation. Scientists will also use DUNE to study neutrinos’ antimatter counterpart, antineutrinos.

This unprecedented flux of accelerator-made neutrinos and antineutrinos will enable DUNE’s ambitious science goals: Physicists will study the particles with DUNE’s near and far detectors to learn more about how they change type as they travel, a phenomenon known as neutrino oscillation. By looking for differences between neutrino oscillations and antineutrino oscillations, physicists will seek evidence for a broken symmetry known as CP violation to determine whether neutrinos might be responsible for the prevalence of matter in our universe.

The DUNE collaboration is made up of more than 1,400 scientists and engineers from over 200 research institutions. Nearly 40 of these institutions work on the near detector. Specifically, hardware development of the 2×2 prototype was led by the University of Bern in Switzerland, DOE’s Fermilab, Berkeley Lab and SLAC National Accelerator Laboratory, with significant contributions from many universities.

“It is wonderful to see the success of the technology we developed to measure neutrinos in such a high-intensity beam,” says Michele Weber, a professor at the University of Bern—where the concept of the modular design was born and where the four modules were assembled and tested—who leads the effort behind the new particle detection system. “A successful demonstration of this technology’s ability to record multiple neutrino interactions simultaneously will pave the way for the construction of the DUNE liquid argon near detector.”

Next steps

Testing the 2×2 prototype is necessary to demonstrate that the innovative design and technology are effective on a large scale to meet the near detector’s requirements. A modular liquid-argon detector capable of detecting high rates of neutrinos and antineutrinos has never been built or tested before.

The existing Fermilab beamline is an ideal place for testing and presents an exciting opportunity for the researchers to measure these mysterious particles. It is currently running in “antineutrino mode,” so DUNE scientists will use the 2×2 prototype to study the interactions between antineutrinos and argon. When antineutrinos hit argon atoms, as they will in the argon-filled near detector, they interact and produce other particles. The prototype will observe what kinds of particles are produced and how often. Studying these antineutrino interactions will prepare scientists to compare neutrino and antineutrino oscillations with DUNE.

“Analyzing this data is a great opportunity for our early-career scientists to gain experience,” says Kevin Wood, the first run coordinator for the 2×2 prototype and a postdoctoral researcher at Berkeley Lab, where the prototype’s novel readout system was developed. “The neutrino interactions imaged by the 2×2 prototype will provide a highly anticipated dataset for our graduate students, postdocs and other young collaborators to analyze as we continue to prepare to bring DUNE online.”

The DUNE collaboration plans to bombard the 2×2 prototype with antineutrinos from the Fermilab beam for several months.

“This is an exciting milestone for the 2×2 team and the entire DUNE collaboration,” says Sergio Bertolucci, professor of physics at the University of Bologna in Italy and co-spokesperson of DUNE with Mary Bishai of Brookhaven National Laboratory. “Let this be the first of many neutrino interactions for DUNE!”

#science #space #astronomy #physics #news #nasa #astrophysics #esa #spacetimewithstuartgary #starstuff #spacetime #hubble space telescope #jwst #fermilab

18 notes

Excerpt from this story from Canary Media:

In northeastern Oregon, nearly 9,500 acres of farmland will soon be transformed into a 1,200-megawatt solar project. State regulators approved Sunstone Solar, the nation’s largest proposed solar-plus-storage facility, last fall. Once up and running, the project will include up to 7,200 megawatt-hours of storage, and its nearly four million solar panels will produce enough clean electricity to power around 800,000 homes each year. Pine Gate Renewables, the North Carolina–based developer behind the project, touted a first-of-a-kind initiative to invest up to $11 million in local wheat farms to offset economic impacts on the region’s agriculture. Construction will begin in 2026.

Sunstone is the latest — and largest — in a slew of giant solar installations cropping up around the country. As states including Oregon pursue ambitious clean energy targets, developers are building more and more massive solar plants to keep pace — and increasingly pairing them with batteries to soak up any excess power.

Solar installations reached record levels in the U.S. last year, led by a surge in Texas and California. In 2024, 34 gigawatts of utility-scale solar were added to the grid — up 74 percent from the previous peak in 2023. Battery storage also leapt to new heights, with 13 gigawatts — nearly double the record set in 2023 — built last year.
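The percentages above can be run backwards to estimate the 2023 figures they are measured against. A rough Python sketch; the helper name is hypothetical, and the results are inferences from the rounded numbers in the excerpt, not reported data:

```python
def implied_previous(current: float, percent_increase: float) -> float:
    """Earlier value implied by 'current is up percent_increase percent'."""
    return current / (1 + percent_increase / 100)


solar_2024_gw = 34.0  # utility-scale solar added in 2024
solar_2023_gw = implied_previous(solar_2024_gw, 74)  # the previous (2023) peak, roughly

battery_2024_gw = 13.0
# "Nearly double" the 2023 record means 13 GW is a bit under twice that record,
# so half of 13 GW is a floor for the 2023 figure, not an estimate of it.
battery_2023_floor_gw = battery_2024_gw / 2

print(f"Implied 2023 solar peak: ~{solar_2023_gw:.1f} GW")
print(f"Implied 2023 battery record: more than {battery_2023_floor_gw:.1f} GW")
```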

Solar and storage projects aren’t just multiplying — they’re also getting bigger. Once constructed, Sunstone Solar will overshadow the current largest solar-plus-storage project operating in the U.S., which began providing up to 875 megawatts of solar and 3,287 megawatt-hours of battery storage last January. It’s also a big step up from existing solar farms in Oregon: The state’s largest operational solar project came online in April 2023, with 162 megawatts of solar capacity.

According to a data analysis by climate journalist Michael Thomas, the average size of a solar farm in the U.S. grew sixfold from 2014 to 2024, from 10 megawatts to 65 megawatts. Battery projects are expanding at an even faster pace, with 15 times the average storage capacity last year compared with 2019. One major reason for building bigger is that developers are reaping greater returns on investment by capitalizing on economies of scale. Large-scale projects cost significantly less per watt than smaller ones to build, according to data from the Lawrence Berkeley National Laboratory.
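The growth figures above can be restated as compound annual rates. A minimal Python sketch, assuming smooth compound growth between the two endpoints (only the endpoint values are given, so actual year-to-year growth need not have been smooth) and assuming "last year" in the excerpt means 2024; the `cagr` helper is hypothetical:

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns start into end over the given years."""
    return (end / start) ** (1 / years) - 1


# Average U.S. solar farm size: 10 MW in 2014 -> 65 MW in 2024 (a 6.5x increase).
solar_rate = cagr(10, 65, 2024 - 2014)

# Average battery project capacity: 15x larger in 2024 than in 2019.
battery_rate = cagr(1, 15, 2024 - 2019)

print(f"Solar farm size: about {solar_rate:.1%} per year")
print(f"Battery capacity: about {battery_rate:.1%} per year")
```

Under these assumptions the average solar farm grew by roughly a fifth each year, while average battery capacity grew by roughly 70% a year, consistent with the excerpt's point that storage is scaling up faster.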

Sunstone Solar is also one of a growing number of combined solar-and-storage facilities, which allow greater amounts of power produced at peak sunny hours to be stored and dispensed later in the day.

10 notes

Janet Jackson Apologizes for Kamala Harris ‘Confusion’

WHOOPS NOW

“She deeply respects Vice President Kamala Harris and her accomplishments as a Black and Indian woman,” a spokesperson for Jackson said.

Sean Craig

Published Sep. 22, 2024 1:19PM EDT

Gilbert Flores/Getty Images

Singer Janet Jackson apologized through a representative for claiming Vice President Kamala Harris, who is Black, is “not Black.”

“She’s not Black,” said Jackson, in an interview with The Guardian newspaper published Saturday. “That’s what I heard. That she’s Indian. Her father’s white. That’s what I was told. I mean, I haven’t watched the news in a few days. I was told that they discovered her father was white.”

Harris, of course, is not half-white and is very much Black. Her father is Donald Harris, a Jamaican-American economist who was the first Black scholar to receive a tenured position at Stanford’s economics department.

Harris, who graduated from Howard University, a leading historically Black university, has frequently spoken about her Black identity in politics. She also once joked, when asked if she’d ever smoked marijuana, “Half my family’s from Jamaica, are you kidding me?”

She is also Indian. Her late mother, Shyamala Gopalan, a medical scientist who worked at the Lawrence Berkeley National Laboratory, was originally from Chennai.

A spokesperson for Jackson told BuzzFeed her comments were “based on misinformation.”

“She deeply respects Vice President Kamala Harris and her accomplishments as a Black and Indian woman,” the spokesperson added. “Janet apologizes for any confusion caused and acknowledges the importance of accurate representation in public discourse. We appreciate the opportunity to address this and will remain committed to promoting unity.”

Jackson’s rep didn’t explain how that information came to her.

Former president Donald Trump, Harris’ opponent in this year’s presidential election, has polluted the public conversation about Harris’ race, making false claims that Harris misled voters about her background.

“I didn’t know she was Black until a number of years ago when she happened to turn Black and now she wants to be known as Black,” Trump told the annual convention of the National Association of Black Journalists in Chicago last month. “I don’t know, is she Indian or is she Black?”

At least Janet Jackson now knows the answer is “both.”

Sean Craig

@sdbcraig

ALL I GOTTA SAY IS:

Who pulled that old has-been's chain?

10 notes

A small mission to test technology to detect radio waves from the cosmic Dark Ages over 13.4 billion years ago will blast off for the far side of the moon in 2025. The Lunar Surface Electromagnetic Experiment-Night mission, or LuSEE-Night for short, is a small radio telescope being funded by NASA and the U.S. Department of Energy with involvement from scientists at the Lawrence Berkeley National Laboratory, the Brookhaven National Laboratory, the University of California, Berkeley and the University of Minnesota. LuSEE-Night will launch as part of NASA's Commercial Lunar Payload Services (CLPS) program. The Dark Ages is the evocative name given to the period of time after the Big Bang, when the first stars and galaxies were only just beginning to form and ionize the neutral hydrogen gas that filled the universe. Little is known about this period, despite efforts by the James Webb Space Telescope to begin probing into this era.

58 notes

An unnecessary collection of Edwin McMillan photos

I have five mutuals so they'll get it, we hope

so cuties forever 💖💖💖💖💖💖💖💖

(compilation edition)

(@un-ionizetheradlab don't kill me)

(@lacontroller1991 Lawrence is at the bottom I promise)

Okay so this was before he grew a mustache. I love how "normal" he looks but at least he's happy <3333

You know what else makes him happy?

Twinning portraits with Alvarez!!!

Okay, fine, I know that they didn't make similar poses in opposite directions ON PURPOSE but I like to think so.

Okay but they actually look kind of similar. Except McMillan has a mustache.

This next one is McMillan pretending his shoes are the most interesting things in the world. I don't know. Maybe he's camera-shy. This is actually really relatable.

While we're still on the topic of McMillan doing anything BUT looking at the camera...

McMillan, now the director of Lawrence Berkeley National Laboratory, is supposed to be getting photographed by Ansel Adams. Who, by the way, mostly photographed landscapes. But I guess it was a really special occasion.

Anyways, Adams is taking forever to set up the camera. So McMillan is bored out of his mind, thinking "Why am I here?"

But all that time probably paid off because...

AWWWWWW he's so cute when he smiles <33333

To close it all off, here's a cute little one where Nobel-winning scientists are doing everyday stuff like the rest of us:

(who in the world is the guy all the way on the left)

McMillan loves Coca-Cola too.

Anyways look at Ernest Lawrence in the middle he's so cute <3

Honestly I would've shipped those two IF ONLY Lawrenceheimer and Alvamillan didn't exist.

So, I guess that's it for today. Now I need to make a folder for all these images. (And finish up that map I was working on last Monday.)

5 notes

Text

Improving Climate Predictions By Unlocking The Secrets of Soil Microbes

— By Julie Bobyock, Lawrence Berkeley National Laboratory | February 5, 2024

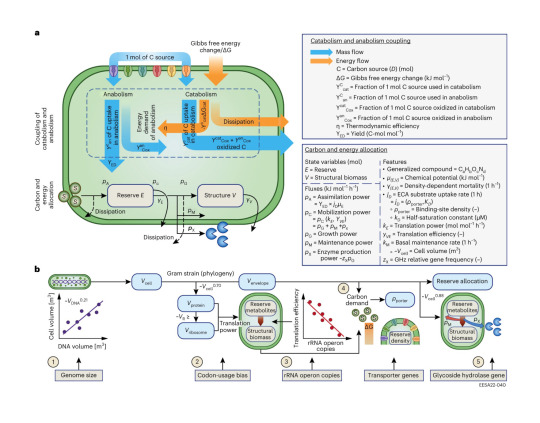

Overview of DEBmicroTrait. Credit: Nature Microbiology (2024). DOI: 10.1038/s41564-023-01582-W

Climate models are essential to predicting and addressing climate change, but can fail to adequately represent soil microbes, a critical player in ecosystem soil carbon sequestration that affects the global carbon cycle.

A team of scientists led by the Department of Energy's Lawrence Berkeley National Laboratory (Berkeley Lab) has developed a new model that incorporates genetic information from microbes. This new model enables the scientists to better understand how certain soil microbes efficiently store carbon supplied by plant roots, and could inform agricultural strategies to preserve carbon in the soil in support of plant growth and climate change mitigation.

"Our research demonstrates the advantage of assembling the genetic information of microorganisms directly from soil. Previously, we only had information about a small number of microbes studied in the lab," said Berkeley Lab Postdoctoral Researcher Gianna Marschmann, the paper's lead author.

"Having genome information allows us to create better models capable of predicting how various plant types, crops, or even specific cultivars can collaborate with soil microbes to better capture carbon. Simultaneously, this collaboration can enhance soil health."

This research is described in a new paper that was recently published in the journal Nature Microbiology. The corresponding authors are Eoin Brodie of Berkeley Lab, and Jennifer Pett-Ridge of Lawrence Livermore National Lab, who leads the "Microbes Persist" Soil Microbiome Scientific Focus Area project.

Seeing the Unseen: Microbial Impact on Soil Health and Carbon

Soil microbes help plants access soil nutrients and resist drought, disease, and pests. Their impacts on the carbon cycle are particularly important to represent in climate models because they affect the amount of carbon stored in soil or released into the atmosphere as carbon dioxide during the process of decomposition.

By building their own bodies from that carbon, microbes can stabilize (or store) it in the soil and influence how much carbon remains stored belowground, and for how long. The relevance of these functions to agriculture and climate is being observed like never before.

However, with just one gram of soil containing up to 10 billion microorganisms and thousands of different species, the vast majority of microbes have never been studied in the lab. Until recently, the data available to inform these models came from only a tiny minority of lab-studied microbes, many of them unrelated to those that need to be represented in climate models.

"This is like building an ecosystem model for a desert based on information from plants that only grow in a tropical forest," explained Brodie.

The World 🌎 Beneath Our Feet 🦶🦶

To address this challenge, the team of scientists used genome information directly to build a model capable of being tailored to any ecosystem in need of study, from California's grasslands to thawing permafrost in the Arctic. With the model using genomes to provide insights into how soil microbes function, the team applied this approach to study plant-microbiome interactions in a California rangeland. Rangelands are economically and ecologically important in California, making up more than 40% of the land area.

The research focused on the microbes living around plant roots, a zone called the rhizosphere. This is an important environment to study because, despite making up only 1-2% of Earth's soil volume, this root zone is estimated to hold up to 30-40% of the carbon stored in Earth's soils, much of it released by roots as they grow.
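The two percentage ranges in this paragraph imply how concentrated carbon is in the root zone. A minimal sketch, assuming the ratio of carbon share to volume share is a fair summary (the helper name `enrichment_range` is hypothetical), bounds that ratio from the quoted figures:

```python
def enrichment_range(volume_share, carbon_share):
    """Bound the ratio (share of soil carbon) / (share of soil volume).

    Each argument is a (low, high) pair of percentages. The lowest ratio pairs the
    smallest carbon share with the largest volume share; the highest does the reverse.
    """
    vol_lo, vol_hi = volume_share
    c_lo, c_hi = carbon_share
    if vol_lo <= 0 or vol_lo > vol_hi or c_lo > c_hi:
        raise ValueError("expected positive (low, high) pairs")
    return c_lo / vol_hi, c_hi / vol_lo


if __name__ == "__main__":
    # Figures quoted in the article: 1-2% of soil volume, up to 30-40% of soil carbon.
    low, high = enrichment_range((1.0, 2.0), (30.0, 40.0))
    print(f"Root zone holds about {low:.0f}x to {high:.0f}x its volume share of soil carbon")
```

Because the carbon figures are "up to" values, the result (roughly 15 to 40 times) is best read as an upper-end estimate of how carbon-dense the rhizosphere is relative to bulk soil.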

To build the model, scientists simulated microbes growing in the root environment, using data from the University of California Hopland Research and Extension Center. Nevertheless, the approach is not limited to a particular ecosystem. Since certain genetic information corresponds to specific traits, just as in humans, the relationship between the genomes (what the model is based on) and the microbial traits is transferable to microbes and ecosystems all over the world.

The team developed a new way to predict important traits of microbes affecting how quickly they use carbon and nutrients supplied by plant roots. Using the model, the researchers demonstrated that as plants grow and release carbon, distinct microbial growth strategies emerge because of the interaction between root chemistry and microbial traits.

In particular, they found that microbes with a slower growth rate were favored by types of carbon released during later stages of plant development and were surprisingly efficient in using carbon—allowing them to store more of this key element in the soil.
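What "efficient in using carbon" means can be made concrete with carbon use efficiency (CUE), the standard ratio of carbon built into biomass to carbon taken up. This is not the team's DEBmicroTrait model; it is a toy with invented numbers (the `Microbe` class and both example organisms are hypothetical) that shows only how the ratio is computed and why a slower grower can retain more carbon despite taking up less.

```python
from dataclasses import dataclass


@dataclass
class Microbe:
    """Hypothetical microbe described only by its carbon budget (arbitrary units)."""
    name: str
    carbon_uptake: float  # total carbon taken up, e.g. from root exudates
    respired: float       # portion of that carbon released as CO2

    def _check(self) -> None:
        if self.carbon_uptake <= 0:
            raise ValueError("carbon_uptake must be positive")
        if not 0 <= self.respired <= self.carbon_uptake:
            raise ValueError("respired must lie between 0 and carbon_uptake")

    def biomass_carbon(self) -> float:
        """Carbon retained in microbial biomass rather than respired."""
        self._check()
        return self.carbon_uptake - self.respired

    def carbon_use_efficiency(self) -> float:
        """CUE = carbon built into biomass / carbon taken up."""
        return self.biomass_carbon() / self.carbon_uptake


if __name__ == "__main__":
    # Invented numbers: a fast grower that respires most of what it takes up,
    # and a slow grower that takes up less but respires a smaller fraction.
    fast = Microbe("fast grower", carbon_uptake=10.0, respired=7.0)
    slow = Microbe("slow grower", carbon_uptake=8.0, respired=4.0)
    for m in (fast, slow):
        print(f"{m.name}: CUE = {m.carbon_use_efficiency():.2f}, "
              f"biomass C = {m.biomass_carbon():.1f}")
```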

The Root of the Matter

This new observation provides a basis for improving how root-microbe interactions are represented in models, and enhances the ability to predict how microbes impact changes to the global carbon cycle in climate models.

"This newfound knowledge has important implications for agriculture and soil health. With the models we are building, it is increasingly possible to leverage new understanding of how carbon cycles through soil. This in turn opens up possibilities to recommend strategies for preserving valuable carbon in the soil to support biodiversity and plant growth at scales feasible to measure the impact," Marschmann said.

The research highlights the power of using modeling approaches based on genetic information to predict microbial traits that can help shed light on the soil microbiome and its impact on the environment.

#Biology 🧬 🧪#Ecology#Cell & Microbiology 🧫#Nanotechnology#Physics#Earth 🌍#Astronomy 🪐 🔭 & Space#Chemistry 🧪 ⚛️#Other Sciences#Medicine 💊#Technology#Phys.Org#Julie Bobyock | Lawrence Berkeley National Laboratory 🥼 🧪#Alameda County | California | USA 🇺🇸

0 notes

Text

New research shows electricity prices are climbing at frightening levels — here's how solar can power your home more cheaply

3 notes

Text

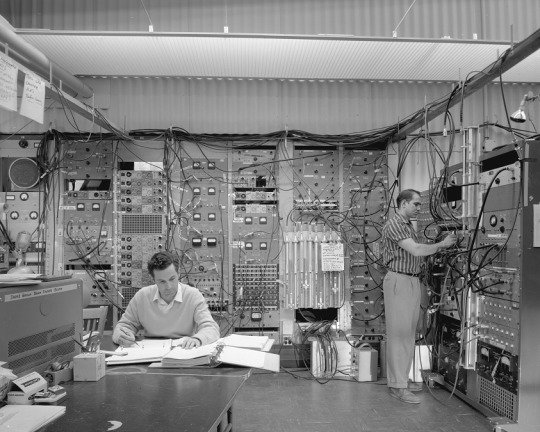

Segre's anti-proton experiment, counting area, Tom Elioff. Photograph taken April 17, 1959. Bevatron-1783

Record Group 434: General Records of the Department of Energy

Series: Photographs Documenting Scientists, Special Events, and Nuclear Research Facilities, Instruments, and Projects at the Lawrence Berkeley National Laboratory

This black and white photograph shows two men in a room of 1950s computer equipment, with many dials and cables everywhere. One man is standing and appears to be adjusting the equipment, while the other is sitting at a desk looking at a large binder and making notes.

52 notes

Text

Deep Learning, Deconstructed: A Physics-Informed Perspective on AI’s Inner Workings

Dr. Yasaman Bahri’s seminar offers a profound glimpse into the complexities of deep learning, merging empirical successes with theoretical foundations. Dr. Bahri’s distinct background, weaving together statistical physics, machine learning, and condensed matter physics, uniquely positions her to dissect the intricacies of deep neural networks. Her journey from a physics-centric PhD at UC Berkeley, influenced by computer science seminars, exemplifies the burgeoning synergy between physics and machine learning, underscoring the value of interdisciplinary approaches in elucidating deep learning’s mysteries.

At the heart of Dr. Bahri’s research lies the intriguing equivalence between neural networks and Gaussian processes in the infinite-width limit, a consequence of the Central Limit Theorem. Because each output of a randomly initialized network sums many independent contributions from its hidden units, the distribution of that output over random draws of the weights approaches a Gaussian as the width of the network increases, which provides a probabilistic framework for understanding neural network behavior. Deriving the corresponding Gaussian processes for various neural network architectures not only yields state-of-the-art kernels but also sheds light on the dynamics of optimization, enabling more precise predictions of model performance.
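The Central Limit Theorem argument can be checked numerically. A minimal sketch, assuming a one-hidden-layer ReLU network with Gaussian weights at random initialization (an illustrative setup, not Dr. Bahri's code; all function names are hypothetical): for a fixed input, the output is a scaled sum of `width` independent terms z = v * relu(g). Each term has E[z^4] / E[z^2]^2 = 18, i.e. excess kurtosis 15, and the excess kurtosis of a sum of n i.i.d. terms is that value divided by n, so the output's excess kurtosis (0 for a Gaussian) should shrink like 15 / width.

```python
import math
import random


def sample_outputs(width, n_samples, input_norm=1.0, sigma_w=1.0, seed=0):
    """Draw outputs f(x) of random one-hidden-layer ReLU networks at one fixed input x.

    f(x) = (1/sqrt(width)) * sum_j v_j * relu(w_j . x), with w_j ~ N(0, sigma_w^2 I)
    and v_j ~ N(0, 1). For a single fixed x, w_j . x is exactly N(0, (sigma_w*|x|)^2),
    so the pre-activation is sampled directly instead of materializing w_j.
    """
    rng = random.Random(seed)
    pre_sd = sigma_w * input_norm
    scale = 1.0 / math.sqrt(width)
    outputs = []
    for _ in range(n_samples):
        total = 0.0
        for _ in range(width):
            pre = rng.gauss(0.0, pre_sd)                   # hidden pre-activation
            total += rng.gauss(0.0, 1.0) * max(pre, 0.0)   # readout weight * ReLU
        outputs.append(scale * total)
    return outputs


def excess_kurtosis(xs):
    """Sample excess kurtosis (fourth standardized moment minus 3); 0 for a Gaussian."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m4 = sum((x - mean) ** 4 for x in xs) / n
    return m4 / (m2 * m2) - 3.0


def predicted_excess_kurtosis(width):
    """Analytic value for this architecture: 15 / width."""
    return 15.0 / width


if __name__ == "__main__":
    for width in (1, 10, 100):
        k = excess_kurtosis(sample_outputs(width, 10_000))
        print(f"width {width:>3}: sample {k:6.2f}, predicted {predicted_excess_kurtosis(width):6.2f}")
```

Sampling the pre-activation directly is only equivalent for one input at a time; studying several inputs jointly requires shared weight vectors, and the covariance those shared weights induce between inputs is the Gaussian-process kernel.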

The discussion on scaling laws is multifaceted, encompassing empirical observations, theoretical underpinnings, and the intricate dance between model size, computational resources, and the volume of training data. Model quality often improves predictably as these factors grow, typically following a power law whose gains diminish with scale, so understanding these dynamics is crucial for efficient model design. Interestingly, the strategic selection of data emerges as a critical factor in surpassing the limitations imposed by power-law scaling, though this approach also presents challenges, including the risk of introducing biases and the need for domain-specific strategies.
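The power-law behavior described here can be sketched as a straight-line fit in log-log space. Assuming a pure power law, loss ≈ a * N^(-alpha), and hypothetical noise-free data (the constants a = 5.0 and alpha = 0.1 and the function names are invented for illustration, not values from the talk):

```python
import math


def fit_power_law(sizes, losses):
    """Least-squares fit of log(loss) = log(a) - alpha * log(size).

    Returns (a, alpha) for the model loss ≈ a * size**(-alpha).
    """
    if len(sizes) != len(losses) or len(sizes) < 2:
        raise ValueError("need at least two (size, loss) pairs of equal length")
    xs = [math.log(s) for s in sizes]
    ys = [math.log(loss) for loss in losses]
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return math.exp(intercept), -slope


def loss_ratio_per_decade(alpha):
    """Factor by which predicted loss shrinks for every 10x increase in size."""
    return 10.0 ** (-alpha)


if __name__ == "__main__":
    # Hypothetical, noise-free data generated from a = 5.0, alpha = 0.1.
    sizes = [1e6, 1e7, 1e8, 1e9]
    losses = [5.0 * n ** -0.1 for n in sizes]
    a, alpha = fit_power_law(sizes, losses)
    print(f"fitted a = {a:.3f}, alpha = {alpha:.3f}")
    print(f"each 10x in size multiplies loss by {loss_ratio_per_decade(alpha):.3f}")
```

Under a power law, every tenfold increase in N multiplies the loss by the same factor 10^(-alpha), so the absolute improvement shrinks as N grows; that is the diminishing-returns pattern. Published scaling-law fits often add an irreducible-loss constant, L(N) = L_inf + a * N^(-alpha), which makes the fit nonlinear and is omitted here for simplicity.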

As the field of deep learning continues to evolve, Dr. Bahri’s work serves as a beacon, illuminating the path forward. The imperative for interdisciplinary collaboration, combining the rigor of physics with the adaptability of machine learning, cannot be overstated. Moreover, the pursuit of personalized scaling laws, tailored to the unique characteristics of each problem domain, promises to revolutionize model efficiency. As researchers and practitioners navigate this complex landscape, they are left to ponder: What unforeseen synergies await discovery at the intersection of physics and deep learning, and how might these transform the future of artificial intelligence?

Yasaman Bahri: A First-Principle Approach to Understanding Deep Learning (DDPS Webinar, Lawrence Livermore National Laboratory, November 2024)

Sunday, November 24, 2024

#deep learning#physics informed ai#machine learning research#interdisciplinary approaches#scaling laws#gaussian processes#neural networks#artificial intelligence#ai theory#computational science#data science#technology convergence#innovation in ai#webinar#ai assisted writing#machine art#Youtube

3 notes
